Abstract

Magnetic resonance images (MRI) is the imperative imaging modality utilized in medical diagnosis tool for detecting brain tumors. The MRI possess the capability to offer detailed information based on anatomical structures of brain. However, the major obstacle in the MRI classification is semantic gap among low-level visual information obtained by the high-level information alleged from clinician and MRI machine. This paper proposes the novel technique, named Chaotic whale cat swarm optimization-enabled Deep Convolutional Neural Network (CWCSO-enabled Deep CNN) for brain tumor classification. Here, pre-processing is employed for removing noise and artifacts contained in image. Moreover, Fractional Probabilistic Fuzzy Clustering is employed for segmentation for identifying the tumor regions. Consequently, the feature extraction is carried out from segmented regions of image using wavelet transform, Empirical Mode Decomposition (EMD), scattering transform, Local Directional Pattern (LDP) and information theoretic measures. In addition, Significant LOOP is newly developed through modifying Significant Local Binary Pattern (SLBP) by LOOP. The extracted features are induced by Deep CNN to determine non-tumor, edema, tumor, and enhanced tumor, which is trained by the proposed CWCSO. Thus, the resulted output of proposed CWCSO-based Deep CNN is employed for brain tumor classification. The proposed model showed improved results with maximal specificity of 98.59%, maximal accuracy of 95.52%, and maximal sensitivity of 97.37%, respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the last few decades, the MRI have become very popular to detect the brain abnormalities by determining location and size of the affected tissues [21]. MRI is broadly utilized by radiologists for the visualization purpose of inner composition of human body. Thus, it provides useful information regarding the anatomy of human soft tissues, thereby effectively assists in process of brain tumor diagnosis [35]. MRI is the critical component in the treatment for the planning and diagnosis that increased the knowledge of diseased and normal anatomy significantly for the medical research [1, 30]. Due to high contrast of the soft tissues, MRI is effective when compared with other imaging techniques in brain tumor identification and detection [6, 7, 11]. However, the MRI is utilized to investigate, and analyze the brain behaviour. In addition, brain MRI is often used for monitoring tumor response to the treatment process [4]. Furthermore, the MRI does not use any ionizing radiation and thus provides the greater contrast among various soft tissues of human body [14].

The unusual tissue enhancement in brain is said to be brain tumor [35]. Brain tumors are the abnormal growths in brain that may be either noncancerous (benign) or cancerous (malignant). The effects on benign and the malignant brain tumors create similar type of problems based on the tumor type, and brain location. Every year more than 200,000 people are diagnosed with metastatic or primary brain tumor in United States [22, 25]. However, the brain tumor is one of the life threatening tumors, which is created either by abnormal and the uncontrolled cell division in brain or from the cancers present primarily in other parts of body. Furthermore, the tumor affects the healthy cells indirectly and directly, and cause brain swelling, and increases the pressure within the skull. In general, tumors are partitioned based on location of its origin and their malignancy [14, 18, 27]. Brain tumors are the cause of solid tumor cancer that causes death in children, surpassing the Acute Lymphoblastic Leukemia (ALL). They are second leading cancer death in male adults ages between \(20\) and \(29\), and fifth leading of cancer death in female adults ages from \(20\,to\,39\). In addition, the metastatic brain tumors spreads from other parts of body to brain, which leads to death, but the primary brain tumors do not spread to the other parts of body [19].

In the manual diagnosis, the MRI images are for visual interpretation which is inaccurate, intensive, and expensive. Moreover, human eye is very sensitive to any changes in MRI image, where sensitivity is decreased with the increase of images. The manual diagnosis is also based on subjective decisions of the radiologists that is difficult to quantify, and leads to the misclassification [19, 42]. Classification is a technique to classify objects into the corresponding classes. To classify the different image features initially the feature extraction is required for extraction, and then the classification is done to categorize the normal and the abnormal [37]. Several classification techniques are introduced for the medical images, like fuzzy c-means (FCM), artificial neural network (ANN), decision tree, support vector machine (SVM), Bayesian classification and K-Nearest Neighbour (KNN) [33]. Among, which ANN, SVM, and KNN are the supervised learning procedures. In addition, unsupervised learning for the data clustering, such as K-means clustering, Self Organizing Map etc. are also utilized for the brain tumor segmentation and the classification [23].

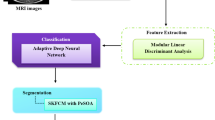

Its main goal is to devise a new technique, namely CWCSO-based Deep CNN using MRI for classifying brain tumor. Initially, input image is pre-processed for removing noise and artifacts existing in the image. Furthermore, Fractional Probabilistic Fuzzy Clustering is adapted for segmentation to generate segments. After that, wavelet transform, scattering transform, Empirical Mode Decomposition (EMD), Local Directional Pattern (LDP) and information theoretic measures with the new descriptor, named Significant LOOP are employed for feature extraction to generate the feature vector. The extracted features are then induced by Deep CNN for identifying the tumorous regions. The training of Deep CNN is performed by developed CWCSO, which is designed by incorporating Chaotic concept and WCSO algorithm. Here, the weights of Deep CNN are optimized by proposed CWCSO algorithm.

The major contribution of the paper is:

-

Proposed Significant LOOP for feature extraction: The new descriptor, named Significant LOOP is introduced, which is developed through modifying Significant Local Binary Pattern (SLBP) by LOOP to achieve good performance. These features are integrated to form the feature vectors.

-

Proposed CWCSO-based Deep CNN for brain tumor classification: The developed CWCSO-enabled Deep CNN is utilized to tune Deep CNN for brain tumor detection that categories into Edema, Non-tumor, Core tumor, and Enhanced tumor. Here, CWCSO-based Deep CNN is designed by integrating chaotic concept and WCSO.

The remaining sections of paper are arranged as follows: Sect. 2 elaborates description of the conventional brain tumor detection strategies utilized in literature and challenges faced, which are considered as the inspiration for developing the proposed technique. The detection of brain tumor based on CWCSO-based Deep CNN is portrayed in Sect. 3. The results of developed method with other methods are depicted in Sect. 4 and finally, Sect. 5 provides the conclusion.

2 Motivation

In this section, some of the previous brain tumor classification methods along with their limitations are explained, which motivate the researchers to develop a new method to perform brain tumor segmentation and classification.

2.1 Literature survey

The eight classical strategies based on brain tumor segmentation and classification along with its limitations is deliberated below: Angulakshmi and Lakshmi Priya [5] developed spectral clustering for brain tumor tissue segmentation from the MRI images. Initially, the tumoerous region was identified based on superpixel-enabled spectral clustering. The super pixels were calculated with the help of blocks central tendency values of image. Finally, Region of Interest (ROI) was segmented on the basis of spectral clustering for segmenting the tumor tissues. This method failed to extract multiple features from ROI in order to improve segmentation accuracy. Amin et al. [3] developed the method for classifying non-cancerous and cancerous regions of brain. In this framework, various methods were applied for candidate lesion segmentation. After that, the features set was chosen for each applicant lesion based on texture, shape, and the intensity. Finally, the SVM was introduced to classify brain tumor. The method does the eliminated actual tumor pixels. Ahmadvand et al. [2] presented Dynamic Classifier Selection Markov Random Field (DCSMRF) for brain MRI segmentation and the classification. Thus, this approach classifies brain tumor into White Matter (WM), Gray Matter (GM), and the Cerebrospinal Fluid (CSF). Although, the class overlapping issue was not solved for improving the system performances. Ilunga-Mbuyamba et al. [18] presented Localized Region-based Active Contour Model (LRASM) for segmenting the tumor regions of the brain. Other datasets are not included for improving the segmentation accuracy.

Chen et al. [15] developed Deep CNN for segmenting the brain tumors automatically. This DCNN was utilized to increase the feature extracted image quality with the combination of symmetry prior knowledge for segmentation. This method achieved better performance in both the accuracy and efficiency, but computational complexity was too high. Kumar Mallick et al. [24] developed an approach for compressing the image based on Deep Wavelet Autoencoder (DWA). This method uses feature reduction property of the autoencoder with the image decomposition property of the wavelet transform. The tremendous effect of sinking the feature set size was employed to ensure the classification step based on Deep Neural Network (DNN). Other datasets were not considered for analyzing the system performance. Sajid et al. [36] presented deep learning algorithm for segmentating brain tumor automatically. Here, efficiency of the two-stream parallel network was combined with the three-path network to form the hybrid model. In addition, the global and the local information was considered for predicting the signature label for the pixel, which make the device more adaptive for the tumor segmentation. More number of training samples were required for enhancing performance of the system. Thillaikkarasi and Saravanan [39] developed deep learning approach with the M-SVM for tumor segmentation efficiently. Initially, the MRI image was smoothed and improved by the Laplacian of Gaussian filtering (LoG) and Contrast Limited Adaptive Histrogram Equalization (CLAHE). After that, feature was extracted using tumor shape, location, and the surface features in the brain. Finally, the image classification was carried out based on M-SVM using the selected features. However, the detection performance is based on the dataset, provides poor performance under noisy conditions of the data.

Usman and Rajpoot [40] introduce Random forest classifier to increase the classification accuracy as evident by quantitative results but the feature images at third level are too small and not much useful for us. Feature extraction includes features intensity, intensity differences, neighbourhood information and wavelet to extract the wavelet features, which has not been explored and applied on MICCAI BraTS. Corso et al. [20] developed Gaussian Mixture Model to segment and classify in less than 1 min. Times are orders of magnitude faster than the current state of the art in medical image segmentation but direct comparison is not possible, GBM tumor contains some peculiarities not entirely captured by our models. The main contribution of this paper is the model-aware affinity, which is a step toward unifying these two disparate segmentation approaches by incorporating models. Nilesh et al. [32] introduce morphological operation which is used for the extraction of the boundary areas of brain images. The operation of addition and removing pixels to or from boundary region of the objects is based on the structuring element of the selected image. SVM extract information from the segmented tumor region and classify healthy and infected tumor tissue. It improve the accuracy of diagnosis system by selecting prominent features and increase the performance of classifiers on the diagnosis of the tumor from brain MRI with feature extraction but more theoretical measures are used for experimental results.

2.2 Challenges

The challenges confronted by the conventional strategies are deliberated below:

-

In [15], the Deep Convolutional Neural Network is developed for segmenting the brain tumors. Here, the accuracy was found better, but the method adopts only the single scale for extraction.

-

Deep wavelet autoencoder based on Deep NN is developed in [24] for brain MRI classification, but the method failed to combine DNN with other variation of autoencoder for evaluating the performance.

-

In [36], the deep learning is employed for detecting and classifying the brain tumors. However, the method failed to solve the over-fitting problem while considering less training data.

-

The method in [39] developed kernel-driven CNN for tumor classification of the brain, but still the method requires more iteration to enhance classification accuracy.

-

Gliomas segmentation based on computer-aided diagnosis is very challenging because of its irregular shape and the diffused boundaries of the tumor with the surrounding area [9].

3 Significant LOOP and Chaotic whale-cat swarm optimization-based deep CNN for brain tumor classification

Brain tumor segmentation and the level classification becomes very popular in treating and diagnosing patients. The proposed Significant is designed newly through modifying SLBP by LOOP to form the feature vector. Finally, the tumor classification is done based on Deep CNN that is trained by CWSCO. The CWCSO is developed by incorporating CWOA and CSO. Therefore, the proposed model generates four classes, like Edema, Non-tumor, Core tumor, and Enhanced tumor (Fig. 1).

Initially, input image is randomly selected in order to perform the classification mechanism among the number of images from the dataset. Assume the database as \(Q\) with \(g\) number of images are represented by,

where, \(Q\) refer to database, \(Z_{d}\) signifies total images, and \(Z_{g}\) refer to \(g^{th}\) image, which is selected as input image for processing the brain tumor classification.

3.1 Pre-processing

The input image \(Z_{g}\) is given to the pre-processing step. The main aim of the pre-processing is to enhance input image quality in order to ensure effective classification. In addition, the input image is RGB image and is fed to Hue color transformation. The Hue transformed image is utilized to offer brightness of image and it defines the image color. Then, the Hue transformed image is forwarded to morphological opening, thresholding, and the closing for enabling neighbourhood elements structuring. Thus, the morphological opening enables object removal that is smaller in morphological closing, and shape on binary image returns closed image. Hence, the important region is cropped from input image for ensuring pre-processed image so that it unleashes complexity related with classification. The output of pre-processed image is indicated as, \(H_{j}\).

3.2 Fractional probabilistic fuzzy clustering for segmentation

After pre-processing, segmentation is carried out using Fr-pFCM that is designed by incorporating standard probabilistic Fuzzy Clustering [10] in fractional concept [31]. In general, the segmentation ensures the classification accuracy, and therefore the segmentation is very appropriate for further processing. In standard probabilistic fuzzy clustering, the probabilistic distance measure is interpreted in standard FCM. In other words, the Bhattacharya distance measure in FCM is changed with probabilistic measure that performs better than standard FCM. In this paper, the fractional measure is added additionally with fractional probabilistic FCM in order to improve segmentation accuracy. The pre-processed image is taken as the input, and is forwarded to centroid update and when the stopping criteria is reached, the final segmented output is given to feature extraction module. The algorithmic procedure of fractional probabilistic FCM is illustrated below.

-

(a)

Initialization: Consider the data point set represented as, \(r = \left\{ {r_{1} ,\,r_{2} ,...,r_{mn} } \right\}\) with \(r_{j} \in M^{m}\) keep \(a\), \(2 \le a \le mn\), and then initialize \(N\,\left( 0 \right) \in S_{fcm}\).

-

(b)

Centroids computation: At \(s{\text{th}}\) iteration, the mean vectors \(b\) is computed using the below equation as,

$$J_{j}^{s + 1} = \frac{{\sum\limits_{k = 1}^{mn} {\left( {h_{jk}^{s} } \right)^{u} \,.\,r_{k} } }}{{\sum\limits_{k = 1}^{mn} {\,\left( {h_{jk}^{s} } \right)^{u} } }};\;j = 1,\,2,\,...,a$$(2)where, \(r_{k}\) denotes corresponding data points, \(h_{jk}^{s}\) denotes the membership partition value.

Thus, the above equation is utilized to compute the centroids in standard Probabilistic fuzzy clustering. In order to include fractional concept in standard algorithm, Eq. (1) is rearranged by,

$$J_{j}^{s + 1} - J_{j}^{s} = \frac{{\sum\limits_{k = 1}^{mn} {\left( {h_{jk}^{s} } \right)^{u} \,.\,r_{k} } }}{{\sum\limits_{k = 1}^{mn} {\,\left( {h_{jk}^{s} } \right)^{u} } }} - J_{j}^{s}$$(3)$$\beta^{\delta } \left[ {J_{j}^{s + 1} } \right] = \frac{{\sum\limits_{k = 1}^{mn} {\left( {h_{jk}^{s} } \right)^{u} \,.\,r_{k} } }}{{\sum\limits_{k = 1}^{mn} {\,\left( {h_{jk}^{s} } \right)^{u} } }} - J_{j}^{s}$$(4)$$J_{j}^{s + 1} - \delta \,J_{j}^{s} - \frac{1}{2}\,\delta \,J_{j}^{s - 1} - \frac{1}{6}\,\left( {1 - \delta } \right)\,J_{j}^{s - 2} - \frac{1}{24}\delta \,\left( {1 - \delta } \right)\,\left( {2 - \delta } \right)J_{j}^{s - 3} = \frac{{\sum\limits_{k = 1}^{mn} {\left( {h_{jk}^{s} } \right)^{u} \,.\,r_{k} } }}{{\sum\limits_{k = 1}^{mn} {\,\left( {h_{jk}^{s} } \right)^{u} } }} - J_{j}^{s}$$(5)The final equation of fractional probabilistic FCM, which integrated centroids of previous iteration for updating current iteration centroid, and is given below,

$$J_{j}^{s + 1} = \left( {\delta - 1} \right)\,J_{j}^{s} + \frac{1}{2}\,\delta \,J_{j}^{s - 1} + \frac{1}{6}\,\left( {1 - \delta } \right)\,J_{j}^{s - 2} + \frac{1}{24}\delta \,\left( {1 - \delta } \right)\,\left( {2 - \delta } \right)J_{j}^{s - 3} + \frac{{\sum\limits_{k = 1}^{mn} {\left( {h_{jk}^{s} } \right)^{u} \,.\,r_{k} } }}{{\sum\limits_{k = 1}^{mn} {\,\left( {h_{jk}^{s} } \right)^{u} } }}$$(6)The centroid of past event is denoted as \(J_{j}^{s - 1}\), \(J_{j}^{s - 2}\), and \(J_{j}^{s - 3}\), and the term \(\delta\) refer to fractional constant ranging from \(0\,and\,1\).

-

(c)

Evaluation of partition matrix: The partition matrix is also known as membership, and the is computed using below equation,

$$h_{jk}^{s + 1} = \frac{1}{{\sum\limits_{j = 1}^{a} {\left( {\frac{{\beta_{jk} }}{{\beta_{ik} }}} \right)^{{\frac{1}{u - 1}}} } }}$$(7)for \(1 \le j \le a\); \(1 \le k \le mn\), where \(\mu_{k} \in \gamma\).

-

(d)

Check the stopping criterion: The stopping criterion is done using partition matrix, and partition matrix of current and previous iterations are compared. If the difference of partition matrix is lesser than threshold value, then performs the last iteration. Thus, the stopping criterion is expressed by,

$$If\,\,\,\left\| {J^{s + 1} - J^{s} } \right\| < \kappa$$(8)In case, if the difference is greater than threshold, then the iteration is incremented as \(s = s + 1\) continue from step-2. \(\kappa\) signifies the small real positive constant. Therefore, the partition matrix signifies the membership function for cluster \(j\). The output of segmentation process is the segments that specifies non- tumor and tumor regions. The Fractional probabilistic FCM output is expressed by,

$$q = \left\{ {q_{1} ,\,q_{2} } \right\}$$(9)where, \(q_{1}\) refer to tumor region, and \(q_{2}\) signifies non-tumor region.

3.3 Feature extraction from the segments

The generated non-tumor and tumor regions from segmentation process passes to feature extraction for better classification. Thus, for feature extraction, the features like LDP features, EMD feature, wavelet features, and statistical features, like mean, gini-index, variance, entropy, and the skewness are included. In this paper, the proposed Significant LOOP is additionally added, which is designed by modifying Significant Local Binary Pattern (SLBP) by LOOP. At last, the above-mentioned features form feature vector, that is considered as input to the classifier for tumor level classification.

-

(a)

Local Directional Pattern: The segments obtained from fractional probabilistic FCM is is to be taken as input to LDP [13] in order to generate texture features so that complexity related with the classification is to be mitigated. The texture feature is utilized to represent robust features for tumor level classification; thus, classification accuracy is improved. Consider the image intensity centered at \(\left( {y,z} \right)\) as, \(K_{c}\). However, the intensity of neighbouring pixel is denoted as \(K_{p}\), and the value of \(p\) ranging from \(0\,and\,7\), and the pixel intensity at location \(\left( {y,z} \right)\) at eight directions, and the LDP is computed using the expression given below,

$$LDP\,\left( {y,z} \right) = \sum\limits_{p = 0}^{7} {\mu \left( {K_{c} - K_{p} } \right)\,.2^{p} }$$(10)$$\mu \,\left( \ell \right) = \left\{ {\begin{array}{*{20}c} {1;\quad if\,\,\ell \ge 0} \\ {0;\quad otherwise} \\ \end{array} } \right.$$(11)where, the krish mask is indicated as \(K_{p}\). The neighbourhood pixel value and intensity of center pixel is located at \(\left( {y,z} \right)\) attain greater pixel intensity while compared to neighbouring pixel, or else, pixel value is zero. Hence, texture features related to input image remains in binary values, and then frequency histogram is introduced to specify the image. The LDP histogram feature enable better representation of features for differentiating the image effective. The histogram feature dimension is indicated as, \(\left[ {1 \times 32} \right]\). The histogram representation of LDP features produce better interpretability with dimension \(\left[ {1 \times 64} \right]\).

-

(b)

Empirical Mode Decomposition: The EMD [17] feature is determined without computing the basis function, but it is generated adaptively based on input segment. The function of EMD is about the Intrinsic Mode Functions (IMF) or the feature extraction from decomposed image. Here, the EMD uses the input segment image for decomposing it as the EMD analysis adaptively, and the scales are adjustable. The three steps followed in decomposition are minimal and maximal point determination, curve interpolation point computation based on extreme points, and extracts the signal using IMF. Therefore, the input image is decomposed as residual term and IMF in which the IMF should satisfy the following conditions. The initial condition is that the difference in number of extreme, and zero points are not greater than one, whereas the second condition is related to local mean of both upper and the down envelope that is not near to the zero. Thus, the performance of EMD is based on the condition, and the image residue is identified finally of size \(\left[ {1 \times 32} \right]\).

-

(c)

Wavelet Transform: It is the effective tool to decompose the image as representation that indicates the image details as function of time. It characterizes and compresses data, reduces noise, transient events, and perform several operations related to brain tumor classification. The wavelet transformation is indicated as, \(\varpi\).

$$\varpi \,\left[ {q^{1} } \right] = \left[ {\begin{array}{*{20}c} {LL\,} & {LH} & {HL} & {HH} \\ \end{array} } \right]$$(12)The four bands of data each labelled as LL(Low–Low) HL(High–Low) LH(Low–High) and HH(High–High). The LL subband contains an approximation of the original image while the other subbands contain the missing details.The wavelet transform output is the sub-bands producing high as well as low frequency components with useful information about the image. The size of wavelet transform is denoted as, \(\left[ {1 \times 32} \right]\).

-

(d)

Information theoretic measures: This measure contributes effective tumor levels classification. The information theoretic measures, which includes mean, Gini index, entropy, variance, and skewness. However, these features are extracted from individual tumor segments is expressed by,

$$IM\,\left[ {q_{1} } \right] = \left[ {\begin{array}{*{20}c} {\begin{array}{*{20}c} {\rm M} & \nu \\ \end{array} } & G & {\rm E} & {\rm T} \\ \end{array} } \right]$$(13)where, the mean, variance, Gini index, entropy and skewness, are denoted as \({\rm M}\), \(\nu\), G, E, and \({\rm T}\). The dimension of information theoretic measure of about \(\left[ {1 \times 5} \right]\).

-

(i)

Mean: The mean is computed by taking average of pixels in tumor segment, and is given as,

$${\rm M} = \frac{1}{x}\,\sum\limits_{k = 1}^{x} {\eta_{k} }$$(14)where, the total pixel is represented as \(x\), and \(\eta_{k}\) refer to \(k^{th}\) pixel present in segment.

-

(ii)

Variance: The variance is utilized to measure by summing squared distance of individual pixel value, and mean to total pixels in segment. The variance equation is expressed as,

$$\nu = \frac{1}{y}\,\,\sum\limits_{l = 1}^{y} {\left( {\rho_{l} - \mu } \right)}^{2}$$(15) -

(iii)

Gini-index: Gini index is utilized to measure inequality based on pixel probability that results in improved identification more effective, and is represented as,

$$G = 1 - \sum\limits_{k = 1}^{x} {\Pr ob_{k}^{{}} }$$(16)where, the probability pixel of \(k^{th}\) segment is denoted as \(\Pr ob_{k}^{{}}\), with dimension \(\left[ {1 \times 1} \right]\).

-

(iv)

Entropy: The entropy calculates the entropy of segment where the deviation from entropy measure may report the tumor level. The data of entropy feature is indicated by,\({\rm E}\), and the equation is expressed as,

$${\rm E}\left( {\eta_{k}^{1} } \right) = - \sum\limits_{\alpha = 1}^{{rn\,\left( {\eta_{k}^{1} } \right)}} {R_{\alpha } \,\log \,R_{\alpha } }$$(17)where, the unique attributes of tumor segment is denoted as \(rn\,\left( {\eta_{k}^{1} } \right)\), and \(R_{\alpha }\) refer to probability values.

-

(v)

Skewness: Skewness is defined as measure of symmetry that extends distortion of distribution from normal distribution. The third momentum of distributed data is skewness, and is given by,

$$T = \hbar \,\left[ {\left( {\frac{{\eta_{k} - \gamma }}{{\ell^{2} }}} \right)^{3} } \right]$$(18)where, \(\ell^{2}\) signifies standard deviation, and T is the standard deviation of data.

-

(e)

Proposed significant LOOP: The Significant LOOP (Significant Local Optimal Oriented Pattern) [12] is the new descriptor developed by modifying the Significant Local Binary Pattern (SLBP) by LOOP. This descriptor takes the advantages from Local binary pattern (LBP), and Local Gradient Descent (LGD) such that orientation dependency, and demerits related with empirical assignment values are computed. The intensity of the image \(I\) is denoted by, \(Q^{ * }\) centered at \(\left( {g^{ * } ,h^{ * } } \right)\). The intensity of the neighbourhood pixel is indicated as \(P^{a}\) and \(a\) takes value ranges between \(0\) and \(7\). In addition, the Kirsch mask application produces output in particular direction that enables probability sign in presence of edge in that direction. The output of the eight Kirsch mask is represented by \(m_{b}\), and exponential weight \(\varpi\) is using rank of magnitude \(m_{b}\) from eight kirsch masks. Moreover, the LOOP value for \(\left( {g^{ * } ,h^{ * } } \right)\) pixel is expressed as,

$$Sig_{LOOP} \,\left( {g^{*} ,\,h^{*} } \right) = \sum\limits_{b = 0}^{7} {k\,\left( {Q^{b} - Q^{*} } \right)} \,.\,2^{\varpi }$$(19)where, \(k\left( d \right) = \left\{ {\begin{array}{*{20}c} {1;\quad if\,\,d \ge 0} \\ {0;\quad Otherwise} \\ \end{array} } \right.\).

Hence, the feature extraction output is given by,

where, the term \(B\) signifies the LDP output, \(C^{hist}\) refer to the histogram of LDP feature, the EMD feature output is indicated as \(A\), wavelet feature output is represented as \(W\), proposed significant LOOP is denoted as \(Sig_{LOOP}\), the term \(I\) refer to information theoretic measures output that includes mean \({\rm M}\), variance \(\nu\), Gini index \(G\), entropy \({\rm E}\), and skewness \({\rm T}\). The above-mentioned features extracted from segments of size, \(\left[ {1 \times 165} \right]\). Thus, the feature vector equation is expressed by,

where, the term \(F^{\omega }\) refer to feature vector that is taken as the input to Deep CNN. Therefore, Deep CNN classifier is optimally tuned based on optimization algorithm.

3.4 Chaotic whale-cat swarm optimization-based deep CNN for tumor level detection

3.4.1 Chaotic whale-cat swarm optimization

This research proposes a novel technique, named chaotic whale cat swarm optimization-enabled Deep Convolutional Neural Network for brain tumor classification.The proposed CWCSO algorithm is very effective in classifying the tumor level, but the features, such as mimicking behavior and the hierarchical order in the chaotic concept achieved enhanced performance in terms of both accuracy and robustness. The chaotic whale is used to improve the tracking mode step of the algorithm. Thus, the update equation of CWOA is modified using CSO. Hence, this modification enables the proposed method more efficient with the enhanced performance. The developed CWCSO-enabled Deep CNN is utilized to tune Deep CNN for brain tumor detection that categories into Enhanced tumor, Edema, Core tumor, and the Non-tumor. Here, CWCSO-based Deep CNN is designed by integrating chaotic concept and WCSO.

Once the appropriate features get extracted, tumor level detection is done based on Deep CNN. The feature vector is taken as input of Deep CNN for tumor level extraction. However, the Deep CNN tunes the biases and weights of classifier for improving the classification accuracy. In addition, the Deep CNN is trained by developed optimization method, named CWCSO that is designed newly by incorporating chaotic concept with WCSO, hence achieves minimal convergence time.

-

(a) Architecture of Deep CNN

Deep CNN [16] effectively performs the tumor level classification and achieved optimal classification results. The Deep CNN architecture composed of three different layers, such as pooling layer, convolutional layer, and the fully connected layer. Each layer existing in Deep CNN performs their own operations. The convolutional layer in Deep CNN classifier is utilized to compute feature map, and then sub-sampled by pooling layer. At last, the classification is carried out to find tumor levels based on fully connected layer. With increased number of convolutional layers in Deep CNN classifier, the classification accuracy will be improved. The neuron of the first layer is incorporated with individual neurons at next layer. Figure 2 depicts structure of the deep CNN classifier.

Convolutional layer: The convolutional layer generates the patterns for the femur points by using the convolutional filter, which connects the neurons from the previous layer to the next layer with the help of trainable weights. Let us consider the input of convolutional layer as \(U\) and output of convolutional layer is expressed by,

where, \(\left( {U_{m}^{n} } \right)_{g,h}\) refer to output of \(n{\text{th}}\) convolutional layer or feature map, and \(*\) represents a convolutional operator. \(\varpi_{m,z}^{n}\) represents the weight, and \(T_{m}^{n}\) denotes the bias of \(n{\text{th}}\) convolutional layer.

ReLU layer: ReLU is rectified linear unit that applies the non-saturating activation function. In this case, the negative values are effectively removed from activation map by fixing them to zero. The output produced by \(n - 1{\text{th}}\) layer is passed as input of next \(k{\text{th}}\) convolutional layer, and is given by,

where, the term \(A\) signifies the activation function.

Pooling layer: Pooling layer is otherwise called as non parametric layer, which performs the fixed operation without the usage of weight and bias.

Fully connected layers: The output of pooling layer is forwarded as the input of fully connected layer. The output attained from fully connected layer is expressed by,

where, the term \(\left( {\varpi_{m,z}^{n} } \right)_{x,y}\) represents the weights. The weight values are estimated to tune the Deep CNN so as to retrieve the optimal weight.

-

(b) Training of Deep CNN based on CWCSO

The training of Deep CNN classifier is done using the developed CWCSO for achieving better weight factor. The CWCSO is the combination of CWOA and CSO [8] for selecting the optimal weights effectively. The parametric features obtained from both optimization algorithms enable effective classification performance based on the input image. On the other hand, the CSO is performed using two modes, like seeking, and the tracing mode of cats. The cats in the seeking mode rest with the eye on their surroundings, whereas cats in the tracing mode chase their prey. The seeking mode has the factors such as, Seeking Memory Pool (SMP), Seeking Range of the selected Dimension (SRD), Counts of Dimension to Change (CDC), and Self-Position Consideration (SPC). The chaotic whale is used to improve the tracking mode step of the algorithm. However, global solution is achieved by above-mentioned two modes that are influenced by few control parameters. Thus, the update equation of CWOA is modified using CSO. Hence, this modification enables the solution more efficient with the enhanced performance. The algorithmic procedure of proposed CWCSO is given below.

-

(i)

Initialization: The initial step is population initialization (solutions) and is expressed by,

$${\rm Z} = \left\{ {{\rm Z}_{1} ,{\rm Z}_{2} , \cdots ,{\rm Z}_{c} , \cdots {\rm Z}_{a} } \right\}$$(25)where, \({\rm Z}_{c}\) represent the \(c{\text{th}}\) solution, and \(a\) represents the total number of solutions.

-

(ii)

Fitness function: The computation of fitness function is very essential for determining best solution of epileptic seizure prediction. Hence, the fitness function is estimated using the difference among actual output of classifier and estimated output value. Here, the function with minimal fitness value is considered as the best solution. Accordingly, fitness function is calculated as,

$$MS_{error} = \frac{1}{w}\sum\limits_{e = 1}^{w} {\left( {{\rm K}_{e} - V_{m}^{n} } \right)}$$(26)where, \(V_{m}^{n}\) refer to classifier output, and \({\rm K}_{e}\) signifies estimated output.

-

(iii)

Update the solution using the proposed CWCSO: The proposed CWCSO is updated by modifying the CWOA equation with CSO equation. Thus, the tent chaotic map of CWOA is given as,

$${\rm Z}^{\tau + 1} = \left\{ \begin{gathered} \frac{{{\rm Z}^{\tau } }}{0.7}\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,;\,\,\,{\rm Z}^{\tau } < 0.7 \hfill \\ \frac{10}{3}\left( {1 - {\rm Z}^{\tau } } \right)\,\,\,\,\,\,;{\rm Z}^{\tau } \ge 0.7 \hfill \\ \end{gathered} \right.$$(27)The performance is improved based on update process using CWOA. Hence, integrating CSO derives the optimal solution. Thus, the location of cat in tracing mode is given by,

$${\rm Z}^{\tau + 1} = Z^{\tau } + {\rm I}^{\tau }$$(28)$${\rm Z}^{\tau + 1} = Z^{\tau } + {\rm I}^{\tau } + d_{1} h_{1} \left( {{\rm Z}_{best} - Z^{\tau } } \right)$$(29)$${\rm Z}^{\tau + 1} = Z^{\tau } \left( {1 - d_{1} h_{1} } \right) + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best}$$(30)$$Z^{\tau } = \frac{{{\rm Z}^{\tau + 1} - {\rm I}^{\tau } - d_{1} h_{1} {\rm Z}_{best} }}{{1 - d_{1} h_{1} }}$$(31)Substituting Eq. (31) in Eq. (27th) first condition, the solution becomes,

$$Z^{\tau + 1} = \frac{{{\rm Z}^{\tau + 1} - {\rm I}^{\tau } - d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(32)$$Z^{\tau + 1} = \frac{{{\rm Z}^{\tau + 1} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}} - \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(33)$$\frac{{{\rm Z}^{\tau + 1} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}} - Z^{\tau + 1} = \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(34)$$Z^{\tau + 1} \left( {\frac{1}{{0.7\left( {1 - d_{1} h_{1} } \right)}} - 1} \right) = \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(35)$$Z^{\tau + 1} \left( {\frac{{1 - 0.7 + 0.7d_{1} h_{1} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}} \right) = \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(36)$$Z^{\tau + 1} \left( {\frac{{0.3 + 0.7 + 0.7d_{1} h_{1} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}} \right) = \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.7\left( {1 - d_{1} h_{1} } \right)}}$$(37)$$Z^{\tau + 1} = \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.3 + 0.7\left( {1 - d_{1} h_{1} } \right)}}$$(38)Substituting Eq. (31) in Eq. (27th) second condition,

$${\rm Z}^{\tau + 1} = \frac{10}{3}\left( {1 - \frac{{{\rm Z}^{\tau + 1} - {\rm I}^{\tau } - d_{1} h_{1} {\rm Z}_{best} }}{{1 - d_{1} h_{1} }}} \right)$$(39)$${\rm Z}^{\tau + 1} = \frac{10}{3}\left( {\frac{{1 - d_{1} h_{1} - {\rm Z}^{\tau + 1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{1 - d_{1} h_{1} }}} \right)$$(40)$${\rm Z}^{\tau + 1} = \frac{10}{3}\frac{{{\rm Z}^{\tau + 1} }}{{1 - d_{1} h_{1} }} + \frac{10}{3}\left( {\frac{{1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{1 - d_{1} h_{1} }}} \right)$$(41)$${\rm Z}^{\tau + 1} + \frac{10}{3}\frac{{{\rm Z}^{\tau + 1} }}{{1 - d_{1} h_{1} }} = \frac{10}{3}\left( {\frac{{1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{1 - d_{1} h_{1} }}} \right)$$(42)$${\rm Z}^{\tau + 1} \left( {1 - \frac{10}{{3\left( {1 - d_{1} h_{1} } \right)}}} \right) = \frac{10}{{3\left( {1 - d_{1} h_{1} } \right)}}\left( {1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} } \right)$$(43)$${\rm Z}^{\tau + 1} \left( {\frac{{3 - 3d_{1} h_{1} - 10}}{{3\left( {1 - d_{1} h_{1} } \right)}}} \right) = \frac{10}{{3\left( {1 - d_{1} h_{1} } \right)}}\left( {1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} } \right)$$(44)$${\rm Z}^{\tau + 1} \left( { - 3d_{1} h_{1} - 7} \right) = 10\left( {1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} } \right)$$(45)$${\rm Z}^{\tau + 1} = \frac{10}{{3d_{1} h_{1} + 7}}\left( {1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} } \right)$$(46)$${\rm Z}^{\tau + 1} = \left\{ \begin{gathered} \frac{{{\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} }}{{0.3 + 0.7\left( {1 - d_{1} h_{1} } \right)}}\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,;\,\,\,{\rm Z}^{\tau } < 0.7 \hfill \\ \frac{10}{{3d_{1} h_{1} + 7}}\left( {1 - d_{1} h_{1} + {\rm I}^{\tau } + d_{1} h_{1} {\rm Z}_{best} } \right)\,\,\,\,\,\,;{\rm Z}^{\tau } \ge 0.7 \hfill \\ \end{gathered} \right.$$(47)where, \({\rm Z}_{best}\) represents the position of at who has the best fitness value, \(d_{1}\) represents the random value varies in the range (0,1), \(h_{1}\) represents the constant, \(Z^{\tau }\) represents the position of cat at \(\tau {\text{th}}\) iterations.

-

(iv)

Computation of optimal solution: Once location of solution is updated, solution space performs fitness function evaluation. In this case, the optimal solution is determined by identifying the location providing the minimal fitness. Once new solution is identified, the best solution replaces the older solution.

-

(v)

Termination: The above-mentioned steps are continued till the best solution is obtained for brain tumor classification. Algorithm 1 portrays the pseudo code of developed model.

4 Results and discussion

This section elaborates comparison of developed strategy with classical strategies in terms of metrics.

4.1 Experimental setup

The execution of the developed method is done in MATLAB tool using PC with the Windows 10 OS, 2 GB RAM, and Intel i3 core processor.

4.2 Dataset description

The experimentation is done based on BRATS database [29]. This dataset holds two grades of the tumor images. In this case, each patient is offered with four various modalities, which includes T1, T2, T1C and the FLAIR. In addition, performance of developed model is analyzed with this dataset, and the comparative analysis is carried out based on performance metrics based on four images. Each modality possess 130 to 176 slices of brain that are considered in the analysis.

4.3 Evaluation metrics

The performance of developed CWCSO-based Deep CNN is computed based on three metrics, like specificity, accuracy, and sensitivity.

-

a)

Accuracy: The accuracy is defined to measure degree of the closeness of the estimated value related to their original value in optimal brain tumor classification, and is formulated by,

where, the term \({\rm T}_{p}\) refer to true positive, \({\rm K}_{p}\) signifies false positive, \({\rm T}_{n}\) indicates true negative and \({\rm K}_{n}\) refer to false negative, respectively.

-

b)

Sensitivity: It is utilized to measure the ratio of the positives, which are determined correctly by classifier, and is expressed by,

-

iii)

Specificity: It is utilized as ratio of the negatives that are identified correctly by classifier and is illustrated as,

-

iv)

Receiver Operating Characteristics (ROC) curve: ROC is graphical representation of relationship existing among TPR and TNR, and it is the measure that pictures the performance of the system.

ROC is graphical representation of relationship existing among TPR and TNR, and it is the measure that pictures the performance of the system.

4.3.1 TPR-True Positive Rate

The True Positive Rate defines how many correct positive results occur among all positive samples available during the test. The true-positive rate is also known as sensitivity.

4.3.2 TNR-True Negative Rate

The True Negative Rate is the proportion of the units with a known negative condition for which the predicted condition is negative. This rate is often called the specificity.

4.4 Simulation analysis

The simulation results of developed CWCSO-based Deep CNN is portrayed in Fig. 3. Figure 3a) refer to input image taken from BRATS 2018 dataset. Figure 3b) depicts segmented output image based on Fractional probabilistic Fuzzy Clustering, and the Fig. 3c) portrays output of the significant LOOP.

4.4.1 Simulation process

The simulation process in Fig. 3 can be represented as follows, Input image is given for segmentation using Fractional probabilistic Fuzzy Clustering approach for the four images and obtain the tumor segmented image. The obtained segmented image is again given for segmentation using Proposed Significant LOOP output to get the better tumor segmented image. These features form the feature vectors.

4.5 Comparative methods

The performance of developed approach is computed by comparing the developed with existing techniques, such as K-Nearest Neighbors (KNN) [28], Neural Networks (NN) [23], Multi-Support vector machine (MultiSVM) [38], Multi-Support Vector Neural Networks (Multi-SVNN) [26], Deep Belief Neural Networks (DBN) [41], Bayesian Fuzzy clustering approach + Harmony-Crow Search (HCS) Optimization + Multi-Support Vector Neural Networks (Bayesian HCS-MultiSVNN) [34], Whale-Cat Swarm Optimization based Deep Belief Network (WCSO-DBN), Deep Recurrent Neural Network (Deep RNN), respectively.

4.6 Comparative analysis

This section describes comparative analysis of developed CWCSO-based Deep CNN approach with respect to specificity, accuracy, and sensitivity metric with different training data percentage based on Image-1, Image-2, Image-3, and Image-4.

-

(a)

Analysis using Image-1

Table 1 represents the specificity, accuracy and sensitivity of various methods for 80% training data and ROC of the proposed CWCSO-enabled Deep CNN for the minimal of 10% as FPR, TPR obtained by various methods. Figure 4 illustrates the analysis of methods with different training data considering specificity, accuracy, and sensitivity parameters based on Image-1.

-

(b)

Analysis using Image-2

Table 2 represents the specificity, accuracy and sensitivity of various methods for 80% training data and ROC of the proposed CWCSO-enabled Deep CNN for the minimal of 10% as FPR, TPR obtained by various methods.

The analysis of methods with different training data considering specificity, accuracy, and sensitivity parameters based on Image-2 is illustrated in Fig. 5.

-

(iii)

Analysis using Image-3

Table 3 represents the specificity,accuracy and sensitivity of various methods for 80% training data and ROC of the proposed CWCSO-enabled Deep CNN for the minimal of 10% as FPR, TPR obtained by various methods.

Figure 6 illustrates the analysis of methods with different training data considering specificity, accuracy, and sensitivity parameters based on Image-3.

-

(iv)

Analysis using Image-4

Table 4 represents the specificity,accuracy and sensitivity of various methods for 80% training data and ROC of the proposed CWCSO-enabled Deep CNN for the minimal of 10% as FPR, TPR obtained by various methods.

Figure 7 portrays analysis of methods with different training data considering specificity, accuracy, and sensitivity parameters based on Image-4.

4.7 Comparative discussion

Table 5 elaborates analysis of maximum performance attained by the methods by changing training data percentage considering performance metrics. The maximal specificity attained by the developed CWCSO-enabled Deep CNN with value of 98.59%, whereas specificity of existing KNN is 73%, NN is 94.37%, MultiSVM is 94.43%, Multi-SVNN is 96%, DBN is 96%, Bayesian HCS-MultiSVNN is 96%, WCSO-DBN is 96%, and Deep CNN is 98.58%. The maximal accuracy computed by proposed CWCSO-based Deep CNN with a value of 95.52%, whereas the accuracy of existing KNN is 60%, NN is 88.35%, MultiSVM is 88.50%, Multi-SVNN is 92%, DBN is 92%, Bayesian HCS-MultiSVNN is 92%, WCSO-DBN is 92.75%, and Deep CNN is 94.45%. In addition, the maximal sensitivity value measured by CWCSO-based Deep CNN is 97.37%, whereas the existing KNN is 70%, NN is 70%, MultiSVM is 70%, Multi-SVNN is 94.99%, DBN is 94.99%, Bayesian HCS-MultiSVNN is 95%, WCSO-DBN is 95%, and Deep CNN is 97.36%, respectively.

5 Conclusion

This paper presents the CWCSO-enabled Deep CNN using MRI for brain tumor classification. Here, pre-processing is initially done by input image to improve quality of the image. Furthermore, Fractional probabilistic Fuzzy Clustering is introduced for segmentation for producing the segments using the pre-processed image. The segments are then subjected to feature extraction module, which is performed based on wavelet transform, scattering transform, EMD, LDP, information theoretic measures, and proposed Significant LOOP. This new descriptor is developed through modifying SLBP by LOOP. After feature extraction, Deep DNN is introduced for classification that is trained by the proposed optimization method, termed CWCSO. The CWCSO is designed newly by integrating chaotic concept and WCSO that categories into non-tumor, tumor, enhanced tumor, and edema. Hence, the approach is employed for diagnosing brain tumor based on proposed CWCSO-based Deep CNN from the MRI images. The experimentation of the developed model is performed using BRATS dataset. The proposed model provides superior performance with maximal specificity of 98.59%, maximal accuracy of 95.52%, and maximal sensitivity of 97.37%. In future work advanced optimization with the deep learning techniques will be included in computing to increase the efficiency of proposed method.

References

Abd-Ellah MK, Awad AI, Khalaf AAM, Hamed HFA (2016) Design and implementation of a computer-aided diagnosis system for brain tumor classification. In: ICM2016: 28th international conference on microelectronics. pp 73–76

Ahmadvand A, Daliri MR, Zahiri SM (2017) Segmentation of brain MR images using a proper combination of DCS based method with MRF. Multimedia Tools Appl 77(7):8001–8018

Amin J, Sharif M, Yasmin M, Fernandes SL (2017) A distinctive approach to brain tumor detection and classification using MRI. Pattern Recognit Lett 10:116–156

Anbeek P, Vincken KL, Viergever MA (2008) Automated MS-lesion segmentation by K-nearest neighbor classification. Midas J 1–8

Angulakshmi M, Priya GGL (2018) Brain tumour segmentation from MRI using superpixels based spectral clustering. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2018.01.009

Anitha V, Murugavalli S (2016) Brain tumour classification using two-tier classifier with adaptive segmentation technique. IET Comput Vis 10(1):9–17

Badran EF, Mahmoud EG, Hamdy N (2010) An algorithm for detecting brain tumors in MRI images. In: Proceedings, ICCES’2010—2010 international conference on computer engineering and systems. pp 368–373.

Bahrami M, Bozorg-Haddad O, Chu X (2018) Cat Swarm Optimization (CSO) algorithm. In: Advanced Optimization by Nature-Inspired Algorithms, Computational Intelligence, pp 9–18

Bauer S, Wiest R, Nolte LP, Reyes M (2013) A survey of MRI-based medical image analysis for brain tumor studies. Phys Med Biol 58(13):R97–R129

Bhaladhare PR, Jinwala DC (2018) A clustering approach using fractional calculus-bacterial foraging optimization algorithm for k-anonymization in privacy preserving data mining. Adv Comput Eng 2014:1–12

Cabria I, Gondra I (2017) MRI segmentation fusion for brain tumor detection. Inf Fusion 36:1–9

Chakraborti T, Mccane B, Mills S, Pal U (2017) Loop descriptor : encoding repeated local patterns for fine-grained visual identification of Lepidoptera. IEEE Signal Process Lett 1–5

Chakraborti T, McCane B, Mills S, Pal U (2018) LOOP descriptor: local optimal-oriented pattern. IEEE Signal Process Lett 25(5):635–639

Charutha S, Jayashree MJ (2014) An efficient brain tumor detection by integrating modified texture based region growing and cellular automata edge detection. In: 2014 international conference on control, instrumentation, communication and computational technologies (ICCICCT 2014). pp 1193–1199

Chen H, Qin Z, Ding Y, Tian L, Qin Z (2020) Brain tumor segmentation with deep convolutional symmetric neural network. Neurocomputing 392:305–313

Dian R, Li S, Guo A, Fang L (2018) deep hyperspectral image sharpening. IEEE Trans Neural Netw Learn Syst 29(11):5345–5355

Huang S, Zhang Y, Liu Z (2016) Image feature extraction and analysis based on Empirical mode decomposition. In: Proceedings of 2016 IEEE advanced information management, communicates, electronic and automation control conference, IMCEC 2016, no. 3. pp 615–619

Ilunga-Mbuyamba E, Avina-Cervantes JG, Cepeda-Negrete J, Ibarra-Manzano MA, Chalopin C (2017) Automatic selection of localized region-based active contour models using image content analysis applied to brain tumor segmentation. Comput Biol Med 91:69–79

Ismael MR, Abdel-Qader I (2018) Brain tumor classification via statistical features and back-propagation neural network. In: IEEE international conference on electro/information technology. pp 252–257

Jason J et al (2008) Efficient multilevel brain tumor segmentation with integrated bayesian model classification. IEEE Trans Med Imaging 27(5):629–640

John P (2012) Brain tumor classification using wavelet and texture based neural network. Int J Sci Eng Res 3(10):1–7

Karnan M, Logheshwari T (2010) Improved implementation of brain MRI image segmentation using Ant Colony system. In: 2010 IEEE international conference on computational intelligence and computing research (ICCIC 2010). pp 722–725

Kharat KD, Kulkarni PP, Nagori MB (2012) Brain tumor classification using neural network based methods. Int J Comput Sci Inform 1(4):85–90

Kumar Mallick P, Ryu SH, Satapathy SK, Mishra S, Nguyen GN, Tiwari P (2019) Brain MRI image classification for cancer detection using deep wavelet autoencoder-based deep neural network. IEEE Access 7:46278–46287

Lavanyadevi R, MacHakowsalya M, Nivethitha J, Niranjil Kumar A (2017) Brain tumor classification and segmentation in MRI images using PNN. In: Proceedings of the 2017 IEEE international conference on electrical, instrumentation and communication engineering (ICEICE 2017), vol 2017-December. pp 1–6

Ludwig O, Nunes U, Araujo R (2014) Eigenvalue decay : a new method for neural network regularization. Neurocomputing 124:33–42

Mathew AR, Anto PB (2018) Tumor detection and classification of MRI brain image using wavelet transform and SVM. In: Proceedings of the IEEE international conference on signal processing and communication (ICSPC 2017), vol 2018-January, no. July. pp 75–78

Meenakshi R, Anandhakumar P (2015) a hybrid brain tumor classification and detection mechanism using Knn and Hmm. Curr Med Imaging Rev 11(2):70–76

Menze BH et al (2015) The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans Med Imaging 34(10):1993–2024

Naik J, Patel PS (2013) Tumor detection and classification using decision tree in brain MRI. Int J Eng Dev Res 14:49–53

Nefti S, Oussalah M (2004) Probabilistic-fuzzy clustering algorithm. In: IEEE international conference on systems, man and cybernetics, vol 5. pp 4786–4791

Nilesh et al (2017) Image analysis for MRI based brain tumor detection and feature extraction using biologically inspired BWT and SVM. Int J Biomed Imaging. https://doi.org/10.1155/2017/9749108

Parveen, Singh A (2015) Detection of brain tumor in MRI images, using combination of fuzzy c-means and SVM. In: 2nd international conference on signal processing and integrated networks (SPIN) 2015. pp 98–102

Raju AR, Suresh P, Rao RR (2018) Bayesian HCS-based multi-SVNN: a classification approach for brain tumor segmentation and classification using Bayesian fuzzy clustering. Biocybern Biomed Eng 38(3):646–660

Rashid MHO, Mamun MA, Hossain MA, Uddin MP (2018) Brain tumor detection using anisotropic filtering, SVM classifier and morphological operation from MR images. In: International conference on computer, communication, chemical, material and electronic engineering (IC4ME2 2018). pp 3–6

Sajid S, Hussain S, Sarwar A (2019) Brain tumor detection and segmentation in MR images using deep learning. Arab J Sci Eng 44(11):9249–9261

Sharmila R, Suresh Joseph K (2018) Brain tumour detection of MR image using Naïve Beyer classifier and support vector machine. Int J Sci Res Comput Sci Eng Inf Technol 3(3):2456–3307

Suhag S, Saini LM (2015) Automatic brain tumor detection and classification using SVM classifier. Int J Adv Sci Eng Technol 3(1):119–123

Thillaikkarasi R, Saravanan S (2019) An enhancement of deep learning algorithm for brain tumor segmentation using kernel based CNN with M-SVM. J Med Syst 43(4):1–7

Usman K, Rajpoot K (2017) Brain tumor classification from multi-modality MRI using wavelets and machine learning. Pattern Anal Appli 20:871–881

Vojt J (2016) Deep neural networks and their implementation. Charles University, Prague

Zulpe N, Pawar V (2012) GLCM textural features for brain tumor classification. Int J Comput Sci Issues 9(3):354–359

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Jemimma, T.A., Raj, Y.J.V. Significant LOOP with clustering approach and optimization enabled deep learning classifier for the brain tumor segmentation and classification. Multimed Tools Appl 81, 2365–2391 (2022). https://doi.org/10.1007/s11042-021-11591-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-021-11591-8