Abstract

Human face recognition has been an active research area for the last few decades. Especially during the last five years, it has gained significant research attention from multiple domains, such as computer vision, machine learning and artificial intelligence, owing to its remarkable progress and broad social applications. The primary goal of any face recognition system is to recognize human identity from static images, video data and data streams, together with knowledge of the context in which these data are used. In this review, we highlight major applications, challenges and trends of face recognition systems in social and scientific domains. The prime objective of this research is to summarize recent face recognition techniques and develop a broad understanding of how these techniques behave on different datasets. Moreover, we discuss key challenges such as variability in illumination, pose, aging, cosmetics, scale, occlusion and background. Along with classical face recognition techniques, the most recent research directions, i.e., deep learning, sparse models and fuzzy set theory, are investigated in depth. Basic methodologies are discussed briefly, while contemporary research contributions are examined in greater detail. Finally, this research presents future aspects of face recognition technologies and their potential significance in the upcoming digital society.


1 Introduction

The face has served as a marker of human identity for centuries. It has been one of the most actively studied topics in computer vision and machine learning research for more than five decades. Compared with other common biometrics such as iris, retina or fingerprint based identification, face recognition can identify uncooperative subjects efficiently, because a face image can be captured at a distance without the subject's cooperation. In recent years, many successful results have been reported for images captured in controlled environments, but in unconstrained environments with variable illumination and diverse poses, face recognition remains a complex and challenging problem [65, 73, 168, 206].

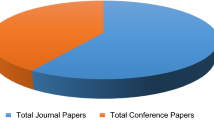

Face recognition has been an active research area for the past few decades. Especially during the last five years, it has emerged as one of the most flourishing research topics in image processing, computer vision, machine learning, artificial intelligence and visual surveillance, owing to its broad social, scientific and commercial applications. The primary goal of a face recognition system is to recognize human identity from static images [196], video data [264] or data streams [183], together with knowledge of the context in which these data are used. Owing to rapid progress in sensor and imaging technologies, face recognition systems are now widely used in real-world applications, including human-computer interaction, human-robot interaction, person identification for security purposes, mobile biometrics, law enforcement, voter identification, counter-terrorism, border control and immigration, day-care centers, social security, banking and e-commerce [93, 132, 138]. With such a wide range of applications, face recognition has received remarkable attention from both commercial stakeholders and research communities, and there is a strong demand for robust algorithms capable of dealing with complex real-world situations. As illustrated in Fig. 1, the number of peer-reviewed articles rose continuously from 2000 to 2020; we collected these data using the keywords “face recognition” and “face identification” from two well-known bibliographic databases, dblp.org and Web of Science. Many literature reviews on face recognition and identification have been published during the last three decades, and several recent ones [47, 93, 96, 200, 219, 229, 281, 284] have also been considered here. The main contributions of this paper are summarized as follows:

-

In recent years, many surveys [1, 5, 17, 38, 44, 45, 52, 79, 149, 217, 229, 258, 285] have covered only limited aspects of the face recognition literature, such as pose [52, 285], illumination [79], dimension [1, 38], feature types [229, 258] and occlusion [17, 44]. We attempt to cover all aspects of face recognition technology, from past and present work to future directions, so that this review can serve as a benchmark for next-generation researchers entering this domain. To the best of our knowledge, this is a unique effort in recent years.

-

We conduct a comprehensive analysis and cross-comparison of more than 32 classical (Table 3), 24 deep learning (Table 4), 16 dictionary learning (Table 5) and 8 fuzzy logic (Table 6) based face recognition approaches. We also summarize the recognition rate of each approach by category (Tables 3-6, respectively) to give insight into the experimental results of existing models.

-

Because many literature reviews already cover classical face recognition techniques (work done before 2015), we summarize the classical work concisely and discuss recent deep learning, dictionary learning and fuzzy logic based face recognition techniques in more detail.

-

We organize face recognition approaches into a novel categorization (Fig. 6) and, within each category, present the developments in chronological order.

-

We provide an integrated overview of face recognition research, state-of-the-art technologies, common algorithms, popular vendors (Table 2) and recent face datasets (Table 1) in use today.

The remainder of this review is organized as follows. Before going into the details of traditionally used algorithms, Section 2 presents a general overview of a face recognition system. Section 3 introduces popular publicly available face recognition datasets. Section 4 discusses leading face recognition technology vendors around the globe and key application areas of face recognition technologies, and Section 5 describes the major challenges. Section 6 then categorically presents four frameworks of face recognition methodologies. Finally, Section 7 discusses future research directions and Section 8 concludes the paper.

2 A general face recognition system

Generally, a face recognition system treats the recognition of an input image as a classification problem. An overview of a face recognition system is presented in Fig. 2.

2.1 Acquisition and preprocessing

In any image processing system for feature extraction and image understanding, image acquisition and preprocessing are the first steps. Target images are extracted from the input source, which may be a video stream or static images. Image preprocessing is an integral part of image processing systems; it helps obtain accurate results and reduce noise [13]. At this stage, systems face several challenges that can hinder the overall process, especially in unconstrained environments, such as varying image background, pose, aging, illumination and expression. During the last two decades, various datasets have been developed that offer diverse image sets for testing the reliability of newly proposed algorithms, and a substantial amount of work has addressed these challenges [13, 121, 228, 285]. Image preprocessing also depends on the application area. For example, acquiring a clear face image of a smartphone user is much less challenging than using an image taken in a crowded place such as an airport to detect a suspicious person. Image preprocessing is always recommended as one of the initial stages, because the performance of feature extraction depends on it. In unconstrained environments, where learning depends on environmental settings, post-processing can also be considered to remove noise from the given image.
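As an illustration of the preprocessing stage, the sketch below applies global histogram equalization, one common way of normalizing contrast and reducing the effect of uneven lighting before feature extraction. It is a minimal sketch that assumes an 8-bit grayscale face crop held in a NumPy array; the function name `equalize_histogram` is hypothetical, and the cited works may use different preprocessing.

```python
import numpy as np


def equalize_histogram(image: np.ndarray) -> np.ndarray:
    """Global histogram equalization of a 2-D uint8 grayscale image."""
    if image.dtype != np.uint8 or image.ndim != 2:
        raise ValueError("expected a 2-D uint8 grayscale image")
    # Count how often each of the 256 intensity levels occurs.
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest level present
    total = image.size
    if total == cdf_min:
        # Constant image: there is no contrast to stretch.
        return image.copy()
    # Map each level so the output CDF is approximately uniform on [0, 255].
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]  # apply the lookup table pixel-wise
```

The mapping here is global; tile-based (local) variants are often preferred when lighting is strongly uneven across the face, but they are not shown.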

2.2 Face detection

Face detection is the process of localizing and extracting the face region from the background. It is another essential step in the overall facial recognition process and has been well studied in computer vision [167]. Early face detection approaches such as the Viola-Jones face detector [252] were able to detect facial regions in input images in real time. Face detection has since become an active research area and a major part of any visual face understanding framework, and numerous research efforts [35, 49, 167, 181, 197, 305] have addressed robust face detection with a variety of algorithms in recent years.
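The Viola-Jones detector [252] owes much of its real-time speed to Haar-like rectangle features evaluated on an integral image (summed-area table), where the sum over any rectangle costs four array lookups regardless of its size. The sketch below shows only that building block, assuming NumPy; the boosted classifier cascade that decides whether a window contains a face is omitted, and the helper names are hypothetical.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Summed-area table with an extra leading row and column of zeros."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, top: int, left: int, height: int, width: int) -> int:
    """Sum of img[top:top+height, left:left+width] using four lookups."""
    bottom, right = top + height, left + width
    return int(ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left])


def two_rect_feature(ii: np.ndarray, top: int, left: int, height: int, width: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half.

    `width` is assumed to be even so both halves have the same size.
    """
    half = width // 2
    return (rect_sum(ii, top, left, height, half)
            - rect_sum(ii, top, left + half, height, half))
```

In the full detector, many such features are evaluated at many positions and scales, and AdaBoost selects and combines the most discriminative ones into a cascade of classifiers.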

2.3 Feature extraction

Feature extraction is a core activity of any face recognition system and has a significant effect on overall system performance. Various feature extraction models have been developed, such as SIFT, STIP and STISM, often paired with classifiers such as SVM [13, 71, 94]. We discuss these and many other recent feature extractors and descriptors later in this paper. Feature extraction methods have been classified along several dimensions, such as global vs. local methods [229], hand-crafted vs. learning-based methods, and 2D vs. 3D methods [127].
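To make the local, hand-crafted category concrete, the sketch below computes the basic 3 x 3 Local Binary Pattern (LBP) operator, which Section 5.1 mentions as a local approach, and builds a descriptor by concatenating per-cell code histograms. It assumes a 2D grayscale NumPy array; the 4 x 4 grid and the function names are illustrative choices rather than settings taken from the cited works.

```python
import numpy as np


def lbp_codes(gray: np.ndarray) -> np.ndarray:
    """Basic LBP: compare each interior pixel with its 8 neighbours (radius 1)."""
    g = gray.astype(np.int32)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    # Neighbour offsets (dy, dx), clockwise from the top-left; each sets one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes.astype(np.uint8)  # values 0..255, shape (h-2, w-2)


def lbp_descriptor(gray: np.ndarray, grid=(4, 4)) -> np.ndarray:
    """Concatenate normalized 256-bin LBP histograms over a grid of cells.

    Assumes the image is large enough that every grid cell is non-empty.
    """
    codes = lbp_codes(gray)
    hists = []
    for band in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(band, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(np.float64)
            hists.append(hist / cell.size)  # each cell histogram sums to 1
    return np.concatenate(hists)
```

Keeping one histogram per cell preserves coarse spatial layout (eyes, nose, mouth regions), which a single whole-face histogram would discard.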

2.4 Feature selection

In most cases, a feature extractor or descriptor generates a large feature space for a single image, and the problem becomes more complex when a person must be recognized from a video stream. This large feature space is further processed with PCA, SVD, MDS, LDA and LDR [43, 296] to select the principal features and reduce dimensionality. This improves system performance because it reduces the overall computational cost and the time needed for recognition.
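PCA, the first technique listed above and the basis of the eigenface method cited in Section 5.2, can be sketched via a singular value decomposition of the mean-centered feature matrix, which avoids forming the full covariance matrix when features outnumber samples. This is a minimal NumPy sketch with hypothetical function names; the number of retained components `k` is an assumption to be tuned per application, and the other listed techniques are not shown.

```python
import numpy as np


def fit_pca(X: np.ndarray, k: int):
    """Fit PCA on X with shape (n_samples, n_features).

    Returns the mean vector, the top-k principal axes (rows, unit length) and
    the fraction of total variance each retained axis explains.
    """
    mean = X.mean(axis=0)
    # Thin SVD of the centred data; rows of vt are the principal directions,
    # already sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return mean, vt[:k], explained[:k]


def project(X: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Reduce each row of X to k coordinates in the PCA subspace."""
    return (X - mean) @ components.T
```

For eigenfaces, each row of `X` would be a vectorized, aligned face image, and each row of the returned components, reshaped to the image size, is one eigenface.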

2.5 Feature matching

Once the principal features of the input face image have been obtained, they are iteratively matched against an existing feature database. As shown in Fig. 2, face identity recognition is an iterative process in which the system retrieves the matching identity from the database. Many well-known classification techniques, such as SVM, RBFNN, NC-K-mean, PNI and CNN [13, 241], are used for feature matching.
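The classifiers named above differ widely, but the simplest form of matching against a feature database is a nearest-neighbour search. The sketch below assumes feature vectors have already been extracted (for example, after a dimensionality reduction step), scores a probe against every gallery vector by cosine similarity, and rejects the match if the best score falls below a threshold. The function name and the default threshold of 0.5 are hypothetical; in practice the threshold would be tuned on validation data.

```python
import numpy as np


def identify(probe, gallery, labels, threshold=0.5):
    """Match one probe feature vector against a gallery of enrolled vectors.

    probe: shape (d,); gallery: shape (n, d); labels: n identity names.
    Returns (label, score), or (None, score) when the best cosine similarity
    is below `threshold` (treated as an unknown person).
    """
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    p = probe / np.linalg.norm(probe)
    scores = g @ p  # cosine similarity with every gallery entry
    best = int(np.argmax(scores))
    if scores[best] < threshold:
        return None, float(scores[best])
    return labels[best], float(scores[best])
```

For example, with a two-entry gallery `[[1, 0], [0, 1]]` labelled `"alice"` and `"bob"`, the probe `[0.9, 0.1]` is matched to `"alice"` with a similarity of about 0.99, while `[-1, -1]` is rejected as unknown.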

3 Known benchmark databases

Performance evaluation of face recognition systems is one of the key research challenges. A large number of face recognition benchmarks have been established and made publicly available to facilitate the evaluation of newly proposed algorithms. Table 1 summarizes the most widely used 2D and 3D face databases. Each dataset is characterized by attributes such as the total number of images, the number of subjects, color (gray or RGB), the reported resolution of subject images and the year of release. Additionally, we give a very brief introduction to each dataset and note a few of its limitations in light of the literature studied for this survey.

-

MS-Celeb-1M [82]: Microsoft Celeb is a face image database of around 8.2 million face images harvested from the internet to develop face recognition technologies. It is one of the largest publicly available face image collections, covering around 100,000 individuals. Most of the people in this dataset are American and British actors and popular internet celebrities. Since the source images were not purposefully recorded, the dataset offers very limited systematic coverage of illumination and pose variation.

-

PICS [244]: The Psychological Image Collection at Stirling (PICS) is another large collection of face images, maintained especially for research on facial expressions. The images are organized into sets, each containing facial images as well as images of other objects. The presence of non-face images can complicate its use with specialized facial recognition techniques.

-

CAS-PEAL [64]: The Chinese Academy of Sciences Pose, Expression, Accessory, and Lighting (CAS-PEAL) face dataset was constructed by the Chinese Academy of Sciences as a key project of the National Hi-Tech Program. It was created to build a central repository of face images from different Chinese ethnic groups. It covers pose, expression and illumination variation well but is limited for challenges such as aging and occlusion. Also, because it contains only Chinese faces, it limits the scope of algorithm evaluation.

-

SoF [4]: The Specs on Faces (SoF) dataset contains facial images with variable pose, illumination and occlusion effects, and the collection specifically targets variable illumination and occlusion. Moreover, three image filters that may evade face detectors and facial recognition systems were applied to each image. All generated images are categorized into three levels of difficulty: easy, medium and hard.

-

CMU-PIE [225]: The Carnegie Mellon University Pose, Illumination and Expression (CMU-PIE) dataset was collected between October and December 2000. Each subject in the dataset has images in 13 different poses, under 43 different illumination conditions and with 4 different expressions. In the initial version, all images were recorded in a single session, so the dataset does not capture aging variation.

-

FERET [191]: The Facial Recognition Technology (FERET) dataset was initially collected through a collaborative effort between Dr. Harry Wechsler at George Mason University and Dr. Jonathan Phillips at the Army Research Laboratory in Adelphi, Maryland. Although later versions have been improved, it still has certain limitations for state-of-the-art challenges.

-

LFW [103]: The Labeled Faces in the Wild (LFW) dataset is widely used in the academic literature for evaluating general-purpose face recognition algorithms. Its downside is the limited number of images per subject for state-of-the-art challenges such as illumination, pose and occlusion variation.

-

SCface [77]: Surveillance Cameras Face Database (SCface) is a face image dataset purposefully recorded in an uncontrolled indoor environment using five cameras of varying quality. The collection involves only 130 individuals, which is a major drawback for machine learning based algorithms.

-

AR [172]: The AR face database was created by Aleix Martinez and Robert Benavente at the Computer Vision Center (CVC) of the U.A.B. It contains over 4,000 color images of 126 individuals (70 men and 56 women). The images show frontal faces with different facial expressions, illumination conditions and occlusions (sunglasses and scarf). Each subject was photographed in two sessions separated by 14 days.

-

FEI [242]: FEI is a Brazilian face dataset containing images purposefully recorded between June 2005 and March 2006 at the Artificial Intelligence Laboratory of FEI in Sao Bernardo do Campo, Sao Paulo, Brazil. Most subjects are students and staff at FEI, aged between 19 and 40 years, with variable pose, appearance and hairstyle. All images were taken against a homogeneous white background, which does not represent diverse real-world conditions.

-

NIST-GBU [192]: The National Institute of Standards and Technology Good, Bad and Ugly (NIST-GBU) face dataset was part of the Face and Ocular Challenge Series (FOCS) project. It consists of three frontal still face images for each participant, with variable illumination in both indoor and outdoor environments.

-

Oulu Physics [171]: The University of Oulu Physics-Based face dataset was collected at the Machine Vision and Media Processing Unit, University of Oulu. It has two distinctive properties: first, 16 different cameras with variable illumination conditions were used to record the images; second, the camera channel responses and the spectral power distributions of the illuminants are also provided. The database may be of general interest to face recognition researchers and of specific interest to color researchers.

-

BANCA [18]: The Biometric Access Control for Networked and E-Commerce Applications (BANCA) face database was collected as part of the European BANCA project. Both high- and low-quality microphones and cameras were used for recording. The subjects were recorded in three different scenarios (controlled, degraded and adverse) over 12 sessions spanning three months.

-

Yale B [70]: Yale and Yale B are widely used in the academic literature for evaluating face recognition algorithms. The original Yale face dataset has only 165 grayscale images of 15 individuals in GIF format. The extended version, Yale B, contains more participants, with 64 illumination conditions and 9 pose angles per subject.

-

MIT-CBCL [220]: The MIT-CBCL face recognition database contains face images of 10 subjects. It provides two training sets. The first contains high-resolution images with frontal, full-face and half-face views. The second is synthetic: it contains 324 images per subject generated from 3D head models of the 10 subjects. The head models were generated by fitting a morphable model to the high-resolution training images.

-

AT&T/ORL [64]: The AT&T Database of Faces, formerly known as the ORL face database, contains 10 images of each of 40 distinct subjects. The images were recorded between April 1992 and April 1994 by the Speech, Vision and Robotics Group of the Cambridge University Engineering Department. They were taken at different times with varying lighting, facial expressions (eyes open or closed, smiling or not smiling) and facial details (glasses or no glasses). All images were taken against a dark homogeneous background with the subjects in an upright, frontal position.

-

FRGC [190]: The Face Recognition Grand Challenge (FRGC) dataset contains both 2D and 3D facial images. Each subject was recorded in several sessions, each consisting of four controlled still images, two uncontrolled still images and one three-dimensional image.

-

UoY-2 [245]: The University of York 3D face database was gathered to facilitate research into 3D face recognition. Currently, only a limited part of the dataset is publicly available, and it has certain limitations regarding illumination and aging variation.

-

BU-3DFE [93]: The Binghamton University 3D Facial Expression (BU-3DFE) dataset is a collection of 3D face images of White, Black, central Asian, Indian, Hispanic and Latino people, but it is narrow in terms of pose and illumination variation. The dataset contains seven expressions for each subject with four different intensity levels.

-

FRAV3D [41]: The FRAV3D face dataset was collected by Universidad Rey Juan Carlos, Spain. All data were acquired with a low-resolution Minolta VIVID 700 scanner. It is not suitable for evaluating aging variation.

-

Texas 3DFRD [243]: The University of Texas 3D face dataset is a collection of 3D color face images of 105 subjects. These images were acquired using a stereo imaging system at a high spatial resolution of 0.32 mm along the x, y and z dimensions. During acquisition, the color and range images were captured simultaneously.

-

GavabDB [216]: The dataset was compiled by the GAVAB research group at Universidad Rey Juan Carlos, Spain, and has seen limited use in the face recognition literature. Each image is a three-dimensional mesh representing a face surface. There are systematic variations in the pose and facial expression of each person. In particular, each person has 2 frontal and 4 rotated images without facial expression, and 3 frontal images in which the subject presents different, accentuated facial expressions.

-

BJUT-3D [20]: BJUT-3D is a Chinese 3D face dataset developed by the Multimedia and Intelligent Software Technology lab in Beijing. The design and construction of the database mainly involved acquiring prototypical 3D face data, preprocessing and standardizing the data, and designing the database structure. Currently, the BJUT-3D database is the largest Chinese 3D face database in the world.

4 Face recognition applications

In today's digital society, face recognition systems are being adopted by leading technology companies around the world. Because of potential commercial applications and growing market trends, there is healthy competition among these companies to deliver the best possible performance. An overview of vendors providing face recognition solutions is given in Table 2. Leading technology companies such as IBM, Google, Microsoft and Apple are also competing for a winning position in face recognition technology. A search of [86] with the keywords “face recognition” and “face identification” shows that the number of granted patents has increased drastically over the past two decades. As shown in Fig. 3, companies show growing interest in owning face recognition frameworks through patent registration. Statistics from WIPO (World Intellectual Property Organization) and USPTO (United States Patent and Trademark Office) show the same trend. After two successful facial identification patents in August 2017 and January 2018, Google has introduced a number of further patents for facial recognition [86]. These patents would allow Google to identify faces in personal communications, social networks, collaborative apps, blogs and much more.

In this section, we summarize the rich set of face recognition applications and their commercial significance. Popular vendors specializing in face recognition, such as IBM, Innovatrics, Advanced Biometrics and IDEMIA, provide convenient, reliable and flexible solutions with installations worldwide. We examine key application areas in which face recognition technologies are increasingly adopted and are revolutionizing automation capabilities.

4.1 Access control

With the increasing popularity of face recognition systems, they have been adopted by various automatic access control mechanisms for human-machine interaction. Face recognition is also replacing other authentication methods such as passwords, fingerprints and iris verification. Furthermore, as smartphone and CCTV cameras have become widespread, face-based authentication has become feasible for many real-world applications. Hardware-based verification systems are rapidly being extended to support face-based authorization, so that a single login grants access to multiple networked services. Face-based automatic access to automatic teller machines (ATMs), online funds transfer and access to ciphertext are also becoming popular in a variety of social practices [200]. For the same reasons, face identity-based billing, cheque processing, access to sensitive laboratories, courier services for sensitive objects and face identity-based automatic access control are in demand everywhere.

4.2 Surveillance

Face recognition is one of the most important yet challenging tasks for fully automatic, smart surveillance systems. Surveillance is defined as close observation or monitoring, especially of a suspected spy or criminal, and it is one of the most widespread face recognition applications. Such systems are designed to meet security objectives for crowds in both outdoor and indoor public spaces, for example public areas, airport halls, banks and geographical borders. Because camera networks acquire huge volumes of data, brute-force detection algorithms are not sufficient to recognize suspects and terrorists intelligently in sensitive places. State-of-the-art face recognition research provides a platform for intelligent video surveillance systems that involves both hardware and software aspects, such as automatic interfaces, pattern recognition, signal processing and machine learning algorithms, and achieves highly promising results [292]. Additionally, machine-based recognition has been shown to be more efficient than human recognition in real-world applications [164]. To leverage the capabilities of both humans and machines, face recognition solutions in surveillance systems can be used effectively as a tool that supports human operators in complex monitoring and recognition tasks. Although state-of-the-art surveillance methods have achieved satisfactory results, many challenges remain for effective surveillance, such as occlusion, blurred subject images and limited training data [22, 77, 96].

4.3 Entertainment

Face recognition has also become increasingly popular in the entertainment sector. The most exciting areas include virtual reality, mobile gaming, human-robot interaction, human-computer interaction, training and theme park gaming zones [48]. Recently, T. Feltwell et al. [59] introduced a game that asks players to capture the likeness of members of the public. It is motivated by free-to-play models and the phenomenal success of the game “Pokemon GO”, but offers a different experience in which players hunt for and capture members of the public in the real world.

4.4 Law enforcement

Face recognition systems have proved to be a highly effective tool for law enforcement bodies to identify criminals or find missing persons. Manually reviewing hours of video to search for a specific identity is a tedious task for law enforcement officials. For example, it was recently reported [87] that law enforcement agencies in China took just seven minutes to locate BBC reporter John Sudworth using the country's network of more than 170 million CCTV cameras together with face recognition technology. Face recognition research gives law enforcement bodies a new generation of intelligent and efficient investigative capabilities [213]. Visa overstays and illegal residence are other challenging problems for densely populated communities all over the world, and advances in face recognition technologies help to identify illegal immigrants and foreigners who overstay their visas. Furthermore, face recognition technology is also used for banking [144], voter identification [170], crime investigation [23, 96], counter-terrorism [60] and immigration [126] purposes.

4.5 Other common applications

Recently, Kwon and Lee [132] introduced a comprehensive set of techniques for face recognition in software applications. Similarly, Salici and Ciampini [214] presented face recognition applications for forensic investigation departments; their experiments on 130 real identification cases proved successful and were validated by forensic experts. Another recent application, introduced by Calo et al. [29], addresses privacy control in viewing, updating and destroying digital information.

5 Key challenges for face recognition

Although face recognition research in controlled environments has proved very successful and meets the requirements of social applications, substantial challenges remain in uncontrolled environments, where subjects are dynamic and variations are difficult for machines to capture. In this section, we describe the main challenges for face recognition technologies.

5.1 Pose variation

Pose variation mainly refers to changes in head orientation, particularly out-of-plane rotation, viewed from 2D or 3D perspectives [289]. Pose is one of the most difficult recognition challenges, especially when the subject is uncooperative, for example when searching for a spy in a public crowd, protecting airports against terrorists or finding thieves in large stores. The most suitable solution might be to collect multiple gallery images of each subject, but this is impractical in most real-world applications. For example, if only a passport photo of every person is stored in the image database, how can a terrorist be identified among a football stadium crowd? Numerically, the difference between two images of the same person in different poses can be greater than the difference between two images of different persons. Therefore, local approaches such as Local Binary Pattern (LBP) and Elastic Bunch Graph Matching (EBGM) are considered more effective than holistic approaches [1]. Similarly, Beveridge et al. [27] introduced the Point-and-Shoot Face Recognition Challenge (PaSC), a competition that evaluated five different methods on the PaSC database [26], which contains unconstrained face photos and videos of outdoor and indoor scenes. The authors of the best-performing algorithm in this competition [141] claimed that their approach was nearly perfect on pose variation; however, the same set of methods later proved unsuccessful on other face datasets.

5.2 Illumination variation

Variable illumination, or lighting effects, is another major challenge for face recognition systems. Because of skin reflectance properties, differing camera sensors, resolution effects and environmental conditions, illumination is largely uncontrolled from the perspective of a recognition system. Traditional approaches have their own limitations under varying illumination. It has been shown both theoretically [299] and experimentally [3] that variation caused by changing illumination can be larger than the difference between images of two different people. Classical methods such as eigenface [250], fisherface [24], probabilistic and Bayesian matching [175] and SVM [181] have difficulty dealing with illumination variation. On the other hand, a large body of recent literature [7, 36, 89, 99, 100, 182, 253, 254, 293, 294, 297] attests to the importance of handling illumination fluctuations when tuning the performance of face recognition systems. Fig. 4 shows a sample from the CMU-PIE dataset that illustrates the significant impact of changing illumination conditions.

Different lighting conditions for the same face in the CMU PIE dataset [224]

5.3 Occlusion

Face occlusion means that important facial features are hidden by other objects such as a hat, helmet, sunglasses, hand, scarf or mask. Occlusion is another critical factor that seriously affects the performance of face recognition techniques. As illustrated in Fig. 5, in real life certain facial features are commonly occluded by a hand, hair or a scarf, especially when the subject is in an uncontrolled environment. Significant research efforts [66, 83, 117, 130, 134, 140, 196, 199, 236, 251, 265, 276, 277, 291] have been made to deal with occlusion in face images. One simple solution, adopted in various models, is to treat the occluded part as noise, remove it from the face image and compare the remaining information with the stored image; however, this does not work in all cases. Recently, Nojavanasghari et al. [180] introduced a model that deals with hand-over-face occlusions using a dataset of non-occluded faces. Similarly, Iliadis et al. [106] presented an iterative method to address the occlusion problem in face identification. The approach uses a robust representation based on two characteristics in order to model occlusion efficiently: first, the occluded part is modeled by a distribution described by a tailored loss function; second, it is expressed by a specific structure. Finally, the Alternating Direction Method of Multipliers (ADMM) is used to make the method computationally efficient and robust.

Faces with occlusion effects as presented in Hand2Face [180]
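The simple strategy described at the start of this subsection, treating occluded pixels as noise and comparing only the remaining information, can be sketched as a masked distance. The sketch assumes that a boolean occlusion mask is already available (for example, from a separate occlusion detector), which is a strong assumption; methods such as [106] instead estimate the occlusion as part of the optimization. The function names are hypothetical.

```python
import numpy as np


def masked_distance(probe, gallery_img, occluded):
    """Mean squared difference computed only over pixels not marked occluded.

    `occluded` is a boolean mask with the same shape as the images; it is
    assumed to be supplied by some earlier occlusion-detection step.
    """
    visible = ~occluded
    if not visible.any():
        raise ValueError("every pixel is marked as occluded")
    diff = probe.astype(np.float64)[visible] - gallery_img.astype(np.float64)[visible]
    return float(np.mean(diff ** 2))


def nearest_identity(probe, gallery, occluded):
    """Index of the gallery image closest to the probe on visible pixels."""
    dists = [masked_distance(probe, g, occluded) for g in gallery]
    return int(np.argmin(dists))
```

If a probe equals gallery image A except for a bright occluded block, the unmasked distance can rank a different gallery image closer, whereas the masked distance to A is zero. The weakness noted above remains: everything depends on the mask being correct.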

5.4 Aging

Over time, the human face changes in a nonlinear and inconsistent way. This makes recognizing faces with varying aging effects difficult for both humans and machines. The problem is hard enough, compared with other face recognition challenges, that only a few efforts to address age variation can be found in the literature. Aging continuously changes the texture, shape and appearance of a human face. Most classical methods [61, 135, 142, 148] for the age variation problem consist of two steps: feature extraction and distance metric computation. These steps sometimes ignore the interaction between the two components, and a fixed distance threshold can degrade algorithm performance. Furthermore, most real-world applications, such as passport or ID card image databases, cannot frequently update their gallery images. Chen et al. [33] proposed a Cross-Age Reference Coding (CARC) model to address age variation. The model encodes low-level features of a face image using an age-invariant reference space, and because feature extraction needs only a linear projection, the model is highly scalable. The authors evaluated the model on a self-constructed dataset of more than 160,000 images of 2,000 celebrities aged 16 to 62 and reported considerable performance. Wen et al. [261] proposed another age-invariant face recognition model based on a deep convolutional neural network. The model contains two major components: a convolutional unit for feature learning and a latent factor fully connected layer responsible for learning age variation features. The CNN architecture is carefully designed to capture micro-level variations, which contributed to its success.

6 Face recognition frameworks

Face recognition has been a key research area for the last three decades in many research communities, such as machine learning, artificial intelligence, image processing, and computer vision. Methods proposed for face recognition come from vast and diverse scientific domains, which is why it is difficult to draw a clear line that categorizes them in a standard way. The use of hybrid models also makes it difficult to assign these approaches to standard branches of feature representation or classification. Nevertheless, based on recent literature, we summarize face recognition approaches in a clear, high-level categorization. Figure 6 shows the categorical distribution of face recognition approaches:

6.1 Classical approaches

Research in face recognition has long historical roots, going back to the psychology literature of the 1950s and the engineering literature of the 1960s [298]. These early concepts were derived from pattern recognition systems, as discussed in an MIT Ph.D. thesis [210] by Lawrence Gilman Roberts. He first showed that 2D features extracted from a photograph can be matched with a 3D representation. Subsequent research identified practical difficulties under variable environmental conditions that remain challenging even with today's supercomputers and GPUs. Although these early methods were driven by pattern recognition, they were based on the geometrical relationships between facial points. Most of them are therefore highly dependent on detecting these facial points in challenging environments and on the consistency of these relationships across different variations, issues that are still a critical challenge for the research community. Another early attempt at developing a face recognition system was made by Kanade et al. [115], who used simple image processing techniques to extract a vector of 16 facial parameters. The system used a simple Euclidean distance measure to match these feature vectors and achieved a 75% accuracy rate on a predefined database of 20 people with 2 images per person.
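The matching stage of such early systems can be sketched as nearest-neighbour search with Euclidean distance. The 16 facial parameters themselves are not reproduced here; the vectors below are random placeholders and the noise level is an assumption, so the resulting accuracy says nothing about the original 75% figure.

```python
import numpy as np

def nearest_identity(probe, gallery_vectors, gallery_labels):
    """Label of the gallery vector with the smallest Euclidean distance to probe."""
    dists = np.linalg.norm(gallery_vectors - probe, axis=1)
    return gallery_labels[int(np.argmin(dists))]

def identification_accuracy(probes, probe_labels, gallery_vectors, gallery_labels):
    """Fraction of probes whose nearest gallery vector has the correct label."""
    hits = [nearest_identity(p, gallery_vectors, gallery_labels) == y
            for p, y in zip(probes, probe_labels)]
    return sum(hits) / len(hits)

# Synthetic stand-in for the setting above: 20 people, 2 images per person
# (one enrolled in the gallery, one used as a probe), 16 parameters each.
rng = np.random.default_rng(0)
labels = list(range(20))
gallery = rng.normal(size=(20, 16))
probes = gallery + rng.normal(scale=0.3, size=(20, 16))  # second image per person
acc = identification_accuracy(probes, labels, gallery, labels)
```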

In 2003, Zhao et al. [298] presented a concise overview of the techniques employed by the face detection and recognition community over the preceding 30 years. They discuss many psychological and neuroscience aspects of face recognition. In terms of methods, face recognition techniques are categorized into three broad groups: holistic methods (PCA, LDA, SVM, ICA, FLD and PDBNN), feature-based methods (pure geometry methods, dynamic link architecture, Hidden Markov Models and Convolutional Neural Networks), and hybrid methods such as Modular Eigenfaces, Hybrid LFA, shape-normalized and component-based methods.

6.1.1 Holistic methods

Holistic methods, also known as global feature-based methods, attempt to identify a face using global characteristics, i.e., the entire face representation is considered rather than individual components such as the mouth, eyes, and nose. Sirovich et al. [227] were the first to use Principal Component Analysis (PCA) to represent global face features. Other early research attempts include Turk et al. [250] with Eigenfaces, Etemad et al. [58] with Linear Discriminant Analysis (LDA), Zhao et al. [300] with Subspace Linear Discriminant Analysis (SLDA) and Bartlett et al. [21] with Independent Component Analysis (ICA) for face recognition. In the same era, Guo et al. [80] introduced the Support Vector Machine (SVM), a well-known method at the time, for recognizing faces in the challenging Cambridge ORL face dataset. Similarly, the evolutionary algorithm of Liu et al. [153], which searches for face image projections based on generalization error, and the model of Kawulok et al. [119], which assigns variable significance to different facial regions, are key milestones of the classical face recognition era. Holistic methods are further subdivided into two key branches: linear holistic techniques and non-linear holistic techniques.
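The eigenface idea (PCA on whole, flattened face images) can be sketched in a few lines of NumPy. Random data stands in for aligned face images here, and `k = 10` components is an arbitrary choice for illustration. Recognition then reduces to nearest-neighbour search on the projected weights.

```python
import numpy as np

def fit_eigenfaces(X, k):
    """X: (n_images, n_pixels) flattened training faces.

    Returns the mean face and the top-k principal directions (eigenfaces),
    computed from the SVD of the mean-centered data.
    """
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]

def project(X, mean, eigenfaces):
    """Holistic descriptor: coordinates of each face in the eigenface subspace."""
    return (X - mean) @ eigenfaces.T

rng = np.random.default_rng(0)
train = rng.random((30, 64))                  # 30 placeholder "faces" of 8x8 pixels
mean, eigenfaces = fit_eigenfaces(train, k=10)
weights = project(train, mean, eigenfaces)
probe_weights = project(train[:1] + 0.01 * rng.random((1, 64)), mean, eigenfaces)
best = int(np.argmin(np.linalg.norm(weights - probe_weights, axis=1)))
```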

Xue et al. [273] use Non-negative Matrix Factorization, a well-known approximation technique, to overcome limitations of PCA, especially when face images are captured in uncontrolled environments with varying expressions, illumination, or occlusions. Experimental results on the AR database show that the proposed model achieves higher accuracy than Bayesian and PCA methods. Yang [274] takes advantage of kernel methods and proposes kernel PCA and kernel LDA for face recognition: face images are mapped into a higher-dimensional space by a kernel function, and PCA or LDA is then used to build the feature space. Another global feature approach, based on locality preserving projections and known as Laplacianfaces [92], maps face images into a face subspace for analysis. This approach preserves local information while deriving a face subspace that best represents the global face structure.
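Kernel PCA, the first of the kernel methods mentioned above, can be sketched as follows. The Gaussian (RBF) kernel and its width `gamma` are assumptions made for illustration; the cited work's kernel choice may differ. Only the training faces are projected here; projecting a new face would additionally require centering its kernel row with the training statistics.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Gaussian (RBF) kernel matrix between the rows of A and B."""
    sq = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def kernel_pca(X, k, gamma=0.1):
    """Project the training faces X onto the top-k kernel principal components."""
    n = X.shape[0]
    K = rbf_kernel(X, X, gamma)
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one   # center the data in feature space
    vals, vecs = np.linalg.eigh(Kc)             # eigenvalues in ascending order
    order = np.argsort(vals)[::-1][:k]
    vals, vecs = vals[order], vecs[:, order]
    alphas = vecs / np.sqrt(vals)               # unit-norm axes in feature space
    return Kc @ alphas, vals

rng = np.random.default_rng(0)
faces = rng.random((40, 64))                    # placeholder flattened faces
Z, eigvals = kernel_pca(faces, k=5)
```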

Another milestone was achieved in 2009, when Kasturi et al. [118] proposed a framework for evaluating face and object detection and tracking in real-time video streams. The framework takes video data as input and assesses how faces are detected in it. It provides a set of measures with which comprehensive comparisons can be made and failures analyzed, with accuracy and precision scored separately. The approach is applicable both to the direct measurement of tracking technologies and to iterative algorithm development.

6.1.2 Local features based methods

Local feature-based approaches first process the input image to segregate distinctive facial regions such as the mouth, nose, and eyes, and then compute the geometric relationships among these facial points. A number of statistical pattern recognition techniques and graph matching methods are available to compare these features. The most notable early efforts in local feature-based face recognition include Kanade et al. [154] with a linear feature matching scheme, Yuille et al. [282] with a feature extraction method based on deformable templates, Brunelli et al. [28] with a template matching scheme based on geometric features, and Lades et al. [133] with a dynamic link architecture that uses Gabor filters.
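Computing "geometric relationships among facial points" can be illustrated with a minimal sketch: pairwise distances between landmarks, divided by the inter-ocular distance. The landmark coordinates and the choice of normalization are assumptions for the example; the landmarks would come from some detector that is not shown.

```python
import numpy as np
from itertools import combinations

def geometric_features(landmarks, left_eye=0, right_eye=1):
    """Pairwise distances between facial points, divided by the inter-ocular distance.

    landmarks: (n_points, 2) array of (x, y) positions from a landmark detector.
    Distances are unchanged by translation and rotation; dividing by the eye
    distance also removes the dependence on image scale.
    """
    pts = np.asarray(landmarks, dtype=float)
    iod = np.linalg.norm(pts[left_eye] - pts[right_eye])
    return np.array([np.linalg.norm(pts[i] - pts[j]) / iod
                     for i, j in combinations(range(len(pts)), 2)])

# Hypothetical coordinates: left eye, right eye, nose tip, two mouth corners.
face = np.array([[30, 40], [70, 40], [50, 60], [35, 80], [65, 80]])
features = geometric_features(face)
```

Two faces can then be compared with any vector distance on these features, which is exactly where such methods become sensitive to errors in landmark detection.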

Cox et al. [42] introduced a local feature model based on distance matching that achieved a recognition rate of 95% on a database of 685 people. Similarly, Wiskott et al. [263] use graph matching concepts to deal with the very challenging problem of single-image-per-person face recognition. Their model, based on elastic bunch graph matching, extracts concise face descriptions in the form of image graphs whose nodes correspond to different facial regions. Each region is represented by a set of wavelet components, and these sets of wavelet components form the basis for elastic graph matching. Another novel framework, proposed by Edwards et al. [54], introduces the Active Appearance Model, a statistical, photo-realistic model of the shape and grey-level appearance of faces. The model uses the information available from training data to model the different facial parts.
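The jet comparison at the heart of elastic bunch graph matching can be sketched as follows. This is a simplified, rigid version under stated assumptions: the kernel omits the DC-compensation term of the original formulation, only 2 scales and 4 orientations are used (Wiskott et al. use 5 and 8), node positions are hypothetical and fixed, and both the elastic deformation term and the bunch graph are left out.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Complex Gabor kernel: a Gaussian envelope times a complex plane wave."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    return envelope * np.exp(1j * 2 * np.pi * x_rot / wavelength)

def gabor_jet(img, x, y, size=15, wavelengths=(4, 8), n_orient=4):
    """Magnitudes of the Gabor responses at pixel (x, y): one 'jet' per graph node."""
    half = size // 2
    patch = img[y - half:y + half + 1, x - half:x + half + 1]
    jet = []
    for lam in wavelengths:
        for k in range(n_orient):
            kern = gabor_kernel(size, lam, np.pi * k / n_orient, sigma=lam / 2)
            jet.append(abs(np.sum(patch * np.conj(kern))))
    return np.array(jet)

def jet_similarity(j1, j2):
    """Normalized dot product of jet magnitudes (1.0 for identical jets)."""
    return float(j1 @ j2 / (np.linalg.norm(j1) * np.linalg.norm(j2)))

def graph_similarity(img_a, img_b, nodes):
    """Mean jet similarity over corresponding nodes (rigid graph, no elastic term)."""
    return float(np.mean([jet_similarity(gabor_jet(img_a, x, y), gabor_jet(img_b, x, y))
                          for x, y in nodes]))

rng = np.random.default_rng(0)
img = rng.random((64, 64))                 # placeholder face image
nodes = [(20, 24), (44, 24), (32, 40)]     # hypothetical eye and mouth positions
```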

Ahonen et al. [6] introduced face description with Local Binary Patterns (LBP), which has become one of the most widely used approaches. First, the face image is divided into small regions, from which LBP histograms are extracted and concatenated into a single, spatially enhanced feature histogram that efficiently represents the face image. Classification is performed with a nearest neighbor classifier. It was a highly novel idea at the time and is still used in many face recognition techniques today. Tan et al. [239] present a generalized LBP texture descriptor that efficiently exploits local texture features. The approach is comparatively simple, efficient, and robust to changes in illumination while preserving the essential appearance details needed for recognition. They also demonstrate that replacing local histogramming with a similarity measure based on local distance transforms substantially boosts the performance of LBP-based recognition.
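The LBP pipeline described above (per-pixel codes, region-wise histograms, concatenation, nearest-neighbour matching) can be sketched in NumPy. This sketch uses all 256 basic 3x3 codes and an unweighted chi-square distance; Ahonen et al. also use uniform patterns and weighted regions, which are omitted here. The 4x4 region grid is an assumption.

```python
import numpy as np

def lbp_image(img):
    """Basic 3x3 LBP code for every interior pixel (8 bits, values 0..255)."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Fixed neighbour order; neighbour k sets bit k when it is >= the centre.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes

def lbp_descriptor(img, grid=(4, 4)):
    """Concatenated per-region LBP histograms (spatially enhanced histogram)."""
    codes = lbp_image(img)
    hists = []
    for band in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(band, grid[1], axis=1):
            hists.append(np.bincount(cell.ravel(), minlength=256))
    return np.concatenate(hists).astype(float)

def chi_square(h1, h2, eps=1e-10):
    """Chi-square distance between histograms, for nearest-neighbour matching."""
    return float(np.sum((h1 - h2) ** 2 / (h1 + h2 + eps)))

rng = np.random.default_rng(0)
face = rng.integers(0, 256, size=(34, 34))   # placeholder 8-bit face image
desc = lbp_descriptor(face)
```

Because each code only records whether a neighbour is brighter than the centre, any increasing change of grey levels leaves the codes unchanged, which is one reason LBP copes reasonably well with illumination changes.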

Queirolo et al. [201] provide another generic framework for 3D face identification. The method uses a simulated annealing-based approach to range image registration with the Surface Interpenetration Measure (SIM). First, the face image is segmented into four regions, and each region is compared with the corresponding region of an image already enrolled in the database. To compare entire images, a modified version of the simulated annealing-based approach is used to eliminate the effects of facial expression. Kemelmacher-Shlizerman and Basri [121] introduced a 3D face reconstruction approach that reconstructs a face from a single image using a single reference face shape. The method exploits the similarity of faces: it takes a single input face image and uses a single 3D reference model of a different person's face. The basic idea is to use the input image as a guide to mold the reference model into a 3D reconstruction of the input face. The authors also claim that the method can handle unconstrained lighting. In the same stream, Li et al. [139] with a nonparametric-weighted Fisherfaces model, Han et al. [279] with a generic eigenvector weighting model for local face features, and Choi et al. [39] with a pixel selection model based on discriminant features have received considerable research attention in past years.

6.1.3 Hybrid methods

The third category of classical face recognition approaches is hybrid methods, which use local and holistic methods simultaneously. Hybridizing different approaches to obtain better results is a common research trend, and many researchers [85, 165, 183, 246, 253, 267] exploit hybrid models to take advantage of both local and global methods. The literature on hybrid face recognition is vast and scattered across multiple disciplines; the key methods we consider include Tolba et al. [246] with a combined classifier based on a Radial Basis Function (RBF) network and Learning Vector Quantization (LVQ), Huang et al. [102] with an integration of holistic and feature analysis-based approaches using a Markov random field model, and Wang et al. [256] with a model combining biologically inspired features and Local Binary Patterns (LBP).

Lavanya and Inbarani [137] propose a hybrid approach based on Principal Component Analysis (PCA) and Tolerance Rough Similarity (TRS). PCA is first used to extract a feature matrix from the face image, and TRS is then applied to compute a similarity index. The approach achieved accuracy rates of up to 97% on different datasets (OUR, YALE and ORL) with consistent performance. Hashemi and Gharahbagh [91] take a rather different approach. Their algorithm uses the eigenvalues of the 2D wavelet transform, k-means and the correlation coefficient as preprocessing to obtain the feature vector, and a Radial Basis Function Network (RBFN) as the classifier. After the RBFN classifier is trained, the smallest Euclidean distance is calculated for each person from the selected feature vectors. For a new face image, the feature vector is computed first, and its distance to all centers is then compared to obtain a similarity index. In the best case, the method achieved 96% recognition accuracy.

Hu et al. [98] proposed a quite different direction for face identification based on a fog-computing scheme, addressing the problem of face identification in the Internet of Things. First, a face recognition system generates a matrix of identities for an individual; then, the proposed fog-computing based model decides the individual's identity. Experimental results show that the model saves bandwidth efficiently and achieves an accuracy of up to 96.77%, a notable improvement over previous work. Jain and Park [109] explore yet another direction of identification: soft biometrics. Soft biometrics are attributes of individuals such as their age, gender, gestures, the size and shape of their head, and approximate height. Various researchers [12, 14, 16, 23, 46, 47, 74, 108, 116, 186, 212, 249] believe that soft biometrics can play a vital role in dealing with challenging issues such as variable illumination, pose, rotation, scale, and background complexity in face recognition and human re-identification. Soft biometric attributes can be classified as follows:

- Demographic attributes: age, gender, ethnicity, and race. These are widely used soft biometrics in multiple applications.

- Anthropometric attributes: the geometrical appearance and shape of the face, body, skeleton, etc.

- Medical attributes: a broad range of soft biometrics such as health, weight, skin texture, and DNA pattern information.

- Material, behavioral, and other soft biometric attributes: accessories associated with a person, such as a hat, jewelry, a bag, or glasses, can also be very helpful for an identity recognition system.

Lin et al. [151] recently argue that most face identification techniques work on test or small datasets but cannot reach similar results in real-world applications involving millions of faces, so a system should be robust enough to handle large datasets such as MS-Celeb-1M efficiently. The authors propose a three-step algorithm. First, an initial classifier that maps raw images into a feature space is trained using the Max-Feature-Map (MFM) activation function. Second, face identities from MS-Celeb-1M are clustered into three subsets: a pure set, a hard set and a mess set. Third, locality sensitive hashing (LSH) is used to speed up the search for the closest centroid. Experimental results show that the model is suitable even for large datasets such as MS-Celeb-1M.
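The Max-Feature-Map activation, introduced with the Light CNN family of face models, can be sketched in NumPy as below. The channels-first layout and the `axis` parameter are assumptions for this example. Unlike ReLU, MFM does not zero out negative values; it keeps the larger response of each channel pair and halves the number of channels.

```python
import numpy as np

def max_feature_map(x, axis=0):
    """Max-Feature-Map: split the channels into two halves, keep the element-wise max.

    x must have an even number of channels along `axis`; the output has half as many.
    """
    a, b = np.split(x, 2, axis=axis)
    return np.maximum(a, b)

feature_maps = np.random.default_rng(0).normal(size=(64, 7, 7))  # channels-first
out = max_feature_map(feature_maps)
```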

6.1.4 Summary of classical approaches

With more than 50 years of research effort in face recognition, the literature on classical approaches is vast and scattered across multiple disciplines. In the above section, we summarized the key milestones of face recognition research along its timeline, grouping the early literature into three broad categories: holistic methods, local feature-based methods and hybrid approaches. Table 3 categorically presents classical face recognition approaches and the performance of these methods on the corresponding datasets. Local feature-based methods and hybrid approaches have been observed to perform better under different illumination conditions, facial expressions and other key challenges. They are also comparatively less sensitive to noise and more robust to translation.

6.2 Modern approaches

In recent years, machine learning-based algorithms and methodologies have brought immense improvements to many social and scientific domains. We divide the modern era of face recognition approaches into three subcategories: deep learning-based methods, sparse or dictionary learning-based methods and fuzzy logic-based techniques. This section presents an overview of these categories and their research contributions.

6.2.1 Deep learning based face recognition

Deep neural networks have shown remarkable power for object recognition and have revolutionized machine learning over the last few years. Researchers from many fields, from the social sciences and engineering to the life sciences, are adopting deep frameworks to hybridize their existing models, often with substantial gains. Many researchers, especially in the face recognition community [15, 232], affirm that deep networks offer remarkable computational power with outstanding accuracy. In this section, we present a brief overview of recent developments in deep learning for face recognition.

Why Deep Learning?

Current research in face recognition through deep learning is largely based on hybridizing existing models with deep networks, with researchers focusing on improved techniques for better recognition. Deep neural networks and related techniques have proven highly suitable for achieving high performance in terms of accuracy and robustness. Their ability to learn from large numbers of unlabeled face images in a robust and accurate way gives them an advantage over classical face recognition approaches. Ongoing research on deep models for unconstrained face images with variations in pose, illumination, and cosmetics has achieved outstanding results. Although some efforts have been made to assess deep learning techniques for face recognition, a large gap remains with respect to these different variations.

Early Deep Learning Models:

Early face recognition applications, as discussed in [230, 298], used artificial neural networks (ANNs) for large-scale feature representation. This idea ultimately led to increasing the number of hidden layers in a neural network. Each layer in a deep model is based on a kernel function with a defined optimization objective, and the multilayer architecture [15] makes it possible to extract feature spaces in a generalized way. The ability to learn automatically from unlabeled data without human involvement makes deep models very attractive for identification. The use of deep belief networks in 2006 [97], the hybrid approaches of Sun et al. [231, 233, 234, 235] and ImageNet classification with CNNs [129] are regarded as key milestones that opened a new generation of face recognition research in the last few years.

Grm et al. [78] presented a comprehensive study of the strengths and weaknesses of deep learning models for face recognition. They use four common deep CNN models, AlexNet, VGG-Face, GoogLeNet, and SqueezeNet, to extract features from input images and analyze how factors such as brightness, noise, blur, and missing data affect performance on the well-known LFW benchmark. Verification accuracy is measured following the LFW protocol, i.e., ten-fold cross-validation with a separately selected threshold value. Separate datasets are used for training and evaluation: the VGG dataset for training and LFW for performance evaluation. Results are reported for four covariates: Gaussian blur, noise, brightness, and missing data. The experiments show that the selected deep models are least affected by changes in input conditions such as color and contrast.
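The ten-fold protocol with a separately selected threshold can be sketched as follows: for each fold, the verification threshold is chosen on the other nine folds and accuracy is measured on the held-out fold. LFW's evaluation set has 6,000 pairs in ten folds; the 600 pairs and their scores below are synthetic placeholders, and choosing the threshold among the observed scores is an assumption of this sketch.

```python
import numpy as np

def best_threshold(scores, same):
    """Similarity threshold that maximizes verification accuracy on the given pairs."""
    candidates = np.unique(scores)
    accs = [np.mean((scores >= t) == same) for t in candidates]
    return candidates[int(np.argmax(accs))]

def ten_fold_accuracy(scores, same, n_folds=10):
    """Choose the threshold on nine folds, measure accuracy on the held-out fold."""
    folds = np.array_split(np.arange(len(scores)), n_folds)
    accs = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        t = best_threshold(scores[train_idx], same[train_idx])
        accs.append(np.mean((scores[test_idx] >= t) == same[test_idx]))
    return float(np.mean(accs)), float(np.std(accs))

# Placeholder similarity scores; real ones would be, e.g., cosine similarities
# of deep features extracted from (possibly degraded) image pairs.
rng = np.random.default_rng(0)
same = np.arange(600) % 2 == 0                   # alternate genuine/impostor pairs
scores = np.where(same, 0.7, 0.3) + rng.normal(scale=0.15, size=600)
mean_acc, std_acc = ten_fold_accuracy(scores, same)
```

Studying a covariate such as blur then amounts to recomputing `scores` on degraded images and comparing the resulting mean accuracy.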

Hashing has been popular in retrieval algorithms for many decades because of its fast retrieval and low storage cost. To exploit this, Tang et al. [240] proposed deep hashing based on classification and quantization errors for face image retrieval. As shown in Fig. 7, the proposed model learns image representations, hash codes and the classifier simultaneously. The deep model predicts image labels and generates the corresponding hash codes for fast image matching. The prediction and quantization errors are closely linked and jointly guide the learning of the deep network. The model was evaluated on the YouTube, CIFAR-10 and FaceScrub datasets, and the results show that it is highly efficient.
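A minimal sketch of an objective that combines a classification error with a quantization error, plus Hamming-distance ranking for retrieval, is shown below. The tanh-style relaxed codes, `sign` binarization and the weight `lam` are assumptions made for illustration; Tang et al. [240] may formulate these terms differently.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # stabilize the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def hashing_loss(h, logits, labels, lam=0.1):
    """Classification error plus lam times the quantization error.

    h: (n, n_bits) relaxed codes from the network (e.g. tanh outputs in (-1, 1));
    logits: (n, n_classes) classifier outputs computed from h;
    the binary code used for retrieval is b = sign(h).
    """
    p = softmax(logits)
    classification = -np.mean(np.log(p[np.arange(len(labels)), labels] + 1e-12))
    b = np.where(h >= 0, 1.0, -1.0)
    quantization = np.mean(np.sum((b - h) ** 2, axis=1))
    return float(classification + lam * quantization)

def hamming_rank(query_code, db_codes):
    """Database indices sorted by Hamming distance to the query code."""
    dist = np.sum(db_codes != query_code, axis=1)
    return np.argsort(dist, kind="stable")

db = np.array([[1, 1, -1], [-1, -1, -1], [1, -1, 1]])
ranking = hamming_rank(np.array([1, -1, 1]), db)   # closest code first
```

The quantization term pushes the relaxed outputs toward ±1, so that little information is lost when they are binarized for fast Hamming-distance matching.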

General Architecture of Deep Hashing [240]

Preprocessing and Deep Models:

Face alignment is one of the key preprocessing challenges in the overall face recognition process. It aims to localize facial landmarks and predict the face position in the input image, and it has been an open research problem for decades. Shi et al. [223] address the problem with a deep regression model and achieve a significant improvement in results. The model contains a global layer that formulates the initial face shape and multiple local layers that iteratively update the shape estimated by the global layer. The function of the global layer is given in (1).

The function of global layer as proposed in [223].

The output of the global layer, S0, is the initial shape estimate from the input image I, whereas the function GR(·) represents the global feature mapping that extracts d-dimensional features. Here, r0 is a regression function with parameters ϕ0 and θ0. The local layers take both the image I and the shape estimated by the preceding layer (starting from S0) as input and refine the shape iteratively. The general form of a local layer is given in (2).

The function of the local layer as proposed in [223].

This layer-wise local learning determines the parameters of the functions LRt sequentially, from θ0 to θt, to approximately minimize the objective function. The parameters of each layer are optimized given the trained parameters of the preceding layer. More recently, Jiang et al. [111] presented a modified approach to face alignment that constructs deep face features, for which a large-scale independent training dataset was prepared.
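The global-then-local structure can be illustrated with a generic cascaded linear regression sketch. This is not the deep regression network of Shi et al. [223]: the global and shape-indexed descriptors, the ridge regularization and the number of stages are all assumptions. It shows the same structure, though. A global regressor predicts S0 from the whole image, and each later stage regresses a correction from features sampled around the current estimate, fitted sequentially given the earlier stages.

```python
import numpy as np

def global_features(img):
    # Hypothetical global descriptor: a downsampled, flattened image.
    return img[::4, ::4].ravel()

def local_features(img, shape):
    # Hypothetical shape-indexed descriptor: 3x3 intensity patches around each
    # current landmark estimate (x, y), clipped to stay inside the image.
    h, w = img.shape
    feats = []
    for x, y in shape.reshape(-1, 2):
        xi = int(np.clip(round(x), 1, w - 2))
        yi = int(np.clip(round(y), 1, h - 2))
        feats.append(img[yi - 1:yi + 2, xi - 1:xi + 2].ravel())
    return np.concatenate(feats)

def fit_ridge(X, Y, lam=1e-3):
    # Linear regressor with bias: Y is approximated by [X, 1] @ W.
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(Xb.T @ Xb + lam * np.eye(Xb.shape[1]), Xb.T @ Y)

def apply_ridge(W, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ W

def train_cascade(images, shapes, n_stages=3):
    """Global layer gives S0; each local layer adds a correction to the previous shape."""
    G = np.stack([global_features(im) for im in images])
    W0 = fit_ridge(G, shapes)
    est = apply_ridge(W0, G)                    # S0 from the whole image
    errors = [float(np.mean((shapes - est) ** 2))]
    stages = []
    for _ in range(n_stages):
        L = np.stack([local_features(im, s) for im, s in zip(images, est)])
        Wt = fit_ridge(L, shapes - est)         # regress the remaining residual
        est = est + apply_ridge(Wt, L)          # S_t = S_{t-1} + correction
        errors.append(float(np.mean((shapes - est) ** 2)))
        stages.append(Wt)
    return W0, stages, errors

rng = np.random.default_rng(0)
images = rng.random((200, 32, 32))              # placeholder face crops
shapes = rng.uniform(4, 28, size=(200, 10))     # 5 landmarks as (x, y) pairs
W0, stages, errors = train_cascade(images, shapes)
```

Because each stage is fitted given the output of the previous ones, and a zero correction is always available, the training error cannot increase from stage to stage.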

Design Invariants for Deep Model:

An important question here is how to design a deep neural network for a particular face recognition system. Many researchers [90, 110, 114, 174, 257, 268, 292] address this problem in different ways, but a notable effort was made by Chen et al. [35], who discuss design principles of deep convolutional neural network architectures for unconstrained face images, covering both static images and video streams. They point out that CNN architectures often involve millions of parameters, which impose massive computational and memory overhead, so a balanced compromise between cost and efficiency is required when designing a deep architecture. Importantly, all critical aspects of a face recognition system, such as face detection, alignment, association, and verification, are discussed. Hasanpour et al. [90] made another notable effort by introducing core design principles for deep convolutional networks. Many critical parameters, such as the number of hidden layers, architecture formulation, handling of homogeneous layers, preservation of local correlation, maximum use of the preceding layer's information at each layer, performance utilization, balanced weight allocation, isolated prototyping, maximum utilization of dropout and adaptive feature pooling, are presented in detail together with the related calculation measures. In a similar direction, Nguyen et al. [178] recently presented a comprehensive review of measures for choosing the best deep model for a particular face recognition system. The authors evaluated three popular deep face models, VGG Face B, CenterLoss C and VIPLFaceNet, and based on the experimental results identify characteristics of these models that make them suitable for face recognition.
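The parameter overhead mentioned above can be made concrete with some simple arithmetic. The layer sizes below are hypothetical and chosen for illustration; the fully connected layer has the same shape as the first fully connected layer of VGG-16, on which VGG-Face is based.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Learnable parameters of a k x k convolution from c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)

def fc_params(n_in, n_out, bias=True):
    """Learnable parameters of a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)

# Hypothetical layer sizes chosen for illustration.
one_5x5 = conv_params(5, 256, 256)        # one 5x5 convolution
two_3x3 = 2 * conv_params(3, 256, 256)    # two stacked 3x3 convs, same 5x5 receptive field
fc = fc_params(7 * 7 * 512, 4096)         # flattened 7x7x512 map -> 4096 units

print(one_5x5, two_3x3, fc)  # 1638656 1180160 102764544
```

A single fully connected layer of this shape holds over 100 million parameters, far more than the convolutions, which illustrates why layer choices dominate the cost side of the trade-off.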

Recently, Ranjan et al. [208] provided a detailed overview of designing deep learning frameworks for face recognition. This overview of design techniques illustrates the benefits of subject-independent multitask learning in deep neural networks for face recognition systems. Its key contribution is a list of open issues for designing face recognition systems: face detection in large crowds; variable illumination, pose, and expression in face detection; model dependency on large training datasets; controlling the cost of deep models; handling complexity and mathematically formulating the function of each hidden layer; handling degradation and data bias in the training process; establishing theoretical foundations for understanding the behavior of deep models; and incorporating domain knowledge when designing a deep network. Similarly, Peng et al. [189] introduced a high-dimensional re-ranking deep representation for face recognition. The method first builds a feature space by extracting and concatenating deep features from local facial patches with a convolutional neural network (CNN). A novel locally linear re-ranking framework then refines the initial ranking by exploring valuable information in the initial ranking results. The method requires no human interaction or data annotation and can serve as an unsupervised post-processing step. Likewise, Wen et al. [262] propose the center loss to enhance the discriminative power of deep models. The center loss pulls the deep features of each class toward their class center, and under joint supervision with the softmax loss, inter-class feature differences are enlarged while intra-class feature variations are reduced. As a result, the discriminative power of the deeply learned features is greatly enhanced.
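The center loss and its mini-batch center update can be sketched in NumPy. In training, this term is added to the softmax loss with a weight (L = L_S + λL_C) and the features receive gradients through the network; only the loss value and the center update rule are shown here, with placeholder features and an assumed update rate `alpha`.

```python
import numpy as np

def center_loss(features, labels, centers):
    """L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2 over a mini-batch."""
    diff = features - centers[labels]
    return 0.5 * float(np.sum(diff ** 2))

def update_centers(features, labels, centers, alpha=0.5):
    """Move each class center present in the batch toward its features.

    delta_j = sum_{i: y_i = j} (c_j - x_i) / (1 + n_j),  c_j <- c_j - alpha * delta_j
    """
    new_centers = centers.copy()
    for j in np.unique(labels):
        members = features[labels == j]
        delta = np.sum(centers[j] - members, axis=0) / (1 + len(members))
        new_centers[j] = centers[j] - alpha * delta
    return new_centers

rng = np.random.default_rng(0)
features = rng.normal(size=(32, 8))     # deep features of one mini-batch (placeholder)
labels = rng.integers(0, 4, size=32)    # 4 identities
centers = rng.normal(size=(4, 8))
before = center_loss(features, labels, centers)
after = center_loss(features, labels, update_centers(features, labels, centers))
```

The `1 + n_j` denominator keeps updates small for classes with few samples in the batch, so a single noisy mini-batch cannot move a center too far.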

Efficiency and Robustness in Deep Model:

Efficiency and robustness are other important aspects, which Mohammadi et al. [176] analyze thoroughly in a study of the robustness of face recognition. The work highlights that high accuracy alone is not sufficient: a face recognition system should also be robust against presentation attacks, for example when person X presents a photo of person Y to the image acquisition camera. Other forms of presentation attacks include digital photographs or videos, artificial masks, and make-up. Through experiments on three popular CNN-based face recognition methods, VGG-Face, LightCNN, and FaceNet, the authors show that although these deep CNN models are highly accurate (up to 98%), they remain vulnerable to presentation attacks.

Recent Developments:

Kim et al. [127] show that transfer learning from a convolutional neural network trained on 2D face images performs well for 3D face identification. They start from VGG-Face, trained on 2D images, and augment the 3D data to enlarge the training set, which makes the CNN more robust for recognition. The augmented 3D data is converted into 2D depth maps, and isolated random points are removed from these depth maps to simulate hard occlusions. Finally, a fine-tuning phase adapts the previously learned layers to represent 3D face features. Lin et al. [151] present another novel idea, a clustering-based lightened deep model for large-scale face identification, to address the Microsoft challenge of recognizing one million celebrities (MS-Celeb-1M). Because deep models carry memory and computational overheads, most proposed techniques work well only on ordinary datasets such as LFW, whereas real-life face recognition systems sometimes have to deal with a very large number of identities. The authors propose a three-phase model to deal with this problem. In the first stage, a face feature representation model is trained with the Max-Feature-Map (MFM) function on CASIA-WebFace, a publicly available cross-domain dataset. In the second stage, face features are clustered into three independent sets called the mess, hard and pure sets. Each set acts as a cluster of the feature space, and its cluster center is used as the corresponding MID for MS-Celeb-1M. This approach automatically reduces the number of comparisons and the effect of noise in the input image. In the third and final stage, the Locality Sensitive Hashing (LSH) algorithm is used to accelerate the search for the nearest centroid. Zhong et al. [304] addressed another important question: which features are most effective for a deep face model, and how are they best represented?
Their most important finding is that high-level features correspond to complex face attributes that humans cannot express in a few words.
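The LSH-accelerated nearest-centroid search in the third stage can be sketched with random-hyperplane hashing for cosine similarity. The single hash table, the number of bits, and the fallback to a full scan are assumptions of this sketch, not details taken from Lin et al. [151]; the centroids are random placeholders for identity centers.

```python
import numpy as np
from collections import defaultdict

class CosineLSH:
    """Single-table random-hyperplane LSH for approximate nearest-centroid search."""

    def __init__(self, dim, n_bits=8, seed=0):
        self.planes = np.random.default_rng(seed).normal(size=(n_bits, dim))
        self.buckets = defaultdict(list)
        self.centroids = np.empty((0, dim))

    def _key(self, v):
        # One bit per hyperplane: which side of the plane v falls on.
        return tuple((self.planes @ v >= 0).astype(int))

    def index(self, centroids):
        self.centroids = np.asarray(centroids, dtype=float)
        for i, c in enumerate(self.centroids):
            self.buckets[self._key(c)].append(i)

    def query(self, v):
        """Most cosine-similar centroid among those sharing v's bucket.

        Falls back to a full scan when the bucket is empty; because hashing is
        approximate, the true nearest centroid may sit in another bucket.
        """
        candidates = self.buckets.get(self._key(v)) or range(len(self.centroids))
        idx = np.fromiter(candidates, dtype=int)
        C = self.centroids[idx]
        sims = C @ v / (np.linalg.norm(C, axis=1) * np.linalg.norm(v))
        return int(idx[np.argmax(sims)])

rng = np.random.default_rng(1)
centroids = rng.normal(size=(1000, 128))   # placeholder identity centers
lsh = CosineLSH(dim=128, n_bits=8)
lsh.index(centroids)
match = lsh.query(centroids[7] + 0.05 * rng.normal(size=128))
```

With 8 bits, the 1,000 centroids spread over up to 256 buckets, so a query typically compares against only a handful of candidates instead of all of them.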

Bashbaghi et al. [22] proposed a triplet-loss based deep learning architecture for face recognition in video surveillance. The architecture is well suited to the single-sample-per-person setting, a very challenging situation for current face recognition systems. It consists of two main components: a deep CNN model trained with the triplet-loss function originally proposed in [218], and deep autoencoders. The triplet loss is able to learn from complex face representations under conditions such as low illumination, contrast or brightness, which ensures robustness to inter- and intra-class variations. The autoencoder, shown in Fig. 8, is used to normalize discrepancies in face image capture conditions and to reconstruct a fine image from the input image. This works well for both single-reference training and domain adaptation. The performance of the proposed model is evaluated on COX Face DB [161], a dataset collected specifically for video surveillance applications. The experimental results show that CCM-CNN and CFR-CNN provide a significant improvement in recognition performance at a lower computational cost.
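The triplet loss can be sketched in NumPy on L2-normalized embeddings with squared Euclidean distances. The margin of 0.2 is an assumed value (a common choice), and the embeddings are random placeholders rather than outputs of the CNN described above.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean over triplets of max(0, ||a - p||^2 - ||a - n||^2 + margin).

    Embeddings are L2-normalized first, so squared distances lie in [0, 4].
    """
    a, p, n = l2_normalize(anchor), l2_normalize(positive), l2_normalize(negative)
    d_ap = np.sum((a - p) ** 2, axis=1)
    d_an = np.sum((a - n) ** 2, axis=1)
    return float(np.mean(np.maximum(0.0, d_ap - d_an + margin)))

rng = np.random.default_rng(0)
anchor = rng.normal(size=(16, 128))                     # e.g. reference stills
positive = anchor + 0.1 * rng.normal(size=(16, 128))    # same identity, new frame
negative = rng.normal(size=(16, 128))                   # other identities
loss = triplet_loss(anchor, positive, negative)
```

The loss is zero once every positive is closer to its anchor than the negative by at least the margin, which is what makes a single reference still per person usable as an anchor.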

Block Diagram of autoencoder in Canonical Face Representation CNN (CFR-CNN) [185]

Recently, Iranmanesh et al. [107] proposed a quite different approach to face recognition. They use a deep coupled learning framework to match polarimetric thermal face images against a gallery of visible face images. The model is trained to make full use of polarimetric thermal information, and it finds global discriminative features through a nonlinear embedding space in which polarimetric thermal faces can be matched with visible faces. The experimental results show that the deep coupled framework is highly efficient compared with traditional approaches. Similarly, Liu et al. [157] proposed a deep hypersphere embedding model (SphereFace) under open-set protocol settings, and Deng et al. [50] suggest a novel additive angular margin loss function for deep face recognition. The latter provides a much clearer geometric interpretation for finding discriminative face features by maximizing the decision boundary in angular space based on ℓ2-normalized weights and features. The authors report significant improvements in recognition results on many popular face recognition benchmarks, such as LFW, AgeDB, CFP and, more importantly, the MegaFace Challenge. Another recent development was made by Lin et al. [152], whose idea is simple and intuitive: they compute local features for all salient facial points and combine them into a feature tensor that represents a 3D face. The similarity of two 3D faces can thus be calculated from their two feature tensors. To address the scarcity of large training samples, a feature tensor based data augmentation approach is introduced to increase the number of feature tensors. Results on the Bosphorus and BU-3DFE face datasets demonstrate the effectiveness of the approach. In the same period, Efremova et al. [55] proposed an easy-to-implement five-class classification model for face and emotion recognition with neural networks.
The framework supports large-scale emotion recognition on different platforms, such as desktop, mobile and VPU.
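The additive angular margin can be sketched as a modification of the logits fed to a softmax cross-entropy loss. The scale `s = 64` and margin `m = 0.5` are assumed defaults for illustration. This naive version omits the special handling that practical implementations add when θ + m exceeds π.

```python
import numpy as np

def arcface_logits(features, weights, labels, s=64.0, m=0.5):
    """Additive angular margin logits: s*cos(theta_j), with theta_y replaced by theta_y + m.

    features: (n, dim); weights: (dim, n_classes), one column per identity.
    Both are L2-normalized so that their dot product is cos(theta).
    """
    x = features / np.linalg.norm(features, axis=1, keepdims=True)
    W = weights / np.linalg.norm(weights, axis=0, keepdims=True)
    cos = np.clip(x @ W, -1.0, 1.0)
    rows = np.arange(len(labels))
    theta_y = np.arccos(cos[rows, labels])
    logits = cos.copy()
    logits[rows, labels] = np.cos(theta_y + m)   # penalize only the true class
    return s * logits
```

Because the true class must win even after its angle is enlarged by `m`, training pushes features of the same identity into a tighter angular region around their class weight. SphereFace applies a multiplicative angular margin instead.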

Regardless of many advantages of deep neural network, there is a darker side of the picture as well. In last few years many researchers [2, 19, 184, 193, 195, 203] have illustrated some loopholes in deep learning models for face recognition as well. Goswami et al. [76] illustrate that due to the complex formulation of the function that learns with the hidden layers of a deep network it is difficult to mathematically formulate and validate each of them. There are three key aspects of this publication. First, the authors show that the deep face models considerably affected due to adversarial attacks. Secondly, it is important that system should be robust enough to determine that which sort of images could be caused of such distortions and take necessary countermeasures by means of a proposed model in deep network hidden layers. Finally, the identified suspicious images will be rejected by means of the novel contribution of this research. In most of the practical face recognition systems, we have limited training data but a large amount of actual unseen images that deep model need to be classified. This leads towards biased classification in an ordinary trained deep neural networks. A research group at NEC Laboratories and Michigan State University under Yin et al. [278] proposed a transfer learning-based framework to deal with long-tail data for a face recognition system. According to Mostafa et al. [57] long-tail data have exponential production rate and even it has no comparison with big data due to its data generation behavior and resource consumption. A Gaussian prior is supposed in all regular classes and the variance from these classes is transferred to the long-tail class. This facilitates the long-tail data distribution to be more similar to the regular distribution and ultimately balance the limited training data which broadly impact the biased decision making. 
The authors' experiments on the MS-Celeb-1M, LFW and IJB-A datasets, with the number of samples in the long-tail classes deliberately restricted, show that the proposed algorithm achieves state-of-the-art results.
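
The variance-transfer idea described above can be sketched under simplifying assumptions: features have already been extracted, every class shares a single Gaussian covariance, and the long-tail class is enlarged by sampling around its own mean with the covariance pooled from the regular classes. This illustrates the general principle only and is not Yin et al.'s actual framework; the function name and parameters are hypothetical.

```python
import numpy as np


def transfer_intra_class_variance(regular_classes, tail_samples, n_synthetic, seed=0):
    """Enlarge a long-tail class with synthetic features (illustrative sketch).

    regular_classes: list of (n_i, d) feature arrays for data-rich classes.
    tail_samples:    (m, d) feature array for one under-represented class.
    Assumption: all classes share one Gaussian covariance (the shared prior).
    """
    rng = np.random.default_rng(seed)
    # Center each regular class on its own mean and pool the deviations.
    deviations = np.vstack([c - c.mean(axis=0) for c in regular_classes])
    shared_cov = np.cov(deviations, rowvar=False)
    # Keep the tail class center but borrow the spread of the regular classes.
    tail_mean = tail_samples.mean(axis=0)
    synthetic = rng.multivariate_normal(tail_mean, shared_cov, size=n_synthetic)
    return np.vstack([tail_samples, synthetic])
```

The augmented set would then be used to retrain or fine-tune the classifier, so that the long-tail class no longer occupies an artificially compact region of feature space.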

Galea and Farrugia [62] introduce a deep neural network for matching software-generated sketches to face photos by utilizing morphed faces and transfer learning. This addresses one of the most critical and sensitive public-security issues: eyewitness-based (hand-drawn or software-generated) sketches need to be automatically matched against large-scale criminal face galleries. Existing methods require human intervention and still perform poorly. Furthermore, most of these algorithms were not designed to work with software-generated faces. The authors propose a three-step methodology. First, a deep CNN is trained by means of transfer learning and then used to determine the identity of a composite sketch against face photos. Second, a 3D morphable model is applied to integrate the software-generated face sketches with the face photos identified in step one. Finally, UoM-SGFS, a specialized large-scale heterogeneous database of software-generated composite sketches, is extended to twice the number of subjects to boost performance.
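
Once sketches and photos have been mapped to fixed-length embeddings by a CNN, the matching stage reduces to ranking gallery photos by similarity and checking whether the true identity appears among the top k candidates. The sketch below assumes such embeddings already exist and uses cosine similarity; it is a generic retrieval illustration, not Galea and Farrugia's implementation, and both function names are hypothetical.

```python
import numpy as np


def rank_gallery(probe_embedding, gallery_embeddings):
    """Return gallery indices ordered from most to least similar to the probe.

    probe_embedding: (d,) embedding of a composite sketch.
    gallery_embeddings: (n, d) embeddings of mugshot photos.
    """
    probe = probe_embedding / np.linalg.norm(probe_embedding)
    gallery = gallery_embeddings / np.linalg.norm(gallery_embeddings, axis=1, keepdims=True)
    similarities = gallery @ probe  # cosine similarity to every gallery photo
    return np.argsort(-similarities)


def rank_k_hit(probe_embedding, gallery_embeddings, true_index, k):
    """True if the correct gallery identity is among the top-k candidates."""
    return true_index in rank_gallery(probe_embedding, gallery_embeddings)[:k]
```

Rank-k hit rates of this kind are how sketch-to-photo systems are usually reported, since an investigator inspects a short candidate list rather than a single answer.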

Summary of Deep Learning Methods:

Deep learning-based face recognition methods have gained significant research attention in recent years. In the section above, we presented recent developments in chronological order. Because of the many potential applications, the literature is vast and spans multiple branches. Therefore, we focus on key milestones, selected according to their chronology, technical strength and popularity. We organized the deep learning methods into early contributions, design invariants for deep networks, efficiency parameters of deep learning methods and recent developments in deep learning-based face recognition. Table 4 presents deep learning-based face recognition approaches and the performance evaluation of selected methods on the corresponding datasets. Additionally, we observed that the use of intermediate visual features in convolutional neural networks to describe visual attributes has rarely been discussed in the academic literature. This could be a promising future research line if combined with pre-trained autonomous units.

6.2.2 Sparse representation models

Sparse representation is a powerful pixel-wise classification technique that learns redundant dictionaries from input images and classifies them accordingly. It can discover semantic information, which is very useful in visual understanding domains. Sparse representation has been shown [24, 56, 169, 266] to be one of the most powerful tools for feature representation in high-dimensional data. It has gained significant attention and achieved remarkable performance in various applications such as visual understanding, audio encoding, and medical image processing [37]. In particular, the discriminative feature representation power of dictionary learning is highly valuable for face recognition. Several facts make sparse models especially suitable for face recognition: face images are naturally sparse, highly complex and high-dimensional, and greedy or convex optimization techniques are available for solving sparse models.
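
As an example of the greedy optimization techniques mentioned above, Orthogonal Matching Pursuit (OMP) builds a sparse code one atom at a time: it picks the dictionary column most correlated with the unexplained residual, then refits all selected atoms by least squares. The sketch assumes a dictionary with unit-norm columns; the function name and tolerance are our own choices.

```python
import numpy as np


def omp(D, y, n_nonzero, tol=1e-10):
    """Greedy sparse code x with D @ x ~= y and at most n_nonzero nonzeros.

    D: (d, K) dictionary with unit-norm columns (atoms); y: (d,) signal.
    """
    residual = y.astype(float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) < tol:
            break  # signal already explained
        # Atom most correlated with what is still unexplained.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break  # no new atom improves the fit
        support.append(k)
        # Refit all selected atoms jointly by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    x = np.zeros(D.shape[1])
    x[support] = coef
    return x
```

Convex alternatives replace the hard sparsity budget `n_nonzero` with an l1 penalty, trading the greedy selection for a globally solvable problem.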

Dictionary Learning for Face Recognition:

Dictionary learning is a branch of machine learning that aims to find a matrix, called a dictionary, in which the training data admit a sparse representation. In our context, given a collection of face samples drawn from some distribution, we can extract discriminative features by learning the desired dictionary from the training data. The learned dictionary plays a vital role in the success of sparse representation [194], so a task-specific dictionary must be learned from the given face images. Because dictionary learning is an emerging research field, existing theories and approaches for feature representation need to be rebuilt for it.
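
In the standard formulation (the symbols here are chosen for illustration rather than taken from a specific reviewed paper), the N training faces are stacked as the columns of Y, and the dictionary D and the sparse codes X are learned jointly:

```latex
\min_{D \in \mathbb{R}^{d \times K},\; X \in \mathbb{R}^{K \times N}}
  \lVert Y - D X \rVert_F^2
  \quad \text{subject to} \quad
  \lVert \mathbf{x}_i \rVert_0 \le T,\;\; i = 1, \dots, N,
  \qquad
  \lVert \mathbf{d}_k \rVert_2 = 1,\;\; k = 1, \dots, K
```

Here x_i is the i-th column of X, d_k is the k-th atom of D, and T is the sparsity level. Most algorithms alternate between a sparse-coding step (D fixed) and a dictionary-update step (X fixed).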

Early Contributions:

Some early milestones, such as Wright et al. [266] and Rubinstein et al. [211], provide a strong theoretical foundation for sparse representation in visual understanding and pattern recognition. Comprehensive reviews such as [128, 290] highlight how research trends have moved towards sparse modeling over the previous two decades. Tosic and Frossard [248] made a considerable contribution that opened various new directions for machine intelligence. In our context, the robust sparse representation algorithm of Wright et al. [255] is one of the earliest and most efficient sparse models for face recognition. The framework offers a different view of two of the most critical face recognition problems: robustness to occlusion and efficient feature extraction. Existing theory on sparse models makes it possible to predict in advance how much occlusion the model can handle. Experimental results show that, if sparsity is properly exploited, feature extraction is no longer a critical issue in the face recognition process. Furthermore, the framework can handle errors caused by occlusion and illumination, because these errors are sparse with respect to the pixel basis.
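
A compact sketch of sparse representation-based classification in the spirit of Wright et al.: a probe face is coded over all training faces, and the class whose coefficients reconstruct it best wins. Two assumptions should be flagged. The l1 problem is solved here in its Lagrangian (LASSO) form with plain ISTA rather than the equality-constrained l1 minimization of the original work, and the weight `lam` and iteration count are illustrative. Setting `robust=True` appends an identity matrix to the dictionary so that sparse pixel errors such as occlusion receive their own coefficients, mirroring the pixel-basis argument above.

```python
import numpy as np


def ista_l1(A, y, lam=0.01, n_iter=500):
    """Solve min_x 0.5*||A x - y||^2 + lam*||x||_1 by ISTA (proximal gradient)."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = x - step * (A.T @ (A @ x - y))  # gradient step on the data term
        x = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft-thresholding
    return x


def src_classify(A, labels, y, lam=0.01, robust=False):
    """Sparse representation-based classification (illustrative sketch).

    A: (d, n) matrix whose columns are unit-norm training faces; labels: (n,).
    robust=True codes y ~= A x + e, with e a sparse per-pixel error term.
    """
    d, n = A.shape
    B = np.hstack([A, np.eye(d)]) if robust else A
    w = ista_l1(B, y, lam)
    x, e = w[:n], (w[n:] if robust else 0.0)
    residuals = {}
    for c in np.unique(labels):
        x_c = np.where(labels == c, x, 0.0)  # keep only class-c coefficients
        residuals[c] = np.linalg.norm(y - e - A @ x_c)
    return min(residuals, key=residuals.get), residuals
```

Because the error term e is removed before the class-wise residuals are compared, a few heavily occluded pixels do not dominate the decision.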

Zhang and Li [287] proposed an extended version of the K-SVD (K-means Singular Value Decomposition) algorithm that incorporates the classification error into the objective function, which improves both the learning power of the dictionary and the performance of the linear classifier. The proposed discriminative K-SVD algorithm finds the dictionary and the classifier together, using the standard K-SVD method to create overcomplete dictionaries for sparse representation. This differs considerably from most existing methodologies, which iteratively solve sub-problems in the hope of reaching a globally optimal solution. The method shows outstanding results on the YaleB and AR datasets. More recently, Liu et al. [160] proposed image-set-based face recognition using K-SVD dictionary learning. The approach learns variation dictionaries from gallery and probe face images separately and then uses an improved joint sparse representation that effectively exploits the information learned from both gallery and probe samples.
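
The core of K-SVD is its dictionary-update sweep: with the supports of the sparse codes held fixed, each atom and its coefficients are replaced by the best rank-1 approximation of the error that remains when that atom is removed. The sketch shows only this standard step. In discriminative K-SVD, the same update is, to our understanding, run on stacked matrices, with the label matrix H appended below Y and the linear classifier W appended below D (each scaled by the square root of a weight), so that the classifier is learned together with the dictionary; that stacking is not reproduced here, and the function name is hypothetical.

```python
import numpy as np


def ksvd_dictionary_update(Y, D, X):
    """One K-SVD sweep over all atoms, keeping the sparsity pattern of X fixed.

    Y: (d, N) training faces, D: (d, K) dictionary, X: (K, N) sparse codes.
    Returns updated copies of D and X.
    """
    D, X = D.copy(), X.copy()
    for k in range(D.shape[1]):
        omega = np.nonzero(X[k])[0]  # signals that currently use atom k
        if omega.size == 0:
            continue  # unused atom: left unchanged in this sketch
        # Error on those signals once atom k's contribution is removed.
        E_k = Y[:, omega] - D @ X[:, omega] + np.outer(D[:, k], X[k, omega])
        # The best rank-1 fit of E_k gives the new atom and its coefficients.
        U, s, Vt = np.linalg.svd(E_k, full_matrices=False)
        D[:, k] = U[:, 0]
        X[k, omega] = s[0] * Vt[0]
    return D, X
```

Each atom update is optimal for its own rank-1 subproblem, so the reconstruction error cannot increase during a sweep; a full K-SVD iteration alternates this sweep with a sparse-coding step such as OMP.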

Chen et al. [32] proposed a generalized dictionary learning-based face recognition framework for video streams. The method is shown to be robust to changes in illumination and pose and to other variations in video sequences. The model follows three general steps. First, the input video is partitioned into groups of frames that share the same illumination and pose. This group-wise framing strategy helps to eliminate temporal redundancy while accounting for changes in pose and illumination. For each group of frames, a sub-dictionary is constructed, and the representation error is minimized through sparse representation. Second, these learned sub-dictionaries are combined to form a global sequence-specific dictionary. Third, for recognition, the frames of the input video are projected onto the sequence-specific dictionary constructed in step two; this projection leads to recognition in a cost-effective way. Performance evaluation on three of the most challenging datasets for video-based face recognition (Face and Ocular Challenge Series (FOCS), Multiple Biometric Grand Challenge (MBGC) and Honda/UCSD) shows a significant improvement over other methods.
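
The three steps can be mimicked in a few lines under strong simplifications, all of which are our assumptions rather than Chen et al.'s method: frames are already feature vectors, frames are grouped into contiguous chunks instead of by pose and illumination, each sub-dictionary is the group's top singular vectors instead of a sparsely learned dictionary, and a least-squares projection residual replaces the sparse coding error. All function names are hypothetical.

```python
import numpy as np


def group_frames(frames, n_groups):
    """Step 1 (simplified): split (T, d) frame features into contiguous groups."""
    return np.array_split(frames, n_groups)


def sub_dictionary(group, n_atoms):
    """Compact sub-dictionary for one group: its leading singular vectors."""
    U, _, _ = np.linalg.svd(group.T, full_matrices=False)
    return U[:, :n_atoms]


def sequence_dictionary(frames, n_groups=3, n_atoms=2):
    """Step 2: concatenate the sub-dictionaries into one sequence-specific dictionary."""
    return np.hstack([sub_dictionary(g, n_atoms) for g in group_frames(frames, n_groups)])


def recognize(probe_frames, gallery_dicts):
    """Step 3: assign the probe video to the gallery sequence whose dictionary
    reconstructs its frames with the smallest mean projection residual."""
    scores = {}
    for name, D in gallery_dicts.items():
        coef, *_ = np.linalg.lstsq(D, probe_frames.T, rcond=None)
        scores[name] = np.linalg.norm(probe_frames.T - D @ coef, axis=0).mean()
    return min(scores, key=scores.get)
```

Because each gallery sequence is summarized by a small dictionary, recognition costs one small least-squares solve per enrolled sequence rather than a comparison against every stored frame.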

Recent Developments in Dictionary Learning-Based Face Recognition:

Meng et al. [173] introduced a feature fusion-based linear discriminative redundant dictionary algorithm that improves the face recognition capability of the sparse model. First, an initial layer extracts common local features and concatenates them into feature vectors that serve as atoms. Second, Linear Discriminant Analysis (LDA) is used to reduce dimensionality and rebuild the dictionary of atoms. Experimental results show that this has a positive impact on the structural design and discriminative ability of the dictionary. Similarly, Liu et al. [156] hybridized collaborative representation-based classification (CRC) with sparse representation-based classification (SRC) and obtained outstanding results for face recognition. The hybrid model assumes that the training samples in each category play an equal role in learning the dictionary. Based on this assumption, it generates a dictionary composed of the training samples of the corresponding class.
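
The LDA step in Meng et al.'s pipeline can be illustrated with plain Fisher LDA applied to fused feature vectors; the projected training vectors would then serve as the rebuilt dictionary atoms. The feature-extraction layer is not reproduced, and the small ridge term and function name are our assumptions.

```python
import numpy as np


def lda_projection(X, y, n_components, ridge=1e-6):
    """Fisher LDA directions maximizing between-class over within-class scatter.

    X: (n, d) fused feature vectors (e.g. concatenated local features); y: (n,).
    Returns a (d, n_components) projection matrix.
    """
    d = X.shape[1]
    mean_all = X.mean(axis=0)
    S_w = np.zeros((d, d))
    S_b = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        S_w += (Xc - mc).T @ (Xc - mc)          # within-class scatter
        diff = (mc - mean_all)[:, None]
        S_b += len(Xc) * diff @ diff.T          # between-class scatter
    # The ridge keeps S_w invertible when d is large relative to n.
    evals, evecs = np.linalg.eig(np.linalg.solve(S_w + ridge * np.eye(d), S_b))
    order = np.argsort(-evals.real)
    return evecs[:, order[:n_components]].real
```

In this sketch the reduced atoms would be obtained as `X @ W`, with at most one fewer component than the number of classes carrying discriminative information.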

Xu et al. [272] took a different direction: an l2-regularization-based sparse representation algorithm that is computationally efficient and achieves noticeable performance for face image classification on various datasets. The article builds on a claim that remains well accepted in the pattern recognition community, namely that sparse representation is highly effective for image classification and identification. The proposed method suggests that discriminative sparseness can be achieved by reducing the correlation among test samples taken from different classes. In a similar direction, Liao et al. [150] proposed an efficient subspace learning-based face recognition algorithm. The method uses a sparse constraint, low-rank technology and a label relaxation model to reduce the disparity between domains. Additionally, a high-performance dictionary learning algorithm constructs embedding terms and non-local self-similarity terms, which ultimately reduces the time complexity. Experiments on a wide range of face recognition datasets, such as FRGC, LFW, CVL, Yale B and AR, demonstrate the effectiveness of the proposed algorithm. Other researchers [158, 163, 288] have also applied sparse representation to face recognition.
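
The computational appeal of l2 regularization is that the coding step has a closed form, so no iterative l1 solver is needed. The sketch below is the standard collaborative representation classifier with regularized least squares (CRC-RLS), shown as a representative l2 scheme rather than Xu et al.'s exact algorithm; `lam` and the function name are illustrative.

```python
import numpy as np


def crc_classify(A, labels, y, lam=1e-2, eps=1e-12):
    """Collaborative representation with l2 regularization (closed form).

    Solves min_rho ||y - A rho||^2 + lam*||rho||^2 over all training columns A,
    then scores each class by ||y - A_c rho_c|| / ||rho_c||.
    """
    n = A.shape[1]
    # (A^T A + lam I)^{-1} A^T does not depend on y, so it can be cached.
    P = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T)
    rho = P @ y
    scores = {}
    for c in np.unique(labels):
        mask = labels == c
        residual = np.linalg.norm(y - A[:, mask] @ rho[mask])
        scores[c] = residual / (np.linalg.norm(rho[mask]) + eps)
    return min(scores, key=scores.get)
```

Since the projection matrix is computed once per training set, classifying each new face costs a single matrix-vector product plus the class-wise residuals.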

To overcome a major limitation of deep learning models, namely that they may not work well when the number of training samples is too small, Shao et al. [222] proposed a two-step dynamic dictionary optimization model for face image classification. First, a dictionary is constructed from a set of artificial faces generated from differences between pairs of faces. Second, dictionary optimization methods are used to eliminate redundancy in this constructed dictionary: original samples with small contributions are discarded, shrinking the extended dictionary into a more compact structure. This optimized dictionary can then be used for face classification based on sparse representation. In the same direction, Lin et al. [151] recently proposed a virtual dictionary-based kernel sparse representation to overcome the limited-sample problem in face recognition. The model automatically generates a number of new training samples, termed the virtual dictionary, from the original dataset. It then uses the constructed virtual dictionary and the training set to build a kernel sparse representation for classification (KSRC) model. Coordinate descent algorithms are used to solve the KSRC model and improve computational efficiency.
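
The combination of virtual samples and kernel-space representation can be sketched as follows, with two substitutions that should be read as assumptions: horizontally mirrored faces stand in for the virtual samples (a common augmentation, not necessarily Lin et al.'s generator), and the l1 coding solved by coordinate descent in KSRC is replaced by closed-form l2 coding in the same RBF feature space. Function names and the parameters `gamma` and `lam` are hypothetical.

```python
import numpy as np


def rbf_kernel(X, Z, gamma):
    """Gaussian kernel matrix between the columns of X (d, n) and Z (d, m)."""
    sq = (X ** 2).sum(0)[:, None] + (Z ** 2).sum(0)[None, :] - 2 * X.T @ Z
    return np.exp(-gamma * np.maximum(sq, 0.0))


def mirror_virtual_samples(A, height, width):
    """Virtual samples: horizontally mirrored copies of vectorized (row-major) faces."""
    imgs = A.T.reshape(-1, height, width)
    return imgs[:, :, ::-1].reshape(-1, height * width).T


def kernel_crc_classify(A, labels, y, gamma=0.5, lam=1e-2):
    """l2 coding of phi(y) over phi(A), scored by class-wise feature-space residuals."""
    K = rbf_kernel(A, A, gamma)
    k_y = rbf_kernel(A, y[:, None], gamma)[:, 0]
    alpha = np.linalg.solve(K + lam * np.eye(len(K)), k_y)
    scores = {}
    for c in np.unique(labels):
        mask = labels == c
        a_c = alpha[mask]
        # ||phi(y) - Phi_c a_c||^2 = k(y,y) - 2 a_c.k_c(y) + a_c.K_cc.a_c, k(y,y) = 1
        scores[c] = 1.0 - 2 * a_c @ k_y[mask] + a_c @ K[np.ix_(mask, mask)] @ a_c
    return min(scores, key=scores.get)
```

In use, the virtual samples are appended to the training matrix with the same labels, for example `np.hstack([A, mirror_virtual_samples(A, h, w)])` with the label vector repeated, which doubles the dictionary without collecting new images.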

Jing et al. [113] utilized multispectral imaging to boost the performance of face recognition systems. Multi-view dictionary learning is an efficient feature learning model that learns dictionaries from different views of the same object and has obtained state-of-the-art classification results. In the proposed model, multi-view dictionary learning is applied to multispectral face recognition through a new method called multispectral low-rank structured dictionary learning (MLSDL). The method learns multiple dictionaries, including a spectrum-common dictionary and several spectrum-specific dictionaries. Each dictionary contains a set of class-specific sub-dictionaries. A low-rank matrix recovery algorithm is used to regularize the multispectral dictionary learning process so that MLSDL can handle the issues that arise in multispectral face recognition. Performance evaluation on the HK PolyU, CMU and UWA hyperspectral face databases demonstrates the effectiveness of the proposed framework.
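
Low-rank regularizers of the kind used in low-rank matrix recovery are typically enforced through the nuclear norm, whose proximal step is singular value thresholding (SVT). The sketch shows only this generic building block, not the full MLSDL objective; the function name is our own.

```python
import numpy as np


def singular_value_threshold(M, tau):
    """Proximal operator of tau * ||M||_* : shrink every singular value by tau."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)  # small singular values become exactly zero
    return (U * s_shrunk) @ Vt           # rebuild with the shrunken spectrum
```

Applied inside an iterative solver, this step pushes each sub-dictionary (or its coefficient matrix) towards a low-rank structure, suppressing noise components with small singular values.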