Abstract

In medicine, diagnosis is as important as treatment. Retinal blood vessels are the most easily visible vessels in the whole body, and therefore, play a key role in the diagnosis of numerous diseases and eye disorders. Systematic and eye diseases cause morphologic variations, such as the growing, narrowing or branching of retinal blood vessels. Imaging-based screening of retinal blood vessels plays an important role in the identification and follow-up of eye diseases. Therefore, automatic retinal vessel segmentation can be used to diagnose and monitor those diseases. Computer-aided algorithms are required for the analysis of progression of eye diseases. This study proposes a hybrid method that provides a combination of pre-processing and data augmentation methods with a deep learning model. Pre-processing was used to solve the irregular clarification problems and to form a contrast between the background and retinal blood vessels. After pre-processing step, a convolutional neural network (CNN) was designed and then trained for the extraction of retinal blood vessels. In the training phase, data augmentation was performed to improve training performance. The CNN was trained and tested in the DRIVE database, which is commonly used in retinal blood vessel segmentation and publicly available for studies in this area. Results showed that the proposed system extracted vessels with a sensitivity of 77.78%, specificity of 97,84%, precision of 84.17% and accuracy of 95.27%.

This study also compared the results to those of previous studies. The comparison showed that the proposed method is an efficient and successful method for extracting retinal blood vessels. Moreover, the pre-processing phases improved the system performance. We believe that the proposed method and results will make contribution to the literature.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In medicine, diagnosis is as important as treatment. However, providing the fastest and most accurate diagnosis is an important parameter as well. Advances in technology allow us to provide faster and more accurate solutions to current problems. For example, different types of technological devices are used to diagnose a variety of diseases. Generally, x-rays are used for bone and hard tissues, tomography for soft tissues, and fundus cameras for retinal imaging. They are also complementary devices that help doctors to diagnose diseases.

The retina is a light-sensitive tissue that consists of photosensitive cells (photoreceptors) and covers the inner surface of the eye. Photoreceptors convert light into nerve signals that travel along the optic nerves to the brain. Fundus camera systems (retinal microscope) are generally used to obtain images (fundus images) to document the status of the retina. Fundus images contain basic diagnostic information that can be used to determine whether the retina is healthy or damaged. Fundus images are widely used in the diagnosis of vascular and non-vascular pathologies [38] and also provide information on retinal vascular changes that are frequently observed in diabetes, glaucoma, hypertension, cardiovascular diseases, and strokes [10, 11], which generally alter the arrangement of blood vessels [49]. For example, hypertension alters the branching angle or curvature of the vessels [44] while diabetic retinopathy can cause neovascularization, that is, the formation of new blood vessels. If left untreated, such conditions can cause visual impairment and even blindness [42]. Early detection of those symptoms enables us to take measures for the prevention of vision loss and blindness [33]. Retinal blood vessels also play an important role in the early stage diagnosis of numerous diseases. However, manual retinal blood vessel segmentation is a time-consuming and repetitive process that requires training and skill. Therefore, automatic segmentation of retinal vessels is the first step in developing a computer-aided diagnostic system for ophthalmic disorders [12].

For rapid and timely retinal image analysis, ophthalmologists use automated methods, which, therefore, attract the attention of members of the medical image analysis community. Diagnostic analysis of retinal images of blood vessels is of particular interest to eye specialists. Therefore, most studies on computerized methods for retinal image analysis focus on segmentation of retinal blood vessels [39].

Retinal blood vessels are analyzed in many areas - including ophthalmology - for diagnosis, treatment planning and administration, and assessment of clinical outcomes [32]. Methods based on blood vessel segmentation include morphological (structural) filters and multi-scale line detectors. However, those methods fail to solve the main problems of retinal imaging analysis [40]. Therefore, new approaches based on machine learning have been developed in recent years.

Segmentation of retinal blood vessels is of interest in medical image analysis. The studies in this field can be examined under two groups; those using rule-based methods and those using machine learning based methods. Rule-based methods are vessel tracking approaches, adaptation filter, multi-scale approaches, and morphology-based methods. Machine learning based methods are traditional machine learning algorithms where training is carried out with manual segmentation of vessels and deep learning methods thanks to recent developments. Rule-based methods use the natural features of a pixel in a retinal image to determine whether that pixel belongs to the vessel structure. Manual segmentation of vessels has an indirect effect on the success of the algorithm. Previous studies have focused on rule-based methods. For example, Salem et al. proposed a Radius-Based Clustering algorithm, which uses a distance-based principle, to map the distributions of image pixels before segmentation. They made use of three main features of the image: (1) green image channels, (2) maximum gradient magnitude, and (3) local maximum of the largest eigenvalue calculated from the Hessian matrix [36]. Zhang et al. developed a matched filter method with first-order derivative of Gaussian for retinal vessel extraction and reported promising results [50]. Delibasis et al. (2010) proposed a model-based tracing algorithm for vessel segmentation and diameter estimation by using the main geometrical features of blood vessels. They computed the starting points using the Frangi filter and then determined the geometric properties of the vessel such as width, size and orientation. They used a measure of match to quantify the similarity between the image and the developed parametric model [6]. Mendonca et al. (2006) used the DoOG (Difference of Offset Gaussian) filter to detect vessel centerlines and used them as starting points. They used multi-scale morphologically modified top-hat filtering to smooth out the images. The algorithm is complemented with morphological restructuring and vessel filling [31]. Fraz et al. took a step further and combined the morphological bit plane slicing method with the vessel centerlines detection approach. They applied bit plane slicing to the improved gray level images of retinal blood vessels and combined it with vessel centerlines [12]. Ghoshal et al. proposed an improved vessel extraction scheme from retinal fundus images and performed a mathematical morphological process on the image. They converted the original image and the image removed from the vessels into negative gray scale images, which were extracted to balance the image and then were enhanced to obtain thin vessels by transforming the developed image into a binary image. Lastly, they merged the thin vessel image the binary image to obtain the vessel extracted image. They reported that they achieved satisfactory performance results [14].

Studies also recommend machine learning-based methods for retinal blood vessel segmentation. Controlled methods use information on whether a pixel belongs to a vessel or the retinal background. The algorithm learns how to decide whether a pixel belongs to a vessel. Training is carried out using training data along with manually segmented versions. Soares et al. (2006) applied a two-dimensional Gabor wavelet transform and supervised learning and used the two-dimensional Gabor wavelet transform responses at different scales of each pixel as features. They used a Bayesian classifier and classified a complex model quickly [38]. Ricci and Perfetti (2007) proposed a method based on line operator that can be used to calculate the features required for retinal blood vessel segmentation. Their model is faster and requires fewer features than other methods thanks to the line detector that it applies to the green-channel of retinal images [35]. Marin et al. proposed an artificial neural network technique and used a seven-dimensional feature vector to train a multi-layered forward-oriented artificial neural network. They used sigmoid activation function in each neuron of the network with three hidden layers. They reported that the trained network works successfully in other databases as well [29]. Due to recent developments, there is an increase in the number of studies using deep learning for blood vessel segmentation in retinal images. Convolutional neural networks (CNNs), which are robust methods used for image classification and segmentation, also yield successful results in vascular segmentation. CNNs detect and learn features from raw images instead of using manually designed features. Studies on different network structures have reported significant results regarding blood vessel segmentation in retinal images. Liskowski et al. used preprocessing steps to input images and then used a convolutional neural network for training. They used data augmentation and successfully performed vascular segmentation [27]. Melinscak et al. developed a method with an CNN architecture for determining whether each pixel is a vessel or background [30]. Wang et al. proposed a new retinal vessel segmentation framework based on Dense U-net and patch-based learning strategy and reported that the proposed method was promising in terms of common performance metrics [43]. Yao et al. developed a CNN-based blood vessel segmentation algorithm and used a two-stage binarization and a morphological operation to refine the preliminary segmentation results of fundus images. They reported that the proposed algorithm significantly improved the segmentation of blood vessels performance [46]. Guo et al. proposed a CNN-based two class classifier with two convolution layers, two pooling layers, one dropout layer and one loss layer for retinal vessel segmentation. They concluded that the proposed method had high accuracy and training speed without pre-processing [17]. Leopold et al. presented PixelBNN, which is an efficient deep learning method for automatic segmentation of fundus morphologies and reported that it had shorter test time and relatively good performance, considering the reduction of information due to the resizing of images during preprocessing [26].

Image quality and image enhancement play a significant role in object recognition and detection and is an integral part of image and video processing applications [2,3,4]. Advances in technology have provided high resolution images with high pixel density, clear details and rich information. Therefore, high image quality can meet the practical application requirements of image analysis and image understanding [48]. CNN yields successful outcomes in image classification and object detection, which however depends on some factors, such as the network architecture, selection of an activation function, and input image quality. Research shows that the performance of a CNN is negatively affected by poor quality input images, even if not visible [7, 48].

This study proposed a hybrid method based on CNN architectures and image processing techniques for segmentation of blood vessels in retinal images. Different from previous methods, the proposed method utilizes four-step image processing techniques to significantly extract blood vessels from the background and to standardize retinal images for the training of the network. The preprocessed images were randomly selected and cropped to certain sizes, which improved training performance through data augmentation. The post-training network was used to segment blood vessels in the retinal images.

The success of the proposed CNN method depends on several factors. Retinal blood vessels are generally detected by segmenting full-size images. However, the proposed CNN involves detecting regional vascular sections rather than segmenting full-size images. It divides an image into small pieces to detect the retinal blood vessels and then assembles the image back, resulting in an exponential increase in the amount of training data and thus improving the training performance of the CNN. CNNs can learn attributes from raw images and perform well in making sense of images. Moreover, the performance of the proposed CNN was greatly improved by applying preprocessing to the input images. The comparative results also showed that the proposed CNN is an efficient and successful method that can be used for extracting retinal blood vessels.

2 Materials and method

In this study, a fully CNN model was developed, and retinal blood vessels were extracted using semantic segmentation technique. The greatest challenge to detecting retinal blood vessels is differentiating them from surrounding tissues and lesions. There are several points critical for the success of the proposed method, which is successful for several reasons. First, it has transformed the problem of detection of retinal blood vessels from full segmentation to regional segmentation. It divides images into small pieces and detects retinal blood vessels and then brings them together. This leads to an increase in the amount of training data to thousands or even hundreds of thousands, resulting in a significant improvement in the training performance of the CNN. In addition, the method preprocesses input images, thereby, greatly improving the performance of the CNN.

The proposed method consists of three parts. Before processing, the data were divided into groups: training and test. Prior to training and testing, a series of preprocesses were applied to the images, and then, data augmentation was performed, which greatly affected the performance of the full CNN. This both diversified the training data and evolved the problem from full segmentation into regional segmentation of retinal blood vessels in retinal images. The image slices after data augmentation were used as training data. Test data were used to test the performance of the network after training. In the final step, the image slices were combined. The Fig. 1 shows the operational steps and the details of the methods used in Fig. 1 are given in the following sections.

2.1 Data set

The retinal images were obtained from the publicly accessible DRIVE database [22], which is the result of a screening program for diabetic retinopathy in 400 diabetic patients 25 to 90 years of age in the Netherlands. The database consists of 40 fundus images, 33 of which exhibit no symptoms of diabetic retinopathy while the rest present symptoms of mild early diabetic retinopathy. The images were captured using a Canon CR5 non-mydriatic 3CCD camera with a 45-degree field of view (FOV). Each image was captured using 24 bits per color plane at 768 by 584 pixels. The FOV of each image is circular with a diameter of approximately 540 pixels. The images are cropped around the FOV. Each image is provided with a mask image that delineates the FOV [34, 41].

The DRIVE database consists of 20 training and 20 test images along with manually segmented versions. Figure 2 shows an example of a training image. The database also has its own gold standard image, which is used both to compare computer-generated vessels with that of an independent observer and as a training outcome. Retinal images were also obtained from a second database, the STARE, consisting of 20 retinal fundus images (10 from healthy individuals and 10 from individuals with pathology). Each image was carefully hand-labeled to produce a ground truth vessels segmentation [20, 21].

Types of images in the DRIVE data set: (left to right), color fundus image, gold standard image and mask image [22]

2.2 Gray level transformation

Gray level transformation is often used in image processing. Several different methods are used for gray level transformation, which is achieved by adding a constant value to or multiplying a constant value by the color channels of a given image. Its generalized version is as follows:

where GL is the gray level of a given pixel. R, G and B are the red, green and blue channel values, respectively, and \( {k}_{\begin{array}{c}r\\ {}\ \end{array}} \), \( {k}_{\begin{array}{c}g\\ {}\ \end{array}} \) and \( {k}_{\begin{array}{c}b\\ {}\ \end{array}} \) are the factors of three different channels. These coefficients must satisfy the condition in Eq. 2 [47].

2.3 Gray level normalization

Uneven illumination, local brightness and contrast differences are common problems observed in obtaining fundus images [23]. To overcome those problems, data standardization is applied to image sets that are used as training data. In the first stage of data standardization, the mean value (Eq. 3) and standard deviation (Eq. 4) of the entire image data set are calculated.

where l is the total number of images, n is the image height, m is the image width, and N is the total number of pixels.

Standardization is then calculated using the standard deviation and mean values (Eq. 5).

In the next stage, the standardized input image data set is normalized (Eq. 6).

where min(Ik) and max(Ik) are, respectively, the minimum and maximum pixel gray level values of the first image [37].

2.4 Contrast limited adaptive histogram equalization (CLAHE)

Vessels that are characterized as small and extreme should be accurately segmented in fundus images because they generally have low contrast with the background. The histogram equalization can be used to enhance image contrast.

For a given gray level image, let ni be the number of gray levels at i occurrences. The probability that a pixel of level i occurs in the image is calculated as follows:

where i is the total number of gray levels (usually 256), n is the total number of pixels in the image, and p(i) is the histogram value of level i in the image. Hence, cumulative distribution function is calculated as follows:

A transform is calculated to obtain an image with a flat histogram. The resulting image has a linear increasing cumulative distribution function.

The histogram equalization calculates and normalizes the density distribution of a given image. The use of all pixels in normalization, however, results in noisy data. Therefore, contrast limited adaptive histogram equalization (CLAHE) can be used alternately. CLAHE divides the image into sections and then calculates and equalizes the histogram of each section. Applying histogram equalization to sections may result in noisy data. A contrast limit is set to overcome this.

Rayleigh Distribution is generally used to clip the histogram in CLAHE, which is also used to prevent excessive contrast magnification [23]. Rayleigh Distribution is expressed as follows:

where P(f) is the cumulative probability distribution and gmin is the minimum pixel value.

In the last stage, subregions are joined using bi-linear interpolation method, resulting in an improved image [19, 24]. Fig. 3 shows the pixel density distribution, i.e., histogram, of a randomly selected image before and after CLAHE.

2.5 Gamma correction

Gamma correction applies a linear or nonlinear mapping between input and output gray values depending on the value of gamma. If gamma is 1, the mapping is linear. If gamma is less than 1, the mapping is weighted towards brighter values. If gamma is greater than 1, the mapping is weighted towards darker values. It is generally expressed as in Eq. 10.

Where A is generally 1 and γ is the gamma value [5].

2.6 Deep learning

Deep learning is a branch of machine learning that has provided significant solutions to many problems in recent years. It is based on artificial neural networks (ANNs), which, however, fell out of favor in the 2000s due to small data sets, low processing capacity of computers, insufficient hardware despite increasing number of layers and relatively ineffective non-linear activation functions [45]. ANNs have become popular again due to the emergence of big data, improvements in hardware technology, new non-linear activation functions and new methods of initialization [15, 18] and new methods of regularization.

Multilayer ANN models as shown in Fig. 4, aim to calculate the weight and bias parameters with which the model will yield the best output score. Multilayer sensors have many deep-layered variants, some of which are CNNs [25]. CNNs are advanced neural networks inspired by the visual cortex of living creatures and specialized for processing data with grid-like topology. Images are regarded as 2-dimensional grid-type structures.

Convolution (Eq. 11) is the response of an artificial nerve cell to excitations [25]. CNNs employ a special linear mathematical operation referred to as convolution as well, hence the name “convolutional neural network.” In CNN terminology, the first argument is the input, the second argument is the kernel and the output is the feature map [16]. Figure 4 shows the schematic of a given ANN and CNN.

Deep learning is based on learning multiple levels of data resulting from the interaction of multiple abstract layers. The output of a layer is the input to the next layer. In the first layers, low quality features are derived, from which high-quality features are derived, resulting in a hierarchical representation. Deep learning provides low- and high-quality feature extraction from a given raw image [1].

In a CNN, the convolution layer is the basic layer where the perception of the basic features of an image is carried out. In Eq. 12, the output attribute map is expressed after the convolution in its most general form. Given a convolution layer l, the input consists of \( {kb}_1^{\left(l-1\right)} \) attribute maps of the previous convolution layer. When l = 1, this value is the channel size \( {kb}_{\mathbf{2}}^{\left(l-1\right)} \), \( {kb}_3^{\left(l-1\right)} \) image dimensions of the image input.

where \( {Y}_j^{\left(l-1\right)}\ \mathrm{is}\ \mathrm{the}\ \mathrm{input} \), \( {Y}_i^{(l)} \) is the ith output attribute matrix, \( {B}_{\boldsymbol{i}}^{\left(\boldsymbol{l}\right)} \) is the bias matrix, and \( {K}_{i,j}^{(l)} \) is the filter used in convolution.

The sharing layer is another important layer of a CNN. Pooling refers to the process in which the value of pixels in an output matrix is calculated using the statistical summary of the pixels around the data at a certain point in the input matrix, thereby creating all the values of the output matrix [16]. Pooling is used to reduce size. The dimensions of the pooled matrix is calculated using Eq. 13:

where G is the width, Y is the height, D is the depth, S is the number of shifting steps, and F is the filter size.

Multi-layer artificial neural networks can use activation functions to learn nonlinear real-world information. ReLU, which is the most common activation function in deep layered artificial neural networks, is expressed as follows:

2.7 Data augmentation

Data augmentation, such as rotation, scaling, cropping, and mirroring, is used to expand the training of a data set, and thus, to improve training performance in deep learning. In this study, data augmentation was performed by dividing an input image into small regions.

A training set is prepared using random patch generation procedure, where the points (x, y) that satisfy the conditions in Eqs. 15 and 16 are randomly selected. Cropped patches with a dimension of 28 × 28 remain in the center of the image slice.

where H and W are the image height and width, respectively. The points are randomly selected, and therefore, the image slices overlap. Moreover, the image slices are completely or partially out of sight, which allows the proposed CNN to distinguish between visual field boundaries and retinal blood vessels [30].

The test set is prepared using ordered patch generation with sliding procedure, where multiple consecutive overlapping patches with a striding step of 5 pixels and a dimension of 28 × 28 are obtained in each testing image.

3 Experiments and results

This study proposed a method based on image processing techniques and deep CNN architectures for segmentation of blood vessels in retinal images. The method primarily applied image processing techniques to extract blood vessels from the background and standardized the retinal images for the training of the network. The preprocessed images were randomly selected and cropped to certain sizes, which improved training performance through data augmentation. After preprocessing, the network was trained. The resulting network was used to perform segmentation of blood vessels in retinal images.

The proposed method was performed in MATLAB environment on a PC Intel Core i7 @ 2.6 GHz with 16 GB RAM and Nvidia GTX 960 M running Windows 10. The fully CNN was trained on a GPU (graphic processing unit). Training lasts an average of 8 h on a single GPU whereas preprocessing steps are completed much faster. The DRIVE database consists of 20 training and 20 test images. It takes an average of 4.5 s to crop 40,000 random packaged images from the training set and to generate and preprocess 8000 consecutive test packages from the test set.

The proposed method used color fundus images as input. Preprocessing began with the process of transforming the color images into gray level images. Gray level transformation was carried out using Eq. 1. Experiments showed that \( {k}_{\begin{array}{c}r\\ {}\ \end{array}}=0.299 \), \( {k}_{\begin{array}{c}g\\ {}\ \end{array}}=0.587 \) and \( {k}_{\begin{array}{c}b\\ {}\ \end{array}}=0.114 \) were the ideal coefficient values for gray transformation. Gray level normalization was then performed. Afterwards, CLAHE was applied to the normalized images. In this process, 8 × 8 pixel dimensions were selected for image sub-regions. Normal distribution was selected for the histogram equalization intensity distribution. Lastly, gamma correction was performed, and the optimal gamma was found to be 0.833. In the last step, the gray level of the image ranged from 0 to 1.

After preprocessing, data augmentation was performed by dividing an input image into small regions in order to increase the amount of data, and thereby, to improve training performance. The data set consisted of 40 retinal images along with manually segmented versions. 2000 images (28 × 28) were extracted from each training image. Figure 5 shows the image slices. A total of 40,000 image slices were prepared for training.

Striding step 5 was selected, and the test images were sequentially cropped from the full image to obtain image slices (28 × 28). A total of 246,340 image slices (12,317 per image) were obtained.

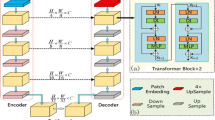

Data augmentation was followed by the generation of fully CNN for segmentation of retinal blood vessels. Figure 6 shows the overall structure of the network. CL is the convolution layers, BNL is the batch normalization layers, MP is the maximum pooling, and TCL is the transpose convolution layer (upsampling).

The first layer consisted of preprocessed image slices (1 × 28 × 28). The inputs were convoluted in the first convolution layer (C1). Layer C1 consisted of 32 filters of size 1 × 28 × 28. The pixel addition method was used to avoid a dimensional difference between the convolution output and input. The ReLU was the activation function, and batch normalization layer was added at the end of the output. The second convolution layer (C2) had the same characteristics as C1. Sequential convolution layers were followed by maximum pooling. The layer dimensions were 2 × 2, and the striding step was 2. After maximum pooling, the input dimensions were reduced by half (14 × 14). Then, there were two more convolution layers (C3, C4). C3 and C4 were 3 × 3 as well, however, the number of filters was increased to 64 because the input sizes were reduced. The activation functions were ReLU and supported by the batch normalization layer. Subsequently, outputs C4 were convoluted using the upsampling layer to achieve a dimensional increase. The size of the transpose convolution layer was 2 × 2. It consisted of 64 filters and the striding step was 2, resulting in a two-fold increase in input size (28 × 28). The next layers were C5 and C6, which were the same as C1 and C2. The last part involved the combination of features for the calculation of class scores and contained the convolution layer with a size of 1 × 1. In that layer, filters as many as classes, that is, two filters (retinal blood vessels and background) were applied. The SoftMax function was applied onto the outputs followed by classification involving pixel-based classification rather than a full image-based scalar score calculation. This layer showed to which class each pixel belonged and labeled background pixels as 0 and retinal blood vessels as 1. The output image matrix of 0 and 1 was converted to black and white image in the next stage.

In the training stage, cross-entropy function was used as cost function (Eq. 17).

where yi and \( {y}_i^{\prime } \) are the class label and estimation matrices, respectively. The two matrices have the same dimensions and represent the neighborhood of an (x, y) coordinate of the preprocessed images before they are divided into image slices.

Optimization in an ANN is a process of minimizing the difference between the actual values and the ANN output. Gradient-descent is the most common optimization method. In this study, stochastic gradient descent was used as the optimization method.

In CNNs, weights and biases of neurons are updated using the back-propagation method, in which the difference between predicted and actual outputs is calculated, and then, the difference is multiplied by the learning rate parameter, and the resulting value is subtracted from the weight value. A high learning rate causes the network to be affected by the input data and makes it prone to overshoot the minima, whereas a low learning rate results in prolonged learning. The learning rate of the proposed deep network during training was 0.0001.

The batch size is the number of training examples used per iteration. In general, 32, 64 and 128 iterations are used. The training of an ANN with each batch of data is referred to as 1 iteration. The completion of training of all data batches of a data set is referred to as epoch. In this study, the batch size was 32, and the network was trained for 60 epochs, i.e. 75,000 iterations. Figure 7 shows the feature maps obtained from training.

Striding step was set to 5, and the test was performed on 246,340 image slices (12,317 per image). Each image slice was tested, and the results were stored for later assembly. Overlapping pixels were averaged. These mean values correspond to the likelihood that a pixel belongs to a vessel or not. These values ranging from 0 to 1 were set to 0 or 1 using the thresholding method. As a result of experiments, the threshold value was selected as 0.5.

The difference between estimated and actual values is calculated to evaluate the performance of a network. As mentioned earlier, the DRIVE database was used in this study. The DRIVE database consists of 20 training and 20 test images along with manually segmented versions. Network performance was determined comparing the network output on those images. Five most common performance metrics, accuracy (Eq. 18), specificity (Eq. 19), sensitivity (Eq. 20), precision (Eq. 21), and F1 score (Eq. 22) as well as Structure-measure (SM) proposed by Fan et al. [8], WFb proposed by Margolin et al. [28], E-measure proposed by Fan et al. [9] was used in this study.

Accuracy (ACC), which is the ratio of correctly classified pixels (TP and TN) to the total number of pixels, is the most widely used metric for evaluation. Specificity (SPEC) is a measure of how well a model can detect truly negatives. In other words, it is a measure of the ability of a model to separate vessel pixels from the retinal background pixels. Sensitivity (SENS) is a measure of success of the network in identifying vessel pixels that are truly vessel pixels. The higher the score, the higher the success. Precision (PREC) is a measure of how close values corresponding to inputs are to the average value in repeated measures. Table 1 shows the performance metrics of the proposed method in the DRIVE test images. Figure 8 shows the radar graph, which is a different display technique.

The proposed fully convolutional ANN yielded a similar accuracy rate on all images and calculated a standard deviation of 0.0042, indicating that it is resistant to uneven distribution of light and to low contrast.

The proposed method was also tested using the STARE database. Unlike the DRIVE database, the STARE database does not contain FOV images. Therefore, color images were filtered to obtain FOV masks. The training and test data for the STARE database were randomly determined as 50 %. All steps were performed on the STARE database in the same order as in the DRIVE database. Table 2 shows the performance metrics of the proposed method in both data sets.

CNNs are good at extracting features from and segmenting blood vessels in raw retinal images. The preprocessing steps greatly improved the performance of the proposed CNN on the DRIVE data set (Table 3). Table 4 compares the overall performance metrics of the proposed method with those reported by previous studies for the DRIVE and STARE data sets while Figs. 9 and 10 presents the radar graph comparative representation.

Table 4, Figs. 9 and 10 show that the proposed method yields better results than some studies. In terms of accuracy, the proposed method is one of the most successful ones. The performance metrics of the proposed method and of other deep learning methods indicate that CNNs can be successfully used for retinal vessel segmentation. Rule-based methods have the lowest performance whereas ANNs in general and CNNs in particular are the most successful methods.

It is not enough to use the criterion “accuracy” to perform performance evaluation. Therefore, rank analysis was performed on the proposed method and other studies for general evaluation in DRIVE and STARE datasets. Table 5 shows the results of the analysis.

Most studies did not use the precision metric in rank analysis. Therefore, it was also excluded from this study to make the analysis more objective. The mean rank analysis of accuracy, sensitivity, and specificity suggests that the proposed method is overall one of the most successful methods. All the metric scores of the proposed method are higher than the total mean scores, and what is more, the other methods that perform better in total rank analysis have some metric scores far below the total mean scores (Table 4). We can, therefore, conclude that the proposed method is one of the most consistent methods in terms of specificity, sensitivity, and accuracy.

Figure 11 shows the segmentation outputs of the proposed method for retinal vessel segmentation.

4 Conclusion & Discussion

Computer-aided algorithms are being used more and more widely on medical images to detect eye diseases. Accurate diagnosis and assessment of diseases depends both on image acquisition and interpretation. There has been significant progress in image acquisition thanks to recent advances in technology. Moreover, advances in computer technologies and improvements in processing performance have made the extraction of data from medical images using computer-aided algorithms more and more popular.

Medical images are generally interpreted by doctors. However, expert interpretation is costly and might differ widely. Computer-aided tools, such as machine learning and image processing, help experts diagnose diseases. One of those tools is deep learning, which is widely used thanks to advances in computer hardware and software.

This study proposed a method of blood vessel segmentation in color retinal images. Although CNNs can learn and classify important features from raw images, preprocessing was conducted to improve classification performance. Preprocessing consists of four steps; (1) gray level transformation, (2) gray level normalization to eliminate noise that adversely affects image quality, such as uneven illumination, local brightness etc., (3) contrast-limited adaptive histogram equalization to eliminate contrast differences, and (4) Gamma correction to better regulate gray levels.

Data augmentation was used to improve the learning capacity and performance of the fully convolutional network. Central points were randomly determined to divide the input images into small slices of size 28 × 28. The image slices were introduced to CNN as input. During learning, networks autonomously extract low quality features that do not change with respect to small geometric variations, and then, gradually transform them into higher quality features. This makes raw images more usable and suitable for blood vessel segmentation. A complex function is used to automatically evaluate the progressively learned features, and input image slices corresponding to estimation outputs are obtained.

The striding step was set to 5, and the image was cut into slices with a size of 28 × 28 in order to improve the network forecasting performance. The overlapping pixels were averaged, and the thresholding method was used to decide whether a pixel belonged to a vessel or not, which improved the network forecasting performance.

The fact that CNNs automatically extract features from images makes it easier to overcome problems in the selection of correct features. In the first layers, simpler features are extracted while, in the following layers, more complex feature maps are extracted. Therefore, the process can express the desired features better than manually extracted features.

In the proposed method, the preprocessing steps greatly improved the performance of the neural network, and the CNNs had good forecasting performance in retinal blood vessel segmentation. The superior performance of the supervised learning networks shows that eliciting information on dependencies between class labels for neighboring pixels is of importance for segmentation of continuous anatomical structures, such as retinal blood vessels. We, therefore, believe that the proposed method is an effective and reliable method that can be used by future studies on artificial intelligence-based disease diagnosis systems to detect retinal blood vessels. In recent years, CNNs have been popular in many areas related to image processing and salient object detection [13, 51]. Most CNN-based salient object detection approaches regard the image patches as input and use multi-scale or multi-context information to obtain a final saliency map. In this context, future studies can use salient edge features for segmentation of retinal blood vessels and detect vessels, especially their boundaries, more accurately.

References

Bengio, Y (2009). Learning deep architectures for AI technical report 1312, Dept. IRO, Universit’e de Montr’eal, Montreal, Canada, 2: 1–127.

Chen Y, Wang J, Chen X, Zhu M, Yang K, Wang Z, Xia R (2019) Single-image super-resolution algorithm based on structural self-similarity and deformation block features. IEEE Access 7:58791–58801

Chen, Y, Wang, J, Liu, S, Chen, X, Xiong, J, Yang, K (2019). Multiscale fast correlation filtering tracking algorithm based on a feature fusion model. Concurrency Computat Pract Exper https://doi.org/10.1002/cpe.5533

Chen Y, Wang J, Xia R, Zhang Q, Cao Z, Yang K (2019) The visual object tracking algorithm research based on adaptive combination kernel. J Ambient Intell Human Comput 10:4855–4867

David R (2014). Bull, chapter 4 - digital picture formats and representations, editor(s): David R. Bull, Communicating Pictures, Academic Press, 99–132.

Delibasis KK, Kechriniotis AI, Tsonos C, Assimakis N (2010) Automatic model-based tracing algorithm for vessel segmentation and diameter estimation. Comput Methods Prog Biomed 100:108–122

Dodge, S, Karam, L, (2016). Understanding how image quality affects deep neural networks. Eighth international conference on quality of multimedia experience (QoMEX), pp. 1–6.

Fan D, Cheng M, Liu Y, Li T, Borji A (2017) Structure-measure: a new way to evaluate foreground maps, IEEE international conference on computer vision (ICCV). Venice 2017:4558–4567

Fan, DP, Gong, C, Cao, Y, Ren, B, Cheng, MM, Borji, A, (2018). Enhanced-alignment measure for binary foreground map evaluation. Proceedings of the 27th international joint conference on artificial intelligence, 698-704.

Fang, B, Hsu, W and Lee, MU (2003). On the detection of retinal vessels in fundus images. http://hdl.handle.net/1721.1/3675 (10.04.2019).

Fathi A, Naghsh-Nilchi AR (2012) Automatic wavelet-based retinal blood vessels segmentation and vessel diameter estimation. Biomedical Signal Processing and Control 8:71–80

Fraz MM, Barman SA, Remagnino P, Hoppe A, Basit A, Uyyanonvara B, Rudnicka AR, Owen CG (2012) An approach to localize the retinal blood vessels using bit Planes and centerline detection. Comput Methods Prog Biomed 108:600–616

Fu K, Zhao Q, Gu IYH, Yang J (2019) Deepside: a general deep framework for salient object detection. Neurocomputing 356:69–82

Ghoshal R, Saha A, Das S (2019) An improved vessel extraction scheme from retinal fundus images. Multimed Tools Appl 78:25221–25239

Glorot, X and Bengio, Y (2010). Understanding the difficulty of training deep feedforward neural networks. Proceedings of the thirteenth international conference on artificial intelligence and statistics, Universite de Montr ´ eal, Canada, 249–256.

Goodfellow IJ, Bengio Y, Courville A (2017) Deep learning. MIT Press, USA

Guo Y, Budak Ü, Vespa LJ, Khorasani E, Şengür A (2018) A retinal vessel detection approach using convolution neural network with reinforcement sample learning strategy. Measurement 125:586–591

He, K, Zhang, X, Ren, S, Sun, J (2015). Delving deep into rectifiers: surpassing human-level performance on ImageNet classification, IEEE international conference on computer vision (ICCV), USA, December 07–13, 1026–1034.

Hemanth, DJ, Deperlioglu, O, Kose, U (2018). An enhanced diabetic retinopathy detection and classification approach using deep convolutional neural network. Neural Computing and Applications,1–15.

Hoover A, Kouznetsova V, Goldbaum M (2000) Locating blood vessels in retinal images by piece-wise Threhsold probing of a matched filter response. IEEE Trans Med Imaging 19(3):203–210

Kolar, R, Odstrcilik, J, Jan, J, Harabis, V (2011). Illumination correction and contrast equalization in colour fundus images. 19th European signal processing conference, Brno University of Technology, Barcelona, Spain, September 2, 298–302.

Kumar M, Rana A (2016) Image enhancement using contrast limited adaptive histogram equalization and wiener filter. International Journal Of Engineering And Computer Science 5:16977–16979

Lecun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proceedings of the IEEE 86:2278–2324

Leopold HA, Orchard J, Zelek JS, Lakshminarayanan V (2019) Pixelbnn: augmenting the pixelcnn with batch normalization and the presentation of a fast architecture for retinal vessel segmentation. J Imaging 2019(5):26

Liskowski P, Krawiec K (2016) Segmenting retinal blood vessels with newline deep neural networks. IEEE Trans Med Imaging 35:2369–2380

Margolin R, Zelnik-Manor L, Tal A (2014) How to evaluate foreground maps. IEEE Conference on Computer Vision and Pattern Recognition, Columbus, pp 248–255

Marin D, Aquino A, Gegúndez-Arias ME, Bravo JM (2011) A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging 30:146–158

Melinscak, M, Prentasic, P, Loncaric S (2015). Retinal vessel segmentation using deep neural networks. VISAPP 2015- 10th international conference on computer vision theory and applications, Berlin, Germany,1: 577-582.

Mendonca AM, ve Campilho A (2006) Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans Med Imaging 25:1200–1213

Moccia S, Momi E, Hadji S, Mattos L (2018) Blood vessel segmentation algorithms – review of methods, data sets and evaluation metrics. Comput Methods Prog Biomed 158:71–91

Nguyen UT, Bhuiyan A, Park LA, Ramamohanarao K (2013) An effective retinal blood vessel segmentation method using multi-scale line detection. Pattern Recogn 46:703–715

Niemeijer, M, Staal, JJ, van Ginneken, B, Loog, M, Abramoff, MD, (2004). Comparative study of retinal vessel segmentation methods on a new publicly available database, in: SPIE Medical Imaging, Editor(s): J Michael Fitzpatrick, M Sonka, SPIE, vol. 5370, pp. 648–656.

Ricci E, Perfetti R (2007) Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans Med Imaging 26:1357–1365

Salem SA, Salem NM, Nandi AK (2007) Segmentation of retinal blood vessels using a novel clustering algorithm with a partial supervision strategy. Medical & Biological Engineering & Computing 45:261–273

Sane P and Agrawal R (2017). Pixel normalization from numeric data as input to neural networks for machine learning and image processing. IEEE WiSPNET conference, 2250–2254.

Soares JV, Leandro JJ, Cesar RM, Jelinek HF, Cree MJ (2006) Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Transaction of Medical Imaging 25:1214–1222

Soomro TA, Gao J, Khan TM, Hani AFM, Khan AUM, Manoranjan P (2017) Computerised approaches for the detection of diabetic retinopathy using retinal fundus images. Journal of Pattern Analysis and Application 20:927–961

Soomro, TA, Gao, J, Khan, MAU, Khan, TM, Paul, MA (2016). Role of image contrast enhancement technique for ophthalmologist as diagnostic tool for diabetic retinopathy. International conference on digital image computing: techniques and applications, Queensland, Australia.1- 8.

Staal JJ, Abramoff MD, Niemeijer M, Viergever MA, van Ginneken B (2004) Ridge based vessel segmentation in color images of the retina. IEEE Transactions on Medical Imaging 23:501–509

Sussman EJ, Tsiaras WG, Soper KA (1982) Diagnosis of diabetic eye disease. J Am Med Assoc 247:3231–3234

Wang C, Zhao Z, Ren Q, Xu Y, Yu Y (2019) Dense U-net based on patch-based learning for retinal vessel segmentation. Entropy 21(2):168

Wasan B, Cerutti A, Ford S, Marsh R (1995) Vascular network changes in the retina with age and hypertension. J Hypertens 13:1724–1728

Wong RKTY, Klein BEK, Tielsch JM, Hubbard L, Nieto FJ (2001) Retinal microvascular abnormalities and their Reletionship with hypertension, cardiovascular disease, and mortality. Surv Ophthalmol 46:59–80

Yao, Z, Zhang, Z, Xu, LQ, (2016). Convolutional neural network for retinal blood vessel segmentation. In proceedings of the 9th international symposium on computational intelligence and design (ISCID), Hangzhou, China, 10–11 December 2016; pp. 406–409.

Yavuz, Z., (2018). Extraction of blood vessels with pixel based classification methods in retinal fundus images, Phd Thesis, Karadeniz Technical University, Institute of Science and Technology.

Yim, J, Sohn, KA, (2017). Enhancing the performance of convolutional neural networks on quality degraded data sets. arXiv:1710.06805.

You X, Peng Q, Yuan Y, Cheung Y, Lei J (2011) Segmentation of retinal blood vessels using the radial projection and semi-supervised approach. Pattern Recogn 44:2314–2324

Zhang B, Zhang L, Zhang L, Karray A (2010) Retinal vessel extraction by matched filter with first -order derivative of gaussian. Computersin Biologyand Medicine 40:438–445

Zhao JX, Liu JJ, Fan DP, Cao Y, Yang J, Cheng MM (2019) EGNet: edge guidance network for salient object detection. In: International conference on computer vision (ICCV), pp 8779–8788

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Uysal, E., Güraksin, G.E. Computer-aided retinal vessel segmentation in retinal images: convolutional neural networks. Multimed Tools Appl 80, 3505–3528 (2021). https://doi.org/10.1007/s11042-020-09372-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-020-09372-w