Abstract

Content based image retrieval (CBIR) is an extrusive technique of retrieving the relevant images from vast image archives by extracting their low level features. In this research paper, the pursuance of five most prominent texture feature extraction techniques used in CBIR systems are experimentally compared in detail. The main issue with the CBIR systems is the proper selection of techniques for the extraction of low level features which comprises of color, texture and shape. Among these features, texture is one of the most decisive and dominant features. This selection of features completely depends upon the type of images to be retrieved from the database. The texture techniques explored here are Grey level co-occurrence matrix (GLCM), Discrete wavelet transform (DWT), Gabor transform, Curvelet and Local binary pattern (LBP). These are experimented on three touchstone databases which are Wang, Corel-5 K and Corel-10 K. The chief parameters of CBIR systems are evaluated here such as precision, recall and F-measure on all these databases using all the techniques. After detailed investigation it is figured out that LBP, GLCM and DWT provide highlighted and comparable results in all these datasets in terms of average precision. Besides practical implementation, the précised conceptual examination of these three texture techniques is also proposed in this article. So, this analysis is extremely beneficial for selecting the appropriate feature extraction technique by taking into consideration the experimental results along with image conditions such as noise, rotation etc.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the incredible up-gradation in the fields of image capturing devices, smart mobiles etc. there has been a considerable innovation in the area of image processing and image storage devices. Due to all this, there is the formation of different types of colossal image repositories. But dealing with these databases is a very challenging and tedious task. Consequently, for searching and indexing the images from these huge databases a competent and fast retrieval system is needed. Traditionally, retrieval of the images is done by text based image retrieval (TBIR) system, where textual description such as keywords or some text which describes the image is entered manually in the system [1]. From that particular word the similar images are finally retrieved. But this system suffers from several pit falls such as synonyms, homonyms, human annotation errors etc. Due to all these limitations TBIR is an inaccurate image retrieval system. So to overcome the limitations of TBIR systems content based image retrieval system (CBIR) was developed which retrieves the images automatically using the visual attributes of the images and without any human interference [2].

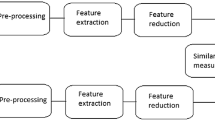

So in order to make this type of system, the first step is the extraction of productive and efficient low level features of the image and after that similarity algorithm is applied for retrieval of images. The three common well known visual features are color, shape and texture [3]. The contents of the image are directly related to these low level features. Almost every image annotation and retrieval systems have been developed successfully by using these features. When all the three features are integrated, the retrieval results obtained are much better. But individually the color and texture are the two most important and widely used features due to their easier extraction process and higher accuracy as compared with shape [4]. The complete CBIR system with given single query image and retrieved multiple image is shown in Fig. 1.

The similar types of features are extracted from the query as well as from the database images and the feature vector is constructed. After this step, the similarity calculation is evaluated between query image and all the database images using various distance measures such as Euclidean, manhattan, city block and many more. Decisively, depending on those values the images are retrieved. Lesser is the evaluated distance value more is the similar image [5].

These systems have many applications mainly in the areas of medical diagnosis, criminal identification, GIS, advertisement and many more [6, 7]. This experimental analysis is mainly focused on the texture techniques that had been discussed and proposed in the literature. The texture features that are analyzed are basically statistical based and transform based and delivers the enhanced results as associated to others [8].

No doubt, there is a great swing of deep learning algorithms like Convolutional neural networks, auto-encoders, deep belief networks etc. in today’s world because they automatically extracts the features from the images [9]. No separate color, texture and shape feature extraction techniques are required to extract the features from the images. On the other side, it is also very truthful that the deep learning algorithm takes a very high time during its training as compared to other feature extraction techniques.

The research paper is framed as: In the next section brief survey work is presented. In the 3rd section different types of image retrieval techniques are presented. In the next section i.e. section 4 types of image features are discussed. In section 5 the different texture techniques which are experimented here are discussed in detail. 6th is the proposed system and 7th is the experimental results section. Last is the conclusion and future scope section.

2 Related works

In this section, various latest CBIR systems especially based on texture feature extraction techniques are discussed. The combination of two texture techniques was used in [8] i.e. wavelet and curvelet transform along with dominant color descriptor (DCD) technique to improve the capability of the system. Another combination of texture and color based CBIR system was proposed in [2] along with some amount of spatial information so as to increase the discriminating power of color indexing. Here, in this system, an image is separated into three non-overlapping regions and the color distribution of first three instants was extracted. For texture feature extraction, Gabor texture descriptors were used and Canberra distance is used for similarity measurement.

The curvelet approach was proposed in [10] which provide good accuracy in texture retrieval. In this scheme, from the transformed images, low order statistics are computed. Results here proved that it provides better results than Gabor texture feature. There are some images in which there is variation in the sizes and deformation the shape based feature comes into picture. By keeping this in mind Jin et al. designed the shape based CBIR system which can then be combined with texture and color to provide the better results [11].

Different features have their own pros and cons and when these features are properly selected and combined together they will become more effective and accurate. The two features were combined i.e. discrete wavelet transforms and edge histogram descriptor for texture and shapes respectively [12]. Sometimes only the single texture feature provides the good results such Local tri-directional pattern was proposed in [13] which was the texture feature and uses the pixel intensity in three directions. It was then compared with other existing systems based on image retrieval applications..

The main issue or the limitation of CBIR systems is the difference among the low and high level features of the images namely semantic gap. To overcome this limitation relevance feedback was used. In [14] the work was done to decrease the semantic gap by the process of feature selection and adaptation. In this paper mixed gravitational search algorithm was engaged for optimization of color and texture features to maximize the precision value. For this feature subset selection was also done to increase the effectiveness of the system.

To reduce the problem of semantic gap many researchers has proposed some algorithms such as in some papers the semantic gap was not reduced based on feature selection but based on the distance measurement. The hybrid system was proposed on the basis of algorithm Sequential forward selector meta-heuristic with relevant feedback and a single round. Wang database was used and color moment feature was used for extraction of color features and this system showed to be much effective than others [15]. In [16, 17] different types of relevant feedback techniques were analyzed such as SVM, query refining and deep learning.

This paper is mainly focused on texture feature extraction techniques which are mostly used in the literature along with fusion of other features. Some of which are shown in Table 1.

3 Types of image retrieval techniques

The image retrieval techniques can be classified into three major categories based features which color, texture and shape.

Color based retrieval

For retrieving the images, color is considered to be the most significant feature. There are multifold retrieval techniques based on colors, such as Color moments, auto-correlogram and color histogram. For digital images retrieval, color histogram is most frequently employed for color feature extraction [18]. The main advantage of the color histograms are speed and minimum storage space. It embodies the frequency distribution of the color pixels in the images. It figure out number of similar pixels of the image and stores that pixels. The major limitation of this technique is that the spatial information is not evaluated during its computation. Color moment is also very suitable and fast technique as it provides the statistical measures like mean and standard deviation etc. [19]. Many other techniques are there such as color correlogram, dominant color descriptor etc.

Texture retrieval

The texture signifies the spatial arrangement of pixel elements that are present in the images. The most commonly used technique is GLCM as it provides comparable features like the human visual system. In GLCM, the statistical features are computed such as energy, entropy, correlation etc. [20]. LBP technique is mostly used in many applications as it is very swift and moreover provides very accurate results. DWT is a signal processing technique which is able to capture the spatial characteristic present in the images and also it is multi-resolutional technique.

Shape retrieval

Shape allows an image to be recognized from its surroundings, edges and regions. Shapes of digital images can be extracted by using any one of the following methods. Generally used shape feature extraction techniques are contour based shape extraction, boundary based, region based and Generalized Hough Transform (GHT). These techniques are robust to the de-formalities of shape and tolerant to noise [21].

4 Image feature types

The features are the measurement functions which quantify the significant and important characteristics of the images. These image features are classified in two types i.e. low and high level features. The color, texture and shape are the low level features and are independent of the application. The high level features are determined in order to turn down the ‘semantic gap’ constraint of CBIR systems and the extraction of these features are also based on low level features [22]. Semantic gap refers as the variation between the low level features of an image picked up by the system and the human perception. This issue can be effectively overcome by the mechanism of Relevant Feedback in CBIR system [23]. The CBIR with relevant feedback is shown in Fig. 2. It acts as an interface which connects the user with the search engine.

With the help of this technique, output images are refined which depends upon the feedback from the user. This operation repeats till the applicable results are not obtained.

5 Texture feature extraction techniques

Texture refers to the spatial organization of intensities in an image. The important properties of the texture are coarseness, contrast, regularity, roughness etc. The comparative experimental analysis of various texture techniques are presented in this paper. Several approaches are used for extracting the texture, such as structural, model based, statistical and transform based. But the mostly used methods reported in the literature as seen in the above described table are statistical based and transform based because of their good performance. The different types of texture techniques are further described and experimented in this paper.

5.1 Gray level co-occurrence matrix (GLCM)

It is one of the most accurate feature extraction techniques and computes the statistical properties present in the images. Its important attributes are faster implementation and lesser complexity. The information it contains is the position of pixels that have similar gray levels [24]. The four most important statistical parameters of GLCM are given in Eq. (1) to Eq. (4). The matrices that are generated using this technique provide the spatial frequencies which gives the relation among the adjacent and distinct distance pixels.

Where, x and y are the co-occurrence matrix coefficients.

5.2 Discrete wavelet transform (DWT)

It is a category of transform based texture descriptor which inspects the signal in time frequency zone. The wavelet transform have the frequency and spatial characteristics which obtains the multi-scale resolution of the images [25]. It decomposes the image into frequency sub-bands and maps these bands to some numerical values. After decomposition, four sub-bands are formed containing both horizontal and vertical frequency information which are LL, LH, HL, and HH. For further decompositions the algorithm is repeatedly applied on LL frequency band.

5.3 Gabor transform

These transforms works as the filters which are the group of wavelets and every wavelet is able to capture the energy at a specific orientation and frequency. They produce the optimized resolution in both frequency and spatial domains [2]. For the image P(x, y), the Gabor transform is given by convolution as given in Eq. (5)

Here, in this equation, m and n are the mask variables of the filter and \( {\varphi}_{ab}^{\ast } \) is the complex conjugate of the φab produced from the rotation and dilation of mother wavelet which is given by Eq. (6)

W = centre frequency, σx and σy are scaling functions.

5.4 Local binary pattern (LBP)

This method is widely used in various applications of image processing due to its simplicity and ease of implementation. Before applying this technique some pre-processing steps on the images are performed such as firstly, the RGB images are converted into grey scale [26].After this, the image is firstly subdivided into smaller 3×3 matrices on which LBP computations are performed. The difference between the centre value of the pixel and neighboring pixels is calculated. By thresholding of neighbor pixel with the centre pixel the binary code is produced for every pixel of the image.

These LBP’s values are evaluated for the entire image and after that the histogram is framed which determines the texture of an image [27].

5.5 Curvelet transform

This type of transform is able to represents the singularities, edges and curves more efficiently. Till now two curvelet transforms algorithms are introduced which are Unequispaced FFT (USFFT) and Wrapping algorithm. Both of them produce the same output but the second algorithm i.e. wrapping is faster means having less computation time than other [28].

6 Proposed CBIR system

In this proposed framework, the texture features which are described in the above section are extracted individually and experimented on three benchmark datasets which are Wang, Corel-10 K and Corel-5 K.

All these types of texture techniques which are proposed here are statistical based and transform based. The stages of the designed approach is described in the below headings.

6.1 Stages of the proposed system

-

a.

Training and Testing Phase- Basically, the complete model is divided into two phases, training and testing phase as shown in Fig. 3. The system works by taking all the training images as the test images in case of all the datasets. As for example in case of Wang dataset which contains total 1000 images, here all the 1000 training images are taken as test images while calculating its performance parameters. Same is the case with other datasets also.

-

b.

Pre-processing- In this phase, all the images are converted into required color spaces or size such as grey scale, HSV etc. which are required by the system.

-

c.

Feature Extraction- This is the main stage of the proposed system. At this phase different types of texture features are extracted individually and their performance parameters are evaluated. The texture techniques which are used here for feature extraction are LBP, DWT, GLCM, Curvelet and Gabor transform. All these techniques have their own properties. All these types that are experimented here are statistical type or transform type. Among these two, the statistical type methods are easy and simple to implement. They have high adaptability power and strong robustness. But the limitation with them is they are sensitive to noise and rotation. Also these techniques provide less global information about the images.

GLCM and LBP are statistical type of feature extraction techniques.

The other type which is proposed here is transform based techniques. DWT, Curvelet and Gabor transform falls under this type of category. These techniques are insensitive to direction and noise. These techniques provide multi-resolutional information of the image. However, these are complex and difficult to implement as compared to statistical type of techniques.

Features are extracted from all these five techniques exclusively at this stage and after this feature vectors are created of query as well as database images.

-

d.

Similarity Measuring Stage- This is the last step of the proposed system which is similarity matching stage. After the step of feature vector creation, similarity matching is performed between the query and database images by using various distance metrics. After that, the resultant images are ranked in increasing order based on their evaluated distance value. A value of zero means the exact similar images. Different types of distance measures can be used here for the purpose of measuring the similarity between the images. And finally, in our system after this step based on the similarity top ten images are retrieved.

Graphical user interfaces (GUI) have also been designed of all the datasets of all the texture techniques showing top ten retrieved images. These GUI’s depicts the performance of the system pictorially. The proposed system showing all these stages is shown in Fig. 3.

7 Experimental set up and results

7.1 Experimental set up

The experiments are implemented in MATLAB R2018a, 4GB memory and 64 bit windows. The category used here is feature detection, extraction and matching which comes under computer vision toolbox. Basically, the functions used are Detect features, extract features, match features and store features. The details of all the datasets used for the experimentation is tabulated in Table 2 and the sample images of all these datasets are shown in Fig. 4. The images used in these experiments are in JPEG format. These datasets contains the categories such flowers, beaches, animals, buildings, butterflies etc. These datasets contains general type of images.

7.2 Experimental results

The experiments evaluate the most important parameters of CBIR system which are precision, recall and f-measure which are computed as:

Experiments are conducted on all the texture feature extraction techniques by taking all the images of the dataset as the query image and all the images as training images. After that average value of precision and recall is computed when top 10 images are retrieved.

The simple Euclidean distance is utilized here for the measurement of similarity between the feature vectors of query and database images which is defined as Eq. (7).

Where qi and Diare the feature vectors of query and database image respectively. The number of features extracted from every technique is given in Table 3.

The speed analysis of all the feature extraction techniques is shown in Table 4 which shows that as the size of database increases, the time taken to extract the features also increases. It is also analyzed from the figure that the LBP technique extracts the features much faster than all other techniques.

The Table 5 below shows the comparison of average precision values of five texture feature extraction techniques on different datasets which are mostly used for analyzing the performance are reported in the experiments.

Several distance measures can be used for the measurement of similarity between the images and the numerical value of the calculated distance is then arranged in increasing order. Zero value means the most similar image. Some of the important distance measures are shown below in Eqs. 8 and 9.

Here DM denotes the manhattan distance metric and Dm is the minkowski distance measure. We have tested the texture techniques on these distance measures also and calculated the value of average precision just like the above experiments on Wang dataset. The comparative value of average precision of all these techniques on three distance measures when top 10 images are retrieved is shown graphically in Fig. 5.

It is clear from the above figure that the Euclidean distance provides the highlighted results for all techniques and much more in case of LBP technique. In all others, manhattan distance measure provides comparable results whereas the minkowski distance provides the worst results. The Euclidean measure provides the best results as it is based on the normalized and weighted attributes which results in swift computational results.

Recall is another prominent measure of any CBIR system. Like precision, the average recall is also computed for some texture techniques such as LBP and GLCM whose results are highest using above equation at different number of image retrievals which are 10, 20, 30 and 40 which are shown in Fig. 6 and Fig. 7. It is observed from these figures that if the number of retrieval increases, then precision decreases and recall increases as these are obtained in PR spaces.

Along with precision and recall F-measure is also is a significant parameter to check out the competency of any CBIR system. It is the geometric mean of the precision and recall as described in the above equations.

Just as precision and recall all the images are taken as query images and F-measure is calculated for every case for the retrieval of top 10 images. After that the average value of F-measure is computed of every technique. Similar method repeats for all the datasets. The Fig. 8 shows the graphical comparison of F-measure of all the techniques on three datasets.

From the above shown graph of F-measure, it is undoubtedly clear that GLCM, LBP and DWT provide almost comparable and better results with these types of images. While gabor and curvelet technique do not provide acceptable results.

Precision is the most decisive parameter of any CBIR system as the efficiency of any system depends on the precision percentage. So experimentally, all the three techniques LBP, GLCM and DWT have commensurate precision values with very less differences almost of 5–7% in all the datasets.

But theoretically, all these techniques have some pros and cons which is already discussed in the above section 6. So before selecting the particular technique for the retrieval of images, the researcher should go for both results as well as the type of the images to be retrieved from the database.

So in order to choose the better technique for texture feature extraction the type and categories of the images should be kept on mind because each and every technique has its own properties and different angle of working on images.

8 Image retrieval results

The GUI’s of the top 10 retrieved images of two random cases are shown in Fig. 9 and Fig. 10. It can be clearly observed from the figures that in case of Wang dataset all the retrieved images belongs to the query image and hence its precision is 100%. But in case of Corel-5 K dataset where images are more, only 70% images matched with the query image and hence it’s precision is 70%.

9 Conclusion and future scope

In this designed framework, the influential texture feature extraction techniques generally used in CBIR systems are experimented individually on three different datasets. The datasets that are used here for the experiments covers almost all the general type of images. The techniques are compared in terms of computed value of average precision, recall and F-measure. Experimentally it can be concluded that LBP, GLCM and DWT techniques provides superlative results as compared with gabor and curvelet techniques with these types of images. But it is also very true that these techniques have also some limitations which are described in above section. So, in order to select a particular technique out of these three for feature extraction the researcher has to keep certain points in mind such as image type, environmental conditions, time constraint etc. Only then the accuracy of the CBIR system can be enhanced.

The results obtained by various techniques can further be improved if the fusion of texture techniques is done with other features such as shape, color etc. Deep learning algorithms can be employed in future for the intendment of feature extraction to increase the efficiency of any CBIR system.

References

Sharma R, Singh EN (2014) Comparative study of different low level feature extraction techniques. Int J Eng Res Technol 3(4):1454–1460

Singh SM, Hemachandran K (2012) Content based image retrieval using color moment and Gabor texture feature. Int J Comput Sci 9(5):299–309

Murala S, Maheshwari RP, Balasubramanian R (2012) Local tetra patterns: a new feature descriptor for content-based image retrieval. IEEE Trans Image Process 21(5):2874–2886

Wang L, Wang H (2017) Improving feature matching strategies for efficient image retrieval. Signal Process Image Commun 53:86–94

Wang H, Feng L, Zhang J, Liu Y (2016) Semantic discriminative metric learning for image similarity measurement. IEEE Trans Multimed 18(8):1579–1589

Yadav AK, Roy R, Kumar AP (2015) Survey on content based image retrieval and texture analysis with Aplications. Int J Signal Process, image Process Pattern Recognit 7(6):41–50

Liu GH, Yang JY (2013) Content-based image retrieval using color difference histogram. Pattern Recogn 46(1):188–198

Fadaei S, Amirfattahi R, Ahmadzadeh MR (2017) New content-based image retrieval system based on optimised integration of DCD, wavelet and curvelet features. IET Image Process 11(2):89–98

Prabhu J, Kumar JS (2014) Wavelet based content based image retrieval using color and texture feature extraction by gray level Coocurence. J Comput Sci 10(1):15–22

Sumana I and Islam M (2008) Content based image retrieval using curvelet transform, in proceedings of international IEEE conference on signal process. 2008: 11–16.

Jin C, Ke SW (2017) Content-based image retrieval based on shape similarity calculation, 3D res. Springer 8(3):1–19

Verma M, Raman B (2016) Local tri-directional patterns: a new texture feature descriptor for image retrieval, digit. Signal process. Rev J 51:62–72

Murala S, Maheshwari RP, Balasubramanian R (2012) Local tetra patterns : a new feature descriptor for content-based image retrieval. IEEE Trans Image Process 21(5):2874–2886

Alsmadi MK (2017) An efficient similarity measure for content based image retrieval using memetic algorithm. Egypt J Basic Appl Sci 4(2):112–122

Rashedi E, Nezamabadi Pour H, Saryazdi S (2013) A simultaneous feature adaptation and feature selection method for content-based image retrieval systems. Knowledge-Based Syst 39:85–94

Mosbah M, Boucheham B (2017) Distance selection based on relevance feedback in the context of CBIR using the SFS meta-heuristic with one round, Egypt. Informatics J 18(1):1–9

Sheshasaayee A, Jasmine C (2014) Relevance feedback techniques implemented in CBIR : current trends and issues. Int J Eng Trends Technol 10(4):166–175

Shahbahrami A (2008) Comparison between color and texture features for image retrieval, 2008 6th Int. Symp Appl Mach Intell Informatics 27648:221–224

Srivastava D, Wadhvani R (2015) A review: color feature extraction methods for content based image retrieval. Int J Computational Eng Manag 18(3):9–13

Agarwal S (2013) Content based image retrieval using discrete wavelet transform and edge histogram descriptor, IEEE, Int. Conf. Inf. Syst. Comput. Networks: 19–23.

Chaudhari R, Patil A (2012) Content based image retrieval using color and shape features, nternational journal of advanced research in electrical. Electron Instrument Eng 1(5):386–392

Yang W, Sun C, Zhang L (2011) A multi-manifold discriminant analysis method for image feature extraction. Pattern Recogn 44(8):1649–1657

Chaoran C, Peiguang L, Xiushan N, Yilong Y, Qingfeng Z (2017) Hybrid textual-visual relevance learning for content-based image retrieval. J Vis Commun Image Represent 48:367–374

Zhao M, Zhang H, Sun J (2016) A novel image retrieval method based on multi-trend structure descriptor. J Vis Commun Image Represent 38:73–81

Sai N, Patil R, Sangle S (2016) Truncated DCT and decomposed DWT SVD features for image Retrieval,7th international conference on communication, computing and virtualization 2016. Procedia Comput Sci 79:579–588

Pavithra L K and SharmilaT S (2017) An efficient framework for image retrieval using color, Texture and edge features R, Int J Comput Electric Eng, 0: 1–14.

Prakasa E (2016) Texture feature extraction by using local binary pattern. INKOM 9(2):45–48

Al-Rawi SS, Sadiq AT and Ahmed IT (2011) Texture Recognition Based on DCT and Curvelet Transform, International Arab Journal of Information Technology: 1–5.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Dhingra, S., Bansal, P. Experimental analogy of different texture feature extraction techniques in image retrieval systems. Multimed Tools Appl 79, 27391–27406 (2020). https://doi.org/10.1007/s11042-020-09317-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-020-09317-3