Abstract

Video surveillance cameras capture huge amount of data 24 hours a day. However, most of these videos contain redundant data which make the process difficult for browsing and analysis. A significant amount of research findings have been made in summarization of recorded video, but such schemes do not have much impact on video surveillance applications. On the contrary, video synopsis is a smart technology that preserves all the activities of every single object and projects them concurrently in a condensed time. The energy minimization module in video synopsis framework plays a vital role, which in turn minimizes the activity loss, number of collision and temporal consistency cost. In most of the reported schemes, Simulated Annealing (SA) algorithm is employed to solve the energy minimization problem. However, it suffers from slow convergence rate resulting in a high computational load to the system. In order to mitigate this issue, this article presents an improved energy minimization scheme using hybridization of SA and Teaching Learning based Optimization (TLBO) algorithms. The suggested framework for static surveillance video synopsis generation consists of four computational modules, namely, Object detection and segmentation, Tube formation, Optimization, and finally Stitching and the central focus is on the optimization module. Thus, the present work deals with an improved hybrid energy minimization problem to achieve global optimal solution with reduced computational time. The motivation behind hybridization (HSATLBO) is that TLBO algorithm has the ability to search rigorously, ensuring to reach the optimum solution with less computation. On the contrary, SA reaches the global optimum solution, but it may get disarrayed and miss some critical search points. Exhaustive experiments are carried out and results compared with that of benchmark schemes in terms of minimizing the activity, collision and temporal consistency costs. All the experiments are conducted on five widely used videos taken from standard surveillance video data set (PETS 2001, MIT Surveillance Dataset, ChangeDetection.Net, PETS 2006 and UMN Dataset) as well as one real generated surveillance video from the IIIT Bhubaneswar Surveillance Dataset. To make a fair comparison, additionally, performance of the proposed hybrid scheme to solve video synopsis optimization problem is also compared with that of the other benchmark functions. Experimental evaluation and analysis confirm that the proposed scheme outperforms other state-of-the-art approaches. Finally, the suggested scheme can be easily and reliably deployed in the off-line video synopsis generation.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Towards worldwide application of video surveillance solutions, the existence of CCTV camera is obvious. These enormous amount of cameras are working 24 hours a day and generate a gigantic amount of surveillance video data. With the advancement of camera technology, the quality and the use of surveillance cameras is increasing exponentially. To locate an event of interest manually is time consuming and strenuous because of the redundant nature of the surveillance video. So, there is a growing need to develop a smart technology, which in turn forms compact representation of the original video by preserving all the essential activities. Video synopsis is such an efficient and intelligent technology to generate shorter version of the original surveillance video through the projection of all important activities concurrently. Video synopsis also serves as an efficient index to the original video, thus making video browsing easy and simultaneously resolving the issue of storage scarcity.

Due to the absence of a standard to measure, evaluation of the quality and performance of a synopsis video is highly dependent on the application. Like other reported literature [16] in this field, the proposed work has also adopted the following qualitative measurement for the performance evaluation of a synopsis video:

Preservation of maximum energy in a minimum time period.

A minimum number of artifacts like collision among objects.

Good-conservation of the original order of objects’ appearance and their spatial positions.

In 2006, the authors in [32] introduced the concept of video synopsis and stated two different directions, namely, low-level video synopsis generation and object-based video synopsis generation. Gradually the efficiency and intellectuality of the object based approach to generate a video synopsis made itself the mainstream. The optimization module is considered to be the central block among the other building blocks (object detection and segmentation, tube generation, and stitching) of object-based video synopsis framework, since this module supervises the final length, activity preservation and visual quality of the synopsis video. However, the complexity of the optimization problem is not only high, but also quite extensive due to gigantic amount of combinations. For feasibility purpose, authors in [32], restricted the solution to two class, namely, lossy and lossless object-based video synopsis. These restricted solutions are then solved using SA [12]. Similarly, authors in [27], solved the optimization problem using greedy approach.

From quality enhancement point of view in video synopsis, many notable findings have been carried out in this field. One of the considered performance measures, namely, collision reduction, is addressed in [24] through the spatio-temporal rearrangement of objects. The same issue is also addressed through another innovative idea to reduce the size of objects in [16]. Similarly, [20, 50] focused on the issue of improved tube formation, while [36] focused on tracking strategies. One major limitation of video synopsis technology stated in [27] is that it is not applicable to crowded video, as the synopsis video is itself crowded than the original. To limit this crowded appearance of the synopsis, an abnormal activity based synopsis generation is depicted in [18] and an event based one in [44]. Returning to the solution to the key module, energy minimization in video synopsis framework, a global energy function in [24] is solved by Graph Cut [2]-based technique. Blob Trajectory Optimization is employed in [18] to address the energy minimization problem and [15] employed a greedy approach. Maximum a Posteriori Probability Estimation is used for real-time synopsis generation in [9, 44]. However, SA is widely employed for most of the off-line synopsis generation schemes as in [16, 50] to solve the objective function. The iterative computational nature of SA makes the process lengthy and time-consuming to produce better results.

1.1 Motivation & overview

The video synopsis preparation is formulated as an energy minimization problem, which minimizes collision among objects, minimizes temporal consistency cost to maintain uniformity among objects’ tracks, and minimizes activity loss with the inclusion of maximum objects’ activities. The aforementioned costs are regulated by certain parameters like number of objects, object size, spatial distance between objects and tube length as per original video. These parameters are not consistent from one video to another, which makes the whole process highly arbitrary and minimizing the energy function complex. Hence, SA is an ultimate choice in most of the video synopsis approaches to address the above issues through its probabilistic selection process and its ability to reach a global optima in the presence of several local optima regardless of the objective function. Thus, the application of SA is very prominent to minimize the energy in the field of video synopsis. The nature of probabilistic selection of SA to reach the global optimal solution sometimes may get disarrayed and hence, miss some crucial search points. Various control parameters like population size, number of generations, and elite size, are required in all the probability grounded evolutionary and swarm intelligence-based algorithms. Along with these parameters, certain algorithms require other algorithm-specific parameters for regulating the optimization process. The effectiveness of these algorithms is highly dependent on the algorithm-specific control parameters and if not tuned properly, it may lead to increased computational time or a local optimal solution. Thus, TLBO [31] is a better choice for this. The purpose of using TLBO is that it has fewer number of adjusting parameters in comparison to traditional meta-heuristic optimization algorithms like Genetic Algorithm, Differential Evolution, and Particle Swarm Optimization etc. Further, TLBO shows faster convergence and is easier to implement.

Based on the above observations, authors are motivated to propose a hybrid optimization algorithm using SA and TLBO (HSATLBO) for solving the global energy function in object-based video synopsis framework. Usually, meta-heuristic techniques for any given optimization problem produce random set of solutions in a predefined range. These techniques are able to achieve the objectives, but they suffer from certain issues like getting stuck in a local minimum and/or time to reach the optimum solution. So, a hybrid technique is essential in this regard to combine and enhance the properties of the parent techniques.

Motivation for the hybridization is as follows:

TLBO is one of the recent meta-heuristic algorithms which has been successfully applied to distinct optimization problems in various research areas of electrical engineering, mechanical design, thermal engineering, manufacturing engineering, civil engineering, structural engineering, computer engineering, electronics engineering, physics, chemistry, biotechnology and economics [30].

The algorithm consists of two phases (i.e. Teacher and Learner Phase), which enhance the ability of the technique to search rigorously within the search space before moving onto the next population of students, thus ensuring to reach the optimum solution with less computations.

The Simulated Annealing (SA) on the other hand undergoes a probabilistic selection depending on the temperature scheduled.

Although the probabilistic selection empowers the technique to reach the global optimal solution but, it may get disarrayed and miss some critical search points. Here, TLBO technique further assists SA to perform a rigorous search within a certain search space prior to probabilistic selection. This reduces the possibility of missing an optimum point due to probabilistic selection and increases the effectiveness of the technique in achieving a global optimum solution.

1.2 Contributions

Video synopsis not only requires all activity by an object to be preserved within the shortest possible time length but also to satisfy the cost constraints. The contributions of the present work are as follows:

An object-based video synopsis framework is formulated for static surveillance videos without missing any activity.

A hybrid optimization scheme, namely HSATLBO, is formulated by combining the properties of both SA and TLBO to minimize the energy function in terms of activity, collision and temporal consistency costs.

1.3 Organizations

The remaining part of the article is organized as follows: Section 2 elaborates on the related literature study. Some preliminaries about SA and TLBO are summerized in Section 3. The suggested surveillance video synopsis framework is presented and elaborately discussed in Section 4. Section 5 critically discusses the experimental results and comparisons are made with that of the benchmark schemes. Finally, concluding remarks and scope for the future work are outlined in Section 6.

2 Related work

As mentioned in the previous section, optimization module is the key as it affects the overall efficacy of video synopsis framework. Hence, this section deals with some of the notable findings in the context of optimization module in video synopsis. Initially [32] proposed a dynamic video synopsis scheme in terms of low-level and object-based approach for video synopsis generation. In low-level approach, the optimization framework is formulated as a 3D Markov random field (MRF) [13] and minimized by graph cuts algorithm. For the case of object-based video synopsis, the moving objects are extracted from the video and then they are rearranged without maintaining the chronological order of the objects’ appearance to create a short and seamless video synopsis. In object-based video synopsis, the problem of energy minimization is made feasible through two restricted solutions, one being video synopsis with a pre-determined length and the other being the lossless video synopsis. In both the cases, SA is employed. Authors in [26] proposed an object-based video synopsis methodology by expanding the work of [32] for the endless video stream captured by webcam. In this method, the energy minimization is done through SA. A detail presentation of the work of [32] and [26] is presented in [27], where the energy minimization is done through greedy approach.

To further enhance the subjective performance of the synopsis video, authors in [24] proposed a video synopsis generation procedure through global spatial and temporal optimization, where the alpha-beta swap graph cut method is used for energy minimization. Similar to [24], authors in [16] proposed a novel scheme of object-based video synopsis, where the subjective performance of the synopsis video is enhanced via scaling down the object size. This methodology is an addition to pixel domain optimization and solved using SA.

Deviating from the aforementioned optimization techniques, blob trajectory optimization is used for synopsis generation in [18]. The problem of tube rearrangement in [44] is formulated as a maximum a posteriori probability estimation. In [15], seam carving is proposed for video synopsis generation process and optimized through a greedy algorithm. Dynamic programming is used in [48] and [39] for energy minimization. Though video synopsis technology is superior in summarizing, the shorter version is a bit confusing as it projects multiple activities simultaneously. To reduce the confusion level of the synopsized video, authors in [28] proposed a scheme to generate synopsis video through the grouping of similar activities and their projection. In this methodology, the problem of tube rearrangement is mapped through k-nearest neighbours algorithm and kd-tree implementation. In the work proposed by authors in [45], an object-based video synopsis is generated considering the objects as a spatio-temporal collection and minimization is done by mean shift algorithm.

An updated version of the energy function proposed in [26] is reflected in [40] by the addition of foreground importance to achieve multi-scale scalable browsing. Here, SA is used to optimize the function. Similarly, SA is also employed to minimize the energy function in [51] and [41]. Emphasizing on the issue of memory requirement and time cost behind tube generation, authors in [17] proposed an algorithm based on 3D graph cuts and realized synopsis video through blank frame deletion. Recently, the problem of synopsis generation has also been formulated in terms of scheduling problem and solved through trajectory scheduling algorithm [3] and graph coloring algorithm [7]. Genetic algorithm [47] and [37] is also explored in this field for tube rearrangement. Table 1 presents a comparison chart among several state-of-the-art techniques in the field of surveillance video synopsis with a central focus towards energy minimization, methodology used, and potential applications.

From the related works, it is observed that the outcome of video synopsis technology greatly depends on the central module, the so called optimization module. To improve the overall efficacy of the video synopsis generation, several attempts have been made in this direction. Hence, keeping this in mind, this article presents a hybrid optimization algorithm (HSATLBO) for object-based video synopsis to improve the overall performance of video synopsis generation as well as to minimize the activity, collision, and temporal consistency costs.

3 Preliminaries

3.1 Simulated annealing

Inspired from metallurgy, in 1953, authors in [21] proposed an algorithm for efficient molding of atoms in symmetry at a certain fixed temperature. A new state is designated by the change of a randomly nominated particle from an initial state with energy E0. Let, E be the energy of the current state and for E0 > E, E is being nominated along with a new next state generation. Otherwise if, E0 ≤ E, the likelihood to persist in this new state is given by:

where T is the current temperature and kb is Boltzmann Constant. This probabilistic acceptance strategy is called Metropolis criterion. The major limitation of the Metropolis approach is that it is based on a single fixed temperature. To eliminate this, authors in [12] globalized it by integrating an annealing schedule to decrease the temperature. Beginning with a high initial temperature, it drops according to the annealing schedule and the step iterates until the system freezes. The system finally freezes in a state of global minimum energy.

3.2 TLBO algorithm

Authors in [31] have proposed a meta-heuristic optimization based on the process of teaching and learning. It considers the significance of a teacher’s presence in the class and the teacher’s ability to influence the students to improve in the respective subjects. The individual factors to be optimized are considered as subjects and the result obtained in each subject is the optimized parameter value. The optimization process undergoes two phase, namely, Teacher phase and Learner phase.

This technique operates with a population size Pmax (i.e. apparent optimum state, k = 1,2,⋯,Pmax) and N number of decision variables j = 1,2,⋯,N for every individual of the population. After evaluating the initial set of population, the set with the best solution is designated as the teacher solution \( T^{best}_{k} \). The mean Mj is obtained for all students in each subject. For any ith iteration, the difference between the mean and the teacher solution is calculated by (2) and added to the individual student solution to update them with respect to the teacher solution given as in (3).

Here, ri is a random value in the interval of [0,1] and Tf is considered either 1 or 2 based on (4).

On completion of teacher phase the updated solution is passed on to the student phase, where two individual learners m, n are chosen and a self-learning process is undertaken based on the equation

Thus, an optimum result is obtained at the end of the iteration which pushes the subsequent population of next iteration to an even improved solution until the termination criterion is fulfilled.

4 Surveillance video synopsis framework

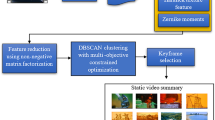

Initially the input video is preprocessed for the purpose of detection and segmentation of object activities. Subsequently, tubes are formulated for each object as per the tracked information. Further, the objective function is formulated to evaluate the various penalty costs involved in synopsis generation. Next, the HSATLBO scheme is proposed to simplify the objective function, which is the weighted sum in terms of activity, collision, and temporal consistency costs. Finally, the optimized object tubes and the background are blended together to produce the final synopsis video. The flow diagram of the proposed framework is depicted in Fig. 1.

4.1 Object detection and segmentation

Generally, surveillance videos are of long duration and consists of either static or dynamic backgrounds. The proposed approach concentrates on the surveillance videos with static background. In such surveillance videos, the frames usually change due to the movements of the observed objects and illumination. Taking these facts into account along with the view point of video synopsis generation, the problem of object detection and segmentation focused on the extraction of moving foregrounds like other object based video synopsis generation approaches such as [16, 27], and [24]. Similar to [16], the proposed scheme adopted the qualitative criteria that are exploited for the preservation of major activities in video synopsis field and hence several state-of-the-art [43, 49], and [19] in the field of object detection and segmentation are not suitable for the proposed framework. In the suggested scheme, a robust multi-layer background subtraction algorithm [46] is employed to extract the moving objects along with a modeling of the background. The multi-layer background subtraction algorithm efficiently extracts foreground through the power of local binary pattern (LBP) features and photometric invariant color measurements in RBG color space. Further, the morphological dilation and erosion are applied on the resulted foreground frames to reduce any noise, if present. The final resultant foreground masks are passed to blob analyzer for the detection of moving objects. In this work, the original video is mathematically represented in terms of a space-time volume, denoted as V (x,y,t), where t is the temporal and x, y are the spatial indices of each frame. The resulting background model is denoted as B(x,y,t′), where t′≤ t and the detected and segmented moving objects are represented through precise and independent bounding boxes.

4.2 Tube formation

Tube formation is another important preprocessing module of the video synopsis generation framework. Efficient track information of the individual moving object is called a tube. The quality of the synopsis video not only depends on efficient object detection and segmentation, but also on efficient tracking. In the proposed methodology, similar to [16], Kalman Filter [1] is employed for multi-object tracking. The track or tube is composed of the set of all connected bounding boxes in the time axis. The next module, i.e. the optimization module works on the tubes and decides the temporal shift applied to each tube for the minimization of energy.

4.3 Proposed optimization framework

The proposed optimization framework deals with multiple objectives that are associated with the video synopsis technology. The objectives are activity cost, collision cost, and temporal consistency cost, which are discussed below. The temporal shift for each tube is decided by the optimization module and it guarantees the minimal cost for each of the objectives considered. To solve the formulated optimization problem, HSATLBO, that produces an optimized time location for each object tube to start, is proposed so as to reduce the cost asserted. Finally, stitching of the objects as per the time location completes the synopsis process. fμ, a mapping of the original video to the synopsis video is executed and E is defined by the following equation, which represents the total penalty cost incurred for the process.

The weights ω0 and ω1 determine the precedence of the individual cost functions while formulating a single objective function. A higher value would signify a high precedence of the cost over other penalties. The following subsection elaborates the mathematical formulation of the various energy costs involved.

4.3.1 The energy costs

Activity cost

Ea(fμ) is the penalty imposed for dropping any activity from the original video.

Where, O is a set comprising of all the objects’ tubes, whereas o represents an individual tube present in the original video. Here, os is a mapping of o pertaining to the time position obtained for formulating the synopsis video. The term Ea(os) is defined as:

Where, absDiff() is a function for evaluating the absolute difference between the element \( \hat {o} \) and its corresponding background and \( \hat {o} \) a part of os. In (9), V and B denote the original video frames and the corresponding background model, respectively and bbox() stands for bounding box function. Equation (8) summarizes all the active pixels not included in the synopsis video. Activity cost would be zero for a scenario, where all events are conserved in the synopsis video (i.e. lossless synopsis).

Collision cost

Considering the size of the synopsis video as compared to the original video size, collisions among object tubes are obvious. Ec(fμ) is the penalty imposed for suffering any collision among the objects. Minimizing the collision count guarantees enhanced synopsis quality. Let \( {o_{m}^{s}} \) & \( {o_{n}^{s}} \) be the mapping to the synopsis video from the original video elements, om & on; the collision between them is defined as

Where, the Area() function calculates the overlap region between the bounding box of \( \hat {o}_{m} \) and \( \hat {o}_{n} \). If a video produced has no collisions, the cost is considered to be zero.

Temporal consistency cost

The procedure for the generation of synopsis video may cause violation of the temporal associations among captured objects due to the time-based alteration. Et(fμ) is the penalty for mislaying the chronology and relative appearance among objects with respect to the original video. The order of appearance of the objects are mapped from the source video and the penalty aims at minimizing the deviation from the original order. To realize the penalty cost for temporal inconsistency, this work follows the following two situations. In the first situation, any two objects in the original video share some common frames. In the second situation, two objects are not sharing any common frames. The second situation can be further sub-classified into two classes. In the first class, the consistency with respect to chronology as well as entry difference is violated. The other class deals with the case where the same is preserved. These situations can efficiently address the issue of preservation of interaction among objects in the synopsis if it exists in the original video.

Spatial relationships (△) between two moving objects can be utilized to measure their probability of interaction. This measurement represents the first considered situation. The spatial relationships between two moving objects, say om and on, is established as:

Here, the Euclidean distance between the objects om and on from the original video in tth frame is represented as δ(om,on,t), where, σ is used to tune the level of space communication between om and on.

A high value of σ may lead to a situation where the higher cost will be assigned for the violation of temporal consistency. Hence, the optimization process attempts to minimize the cost, even though there may be very little possibility of interaction between the objects. Thus, in this scenario the cost allocation is ambiguous due the high value of σ. On the other hand, a low value of σ drives the situation to assign a low temporal consistency cost which is insignificant in the minimization process. But, in this case, there might be a high possibility of interaction between objects which may be neglected. Henceforth, in our experiment, we have assumed 40 as an intermediate value of σ.

Likewise, the temporal relationships (τ) between objects can be expressed as:

Here,\( \overrightarrow {t}_{{o^{s}_{m}}} \) and \( \overrightarrow {t}_{{o^{s}_{n}}} \) are the entry frame indices in the synopsis video and \( \overrightarrow {t}_{o_{m}} \) and \( \overrightarrow {t}_{o_{n}} \) are that of om and on, respectively, in the original video.

In second the situation, the measurement of the temporal violation (\( \hat {\tau } \)) between om and on is defined as:

The positive value of \( \hat {\tau } \) implies successful preservation of temporal consistency between om and on in the synopsis incurring zero penalty cost. Thus, based on the considered situations, the complete representation of the temporal consistency cost is represented as:

Here, the time of interaction of the objects, om and on, in the original video is denoted by \( t_{o_{m}}\cap t_{o_{n}} \). Now, there can be two cases, first, \( t_{o_{m}}\cap t_{o_{n}} \) is not an empty set, i.e. the objects have shared some common frames in the original video. In this case, △(om,on) and τ(om,on) are utilized to preserve the temporal consistency. The equal value of the individual time of intervals \( (\overrightarrow {t}_{o_{m}}-\overrightarrow {t}_{o_{n}}) \) and \( (\overrightarrow {t}_{{o^{s}_{m}}}-\overrightarrow {t}_{{o^{s}_{n}}}) \) makes τ(om,on) zero, thereby resulting in the first term, △(om,on) × τ(om,on), to be zero which implies that the temporal consistency is well preserved. On the other hand, for the non-zero values of τ(om,on), △(om,on) is used to regulate the temporal consistency measure. Violation of temporal relationship is crucial for close distant objects, resulting in high temporal consistency cost.

Under the second case, \( t_{o_{m}}\cap t_{o_{n}} \) is an empty set, there can two sub-cases. First, if \( \hat {\tau }(o_{m},o_{n})\le 0 \), the temporal consistency violation is proportional to the exponential growth of penalty cost in terms of the absolute difference of the temporal violation, normalized by the factor γ, with respect to the synopsis video. γ is the normalization factor, defining the time interval in which events still have temporal communication. Based on the nature of the considered videos for experiments, authors have assumed the value of γ as 20. Second, for \( \hat {\tau }(o_{m},o_{n}) > 0 \), the temporal consistency cost is zero as the positive value of \( \hat {\tau }(o_{m},o_{n}) \) implies successful preservation of temporal consistency in the synopsis video.

4.3.2 Energy minimization

Minimization of the energy function in (6) is a tedious job as it searches through an enormous amount of odds. In [16], authors have addressed this issue with the application of Simulated Annealing, while authors in [47] have used Genetic Algorithm for the same. The base line for any optimization technique remains the same (namely, to generate a solution with least possible cost and in the smallest possible time for computation). The technique must be able to verify all possible mapping μ, and produce a temporal shift which is capable of optimizing all the individual objectives in (6). To initiate the process it is assumed that all the objects start from the first frame and the cost function value is evaluated. The upper bound is decided by the difference in length of the tube for a certain object and the synopsis length. It can be user defined, but to study a lossless structure it is set to be a fixed value (i.e. length of the longest object tube). To meet the requirement of the complex objective function and to justify the search space, an efficient algorithm is suggested for solving (6) by the hybridization of Simulated Annealing and Teaching Learning Based Optimization (HSATLBO).

4.3.3 Proposed HSATLBO

The proposed hybrid technique is presented in Algorithm 1. In the initialization step, all the required parameters are initiated as described in Step-1. A set of Initial population μT is produced for each object which is being shifted within a range [0, (maxlen − objlen)] as described in Step-2, where fμk represents each population as a row vector of μT. The decision variables, xj, are the elements of fμk. Finally, the activity, collision & temporal consistency costs, as well as the corresponding objective function values E1, E2, ... Ek for each kth population is evaluated initially according to (6), as given in Step-3. As per the TLBO technique, for the solution with best fitness (Ebest), the corresponding decision variable value (Tbest) is designated as the Teacher. The rest of the population is termed as student who would learn and update themselves in regard to teacher solution as described in the teacher phase. The mean Mj is calculated as stated in Step-4 and the teacher phase is initiated as depicted in Steps 6-16. For each kth population, teaching factor Tf is calculated as shown in Step-7. Each element of μT is updated as depicted in Steps 8 and 9 to produce \( \mu ^{new}_{j,k} \) and the new set of decision variables are applied. From (6), \( E_{k}^{new} \) is evaluated as described in Step-10. Now the process for selection or rejection of the updated solution is done in the selection phase, which is represented in Steps 11-16. For the purpose of achieving a global optimum solution, selection steps of simulated annealing is applied instead of the conventional technique of TLBO. After selection step, the obtained Ebest and μT are passed to the student phase for further processing.

In the student phase, two students fμm and fμn are selected such that fμm ≠ fμn. \( f_{\mu m}^{new} \) is generated in Steps 17-23 to accomplish a mutual learning process. The selection at the end of the student phase is similar to that of the teacher phase, where simulated annealing is applied for that purpose. The teacher phase and the student phase are repeated until ‘Gen’ and ‘T’ values are within the specified limits.

To make a fair comparison, the efficacy of the proposed optimization algorithm, HSATLBO, is tested on standard benchmark functions as listed in Table 2 and the analysis results in terms of Best, Worst, Mean and Standard Deviation based on 10 independent runs with 100 iterations and population size 10 for unconstrained as well as constrained single objective functions are displayed in Tables 3, 4, and 5, respectively. From Tables 3 and 4, it can be seen that the proposed hybrid optimization algorithm, HSATLBO, yields better results compared to that of Grey Wolf Optimizer (GWO) [22], NSGA II [4], JAYA [29], SA, and TLBO to solve unconstrained single objective benchmark functions. Similarly, from Table 5, it is evident that the proposed HSATLBO outperforms GWO, NSGA II, JAYA, SA, and TLBO to address constrained single objective benchmark functions. Thus, the proposed HSATLBO can be applied for the minimization of the objective functions in connection with the problem of off-line single view surveillance video synopsis generation.

4.4 Stitching

The stitching module is considered to be the final module of the video synopsis framework and used for the visualization of the final synopsis. The optimization framework generates the temporal shifts for all moving objects and according to the corresponding temporal shifts the objects are projected in the synopsis. Application of multi-layer background subtraction algorithm [46] for object detection and segmentation also produces the corresponding background frames parallel to the generation of foreground masks. These backgrounds frames are considered for making the background video for the synopsis onto which the objects are stitched. The background video length is dependent on the synopsis length, where the synopsis length is user dependent or as per the length of the largest tube to preserve all activities. Uniform temporal sampling is employed on the background frames to generate time lapsed synopsis background. This scheme helps to preserve the lightning conditions successfully in the generated synopsis background video. The corresponding time stamp for each tube is generated and stitched together with the tubes on the synopsis background frames applying the temporal shifts using Poisson Image Editing [25].

5 Experimental results and discussions

Exhaustive experiments are carried out on numerous surveillance videos for the performance evaluation of the proposed framework. MATLAB (version 2017a) with Intel Core I7 3.2 GHz CPU with 32 GB RAM is employed in the development and testing phase of the proposed approach. Figure 2 shows the experimental setup for the proposed work. The description of the set of video data, as well as the experimental results and analysis are discussed herein.

5.1 Experimental video data set

Videos having different frame rates, video lengths (i.e. number of frames) and number of objects are considered for the validation of the proposed methodology. In Table 6 various features of the videos considered are presented along with a snapshot of the first frame.

Videos considered for the testing purpose have different types of objects. The 1st video (Atrium) observes human beings walking around the field taken from [15]. The 2nd video (Pedestrians taken from ChangeDetection.NET [42]), captured within a park, involves walking as well as cycling action of humans. The 3rd video is a scene from MIT campus (PETS 2001 [5]) with a group of people moving around; it also contains tree branches and car movement. The 4th one is a real generated video taken from the IIIT Bhubaneswar surveillance dataset. The 5th video is captured in a railway station, projecting various human beings including long activity (PETS 2006 [6]) and the 6th one is depicting an outdoor crowded scenario of University of Minnesota (DS1/UMN/1 taken from UMN Dataset [38]).

5.2 Simulation results

5.2.1 Evaluation of the preprocessing modules (object detection & segmentation and tube formation)

In the suggested scheme, Multi-layer background subtraction algorithm is employed in the object Detection and Segmentation phase. Figure 3 shows a detailed visual analysis of various object detection and segmentation techniques applied to the considered experimental data set. Here, A(1), B(1), C(1), D(1), E(1), and F(1) are the input frames from original video (1st row); A(2), B(2), C(2), D(2), E(2), and F(2) are the resultant frames for background subtraction method (2nd row), where background is modeled by executing the temporal median for the video clip of 30 sec (15 seconds before and 15 seconds after); A(3), B(3), C(3), D(3), E(3), and F(3) are the resultant frames obtained by Gaussian mixture model (GMM) [14] (3rd row); A(4), B(4), C(4), D(4), E(4), and F(4) are the resultant frames obtained by PBAS [8] (4th row); A(5), B(5), C(5), D(5), E(5), and F(5) are the resultant frames obtained by LOBSTERBGS [34] (5th row); A(6), B(6), C(6), D(6), E(6), and F(6) are the resultant frames obtained by SuBSENSE [35] (6th row) and finally, A(7), B(7), C(7), D(7), E(7), and F(7) are the resultant frames obtained by applied multi-layer background subtraction algorithm [46] (7th row) for the proposed framework.

The input frame from the considered videos and the resultant foregrounds obtained from various object detection and segmentation algorithms. Columns A, B, C, D, E, and F corresponds to video 1, 2, 3, 4, 5, and 6, respectively. Rows 2-7 presents the resultant foreground frames while the 1st row depicts the input frames

It can be observed from Fig. 3 (A(7), B(7), C(7), D(7), E(7), and F(7)) that the resulted foreground masks obtained through the application of multi-layer background subtraction algorithm outperforms in terms of detected results using the other approaches (results depicted in Fig. 3 (A(2-6), B(2-6), C(2-6), D(2-6), E(2-6), and F(2-6))). Additionally, it is also able to remove noise and artifacts like ghosting and shadows as compared to its counterparts. Due to the fact that the performance of the tube formation is greatly affected by the results of object detection and segmentation phase, the selection of the foreground mask generation algorithm is crucial. The presence of shadow and noise in the foreground lead to sudden appearance of insignificant activities in the synopsis video. On the other hand, broken object trajectories on the foreground drives a single object extraction as multiple. This phenomenon affects the tracking process and restricts the tracker from generating a continuous tube, leading to unexpected disruption of appearance of objects in the synopsis video.

In the tube formation phase, like other approaches [16, 24, 27], the widely-applied Kalman filter-based multi-object tracker is employed. It is noted from the literature that in the process of tracking related to video synopsis, Kalman filter-based multi-object tracker is widely accepted, which in turn successfully addresses the occlusion problem. Thus, similar to other object-based video synopsis generation techniques [16, 24, 27], this work exploits Kalman filter-based multi-object tracker for tracking process. It should be noted that if the original video contains partial or full occlusion of moving objects, it will be reflected in the synopsis video as well, because of the fact that the occluded visual information of that moving object is missing in the original video.

5.2.2 Evaluation of proposed algorithm for the optimization framework

The proposed algorithm (HSATLBO) is implemented for the energy minimization (given by (6)), which generates the optimized video synopsis. The corresponding values of the biasing co-efficients ω0 and ω1 are determined to be 0.8 and 0.2, respectively, through extensive experimentation. Collision cost is assigned a higher bias as per sheer choice of the user to eliminate collision which causes loss of object activity data.

A comparative experimental study is performed with various optimization techniques (i.e. SA, TLBO, JAYA, NSGA II, and GWO) to authenticate the potential and precision of HSATLBO for the optimization of (6) and the results presented in Table 7. The chosen population size is 10 and maximum number of cost function evaluation is 100. In this table, the individual costs and the corresponding fitness values along with the execution time are compiled. The length of the synopsis produced is assigned to be equal to that of the longest object tube, thus a zero activity cost is obtained.

A statistical analysis in terms of Best, Mean, Worst, Standard Deviation and average Execution Time is presented in Table 8 for all the optimization techniques applied to videos 1, 2, 3, 4, 5, and 6, respectively. This analysis is performed using 10 independent runs for each algorithm and the best convergence plot is represented in Fig. 4. Additionally, Table 9 reflects a comparison between the proposed HSATLBO algorithm, and SA to solve the optimization framework proposed in the best state-of-the-arts [32] and [16]. The results obtained are presented in terms of the fitness value, time of execution, and final synopsis length (in terms of number of frames). During simulations of [32] and [16], the weights associated to collision and temporal consistency costs, are assumed to be 0.8 and 0.2, respectively.

In summary, from Tables 7 and 8, it is evident that the proposed algorithm outperforms the other optimization techniques considered for solving the proposed objective function. Moreover, it is observed from Table 9 that the proposed scheme is superior for solving the state-of-the-arts optimization frameworks in [32] and [16]. The visual quality assessment in terms of collision avoidance is shown in Fig. 5. The resultant synopsis frames present in all the odd rows of Fig. 5 are the outcome of the methodology proposed in [32], whereas those present in all the even rows are the outcome of the proposed methodology. In Fig. 5, the red circle and yellow circle signify the resultant collision from [32] and the proposed method, respectively. Except for the second row of Fig. 5, there is no yellow circle present in other rows indicating less number of collisions obtained from the proposed scheme. Thus, the final video synopsis obtained from the proposed scheme outperforms that of the approach used in [32].

First row: Video Synopsis results obtained in [32] of Video 1 (155th - 160th frames). Second row: Video Synopsis results obtained in HSATLBO of Video 1 (155th - 160th frames). Third row: Video Synopsis results obtained in [32] of Video 2 (140th - 145th frames). Fourth row: Video Synopsis results obtained in HSATLBO of Video 2 (140th - 145th frames). Fifth row: Video Synopsis results obtained in [32] of Video 3 (425th - 430th frames). Sixth row: Video Synopsis results obtained in HSATLBO of Video 3 (425th - 430th frames). Seventh row: Video Synopsis results obtained in [32] of Video 4 (271th - 276th frames). Eighth row: Video Synopsis results obtained in HSATLBO of Video 4 (271th - 276th frames). Ninth row: Video Synopsis results obtained in [32] of Video 5 (110th - 115th frames). Tenth row: Video Synopsis results obtained in HSATLBO of Video 5 (110th - 115th frames). Eleventh row: Video Synopsis results obtained in [32] of Video 6 (175th - 180th frames). Twelfth row: Video Synopsis results obtained in HSATLBO of Video 6 (175th - 180th frames). Red circle signifies the presence of collision in the resultant synopsis obtained in [32], where as yellow circle signifies the collision in resultant synopsis obtained in HSATLBO

6 Conclusion & future scope

Video synopsis is a potential tool to assess long surveillance video in a shorter retro of time by projecting multiple objects concurrently. In this paper, a hybrid approach (HSATLBO) is proposed for energy minimization problem. The hybrid optimization scheme offers an optimum object tube placement, which minimizes the energy function in terms of activity, collision and temporal consistency costs. Exhaustive experimental results and analysis reveal that the proposed scheme outperforms the benchmark schemes in terms of minimizing the overall fitness value for three different standard surveillance videos and one real generated surveillance video. Further, the proposed scheme has potential applications for home/office security, traffic control, detection of criminal activity, unusual alarming and civic protections etc.

Development of an automated and intelligent video synopsis framework remains a challenging problem. There exist several future directions which might further improve the overall efficacy of video synopsis framework. (1) To include action recognition module in single as well as multi-camera video synopsis framework to classify wide range of actions to obtain better action recognition accuracy. Moreover, it would help the synopsis generation steps to retain only the important actions in final synopsis video. (2) To design and formulate alternate multi-objective optimizations to reduce the synopsis length, number of collisions, and action loss. (3) To develop an efficient feature based object tracking techniques to form fluent activity tubes. (4) Further, to devise improved scheme for best view selection and mapping the multiple-view to a single view could be thought of as another area of extension.

References

Bishop G, Welch G, et al. (2001) An introduction to the Kalman filter. Tech. Rep. 27599-23175 Chapel Hill, NC, USA

Boykov Y, Veksler O, Zabih R (2001) Fast approximate energy minimization via graph cuts. IEEE Trans Pattern Anal Mach Intell 23(11):1222–1239. https://doi.org/10.1109/34.969114

Chou CL, Lin CH, Chiang TH, Chen HT, Lee SY (2015) Coherent event-based surveillance video synopsis using trajectory clustering. In: 2015 IEEE international conference on multimedia & expo workshops (ICMEW). IEEE, pp 1–6

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197. https://doi.org/10.1109/4235.996017

Ferryman JM (2001) Second IEEE international workshop on performance evaluation of tracking and surveillance (pets 2001). IEEE Computer Society

Ferryman JM (2006) Ninth IEEE international workshop on performance evaluation of tracking and surveillance (pets 2006). IEEE Computer Society

He Y, Gao C, Sang N, Qu Z, Han J (2017) Graph coloring based surveillance video synopsis. Neurocomputing 225:64–79

Hofmann M, Tiefenbacher P, Rigoll G (2012) Background segmentation with feedback: the pixel-based adaptive segmenter. In: 2012 IEEE computer society conference on computer vision and pattern recognition workshops (CVPRW). IEEE, pp 38–43

Huang CR, Chung PCJ, Yang DK, Chen HC, Huang GJ (2014) Maximum a posteriori probability estimation for online surveillance video synopsis. IEEE Trans Circ Syst Vid Technol 24(8):1417–1429. https://doi.org/10.1109/TCSVT.2014.2308603

Jamil M, Yang XS (2013) A literature survey of benchmark functions for global optimization problems. arXiv:13084008

Khadanga RK, Kumar A (2016) Hybrid adaptive ‘gbest’-guided gravitational search and pattern search algorithm for automatic generation control of multi-area power system. IET Gen Transmiss Distrib 11(13):3257–3267

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220(4598):671–680

Kolmogorov V, Zabin R (2004) What energy functions can be minimized via graph cuts? IEEE Trans Pattern Anal Mach Intell 26(2):147–159. https://doi.org/10.1109/TPAMI.2004.1262177

Lee DS (2005) Effective gaussian mixture learning for video background subtraction. IEEE Trans Pattern Anal Machne Intell 5:827–832

Li K, Yan B, Wang W, Gharavi H (2016) An effective video synopsis approach with seam carving. IEEE Signal Process Lett 23(1):11–14. https://doi.org/10.1109/LSP.2015.2496558

Li X, Wang Z, Lu X (2016) Surveillance video synopsis via scaling down objects. IEEE Trans Image Process 25(2):740–755. https://doi.org/10.1109/TIP.2015.2507942

Liao W, Tu Z, Wang S, Li Y, Zhong R, Zhong H (2017) Compressed-domain video synopsis via 3d graph cut and blank frame deletion. In: Proceedings of the on thematic workshops of ACM multimedia 2017. ACM, pp 253–261

Lin W, Zhang Y, Lu J, Zhou B, Wang J, Zhou Y (2015) Summarizing surveillance videos with local-patch-learning-based abnormality detection, blob sequence optimization, and type-based synopsis. Neurocomputing 155:84–98. https://doi.org/10.1016/j.neucom.2014.12.044

Liu Y, Li X, Wang L, Niu Y (2014) Interpolation-tuned salient region detection. Sci China Inf Sci 57(1):1–9

Lu M, Wang Y, Pan G (2013) Generating fluent tubes in video synopsis. In: 2013 IEEE international conference on acoustics, speech and signal processing, pp 2292–2296. https://doi.org/10.1109/ICASSP.2013.6638063

Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E (1953) Equation of state calculations by fast computing machines. J Chem Phys 21 (6):1087–1092

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mishra SK (2006) Some new test functions for global optimization and performance of repulsive particle swarm method. MPRA Paper

Nie Y, Xiao C, Sun H, Li P (2013) Compact video synopsis via global spatiotemporal optimization. IEEE Trans Vis Comput Graph 19(10):1664–1676. https://doi.org/10.1109/TVCG.2012.176

Pérez P, Gangnet M, Blake A (2003) Poisson image editing. ACM Trans Graph (TOG) 22(3):313–318

Pritch Y, Rav-Acha A, Gutman A, Peleg S (2007) Webcam synopsis: peeking around the world. In: IEEE 11th international conference on computer vision, 2007. ICCV 2007. IEEE, pp 1–8

Pritch Y, Rav-Acha A, Peleg S (2008) Nonchronological video synopsis and indexing. IEEE Trans Pattern Anal Mach Intell 30(11):1971–1984. https://doi.org/10.1109/TPAMI.2008.29

Pritch Y, Ratovitch S, Hendel A, Peleg S (2009) Clustered synopsis of surveillance video. In: 2009 advanced video and signal based surveillance. IEEE, pp 195–200

Rao R (2016) Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int J Ind Eng Comput 7(1):19–34

Rao R (2016) Review of applications of tlbo algorithm and a tutorial for beginners to solve the unconstrained and constrained optimization problems. Decis Sci Lett 5 (1):1–30

Rao RV, Savsani VJ, Vakharia D (2011) Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput Aided Des 43(3):303–315

Rav-Acha A, Pritch Y, Peleg S (2006) Making a long video short: dynamic video synopsis. In: 2006 IEEE computer society conference on computer vision and pattern recognition (CVPR’06), vol 1, pp 435–441. https://doi.org/10.1109/CVPR.2006.179

Simionescu PA (2014) Computer-aided graphing and simulation tools for AutoCAD users. Chapman and Hall/CRC

St-Charles PL, Bilodeau GA (2014) Improving background subtraction using local binary similarity patterns. In: 2014 IEEE winter conference on applications of computer vision (WACV). IEEE, pp 509–515

St-Charles PL, Bilodeau GA, Bergevin R (2014) Flexible background subtraction with self-balanced local sensitivity. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp 408–413

Sun L, Xing J, Ai H, Lao S (2012) A tracking based fast online complete video synopsis approach. In: Proceedings of the 21st international conference on pattern recognition (ICPR2012), pp 1956–1959

Tian Y, Zheng H, Chen Q, Wang D, Lin R (2016) Surveillance video synopsis generation method via keeping important relationship among objects. IET Comput Vis 10(8):868–872

UMN (2006) Umn: unusual crowd activity dataset. http://mha.cs.umn.edu/Movies/Crowd-Activity-All.avi

Vural U, Akgul YS (2009) Eye-gaze based real-time surveillance video synopsis. Pattern Recogn Lett 30(12):1151–1159. https://doi.org/10.1016/j.patrec.2009.03.002

Wang S, Yang J, Zhao Y, Cai A, Li SZ, et al. (2011) A surveillance video analysis and storage scheme for scalable synopsis browsing. In: 2011 IEEE international conference on computer vision workshops (ICCV Workshops). IEEE, pp 1947–1954

Wang Sz, Wang Zy, Hu Rm (2013) Surveillance video synopsis in the compressed domain for fast video browsing. J Vis Commun Image Represent 24(8):1431–1442

Wang Y, Jodoin PM, Porikli F, Konrad J, Benezeth Y, Ishwar P (2014) Cdnet 2014: an expanded change detection benchmark dataset. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp 387–394

Wang W, Shen J, Li X, Porikli F (2015) Robust video object cosegmentation. IEEE Trans Image Processing 24(10):3137–3148

Wang WC, Chung PC, Huang CR, Huang WY (2017) Event based surveillance video synopsis using trajectory kinematics descriptors. In: 2017 fifteenth IAPR international conference on machine vision applications (MVA), pp 250–253. https://doi.org/10.23919/MVA.2017.7986848

Xu M, Li SZ, Li B, Yuan XT, Xiang SM (2008) A set theoretical method for video synopsis. In: Proceedings of the 1st ACM international conference on multimedia information retrieval. ACM, pp 366–370

Yao J, Odobez JM (2007) Multi-layer background subtraction based on color and texture. In: 2007 IEEE conference on computer vision and pattern recognition, pp 1–8. https://doi.org/10.1109/CVPR.2007.383497

Yao T, Xiao M, Ma C, Shen C, Li P (2014) Object based video synopsis. In: 2014 IEEE workshop on advanced research and technology in industry applications (WARTIA), pp 1138–1141. https://doi.org/10.1109/WARTIA.2014.6976479

Yildiz A, Ozgur A, Akgul YS (2008) Fast non-linear video synopsis. In: 23rd international symposium on computer and information sciences, 2008. ISCIS’08. IEEE, pp 1–6

Yuan Y, Lu X, Chen X (2015) Multi-spectral pedestrian detection. Signal Process 110:94–100. https://doi.org/10.1016/j.sigpro.2014.08.003. http://www.sciencedirect.com/science/article/pii/S0165168414003661, machine learning and signal processing for human pose recovery and behavior analysis

Zhong R, Hu R, Wang Z, Wang S (2014) Fast synopsis for moving objects using compressed video. IEEE Signal Process Lett 21(7):834–838. https://doi.org/10.1109/LSP.2014.2317754

Zhu X, Liu J, Wang J, Lu H (2012) Key observation selection for effective video synopsis. In: 2012 21st international conference on pattern recognition (ICPR). IEEE, pp 2528–2531

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ghatak, S., Rup, S., Majhi, B. et al. An improved surveillance video synopsis framework: a HSATLBO optimization approach. Multimed Tools Appl 79, 4429–4461 (2020). https://doi.org/10.1007/s11042-019-7389-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-019-7389-7