Abstract

Because of the increase in the number of elderly people living alone and in accidents involving them, there is growing demand for a monitoring system that observes their everyday life in their residences and supports a fast response in an emergency. A framework and a system are presented to monitor the emergency situations of the elderly living alone using a low-cost device and open-source software. First, human poses and the emergency situations indicated by pose changes were defined using object recognition, and a procedure capable of detecting such situations was proposed. In addition, a pose recognition model was created using Google's TensorFlow Object Detection application programming interface (API) to implement the procedure. Using a data preprocessing process and the created model, a system capable of detecting emergency situations and sounding an alarm was implemented. To verify the proposed system, the pose recognition success rate was examined, and an emergency recognition experiment was performed while the angle and distance of the camera were varied in a setup similar to a residential environment. The proposed framework for the emergency notification system for the elderly is expected to be useful for analyzing various behavior patterns, such as the sudden abnormal behavior of the elderly, people with disabilities, and children.


1 Introduction

According to an Organisation for Economic Co-operation and Development (OECD) demographic survey, the population proportion of the elderly will double within the next few decades [8]. People aged 65 or older are generally defined as the elderly. In the United States and Europe, the proportion of the elderly is expected to rise by 2% every year and reach almost 30% in 2025. In Japan, the proportion will be 19% in 2025, signaling its transition into a super-aged society [10]. In South Korea, the proportion rose from 10.2% in 2008 to 14% in 2017, indicating its transition into an aging society. Along with this aging, the proportion of the elderly living alone is increasing by 2–3% each year, representing 33.5% of Korea’s total population. Owing to the characteristics of their residential and living environments, the elderly living alone have limited or no communication with society. In particular, although there are social laws for the aged population, such as income assurance, healthcare assurance, work assurance, and social welfare assurance, elderly welfare is not regulated by a separate body of law. Therefore, even though individual laws have been enacted for each protected group, the elderly have been relatively neglected because of the insufficient connection between these laws and the limits of national finances [15]. As a result, many elderly people are disconnected from society without proper social protection and cannot contact emergency services such as 119 or nearby hospitals when they face sudden accidents or health emergencies, which increases the number of lonely deaths. To prevent such problems, a system is required that responds promptly to emergencies by contacting 119 or nearby hospitals when an elderly person behaves unusually or takes a pose indicating an emergency.

Previous studies on managing the safety of the elderly and preventing lonely deaths fall largely into two groups: studies on Internet of things (IoT) systems for lonely-death prevention, which sound an alarm when a person's fall is detected, and studies on ambient intelligence (AmI)-based smart homes, which manage the living environments of the elderly, such as movement paths, meals, leisure, sleep patterns, temperature, and humidity. However, previous motion-detection approaches could not identify various poses and life patterns, and home care services are expensive because they require many sensors and wireless devices.

Therefore, this study proposes an elderly behavior emergency detection system that monitors the indoor behavior of the elderly, detects emergency situations, and sounds an alarm using a low-cost webcam and open-source object recognition software. Emergency situations are detected by monitoring the pose changes of the elderly with object recognition technology.

Object recognition means creating a classification model by applying machine learning to images of objects and identifying objects with that model. Google released the TensorFlow Object Detection application programming interface (hereafter “Object Detection API”) (https://www.tensorflow.org/), an object recognition framework, as open-source software. It is built on TensorFlow, Google's machine-learning and deep-learning engine, for recognizing objects in images and is provided as a library. Because numerous deep-learning models developed for image training can be used easily, the technology can be applied without building and training a deep-learning model from scratch.

The Object Detection API can be used in various areas, such as homes, industries, and ecosystems. For example, considerable labor and cost have been invested in counting the animals in an ecosystem by photographing them with drones and analyzing the images manually. The Object Detection API can save this time and cost, because people no longer need to classify and count the animals themselves [27]. In addition, Amazon applied object detection to product-specific packing methods and saved time and cost by allowing robots to pack objects promptly in various situations and places [32].

The Object Detection API is designed primarily to distinguish objects in an image. However, because of the characteristics of its image analysis algorithm, it can also distinguish the poses of people, so it can be used to detect the emergency situations of the elderly.

The remainder of this paper is organized as follows. Section 2 introduces studies on lonely-death prevention and healthcare for the elderly, as well as studies on detecting objects, poses, and movements in images. Section 3 describes the framework, architecture, and implementation results of the elderly behavior emergency detection system for lonely-death prevention. Section 4 explains the experimental design used to verify the performance of the proposed system and the experimental results. Section 5 concludes this study.

2 Related studies

2.1 Object detection

Object detection identifies objects with a model that has been trained on images. The keys are defining the image region that contains an object and recognizing the object accurately within that region.

The Fast Region-based Convolutional Network method (Fast-RCNN) achieves higher accuracy than earlier convolutional neural network (CNN)-based approaches by extracting image features and minimizing noise for the image analysis. As shown in Fig. 1, Fast-RCNN consists of a convolutional feature map and region of interest (RoI) feature vectors for the regions produced by a proposal method. The convolutional feature map is computed from the entire image by convolution and max-pooling layers and is passed to the RoI pooling layer. The RoI pooling layer projects each proposed region onto the feature map and converts the features inside it into a fixed-size map, which is then passed through fully connected layers (FCs) to produce the RoI feature vector. From this vector, Softmax probabilities over the K object classes (plus a background class) and bounding-box offsets for each of the K classes are computed to determine the final class [26].

Fast-RCNN architecture (edited from [11])
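The RoI pooling and classification steps described above can be illustrated with a minimal single-channel sketch in plain Python. It is not the TensorFlow implementation: the feature map, the RoI (assumed to be already projected onto feature-map cells), the 2x2 output size, and the class scores are all hypothetical values, and a real layer works on multi-channel tensors.

```python
import math


def roi_max_pool(feature_map, roi, out_h, out_w):
    """Max-pool one RoI of a single-channel feature map into an out_h x out_w grid.

    feature_map: list of rows of floats.
    roi: (x0, y0, x1, y1) in feature-map cells, with x1 and y1 exclusive.
    """
    x0, y0, x1, y1 = roi
    roi_h, roi_w = y1 - y0, x1 - x0
    pooled = []
    for i in range(out_h):
        # Floor the start and ceil the end so every bin is non-empty and the bins cover the RoI.
        ys = y0 + math.floor(i * roi_h / out_h)
        ye = y0 + math.ceil((i + 1) * roi_h / out_h)
        row = []
        for j in range(out_w):
            xs = x0 + math.floor(j * roi_w / out_w)
            xe = x0 + math.ceil((j + 1) * roi_w / out_w)
            row.append(max(feature_map[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled


def softmax(scores):
    """Turn raw class scores into probabilities (max subtracted for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


fm = [[4 * y + x for x in range(4)] for y in range(4)]  # toy 4x4 feature map
print(roi_max_pool(fm, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]

# Hypothetical scores for a background class plus K = 3 pose classes.
classes = ["background", "standing", "sitting", "lying"]
probs = softmax([0.1, 2.0, 0.3, 0.2])
print(classes[probs.index(max(probs))])  # standing
```

Whatever the RoI size, the pooled output has the same shape, which is what lets the fully connected layers that follow accept regions of any size.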

2.2 Related studies

2.2.1 Physical health and residential-environment monitoring system

Because of the advances in IoT and the development of the Internet, studies on healthcare systems that monitor everyday life are increasing. The methods for monitoring the physical health and residential environment of people can be divided into contact types that attach sensors to the body to be monitored and noncontact types that do not attach a sensor to the body.

Contact-type sensors hinder movement because they are attached to the body; therefore, their use is mostly limited to biosignal monitoring. Chung et al. [7] fabricated bracelet-type and belt-type conductivity, accelerometer, and oxygen saturation sensors and constructed a medical monitoring system that collects the wearer's physical activity information over a wireless network (IEEE 802.15.4). The study is significant in that it developed a system that monitors biosignals through a wearable device compatible with next-generation wireless sensor networks without interfering with the activities of the elderly. Jang et al. [13] fabricated light-transmissive and light-reflective pulse sensors in the shape of a ring and compared them by checking the heart rate. They transmitted the heart rate data collected from the sensors over a ZigBee network and constructed a system that monitors the data and sends a warning message by Short Message Service (SMS) in an emergency. Compared with pulse sensors attached to the chest, their ring-type sensors allow free, more comfortable movement in everyday life. Kim [14] detected falls and activities and measured the heart rate by mounting a three-axis acceleration sensor and a pulse sensor in a wristwatch-shaped housing, chosen so that the device would not restrict movement. In an experiment with staged falls, a fall detection rate of approximately 70% was obtained. Paradise et al. [22] fabricated clothes of piezoresistive material with embedded electrode sensors, taking the wearing comfort of the user into account, and developed a transmission module called a “portable patient unit,” which transmits the data collected from the electrode sensors remotely.
The clothes were made with standard textile industry processes to provide the same wearing comfort as ordinary clothes, so the monitored person could move with ease and comfort in everyday life. Bourke et al. [3] attached three-axis acceleration sensors to the thighs of people and proposed a system that classifies eight types of fall situation using upper and lower limits on the values collected from the sensors. The limits were set using data from approximately 240 fall situations. In addition, they determined the optimal attachment location of the three-axis acceleration sensor through experiments.
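The upper/lower-limit idea used by Bourke et al. [3] can be sketched as a check on the magnitude of the three-axis acceleration vector. This is a minimal illustration, not their published algorithm: the limit values, the unit (g), and the function names are assumptions chosen for the example.

```python
import math

# Hypothetical limits in g; Bourke et al. derived their limits from recorded falls.
LOWER_LIMIT_G = 0.4  # a free-fall phase drives the magnitude well below 1 g
UPPER_LIMIT_G = 2.5  # an impact drives the magnitude well above 1 g


def magnitude(ax, ay, az):
    """Magnitude of the three-axis acceleration vector."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def first_fall_sample(samples, lower=LOWER_LIMIT_G, upper=UPPER_LIMIT_G):
    """Return the index of the first sample outside [lower, upper], or None."""
    for i, (ax, ay, az) in enumerate(samples):
        m = magnitude(ax, ay, az)
        if m < lower or m > upper:
            return i
    return None


standing = [(0.0, 0.0, 1.0)] * 5  # at rest the sensor reads only gravity, about 1 g
fall = standing + [(0.1, 0.1, 0.1), (0.5, 2.0, 2.4)]
print(first_fall_sample(standing))  # None
print(first_fall_sample(fall))      # 5
```

Checking both limits catches the short free-fall dip as well as the impact spike that usually follows it.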

Noncontact sensors are frequently used to analyze the activities of the monitoring target because they are not attached to the body and allow free movement. Mehta et al. [19] collected usage information by attaching noncontact sensors to home appliances, such as refrigerators and gas valves, and derived the behavior pattern of the target. The study is significant in that it predicted the movements of the target from the behavior pattern and provided conveniences such as operating home appliances automatically. Zhu et al. [34] attached radio-frequency identification (RFID) tags to the elderly living alone and proposed a method of detecting emergency situations from changes in the radio signal. The study constructed a monitoring system that distinguishes falls from everyday activity using the Doppler frequency and received signal strength (RSS), which vary with the sudden physical movements of the wearer. Movements such as walking, sitting, and standing were repeated in the experiment, and the thresholds for the Doppler frequency and RSS were derived from it. Erik et al. [29] built a decision tree that distinguishes the poses (sitting, standing, and lying down) of the monitoring target in images from Microsoft's Kinect depth camera. They proposed a method of detecting falls with the Kinect depth camera as well as a method of increasing accuracy with the decision tree. Falls performed by a stunt actor and approximately 3000 days of the daily life of 16 residents were monitored, and the collected data were used in the analysis. They showed that their models outperformed previous fall detection algorithms [2, 16, 18, 21, 24].

In addition to the above studies, studies that combine contact and noncontact sensors have also been conducted. Cho et al. [5] collected the position, momentum, and voice data of the user with multisensing technology, which combines wearable devices with various sensors, such as smartphones, IoT-based GPS, acceleration sensors, gyro sensors, heart rate sensors, voice sensors, and touch sensors, and analyzed the user's behavior with a support vector machine (SVM) and an artificial neural network (ANN). They provided a customized monitoring service by adding psychological factors, derived from the voice and heart rate sensor data, to existing methods of predicting human behavior. Choi et al. [6] monitored the entrance usage, indoor movements, home appliance usage frequency, and blood pressure measurements of the elderly living alone through a ZigBee-public switched telephone network (PSTN) gateway. Because it used the wired PSTN telephone line, the system could monitor the activities of the elderly living alone even in areas where Internet installation is limited.

2.2.2 Object recognition and pose detection

Many studies have been conducted to recognize objects in images. Early work frequently detected objects from the difference between a background image and the current frame [20, 28, 31]. However, object tracking based on differences between frames cannot guarantee accuracy, because it is strongly affected by external conditions such as the color and lighting of the background. To address this problem, Elgammal et al. [9] proposed estimating the kernel density of each background pixel. The algorithm estimates the distribution of each background pixel's values and, in the next frame, eliminates the pixels whose values fit that estimated background distribution. It achieved higher accuracy and speed than methods that compare only the difference between frames. Zhan et al. [33] used the Canny edge detector [4] to detect moving objects. Based on this detector, they derived the edges of an object from the difference between frames and proposed an algorithm that determines moving areas by comparing pixel values with a threshold. They compared this algorithm with the frame difference [28], background subtraction [20], and moving-edge [31] methods on 250 images and verified that the proposed algorithm detects objects more accurately and faster than the existing algorithms. John et al. [1] proposed a CamShift (Continuously Adaptive Mean Shift)-based method that tracks an object by determining whether pixel values belong to a preset value range. After the object area to be tracked is designated in the image, the probability distribution of the pixel values in that area is calculated, and the object is recognized by finding the part of the next frame whose pixel values match the predesignated area.
The study has a limitation in that tracking fails when the color of the predesignated area changes because of lighting.
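The per-pixel kernel density idea of Elgammal et al. [9] can be sketched for grayscale frames as follows. This is a simplified illustration under stated assumptions: each pixel keeps a short history of recent background values, a Gaussian kernel with a fixed, hypothetical bandwidth `sigma` is used, and a pixel is marked as foreground when its estimated probability falls below a hypothetical `threshold`.

```python
import math


def kde_probability(value, history, sigma):
    """Gaussian kernel density estimate of a pixel value given its recent background samples."""
    norm = 1.0 / (math.sqrt(2 * math.pi) * sigma)
    return sum(norm * math.exp(-((value - h) ** 2) / (2 * sigma ** 2)) for h in history) / len(history)


def foreground_mask(frame, histories, sigma=10.0, threshold=1e-3):
    """Return 1 for pixels that are unlikely under their background model, else 0.

    frame: list of rows of grayscale values.
    histories: same shape as frame, each entry a list of past background values.
    """
    mask = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, value in enumerate(row):
            p = kde_probability(value, histories[y][x], sigma)
            mask_row.append(1 if p < threshold else 0)
        mask.append(mask_row)
    return mask


# Two pixels whose background hovers around 100; the second one suddenly reads 200.
histories = [[[98, 100, 102], [99, 101, 100]]]
print(foreground_mask([[100, 200]], histories))  # [[0, 1]]
```

Because each pixel has its own density estimate, small, repeated background fluctuations stay in the background model, whereas a simple frame difference would flag them.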

Recently, object recognition models based on deep learning have been proposed. Girshick et al. [11] conducted a study on object detection using the Fast-RCNN model. In the experiments, networks pretrained on ImageNet (AlexNet and VGG models) were used, and compared with previous algorithms, Fast-RCNN achieved the fastest speed and high detection rates. The study is important because it proposed a deep-learning model that can detect objects without high-end hardware. Gkioxari et al. [12] proposed a model that detects behavior by learning the poses that accompany specific human actions. Experiments were conducted on image datasets such as PASCAL VOC Action and MPII Human Pose, and all experiments exhibited more than 90% accuracy.

Various studies on estimating the pose of a detected person have also been conducted. In a representative study, Rogez et al. [25] divided 2D and 3D images into areas based on the joints of the body and proposed distinguishing poses with the localization-classification-regression network (LCR-Net). Yang [30] also proposed detecting poses by designating partial areas of the human body and learning them. Using 305 poses from the Image Parse dataset and 748 poses from the Buffy Stickmen dataset, the method showed that body movements can be monitored by locating the joints of the body and defining their movements. Pfister et al. [23] proposed predicting poses in video by expressing the joint positions in each frame as heatmaps and using optical flow to align and combine the heatmaps of neighboring frames with a convolutional network.
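Heatmap-based pose estimation, as in the work of Pfister et al. [23], represents each joint as a map whose values peak at the joint location. A minimal sketch of encoding a joint as a 2D Gaussian heatmap and decoding it back with an argmax is shown below; the map size, the joint position, and the Gaussian width `sigma` are hypothetical, and a real network predicts such maps rather than computing them from known joints.

```python
import math


def joint_heatmap(width, height, joint_x, joint_y, sigma=1.5):
    """Encode one joint location as a 2D Gaussian heatmap (list of rows), peaking at 1.0."""
    return [[math.exp(-((x - joint_x) ** 2 + (y - joint_y) ** 2) / (2 * sigma ** 2))
             for x in range(width)]
            for y in range(height)]


def decode_joint(heatmap):
    """Recover the joint as the (x, y) position of the heatmap maximum."""
    _, x, y = max((value, x, y) for y, row in enumerate(heatmap) for x, value in enumerate(row))
    return x, y


print(decode_joint(joint_heatmap(10, 8, joint_x=3, joint_y=5)))  # (3, 5)
```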

3 Development of the elderly behavior emergency detection system

In this section, a framework for detecting emergencies in the everyday life of the elderly is presented. The presented framework was implemented using the TensorFlow Object Detection API of Google, and the results of the development of the elderly behavior detection system are explained.

3.1 Elderly behavior emergency detection framework

Figure 2 shows the configuration of the elderly behavior emergency detection framework. First, images of various poses are collected from the Web and preprocessed. The preprocessed images are then used to train the Fast-RCNN model so that poses can be distinguished. The model with the highest accuracy among the training results is selected and used to detect, in real time, people and their poses in images of the residential environment captured by a webcam. Finally, the pose changes of the detected person are tracked, and a warning message is transmitted if a specific pose change occurs within a short time, as measured by the time between the frames in which the change is observed.

The detailed explanations and guidelines for each step are as follows.

1. Image Collecting: In this step, the images of each pose required for training are collected from the Web and stored in the Pose Image Database (D1). Fatkun (https://chrome.google.com/webstore/detail/fatkun-batch-downloadima/nnjjahlikiabnchcpehcpkdeckfgnohf) and Extreme Picture Finder (https://download.cnet.com/Extreme-Picture-Finder/3000-2071_4-10070685.html) can be used to download images easily with keywords for each pose.

2. Image Preprocessing: In this step, the collected images are preprocessed for training with the Object Detection API. Preprocessing cuts the area of a person out of each training image and creates metadata for the cut area. The metadata include the width, depth, height, and pose class of the extracted area and are stored in XML or CSV format. The area of a person can be cut out manually, or it can be extracted automatically with an Object Detection API model that detects people. Manual cutting isolates only the person accurately, whereas automatic extraction preprocesses the images much faster but with lower accuracy.

3. Model Generating: In this step, an object detection model that classifies poses is trained using the metadata stored in D2. During training, the model is saved at regular intervals, and the saved model with the lowest loss value is selected as the most accurate one. Representative object detection models include the Single Shot Detector (SSD), You Only Look Once (YOLO), and the Fast Region-based Convolutional Network (Fast-RCNN).

4. Detecting: This step is divided into the Pose Detecting and Speed Tracking substeps, in which the trained model is used to classify the pose of a person and measure the speed of the pose change.

   1. Pose Detecting: Pose detecting is performed on each frame of the real-time video using the pose classification model. Either a Raspberry Pi camera or a webcam can be used as the image-capturing device; the Raspberry Pi camera receives frames in real time more slowly than the webcam.

   2. Speed Tracking: Between two frames with different recognized poses, there may be frames in which no pose is recognized because the person passes through intermediate poses that were not learned. The speed-tracking step uses the pose recognized in the previous frame through pose detecting, together with these unrecognized frames, and detects an emergency from the time difference between the frames in which the pose change occurred. The shorter the time difference, the faster the pose change is judged to be, which makes it possible to detect emergencies such as falls or collapses.

5. Emergency Detecting: Emergency types are defined in advance, and speed tracking is performed if the detected pose change corresponds to one of the emergency types. Speed tracking extracts the coordinates of the top-center point of the bounding box that encloses the person recognized through pose detecting, and it computes the speed from the pixel displacement of this point between the previous and the next image frames. When a pose change corresponding to an emergency type is detected and the speed exceeds a threshold, which is set through experiments, the situation is judged to be an emergency. In that case, an emergency SMS is sent through the SMS service of an Internet hosting company.
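The speed-tracking rule of the Emergency Detecting step can be sketched as follows. This is a minimal illustration under stated assumptions: boxes are (xmin, ymin, xmax, ymax) in pixels with y growing downward, timestamps are in seconds, the two emergency types are the standing-to-lying and sitting-to-lying changes used in Section 3.2, and the pixel-speed threshold is a hypothetical value that would, as the text says, be set through experiments.

```python
import math

# Emergency types defined in advance: (previous pose, new pose).
EMERGENCY_TYPES = {("standing", "lying"), ("sitting", "lying")}


def top_center(box):
    """Top-center point of a box (xmin, ymin, xmax, ymax); the top edge has the smallest y."""
    xmin, ymin, xmax, ymax = box
    return ((xmin + xmax) / 2.0, ymin)


def pixel_speed(prev_box, prev_time, curr_box, curr_time):
    """Speed of the top-center point between two frames, in pixels per second."""
    dt = curr_time - prev_time
    if dt <= 0:
        raise ValueError("frames must be in increasing time order")
    (x0, y0), (x1, y1) = top_center(prev_box), top_center(curr_box)
    return math.hypot(x1 - x0, y1 - y0) / dt


def is_emergency(prev_pose, curr_pose, speed, threshold):
    """An emergency needs a predefined pose change and a speed above the threshold."""
    return (prev_pose, curr_pose) in EMERGENCY_TYPES and speed > threshold


# A standing box, then a lying box half a second later; the top edge drops by 240 px.
speed = pixel_speed((100, 0, 200, 300), 0.0, (50, 240, 250, 340), 0.5)
print(speed)                                                       # 480.0
print(is_emergency("standing", "lying", speed, threshold=300.0))  # True (hypothetical threshold)
```

Tracking the top of the box rather than its center reflects that the head drops furthest during a fall, while the feet barely move.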

3.2 Implementation

The elderly behavior emergency detection system largely consists of an image preprocessing module, an image training module, an object detection module, and an emergency detection module, as shown in Fig. 3. The detailed descriptions of the system configuration are as follows.

Pose image searching

Several programs can be used for image collection. In this study, images of three poses (sitting, standing, and lying down) were collected using Extreme Picture Finder, shown in Fig. 4. Extreme Picture Finder produces little noise because it automatically deletes images that are not related to the keywords.

The images retrieved with Extreme Picture Finder may include duplicates. These must be eliminated using VisiPics (http://www.visipics.info/index.php?title), as shown in Fig. 5.
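VisiPics finds visually similar pictures; its internal algorithm is not described here. As a stand-in, a minimal sketch of one common near-duplicate technique, average hashing, is shown below. It assumes grayscale images given as lists of rows, uses nearest-neighbour sampling instead of proper resizing for brevity, and the Hamming-distance cut-off `max_distance` is a hypothetical value.

```python
def average_hash(gray, size=8):
    """Average hash: sample the image to size x size and mark pixels brighter than the mean."""
    h, w = len(gray), len(gray[0])
    small = [gray[(i * h) // size][(j * w) // size] for i in range(size) for j in range(size)]
    mean = sum(small) / len(small)
    return [1 if p > mean else 0 for p in small]


def hamming(a, b):
    """Number of positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def find_near_duplicates(images, max_distance=5):
    """Return pairs of image names whose hashes differ in at most max_distance bits."""
    hashes = {name: average_hash(img) for name, img in images.items()}
    names = sorted(hashes)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if hamming(hashes[a], hashes[b]) <= max_distance:
                pairs.append((a, b))
    return pairs


horizontal = [[16 * x for x in range(16)] for _ in range(16)]
brighter_copy = [[16 * x + 2 for x in range(16)] for _ in range(16)]
vertical = [[16 * y for _ in range(16)] for y in range(16)]
print(find_near_duplicates({"a": horizontal, "a_copy": brighter_copy, "b": vertical}))
# [('a', 'a_copy')]
```

Because each bit is relative to the image's own mean, a uniformly brightened copy hashes identically, while a genuinely different picture differs in many bits.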

Image preprocessing module

In this study, image preprocessing was performed using a pretrained object detection model based on the Single Shot MultiBox Detector (SSD). The images to be extracted are classified into sitting, standing, and lying down: each pose class is used as a folder name, and the images of that pose are stored in the folder. The object detection model iterates over the folders and detects the area of a person in each stored image. The pretrained model can be used through the Deep Neural Network (DNN) module of OpenCV 3 (https://opencv.org/opencv-3-3.html), a computer vision library. The model marks the area of a person in the input image with a rectangle and stores the x and y coordinates of the bottom-left and top-right corners of this bounding box. The model keeps searching each image until no further person is detected. The x and y coordinates of each rectangle are stored in a CSV file together with the file name and class, and the CSV file is used as input to the next step, the image-training module. Because the system constructed in this research can detect two or more people in one image, several people can be labeled at once, which reduces labeling time. However, results may vary with the object detection model used, because accuracy differs between models. Figure 6 shows the conceptual diagram of the data labeling constructed in this study.
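The labeling output described above can be sketched as follows. This is a hedged illustration, not the authors' code. It assumes SSD detections in the row layout that OpenCV's DNN module returns for Caffe SSD models (`[batch_id, class_id, confidence, x1, y1, x2, y2]`, with corners normalized to [0, 1]) and assumes class 15 is "person", as in the commonly used 21-class MobileNet-SSD model. The confidence cut-off and the CSV column layout are hypothetical choices for the example; the pose class is taken from the image's folder name, as the text describes.

```python
import csv
import io
import os

PERSON_CLASS_ID = 15   # assumption: "person" in the 21-class MobileNet-SSD (VOC) model
MIN_CONFIDENCE = 0.5   # hypothetical confidence cut-off
HEADER = ["filename", "width", "height", "class", "xmin", "ymin", "xmax", "ymax"]


def pose_class_from_path(image_path):
    """The pose class is the name of the folder holding the image."""
    return os.path.basename(os.path.dirname(image_path))


def person_rows(image_path, width, height, detections):
    """Convert SSD detections for one image into CSV rows, one row per detected person."""
    filename = os.path.basename(image_path)
    pose_class = pose_class_from_path(image_path)
    rows = []
    for _, class_id, confidence, x1, y1, x2, y2 in detections:
        if int(class_id) != PERSON_CLASS_ID or confidence < MIN_CONFIDENCE:
            continue  # skip non-person or low-confidence boxes
        # Scale the normalized corners back to pixel coordinates.
        rows.append([filename, width, height, pose_class,
                     round(x1 * width), round(y1 * height),
                     round(x2 * width), round(y2 * height)])
    return rows


def write_labels(rows, stream):
    """Write the header and all label rows as CSV."""
    writer = csv.writer(stream)
    writer.writerow(HEADER)
    writer.writerows(rows)


detections = [
    [0, 15, 0.92, 0.10, 0.20, 0.50, 0.90],  # person, kept
    [0, 15, 0.30, 0.00, 0.00, 1.00, 1.00],  # person, but below the cut-off
    [0, 9, 0.95, 0.60, 0.50, 0.90, 1.00],   # another class, ignored
]
buffer = io.StringIO()
write_labels(person_rows("dataset/sitting/img1.jpg", 200, 100, detections), buffer)
print(buffer.getvalue())
```

Emitting one row per detected person is what allows several people in a single image to be labeled in one pass.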

Image training module

The metadata output by the data labeling module are used, along with the images, as the input data of the deep-learning model. Fast-RCNN, which performs well in image analysis, is used as the deep-learning model. The image features extracted for each RoI pass through fully connected layers; a Softmax classifier then identifies the pose class, and a bounding-box regressor refines the location of the box. During training, the model is saved at regular intervals, and the saved model with the lowest loss value is selected as the final model.
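Two details of this module can be sketched in plain Python under stated assumptions. The box refinement below uses the standard R-CNN/Fast-RCNN delta parameterization (center offsets scaled by the proposal size, width and height scaled in log space). The proposal, the deltas, and the step-to-loss values are hypothetical numbers, and the real computation happens inside the TensorFlow model. The checkpoint selection simply mirrors the lowest-loss rule stated above.

```python
import math


def apply_box_deltas(proposal, deltas):
    """Refine a proposal (x_center, y_center, width, height) with regressor output (dx, dy, dw, dh)."""
    px, py, pw, ph = proposal
    dx, dy, dw, dh = deltas
    return (px + pw * dx,       # shift the center by a fraction of the box width
            py + ph * dy,       # ... and of the box height
            pw * math.exp(dw),  # scale width and height in log space
            ph * math.exp(dh))


def select_checkpoint(losses):
    """Return the training step whose saved model has the lowest loss."""
    return min(losses, key=losses.get)


print(apply_box_deltas((100.0, 100.0, 50.0, 80.0), (0.1, -0.25, 0.0, 0.0)))
# Hypothetical step -> loss values recorded during training.
print(select_checkpoint({10_000: 0.91, 50_000: 0.42, 100_000: 0.21, 120_000: 0.24}))  # 100000
```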

Detecting module

Pose detecting is performed using the finally selected pose-detection model and a webcam. People are detected in the images captured in real time, and their poses are classified by the trained object detection model. An emergency is determined from the pose change of the detected person. Figure 7 shows the overall sequence of the detection module and the emergency detection module.

To detect an emergency, the pose of a person is detected in each real-time image frame captured by the camera, and the detected pose is stored together with the frame's input time. An emergency is then determined from the pose change between the previous and the current image frame and from the time difference between the frames in which the change occurred.

Emergency detecting module

In this study, the speed between frames was calculated, focusing on pose changes of the tracking target from standing to lying down and from sitting to lying down. When one of these pose changes (standing→lying down or sitting→lying down) occurs and the time difference between the frames is less than a specific threshold, the situation is judged to be an emergency, such as a fall or collapse, and an emergency warning message is transmitted. For the emergency notification, an SMS message is sent to the caregiver's mobile phone. Figure 8 shows an example of sending a warning message to the caregiver, and Figure 9 shows an example of the warning message sent by SMS.
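The frame-by-frame logic of the detecting and emergency detecting modules can be sketched as a small tracker. It is a hedged illustration, not the authors' implementation: a frame in which no pose is recognized is represented by `None`, the time-difference threshold `max_seconds` is a hypothetical parameter, and sending the SMS is left out because the text does not name the messaging API.

```python
# Emergency pose changes from this section: (previous pose, new pose).
EMERGENCY_CHANGES = {("standing", "lying"), ("sitting", "lying")}


class PoseChangeTracker:
    """Keeps the last recognized pose and flags emergency pose changes that happen quickly.

    Frames without a recognized pose (None), e.g. intermediate poses that were not
    learned, are skipped, so the measured time difference spans them.
    """

    def __init__(self, max_seconds):
        self.max_seconds = max_seconds  # hypothetical time-difference threshold
        self.last_pose = None
        self.last_time = None

    def update(self, pose, timestamp):
        """Process one frame; return True if an emergency warning should be sent."""
        if pose is None:
            return False
        emergency = False
        if self.last_pose is not None and pose != self.last_pose:
            dt = timestamp - self.last_time
            emergency = (self.last_pose, pose) in EMERGENCY_CHANGES and dt < self.max_seconds
        self.last_pose, self.last_time = pose, timestamp
        return emergency


tracker = PoseChangeTracker(max_seconds=1.0)
frames = [("standing", 0.0), ("standing", 0.1), (None, 0.2), (None, 0.3), ("lying", 0.4), ("lying", 0.5)]
print([tracker.update(pose, t) for pose, t in frames])
# [False, False, False, False, True, False]
```

Updating the stored time on every recognized frame means the time difference is measured from the last frame that still showed the old pose, so a long period of standing does not make a sudden fall look slow.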

4 Experiment

In this section, the pose detection accuracy and the emergency detection rate of the elderly behavior emergency detection system were measured while the angle of the installed webcam and the distance between the webcam and the elderly person living alone were varied. The experiment was conducted in a studio-type room of approximately 26 m², similar to the residential environment of the elderly living alone.

In this experiment, the recognition accuracy of the pose detection module, which is used to detect the emergency situations of the elderly living alone, was measured for different camera installation angles and camera-to-subject distances. First, to create the pose detection training model used as the pose detection module, a training set was built by collecting 350 images for each pose with Extreme Picture Finder. The model was trained on this set using the Fast-RCNN of the TensorFlow Object Detection API, following the procedure presented in Section 3. The model with the lowest loss value was selected, and an experiment to verify its performance was conducted.

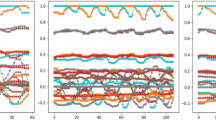

The pose detection training model used in the experiment performed best at approximately 100,000 training steps, as shown in Fig. 10, and training took four hours. To measure the pose detection accuracy of the model, detection was tested at different webcam angles and camera-to-subject distances. For the three poses of standing, sitting, and lying down, five test subjects took each pose. Table 1 shows the experimental conditions. Nine experimental conditions were prepared from three camera distances (1.0–1.5 m, 1.5–3.0 m, and 3.0–4.5 m) and three camera angles (top, front, and side), at positions that could show the entire room.

For each condition, each test subject performed 20 tests per pose, giving 100 tests per pose across the five subjects. The object detection accuracy of the pose detection training model was then determined from the number of correct pose detections under each experimental condition (Fig. 11).

The pose detection accuracy of the model for each pose is shown in Table 1. In most cases, the poses were recognized correctly, as shown in Fig. 12. At the close distance of 1.0–1.5 m, however, the pose recognition rates were relatively low, because only part of the body was captured in the image, as shown in Fig. 13, and detection failures or false detections of other poses occurred. At distances greater than 1.5 m, detection success rates of about 90% or more were observed regardless of the camera angle. This experiment verified the applicability of the elderly behavior emergency detection system. The detection conditions were strongly affected by the distance but not by the camera angle; the detection success rate was high at camera distances of 2–3 m or more, where the entire body could be captured. For the lying-down pose, the detection success rate was somewhat lower than for standing and sitting.

An emergency detection experiment was then conducted using the pose detection training model. The performance of the proposed system was verified under the optimal detection conditions by staging emergency situations based on the study of Erik et al. [29].

Under the optimal pose detection conditions, with the camera installed at the front and 2–3 m from the elderly person living alone, two types of fall, from sitting to lying down and from standing to lying down, were set as emergency types, as shown in Table 2. The speed threshold was set to 2.5 m/s, the speed at which a fall can cause fatal injuries to the elderly living alone. For a person 150–180 cm tall to reach a falling speed of 2.5 m/s over a vertical falling distance of 130–150 cm, the person has to fall within a short period of 0.2 s. For Emergency 1, a lower threshold of 1.5 m/s was set because it starts from a sitting pose [17]. An experiment to verify emergency detection was conducted for the two emergency types by staging falls, as shown in Fig. 13. The five test subjects each staged 20 falls for each of the two emergency types in Table 2, for a total of 100 falls per type; every fall was detected as an emergency, and the emergency warning was transmitted.
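The detector measures movement in pixels, whereas the thresholds above are in metres per second, so a scale factor is needed to compare them. The sketch below assumes a hypothetical calibration constant, `pixels_per_metre`, for the 2–3 m front-camera setup, and it treats the person as moving roughly in a plane at a constant distance from the camera; the text does not state how the conversion was done. The two thresholds are the values given above.

```python
# Thresholds from the experiment: 2.5 m/s for standing -> lying and 1.5 m/s for sitting -> lying.
THRESHOLDS_M_PER_S = {("standing", "lying"): 2.5, ("sitting", "lying"): 1.5}


def metric_speed(displacement_px, pixels_per_metre, dt_seconds):
    """Convert a pixel displacement over dt_seconds into metres per second."""
    return displacement_px / pixels_per_metre / dt_seconds


def exceeds_threshold(prev_pose, curr_pose, speed_m_per_s):
    """True if the pose change is an emergency type and its speed exceeds that type's threshold."""
    threshold = THRESHOLDS_M_PER_S.get((prev_pose, curr_pose))
    return threshold is not None and speed_m_per_s > threshold


# Hypothetical numbers: 120 px in 0.25 s with a calibration of 160 px per metre.
v = metric_speed(120, 160, 0.25)
print(v)                                           # 3.0
print(exceeds_threshold("standing", "lying", v))   # True
print(exceeds_threshold("sitting", "lying", 1.2))  # False
```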

5 Conclusion

This study proposed a procedure and a system that detect emergency situations in the behavior of the elderly using a low-cost device and open-source software. For the three poses of sitting, standing, and lying down, 350 images per pose were used to train a Fast R-CNN model. The result was a deep-learning model that correctly distinguished the poses in more than 90% of cases. A system was also implemented that measures the speed of the detected person's pose change from standing to lying down or from sitting to lying down, and raises an alarm when the speed exceeds a predetermined threshold. To verify the performance of the proposed system, nine experimental conditions were prepared by varying the angle and distance of the camera. The tests took place in a room similar to the residential environment of the elderly living alone (a 26 m² studio). Across the nine conditions, detection success rates varied little with camera angle but differed significantly with camera distance. Detection success was high when the whole body, rather than only part of it, occupied a large proportion of the captured frame.

Previous approaches to managing the safety of the elderly and preventing lonely deaths were costly because they relied on many sensors and wireless devices. By contrast, the image-based safety management proposed in this study is inexpensive because it uses a low-cost webcam and open-source software. Beyond behavior pattern analysis and emergency notification for the elderly, the proposed system is expected to support the analysis of other behavior patterns, such as the sudden abnormal behavior of people with disabilities and the behavior of children.

This study, however, used only three poses and did not consider situations in different spaces. Furthermore, the speed measurement did not account for changes in speed during motion. Future work should extend the system by training on both human poses and household objects, such as furniture and home appliances, so that activities such as eating, watching TV, cooking, and exercising can be detected. In addition, the speed threshold for determining an emergency should be set according to the characteristics of the target person rather than arbitrarily.

References

Allen JG, Xu RYD, Jin JS (2004) Object tracking using camshift algorithm and multiple quantized feature spaces. In: Proceedings of the Pan-Sydney Area Workshop on Visual Information Processing. Australian Computer Society, pp 3–7

Anderson D, Luke RH, Keller J, Skubic M, Rantz M, Aud M (2009) Linguistic summarization of activities from video for fall detection using voxel person and fuzzy logic. Comput Vis Image Underst 113(1):80–89

Bourke AK, O’Brien JV, Lyons GM (2007) Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait Posture 26(2):194–199

Canny J (1986) A computational approach to edge detection. IEEE Trans Pattern Anal Mach Intell 8(6):679–698

Cho W-D et al (2014) Life log big data-based lifestyle analysis and wellness prediction using IoT care service system. Journal of The Korean Institute of Communication Sciences 35(12):17–24

Choi K-S, Chun J-C (2015) Development of mobility and vitality signal monitoring system based on ZigBee-PSTN gateway for the elderly. The Journal of Korea Institute of Information, Electronics, and Communication Technology 9(1):9–14

Chung W-Y et al (2008) A wireless sensor network compatible wearable U-healthcare monitoring system using integrated ECG, accelerometer and SpO2. In: 30th Annual International IEEE EMBS Conference, Vancouver, British Columbia

Cutler DM, Poterba JM, Sheiner LM, Summers LH, Akerlof GA (1990) An aging society: opportunity or challenge. Brook Pap Econ Act 1:1–73

Elgammal A et al (2000) Non-parametric model for background subtraction. In: ECCV 2000, LNCS 1843, pp 751–767

Fougère M, Mérette M (1999) Population ageing and economic growth in seven OECD countries. Econ Model 16(3):411–427

Girshick R (2015) Fast R-CNN. arXiv preprint arXiv:1504.08083

Gkioxari G, Girshick R, Malik J (2015) Contextual action recognition with R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision, pp 1080–1088

Jang I-H et al (2007) Ring-type heart rate sensor and monitoring system for sensor network application. Journal of Korean Institute of Intelligent Systems 17(5)

Kim N (2010) Development of an emergency monitoring device in a wrist watch. Communications of the Korean Institute of Information Scientists and Engineers 8(4)

Kim TH, Ko ZK (2013) Problems and improvement devices of normative system on welfare of the aged act according to the aging society. Inha Law Review The Institute of Legal Studies Inha University 16(1):167–198

Kim YS, Lee CM, Namgung SJ, Kim HG (2011) A study on the social networks effectiveness to prevent the lonely death of the elderly who live alone. Soc Sci Res 50(2):143–169

Lee TK et al (2016) A basic study on human injuries according to slip velocity during falling. In: Proceedings of the Korean Society of Precision Engineering Conference, pp 217–218

Mastorakis G, Makris D (2012) Fall detection system using Kinect’s infrared sensor. Journal of Real-Time Image Processing

Mehta R, Kale S, Utage AS (2017) The internet of things (IoT) intelligence computing technology for home automation. International Journal of Current Engineering and Technology

Mingwu R, Han S (2005) A practical method for moving target detection under complex background. Computer Engineering, pp 33–34

Otto C et al (2006) System architecture of a wireless body area sensor network for ubiquitous health monitoring. Journal of Mobile Multimedia 1(4):307–326

Paradiso R et al (2005) WEALTHY – a wearable healthcare system: new frontier on e-textile. Journal of Telecommunications and Information Technology 4/2005

Pfister T, Charles J, Zisserman A (2015) Flowing convnets for human pose estimation in videos. In: Proceedings of the IEEE International Conference on Computer Vision, pp 1913–1921

Planinc R, Kampel M (2012) Robust fall detection by combining 3D data and fuzzy logic. In: ACCV Workshop on Color Depth Fusion in Computer Vision, pp 121–13

Rogez G, Weinzaepfel P, Schmid C (2018) LCR-Net++: multi-person 2D and 3D pose detection in natural images. arXiv preprint arXiv:1803.00455

Rougier C, Auvinet E, Rousseau J, Mignotte M, Meunier J (2011) Fall detection from depth map video sequences. In: International Conference on Smart Homes and Health Telematics, pp 121–128

Schneider S, Taylor GW, Kremer SC (2018) Deep learning object detection methods for ecological camera trap data. arXiv preprint arXiv:1803.10842

Sonka M, Hlavac V, Boyle R (2003) Image processing, analysis, and machine vision (second edition). Posts & Telecom Press, Beijing

Stone EE, Skubic M (2015) Fall detection in homes of older adults using the Microsoft Kinect. IEEE Journal of Biomedical and Health Informatics 19(1):290–301

Yang Y (2013) Articulated human pose estimation with flexible mixtures of parts. University of California, Irvine

Yunchu Z, Zize L, En L, Min T (2006) A background reconstruction algorithm based on C-means clustering for video surveillance. Computer Engineering and Application, pp 45–47

Zeng A, Yu KT, Song S, Suo D, Walker E, Rodriguez A, Xiao J (2017) Multi-view self-supervised deep learning for 6D pose estimation in the Amazon Picking Challenge. In: 2017 IEEE International Conference on Robotics and Automation (ICRA), pp 1386–1393

Zhan C et al (2007) An improved moving object detection algorithm based on frame difference and edge detection. In: Fourth International Conference on Image and Graphics (ICIG 2007). IEEE, pp 519–523

Zhu L, Wang R, Wang Z, Yang H (2017) TagCare: using RFIDs to monitor the status of the elderly living alone. IEEE Access 5:11364–11373

Acknowledgements

This work was supported by the Ministry of Land, Infrastructure and Transport in Korea (17CTAP-C114867-02).


Cite this article

Youm, S., Kim, C., Choi, S. et al. Development of a methodology to predict and monitor emergency situations of the elderly based on object detection. Multimed Tools Appl 78, 5427–5444 (2019). https://doi.org/10.1007/s11042-018-6660-7
