Abstract

Facial expression recognition (FER) is one of the most active areas of research in computer science, due to its importance in a large number of application domains. Over the years, a great number of FER systems have been implemented, each surpassing the other in terms of classification accuracy. However, one major weakness found in the previous studies is that they have all used standard datasets for their evaluations and comparisons. Though this serves well given the needs of a fair comparison with existing systems, it is argued that this does not go in hand with the fact that these systems are built with a hope of eventually being used in the real-world. It is because these datasets assume a predefined camera setup, consist of mostly posed expressions collected in a controlled setting, using fixed background and static ambient settings, and having low variations in the face size and camera angles, which is not the case in a dynamic real-world. The contributions of this work are two-fold: firstly, using numerous online resources and also our own setup, we have collected a rich FER dataset keeping in mind the above mentioned problems. Secondly, we have chosen eleven state-of-the-art FER systems, implemented them and performed a rigorous evaluation of these systems using our dataset. The results confirm our hypothesis that even the most accurate existing FER systems are not ready to face the challenges of a dynamic real-world. We hope that our dataset would become a benchmark to assess the real-life performance of future FER systems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Knowledge about people’s emotions can serve as an important context for automatic service delivery in a large number of context-aware systems. Many research applications of image processing and pattern recognition, such as human computer interaction [3], robot control and driver state surveillance [49], and human behavior studies in telemedicine and e-health environments [24], can benefit from the knowledge of people’s emotions. Hence, human facial expression recognition (FER) has emerged as an important research area over the last two decades.

Facial expression recognition can be classified into two categories: First is pose-based FER [28, 47], which deals with recognizing artificial expressions: expressions produced by people when they are asked to do so [5]. The second is spontaneous FER [4, 50], which deals with the expressions that people give out spontaneously, and these are the ones that can be observed on a day-to-day basis, such as during conversations or while watching movies [5].

A typical FER system consists of four main sub-components: preprocessing, feature extraction, feature selection, and recognition modules. In preprocessing, the image quality is improved, and the faces are located in the expressions frames before recognizing the expressions. Feature extraction deals with extracting the distinguishable features from each facial expression shape and quantizing them as discrete symbols. Feature selection is used for selecting a subset of relevant features from a large number of features extracted from the input data. Finally, in recognition, a classifier is first trained using the training data, which is then used to generate labels for the expressions in the incoming video data [37].

A great deal of research effort has gone into designing efficient and accurate FER systems in the past, and a variety of techniques for each component has been proposed [1, 16–18, 31, 33], which will be discussed later. One major weakness with almost all of the state-of-the-art approaches in FER, including our own system [36], is the way those systems have been evaluated. Every FER system is designed with a motivation to be used in a real-life scenario; however, when it comes to testing and validating the recognition performance of these systems, standard datasets are employed for both training and testing. Though it serves well for the sake of comparison with existing approaches, which were also tested using the same datasets, such results cannot be used as a representative of an FER system’s performance in real-life. It is because almost all of these datasets were collected using specific kinds of video cameras, which might not be the case in real world. Furthermore, a majority of these datasets was collected in controlled environments under constant ambient settings and did not take into account the color features and factors such as gender, race, and age. Some of the previous datasets did not consider whether the subjects wore glasses or if they had a beard. Another important element in FER domain is the size of a subject’s face that, in real life, can vary from person to person. Also, it can differ depending on how far the subject is from the camera. However, in most of the previously used datasets, the face size did not vary much, mainly due to a predefined setup of the cameras. All previous datasets were collected either indoors or outdoor under static scenarios. In most of the datasets, the expressions were recorded mainly from the frontal view of the subjects, with only a slight variation, which might not be the case in a dynamic real world.

In short, existing FER systems utilized publicly available datasets and did not consider the real world challenges in their respective systems. Since the beginning of research in FER, the focus has been on designing new and improved methodologies, and evaluating them using publicly controlled-settings datasets for the sake of a fair comparison. Little or no effort has been put into designing a new dataset that is closer to real-life situations, probably because creating such a dataset is a very difficult and time consuming task. Accordingly, this work makes the following contributions:

-

We have defined a comprehensive, realistic and innovative dataset collected in-house as well as from online sources, such as YouTube, for real-life evaluation of FER systems. From indoor lab settings to real-life situations, we collected three cases with increasing complexity. In the first case, ordinary subjects performed expressions in a pose-based manner, with dynamic background, lighting and camera settings. Hence, these expressions are pose-based expressions in an uncontrolled environment. In the second case, the expressions were collected from the movie/drama scenes of professional actors and actresses. Though these are also pose-based expression, we had no control on expression production, camera, lighting and background settings. Hence, these expressions are semi-naturalistic expressions under dynamic settings. Finally, in the third case, the expressions were recorded from real world talk shows, news, and interviews. Hence, these expressions are spontaneous expressions collected in natural and dynamic settings. In all three cases, a large number of different subjects of different gender, race, and age were included. Also, many subjects wore glasses and had a beard.

-

From the existing work in FER, more recent and highly accurate FER systems including [1, 16–18, 20, 29–31, 33–35] were selected and implemented.

-

After implementation, all these systems were tested on the three collected datasets, and a detailed analysis of their performance was produced and presented in this paper.

-

Based on the obtained results, components are identified that are crucial to a satisfactory performance of an FER system in real-life situations.

The rest of the paper is organized as follows. Section 2 reviews the existing standard datasets of facial expression and recent published FER systems. Section 3 describes the defined datasets. The experimental setup, results, and discussion are presented in Section 4. Finally, the paper concludes with future directions in Section 5.

2 Related works

2.1 Existing datasets

Table 1 provides a short but thorough review of previously used datasets for evaluating the performance of existing FER studies. We can see that the most of the datasets were collected either indoors or outdoors, in controlled conditions under identical ambient settings with fixed or similar backgrounds. These assumptions can not be held true in the dynamic real world. Furthermore, when recording expression, variations in gender, age, race and color were not taken into account. Even in the studies where multiple subjects were considered, having different age, race, and gender; the face size did not vary much as subjects were at the same distance from the camera. Furthermore, other facial features like wearing glasses, having a beard and keeping different hairstyles were mostly ignored. Finally, in most of the datasets, the expressions were recorded mainly from the frontal view of the subjects, with only a slight variation, which might not be the case in real life.

2.2 Existing FER systems

Similar to Table 1, Table 2 provides a summary of the existing FER systems. Mainly, those techniques are discussed that have shown a high accuracy when evaluated using the existing datasets, and which we were able to implement. For each system, Table 2 provides the methodology (feature extraction, feature selection, and classification), the dataset used for evaluation, and the recognition accuracy achieved on that dataset.

3 Proposed dataset

As stated earlier, the main aim of this research was to collect a unique and comprehensive dataset that any FER system can employ to evaluate its real performance for identifying the desired emotions correctly and efficiently from a variety of subjects from across the globe. When collecting this dataset, limitations of the existing datasets were considered, and a significant amount of time was spent on selecting the most appropriate images with relevant emotions, situation, and surroundings. In total, three sub-datasets were collected: emulated, semi-naturalistic, and naturalistic datasets. Each dataset contains six basic expressions: happy, sad, angry, normal, disgust, and fear. The description of each of these datasets is as follows.

-

Emulated Dataset: Emulated dataset is a mixture of front-faced images collected from the existing pose-based facial expression datasets, and the pose-based expressions collected in-house using our own testbed. For the latter case, 50 subjects (male: 25, female: 25, aged between 20 - 35 years old) were hired to perform each of the six targeted expressions. For each expression, we collected over 165 images in our lab under varying ambient settings and changing background. The images used in this dataset are of the size 240 × 320 and 320 × 240 pixels. Six sample images from this dataset are shown in Fig. 1.

-

Semi-naturalistic Dataset: To construct this dataset, we downloaded and thoroughly watched hundreds of online available movies, videos, and shows from various sources including YouTube, Dailymotion, and other online available media sources. The selection of source videos was made such that the subjects in them are from across the globe (actors and actresses from the Hollywood, Bollywood, and Lollywood). Furthermore, they belong to a variety of ethnicities (Asian, American, African, European, etc.); age groups (4 to 60 years old); gender (male and female); and have varying facial structural properties (such as with/without beard).

Moreover, from each video we chose images that represented real life scenarios and contributed to the benefit of the dataset for evaluation and efficiency. For example, we collected images with different facial orientations, such as frontal, right-sided, left-sided, etc. The videos were in high definition quality, and the images were separately extracted using an image capturing software called GOMPlayer software [10] that is freely available online and captures images in user defined resolution and image quality. The generated images are all in “.jpg” format, whereas the videos were in “.avi” format. Similar to emulated dataset, each expression has over 165 images in this dataset, too. The image size is 240 ×320 and 320 ×240 pixels. Six sample images from this dataset are shown in Fig. 2.

-

Naturalistic Dataset: Unlike other datasets, we collected the naturalistic dataset purely from the talk shows, interviews, and other natural videos (such as news and recordings of real life incidents). Such a source makes this dataset more vibrant and suitable for real life testing of an FER system. To collect this dataset, we went through a tough situation of selecting appropriate emotions and capturing them at the right time, with the right mood. Just like the semi-naturalistic dataset, the subjects in this dataset do not represent a particular community class. They belong to various parts of the world, race, age (10 to 50 years old), and gender. However, unlike semi-naturalistic dataset the subjects in this dataset are not actors and include doctors, patients, politicians, instructors to children, and workers, etc.

Similar to the semi-naturalistic dataset, images in this dataset reflect real life situations. These include a variety of backgrounds, unintentional expressions of the subjects, expressions from different facial orientations, and both indoors and outdoor locations under different ambient settings, etc. Moreover, subjects with/without glasses, open/closed hair, with/without a hat, and other complex scenarios were considered. For each expression, over 165 images were collected. Similar to the other two datasets, the images used in the dataset are of size 240 ×320 and 320 ×240 pixels. Six sample images from the naturalistic dataset are shown in Fig. 3.

The collection of datasets began in September 2014 and finished in February 2015. GOMPlayer software was used for capturing the images from the videos. All images were resized by using Fotosizer software [11] in order to bring a consistency among the expression images. These datasets are made publicly available at (https://github.com/hameedsiddiqui/dataPublic.git) for the research community.

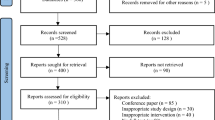

4 Experimental results and discussion

4.1 Experimental setup

The eleven FER techniques, listed in Table 2, were implemented and tested on the collected datasets in a set of two experiments. Each of these experiments was performed in Matlab using an Intel ®; Pentium ®; Dual-Core TM (2.5 GHz) with a RAM capacity of 3 GB. A brief description of the experiments is given below.

-

In the first experiment, we used the 10 −fold cross-validation rule to measure the recognition accuracy of each FER system for the three datasets. In other words, each dataset was divided into ten random subsets. Out of these ten subsets, one subset was used as the validation data, whereas the remaining nine subsets were used as the training data, and this process (training and testing) was repeated ten times, each time picking a new subset as the validation data. The overall process, division into random sets and applying the 10 −fold cross-validation, was repeated 20 times.

-

On the other hand, in the second experiment n−fold cross-validation scheme was applied based on datasets. In other words, from the three datasets, two were used as validation data, whereas the remaining one dataset served as the training data. This process was repeated three times, with data from each dataset used exactly once as the training data.

4.2 Experimental results

Table 3 provides the results (recognition accuracy and standard deviation) obtained by each FER technique in both experiments. It also gives a breakdown of each FER technique concerning its architectural elements.

4.2.1 Overall analysis

It can be seen in Table 3 that in the first experiment majority of the systems showed a good performance (within the range 70 to 90 %) on emulated dataset. Their performance dropped by 10 to 15 % on semi-naturalistic scenarios, and as expected, their performance was dramatically reduced between 20 to 25 % on the naturalistic dataset.

In the second experiment when all the systems were trained using the emulated dataset and tested on naturalistic and semi-naturalistic datasets, the recognition accuracy of each system is much less than their respective accuracies, where all these systems were trained using the same emulated dataset; however, testing was done using the samples from the emulated dataset, too. This shows that an FER system that has achieved very high recognition accuracy for pose-based dataset, collected in controlled settings, cannot be expected to yield the same high accuracy when deployed to be used in the real-world.

The performance of all the systems went further down by 19 % when trained on semi-naturalistic datasets and tested on the emulated and naturalistic datasets; and by 27 % when trained on naturalistic dataset and tested on emulated and semi-naturalistic datasets. This clearly tells us that the FER systems, even the ones that have provided impressive results for the standard datasets, are not yet ready to handle the challenges of a highly dynamic real-life scenario. These challenges include: subjects with different facial features, gender, race, and age; varying lighting conditions; high variations in angle to the camera, difference in size of the face that is related to proximity. These are only some of the factors that can cause misclassification.

4.2.2 Detailed analysis

Among the eleven FER systems implemented and tested in this work, CNF-FER and OLDA-HMM showed better performance on previous datasets, as well as on the proposed datasets. CNF-FER reported the recognition accuracy of 98 % on existing dataset (as indicated in Table 2). As for the proposed datasets, we observed recognition accuracy of about 90 % on emulated, 78 % on semi-naturalistic, and 73 % on naturalistic datasets (as shown in Table 3). We believe that the reported high accuracy and an acceptable performance on the proposed datasets is because CNF-FER employs a feature selection method on top of curvelet transform in the frequency domain. The feature selection is performed using normalized mutual information criteria based on max-relevance and min-redundancy (mRMR) methods, which helps the system in getting rid of unnecessary features and improves the overall feature space. Similarly, OLDA-HMM reported 98 % accuracy on existing datasets (as indicated in Table 2), and gave 91 % on emulated, 80 % on semi-naturalistic, and 72 % on naturalistic datasets (as shown in Table 3). The facial features are very sensitive to noise and illumination changes. OLDA-HMM uses a preprocessing method to minimize such noise. Moreover, it also employs a feature selection method, based on the forward selection and backward regression model, to remove the unnecessary features. It is due to these factors that OLDA-HMM got a high original recognition accuracy, and showed an adequate performance on the proposed datasets, too. Finally, both CNF-FER and OLDA-HMM use a sequence-based classifier, which enables them to use temporal information for a better performance.

On the other hand, LDN-SVM, CLM-SVM, LDP-SVM, and LDPv-SVM showed better performance on emulated and semi-naturalistic datasets (as shown in Table 3). LDN-SVM got 87 % on emulated and 73 % on semi-naturalistic datasets. CLM-SVM got 88 % on emulated and 71 % on semi-naturalistic. LDP-SVM attained 88 % on emulated and 78 % on semi-naturalistic datasets. LDPv-SVM got 81 % on emulated and 70 % on semi-naturalistic datasets. However, the results were not as satisfactory when these methods were applied to the naturalistic dataset. LDN-SVM achieved 64 %, CLM-SVM got 60 %, LDP-SVM attained 67 %, and LDPv-SVM got only 57 % recognition accuracy. It could be because all of these FER systems extract local features. Furthermore, they do not employ the preprocessing step. As a result, the features extracted by these systems get affected by the dynamic backgrounds, changing ambient settings, and other variations that are present in the naturalistic dataset. Finally, all of these systems use frame-based classification, which relies on extracting information from only the current frame.

Next, W-BPNN and LBP-SVM showed better performance only on emulated dataset (as shown in Table 3). This is because these systems are specifically designed for the indoor environment and do not possess the ability to show better performance in outdoor settings. Thus, their performance degraded to a great extent when applied to semi-naturalistic and naturalistic datasets.

Finally, AH-ASM did not show a satisfactory performance on any of the proposed datasets (as shown in Table 3). This is because the system uses active shape model with Haar-like features. Under these settings, some specific intensity values are used that can vary in different scenarios and thus can cause misclassification.

5 Conclusion and future direction

A significant number of very accurate and efficient FER systems have been proposed over the last decade, which have yielded high recognition accuracies when tested on existing standard FER datasets. However, this does not guarantee them displaying the same performance in real-world situations. It is because the existing datasets collected facial expressions under a predefined setup and camera deployment. It is an assumption that cannot hold true in real-life scenarios. Furthermore, these datasets are mostly pose-based and were collected in a controlled environment with constant background and ambient conditions.

Accordingly, in this work, we have compiled a rich FER dataset, which consists of three sub-datasets: emulated, semi-naturalistic, and naturalistic datasets. We put our utmost effort into making sure that the datasets we collected would closely represent the real-world. They consist of a vast number of subjects of different gender, race, and age. Instead of using a fixed settings, the datasets were collected from various situations having different backgrounds, proximity to the camera (it affects the size of the face), camera angles, ambient settings, and ambient noise. Subjects have different facial features, too such as glasses and beard.

Also, we implemented eleven state-of-the-art FER systems and evaluated their performance using our datasets in a set of two experiments. Based on the experimental results we conclude the following.

-

The facial features are very sensitive to noise and changes in ambient settings. These factors can frequently change in the real life. Therefore, it is essential for FER systems to have a preprocessing method to handle such noise to cope with the challenges of the dynamic real world.

-

Several parts of a human face contribute towards expressions making, and extracting features from these parts can help FER systems to classify the expressions accurately. However, relying only on a single type of features won’t suffice in real life situations, and thus, hybrid feature extraction techniques should be explored.

-

Even after proper and efficient feature extraction, there might be some redundancy among the features. Therefore, a feature selection method is advised to select only the most informative features and remove unnecessary features from the feature space.

-

Using a frame-based classification limits FER systems to using only the current frame without any reference image (neutral face image). This results in loss of information, which may cause misclassification. Therefore, it is advised to use sequence-based classification methods that can allow FER systems to use the temporal information to recognize expressions from a set of frames.

Overall, the results showed that even the most accurate existing FER systems are not ready to face the challenges of a dynamic real-world. Thus future research in FER should focus on finding ways to handle the challenges highlighted in this research. It is hoped that the dataset collected in this study would become a useful benchmark for the evaluation of future FER systems.

References

Abidin Z, Alamsyah A (2015) Wavelet based approach for facial expression recognition. Int J Adv Intell Inf 1(1):7–14

Aleix M (1998) Martinez. The ar face database. CVC Technical Report

Bartlett MS, Littlewort G, Fasel I, Movellan JR (2003) Real time face detection and facial expression recognition: Development and applications to human computer interaction. In: Conference on computer vision and pattern recognition workshop, 2003. CVPRW’03, vol 5, pp 53–53. IEEE

Bartlett MS, Littlewort G, Frank M, Lainscsek C, Fasel I, Movellan J (2005) Recognizing facial expression: machine learning and application to spontaneous behavior. In: IEEE Computer society conference on computer vision and pattern recognition, 2005. CVPR 2005, vol 2, pp 568–573. IEEE

Bettadapura V (2012) Face expression recognition and analysis: the state of the art. arXiv:1203.6722

Bioid face db - humanscan ag, switzerland. https://www.bioid.com/About/BioID-Face-Database. Accessed: 2014-12-15

Chen L, Man H, Nefian AV (2005) Face recognition based on multi-class mapping of fisher scores. Pattern Recog 38(6):799–811

Dantcheva A, Chen C, Ross A (2012) Can facial cosmetics affect the matching accuracy of face recognition systems?. In: 2012 IEEE Fifth international conference on biometrics: theory, applications and systems (BTAS), pp 391–398. IEEE

Face recognition and artificial vision group frav2d face database. http://www.frav.es/index.php/en/. Accessed: 2014-12-15

Fotosizer software. http://www.gomlab.com/eng/. Accessed: 2014-08-30

Fotosizer software. http://www.fotosizer.com/Download.aspx. Accessed: 2015-02-20

Garris MD (1994) Design, collection, and analysis of handwriting sample image databases. Encyclop Comput Sci Technol 31(16):189–213

Georghiades AS, Belhumeur PN, Kriegman D (2001) From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans Pattern Anal Mach Intell 23(6):643–660

Grgic M, Delac K, Grgic S (2011) Scface–surveillance cameras face database. Multi Tools Appl 51(3):863–879

Gross R, Matthews I, Cohn J, Kanade T, Baker S (2010) Multi-pie. Image Vis Comput 28(5):807–813

Happy S, Routray A (2015) Automatic facial expression recognition using features of salient facial patches. IEEE Trans Affect Comput 6(1):1–12

Happy SL, Routray A (2015) Robust facial expression classification using shape and appearance features. In: 2015 Eighth International Conference on Advances in Pattern Recognition (ICAPR), pp 1–5. IEEE

Jabid T, Md HK, Chae O (2010) Robust facial expression recognition based on local directional pattern. ETRI J 32(5):784–794

Jain V, Mukherjee A (2002) The indian face database. http://vis-www.cs.umass.edu/-vidit/IndianFaceDatabase

Kabir Md H, Jabid T, Chae O (2012) Local directional pattern variance (ldpv): a robust feature descriptor for facial expression recognition. Int Arab J Inf Technol 9(4):382–391

Kanade T, Cohn JF, Tian Y (2000) Comprehensive database for facial expression analysis. In: Fourth IEEE international conference on automatic face and gesture recognition, 2000. Proceedings, pp 46–53. IEEE

Kang D, Han H, Anil KJ, Lee S-W (2014) Nighttime face recognition at large standoff: Cross-distance and cross-spectral matching. Pattern Recogn 47(12):3750–3766

Kasinski A, Florek A, Schmidt A (2008) The put face database. Image Process Commun 13(3-4):59–64

Lisetti CL, LeRouge C (2004) Affective computing in tele-home health: design science possibilities in recognition of adoption and diffusion issues. In: Proceedings 37th IEEE Hawaii international conference on system sciences, Hawaii, USA

Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I (2010) The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp 94–101. IEEE

Lyons M, Akamatsu S, Kamachi M, Gyoba J (1998) Coding facial expressions with gabor wavelets. In: Third IEEE International Conference on Automatic Face and Gesture Recognition, 1998. Proceedings, pp 200–205. IEEE

Marszalec E, Martinkauppi B, Soriano M, Pietika M et al (2000) Physics-based face database for color research. J Electron Imaging 9(1):32–38

Moore S, Bowden R (2009) The effects of pose on facial expression recognition. In: Proceedings of the British machine vision conference, pp 1–11

Nagaraja S, Prabhakar CJ (2014) Extraction of curvelet based rlbp features for representation of facial expression. In: 2014 international conference on contemporary computing and informatics (IC3I), pp 845–850. IEEE

Qi J, Gao X, He G, Luo Z, Yi W (2015) Multi-layer sparse representation for weighted lbp-patches based facial expression recognition. Sensors 15(3):6719–6739

Rivera AR, Castillo R, Chae O (2013) Local directional number pattern for face analysis: Face and expression recognition. IEEE Trans Image Process 22(5):1740–1752

Samaria FS, Harter AC (1994) Parameterisation of a stochastic model for human face identification. In: Proceedings of the Second IEEE Workshop on Applications of Computer Vision, 1994, pp 138–142. IEEE

Shbib R, Zhou S (2015) Facial expression analysis using active shape model. International Journal of Signal Processing, Image Processing and Pattern Recognition 8 (1):9–22

Siddiqi MH, Ali R, Idris M, Khan AM, Kim ES, Whang MC, Lee S (2016) Human facial expression recognition using curvelet feature extraction and normalized mutual information feature selection. Multimedia Tools Appl 75(2):935–959

Siddiqi MH, Ali R, Khan AM, Kim ES, Kim GJ, Lee S (2015) Facial expression recognition using active contour-based face detection, facial movement-based feature extraction, and non-linear feature selection. Multimedia Systems 21(6):541–555

Siddiqi MH, Ali R, Khan AM, Park Y-T, Lee S (2015) Human facial expression recognition using stepwise linear discriminant analysis and hidden conditional random fields. IEEE Trans Image Process 24(4):1386–1398

Siddiqi MH, Lee S, Lee Y-K, Khan AM, Truc PTH (2013) Hierarchical recognition scheme for human facial expression recognition systems. Sensors 13(12):16682–16713

Sim T, Baker S, Bsat M (2003) The cmu pose, illumination, and expression database. IEEE Trans Pattern Anal Mach Intell 25(12):1615–1618

Singh R, Vatsa M, Bhatt HS, Bharadwaj S, Noore A, Nooreyezdan SS (2010) Plastic surgery: A new dimension to face recognition. IEEE Trans Inf Forensics Secur 5(3):441–448

Somanath G, Rohith MV, Vadana CK (2011) A dense dataset for facial image analysis. In: 2011 IEEE international conference on computer vision workshops (ICCV Workshops), pp 2175–2182. IEEE

Sung K-K, Poggio T (1998) Example-based learning for view-based human face detection. IEEE Trans Pattern Anal Mach Intell 20(1):39–51

The color feret database. http://www.nist.gov/itl/iad/ig/colorferet.cfm. Accessed: 2014-12-15

Thomaz CE, Giraldi GA (2010) A new ranking method for principal components analysis and its application to face image analysis. Image Vis Comput 28(6):902–913

Wang S, Liu Z, Lv S, Lv Y, Wu G, Peng P, Chen F, Wang X (2010) A natural visible and infrared facial expression database for expression recognition and emotion inference. IEEE Trans Multimedia 12(7):682–691

Wolf L, Hassner T, Maoz I (2011) Face recognition in unconstrained videos with matched background similarity. In: 2011 IEEE conference on computer vision and pattern recognition (CVPR), pp 529–534. IEEE

Wolf L, Hassner T, Taigman Y (2011) Effective unconstrained face recognition by combining multiple descriptors and learned background statistics. IEEE Trans Pattern Anal Mach Intell 33(10):1978–1990

Wu X, Zhao J (2010) Curvelet feature extraction for face recognition and facial expression recognition. In: 2010 6th international conference on natural computation (ICNC), vo 3, pp 1212–1216. IEEE

Zhang B, Zhang L, Zhang D, Shen L (2010) Directional binary code with application to polyu near-infrared face database. Pattern Recogn Lett 31(14):2337–2344

Zhang L, Tjondronegoro D (2011) Facial expression recognition using facial movement features. IEEE Trans Affect Comput 2(4):219–229

Zhu Z, Ji Q (2006) Robust real-time face pose and facial expression recovery. In: 2006 IEEE computer society conference on computer vision and pattern recognition, vo 1, pp 681–688. IEEE

Acknowledgments

This research was supported by the MSIP, Korea, under the G-ITRC support program (IITP-2015-R6812-15-0001) supervised by the IITP, and by the Priority Research Centers Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (NRF-2010-0020210).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Siddiqi, M.H., Ali, M., Abdelrahman Eldib, M.E. et al. Evaluating real-life performance of the state-of-the-art in facial expression recognition using a novel YouTube-based datasets. Multimed Tools Appl 77, 917–937 (2018). https://doi.org/10.1007/s11042-016-4321-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-016-4321-2