Abstract

Vehicle detection and type recognition based on static images is highly practical and directly applicable to various operations in a traffic surveillance system. This paper introduces a pipeline for automatic vehicle detection and recognition. First, Haar-like features and the AdaBoost algorithm are used to extract features and construct classifiers that locate the vehicle in the input image. Then, to cope with outside interference in the image and the random position of the vehicle, the Gabor wavelet transform and a local binary pattern operator are used to extract multi-scale and multi-orientation vehicle features. Finally, the image is divided into small regions, from which histogram sequences are extracted and concatenated to represent the vehicle features. Principal component analysis is adopted to obtain a low-dimensional histogram feature, which is used to measure the similarity of different vehicles in Euclidean space, and the nearest-neighbor rule is used for final classification. Experiments show that the detection rate is over 97 % with a false rate of only 3 %, and that the vehicle recognition rate is over 91 %, while processing remains fast. These results show promising potential for real-world applications.

1 Introduction

A traffic surveillance camera system is an important part of an intelligent transportation system [30]. It mainly consists of automatic digital monitoring cameras that take snapshots of passing vehicles and other moving objects, as shown in Fig. 1. The recorded images are high-resolution static images that can provide valuable clues for police and other security departments, such as a vehicle's plate number, the time it passed, its movement path, and the driver's face. In the past, massive amounts of stored images were processed manually, which was laborious and inefficient. With the rapid development of computer technology, automatic license plate recognition software is increasingly deployed in the field, with great success [4]. However, a vehicle sometimes cannot be identified from its license plate because the plate is cloned, missing, or unrecognizable. This is why automatic vehicle detection and recognition is becoming an urgent requirement for traffic surveillance applications [22]. This technology can save users a great deal of time and effort when identifying blacklisted vehicles or searching for specific vehicles in a large surveillance image database [16, 28].

2 Related work

Vehicle detection and recognition is a vital yet challenging task, because vehicle images are distorted and affected by many factors. First, the number of vehicle types keeps rising as new car models are released regularly. Second, some vehicle models are very similar to one another. Third, vehicle images differ significantly because of differences in road environment, weather, illumination, and the cameras used.

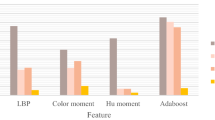

Nowadays, most published research focuses on classifying vehicles into broad categories, such as motorbikes, cars, buses, or trucks [5, 17, 23], but this does not provide enough functionality to meet users' demands. Some researchers studied vehicle logo detection and recognition in frontal vehicle images to reveal the vehicle's manufacturer [19, 21]. Recently, some researchers have adopted feature extraction and machine learning algorithms to classify vehicles into precise classes. For vehicle recognition, Munroe used Canny edges as features and tested three classifiers, k-NN, a neural network, and a decision tree [13]; the data set was composed of 5 classes with 30 samples each. In Clady's method [3], Sobel edges are extracted and oriented-contour points are then obtained; the training image set contains 50 classes, each comprising 291 frontal-view images, and the final correct recognition rate is about 93 %. Petrovic and Cootes investigated feature representations for a rigid-structure recognition framework that automatically identifies vehicle types, with recognition rates of over 93 % [18]. P. Negri et al. developed an oriented-contour point-based voting algorithm to represent vehicle types for multi-class vehicle type identification, which is robust to partial occlusion and lighting changes [14]. F. M. Kazemi investigated transform-based image features, namely wavelet transforms, fast Fourier transforms, and discrete curvelet transforms, for classifying five vehicle models [10]. B. L. Zhang first studied two feature extraction methods for image description, the wavelet transform and the Pyramid Histogram of Oriented Gradients, and then proposed a reliable classification scheme for vehicle type recognition using cascade classifier ensembles [27]. M. A. Hannan introduced automatic vehicle classification for traffic monitoring using image processing; the technique uses a fast neural network (FNN) as the primary classifier and a classical neural network as the final classifier to achieve high classification performance [8].

In recent years, computer vision and pattern recognition have made great progress in image feature description and recognition, especially in face recognition [6]. Face recognition remains an active research topic in image processing and computer vision and has yielded many useful and effective methods and algorithms [11, 24]. Vehicle recognition is very similar to face recognition: each face consists of the same components, such as eyes, mouth, and nose, and each vehicle front consists of the same components, such as lights, bumper, and windscreen. Building on current, highly effective face recognition methods, this paper proposes an integrated vehicle detection and classification system. The first part of the paper concentrates on vehicle detection. To detect a vehicle in a static image, almost all researchers use the license plate location to extract the vehicle area from the image [20, 29]. However, this technique is not valid for vehicles whose front license plates are not symmetrically placed, and the resulting vehicle contour may not be accurate enough for some types of vehicles, particularly those that are larger or smaller than average.

First, this paper proposes a robust vehicle detection scheme based on the AdaBoost algorithm. The basic idea, which differs from previous research on vehicle detection in static images, is to extract Haar-like features from vehicle samples and then use the AdaBoost algorithm to train classifiers for detection. The second part of this paper concentrates on vehicle recognition, also called vehicle type classification. Because vehicle images are affected by their environment and the vehicle position can vary, a Gabor wavelet transform and a local binary pattern (LBP) operator are used to extract multi-scale and multi-orientation vehicle features; then, principal component analysis (PCA) is used to reduce the feature vector dimensions; finally, a Euclidean distance comparison is used to measure the similarity of the lower-dimensional vectors and determine the vehicle type.

3 Vehicle detection and recognition principle

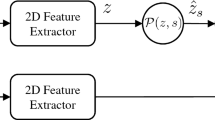

The proposed method has two stages: vehicle detection (localization) and vehicle recognition. First, a classifier is trained with the AdaBoost algorithm on Haar-like features [15] to detect vehicles in the input image and find the region of interest (ROI) used for recognition. Then, a PCA-based classifier is trained on samples of different vehicle types for recognition, as illustrated in Fig. 2.

4 Vehicle detection

4.1 Haar-like feature

The Haar-like feature is a well-known local texture descriptor of object appearance [2] and has been used successfully in object detection and classification. The value of a standard Haar-like feature is calculated by subtracting the sum of the pixel values in the white region from the sum of the pixel values in the black region, as shown in Fig. 3. By changing the position, size, or scale of the Haar-like template, object feature information such as intensity gradients, edges, or contours can be captured. As Fig. 3 shows, the vehicle image contains salient rectangular, contour, and edge structures, which Haar-like features are especially suitable for describing. Moreover, Haar-like feature values are easy to calculate using an integral image [12].
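The integral-image trick can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: `integral_image`, `rect_sum` and `haar_edge_feature` are hypothetical names, and the two-rectangle layout (black left half, white right half) is only one example of the templates in Fig. 3. Any rectangle sum costs four table lookups regardless of its size, which is why Haar-like features are cheap to evaluate at every window position.

```python
from typing import List


def integral_image(img: List[List[int]]) -> List[List[int]]:
    """(h+1) x (w+1) summed-area table with a zero first row and column:
    ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_edge_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Hypothetical two-rectangle edge feature: left half black, right half white.
    Value = sum(black) - sum(white), as described in the text."""
    half = w // 2
    black = rect_sum(ii, x, y, half, h)
    white = rect_sum(ii, x + half, y, half, h)
    return black - white
```

For a 2 × 4 patch whose left half is bright (9) and right half dark (1), the feature value is 36 − 4 = 32.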

4.2 AdaBoost algorithm

The AdaBoost algorithm [25] uses the features to find the best weak classifiers and combines them into a strong classifier; it has been shown to improve the performance of various detection and classification applications. The strong classifier is an ensemble of many weak classifiers, each of which is only slightly better than random guessing. The AdaBoost algorithm can be described as follows:

A. Assume that the training samples are \( (x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n) \), where \( y_i = 1 \) denotes a positive sample (vehicle), \( y_i = 0 \) denotes a negative sample (non-vehicle), and n is the number of samples.

B. Initialize the weights \( w_{1,i} = D(i) \).

C. For \( t = 1, 2, \ldots, T \):

(1). Normalize the weights: \( q_{t,i}=\frac{w_{t,i}}{\sum_{j=1}^{n}w_{t,j}} \)

(2). For each feature f, train a weak classifier \( h(x,f,p,\theta) \) and compute its weighted error \( \varepsilon_f=\sum_{i}q_{t,i}\left|h(x_i,f,p,\theta)-y_i\right| \). The weak classifier is defined as \( h(x,f,p,\theta)=\begin{cases}1 & \text{if } p f(x)<p\theta\\ 0 & \text{otherwise}\end{cases} \), where f(x) is the feature value, θ is a threshold, and \( p\in\{+1,-1\} \) is a polarity that sets the direction of the inequality.

(3). Choose the weak classifier \( h_t(x) \) with the lowest error \( \varepsilon_t=\min_{f,p,\theta}\sum_{i}q_{t,i}\left|h(x_i,f,p,\theta)-y_i\right| \).

(4). Update the weights: \( w_{t+1,i}=w_{t,i}\beta_t^{1-e_i} \), where \( e_i=0 \) if \( x_i \) is classified correctly, \( e_i=1 \) otherwise, and \( \beta_t=\frac{\varepsilon_t}{1-\varepsilon_t} \).

D. The final strong classifier is \( H(x)=\begin{cases}1 & \text{if } \sum_{t=1}^{T}\alpha_t h_t(x)\ge\frac{1}{2}\sum_{t=1}^{T}\alpha_t\\ 0 & \text{otherwise}\end{cases} \), where \( \alpha_t=\log\frac{1}{\beta_t} \).
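Steps A to D can be sketched in pure Python with single-feature decision stumps as the weak classifiers. This is a simplified illustration under stated assumptions, not the paper's training code: each sample is given as a vector of precomputed Haar-like feature values, the initial distribution D(i) is taken as uniform, and candidate thresholds θ are the observed feature values. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Stump = Tuple[int, int, float]  # (feature index f, polarity p, threshold theta)


def stump_predict(value: float, p: int, theta: float) -> int:
    """Weak classifier h(x, f, p, theta): 1 if p*f(x) < p*theta, else 0."""
    return 1 if p * value < p * theta else 0


def train_adaboost(X: Sequence[Sequence[float]], y: Sequence[int], T: int):
    n, n_features = len(X), len(X[0])
    w = [1.0 / n] * n                      # step B: uniform D(i) (assumption)
    stumps: List[Stump] = []
    alphas: List[float] = []
    for _ in range(T):                     # step C
        total = sum(w)
        q = [wi / total for wi in w]       # C(1): normalise the weights
        best = None
        for f in range(n_features):        # C(2): weighted error of every stump
            for theta in sorted({x[f] for x in X}):
                for p in (1, -1):
                    err = sum(qi for xi, yi, qi in zip(X, y, q)
                              if stump_predict(xi[f], p, theta) != yi)
                    if best is None or err < best[0]:
                        best = (err, f, p, theta)
        err, f, p, theta = best            # C(3): lowest-error weak classifier
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep beta finite and non-zero
        beta = err / (1.0 - err)
        # C(4): w_{t+1,i} = w_{t,i} * beta^(1 - e_i); e_i = 0 when x_i is correct,
        # so correctly classified samples are down-weighted by beta.
        w = [wi * (beta if stump_predict(xi[f], p, theta) == yi else 1.0)
             for xi, yi, wi in zip(X, y, w)]
        stumps.append((f, p, theta))
        alphas.append(math.log(1.0 / beta))
    return stumps, alphas


def strong_classify(x: Sequence[float], stumps: List[Stump], alphas: List[float]) -> int:
    """Step D: weighted vote of the weak classifiers against half the total weight."""
    score = sum(a * stump_predict(x[f], p, th) for (f, p, th), a in zip(stumps, alphas))
    return 1 if score >= 0.5 * sum(alphas) else 0
```

The exhaustive threshold search is quadratic in the number of samples and is only meant to mirror the steps literally; practical trainers sort each feature once and sweep the thresholds.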

4.3 Vehicle detecting

The strong classifier detects vehicles by sliding a sub-window across the image at all locations with a fixed step. To reduce the detection time, the detector itself is scaled rather than the image. The initial size of the classifier is 15 × 15, the sub-window is enlarged by a factor of 1.2 at each scale, and the translation step is 2 pixels, as shown in Fig. 4. Because the same vehicle may be detected two or more times, neighbouring detections are merged into a single target object.
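The scan described above can be sketched as a generator of candidate windows plus a simple merging step. Assumptions: square windows, a translation step that stays at 2 pixels at every scale, and a greedy overlap-based grouping with a hypothetical intersection-over-union threshold of 0.3. The paper does not specify its merging rule; OpenCV's `cv2.groupRectangles` is one library alternative. All function names are hypothetical.

```python
from typing import Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height)


def window_sizes(img_w: int, img_h: int, base: int = 15, scale: float = 1.2) -> List[int]:
    """Detector sizes 15, 18, 21, ... grown by `scale` until they no longer fit."""
    sizes, s = [], float(base)
    while int(s) <= min(img_w, img_h):
        sizes.append(int(s))
        s *= scale
    return sizes


def sliding_windows(img_w: int, img_h: int, base: int = 15,
                    scale: float = 1.2, step: int = 2) -> Iterator[Box]:
    """Yield every sub-window; the detector is rescaled, the image is not."""
    for size in window_sizes(img_w, img_h, base, scale):
        for y in range(0, img_h - size + 1, step):
            for x in range(0, img_w - size + 1, step):
                yield (x, y, size, size)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix = max(0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def merge_detections(boxes: List[Box], thresh: float = 0.3) -> List[Box]:
    """Greedily group boxes overlapping a group's first box; return each group's mean box."""
    groups: List[List[Box]] = []
    for b in boxes:
        for g in groups:
            if iou(g[0], b) > thresh:
                g.append(b)
                break
        else:
            groups.append([b])
    return [tuple(round(sum(b[k] for b in g) / len(g)) for k in range(4)) for g in groups]
```

Each window would be passed to the strong classifier, and the windows it accepts would then go through `merge_detections`.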

5 Vehicle recognition

The vehicle image is described with a model called the Local Gabor Binary Pattern Histogram Sequence [9], illustrated in Fig. 5. The approach consists of the following procedures: (1) collecting vehicle images of the same type as input samples and transforming the average image of each vehicle type into Gabor magnitude pictures in the frequency domain using Gabor wavelet filters; (2) extracting the LBP of each Gabor magnitude picture; (3) dividing each LBP picture into rectangular regions \( R_0, R_1, \ldots, R_{m-1} \) and computing the histogram of each region; (4) concatenating the histograms of all regions into the final histogram sequence, which represents the original vehicle image; (5) measuring vehicle similarity with the histogram feature vectors after dimension reduction via PCA. These procedures are described in detail in the following sub-sections.

5.1 Gabor wavelet transform

Gabor wavelet filters have been widely used in face recognition since the early days of the field. Considering the advantages of Gabor filters in object recognition [31], we adopt multi-resolution, multi-orientation Gabor filters to process the input vehicle images for subsequent feature extraction. The Gabor wavelet filters are defined as follows [26]:

In formula (1), u and v define the orientation and scale of the Gabor kernels, z = (x, y) is the pixel position, and the wave vector is \( k_{u,v}=k_v e^{i\phi_u} \), where \( k_v=k_{\max}/f^{v} \), with \( k_{\max} \) the maximum frequency and f the spacing factor between kernels in the frequency domain. This approach uses Gabor filters with 5 scales and 8 orientations, so each vehicle image yields a total of 40 Gabor magnitude pictures (GMPs), as shown in Fig. 6.
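For reference, a standard form of the Gabor kernel used in Gabor-based face recognition, consistent with the symbols u, v, z, \( k_{u,v} \), \( k_{\max} \) and f defined above, is the following (an assumption; formula (1) in the paper may differ in detail):

\( \psi_{u,v}(z)=\frac{\lVert k_{u,v}\rVert^{2}}{\sigma^{2}}e^{-\lVert k_{u,v}\rVert^{2}\lVert z\rVert^{2}/(2\sigma^{2})}\left[e^{ik_{u,v}\cdot z}-e^{-\sigma^{2}/2}\right] \)

The sketch below builds the 5 × 8 = 40 kernels in pure Python. The values \( \phi_u=\pi u/8 \), \( k_{\max}=\pi/2 \), \( f=\sqrt{2} \) and \( \sigma=2\pi \) are common choices in that literature and are assumptions here, as is the kernel size. Convolving an image with each kernel and taking the modulus of the complex response gives one Gabor magnitude picture.

```python
import cmath
import math
from typing import Dict, List, Tuple


def gabor_kernel(u: int, v: int, size: int = 31, k_max: float = math.pi / 2,
                 f: float = math.sqrt(2), sigma: float = 2 * math.pi) -> List[List[complex]]:
    """Complex Gabor kernel for orientation u (0..7) and scale v (0..4); parameters are assumed values."""
    k = k_max / f ** v                    # k_v = k_max / f^v
    phi = math.pi * u / 8                 # phi_u = pi * u / 8 (8 orientations)
    kx, ky = k * math.cos(phi), k * math.sin(phi)
    dc = math.exp(-sigma ** 2 / 2)        # DC-compensation term
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Gaussian envelope scaled by ||k||^2 / sigma^2
            envelope = (k * k / sigma ** 2) * math.exp(-k * k * (x * x + y * y) / (2 * sigma ** 2))
            row.append(envelope * (cmath.exp(1j * (kx * x + ky * y)) - dc))
        kernel.append(row)
    return kernel


def gabor_bank(size: int = 31) -> Dict[Tuple[int, int], List[List[complex]]]:
    """All 40 kernels, keyed by (u, v): 8 orientations x 5 scales."""
    return {(u, v): gabor_kernel(u, v, size) for v in range(5) for u in range(8)}
```

At the kernel centre the envelope equals \( k_v^2/\sigma^2 \), which is 1/16 for v = 0 under these parameter choices.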

5.2 Local Gabor binary pattern (LGBP) and histogram sequence

After the Gabor transform, we encode the magnitude values with the LBP operator to enhance the information. In 1993, Ojala introduced the LBP texture operator for 2D texture analysis; the operator was later extended to neighborhoods of different sizes [7]. Using the \( LBP_{8,3} \) operator, the histogram of the labeled image can be defined as follows:

\( H_i=\sum_{x,y}I\{f(x,y)=i\},\quad i=0,1,\ldots,n-1 \)

where n is the number of different labels produced by the LBP operator (at most 256 for an 8-neighbour operator), f(x, y) is the labeled image, and I(A) is an indicator function equal to 1 if event A is true and 0 otherwise.
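A minimal pure-Python sketch of the LBP labelling and of the labelled-image histogram defined above, applied to one integer-valued magnitude map. Simplifying assumptions: the 8 neighbours are sampled on a square at chessboard distance R rather than interpolated on a circle of radius R as in the circular \( LBP_{P,R} \) operator, neighbours greater than or equal to the centre contribute a 1 bit, and pixels closer than R to the border are skipped. The names `lbp_image` and `lbp_histogram` are hypothetical.

```python
from typing import List, Tuple


def _neighbour_offsets(R: int) -> List[Tuple[int, int]]:
    """(dy, dx) of the 8 neighbours, clockwise from top-left, on a square of half-width R."""
    return [(-R, -R), (-R, 0), (-R, R), (0, R), (R, R), (R, 0), (R, -R), (0, -R)]


def lbp_image(img: List[List[int]], R: int = 3) -> List[List[int]]:
    """8-bit LBP label for every pixel at least R pixels from the border."""
    h, w = len(img), len(img[0])
    offsets = _neighbour_offsets(R)
    labels = []
    for y in range(R, h - R):
        row = []
        for x in range(R, w - R):
            centre = img[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                if img[y + dy][x + dx] >= centre:   # threshold neighbour against centre
                    code |= 1 << bit
            row.append(code)
        labels.append(row)
    return labels


def lbp_histogram(labels: List[List[int]], n: int = 256) -> List[int]:
    """H_i = sum over (x, y) of I{f(x, y) = i}, for i = 0..n-1."""
    hist = [0] * n
    for row in labels:
        for label in row:
            hist[label] += 1
    return hist
```

In the 3 × 3 patch `[[1, 9, 1], [9, 5, 1], [1, 1, 1]]` only the top and left neighbours reach the centre value 5, so with R = 1 the centre receives label 2 + 128 = 130.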

To form the LBP histogram sequence, an LBP histogram is computed for each sub-region. The histogram of a sub-region captures the local features of that sub-region, and concatenating the histograms of all sub-regions yields a histogram sequence that represents the global characteristics of the whole image.

For representation efficiency, every LBP-coded magnitude image (LGBP map) is divided into 6 × 6 sub-regions \( R_0, R_1, \ldots, R_{m-1} \) (m = 36), and the 36 sub-region histograms are concatenated into the histogram sequence of that map. In the same way, the histograms computed from the regions of all 40 LGBP maps are concatenated into one large histogram sequence that forms the final vehicle representation, as shown in Fig. 7.
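The region-and-concatenate step can be sketched as follows, assuming each LGBP map is a 2-D list of integer labels in 0..255 and that the 6 × 6 grid uses integer-division boundaries so that every pixel falls in exactly one region. With 40 maps and one bin per 8-bit label (256 bins), the sequence has 40 × 36 × 256 = 368,640 entries. The function names are hypothetical.

```python
from typing import List


def region_histograms(label_map: List[List[int]], grid: int = 6, n_bins: int = 256) -> List[int]:
    """Histograms of the grid x grid regions R_0..R_{m-1} (row-major), concatenated."""
    h, w = len(label_map), len(label_map[0])
    seq: List[int] = []
    for gy in range(grid):
        y0, y1 = gy * h // grid, (gy + 1) * h // grid
        for gx in range(grid):
            x0, x1 = gx * w // grid, (gx + 1) * w // grid
            hist = [0] * n_bins
            for y in range(y0, y1):
                for x in range(x0, x1):
                    hist[label_map[y][x]] += 1
            seq.extend(hist)
    return seq


def lgbp_histogram_sequence(lgbp_maps: List[List[List[int]]], grid: int = 6,
                            n_bins: int = 256) -> List[int]:
    """Concatenate the regional histograms of every LGBP map (40 maps in the paper)."""
    seq: List[int] = []
    for label_map in lgbp_maps:
        seq.extend(region_histograms(label_map, grid, n_bins))
    return seq
```

Keeping the regions in a fixed row-major order matters: two sequences can only be compared bin by bin if region \( R_j \) occupies the same slice in both.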

5.3 Feature dimension reduction using PCA

Finally, the cell-level histograms are concatenated to produce a high-dimensional global descriptor vector. For example, with 40 Gabor wavelets, 255 histogram bins, and a 6 × 6 grid, the descriptor has 6 × 6 × 255 × 40 = 367,200 dimensions. We therefore use simple PCA-based dimensionality reduction [1].

Assume that we have n high-dimensional feature vectors \( x_i \) (\( i = 1, 2, \ldots, n \)) of dimension d, representing a set of sampled vehicle images. PCA finds a low-dimensional subspace whose basis vectors correspond to the directions of maximum variance of the \( x_i \) in the original space. Let m denote the mean vector of the \( x_i \):

\( m=\frac{1}{n}\sum_{i=1}^{n}x_i \)

and let \( w_i \) denote the mean-centered vector:

\( w_i=x_i-m \)

The covariance matrix C is then defined as:

\( C=\frac{1}{n}\sum_{i=1}^{n}w_i w_i^{T}=\frac{1}{n}WW^{T} \)

where \( W=[w_1, w_2, \ldots, w_n] \) is the matrix whose columns are the vectors \( w_i \) placed side by side. The eigenvalues and eigenvectors of C are computed with the singular value decomposition algorithm, and the eigenvectors \( u_i \) are sorted in decreasing order of their eigenvalues \( \lambda_i \). The projection matrix \( M_k \) is formed from the eigenvectors of the k largest eigenvalues:

\( M_k=[u_1, u_2, \ldots, u_k] \)

With \( M_k \), each vector \( x_i \) is projected to the low-dimensional vector \( \Omega=M_k^{T}x_i \). The simplest way to determine which vehicle class best describes an input vehicle image is then to find the vehicle class k that minimizes the Euclidean distance

\( \varepsilon_k=\lVert \Omega-\Omega_k \rVert \)

where \( \Omega_k \) is the vector describing the kth vehicle class. If \( \varepsilon_k \) is less than a predefined threshold, the vehicle is classified as belonging to class k.
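The PCA projection and nearest-neighbour rule can be sketched with NumPy. The sketch follows the PCA procedure described above but makes a few assumptions: feature vectors are stored as the rows of `X`, the eigenvectors of C are obtained as the left singular vectors of W (equivalent, and cheaper when the dimension far exceeds the number of samples), vectors are mean-centred before projection (this shifts every projection by the same amount, so Euclidean distances are unchanged), and each class template \( \Omega_k \) is the projection of that class's mean feature vector. `fit_pca`, `project` and `classify` are hypothetical names.

```python
import numpy as np
from typing import Dict, Optional, Tuple


def fit_pca(X: np.ndarray, k: int) -> Tuple[np.ndarray, np.ndarray]:
    """X has one feature vector x_i per row. Returns the mean m and M_k = [u_1, ..., u_k]."""
    m = X.mean(axis=0)
    W = (X - m).T                                   # columns are the mean-centred w_i
    # Left singular vectors of W are the eigenvectors of C = W W^T / n, returned
    # sorted by decreasing singular value (hence decreasing eigenvalue).
    U, _, _ = np.linalg.svd(W, full_matrices=False)
    return m, U[:, :k]


def project(x: np.ndarray, m: np.ndarray, M_k: np.ndarray) -> np.ndarray:
    """Omega = M_k^T (x - m); centring shifts all projections equally."""
    return M_k.T @ (x - m)


def classify(x: np.ndarray, templates: Dict[str, np.ndarray], m: np.ndarray,
             M_k: np.ndarray, threshold: float = np.inf) -> Optional[str]:
    """Nearest class template by Euclidean distance; None if even the best is above threshold."""
    omega = project(x, m, M_k)
    dists = {label: float(np.linalg.norm(omega - t)) for label, t in templates.items()}
    best = min(dists, key=dists.get)
    return best if dists[best] < threshold else None
```

In the paper's setting, `X` would hold the LGBP histogram sequences of the training images, with one template per vehicle class.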

6 Experimental evaluations

This section presents the vehicle detection and vehicle recognition results separately. All results were obtained on a desktop computer with an Intel Core i7 3.4 GHz CPU, 4 GB RAM and an NVIDIA Quadro 2000 GPU. The software was developed on Windows 7 with Visual Studio 2010 and OpenCV 2.4.3.

The local police department in Maanshan City provided a large collection of vehicle images recorded by traffic surveillance cameras over 1 week. The images were captured between 7:00 and 17:00 under a wide range of illumination conditions. All original images have a resolution above 1600 × 1200 pixels. For more accurate experiments, the vehicle image data set was split randomly into training and testing sets.

6.1 Vehicle detection result

A total of 5,000 positive images were used for classifier training; they were obtained by manually cropping the vehicle areas of images recorded in the traffic surveillance system database. All images were resized to 30 × 30 pixels for training. To obtain sufficient negative samples, we downloaded several image sets from the Internet, yielding more than 15,000 negative samples. In training, the minimum detection rate was set to 99.5 % and the maximum false alarm rate to 50 %. Part of the image set is shown in Fig. 8.

The detection rate (DR), the total detection rate (TDR), and the false alarm rate (FAR) were defined as follows to quantify the vehicle detection results.

P is the number of all positive (vehicle) samples, and N is the number of non-vehicle (negative) samples. TP is the number of vehicle images detected correctly, TN is the number of negative samples correctly rejected, and FP is the number of negative samples wrongly detected as vehicles. The aim of vehicle detection is to achieve high DR and TDR values and a low FAR value. The experimental results are listed in Table 1, and examples of vehicle detection results are shown in Fig. 9.
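Assuming the conventional definitions \( DR=TP/P \), \( TDR=(TP+TN)/(P+N) \) and \( FAR=FP/N \), which the surrounding text implies but does not spell out, the metrics can be computed from raw counts as below. The function name and the example counts are hypothetical and are not taken from Table 1.

```python
from typing import Dict


def detection_metrics(tp: int, tn: int, fp: int, p: int, n: int) -> Dict[str, float]:
    """Assumed definitions: DR = TP/P, TDR = (TP+TN)/(P+N), FAR = FP/N."""
    if fp + tn != n:
        raise ValueError("every negative sample must be counted as TN or FP")
    return {
        "DR": tp / p,                  # fraction of vehicles found
        "TDR": (tp + tn) / (p + n),    # fraction of all samples handled correctly
        "FAR": fp / n,                 # fraction of negatives wrongly accepted
    }
```

With 90 of 100 vehicles found and 5 of 100 negatives accepted, `detection_metrics(90, 95, 5, 100, 100)` gives DR = 0.90, TDR = 0.925 and FAR = 0.05.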

A total of 720 images were tested, and processing them took about 43 s in total, so the average detection time per image is about 60 ms. As Table 1 shows, we achieved a high detection rate and a low false rate, which is better than the previous method. Detection may fail when multiple vehicles occlude one another or when vehicles are only partly inside the image, as demonstrated in Fig. 10. Vehicles that are not segmented successfully are excluded from the classification image sets.

6.2 Vehicle recognition result

A total of 223 images of 8 vehicle classes were selected for testing, including Buick Excellent, Volkswagen Lavida, Volkswagen Santana, Volkswagen Tiguan, Skoda Octavia, Chevrolet Cruze, and Toyota Corolla; all of the vehicles are older-style models. The training sets were collected randomly from the image database of the traffic surveillance system, separately from the test set, as shown in Fig. 11.

For each class, we computed the number of correct recognitions and the recognition rate; the results for all vehicle types are shown in Table 2.

Table 2 shows that the overall recognition rate reaches 91.6 %, and recognizing all 200 images took about 13 s (including image reading time), so the average recognition time per image is less than 300 ms, which indicates high time efficiency.

Unlike some other object detection and recognition fields, notably face recognition, vehicle recognition has no standard benchmark image sets for testing, so it is very difficult to make a fair comparison with other published investigations. In this paper, we therefore ran the experiments on our own image sets; the results can still be compared roughly. According to the available information, recognition rates in other studies generally range from 85 to 96 %, and our result is higher than the average level of current vehicle recognition methods.

7 Conclusion

Accurate and robust vehicle detection and recognition remain challenging tasks in intelligent transportation surveillance systems. In this paper, we presented a cascade of boosted classifiers, based on the characteristics of vehicle images, for vehicle detection in on-road scene images. Haar-like features and the AdaBoost algorithm were used to construct the detection classifier, which distinguishes this work from previous research on vehicle detection. Next, histogram intersection was used to measure the similarity of different LGBP histogram sequences, and the nearest neighbor under Euclidean distance was used for final classification, which is insensitive to appearance variations due to lighting or vehicle pose. We tested the detection method on a realistic data set of over 800 frontal car images and achieved a high accuracy of 97.3 %. More than seven types of vehicles, with 227 images in total, were tested in the recognition experiment; the recognition rate was over 92 % with a fast processing time, which is above the average level of current vehicle recognition methods. However, the images we used were all captured during the day, so our future work will focus on detecting and recognizing vehicles at night, which is a very difficult problem for existing technology.

References

Abdi H, Williams LJ (2010) Principal component analysis[J]. Wiley Interdiscip Rev Comput Stat 2(4):433–459

Bilgic B, Horn BK, Masaki I (2010) Efficient integral image computation on the GPU[C]. Intelligent Vehicles Symposium (IV): 528–533

Clady X, Negri P, Milgram M, Poulenard R (2008) Multi-class vehicle type recognition system[M]. Artificial Neural Networks in Pattern Recognition. Springer Berlin, Heidelberg: 228–239

Du S, Ibrahim M, Shehata M et al (2013) Automatic license plate recognition (ALPR): a state-of-the-art review[J]. IEEE Trans Circ Syst Video Technol 23(2):311–325

Fazli S, Mohammadi S, Rahmani M (2012) Neural network based vehicle classification for intelligent traffic control[J]. Int J Softw Eng Appl 3(3):17–22

Ghimire D (2014) Extreme learning machine ensemble using bagging for facial expression recognition[J]. J Inf Process Syst 10(3):443–458

Guo Z, Zhang L, Zhang D (2010) Rotation invariant texture classification using LBP variance (LBPV) with global matching[J]. Pattern Recogn 43(3):706–719

Hannan MA, Gee CT, Javadi MS (2013) Automatic vehicle classification using fast neural network and classical neural network for traffic monitoring[J]. Turk J Electr Eng Comput Sci

Huang D, Shan C, Ardabilian M et al (2011) Local binary patterns and its application to facial image analysis: a survey[J]. IEEE Trans Syst Man Cybern Part C Appl Rev 41(6):765–781

Kazemi FM, Samadi S, Poorreza HR et al (2007) Vehicle recognition based on Fourier, wavelet and curvelet transforms: a comparative study[C]. IEEE Fourth International Conference on Information Technology: 939–940

Kim H, Lee SH, Sohn MK et al (2014) Illumination invariant head pose estimation using random forests classifier and binary pattern run length matrix[J]. Human-Centric Comput Inf Sci 4(1):1–12

Lienhart R, Maydt J (2002) An extended set of Haar-like features for rapid object detection[C]. IEEE International Conference on Image Processing: 900–903

Munroe DT, Madden MG (2005) Multi-class and single-class classification approaches to vehicle model recognition from images[J]. Proc. AICS

Negri P, Clady X, Milgram M et al (2006) An oriented-contour point based voting algorithm for vehicle type classification[C]. ICPR (1): 574–577

Park KY, Hwang SY (2014) An improved Haar-like feature for efficient object detection[J]. Pattern Recogn Lett 42:148–153

Pearce G, Pears N (2011) Automatic make and model recognition from frontal images of cars[C]. In Proc. 8th IEEE Int. Conf. AVSS, Klagenfurt, Austria: 373–378

Peng Y, Jin JS, Luo S et al (2013) Vehicle type classification using data mining techniques[M]. The Era of Interactive Media. Springer, New York: 325–335

Petrovic V, Cootes TF (2004) Analysis of features for rigid structure vehicle type recognition[C]. BMVC: 1–10

Psyllos AP, Anagnostopoulos CN, Kayafa E (2010) Vehicle logo recognition using a SIFT-based enhanced matching scheme[J]. IEEE Trans Intell Transp Syst 11(2):322–328

Psyllos A, Anagnostopoulos CN, Kayafas E (2011) Vehicle model recognition from frontal view image measurements[J]. Comput Stand Interfaces 33(2):142–151

Sam KT, Tian XL (2012) Vehicle logo recognition using modest adaboost and radial tchebichef moments[J]. Int Proc Comput Sci Inf Technol 25:91–95

Sivaraman S, Trivedi MM (2010) A general active-learning framework for on-road vehicle recognition and tracking[J]. IEEE Trans Intell Transp Syst 11(2):267–276

Sluss J, Cheng S, Wang S, Cui L, Liu D et al (2012) Vehicle identification via sparse representation[J]. IEEE Trans Intell Transp Syst 13(2):955–962

Uddin J, Islam R, Kim JM (2014) Texture feature extraction techniques for fault diagnosis of induction motors[J]. J Convergence 5(2):15–20

Viola P, Jones MJ (2001) Rapid object detection using a boosted cascade of simple features[C]. IEEE Computer Society Conference on Computer Vision and Pattern Recognition: 8–14

Yang M, Zhang L, Shiu SC et al (2013) Gabor feature based robust representation and classification for face recognition with Gabor occlusion dictionary[J]. Pattern Recogn 46(7):1865–1878

Zhang BL (2013) Reliable classification of vehicle types based on cascade classifier ensembles[J]. IEEE Trans Intell Transp Syst 14(1):322–332

Zhang BL (2014) Classification and identification of vehicle type and make by cortex–like image descriptor HMAX[J]. Int J Comput Vision Robot 4(3):195–211

Zhang BL, Zhou Y (2012) Vehicle type and make recognition by combined features and rotation forest ensemble[J]. Int J Pattern Recognit Artif Intell 26(03)

Zhang BL, Zhou Y, Pan H (2013) Vehicle classification with confidence by classified vector quantization[J]. IEEE Intell Transp Syst Mag 5(3):8–20

Zou J, Liu C, Zhang Y et al (2013) Object recognition using Gabor co-occurrence similarity[J]. Pattern Recogn 46(1):434–448

Acknowledgments

This research was supported by the High Academic Qualification Fund of Nanjing Forestry University (GXL201315), China; the Natural Science Fund for Colleges and Universities of Jiangsu Province (14KJB520017); the Natural Science Foundation of Jiangsu Province (BK20130981); and the Science Fund of the State Key Laboratory of Advanced Design and Manufacturing for Vehicle Body (31415008).

Cite this article

Tang, Y., Zhang, C., Gu, R. et al. Vehicle detection and recognition for intelligent traffic surveillance system. Multimed Tools Appl 76, 5817–5832 (2017). https://doi.org/10.1007/s11042-015-2520-x

DOI: https://doi.org/10.1007/s11042-015-2520-x