Two problems associated with distinguishing measurement problem theory from measurement theory are described. The first is the need to harmonize the definition of the term “measurement,” which arises from a basic terminological confusion between measurements, methods, measurement procedures, and methods for solving measurement problems (a confusion also present in the International Vocabulary of Metrology); in essence, this is the problem of distinguishing between measurements and calculations. The second problem arises from the inadequacy of mathematical models representing measurement objects. A solution to these problems is presented in terms of measurement problem theory.


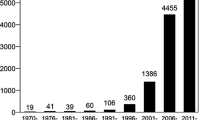

Introduction. The following issues were first considered in the monograph by Yuri Linnik [1]: equal precision direct measurements, unequal direct observations, unconditional indirect measurements, conditional indirect measurements, as well as equalization and interpolation from the perspective of statistical data processing as a separate field of metrology having its own objectives. At that time (1960s), GOST 16263-70, GSI. Metrology. Terms and Definitions, was not even in planning. For random errors, this was the “normal law” era. Therefore, Linnik’s monograph [1] can be regarded as the first fundamental mathematical work on the theory of measurement problems.

Although the Russian word for “measure” [Footnote 1] generally points metrological terminology in the right direction, the authors of GOST 16263-70 adopted a different approach, i.e., the alphabetical index of Russian terms was accompanied by the corresponding alphabetical indexes of German, English, and French terms. In RMG 29-99, GSI. Metrology. Basic Terms and Definitions, direct, indirect, joint, absolute, and relative “measurements,” as well as measurements in a closed series, were complemented with equal-precision, unequal, single, multiple, static, and dynamic measurements. Some ambiguous notes were also added:

5.10 direct measurement: measurement in which the target value of the physical quantity is found directly.

Note. The term direct measurement emerged as the opposite of the term indirect measurement. Strictly speaking, measurement is always direct and viewed as the comparison of a quantity with its unit. In this case, the term direct method of measurement is preferable.

5.11 indirect measurement: determination [Footnote 2] of the target value of a physical quantity drawing on the results of direct measurements of other physical quantities functionally related to the target quantity.

Note. In many cases, the term indirect method of measurement is used instead of the term indirect measurement.

In the early 1990s, V. Kuznetsov commented on the vagueness inherent in the concept of “measurement”: “We have ceased to understand what measurement is.”

However, the intractability of the problem associated with the term “measurement” only became apparent to the compilers of the International Vocabulary of Metrology (VIM3) [3] a decade and a half later in 2007 [4, 5]: “the same term may be used to describe different concepts in the different approaches.” The article [4, 5] ends with a rather pessimistic conclusion: “Trying to create a vocabulary of metrology that harmonizes the language of measurement among the different approaches, and that keeps one term designating only one concept, has presented tremendous challenges in developing VIM3. ... it has in a few cases been necessary to allow two concepts having the same term ... or different terms for the same concept ...”

The following definition gives a better idea of the problem [3]:

2.5 (2.4) measurement method: generic description of a logical organization of operations used in a measurement.

Note. Measurement methods may be qualified in various ways such as:

- substitution measurement method,
- differential measurement method, and
- null measurement method;

or

- direct measurement method and
- indirect measurement method.

However, the present issue consists in the physical difference between measurements and calculations rather than logic. Thus, MI 2222-92, GSI. Types of Measurements. Classification, divides the types of measurements according to the types of physical quantities, stating the necessity “to prevent the inclusion of the same physical quantity to be measured in different types of measurements,” whereas in RMG 29-99, GSI. Metrology. Basic Terms and Definitions, substitution, differential, and zero methods are classified as measurement methods. The terms “direct method of measurement” and “indirect method of measurement” from RMG 29-99 and its revised edition RMG 29-2013 did not become widespread due to the emphasis [Footnote 3] on the “method of measurement.” The unification of measurements, measurement methods, and methods for solving measurement problems has only exacerbated the main problem of the Guide to the Expression of Uncertainty in Measurement (GUM) [6], i.e., the problem of inadequacy [7]. The introduction of a new term to VIM3 [3] did not change the situation:

2.27 definitional uncertainty: component of measurement uncertainty resulting from the finite amount of detail in the definition of a measurand.

Note 1. Definitional uncertainty is the practical minimum measurement uncertainty achievable in any measurement of a given measurand.

Note 2. Any change in the descriptive detail leads to another definitional uncertainty.

Note 3. In the ISO/IEC Guide 98-3:2008, D.3.4, and in IEC 60359, the concept definitional uncertainty is termed intrinsic uncertainty.

Although it is noted in the introduction to [3] that “in the GUM, the definitional uncertainty is considered to be negligible with respect to the other components of measurement uncertainty,” it was the introduction of this term that turned measurement uncertainty into an incognizable quantity.

The first detailed analysis [7] of the GUM translation [8] revealed an unaccounted-for share of inadequacy-related error amounting to about 60%, along with structural flaws in the calibration function in the example of thermometer calibration [8, Annex H.3].

However, for GUM, the problem of inadequacy proved to be a special case. After all, GUM gave a generally accurate description of the problem related to the inadequacy of mathematical models outlining its resolution by means of metrological certification; however, no concrete solution was found. “Definitional uncertainty” was left unquantified, while “amount of detail,” owing to its ambiguity, turned out to be a fitting phrase in a poor definition. Thus, a short note in RMG 29-99 revealed the ambiguity of the term “measurement” leading to the special case of inadequacy [9].

A step in the right direction was taken in RMG 29-2013:

4.19 direct measurement: measurement in which the target value of the quantity is found directly using a measuring instrument.

Note 1. The term direct measurement emerged as the opposite of the term indirect measurement. Strictly speaking, measurement is always direct and viewed as the comparison of a quantity with its unit or the scale. In this case, the term direct method of measurement is preferable.

Note 2. A measurement model type may serve as the basis for distinguishing between direct measurements, indirect measurements, joint measurements, as well as measurements in a closed series. In this case, the distinction between indirect and direct measurements is vague since most measurements in metrology are indirect and the consideration of influence factors, the introduction of corrections, etc., is implied.

Terminological catachreses. While metrology is typically regarded as the science of measurement, it is, in fact, a fundamental and ancient science of methods and means of representing the properties of physical objects using mathematical models. A discrepancy between calculations performed using models and the results of measuring properties exhibited by physical objects under specific conditions indicates the cognizability of phenomena. The inevitability of such “errors” has led to their being renamed as errors of measurement and inadequacy. It seems that the problem associated with the inadequacy of mathematical models and terms remains a permanent issue in metrology [10].

The combination of contradictory, incompatible concepts, typically constituting a speech error, yet in some cases coming into common use, is referred to as catachresis in linguistics.

The issue of terminological catachresis emerges when a term employs at best the indirect rather than the direct meaning of the base word, while, at worst, a semantic error arises due to the inability to comprehend the inner form of the word. Both of these cases can lead to dangerous systemic errors in applications arising due to terminological inadequacies. Here we should also mention the problem associated with harmonizing normative documents, which is a matter of their purpose and the competence of editors rather than the equivalence of translations. The translation [8] of an international document [6] published in 1999 serves as a good example of this issue. However, as early as 2002, the Scientific board of the Mendeleev All-Russian Institute for Metrology (VNIIM) criticized imbalances in the English-language harmonization, as well as “unjustified attempts to limit the significant potential of the Russian language, in some cases for the sake of alleged and far-fetched harmonization.”

Terminology plays a special role in science, since it serves not only as a list of names and definitions, but also as a subsystem in the system of linguistic associations [12]. The perception of words and terms as a perception of their form with a transition to semantics is studied by psycholinguistics [13]. Thus, if the form of a word is at variance with polysemy of usage, its name and definition become inadequate. Particularly disruptive are terms whose definitions include words having no relation to the direct meaning of the term.

The following definition from RMG 29-99 (RMG 29-2013) provides an indicative example:

5.18 (4.23) measurement problem: problem [Footnote 4] that consists in determining [Footnote 5] the value of a physical quantity by measuring it to the required accuracy under given measurement conditions.

Once “measuring” is replaced by the underlined part of the definition provided in RMG 29-2013,

4.1 measurement (of a quantity): process of experimentally obtaining one or more quantity values that can be reasonably attributed to the quantity,

another “subtlety” neglected by the VIM3 compilers [3] becomes clearer. Perhaps the decision made by the Scientific board of VNIIM, which involves a literal translation that is not adequate to the meaning, will also become clearer.

Emergence of measurement problem theory. In the 1970s, an All-Union discussion on the applicability scope of mathematical statistics [16] was initiated by Alimov [14] and Tutubalin [15]. By the early 1990s, the discussion participants shifted their focus to the problem associated with the statistical identification of inadequacy-related errors of mathematical models representing complex technical objects and their operation support systems [17,18,19].

The relevance of introducing the concept of adequacy-related error was noted by P. Novitskii. He argued that “the problem associated with selecting a type of functional dependence cannot be formalized” and that “the compactness [Footnote 6] of a model is achieved through a proper choice of elementary functions providing a sufficient approximation at their small number,” as well as defining an adequacy-related error as error arising “due to the insufficient correspondence between the approximating function and all the characteristics of the experimental curve” [20].

This issue was also considered in MI 2091-90, GSI. Measurements of Physical Quantities. General Requirements:

2.2.1. ... error due to the discrepancy between the model and the measurement object should not exceed 10% of the maximum permissible measurement error.

2.2.2. If the selected model does not meet the requirements of 2.2.1, another model of the measurement object should be used. More details on selecting a model to be measured are given in MI 1967-89.

However, according to MI 1967-89, GSI. Selection of Methods and Measuring Instruments in Developing Measurement Procedures. General Provisions:

1.3.1 The measurand corresponds to some model of the measurement object, which is adopted as an adequate representation of its properties that are to be studied via measurements. Meanwhile, any adopted model, in practice, only approximates the properties of measurement objects.

4.2.1 Error associated with the difference between the model adopted to represent the measurement object and that (unknown) model which would adequately reflect the properties of the measurement object studied via measurements and (or) with the difference in terms of a parameter (functional) more adequately reflecting the studied property of the measurement object (1.3.1).

Note. Methods for determining a procedural measurement error due to the inadequacy of the adopted model representing the measurement object belong to the least developed fields of metrology. This fact can be explained by the virtual lack of formal approaches to the establishment of such models of measurement objects that are strictly adequate to measurement objects and problems; therefore, the determination of this procedural measurement error requires high qualification, as well as experience and engineering insight of measurement procedure developers.

The discussion revealed that the choice of a functional dependence is formalized in the maximum compactness method (MCM), while the compactness function [21], which turned out to be equivalent to the inadequacy error distribution, is adopted as the accuracy indicator of the obtained result.

Normatively, this stage in the development of the theory of measurement problems was formalized by RRT 507-98, GSI. Measurement Problems. Solution Methods. Terms and Definitions, specifying the initial provisions necessary for the correct understanding and practical application of the theory behind measurement problems:

4.1. Measurement problem: problem of establishing a quantitative correspondence between a physical object and its mathematical model by calculating values, measuring or reproducing physical quantities with the required accuracy on the basis of adopted scales and units under given conditions.

4.3. Physical quantity: denominate mathematical quantity representing a general qualitative property of physical objects, as well as its specific manifestation.

4.4. Interpretive model: mathematical model representing a relationship between physical quantities in a measurement problem.

4.43. Measurement error: difference between the measured value of a physical quantity and its (true or conventional true) value adopted as a reference.

4.44. Error (of inadequacy) of the interpretive model: difference between measurement results and the corresponding design values of the model, whose parameters were determined independently of these measurement results.

4.45. Uncertainty: property of error expressing the impossibility of constructing its nondegenerate interpretive model as a certain value.

An interpretive model is called degenerate when no dependence between physical quantities exists. In these cases, probability distributions are used for the considered quantities.

4.85. Cross-sectional observation of errors: way to create conditions for observing the errors of an interpretive model by using only those data of joint measurements that were not involved in determining its parameters.

4.86. Segmentation rule: rule specifying the division of a sample from the joint measurement data in the cross-sectional observation of errors into test (for observing approximation errors) and control parts (for observing extrapolation errors) in order to use the extrapolation errors as the inadequacy errors of the interpretive model.

The following techniques are employed: “jack-knife” method involving sequential sampling of the unit values of the output variable [22]; “group method of data handling” requiring the sample to be divided in half [23]; “bootstrap” method employing random multiple sample divisions [24]; “cross-sectional observation” involving non-random sample divisions [21].
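The idea shared by these techniques (clauses 4.85 and 4.86) can be illustrated with a minimal sketch. It is not the maximum compactness method itself: the interleaved segmentation rule, the two structural variants (constant and straight line), the synthetic data, and all function names are assumptions made only for illustration. The parameters of each variant are determined on the test part alone, and the errors observed on the control part are then compared using the mean absolute error.

```python
import random
from statistics import mean

def split_test_control(points):
    """Hypothetical non-random segmentation rule: even-indexed points form
    the test part, odd-indexed points form the control part."""
    return points[0::2], points[1::2]

def fit_constant(points):
    """Structural variant 1: y = c."""
    c = mean(y for _, y in points)
    return lambda x: c

def fit_line(points):
    """Structural variant 2: y = a + b*x, fitted by ordinary least squares."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_bar, y_bar = mean(xs), mean(ys)
    b = sum((x - x_bar) * (y - y_bar) for x, y in points) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    a = y_bar - b * x_bar
    return lambda x: a + b * x

def control_errors(points, fit):
    """Determine parameters on the test part only and return the errors
    observed on the control part (data not used in the fit)."""
    test, control = split_test_control(points)
    model = fit(test)
    return [y - model(x) for x, y in control]

def mean_abs(errors):
    return mean(abs(e) for e in errors)

# Synthetic joint measurements (assumed): linear dependence plus noise.
rng = random.Random(0)
data = [(i / 39, 1.0 + 2.0 * (i / 39) + rng.gauss(0.0, 0.05)) for i in range(40)]

score_constant = mean_abs(control_errors(data, fit_constant))
score_line = mean_abs(control_errors(data, fit_line))
print(f"constant: {score_constant:.3f}, line: {score_line:.3f}")
```

In this sketch the straight-line variant yields the smaller control error, so it would be preferred; the set of control errors itself plays the role of an empirical inadequacy error sample.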

During the next stage in the development of the measurement problem theory, R 50.2.004-2000, GSI. Determination of Characteristics Exhibited by the Mathematical Models of Dependences between Physical Quantities When Solving Measurement Problems. Basic Provisions, clarified the definition of the term error of inadequacy and approaches to the solution of measurement problems:

3.1. ... error of inadequacy (of a mathematical model representing a measurement object): quantity characterizing the difference between the calculated value of a given physical quantity as an output variable of the mathematical model representing the measurement object and the result of its measurement under conditions corresponding to the calculation (while simultaneously measuring the input variables).

5.1. If a measurement problem requires that a property of an object expressed by the target variable of its model can be compared to a measure of the corresponding physical quantity with the required accuracy using some method (substitution, complementary, differential, etc.), the numerical result of such comparison is rounded to the digit corresponding to the least significant digit of the numerical expression for the limits (bounds) of permissible error, while indicating these limits (bounds) and confidence probability. This method of solving a measurement problem is referred to as the method of direct measurement.

5.2. If the output variable of a known model representing an object serves as the target in a measurement problem, while its input variables are available for measurements, the problem is solved using the following method in the static case: first input variables are measured; then the obtained data are substituted into the constraint equation and the value of the output variable is calculated, rounding the result taking into account the characteristics exhibited by measurement and inadequacy errors of the model. This method of solving a measurement problem is referred to as the method of indirect measurement.

6.1. Structural and parametric identification of a model representing a measurement object or identification of an interpretive model according to the output variable involves constructing its systematic (position characteristic) and random components (error distribution) as a random function of input variables.

6.3. Identification of interpretive models is carried out using the data of joint measurements and structural variants set by a model of maximum complexity ... (method of joint measurements).
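The method of indirect measurement described in clause 5.2, together with the rounding rule of clause 5.1, can be sketched as follows. The constraint equation R = U/I, the measured values, and the error bound of 0.10 are hypothetical; the bound is taken as given rather than derived, since its derivation from measurement and inadequacy errors is outside the scope of this sketch.

```python
from decimal import Decimal, ROUND_HALF_EVEN

def round_to_limit(value, limit):
    """Round value to the decimal place of the least significant digit
    of the stated error limit (passed as a string, e.g. "0.10")."""
    exponent = Decimal(limit).as_tuple().exponent
    quantum = Decimal(1).scaleb(exponent)  # e.g. Decimal("0.01")
    return Decimal(repr(value)).quantize(quantum, rounding=ROUND_HALF_EVEN)

def solve_indirect(inputs, constraint, limit):
    """Substitute measured inputs into the constraint equation, compute the
    output variable, and round it according to the error limit."""
    value = constraint(**inputs)
    return round_to_limit(value, limit), Decimal(limit)

# Hypothetical measured input variables.
measured = {"voltage": 12.04, "current": 0.2468}

resistance, bound = solve_indirect(
    measured,
    lambda voltage, current: voltage / current,  # assumed constraint equation
    "0.10",                                      # assumed error limit, ohm
)
print(f"R = {resistance} ± {bound} ohm")
```

Passing the limit as a string preserves its trailing zero, so that "0.10" and "0.1" lead to different rounding positions.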

Simultaneously, R 50.2.004-2000 establishes a classification of measurement problems:

4.6. Measurement problems (henceforth referred to as “problems”) are subdivided:

- according to the focus of procedures for establishing a quantitative correlation between the properties of a physical object and the characteristics of its mathematical model into the problems of identification and reproduction;
- according to the type of applied mathematical models into dynamic (operator models), static (functional models), as well as probabilistic and statistical problems;
- according to their purpose into dimensional (related to variables) and structural-and-parametric (related to structure and parameters) problems;
- according to their status into applied (using working measuring instruments) and metrological (using measurement standards) problems.

Dimensional problems are further subdivided by the types of measurements (types of physical quantities or hierarchy schemes). According to the degree of prior uncertainty in the conditions for finding a solution, structural and parametric problems are subdivided into initial (structure of the model is not specified), structurally indeterminate (structure of the model is specified up to the class of models), and parametrically indeterminate problems (model is specified up to parameters).

While R 50.2.004-2000 also provides a classification of errors, only the classification of inadequacy-related errors is currently of interest since the classifications of measurement errors and inadequacy-related errors coincide in the form of representation, mathematical description, and the form of distribution. According to the origin, inadequacy errors are divided into dimensional errors of inadequacy, i.e., errors of initial data used in the construction of a model, rounding errors, errors due to interrupted calculations, and errors due to operations involving approximate numbers; parametric inadequacy errors, i.e., errors associated with the method of parameter estimation and parameterization of variables; structural inadequacy errors, i.e., errors associated with selecting the structure of a model and errors due to the implementation of a computation scheme.

R 50.2.004-2000 (Annex A) provides several mathematical results:

Modular criterion theorem: If the distribution function FX(x) of a random variable X is such that

it is true for the parameter θ that

Corollary. Identity (A.1) is minimized by the median since at

When identifying the probability density function f(x) of the variable X, the maximum of its reproducibility criterion is used as the identification criterion

where ft(x) and fc(x) – density estimates of f(x) using test and control samples in cross-sectional observation (lemma about the kappa criterion).

If the equality of probability density functions ft(x) and fc(x) of the random variable X in cross-sectional observation is achieved at a single point x0, the reproducibility parameter is as follows:

where Ft(x) and Fc(x) – probability distribution functions in cross-sectional observation.

Corollary. The roots xm, m = 1, ..., M, of the equation ft(x) = fc(x) correspond to extrema of the difference D(x) = Ft(x) – Fc(x), while Identity (A.3) takes the form

The main provisions of the theory behind measurement problems acquired their final form in MI 2916-2005, GSI. Identification of Probability Distributions When Solving Measurement Problems. The document provides algorithms for the structural and parametric identification of probability distributions and contour estimation of inadequacy error for probability distribution functions, as well as introducing the term reproducibility of probability distribution in cross-sectional observation:

“3.2.2 probability of agreement between probability distributions: intersection area of probability density function plots f1(x) and f2(x):

Note. Given a single intersection point of probability density function plots, the probability of agreement is related to Kolmogorov distance DN via the identity κ* ≡ 1 – DN.

3.2.16 undetermined quantity: quantity whose value is undetermined; however, it can be characterized by a probability distribution on the basis of a set of values or just by a set of values (interval).

Note. It characterizes the probabilistic uncertainty of target quantities in measurement problems, including probabilistic uncertainty equivalent to statistical uncertainty. Residual systematic error is described as an undetermined quantity.

3.2.29 random variable: observed quantity assuming unpredictable values in the experiment. Note. It characterizes the statistical uncertainty of target quantities.

4.1.3 According to R 50.2.004, complete identification is aimed at finding the PDF convolution of the observed or random fΞ(ξ) and unobserved or residual systematic fΨ(ψ) components of the target quantity

For an arbitrary distribution function FΞ(ξ) and uniform probability density function on the interval [–h, h], this convolution is reduced to a finite interval [a, b] according to the formula provided in [25] taking the form

where the uncertainty interval [a, b] is obtained using Smirnov statistics for the difference between the statistical (empirical) distribution function and the interpretive distribution function fX(x). Convolution (1) is interpreted as a distributed sum of a random variable described by the distribution function fX(x), with its inadequacy error uniformly distributed on the interval [a, b], which contributed to the development of software for assessing the reliability of control test results, including the verification of measuring instruments [26,27,28,29].
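The interpretation of the convolution as a sum of a random variable and a uniformly distributed inadequacy error can be illustrated numerically. The sketch below does not reproduce the formula from [25]; it relies on the standard identity that, for independent X with distribution function F and U uniform on [a, b], the density of X + U equals (F(z − a) − F(z − b))/(b − a). The normal distribution chosen for X and the interval bounds are assumptions.

```python
from statistics import NormalDist

def density_with_uniform_error(cdf, a, b):
    """Density of X + U, where X has distribution function cdf and U is
    uniform on [a, b], independent of X."""
    width = b - a
    def density(z):
        return (cdf(z - a) - cdf(z - b)) / width
    return density

def integrate(func, lo, hi, n=20000):
    """Midpoint rule on [lo, hi]."""
    step = (hi - lo) / n
    return sum(func(lo + (i + 0.5) * step) for i in range(n)) * step

# Assumed random component and assumed inadequacy-error interval [a, b].
x_dist = NormalDist(mu=10.0, sigma=0.2)
a, b = -0.3, 0.5

pdf_sum = density_with_uniform_error(x_dist.cdf, a, b)
total = integrate(pdf_sum, 7.0, 13.0)
mean_sum = integrate(lambda z: z * pdf_sum(z), 7.0, 13.0)
print(f"integral = {total:.6f}, mean = {mean_sum:.4f}")
```

The resulting density integrates to one, and its mean is shifted from that of X by the midpoint of [a, b], which is how an asymmetric interval of residual systematic error displaces the result.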

Surprisingly, only Kolmogorov–Smirnov statistics conformed to the expression of the probability of agreement through the Feller distance [30].
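The relation between the probability of agreement (the intersection area of two density plots, clause 3.2.2) and the Kolmogorov distance can be checked numerically for a case with a single intersection point. The sketch below is only an illustration under assumptions: two normal distributions with equal standard deviations are used so that the densities cross exactly once, and the distance is taken between the two theoretical distribution functions rather than between empirical ones.

```python
from statistics import NormalDist

def agreement_and_distance(d1, d2, lo, hi, n=20000):
    """Return (intersection area of the two densities,
    largest absolute difference of the two distribution functions),
    both evaluated on a midpoint grid over [lo, hi]."""
    step = (hi - lo) / n
    overlap = 0.0
    distance = 0.0
    for i in range(n):
        x = lo + (i + 0.5) * step
        overlap += min(d1.pdf(x), d2.pdf(x)) * step
        distance = max(distance, abs(d1.cdf(x) - d2.cdf(x)))
    return overlap, distance

# Assumed distributions: equal sigma gives a single crossing point at x = 0.4.
d1 = NormalDist(mu=0.0, sigma=1.0)
d2 = NormalDist(mu=0.8, sigma=1.0)

kappa, dist = agreement_and_distance(d1, d2, -8.0, 9.0)
print(f"agreement = {kappa:.4f}, 1 - distance = {1.0 - dist:.4f}")
```

For normal distributions the intersection area is also available directly as `NormalDist.overlap`. When the standard deviations differ, the densities intersect at two points and the simple identity no longer applies in this form.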

The initial idea of the theory behind measurement problems is related to the approximation theory [31] which consists in the interpolation of dependences between physical quantities characterizing the properties of measurement objects under specific conditions. From its inception, this theory incorporated interpolation algorithms for identifying mathematical models, the concept of inadequacy error, and the theorem about the existence of the best approximant [32]. This idea fully developed in 1983 when the lemma about the probability of agreement between probability distributions in cross-sectional observation was proved and its relationship with the modular criterion of accuracy was established [33].

The authors of [8, clause 0.4] adopted a different course of action: “... it is often necessary to provide an interval about the measurement result that may be expected to encompass a large fraction of the distribution of values that could reasonably be attributed to the quantity subject to measurement. Thus, the ideal method for evaluating and expressing uncertainty in measurement should be capable of readily providing such an interval, in particular, one with a coverage probability or level of confidence that corresponds in a realistic way with that required.” In addition, it was noted that: “This approach, the justification of which is discussed in Annex E, meets all of the requirements outlined above.” However, the subsequent GUM revisions introduce “confidence interval” instead of “required interval,” while Annex C.3.2 of ISO/IEC Guide 98-3, Uncertainty of Measurement – Part 3: Guide to the Expression of Uncertainty in Measurement (GUM: 1995), GOST R 54500-3-2011/ISO/IEC Guide 98.3:2008, Uncertainty of Measurement. Part 3. Guide to the Expression of Uncertainty in Measurement, and GOST 34100-3-2017 having the same name state that: “The variance of the arithmetic mean or average of the observations, rather than the variance of the individual observations, is the proper measure of the uncertainty of a measurement result.” It is this ambiguity that has prompted a heated debate about the differences and similarities between tolerance and confidence intervals, as well as about the possibility of using tolerance interval limits as metrological characteristics. After all, in state hierarchy schemes, the confidence error limits serve as the bounds of tolerance intervals rather than of confidence intervals.

The problem of an ideal method for the “evaluation and expression of uncertainty in measurement,” which only started to emerge in [8, clause E.3.1], had already been solved in 1834–1841 by Jacobi [34] using a composition of the distributions of the input variables of the equation for the indirect measurement method. Accordingly, accuracy assessment of measurement problem solutions that relies on probability distributions became known as the compositional approach, whereas accuracy assessment that relies on the moments proposed by Pearson in 1894 [35] (the most commonly used being the expected value and variance) became known as the method of moments. Thus, it seems that the method of moments specified in the Guide relates very loosely, to put it mildly, to the ideal method for estimating and expressing uncertainty in the solutions of measurement problems.
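The difference between the two approaches can be illustrated with a small numerical sketch. It is not Jacobi's analytical method: random sampling is used here as a numerical stand-in for composing the input distributions, and the model Y = X1·X2, the input distributions, and the sample size are all assumptions. The method of moments is represented by first-order propagation of variances.

```python
import math
import random
from statistics import mean, pstdev

def model(x1, x2):
    """Assumed equation of the indirect measurement method."""
    return x1 * x2

N = 100_000
rng = random.Random(1)
mu1, s1 = 10.0, 0.1   # X1: normal, assumed
lo2, hi2 = 1.9, 2.1   # X2: uniform, assumed

# Compositional approach (numerical): distribution of the output variable.
samples = sorted(model(rng.gauss(mu1, s1), rng.uniform(lo2, hi2)) for _ in range(N))
mc_low = samples[int(0.025 * N)]
mc_high = samples[int(0.975 * N)]
mc_half_width = (mc_high - mc_low) / 2

# Method of moments: first-order propagation of variances.
mu2 = (lo2 + hi2) / 2
s2 = (hi2 - lo2) / math.sqrt(12)
u_moments = math.sqrt((mu2 * s1) ** 2 + (mu1 * s2) ** 2)

print(f"sampled std = {pstdev(samples):.3f}, propagated std = {u_moments:.3f}")
print(f"95% half-width from distribution = {mc_half_width:.3f}")
print(f"2 * propagated std = {2 * u_moments:.3f}")
```

In this example both approaches give nearly the same standard deviation, yet the interval taken from the output distribution is noticeably narrower than twice the propagated standard deviation, because the output distribution is far from normal. The moments alone do not determine the interval.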

However, the primary difficulty associated with the compositional approach consists in the fact that it rarely produces analytical solutions, although numerical methods can obtain the target solution with a predetermined accuracy. Therefore, in order to avoid confusion in metrological applications, it was proposed in 2006 to use the concept of “uncertainty in a broad sense” for the compositional approach and the concept of “uncertainty in the narrow sense” for the (parametric) method of moments [36,37,38], which was prompted by clause 2.2.1 of [8]:

2.2.1 The word uncertainty means doubt, and thus in its broadest sense ‘uncertainty of measurement’ means doubt about the validity of the result of a measurement. Because of the lack of different words for this general concept of uncertainty and the specific quantities that provide quantitative measures of the concept, for example, the standard deviation, it is necessary to use the word uncertainty in these two different senses.

However, English scientific literature offers three “different words” [39]: 1) uncertainty – inadequacy (of the analytical description of the property to be measured using a mathematical model of a physical quantity); 2) indeterminateness (in property manifestation); 3) indeterminacy – influence (of a measuring instrument on the property to be measured). At international seminars conducted at VNIIM, W. Wöger, B. Siebert, K. Sommer (PTB), V. P. Kuznetsov (VNIIMS), M. Cox, and P. Harris (National Physical Laboratory, UK), as well as the present author came to a consensus over using a truly recognized quantitative measure of “uncertainty in a broad sense,” i.e., probability distribution [38].

This does not eliminate the problem shared by all approaches, i.e., the identification of posterior probability distributions. However, according to GOST R ISO 3534-1-2019, Statistical Methods. Vocabulary and Symbols. Part 1. General Statistical Terms and Terms Used in Probability, an interval determined via random sampling so as to cover at least the specified proportion of population with a given level of confidence is called a tolerance interval.
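What such a definition implies in practice can be shown with one standard construction, used here only as an example and not prescribed by the cited standard: the distribution-free tolerance interval bounded by the smallest and largest sample values. For a continuous distribution, the confidence that this interval covers at least a proportion p of the population is 1 − n·p^(n−1) + (n−1)·p^n. The function names are hypothetical.

```python
def coverage_confidence(n, p):
    """Confidence that [min, max] of a sample of size n covers at least
    a proportion p of a continuous population."""
    return 1.0 - n * p ** (n - 1) + (n - 1) * p ** n

def min_sample_size(p, gamma):
    """Smallest n for which [min, max] is a tolerance interval for
    proportion p at confidence level gamma."""
    n = 2
    while coverage_confidence(n, p) < gamma:
        n += 1
    return n

n_required = min_sample_size(0.95, 0.95)
print(n_required, round(coverage_confidence(n_required, 0.95), 4))
```

For p = 0.95 and a confidence level of 0.95 this gives 93 observations. A confidence interval for the mean, by contrast, narrows as the sample grows and says nothing about the proportion of the population covered, which is the distinction at issue in the debate mentioned above.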

Conclusion. The question concerns what has changed with the emergence of a theory of measurement problems. First of all, the idea of a measurement problem has been transformed. Presently, it is a physical and mathematical problem, as it should be, whose solution involves obtaining initial data via measurements. Numerous so-called “types of measurements” became methods for solving measurement problems according to mathematical features and objectives: direct measurement method, multiple measurement method, indirect measurement method, joint measurement method, and the method of measurements in a closed series. The other “measurement” types fit into the classification of measurement problems, thus avoiding any vagueness.

Thus, a unified approach, however nontraditional, is not sufficient on its own for solving metrological problems. For this reason, the general viewpoint on metrology should be re-evaluated from time to time, while the most important task remains simply solving measurement problems.

Notes

1. To measure – determine the quantity {model – author’s note} of something {object – author’s note} using any measure {means – author’s note}. Measure body temperature. Measure the length of a building. Measure voltage. || figurative – to ascertain / infer the quantity and dimensions of something (literary). Measure the depth of feeling [2].

2. Derived from the word to determine: “... 3. Calculate, ascertain, deduce according to some data ... 6. Fix, specify” [2].

3. The preferred word sequence is given in GOST 8.061-80, GSI. Hierarchy Schemes. Scope and Layout: “method of direct measurement” and “method of indirect measurement.”

4. PROBLEM: statement requiring calculations to obtain some quantities (maths): arithmetic problem; algebraic problem; percent rule problem [2].

5. See Footnote 2.

6. Here, the most frequent associations with the Russian word for compactness are its antonyms meaning vagueness and uncertainty.

References

Yu. V. Linnik, Method of Least Squares and the Principles of the Theory of Observations, Fizmatgiz, Moscow (1962), 2nd ed.

D. N. Ushakov (ed.), Explanatory Dictionary of the Russian Language, OGIZ, Moscow (1935).

International Vocabulary of Metrology (VIM3) [Russian translation], NPO Professional, St. Petersburg (2010).

Ch. Ehrlich, R. Dybkaer, and W. Wöger, Accredit. Qual. Assur., 12, 201–218 (2007), https://doi.org/10.1007/s00769-007-0259-4.

Ch. Ehrlich, R. Dybkaer, and W. Wöger, “Evolution of philosophy and description of measurement,” Glavn. Metrol., No. 1, 11–30 (2016).

Guide to the Expression of Uncertainty in Measurement, ISO, Switzerland (1993), 1st ed.

S. F. Levin, “Metrology. Mathematical statistics. Legends and myths of the 20th century: the legend of uncertainty,” Partn. Konkur., No. 1, 13–25 (2001).

Guide to the Expression of Uncertainty in Measurement [Russian translation], VNIIM, St. Petersburg (1999).

S. F. Levin, “Definitional uncertainty and inadequacy error,” Izmer. Tekhn., No. 11, 7–17 (2019), https://doi.org/10.32446/0368-1025it.2019-11-7-17.

S. F. Levin, “Mathematical theory of measurement problems: applications. Inadequacy and reliability in metrology,” Kontr.-Izmer. Prib. Sistemy, No. 2, 32–38 (2020).

H. Lebesgue, Measurement of Quantities [Russian translation], Gosuchpedgiz, Moscow (1938).

S. F. Levin, “Metrological mentality: calibration and definitional uncertainty,” Zakonodat. Prikl. Metrol., No. 2, 46–55 (2020).

A. N. Leont’ev, A. A. Leont’ev, A. E. Suprun, et al., Fundamentals of Speech Activity Theory, Nauka, Moscow (1974).

Yu. I. Alimov, “On the logic behind the fundamentals of mathematical statistics as an applied discipline,” Avtomatika, No. 3, 88–91 (1971).

V. N. Tutubalin, Probability Theory. A Short Course and Scientific Methodological Notes, MGU, Moscow (1972).

Cybernetics Issues, Iss. 94, Statistical Methods in the Theory of Maintenance, S. F. Levin (ed.), AN SSSR, NSK, Moscow (1982).

Statistical Identification, Prediction, and Control: Guidelines, MO SSSR, Moscow (1990).

Statistical Identification, Prediction, and Control, in: Proc. 2nd All-Union Sci. Techn. Seminar, Sept. 4–6, 1991, Znanie, Sevastopol (1991).

Control of Technical Facilities According to Critical Condition and Key Parameters: Guidelines, Znanie, Kiev (1992).

P. V. Novitskii and I. A. Zograf, Estimation of Measurement Errors, Energoatomizdat, Leningrad (1985).

S. F. Levin, Statistical Analysis of Systems for the Operational Support of Technical Facilities, in: Cybernetics Issues, Iss. 94, Statistical Methods in the Theory of Maintenance, AN SSSR, NSK, Moscow (1982), pp. 105–122.

M. H. Quenouille, “Approximate tests of correlation in time-series,” J. R. Stat. Soc. B, 11, 68–84 (1949).

A. G. Ivakhnenko, “Group method of data handling as an alternative to the method of stochastic approximation,” Avtomatika, No. 3, 58–72 (1968).

B. Efron, “Bootstrap methods: another look at the jackknife,” Ann. Stat., 7, No. 1, 1–26 (1979).

P. Lévy, Calcul des Probabilités, Gauthier-Villars, Paris (1925).

S. S. Gogin, “MMI-verification program,” Izmer. Tekhn., No. 7, 20–21 (2006).

I. A. Suleiman, “Procedure for solving a verification measurement problem using truncated distribution functions,” Izmer. Tekhn., No. 1, 28–30 (2012).

A. N. Klenitskii, “Cosmological Distance Scale program,” Metrologiya, No. 1, 3–7 (2013).

E. E. Nevskaya, “Assessment of posterior verification reliability of instruments for measuring ionizing radiation characteristics,” Izmer. Tekhn., No. 1, 13–15 (2017).

W. Feller, An Introduction to Probability Theory [Russian translation], Mir, Moscow (1984), Vol. 2.

I. K. Daugavet, Introduction to the Approximation Theory: Textbook, Izd. Leningrad. Univ., Leningrad (1977).

S. F. Levin, Optimal Interpolation Filtering of Statistical Characteristics of Random Functions in a Deterministic Version of the Monte Carlo Method and Redshift Law, AN SSSR, NSK, Moscow (1980).

S. F. Levin, Fundamentals of Control Theory, MO SSSR, Moscow (1983).

C. G. J. Jacobi, “De Determinantibus functionalibus,” J. Reine Angew. Math., 22, 319–359 (1841).

K. Pearson, “Contributions to the mathematical theory of evolution,” Philos. T. R. Soc. Lond., A, 71–110 (1894).

S. F. Levin, “Uncertainty of solutions to measurement problems in a broad and narrow sense,” in: Proc. Int. Sci. Tech. Seminar “Mathematical, Statistical and Computer Measurement Quality Support,” VNIIM, COOMET, June 28–30, 2006, St. Petersburg; Mendeleev VNIIM, St. Petersburg (2006), pp. 48, 50.

S. F. Levin, “Uncertainty of solutions to measurement problems in a narrow and broad sense,” Metrologiya, No. 9, 3–24 (2006).

S. F. Levin, “Uncertainty of measuring instrument verification results in a narrow and broad sense,” Izmer. Tekhn., No. 9, 15–19 (2007).

Encyclopedia of Physics, Bol’shaya Rossiiskaya Entsiklopediya, Moscow (1992), Vol. 3.

Additional information

Translated from Izmeritel’naya Tekhnika, No. 1, pp. 3–11, January, 2022.

Cite this article

Levin, S.F. Systematic Aspects of Measurement Problem Theory. Meas Tech 65, 1–10 (2022). https://doi.org/10.1007/s11018-022-02041-4

DOI: https://doi.org/10.1007/s11018-022-02041-4