This article examines an important problem for the domestic machine building industry: developing and introducing modern means of monitoring the geometric parameters of shells of revolution. Such shells are basic parts of aerospace technology and of oil and gas, chemical, and power production equipment. The main methodological and instrumental errors of a control and measurement robot for determining the geometric parameters of large-scale shells of revolution, which depend on the parameters of the computer vision system, are analyzed. A scheme for measuring the geometric parameters of a shell is proposed. The methodological and instrumental errors of the robot and their analytical dependences on the computer vision system are derived, and ways of minimizing these errors are identified. The analytical dependences are used for a metrological analysis of a real prototype computer vision system, and the applicability of the chosen robot structure is demonstrated. The relative measurement error is less than 0.3%, which meets the requirements of the standards.


Introduction. A hollow cylindrical component, a shell, is a basic part of aerospace technology and of oil and gas, chemical, and power production equipment. A shell is produced by bending sheet material. The manufacturing accuracy of shells has a significant effect on the productivity of assembling and installing the equipment and on the operational characteristics of the finished products, i.e., it determines the quality of the output. The key operation of the technological process that determines the accuracy of the part is the straightening of the shell, i.e., correction of the shape of its transverse cross section. This operation is carried out by rotating the shell between the rolls of the sheet bending machine under a local bending force created by displacing the movable rolls. The technology for assembling the housings affects the productivity of installing internal components and the quality and operational characteristics of the output. The level of working stresses in the shells has the greatest influence on the characteristics of the equipment. The degree of stress concentration depends mainly on the relative offset of the edges of adjoining shells of the equipment housing before welding. This offset is regulated by the standard GOST R 52630-2006, “Welded Steel Vessels and Apparatus. General Specifications”; it is measured along the mid-surface at the joints, is determined by the cross-sectional shapes of the shells and their relative position, and must not exceed 10% of the thickness of the shell sheets (and no more than 3 mm). GOST R 52630-2006 gives stricter requirements for circumferential and longitudinal seams of bimetallic vessels on the side of the corrosion-resistant layer.
Since the tolerance on the diameter of the finished shell during assembly is 1% of the nominal diameter, while the permissible edge offset is limited by the thickness of the sheet material, for housings of large overall size the limit on edge offset cannot always be met. This conflict can be resolved by individually selecting the abutting shells and their relative angular position, which requires information on the size and shape of the transverse cross section of each shell.

At present, most factories still control the sheet bending machine manually, and the shape of the shell is checked by contact methods, in particular with templates. Such monitoring and control techniques are obsolescent and no longer meet the ever-increasing requirements. The sheet bending equipment used at most enterprises is not equipped with modern systems for monitoring the shape of the transverse cross section of shells. In addition, to reduce the forming force, the shells are heated to 1000°C before forming. The time available for forming before the part cools to the permissible temperature of 600°C is limited, so monitoring must be faster. Foreign monitoring equipment is difficult to analyze, since this technology is strategic and its export is restricted; when such equipment is supplied, it comes without monitoring and control systems. For this reason, information on developments by foreign firms is limited. The Haeusler firm (Germany), which produces sheet bending equipment, has developed an optoelectronic device for controlling the shell straightening process that monitors the radius of curvature in the zone of the rolls of the bending machine by three-point triangulation [1]. The authors of this paper have found that the error of the triangulation method due to waviness of the sheet workpieces and deformation of the shells as they are formed between the rolls can be large, so this method is not suitable for monitoring the shape of shells.

Thus, it is important for domestic machine building to develop and introduce modern means of monitoring the geometric parameters of shells during forming. Computer vision systems, which solve object recognition problems, can be used for this purpose. However, object recognition by itself does not impose strict requirements on the accuracy with which dimensions are determined. For a measurement system in the form of a control and measurement robot containing a computer vision system, the main requirement is to ensure the measurement accuracy prescribed by the relevant national and industry standards.

The purpose of the present study is a metrological analysis of a projection control and measurement robot: to determine and account for its main methodological and instrumental errors, which depend on the parameters of the computer vision system, to propose ways of minimizing them, and, on the basis of this analysis, to make a well-justified choice of the components and parameters of the main parts of the robot.

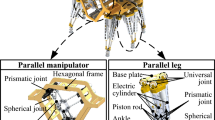

The control and measurement robot. The task of the control and measurement robot is to determine the coordinates of the contour of the transverse cross section of the shell relative to the center of the contour [2,3,4]. To solve this problem, the authors used a digital camera as the measurement converter of the computer vision system; it determines the coordinates of the contour relative to its center in digital form. Unlike analog optoelectronic measurement converters, whose output must undergo analog-to-digital conversion, a digital camera produces a spatially sampled image, which makes it possible to eliminate some error components. A wide choice of camera matrices (image sensors) is now available that can keep the image sampling error within the required limits.

Figure 1 shows a structural diagram of the control and measurement robot, which consists of a digital camera 1 and a two-coordinate mechanism (vertical axis 4 and horizontal axis 5 with carriages) that moves it. The carriages are driven by a cable system and stepping motors. The camera records an image of the profile of the transverse cross section of the part, and the center of shell 3 is found as the geometric center of gravity (centroid) of the profile. The camera is then moved to the coordinates of the found center, an image of the profile is recorded again, and the center of the shell is determined again. This search continues until the deviation of the center of the camera image from the center of the part is minimal. After the camera has been positioned at the center found from the last image, a profilogram is recorded as the coordinates of the points of the contour of the transverse cross section, and the geometric parameters of the part are determined from it.
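The center search described above can be sketched as a simple loop. This is a minimal simulation under stated assumptions, not the authors' software: the "image" is a synthetic circular contour expressed relative to the camera axis, the geometric center is approximated by the mean of uniformly sampled contour points, and `step_mm` is a hypothetical carriage resolution (stepping-motor step) that limits how closely the camera can be positioned. All function names are illustrative.

```python
import math


def capture_contour(center, radius, camera_pos, n_points=360):
    """Hypothetical stand-in for the camera: contour points of a circular
    cross section, expressed relative to the camera's optical center."""
    cx, cy = center
    px, py = camera_pos
    return [
        (cx + radius * math.cos(2 * math.pi * k / n_points) - px,
         cy + radius * math.sin(2 * math.pi * k / n_points) - py)
        for k in range(n_points)
    ]


def contour_centroid(points):
    """Mean of uniformly sampled contour points (approximates the geometric center)."""
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def center_camera(center, radius, start, step_mm=0.5, max_iter=20):
    """Move the camera onto the contour center, re-imaging after every move.

    Commanded positions are rounded to multiples of step_mm (hypothetical
    carriage resolution); the loop stops once the residual offset is within
    half a step on both axes, the best this resolution allows.
    """
    pos = start
    for iteration in range(1, max_iter + 1):
        dx, dy = contour_centroid(capture_contour(center, radius, pos))
        if abs(dx) <= step_mm / 2 and abs(dy) <= step_mm / 2:
            return pos, iteration
        pos = (round((pos[0] + dx) / step_mm) * step_mm,
               round((pos[1] + dy) / step_mm) * step_mm)
    return pos, max_iter


if __name__ == "__main__":
    pos, n_images = center_camera(center=(12.3, -7.8), radius=384.0, start=(0.0, 0.0))
    print(pos, n_images)
```

In this idealized simulation the loop settles after the second image because the whole contour is visible and noise-free; in practice each image carries its own errors, which is why the robot repeats the measurement until the offset stops decreasing.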

Analysis of the errors in the control and measurement robot. The metrological analysis took into account the specific features of the object of measurement. Since the shells of housings for various purposes are large (up to 10 m in diameter), the permissible manufacturing error is 10 and 50 mm for diameters of 1 and 5 m, respectively. The permissible error of the robot was set at 0.3 of the tolerance, i.e., 0.3%. Since the absolute tolerance is relatively large, the errors were analyzed using the methods of geometrical optics. In this case, of the errors of the optical components, distortion has the largest influence on the overall measurement error. The measurement error consists of two components:

– the error in the optical measurement converter (digital camera) which contains sampling and distortion errors;

– the error in basing the optical measurement converter which includes the instrumental error of the apparatus for monitoring the distance to the object (the error in the laser phase range finder).
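The tolerance figures quoted at the start of this analysis (a shape tolerance of 1% of the nominal diameter, with 0.3 of it allotted to the robot) translate into absolute error budgets with a few lines of arithmetic. A minimal sketch; the function name and its default arguments are illustrative.

```python
def error_budget(diameter_mm, tolerance_fraction=0.01, robot_share=0.3):
    """Return (tolerance, permissible robot error) in mm for a shell diameter.

    tolerance_fraction: shape tolerance as a fraction of the nominal diameter (1%).
    robot_share: fraction of the tolerance allotted to the robot (0.3).
    """
    tolerance = tolerance_fraction * diameter_mm
    return tolerance, robot_share * tolerance


if __name__ == "__main__":
    for d in (1000, 5000, 10000):
        tol, robot = error_budget(d)
        print(f"D = {d / 1000:.0f} m: tolerance {tol:.0f} mm, robot error {robot:.0f} mm "
              f"({100 * robot / d:.1f}% of D)")
```

For diameters of 1, 5, and 10 m this gives tolerances of 10, 50, and 100 mm and robot error budgets of 3, 15, and 30 mm, i.e., 0.3% of the diameter in each case.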

Sampling error (resolving power) is expressed as the size of the elementary area of the real object that corresponds to a single pixel of the image obtained with the camera. We consider a simplified optical layout for recording an image of the transverse cross section of the shell in its end plane (Fig. 2). To obtain the length and width of the elementary area lr of the real object corresponding to a single pixel of the image, it is necessary to find the angle of view α and the visible area A along the horizontal and vertical. Combining the dependences described in Ref. 5 into a single expression gives the desired value,

$$\alpha = 2\arctan\frac{h}{2f}, \qquad A = 2L\tan\frac{\alpha}{2} = \frac{Lh}{f}, \qquad l_r = \frac{A}{N} = \frac{Lh}{Nf}, \qquad (1)$$

where L is the distance to the object, h is the size of the camera matrix, N is the size of the image, and f is the focal length.

The parameters L, h, and f are measured in millimeters and N, in pixels. The elementary area of the real object is directly proportional to the distance to the object and decreases hyperbolically as the focal length increases.
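The chain from angle of view to visible area to pixel footprint can be checked numerically. A minimal sketch assuming an ideal pinhole camera with the object plane perpendicular to the optic axis, using α = 2 arctan(h/(2f)), A = 2L tan(α/2) = Lh/f and lr = A/N; the function names are illustrative, and the sample values are those of the prototype described later in the text (h = 4.8 mm, N = 1920 pixels, f = 3.6 mm, L = 1000 mm).

```python
import math


def field_of_view(L, h, f):
    """Angle of view alpha (rad) and visible extent A (mm) at distance L (pinhole model)."""
    alpha = 2 * math.atan(h / (2 * f))
    A = 2 * L * math.tan(alpha / 2)  # equals L * h / f
    return alpha, A


def pixel_footprint(L, h, N, f):
    """Size l_r (mm) of the object area imaged onto one pixel: A / N = L h / (N f)."""
    _, A = field_of_view(L, h, f)
    return A / N


if __name__ == "__main__":
    alpha, A = field_of_view(1000.0, 4.8, 3.6)
    lr = pixel_footprint(1000.0, 4.8, 1920, 3.6)
    print(f"alpha = {math.degrees(alpha):.1f} deg, A = {A:.1f} mm, l_r = {lr:.3f} mm per pixel")
```

For the prototype this gives α ≈ 67.4°, A ≈ 1333 mm, and lr ≈ 0.694 mm per pixel. Doubling N or f halves lr, while doubling L doubles it; because lr is proportional to L, a distance error ΔL changes it by the fraction ΔL/L, e.g., ±0.2% for a ±2 mm rangefinder error at 1000 mm.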

The relative sampling error δs when measuring an element of the profilogram is defined as

where R is the radius of the part.

This error can be reduced by increasing the resolution of the camera or the focal length of its objective.

Distortion error. Radial distortion is an aberration of optical systems in which the linear magnification varies with the distance of the imaged object points from the optic axis (the displacements Δx and Δy in Fig. 3). As a result, the geometric similarity between the object and its image is violated [6]. Straight lines of the recorded objects that do not pass through the optic axis are imaged as curved arcs. The corners of the image of a square whose center lies on the optic axis may be pushed outward or, conversely, pulled inward, so that the square takes on a pincushion or barrel shape. Pincushion distortion is regarded as positive, since it increases distances from the optical center; barrel distortion [7] is regarded as negative, since it decreases them (Fig. 4).

We now determine the error introduced by third-order distortion. We assume that the actual magnification equals unity (no magnification), take an undistorted point contour of a circle of known radius R centered at the coordinate origin, and apply third-order distortion F3 of various magnitudes to it. The coordinates of the distorted circle are [7]

Thus, the absolute ΔR and relative δF errors for each measurement of the radius in the profilogram are given by

This error is systematic, so a correction can be introduced once the third-order distortion of the camera has been determined. The contours constructed from the resulting points are shown in Fig. 4a, from which it follows that third-order distortion does not change the shape of the circle but only changes its diameter in proportion to the distortion. For more complex shapes, however (e.g., a square with side B), the shape is significantly distorted (see Fig. 4b).
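The behavior shown in Fig. 4 can be reproduced with the common single-coefficient model of third-order radial distortion, x_d = x(1 + F3 r²), y_d = y(1 + F3 r²) with r² = x² + y². This textbook model is an assumption of the sketch: the expressions of Ref. 7 used in the paper are not reproduced in this text and may normalize coordinates differently, so the hypothetical F3 value below is not comparable to the prototype's value. The sketch checks that a centered circle keeps its shape while a side of a square bows; the function names are illustrative.

```python
import math


def distort(x, y, F3):
    """Third-order radial distortion: scale each point by (1 + F3 * r^2).

    F3 > 0 gives pincushion, F3 < 0 barrel distortion. Coordinates and F3
    are in arbitrary normalized units (an assumption of this sketch).
    """
    k = 1 + F3 * (x * x + y * y)
    return x * k, y * k


def distorted_circle_radii(R, F3, n=72):
    """Radii of a circle of radius R (centered on the axis) after distortion."""
    radii = []
    for i in range(n):
        t = 2 * math.pi * i / n
        xd, yd = distort(R * math.cos(t), R * math.sin(t), F3)
        radii.append(math.hypot(xd, yd))
    return radii


def square_side_bow(B, F3, n=11):
    """Spread of the x-coordinates of points that lay on the straight side
    x = B/2 of a centered square with side B; zero means the side stays straight."""
    h = B / 2
    xs = [distort(h, -h + B * i / (n - 1), F3)[0] for i in range(n)]
    return max(xs) - min(xs)


if __name__ == "__main__":
    F3 = 0.05  # hypothetical coefficient
    radii = distorted_circle_radii(1.0, F3)
    print(f"circle: radii {min(radii):.6f}..{max(radii):.6f}")
    print(f"square with B = 1: side bow {square_side_bow(1.0, F3):.5f}")
```

Under this model every radius of the circle becomes R(1 + F3 R²), so the circle only changes its diameter, whereas the straight side of the square bows by F3 (B/2)³ between its midpoint and its corners.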

There are two approaches to eliminating the influence of distortion on the measurement result: using high-quality orthoscopic optics, or software calibration of each individual camera. The second approach is more promising, since calibration is automated and built into modern image-processing software libraries.
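Once the distortion coefficient has been found by calibration (OpenCV, for example, provides `cv2.calibrateCamera` and `cv2.undistortPoints` for this), the correction amounts to inverting the distortion model. A minimal sketch for the single-coefficient model x_d = x(1 + F3 r²) assumed here, using fixed-point iteration; the function names, the iteration count, and the coefficient value are illustrative, not a library API.

```python
def distort(x, y, F3):
    """Forward model (assumed): x_d = x (1 + F3 r^2), y_d = y (1 + F3 r^2)."""
    k = 1 + F3 * (x * x + y * y)
    return x * k, y * k


def undistort(xd, yd, F3, iterations=20):
    """Recover undistorted coordinates by fixed-point iteration.

    Each step divides the measured point by the scale factor evaluated at the
    current estimate; this converges quickly when |F3| r^2 is small, as it is
    for a well-corrected lens.
    """
    x, y = xd, yd
    for _ in range(iterations):
        k = 1 + F3 * (x * x + y * y)
        x, y = xd / k, yd / k
    return x, y


if __name__ == "__main__":
    F3 = 0.05  # hypothetical calibrated coefficient
    xd, yd = distort(0.3, -0.4, F3)
    print(undistort(xd, yd, F3))  # close to (0.3, -0.4)
```

Because the correction is applied point by point before the contour is analyzed, it removes both the diameter change of a circle and the bending of straight edges.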

Errors in basing. Basing errors arise from three sources: misalignment of the optical axis of the converter with the axis of the part, caused by noncoaxiality of the optical center of the camera and the center of the transverse cross section of the part; instability of the distance between the part and the camera; and nonparallelism between the transverse cross section of the part and the image plane of the camera.

Noncoaxiality of the optical center of the camera and the center of the transverse cross section of the part arises from clearances in the two-coordinate mechanism (see Fig. 3), which lead to displacements Δx, Δy relative to the center of the measured part. These displacements produce an eccentricity e that affects the accuracy of the profilogram measurement of the transverse cross section. Taking the eccentricity into account, the measured radius vector R′ of the profilogram is the sum of the vectors R and e:

$$\mathbf{R}' = \mathbf{R} + \mathbf{e}, \qquad \mathbf{e} = (\Delta x, \Delta y).$$

The relative error in measuring the radius is

$$\delta_e = \frac{|\mathbf{R}'| - |\mathbf{R}|}{|\mathbf{R}|}\cdot 100\%.$$

The deviation of the distance between the part and the camera from its specified value depends mainly on the instrumental error of the device that monitors this distance. Taking into account the distance error ΔL due to the instrumental error of the rangefinder, and using Eqs. (1) and (2), we write the sampling error as

The eccentricity error is minimized, as described above, by the iterative search for the center of the transverse cross section of the part, in which the agreement between the centers of the image plane and of the measured part is checked at each step.
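The eccentricity error discussed above can be evaluated numerically: the measured radius is the length of R + e with e = (Δx, Δy). A minimal sketch; the function name is illustrative, and the sample values are the prototype's R = 384 mm at (384, 0) with offsets of 0.004 mm, quoted in the numerical example.

```python
import math


def eccentricity_error(x, y, dx, dy):
    """Relative radius error (%) caused by an offset e = (dx, dy) of the camera axis.

    R is the true radius vector (x, y) of a profilogram point; the measured
    vector is R' = R + e, and delta_e = (|R'| - |R|) / |R| * 100.
    """
    r_true = math.hypot(x, y)
    r_meas = math.hypot(x + dx, y + dy)
    return (r_meas - r_true) / r_true * 100


if __name__ == "__main__":
    # Prototype values: R = 384 mm at (384, 0), offsets of 0.004 mm on both axes
    print(f"{eccentricity_error(384.0, 0.0, 0.004, 0.004):.4f} %")
```

The error is largest, about |e|/R, where R is parallel to e and nearly vanishes where they are perpendicular, so its sign varies around the contour. For the prototype values it is about 0.001%, in line with the δe reported below.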

The deviation from parallelism between the transverse cross section of the part and the image plane of the camera is caused by mechanical play in the two-coordinate mechanism, which tilts the camera about one of the axes by some angle (Fig. 5). When the planes are not parallel, the distance between the camera and the part changes by ΔLA (negative at the upper boundary of the image and positive at the lower one), which also affects the relative sampling error. The value of ΔLA depends on the visible area A of the camera, its tilt angle γ, and its angle of view α:

Therefore, with all the deviations taken into account, the relative sampling error is given by

The resulting relative measurement error of the robot's computer vision system is then determined from Eqs. (3)–(5) as

To verify the theoretical analysis, we used a prototype system [8] containing an IP-33-OH40BP digital IP camera (ORIENT, China) with a horizontal matrix size h = 4.8 mm, a horizontal resolution N = 1920 pixels, and an objective focal length f = 3.6 mm; the distance to the object was 1000 mm. The distance to the object was monitored by a laser phase rangefinder with an instrumental absolute error ΔL = ±2 mm; the displacements Δx = ±0.004 mm and Δy = ±0.004 mm were taken in accordance with tolerance grade 3. As the initial value of the radius, we take a point from a manual profilograph with R = 384 mm (x = 384 mm, y = 0). We specify a third-order distortion F3 = 2·10^−6 and a camera tilt angle γ = 15° (including the rotation of the part itself). This gives δe = 0.001%, δF = 0.077%, and δs = 0.108%, with a resulting relative error δ = 0.15%.
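The combination formula behind δ = 0.15% is not reproduced in this text. One rule that appears consistent with the reported figures, offered here as our assumption rather than the authors' stated method, is the root-sum-square of the components multiplied by k = 1.1, the coefficient used in Russian metrological practice (e.g., GOST R 8.736) for combining non-excluded systematic errors at a confidence probability of 0.95. The sketch compares this rule with a plain root-sum-square and a worst-case linear sum; the function name is illustrative.

```python
import math


def combine_rss(components, k=1.0):
    """k * sqrt(sum of squares) of relative error components (all in %)."""
    return k * math.sqrt(sum(c * c for c in components))


if __name__ == "__main__":
    # Components reported for the prototype, in %
    components = {"delta_e": 0.001, "delta_F": 0.077, "delta_s": 0.108}
    rss = combine_rss(components.values())
    rss_k = combine_rss(components.values(), k=1.1)
    linear = sum(components.values())
    spec = 0.3  # permissible relative error of the robot, %
    for name, value in (("RSS", rss), ("RSS, k = 1.1", rss_k), ("linear sum", linear)):
        print(f"{name}: {value:.3f}%  within spec: {value < spec}")
```

This prints about 0.133%, 0.146% (which rounds to the reported 0.15%), and 0.186%; all three are within the 0.3% specification.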

Thus, the largest contribution to the measurement error of the geometric parameters of parts in the form of large-scale shells of revolution comes from the deviation from parallelism between the transverse cross section of the part and the image plane of the camera.
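The size of the depth change produced by a tilt can be illustrated with a simple pinhole-camera model. This is our assumption, not the authors' expression for ΔLA, which is not reproduced in this text: the untilted camera is at distance L = A/(2 tan(α/2)) from the cross-section plane, and after a tilt by γ the point seen along the edge ray, at α/2 from the optic axis, lies at depth L cos(α/2)/cos(α/2 ± γ) along the tilted axis instead of L. The function name is hypothetical.

```python
import math


def tilt_depth_change(A, alpha_deg, gamma_deg):
    """Depth change (mm) at the two edges of the field of view for a camera tilted by gamma.

    Pinhole model (assumption): untilted camera at distance L = A / (2 tan(alpha/2))
    from the object plane; the edge ray at alpha/2 from the tilted axis meets the
    plane at depth L cos(alpha/2) / cos(alpha/2 +/- gamma) along that axis.
    Returns (near_edge, far_edge); for 0 < gamma < alpha the first is negative
    and the second positive.
    """
    half = math.radians(alpha_deg) / 2
    g = math.radians(gamma_deg)
    if half + g >= math.pi / 2:
        raise ValueError("the far edge ray does not meet the object plane")
    L = A / (2 * math.tan(half))
    near = L * (math.cos(half) / math.cos(half - g) - 1)
    far = L * (math.cos(half) / math.cos(half + g) - 1)
    return near, far


if __name__ == "__main__":
    # Prototype: A ~ 1333 mm and alpha ~ 67.4 deg at L = 1000 mm; tilt gamma = 15 deg
    near, far = tilt_depth_change(1333.3, 67.38, 15.0)
    print(f"near edge {near:+.1f} mm, far edge {far:+.1f} mm")
```

For the prototype's geometry this model gives about −122 mm at one edge and about +260 mm at the other, so the local pixel footprint lr changes by roughly −12% and +26%. These changes are far larger than those caused by the ±2 mm rangefinder error, which is consistent with the conclusion above.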

Conclusion. The analytical dependences of the robot's errors on the parameters of the computer vision system given in this article can be used to carry out a metrological analysis of the robot, to minimize these errors, and thereby to choose a well-justified set of components and parameters for the main parts of the robot. Introducing such a robot in various branches of industry, e.g., aerospace, power production, oil and gas, etc., will make it possible to improve the quality and reduce the cost of the output.

References

[1] G. G. Zemskov and V. A. Saveliev, Instruments for Measuring Linear Dimensions Using Lasers, Mashinostroenie, Moscow (1977).

[2] A. N. Shilin and D. G. Snitsaruk, 2019 Int. Conf. on Industrial Engineering, Applications and Manufacturing (ICIEAM), Sochi, Russia, March 25–29, 2019, IEEE Industry Applications Society, IEEE Power Electronics Society (2019), pp. 1–5, https://doi.org/10.1109/ICIEAM.2019.8742977.

[3] A. N. Shilin and D. G. Snitsaruk, in: A. Gorodetskiy and I. Tarasova (eds.), Smart Electromechanical System, in: Studies in Systems, Decision, and Control, Springer, Cham (2019), Vol. 174, pp. 263–273, https://doi.org/10.1007/978-3-319-99759-9_21.

[4] D. G. Snitsaruk and A. N. Shilin, Patent RU 2654957 C1, “Opto-electronic device for measuring the sizes of shells,” Izobret. Polezn. Modeli, No. 15 (2018).

[5] B. D. Guenther, Modern Optics Simplified, Oxford University Press (2019).

[6] V. Chari and A. Veeraraghavan, “Lens distortion, radial distortion,” in: K. Ikeuchi (ed.), Computer Vision, Springer, Boston, MA (2014), pp. 443–445, https://doi.org/10.1007/978-0-387-31439-6-479.

[7] J. Chang and L.-G. Miao, J. Comp. Appl., 30, No. 4, 950–952 (2010), https://doi.org/10.3724/SP.J.1087.2010.00950.

[8] A. N. Shilin and D. G. Snitsaruk, Measur. Techn., 61, No. 9, 897–902 (2018), https://doi.org/10.1007/s11018-018-1521-3.


Translated from Izmeritel’naya Tekhnika, No. 12, pp. 29–34, December, 2021.


Cite this article

Shilin, A.N., Snitsaruk, D.G. & Kuznetsova, N.S. Control and Measurement Robot for Determining the Geometric Parameters of Large Shells of Revolution: Metrological Analysis. Meas Tech 64, 985–990 (2022). https://doi.org/10.1007/s11018-022-02033-4


DOI: https://doi.org/10.1007/s11018-022-02033-4