Abstract

The present paper deals with the problem of nonparametric estimation of the trend for stochastic processes driven by G-Brownian motion with small noise. The consistency, the bound on the rate of convergence, and the asymptotic distribution of the nonparametric estimator are studied. Finally, a numerical example is given to verify our theoretical results.

1 Introduction

During the last decades, stochastic differential equations (SDEs) with small noise have received considerable attention in biology, mathematical finance, and other fields (see, e.g., Albeverio et al. 2019; Bressloff 2014; Takahashi and Yoshida 2004). In practice, owing to the presence of random factors, the drift function is seldom known, but it can be estimated by a nonparametric smoothing approach. Kutoyants (1994) first discussed the consistency and asymptotic normality of a nonparametric estimator for SDEs driven by a Wiener process with small noise. Since then, the asymptotic theory of nonparametric estimation for SDEs with small noise has drawn increasing attention. For instance, Mishra and Prakasa Rao (2011) investigated nonparametric estimation for SDEs driven by small fractional noise, Prakasa Rao (2020) studied nonparametric estimation of the trend coefficient in models governed by an SDE driven by sub-fractional Brownian motion with small noise, and Zhang et al. (2019) considered the consistency and asymptotic distribution of a kernel type estimator for SDEs with small \(\alpha \)-stable noises.

All of the above-mentioned works rely on the additivity of probability measures and mathematical expectations. In practice, such an additivity assumption is not appropriate in many areas of application, because many uncertain phenomena cannot be well modelled by additive probabilities or additive expectations. To address this, many scholars use non-additive probabilities (called capacities) and nonlinear expectations (for example, the Choquet integral/expectation and the G-expectation) to describe and interpret phenomena that are generally non-additive (see, e.g., Chen and Epstein 2002; Chen et al. 2013; Gilboa 1987; Peng 1997; Wakker 2001; Wasserman and Kadane 1990). Motivated by problems of model uncertainty in statistics, measures of risk and superhedging in finance, Peng (2007a, 2007b, 2008a, 2009, 2019) proposed a formal mathematical approach under the framework of nonlinear expectation and the related G-Brownian motion (GBm) on a sublinear expectation space \((\Omega ,\mathcal {H},\hat{\mathbb {E}})\). Under the sublinear expectation framework, Peng (2008b) proved a central limit theorem (CLT) whose limit distribution is a G-normal distribution. Since then, the theory of sublinear expectations has been well developed (Gao 2009; Fei et al. 2022, 2023a, 2023b; Mao et al. 2021; Peng and Zhou 2020; Song 2020; Wei et al. 2018). In particular, Lin et al. (2017) studied upper expectation parametric regression. Lin et al. (2016) constructed a k-sample upper expectation regression, a special nonlinear expectation regression, and investigated its statistical properties. Sun and Ji (2017) proved the existence and uniqueness of the least squares estimator under sublinear expectations.

However, there has so far been no study of nonparametric estimation for SDEs driven by GBm with small noise. Recently, Fei and Fei (2019) analyzed the consistency of the least squares estimator for SDEs under distribution uncertainty. Motivated by the aforementioned works, in this paper we deal with the problem of nonparametric estimation for stochastic processes driven by GBm with small noise

where \(\varepsilon \in (0,1)\), the function \(S(\cdot )\) is unknown, \(B_t\) is a one-dimensional GBm, and \(\langle B\rangle _t\) is the quadratic variation process of the GBm. Suppose \(\{x_t,0\le t\le T\}\) is the solution of the differential equation

$$\begin{aligned} \frac{dx_t}{dt}=S_t(x),\quad 0\le t\le T, \end{aligned}$$

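where \(x_0\) is the initial value. We would like to estimate the function \(S_t=S_t(x)\) based on the observation \(\{X_t,0\le t\le T\}\). Following Kutoyants (1994), we define a kernel type estimator of the trend function \(S_t=S_t(x)\) as

$$\begin{aligned} \widehat{S}_t=\frac{1}{\varphi _\varepsilon }\int _0^T K\Big (\frac{\tau -t}{\varphi _\varepsilon }\Big )dX_\tau , \end{aligned}$$
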
where \(K(\cdot )\) is a bounded function of finite support, and the normalizing function \(\varphi _\varepsilon \rightarrow 0\) with \(\varepsilon ^2\varphi _\varepsilon ^{-1}\rightarrow 0\) as \(\varepsilon \rightarrow 0\).
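
A minimal numerical sketch of the estimator, assuming it has Kutoyants' form \(\widehat{S}_t=\varphi _\varepsilon ^{-1}\int _0^T K((\tau -t)\varphi _\varepsilon ^{-1})dX_\tau \) and that X is observed on a grid \(t_0<\cdots <t_n\): the stochastic integral is replaced by the left-point sum \(\varphi _\varepsilon ^{-1}\sum _i K((t_i-t)\varphi _\varepsilon ^{-1})(X_{t_{i+1}}-X_{t_i})\). The helper names are hypothetical, and the triangular kernel is the one used in Section 5; this is an illustration, not the authors' code.

```python
import numpy as np


def triangular_kernel(u):
    """K(u) = 2(1 - 2|u|) on [-1/2, 1/2] and 0 elsewhere; integrates to 1."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 0.5, 2.0 * (1.0 - 2.0 * np.abs(u)), 0.0)


def kernel_trend_estimate(t, times, path, phi, kernel=triangular_kernel):
    """Approximate (1/phi) * int_0^T K((tau - t)/phi) dX_tau by a left-point sum.

    times: grid t_0 < ... < t_n; path: observed values X_{t_0}, ..., X_{t_n}.
    """
    times = np.asarray(times, dtype=float)
    path = np.asarray(path, dtype=float)
    increments = np.diff(path)                 # X_{t_{i+1}} - X_{t_i}
    weights = kernel((times[:-1] - t) / phi)   # K evaluated at the left endpoints
    return float(np.sum(weights * increments) / phi)
```

For the deterministic path \(X_t=t^2\) (trend 2t), calling `kernel_trend_estimate(1.0, times, times**2, phi=0.05)` on a grid of step \(10^{-4}\) returns a value close to 2.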

This paper is organized as follows. In Section 2, some preliminaries on G-expectation theory are given. In Section 3, the consistency of the nonparametric estimator and a bound on its rate of convergence are discussed. In Section 4, the asymptotic distribution of the estimator is obtained. In Section 5, a numerical simulation is provided.

2 Preliminaries

Throughout the paper, we use the notation “\(\rightarrow _\mathbb {C}\)” to denote convergence in capacity and “\({\mathop {=}\limits ^{d}}\)” to denote equality in distribution. Denote by \((\mathcal {C}_T, \mathcal {B}_T)\) the measurable space of functions \(x=\{x_t, 0\le t\le T\}\) continuous on [0, T], with the \(\sigma \)-algebra \(\mathcal {B}_T=\sigma \{x_t,0\le t\le T\}\). Let us introduce the class \(\Theta _k(L)\) of functions \(\{g_t,0\le t\le T\}\) that are k times differentiable with respect to t and whose k-th derivative satisfies the following Hölder condition of order \(\gamma \in (0,1]\):

$$\begin{aligned} |g^{(k)}_t-g^{(k)}_s|\le L|t-s|^\gamma ,\quad t,s\in [0,T], \end{aligned}$$

for some constant \(L>0\). If \(k=0\), we interpret \(g^{(0)}\) as g.
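
Whether a sampled function (or its k-th derivative) satisfies the Hölder condition \(|g^{(k)}_t-g^{(k)}_s|\le L|t-s|^\gamma \) that defines \(\Theta _k(L)\) can be probed on a grid. The sketch below, with the hypothetical helper `holder_constant`, returns the largest difference quotient over all grid pairs; this is only a lower bound for the smallest admissible L.

```python
import numpy as np


def holder_constant(values, times, gamma):
    """Largest ratio |g(t) - g(s)| / |t - s|**gamma over all grid pairs s != t.

    values: samples of g^{(k)} on the grid `times`; gamma in (0, 1].
    The result is a lower bound for the true Hoelder constant on [0, T].
    """
    values = np.asarray(values, dtype=float)
    times = np.asarray(times, dtype=float)
    dv = np.abs(values[:, None] - values[None, :])
    dt = np.abs(times[:, None] - times[None, :])
    mask = dt > 0                                   # exclude the diagonal s = t
    return float(np.max(dv[mask] / dt[mask] ** gamma))
```

For example, sampling sin on [0, 3] with \(\gamma =1\) gives a value just below 1, while \(\sqrt{t}\) on [0, 1] with \(\gamma =1/2\) gives 1 (attained at s = 0).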

Next, let us recall some notions of G-expectation theory. For more details, see, e.g., Denis et al. (2011), Gao (2009), and Peng (2019).

Let \(\Omega \) be a given nonempty set, and let \(\mathcal {H}\) be a linear space of real-valued functions defined on \(\Omega \). We assume that \(\mathcal {H}\) satisfies that \(a\in \mathcal {H}\) for any constant a and \(|X| \in \mathcal {H}\) for all \(X\in \mathcal {H}\).

Definition 2.1

A sublinear expectation \(\hat{\mathbb {E}}\) is a functional \(\hat{\mathbb {E}}: \mathcal {H}\rightarrow \mathbb {R}\) satisfying

- (i) Monotonicity: \(\hat{\mathbb {E}}[X]\ge \hat{\mathbb {E}} [Y]\) if \(X\ge Y\).
- (ii) Constant preserving: \(\hat{\mathbb {E}}[C]=C\) for all \(C\in \mathbb {R}\).
- (iii) Sub-additivity: For each \(X,Y\in \mathcal {H}\), \(\hat{\mathbb {E}}[X+Y]\le \hat{\mathbb {E}}[X]+\hat{\mathbb {E}}[Y]\).
- (iv) Positive homogeneity: \(\hat{\mathbb {E}}[\lambda X]=\lambda \hat{\mathbb {E}}[X]\) for all \(\lambda \ge 0\).

The triple \((\Omega ,\mathcal {H},\hat{\mathbb {E}})\) is called a sublinear expectation space.
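
A simple way to produce a sublinear expectation, in the spirit of the representation of Denis et al. (2011), is to take the maximum of ordinary (linear) expectations over a family of probability measures. The toy sketch below uses a hypothetical finite family of distributions on three outcomes; the family and the helper name `upper_expectation` are our assumptions, chosen only to make properties (ii)-(iv) checkable.

```python
import numpy as np

# Hypothetical family of probability vectors on the outcome space {w1, w2, w3}.
FAMILY = np.array([
    [0.2, 0.5, 0.3],
    [0.4, 0.4, 0.2],
    [0.1, 0.3, 0.6],
])


def upper_expectation(x, family=FAMILY):
    """E_hat[X] = max over P in the family of E_P[X], with X given as a vector of outcomes."""
    return float(np.max(family @ np.asarray(x, dtype=float)))
```

With X = (1, -2, 3), the upper expectation is 1.3 while \(-\hat{\mathbb {E}}[-X]\) is only 0.1: the gap between the two is exactly the model uncertainty that a linear expectation cannot express.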

Definition 2.2

A random variable \(\xi \in \mathcal {H}\) with

$$\begin{aligned} \bar{\sigma }^2=\hat{\mathbb {E}}[\xi ^2],\qquad \underline{\sigma }^2=-\hat{\mathbb {E}}[-\xi ^2] \end{aligned}$$

is said to be G-normally distributed, denoted by \(N(\{0\}\times [\underline{\sigma }^2,\bar{\sigma }^2])\), if for any \(\varrho \in C_{b,Lip}(\mathbb {R})\) (the space of bounded functions \(\varrho \) satisfying \(|\varrho (x)-\varrho (y)|\le C|x-y|\) for all \(x,y\in \mathbb {R}\) and some constant \(C>0\) depending on \(\varrho \)), the function \(u(t,x):=\hat{\mathbb {E}}[\varrho (x+\sqrt{t}\xi )]\), \((t,x)\in [0,\infty )\times \mathbb {R}\), is the viscosity solution of the partial differential equation (PDE)

$$\begin{aligned} \partial _t u-G(\partial _{xx}^2u)=0,\qquad u(0,x)=\varrho (x), \end{aligned}$$

where \(G(x):=\frac{1}{2}(\bar{\sigma }^2x^+-\underline{\sigma }^2x^-)\) and \(x^+:=\max \{x,0\}\), \(x^-:=(-x)^+\).
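
The G-heat equation \(\partial _t u-G(\partial _{xx}^2u)=0\), \(u(0,\cdot )=\varrho \), can be solved numerically to evaluate \(\hat{\mathbb {E}}[\varrho (x+\sqrt{t}\xi )]\). The sketch below is an explicit finite-difference scheme of our own (not from the paper). It assumes the usual stability restriction \(\Delta t\le \Delta x^2/\bar{\sigma }^2\) and takes the grid wide enough that errors from the frozen boundary values cannot reach the evaluation point. As a sanity check we use \(\varrho (x)=\pm x^2\), which is not bounded and so lies outside \(C_{b,Lip}\); the scheme should return about \(\bar{\sigma }^2\) and \(-\underline{\sigma }^2\), matching \(\bar{\sigma }^2=\hat{\mathbb {E}}[\xi ^2]\) and \(\underline{\sigma }^2=-\hat{\mathbb {E}}[-\xi ^2]\).

```python
import numpy as np


def G(a, sigma_low2, sigma_up2):
    """G(a) = (sigma_up^2 * a^+ - sigma_low^2 * a^-) / 2."""
    return 0.5 * (sigma_up2 * np.maximum(a, 0.0) - sigma_low2 * np.maximum(-a, 0.0))


def g_heat_value(rho, t, x0, sigma_low2, sigma_up2, dx=0.05):
    """Approximate u(t, x0) = E_hat[rho(x0 + sqrt(t) xi)] by explicit finite differences."""
    dt = 0.5 * dx ** 2 / sigma_up2          # explicit-scheme stability: dt <= dx^2 / sigma_up^2
    n_steps = int(np.ceil(t / dt))
    dt = t / n_steps                        # land exactly on the final time t
    half_width = (n_steps + 5) * dx         # boundary errors travel at most one cell per step
    x = np.arange(x0 - half_width, x0 + half_width + dx / 2, dx)
    u = rho(x)
    for _ in range(n_steps):
        lap = (u[2:] - 2.0 * u[1:-1] + u[:-2]) / dx ** 2
        u[1:-1] = u[1:-1] + dt * G(lap, sigma_low2, sigma_up2)  # boundary values stay frozen
    return float(u[len(x) // 2])
```

With \(\underline{\sigma }^2=1\) and \(\bar{\sigma }^2=2\), `g_heat_value(lambda x: x ** 2, 1.0, 0.0, 1.0, 2.0)` is close to 2, while `g_heat_value(lambda x: -x ** 2, 1.0, 0.0, 1.0, 2.0)` is close to -1: the upper and lower variances are picked out by the sign of the curvature.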

Definition 2.3

A one-dimensional stochastic process \((B_t)_{t\ge 0}\) on a sublinear expectation space \((\Omega ,\mathcal {H},\hat{\mathbb {E}})\) is called a GBm if the following properties are satisfied:

- (i) \(B_0(\omega )=0\);
- (ii) For each \(t,s\ge 0\), the increment \(B_{t+s}-B_t\) is \(N(\{0\}\times [s\underline{\sigma }^2,s\bar{\sigma }^2])\)-distributed;
- (iii) The increment \(B_{t+s}-B_t\) is independent of \((B_{t_1},B_{t_2},\cdots ,B_{t_n})\) for each \(n\in \mathbb {N}\) and \(0\le t_1\le \cdots \le t_n\le t\).

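Now, let \(\Omega =C_0(\mathbb {R}^+)\) be the space of all real-valued continuous paths \((\omega _t)_{t\in \mathbb {R}^+}\) with \(\omega _0=0\), equipped with the distance

$$\begin{aligned} \rho (\omega ^1,\omega ^2):=\sum _{i=1}^\infty 2^{-i}\Big [\Big (\max _{t\in [0,i]}|\omega ^1_t-\omega ^2_t|\Big )\wedge 1\Big ]. \end{aligned}$$
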
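Consider the canonical process \(B_t(\omega )=\omega _t, t\in [0,\infty )\), for \(\omega \in \Omega \). For each fixed \(T\ge 0\), we set

$$\begin{aligned} Lip(\Omega _T):=\{\varrho (B_{t_1\wedge T},\ldots ,B_{t_n\wedge T}):n\in \mathbb {N},\ t_1,\ldots ,t_n\in [0,\infty ),\ \varrho \in C_{b,Lip}(\mathbb {R}^n)\}, \end{aligned}$$
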
and \(Lip(\Omega ):=\bigcup _{n=1}^\infty Lip(\Omega _n)\), where \(C_{b,Lip}(\mathbb {R}^n)\) is the space of bounded Lipschitz continuous functions on \(\mathbb {R}^n\). In Peng (2019), the sublinear expectation \(\hat{\mathbb {E}}[\cdot ]: Lip(\Omega )\mapsto \mathbb {R}\) defined through the above procedure is called a G-expectation. The corresponding canonical process \((B_t)_{t\ge 0}\) on the sublinear expectation space \((\Omega ,Lip(\Omega ),\hat{\mathbb {E}})\) is called a GBm. For each \(p\ge 1\), \(L_G^p(\Omega )\) denotes the completion of \(Lip(\Omega )\) under the norm \(||\cdot ||_p=(\hat{\mathbb {E}}|\cdot |^p)^{\frac{1}{p}}\).

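Let \(p\ge 1\) be fixed. We consider the following type of simple process: for a given partition \(\pi _T=\{t_0,\cdots ,t_N\}\) of [0, T], we set

$$\begin{aligned} \eta _t(\omega )=\sum _{k=0}^{N-1}\xi _k(\omega )\mathbb {I}_{[t_k,t_{k+1})}(t), \end{aligned}$$
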
where \(\xi _k\in L_G^p(\Omega _{t_k})\), \(k=0,1,2,\cdots ,N-1\) are given. The collection of these processes is denoted by \(M_G^{p,0}(0,T)\).

Definition 2.4

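For each \(p\ge 1\), we denote by \(M_G^{p}(0,T)\) the completion of \(M_G^{p,0}(0,T)\) under the norm

$$\begin{aligned} \Vert \eta \Vert _{M_G^p(0,T)}:=\Big (\hat{\mathbb {E}}\Big [\int _0^T|\eta _t|^p\,dt\Big ]\Big )^{1/p}. \end{aligned}$$
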
Definition 2.5

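For each \(\eta \in M_G^{p,0}(0,T)\) of the above form, the Bochner integral and Itô integral are defined by

$$\begin{aligned} \int _0^T\eta _t\,dt:=\sum _{k=0}^{N-1}\xi _k(t_{k+1}-t_k)\quad \text{and}\quad I(\eta )=\int _0^T\eta _t\,dB_t:=\sum _{k=0}^{N-1}\xi _k\big (B_{t_{k+1}}-B_{t_k}\big ), \end{aligned}$$
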
respectively.
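
For a simple process \(\eta _t=\sum _{k=0}^{N-1}\xi _k\mathbb {I}_{[t_k,t_{k+1})}(t)\), both integrals of Definition 2.5 are finite sums evaluated path by path. The sketch below computes them for one discretized path; the helper name `simple_integrals` and the sample numbers are hypothetical.

```python
import numpy as np


def simple_integrals(partition, xi, path_at_partition):
    """Return (Bochner, Ito) integrals of eta_t = sum_k xi_k 1_[t_k, t_{k+1})(t) on one path.

    partition: t_0 < ... < t_N; xi: values xi_0, ..., xi_{N-1}, where xi_k may only
    depend on the path up to t_k; path_at_partition: B_{t_0}, ..., B_{t_N}.
    """
    partition = np.asarray(partition, dtype=float)
    xi = np.asarray(xi, dtype=float)
    path = np.asarray(path_at_partition, dtype=float)
    bochner = np.sum(xi * np.diff(partition))   # sum xi_k (t_{k+1} - t_k)
    ito = np.sum(xi * np.diff(path))            # sum xi_k (B_{t_{k+1}} - B_{t_k})
    return float(bochner), float(ito)
```

For the partition {0, 0.5, 1}, values \(\xi =(2,-1)\) and path values (0, 0.3, -0.1), this gives a Bochner integral of 0.5 and an Itô sum of 1.0. Using the left endpoint value \(\xi _k\) on each cell is what makes the sum an Itô (rather than Stratonovich) integral.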

Definition 2.6

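Let \(\pi _t^N=\{t_0^N,t_1^N,\ldots ,t_N^N\}\), \(N=1,2,\ldots ,\) be a sequence of partitions of [0, t]. For the GBm, we define the quadratic variation process of \(B_t\) by

$$\begin{aligned} \langle B\rangle _t:=\lim _{\mu (\pi _t^N)\rightarrow 0}\sum _{i=0}^{N-1}\big (B_{t_{i+1}^N}-B_{t_i^N}\big )^2, \end{aligned}$$

where \(\mu (\pi _t^N)=\max _{0\le i\le N-1}|t_{i+1}^N-t_i^N|\rightarrow 0\) as \(N\rightarrow \infty \), and the limit is taken in \(L_G^2(\Omega )\).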
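
Roughly speaking, under each probability measure in the representing family of Denis et al. (2011), B behaves like a stochastic integral \(\int \sigma _s dW_s\) with \(\sigma _s^2\in [\underline{\sigma }^2,\bar{\sigma }^2]\), so that \(\langle B\rangle _t=\int _0^t\sigma _s^2ds\). The sketch below simulates one such volatility scenario (piecewise-constant \(\sigma _s^2\) drawn at random; our own choice, not the direct method of Deng et al. 2019) and compares the realized sum of squared increments with \(\int _0^t\sigma _s^2ds\). The helper names are hypothetical.

```python
import numpy as np


def scenario_path(T, n, sigma_low2, sigma_up2, n_blocks=10, seed=0):
    """Simulate B on a uniform grid under one volatility scenario.

    The squared volatility is piecewise constant on n_blocks blocks, each value
    drawn uniformly from [sigma_low2, sigma_up2] (an assumption of this sketch).
    Returns grid times, B values, and the exact quadratic variation int sigma_s^2 ds.
    """
    rng = np.random.default_rng(seed)
    dt = T / n
    block_vals = rng.uniform(sigma_low2, sigma_up2, size=n_blocks)
    sigma2 = block_vals[np.arange(n) * n_blocks // n]     # sigma^2 on each time step
    dB = np.sqrt(sigma2 * dt) * rng.standard_normal(n)
    times = np.linspace(0.0, T, n + 1)
    B = np.concatenate(([0.0], np.cumsum(dB)))
    qv = np.concatenate(([0.0], np.cumsum(sigma2 * dt)))
    return times, B, qv


def realized_qv(B, step=1):
    """Sum of squared increments of B over the partition made of every `step`-th grid point."""
    coarse = B[::step]
    return float(np.sum(np.diff(coarse) ** 2))
```

With `times, B, qv = scenario_path(1.0, 200000, 1.0, 2.0)`, `realized_qv(B)` is close to `qv[-1]`, which itself lies in [1, 2]; on a coarse partition such as `realized_qv(B, 1000)` the agreement is visibly worse, in line with the limit \(\mu (\pi _t^N)\rightarrow 0\).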

Definition 2.7

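Define the integral of a process \(\eta \in M^{1,0}_G(0,T)\) with respect to \(\langle B\rangle _t\). We start with the mapping

$$\begin{aligned} Q_{0,T}(\eta )=\int _0^T\eta _t\,d\langle B\rangle _t:=\sum _{k=0}^{N-1}\xi _k\big (\langle B\rangle _{t_{k+1}}-\langle B\rangle _{t_k}\big ):M_G^{1,0}(0,T)\mapsto L_G^1(\Omega _T). \end{aligned}$$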

Lemma 2.1

(Gao 2009) Let \(p\ge 2\) and \(\zeta =\{\zeta (s),s\in [0,T]\}\in M_G^{p,0}(0,T)\). Then, for all \(t\in [0,T]\),

where \(\bar{\sigma }^2=\hat{\mathbb {E}}[B_1^2]\), and the positive constants \(C_i(p,\bar{\sigma }),i=1,2\) depend only on \(p\) and \(\bar{\sigma }\).
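
Besides moment bounds of this type, the integral with respect to \(\langle B\rangle \) of Definition 2.7 obeys a simple pathwise sandwich: quasi-surely \(\underline{\sigma }^2(t-s)\le \langle B\rangle _t-\langle B\rangle _s\le \bar{\sigma }^2(t-s)\), so for a nonnegative simple process \(\underline{\sigma }^2\int _0^T\eta _tdt\le \int _0^T\eta _td\langle B\rangle _t\le \bar{\sigma }^2\int _0^T\eta _tdt\). The sketch below checks this along one hypothetical volatility scenario with \(\underline{\sigma }^2=1\) and \(\bar{\sigma }^2=2\); the scenario and the helper name `qv_integral` are our own choices.

```python
import numpy as np


def qv_integral(xi, qv_at_partition):
    """sum_k xi_k (<B>_{t_{k+1}} - <B>_{t_k}) for a simple process with values xi."""
    xi = np.asarray(xi, dtype=float)
    return float(np.sum(xi * np.diff(np.asarray(qv_at_partition, dtype=float))))


# Hypothetical scenario: d<B>_t / dt varies in [1, 2] along a partition of [0, 1].
rng = np.random.default_rng(1)
N = 50
partition = np.linspace(0.0, 1.0, N + 1)
sigma2 = rng.uniform(1.0, 2.0, size=N)                        # d<B>_t / dt on each cell
qv = np.concatenate(([0.0], np.cumsum(sigma2 * np.diff(partition))))
xi = np.abs(rng.standard_normal(N))                           # nonnegative integrand values
lower = 1.0 * np.sum(xi * np.diff(partition))                 # sigma_low^2 * int xi dt
upper = 2.0 * np.sum(xi * np.diff(partition))                 # sigma_up^2 * int xi dt
value = qv_integral(xi, qv)
```

Whatever scenario is drawn, `value` falls between `lower` and `upper`; this is the mechanism by which terms driven by \(d\langle B\rangle \) produce the maximally distributed limit U in Section 4.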

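Let \(\mathcal {B}(\Omega )\) be the Borel \(\sigma \)-algebra of \(\Omega \). It was proved in Denis et al. (2011) that there exists a weakly compact family \(\mathcal {P}\) of probability measures defined on \((\Omega ,\mathcal {B}(\Omega ))\) such that

$$\begin{aligned} \hat{\mathbb {E}}[X]=\sup _{\mathbb {P}\in \mathcal {P}}E_{\mathbb {P}}[X],\quad X\in L_G^1(\Omega ). \end{aligned}$$
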
Definition 2.8

The capacity \(\mathbb {C}\) associated with \(\mathcal {P}\) is defined by \(\mathbb {C}(A)=\sup _{\mathbb {P}\in \mathcal {P}}\mathbb {P}(A)\), \(A\in \mathcal {B}(\Omega )\). A set \(A\subset \Omega \) is called polar if \(\mathbb {C}(A)=0\). A property is said to hold quasi-surely (q.s.) if it holds outside a polar set.
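
On a finite sample space, both the capacity \(\mathbb {C}(A)=\max _{\mathbb {P}}\mathbb {P}(A)\) and the Markov-type inequality \(\mathbb {C}(|X|>\delta )\le \hat{\mathbb {E}}[|X|^p]/\delta ^p\) (the content of Lemma 2.2 below) can be checked directly. The family of probability vectors and the helper names in this sketch are hypothetical; the example also shows that \(\mathbb {C}\) is not additive, since \(\mathbb {C}(A)+\mathbb {C}(A^c)\) exceeds 1.

```python
import numpy as np

# Hypothetical family of probability vectors on four outcomes.
FAMILY = np.array([
    [0.1, 0.2, 0.3, 0.4],
    [0.4, 0.3, 0.2, 0.1],
    [0.25, 0.25, 0.25, 0.25],
])


def capacity(event, family=FAMILY):
    """C(A) = max over P of P(A); `event` is a boolean mask over the outcomes."""
    return float(np.max(family @ np.asarray(event, dtype=float)))


def upper_expectation(x, family=FAMILY):
    """E_hat[X] = max over P of E_P[X]."""
    return float(np.max(family @ np.asarray(x, dtype=float)))


X = np.array([-3.0, 0.5, 1.0, 2.5])
A = np.array([True, True, False, False])
```

Here \(\mathbb {C}(A)=\mathbb {C}(A^c)=0.7\). The Markov-type bound holds because it holds under every single \(\mathbb {P}\) in the family and both sides are then maximized over \(\mathbb {P}\).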

Lemma 2.2

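(Peng 2019) Let \(X\in L_G^p\) and \(\hat{\mathbb {E}}[|X|^p]<\infty \), \(p>0\). Then, for any \(\delta >0\), we have

$$\begin{aligned} \mathbb {C}(|X|>\delta )\le \frac{\hat{\mathbb {E}}[|X|^p]}{\delta ^p}. \end{aligned}$$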

Lemma 2.3

(Peng 2019) For each \(X,Y\in \mathcal {H}\), we have

and

We will make use of the following assumptions:

- (H1): There exists a positive constant \(L_1\) such that

$$\begin{aligned} |S_t(x)-S_t(y)|\le L_1|x_t-y_t|,\quad x,y\in \mathcal {C}_T. \end{aligned}$$

- (H2): Let \(K(u),u\in \mathbb {R}\) be a bounded function of finite support (there exist two constants \(\psi _1<0\) and \(\psi _2>0\) such that \(K(u)=0\) for \(u\notin [\psi _1,\psi _2]\)) with \(\int _{\psi _1}^{\psi _2}K(u)du=1\). In addition,

$$\begin{aligned} \int _{-\infty }^\infty |K(u)| du<\infty ,\,\,\int _{-\infty }^\infty K^2(u) du<\infty ,\,\,\int _{-\infty }^\infty (K(u)u^{\gamma })^2 du<\infty . \end{aligned}$$

- (H3): The kernel function \(K(\cdot )\) satisfies the following conditions:

$$\begin{aligned} \begin{aligned} \int _{-\infty }^\infty (K(u)u^{k+\gamma })^2&du<\infty ,\,\, \int _{-\infty }^\infty |K(u)u^{k+\gamma +1}|du<\infty , \,\, \text{ and }\\ {}&\int _{-\infty }^\infty u^jK(u)du=0, \,\,j=1,2,\ldots ,k. \end{aligned} \end{aligned}$$
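
As a quick sanity check (our addition), the triangular kernel \(K(u)=2(1-2|u|)\) on \([-\frac{1}{2},\frac{1}{2}]\) used in Section 5 satisfies (H2) with \(\psi _1=-\frac{1}{2}\), \(\psi _2=\frac{1}{2}\); being even, it also satisfies the vanishing-moment condition of (H3) for k = 1, but not for k = 2, since \(\int u^2K(u)du=1/24\ne 0\). The sketch below evaluates these integrals with a fine midpoint rule; the helper names are hypothetical.

```python
import numpy as np


def triangular_kernel(u):
    """K(u) = 2(1 - 2|u|) on [-1/2, 1/2], zero outside."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 0.5, 2.0 * (1.0 - 2.0 * np.abs(u)), 0.0)


def kernel_moment(power, squared=False, n=200000):
    """Midpoint-rule approximation of int u^power K(u) du (or of int u^power K(u)^2 du)."""
    edges = np.linspace(-0.5, 0.5, n + 1)
    mid = 0.5 * (edges[:-1] + edges[1:])
    k = triangular_kernel(mid)
    integrand = mid ** power * (k ** 2 if squared else k)
    return float(np.sum(integrand) * (edges[1] - edges[0]))
```

The integrals come out as \(\int K=1\), \(\int uK=0\), \(\int u^2K=1/24\) and \(\int K^2=4/3\); the last value enters the variance of the estimator.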

It is not difficult to see that SDE (1.1) admits a unique solution under condition (H1).

3 Consistency of the Kernel Estimator \(\widehat{S}_t\)

In this section, we study the consistency of the estimator \(\widehat{S}_t\) and a bound on its rate of convergence. We first present a useful lemma.

Lemma 3.1

If assumption (H1) holds, then we have

and

Proof

(i) By assumption (H1), it follows that

Gronwall’s inequality then yields (3.1). (ii) By (3.1), Lemma 2.1, and (2.2) in Lemma 2.3, we get

This completes the proof.
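
The Gronwall step in part (i) has a simple pathwise form: if \(X_t=x_0+\int _0^tS(X_s)ds+\eta _t\) and \(x_t=x_0+\int _0^tS(x_s)ds\) with S Lipschitz with constant \(L_1\), then \(\sup _{s\le t}|X_s-x_s|\le \sup _{s\le t}|\eta _s|e^{L_1t}\). In the small-noise setting, \(\eta _t\) plays the role of the \(\varepsilon \)-scaled noise terms. The sketch below checks the discrete analogue for an Euler scheme with the hypothetical choices \(S(x)=\sin x\) (so \(L_1=1\)) and a deterministic perturbation \(\eta _t=0.05\sin (5t)\); it illustrates the inequality and is not the proof of Lemma 3.1.

```python
import numpy as np


def gronwall_check(T=3.0, n=3000, x0=0.9, amp=0.05):
    """Euler paths with and without an additive perturbation eta, plus the Gronwall bound."""
    dt = T / n
    t = np.linspace(0.0, T, n + 1)
    eta = amp * np.sin(5.0 * t)              # hypothetical perturbation with eta_0 = 0
    X = np.empty(n + 1)
    x = np.empty(n + 1)
    X[0] = x[0] = x0
    for i in range(n):
        X[i + 1] = X[i] + dt * np.sin(X[i]) + (eta[i + 1] - eta[i])   # perturbed path
        x[i + 1] = x[i] + dt * np.sin(x[i])                            # unperturbed path
    gap = np.maximum.accumulate(np.abs(X - x))                    # sup_{s <= t} |X_s - x_s|
    bound = np.maximum.accumulate(np.abs(eta)) * np.exp(1.0 * t)  # L_1 = 1 for sin
    return t, gap, bound
```

In discrete time the same induction gives the slightly sharper factor \((1+L_1\Delta )^i\le e^{L_1t_i}\), so the computed gap never exceeds the bound.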

Theorem 3.1

Let the trend function \(S_t(x)\in \Theta _0(L)\) and assumptions (H1)-(H2) hold. Then, for any \(0<c\le d<T\), the estimator \(\widehat{S}_t\) is uniformly consistent, that is,

Proof

By (2.2) in Lemma 2.3, it follows that

Utilizing the change of variables \(u=(\tau -t)\varphi _\varepsilon ^{-1}\) and denoting \(\varepsilon _1=\varepsilon '\wedge \varepsilon ''\), where

For the term \(F_1(\varepsilon )\), by assumptions (H1) and (H2), (2.3) in Lemma 2.3, (3.2) in Lemma 3.1, and (3.5), one sees that for \(\varepsilon <\varepsilon _1\)

which tends to zero as \(\varepsilon \rightarrow 0\). For \(F_2(\varepsilon )\), using \(S_t(x)\in \Theta _0(L)\), assumption (H2), (2.3) in Lemma 2.3, and (3.5), we get that for \(\varepsilon <\varepsilon _1\)

which tends to zero as \(\varepsilon \rightarrow 0\). For \(F_3(\varepsilon )\), by Lemma 2.1 and assumption (H2), one can obtain that

which tends to zero as \(\varepsilon ^2\varphi _\varepsilon ^{-1}\rightarrow 0\). Similar to the discussion of \(F_3(\varepsilon )\), one has

which tends to zero as \(\varepsilon ^2\varphi _\varepsilon ^{-1}\rightarrow 0\). Therefore, by combining (3.4)–(3.9), we can conclude that (3.3) holds. This completes the proof.
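
To see where the condition \(\varepsilon ^2\varphi _\varepsilon ^{-1}\rightarrow 0\) comes from, consider a stochastic-integral term with a deterministic kernel integrand. This is a sketch under our own simplifying assumptions: we treat only a term of the form \(\varepsilon \varphi _\varepsilon ^{-1}\int _0^TK((\tau -t)\varphi _\varepsilon ^{-1})dB_\tau \), and we assume \(\varphi _\varepsilon \) is small enough that the window \([t+\psi _1\varphi _\varepsilon ,t+\psi _2\varphi _\varepsilon ]\) lies in [0, T]. For deterministic f, the G-Itô isometry \(\hat{\mathbb {E}}[(\int _0^Tf\,dB)^2]=\hat{\mathbb {E}}[\int _0^Tf^2d\langle B\rangle ]\) together with \(d\langle B\rangle _\tau \le \bar{\sigma }^2d\tau \) (attained under the constant scenario \(\sigma \equiv \bar{\sigma }\)) and the substitution \(u=(\tau -t)\varphi _\varepsilon ^{-1}\) gives

$$\begin{aligned} \hat{\mathbb {E}}\Big [\Big (\frac{\varepsilon }{\varphi _\varepsilon }\int _0^T K\Big (\frac{\tau -t}{\varphi _\varepsilon }\Big )dB_\tau \Big )^2\Big ] =\frac{\varepsilon ^2\bar{\sigma }^2}{\varphi _\varepsilon ^2}\int _0^T K^2\Big (\frac{\tau -t}{\varphi _\varepsilon }\Big )d\tau =\frac{\varepsilon ^2\bar{\sigma }^2}{\varphi _\varepsilon }\int _{\psi _1}^{\psi _2}K^2(u)\,du , \end{aligned}$$

which is finite by (H2) and vanishes precisely when \(\varepsilon ^2\varphi _\varepsilon ^{-1}\rightarrow 0\).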

Next, a bound on the rate of convergence of the estimator \(\widehat{S}_t\) is given.

Theorem 3.2

Suppose that the trend function \(S_t(x)\in \Theta _{k}(L)\) and \(\varphi _\varepsilon =\varepsilon ^{\frac{2}{2(k+\gamma )+1}}\). Then, under conditions (H1)-(H3), one has

Proof

It follows from Taylor’s formula that for any \(x\in \mathbb {R}\),

where \(\theta \in (0,1)\). Substituting (3.11) into (3.7) yields that for \(\varepsilon <\varepsilon _1\)

One can use condition (H3), (2.3) in Lemma 2.3, and \(S_t(x)\in \Theta _{k+1}(L)\) to show that

By (3.4), (3.6), (3.8) and (3.12), one sees that

with some positive constants \(K_1,K_2,K_3\) which do not depend on function \(S(\cdot )\). So letting \(\varphi _\varepsilon =\varepsilon ^{\frac{2}{2(k+\gamma )+1}}\), we can conclude that (3.10) holds.
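
The choice of \(\varphi _\varepsilon \) in Theorem 3.2 can be read as a balance between a bias part and a stochastic part. As a sketch, assume (as the proofs of Theorems 3.1 and 3.2 suggest) that the mean-square bound contains a bias term of order \(\varphi _\varepsilon ^{2(k+\gamma )}\) and a stochastic term of order \(\varepsilon ^2\varphi _\varepsilon ^{-1}\). Equating the two orders gives

$$\begin{aligned} \varphi _\varepsilon ^{2(k+\gamma )}=\varepsilon ^2\varphi _\varepsilon ^{-1}\iff \varphi _\varepsilon ^{2(k+\gamma )+1}=\varepsilon ^2\iff \varphi _\varepsilon =\varepsilon ^{\frac{2}{2(k+\gamma )+1}},\qquad \varphi _\varepsilon ^{2(k+\gamma )}=\varepsilon ^2\varphi _\varepsilon ^{-1}=\varepsilon ^{\frac{4(k+\gamma )}{2(k+\gamma )+1}}, \end{aligned}$$

so both terms are then of order \(\varepsilon ^{4(k+\gamma )/(2(k+\gamma )+1)}\). For k = 0 this reduces to the choice \(\varphi _\varepsilon =\varepsilon ^{\frac{2}{2\gamma +1}}\) of Remark 3.1.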

Remark 3.1

Let \(\varphi _\varepsilon =\varepsilon ^{\frac{2}{2\gamma +1}}\). Then, under the assumptions (H1)-(H2), we have

4 Asymptotic Distribution of the Estimator \(\widehat{S}_t\)

In this section, we discuss the asymptotic distribution of the estimator \(\widehat{S}_t\). Throughout, U denotes a maximally distributed random variable with distribution \(N([\underline{\sigma }^2,\bar{\sigma }^2]\times \{0\})\), and N denotes a G-normally distributed random variable with distribution \(N(\{0\}\times [\underline{\sigma }^2,\bar{\sigma }^2])\), independent of U.
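
For the maximal distribution \(N([\underline{\sigma }^2,\bar{\sigma }^2]\times \{0\})\), the sublinear expectation is simply a maximum: \(\hat{\mathbb {E}}[\varrho (U)]=\max _{v\in [\underline{\sigma }^2,\bar{\sigma }^2]}\varrho (v)\) for continuous \(\varrho \). In particular \(\hat{\mathbb {E}}[U]=\bar{\sigma }^2\) while \(-\hat{\mathbb {E}}[-U]=\underline{\sigma }^2\), so U carries mean uncertainty but no variance. The sketch below approximates the maximum on a grid; the helper name is hypothetical.

```python
import numpy as np


def maximal_expectation(rho, sigma_low2, sigma_up2, n_grid=10001):
    """E_hat[rho(U)] for U ~ N([sigma_low2, sigma_up2] x {0}): the max of rho over the interval."""
    v = np.linspace(sigma_low2, sigma_up2, n_grid)
    return float(np.max(rho(v)))
```

For example, with \(\underline{\sigma }^2=1\) and \(\bar{\sigma }^2=2\), `maximal_expectation(lambda v: v, 1.0, 2.0)` returns 2.0 and `maximal_expectation(lambda v: -v, 1.0, 2.0)` returns -1.0.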

Theorem 4.1

Let the trend function \(S_t(x)\in \Theta _{k+1}(L)\), \(\varphi _\varepsilon =\varepsilon ^{\frac{2}{2(k+\gamma )+1}}\), and assumptions (H1)-(H3) hold. (i) When \(\gamma \in (0,1)\) and \(\varepsilon \rightarrow 0\), one has

(ii) When \(\gamma =1\) and \(\varepsilon \rightarrow 0\), one has

Proof

One can apply (1.1) to get that

For the term \(\phi _1(\varepsilon )\), by hypothesis (H1) and (3.1) in Lemma 3.1, we have

By Lemma 2.2 and hypothesis (H2), we have, for any given \(\delta >0\),

which tends to zero as \(\varepsilon \rightarrow 0\). This implies that

as \(\varepsilon \rightarrow 0\). By Taylor’s formula, we have, for any \(x\in \mathbb {R}\),

where \(\theta \in (0,1)\). For the term \(\phi _2(\varepsilon )\), by the definition of \(\varepsilon _1\), (4.4), and hypothesis (H3), it follows that for \(\varepsilon <\varepsilon _1\)

Since \(S_t(x)\in \Theta _{k+1}(L)\), we have

which tends to zero as \(\varepsilon \rightarrow 0\). Combining (4.5) with (4.6) gives that

as \(\varepsilon \rightarrow 0\). For the term \(\phi _3(\varepsilon )\), by the properties of the integral with respect to the quadratic variation process of GBm, one can obtain that for \(\varepsilon <\varepsilon _1\)

as \(\varepsilon \rightarrow 0\). For the term \(\phi _4(\varepsilon )\), applying the properties of the Itô integral with respect to GBm, one sees that for \(\varepsilon <\varepsilon _1\)

as \(\varepsilon \rightarrow 0\). Thus, by (4.3) and (4.7)–(4.9), we conclude that (4.1) holds when \(\gamma \in (0,1)\) and (4.2) holds when \(\gamma =1\), as \(\varepsilon \rightarrow 0\). This completes the proof.

Remark 4.1

If \(\underline{\sigma }=\bar{\sigma }\), the G-Brownian motion reduces to a classical Brownian motion with variance \(\bar{\sigma }^2t\) at time t. Theorem 4.1-(ii) then reduces to Remark 4.2 in Kutoyants (1994).

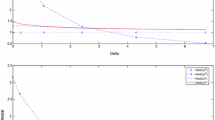

5 A Simulation Example

In this section, an example will be presented to illustrate our theory. Let T be the length of observation time interval, n be the sample size, and \(\Delta = T /n\). For simplicity, in our simulation, we consider the following SDE

where the initial value is \(X_0=x_0=0.9\), and \(B_t\) is a scalar GBm with \(B_1\sim N(\{0\}\times [1,2])\). We use the Euler scheme (see, e.g., Deng et al. 2019; Fei and Fei 2019) to approximate the SDE (5.1):

where \(t_i=i\Delta \), \(\Delta B_i=B_{t_{i+1}}-B_{t_i}\), \(\Delta \langle B\rangle _i=\langle B\rangle _{t_{i+1}}-\langle B\rangle _{t_i}\), \(i=0,1,\ldots ,n-1\). Let \(T=30\), \(n=3000\), \(\varphi _\varepsilon =0.01\), and let the kernel be \(K(u)=2(1-2|u|)\) for \(u\in [-\frac{1}{2},\frac{1}{2}]\) and \(K(u)=0\) otherwise. Furthermore, we use the direct method from Deng et al. (2019) and Fei and Fei (2019) to generate the GBm and its quadratic variation process. Let \(\{x_t,0\le t\le T\}\) be the solution of the differential equation

$$\begin{aligned} \frac{dx_t}{dt}=\sin (x_t),\quad 0\le t\le T, \end{aligned}$$

where the initial value is \(x_0=0.9\). A sample path of the process \(y_t=|\widehat{S}_t-\sin (x_t)|^2\) is shown in Fig. 1. It suggests that the estimator \(\widehat{S}_t\) performs reasonably well.
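
The experiment can be sketched in Python as follows, but several ingredients are our assumptions rather than the paper's. Equation (5.1) is taken to be \(dX_t=\sin (X_t)dt+\varepsilon \,d\langle B\rangle _t+\varepsilon \,dB_t\) (only the drift \(\sin (x_t)\) is implied by the error process \(y_t\)), with hypothetical coefficients `qv_coef` and `bm_coef` on the two noise terms. The noise level \(\varepsilon =0.01\) is hypothetical, and the GBm is simulated under one piecewise-constant volatility scenario with \(\sigma _s^2\in [1,2]\) instead of the direct method of Deng et al. (2019). We also use a finer grid (n = 30000 on [0, 30], our choice) so that each kernel window of width \(\varphi _\varepsilon =0.01\) covers several grid points.

```python
import numpy as np


def triangular_kernel(u):
    """K(u) = 2(1 - 2|u|) on [-1/2, 1/2], zero outside (the kernel of Section 5)."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 0.5, 2.0 * (1.0 - 2.0 * np.abs(u)), 0.0)


def simulate_and_estimate(eps=0.01, T=30.0, n=30000, x0=0.9, phi=0.01,
                          sigma_low2=1.0, sigma_up2=2.0, n_blocks=30,
                          qv_coef=1.0, bm_coef=1.0, seed=0):
    """Euler scheme for the ASSUMED model
        dX = sin(X) dt + eps*qv_coef d<B> + eps*bm_coef dB,
    followed by the kernel estimator on grid points with a full kernel window.
    Returns (times, S_hat, sin(x_t)), where x solves dx/dt = sin(x).
    """
    rng = np.random.default_rng(seed)
    dt = T / n
    # One volatility scenario: sigma^2 piecewise constant, drawn from [sigma_low2, sigma_up2].
    block_vals = rng.uniform(sigma_low2, sigma_up2, size=n_blocks)
    sigma2 = block_vals[np.arange(n) * n_blocks // n]
    d_qv = sigma2 * dt                                   # increments of <B>
    d_B = np.sqrt(d_qv) * rng.standard_normal(n)         # increments of B
    X = np.empty(n + 1)
    x = np.empty(n + 1)
    X[0] = x[0] = x0
    for i in range(n):
        X[i + 1] = X[i] + np.sin(X[i]) * dt + eps * (qv_coef * d_qv[i] + bm_coef * d_B[i])
        x[i + 1] = x[i] + np.sin(x[i]) * dt              # deterministic limit x_t
    dX = np.diff(X)
    # Kernel weights K(m*dt/phi)/phi on the window |m*dt| <= phi/2.
    M = int(np.floor(0.5 * phi / dt + 1e-9))
    offsets = np.arange(-M, M + 1)
    w = triangular_kernel(offsets * dt / phi) / phi
    # S_hat at t_j = sum_m w_m (X_{t_{j+m+1}} - X_{t_{j+m}}) for j with a full window.
    idx = np.arange(M, n - M)
    S_hat = sum(w[k] * dX[idx + m] for k, m in enumerate(offsets))
    times = idx * dt
    return times, S_hat, np.sin(x[idx])
```

The squared error `(S_hat - S_true) ** 2` plays the role of \(y_t\) in Fig. 1. With `eps=0.0` the estimator reproduces \(\sin (x_t)\) almost exactly, which checks the discretization; with `eps=0.01` the error reflects the stochastic term of order \(\varepsilon ^2\varphi _\varepsilon ^{-1}\).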

Data Availability

All data generated or analysed during this study are included in this article.

References

Albeverio S, Cordoni FG, Di Persio L, Pellegrini G (2019) Asymptotic expansion for some local volatility models arising in finance. Decis Econ Finance 42:527–573

Bressloff PC (2014) Stochastic processes in cell biology, vol 41. Springer, New York

Chen Z, Epstein L (2002) Ambiguity, risk and asset returns in continuous time. Econometrica 70:1403–1443

Chen Z, Wu P, Li B (2013) A strong law of large numbers for non-additive probabilities. Int J Approx Reason 54:365–377

Deng S, Fei C, Fei W, Mao X (2019) Stability equivalence between the stochastic differential delay equations driven by G-Brownian motion and the Euler-Maruyama method. Appl Math Lett 96:138–146

Denis L, Hu M, Peng S (2011) Function spaces and capacity related to a sublinear expectation: Application to G-Brownian motion paths. Potential Anal 34:139–161

Fei C, Fei W (2019) Consistency of least squares estimation to the parameter for stochastic differential equations under distribution uncertainty. Acta Math Sci 39A(6):1499–1513. arXiv:1904.12701v1

Fei C, Fei W, Deng S, Mao X (2023a) Asymptotic stability in distribution of highly nonlinear stochastic differential equations with G-Brownian motion. Qual Theor Dyn Syst 22:57

Fei C, Fei W, Mao X (2023b) A note on sufficient conditions of asymptotic stability in distribution of stochastic differential equations with G-Brownian motion. Appl Math Lett 136:108448

Fei C, Fei W, Mao X, Yan L (2022) Delay-dependent asymptotic stability of highly nonlinear stochastic differential delay equations driven by G-Brownian motion. J Franklin Inst 359(9):4366–4392

Gao F (2009) Pathwise properties and homeomorphic flows for stochastic differential equations driven by G-Brownian motion. Stoch Process Appl 119:3356–3382

Gilboa I (1987) Expected utility theory with purely subjective non-additive probabilities. J Math Econom 16:65–68

Kutoyants Y (1994) Identification of dynamical systems with small noise. Kluwer, Dordrecht

Lin L, Dong P, Song Y, Zhu L (2017) Upper expectation parametric regression. Stat Sinica 27(3):1265–1280

Lin L, Shi Y, Wang X, Yang S (2016) k-sample upper expectation linear regression-modeling, identifiability, estimation and prediction. J Stat Plan Infer 170:15–26

Mao W, Chen B, You S (2021) On the averaging principle for SDEs driven by G-Brownian motion with non-Lipschitz coefficients. Adv Differ Equ 71

Mishra MN, Prakasa Rao BLS (2011) Nonparametric estimation of trend for stochastic differential equations driven by fractional Brownian motion. Stat Inference Stoch Process 14:101–109

Peng S (1997) Backward SDE and related g-expectation. In: El Karoui N, Mazliak L (eds) Backward stochastic differential equations. Pitman Res Notes Math Ser, vol 364, pp 141–159

Peng S (2007a) G-expectation, G-Brownian motion and related stochastic calculus of Itô’s type. In: Benth FE et al (eds) Stochastic analysis and applications: the Abel Symposium 2005. Abel Symposia 2, pp 541–567

Peng S (2007b) G-Brownian motion and dynamic risk measure under volatility uncertainty. Preprint at: arXiv:0711.2834v1 [math.PR]

Peng S (2008a) Multi-dimensional G-Brownian motion and related stochastic calculus under G-expectation. Stoch Process Appl 118(12):2223–2253

Peng S (2008b) A new central limit theorem under sublinear expectations. arXiv:0803.2656v1 [math.PR]

Peng S (2009) Survey on normal distributions, central limit theorem, Brownian motion and the related stochastic calculus under sublinear expectations. Sci China Ser A 52(7):1391–1411

Peng S (2019) Nonlinear expectations and stochastic calculus under uncertainty. Springer, Berlin, Heidelberg

Peng S, Zhou Q (2020) A hypothesis-testing perspective on the G-normal distribution theory. Stat Probab Lett 156:108623

Prakasa Rao BLS (2020) Nonparametric estimation of trend for stochastic differential equations driven by sub-fractional Brownian motion. Random Oper Stoch Equ 28:113–122

Song Y (2020) Normal approximation by Stein’s method under sublinear expectations. Stoch Process Appl 130:2838–2850

Sun C, Ji S (2017) The least squares estimator of random variables under sublinear expectations. J Math Anal Appl 906–923

Takahashi A, Yoshida N (2004) An asymptotic expansion scheme for optimal investment problems. Stat Inference Stoch Process 7:153–188

Wakker P (2001) Testing and characterizing properties of nonadditive measures through violations of the sure-thing principle. Econometrica 69:1039–1059

Wasserman L, Kadane J (1990) Bayes’s theorem for Choquet capacities. Ann Statist 18:1328–1339

Wei W, Zhao M, Luo P (2018) Asymptotic estimates for the solution of stochastic differential equations driven by G-Brownian motion. Appl Anal 97:2025–2036

Zhang X, Yi H, Shu H (2019) Nonparametric estimation of the trend for stochastic differential equations driven by small \(\alpha \)-stable noises. Stat Probab Lett 151:8–16

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 12101004, 62273003), the Natural Science Foundation of Universities in Anhui Province (2022AH050993), and the Startup Foundation for Introducing Talent of Anhui Polytechnic University (Nos. 2020YQQ064, 2021YQQ058).

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

About this article

Cite this article

Zhang, X., Deng, S. & Fei, W. Nonparametric Estimation of Trend for Stochastic Processes Driven by G-Brownian Motion with Small Noise. Methodol Comput Appl Probab 25, 65 (2023). https://doi.org/10.1007/s11009-023-10045-y

DOI: https://doi.org/10.1007/s11009-023-10045-y