Abstract

The Naive Bayes approximation (NBA) and associated classifier are widely used and offer robust performance across a large spectrum of problem domains. As it depends on a very strong assumption—independence among features—this has been somewhat puzzling. Various hypotheses have been put forward to explain its success and many generalizations have been proposed. In this paper we propose a set of “local” error measures—associated with the likelihood functions for subsets of attributes and for each class—and show explicitly how these local errors combine to give a “global” error associated to the full attribute set. By so doing we formulate a framework within which the phenomenon of error cancelation, or augmentation, can be quantified and its impact on classifier performance estimated and predicted a priori. These diagnostics allow us to develop a deeper and more quantitative understanding of why the NBA is so robust and under what circumstances one expects it to break down. We show how these diagnostics can be used to select which features to combine and use them in a simple generalization of the NBA, applying the resulting classifier to a set of real world data sets.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Although superseded by more sophisticated classifiers, the Naive Bayes classifier (NBC) is still widely used in a multitude of different areas of application [see, for example, Wang et al. (2007), Turhan and Bener (2009), Wei et al. (2011), Broos et al. (2011), Panda and Patra (2007), Farid et al. (2014), Bermejo et al. (2014), Ng et al. (2014), Mohamad et al. (2014), as a small, but representative sample]. It has been shown to be remarkably robust, both in its range of applicability and its performance. Although it is well known that it is a high-bias/low-variance classifier, and therefore might be expected to have relatively better performance on small data sets, the robustness of its performance across so many problems with widely differing characteristics remains somewhat of a puzzle, especially given its strong independence assumption on the features, and has led to several papers trying to understand and explain why it seems to be “unreasonably” successful.

There have also been many papers associated with different proposals for generalizing the NBC so as to circumvent its strong independence assumption. However, these generalizations are invariably more complicated to implement and much more resource intensive than the NBC. It is therefore important to develop diagnostics with which, for a given problem, one can gauge if and when the NBC will lead to significant error relative to a more sophisticated classifier that tries to account for potential feature correlations, while at the same time developing a deeper intuitive and theoretical understanding of how and why the NBC is so robust. In this paper, we analyze under what circumstances the NBA (Naive Bayes approximation) and associated NBC can be expected to be suboptimal and develop general diagnostics with which a problem can be examined a priori to ascertain if the NBA is adequate, or if a more sophisticated generalization is required. We then use those diagnostics to determine which features should be combined and from that construct a simple generalization of the NBC.

As far as explaining the robust performance of the NBC, Domingos and Pazzani (1996) have argued that it is largely due to its being applied to classification problems, where errors are counted with respect to whether the classification was correct (yes/no) for a given prediction, not whether the corresponding probability estimate was accurate. Further support for this type of hypothesis comes from the work of Frank et al. (2000), who showed that the performance of the NBA is substantially worse when applied to regression type problems. Pure classification accuracy, however, is only a single, global measure of classifier performance and there are others that can be more appropriate. For instance, it has been demonstrated in Ling et al. (2003) that the area under the ROC curve is a more discriminating measure than pure classification accuracy. Often of more interest are relative risk scores. This is especially the case in problems such as healthcare costs where a very small (\({\sim }1\%\)), but high risk group, can generate a large fraction of healthcare costs (\({\sim }30\%\)). In this circumstance it is very unlikely that a classifier is sufficiently accurate that it would place anyone in such a small group. Rather, what is of interest is the relative degree of risk from one patient to another (Stephens et al. 2005). This reasoning, that beyond pure classification the NBA can be more rigorously judged, is circumstantially supported by the fact that the NBA can produce poor probability estimates (Bennett 2000; Monti and Cooper 1999), though in Lowd and Domingos (2005) it was shown that NB models can be as effective as more general Bayesian networks for general probability estimation tasks.

However, even in the case of classification, as noted by Zhang (Zhang and Ling 2003; Zhang 2004), Domingos and Pazzani’s argument does not explain why it is not possible to have situations where the inaccurate probability estimates flip the classification. Zhang has proposed that it is not just the presence of dependencies between attributes that affects performance, it is how they distribute between different classes that plays a crucial role in the performance of the NBC, arguing that the effect of dependencies can partially cancel between classes and, further, that dependencies can potentially cancel between different subsets of feature values. The question then is, if this is possible, under what conditions will it occur and can we quantify it and therefore predict a priori when the NBA might be inadequate?

In this paper, we will investigate the relationship between attribute dependence and error by analysing a set of model problems, providing tools to investigate the set of attribute dependencies and showing how they affect both probability estimates and classification accuracy. We will thereby provide statistical diagnostics that allow both insight and predictive capacity to estimate when the NBA will breakdown and how to improve it. As accounting for attribute correlation is a question of model bias not model variance, as in Rish (2001), we will consider first a set of “infinite sample” artificial probability distributions, chosen in order to be able to tune the degree of correlation between different attributes while ignoring finite sampling effects. Indeed, it is precisely the existence of sampling error in real world problems that is associated with the superior performance of the NBA in spite of attribute dependencies (Friedman 1997).

The second major question revolves around how to improve the NBA and NBC. There have been many generalizations (Friedman et al. 1997; Keogh and Pazzani 1999; Kohavi 1996; Kononenko 1991; Langley 1993; Langley and Sage 1994; Pazzani 1996; Sahami 1996; Singh and Provan 1996; Webb and Pazzani 1998; Webb 2001; Webb et al. 2005, 2012; Xie et al. 2002; Zheng et al. 1999; Zheng and Webb 2000; Liangxiao et al. 2009) of the NBA. Some, such as Lazy Bayesian Rules (Zheng and Webb 2000), Super Parent TAN (Keogh and Pazzani 1999) and Hidden Naive Bayes (Liangxiao et al. 2009), have been shown to have very good performance, with significant improvements over the NBA but at substantial computational cost. A good overview of many of these algorithms can be found in Zheng and Webb (2000), Liangxiao et al. (2009). As it is not the primary purpose of this paper to introduce a new, competing algorithm, we will restrict ourselves to some general comments: All these generalizations seek to discover sets of attribute values that have dependencies such that they should either be combined together, or with the class variable. In general, they are such that the improvement associated with combining a set of features is judged a posteriori through the relative performance of the algorithm with or without that combination. As there are a combinatorially large number of possible attribute value combinations that might be considered however, the process of attribute selection can be intensive, so that, generally, studies have been restricted to considering only pairs of attributes with an exhaustive search of those combinations being performed.

The effectiveness of these generalizations of the NBA is generally judged by comparing the performance of the proposed algorithm against the NBA, and, potentially, a chosen set of other algorithms, on a set of canonical test problems, more often than not taken from the UCI repository. The effectiveness of the new algorithm is then inferred globally across the set of considered problem instances. We know from the No-free lunch theorems (Wolpert 1996; Wolpert and Macready 1997) that no algorithm is better than any other across all problem instances. The question is: can we infer a priori which algorithm will perform better on a given problem instance? This is especially important if performance enhancements are dominated by only a small number of instances. It also requires detailed insight as to how and why a given generalization outperforms the NBA on some data sets and not on others. Also, as comparatively complicated, “black box” type algorithms there is no transparent theoretical underpinning with which to understand their relative performance. A by product of the development of generalizations of the NBA has been the construction of diagnostics to determine the degree of attribute dependence and therefore detect which features to potentially combine. The most used diagnostic has been that of conditional mutual information (Rish 2001; Friedman et al. 1997; Zhang and Ling 2003). However, as Rish has pointed out, this measure does not correlate well with NBA performance, arguing that a better predictor of accuracy is the loss of information that features contain about the class when assuming the NB model. What is required is a measure of attribute dependence that relates directly to the appearance of a corresponding error in the NBA and, further, how these errors combine to yield an overall error for a given feature set or classifier.

The structure of the paper is as follows: In Sect. 2 we will compare and contrast the NBA with a particular class of generalizations—the semi-Naive Bayesian classifier (Kononenko 1991). We also introduce the metrics that we use for comparing the relative performance of the different approximations. In Sect. 3, we construct our basic error diagnostics—\(\delta (\xi |C)\), the difference between the likelihood functions for a given class, C, and for a given feature combination, \(\xi \), relative to the NBA to the likelihood function. In Sect. 4 we introduce the set of 12 two-feature probability distributions that we use to test our error diagnostics. In Sect. 5 using our 12 distributions we show that our error metrics are natural measures of when features should be combined, showing explicitly in the case of two features how the NBA can be valid even in the presence of strong attribute dependence due to cancelations between errors in the likelihoods of the different classes. In Sect. 6 we extend our analysis to three features to show how the GBA is sensitive to the particular factorization of the likelihoods and to the fact that different classes may have different factorizations. In Sect. 7 we show how our error diagnostics can determine the most appropriate factorizations in this three feature case and how they relate to and predict classifier performance. In Sect. 8 we extend our analysis to four, six and eight features, generalizing the previous analysis and, crucially, showing how errors can cancel between different sets of features for the same classifier. In Sect. 9 we apply the formalism to a set of UCI data sets, showing how our diagnostics can be used to determine which features to combine and constructing a simple generalization of the NBC and comparing its performance to the NBC. Finally, in Sect. 10 we summarize and draw our conclusions.

2 Comparing classifiers

2.1 Naive Bayes approximation

In trying to understand under what circumstances we might expect the NBA and the NBC to break down we will start with Bayes’ theorem

for a class C and a vector of N features \(\mathbf {X}=(X_1,X_2,\ldots ,X_N)\), where P(C) is the prior probability, \(P(\mathbf X|C)\) the likelihood function and \(P(C|\mathbf X)\) the posterior probability. Unfortunately, when \(\mathbf X\) is of high dimension, there are too many different probabilities, \(\hat{P}(C|\mathbf X)\), to estimate. Related to this is the fact that \(N_{C\mathbf X}\), the number of elements in \(\mathbf X\) and C, is generally so small that statistical estimates, \({\hat{P}}(C|\mathbf X)\) of \(P(C|\mathbf X)\) are unreliable due to large sampling errors.Footnote 1 Although \({\hat{P}}(\mathbf X|C)\) suffers from the same problem, if there is statistical independence of the \(X_i\) in the class C, then \(P(\mathbf X|C)=\prod _{i=1}^NP(X_i|C)\), where \(P(X_i|C)\) is the marginal conditional probability. Generally, this is not the case. However, one may make the assumption that it is approximately true, taking \(P_{\textit{NB}}(\mathbf X|C)= \prod _{i=1}^NP(X_i|C)\) to find

where \({\bar{C}}\) is the complement of C. Of course, if we were to calculate \(P(C|\mathbf X)\) using (2.2) we would have to also estimate \(P(\mathbf X|{\bar{C}})\), which would present the same problems as estimating \(P(\mathbf X|C)\). The same naive approximation can be used in this case too, writing \(P(\mathbf X|{\bar{C}})=\prod _{i=1}^NP(X_i|{\bar{C}})\) to find

Rather than constructing \(P(C|\mathbf X)\) directly, from (2.1), usually a score function, \(S(\mathbf X)\), that is a monotonic function of \(P(C|\mathbf X)\) itself, is constructed, by considering the odds ratio of the class C and another class, usually its complement, \({\bar{C}}\)

The NBA to this score function, \(S_{\textit{NB}}(\mathbf X)\), is given by

As a simple sum this form of the approximation is transparent. Another advantage of this form is that it is not neccessary to have in hand \(P(\mathbf X)\) as the idea of such a score function is just to discriminate between the classes C and \({\bar{C}}\). This is a different task from estimating the probability \(P(C|\mathbf X)\) directly. As a classifier, (2.4) is such that if \(S_{\textit{NB}}(\mathbf X)>0\) then the instance defined by \(\mathbf X\) is assigned to the class C and if \(S_{\textit{NB}}(\mathbf X)<0\) to the complement \({\bar{C}}\).

2.2 Generalized Naive Bayes approximation

The NBA and NBC are based on a maximal factorization of the likelihood function \(P(\mathbf X|C)\). Generalizations of the NBA have been associated with introducing dependencies between the features and seeking an alternative factorization of the likelihood functions. In order to have a concrete theoretical framework in which to examine the validity of the NBA, the framework of Bayesian networks (Friedman et al. 1997) is particularly appropriate.Footnote 2 Some generalizations, such as Lazy Bayesian Rules (Zheng and Webb 2000) and Super Parent TAN (Keogh and Pazzani 1999), consider factorizations of the form \(\prod _{i=1}^NP(X_i|C, \xi )\), where \(\xi \) represents some subset of attribute values. In the case of TAN or SP-TAN, \(\xi \) is restricted to be one other variable. Other generalizations, such as the semi-Naive Bayesian classifier (Kononenko 1991), consider factorizations of the form \(\prod _{i=1}^mP(\xi |C)\). Both types of generalization consider attribute dependencies. Here, we will focus on the semi-Naive Bayesian classifier.

To define generalizations of the NBA and NBC associated with alternative factorizations we will first introduce a “schema” based notation for marginal probabilities familiar from genetic algorithms (Holland 1975; Poli and Stephens 2014), where for any feature vector, \(\mathbf X = \{X_1,X_2,\ldots ,X_N\}\), a marginal probability \(P(X_{i_1}X_{i_2}\ldots X_{i_m}|C)\) can be written \(P(*^{i_1-1}X_{i_1}*^{i_2-i_1}X_{i_2}\ldots X_{i_m}|C)\), where \(*^n\) signifies \(*\) repeated n times and an \(*\) in the ith position means that \(X_i\) has been marginalized. The order of the schema is just the number of non-marginalized variables. The NBA uses only order-one schemata, i.e., order-one marginals. We can then denote an arbitrary m-feature value combination by a schema \(\xi =(\xi _{i_1},\xi _{i_2},\ldots ,\xi _{i_m})\), of order \(m<N\).

With this notation we can define the GBA, and a Generalized Bayes Classifier (GBC), via generalizations of Eqs. (2.2) and (2.4) that correspond to alternative factorizations of the likelihood functions. Unlike the NBA the GBA is not unique. For N features there are \(B_N\) partitions, where \(B_N\) is the Bell number. Worse, there is this number for every feature value set and there are \(\mathcal R=\prod _{i=1}^n a(i)\) feature value combinations, where a(i) is the cardinality of the ith feature.

Of course, as estimates of probabilities or as classifiers the question is: out of all the possible factorizations which one is optimal and how do we define optimal? For instance, for the case of three variables, out of the 4 possible factorizations, which gives the best approximation to \(P(X_1X_2X_3|C)\)? Much of this paper will be concerned with the question of how to determine better factorizations using diagnostics. We will denote the GBA associated with a particular factorization by a set of \(N_\xi \) schema \(\xi ^{(i)}=\cup _{\alpha =1}^{N_\xi }\xi ^\alpha \). Note that any factorization must correspond to a partition of the set of N feature values. So, for any given feature vector, \(\mathbf X=(X_1,X_2,\ldots ,X_N)\), every \(X_i\) must be a member of one and only one schema \(\xi ^\alpha \in \xi ^{(i)}\). Thus, we have the GBA for the likelihood functions

where \(N^C_{\xi ^{(i)}}\) is the number of independent marginals used in the GBA for the likelihood function for the class C, and we take C to abstractly represent either the class C of interest or its complement \({\bar{C}}\). In the NBA, \(N^C_{\xi ^{(i)}}=N^{{\bar{C}}}_{\xi ^{(j)}}=N\). Note that at this level of generality we make no a priori assumption that the optimal factorization of the likelihood function of C is the same as that of \({\bar{C}}\), although it is usually assumed that the factorization of the likelihood functions for C and \({\bar{C}}\) are the same. We note again that our factorizations are a particular subset of all possible factorizations in that we will not consider factorizations where a particular attribute value may occur in more than one schema.

For the posterior probability we have

and finally, for the score function

where we define

as the contributions to the overall score from the likelihood of C and \({\bar{C}}\) respectively.

If we make the simplifying assumption that the optimal factorization of the likelihood functions for C and \({\bar{C}}\) are the same then (2.7) simplifies to

which is a natural generalization of the score function in the NBA. The GBC is then such that a feature vector \(\mathbf X\) belongs to the class C if \(S_{\textit{GB}}(\mathbf X)>0\) and to \({\bar{C}}\) if \(S_{\textit{GB}}(\mathbf X)<0\). Thus, the NBA and GBA will lead to the same classification if and only if \(sign(S_{\textit{GB}}(\mathbf X))= sign(S_{\textit{NB}}(\mathbf X)), \forall \mathbf X\).

2.3 The difference

Any difference between the NBA and the GBA is due to correlations between the features \(X_i\) in the likelihoods for C and \({\bar{C}}\). In terms of model bias, the most appropriate factorization of the likelihood functions should be that which most respects the existence of such correlations. For a given factorization we can determine the differences between the GBA and the NBA from Eqs. (2.5), (2.6) and (2.7). For the likelihoods we have

where \(\mathcal{C}=C\) or \({\bar{C}}\) and for which we do not assume the same factorization. An important property of the (2.10) is that they satisfy the equations

where the sum is over all feature vectors \(\mathbf X=(X_1,X_2,\ldots ,X_N)=(\xi _1,\xi _2,\ldots ,\xi _{N^C_{\xi ^{(i)}}})\), the decompositions over individual features or over schemata simply corresponding to two distinct partitions. The result (2.11) is a consequence of the conservation of probability as \(\varDelta _{P_{\textit{GB}}}\) is composed of the difference between two probabilities, each of which is normalized. Thus, the differences between the GBA and NBA cannot all be of the same sign. As we will see, this plays an important role in understanding under what circumstances the NBA is a good one.

For the posterior probability we have

and, finally, for the score function,

where \(P_{\textit{NB}}(\xi ^\alpha |C)=\prod _{i=1}^m P(\xi ^\alpha _i|C)\), m being the number of features, \(\xi ^\alpha _i\), in the feature schema \(\xi ^\alpha \). In the case of identical factorizations for C and \({\bar{C}}\)

2.4 Performance metrics

In determining the relative merits of the GBA versus the NBA we will consider several performance metrics by which to judge them. In this paper, as emphasized, the idea is to understand the errors introduced by the intrinsic biases arising from the different approximations. Hence, in determining the impact of correlations on the validity of the NBA, and the improvement of the GBA, this can and, we would argue, should be considered first in an infinite sample setting, where sampling errors can be ignored. Hence, we will first consider various definite probability distributions in a setting with a small number of features. The advantage is that we then have in hand the exact probability distributions and therefore can measure errors in both the NBA and GBA relative to the exact distribution \(P_e(C|\mathbf X)\). Explicitly, we will consider distributions defined by the likelihood functions \(P_e(\mathbf X|C)\) and \(P_e(\mathbf X|{\bar{C}})\), where \(\mathbf X\) is a N-dimensional vector representing N binary features \(X_i = 0,\ 1\). These likelihood functions will be specified in order to model different degrees of correlation between the features to better understand how the NBA and GBA perform as a function of these correlations.

As we have in hand the exact distributions, we will consider directly as a performance measure the error in the posterior probability distribution \(\varDelta _{i}(C|\mathbf X)=P_e(C|\mathbf X)-P_i(C|\mathbf X)\), where i denotes the corresponding approximation—NBA or GBA. Secondly, we will consider the classification accuracy by considering the sensitivity \(\mathcal{S}_i\) of each classifer, \(S_i(\mathbf X)\), which will correspond to the number, or fraction, of feature combinations classified correctly.

Thirdly, we will consider the relative ranking of the full set of feature combinations in terms of the relevant score function \(\{S_i(1), S_i(2),\ldots ,S_i(2^n)\}\), where \(S_i(1)\ge S_i(2)\ge \ldots \ge S_i(2^n)\). We will then consider the distance

where \(r_i(m)\) is the relative rank of the feature combination m of the classifier \(S_i\) and similarly \(r_j(m)\) for the classifier \(S_j(m)\). Here, if \(i=e\), corresponding to the exact classifier, then Eq. (2.15) measures how faithful the ranking of the classifier is relative to the exact one. Finally, in the case of the real world data sets we will consider classification error and AUC as relevant metrics.

3 Coping with correlations

The differences between the NBA and GBA in Sect. 2.3 depend on the particular factorization chosen and this factorization is composed of components—schemata. In determining the relative merits of the NBA and GBA there are then two basic questions: Firstly, what criteria should be used to determine those features that should be considered together rather than independently? Secondly, once we have determined which features to combine, we must ask how the NBA should then be modified. We will first consider how to identify feature sets that should be considered together starting with the simple example of only two features.

3.1 Two features

Taking two features, \(X_1\) and \(X_2\), treating them as independent potentially leads to errors in \(P(\mathbf X|C)\), \(P(\mathbf X|{\bar{C}})\) and \(P(\mathbf X)\), and therefore in \(P(C|\mathbf X)\) and \(S(\mathbf X)\). Denoting these errors as \(\delta (X_1X_2|C)\), \(\delta (X_1X_2|{\bar{C}})\) and \(\delta (X_1X_2)\) we have

where \(\mathcal{C} = C\), or \({\bar{C}}\), that satisfy

which is a consequence of the conservation of probability, as \(\sum _{X_1X_2}P(X_1X_2|C)=\sum _{X_1X_2}P_{\textit{NB}}(X_1X_2|C)=1\). For the simple case of binary features we have \(\delta (X_1X_2|C)=\delta ({\bar{X}}_1{\bar{X}}_2|C)=-\delta (X_1{\bar{X}}_2|C)=-\delta (\bar{X}_1 X_2|C)\), where \({\bar{X}}_i\) is the bit complement of \(X_i\), implying that there are only two independent errors and that they have the same magnitude and opposite sign. We can also normalize any of these error terms, \(\delta \rightarrow \delta '\); e.g. by dividing by the NBA.

These errors imply a corresponding error in the posterior probability

where, in passing to Eq. (3.4), we have used the definitions of the errors (3.1) and (3.2) and (2.3). The quantities \(\delta (X_1X_2|C)\) and \(\delta (X_1X_2|{\bar{C}})\) offer a complete description of the errors of the NBA for the case of two variables. Interestingly, we can see that, as \(\delta (C|X_1X_2)\) involves the difference of the errors in the two likelihood functions, it is possible to have large errors in these without this necessarily leading to a significant error in the posterior probability itself. We can also see that, all else being equal, the error will be greater when the error in the likelihoods for the class and its complement are of opposite sign. In other words, that the variables are positively correlated in \(C/{{\bar{C}}}\) and negatively correlated in \({{\bar{C}}}/C\).

With regard to the score function (2.4) there are two potential sources of error—in \(P(X_1X_2|C)\) and in \(P(X_1X_2|{\bar{C}})\). The exact score is

Given that in Sect. 2.2 we indicated that the optimal factorizations of the likelihood functions for C and \({\bar{C}}\) may be distinct, it is convenient to introduce separate measures for the errors in the corresponding score functions

where, again, \(\mathcal{C} = C\) or \({\bar{C}}\). A consequence of (3.3) is that \(\delta _s(X_1X_2|C)\) cannot have the same sign for all \(X_1X_2\) thereby providing the basis by which deviations from the NBA can cancel between different feature combinations.

Comparing with Eq. (2.4) the difference between the GBA and NBA that accrues from correlation between \(X_1\) and \(X_2\) in C or \({\bar{C}}\) is given by

3.2 General case: more than two features

For the case of two features there is only one possible factorization. For more than two features, however, there are two related problems to be confronted: how many feature combinations (schemata) appear in a given factorization and which features appear in a given feature combination (schema)?

In terms of the error between the NBA for a given feature combination—schema \(\xi \)—the two-feature analysis generalizes quiet readily. Using our schema notation, errors (3.1) and (3.2) have simple generalizations

where m is the number of features in the schema \(\xi \). As with Eq. (3.3) we have

For the error in the posterior probability the generalization is

Finally, for the score function, in distinction to the two feature case, where the factorizations of \(P(X_1X_2|C)\) and \(P(X_1X_2|{\bar{C}})\) are, by definition, the same, here it is appropriate to consider separately the errors in the contributions to the score from the likelihood functions for C and \({\bar{C}}\). From Eq. (2.8) we can then define

Assuming the same schema appears in the factorizations of \(P(\mathbf X|\mathcal C)\) the error is

As with the two feature case, the constraints (3.10) imply that not all the errors \(\delta _s(\xi |C)\) and \(\delta _s(\xi |{\bar{C}})\) can be of the same sign.

3.3 General case: more than two schemata

The preceding error functions are useful for determining the impact of attribute dependence in a subset, \(\xi \subset \mathbf X\), of feature values. However, when there are multiple sets, it is not clear how errors may combine to influence the total difference between the NBA and GBA as illustrated by Eqs. (2.10), (2.12) and (2.13). As might have been anticipated, the difference between the likelihood functions and the posterior probabilities for the GBA and NBA do not seem to be simple functions of the errors \(\delta (\xi |C)\). However, for the score function we may write

In (3.14) we can see how the constraints (3.10) may play a role in error cancelation. In the error associated with C, as for each schema, \(\xi ^\alpha \), there is at least one particular feature combination, \(\xi ^\alpha _{i_1i_2\ldots i_m}\), with an error \(\delta _s(\xi ^\alpha |C)\) that has a different sign to the others, then some degree of error cancelation is inevitable between different schemata when considering different feature combinations for those schemata.

When the factorizations are the same, Eq. (3.14) simplifies even further,

Equations (3.14) and (2.13) hold an important lesson: Just as for a single schema one can have cancelations in likelihood errors between C and \({\bar{C}}\), i.e., intra-schema cancelations, so, one can have cancelations between different schemata, i.e., inter-schemata cancelations. What is more, the likelihood errors for the components of the factorizations of C and \({\bar{C}}\) are what determine the overall error of the NBA.

4 What difference does it make?

The impact of correlations on the validity of the NBA should be understood from the point of view of model bias. As argued, this should be considered first in an infinite sample setting. In Appendix A we introduce a set of 12 different two-feature probability distributions with binary feature values and also the parity function. The distributions are uniquely characterized by specifying values for the likelihoods \(P(X_1X_2|C)\) and \(P(X_1X_2|{\bar{C}})\) for \(X_i=0,1\) for each distribution.

These probability distributions have been constructed to exhibit a diversity of different correlation structures that can occur. For instance, distributions 1-3 all use the same set of probabilities for the likelihoods for C and \({\bar{C}}\) and differ only in how the likelihoods for \({\bar{C}}\) are assigned to the different feature value combinations. All three distributions exhibit strong correlations between the features. How these correlations affect the validity of the NBA, however, is quite distinct. Distribution 4 is chosen as it exhibits only weak correlations between the features in both likelihoods. Distributions 5-7 show moderate correlations but with different characteristics. For example, distributions 6 and 7 have correlations in the likelihoods that are all of equal magnitude, differing only in the sign between C and \({\bar{C}}\). Distribution 8 shows strong correlations in the likelihood for C, but weak correlations for \({\bar{C}}\). Distribution 9 is the inverse of distribution 7, with C and \({\bar{C}}\) interchanged. Distribution 10 is the analog to distribution 9 as distribution 2 is to distribution 1, i.e., the likelihoods of C are the same but the likelihoods for \({\bar{C}}\) have been permuted. Finally, distributions 11 and 12 are two more that exhibit strong correlations in all likelihood functions but differ in how the correlations are distributed among the features. In all these cases the class probability was taken to be \(P(C) = 0.6\). We add in as distribution 0 the well known parity function, where \(P(C|X_1X_2) = 0,\ 1\) according to if \(X_1+X_2\) is even or odd. This serves as a test case where correlations are strongest.

We will consider the errors at two levels: Firstly, in estimating the \(P(C|X_1X_2)\); and, secondly, as a classifier, where we consider the difference between the exact classifier \(S_e(\mathbf X)=\ln (P(C|X_1X_2)/P({\bar{C}}|X_1X_2))+\ln (P(C)/P({\bar{C}}))\) and the NBC, Eq. (2.4). We will consider the percentage errors between the exact classifiers and posterior probability and their NBA counterparts, as well as the distance function (2.15). In Table 9 we see the results of the calculation for the exact posterior probability for the twelve probability distributions and the parity function, the NBA to the posterior probability and the percentage difference to the exact expression. There are four feature combinations, \(X_1=0,\ 1\), \(X_2=0,\ 1\) for each class \(C=1\), \({\bar{C}} = 0\) and vice versa. Also in that table are the exact scores for each classifier and its NBA and the percentage difference between them.

So what can we glean from these results? Clearly, the performance of the NBA is very mixed over the different probability distributions, with mean absolute errors relative to the exact posterior probabilities over a given distribution of between 10 and 100% and, in the case of score differences, errors of between 10 and 5000%, and infinite in the case of the parity function, where the NBA gives zero score. In the case of estimating posterior probabilities the distributions where the NB error is greatest are 11, 3, 1, 8 and 10 and the least in 4, 7, 12, 9 and 2 and, of course, the parity function. What are distinguishing factors of better/worse performance? In Table 9 we also see the errors in the likelihood functions, (3.1), and the errors in the score functions, (3.6). Note that errors in the likelihood functions themselves are not necessarily good indicators of errors in the posterior probabilities or classification errors. Rather, what is of most importance is the relative sign of the errors for C and \({\bar{C}}\). The distributions with the largest errors in the posterior probabilities or score—0, 11, 3, 1, 8 and 10—all have sign differences between \(\delta (X_1X_2|C)\) and \(\delta (X_1X_2|{\bar{C}})\) for every value of \(X_1\) and \(X_2\). On the other hand, four of the five distributions with least error—2, 7, 9 and 12—do not have any sign differences between \(\delta (X_1X_2|C)\) and \(\delta (X_1X_2|{\bar{C}})\) for any value of \(X_1\) and \(X_2\). The low error distribution, 4, has sign differences, but in this case the magnitude of the errors is very low \(\approx 10^{-2}\).

5 Diagnostics for when the NBA is valid

When considering the validity of the NBA we have to ask: The NBA of what? and, also, With respect to what benchmark? To answer the NBA of what—two important quantities to calculate are posterior probabilities and classifier scores. For benchmarking we cannot benchmark to the exact answer in real world problems, so we must benchmark to another algorithm. Here, the benchmarking will be to the GBA. Generically, the validation of an algorithm, comes from a performance metric on a set of test problems, i.e., the choice of algorithm is determined a posteriori, after testing the algorithm on the set of problems. As emphasised, an important goal here is to infer a priori whether a GBA type algorithm will be better than the NBA. We have seen that the differences between the two approximations stem from two different factorisations of the likelihood functions for the class of interest and its complement. We have also seen that we can infer potential errors at the level of the individual factors—schemata—which are combinations of features. This provides a huge simplification as it allows one to infer which approximation will be better on a given problem before applying the full algorithm.

5.1 Diagnostics for when to combine features

So, what is a good diagnostic to determine the relative accuracy of the approximations? In the case of two features we have deduced that significant errors in the likelihood functions are a necessary, but not sufficient, condition in order to have significant errors in the estimation of posterior probabilities or in classification performance. With this in mind, we propose (3.1), or the associated functions, (3.6), as direct measures of when it is necessary to combine the features \(X_1X_2\) in the context of a given likelihood. Specifically, we introduce

or their unnormalized equivalents, as measures of when to potentially combine variables in the likelihoods of C and \({\bar{C}}\). We can then ask to what degree significant errors in these functions lead to significant errors in the distinct performance measures. However, as the difference between the GBA and NBA depends on the relative signs of the errors in the likelihood functions we propose as further diagnostics

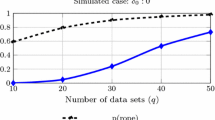

Thus, if \(\varDelta (X_1X_2)\) or \(\varDelta _s(X_1X_2)\) is large, then the NBA will be expected to give relatively poor performance for predicting, say, \(P(C|X_1X_2)\). In Fig. 1 we see a graph of the error in \(P(C|X_1X_2)\) as a function of \(\varDelta (X_1X_2)\) for the twelve probability distributions introduced in Sect. 4. We have differentiated in the graph the distinct distributions, wherein, from the analysis of Sect. 4, we can distinguish the 5 distributions with greatest error, the 5 with least error and the two intermediate—“neutral”—distributions. We see that significant errors in the likelihood functions for C or \({\bar{C}}\) separately are not sufficient to predict errors in the corresponding posterior distributions. Of the five distributions with smallest errors—2, 4, 7, 9 and 12—only one, 4, has errors in the likelihoods which are small in magnitude. The others are associated with distributions where the errors in the individual likelihoods are large, but of the same sign for C and \({\bar{C}}\), thus leading to a partial cancelation between the two in their contributions to the posterior distributions. In distinction, the distributions with the greatest error in \(P(C|X_1X_2)\) have large likelihood errors, but of opposite sign between C and \({\bar{C}}\), thus leading to a reinforcement of the individual errors. As can be seen, the correlation is impressive—coefficient of correlation 0.90. The analogous coefficients of correlation for \(\varDelta _C\) and \(\varDelta _{{\bar{C}}}\) are 0.77 and \(-0.59\) respectively. Thus, we see that error cancelation between the different likelihoods makes \(\varDelta (X_1X_2)\) a better indicator of performance difference between the NBA and GBA for calculating posterior probabilities than \(\varDelta _C\) and \(\varDelta _{{\bar{C}}}\), though the latter also show substantial correlation.

In the case of more than two variables we propose equations (3.8) as diagnostics for when the error in the likelihood function is sufficient to warrant using the GNB approximation for that schema. As with the case of two variables however, significant errors in the likelihood functions are not a sufficient condition for significant errors in the posterior distribution or in classification accuracy. Hence, one may be tempted to take analogs of (5.3) and (5.4), with \(X_1X_2\) replaced by an arbitrary schema \(\xi \), as a natural diagnostic for when features should be combined into a schema of more than two variables. Going beyond the case of two features however, we must confront the possibility that the optimal factorization of the likelihoods for C and \({\bar{C}}\) may be different. In this case, we propose as diagnostics

or their unnormalized equivalents. So, we have a conundrum: we can identify measures for when the likelihood functions, or score contributions, for a given schema should not be factorized, but this is not sufficient to guarantee significant errors in the posterior probabilities, or in classification performance. On the other hand, we can identify measures, Eqs. (5.3), (5.4) that directly speak to errors in these latter quantities, but that are associated with a symmetric, not necessarily optimal, factorization of the likelihoods \(P(\mathbf X|C)\) and \(P(\mathbf X|C)\).

When the likelihood factorizations are not symmetric, the above discussion indicates that the validity of the NBA is a “global” as opposed to a “local” question; i.e., for a non-symmetric factorization, the validity should be adjudged after calculating the contribution and corresponding errors of all factors together. On the other hand, for a symmetric factorization, the evaluation of errors can be broken down into a set of separate components, and this is how the generalizations of the NBA discussed in the introduction function. We will consider these two cases in more depth in the next Section, beginning with the symmetric case.

First, however, rather than an analysis for every feature variable combination individually, as in real world problems there may be many of them, we are likely to be more interested in the validity of the NBA when averaged over them, and, as \(\varDelta (X_1X_2)\) can change sign from one set of feature values to another, as a measure of the validity of the NBA over the whole set of possible feature values we take

In Fig. 2 we see a graph of the average absolute error in the posterior probability versus the average value of \(D_2\) over the feature value combinations \(00,\ 01,\ 10,\ 11\), and the average value of \(\delta (X_1X_2|C)/P_{\textit{NB}}(X_1X_2|C)\) over those combinations for each of the 12 probability distributions seen in Appendix A. We clearly see the high degree of correlation for \(\varDelta \), with correlation coefficient of 0.97 (the corresponding correlation coefficient using \(\delta \) is 0.43), thus demonstrating once again, at least at this simple level, its value as a predictor of when the NBA will break down. This also indicates the importance of considering \({\bar{C}}\) in understanding the effect of the NBA on the calculation of posterior probabilities.

Besides the impact of the NBA on the calculation of posterior probabilities there is the question of how it impacts classification accuracy. We could use Eq. (5.3) as a diagnostic. However, we can also use (5.4), which shows a high degree of correlation with (5.3). In the case of only two features, if we take the exact score as our target then Eq. (5.4) is somewhat tautological. The correlation between a pure classification performance measure, such as sensitivity, and a diagnostic such as (5.4) is weaker than for that of posterior probability. Although the relation shows a clear tendency there is also a high degree of dispersion. The reason for the high variance is that a misclassification is not dependent on the difference in magnitude between \(S(X_1X_2)\) and \(S_{\textit{NB}}(X_1X_2)\) for a given \(X_1X_2\) but, rather, only on if there is a difference in sign between them. To account for this, we can introduce the diagnostic

If \(\mathcal{S}(X_1X_2)=0\) then the GBC and NBC will place the instance \(X_1X_2\) in the same class. If \(\mathcal{S}(X_1X_2)\ne 0\) on the other hand the GBC and NBC will place the instance in different classes. From this we can clearly intuit the robustness of the NBC given that it is only when the exact classifier and the NBC are in disagreement that there can be differences between them. This will preferentially occur near a score threshold, \(S^*\), generically \(S^*=0\), that marks the cutoff between one class and another. In other words, S and \(S_{\textit{NB}}\) can be substantially different and still have agreement over the assigned class. This is the logic of the argument of Domingos and Pazzani (1996) about the robustness of the NBC.

A complementary diagnostic is the ratio of the variance in the exact score to the variance in the NB score, the average value of this ratio being 429 for the 5 distributions with highest error and 29 for those with lowest error. This reflects the fact that there is much more dispersion in the exact posterior probabilities than in their NB approximations and that this dispersion is particularly associated with correlation between the features as opposed to the contributions of the individual features themselves. For distribution 4 the NBA performs very well, and we can clearly see why. The variance in the NB scores is 5.94, far larger than the other distributions, and very similar to the exact variance. This variance is the raw material on which the validity of the NBA rests. The larger this variance, and the more similar it is to the exact variance, the less likely it is that sampling errors will lead to an erroneous ranking of the NBC relative to the exact one.

6 Factorization dependence of the performance of the GBA versus the NBA

We will now concentrate on the effect of the GNB and NB approximations in terms of the corresponding classifiers \(S_{\textit{GNB}}(\mathbf X)\) and \(S_{\textit{NB}}(\mathbf X)\) rather than on the calculation of posterior probabilities. The reason for this is two-fold, first of all the vast majority of work on the NBA and the GBA is in terms of classification, and, secondly, the analysis of errors is much simpler due to their purely additive nature in the score functions. Analyzing Eq. (3.14), two fundamental observations are pertinent: Firstly, the error in the score function for a given schema \(\xi \) may be small, even though the errors in the constituent likelihood functions is large, due to a cancelation between the differences \(\delta _s(C|\xi )\) and \(\delta _s({\bar{C}}|\xi )\). Secondly, the overall difference in the GBC and the NBC may be small due to cancelations between the differences \(\delta _s(C|\xi )\) and/or \(\delta _s({\bar{C}}|\xi )\) with those, \(\delta _s({\bar{C}}|\xi ')\) and \(\delta _s({\bar{C}}|\xi ')\), of other schemata \(\xi '\).

To develop more intuition for this, we can turn to the philosophy of the two-variable case, where we posited specific distributions for the likelihood functions. Now, however, we are interested in more than two features. We will consider in this Section three-feature distributions \(P(X_1X_2X_3|C)\), before passing in later Sections to four-, six- and eight-feature distributions, all with analogous expressions for the likelihoods for \({\bar{C}}\). Of course, one can consider correlations in the exact likelihoods such that they do not possess any exact factorization at all. However, any real world problem will, at the very least, have approximate factorizations. What is more, in an algorithmic implementation of the GBA, where a vast space of possible factorizations could be searched, it is natural to concentrate on correlations between pairs of features as these will tend to be the combinations that have the biggest sample size and therefore the most statistical significance. We will therefore use the probability distributions of Appendix A, creating distributions for more than two features by concatenating the two-feature distributions we have constructed. In this way we will not consider potential correlations between more than two features, although the following analysis generalizes very simply to more than two features. Moreover, all our qualitative observations about the validity of the NBA are independent of the number of correlated features.

We will proceed by considering different possible scenarios: first, we may consider that the correlations in the underlying probablility distributions of the likelihoods for C and \({\bar{C}}\) may be symmetric, i.e., appear in the same feature combinations in the two likelihoods, or asymmetric, i.e., that the correlated feature combinations in the likelihoods for C and \({\bar{C}}\) are distinct; secondly, in applying the GBA we may choose a factorization of the likelihoods that is symmetric, i.e., the same for both, or asymmetric, i.e., distinct for both. We will observe, naturally, that the optimal factorization, in terms of our performance metrics, is that which best respect the correlations in the underlying probability distributions.

6.1 Symmetric correlations, symmetric factorizations: three features

We will begin with the case where correlations in the likelihoods for C and \({\bar{C}}\) are associated with the same features and, moreover, the factorizations of the likelihoods of C and \({\bar{C}}\) are symmetric in that the combined features in the GBA are the same for both likelihoods. In schema language, symmetric factorizations are such that the schema partitions, \(\xi ^{(i)}\), of both likelihoods are the same. We will fix the exact probability distribution to be a product

where each \(P(X_1X_2|\mathcal{C})\) can be chosen from an independent distribution; for example, from our set of twelve distributions. In this case, the likelihoods are a product of one order-two schema and one order-one schema. The exact score function/classifier is

Unlike the NBA, for the GBA there are many possible distinct factorizations. For three binary features, we denote the NBA by the schema partition \(\xi ^{(0)}=(\xi _1=X_1,\xi _2=X_2,\xi _3=X_3)\). The three possible two-schema partitions are: \(\xi ^{(1)}=(\xi _1=X_1X_2,\xi _2=X_3)\), \(\xi ^{(2)}=(\xi _1=X_1X_3,\xi _2=X_2)\) and \(\xi ^{(3)}=(\xi _1=X_2X_3,\xi _2=X_1)\). The only order-three schema \(\xi ^{(4)}=(\xi _1=X_1X_2X_3)\) corresponds to the exact probability distribution itself. As, by construction, there are no dependencies between the schemata \(\xi _1=X_1X_2\) and \(\xi _2=X_3\), the GBA, if it is based on the two schemata \(\xi _1=X_1X_2\) and \(\xi _2=X_3\), should be exact. In other words,

where the superscript e on \(P^e_{\textit{GB}}\) signifies that this particular factorization of the GBA is exact as it respects the correlation structure of the exact probability distribution. The factorizations of the GBA we will consider here are \((\xi ^{(0)},\xi ^{(0)})\), \((\xi ^{(1)},\xi ^{(1)})\), \((\xi ^{(2)},\xi ^{(2)})\) and \((\xi ^{(3)},\xi ^{(3)})\) which are all symmetric, in that the combined features are the same in the likelihoods of both C and \({\bar{C}}\).

In Table 1 we see the performance of these different factorizations relative to the exact classifier, and also to the NBA, \((\xi ^{(0)},\xi ^{(0)})\), for the cases where the correlations in the underlying probability distribution are strong, s, (we use distribution 1 of Appendix A) or weak, w, (where we use distribution 4 of Appendix A). As performance measures we consider the sensitivity of the different classifiers and the distance function, Eq. (2.15). We consider the cases ss, ww, sw and ws for the strength of correlation in the likelihoods for C and \({\bar{C}}\) respectively. Thus, ss refers to strong correlation in both likelihoods (distribution 1 for the likelihoods of C and \({\bar{C}}\)), whereas ws corresponds to a weak correlation in the likelihood for C (distribution 4) but a strong correlation in the likelihood for \({\bar{C}}\) (distribution 1) and vice versa for sw. Finally, we will consider the correlation strength distribution \(ss'\), which corresponds to distribution 2 for the likelihoods of both C and \({\bar{C}}\). The important distinction between distributions 1 and 2 is that, although both are associated with strong correlations in the likelihoods for C and \({\bar{C}}\), distribution 1 results in a reinforcement of errors between the likelihoods of C and \({\bar{C}}\), whereas distribution 2 is associated with an intra-schema cancelation.

For the case where there are strong correlations in either or both likelihood functions, we see that the GBA is better or equal to the NBA in every case, independently of the factorization. For the factorization \(\xi ^{(1)}\) for both C and \({\bar{C}}\) it is strictly better. This is understandable, as in this case the factorization captures precisely the correlation structure of the underlying exact probability distributions. For the factorizations \(\xi ^{(2)}\) and \(\xi ^{(3)}\), the performance of the GBA is equivalent to that of the NBA, because there are no correlations between the features in the pairs \(X_1X_3\) or \(X_2X_3\). For example, for \(\xi ^{(2)}\), which considers \(P(\xi _1|C)=P(X_1X_3|C)\), we have \(P(X_1X_3|C)=P(X_1|C)P(X_3|C)\), which is equivalent to the NBA. Note also that the performance of the NBA in the case of \(ss'\) is substantially better than for the distribution ss, showing how the quality of the NBA is improved when there is a cancelation effect among large errors in the likelihoods. We can also conclude that there is no performance cost relative to the NBA of choosing an inappropriate symmetric factorization, 22 or 33, but neither is there an improvement.

6.2 Asymmetric correlations, all factorizations: three features

We now consider the case where the correlations in the two likelihoods are asymmetric with

with an exact score function/classifier

Once again, there are three possible factorizations: \(\xi ^{(1)}=(\xi _1=X_1X_2,\xi _2=X_3)\), \(\xi ^{(2)}=(\xi _1=X_1X_3,\xi _2=X_2)\) and \(\xi ^{(3)}=(\xi _1=X_2X_3,\xi _2=X_1)\). However, distinct to the symmetric case, here the optimal GBA factorization is different for the two likelihoods. For \(P(X_1X_2X_3|C)\), the factorization \(\xi ^{(1)}\) will be optimal, while for \(P(X_1X_2X_3|{\bar{C}})\) the factorization \(\xi ^{(2)}\). As in the previous Section, we consider the cases ss, ww, sw, ws and \(ss'\) for the strength of correlation in the likelihoods for C and \({\bar{C}}\) respectively. We use distribution 1 for s and distribution 4 for w. For \(ss'\) we use distribution 2. The difference now is that s in ss is associated with distribution 1 for \(P(X_1X_2|C)\) and \(P(X_1X_3|{\bar{C}})\), \(P(X_1X_2|C)\) for s in sw and \(P(X_1X_3|{\bar{C}})\) for s in ws. Similarly, for w.

In Table 2 we see the performance of the different factorizations relative to the exact classifier and the NBA (factorization 00) for the cases ss, ww, sw, ws and \(ss'\). The first immediate observation is that the factorization 12, corresponding to \(\xi ^{(1)}\) for \(P(X_1X_2X_3|C)\) and \(\xi ^{(2)}\) for \(P(X_1X_2X_3|{\bar{C}})\), has optimal performance, with 100% classification accuracy and perfect ranking. In general, we see that the GBA for most factorizations is better than or equal to the NBA with respect to both metrics. For the distribution ss the GBA is strictly better or equal to the NBA for all factorizations and both performance measures. Moreover, it is strictly better in those cases, \(1*\) and \(*2\), where one of the combined feature pairs captures the underlying correlations in the probability distributions; \(1*\) capturing the strong correlation in \(P(X_1X_2|C)\) and \(*2\) the underlying strong correlation in \(P(X_1X_3|C)\). Interestingly, for the case ww, the NBA is optimal with respect to both sensitivity and distance. This is in distinction to the case of symmetric correlations. In the mixed distributions, ws and sw, we see the same pattern as for the case of symmetric correlations: i.e., the GBA is strictly better for sw in the cases 10, 11 and 12 and 13, where the GBA captures the strong correlation in the likelihood for C; for the distribution ws it is strictly better in the cases 02, 12, 22 and 32, where the GBA accounts for the strong correlation in the likelihood for \({\bar{C}}\). For the distribution \(ss'\), interestingly, in no case is the GBA worse than the NBA in terms of the distance metric. For the classification metric it is worse for 02, 22 and 32. These are precisely the cases where features are combined in the strongly correlated likelihood for \({\bar{C}}\) but not for the strongly correlated likelihood for C.

7 Error analysis: choosing the right factorization

In the above we showed how the relative performance of the GBA when compared to the NBA was sensitive to the factorization used for the GBA relative to the distribution of correlations inherent in the underlying probability distributions. However, to determine the relative efficacy of the GBA in the above we exhaustively considered every factorization. This is not practicable for real world problems with many features, hence, the importance of a priori diagnostics. We will consider the distribution of errors associated with all two-feature combinations using the error diagnostics, \(\varDelta _C(X_iX_j)\), \(\varDelta _{{\bar{C}}}(X_iX_j)\) and \(\varDelta (X_iX_j)\), and show how these indicate which features should be combined and, therefore, when and why the GBA should be used. We will work first in the context of the three-feature problem, considering first symmetric and then asymmetric correlations in the underlying probability distributions.

For symmetric correlations, an analysis of the errors for every pair of feature values yields several noteworthy observations. First, that \(\varDelta _C(X_iX_j)= \varDelta _{{\bar{C}}}(X_iX_j)=\varDelta (X_iX_j)=0\) for \(X_iX_j=X_1X_3\) or \(X_iX_j=X_2X_3\), for all five correlation strength distribution types. In other words, our diagnostics can clearly identify combinations of features where there are no correlations, that can then be well approximated by the NBA. The only non-zero values are associated with the feature combination \(X_1X_2\), where their values depend on the degree of correlation in the underlying probability distributions. For the distribution ss, both \(\varDelta _C(X_1X_2)\) and \(\varDelta _{{\bar{C}}}(X_1X_2)\) are large—ranging from 64 to 96% of the corresponding probabilities in the NBA for the four different feature value combinations—while the error in \(\varDelta (X_1X_2)\) is approximately double that, due to the reinforcement of the errors from C and \({\bar{C}}\). For the distribution ww, on the other hand, both \(\varDelta _C(X_1X_2)\) and \(\varDelta _{{\bar{C}}}(X_1X_2)\) are relatively small, only about 1–36% of the corresponding NBA probabilities. The errors in \(\varDelta (X_1X_2)\) are only of the order of 10–39% in spite of the fact that there is no cancelation between the likelihood errors for C and \({\bar{C}}\). For sw and ws, errors are dominated by the corresponding strongly correlated distribution. Thus, for sw, the likelihood error in C is 83–95%, while that of \({\bar{C}}\) is only 1–17%. The resultant error \(\varDelta (X_1X_2)\) is thus dominated by the error of the likelihood in C. A similar result holds for the distribution ws, where now the error in the likelihood for \({\bar{C}}\) dominates the overall error. For the distribution \(ss'\), although the errors in \(\varDelta _C(X_1X_2)\) and \(\varDelta _{{\bar{C}}}(X_1X_2)\) are large, 83–95% in C and 64–78% in \({\bar{C}}\), the error in \(\varDelta (X_1X_2)\) is relatively small, 6–54%, due to the cancellation in errors between the two likelihoods.

In the case where the correlations in the underlying probability distributions for the likelihood functions are asymmetric, with a correlation between \(X_1X_2\) for C and between \(X_1X_3\) for \({\bar{C}}\), the most noteworthy difference to the symmetric case is that, as expected, the \(\varDelta _{ij}\) in the case \(ss'\) do not exhibit any cancelation, as the strong correlations are in likelihoods associated with distinct feature sets.

7.1 Relating errors to the GBA: three features

We see then that our diagnostics clearly indicate which combination of features should potentially be treated together rather than independently and therefore which factorization of the GBA is the most appropriate. We also see that it may be necessary to combine features in only one likelihood as opposed to both. In order to determine whether the features \(X_iX_j\) should be combined together and, further, whether they should be combined together for the likelihoods of both C and \({\bar{C}}\) or only one or none, implies setting a threshold for the errors above which the features will be combined. In this infinite population setting the natural threshold is zero as there are no sampling errors affecting the \(\varDelta \), which are then a pure measure of the error due to model bias. We will consider using \(\varDelta _C\) to determine when to combine features in the likelihood for C, \(\varDelta _{{\bar{C}}}\) for combining features in \({\bar{C}}\) and \(\varDelta \) to determine if we are likely to see error cancelation or error reinforcement. In this three-feature setting we can consider classifier performance as a function of \(\varDelta \), where performance is measured in terms of our distance function or classifier accuracy. In Fig. 3 we graph average error against average distance for each correlation distribution ww, ws, sw \(ss'\) and ss (a graph of average error against classifier accuracy is very similar). We show results for both the average signed normalized error and the average absolute error. The difference between the two errors is a measure of the degree of cancelation in the errors between C and \({\bar{C}}\). For both performance measures we see a clear correlation between the average error and performance, with, as expected, the distribution ww showing the best performance and ss the worst. Note for the distributions ws, sw and, particularly, the distribution \(ss'\), the difference between the average signed and absolute errors, once again indicating that large errors in the individual likelihoods is not sufficient to predict performance but indicating that our diagnostics do correlate with performance.

8 Cancelations between correlations in different feature combinations

By a systematic analysis of the case of two and three features, we may understand almost all of the principle elements—correlation type (symmetric, asymmetric), correlation strength (weak, strong) and “correlation correlation” (reinforcing, canceling)—that explain the relative difference in performance between the GBA and the NBA. Moreover, we saw that our diagnostics correlate very well with this difference allowing us to predict a priori when the NBA might be expected to break down and what factorization of the GBA should be used. Our error diagnostics allow us to identify which features should be combined and therefore which factorization of the GNB is better. Furthermore, the analysis also allows us to understand why the NBA is such a robust and versatile performer in spite of its very strong assumption of independence between features. Essentially, the only element missing when passing to more than three features is that of error reinforcement or cancelation between different feature combinations.

8.1 Cancelations for four features

The simplest illustration of the cancelation between different feature combination occurs with two binary schemata, considering concatenations of two two-feature distributions taken from Appendix A. Explicitly, we consider a probability distribution \(P_e(\mathbf X|C)\) for four features, \(X_1\), \(X_2\), \(X_3\), and \(X_4\), of the form

where each \(P(X_iX_{i+1}|C)\) can be chosen from an independent distribution. Note that the correlation structure is symmetric in the likelihoods for C and \({\bar{C}}\). We will restrict attention to symmetric correlations as for the purpose of studying reinforcement or cancelation of errors between feature sets there is nothing new in the asymmetric version. As, by construction, there are no dependencies between the features \(X_iX_j\) for \(ij = 13, 14, 23, 24\) the schema partition \(\xi ^{(e)}=(\xi _1,\xi _2)\) with \(\xi _1=X_1X_2\) and \(\xi _2=X_3X_4\), will be exact and, hence, the GBA based on these two schemata should be an exact approximation. In other words,

and analogously for \(P(X_1X_2X_3X_4|{\bar{C}})\). In contrast, the NBA gives for the likelihood function

while the error in the score function relative to the optimal (exact) factorization is given by

We will consider different concatenations of the probability distributions of Appendix A, as discussed in 3.1. We saw there, that for distributions 1, 3, 8, 10 and 11 the NBA was particularly bad, while for distributions 2, 4, 7, 9 and 12 the NBA was better. However, we also saw that there were different reasons why the NBA might work well in the two-feature examples. First, as in distribution 4, that the correlations were weak in both the likelihoods for both C and \({\bar{C}}\); and, alternatively, as in distribution 2, that the correlations were strong in the likelihoods for both C and \({\bar{C}}\) but that there were cancelations in the errors between them. Hence, as building blocks for the concatenations we will use distribution 4, denoted as W, which has small errors in the NBA for both likelihoods; distribution 1, S, which exhibits large errors in both likelihoods and of opposite sign; distribution 2, \(W'\), which has large errors for both likelihoods, but with cancelations between them; and, finally, distribution 1, but where C and \({\bar{C}}\) have been interchanged, \(S'\). This latter artifice has the effect of giving errors for each specific feature combination of different sign to that of the corresponding error of distribution 1. Hence, \(\textit{WW}\) is a concatenation of distribution 4, \(\textit{SW}\) of distributions 1 and 4; \(\textit{SS}\) of distribution 1; \(\textit{WW}'\) of distributions 4 and 2; \(W'W'\) of distribution 2 and \(\textit{SS}'\) of distribution 1 with distribution 1 where C and \({\bar{C}}\) are inverted.

With these in hand we can now examine how errors cancel both at the intra- and inter-schemata level. In Table 3 we see the different errors in the likelihoods for C and \({\bar{C}}\) for each schema \(\xi _1=X_1X_2;\ \xi _2=X_3X_4\) for the distribution SS. The most notable feature is that, for any schema, the signs of the errors vary between the different feature combinations \(11,\ 10,\ 01,\ 00\). In fact, as previously stated, for binary features the errors in the likelihood functions for \(X_1X_2\) and \({\bar{X}}_1{\bar{X}}_2\) must be the same and opposite to those of \({\bar{X}}_1X_2\) and \(X_1{\bar{X}}_2\). Although for features with higher cardinality and for schemata of more than two features the situation is more complicated, it is still true that the errors for different feature values cannot all be of the same sign and therefore when different feature value combinations are considered in different schemata it is inevitable that there will be cancelations.

This phenomenon can be seen clearly in Table 3: considering \(\delta _s(C|\xi )=\delta _s(C|X_1X_2)+\delta _s(C|X_3X_4)\), 8 configurations, \(X_1X_2X_1X_2\) and \(X_1X_2{\bar{X}}_1{\bar{X}}_2\) lead to an enhanced error while another 8, \(X_1X_2X_1{\bar{X}}_2\) and \(X_1X_2{\bar{X}}_1X_2\), are associated with a cancelation in errors. The same is true for \(\delta _s({\bar{C}}|\xi )\). For example, 1111, 1010 etc. are associated with error enhancement, while 1110, 1101 etc. are associated with error cancelation. This pattern of error enhancement and error cancelation is equally valid for any four binary feature distributions. Hence, in order to analyse the different possibilities for cancelations between the four different likelihood functions in this four-feature problem we consider the following quantities: \(\varDelta S_i,\ i=1,\ 2=\delta _s(C|\xi _i)=\delta _s(\xi _i|C)-\delta _s(\xi _i|{\bar{C}})\) is the sum of the signed errors for each feature combination, while \(|\varDelta S_i|=|\delta _s(\xi _i|C)|+ |\delta _s(\xi _i|{\bar{C}})|\) is the sum of the absolute errors in the two likelihoods. Similarly, \(\varDelta S_C=\delta _s(\xi _1|C)+\delta _s(\xi _2| C)\) is the sum of the signed errors for the likelihoods of C summed across the two schemata \(\xi _1\) and \(\xi _2\). \(\varDelta S_{{\bar{C}}}=\delta _s(\xi _1|{\bar{C}})+\delta _s(\xi _2|{\bar{C}})\) is the analogous quantity for the likelihoods of \({\bar{C}}\). Finally, \(\varDelta S_{total} =\delta _s(\xi _1|C)-\delta _s(\xi _1|{\bar{C}})+\delta _s(\xi _2|C)-\delta _s(\xi _2|{\bar{C}})\) is the signed error for the full feature set, while \(|\varDelta S_{total}|=|\delta _s(\xi _1|C)|+|\delta _s(\xi _1|{\bar{C}})|+|\delta _s(\xi _2|C)|+|\delta _s(\xi _2|{\bar{C}})|\) is the sum of the absolute errors across all four likelihood functions.

Table 4 shows the absolute values of these different diagnostics averaged over the 16 different feature combinations \(1111,1110,\ldots ,0000\) for each concatenation. For the homogeneous distributions WW and SS, we see that \(\varDelta S_i=|\varDelta S_i|, \ i=1, 2\), indicating that there are no cancelations between the errors in the likelihoods for C and \({\bar{C}}\) in a given schema. In other words, the errors in the likelihoods for C and \({\bar{C}}\) reinforce one another rather than cancel. On the other hand, in both cases, \(\varDelta S_i<|\varDelta S_i|, \ i= C, {\bar{C}}\), which indicates that there are cancelations between schemata, for both likelihood functions, as illustrated above for the case SS. In the case of the distribution \(\textit{SW}\), once again there are no cancelations between the errors for C and \({\bar{C}}\) in a given schemata but there are between schemata. For the distributions \(\textit{WW}'\), \(\textit{SS}'\) and \(\textit{WW}'\), all three contain distributions, \(S'\) or \(W'\), where there is a cancelation of errors between the likelihoods for C and \({\bar{C}}\) within a given schemata, i.e., that \(\varDelta S_i<|\varDelta S_i|\) for \(i=2\) for \(WW'\) and \(\textit{SS}'\), or both in the case of \(W'W'\). Additionally, there are also cancelations between schemata for all three distributions. By comparing \(\varDelta S_{total}\) with \(|\varDelta S_{total}|\) we can see the extent of the overall error cancelation for the score function. By far the largest reductions are associated with those distributions, \(\textit{WW}'\), \(\textit{SS}'\) and \(W'W'\), where there are cancelations at both the intra- and inter-schemata level. The least cancelation is for the distribution \(\textit{SW}\). This is due to the fact that it is a concatenation of a distribution, S, with large errors, with another, W, with small errors. However, although the greatest error cancelation is associated with those distributions where there are both intra- and inter-schemata cancelations they do not correspond necessarily to those distributions where the average absolute difference between the NB score and the exact score is highest. Rather, the largest differences in score are associated with those distributions where the NB scores are small. As mentioned previously, the NBA has inadequate raw material with which to work.

8.2 Cancelations for more than four features

We can concatanate the two-feature distributions as many times as we like to see how things change as a function of the number of features and as a function of the mix of correlations. For instance, for six and eight features we will take the probability distributions for the likelihood functions to be

with analogous expressions for \({\bar{C}}\), so that the correlation structure is symmetric.

For the 6-feature case there are three order-two schemata, \(\xi _1=X_1X_2\), \(\xi _2=X_3X_4\), \(\xi _3=X_5X_6\), and for the 8-feature case four, \(\xi _1=X_1X_2\), \(\xi _2=X_3X_4\), \(\xi _3=X_5X_6\) and \(\xi _4=X_7X_8\), with no dependencies in either case between features that are in different concatenated two-feature blocks. The schema partitions \(\xi ^e=(\xi _1,\xi _2,\xi _3)\) and \(\xi ^e=(\xi _1,\xi _2,\xi _3,\xi _4)\), with \(\xi _1=X_1X_2\), \(\xi _2=X_3X_4\), \(\xi _3=X_5X_6\), \(\xi _4=X_7X_8\) will be exact as will be the GBA based on this factorization. The corresponding errors in the score function can be calculated from Eq. (3.14). In these examples, we have constructed all the dependencies between different variables explicitly. By choosing as schemata \(\xi _1=X_1X_2\) and \(\xi _2=X_3X_4\), for four features, \(\xi _1=X_1X_2\), \(\xi _2=X_3X_4\), \(\xi _3=X_5X_6\) for 6 features and \(\xi _1=X_1X_2\), \(\xi _2=X_3X_4\), \(\xi _3=X_5X_6\) and \(\xi _4=X_7X_8\) for eight features we have chosen a GBA that matches the exact factorization of the likelihood function and, hence, the GBA is exact. The implication of this is that the error of the NBA is just the sum of the errors associated with the four order-two dependencies arising from the four schemata \(\xi _1\), \(\xi _2\), \(\xi _3\) and \(\xi _4\).

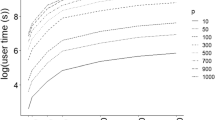

We will consider concatenations of the distributions W, \(W'\), S and \(S'\) of Sect. 8.1, for six features considering the distributions \(\textit{WWW}\), \(\textit{SWW}\), \(\textit{SSW}\), \(\textit{SSS}\), \(\textit{WW}'W\) and \(\textit{SS}'S\) and for eight features the distributions \(\textit{WWWW}\), \(\textit{SWWW}\), \(\textit{SSWW}\), \(\textit{SSSW}\), \(\textit{SSSS}\), \(\textit{WW}'{} \textit{WW}'\) and \(\textit{SS}'{} \textit{SS}'\). In Table 5, for each distribution we see our error measures—\(\varDelta S_C\), \(\varDelta S_{{\bar{C}}}\), \(\varDelta S_{total}\), \(|\varDelta S_C|\), \(|\varDelta S_{{\bar{C}}}|\) and \(|\varDelta S_{total}|\), as well as the GNB and NB scores. We see manifest the phenomenon of error cancelation at both the intra- and inter-schemata level. Note that the examples with the greatest degree of cancelation are those with feature pairs associated with the strongly correlated distributions \(W'\) and \(S'\) that exhibit important error cancelations between the likelihoods for C and \({\bar{C}}\), leading to cancelations of up to 75% of the absolute error with the main contribution coming from cancelations of the errors in \(\varDelta _C\) and \(\varDelta _{{\bar{C}}}\). The symmetric distributions \(\textit{WWW}\), \(\textit{SSS}\), \(\textit{WWWW}\) and \(\textit{SSSS}\) illustrate very well the existence of cancelations across different feature value combinations given that not all can have the same sign of error. Moreover, this type of cancelation increases as the number of features increases. Indeed, we see that, even if there are very strong correlations with errors that reinforce between the likelihoods of C and \({\bar{C}}\), i.e., without intra-schemata cancelation, the overall error cancelation due to inter-schemata cancelation is more than 50% of the absolute error and this reduction is about the same for the weakly correlated case WWWW.

These effects are summarized in Fig. 4: For a given correlation type—(\(\textit{WW}\), \(\textit{WWW}\), \(\textit{WWWW}\)), (\(\textit{SS}\), \(\textit{SSS}\), \(\textit{SSSS}\))—the relative degree of cancelation is an increasing function of the number of features. This repeated concatenation of the same distribution shows and isolates the effect of inter-schemata error cancelation between different feature values combinations in different modules due to the fact that the error function cannot be of the same sign over all feature value combinations. We also see the enhanced cancelation for distributions with \(W'\) and \(S'\) modules due to the addition of intra-schemata cancelation.

8.3 Performance as a function of number of features and degree of correlation

So, how do our error measures relate to performance in these multi-feature cases? In Figs. 5 and 6 we see the performance of the NBA as a function of our signed score error measures, \(\varDelta S_{total}\), averaged over all feature value combinations for the four- six- and eight-feature distributions considered in the previous Sections (the results are very similar for the absolute error measure \(|\varDelta S_{total}|\)). The most notable feature of the graphs is the good degree of correlation between the error measure and the corresponding performance measure, with an \(R^2\) of approximately 0.7, clearly showing that our error diagnostics do predict relative performance. We can also note from these graphs that the correlations between points associated with a fixed number of features are stronger than when considering the full set of distributions.

We have used two different performance metrics as they represent two different measures of classifier performance. As emphasized by Domingos and Pazzani (1996), the all or nothing nature of classification should explain some of the robustness of the NBA. Indeed, we can confirm this very well using the present analysis. Indeed, there is a low degree of correlation (\(R^2=0.32\)) between the two measures for the 19 concatenated distributions we have considered. Why the low correlation? Well, the distance measure is a metric that determines the similarity between the NBA ranking and the GBA (in this case, exact) ranking and is global over the full set of predictions. On the other hand, classification performance is particularly sensitive to the NB score in the vicinity of the score threshold, i.e., \(S_{\textit{NBA}}=0\). This means that even large errors are relatively unimportant if the NB score is far from the threshold and, on the contrary, the effect of small errors may be significantly amplified in the vicinity of the threshold.

In Fig. 7 we see the relation between the relative score error, i.e., relative to the NB score, versus classification error (false positive rate). The strong relationship between them confirms the importance of the vicinity of the score threshold \(S_{\textit{NB}}=0\), where the relative error would be expected to be the largest and hence the highest sensitivity to misclassification. On the contrary, the correlation between the relative score error and the distance metric is weak showing that the two metrics are sensitive to quite different characteristics of the error function.

9 Application to real world data

In this Section we will briefly show how the insights gleaned from our previous analysis can be applied to real world problems. We have seen how local error measures for the likelihoods, Equations (3.8); and for the scores, (3.12), can be used to determine which features should be combined and therefore how to construct an appropriate factorization for the GNB. However, these diagnostics are all associated with probabilities and, therefore, independent of sample size. For example, for two features, if \(P(X_1X_2|C)=N_{CX_1X_2}/N_C=0.3\), \(P(X_1|C)=N_{CX_1}/N_C=0.4\) and \(P(X_2|C)=N_{CX_2}/N_C=0.4\), we must consider the possibility that the error \(\delta (X_1X_2|C)=0.14\) is not statistically significant if \(N_{X_1X_2}\), \(N_C\), \(N_{X_1}\) or \(N_{X_2}\) are small. To determine the degree of statistical significance of the errors (3.8) we will use the following binomial tests