Abstract

This paper proposes a binomial distribution model, based on Bayesian statistics, for monitoring artificial radionuclides. A multi time-interval analysis method is adopted to extract the counts of 214Bi–214Po correlated events in the 222Rn decay series. The model then evaluates the natural background using the ratio of correlated event counts to total α counts. The Beta distribution is used as the prior distribution of the model. We discuss the posterior estimation process in detail and compare the new approach with conventional approaches. The results show that the new approach responds more sensitively to artificial radionuclides.

Introduction

Since the tragic accident at the Fukushima Nuclear Power Plant (NPP) on March 11, 2011, the monitoring of airborne dust activity across the northern hemisphere has intensified in response to the initial reports of atmospheric releases from Japan [1, 2]. Radionuclides released into the atmosphere include noble gases, volatile elements, and some non-volatile elements such as plutonium isotopes, americium, curium, and actinium, which pose health risks when they disperse through atmospheric diffusion [3, 4]. Monitoring artificial radionuclides is further complicated by the accompanying uranium and thorium series progeny, which emit α or β particles, and by seasonal fluctuations in radon and thoron concentrations [5].

To address this complexity, Japanese researchers introduced the multi time-interval analysis (MTA) method in the early 2000s. The method analyzes the short time intervals produced by correlated events (such as 220Rn–216Po in the thorium series and 214Bi–214Po in the uranium series) to estimate background levels [6,7,8,9]. Falk et al. and Bigu and Elliott successfully applied the MTA method to monitor the atmospheric activity of 220Rn [10, 11]. Sanada et al. then combined the method with α spectrometry and developed a background compensation system for the rapid detection of artificial radionuclides [12]. However, this system did not account for the progeny of 220Rn. Notably, 212Po in the thorium series, with its much shorter half-life of 0.3 μs, produces short intervals that are difficult to distinguish from those of the targeted correlated events.

In conventional approaches, as mentioned above, an averaged ratio of correlated event counts to total α counts, termed the equilibrium factor or compensation factor, is commonly employed to estimate background levels in the absence of contamination. These approaches are typical applications of frequentist statistics, in which knowledge of the population is based on sampling distributions. The parameters of the distribution, including the expected value and the standard deviation, are treated as unknown constants and are estimated from random, independent samples, with deviations indicating uncertainties [13, 14]. Once the parameters have settled, the abundant subsequent measurements are discarded. Frequentist statistics therefore cannot incorporate scientific judgement based on historical information [15].

Compared with its conventional counterparts, Bayesian statistics offers two advantages. First, it allows prior information to be incorporated into the analysis of measurements. Second, by calculating the posterior distribution of the compensation factor iteratively, it can rectify situations in which conventional methods yield a high detection limit because of a biased compensation factor. In monitoring low-level artificial radionuclides in particular, subtracting the background counts, which fluctuate over time, is crucial, and the calculation of a constantly changing compensation factor benefits from a coherent Bayesian approach. Demonstrating the effectiveness of the Bayesian approach, Luo et al. employed the Gamma distribution as a prior for the parameter of the Poisson distribution in ambient surveillance measurements, yielding meaningful non-negative results [16]. Zabulonov et al. used the Normal distribution as a prior for the expectation of another Normal distribution in radioactive source detection [17]. Slonecka et al. affirmed the utility of Bayesian statistics for radiation dose assessment [18]. These studies highlight the pivotal role of the choice of prior distribution in Bayesian methodology. In this article, we adopt the Beta distribution as the prior for the parameter of a binomial distribution to evaluate the radon and thorium radiation level, and demonstrate its application to monitoring artificial radionuclides.

Theory

Multi time-interval analysis method

Multi time-interval analysis (MTA) is a method used to measure short-lived nuclides of the radon and thorium decay series in the atmosphere [6]. In this method, a short-lived progeny nucleus and its parent nucleus are defined as a pair of correlated events: they are causally related and occur in a fixed chronological order. A defining characteristic of correlated events is that the time intervals between them are significantly shorter than those between other decays. By analyzing these short time intervals, the number of short-lived progeny nuclei can be determined. For example, when observing a single 222Rn nucleus over an extended period, one can observe a β particle that probably derives from a 214Bi nucleus, because it is promptly followed by an α particle from 214Po. The extremely short half-life of 214Po (about 164 μs) causes it to emit an α particle rapidly and decay into 210Pb. Consequently, the time interval between the observed pair of particles (β–α) is exceptionally short.

However, not all short time intervals are caused by correlated events such as 214Bi–214Po; coincidental random events may also produce short time intervals. The MTA method separates correlated events from the set of short time intervals by means of an exponential distribution, which allows the number of short-lived nuclei to be determined. Moreover, under equilibrium conditions, the MTA method can also determine the quantity of the parent nuclei, either 222Rn or 220Rn [7].

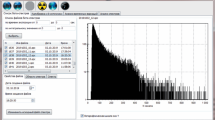

The fundamental principles of the MTA method are as follows. First, for the time sequence collected by the nuclear detector, the MTA method defines a fixed time window whose width equals 20 times the half-life of the target short-lived nuclide. This width ensures that both the parent event and its subsequent correlated event are detected within the window. Second, the method treats each particle as a starting event for the window and records the time intervals between it and all delayed events within the window, as shown in the upper part of Fig. 1. These intervals include both correlated events and coincidental random events. Finally, the MTA method accumulates the frequency of these intervals to generate a multi time-interval spectrum. This spectrum consists of two parts. The first part corresponds to coincidental random events, whose time intervals are treated as uniformly distributed. The second part represents correlated events, whose time intervals are determined primarily by the half-life of the short-lived progeny and therefore follow an exponential distribution with the decay constant of the progeny as its parameter. The formula for the multi time-interval spectrum can be expressed as:

where M is the total count, P(t) is the probability of occurrence within a time interval at t, dt is the differential time, λ is the decay constant of the progeny nucleus, αt is the probability that a particle is a relevant parent event, and C is the count rate of random events. According to references [6,7,8,9], Eq. (1) can be simplified to the second form, in which A is a numerical coefficient of the correlated events, determined by fitting, and B is a coefficient representing random coincidences. In practical applications of the MTA method, the detection efficiencies of the detector are taken into account and Eq. (1) is used in the third form, where \(\varepsilon_{p}\) and \(\varepsilon_{d}\) represent the counting efficiencies for 214Bi and 214Po, respectively. Because the decay processes in this work are simulated with Geant4, both counting efficiencies are set to 100%. Then Aʹ is equal to A, and the correlated events count for one observation, denoted Ncor, is equal to \(A^{\prime}\varepsilon_{p}^{-1}\varepsilon_{d}^{-1}/\lambda\).
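The procedure described above can be illustrated with a minimal Python sketch. It assumes that the simplified spectrum has the form A·exp(−λt) + B with λ held fixed, that A and B are expressed as counts per unit time, and that an unweighted least-squares fit is adequate; the function names, the bin count and the fitting method are illustrative choices, not taken from the cited references.

```python
import math

def multi_time_intervals(times, window):
    """Intervals from every event to all later events that fall inside `window`."""
    times = sorted(times)
    intervals = []
    for i, start in enumerate(times):
        j = i + 1
        while j < len(times) and times[j] - start <= window:
            intervals.append(times[j] - start)
            j += 1
    return intervals

def fit_correlated(intervals, window, decay_const, n_bins=100):
    """Unweighted least-squares fit of A*exp(-decay_const*t) + B.

    A and B are densities (counts per unit time); decay_const is fixed.
    """
    width = window / n_bins
    counts = [0] * n_bins
    for dt in intervals:
        counts[min(int(dt / width), n_bins - 1)] += 1
    density = [c / width for c in counts]
    # Exponential term evaluated at the bin centres.
    basis = [math.exp(-decay_const * (k + 0.5) * width) for k in range(n_bins)]
    s_e = sum(basis)
    s_ee = sum(e * e for e in basis)
    s_y = sum(density)
    s_ey = sum(e * y for e, y in zip(basis, density))
    # Normal equations of the linear model density = A*basis + B.
    det = n_bins * s_ee - s_e ** 2
    a = (n_bins * s_ey - s_e * s_y) / det
    b = (s_ee * s_y - s_e * s_ey) / det
    return a, b

def correlated_count(a, decay_const, eff_parent=1.0, eff_daughter=1.0):
    """N_cor = A / (eff_parent * eff_daughter * lambda)."""
    return a / (eff_parent * eff_daughter * decay_const)
```

For 214Po, one would call `fit_correlated` with `decay_const = math.log(2) / 164.0` (per μs) and `window = 20 * 164.0` μs, with event times also given in μs.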

The traditional approach of monitoring artificial radionuclides

It is well known that the concentration of radon and its progeny in the atmosphere is significantly higher than that of thorium and its progeny. Among the radon progeny, 214Bi–214Po is typically taken as the pair of correlated events. Consequently, the traditional approach for monitoring artificial radionuclides with the MTA method relies on the ratio of the correlated event counts to the total α counts to estimate the natural background level.

In the absence of contamination by artificial radionuclides, air dust is sampled on filters over a specific duration, such as 40 min or 1 h. These filters collect radon progeny such as 218Po, 214Pb, 214Bi and 214Po, as well as thorium progeny such as 216Po, 212Pb, 212Bi and 212Po. The sample is then placed in a detector for measurement. The detector records the arrival times of the α and β particles emitted by these progeny, resulting in a time sequence. The MTA method is then employed to extract the count of correlated events, specifically 214Bi–214Po, from this time sequence. The ratio of the correlated event counts to the total α counts, also called the compensation coefficient θ, is defined as θ = Ncor/Nt for an individual measurement, where Nt represents the total α counts. Through multiple measurements, a sample space Θ = {θ1, θ2, θ3,…,θn} can be obtained, with each θi being constant. The sample mean \(\overline{\theta }\) serves as an estimate of the relationship between the correlated events Ncor and the total α counts Nt, and is often referred to as the compensation factor. The compensation factor is therefore central to assessing the natural background level in the traditional approach. Together with its standard deviation σ, the factor forms the decision criterion of the approach, typically denoted as \(\left[ {\overline{\theta } - 3\sigma ,\;\overline{\theta } + 3\sigma } \right]\).

In practical terms, if the ratio of correlated event counts to total α counts falls outside the criterion in a specific measurement, it is indicative of contamination. The artificial radionuclides α counts Nα can be calculated by incorporating the compensation factor like:
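As a rough illustration, the traditional procedure can be sketched in Python as follows. The expression used here for the artificial α counts, Nα = Nt − Ncor/θ̄, is an assumption inferred from the definition θ = Ncor/Nt (the expected background α counts being Ncor/θ̄); the function names and the use of the sample standard deviation are likewise illustrative.

```python
from statistics import mean, stdev

def compensation_factor(thetas):
    """Sample mean and sample standard deviation of background ratios theta_i."""
    return mean(thetas), stdev(thetas)

def decision_interval(theta_bar, sigma, k=3.0):
    """Decision criterion [theta_bar - k*sigma, theta_bar + k*sigma]."""
    return theta_bar - k * sigma, theta_bar + k * sigma

def is_contaminated(n_cor, n_total, theta_bar, sigma, k=3.0):
    """True when the measured ratio falls outside the decision criterion."""
    low, high = decision_interval(theta_bar, sigma, k)
    theta = n_cor / n_total
    return theta < low or theta > high

def artificial_alpha_counts(n_cor, n_total, theta_bar):
    """Assumed form: total alpha counts minus expected background n_cor / theta_bar."""
    return n_total - n_cor / theta_bar
```

With background ratios of about 0.10, for instance, a measurement with 100 correlated events and 1500 total α counts would be attributed roughly 500 α counts from artificial radionuclides under this assumed form.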

The Bayesian approach of monitoring artificial radionuclides

Our Bayesian approach is also based on the relationship between the correlated events and the total α counts. Consider a measurement described by the total α counts and the correlated counts, and let x denote the correlated counts, such as 214Bi–214Po, obtained with the MTA method. These counts are regarded as the “successful events” among the total in a binomial distribution model, and θ denotes the probability of success. Unlike the classical approach, the Bayesian approach describes the unknown θ as a random variable with a distribution rather than as a constant. The mathematical form of the Bayesian approach is:

\[\pi(\theta \mid x) = \frac{P(x \mid \theta)\,\pi(\theta)}{m(x)} \tag{3}\]

where π(θ|x) is the posterior probability distribution of the unknown parameter θ given the data x, and P(x|θ) is the likelihood function given by the chosen probability model (a binomial distribution here). The prior distribution of θ, denoted π(θ), is a quantitative description of the analyst’s belief about θ, based on previous experience and knowledge, before the measurement. The denominator m(x) is the marginal distribution of the data x. The purpose of the Bayesian approach is to make statistical inferences from the posterior distribution, which combines the prior belief about θ with the actual data x.

As reference [19] points out, choosing a prior distribution in the absence of any knowledge is a delicate task. A prior from a conjugate family makes the estimation of the posterior mathematically tractable and convenient, because the posterior follows the same parametric form as the prior. In this study, the Beta distribution, which is conjugate to the binomial likelihood, is assigned as the prior, so the posterior distribution is also a Beta distribution. The general probability density function of the Beta distribution, Beta (a, b), is given by

\[f(\theta ;a,b) = \frac{\Gamma (a + b)}{\Gamma (a)\,\Gamma (b)}\,\theta^{a - 1} \left( 1 - \theta \right)^{b - 1} ,\quad 0 \le \theta \le 1 \tag{4}\]

where Г is the gamma function, and a and b are the two shape parameters, which can be interpreted as the number of “successful” events and the number of “failure” events, respectively. It is worth noting that the uniform distribution U(0, 1), which is usually used as a prior when little is known about θ, is the special case Beta (1, 1). For the correlated event counts obtained with the MTA method in a fixed time, the likelihood is given by \(\binom{n}{x}\theta^{x} \left( {1 - \theta } \right)^{n - x}\), where n is the total α counts. Based on Bayes’ theorem, the posterior probability of θ is given as

\[\pi(\theta \mid x) \propto \theta^{a + x - 1} \left( 1 - \theta \right)^{b + n - x - 1} \tag{5}\]

that is, the posterior is Beta (a + x, b + n − x).

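From the posterior probability distribution, the posterior expectation of θ is obtained as:

\[\overline{\theta }_{E} = \frac{a + x}{a + b + n} = \gamma \frac{x}{n} + \left( 1 - \gamma \right)\frac{a}{a + b} \tag{6}\]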

where x/n is the maximum likelihood estimate (MLE) of the binomial parameter, a/(a + b) is the prior estimate of θ, and γ = n/(a + b + n) is the weight of the MLE in the Bayesian estimate. The posterior expectation is thus a weighted average of the sample information and the prior. The variance of the posterior distribution is

\[\sigma_{\theta }^{2} = \frac{(a + x)(b + n - x)}{(a + b + n)^{2} (a + b + n + 1)} \tag{7}\]

From Eqs. (6) and (7), the decision criterion of our Bayesian approach is \(\left[ {\overline{\theta }_{E} - K\sigma_{\theta } ,\;\overline{\theta }_{E} + K\sigma_{\theta } } \right],\) where K = 1–3. In applying this approach, when a new θ is lower than \(\overline{\theta }_{E} - K\sigma_{\theta } ,\) contamination is inferred at the corresponding credibility level. The traditional and Bayesian approaches can then be compared. Based on Eqs. (1), (5), (6) and (7), our Bayesian approach consists of three steps, as presented in Fig. 2.

Step 1: The MTA method is used to extract the counts of 214Bi–214Po correlated events and to accumulate the α counts according to Eq. (1). A compensation coefficient θ is then obtained.

Step 2: If it is the first measurement, Beta (1, 1) is adopted as the prior of θ. Otherwise, the posterior distribution of θ from the previous measurement is used as the prior of θ in the current measurement. The posterior distribution of θ in the current measurement is calculated according to Eq. (5).

Step 3: The new decision criterion \(\left[ {\overline{\theta }_{E} - K\sigma_{\theta } ,\;\overline{\theta }_{E} + K\sigma_{\theta } } \right],\) (K = 1–3) is constructed according to Eqs. (6) and (7). If the ratio of correlated events to total α counts of a new sample is smaller than the lower limit of the criterion, contamination is indicated. Otherwise, the new sample belongs to the natural background; it is then added to the prior for the next measurement, and the procedure returns to Step 2.
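The three steps can be sketched in Python as below. This is a minimal illustration rather than the authors' implementation: the class and method names are hypothetical, the first measurement is assumed to be background (so it is never tested against the flat Beta (1, 1) prior), and the counts Ncor and Nt are assumed to come from a separate MTA step.

```python
import math

class BetaBinomialMonitor:
    """Sequential Beta-binomial background model following Steps 1 to 3."""

    def __init__(self, a=1.0, b=1.0, k=3.0):
        self.a = a              # Beta parameter: accumulated correlated counts + prior
        self.b = b              # Beta parameter: accumulated uncorrelated counts + prior
        self.k = k              # width of the decision criterion (K = 1 to 3)
        self.n_background = 0   # number of measurements accepted as background

    def posterior_mean(self):
        return self.a / (self.a + self.b)

    def posterior_std(self):
        total = self.a + self.b
        return math.sqrt(self.a * self.b / (total ** 2 * (total + 1.0)))

    def lower_limit(self):
        return self.posterior_mean() - self.k * self.posterior_std()

    def observe(self, n_cor, n_total):
        """Return True if contamination is indicated, else update and return False."""
        theta = n_cor / n_total
        # Assumption: the very first measurement is treated as background.
        if self.n_background > 0 and theta < self.lower_limit():
            return True
        # Background sample: the posterior becomes the prior of the next measurement.
        self.a += n_cor
        self.b += n_total - n_cor
        self.n_background += 1
        return False
```

A flagged measurement leaves the parameters untouched, so contaminated samples do not enter the prior of later measurements.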

Experimental

The Geant4 software toolkit is widely regarded as an effective package for simulating the passage of particles through matter. One of its core functions is tracking particles over time [20]. Khan and DeWerd assessed the Geant4 internal radioactive decay data for the alpha-emitting radionuclides they investigated and found the G4RadioActiveDecay class well suited for alpha particle dosimetry [21].

In this research, the Geant4 toolkit plays a pivotal role in simulating the physical decay process of natural radon (222Rn), thorium (224Ra) and artificial radionuclide (242Cm). Specific details are as follows:

Firstly, three decay process classes, namely “G4RadioactiveDecayPhysics”, “G4DecayPhysics” and “G4EmStandardPhysics” are declared and initialized in “PhysicsLists” class.

Secondly, a General Particle Source (GPS) is defined in a macro file, allowing the simultaneous simulation of multiple radioactive sources. The GPS configuration includes parameters such as the number of protons, mass number, charge number, cut-off value, etc.

Thirdly, the virtual method “PreUserTrackingAction” from G4UserTrackingAction class is called to record essential track information such as particle name, identity, parent id, half-life and global time (in nanoseconds) for all particles in the simulation.

Lastly, to eliminate redundant track records, the “ClassifyNewTrack” method from G4UserStackingAction class is implemented to delete the duplicate tracks related to electrons and gamma particles.

The data format of the exported information is shown in Table 1, which records the particles originating from one 222Rn source, including their arrival times. Among these particles, only alpha particles and electrons can be observed or measured. They are therefore stored in an ASCII file for the multi time-interval analysis described in the subsequent section. The simulation has two advantages. First, each alpha particle can be identified from its parent ID, which makes it possible to determine whether it belongs to the natural background or to an artificial radionuclide. Second, the contribution of artificial radionuclides over a fixed time can be determined precisely, so the effectiveness of the traditional and Bayesian approaches can be compared.

Results and discussion

The measurement of natural background

The accurate estimation of radon and thorium levels in the atmosphere is a crucial foundation for effective monitoring of artificial radionuclides. However, other progeny pairs, such as 212Po and its parent 212Bi in the thorium series, have a shorter interval than 214Bi–214Po. These pairs are easily mistaken for the targeted correlated events, which affects the ratio and even the decision criterion. To depict the impact of 212Bi–212Po, two samples are simulated with Geant4: one containing 5000 222Rn nuclei of the radon series, and one containing a mixture of 5000 224Ra and 5000 222Rn nuclei. Although the concentration ratio of thorium to radon in the mixture deviates from real-world conditions, this section seeks to investigate the reliability of the correlated event extraction by MTA. First, a complete MTA spectrum of 5000 222Rn nuclei and their progeny is shown in Fig. 3. The decreasing part of the spectrum follows an exponential distribution governed by the 214Po decay constant (mean lifetime 164 μs/ln 2 ≈ 236.6 μs), indicating that most of the short intervals come from 214Bi–214Po correlated events and are accurately identified.

However, the presence of thorium progeny, especially 212Po, has a notable impact on the time-interval spectrum of the mixed source, as depicted in Fig. 4a. A remarkably high count is observed within the first 10 μs interval, which contains contributions from both 212Bi–212Po and 214Bi–214Po. Importantly, this spectrum follows neither an exponential distribution with the 212Po decay constant as its parameter nor one characterized by the 214Po decay constant. Hence, we propose a two-stage MTA method to process the multi time-interval spectrum of the natural background.

In the first stage, the goal is to compute the contribution of 212Bi–212Po among all short intervals. The time window is set to 20 times the 212Po half-life (approximately 6 μs). The coefficient A in Eq. (1) is calculated using the 212Po decay constant as the parameter and represents the contribution of 212Bi–212Po. In the second stage, the time window is reset to 20 times the 214Po half-life (approximately 3.2 ms), and the contribution of 212Bi–212Po is subtracted. The resulting multi time-interval spectrum again follows an exponential distribution characterized by the 214Po decay constant, as shown in Fig. 4b. Based on Eq. (1), the coefficient A is then recalculated to determine the count of 214Bi–214Po correlated events.

Posterior calculation of the compensation coefficient

The MTA method provides a maximum likelihood estimate of the compensation coefficient θ, which represents the ratio of correlated events to total α counts after a single observation. In contrast, within the framework of Bayesian statistics and the family of conjugate distributions, we can establish a Beta distribution for θ. This Beta distribution is dynamically updated by incorporating the posterior distribution of θ obtained from the previous observation. This iterative approach enables us to leverage historical information for each measurement, leading to more precise estimates of θ in successive observations. Figure 5 illustrates the progression of the prior, likelihood, and posterior distributions across the first four observations of a mixed source scenario (1 Bq 222Rn and 0.1 Bq 224Ra) in which θ changes rapidly.

In Fig. 5a, the MTA method yields correlated events counts of 591 and total α counts of 591 + 8097. The choice of a Beta (1, 1) distribution as the prior for the first observation reflects limited prior knowledge about the compensation factor θ. The resulting first posterior distribution, calculated using Eq. (5) as Beta (591 + 1, 8097 + 1), aligns entirely with the likelihood of a binomial distribution corresponding to x/n. This alignment underscores the dominance of the sample weight, γ, in Eq. (6), effectively overshadowing the influence of the prior.

Next, the posterior from the initial observation serves as the prior for the second observation, leading to a new posterior distribution with its associated likelihood, as shown in Fig. 5b. Notably, the likelihood pertains solely to the current measurement and is independent of previous measurements. Consequently, a distinct difference arises between the two distributions, because the posterior of the second observation also takes into account the θ estimates from preceding measurements.

In finer detail, using Beta (592, 8098) as the prior distribution for the second observation results in a posterior of Beta (2976, 16522). The changes in the shape parameters a and b of the Beta distribution correspond to a reduction in the sample weight γ in Eq. (6) and, simultaneously, to an increase in the weight (1 − γ) of the prior information in the inference. Through posterior iteration, the posteriors of the third and fourth observations are derived, as illustrated in Fig. 5c and d. Our Bayesian approach thus incorporates historical data through iterative prior updates.
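The shift in weights can be checked numerically with a short Python sketch. The counts of the first observation (591 correlated events out of 591 + 8097 α counts) are those quoted above; the counts of the second observation (2384 out of 10808) are not stated in the text and are inferred here from the difference between Beta (2976, 16522) and Beta (592, 8098). The function name is illustrative.

```python
def beta_binomial_update(a, b, x, n):
    """Conjugate update of Beta(a, b) with x successes out of n trials.

    Returns the posterior parameters, the sample weight gamma and the
    posterior mean written as a weighted average of MLE and prior mean.
    """
    gamma = n / (a + b + n)
    mle = x / n
    prior_mean = a / (a + b)
    post_mean = gamma * mle + (1.0 - gamma) * prior_mean
    return a + x, b + n - x, gamma, post_mean

# First observation: flat prior Beta(1, 1).
a1, b1, gamma1, mean1 = beta_binomial_update(1, 1, 591, 591 + 8097)
# Second observation: counts inferred from the reported posterior parameters.
a2, b2, gamma2, mean2 = beta_binomial_update(a1, b1, 2976 - 592, 10808)

print(a1, b1, round(gamma1, 4), round(mean1, 4))
print(a2, b2, round(gamma2, 4), round(mean2, 4))
```

In the first update the sample weight is close to 1, so the flat prior barely matters; in the second it drops to a little over one half, which is why the posterior no longer coincides with the likelihood.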

The deviation of estimation of the compensation factor

In this section, we delve into a comparative analysis of estimation biases between the Bayesian and conventional approaches, specifically focusing on the compensation factor θ and total α counts. Figure 6 encapsulates this analysis by presenting estimations across 40 consecutive observations for both approaches.

Particularly for small samples (fewer than 30 observations), the Bayesian approach excels in estimation accuracy. Notably, when θ undergoes rapid and pronounced changes, the Bayesian approach consistently provides estimates much closer to the true θ. This is explained by the variance in Eq. (7): as prior information accumulates, the parameters a and b grow and the variance decreases. When the number of observations reaches approximately 30, the conventional approach begins to catch up with the Bayesian one in estimation performance. In the conventional approach, however, subsequent samples lose their utility once the average θ has been determined, which contributes to the sustained advantage of the Bayesian approach.

A striking result emerges from the analysis of deviation in the estimation of total α counts: the Bayesian approach yields significantly smaller deviations compared to its conventional counterpart. This stems from the fact that Bayesian posterior expectation estimation takes into account the weights of both the sample and the prior. As a result, the estimation consistently maintains a certain distance from the likelihood, contributing to its commonly perceived “conservative” nature. This improvement underscores the Bayesian approach’s efficacy in refining estimations and reducing uncertainties.

Application to artificial radionuclide monitoring

This section evaluates the sensitivity of both approaches to artificial radionuclides through two carefully designed experiments. In experiment 1, a 1.5 Bq 242Cm source is introduced to the background at three test points, the 5th, 15th and 25th h of consecutive observations. The results of artificial radionuclide monitoring using both approaches are shown in Fig. 7. The conventional approach, which treats low-level radiation as typical background fluctuation, fails to identify the source. In contrast, the proposed approach detects the anomalies. At the 5th h, an alarm is triggered with a reliability of 68.26%, reflecting the limited prior information accumulated at this point. As the number of samples increases, the estimation variance of the approach diminishes, leading to alarms at both the 15th and 25th h with a reliability of 99.74%.
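The reliability levels quoted above are close to the two-sided coverage of K = 1 and K = 3 standard deviations under a normal approximation. The following Python sketch computes that coverage; treating the posterior of θ as approximately normal is an assumption made here for illustration, and the function name is hypothetical.

```python
import math

def two_sided_coverage(k):
    """Probability that a normal variable lies within k standard deviations of its mean."""
    return math.erf(k / math.sqrt(2.0))

for k in (1, 2, 3):
    print(k, round(100.0 * two_sided_coverage(k), 2))
```

Differences in the last digit relative to the quoted percentages arise from rounding in tabulated values.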

For experiment 2, we examine a scenario where both approaches have accumulated a substantial sample size (over 30 h). In this scenario, the same artificial source is introduced at the 31st, 36th, and 41st h. The results, as depicted in Fig. 8, reveal a key distinction in reliability between the two approaches. While both approaches succeed in detecting the source, the Bayesian approach holds a distinct advantage. Specifically, at the 36th and 41st h, the Bayesian approach exhibits a notably higher reliability in signaling alarms, underscoring its efficacy in discerning artificial radionuclides presence even under evolving circumstances.

Conclusions

This article introduces a novel approach to monitoring artificial radionuclides based on Bayesian statistics. Bayesian theory shows that the posterior distribution of the binomial parameter follows a Beta distribution when the prior is a Beta distribution. This simplifies the estimation of the posterior expectation and improves parameter updates through the incorporation of historical information. Comparisons between the proposed approach and conventional methods demonstrate its superior performance in terms of estimation accuracy, precision, and response speed. Although the Bayesian approach involves iterative prior updates and more calculations, its potential benefits in accuracy and efficiency warrant its consideration. In future work, other models that can describe background radiation within the Bayesian framework, such as Poisson and Gaussian models, are worth considering.

In conclusion, this work contributes to the field of artificial radionuclides monitoring by presenting a robust Bayesian approach that offers improved accuracy and efficiency. The potential impact of this approach, coupled with its adaptability and versatility, underscores its significance for advancing radiation detection and monitoring methodologies.

References

Glavic-Cindro D, Brodnik D, Cardellini F, De Felice P, Ponikvar D, Vencelj M, Petrovic T (2018) Evaluation of the radon interference on the performance of the portable monitoring air pump for radioactive aerosols (MARE). Appl Radiat Isot 134:439–445. https://doi.org/10.1016/j.apradiso.2017.07.039

Thakur P, Ballard S, Nelson R (2013) An overview of Fukushima radionuclides measured in the northern hemisphere. Sci Total Environ 458–460:577–613. https://doi.org/10.1016/j.scitotenv.2013.03.105

Lujaniene G, Bycenkiene S, Povinec PP, Gera M (2012) Radionuclides from the Fukushima accident in the air over Lithuania: measurement and modelling approaches. J Environ Radioact 114:71–80. https://doi.org/10.1016/j.jenvrad.2011.12.004

Wang G, Huang Z, Xu H, Yin Y, Mu C, Wang Q (2020) Remote real-time control of aerosol continuous monitoring for high radon concentration environments. Appl Radiat Isot 155:108922. https://doi.org/10.1016/j.apradiso.2019.108922

Długosz-Lisiecka M, Bem H (2020) Seasonal fluctuation of activity size distribution of 7Be, 210Pb, and 210Po radionuclides in urban aerosols. J Aerosol Sci 144:105544. https://doi.org/10.1016/j.jaerosci.2020.105544

Hashimoto T, Sakai Y (1990) Selective determination of extremely low-levels of the thorium series in environmental samples by a new delayed coincidence method. J Radioanal Nucl Chem 138:195–206. https://doi.org/10.1007/BF02039845

Hashimoto T, Sanada Y, Uezu Y (2004) Simultaneous determination of radionuclides separable into natural decay series by use of time-interval analysis. Anal Bioanal Chem 379:227–233. https://doi.org/10.1007/s00216-004-2571-8

Sanada Y, Kobayashi H, Furuta S, Nemoto K, Kawai K, Hashimoto T (2006) Selective detection using pulse time interval analysis for correlated events in Rn-progeny with a microsecond lifetime. Radioisotopes 55:727–734. https://doi.org/10.3769/radioisotopes.55.727

Sanada Y, Tanabe Y, Iijima N, Momose T (2011) Development of new analytical method based on beta-alpha coincidence method for selective measurement of 214Bi-214Po-application to dust filter used in radiation management. Radiat Prot Dosim 146:80–83. https://doi.org/10.1093/rpd/ncr116

Bigu J, Elliott J (1994) An instrument for continuous measurement of 220Rn (and 222Rn) using delayed coincidences between 220Rn and 216Po. Nucl Instrum Methods Phys Res Sect A: Accel Spectrom Detect Assoc Equip 344:415–425. https://doi.org/10.1016/0168-9002(94)90091-4

Falk R, Möre H, Nyblom L (1992) Measuring techniques for environmental levels of radon-220 in air using flow-through Lucas cell and multiple time analysis of recorded pulse events. Int J Radiat Appl Instrum Part A Appl Radiat Isot 43:111–118. https://doi.org/10.1016/0883-2889(92)90084-R

Barrera M, Lourdes Romero M, Nunez-Lagos R, Bernardo JM (2007) A Bayesian approach to assess data from radionuclide activity analyses in environmental samples. Anal Chim Acta 604:197–202. https://doi.org/10.1016/j.aca.2007.10.015

Kacker R, Jones AJM (2003) On use of Bayesian statistics to make the guide to the expression of uncertainty in measurement consistent. Metrologia 40:235. https://doi.org/10.1088/0026-1394/40/5/305

Sakaue H, Fujimaki H, Tonouchi S, Itou S, Hashimoto T (2007) A new time interval analysis system for the on-line monitoring of artificial radionuclides in airborne dust using a phosfitch type alpha/beta detector. J Radioanal Nucl Chem 274:449–454. https://doi.org/10.1007/s10967-006-6922-0

Johannesson E (2022) Classical versus Bayesian statistics. Philos Sci 87:302–318. https://doi.org/10.1086/707588

Luo P, Sharp JL, DeVol TA (2013) Bayesian analyses of time-interval data for environmental radiation monitoring. Health Phys 104:15–25. https://doi.org/10.1097/HP.0b013e318260d5f8

Zabulonov Y, Burtniak V, Krasnoholovets V (2016) A method of rapid testing of radioactivity of different materials. J Radiat Res Appl Sci 9:370–375. https://doi.org/10.1016/j.jrras.2016.03.001

Slonecka I, Krasowska J, Baranowska Z, Fornalski KW (2021) Application of Bayesian statistics for radiation dose assessment in mixed beta-gamma fields. Radiat Environ Biophys 60:257–265. https://doi.org/10.1007/s00411-021-00906-w

Laedermann JP, Valley JF, Bochud FO (2005) Measurement of radioactive samples: application of the Bayesian statistical decision theory. Metrologia 42:442–448. https://doi.org/10.1088/0026-1394/42/5/015

Khan AU, DeWerd LA (2021) Evaluation of the GEANT4 transport algorithm and radioactive decay data for alpha particle dosimetry. Appl Radiat Isot 176:109849. https://doi.org/10.1016/j.apradiso.2021.109849

Allison J, Amako K, Apostolakis J, Araujo H, Arce Dubois P, Asai M, Barrand G, Capra R, Chauvie S, Chytracek R, Cirrone GAP, Cooperman G, Cosmo G, Cuttone G, Daquino GG, Donszelmann M, Dressel M, Folger G, Foppiano F, Generowicz J, Grichine V, Guatelli S, Gumplinger P, Heikkinen A, Hrivnacova I, Howard A, Incerti S, Ivanchenko V, Johnson T, Jones F, Koi T, Kokoulin R, Kossov M, Kurashige H, Lara V, Larsson S, Lei F, Link O, Longo F, Maire M, Mantero A, Mascialino B, McLaren I, Mendez Lorenzo P, Minamimoto K, Murakami K, Nieminen P, Pandola L, Parlati S, Peralta L, Perl J, Pfeiffer A, Pia MG, Ribon A, Rodrigues P, Russo G, Sadilov S, Santin G, Sasaki T, Smith D, Starkov N, Tanaka S, Tcherniaev E, Tome B, Trindade A, Truscott P, Urban L, Verderi M, Walkden A, Wellisch JP, Williams DC, Wright D, Yoshida H (2006) Geant4 developments and applications. IEEE Trans Nucl Sci 53:270–278. https://doi.org/10.1109/tns.2006.869826

Acknowledgements

This work is supported by the grant of the Department of Science and Technology of Hunan Province (Project No. 2018JJ2317). We are grateful for their support, which has enabled us to conduct this research.

About this article

Cite this article

Li, X., Yan, Y. & Gong, X. Enhancing artificial radionuclides monitoring: a Bayesian statistical approach combined with the multi time-interval analysis method. J Radioanal Nucl Chem 333, 2121–2130 (2024). https://doi.org/10.1007/s10967-024-09394-w

DOI: https://doi.org/10.1007/s10967-024-09394-w