Abstract

Age-related deficits are often observed in emotion categorization tasks that include negative emotional expressions like anger, fear, and sadness. Stimulus characteristics such as facial cue salience and gaze direction can facilitate or hinder facial emotion perception. Using two emotion discrimination tasks, the current study investigated how older and younger adults categorize emotion in faces with varying facial cue similarity and with direct or averted gaze (Task 1) and in faces that appear on actors in congruent or incongruent contexts (Task 2). When context was included, the target’s gaze direction was averted toward emotionally laden objects in the background context on half of the trials. In both tasks, younger adults generally outperformed older adults. Discrimination performance was best when cue similarity was minimal. Negative facial emotion cues were interpreted through the lens of the context in which they appear, as facial emotion judgments in both age groups were impacted by background contextual emotion cues, especially when highly confusable negative emotions were evaluated. Although the contextual emotion cues were deemed irrelevant within task instructions, these cues were nevertheless integrated into one's percept. When emotion discrimination proved difficult, older adults were more inclined than younger adults to use the additional context to support their decision, suggesting that context plays a pivotal role in older adults’ everyday evaluation of emotion in social partners.

Introduction

Changes in emotion processing throughout adulthood have implications for social interaction, including seeking out or providing social support to those experiencing emotionally evocative problems and stressors (Blanchard-Fields, 2007; Scheibe & Carstensen, 2010). Therefore, the ability to accurately detect and decode emotion from one’s face and environment remains a crucial function with age. Although many studies have investigated age differences in emotion perception for static expressions in isolation (Charles & Campos, 2011; Isaacowitz & Stanley, 2011), facial emotion is unlikely to appear in everyday life without matching emotional context (e.g., evocative body language). Such context facilitates facial cue decoding, especially when cues are common to multiple emotion states and are easily confused, such as anger and disgust (Aviezer et al., 2008). In this special issue, factors beyond facial cues that are relevant to our experience, expression, and interpretation of emotion are examined. Within the literature examining emotion perception in adulthood, the experimental manipulation of facial cues (e.g., salience, conformation, or gaze) often generates a characteristic age-related deficit in which older adults make more recognition errors than younger adults when evaluating negatively-valenced, static facial expressions. The current study examined how negative emotion discrimination varies as a function of age, facial cue similarity, gaze direction, and contextually congruent or incongruent information. More specifically, we were interested in examining how a target’s eye gaze when averted toward contextually relevant information or directed toward the observer impacts older and younger adults’ abilities to discriminate between negative facial emotion pairings with varying degrees of cue similarity. Here, background contextual emotion information communicates its own emotion signal, as is routinely the case in everyday life. 
When facial cues offer an emotion signal that is difficult to interpret, how influential are other aspects of the observer’s environment in their interpretation of their social partners’ emotional states?

Past research has found that younger adults outperform older adults on emotion recognition tasks, particularly when labeling negative (e.g., anger, fear, and sadness) compared to positive emotions (e.g., happiness) (Murphy & Isaacowitz, 2010; Ruffman et al., 2008). This may be partly explained by older adults’ preference for positive over negative emotion information stemming from a lifespan shift in goals (Carstensen & Mikels, 2005). Observed age differences in emotion recognition can also be attributed to the methods used to investigate the process (Charles & Campos, 2011; Isaacowitz & Stanley, 2011). For example, when selecting one emotion label among four or six options, older adults are more likely than younger adults to make errors (Orgeta, 2010). However, this difference disappears when participants choose between only two emotion categories. Correctly eliminating competing emotion categories requires cognitive resources (e.g., switching evaluative criteria from one trial to the next) and draws out age differences in performance. Age differences in emotion recognition also depend on the expressive intensity of the facial expression, with little to no difference in perception when faces are highly expressive (Orgeta & Phillips, 2008) and larger differences when facial emotion cues are less salient (Mienaltowski et al., 2013, 2019). Lastly, age differences in emotion recognition are driven by how information is accrued from facial regions by observers. Older adults spend more time than younger adults looking at the bottom half of the face (i.e., mouth region) than the top half of the face (Chaby et al., 2017; Slessor et al., 2008; Sullivan et al., 2007), contributing to older adults’ success when categorizing mouth-dominant emotions like disgust and happiness (Wong et al., 2005), and to their deficits when perceiving eye-dominant emotions like sadness, anger, and fear (Beaudry et al., 2014; Calvo & Nummenmaa, 2008).

In addition to providing insight into a target’s emotional state, the eye region also signals where the target’s gaze is focused. When gaze location is detected by an observer, the target and observer may engage in joint attention. The observer accesses information from the environment that can be integrated with the target’s emotion cues to estimate the target’s emotionality. Older adults are not as effective as younger adults at gaze following and engaging in joint attention (Slessor et al., 2008). Consequently, older adults may not derive crucial contextual information found within the social environment that could be helpful to decoding facial expressions. In addition to alerting an observer to factors in the target’s external environment, gaze direction amplifies perceived emotion intensity (e.g., fear; Adams & Kleck, 2005). For older adults, though, gaze direction only weakly impacts perceived intensity (Slessor et al., 2010). Missing this subtle emotion signal may contribute to older adults’ emotion recognition deficits.

Although lab-based emotion recognition tasks often rely on static facial expressions devoid of social context, emotion cues are also imbued within one’s environment and body language. Context disambiguates facial cues shared across multiple emotion categories (Aviezer et al., 2008, 2011; Barrett & Kensinger, 2010). Background context has been manipulated in a variety of ways, like embedding faces on emotional scenes, placing faces on emotionally expressive bodies, and including emotionally laden objects or focal points (e.g., a dirty diaper or raised fist) (Aviezer et al., 2008; Ngo & Isaacowitz, 2015; Noh & Isaacowitz, 2013). Even when instructed to ignore them, non-facial emotion cues are often spontaneously perceived and guide one's interpretation of facial emotion (Aviezer et al., 2011; Foul et al., 2018; Meeren et al., 2005). When these contextual cues are incongruent with facial cues, increased response latency and erroneous categorization result. In other words, when context is available, it is used to disambiguate facial cues.

To enhance the ecological validity of their emotion recognition task, Noh and Isaacowitz (2013) explored the influence of context (e.g., emotionally laden object, body language) on age differences in emotion perception. Participants observed angry and disgusted faces in a neutral (or no) context, a congruent context (e.g., a disgusted face with a dirty diaper, and an angry face with a raised fist), and an incongruent context (e.g., a disgusted face with a raised fist, an angry face with a dirty diaper). When context and facial cues were congruent, older and younger adults displayed comparable emotion recognition performance. However, when incongruent, older adults struggled more than younger adults to inhibit the contextual influence. The participants’ visual scanning patterns demonstrated that older adults were more prone than younger adults to initially fixate on the context rather than on facial cues in both congruent and incongruent contexts relative to neutral contexts, regardless of the facial expression. One implication of this finding is that older adults may naturally use background contextual emotion cues to inform their evaluation of social partners’ emotional state.

The Current Study

Although studies have examined age differences in the impact of gaze direction on emotion perception or age differences in the impact of context on emotion perception, no study has combined all four variables (aging, cue similarity, gaze direction, and context) to explore how they interact to impact emotion recognition performance. The current study examined these factors across two emotion discrimination tasks using negative emotional expressions: one manipulating gaze direction without manipulating context, and one manipulating both gaze direction and context. Importantly, we wanted to further our understanding of how eye gaze influences the emotion perceived in social targets when context, which is often left out of studies that attempt to capture age differences in emotion perception, is included in the evaluation of emotions. In the first discrimination task, older and younger adults were presented with angry, sad, fearful, and disgusted facial expressions in the absence of context. Rather than taking a discrete emotions approach that focuses on including all possible pairings of the four emotions or all expression types in each experimental block (e.g., 4-option choice), facial expressions were blocked by cue similarity based on Aviezer et al. (2011): anger and disgust reflect high cue similarity, sadness and disgust reflect moderate cue similarity, and fear and disgust reflect low cue similarity. This operationalization of cue similarity is consistent with findings from the evaluation of distinctiveness of emotion categories via morphed combinations of pairings (Young et al., 1997) and from human and computer-modeled judgments of stimulus category membership (Katsikitis, 1997; Susskind et al., 2007). When evaluating facial stimuli to categorize expressed emotion, studies generally focus on the selection of the correct label from many possible options.
More confusable expressions garner more errors, typically reflected in a confusability matrix (e.g., Isaacowitz et al., 2007, p. 159). Are the errors caused by cue similarity or by the demand to shift the criteria for each judgment from trial to trial? Blocking expressions in this manner allowed us to focus on cue similarity as a factor that influences emotion perception. Within a block, cognitive demand is minimized because people are choosing between two options throughout the block and do not have to shift criteria for making discrimination judgments from one trial to the next (Orgeta, 2010). Instead, participants focus on the specific pairing at hand in each block, reducing the opportunity for emotion type to confound the impact of gaze direction (and later context in the second task). This design also allowed for the use of signal detection techniques to characterize negative emotion discrimination. Data from the first negative emotion discrimination task (Task 1) can address whether manipulating target gaze direction exacerbates age differences in negative emotion discrimination when gaze direction affects facial cue interpretation.

In the second negative discrimination task (Task 2), the influence of emotional context (i.e., body posture and external object) was explored by partly replicating Noh and Isaacowitz (2013) and extending their work by manipulating gaze direction. Negative expressions with high facial cue similarity (i.e., anger/disgust) and low facial cue similarity (i.e., fear/disgust; Aviezer et al., 2011) were presented in congruent and incongruent contexts. Again, gaze direction was manipulated such that the target’s gaze direction was either directed toward the observer or averted away from the observer and toward an emotionally laden contextual feature (e.g., a dirty diaper). While we know that older adults are generally less effective at gaze following compared to younger adults, the question as to whether older adults could utilize synergistic, congruent face-context cue combinations to their benefit when evaluating negative facial emotion remains an open one. Could it be the case that older adults’ discrimination performance would improve if the actor’s gaze is averted toward contextually relevant information compared to when the actor’s gaze is directed toward the observer? Moreover, the influence that context has on emotion judgments could be contingent upon the saliency of the emotion cues found on the face and the extent to which the eye gaze directs the observer’s attention to contextually relevant details that may be used to help disambiguate facial cues. With the findings from the current study, we aimed to disentangle the different factors' (i.e., facial cue similarity, gaze direction, and context congruency) contributions to age differences in emotion discrimination abilities in order to enhance our knowledge of how emotion perception skills change throughout the lifespan.

Task 1—Emotion Discrimination without Contextual Information

The goal of Task 1 was to explore how younger and older adults differ in their interpretation of emotion cues for negative expressions when the target's gaze is directed toward or averted from the observer. Prior studies have asked participants to indicate whether facial stimuli have a direct or averted gaze or to rate the emotional intensity of facial expressions (e.g., Adams & Kleck, 2005). The current study asks different questions: (a) when eye gaze is averted, are the age differences in negative emotion discrimination exacerbated when the gaze direction is important to the interpretation of one emotion in the pairing (i.e., fear versus disgust), and (b) do averted gaze stimuli lead to similar reductions in discrimination performance for younger and older adults when an averted gaze introduces ambiguity to the expression (i.e., sadness versus disgust)?

In prior work, Adams and Kleck (2005) found that, when investigating a younger adult sample, perceived sad and fearful faces were rated as more intense when depicted with an averted relative to a direct gaze. When an averted gaze does not support the emotion signal, like when discriminating between anger and disgust, performance may be better when targets display a direct gaze instead. For instance, the same study showed that younger adults rated angry expressions with a direct gaze as being more emotionally intense. An averted eye gaze for angry expressions, absent context, could introduce additional ambiguity when decoding the true emotional state of the target. This ambiguity may further impair older adults' perception of anger in such instances, given that they tend to be poorer at recognizing anger when emotional cues are less salient (Mienaltowski et al., 2013, 2019) and have difficulty discerning subtle differences in angry facial expressions as a function of eye gaze direction (Slessor et al., 2010).

Overall, we expected younger adults to consistently outperform older adults at each level of cue similarity. However, we expected these age differences to be more apparent for the high similarity pairing (anger/disgust) than for moderate and low similarity pairings (sadness/disgust and fear/disgust, respectively), given that older adults struggle more in perceiving subtle than more salient differences in facial cues. We also expected that, to the extent that an averted eye gaze has the power to reduce cue saliency, members of both age groups would show reduced discrimination performance for each pairing. However, given the high facial cue similarity for the anger/disgust pairing, older adults may make more errors than younger adults when angry and disgusted targets are depicted with an averted gaze.

Method

Participants

Prior to data collection, a power analysis (Faul et al., 2007) revealed that a sample size of N = 80 allows for the detection of moderate (ηp2 = 0.06) and moderate-to-large (ηp2 = 0.10) effect sizes with a power of 0.80 in 2 (between-subjects) × 3 (within-subjects) and 2 (between-subjects) × 2 (within-subjects) mixed model ANOVAs, respectively. These effect sizes were derived from prior research investigating age differences in emotion discrimination (Mienaltowski et al., 2013) and are comparable to those observed in Noh and Isaacowitz (2013; n = 84).
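Power-analysis software commonly expects Cohen's f rather than partial eta squared as its effect size input. The short sketch below shows the standard conversion for the two target effect sizes named above; the function name is illustrative and is not taken from the authors' materials.

```python
import math


def eta_p2_to_cohens_f(eta_p2: float) -> float:
    """Convert partial eta squared to Cohen's f via f = sqrt(eta / (1 - eta))."""
    if not 0.0 <= eta_p2 < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1.0 - eta_p2))


# The two target effect sizes reported in the power analysis.
for eta in (0.06, 0.10):
    print(f"eta_p2 = {eta:.2f} -> Cohen's f = {eta_p2_to_cohens_f(eta):.3f}")
```

Under this conversion, the moderate effect corresponds to f of roughly 0.25 and the moderate-to-large effect to f of roughly 0.33.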

Younger adults (n = 48; M = 19.4, SD = 1.8; aged 18–26 years; 52% female; 93% not Hispanic or Latino; 75% Caucasian, 9.1% African American) were recruited from undergraduate psychology courses and received course credit for participating. Older adults (n = 45; M = 70.5, SD = 5.7; aged 60–81 years; 56% female; 98% not Hispanic or Latino; 91% Caucasian, 6.7% African American) were recruited from the community and received $20 in compensation for participating. The same participants completed both Task 1 and Task 2. All older adults were screened for dementia using the Mini-Mental State Examination (MMSE; Folstein et al., 1975). None displayed performance indicative of a risk for mild cognitive impairment (i.e., < 17 out of 21; n = 48; M = 20.7, SD = 0.6). Please refer to Table 1 for sample demographics and cognitive assessment characteristics. All protocols were approved by a university Institutional Review Board (IRB# 19–080). Individuals provided informed consent before participating. Data and stimuli are available at OSF: https://osf.io/th8zx/?view_only=e667f09325874047b1be401889b435d0.

Stimuli and Materials

Emotionally Expressive Faces

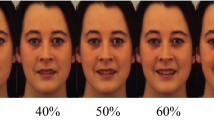

Facial stimuli were adapted from the NimStim Facial Stimulus Set (Tottenham et al., 2009). Closed-mouth angry, fearful, sad, and disgusted facial expressions of eight Caucasian actors (4 female/4 male) were used in Task 1. We decided to include only closed-mouth expressions because emotions depicted with an open mouth could be more emotionally salient, regardless of absolute differences in expressive intensity, and thereby reduce task difficulty and possibly facilitate emotion discrimination performance differentially for older and younger adults. In prior work, Mienaltowski and colleagues (2019) found that age-related emotion perception deficits occurred at lower expressive intensities. At higher perceived intensities (e.g., posed exaggerated open mouth expressions), ceiling effects can make it difficult to investigate the competing influences of age and other stimulus properties. All 32 stimuli depicted emotion cues using a direct or averted gaze and were presented in color. Each stimulus was edited in Photoshop to move eye irises to the left and to the right from the central location in the eye socket by 3 pixels, a distance large enough to ensure detection (Slessor et al., 2008), creating two different gaze averted stimuli. No target had facial hair to obscure the available emotion cues. Example stimuli are provided in panels A–C of Fig. 1.

Images A–C were presented during Task 1 and show an example of a target expressing anger in a neutral context with a (A) direct gaze, (B) left averted gaze, and (C) right averted gaze. Images D–I were presented during Task 2. Images D and E represent a congruent trial (i.e., a disgusted face in a disgusted context) with a direct (Image D) and averted (Image E) gaze. Images F and G represent an incongruent trial in the high similarity condition (i.e., an angry face in a disgusted context) with a direct (Image F) and averted (Image G) gaze. Images H and I represent an incongruent trial in the low similarity condition (i.e., a fearful face in a disgusted context) with a direct (Image H) and averted (Image I) gaze.

Procedure

Upon providing informed consent, participants were seated in an individual testing room with a computer station and a chinrest. Participants completed 384 trials in three blocks of 128 trials. Images were presented centrally on an ASUS VG248QE 24-inch full HD 1920 × 1080-pixel resolution monitor with a 100 Hz refresh rate. In each block, participants observed eight targets expressing two emotions. Averted gaze stimuli were repeated twice (50% of trials), and direct gaze stimuli were repeated four times (50% of trials) for each emotion pair, resulting in 2 (emotion) × 8 (target) × 8 (gaze × rep) trials, or 128 trials. Blocks were randomly counterbalanced, and stimuli were randomly presented in each block. Trial blocks operationalized the degree of similarity between the emotions included in this task, as described above (high similarity = anger/disgust, moderate similarity = sadness/disgust, and low similarity = fear/disgust). Blocking in this fashion minimizes the need to consider non-relevant alternatives (i.e., 2-option forced-choice), encourages participants to focus on specific emotion cue discrimination criteria, and makes it easier for participants to avoid considering other criteria that were not diagnostic of the emotions in the pairing (e.g., fear cues in the anger/disgust block). Including additional comparisons (anger/fear, sadness/anger, and sadness/fear), although informative, would demand additional time from the participants and may cause fatigue. This concern was particularly salient given that participants completed a second negative emotion discrimination task later in this study. Face stimuli were approximately 13˚(h) × 10˚(w) when viewed at a fixed distance of 57.3 cm. Hit rates and false alarm rates were calculated from 64 trials per cell of the 3 (similarity) × 2 (gaze direction) design.
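The trial arithmetic and stimulus dimensions described above can be checked with a brief sketch. It assumes that the two averted repetitions apply to each of the left and right averted images, which is what the 2 × 8 × 8 structure implies; the function and field names are hypothetical and do not come from the authors' experiment code.

```python
import math
from collections import Counter
from itertools import product


def build_block(emotions, n_targets=8):
    """Enumerate one block: per emotion and target, 4 direct-gaze trials
    plus 2 left-averted and 2 right-averted trials (8 gaze x rep slots)."""
    gaze_slots = (
        [("direct", "center")] * 4
        + [("averted", "left")] * 2
        + [("averted", "right")] * 2
    )
    return [
        {"emotion": emotion, "target": target, "gaze": gaze, "side": side}
        for emotion, target, (gaze, side) in product(
            emotions, range(n_targets), gaze_slots
        )
    ]


def stimulus_size_cm(angle_deg, distance_cm=57.3):
    """Physical size subtending a given visual angle at a viewing distance."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


block = build_block(("anger", "disgust"))
by_gaze = Counter(trial["gaze"] for trial in block)
print(len(block), dict(by_gaze))  # total trials and trials per gaze direction
print(round(stimulus_size_cm(13), 1), round(stimulus_size_cm(10), 1))
```

At a viewing distance of 57.3 cm, one degree of visual angle spans close to 1 cm on the screen, so the faces measured roughly 13 cm by 10 cm under this calculation.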

In Task 1, participants observed face stimuli blocked by each of three emotion pairings, indicating which emotion they observed on each trial using the 1-key or 3-key on the keyboard number pad. At the beginning of Task 1, participants completed ten practice trials and were provided feedback after each practice trial. Feedback was only provided during the practice trials. A central fixation cross appeared for 300 ms before each stimulus, and responding was self-paced. Participants were informed that they would always be asked to decide between disgust and a second emotion, such as anger, fear, or sadness. Participants were instructed to be mindful of their choices because they would be unable to change a response once provided but were encouraged to select an emotion label to the best of their ability.

Results

Discrimination (d’) scores were calculated from participants’ hit rates and false alarm rates (Macmillan & Creelman, 2005). Disgusted expressions were the reference emotion and angry, sad, or fearful expressions served as the target emotion for d’ scores. To provide a complete picture of participant behavior in the current study, we also analyzed response times because they serve as an additional indicator of judgment difficulty within the task. Longer response times suggest greater judgment difficulty. Moreover, if response times and discrimination performance are generally consistent, then it is plausible to assume that a speed-accuracy tradeoff is not taking place. As such, we analyzed median reaction times (RTs), given the tendency for RT data to be positively skewed. Note that RTs > 2 SD above the mean did not exceed 6.5% of the trials for either task. Two younger adults were excluded from analyses for responding within 100 ms on numerous (> 25) trials.
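For readers unfamiliar with signal detection measures, the sketch below shows one common way to compute d’ as the difference between the z-transformed hit rate and false alarm rate. The log-linear correction for rates of 0 or 1 is an assumption made here for illustration; the text does not state which correction, if any, the authors applied. The function name and the example counts are hypothetical.

```python
from statistics import NormalDist


def d_prime(hits, n_signal, false_alarms, n_noise):
    """d' = z(hit rate) - z(false alarm rate).

    Assumption: a log-linear correction (add 0.5 to each count and 1 to each
    trial total) keeps rates away from 0 and 1, where z is undefined.
    """
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one cell: 32 target-emotion trials (signal)
# and 32 disgust trials (noise).
print(round(d_prime(hits=28, n_signal=32, false_alarms=4, n_noise=32), 2))
```

With 32 signal and 32 noise trials per cell, perfect performance under this correction yields a d’ slightly above 4, which gives a sense of the practical ceiling on the measure.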

For Task 1, d' scores were submitted to a 2 (age group: younger/older) × 3 (similarity: high/moderate/low) × 2 (gaze direction: direct/averted) mixed-model, multifactorial analysis of variance (ANOVA). Median RT data were submitted to a 2 (age group: younger/older) × 3 (similarity: high/moderate/low) × 2 (emotion: target emotion/disgust) × 2 (gaze direction: direct/averted) mixed-model, multifactorial ANOVA. For all analyses, age group was the only between-subjects factor; all other factors were within-subjects. Alpha levels were corrected for the post-hoc comparisons (e.g., to compare younger and older adults’ discrimination performance across the three similarity pairings; 0.05/3 = 0.017) to avoid probability pyramiding.

Discrimination Performance (d’)

The ANOVA revealed significant main effects of age group, F(1, 87) = 5.98, p = 0.016, ηp2 = 0.064, gaze direction, F(1, 87) = 10.37, p = 0.002, ηp2 = 0.107, and similarity, F(2, 174) = 134.48, p < 0.001, ηp2 = 0.606, as well as a marginal Similarity × Age group interaction, F(2, 174) = 2.83, p = 0.062, ηp2 = 0.032. Condition means are displayed in panels A and B of Fig. 2. Younger adults outperformed older adults, and discrimination was better for direct than averted gaze expressions. Performance significantly, incrementally improved as emotion similarity decreased.
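The reported partial eta squared values can be recovered from each F statistic and its degrees of freedom. The sketch below applies the standard identity to the four effects reported above; the function name is illustrative, and small discrepancies in the third decimal place are expected because the F values are themselves rounded.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from F: (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported value) taken from the text above.
reported = [
    ("age group", 5.98, 1, 87, 0.064),
    ("gaze direction", 10.37, 1, 87, 0.107),
    ("similarity", 134.48, 2, 174, 0.606),
    ("similarity x age group", 2.83, 2, 174, 0.032),
]

for label, f_value, df1, df2, stated in reported:
    computed = partial_eta_squared(f_value, df1, df2)
    print(f"{label}: computed {computed:.3f}, reported {stated:.3f}")
```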

Independent samples t-tests revealed that the marginal interaction emerged because younger adults outperformed older adults in the high similarity pairing, t(87) = 3.40, padj = 0.016, d = 0.72, but not in the moderate and low similarity pairings, ts(87) ≤ 1.43, ps ≥ 0.15.

Median Reaction Time

The ANOVA revealed significant main effects of age group, F(1, 87) = 34.40, p < 0.001, ηp2 = 0.29, similarity, F(2, 174) = 30.35, p < 0.001, ηp2 = 0.26, emotion, F(1, 87) = 16.59, p < 0.001, ηp2 = 0.16, and gaze direction, F(1, 87) = 9.68, p = 0.003, ηp2 = 0.10, as well as Age group × Emotion, F(1, 87) = 7.88, p = 0.006, ηp2 = 0.08, Similarity × Gaze direction, F(2, 174) = 11.54, p < 0.001, ηp2 = 0.12, Emotion × Gaze direction, F(1, 87) = 8.87, p = 0.004, ηp2 = 0.09, Gaze direction × Emotion × Age group, F(1, 87) = 5.51, p = 0.021, ηp2 = 0.06, and Similarity × Emotion × Gaze direction interactions, F(2, 174) = 10.68, p < 0.001, ηp2 = 0.11. Condition means are available in Table 2. RTs were faster for direct than averted gaze expressions, and RTs significantly, incrementally declined with decreasing similarity. Generally, younger adults’ RTs were faster than older adults’ RTs, but age group interacted with gaze direction and emotion. Emotion in this ANOVA compares RT to the target emotion (i.e., anger, sadness, or fear) with RT to the reference emotion (i.e., disgust). Emotion effects that do not involve similarity fail to offer clear interpretations and will not be discussed further.

To decompose the Similarity × Emotion × Gaze direction interaction, we examined how the Emotion × Gaze direction interaction varied by similarity. For the high similarity pairing, main effects of gaze direction, F(1, 88) = 18.76, p < 0.001, ηp2 = 0.18, and emotion, F(1, 88) = 8.26, p = 0.005, ηp2 = 0.09, were qualified by a Gaze direction × Emotion interaction, F(1, 88) = 15.63, p < 0.001, ηp2 = 0.15. Post-hoc tests of the simple main effects for this condition demonstrated that: (a) RTs were faster to direct relative to averted gaze expressions for angry (padj < 0.001) but not for disgusted stimuli; (b) for direct gaze expressions, RTs were faster for angry relative to disgusted stimuli (padj < 0.001); and (c) for averted gaze expressions, RTs to angry and disgusted stimuli were equivalent. For the moderate similarity pairing, a main effect of emotion, F(1, 88) = 16.12, p < 0.001, ηp2 = 0.16, emerged because RTs were faster for sad relative to disgusted expressions (p < 0.001). For the low similarity pairing, no effects were observed.

Discussion of Task 1

The goal of Task 1 was to explore older and younger adults’ ability to discriminate between pairs of negative emotions with varying degrees of facial cue overlap. In addition, we wanted to examine whether eye gaze direction impacted age differences in emotion perception and perhaps interacted with cue similarity. Consistent with studies examining both human and computer models for emotion categorization, negative emotion discrimination performance declined and required more time as cue similarity increased (Aviezer et al., 2011; Katsikitis, 1997; Susskind et al., 2007; Young et al., 1997). Relative to the predictions made for Task 1, although older adults required more time than younger adults to respond, age effects in negative emotion discrimination performance were limited to the high similarity condition. Younger adults outperformed older adults here, but not in the other cue similarity conditions where differences between the emotions were more salient (Mienaltowski et al., 2013, 2019; Slessor et al., 2010).

Across cue similarity conditions, a main effect of gaze was observed such that discrimination performance was better for direct than for averted gaze stimuli. This outcome held regardless of age, contrary to our predictions. In the absence of additional background context, a direct eye gaze may be valuable for negative emotion categorization. An averted eye gaze may reduce the diagnostic value of eye-related information to the categorization judgment when there is no focal target for the averted gaze in the background. With respect to the high similarity condition, this finding is supported by response time differences as well. Specifically, for angry expressions, participants required more time to evaluate averted gaze than direct gaze stimuli. The absence of interactions between age group and gaze direction for negative emotion discrimination performance in Task 1 runs counter to findings from prior research showing that younger adults are more sensitive than older adults to gaze direction (Slessor et al., 2008, 2010). Also, although prior research with younger adults found that an averted eye gaze facilitates fear perception in targets (Adams & Kleck, 2005), an averted eye gaze did not facilitate discrimination performance in our low similarity condition (i.e., fear versus disgust). It is possible, however, that a ceiling effect in this condition (i.e., d' ≈ 4), due to the clear difference between fearful and disgusted expressions, reduced our ability to replicate this finding.

Task 2—Emotion Discrimination with Contextual Information

In the second negative emotion discrimination task, the influence of emotional context was explored by partly replicating Noh and Isaacowitz (2013) and extending their work by manipulating gaze direction. As in Task 1, negative expressions with high facial cue similarity (i.e., anger/disgust) and low facial cue similarity (i.e., fear/disgust; Aviezer et al., 2011) were presented in congruent and incongruent contexts. Again, gaze direction was manipulated such that the target’s gaze direction was either directed toward the observer or averted away from the observer toward an emotionally laden contextual feature. Consistent with prior studies, both age groups were expected to attend to the irrelevant contextual details (Aviezer et al., 2011), but older adults were expected to do so to a greater extent (Noh & Isaacowitz, 2013). Failure to inhibit contextual information would benefit emotion discrimination on trials with face-context congruity. However, older adults were expected to perform worse when facial cues and contextual details were incongruent, given their increased reliance on contextual influences when available in emotion recognition tasks.

Prior research demonstrates that younger adults are sensitive to gaze direction when evaluating emotional stimuli (Adams & Kleck, 2005; Slessor et al., 2010). For instance, an averted target gaze can facilitate perceptions of fear and a direct target gaze can facilitate perceptions of anger. Unlike in these studies, in Task 2 here, gaze was manipulated concurrently with background context. Participants were instructed that the background is not relevant to the judgment (as in Noh & Isaacowitz, 2013). If background context and target gaze direction influence the observer, an averted gaze could orient the observer toward context that (a) is useful for disambiguating facial cues when emotionally congruent with these cues, or (b) impairs facial emotion perception when emotionally incongruent with these cues. Each of these possibilities seems more likely when the observer is evaluating stimuli in the high cue similarity condition than in the low cue similarity condition. The overlap between facial cues creates uncertainty that may be mitigated by background context, especially if the target's eyes are averted toward the context instead of being directed toward the observer. Older adults do not inhibit the influence of background context as much as younger adults do (Noh & Isaacowitz, 2013), so older adults may show larger context congruency effects than younger adults. However, given that older adults attend less to the eye region compared to the mouth region (Chaby et al., 2017; Slessor et al., 2008; Sullivan et al., 2007), eye gaze and context may not interact for older adults to the same extent as for younger adults.

Method

Participants

The same younger (n = 48) and older (n = 45) adults who completed Task 1 also completed Task 2. Please refer to Table 1 for sample demographics and cognitive assessment characteristics. Data and stimuli are available at OSF: https://osf.io/th8zx/?view_only=e667f09325874047b1be401889b435d0.

Stimuli and Materials

Emotionally Expressive Faces

As in Task 1, facial stimuli were adapted from the NimStim Facial Stimulus Set (Tottenham et al., 2009). The same four actors depicting angry, disgusted, and fearful faces with a direct and averted gaze were included in Task 2. In order to reduce the likelihood that participants would experience fatigue during the study, we did not include the sad/disgust facial expression pair block in Task 2. Note that, relative to Task 1, the duration of this task was lengthened by including a within-subjects manipulation of context congruency.

Emotionally Expressive Contexts

Contexts were piloted on older and younger adults to ensure that both the body language and emotionally laden objects conveyed the intended emotion for both age groups (see Supplementary Materials). For each emotion category, three scenes were selected in which (a) the actor displayed a body posture consistent with the emotion reflected in the image, and (b) there was a focal object in the image that was consistent with the target emotion (Aviezer et al., 2011). Because Task 2 focused only on comparing anger, disgust, and fear, there were 12 total contexts. Two targets (either both male or both female) were paired with a given set of three context images, creating four unique groups of target/context pairings (i.e., Targets A and B with Contexts 1–3, Targets C and D with Contexts 4–6, Targets E and F with Contexts 7–9, and Targets G and H with Contexts 10–12). This combination strategy offered stimulus variety in Task 2. Each context image was edited to insert direct and averted gaze expressions, creating face-context congruent and incongruent stimuli. When averted, gaze was directed toward the focal object in the image carrying emotional connotations. The inserted face stimuli did not interfere with participants’ ability to observe the target's body posture. Example stimuli are provided in panels D–I of Fig. 1.

Procedure

After completing Task 1, participants completed Task 2. Again, blocks were operationalized in terms of emotion cue similarity (high similarity = anger/disgust, and low similarity = fear/disgust). Task 1 and Task 2 were not counterbalanced to avoid differential practice effects (i.e., practice with high and low but not moderate cue similarity). Face stimuli used in Task 1 were also used in Task 2. Background context varied from 10.8–20.7˚(h) × 13.8–27˚(w) visual angle when viewed at a fixed distance of 57.3 cm. Participants completed 384 trials in two blocks of 192 trials. In each block, participants observed eight targets expressing two negative emotions in congruent and incongruent contexts. Targets held a direct gaze in half of the trials and an averted gaze toward a focal object in the context in the other half. Each stimulus was repeated three times for each emotion pairing, resulting in 2 (emotion) × 8 (target) × 6 (Gaze × Rep) × 2 (context congruence) trials, or 192 trials. Blocks were randomly counterbalanced, and stimuli were randomly presented within each block. Hit rates and false alarm rates were calculated from 48 trials per cell of the 2 (similarity) × 2 (gaze direction) × 2 (context congruency) design.
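The trial counts in the preceding paragraph can be checked by enumerating the design. The following is a minimal sketch that crosses the factors exactly as they are described above; the label strings (e.g., `target_1`, `other`) are placeholders introduced for illustration and are not the stimulus names used in the study.

```python
from itertools import product

# Factor levels taken from the Procedure; the label strings are illustrative only.
emotions = ["disgust", "other"]                 # two emotions per block
targets = [f"target_{i}" for i in range(1, 9)]  # eight targets
gazes = ["direct", "averted"]
repetitions = [1, 2, 3]                         # each stimulus shown three times
congruency = ["congruent", "incongruent"]
blocks = ["high_similarity", "low_similarity"]

# Fully cross the within-block factors: 2 x 8 x (2 x 3) x 2
block_trials = list(product(emotions, targets, gazes, repetitions, congruency))
trials_per_block = len(block_trials)
total_trials = trials_per_block * len(blocks)

# Each block contributes four gaze x congruency cells to the d' analysis
trials_per_cell = trials_per_block // (len(gazes) * len(congruency))

print(trials_per_block, total_trials, trials_per_cell)
```

Under this enumeration, each 48-trial cell contains 24 trials per emotion, which is the number of trials available for each hit rate and each false alarm rate.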

Again, images were presented centrally on an ASUS VG248QE 24-inch full HD 1920 × 1080-pixel resolution monitor with a 100 Hz refresh rate. Participants indicated which emotion they observed on each trial using the 1-key or 3-key on the keyboard number pad. At the beginning of Task 2, participants completed six practice trials and were provided feedback after each trial. Feedback was only provided during the practice trials. A central fixation cross appeared for 300 ms before each stimulus and responding was self-paced. Before each block, participants were informed that they would always be asked to decide between disgust and a second emotion, such as anger or fear. Participants were instructed to be mindful of their choices because they would be unable to change a response once provided but were encouraged to select an emotion label to the best of their ability. Participants were instructed to categorize the emotion expressed on the face in each trial and were told that the background contexts were randomly assigned to each facial expression, consistent with the ignore condition of Aviezer et al. (2011). After completing Task 2, participants completed a demographics questionnaire and cognitive assessments. Finally, participants were thanked for their participation, debriefed, and compensated.

Results

Discrimination (d’) scores were calculated from participants’ hit rates and false alarm rates. Median RTs were analyzed as well. For Task 2, the same analysis plan was employed as in Task 1; however, each test also included context congruency (2: congruent/incongruent), and cue similarity consisted of two levels (high/low) instead of three. For all analyses, age group was the only between-subjects factor; all other factors were within-subjects. Alpha levels were corrected for the post-hoc comparisons to avoid probability pyramiding in the same manner as Task 1.
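For readers unfamiliar with the measure, d’ is the difference between the z-transformed hit rate and the z-transformed false alarm rate. The sketch below illustrates that calculation under two assumptions that are not stated in the text: that one emotion in each pair is treated as the signal, and that a log-linear correction is applied so that rates of 0 or 1 remain finite. The function name `d_prime` and the example counts are hypothetical.

```python
from statistics import NormalDist


def d_prime(hits, signal_trials, false_alarms, noise_trials):
    """Return d' = z(hit rate) - z(false alarm rate).

    A log-linear correction (add 0.5 to each count and 1 to each total) is
    used here as an assumption; the source does not say how extreme rates
    of 0 or 1 were handled.
    """
    hit_rate = (hits + 0.5) / (signal_trials + 1)
    fa_rate = (false_alarms + 0.5) / (noise_trials + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one cell with 24 signal and 24 noise trials
chance_level = d_prime(hits=12, signal_trials=24, false_alarms=12, noise_trials=24)
good_discrimination = d_prime(hits=20, signal_trials=24, false_alarms=4, noise_trials=24)

print(round(chance_level, 2), round(good_discrimination, 2))
```

Equal hit and false alarm rates give a d’ of zero (chance discrimination), and larger positive values indicate better discrimination between the two emotions.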

Discrimination Performance (d’)

The ANOVA revealed significant main effects of age group, F(1, 87) = 4.88, p = 0.030, ηp2 = 0.05, similarity, F(1, 87) = 132.41, p < 0.001, ηp2 = 0.60, gaze direction, F(1, 87) = 5.06, p = 0.027, ηp2 = 0.06, and context congruency, F(1, 87) = 193.59, p < 0.001, ηp2 = 0.69, as well as Age group × Context congruency, F(1, 87) = 6.61, p = 0.012, ηp2 = 0.07, Similarity × Context congruency, F(1, 87) = 11.57, p = 0.001, ηp2 = 0.12, and Gaze direction × Context congruency interactions, F(1, 87) = 10.12, p = 0.002, ηp2 = 0.10. Condition means are reported in panels A–D in Fig. 3. Generally, younger adults outperformed older adults. Performance was better in the low similarity than in the high similarity pairing, and performance was better for direct relative to averted gaze stimuli. However, each of these main effects was moderated by context congruency.
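The effect sizes above can be related to the F ratios through the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The following sketch applies that identity to the four main effects reported in this paragraph; it is offered as a reading aid rather than as a description of the authors' analysis software, and the helper name `partial_eta_squared` is introduced here for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# (effect, F, df_effect, df_error, reported value) copied from the d' ANOVA above
reported = [
    ("age group", 4.88, 1, 87, 0.05),
    ("similarity", 132.41, 1, 87, 0.60),
    ("gaze direction", 5.06, 1, 87, 0.06),
    ("context congruency", 193.59, 1, 87, 0.69),
]

for effect, f_value, df1, df2, eta_reported in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    # Allow 0.01 of slack because both F and eta are rounded in the text
    agrees = abs(eta - eta_reported) < 0.01
    print(f"{effect}: computed {eta:.3f}, reported {eta_reported:.2f}, agrees={agrees}")
```

The tolerance matters for values near a rounding boundary: the gaze direction effect computes to roughly 0.055 from the rounded F, which is compatible with the reported 0.06 once rounding of F is taken into account.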

Fig. 3 Mean emotion discrimination performance for each similarity and context congruency condition for younger (n = 46) and older (n = 45) adults. Panels A and B contain the mean d’ for younger adults (A) and older adults (B) in the high similarity condition (A/D = Anger/Disgust). Panels C and D contain the mean d’ for younger (C) and older (D) adults in the low similarity condition (F/D = Fear/Disgust). Error bars reflect ± 1 standard error.

The Age group × Context congruency interaction emerged because younger adults outperformed older adults for expressions presented in incongruent contexts, t(86.10) = 2.61, padj = 0.01, d = 0.56, but no age differences emerged for expressions found in congruent contexts, t(87) = 0.14, p = 0.89. Context congruency also moderated the impact of similarity and gaze direction. For both high and low similarity pairings, discrimination performance was lower in incongruent relative to congruent contexts, ts(88) = 10.16–13.92, padj < 0.001, ds = 1.08–1.48. Costs to performance were larger when discriminating between stimuli with high cue similarity (anger/disgust). For the Gaze direction × Context congruency interaction, performance for direct and averted stimuli was equivalent in congruent contexts (Δd’ = 0.05), t(88) = 0.70, padj = 0.49. However, in incongruent contexts, performance was lower for averted compared to direct gaze stimuli (Δd’ = 0.18), t(88) = 4.19, padj < 0.001, d = 0.44, possibly because the averted gaze drew attention to the key contextual feature in the incongruent background.
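The text does not state how Cohen's d was computed for the within-subjects comparisons. The values in this paragraph are, however, consistent with the common convention d = t / √n, where n = df + 1 is the number of paired observations. The sketch below shows that conversion; treating it as the formula actually used is an assumption, and `paired_cohens_d` is a name introduced here for illustration.

```python
import math


def paired_cohens_d(t_value, df):
    """Cohen's d for a paired-samples t-test, assuming d = t / sqrt(n) with n = df + 1."""
    n = df + 1
    return t_value / math.sqrt(n)


# t values and degrees of freedom copied from the paragraph above
congruency_cost_low = paired_cohens_d(10.16, 88)
congruency_cost_high = paired_cohens_d(13.92, 88)
gaze_in_incongruent = paired_cohens_d(4.19, 88)

print(round(congruency_cost_low, 2), round(congruency_cost_high, 2), round(gaze_in_incongruent, 2))
```

The three results round to the effect sizes reported for the congruency comparisons (1.08 and 1.48) and for the gaze comparison in incongruent contexts (0.44).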

Median Reaction Time

The ANOVA revealed significant main effects of age group, F(1, 87) = 41.11, p < 0.001, ηp2 = 0.32, similarity, F(1, 87) = 17.02, p < 0.001, ηp2 = 0.16, gaze direction, F(1, 87) = 5.15, p = 0.026, ηp2 = 0.06, and context congruency, F(1, 87) = 19.57, p < 0.001, ηp2 = 0.18, as well as Similarity × Emotion, F(1, 87) = 7.92, p = 0.006, ηp2 = 0.08, and Age × Similarity × Emotion interactions, F(1, 87) = 14.16, p < 0.001, ηp2 = 0.14. Condition means are available in Table 3. Generally, younger adults responded faster than did older adults. RTs were faster for direct relative to averted gaze expressions, for low relative to high similarity trials, and for congruent relative to incongruent trials.

To decompose the Age × Similarity × Emotion interaction, separate Similarity × Emotion ANOVAs were conducted for older and younger adults. Younger adults displayed a main effect of similarity, F(1, 43) = 15.25, p < 0.001, ηp2 = 0.26; RTs were faster for low similarity trials than for high similarity trials. Older adults displayed a main effect of similarity, F(1, 44) = 5.06, p = 0.03, ηp2 = 0.10, qualified by a Similarity × Emotion interaction, F(1, 44) = 13.69, p < 0.001, ηp2 = 0.24. Older adults had longer RTs for disgusted relative to angry expressions in the high similarity pairing, t(44) = 3.37, padj = 0.002, d = 0.50, but had significantly faster RTs for disgusted relative to fearful expressions in the low similarity pairing, t(44) = 2.91, padj = 0.006, d = 0.43. Between similarity conditions, older adults' RTs were longer for disgusted facial expressions in the high relative to the low similarity condition, t(44) = 3.45, padj = 0.001, d = 0.51, but their RTs for angry and fearful expressions did not differ, t(44) = 1.08, p = 0.29.

Discussion of Task 2

The goal of Task 2 was to examine the roles that facial cue similarity, target eye gaze direction, and the congruence between background context and facial cues play in younger and older adults' negative emotion discrimination performance. Consistent with our predictions and past research, older adults were worse at and slower than younger adults when discriminating between negative facial expressions presented in incongruent contexts but were no different from younger adults when negative facial expressions were presented in congruent contexts. When evaluating expressions with highly similar facial cues, congruent contexts facilitated emotion discrimination and RT. However, incongruent contexts created a cost to discrimination performance, especially when the emotions being compared were easily confusable. For both the low and high cue similarity conditions, the difference in performance for trials with congruent and incongruent backgrounds was larger for older adults than for younger adults, replicating Noh and Isaacowitz (2013), and confirming that older adults may be more inclined than younger adults to integrate all available information when forming their evaluation of the target's emotion—even if that information is deemed irrelevant to the task at hand by the instructions provided.

Clearly, background contextual cues affected both younger and older adults’ discrimination performance. When the target’s facial cues are inconsistent with the background contextual emotion cues, an averted gaze may serve as a powerful indicator of the value added by the contextual cues when evaluating the emotional state of our social partners in the wild. Objectively speaking, performance for both younger and older adults was worse on these trials when the target’s gaze was averted toward emotionally laden contextual information, suggesting that the contextual cues were used to attempt to disambiguate the facial emotion signal.

Exploratory Comparison between Task 1 and Task 2

Although not initially proposed at the outset of the study, a cost/benefit analysis of additional context could be captured via a direct comparison of negative emotion discrimination performance in Task 1 (no context) with performance in Task 2 (with context), relying strictly on the high and low similarity conditions shared in both tasks. Observed costs and benefits to discrimination performance when comparing trials that included background contextual emotion cues to those that did not can gauge the participants’ reliance on contextual cues during responding. Mixed-model ANOVAs were conducted to examine how discrimination performance (see Fig. 4) varied by context (neutral, congruent, incongruent), cue similarity, and age group. Context and cue similarity were within-subjects factors, and age group was a between-subjects factor. When appropriate, follow-up t-tests were conducted to decompose significant interactions. Paired-samples t-tests were applied for within-subjects comparisons, and independent-samples t-tests were applied to between-subjects comparisons. This analysis speaks to whether, relative to the condition in which no context is present, the availability of congruent contextual information in the background of the target improves negative emotion discrimination performance, whereas the availability of incongruent contextual information impairs negative emotion discrimination performance. Additionally, these tests can reveal whether such effects differ by age and/or by target eye gaze direction or emotion cue similarity.

Fig. 4 Mean discrimination performance values for each similarity and context congruency condition for younger (n = 46) and older (n = 45) adults. The neutral d’ values were obtained from Task 1 and compared to performance in congruent and incongruent contexts from Task 2. Error bars reflect ± 1 standard error. Panels A and B contain the mean d’ scores for younger (A) and older (B) adults in the high similarity condition (A/D = Anger/Disgust). Panels C and D contain the mean d’ scores for younger (C) and older (D) adults in the low similarity condition (F/D = Fear/Disgust).

Unsurprisingly, context played a crucial role in discrimination performance but differentially so by emotion cue similarity, F(2, 174) = 9.91, p < 0.001, ηp2 = 0.10. This interaction was decomposed by examining the impact of background context on emotion discrimination separately by cue similarity condition. For emotions with highly similar facial cues (i.e., anger/disgust), context mattered, F(2, 174) = 173.12, p < 0.001, ηp2 = 0.67. Congruent contextual information facilitated performance, whereas incongruent context reduced performance relative to the condition in which no context was present, ts (87) ≥ 5.75, ps < 0.001, d ≥ 1.23. For emotions with less similar facial cues (i.e., fear/disgust), context mattered as well, F(2, 174) = 91.12, p < 0.001, ηp2 = 0.51, to the extent that incongruent context could disrupt facial emotion perception but did not drive performance to near chance levels as was the case for the highly similar emotion cue condition (Δd’ = 1.71), t(88) = 10.83, p < 0.001, d ≥ 2.31. Relative to the no context condition, congruent contextual information failed to facilitate discrimination performance for the low similarity emotion pairing (Δd’ = 0.26), t(88) = 1.56, p = 0.121.

Discrimination performance was better for direct gaze than for averted gaze stimuli, F(1, 87) = 12.59, p < 0.001, ηp2 = 0.13, but this effect varied as a function of context, F(2, 174) = 6.77, p = 0.001, ηp2 = 0.07. As reported in the results from Task 1, when facial stimuli were presented without context, discrimination performance was worse for averted than for direct gaze stimuli (Δd’ = 0.13), t(260) = 3.03, p = 0.032, d = 0.37. This same outcome emerged when facial cues were incongruent with the emotion conveyed by the background context (Δd’ = 0.18), t(260) = 4.61, p < 0.001, d = 0.44. When facial cues were congruent with the emotion conveyed by the background context, discrimination performance did not differ by target gaze direction (Δd’ = 0.04), t(260) = 0.009, p = 0.971. Ultimately, an averted gaze led to lower performance than a direct gaze both when context was absent and when it was present but incongruent with facial cues. However, the disruptive impact of averted gaze was ameliorated when the context was congruent with the target's facial cues. Taken together, these findings suggest that participants attend to the background context both when it is congruent and when it is incongruent with the target's facial cues, but that, when there is no context available, an averted gaze may reduce the emotion signal on the target's static facial expression.

Younger adults outperformed older adults, F(1, 87) = 6.47, p = 0.013, ηp2 = 0.07, but age group interacted with context, F(2, 174) = 5.19, p = 0.006, ηp2 = 0.06. This interaction was decomposed by examining the impact of background context on emotion discrimination separately by age group. Overall, the impact of context on each age group was comparable: older adults, F(2, 88) = 99.88, p < 0.001, ηp2 = 0.69; younger adults, F(2, 86) = 75.15, p < 0.001, ηp2 = 0.67. Older adults' performance improved significantly when facial stimuli were presented in congruent contexts (Δd’ = 1.18) but declined significantly when facial stimuli were presented in incongruent contexts (Δd’ = 1.95) relative to no context, ts(44) ≥ 5.07, p < 0.001, d ≥ 1.53. Similarly, presenting facial stimuli in congruent contexts facilitated younger adults' performance (Δd’ = 0.51), whereas presenting facial stimuli in incongruent context hindered their performance (Δd’ = 1.55) relative to no context, ts(43) ≥ 2.90, p ≤ 0.005, d ≥ 0.88. Although younger and older adults' discrimination performance both benefited from and was disrupted by context, the effects were larger for older adults than for younger adults. This replicates Noh and Isaacowitz (2013) who attributed the especially negative impact of incongruent contexts on older adults' emotion perception to an inability to inhibit the influence of context.

General Discussion

The current study investigated how emotion cue similarity contributes to age-related differences in negative emotion discrimination performance, as well as whether the target’s gaze direction and non-facial cues like background context impact discrimination performance. Although examined independently from one another in prior work, we sought to examine the interplay of these factors on age differences in negative emotion perception. Consistent with expectations, older adults were outperformed by younger adults, especially when emotions were signaled by similar facial cues, when gaze direction was supposed to amplify the emotion signal, and when incongruent contextual features were available to disambiguate facial cues. These findings are discussed further below.

Emotion Discrimination without Contextual Information

Absent contextual information, younger adults were better than older adults at discriminating between negative emotion pairs of varying similarity. Although consistent with past research (Ruffman et al., 2008), our data extend these findings. Older adults struggled more than younger adults when discriminating between easily confusable negative emotions (i.e., anger and disgust), but age differences were not observed when participants discriminated between sad and disgusted expressions or between fearful and disgusted expressions (i.e., moderate to low facial cue similarity; Aviezer et al., 2011). Consequently, age differences in emotion discrimination may be more apparent when participants are asked to perform perceptually demanding comparisons even when not taxed by the need to select amongst four or more emotion categories (i.e., the load associated with maintaining and switching between display rules for an expansive set of categories to make an accurate judgment). This finding is consistent with other studies in which specific emotion pairs are pitted against one another (Mienaltowski et al., 2013; Orgeta, 2010).

Gaze direction minimally impacted discrimination performance when emotional expressions were presented devoid of context regardless of how similar emotion cues were between competing categories. However, it is important to note that gaze direction is not the only emotion cue found in the eye region that is necessary for accurately categorizing eye-dominant emotions. For instance, fearful expressions include widened eyes with more prominent sclera, as well as a heightening of the brows. Averting the target's gaze through image manipulation does not alter the eye socket, brow, or forehead. Rather, it appears to subtly distort the emotion signal. Perhaps gaze information may weaken the emotion signal when it does not itself serve as a diagnostic cue for an emotion or when it is not really directing attention to a location in the environment signaling meaningful emotion information. In other words, perceivers may attend to but gain little additional useful information from an averted gaze, particularly when the facial expressions are easily confused (i.e., anger and disgust).

Emotion Discrimination with Contextual Information

Although participants were told that the contextual information was randomly assigned to each facial stimulus, we anticipated that context would nevertheless affect emotion discrimination because context exerts a top-down influence on emotion perception (Ngo & Isaacowitz, 2015). Given that older adults were less likely to inhibit contextual influence in prior studies, we expected older adults both to benefit from congruent contextual details and to pay a cost for incongruent contextual details (Noh & Isaacowitz, 2013). When comparing highly similar negative emotions, both age groups benefited from additional context when that context was congruent with the facial expression. Likewise, both worsened when the additional context was incongruent with the facial expression. In other words, both younger and older adults used the contextual details when making their judgments. This was stronger for older adults who did not differ from chance, whereas younger adults performed above chance. Unlike Noh and Isaacowitz (2013) who used a multi-label task, the current study utilized an emotion discrimination task with only two label options meant to reduce cognitive load. Nevertheless, both age and age-by-context effects emerged in the current study for confusable negative emotion pairings, supporting a general deficit-oriented interpretation of past emotion perception findings. Taken together, these findings demonstrate that context is difficult to ignore and can perhaps even override the information provided by the facial emotion cues.

Again, the findings from the current study underscore the often-observed outcome that advancing age is associated with emotion perception deficits (Hayes et al., 2020). Whether facial emotion cue salience is manipulated via expressive intensity (Mienaltowski et al., 2013; Orgeta & Phillips, 2008), stimulus clarity amidst noise (Smith et al., 2018), or the dynamic evolution of an expression (Holland et al., 2019), an age deficit seems to emerge, albeit often small. Despite this commonality with prior research, another important finding emerged here. The current study demonstrates that an age-related negative emotion discrimination deficit is no longer observed when the background contextual information is consistent with the facial cues available on the target, regardless of the facial cue similarity in the judgment involved. Certainly, incongruent contextual information disrupts emotion discrimination accuracy and does this more so for older adults than for younger adults. However, in everyday life, the contextual information is undoubtedly more likely to match our social partners' facial cues than to conflict with them. Even if a social partner engages in emotion regulation to suppress the emotion signal conveyed by their facial expression, the background context (e.g., spilled drink, dented fender, dog vomit) will still provide a signal to potentially communicate how our partner may feel. Given that consistent context is available, older adults seem to be as likely as younger adults to notice and use this information. Our emotions are defined by more than the muscular conformation of our face (Barrett et al., 2019). In the current study, participants benefited from congruent contextual information despite the fact that they were instructed that it was randomly assigned (i.e., irrelevant). Although not examined here, one can only imagine that making this context relevant to the judgment would similarly facilitate performance. This finding further supports the call by Isaacowitz and Stanley (2011) to explore age differences in emotion perception from new experimentally controlled yet ecologically meaningful perspectives.

Averted Gaze Direction Draws Attention to Contextual Details

When context is available in experimental tasks like those used in the current study, gaze direction may have a greater impact on emotion perception because the target's gaze may communicate new information to the observer when directed toward a contextual detail. For instance, a fearful expression with eyes averted toward a fear-inducing contextual detail may facilitate fear perception because the context reinforces the signal communicated by the facial cues (Adams & Kleck, 2005). Conversely, a disgust expression with eyes averted toward this same contextual detail may disrupt disgust perception because the context contradicts the facial emotion signal. In the current study, averted eye gaze exacerbated the disruptive effects of incongruent contexts. The emotionally laden object created contextual emotion, or tone, that was difficult to ignore and led to erroneous conclusions despite participants being informed that background contexts were randomly assigned to the faces and that decisions should be based only on facial cues.

When the background context was congruent with the target's expression, gaze direction neither helped nor hurt performance. Unlike for the incongruent context trials, when the context was congruent with the facial expression, there was little to no competition between emotion signals. When the emotion categories being considered are highly confusable (e.g., anger/disgust), the target's averted eye gaze may prompt the observer to look to the context for relevant information. However, when the emotion categories being considered are less confusable (e.g., fear/disgust), the target's averted eye gaze has less of an influence on the observer's ability to detect the emotion signal needed to make an accurate choice. Age did not moderate the relationship between gaze direction and context, which was inconsistent with our expectations (Slessor et al., 2010) and suggests that younger and older adults similarly exploit context to disambiguate negative facial emotion signals.

Limitations and Future Directions

The current study provides insight into age differences in negative emotion discrimination but is not without its limitations. By limiting choice to two emotion label options, we observed small age differences in emotion recognition (for review, Hayes et al., 2020). One benefit of this technique is that it allows participants to complete emotion judgments without having to focus on the criteria for more than two emotion categories at the same time. Admittedly, emotion categorization in everyday experiences can be more complex than two-alternative choices for static images and can include more subtlety in the observer's interpretation than is captured by basic category labels (Barrett et al., 2019). For example, an observer may interpret a wide-eyed expression as anxious, afraid, startled, alarmed, etc., but, in the current study, was limited to the discrete emotion category of fear. Given the number of factors being examined simultaneously in the current study, some of this subtlety could not be investigated. Moreover, use of a signal detection approach for task design meant forgoing some emotion pairings or additional emotion categories to minimize the likelihood of participant fatigue.

Another issue common to studies examining the impact of aging on emotion perception is that stimulus-specific features limit the generalizability of findings. For instance, we only used closed-mouth facial expressions. Other work has found that closed mouth disgust expressions in an angry context produce a confusability effect (Reschke et al., 2019), but that open mouth disgust expressions in fear and sad contexts produce a confusability effect. Additionally, this study only included younger adult targets. Older adults are more accurate at labeling emotion in same-age peers than in younger adult targets (Fölster et al., 2014; Malatesta et al., 1987), possibly due to more frequent interactions with same-age peers. The gender of the target also impacts how emotions are perceived, as gender evokes stereotyped associations (e.g., angry males and sad or happy females; Bijlstra et al., 2010; Craig & Lipp, 2018) and expectations for gender-specific ties between facial structure and emotion (Becker et al., 2007; Hess et al., 2009; Zebrowitz et al., 2010). Future research may also consider whether contexts that are differentially relevant to each age group impact emotion perception (Foul et al., 2018). Finally, the inferred racial background of targets can also impact emotion perception (Halberstadt et al., 2020; Hugenberg, 2005). The current study employed only Caucasian targets to avoid introducing another stimulus characteristic to the already expansive independent variable list of the design, but future research that explores how stereotype associations might obscure affective judgments is certainly needed. However, even before research is directed to understanding potential effects of stereotypes on such perceptions, research needs to be conducted with a more racially diverse sample and stimulus set. The selection of targets in the current study was also limited by the availability of contextual backgrounds that could be edited to introduce emotional facial expressions. The lack of representation of Black, Indigenous, and people of color in media, advertising, and web content reflects a limitation that detracts from social scientists’ ability to address how factors manipulated in studies like this extend beyond commonly depicted actors (Roberts et al., 2020).

Conclusions

The current study demonstrates that gaze direction and background context interact to influence negative emotion discrimination ability, especially when the emotions under consideration are highly similar and may encourage the use of additional context to disambiguate the categorization process. Both younger and older adults displayed a drive to attend to the contextual background information even though they were instructed that it was randomly assigned. Contextual emotion cues can supplement, and potentially override, the facial emotion cues used by the observer to reduce uncertainty when negative emotional facial expressions are challenging to decode. Moreover, when the target’s eye gaze is directed toward contextual emotion cues, observers may interpret the gaze direction as a cue signaling that emotional details may be embedded within the target’s context. This finding suggests that, as a communicative cue, gaze direction alerts both younger and older observers to aspects of the context that are available to aid facial cue interpretation, which could be informative for researchers investigating methods to improve emotion perception, as some interventions emphasize focusing on the face (Tanaka et al., 2012). Finally, consistent with prior research (Noh & Isaacowitz, 2013), older adults were less likely than younger adults to inhibit the influence of incongruent contextual information when discriminating between highly confusable negative emotions, suggesting that advancing age may lead to a greater automatic reliance on non-facial emotion cues when available in the environment to inform social interaction. To the extent that additional background context adds socially relevant information and creates additional complexity in the perceived mental state of the target, older adults may be more prone than younger adults to rely on the background context instead of the facial cues when evaluating the target's emotional state. This interpretation is consistent with prior research demonstrating that, apart from deficits in negative emotion recognition, older adults face greater challenges than younger adults in recognizing complex negative mental states (Franklin & Zebrowitz, 2016). Taken together, the current study highlights the importance of examining how emotions are interpreted using facial cues and contextual information in the social target's background. This background, in conjunction with eye gaze information conveyed by the social target, can have a strong effect on which emotion is perceived, especially for older adults who may use this context to assist in discriminating between negative emotional states that share overlapping facial cues.

Availability of Data and Material

Data and material can be found on Open Science Framework (OSF): https://osf.io/th8zx/?view_only=e667f09325874047b1be401889b435d0.

Code Availability

Stimuli and E-Prime 2.0 experiment code are available by request to the corresponding author.

References

Adams, R. B., & Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5, 3–11. https://doi.org/10.1037/1528-3542.5.1.3

Aviezer, H., Dudarev, V., Bentin, S., & Hassin, R. R. (2011). The automaticity of emotional face-context integration. Emotion, 11, 1406–1414. https://doi.org/10.1037/a0023578

Aviezer, H., Hassin, R. R., Ryan, J., Grady, C., Susskind, J., Anderson, A., Moscovitch, M., & Bentin, S. (2008). Angry, disgusted, or afraid?: Studies on the malleability of emotion perception. Psychological Science, 19, 724–732. https://doi.org/10.1111/j.1467-9280.2008.02148.x

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68. https://doi.org/10.1177/1529100619832930

Barrett, L. F., & Kensinger, E. A. (2010). Context is routinely encoded during emotion perception. Psychological Science, 21, 595–599. https://doi.org/10.1177/095679761036357

Beaudry, O., Roy-Charland, A., Perron, M., Cormier, I., & Tapp, R. (2014). Featural processing in recognition of emotional facial expressions. Cognition and Emotion, 28, 416–432. https://doi.org/10.1080/02699931.2013.833500

Becker, D. V., Kenrick, D. T., Neuberg, S. L., Blackwell, K. C., & Smith, D. M. (2007). The confounded nature of angry men and happy women. Journal of Personality and Social Psychology, 92, 179–190. https://doi.org/10.1037/0022-3514.92.2.179

Bijlstra, G., Holland, R. W., & Wigboldus, D. H. J. (2010). The social face of emotion recognition: Evaluations versus stereotypes. Journal of Experimental Social Psychology, 46, 657–663. https://doi.org/10.1016/j.jesp.2010.03.006

Blanchard-Fields, F. (2007). Everyday problem solving and emotion: An adult developmental perspective. Current Directions in Psychological Science, 16, 26–31. https://doi.org/10.1111/j.1467-8721.2007.00469.x

Calvo, M. G., & Nummenmaa, L. (2008). Detection of emotional faces: Salient physical features guide effective visual search. Journal of Experimental Psychology: General, 137, 471–494. https://doi.org/10.1037/a0012771

Carstensen, L. L., & Mikels, J. A. (2005). At the intersection of emotion and cognition: Aging and the positivity effect. Current Directions in Psychological Science, 14, 117–121. https://doi.org/10.1111/j.0963-7214.2005.00348.x

Chaby, L., Hupont, I., Avril, M., Luherne-du Boullay, V., & Chetouani, M. (2017). Gaze behavior consistency among older and younger adults when looking at emotional faces. Frontiers in Psychology, 8, 1–14. https://doi.org/10.3389/fpsyg.2017.00548

Charles, S. T., & Campos, B. (2011). Age-related changes in emotion recognition: How, why, and how much of a problem? Journal of Nonverbal Behavior, 35, 287–295. https://doi.org/10.1007/s10919-011-0117-2

Craig, B. M., & Lipp, O. V. (2018). The influence of multiple social categories on emotion perception. Journal of Experimental Social Psychology, 75, 27–35. https://doi.org/10.1016/j.jesp.2017.11.002

Ekstrom, R. B., French, J. W., Harman, H. H., & Dermen, D. (1976). Kit of factor-referenced cognitive tests (Rev). Educational Testing Service.

Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39, 175–191.

Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). Mini-mental state: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12, 189–198. https://doi.org/10.1016/0022-3956(75)90026-6

Fölster, M., Hess, U., & Werheid, K. (2014). Facial age affects emotional expression decoding. Frontiers in Psychology, 5(30), 1–13. https://doi.org/10.3389/fpsyg.2014.00030

Foul, Y. A., Eitan, R., & Aviezer, H. (2018). Perceiving emotionally incongruent cues from faces and bodies: Older adults get the whole picture. Psychology and Aging, 33, 660–666. https://doi.org/10.1037/pag0000255

Franklin, R. G., & Zebrowitz, L. A. (2016). Aging-related changes in decoding negative complex mental states from faces. Experimental Aging Research, 42, 471–478. https://doi.org/10.1080/0361073X.2016.1224667

Halberstadt, A. G., Cooke, A. N., Garner, P. W., Hughes, S. A., Oertwig, D., & Neupert, S. D. (2020). Racialized emotion recognition accuracy and anger bias of children’s faces. Emotion. Advance online publication. https://doi.org/10.1037/emo0000756

Hayes, G. S., McLennan, S. N., Henry, J. D., Phillips, L. H., Terrett, G., Rendell, P. G., Pelly, R. M., & Labuschagne, I. (2020). Task characteristics influence facial emotion recognition age-effects: A meta-analytic review. Psychology and Aging, 35, 295–315. https://doi.org/10.1037/pag0000441

Hess, U., Adams, R. B., Grammer, K., & Kleck, R. E. (2009). Face gender and emotion expression: Are angry women more like men? Journal of Vision, 9(12), 1–8. https://doi.org/10.1167/9.12.19

Holland, C. A. C., Ebner, N. C., Lin, T., & Samanez-Larkin, G. R. (2019). Emotion identification across adulthood using the Dynamic FACES database of emotional expressions in younger, middle aged, and older adults. Cognition and Emotion, 33, 245–257. https://doi.org/10.1080/02699931.2018.1445981

Hugenberg, K. (2005). Social categorization and the perception of facial affect: Target race moderates the response latency advantage for happy faces. Emotion, 5(3), 267–276. https://doi.org/10.1037/1528-3542.5.3.267

Isaacowitz, D. M., Löckenhoff, C. E., Lane, R. D., Wright, R., Sechrest, L., Riedel, R., & Costa, P. T. (2007). Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging, 22, 147–159. https://doi.org/10.1037/0882-7974.22.1.147

Isaacowitz, D. M., & Stanley, J. T. (2011). Bringing an ecological perspective to the study of aging and recognition of emotional facial expressions: Past, current, and future methods. Journal of Nonverbal Behavior, 35, 261–278. https://doi.org/10.1007/s10919-011-0113-6

Katsikitis, M. (1997). The classification of facial expressions of emotions: A multidimensional-scaling approach. Perception, 26, 613–626. https://doi.org/10.1068/p260613

Macmillan, N. A., & Creelman, C. D. (2005). Detection theory (2nd ed.). Lawrence Erlbaum.

Malatesta, C. Z., Izard, C. E., Culver, C., & Nicolich, M. (1987). Emotion communication skills in young, middle-aged, and older women. Psychology and Aging, 2, 193–203. https://doi.org/10.1037/0882-7974.2.2.193

Meeren, H. K. M., van Heijnsbergen, C. R. J., & de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America, 102, 16518–16523. https://doi.org/10.1073/pnas.0507650102

Mienaltowski, A., Johnson, E. R., Wittman, R., Wilson, A., Sturycz, C., & Norman, J. F. (2013). The visual discrimination of negative facial expressions by younger and older adults. Vision Research, 81, 12–17. https://doi.org/10.1016/j.visres.2013.01.006

Mienaltowski, A., Lemerise, E. A., Greer, K., & Burke, L. (2019). Age-related differences in emotion matching are limited to low intensity expressions. Aging, Neuropsychology, and Cognition. https://doi.org/10.1080/13825585.2018.1441363

Murphy, N. A., & Isaacowitz, D. M. (2010). Age effects and gaze patterns in recognizing emotional expressions: An in-depth look at gaze measures and covariates. Cognition and Emotion, 24, 436–452. https://doi.org/10.1080/02699930802664632

Ngo, N., & Isaacowitz, D. M. (2015). Use of context in emotion perception: The role of top-down control, cue type, and perceiver’s age. Emotion, 15, 292–302. https://doi.org/10.1037/emo0000062

Noh, S. R., & Isaacowitz, D. M. (2013). Emotional faces in context: Age differences in recognition accuracy and scanning patterns. Emotion, 13, 238–249. https://doi.org/10.1037/a0030234

Orgeta, V. (2010). Effects of age and task difficulty on recognition of facial affect. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 65, 323–327. https://doi.org/10.1093/geronb/gbq007

Orgeta, V., & Phillips, L. H. (2008). Effects of age and emotional intensity on the recognition of facial emotion. Experimental Aging Research, 34, 63–79. https://doi.org/10.1080/0361073070162047

Radloff, L. S. (1977). The CES-D Scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1, 385–401. https://doi.org/10.1177/014662167700100306

Reschke, P. J., Walle, E. A., Knothe, J. M., & Lopez, L. D. (2019). The influence of context on distinct facial expressions of disgust. Emotion, 19, 365–370. https://doi.org/10.1037/emo0000445

Roberts, S. O., Bareket-Shavit, C., Dollins, F. A., Goldie, P. D., & Mortenson, E. (2020). Racial inequality in psychological research: Trends of the past and recommendations for the future. Perspectives on Psychological Science. https://doi.org/10.1177/1745691620927709

Ruffman, T., Henry, J. D., Livingstone, V., & Phillips, L. H. (2008). A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience and Biobehavioral Reviews, 32, 863–881. https://doi.org/10.1016/j.neubiorev.2008.01.001

Scheibe, S., & Carstensen, L. L. (2010). Emotional aging: Recent findings and future trends. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 65, 135–144. https://doi.org/10.1093/geronb/gbp132

Slessor, G., Phillips, L. H., & Bull, R. (2008). Age-related declines in basic social perception: Evidence from tasks assessing eye-gaze processing. Psychology and Aging, 23, 812–822. https://doi.org/10.1037/a0014348

Slessor, G., Phillips, L. H., & Bull, R. (2010). Age-related changes in the integration of gaze direction and facial expressions of emotion. Emotion, 10, 555–562. https://doi.org/10.1037/a0019152

Smith, M. L., Grühn, D., Bevitt, A., Ellis, M., Ciripan, O., Scrimgeour, S., Papasavva, M., & Ewing, L. (2018). Transmitting and decoding facial expressions of emotion during healthy aging: More similarities than differences. Journal of Vision, 18(9), 1–16. https://doi.org/10.1167/18.9.10

Sullivan, S., Ruffman, T., & Hutton, S. B. (2007). Age differences in emotion recognition skills and the visual scanning of emotion faces. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 62, 53–60. https://doi.org/10.1093/geronb/62.1.53

Susskind, J. M., Littlewort, G., Bartlett, M. S., Movellan, J., & Anderson, A. K. (2007). Human and computer recognition of facial expressions of emotion. Neuropsychologia, 45, 152–162. https://doi.org/10.1016/j.neuropsychologia.2006.05.001

Tanaka, J. W., Wolf, J. M., Klaiman, C., Koenig, K., Cockburn, J., Herlihy, L., Brown, C., Stahl, S. S., South, M., McPartland, J. C., Kaiser, M. D., & Schultz, R. T. (2012). The perception and identification of facial emotions in individuals with autism spectrum disorders using the Let’s Face It! Emotion Skills Battery. Journal of Child Psychology and Psychiatry, 53, 1259–1267. https://doi.org/10.1111/j.1469-7610.2012.02571.x

Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., Marcus, D. J., Westerlund, A., Casey, B. J., & Nelson, C. (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. https://doi.org/10.1016/j.psychres.2008.05.006

Wong, B., Cronin-Golomb, A., & Neargarder, S. (2005). Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology, 19, 739–749. https://doi.org/10.1037/0894-4105.19.6.739

Young, A. W., Rowland, D., Calder, A. J., Etcoff, N. L., Seth, A., & Perrett, D. I. (1997). Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition, 63(3), 271–313. https://doi.org/10.1016/S0010-0277(97)00003-6

Zebrowitz, L. A., Kikuchi, M., & Fellous, J.-M. (2010). Facial resemblance to emotions: Group differences, impression effects, and race stereotypes. Journal of Personality and Social Psychology, 98, 175–189. https://doi.org/10.1037/a001799

Funding

This work was supported by the Graduate School at Western Kentucky University (221560).

Ethics declarations

Conflict of interest

We attest there is no conflict of interest.

Ethics Approval