Abstract

This work focuses on recovering images from various forms of corruption, for which a challenging non-smooth, non-convex optimization model is proposed. The model consists of a concave truncated data fidelity functional and a convex total-variational term. We introduce and study various novel equivalent mathematical models from the perspective of duality, leading to two new optimization frameworks in terms of two-stage and single-stage. The two-stage optimization approach follows a classical convex-concave optimization framework with an inner loop of convex optimization and an outer loop of concave optimization. In contrast, the single-stage optimization approach follows the proposed novel convex model, which boils down to the global optimization of all variables simultaneously. Moreover, the key step of both optimization frameworks can be formulated as linearly constrained convex optimization and efficiently solved by the augmented Lagrangian method. For this, two different implementations by projected-gradient and indefinite-linearization are proposed to build up their numerical solvers. The extensive experiments show that the proposed single-stage optimization algorithms, particularly with indefinite linearization implementation, outperform the multi-stage methods in numerical efficiency, stability, and recovery quality.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Image restoration is one of the most interesting problems of image processing, which aims to discover the ‘clean’ or original image from the given corrupted image and covers a wide range of applications, e.g., image denoising, image deblurring and image deconvolution, etc. In practice, image corruptions may come from many different sources, such as malfunctioning pixels in camera sensors, motion blurring, or bit errors in transmission [16, 49], which can often be formulated as the following image convolution model

where \(\varvec{u}\) denotes the restored original image, f is the given corrupted image and e is some additive random noise. The linear operator H in (1) stands for image corruption, e.g., a convolution operator for image deconvolution, a projection operator for image inpainting and the identity operator for image denoising [7, 38], etc. It is often challenging to restore the ‘correct’ image \(\varvec{u}\) inversely from the input corrupted image f, which is ill-posed in most practical scenarios due to the unknown noise e and usually undetermined operator H [43]. To handle the ill-posedness, a regularization term \(J(\varvec{u})\) incorporating prior information about the ‘correct’ image \(\varvec{u}\) is introduced, leading to the following optimization problem:

where \(l\left( {H\varvec{u},f} \right) \) measures the data fidelity between \(H\varvec{u}\) and the observation f, and \(\lambda >0\) is a positive parameter to trade-off between the two terms of data fidelity and regularization \(J(\varvec{u})\). In view of the proposed optimization model (2), the regularization function \(J\left( \varvec{u} \right) \) encodes the prior image information. And both the smooth quadratic Tikhonov regularization term [36] and the non-smooth total-variation (TV) regularization term [28] were proposed and extensively studied to enforce regularities of image functions. Particularly, the TV regularization function performs well in practice to preserve rich image details and edges while smoothing.

On the other hand, the data fidelity function \(l\left( { \cdot , \cdot } \right) \) in (2) usually penalizes the difference between \(H\varvec{u}\) and f. The formulation of \(l\left( { \cdot , \cdot } \right) \) is carefully taken as the assumed statistical distribution model of the additive noise e. Nowadays, it is well-known that \(l_1\)-norm [12, 25, 42], as the fidelity penalty function \(l\left( { \cdot , \cdot } \right) \,\), performs more robustly to non-Gaussian-type noise in many applications of image restoration than \(l_2\)-norm [5, 26, 37]. The \(l_2\)-norm is sensitive on the specified additive Gaussian noise and quadratic loss. The \(l_1\)-norm shows outperformance on the Laplace-type noise distribution [9, 24], and particularly provides a proper convex approximation to the non-convex and nonlinear sparsity prior, i.e. \(l_0\)-norm, which is closely related to a universal criterion of signal recoverability. To some extent, such convex \(l_1\)-norm and other convex relaxations of the sparsity measurement [4, 15, 22, 30], which enjoy great computational simplicity and efficiency though, yield a biased estimator for the highly non-convex sparsity function of \(l_0\)-norm, and thus fail to achieve the best estimation performance in practice [35, 47]. To alleviate these issues, many works have proposed to directly exploit \(l_0\)-pseudo-norm or apply non-convex approximations of sparsity as the fidelity penalty function \(l(\cdot , \cdot )\). Yuan and Ghanem [44] proposed a sparse optimization method, which applies \(l_0\)-pseudo-norm data fidelity, for image denoising and deblurring with impulse noise. Gu et al. [11] introduced the TV-SCAD model, which utilizes the smoothly clipped absolute deviation (SCAD) data penalty function. Zhang et al. [47] proposed a non-convex model that exploits exponential-type data fitting term for image restoration. And recently, Zhang et al. [45] proposed the TVLog model that makes use of a non-convex log function for data fitting term. In addition, there are some other fractional- and exponential-type date fidelity functions [10, 17], and the directly designed difference of convex functions [1], etc. These abundant non-convex data fidelity penalty functions are proven to outperform the convex \(l_1\)-norm data fidelity penalty function in practice.

Since the data fidelity penalty function is generally non-convex, the computation of minimizers of the non-convex model (2) is a challenge problem. For non-convex models that directly utilize the sparsity function, scholars usually reformulate them into a mathematical programming with equilibrium constraints (MPEC) [2, 23, 44]. Work [44] proposed a proximal ADMM method to solve the MPEC, which is more effective than the penalty decomposition algorithm. As for non-convex models that contain some well-defined non-convex sparsity approximations, such as SCAD, log, truncated-\(l_1\), \(l_p\), fractional- and exponential-type functions, etc. These approximations can be characterized as a concave-convex composite function \(g(\left|\cdot \right|)\), where the outer function \(g(\cdot )\) is concave and the inner one \(\left|\cdot \right|\) is convex. In this work, we focus on this class of popular non-convex models. Below we will briefly introduce several commonly used numerical optimization strategies for solving such non-convex models.

The graduated non-convexity (GNC) method [3, 40, 48] is widely used to optimize non-convex energy functions. Rather than optimizing the original non-convex model directly, GNC method optimize a succession of surrogate functions that begin with a convex approximation of the original model, progressively become non-convex, and then converge to the original model. Due to the lack of efficient solvers, the broad applications of GNC have been limited. The Convex Non-Convex (CNC) strategy [8, 19, 21, 29] aims to construct and then optimize convex functionals containing non-convex regularization terms, which can be regarded as an evolution of the GNC method. Unlike the GNC method, the CNC strategy leads to a sequence of convex problems by carefully refining the parameter, which is a scalar representing the ‘degree of concavity’ of the non-convex term. The difference of convex functions (DC) [32,33,34] is also a common method to solve such non-convex models. As shown in [11, 45], both SCAD and log functions can be decomposed as the difference of two convex functions and then solved using a DC algorithm (DCA). The DCA solves non-convex models by linearizing their second convex function and then solving a sequence of convex functions. These three methods can be viewed as the energy function being redefined in each iteration to obtain a gradually sparser solution; in this sense, they are all multi-stage methods.

It is obvious that the non-convex fidelity functions provide a better mimic of the sparsity measure and are, hence, more effective in sparsity-based estimation than the other convex fidelity functions. Due to these considerations, we propose the image restoration model (2) with a concave-convex composite data-fidelity function \(l\left( { \cdot , \cdot } \right) \), in this work, which properly promotes the sparsity measure and results in a challenging non-smooth non-convex optimization problem. Meanwhile, we introduce its new equivalent optimization models for analysis and numerical implementations under a primal-dual perspective. As shown by Zhang [46], such concave-convex composite function indeed generalizes many popular non-convex sparsity approximation functions and can be in-depth analyzed in a multi-stage optimization way.

1.1 Contributions and Organization

In this paper, we study the proposed non-convex and non-smooth optimization model (2) of image restoration, which consists of a concave-convex composite function to impose sparse data fidelity and a non-smooth TV regularization term. We first follow the essential ideas of multi-stage optimization introduced in [46] to propose a new two-stage optimization approach to solve the optimization model (2) with both outer and inner loops. The key optimization sub-problem is efficiently solved by the augmented Lagrangian method (ALM) based on its dual model in the inner loop at the first stage. The key parameters are updated in the outer loop at the second stage. Then, we show that the proposed optimization model can be rewritten equally as a linearly constrained convex optimization problem using a primal-dual formulation. This approach has two benefits: in theory, it means that the studied non-convex optimization problem could be optimized globally given due to its equivalence to the dual model, which is a linearly constrained convex optimization problem; in numerics, all the variables can be optimized simultaneously under the proposed our new single-stage optimization framework. Moreover, numerical solvers for the kernel optimization problems for both the two-stage and the single-stage approaches can be implemented with two different simple fast algorithms, i.e., projected gradient scheme and indefinite linearized scheme. Extensive experiments over various image restoration problems illustrate the proposed single-stage optimization approach, with both projected gradient and indefinite linearized implementations, outperforms the other approaches, esp. in terms of numerical efficiency, stability, and image recovery. This further confirms our theoretical analysis.

The rest of this paper is organized as follows: Sect. 2 gives the details of the two-stage optimization method. Section 3 presents the mathematically equivalent dual model of the non-convex image restoration optimization model, which turns out to be a linear constrained convex optimization problem. A new efficient ALM-based optimization method is used to solve it. Section 4 gives extensive and comparative experiments for the proposed model and algorithms. A conclusion is given in Sect. 5.

2 Two-Stage Optimization Approach

In this work, we aim to solve the following discretized optimization problem:

where, \(\varvec{u} \in R^{N}\) with \(N = m \times n\) represents the column stacking vectorization of a 2D \(m \times n\) image; for each pixel element i, the sparsity function \(\Psi (\cdot )\) takes 1 for any nonzero variable and vanishes otherwise; \(O_i \in \{0,1\}\), related to the noise type of the corrupted image,Footnote 1 is predefined at the specified pixel i; \(h_i\) is the ith row vector of linear operator H and \(f_i\) is the image value at pixel i. Clearly, it is highly challenging to solve this problem due to its non-convexity and non-smoothness. Many literature works have tried to substitute the sparsity function \(\Psi (\cdot )\) by its well-defined approximation [18, 20, 31, 39], for examples, the well-studied non-convex \(l_p\)-function \(\left|\cdot \right|^p\) while \(p\in (0,1)\), the truncated-\({l_1}\) function \(\text {min}(\left|\cdot \right|, \alpha )\) for any \(\alpha > 0\), and the smoothed-log function \(\alpha \ln (\alpha + \left|\cdot \right|)\,\). It is easy to see that \(\left|\cdot \right|^p\) tends to be \(\Psi (\cdot )\) as \(p \rightarrow 0^+\), the same for \(\text {min}(\left|\cdot \right|, \alpha )/\alpha \) and \( \ln (1 + \left|\cdot \right|/\alpha ) / \ln (1+1/\alpha )\) as \(\alpha \rightarrow 0^+\). A recent study by Chan et al. [8] proposed a so-called CNC strategy to approximate and analyze the sparsity function by a composite concave-convex smooth function. Also, it is known that both \(\left|\cdot \right|^p\) and \(\text {min}(\left|\cdot \right|, \alpha )\) can be generalized as a composite function \(g(\left|\cdot \right|)\), where the outer function \(g(\cdot )\) is concave and the inner one \(\left|\cdot \right|\) is convex. In this work, we apply such concave-convex approximation to the sparsity data term in (3) and study the following optimization problem:

2.1 Primal and Dual Models

Let S denote the index set of i for which \(O_i = 1\), then the optimization model (4) can be identically rewritten as

Given the concave duality [27], the concave function g(v) can be equally represented as:

where \({g^ * }\left( q \right) \) is the concave dual of \(g\left( v \right) \) and one also has

Generally, the concave dual of concave functions is computable. For examples, the cancave dual for the concave \(l_p\)-function \(\left|\cdot \right|^p\) with \(p\in (0,1)\) is

Similarly, for the truncated-\({l_1}\) function \(\text {min}(\left|\cdot \right|, \alpha )\) with any \(\alpha > 0\), its dual form is

where \(I(\cdot )\) is the indicator function of the given set. For the smoothed-log function \(g(\cdot ) = \alpha \ln (\alpha + \left|\cdot \right|)\) with any \(\alpha > 0\,\), its dual form is

In this work, we focus on the concave truncated-\(l_1\) function, i.e., \(g(\left|h_i \varvec{u} - f_i\right|) = \text {min}\left( \left|h_i \varvec{u} - f_i\right|, \alpha \right) \), to discuss the two proposed dual-based two-stage and single-stage optimization approaches.

With the above concave dual expression (6), each component function of the first term in (5) can be equivalently written as

for any \(i \in S\), where \(q_i \ge 0\).

2.2 Two-Stage Convex–Concave Algorithm

Now we apply the dual expression (10) to the first term of the optimization model (5). This leads to the following equivalent minimization problem:

To solve the above optimization problem, the most popular way is to explore a two-stage convex-concave algorithmic framework such that, for each kth outer iteration, two optimization problems are solved sequentially:

-

We first fix each \(q_i^k\), where \(i \in S\), and solve the following sub-problem over \(\varvec{u}\):

$$\begin{aligned} \varvec{u}^{k+1} \,:= \, \text {arg}\text {min}_{\varvec{u} \in R^N} \, \underbrace{\Big \{ \sum _{i \in S} q_i^k \left|h_i \varvec{u} - f_i\right| \, + \, \lambda \left\Vert \nabla \varvec{u}\right\Vert _{l_1}\Big \}}_{F(\varvec{u}, q^{k})} ; \end{aligned}$$(12) -

Then we fix the computed \(\varvec{u}^{k+1}\) to optimize each \(q_i \ge 0\) over

$$\begin{aligned} q_i^{k+1} \, := \, \text {arg}\text {min}_{q_i \ge 0} \, \underbrace{\Big \{ q_i \left|h_i \varvec{u}^{k+1} - f_i\right| \, - \, g^*(q_i) \Big \}}_{F(\varvec{u}^{k+1}, q)} \, . \end{aligned}$$(13)

Obviously, once \(\varvec{u}^{k+1}\) is computed by (12), the second ‘stage’ optimization sub-problem (13) can be solved easily for each element \(i \in S\), mostly with a closed-form solver (see “Appendix A”). Hence, the main computational load amounts to solve \(\varvec{u}^{k+1}\) over the first optimization problem (12) for any fixed \(q^k\), which is convex but non-smooth. We show that, in the following section, the introduced optimization problem (12) can be solved efficiently by an ALM-based algorithm upon and it avoids dealing with the non-smooth energy function directly.

2.2.1 Duality-based Optimization Algorithm for (12)

Now we study the non-smooth convex optimization sub-problem (12). Observe the equivalent dual representation of the absolute function is

and the dual form of the total-variation function [6] such that

hence we can reformulate the optimization subproblem (12) as its primal-dual model

By minimizing the above primal-dual formulation (15) over the free variable \(\varvec{u}\), we then obtain its mathematically equivalent dual model, i.e., a linear-equality constrained optimization problem, as follows:

which is the so-called dual model of (15).

It is easy to see that, in view of the primal-dual model (15), the optimization target \(\varvec{u}\) just works as the optimal multiplier to the linear-equality constraints in its equivalent dual model (16).

The classical augmented Lagrangian method (ALM) can be applied to efficiently solve such a linear-equality constrained dual optimization problem (16). Its augmented Lagrangian function is defined as

Given the above Lagrangian function \({\mathcal {L}_\beta }( {w,\varvec{p};\varvec{u}})\), the duality-based ALM algorithm uses the following iterative algorithm till convergence, i.e., for each lth inner iteration:

-

Fix \(\varvec{u}^l\) and \(\varvec{p}^l\), solve the following optimization sub-problem over w:

$$\begin{aligned} w^{l+1} \, := \, \mathop {\text {arg}\text {max}_{w_i \in [-q_i^k, q_i^k]} } \mathcal {L_\beta }(w, \varvec{p}^l ;\varvec{u}^l) \, ; \end{aligned}$$(18) -

Fix the computed \(w^{l+1}\) and \(\varvec{u}^l\), solve the following optimization problem over each \(\varvec{p}\)

$$\begin{aligned} \varvec{p}^{l+1} \, := \, \text {arg}\text {max}_{\varvec{p}_i \le \lambda } {\mathcal L_\beta }(w^{l+1}, \varvec{p}; \varvec{u}^l) \, ; \end{aligned}$$(19) -

Once \(w^{l+1}\) and \(\varvec{p}^{l+1}\) are computed, simply update \(\varvec{u}\) by

$$\begin{aligned} \varvec{u}^{l+1} \, := \, \varvec{u}^l - \beta \, \big ( \sum _{i \in S} w_i^{l+1} {h_i}^T + \textrm{Div} \varvec{p}^{l+1} \big ) \, . \end{aligned}$$(20)

Clearly, the ALM-based algorithm proposed above solves the convex optimization problem (12) without dealing with its non-smoothness directly. Refer to “Appendix B” for details for numerical implementations for the above optimization problems (18) and (19).

3 Single-Stage Optimization Approach

For optimizing a convex-concave composite energy function, the multi-stage type algorithms are usually applied. For example, as shown in Sect. 2.2, the proposed two-stage optimization approach to the convex-concave optimization problem (11) alternates between the optimization of the two variables \(\varvec{u}\) and q. It essentially incorporates an inner iterative ALM-based algorithm for the first ‘stage’ optimization of \(F(\varvec{u}, q^k)\) over \(\varvec{u}\) while fixing \(q^k\), and an outer iteration to update \(q^{k+1}\) over the follow-up ‘stage’ with optimization of \(F(\varvec{u}^{k+1},q)\) by fixed \(\varvec{u}^{k+1}\).

In this section, we show that, for the convex-concave optimization problem (11), we can optimize \(F(\varvec{u}, q)\) over \(\varvec{u}\) and q simultaneously, i.e., a single-stage optimization approach, which enjoys great advantages in both theoretical analysis and numerical practices.

3.1 Primal and Dual Models

Following a similar primal-dual analysis as in Sect. 2.2.1, the optimization problem (11) can be equally reformulated as its primal-dual model

Notice that the variable q in the model (21) is independent of the others, we then add negative signs to all variables except q and get a more uniform format as follows:

It is obvious to see that the optimization of q in the above primal-dual model (22) is just the optimization of the bound of w. Thus, the proposed two-stage algorithm in Sect. 2.2 actually alternates between the optimization of \((w, \varvec{p},\varvec{u})\) and the bound q of w. As a matter of fact, we prove that, in the following, all the variables of \((w, \varvec{p},\varvec{u})\) and q in the primal-dual model (22) can be optimized at the same time, utilizing the a single-stage approach as given later.

To see this, we first maximize the aforementioned primal-dual model (22) over the unconstrained variable \(\varvec{u}\,\) and obtain the theoretically identical dual formulation

which is known as the dual model of (11). The optimization of \(\varvec{u}\), according to the primal-dual model (22), simply functions as the optimal multiplier to the linear-equality constraint in its corresponding dual model (23).

For decoupling the two variables w and q, the constraint \(w_i \in [-q_i, q_i]\) can be essentially formulated as two linear inequality constraints as below:

Thus, we finally obtain another equivalent dual model of the studied optimization problem (11) such that

Given the concave function \(g^*(\cdot )\,\), the dual model (25) is essentially a convex optimization problem with linear equality and inequality constraints. Consequently, it is clear that this new optimization model (25) can be solved by simultaneously optimizing all the variables \(\left( w,\varvec{p},q,\varvec{u}\right) \,\).

3.2 A Single-Stage Algorithm for (25)

Given the linearly constrained optimization model (25), ALM provides one most simple framework to develop efficient algorithms to tackle such convex optimization problem with both linear equality and inequality constraints. By the recent study [14], the number of variable blocks has a considerable influence on the performance of ALM-based algorithms, and the linear inequality constraints add new challenges. Therefore, we simplify the introduced optimization model (25) by integrating the two variables w and \(\varvec{p}\) into one new variable \(\varvec{x}\), namely \(\varvec{x} = \left( w \,, \, \varvec{p} \right) ^{T}\,\), and reformulate it as follows:

where \({\hat{\varvec{f}}}^T = \left( f_{s_1},f_{s_2}, \ldots , f_{s_{n^{'} }}, 0, \ldots , 0 \right) \), \(A = \left( \begin{matrix} {\hat{H}}^T &{}{\textrm{Div}} \\ {\hat{I}} &{} \varvec{0} \\ -{\hat{I}} &{} \varvec{0} \end{matrix} \right) \), \(B = \left( \varvec{0} \,,\, {\hat{I}} \,,\, {\hat{I}}\right) ^{T}\,\), \({\hat{H}}^T = \big ( h_{s_1}^T, h_{s_2}^T, \ldots , h_{s_{n^{'} }}^T \big )\), \(\hat{I} = (\varvec{1}_{s_1},\varvec{1}_{s_2},\ldots ,\varvec{1}_{s_{n^{'}}})^T\).

Clearly, it results in a two-block separable convex optimization model (26) with both linear equality and inequality constraints. Let \(\varvec{\lambda } = \left( \varvec{u}, \lambda _{+}, \lambda _{-} \right) ^{T} \,\), where \(\varvec{u} \in \mathbb {R}^n \,\), \(\lambda _{+},\, \lambda _{-} \in \mathbb {R}_{+}^n \,\), be the Lagrangian multiplier to the associated linear constraints, and define the following augmented Lagrangian function for the optimization problem (26)

Therefore, we propose the single-stage ALM algorithm to the two-block separable convex optimization problem, which repeatedly explores the following steps in a prediction-correction way until convergence [14], i.e., for each kth iteration:

-

(Prediction Step) With given \(\left( A\varvec{x}^k, Bq^k, \varvec{\lambda }^k \right) \,\), find \(\left( \tilde{\varvec{x}}^k, \tilde{q}^k, \tilde{{\varvec{\lambda }}}^k \right) \,\) via

$$\begin{aligned} \tilde{\varvec{x}}^k \, := \, \text {arg}\text {min}_{\varvec{x} \in \Omega }\, \left\langle {\hat{\varvec{f}}}, \varvec{x} \right\rangle \, - \, \varvec{x}^{T} A^T {{\varvec{\lambda }}^k} \, + \, \frac{\beta }{2} \left\Vert A \left( \varvec{x} - \varvec{x^k}\right) \right\Vert ^2 \,, \end{aligned}$$(28)$$\begin{aligned} \tilde{q}^k \! := \! \text {arg}\text {min}_{q \ge \varvec{0}} -\! \sum _{i \in S} g^*(q_i) \!- \! q^{T} B^T {{\varvec{\lambda }}^k}\! +\! \frac{\beta }{2} \left\Vert B\left( q \!- \! q^k\right) + A\left( \tilde{\varvec{x}}^k \!-\! \varvec{x^k}\right) \right\Vert ^2 \,, \end{aligned}$$(29)$$\begin{aligned} \tilde{\varvec{\lambda }}^k \, := \, {\varvec{\lambda }}^k \,-\, \beta \, \left( A \tilde{\varvec{x}}^k + B \tilde{q}^k \right) \,. \end{aligned}$$(30) -

(Correction Step) Correct the solved predictors \(\left( \tilde{\varvec{x}}^k, \tilde{q}^k, \tilde{\varvec{\lambda }}^k \right) \,\), and generate the new iterate \(\left( A\varvec{x}^{k+1}, Bq^{k+1}, \varvec{\lambda }^{k+1} \right) \,\) with \(v \in (0,1)\,\) by

$$\begin{aligned} A\varvec{x}^{k+1} \, := \, A \varvec{x}^{k} \, - \, v \left( A \varvec{x}^{k} - A \tilde{\varvec{x}}^k \right) \, + \, v \left( B q^{k} - B \tilde{q}^k \right) \,, \end{aligned}$$(31)$$\begin{aligned} Bq^{k+1} \, := \, Bq^{k} \,-\, v \left( B q^{k} - B \tilde{q}^k \right) \,, \end{aligned}$$(32)$$\begin{aligned} {\varvec{\lambda }}^{k+1} \, := \, \tilde{{\varvec{\lambda }}} \,+\, v\beta \left( A \varvec{x}^{k} - A \tilde{{\varvec{x}}}^k \right) \,. \end{aligned}$$(33)

Here, for simplicity, we set \( v = 0.1 \) for all formulas (31), (32) and (33). Please refer to “Appendix C” for detailed implementation for solving the sub-problems (28) and (29). It is worth noting that, given that (26) has both equality and inequality constraints, the update of \(\tilde{{\varvec{\lambda }}}\) in (30) can be expressed particularly as below:

where \(\varvec{u}\) is associated with the equality constraint, \({\lambda }_{+}\) and \({\lambda }_{-}\) are associated with the two inequality constraints respectively.

It is easy to see that the above proposed single-stage optimization algorithm updates all the variables \(\left( {\varvec{x}}, q, {\varvec{\lambda }} \right) \,\), i.e., \(\left( w, \varvec{p}, q, \varvec{u}, \lambda _{+}, \lambda _{-} \right) \,\), at the same time. As a result, unlike the classical two-stage optimization approach, the proposed single-stage algorithm deals with all the variables under a global optimization perspective. Numerical experiments demonstrate that the single-stage optimization algorithm is more seamless, requiring less manual intervention in the estimation of the variable \(q\,\), whereas in the two-stage optimization approach, the handoff between its two computation stages is artificially determined.

4 Experiments

This section presents extensive experiments to show the performance of two-stage and single-stage approaches for the proposed convex-concave optimization model (4). Especially, two different ways are applied to implement the solvers of the sub-problems in the two-stage and single-stage approaches, i.e., projected gradient method (PG) and indefinite linearized method (IDL), which leads to four different algorithms, so-called Two-stage\(\_\)PG, Two-stage\(\_\)IDL, Single-stage\(\_\)PG and Single-stage\(\_\)IDL, respectively.

We first perform a numerical comparison of proposed single-stage and two-stage approaches implemented by PG and IDL, respectively, in Sect. 4.2. The test problem in this part is image deblurring. Since these four algorithms focus on the same non-convex model (4), their comparison results better reflect the differences between the single-stage approach and the two-stage approach. It is clear that the single-stage optimization algorithms are more numerically efficient than the two-stage ones. Then, in Sect. 4.3, we no longer perform the proposed two-stage algorithms, and compare our single-stage algorithms with other state-of-the-art non-convex methods, including \(l_0\)-pseudo-norm-based data fidelity algorithm \(l_0\)TV-PADMM [44], SCAD function-based data fidelity algorithm TVSCAD [11], and log function-based data fidelity algorithm TVLog [45]. The tests in this part are three typical problems of image restoration: image denoising, deblurring and scratched image denoising. All the algorithms were implemented by Matlab R2020b and performed in a laptop with a 2.90 GHz CPU and 8GB RAM.

4.1 Implementation Details

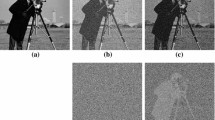

The clean test images are presented in Fig. 1. For the denoising test, the test images are polluted by 30–70\(\%\) of the pixels with salt-and-pepper (SP) noise or gaussian random-valued (RV) noise. For the deblurring test, the test images are artificially blurred by \(P \otimes \varvec{u} + e\,\), where P represents a blurring operation. In experiments, five types of blurring operations are tested, they are the defocus blurring operation with a radius \(r=7\), the gaussian blurring operation with parameter \(( [9 \ 9],10 )\), the average blurring operation with parameter 9, the motion blurring operation with parameter [15, 30], and the disk blurring operation with parameter 4. And here e represents the additive SP noise or RV noise with 30–70\(\%\) of the pixels.

In all text cases, for Two-stage\(\_\)PG, we simply set the algorithm parameters \((\beta ,\sigma ,\mu ) = (0.1, 0.1, 10)\,\); for Two-stage\(\_\)IDL, the algorithm parameters are set as \((\beta ,\tau ) = (0.08, 0.75)\,\); for Single-stage\(\_\)PG, the algorithm parameters are set as \((\beta ,\sigma ,\mu ) = (0.03, 0.1, 10)\,\); and for Single-stage\(\_\)IDL, the algorithm parameters are set as \((\beta ,\tau ) = (0.06, 0.75)\,\). In different application scenarios, we only tune the model parameters \((\lambda , \alpha )\,\). For the parameters in \(l_0\)TV-PADMM, TVSCAD, and TVLog, we set them as suggested in [11, 44, 45].

For two-stage optimization approach, we set the inner stopping criterion as

and the outer one is

Meanwhile, to keep the stopping criterion of the single-stage optimization consistent with that of the two-stage optimization, we set the stopping criterion for the single-stage optimization approach to be the same as (36).

To illustrate the recovery performance of these methods, the Peak Signal to Noise Ratio (PSNR) and the Structural Similarity (SSIM) are used to measure the quality of the restored images. They are computed as follows:

where \(\varvec{u}\) is the recovered image, \(\varvec{u}_0\) is the original image, MSE represent the mean square error between \(\varvec{u}\) and \(\varvec{u}_0\), \(\bar{\varvec{u}}\), \(\bar{\varvec{u}}_0\), \(\sigma _{\varvec{u}}\), \(\sigma _{\varvec{u}_0}\) and \(\sigma _{\varvec{u} \varvec{u_0}}\) are the local means, standard deviations, and cross-covariance for images \(\varvec{u}\), \(\varvec{u}_0\,\), \(c_1\), \(c_2\) are the constants for stability.

4.2 Numerical Comparisons of Proposed Algorithms

This section compares numerical performance of the proposed four algorithms, i.e., Two-stage\(\_\)PG, Two-stage\(\_\)IDL, Single-stage\(\_\)PG and Single-stage\(\_\)IDL, over experiments of image deblurring. We first use an example to illustrate numerical comparisons between two-stage and single-stage algorithms under different parameters. The comparisons results between PG-based and IDL-based implementations are shown in Sects. 4.2.1 and 4.2.2, respectively. Then, we compare all these four algorithms’ performance for deblurring more blurring operators in Sect. 4.2.3.

4.2.1 Comparisons Between PG-based Implementations

The test image, as shown in Fig. 2b, is artificially blurred ‘aircraft’ image using the defocus blur operation, and \(30\%\) of the pixels are contaminated by the RV noise. Experiment results by the four proposed algorithms show that all algorithms successfully obtain convergence and achieve similar results in terms of PSNR, SSIM and visuality. The single-stage based algorithms reach convergence faster than the two-stage based ones, and, for the same optimization framework of two-stage or single-stage, the IDL implementation is faster than the PG-based implementation. In the following part, we provide detailed performance comparisons between PG-based implementations in “Appendices B.1 and C.1” and IDL-based ones in ”Appendices B.2 and C.2” respectively.

For PG-based implementations, see “Appendices B.1 and C.1” for numerical details, the key parameters of these algorithms include the ALM penalty parameter \(\beta \), PG step-size \(\mu \), and TV step-size \(\sigma \). Figure 3 clearly illustrates convergence rates with different parameter settings: we initially set \(\beta = 0.1\,\), \(\sigma = 0.1\,\), \(\mu = 10\) for the two-stage optimization approach based on the PG implementation, and \(\beta = 0.03\,\), \(\sigma = 0.1\,\), \(\mu = 10\) for the single-stage optimization approach based on the PG implementation; then, we change one parameter but keep the others constant for experiments.

In general, as shown in Fig. 3, the single-stage algorithm with the PG implementation converges faster than the two-stage one, and both obtain similar visual results (see Fig. 2); in particular, the single-stage algorithm with the PG implementation converges fairly smoothly, while the two-stage algorithm with the PG implementation demonstrates a zig-zag convergence after an initial smooth decline. In fact, this is true since, for the two-stage algorithm, an update of q at the second stage indeed influences its global convergence and makes it slower. Meanwhile, it also shows that the single-stage algorithm is much less sensitive w.r.t. parameter settings than the two-stage one; in another words, the single-stage algorithm performs more stable than the two-stage one.

4.2.2 Comparisons Between IDL-based Implementations

For both two-stage and single-stage approaches, the IDL implementations, see “Appendices B.2 and C.2” for numerical details, generally converge faster than their corresponding PG implementations. The related key parameters of these algorithms include the ALM penalty parameter \(\beta \) and the IDL factor \(\tau \). Figure 4 shows the convergence rates with different parameter settings: we initially set \(\beta = 0.08\), \(\tau = 0.75\) for the two-stage optimization approach based on the IDL implementation, and \(\beta = 0.06\,\), \(\tau = 0.75\,\) for the single-stage optimization approach based on the IDL implementation; then, we change one parameter but keep the other one constant for experiments.

In general, as Fig. 4 shown, the two-stage algorithm with the IDL implementation still has a zig-zag convergence, and the single-stage algorithm with the IDL implementation converges smoothly and stablely. In detail, as Table 1 shown, the single-stage algorithm with the IDL implementation has a substantially lower total iteration number (It.) than the two-stage one while both reach comparable visual results (see Fig. 2). It is noteworthy that, for the Single-stage\(\_\)IDL algorithm, the lower the \(\tau \,\), the fewer iterations to attain convergence, and the higher the accuracy (i.e., the lower the MSE) of the convergence results, which is consistent with the conclusion of the work [13]. Meanwhile, for the Two-stage\(\_\)IDL algorithm, the lower IDL parameter \(\tau \) can reduces the iteration numbers of the ALM algorithm that solves its first-stage sub-problem, and thus reduces the overall iteration numbers. However, from Table 1, the lower the \(\tau \), the poorer the solution accuracy (i.e., the larger the MSE) of Two-stage\(\_\)IDL algorithm. This is because the update of q at the second stage influences global convergence and results poor accuracy.

4.2.3 Comparisons Under Different Blur Operators

In this part, we test the performance of proposed four algorithms, i.e., Two-stage\(\_\)PG, Two-stage\(\_\)IDL, Single-stage\(\_\)PG, and Single-stage\(\_\)IDL, under more kind of blur operators. The RNSR, SSIM, and Iteration numbers results of all the algorithms for recovering different types of blur operators are list in Table 2. Generally, from this Table, for all blur operators, the four duality-based two-stage and single-stage algorithms reach convergence successfully, and the single-stage algorithms have fewer iteration numbers compared with two-stage ones. Among them, when the test images are contaminated by additive SP noise, regardless of what kind of blur operators, the Single-stage\(\_\)IDL algorithm can achieve the highest PSNR values and the smallest iteration numbers. When the test images are contaminated by additive RV noise, in some cases for average, motion, and disk blur operators, the two-stage algorithms may obtain higher PSNR or SSIM values; however, the Single-stage\(\_\)IDL algorithm achieves the best PSNR or SSIM values in most cases, and it still has a obvious advantage in the minimum iteration numbers in all cases.

4.3 Experiments of Different Image Restoration Problems

In part 4.2, we have demonstrated the advantage of the single-stage approach compared with the two-stage approach to solving the same non-convex model (4). And in this section, we no longer perform the two-stage algorithms and compare our single-stage algorithms (Single-stage\(\_\)PG and Single-stage\(\_\)IDL) with other state-of-the-art non-convex models and algorithms, which are \(l_0\)TV-PADMM [44], TVSCAD [11], and TVLog [45]. The test experiments are three typical image restoration scenarios: image denoising, deblurring, and scratched image denoising.

4.3.1 Image Denoising

In this experiment, we demonstrate the performance of all five algorithms on image denoising. Figure 5 shows the visual results of different algorithms for denoising corrupted ‘castle’ image with a noise level of \(30\%\,\). Its first row shows the recovered images for denoising the SP noise, and in this case the parameter settings for the single-stage algorithms are \(\lambda = 0.06, \, \alpha = 0.02\,\). In general, the duality-based single-stage\(\_\)IDL algorithm achieves the best visuality, PSNR value, SSIM value, and iteration numbers. And since the \(l_0\)TV-PADMM, Single-stage\(\_\)GP, and Single-stage\(\_\)IDL algorithms all use the prior information of SP noise, i.e., the indicating matrix O, their visual results, PSNR and SSIM values are better than those of the TVSCAD and TVLog algorithms. The problem of denoising the additive RV noise is usually more challenging in practice compared to denoising the additive SP noise. The recovered images for the additive RV denoising are shown at the second row of Fig. 5, and the parameter settings for the single-stage algorithms are \(\lambda = 0.53, \, \alpha = 0.02\,\). It shows that our proposed Single-stage\(\_\)IDL algorithm still get the best visuality, PSNR value, and iteration numbers; the TVSCAD algorithm performs well in the SSIM value.

Table 3 shows the image recovery PSNR/SSIM/It. results for image denoising when adding SP or RV noise at different noise levels. Generally, for the additive SP noise, the single-stage algorithms have advantage in the fewer iteration numbers. The single-stage\(\_\)IDL algorithm outperforms other algorithms in PSNR, SSIM, and iteration numbers in most cases. The \(l_0\)TV-PADMM also has advantages in some cases. The TVSCAD and TVLog algorithms perform somewhat disadvantageously since they do not employ the noise type indicting matrix O. For the additive RV noise, the Single-stage\(\_\)IDL algorithm achieves the minimum iteration numbers in all the cases, the highest PSNR and SSIM values in most cases. The TVSCAD and TVLog algorithms also have advantages in PSNR or SSIM values in some cases. However, as two specific multi-stage methods, they have disadvantages in terms of iteration numbers. Especially when the noise level increases, the iteration numbers of TVSCAD and TVLog algorithms increase rapidly, but the iteration numbers of the single-stage algorithms can still be kept small. This is because the single-stage approach is a global optimization framework that can optimize all the variables simultaneously, which is more stable and less affected by the increase in noise level.

4.3.2 Image Deblurring

In this part, we show the performance of the \(l_0\)TV-PADMM, TVSCAD, TVLog, and the proposed single-stage algorithms on image deblurring with defocus blur operator. In the deblurring with SP noise experiments, we found the noise type indicating matrix O had a detrimental effect on our algorithms, so we did not utilize it. Obviously, unlike image denoising, the problem of image deblurring has additive noise as well as a multiplicative kernel, which is hence more difficult to handle than image denoising.

Figure 6 shows the visual results of different algorithms for deblurring corrupted ‘boat’ image with a noise level of \(30\%\). The first row displays the recovered images for deblurring with the additive SP noise, and in this case the parameter settings for the single-stage algorithms are \(\lambda = 0.019, \, \alpha = 0.01\,\). It is clear that all five algorithms successfully obtain convergence. Among them, the TVLog algorithm obtains the best PSNR and SSIM, the Single-stage\(\_\)IDL algorithm achieves the best iteration number and CPU time. Besides, the recovered images for the additive RV deblurring are shown at the second row of Fig. 6, in this case the parameter settings for the single-stage algorithms are \(\lambda = 0.032, \, \alpha = 0.03\,\). Not surprisingly, all five algorithms obtain convergence successfully with similar results. Meanwhile, the Single-stage\(\_\)IDL algorithm achieves the best PSNR, SSIM, iteration number, and CPU time.

Table 4 shows the more images recovery PSNR/SSIM/It. results for image deblurring when adding SP or RV noise at different noise levels. As for the image deblurring with addictive SP noise, the \(l_0\)TV-PADMM and TVLog have advantages over PSNR and SSIM in most cases, and the proposed Single-stage\(\_\)IDL still has advantages in iteration numbers. Especially when the noise level becomes high, for some test images with rich details, such as starfish or spaceman, the number of iterations of the TVSCAD and TVLog algorithms increase very quickly. But the Single-stage\(\_\)IDL algorithm can still maintain a small iteration number to reach convergence. As for image deblurring with additive RV noise, the \(l_0\)TV-PADMM has advantages on PSNR and SSIM in some large noise level cases, the Single-stage\(\_\)IDL algorithm has advantages on PSNR and SSIM in most cases. Similarly, in terms of computational efficiency, the Single-stage\(\_\)IDL algorithm still has a superiority compared with TVSCAD and TVLog models.

4.3.3 Scratched Image Denoising

In the last experiment, we demonstrate the performance of all five algorithms on restoring the images corrupted by nonuniform scratches, i.e. with image information loss. The test images f are the images of ‘pepper’, ‘barbara’ and‘pirate’ with manual scratches, as shown in the first column of Fig. 7. Recovering such scratched images is typically more challenging than image denoising and deblurring.

The recovered images for denoising the scratched ‘pepper’ are shown at the first row of Fig. 7, and the parameter settings for the single-stage algorithms are \(\lambda = 0.19, \, \alpha = 0.05\,\). It is obvious to see that all the proposed algorithms reach convergence successfully with similar results in terms of visuality. The \(l_0\)TV-PADMM algorithm achieves the best PSNR and SSIM. Meanwhile, the Single-stage\(\_\)IDL algorithm achieves the best iteration numbers and CPU time. The recovered images for denoising the scratched ‘barbara’ are shown at the second row of Fig. 7, the parameter settings for the single-stage algorithms are \(\lambda = 0.19, \, \alpha = 0.05\,\). Clearly, the \(l_0\)TV-PADMM, Single-stage\(\_\)PG, and Single-stage\(\_\)IDL algorithms obtain well visual results. And the Single-stage\(\_\)IDL algorithm has an advantage in PSNR, SSIM, and iteration numbers. The recovered images for denoising the scratched ‘pirate’ are shown at the third row of Fig. 7, the parameter settings for the single-stage algorithms are \(\lambda = 0.95, \, \alpha = 0.05\,\). All the proposed algorithms still obtain convergence successfully, with similar results in PSNR and visuality. Moreover, the single-stage optimization algorithm with the PG implementation converges significantly faster than the other ones.

5 Conclusion

In this paper, we propose a non-convex and non-smooth optimization model for image restoration problems with different types of corruption. The model is made of a concave-convex sparse promoting function to measure the data fidelity and a convex TV regularization component. Using the primal-dual technique, the proposed difficult non-convex and non-smooth problem can be surprisingly reduced to a convex optimization problem with linear equality and inequality constraints. Based on these observations, an efficient augmented Lagrangian method, built by two different implementations, i.e., projected-gradient and indefinite-linearization, is proposed to solve the target non-smooth and non-convex optimization problem. Clearly, the proposed new dual optimization framework deals with both the non-convex and convex terms of the studied optimization problem jointly from a global optimization perspective, which is different from the classical two-stage convex-concave way, and hence enjoys great benefits in both theory and numerics. Extensive experiments in image restoration, along with image denoising, image deblurring, and scratched image denoising, have been performed; and the experiment results show that the proposed dual optimization-based single-stage algorithm outperforms the multi-stage methods in numerical efficiency, stability, and image recovery quality. In addition, the Single-stage\(\_\)IDL algorithm is competitive with the \(l_0\)TV-PADMM [44].

Data Availability

The codes generated during the current study are available from the corresponding author on reasonable request.

Notes

For instance, for the random-valued impulse noise, \( \text { O} = \varvec{1}\,\); and for the salt-and-pepper impulse noise, \({\text {O}_i} = \left\{ {\begin{aligned}&{0, \quad {{f}_i} = {u_{\text {max}}} \;\text {or}\; {{u_{\text {min}}}},}\\&{1, \quad \text {otherwise.}} \end{aligned}} \right. \).

References

Bai, L.: A new nonconvex approach for image restoration with gamma noise. Comput. Math. Appl. 77(10), 2627–2639 (2019)

Bi, S., Liu, X., Pan, S.: Exact penalty decomposition method for zero-norm minimization based on MPEC formulation. SIAM J. Sci. Comput. 36(4), A1451–A1477 (2014)

Blake, A., Zisserman, A.: The Graduated Non-Convexity Algorithm, pp. 131–166 (2003)

Candès, E.J., Tao, T.: The power of convex relaxation: near-optimal matrix completion. IEEE Trans. Inform. Theory 56(5), 2053–2080 (2010)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20(1), 89–97 (2004)

Chambolle, A., Caselles, V., Cremers, D., Novaga, M., Pock, T.: An introduction to total variation for image analysis. Theor. Found. Numer. Methods Sparse Recov. 9(263–340), 227 (2010)

Chambolle, A., Pock, T.: An introduction to continuous optimization for imaging. Acta Numer. 25, 161–319 (2016)

Chan, R., Lanza, A., Morigi, S., Sgallari, F.: Convex non-convex image segmentation. Numer. Math. 138(3), 635–680 (2018)

Clason, C., Jin, B., Kunisch, K.: A duality-based splitting method for l1-tv image restoration with automatic regularization parameter choice. SIAM J. Sci. Comput. 32(3), 1484–1505 (2010)

Cui, Z.X., Fan, Q.: A nonconvex+ nonconvex approach for image restoration with impulse noise removal. Appl. Math. Model. 62, 254–271 (2018)

Gu, G., Jiang, S., Yang, J.: A TVSCAD approach for image deblurring with impulsive noise. Inverse Probl. 33(12), 125008 (2017)

Guo, X., Li, F., Ng, M.K.: A fast \(\ell \)1-tv algorithm for image restoration. SIAM J. Sci. Comput. 31(3), 2322–2341 (2009)

He, B., Ma, F., Yuan, X.: Optimal proximal augmented Lagrangian method and its application to full Jacobian splitting for multi-block separable convex minimization problems. IMA J. Numer. Anal. 40(2), 1188–1216 (2020)

He, B., Xu, S., Yuan, X.: Extensions of ADMM for separable convex optimization problems with linear equality or inequality constraints. arXiv:2107.01897 (2021)

Hong, B.W., Koo, J., Burger, M., Soatto, S.: Adaptive regularization of some inverse problems in image analysis. IEEE Trans. Image Process. 29, 2507–2521 (2019)

Kang, M., Jung, M.: Simultaneous image enhancement and restoration with non-convex total variation. J. Sci. Comput. 87(3), 1–46 (2021)

Keinert, F., Lazzaro, D., Morigi, S.: A robust group-sparse representation variational method with applications to face recognition. IEEE Trans. Image Process. 28(6), 2785–2798 (2019)

Lai, M.J., Xu, Y., Yin, W.: Improved iteratively reweighted least squares for unconstrained smoothed \(l_q\) minimization. SIAM J. Numer. Anal. 51(2), 927–957 (2013)

Lanza, A., Morigi, S., Selesnick, I., Sgallari, F.: Nonconvex nonsmooth optimization via convex-nonconvex majorization-minimization. Numer. Math. 136, 343–381 (2017)

Lanza, A., Morigi, S., Selesnick, I.W., Sgallari, F.: Sparsity-inducing nonconvex nonseparable regularization for convex image processing. SIAM J. Imaging Sci. 12(2), 1099–1134 (2019)

Lanza, A., Morigi, S., Sgallari, F.: Convex image denoising via non-convex regularization with parameter selection. J. Math. Imaging Vis. 56, 195–220 (2016)

Lerman, G., McCoy, M.B., Tropp, J.A., Zhang, T.: Robust computation of linear models by convex relaxation. Found. Comput. Math. 15(2), 363–410 (2015)

Lu, Z., Zhang, Y.: Sparse approximation via penalty decomposition methods. SIAM J. Optim. 23(4), 2448–2478 (2013)

Nikolova, M.: Minimizers of cost-functions involving nonsmooth data-fidelity terms. Application to the processing of outliers. SIAM J. Numer. Anal. 40(3), 965–994 (2002)

Nikolova, M., Ng, M.K., Tam, C.P.: On \(\ell _1\) data fitting and concave regularization for image recovery. SIAM J. Sci. Comput. 35(1), A397–A430 (2013)

Peng, G.J.: Adaptive ADMM for dictionary learning in convolutional sparse representation. IEEE Trans. Image Process. 28(7), 3408–3422 (2019)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (2015)

Rudin, L.I., Osher, S.: Total variation based image restoration with free local constraints. In: Proceedings of 1st International Conference on Image Processing, vol. 1, pp. 31–35. IEEE (1994)

Selesnick, I.W., Bayram, I.: Sparse signal estimation by maximally sparse convex optimization. IEEE Trans. Signal Process. 62(5), 1078–1092 (2014)

Swoboda, P., Mokarian, A., Theobalt, C., Bernard, F., et al.: A convex relaxation for multi-graph matching. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11156–11165 (2019)

Tao, M.: Minimization of \(l_1\) over \(l_2\) for sparse signal recovery with convergence guarantee. SIAM J. Sci. Comput. 44(2), A770–A797 (2022)

Tao, P.D., An, L.H.: Convex analysis approach to DC programming: theory, algorithms and applications. Acta Math. Vietnam 22(1), 289–355 (1997)

Tao, P.D., Souad, E.B.: Duality in DC (difference of convex functions) optimization. subgradient methods. In: Trends in Mathematical Optimization: 4th French-German Conference on Optimization, pp. 277–293. Springer (1988)

Tao, P.D., et al.: Algorithms for solving a class of nonconvex optimization problems. methods of subgradients. In: North-Holland Mathematics Studies, vol. 129, pp. 249–271. Elsevier (1986)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B. 58(1), 267–288 (1996)

Tikhonov, A.N., Arsenin, V.I.: Solutions of Ill-Posed Problems, vol. 14. V.H. Winston, Washington (1977)

Vongkulbhisal, J., De la Torre, F., Costeira, J.P.: Discriminative optimization: theory and applications to computer vision. IEEE Trans. Pattern Anal. 41(4), 829–843 (2018)

Wang, Y., Yang, J., Yin, W., Zhang, Y.: A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 1(3), 248–272 (2008)

Wu, T., Shao, J., Gu, X., Ng, M.K., Zeng, T.: Two-stage image segmentation based on nonconvex \(l_2-l_p\) approximation and thresholding. Appl. Math. Comput. 403, 126168 (2021)

Yang, H., Antonante, P., Tzoumas, V., Carlone, L.: Graduated non-convexity for robust spatial perception: From non-minimal solvers to global outlier rejection. IEEE Robot. Autom. Lett. 5(2), 1127–1134 (2020)

Yang, J., Yuan, X.: Linearized augmented Lagrangian and alternating direction methods for nuclear norm minimization. Math. Comput. 82(281), 301–329 (2013)

Yang, J., Zhang, Y., Yin, W.: An efficient tvl1 algorithm for deblurring multichannel images corrupted by impulsive noise. SIAM J. Sci. Comput. 31(4), 2842–2865 (2009)

You, J., Jiao, Y., Lu, X., Zeng, T.: A nonconvex model with minimax concave penalty for image restoration. J. Sci. Comput. 78(2), 1063–1086 (2019)

Yuan, G., Ghanem, B.: \(\ell _0\)tv: a sparse optimization method for impulse noise image restoration. IEEE Trans. Pattern Anal. 41(2), 352–364 (2017)

Zhang, B., Zhu, G., Zhu, Z.: A tv-log nonconvex approach for image deblurring with impulsive noise. Signal Process. 174, 107631 (2020)

Zhang, T.: Analysis of multi-stage convex relaxation for sparse regularization. J. Mach. Learn. Res. 11(3), 1081–1107 (2010)

Zhang, X., Bai, M., Ng, M.K.: Nonconvex-tv based image restoration with impulse noise removal. SIAM J. Imaging Sci. 10(3), 1627–1667 (2017)

Zhou, Q.Y., Park, J., Koltun, V.: Fast global registration. In: Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part II 14, pp. 766–782. Springer (2016)

Zhu, W.: Image denoising using \(l^{p}\)-norm of mean curvature of image surface. J. Sci. Comput. 83(2), 1–26 (2020)

Funding

This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 12271419, 61877046, 61877047, 11801430, and 62106186), in part by the RG(R)-RC/17-18/02-MATH, HKBU 12300819, NSF/RGC Grant N-HKBU214-19, ANR/RGC Joint Research Scheme (A-HKBU203-19) and RC-FNRA-IG/19-20/SCI/01.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 12271419, 61877046, 61877047, 11801430, and 62106186), in part by the RG(R)-RC/17-18/02-MATH, HKBU 12300819, NSF/RGC Grant N-HKBU214-19, ANR/RGC Joint Research Scheme (A-HKBU203-19) and RC-FNRA-IG/19-20/SCI/01

JY: Contributed equally as joint first author.

Appendices

Appendix A: Close-form Solver for Sub-problem (13)

When function g to be the truncated-\({l_1}\) function with any \(\alpha > 0\), its dual form is \({g^*}\left( q \right) = \alpha \left( { - 1 + q} \right) I\left( {q \in \left[ {0,1} \right] } \right) \,\). Thus, the optimization problem (13) can be reformulated as:

so, in this case, the straightforward close form solution for q is

Appendix B: Numerical Implementation of (18) and (19)

In this part, we list two different implementations for solving problems (18) and (19): the first one is implemented using the classical projected gradient-ascent method (PG), while the second one employs a indefinite linearized method (IDL) recently published in [13].

1.1 Appendix B.1: Solver by Projected Gradient-Ascent Method (PG)

Clearly, the maximization problem (18) over w results in:

which can be easily tackled by the following projection gradient-ascent scheme:

where \(\mu > 0\) is the step-size of gradient-ascent and the associate gradient is

As we observed in practice, the total number of projection gradient ascent iterations (41) has no obvious influence on its numerical performance; so we take one iteration (41) in this work to decrease computational complexity.

The maximization problem (19) over \(\varvec{p}\) is reduced as:

which can be solved by the following projection gradient-ascent scheme:

where \(\sigma > 0\) is the step-size of gradient-ascent, \(\nabla = - \textrm{Div}^T\,\), and \({\prod }_\lambda \) is the projection onto the convex set \({C_\lambda } = \left\{ {\eta |\left| \eta \right| \le \lambda } \right\} \,\).

1.2 Appendix B.2: Solver by Indefinite Linearized Method (IDL)

A proximal linearization strategy, which adds a positive-definite proximal term to linearize the studied optimization problem and derives their closed-form solutions, was introduced in [41]. Recently, He et al. [13] showed that such positive definiteness of the added proximal term for linearization is not required for convergence, they proposed an efficient indefinite linearized algorithmic that permits a larger step-size to speed up convergence.

For problem (18), the indefinite linearized w-subproblem is

We can easily compute its closed-form solution:

where \(c_1 > \beta \rho \left( {H{H^T}} \right) \) and \(\tau \in \left( {0.75,1} \right) \,\).

The indefinite linearized \(\varvec{p}\)-subproblem for problem (19) is

So, we have its closed-form solution:

where \(c_2 > \beta \rho \left( \textrm{Div}^T \textrm{Div}\right) \) and \(\tau \in \left( {0.75,1} \right) \,\).

Appendix C: Numerical Implementation of (28) and (29)

Just as the previous “Appendix B”, we can implement two different numerical solvers for (28) and (29), by the classical projected gradient-ascent method (PG) and the indefinite linearized method (IDL).

1.1 Appendix C.1: Solver by Projected Gradient-Descent Method (PG)

The projection gradient-descent scheme for solving (28) is

where \(\nu > 0\) is the step-size of gradient-descent, and the associate gradient is

In essence, recall the definition of \(\varvec{x}\,\), the iterative formulas for updating variables w and \(\varvec{p}\) are as follows:

and

where \(\mu > 0\) is the step-size for updating \(w\,\), \(\sigma >0\) is the step-size for updating \(\varvec{p}\,\), \({\prod }_\lambda \) is the projection onto the convex set \({C_\lambda } = \left\{ {\eta \, | \, \left| \eta \right| \le \lambda } \right\} \,\), and

For the truncated-\(l_1\) function, its dual function is \({g^*}\left( q \right) = \alpha \left( { - 1 + q} \right) I\left( {q \in \left[ {0,1} \right] } \right) \,\), and the optimization problem (29) can then be reformulated as:

Since \(B = \left( \varvec{0} ,\, {\hat{I}} ,\, {\hat{I}}\right) ^{T}\,\) is a variant of the identity matrix I, the above problem (52) has a closed-form solution

where \({\prod }_{[\varvec{0},\varvec{1}]}\) is the projection onto the convex set \({C_{[\varvec{0},\varvec{1}]} } = \left\{ {\eta \, | \, \varvec{0} \le \eta \le \varvec{1} } \right\} \,\), \(A\varvec{x}^k_2\) and \(A\varvec{x}^k_3\) are as shown in ().

1.2 Appendix C.2: Solver by Indefinite Linearized Method (IDL)

We now implement the indefinite linearized method (IDL) to solve sub-problem (28). Its corresponding indefinite linearized version is

where the indefinite matrix M in (54) is taken as

So, the proximal optimization problem (54) can be reduced to

whose closed-form solution is

Thus, the iterative formula for updating the variables w and \(\varvec{p}\) are

and

where \(c_1 > \beta \rho \left( {H{H^T}} \right) \,\), \(c_2 > \beta \rho \left( \textrm{Div}^T \textrm{Div}\right) \,\), and \(\tau \in \left( {0.75,1} \right) \,\).

Clearly, the coefficient matrix B in the optimization problem (29) is indeed a rearrangement of the identity matrix. Hence, the optimization of q using the indefinite linearized technique is the same as (53).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, X., Yuan, J., Tai, XC. et al. Efficient Convex Optimization for Non-convex Non-smooth Image Restoration. J Sci Comput 99, 57 (2024). https://doi.org/10.1007/s10915-024-02504-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-024-02504-6