Abstract

Structure-preserving algorithms and algorithms with uniform error bound have constituted two interesting classes of numerical methods. In this paper, we blend these two kinds of methods for solving nonlinear systems with highly oscillatory solution, and the blended algorithms inherit and respect the advantage of each method. Two kinds of algorithms are presented to preserve the symplecticity and energy of the Hamiltonian systems, respectively. Long time energy conservation is analysed for symplectic algorithms and the proposed algorithms are shown to have uniform error bound in the position for the highly oscillatory structure. Moreover, some methods with uniform error bound in the position and in the velocity are derived and analysed. Two numerical experiments are carried out to support all the theoretical results established in this paper by showing the performance of the blended algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

It is known that nonlinear Hamiltonian systems are ubiquitous in science and engineering applications. In numerical simulation of evolutionary problems, one of the most difficult problems is to deal with highly oscillatory problems, since they cannot be solved efficiently using conventional methods. The crucial point is that standard methods need a very small stepsize and hence a long runtime to reach an acceptable accuracy [25]. In this paper we are concerned with efficient algorithms for the following highly oscillatory second-order differential equation

where \(x(t)\in {\mathbb {R}}^d\), \(\tilde{B}\) is a \(d\times d\) skew symmetric matrix, and it is assumed that F(x) is a smooth function and is the negative gradient of a real-valued function U(x). In this work, we focus on the case where \(0<\varepsilon \ll 1\). Under this case, the solution of this dynamic is highly oscillatory. We note that with \( p = \dot{x} -\frac{1}{2\varepsilon }\tilde{B}x, \) the Eq. (1) can be transformed into a Hamiltonian system with the non-separable Hamiltonian

It immediately follows that the energy of (1) is given by

with \(v=\dot{x}\). This energy is exactly conserved along the solutions, i.e.

We denote in this paper by \(|\cdot |\) the Euclidean norm. If the system (1) is two dimensional and the matrix \(\tilde{B}\) appeared there depends on x, it has been shown in [5] that \(x(t)-x(0)=\mathcal {O}(\varepsilon )\). Therefore, all the methods presented in this paper can be extended to the two dimensional system (1) with a matrix \(\tilde{B}(x)\) by rewriting (1) as

Hamiltonian systems with highly oscillatory solutions frequently occur in physics and engineering such as charged-particle dynamics, Vlasov equations, classical and quantum mechanics, and molecular dynamics [2, 5, 6, 15, 20,21,22, 24, 29, 30, 36, 40]. In the recent few decades, geometric numerical integration also called as structure-preserving algorithm for differential equations has received more and more attention. This kind of algorithms is designed to respect the structural invariants and geometry of the considered system. This idea has been used by many researchers to derive different structure-preserving algorithms (see, e.g. [14, 17, 25, 44]). For the Hamiltonian system (2), there are two remarkable features: the symplecticity of its flow and the conservation of the Hamiltonian. Consequently, for a numerical algorithm, these two features should be respected as much as possible in the spirit of geometric numerical integration.

The numerical computation of highly oscillatory system contains numerous enduring challenges. In practice, the numerical methods used to treat (1) are focus on charged-particle dynamics and can be summarized in the following three categories.

a) The primitive numerical methods usually depend on the approximation of the solution besides high-frequency oscillation and structure preservation characteristics such as the Boris method [1] as well as its further researches [20, 34]. This method does not perform well for highly oscillatory systems and cannot preserve any structure of the system.

b) Some recent methods are devoted to the structure preservation such as the volume-preserving algorithms [27], symplectic methods [26, 37, 38, 41], symmetric methods [21] and energy-preserving methods [2,3,4, 35]. In [22], the long-time near-conservation property of a variational integrator was analyzed under the condition \(0<\varepsilon \ll 1\). Very recently, some integrators with large stepsize and their long term behaviour were studied in [23] for charged-particle dynamics. All of these methods can preserve or nearly preserve some structure of the considered system. However, these methods mentioned above do not pay attention to the high-frequency oscillation, and then the convergence of these methods is not uniformly accurate for \(\varepsilon \). Their error constant usually increases when \(\varepsilon \) decreases.

c) Accuracy is often an important consideration for highly oscillatory systems over long-time intervals. Some new methods with uniform accuracy for \(\varepsilon \) have been proposed and analysed recently. The authors in [24] improved asymptotic behaviour of the Boris method and derived a filtered Boris algorithm under a maximal ordering scaling. Some multiscale schemes have been proposed such as the asymptotic preserving schemes [15, 16] and the uniformly accurate schemes [5, 6, 18]. Although these powerful numerical methods have very good performance in accuracy, structure preservation usually cannot be achieved.

Based on the above points, a natural question to ask is whether one can design a numerical method for (1) such that it has uniform error bound for \(\varepsilon \) and can exactly preserve some structure simultaneously. A kind of energy-preserving method without convergent analysis was given in [40]. It will be shown in this paper that this method has a uniform error bound which has not been studied in [40]. In a recent paper [7], a kind of uniformly accurate methods has been demonstrated numerically to have a near energy conservation but without theoretical analysis. Very recently, the authors in [42] presented some splitting methods with first-order uniform error bound in x and energy or volume preservation. However, only first-order methods are proposed there and higher-order ones with energy or other structure preservation have not been investigated. More recently, geometric two-scale integrators have been developed in [43] but the methods only have near conservations. A numerical method combining uniform error bound and structure preservation has more challenges and importance.

In this paper, we will derive two kinds of algorithms to preserve the symplecticity and energy, respectively. For symplectic algorithms, their near energy conservation over long times will be analysed. Moreover, all the structure-preserving algorithms will be shown to have second-order uniform error bound for \(0<\varepsilon \ll 1\) in x. Meanwhile, some algorithms with first-order or higher-order uniform error bound in both x and v will be proposed. Compared with the existing analysis techniques, the main differences and contributions in the proof involve in two aspects. a) We transform the system and the methods, and then the symplecticity and long time energy conservation are shown for the transformed methods. These transformations make the analysis be more ingenious and simpler. b) For the convergence analysis, to make good use of the scheme of methods, two different techniques named as the re-scaled technique and modulated Fourier expansion are taken. According to the scheme of methods, we choose the suitable analytical technique and make the necessary modifications to make the proof go smoothly.

The remainder of this paper is organised as follows. In Sect. 2, we formulate three kinds of algorithms. The main results of these algorithms are given in Sect. 3 and two numerical experiments are carried out there to numerically show the performance of the algorithms. The proofs of the main results are presented in Sects. 4–6 one by one. The last section includes some concluding remarks.

2 Numerical Algorithms

Before deriving effective algorithms for the system (1), we first present the implicit expression of its exact solution as follows.

Theorem 2.1

(See [24].) The exact solution of system (1) can be expressed as

where \(\Omega =\frac{1}{\varepsilon } \tilde{B}\), h is a stepsize, \(t_n=nh\) and the \(\varphi \)-functions are defined by (see [28])

In what follows, we present two kinds of algorithms which will correspond to symplectic algorithms and energy-preserving algorithms, respectively.

Algorithm 2.2

By denoting the numerical solution \(x_n\approx x(t_n),\, v_n\approx v(t_n)\) with \(n=0,1,\ldots ,\) an s-stage adaptive exponential algorithm applied with stepsize h is defined by:

where \({\alpha }_{ij}(h\Omega ), \beta _i(h\Omega ), \gamma _i(h\Omega )\) are matrix-valued functions of \(h\Omega \).

As some practical examples, we present three explicit algorithms based on the conditions (27) of symplecticity given below. The coefficients are obtained by considering the s-stage adaptive exponential algorithm (5) with the coefficients for \(i=1,2,\ldots ,s,\ j=1,2,\ldots ,i,\)

where \((c_1,c_2,\ldots ,c_{s}),\ (b_{1},b_{2}, \ldots ,b_{s})\) and \((a_{ij})_{s\times s}\) are coefficients of an s-stage diagonal implicit RK method. It can be checked easily that if this RK method is chosen as a symplectic method, then the corresponding coefficients (6) satisfy the symplectic conditions (27) given below. We omit the details of calculations for brevity. We first consider

The adaptive exponential algorithm whose coefficients are given by this choice and (6) is denoted by SM1. For \(s=2\), choosing

yields another method, which is called as SM2. If we consider

then the corresponding method is referred to SM3.

Remark 2.3

It is remarked that the following integrator for solving (1) has been given in [24]

where \(F_n=F(x_n)\) and \(\Psi , \Psi _0,\Psi _1\) are matrix-valued functions of \(h \Omega \) satisfying \(\Psi (0)=\Psi _0(0)=\Psi _1(0)=1\). For this scheme, convergence is researched but the structure preservation such as symplecticity or energy conservation has not been discussed. It is noted that Algorithm 2.2 given by (5) contains (7) and thence the results of SM1-SM3 also hold for (7). In this paper, we will study not only the convergence but also the symplecticity and long time energy conservation for (5). It will be shown that SM1-SM3 are all symplectic and have a good near conservation of energy over long times, which are also true for the algorithm (7) presented in [24].

We also note that it will be shown in this paper that SM1-SM3 are all second order and they have similar long time conservations. Higher-order methods can be derived but their long term analysis is very complicated and challenging. We hope to make some progress on this aspect in our future work.

The following algorithm is devoted to the energy-preserving methods which are designed based on the variation-of-constants formula (4) and the idea of continuous-stage methods.

Algorithm 2.4

An s-degree continuous-stage adaptive exponential algorithm applied with stepsize h is defined by

where \( X_{\tau } \) is a polynomial of degree s with respect to \( \tau \) satisfying \(X_{0}=x_{n},\ X_{1}=x_{n+1}.\)\(C_{\tau } \), \( \bar{B}_{\tau }, B_{\tau } \) and \(A_{\tau ,\sigma }\) are polynomials which depend on \( h\Omega \). The \( C_{\tau }(h\Omega ) \) satisfies \( C_{c_{i}}(h\Omega )=c_{i}\varphi _{1}(c_{i}h\Omega ),\) where \( c_{i} \) for \( i=1,2, \ldots , s+1 \) are the fitting nodes, and one of them is required to be one.

As an illustrative example, we consider \(s=1,\ c_1=0,\ c_2=1\) and choose

This obtained algorithm can be rewritten as

which is denoted by EM1.

Remark 2.5

It is noted that EM1 of Algorithm 2.4 has been given in [40] and it was shown to be energy-preserving. However, its convergence has not been studied there. In this paper, we will analyse the convergence of each algorithm. It will be shown that some methods have a first-order or higher-order uniform error bound in both x and v and the others have a second-order uniform convergence in x for \(0<\varepsilon \ll 1\). In contrast, many classical methods such as Euler method, Runge–Kutta (-Nyström) methods often show non-uniform error bounds in both x and v, where the error constant is usually proportional to \(1/\varepsilon ^k\) for some \(k>0\).

We remark that the above four methods will be shown to have uniform error bound only in x. In order to obtain some methods with the same uniform error bound in both x and v, we derive the following algorithm.

Algorithm 2.6

For constant \(F\equiv F_0\in {\mathbb {R}}^3\), the variation-of-constants formula (4) reads

Based on this, we consider the following algorithm

This method is referred to M1.

By the simple M1 and parareal algorithms (see [31]), we formulate some higher order methods. For solving the second-order system (1), the parareal algorithm uses two propagators: the fine propagator \(\mathcal {F}\) and the coarse propagator \(\mathcal {G}\), where classically \(\mathcal {F}\) uses a small (fine) time step \(\delta t\) and \(\mathcal {G}\) a large (coarse) time step \(\Delta t\). In this paper, we consider M1 with \(\Delta t=h\) as the coarse propagator and denote this propagator by

For the fine propagator \(\mathcal {F}\), we also choose M1 with a small time step \(0<\delta t<h\) and refer to it as

where

For solving (1), the parareal algorithms compute for iteration index \(k =0,1,\ldots \) and \( n=0,1,\ldots ,\frac{T}{h}-1\), and with \([x^k_0,v^k_0]= [x_0,v_0]\)

The initial guess \(\{[x^0_n;v^0_n]\}_{n\ge 1}\) can be random or generated by the \(\mathcal {G}\)-propagator. We shall refer to (12) by PM. As two examples, we choose \(k=1,\delta t=h^2\) and \(k=2,\delta t=h^3\) and denote them by parareal method 1 (PM1) and parareal method 2 (PM2), respectively.

Remark 2.7

It is noted that M1 is a kind of exponential integrators and compared with the methods given in [43], it has simple scheme. The aim of presenting M1 is to derive higher-order methods with uniform error bounds and simple scheme. For the higher-order uniformly accurate methods, more complicated formulations are needed and we refer to [8, 18, 43] for some recent work on this topic.

3 Main Results and a Numerical Test

3.1 Main Results

The main results of this paper are given by the following four theorems. The first three theorems are about structure preservations of the methods for the Hamiltonian system (2) and the last one concerns uniform error bound for the format (1).

Theorem 3.1

(Symplecticity of SM1-SM3) Consider the methods SM1-SM3 of Algorithm 2.2 where \(p_{n+1} = v_{n+1} -\frac{1}{2\varepsilon } \tilde{B}x_{n+1}\). In this case, for the non-separable Hamiltonian (2), the map \((x_n,p_n ) \rightarrow (x_{n+1},p_{n+1})\) determined by these methods is symplectic, i.e.,

Theorem 3.2

(Energy preservation of EM1 [40].) The method EM1 of Algorithm 2.4 preserves the energy (3) exactly, i.e.

Theorem 3.3

(Long time energy conservation of SM1-SM3.) Consider the following assumptions.

-

It is assumed that the initial values \(x_{0}\) and \(v_0:=\dot{x}_0\) are bounded independently of \(\varepsilon \).

-

Suppose that the considered numerical solution stays in a compact set.

-

A lower bound on the stepsize

$$\begin{aligned}h/\varepsilon \ge c_0 > 0\end{aligned}$$is required.

-

After diagonalization of \( \tilde{B}/\varepsilon \), denote the obtained diagonal matrix by \(i\tilde{\Omega }\). Consider the notations \(k=(k_1,k_2,\ldots ,k_l),\ \varpi =(\varpi _1,\varpi _2,\ldots ,\varpi _l):=(diagonal elements of \ \tilde{\Omega }), \ k\cdot \varpi =k_1\varpi _1+k_2\varpi _2+\ldots +k_l\varpi _l \) and the resonance module

$$\begin{aligned} \mathcal {M}= \{k\in \mathbb {Z}^{l}:\ k\cdot \varpi =0\}.\end{aligned}$$(13)Assume that the numerical non-resonance condition is true

$$\begin{aligned} |\sin (\frac{h}{2}(k\cdot \varpi ))| \ge c \sqrt{h}\ \ \textrm{for} \ \ k \in \mathbb {Z}^l\backslash \mathcal {M} \ \ \textrm{with} \ \ \sum _{j=1}^l|k_j|\le N \end{aligned}$$for some \(N\ge 2\) and \(c>0\). The notations used here are referred to the last part of Sect. 5.

For the symplectic methods SM1-SM3 of Algorithm 2.2, it holds that

for \(0\le nh\le h^{-N+1}.\) The constant C is independent of \(n, h,\varepsilon \), but depends on N, T and the constants in the assumptions.

Remark 3.4

It is noted that M1 and PM1-PM2 do not have the above energy conservation property. The reason is that they are not symplectic and symmetric methods. It will be seen from the proof given in Sect. 5 that symplecticity and symmetry play an important role in the analysis.

Remark 3.5

It is noted that in Theorem 3.3, a lower bound on the stepsize \(h\ge c_0 \varepsilon \) is presented. At first glance, it seems that this contradicts with the fact that a small stepsize is needed in the methods for highly oscillatory problems. From Theorem 3.6 given blew, it follows that the symplectic methods SM1-SM3 have the accuracy \(\mathcal {O}(h^2)\) in x and \(\mathcal {O}(h^2/\varepsilon )\) in v. Thus if one only concerns the accuracy in x, large stepsizes can be used for the symplectic methods and Theorem 3.3 shows that under this case, the methods still have a long-time near energy conservation. If a small stepsize is used to keep a good accuracy in both x and v, the energy behaviour of the symplectic methods can be derived with the help of backward error analysis (Chap. IX of [25]).

Theorem 3.6

(Convergence.) Assume that the initial value of (1) is uniformly bounded w.r.t \(\varepsilon \), F(x) is smooth and uniformly bounded for all \(\varepsilon \), and the solution of (1) stays in a uniformly bounded set. For the methods M1 and PM of Algorithm 2.6, the global errors are bounded by

where \(0<nh\le T\). The convergence of the energy-preserving method EM1 of Algorithm 2.4 is

Here we denote \(A\lesssim B\) for \(A\le CB\) with a generic constant \(C>0\) independent of h or n or \(\varepsilon \) but depends on T and the bound of \(F_x\). For the symplectic methods SM1-SM3 of Algorithm 2.2, under the conditions of Theorem 3.3,

where the error constants are independent of \(n, h,\varepsilon \), but depend on T, the bound of \(F_x\) and the constants in the assumptions of Theorem 3.3.

In the Sects. 4–6, we will prove Theorems 3.1, 3.3-3.6, respectively. For the dimension d, it is required that \(d\ge 2\) since \(\tilde{B} \) is a zero matrix once \(d=1\), and then the system (1) reduces to a second-order ODE \(\ddot{x}(t)=F(x(t))\) without highly oscillatory solutions.

Remark 3.7

For the seven methods presented in this paper, concerning the symmetry [25], it is easy to check that all of them except M1 and PM1-PM2 are symmetric. Their properties are summarized in Table 1. All of these observations will be numerically illustrated by a test given below. Ones can choose the appropriate algorithm according to their interest.

-

If uniform (in \(\varepsilon \)) error bound is needed in both x and v, M1 and PM1-PM2 are most appropriate and these three algorithms provide different uniform accuracy from the first order to the third order.

-

If ones only focus on uniform error bound in x, the symplectic or energy-preserving methods are good choices. EM1 can preserve the energy exactly but it is implicit. Symplectic methods SM1-SM3 have similar numerical behaviour. They are explicit, preserve the symplecticity and have a good near conservation of energy over long times. Ones can choose the preferred method depending on their demands.

3.2 Numerical Tests

In this part, we carry out two numerical experiments to show the performance of the derived methods.

Problem 1 As an illustrative numerical experiment, we consider the charged particle system of [20] with an additional factor \(1/\varepsilon \) and a constant magnetic field. The system can be expressed by (1) with \(d=3\), where the potential \(U(x)=x_{1}^{3}-x_{2}^{3}+x_{1}^{4}/5 +x_{2}^{4}+x_{3}^{4}\) and \(\tilde{B}= \begin{pmatrix} 0 &{} 0.2 &{} 0.2 \\ -0.2 &{} 0 &{} 1 \\ -0.2 &{} -1 &{} 0 \\ \end{pmatrix}.\) The initial values are chosen as \(x(0)=(0.6,1,-1)^{\intercal }\) and \(v(0)=(-1,0.5,0.6)^{\intercal }.\) It is noted here that we use the four-point Gauss-Legendre’s quadrature to the integral involved in the numerical flow EM1.

Energy conservation We take \(\varepsilon =0.05,0.005\) and apply our seven methods as well as the symplectic Euler method (denoted by SE), the exponential Euler method (denoted by EE) [28] and the explicit exponential Runge–Kutta method (denoted by ERK) of order two [28] to this problem on [0, 100000] with \(h=\varepsilon \). The standard fixed point iteration is used for EM1 and we set \(10^{-16}\) as the error tolerance and 10 as the maximum number of iterations. For the other methods, they are explicit and the iteration is not needed. The relative errors \(ERR:=(E(x_{n},v_{n})-E(x_{0},v_{0}))/E(x_{0},v_{0})\) of the energy are displayed in Figs. 1–4. According to these results, we have the following observations. SM1-SM3 (Fig. 1) have near energy conservation over long times, EM1 preserves the energy very well (Fig. 2) but others show a bad energy conservation (Figs. 3–4). We do not show the result for PM2 since it has a similar behaviour as PM1.

Convergence For displaying the results of convergence, the problem is solved on [0, 1] and the global errors \( errx:=\frac{\left| x_n-x(t_n)\right| }{\left| x(t_n)\right| },\ errv:=\frac{\left| v_n-v(t_n)\right| }{\left| v(t_n)\right| } \) of each method for different \(\varepsilon \) are shown in Figs. 5–8. It is noted that we use the result of HOODESolver given in [32] as the true solution. It follows from these results that M1 and PM1-PM2 have uniform convergence in both x and v (Fig. 5) and the other our methods have a uniform second-order error bound in x (Figs. 6–7), which agree with the results stated in Theorem 3.6. The existing method SE behaves very poor in the accuracy of both x and v and the exponential integrators EE, ERK have a uniform error bound in x (Fig. 8).

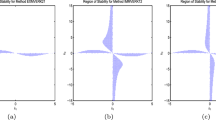

Resonance instability Finally, we show the resonance instability of the proposed methods. This is done by fixing \(\varepsilon =1/2^{10}\) and showing the errors at \(T=1\) against \(h/\varepsilon \) in Fig. 9. It can be observed that PM1 gives a very poor resultFootnote 1, M1 shows very well but other methods have a good behavior for values of \(h/\varepsilon \) except integral multiples of \(\pi \). SM3 shows a not uniform result close to \(4\pi \), other methods EM1 and SM1-SM2 close to even multiples of \(\pi \). This means that SM3 appears more robust near stepsize resonances and other methods behave very similar away from stepsize resonances.

Problem 2 The second test is devoted the system (1) with a large dimension \(d=32\), where the nonlinear function \(F(x)=-\sin (x)\) and \(\tilde{B}\) is chosen as a skew-symmetric tridiagonal matrix with \(\tilde{B}_{j,j+1}=j/d\) for \(j=1,2,\ldots ,d-1\). We consider the initial values \(x(0)=(0.1,0.1,\ldots ,0.1)^{\intercal }\) and \(v(0)=(0.2,0.2,\ldots ,0.2)^{\intercal }.\) This problem is firstly solved with \(\varepsilon =0.05\) and \(h=0.1\). The relative energy errors are presented in Fig. 10. PM2 has a similar behaviour as PM1 and thus we do not show its result for brevity. Then we integrate this problem on [0, 1] and the global errors in x and v are displayed in Figs. 11–14. All the numerical observations remain the same as before.

4 Analysis on Symplecticity (Theorem 3.1)

In this section, we will prove the symplecticity of SM1-SM3 given in Theorem 3.1.

4.1 Transformed System and Methods

Due to the skew-symmetric matrix \(\tilde{B}\), it is clear that there exists a unitary matrix P and a diagonal matrix \(\tilde{\Omega }\) such that

where

with the integer \(l\ge 1\). With the linear change of variable

the system (1) can be rewritten as

where \(\tilde{F}(\tilde{x})=P^H F(P \tilde{x})= -\nabla _{\tilde{x}} U(P\tilde{x})\). In the rest parts of this paper, we denote the vector x by

and the same notation is used for all the vectors in \({\mathbb {R}}^d\) or \({\mathbb {C}}^d\) and for the diagonal matrix in \({\mathbb {R}}^{d\times d}\) or \({\mathbb {C}}^{d\times d}\). For example, \(\tilde{\Omega }^{-l}\) is referred to \(-\tilde{\omega }_l\). According to (19) and the property of the unitary matrix P, one has that for \(k=1,2,\ldots ,l\)

The energy of this transformed system (20) is given by

For this transformed system, we can modify the schemes of SM1-SM3 accordingly. For example, the scheme (5) has a transformed form for (20)

We summarise the relationships as follows:

Denote the transformed method (23) by

where the superscript index J for \(J=-l,-l+1,\ldots , l\) denotes the J-th entry of a vector or a matrix and \(\tilde{F}^{J}_{i}\) denotes the J-th entry of \(\tilde{F}(\tilde{X}_{i})\) as the scheme of (21). It is noted that when \(d=2l\), \(J=0\) does not exist. With the notation of differential 2-form, we need to prove that (see [25])

We compute

where \(P^HP=I\) is used here. Similarly, one has \( \sum \limits _{J=-l}^{l}dx_{n}^{J} \wedge dp_{n}^{J}= \sum \limits _{J=-l}^{l} { d}\bar{\tilde{x}}_{n}^{J} \wedge { d}\tilde{p}_{n}^{J}.\) Thus we only need to prove \( \sum \limits _{J=-l}^{l} { d}\bar{\tilde{x}}_{n+1}^{J} \wedge { d}\tilde{p}_{n+1}^{J}= \sum \limits _{J=-l}^{l} { d}\bar{\tilde{x}}_{n}^{J} \wedge { d}\tilde{p}_{n}^{J}, \) i.e.

4.2 Symplecticity of the Transformed Methods

In this part, we will prove the following lemma.

Lemma 4.1

The result (26) is true if the following conditions are satisfied

where \(i,j=1,2,\ldots ,s,\) and \(K=h\tilde{\Omega }\textrm{i}\). Here \(\bar{\varphi }_1\) denotes the conjugate of \(\varphi _1\) and the same notation is used for other functions.

Proof

In view of the definition of differential 2-form (see [25]), it can be proved that for \(J=-l,-l+1,\ldots ,l\),

In the light of the scheme (25) and the fact that any exterior product \(\wedge \) appearing here is real, it is obtained that

where the fact that \(e^{K^{J}} -h \tilde{\Omega }^{J}\textrm{i}\varphi _{1}(K^{J})=I\) is used here. On the other hand, from the first s equalities of (25), it follows that

for \( i=1,2,\ldots ,s.\) We then obtain for \(j=1,2,\ldots ,s\)

Inserting this into (28) and summing over all J yields

\(\circ \) Prove that (29a)=0.

Based on the first s conditions of (27), \(\tilde{F}(\tilde{x})=-\nabla _{\tilde{x}} U(P\tilde{x})\) and (26), it can be verified that \(d\bar{\tilde{X}}^{J}_{j}\wedge d\tilde{F}^{J}_{j}= d X^{J}_{j}\wedge dF^{J}_{j}\). Thus, one has

\(\circ \) Prove that (29b)=0.

Using the property of \(\tilde{v}_{n}\), we have

and

Therefore, it follows that

\(\circ \) Prove that (29c)-(29f)\(=0\).

In the light of all the identities after the previous s ones in (27), the last two terms (29c)-(29f) vanish.

The results stated above lead to (26). \(\square \)

Based on the results of Lemma 4.1, it can be verified straightforwardly that the coefficients of SM1-SM3 satisfy (27). Therefore, these methods are symplectic.

5 Analysis on Long-time Energy Conservation (Theorem 3.3)

In this section, we will show the long time near-conservation of energy presented in Theorem 3.3 along SM2 algorithm. We first derive modulated Fourier expansion (see, e.g. [10, 19, 22, 24]) with sufficient many terms for SM2. Then one almost-invariant of the expansion is studied and based on which the long-time near conservation is confirmed. The proof of other methods can be given by modifying the operators \(\mathcal {L}(hD),\hat{\mathcal {L}}(hD)\) (32) and following the way given below. We skip this proof for brevity.

5.1 Reformulation of SM2

Using symmetry, the algorithm SM2 can be expressed in a two-step form

with \(F_{n}:=F(x_{n}),\) which yields that

where \( \alpha (\xi )=\frac{\xi }{\varphi _1(\xi )-\varphi _1(-\xi )},\ \ \beta (\xi )=\frac{2}{\varphi _1(\xi )+\varphi _1(-\xi )},\ \ \gamma (\xi )=\xi \frac{2 \varphi _1(\xi )\varphi _1(-\xi )}{\varphi ^2_1(\xi )-\varphi ^2_1(-\xi )}.\)

For the transformed system (20), it becomes

where the coefficient functions are given by \( \tilde{\alpha } (\xi )=\frac{1}{{{\,\textrm{sinc}\,}}^2(\frac{\xi }{2})},\ \tilde{\beta } (\xi )=\frac{1}{{{\,\textrm{sinc}\,}}(\xi )},\ \tilde{\gamma } (\xi )=\xi \csc (\xi )\) with \({{\,\textrm{sinc}\,}}(\xi )=\sin (\xi )/\xi \). Based on (31), we define the operator

where D is the differential operator.

Before we derive the modulated Fourier expansion for SM2, we need the following notations. We collect the diagonal elements of \(\tilde{\Omega }\) (18) in the vector

It is noted that \(\varpi =\mathcal {O}(1/\varepsilon )\). In this paper, the notation k is used to describe a vector in \({\mathbb {R}}^d\) and as stated in the previous section, its components are denoted by

and the same notation is used for all the vectors with the same dimension as k. For example, \(\varpi ^{l}\) is referred to \(\tilde{\omega }_l\). In this paper, we also use the notations

and the resonance module \(\mathcal {M}= \{k\in \mathbb {Z}^{D}:\ k\cdot \varpi =0\}\). Denote \(\langle j\rangle \) by the unit coordinate vector \((0, \ldots , 0, 1, 0, \ldots ,0)^{\intercal }\in \mathbb {R}^{d}\) with the only entry 1 at the j-th position. The set \(\mathcal {N}^*\) is defined as follows. For the resonance module \(\mathcal {M}\), we let \(\mathcal {K}\) be a set of representatives of the equivalence classes in \(\mathbb {Z}^l\backslash \mathcal {M}\) which are chosen such that for each \(k\in \mathcal {K}\) the sum |k| is minimal in the equivalence class \([k] = k +\mathcal {M},\) and that with \(k\in \mathcal {K}\), also \(-k\in \mathcal {K}.\) We denote, for the positive integer N, \(\mathcal {N}=\{k\in \mathcal {K}:\ |k|\le N\},\ \mathcal {N}^*=\mathcal {N}\backslash \{(0,\ldots ,0)\}. \)

5.2 Modulated Fourier Expansion

We first present the modulated Fourier expansion of the numerical result \(\tilde{x}_{n}\) and \(\tilde{v}_{n}\) for solving the transformed system (20). In the rest parts of this paper, we consider \(d=2l+1\) and all the analysis can be modified to \(d=2l\) without any difficulty.

We will look for smooth coefficient functions \(\tilde{\zeta }_{k}\) and \(\tilde{\eta }_{k}\) such that for \(t=nh\), the functions

yield a small defect \(\tilde{R},\tilde{S}\) when they are inserted into the numerical scheme (31).

Lemma 5.1

Under the conditions of Theorem 3.3 and for \(t=nh\), the numerical results \(\tilde{x}_{n}\) and \(\tilde{v}_{n}\) produced by (31) satisfy

The coefficient functions \(\tilde{\zeta }_{k}\) have the bounds

and we have the following results for the coefficient functions \(\tilde{\eta }_{k}\)

A further result is true

The remainders in (34) are bounded by

The constants symbolised by the notation \(\mathcal {O}\) are independent of \(h, \varepsilon \), but depend on \( c_0, c\) appeared in the conditions of Theorem 3.3.

Proof

The proof is presented by using the technique of the modulated Fourier expansion [19, 25] and it involves the construction of the series and the analysis of the coefficients and the truncation. For brevity, we present the key steps here and the details are referred to some related works [21,22,23,24, 42, 43].

\(\bullet \) Proof of (34). Inserting the first expansion of (33) into the two-step form (31), expanding the nonlinear function into its Taylor series and comparing the coefficients of \(\textrm{e}^{\textrm{i}(k \cdot \varpi ) t}\), we obtain

where the sum ranges over \(m\ge 0\), \(s(\alpha )=\sum \limits _{j=1}^{m}\alpha _j\) with \(\alpha =(\alpha _1,\ldots ,\alpha _m)\) and \(0<|\alpha _i|<N\), and \((\tilde{\zeta })_{\alpha }\) is an abbreviation for \((\tilde{\zeta }_{\alpha _1},\ldots ,\tilde{\zeta }_{\alpha _m})\). This formula gives the modulation system for the coefficients \(\tilde{\zeta }_{k}\) of the modulated Fourier expansion. Choosing the dominating terms and considering the Taylor expansion of \(\hat{\mathcal {L}}\)

the following ansatz of \(\tilde{\zeta }_{k}\) can be obtained:

where \(j=1,2,\ldots ,l\), \(A(x)= 2{{\,\textrm{sinc}\,}}^2(\frac{1}{2}x)\), all the \(\mathcal {F}\) and so on are formal series, and the dots stand for power series in \(\sqrt{h}\). In this paper we truncate the ansatz after the \(\mathcal {O}(h^{N+1})\) terms. On the basis of the second formula of (30), one has

Inserting (33) into (41), expanding the nonlinear function into its Taylor series and comparing the coefficients of \(\textrm{e}^{\textrm{i}(k \cdot \varpi ) t}\), one arrives

where

In a same way as [21,22,23,24, 42, 43], this formula gives the modulation system for the coefficients \(\tilde{\eta }_{k}\) of the modulated Fourier expansion by choosing the dominating terms and by the Taylor expansion of \(\mathcal {L}\)

Under the above analysis, the construction of \(\tilde{\zeta }_{k}\) and \(\tilde{\eta }_{k}\) is presented and this proves (34).

\(\bullet \) Proof of (35)-(37). For the first-order and second-order differential equations appeared in (40), initial values are needed and we derive them as follows.

According to the conditions \(\tilde{x}_{h}(0)=\tilde{x}_0\) and \(\tilde{v}_{h}(0)=\tilde{v}_0\), we have

Thus the initial values \(\tilde{\zeta }_{\langle 0\rangle }^0(0)=\mathcal {O}(1)\) and \(\dot{\tilde{\zeta }}_{\langle 0\rangle }^0(0)=\mathcal {O}(1)\) can be derived by considering the first and third formulae, respectively. According to the second equation of (43), one gets the initial value \(\tilde{\zeta }^{\pm j}_{\langle 0\rangle }(0)=\mathcal {O}(1)\). It follows from the fourth formula that \(\tilde{\zeta }_{\langle j\rangle }^{j}(0)=\frac{1}{\textrm{i} \tilde{\omega }_j}\big (\tilde{v}^{j}_{\langle 0\rangle }-\dot{\tilde{\zeta }}^{j}_{\langle 0\rangle }(0)+\mathcal {O}(\varepsilon )\big ) =\mathcal {O}(\varepsilon )\), and likewise one has \(\tilde{\zeta }^{-j}_{\langle - j\rangle }(0)=\mathcal {O}(\varepsilon )\).

With the ansatz (40), we achieve the bounds

According to the initial values stated above, the bounds

are obtained. Moreover, based on the above bounds and the relationship (42), the coefficient functions \(\tilde{\eta }_k\) are bounded as (36). With these results and considering (40) and (42) for \(\left| k\right| >1\), a further result (37) is deduced by the same arguments in [23, 24, 42, 43].

\(\bullet \) Proof of (38). Define

for \(t=nh\). Considering the two-step formulation, it is clear that \(\delta _1(t+h)+\delta _1(t-h)=\mathcal {O}(h^{4})\). According to the choice for the initial values, we obtain \(\delta _1(0)=\mathcal {O}(h^{N+2}).\) Therefore, one has \(\delta _1(t)=\mathcal {O}(h^{N+2})+\mathcal {O}(t h^{N+1}).\) Using this result and (41), we have \(\delta _2=\mathcal {O}(h^{N}).\) Then let \(\tilde{R}_{n}=\tilde{x}_{n}-\tilde{x}_{h}(t)\) and \(\tilde{S}_{n}=\tilde{v}_{n}-\tilde{v}_{h}(t).\) With the scheme of SM2, the error recursion is obtained as follows:

where \(\Gamma _{n}:=\int _{0}^1 \tilde{F}_x(\tilde{x}_{n}+\tau \tilde{R}_{n}) d\tau \). Similar to [23, 24, 42, 43], solving this recursion and the application of a discrete Gronwall inequality gives (38). \(\square \)

Using the relationship shown in (24), the modulated Fourier expansion of the method SM2 is immediately obtained.

Lemma 5.2

The numerical results \(x_{n}\) and \(v_{n}\) produced by SM2 admits the following modulated Fourier expansion

where \(\zeta _{k}=P\tilde{\zeta }_{k}\) and \(\eta _{k}=P\tilde{\eta }_{k}.\) Moreover, we have \(\zeta _{-k}=\overline{\zeta _{k}}\) and \(\eta _{-k}=\overline{\eta _{k}}\).

5.3 An Almost-Invariant

Denote \({\mathbf {\tilde{\zeta }}}=\big (\tilde{\zeta }_{k}\big )_{k\in \mathcal {N}^*}.\) An almost-invariant of the modulated Fourier expansion (33) is given as follows.

Lemma 5.3

There exists a function \(\mathcal {E}[\mathbf {\tilde{\zeta }}](t)\) such that

Meanwhile, this function has the form

Proof

According to the analysis of Sect. 5.2, it is deduced that

where we use the denotations \(\tilde{x}_{h}=\sum \limits _{ k\in \mathcal {N}^*}\tilde{x}_{h,k}\) with \( \tilde{x}_{h,k}=\textrm{e}^{\textrm{i}(k \cdot \varpi ) t}\tilde{\zeta }_{k}.\) Multiplication of this result with P yields

where \(x_{h}=\sum \limits _{ k\in \mathcal {N}^*}x_{h,k}\) with \(x_{h,k}=\textrm{e}^{\textrm{i}(k \cdot \varpi ) t}\zeta _k.\) Rewrite the equation in terms of \(x_{h,k}\) and then one has

where \(\mathcal {U}(\textbf{x})\) is defined as

with \(\textbf{x}=\big (x_{h,k}\big )_{k\in \mathcal {N}^*}.\) Multiplying this equation with \( \big (\dot{x}_{h,-k}\big )^\intercal \) and summing up yields

Denoting \(\mathbf {\zeta }=\big (\zeta _{k}\big )_{k\in \mathcal {N}^*}\) and switching to the quantities \(\zeta ^k\), we obtain

In what follows, we show that the right-hand side of (45) is the total derivative of an expression that depends only on \(\tilde{\zeta }_k\) and derivatives thereof. Consider \(k = \langle 0\rangle \) and then it follows that

By the formulae given on p. 508 of [25], we know that \(Re \big ( \dot{\overline{\tilde{\zeta }}}_{\langle 0\rangle }\big )^\intercal \hat{\mathcal {L}}(hD) \tilde{\zeta }_{\langle 0\rangle }\) is a total derivative. For \(k\ne {\langle 0\rangle }\), in the light of

where \( N_n\in \mathbb {R}^{d\times d}\) for \(n=0,1,\ldots \), it is easy to check that \(Re \big ( \dot{\overline{\tilde{\zeta }}}_{k}\big )^\intercal \tilde{\gamma }^{-1} (h\tilde{\Omega })\hat{\mathcal {L}}(hD+\textrm{i}h(k \cdot \varpi )) \tilde{\zeta }_k\) and \(Re \big (i (k \cdot \varpi ) \overline{\tilde{\zeta }_{k}}\big )^\intercal \tilde{\gamma }^{-1} (h\tilde{\Omega })\hat{\mathcal {L}}(hD+\textrm{i}h(k \cdot \varpi )) \tilde{\zeta }_k\) are both total derivatives. Therefore, there exists a function \(\mathcal {E}\) such that \(\frac{d}{dt}\mathcal {E}[\mathbf {\tilde{\zeta }}](t)=\mathcal {O}(h^{N})\). It follows from an integration that (44) holds.

On the basis of the previous analysis, the construction of \(\mathcal {E}\) is derived as follows

\(\square \)

5.4 Long-Time Near-conservation

Considering the result of \(\mathcal {E}\) and the relationship (36) between \(\tilde{\zeta }\) and \(\tilde{\eta }\), we obtain

We are now in a position to show the long-time conservations of SM2.

In terms of the bounds of the coefficient functions, one arrives at

A comparison between (46) and (47) gives \( \mathcal {E} [\mathbf {\tilde{\zeta }}](t_n)=E(x_{n},v_{n}) +\mathcal {O}(h). \) Based on (44) and this result, the statement (14) is easily obtained by considering \(nh^N\le 1\) and

6 Analysis on Convergence (Theorem 3.6)

In this section, we discuss the convergence of the algorithms stated in Theorem 3.6. The proof will be firstly given for EM1, M1 and PM by using the averaging technique and then presented for SM1-SM3 by using modulated Fourier expansion.

6.1 Proof for EM1, M1 and PM

6.1.1 Re-scaled System and Methods

In order to establish the uniform error bounds, the strategy developed in [9, 42] will be used in the proof. This means that the time re-scaling \(\tau :=t/\varepsilon \) is considered and \( \dot{q}(\tau )=\varepsilon \dot{x}(t),\ \dot{w}(\tau )=\varepsilon \dot{v}(t), \) where the notations \(q(\tau ):=x(t),\ w(\tau ):=v(t)\) are used. Then the convergent analysis will be given for the following long-time problem

where \(\dot{q}\) (resp. \(\dot{w}\)) is referred to the derivative of q (resp. w) with respect to \(\tau \). The solution of this system satisfies \(\Vert q\Vert _{L^\infty (0,T/\varepsilon )}+\Vert w\Vert _{L^\infty (0,T/\varepsilon )}\lesssim 1\) and for solving (48), the method EM1 becomes

where \(\Delta \tau \) is the time step \(\Delta \tau =\tau _{n+1}-\tau _n\) and \(q_{n}\approx q(\tau _n),\ w_n\approx w(\tau _n)\) is the numerical solution. The variation-of-constants formula of (48) reads

6.1.2 Local Truncation Errors

Based on (49), the local truncation errors \(\xi _n^q\) and \(\xi _{n}^w\) of EM1 for \(0\le n<\frac{T}{\varepsilon \Delta \tau }\) are defined as

By this result and the variation-of-constants formula of (48), we compute

where \(\hat{F} (\xi )=F(q(\xi ))\) and \(\hat{F}^{(j)}\) denotes the jth derivative of \(\hat{F}\) with respect to \(\tau \). By this definition, it follows that

Consequently, the local error becomes

where the result \(\varphi _{2}(\Delta \tau \tilde{B}) -\frac{1}{2} \varphi _1(\Delta \tau \tilde{B})=\mathcal {O}(\Delta \tau )\) is used here. Similarly, we obtain

Remark 6.1

It is noted that for M1, the local truncation errors are

6.1.3 Error Bounds

Define the error of the considered scheme

We first prove the error bounds of re-scaled EM1 and M1.

Lemma 6.2

The convergence of re-scaled EM1 is given by

For the re-scaled M1, its global error becomes

Proof

In this part, we will first prove the boundedness of EM1: there exists a generic constant \(\Delta \tau _0>0\) independent of \(\varepsilon \) and n, such that for \(0<\Delta \tau \le \Delta \tau _0\), the following inequalities are true:

For \(n=0\), (57) is obviously true. Then we assume that (57) is true up to some \(0\le m<\frac{T}{\varepsilon \Delta \tau }\), and we shall show that (57) holds for \(m+1\).

For \(n\le m\), subtracting (51) from the scheme (49) leads to

where we use the following notations

From the induction assumption of the boundedness, it follows that

Taking the absolute value (Euclidean norm) on both sides of (58) and using (59), we have

Summing them up for \(0\le n\le m\) gives

In the light of the truncation errors in (52) and the fact that \(m\Delta \tau \varepsilon \lesssim 1\), one has

and then by Gronwall’s inequality arrives at

Meanwhile, concerning

there exists a generic constant \(\Delta \tau _0>0\) independent of \(\varepsilon \) and m, such that for \(0<\Delta \tau \le \Delta \tau _0\), (57) holds for \(m+1\). This completes the induction.

We rewrite (58) as

Following the same way as stated above, (55) is arrived. We note that for M1, the global error given in (60) becomes (56).

In what follows, we derive the error bound for re-scaled PM.

Lemma 6.3

For the error bound of re-scaled PM, it satisfies

where we denote by \(C>0\) a generic constant independent of \(\Delta \tau \) or \(\delta \tau \) or n or \(\varepsilon \).

Proof

Firstly, for the coarse propagator (10), we compute

with \(\left\| F_q\right\| \le L\), which gives

Similarly, we have the same result for the fine coarse propagator (11)

Then by the induction, it is immediately derived that

On the other hand, it can be seen that \((1+C \varepsilon \delta \tau )^{\frac{\Delta \tau }{\delta \tau }}=1+(C \varepsilon \delta \tau )\frac{\Delta \tau }{\delta \tau }+\mathcal {O}(\Delta \tau )\le C\). Thus \(\left| \mathcal {F}^{\tau _n+\Delta \tau }_{\tau _n}([q;w])-\mathcal {F}^{\tau _n+\Delta \tau }_{\tau _n}([\tilde{q};\tilde{w}])\right| \le C\left| [q;w]-[\tilde{q};\tilde{w}]\right| \). Letting \([\tilde{q};\tilde{w}]=[0;0]\) in this result and (62) yields the boundedness

For the exact solution of (48), it is assumed that there exists a propagator \(\mathcal {E}\) such that \([q(\tau );w(\tau )]=\mathcal {E}_{0}^{\tau }[q(0);w(0)]\). Moreover, based on (50), there exists a constant \(C > 0\), such that

Define

Using the same argument as stated in the part of local truncation errors, it is obtained immediately that there exists a constant \(C > 0\), such that,

Letting \([\tilde{q};\tilde{w}]=[0;0]\) gives

Now we are in a position to prove the statement (61) by induction over \(j \ge 0\). In the light of the result (56) of M1, this statement is obvious for \(j = 0\). In what follows, it is assumed that (61) is true for j and we will prove it for \(j + 1\). From the definition of the method, it follows that

In order to prove the result, we split this form as follows

By triangular inequality and the results (62)-(65) stated above, it is obtained that

The boundedness of the exact solution as well as the induction hypothesis (61) allow to get

As long as \(\varepsilon ( \Delta \tau + \varepsilon ^{j} \Delta \tau ^{j+1} + \delta \tau )(1+\left| [q_0; w_0]\right| )\le 1,\) we have

from which we get (61) for \(j + 1\) from the discrete Gronwall lemma. \(\square \)

6.1.4 Proof of the Results for the Methods Applied to (1)

By considering the grids in the t variable and \(\tau \) variable, it is obtained that for the original system (1) and the re-scaled system (48), \(x(t_n)=q(\tau _n )\) and \(v(t_n)=w(\tau _n )\). Moreover, by comparing (9) with (49), we know that the numerical solution \(x_{n}, v_{n}\) of (9) is identical to \(q_{n}, w_{n}\) of (49). Therefore, the result (55) yields the uniform error bound in x given in (16) and also shows the non-uniform error in v of (16). The results (15a) of M1 and (15b) of PM are direct results of (56) and (61), respectively.

6.2 Proof for SM1-SM3

For SM1-SM3, the above proof cannot be applied since their local truncation errors will lose a factor of \(\varepsilon \) in (52) and (53). This motivates us to consider modulated Fourier expansions (see, e.g. [19, 22, 24, 25, 40]) for analysis in this part. The proof will be briefly shown for SM2 and it can be modified for the other two methods easily. Since the result is given under a lower bound on the stepsize, here the truncation error of modulated Fourier expansion is measured under h.

6.2.1 Decomposition of the Numerical Solution

Now we turn back to the SM2 given in (31) and consider its modulated Fourier expansion (33). In order to derive the convergence, we need to explicitly present the results of \(\tilde{\zeta }_{k}\) and \(\tilde{\eta }_{k}\) with \(\left| k\right| \le 1\). In the light of (39) and the properties of \(\hat{\mathcal {L}}(hD)\), we obtain

Then the following results

are easily arrived by considering (42) as well as the property of \(\mathcal {L}(hD)\).

6.2.2 Decomposition of the Exact Solution

Following the result given in [24], the exact solution of (20) for \(t=nh\) admits the following expansion

where the defects are bounded by \( \tilde{d}_{\tilde{x}}(t)=\mathcal {O}(\varepsilon ^2),\ \tilde{d}_{\tilde{v}}(t)=\mathcal {O}( \varepsilon ).\) The functions \(\tilde{\mu }_k\) with \(\left| k\right| \le 1\) are given by

and the functions \(\tilde{\nu }_k\) with \(\left| k\right| \le 1\) are

The initial values are the same as those of the numerical solutions.

6.2.3 Proof of the Convergence

Looking closely to the Eqs. (69) and (66), which determine the modulated Fourier expansion coefficients, it is obtained that \(\tilde{x}^{*}(t)-\tilde{x}_h(t)=\mathcal {O}(h^2).\) Similarly, with (70) and (67), one has \(\tilde{v}^{*}(t)-\tilde{v}_h(t)=\mathcal {O}(h^2).\) According to the above results and the defects of modulated Fourier expansions, we have the following diagram:

The error bounds

are immediately obtained on the basis of this diagram. This obviously yields

and the proof is complete.

7 Conclusions

Structure-preserving algorithms constitute an interesting and important class of numerical methods. Furthermore, algorithms with uniformly errors of highly oscillatory systems have received a great deal of attention. In this paper, we have formulated and analysed some structure-preserving algorithms with uniform error bound for solving nonlinear highly oscillatory Hamiltonian systems. Two kinds of algorithms with uniform error bound in x were given to preserve the symplecticity and energy, respectively. Moreover, some methods with uniform error in both x and v were derived. Long term energy conservation of symplectic methods were also discussed. All the theoretical results were supported by two numerical experiments and were proved in detail.

Last but not least, it is noted that all the algorithms and analysis are also suitable to the non-highly oscillatory system (1) with \(\varepsilon =1\). The algorithms are also applicable to the strongly damped Helmholtz-Duffing oscillator, strongly damped wave equation, eardrum oscillations, elasto-magnetic suspensions, and other physical phenomena [13, 33, 39]. Meanwhile, there are some issues brought by this paper which can be researched further. For the system (1) (not two dimensional) with a matrix \(\tilde{B}(x)\) depending on x, how to get uniformly accurate structure-preserving algorithms? This point is challenging and will be considered in future. Another issue for future exploration is the extension and application of the methods presented in this paper to the Vlasov equations under strong magnetic field [5, 6, 11, 12].

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

PM2 and SE also behave very badly and we omit their numerical results for brevity.

References

Boris, J.P.: Relativistic plasma simulation-optimization of a hybrid code. In: Proceeding of Fourth Conference on Numerical Simulations of Plasmas, pp. 3–67 (1970)

Brugnano, L., Iavernaro, F., Zhang, R.: Arbitrarily high-order energy-preserving methods for simulating the gyrocenter dynamics of charged particles. J. Comput. Appl. Math. 380, 112994 (2020)

Brugnano, L., Montijano, J.I., Rández, L.: High-order energy-conserving line integral methods for charged particle dynamics. J. Comput. Phys. 396, 209–227 (2019)

Celledoni, E., Grimm, V., McLachlan, R.I., McLaren, D.I., O’Neale, D., Owren, B., Quispel, G.R.W.: Preserving energy resp. dissipation in numerical PDEs using the Average Vector Field method. J. Comput. Phys. 231, 6770–6789 (2012)

Chartier, Ph., Crouseilles, N., Lemou, M., Méhats, F., Zhao, X.: Uniformly accurate methods for Vlasov equations with non-homogeneous strong magnetic field. Math. Comp. 88, 2697–2736 (2019)

Chartier, Ph., Crouseilles, N., Lemou, M., Méhats, F., Zhao, X.: Uniformly accurate methods for three dimensional Vlasov equations under strong magnetic field with varying direction. SIAM J. Sci. Compt. 42, B520–B547 (2020)

Chartier, Ph., Lemou, M., Méhats, F., Vilmart, G.: A new class of uniformly accurate methods for highly oscillatory evolution equations. Found. Comput. Math. 20, 1–33 (2020)

Chartier, Ph., Lemou, M., Méhats, F., Zhao, X.: Derivative-free high-order uniformly accurate schemes for highly-oscillatory systems. IMA J. Numer. Anal. 42, 1623–1644 (2022)

Chartier, Ph., Méhats, F., Thalhammer, M., Zhang, Y.: Improved error estimates for splitting methods applied to highly-oscillatory nonlinear Schrödinger equations. Math. Comp. 85, 2863–2885 (2016)

Cohen, D., Hairer, E., Lubich, Ch.: Modulated Fourier expansions of highly oscillatory differential equations. Found. Comput. Math. 3, 327–345 (2003)

Crouseilles, N., Hirstoaga, S., Zhao, X.: Multiscale Particle-in-Cell methods and comparisons for the long time two-dimensional Vlasov-Poisson equation with strong magnetic field. Comput. Phys. Comm. 222, 136–151 (2018)

Crouseilles, N., Lemou, M., Méhats, F., Zhao, X.: Uniformly accurate Particle-In-Cell method for the long time solution of the two-dimensional Vlasov-Poisson equation with uniform strong magnetic field. J. Comput. Phys. 346, 172–190 (2017)

Elías-Zúñiga, A.: Analytical solution of the damped Helmholtz-Duffing equation. Appl. Math. Lett. 25, 2349–2353 (2012)

Feng, K., Qin, M.: Symplectic Geometric algorithms for Hamiltonian systems. Springer-Verlag, Berlin, Heidelberg (2010)

Filbet, F., Rodrigues, M.: Asymptotically stable particle-in-cell methods for the Vlasov-Poisson system with a strong external magnetic field. SIAM J. Numer. Anal. 54, 1120–1146 (2016)

Filbet, F., Rodrigues, M.: Asymptotically preserving particle-in-cell methods for inhomogeneous strongly magnetized plasmas. SIAM J. Numer. Anal. 55, 2416–2443 (2017)

Gauckler, L., Hairer, E., Lubich, CH.: Dynamics, numerical analysis, and some geometry. In: Proceedings of the International Congress of Mathematicians-Rio de Janeiro 2018. Plenary lectures,I, 453–485, World Sci. Publ., Hackensack, NJ (2018)

Grigori, L., Hirstoaga, S.A., Nguyen, V., Salomon, J.: Reduced model-based parareal simulations of oscillatory singularly perturbed ordinary differential equations. J. Comput. Phys. 436, 110282 (2021)

Hairer, E., Lubich, Ch.: Long-time energy conservation of numerical methods for oscillatory differential equations. SIAM J. Numer. Anal. 38, 414–441 (2000)

Hairer, E., Lubich, Ch.: Energy behaviour of the Boris method for charged-particle dynamics. BIT 58, 969–979 (2018)

Hairer, E., Lubich, Ch.: Symmetric multistep methods for charged-particle dynamics. SMAI J. Comput. Math. 3, 205–218 (2017)

Hairer, E., Lubich, Ch.: Long-term analysis of a variational integrator for charged-particle dynamics in a strong magnetic field. Numer. Math. 144, 699–728 (2020)

Hairer, E., Lubich, Ch., Shi, Y.: Large-stepsize integrators for charged-particle dynamics over multiple time scales. Numer. Math. 151, 659–691 (2022)

Hairer, E., Lubich, Ch., Wang, B.: A filtered Boris algorithm for charged-particle dynamics in a strong magnetic field. Numer. Math. 144, 787–809 (2020)

Hairer, E., Lubich, Ch., Wanner, G.: Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations, 2nd edn. Springer-Verlag, Berlin, Heidelberg (2006)

He, Y., Zhou, Z., Sun, Y., Liu, J., Qin, H.: Explicit K-symplectic algorithms for charged particle dynamics. Phys. Lett. A 381, 568–573 (2017)

He, Y., Sun, Y., Liu, J., Qin, H.: Volume-preserving algorithms for charged particle dynamics. J. Comput. Phys. 281, 135–147 (2015)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Iserles, A.: On the global error of discretization methods for highly-oscillatory ordinary differential equations. BIT 42, 561–599 (2002)

Iserles, A., Nørsett, S.P.: From high oscillation to rapid approximation III: Multivariate expansions. IMA J. Num. Anal. 29, 882–916 (2009)

Lions, J.-L., Maday, Y., Turinici, G.: A parareal in time discretization of PDE’s, C. R. Acad. Sci. Ser. I Math. 332, 661–668 (2001)

Mocquard, Y., Navaro, P., Crouseilles, N.: HOODESolver, jl: a Julia package for highly oscillatory problems. J. Open Source Softw. 61, 3077 (2021)

Pata, V., Squassina, M.: On the strongly damped wave equation. Commun. Math. Phys. 253, 511–533 (2005)

Qin, H., Zhang, S., Xiao, J., Liu, J., Sun, Y., Tang, W.M.: Why is Boris algorithm so good? Phys. Plasmas 20, 084503 (2013)

Ricketson, L.F., Chacón, L.: An energy-conserving and asymptotic-preserving charged-particle orbit implicit time integrator for arbitrary electromagnetic fields. J. Comput. Phys. 418, 109639 (2020)

Sanz-Serna, J.M.: Mollified impulse methods for highly-oscillatory differential equations. SIAM J. Numer. Anal. 46, 1040–1059 (2008)

Sanz-Serna, J.M.: Symplectic Runge-Kutta schemes for adjoint equations, automatic differentiation, optimal control and more. SIAM Rev. 58, 3–33 (2016)

Tao, M.: Explicit high-order symplectic integrators for charged particles in general electromagnetic fields. J. Comput. Phys. 327, 245–251 (2016)

Thomee, V., Wahlbin, L.B.: Maximum-norm estimates for finite-element methods for a strongly damped wave equation. BIT 44, 165–179 (2004)

Wang, B.: Exponential energy-preserving methods for charged-particle dynamics in a strong and constant magnetic field. J. Comput. Appl. Math. 387, 112617 (2021)

Wang, B., Iserles, A., Wu, X.: Arbitrary-order trigonometric Fourier collocation methods for multi-frequency oscillatory systems. Found. Comput. Math. 16, 151–181 (2016)

Wang, B., Zhao, X.: Error estimates of some splitting schemes for charged-particle dynamics under strong magnetic field. SIAM J. Numer. Anal. 59, 2075–2105 (2021)

B. Wang, X. Zhao, Geometric two-scale integrators for highly oscillatory system: uniform accuracy and near conservations, SIAM J. Numer. Anal., Accepted for publication (2023)

Wu, X., Wang, B.: Geometric Integrators for Differential Equations with Highly Oscillatory Solutions. Springer, Singapore (2021)

Acknowledgements

The authors sincerely thank the two anonymous reviewers for the very valuable comments and helpful suggestions. This work was supported by NSFC (12271426) and Key Research and Development Projects of Shaanxi Province (2023-YBSF-399). The first author is grateful to Christian Lubich for his valuable comments on the first version of Theorem 3.3 as well as its proof, which was done in part at UNIVERSITAT TÜBINGEN when he worked there as a postdoctoral researcher (2017-2019, supported by the Alexander von Humboldt Foundation).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, B., Jiang, Y. Structure-Preserving Algorithms with Uniform Error Bound and Long-time Energy Conservation for Highly Oscillatory Hamiltonian Systems. J Sci Comput 95, 66 (2023). https://doi.org/10.1007/s10915-023-02178-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-023-02178-6

Keywords

- Nonlinear Hamiltonian systems

- Highly oscillatory systems

- Symplectic algorithms

- Energy-preserving algorithms

- Uniform error bound

- Long-time conservation