Abstract

The low-rank approximation of a quaternion matrix has attracted growing attention in many applications, including color image processing and signal processing. In this paper, based on quaternion normal distribution random sampling, we propose a randomized quaternion QLP decomposition algorithm for computing a low-rank approximation to a quaternion data matrix. For the theoretical analysis, we first present convergence results for the quaternion QLP decomposition, which provide slightly tighter upper bounds than the existing ones for the real QLP decomposition. Then, for the randomized quaternion QLP decomposition, we establish matrix approximation error and singular value approximation error analyses, which show that the proposed randomized algorithm can track the singular values of the quaternion data matrix with high probability. Finally, we present some numerical examples to illustrate the effectiveness and reliability of the proposed algorithm.



1 Introduction

Low-rank matrix approximation has been widely used in scientific computing and data science, including image processing [1, 2], high-dimensional data analysis [3, 4], face recognition [5], and structured matrix approximation [6, 7]. In theory, the rank-k truncated singular value decomposition (SVD) provides the optimal rank-k approximation in both the matrix spectral norm and the Frobenius norm [8, 9].

In recent years, randomized algorithms designed for high-performance computing have been developed for computing low-rank approximations of real or complex data matrices [3, 10,11,12,13]. For an input matrix A, the goal of a randomized algorithm is to find a low-rank approximation \(A\approx QB\), which is called a matrix sketch. The matrix sketch preserves only the important features of the data matrix A and filters out unnecessary information. A randomized algorithm consists of two stages: in the first stage, the original high-dimensional data matrix is projected onto a low-dimensional matrix via random matrices; in the second stage, a deterministic algorithm is applied to the low-dimensional projected matrix. Compared with deterministic algorithms (e.g., the SVD and the rank-revealing QR decomposition (RRQR)), randomized algorithms have the advantages of low complexity, fast running speed, and easy implementation. Recently, many matrix-decomposition based randomized algorithms have been proposed for low-rank matrix approximation, such as randomized SVD [3], randomized LU [14], randomized QR decomposition with column pivoting (QRCP) [15,16,17], and randomized QLP [18].

The concept of quaternions was introduced by Hamilton in 1843 [19]. Quaternions and quaternion matrices have been used in various applications such as quantum mechanics [20] and image processing [21,22,23]. The quaternion toolbox for MATLAB (QTFM), developed by Sangwine and Le Bihan [24], supports computations with quaternion matrices (e.g., quaternion QR factorization [25] and quaternion SVD (QSVD) [26]). By exploiting the real counterpart of a quaternion matrix under different real transformations, various structure-preserving algorithms have been proposed for quaternion matrix decompositions such as quaternion LU factorization [27, 28], quaternion QR decomposition [29, 30], QSVD [29, 31], and quaternion Cholesky decomposition [32].

Low-rank quaternion matrix approximation has drawn growing attention in many applications, including color image processing [33] and signal processing [34]. The truncated QSVD provides the optimal low-rank approximation of a quaternion matrix in the least-squares sense under the Frobenius norm [8]. However, computing the QSVD of a large-scale quaternion data matrix is expensive. In [35], Liu et al. presented a randomized QSVD algorithm for computing a low-rank approximation of a large-scale quaternion matrix, together with a matrix approximation error analysis. Compared with the QSVD, the randomized QSVD can improve computational efficiency significantly. To the best of our knowledge, this is the only work on randomized algorithms for quaternion matrices so far.

Although a quaternion-matrix based method has been shown to be more effective [22] than the popular tensor-based algorithm in color image processing, randomized algorithms for quaternion matrices have not received as much attention as randomized algorithms for real matrices. To enrich the applications and efficient numerical algorithms of quaternion matrices, in this paper we propose a randomized quaternion QLP decomposition algorithm for computing a low-rank approximation to a quaternion data matrix. This work is motivated by two papers, by Liu et al. [35] and by Stewart [36]. The QLP decomposition was introduced by Stewart [36] as an approximate SVD. It is essentially a two-step QRCP: for a real matrix A, we obtain an upper triangular factor \(R_0\) from the QRCP \(AP_{0}=Q_{0}R_{0}\) of A, and then a lower triangular matrix L from the QRCP \(R_{0}^{T}P_{1}=Q_{1}L^{T}\) of \(R_0^T\). This results in \(A=QLP^T\), where \(Q=Q_0P_1\) and \(P=P_0Q_1\), with \(Q_0\) and \(Q_1\) orthogonal and \(P_0\) and \(P_1\) permutation matrices. As noted in [36], the diagonal entries of L track the singular values of A with considerable fidelity. Moreover, the QLP decomposition costs no more than twice as much as a single QRCP.
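For a real matrix, the two-step construction just described can be sketched with SciPy's column-pivoted QR (`scipy.linalg.qr` with `pivoting=True`, which returns the permutation as an index vector). This is a minimal illustration under our own assumptions (economy-size factors, \(m\ge n\), a synthetic test matrix with prescribed singular values, and the hypothetical helper name `qlp`), not the implementation of [36]. It prints the first few \(|r_{jj}|\) and \(|l_{jj}|\) next to the true singular values so that the tracking behaviour can be inspected.

```python
import numpy as np
from scipy.linalg import qr


def qlp(A):
    """Economy-size real QLP, A = Q @ L @ P.T, from two column-pivoted QRs.

    Sketch only: assumes m >= n. Permutations are index vectors, A[:, p0] = Q0 @ R0.
    """
    Q0, R0, p0 = qr(A, mode="economic", pivoting=True)     # A P0 = Q0 R0
    Q1, Lt, p1 = qr(R0.T, mode="economic", pivoting=True)  # R0^T P1 = Q1 L^T
    L = Lt.T                                               # lower triangular
    Q = Q0[:, p1]                                          # Q = Q0 P1 (column reordering)
    P = np.empty_like(Q1)
    P[p0, :] = Q1                                          # P = P0 Q1 (row reordering)
    return Q, L, P


# Hypothetical test matrix with prescribed singular values sigma_j = 0.7^(j-1)
rng = np.random.default_rng(0)
m, n = 60, 40
sigma = 0.7 ** np.arange(n)
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = (U * sigma) @ V.T

Q, L, P = qlp(A)
_, R0, _ = qr(A, mode="economic", pivoting=True)
print("sigma_j:", np.round(sigma[:5], 4))
print("|r_jj| :", np.round(np.abs(np.diag(R0))[:5], 4))
print("|l_jj| :", np.round(np.abs(np.diag(L))[:5], 4))
```

Keeping the permutations as index vectors avoids forming \(P_0\) and \(P_1\) explicitly: \(Q=Q_0P_1\) is a column reordering of \(Q_0\), and \(P=P_0Q_1\) is a row reordering of \(Q_1\).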

In this paper, we directly apply the QLP decomposition to a quaternion data matrix to obtain a quaternion QLP (QQLP) decomposition. A good low-rank approximation of a quaternion data matrix can then be obtained by truncating the QQLP decomposition. However, computing the QQLP decomposition of a large quaternion data matrix may be very costly. To improve efficiency, we develop a randomized QQLP algorithm for computing a low-rank approximation. Based on quaternion probability theory and techniques from numerical linear algebra, our main contributions are as follows:

- The convergence properties of QQLP. In [37], Huckaby and Chan presented convergence results for the real QLP decomposition. Using new techniques, we derive convergence results for the QQLP decomposition. These results show that the singular values of the diagonal blocks of L approximate those of A with an error that is quadratic in the gap ratio of neighboring singular values. When the quaternion data matrix reduces to a real matrix, our results give slightly tighter error bounds than those in [37].

- Error analysis of randomized QQLP. In [35], the authors considered only the matrix approximation error of the randomized quaternion SVD. Our error analysis of randomized QQLP covers both the matrix approximation error and the singular value approximation error. The results show that the randomized QQLP algorithm computes low-rank matrix approximations and approximate singular values that are within constant factors of optimal, where the constants depend on the dimensions of the data matrix and on the gap ratio of the singular values.

The rest of this paper is organized as follows. In Sect. 2, we review some preliminary results on quaternions and quaternion matrices. In Sect. 3, a randomized quaternion QLP decomposition algorithm is proposed for computing a low-rank approximation to a quaternion data matrix. In Sect. 4, we study the convergence of QQLP and present both the matrix approximation error analysis and the singular value approximation error analysis of randomized QQLP. In Sect. 5, we report some numerical experiments to demonstrate the effectiveness of the proposed algorithm. Finally, some concluding remarks are presented in Sect. 6.

2 Preliminaries

In this section, we review some preliminary results on quaternions and quaternion matrices.

2.1 Notation

Let \({\mathbb {R}}\) and \({\mathbb {Q}}\) be the set of all real numbers and the set of all quaternions, respectively. Let \({\mathbb {R}}^{m\times n}\) and \({\mathbb {Q}}^{m\times n}\) be the set of all \(m\times n\) real matrices and the set of all \(m\times n\) quaternion matrices, respectively. Denote by \(\Vert \cdot \Vert _2\) and \(\Vert \cdot \Vert _F\) the matrix spectral norm and the Frobenius norm, respectively. The superscripts “\(\cdot ^{T}\)”, “\(\cdot ^*\)”, and “\(\cdot ^{\dag }\)” denote the transpose, the conjugate transpose, and the Moore-Penrose inverse of a matrix, respectively. Let \(\sigma _{\max }(A)=\sigma _{1}(A)\ge \sigma _{2}(A)\ge \cdots \ge \sigma _{\ell }(A)=\sigma _{\min }(A)\ge 0\) denote the singular values of an \(m\times n\) matrix A, where \(\ell =\min \{m,n\}\). Denote by \({\mathcal {R}}(A)\) the subspace spanned by the column vectors of a matrix A. Let \(I_n\) be the identity matrix of order n. The symbol “\(\otimes \)” denotes the Kronecker product. Finally, let \({\mathbb {P}}(\cdot )\) and \({\mathbb {E}}(\cdot )\) denote the probability of a random event and the expectation of a random variable, respectively.

2.2 Quaternion Matrix and its Properties

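A quaternion \(a\in {\mathbb {Q}}\) is defined by

\(a=a_0+a_1\mathbf {i}+a_2\mathbf {j}+a_3\mathbf {k},\)

where \(a_0\), \(a_1\), \(a_2\), \(a_3\in {{\mathbb {R}}}\) and the quaternion units \(\mathbf {i}\), \(\mathbf {j}\) and \(\mathbf {k}\) satisfy the following properties:

\(\mathbf {i}^2=\mathbf {j}^2=\mathbf {k}^2=\mathbf {i}\mathbf {j}\mathbf {k}=-1,\quad \mathbf {i}\mathbf {j}=-\mathbf {j}\mathbf {i}=\mathbf {k},\quad \mathbf {j}\mathbf {k}=-\mathbf {k}\mathbf {j}=\mathbf {i},\quad \mathbf {k}\mathbf {i}=-\mathbf {i}\mathbf {k}=\mathbf {j}.\)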

The quaternion a is called a pure quaternion if \(a_0=0\). The conjugate of a is given by

\(a^*=a_0-a_1\mathbf{i}-a_2\mathbf{j}-a_3\mathbf{k}.\)

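For two quaternions \(a=a_0+a_1\mathbf{i}+a_2\mathbf{j}+a_3\mathbf{k}\in {\mathbb {Q}}\) and \(b=b_0+b_1\mathbf{i}+b_2\mathbf{j}+b_3\mathbf{k}\in {\mathbb {Q}}\), their product (i.e., the Hamilton product) is given by

\(ab=(a_0b_0-a_1b_1-a_2b_2-a_3b_3)+(a_0b_1+a_1b_0+a_2b_3-a_3b_2)\mathbf{i}+(a_0b_2-a_1b_3+a_2b_0+a_3b_1)\mathbf{j}+(a_0b_3+a_1b_2-a_2b_1+a_3b_0)\mathbf{k}.\)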

The modulus of \(a=a_0+a_1\mathbf{i}+a_2\mathbf{j}+a_3\mathbf{k}\in {\mathbb {Q}}\) is defined by

\(|a|=\sqrt{a_0^2+a_1^2+a_2^2+a_3^2}.\)

It is worth noting that quaternion multiplication is associative but not commutative. Since every nonzero quaternion a has the inverse \(a^{-1}=a^*/|a|^2\), \({\mathbb {Q}}\) is a four-dimensional associative division algebra over \({{\mathbb {R}}}\).
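The multiplication rule above is easy to check numerically. The sketch below stores a quaternion as a length-4 NumPy array \((a_0,a_1,a_2,a_3)\); the helper names `hamilton`, `conj`, and `modulus` are our own illustrative choices, not functions from QTFM [24]. It confirms that \(\mathbf{i}\mathbf{j}=\mathbf{k}\) while \(\mathbf{j}\mathbf{i}=-\mathbf{k}\), and that associativity and \(|ab|=|a||b|\) hold.

```python
import numpy as np


def hamilton(a, b):
    """Hamilton product of quaternions stored as arrays (a0, a1, a2, a3) (our storage convention)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return np.array([
        a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
        a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
        a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
        a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0,
    ])


def conj(a):
    """Quaternion conjugate a0 - a1 i - a2 j - a3 k."""
    return np.array([a[0], -a[1], -a[2], -a[3]])


def modulus(a):
    """|a| = sqrt(a0^2 + a1^2 + a2^2 + a3^2)."""
    return float(np.sqrt(np.sum(np.square(a))))


i, j, k = np.eye(4)[1], np.eye(4)[2], np.eye(4)[3]
ij = hamilton(i, j)   # should equal k
ji = hamilton(j, i)   # should equal -k: multiplication is not commutative

rng = np.random.default_rng(0)
a, b, c = rng.standard_normal((3, 4))
print("ij =", ij, " ji =", ji)
print("|ab| - |a||b| =", modulus(hamilton(a, b)) - modulus(a) * modulus(b))
```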

Let \(A=A_0+A_1{\mathbf {i}}+A_2{\mathbf {j}}+A_3{\mathbf {k}}\in {{\mathbb {Q}}}^{m\times n}\) be a quaternion matrix, where \(A_0\), \(A_1\), \(A_2\), \(A_3\in {\mathbb {R}}^{m\times n}\). Then the conjugate transpose of A is given by \(A^*=A_0^T-A_1^T{\mathbf {i}}-A_2^T{\mathbf {j}}-A_3^T{\mathbf {k}}\). The product of two quaternion matrices \(A=A_0+A_1{\mathbf {i}}+A_2{\mathbf {j}}+A_3{\mathbf {k}}\in {{\mathbb {Q}}}^{m\times s}\) and \(B=B_0+B_1{\mathbf {i}}+B_2{\mathbf {j}}+B_3{\mathbf {k}}\in {{\mathbb {Q}}}^{s\times n}\) is given by

\(AB=(A_0B_0-A_1B_1-A_2B_2-A_3B_3)+(A_0B_1+A_1B_0+A_2B_3-A_3B_2){\mathbf {i}}+(A_0B_2-A_1B_3+A_2B_0+A_3B_1){\mathbf {j}}+(A_0B_3+A_1B_2-A_2B_1+A_3B_0){\mathbf {k}}.\)

We recall the inner product and norms of quaternion vectors and matrices [38].

Definition 2.1

The inner product of two quaternion vectors \(\mathbf{x}=[\mathbf{x}_1,\ldots ,\mathbf{x}_n]^T\in {{\mathbb {Q}}}^n\) and \(\mathbf{y}=[\mathbf{y}_1,\ldots ,\mathbf{y}_n]^T\in {{\mathbb {Q}}}^n\) is defined by

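with the induced 2-norm

\(\Vert \mathbf{x}\Vert _2=\sqrt{\langle \mathbf{x},\mathbf{x}\rangle }=\Big (\sum _{i=1}^n|\mathbf{x}_i|^2\Big )^{1/2}.\)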

The inner product of two quaternion matrices \(A=[\mathbf{a}_{ij}]\in {{\mathbb {Q}}}^{m\times n}\) and \(B=[\mathbf{b}_{ij}]\in {{\mathbb {Q}}}^{m\times n}\) is defined by

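with the induced Frobenius norm

\(\Vert A\Vert _F=\sqrt{\langle A,A\rangle }=\Big (\sum _{i=1}^m\sum _{j=1}^n|\mathbf{a}_{ij}|^2\Big )^{1/2}.\)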

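In addition, the spectral norm of the quaternion matrix \(A=[\mathbf{a}_{ij}]\in {{\mathbb {Q}}}^{m\times n}\) is defined by

\(\Vert A\Vert _2=\max _{\mathbf{x}\in {{\mathbb {Q}}}^n,\,\Vert \mathbf{x}\Vert _2=1}\Vert A\mathbf{x}\Vert _2.\)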

From Definition 2.1, it is easy to check the following unitary invariance

\(\Vert Z_1AZ_2\Vert _2=\Vert A\Vert _2,\qquad \Vert Z_1AZ_2\Vert _F=\Vert A\Vert _F,\)

and the following inequalities

for all consistent quaternion matrices A and B and unitary quaternion matrices \(Z_1\) and \(Z_2\).

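Next, we recall some properties of the real counterpart of quaternion matrices [30, 39]. A real counterpart of \(A=A_0+A_1{\mathbf {i}}+A_2{\mathbf {j}}+A_3{\mathbf {k}}\in {{\mathbb {Q}}}^{m\times n}\) is given by [40]:

\(\Upsilon _A=\begin{bmatrix} A_0 & -A_1 & -A_2 & -A_3\\ A_1 & A_0 & -A_3 & A_2\\ A_2 & A_3 & A_0 & -A_1\\ A_3 & -A_2 & A_1 & A_0\end{bmatrix}\in {\mathbb {R}}^{4m\times 4n}.\)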

The matrix \(\Upsilon _A\) has a special algebraic symmetry structure that is preserved under the operations listed below.

Lemma 2.2

([40]). Let A, \(B\in {\mathbb {Q}}^{m\times n}\), \(C\in {\mathbb {Q}}^{n\times s}\), and \(\tau \in {\mathbb {R}}\). Then

(i) \(\Upsilon _{A+B}=\Upsilon _A+\Upsilon _B\), \(\Upsilon _{\tau A}=\tau \Upsilon _A\), \(\Upsilon _{AC}=\Upsilon _A\Upsilon _C\).

(ii) \(\Upsilon _{A^*}=\Upsilon _A^T\).

(iii) \(A\in {\mathbb {Q}}^{m\times m}\) is a unitary matrix if and only if \(\Upsilon _A\) is an orthogonal matrix.

(iv) \(\Vert A\Vert _F=\frac{1}{2}\Vert \Upsilon _A\Vert _F\) and \(\Vert A\Vert _2=\Vert \Upsilon _A\Vert _2\).
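Items (i), (ii), and (iv) of Lemma 2.2 can be checked numerically. In this sketch a quaternion matrix \(A=A_0+A_1\mathbf{i}+A_2\mathbf{j}+A_3\mathbf{k}\) is stored as a NumPy array of shape `(4, m, n)` (our assumption), and `upsilon` builds the \(4m\times 4n\) real counterpart with the block sign pattern implied by the matrices \(J_1,J_2,J_3\) in the proof of Lemma 4.12. The helper names `qmul`, `qct`, and `upsilon` are hypothetical; item (iii) is not checked because it would require constructing a unitary quaternion matrix.

```python
import numpy as np


def qmul(A, B):
    """Product of quaternion matrices stored as (4, m, s) and (4, s, n) arrays (assumed layout)."""
    a0, a1, a2, a3 = A
    b0, b1, b2, b3 = B
    return np.stack([
        a0 @ b0 - a1 @ b1 - a2 @ b2 - a3 @ b3,
        a0 @ b1 + a1 @ b0 + a2 @ b3 - a3 @ b2,
        a0 @ b2 - a1 @ b3 + a2 @ b0 + a3 @ b1,
        a0 @ b3 + a1 @ b2 - a2 @ b1 + a3 @ b0,
    ])


def qct(A):
    """Conjugate transpose A^* = A0^T - A1^T i - A2^T j - A3^T k."""
    return np.stack([A[0].T, -A[1].T, -A[2].T, -A[3].T])


def upsilon(A):
    """Real counterpart Upsilon_A of size 4m x 4n."""
    a0, a1, a2, a3 = A
    return np.block([
        [a0, -a1, -a2, -a3],
        [a1, a0, -a3, a2],
        [a2, a3, a0, -a1],
        [a3, -a2, a1, a0],
    ])


rng = np.random.default_rng(0)
A, B = rng.standard_normal((2, 4, 5, 3))   # two 5 x 3 quaternion matrices
C = rng.standard_normal((4, 3, 6))         # one 3 x 6 quaternion matrix
tau = 2.5

checks = {
    "(i) sum": np.allclose(upsilon(A + B), upsilon(A) + upsilon(B)),
    "(i) scaling": np.allclose(upsilon(tau * A), tau * upsilon(A)),
    "(i) product": np.allclose(upsilon(qmul(A, C)), upsilon(A) @ upsilon(C)),
    "(ii) conjugate transpose": np.allclose(upsilon(qct(A)), upsilon(A).T),
    # ||A||_F^2 is the sum of squares of all four real components
    "(iv) Frobenius": np.isclose(np.sqrt(np.sum(A ** 2)), 0.5 * np.linalg.norm(upsilon(A), "fro")),
}
print(checks)
```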

The following result on the QSVD follows from [41, Theorem 7.2].

Lemma 2.3

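([41]). Let \(A\in {\mathbb {Q}}^{m\times n}\). Then there exist two unitary quaternion matrices \(U_m\in {\mathbb {Q}}^{m\times m}\) and \(V_n\in {\mathbb {Q}}^{n\times n}\) such that

\(U_m^*AV_n=\Sigma ={\mathrm{diag}}(\sigma _1(A),\ldots ,\sigma _\ell (A))\in {\mathbb {R}}^{m\times n},\)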

where \(\sigma _{1}(A)\ge \sigma _{2}(A)\ge \cdots \ge \sigma _\ell (A)\ge 0\).

It is easy to see that \(\Vert A\Vert _2=\sigma _{\max }(A)\) for any quaternion matrix A. We note that fast structure-preserving algorithms exist for computing the QSVD [29, 31].
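Because each singular value of \(A\) appears in \(\Upsilon_A\) with multiplicity four (the same fact used in the proof of Lemma 4.2), the singular values of a small quaternion matrix can be read off from a real SVD of \(\Upsilon_A\). The sketch below does this with NumPy, storing a quaternion matrix as a `(4, m, n)` array of its real components (our assumption); the function name `quaternion_singular_values` is hypothetical. This is only a check: it works with a matrix that has 16 times as many entries and is no substitute for the structure-preserving QSVD algorithms of [29, 31].

```python
import numpy as np


def upsilon(A):
    """Real counterpart of a quaternion matrix stored as a (4, m, n) array (assumed layout)."""
    a0, a1, a2, a3 = A
    return np.block([
        [a0, -a1, -a2, -a3],
        [a1, a0, -a3, a2],
        [a2, a3, a0, -a1],
        [a3, -a2, a1, a0],
    ])


def quaternion_singular_values(A):
    """sigma_1(A) >= ... >= sigma_l(A), read off from the SVD of Upsilon_A."""
    s = np.linalg.svd(upsilon(A), compute_uv=False)   # 4*l values, descending
    groups = s.reshape(-1, 4)                          # each sigma_j(A) repeated four times
    assert np.allclose(groups, groups[:, :1]), "expected multiplicity four"
    return groups[:, 0]


rng = np.random.default_rng(1)
A = rng.standard_normal((4, 7, 5))                     # a 7 x 5 quaternion matrix
sigma = quaternion_singular_values(A)
print(sigma)
```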

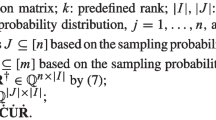

3 Randomized Quaternion QLP Decomposition

In this section, we first present the quaternion QLP decomposition. Then we propose a randomized quaternion QLP decomposition algorithm for computing a low-rank approximation of a large-scale quaternion data matrix.

3.1 Quaternion QLP Decomposition

As described in Sect. 1, the QLP decomposition of a real \(m\times n\) \((m\ge n)\) matrix A applies two consecutive QRCPs to A [36]: it computes the QRCP of A first and then applies the same procedure to the transpose of the resulting R-factor. The convergence results in [37] show that, under some conditions, the singular values of the diagonal blocks of L in the QLP decomposition track the singular values of A, with a relative error that is quadratic in the gap ratio.

We now present the quaternion QLP decomposition. Let \(A\in {\mathbb {Q}}^{m\times n}\) with \(m\ge n\). Then, by using the following two consecutive quaternion QRCPs (qrcpQ)

\(AP_{0}=Q_{0}R_{0},\qquad R_{0}^{*}P_{1}=Q_{1}L^{*},\)

we obtain the following quaternion QLP decomposition of A:

\(A=QLP^{*},\qquad Q=Q_{0}P_{1},\quad P=P_{0}Q_{1},\)

where \(P_{0}\in {{\mathbb {Q}}}^{n\times n}\) and \(P_{1}\in {{\mathbb {Q}}}^{m\times m}\) are two permutation matrices, \(Q_{0}\in {{\mathbb {Q}}}^{m\times m}\) and \(Q_{1}\in {{\mathbb {Q}}}^{n\times n}\) are two unitary matrices, \(R_0\in {{\mathbb {Q}}}^{m\times n}\) is an upper triangular matrix, and \(L\in {{\mathbb {Q}}}^{m\times n}\) is a lower triangular matrix.

Based on the above descriptions, the quaternion QLP decomposition of a quaternion matrix can be described as Algorithm 3.1.

One may use the toolbox QTFM to implement Algorithm 3.1 directly in quaternion arithmetic. Alternatively, Algorithm 3.1 can be carried out in real arithmetic via a real structure-preserving quaternion QR algorithm (e.g., [29, 30]).
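To make Algorithm 3.1 concrete, the following NumPy sketch computes an economy-size QQLP decomposition in quaternion arithmetic, storing each quaternion matrix as a `(4, m, n)` array of its real components (our assumption). For brevity, each QRCP is carried out by modified Gram-Schmidt with column pivoting (largest remaining column norm first) rather than by the Householder-based or real structure-preserving routines of QTFM [24] or [29, 30]. The function names `qrcp_mgs` and `qqlp`, the full-column-rank assumption, and the graded test matrix are all hypothetical; permutations are kept as index vectors.

```python
import numpy as np


def qmul(A, B):
    """Product of quaternion matrices stored as (4, m, s) and (4, s, n) arrays (assumed layout)."""
    a0, a1, a2, a3 = A
    b0, b1, b2, b3 = B
    return np.stack([a0 @ b0 - a1 @ b1 - a2 @ b2 - a3 @ b3,
                     a0 @ b1 + a1 @ b0 + a2 @ b3 - a3 @ b2,
                     a0 @ b2 - a1 @ b3 + a2 @ b0 + a3 @ b1,
                     a0 @ b3 + a1 @ b2 - a2 @ b1 + a3 @ b0])


def qct(A):
    """Conjugate transpose of a (4, m, n) quaternion array."""
    return np.stack([A[0].T, -A[1].T, -A[2].T, -A[3].T])


def qrcp_mgs(A):
    """Economy quaternion QRCP A[:, perm] = Q R via pivoted modified Gram-Schmidt.

    Sketch only: assumes m >= n and full column rank. R is upper triangular
    with a real, positive diagonal.
    """
    _, m, n = A.shape
    W = np.array(A, dtype=float)              # working copy of the columns
    Q = np.zeros((4, m, n))
    R = np.zeros((4, n, n))
    perm = np.arange(n)
    for j in range(n):
        # pivot: trailing column with the largest quaternion 2-norm
        p = j + int(np.argmax(np.sum(W[:, :, j:] ** 2, axis=(0, 1))))
        W[:, :, [j, p]] = W[:, :, [p, j]]
        R[:, :, [j, p]] = R[:, :, [p, j]]
        perm[[j, p]] = perm[[p, j]]
        R[0, j, j] = np.sqrt(np.sum(W[:, :, j] ** 2))
        Q[:, :, j] = W[:, :, j] / R[0, j, j]
        q = Q[:, :, j:j + 1]
        coeff = qmul(qct(q), W[:, :, j + 1:])   # q^* w for every trailing column w
        R[:, j:j + 1, j + 1:] = coeff
        W[:, :, j + 1:] -= qmul(q, coeff)       # w <- w - q (q^* w)
    return Q, R, perm


def qqlp(A):
    """Economy-size quaternion QLP A = Q L P^* from two consecutive QRCPs."""
    Q0, R0, p0 = qrcp_mgs(A)                  # A P0 = Q0 R0
    Q1, Lh, p1 = qrcp_mgs(qct(R0))            # R0^* P1 = Q1 L^*
    P = np.zeros_like(Q1)
    P[:, p0, :] = Q1                          # P = P0 Q1
    return Q0[:, :, p1], qct(Lh), P           # Q = Q0 P1, L = (L^*)^*


rng = np.random.default_rng(2)
m, n = 30, 12
A = rng.standard_normal((4, m, n)) * 0.6 ** np.arange(n)   # hypothetical graded columns
Q, L, P = qqlp(A)
print("|l_jj|:", np.round(L[0].diagonal()[:5], 4))
```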

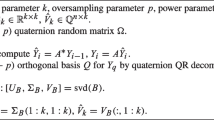

3.2 Randomized Quaternion QLP Decomposition

In this subsection, we propose a randomized quaternion QLP decomposition algorithm for computing a low-rank approximation of a large-scale quaternion data matrix.

We first recall the randomized QLP decomposition of a real matrix in [18]. Let \(A\in {{\mathbb {R}}}^{m\times n}\) be a given data matrix. Then the randomized QLP decomposition of A can be divided into two stages. The first stage aims to construct an approximate basis for the range of A or \(A^T\), where the basis matrix V has only a few orthonormal columns and \(A\approx VV^TA\) or \(A^T\approx VV^TA^T\). The second stage is to compute an approximated rank-k QLP decomposition of A by using V. Specifically, we first form the matrix product \(Y=A\Omega \) or \(Y=\Omega A\) by applying random sampling to A via a random test matrix \(\Omega \) and then we construct an orthonormal basis for the range of Y or \(Y^T\), e.g., using the QR factorization \(Y=QR\) or \(Y^T=QR\). Afterwards we form the matrix \(B=V^TA\) or \(B=AV\) and compute the QLP decomposition of B: \(B={\widehat{Q}}{\widehat{L}}{\widehat{P}}^T\). The final approximate randomized QLP decomposition of A is given by \(A\approx (V{\widehat{Q}}){\widehat{L}}{\widehat{P}}^T\) or \(A\approx {\widehat{Q}}{\widehat{L}}(V{\widehat{P}})^T\).
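The row-sampling variant just recalled can be sketched for a real matrix as follows. This is a minimal illustration, not the code of [18]: the helper names `qlp` and `rqlp`, the Gaussian test matrix, the absence of re-orthogonalization inside the power iterations (acceptable only for small q), and the synthetic test matrix are our assumptions. Since the output has rank at most \(l\), its spectral-norm error can never be below \(\sigma_{l+1}(A)\).

```python
import numpy as np
from scipy.linalg import qr


def qlp(B):
    """Economy-size real QLP B = Q @ L @ P.T via two column-pivoted QRs (rows >= cols)."""
    Q0, R0, p0 = qr(B, mode="economic", pivoting=True)
    Q1, Lt, p1 = qr(R0.T, mode="economic", pivoting=True)
    P = np.empty_like(Q1)
    P[p0, :] = Q1
    return Q0[:, p1], Lt.T, P


def rqlp(A, l, q=1, seed=None):
    """Randomized QLP with row sampling (sketch): A ~ Qh @ Lh @ (V @ Ph).T."""
    rng = np.random.default_rng(seed)
    Y = rng.standard_normal((l, A.shape[0])) @ A   # Y = Omega A, an l x n sketch
    for _ in range(q):
        Y = (Y @ A.T) @ A                          # Y <- Y A^T A, no re-orthogonalization
    V, _ = np.linalg.qr(Y.T)                       # orthonormal basis of range(Y^T), n x l
    Qh, Lh, Ph = qlp(A @ V)                        # QLP of B = A V (m x l)
    return Qh, Lh, V @ Ph


# Hypothetical test matrix with singular values 0.8^(j-1)
rng = np.random.default_rng(0)
m, n, k, p = 300, 200, 10, 5
sigma = 0.8 ** np.arange(n)
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = (U * sigma) @ W.T

Qh, Lh, Pt = rqlp(A, k + p, q=1, seed=1)
err = np.linalg.norm(A - Qh @ Lh @ Pt.T, 2)
print("error:", err, " sigma_{k+1}:", sigma[k], " sigma_{l+1}:", sigma[k + p])
print("|l_jj|:", np.round(np.abs(np.diag(Lh))[:k], 4))
print("sigma_j:", np.round(sigma[:k], 4))
```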

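As in [35], we use the quaternion random test matrix defined by

\(\Omega =\Omega _0+\Omega _1{\mathbf {i}}+\Omega _2{\mathbf {j}}+\Omega _3{\mathbf {k}},\qquad (3.1)\)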

where \(\Omega _0,\Omega _1,\Omega _2,\Omega _3\in {{\mathbb {R}}}^{m\times n}\) are independent real standard Gaussian random matrices. With this test matrix, we develop a randomized QLP decomposition algorithm for computing a low-rank approximation of a quaternion data matrix, described as Algorithm 3.2 in MATLAB notation. The power iterations \(Y=\Omega A(A^*A)^q\) in Steps 3–6 ensure a good low-rank basis of \({{{\mathcal {R}}}}(A^*\Omega ^*)\). In practice, \(q=1\) or \(q=2\) is usually sufficient.
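The effect of the power iterations \(Y=\Omega A(A^*A)^q\) can be explored on a small example. The sketch stores quaternion matrices as `(4, m, n)` arrays of real components (our assumption), draws \(\Omega\) as in (3.1), and measures \(\Vert A-AP_Y\Vert_2\), where \(P_Y\) is the orthogonal projector onto \({\mathcal R}(Y^*)\). By Lemma 2.2, \(\Upsilon_{P_Y}\) is the real orthogonal projector onto \({\mathcal R}(\Upsilon_{Y^*})\), so this error equals \(\Vert\Upsilon_A-\Upsilon_A\widehat{Q}\widehat{Q}^T\Vert_2\) for any real orthonormal basis \(\widehat{Q}\) of that range, and no quaternion QR is needed. The low-rank test matrix \(G_1\,\mathrm{diag}(d)\,G_2\), the sizes, and the helper names are hypothetical.

```python
import numpy as np


def qmul(A, B):
    """Product of quaternion matrices stored as (4, m, s) and (4, s, n) arrays (assumed layout)."""
    a0, a1, a2, a3 = A
    b0, b1, b2, b3 = B
    return np.stack([a0 @ b0 - a1 @ b1 - a2 @ b2 - a3 @ b3,
                     a0 @ b1 + a1 @ b0 + a2 @ b3 - a3 @ b2,
                     a0 @ b2 - a1 @ b3 + a2 @ b0 + a3 @ b1,
                     a0 @ b3 + a1 @ b2 - a2 @ b1 + a3 @ b0])


def qct(A):
    """Conjugate transpose of a (4, m, n) quaternion array."""
    return np.stack([A[0].T, -A[1].T, -A[2].T, -A[3].T])


def upsilon(A):
    """Real counterpart Upsilon_A of size 4m x 4n."""
    a0, a1, a2, a3 = A
    return np.block([[a0, -a1, -a2, -a3], [a1, a0, -a3, a2],
                     [a2, a3, a0, -a1], [a3, -a2, a1, a0]])


def projection_error(A, Y):
    """||A - A P_Y||_2, with P_Y the orthogonal projector onto R(Y^*), computed via Upsilon."""
    Qr, _ = np.linalg.qr(upsilon(qct(Y)))       # real orthonormal basis of R(Upsilon_{Y^*})
    UA = upsilon(A)
    return np.linalg.norm(UA - (UA @ Qr) @ Qr.T, 2)


rng = np.random.default_rng(0)
m, n, r, l = 80, 60, 40, 10
d = 1.0 / (1.0 + np.arange(r))                  # slowly decaying weights (hypothetical)
A = qmul(rng.standard_normal((4, m, r)) * d, rng.standard_normal((4, r, n)))

Omega = rng.standard_normal((4, l, m))          # Omega0 + Omega1 i + Omega2 j + Omega3 k
Y = qmul(Omega, A)                              # q = 0: Y = Omega A
errors = []
for q in range(3):
    errors.append(projection_error(A, Y))
    Y = qmul(qmul(Y, qct(A)), A)                # Y <- Y A^* A
sigma = np.linalg.svd(upsilon(A), compute_uv=False)[::4]
print("sigma_{l+1} =", sigma[l])
print("errors for q = 0, 1, 2:", np.round(errors, 4))
```

The first printed value is the optimal rank-\(l\) error; the projection errors should approach it as q grows when the singular values decay slowly.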

Remark 3.1

In Algorithm 3.2, row sampling gives an approximate basis \(V\in {{\mathbb {Q}}}^{n\times l}\) for the range of \(A^*\), so \(B=AV\) is an \(m\times l\) matrix. Then, in Steps 9–10, we can compute an economy-size QQLP decomposition of the \(m\times l\) matrix \(B=AV\) since \(l<m\). If we instead used column sampling to obtain an approximate basis \(V\in {{\mathbb {Q}}}^{m\times l}\) for the range of A, then \(B=V^*A\) would be an \(l\times n\) matrix, and its QQLP decomposition would be more expensive, since more pivot selections and updates of trailing columns are needed in the quaternion QRCP.

Remark 3.2

In Step 7 of Algorithm 3.2, we need to compute the quaternion QR decomposition of \(Y^*\), whose Q-factor \(V\in {\mathbb {Q}}^{n\times l}\) must have orthonormal columns. This can be done by applying the quaternion modified Gram-Schmidt (QMGS) algorithm [9, Algorithm 5.2.6] or the quaternion Householder QR decomposition (QHQR) [29] to \(Y^*\). In the QHQR method, V must be formed explicitly. As in [9, p. 238], using the Householder vectors \(\mathbf{v}^{(1)},\ldots ,\mathbf{v}^{(l)}\), we can form V in MATLAB notation as follows:

The QHQR method can also be used to form \(Q_0\) in Step 9 of Algorithm 3.2.
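The QMGS option for Step 7 can be sketched as follows. A quaternion matrix is stored as a NumPy array of shape `(4, m, n)` holding \(A_0,\ldots,A_3\) (our assumption), `qmgs` is a hypothetical helper name, and \(Y^*\) is assumed to have full column rank. Each column is normalized and then removed from the trailing columns via \(w\leftarrow w-\mathbf{v}(\mathbf{v}^*w)\); because quaternion multiplication is not commutative, the quaternion coefficient \(\mathbf{v}^*w\) must multiply \(\mathbf{v}\) from the right.

```python
import numpy as np


def qmul(A, B):
    """Product of quaternion matrices stored as (4, m, s) and (4, s, n) arrays (assumed layout)."""
    a0, a1, a2, a3 = A
    b0, b1, b2, b3 = B
    return np.stack([a0 @ b0 - a1 @ b1 - a2 @ b2 - a3 @ b3,
                     a0 @ b1 + a1 @ b0 + a2 @ b3 - a3 @ b2,
                     a0 @ b2 - a1 @ b3 + a2 @ b0 + a3 @ b1,
                     a0 @ b3 + a1 @ b2 - a2 @ b1 + a3 @ b0])


def qct(A):
    """Conjugate transpose of a (4, m, n) quaternion array."""
    return np.stack([A[0].T, -A[1].T, -A[2].T, -A[3].T])


def qmgs(X):
    """Quaternion modified Gram-Schmidt (sketch): X = V R with V^* V = I, X of full column rank."""
    _, n, l = X.shape
    W = np.array(X, dtype=float)
    V = np.zeros((4, n, l))
    R = np.zeros((4, l, l))
    for j in range(l):
        R[0, j, j] = np.sqrt(np.sum(W[:, :, j] ** 2))   # quaternion 2-norm (real)
        V[:, :, j] = W[:, :, j] / R[0, j, j]
        v = V[:, :, j:j + 1]
        coeff = qmul(qct(v), W[:, :, j + 1:])            # v^* w for the trailing columns
        R[:, j:j + 1, j + 1:] = coeff
        W[:, :, j + 1:] -= qmul(v, coeff)                # v multiplied on the right by v^* w
    return V, R


rng = np.random.default_rng(3)
n, l = 50, 8
Y = rng.standard_normal((4, l, n))     # stands in for the sketch Y of Step 7
V, R = qmgs(qct(Y))                    # Y^* = V R with V in Q^{n x l}
G = qmul(qct(V), V)
print("max |V^*V - I|:", max(np.abs(G[0] - np.eye(l)).max(), np.abs(G[1:]).max()))
```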

Remark 3.3

Note that, in Algorithm 3.2, the data matrix A is accessed twice when the power iteration number \(q=0\). When the data are stored outside core memory, each access is expensive. If the data are generated as a stream, A needs to be accessed only once: in Step 8 of Algorithm 3.2, we can replace \(B=AV\) by \(B={\widetilde{Y}}(V^*{\widetilde{\Omega }})^{\dag }\), where \({\widetilde{Y}}=A{\widetilde{\Omega }}\) with \({\widetilde{\Omega }}\in {\mathbb {Q}}^{n\times {\tilde{l}} }\) \((l \le {\tilde{l}}\le \min \{m,n\})\) being a quaternion random test matrix. This is a single-pass version [3].
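For a real matrix, the single-pass replacement of Remark 3.3 can be sketched as follows; the quaternion case should carry over by replacing transposes with conjugate transposes and real products with quaternion products. Both sketches \(Y=\Omega A\) and \(\widetilde{Y}=A\widetilde{\Omega}\) can be formed in one pass over \(A\), and \(B\) is then recovered as \(\widetilde{Y}(V^T\widetilde{\Omega})^{\dag}\). The sizes and the exactly low-rank test matrix are hypothetical; for such a matrix \(A=AVV^T\) holds, so the single-pass \(B\) should agree with \(AV\) up to rounding.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, k = 120, 90, 8
l, l_tilde = 12, 20                        # l <= l_tilde <= min(m, n), as in Remark 3.3
A = rng.standard_normal((m, k)) @ rng.standard_normal((k, n))   # exactly rank k (hypothetical)

Omega = rng.standard_normal((l, m))        # row-sampling test matrix
Omega_t = rng.standard_normal((n, l_tilde))

# Both sketches need only one pass over A
Y = Omega @ A                              # l x n
Y_t = A @ Omega_t                          # m x l_tilde

V, _ = np.linalg.qr(Y.T)                   # n x l orthonormal basis containing range(A^T)
B_single = Y_t @ np.linalg.pinv(V.T @ Omega_t)   # B without revisiting A
B_two_pass = A @ V                               # reference: B = A V
rel = np.linalg.norm(B_single - B_two_pass) / np.linalg.norm(B_two_pass)
print("relative difference:", rel)
```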

Remark 3.4

In general, it is difficult to choose a suitable target rank for Algorithm 3.2 in advance. However, we can determine the target rank k, an \(m\times k\) orthonormal quaternion matrix \({\widetilde{V}}_k\), and a \(k\times n\) quaternion matrix \(B_k={\widetilde{V}}_k^*A\) such that \(\Vert A-{\widetilde{V}}_kB_k\Vert \le \varepsilon \) for a prescribed accuracy \(\varepsilon >0\). This can be done adaptively in a greedy fashion (see [42, Algorithm 12.2.1]).
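The greedy strategy of Remark 3.4 can be sketched for a real matrix as a blocked adaptive range finder: Gaussian blocks are drawn from the current residual, re-orthogonalized against the basis found so far, and appended until the residual drops below \(\varepsilon\). This is our own simplified variant, not a transcription of [42, Algorithm 12.2.1]; the function name, the block size, the use of the Frobenius norm, and the explicit residual update (which costs a full pass over \(A\) per block) are assumptions made for clarity.

```python
import numpy as np


def adaptive_range_finder(A, eps, block=5, seed=None):
    """Hypothetical sketch: grow an orthonormal Q until ||A - Q Q^T A||_F <= eps (real case)."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    Q = np.zeros((m, 0))
    Res = A.copy()                                  # current residual (I - Q Q^T) A
    while np.linalg.norm(Res, "fro") > eps and Q.shape[1] < min(m, n):
        Y = Res @ rng.standard_normal((n, block))   # sample the residual
        Y -= Q @ (Q.T @ Y)                          # re-orthogonalize against Q
        Qb, _ = np.linalg.qr(Y)
        Q = np.hstack([Q, Qb])
        Res -= Qb @ (Qb.T @ Res)                    # remove the new directions
    return Q, Q.T @ A


# Hypothetical test matrix with singular values 0.7^(j-1)
rng = np.random.default_rng(0)
m, n = 150, 100
sigma = 0.7 ** np.arange(n)
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
W, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = (U * sigma) @ W.T

eps = 1e-3 * np.linalg.norm(A, "fro")
Q, B = adaptive_range_finder(A, eps, block=5, seed=1)
k = Q.shape[1]
print("detected rank k =", k, " residual =", np.linalg.norm(A - Q @ B, "fro"), " eps =", eps)
```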

Algorithm 3.2 can be implemented using the toolbox QTFM, but such an implementation is relatively inefficient. To improve efficiency, one may carry out Algorithm 3.2 with real structure-preserving operations. For example, Steps 7 and 9–10 of Algorithm 3.2 can be made more efficient by using the fast real structure-preserving quaternion QR algorithm [30] with or without column pivoting.

4 Theoretical Analysis

In this section, we provide theoretical analysis of Algorithms 3.1 and 3.2, including the convergence analysis of QQLP, and the matrix approximation error analysis and singular value approximation error analysis of RQQLP as well.

4.1 Improved Convergence Results of QQLP Decomposition

In [37], Huckaby and Chan gave a convergence analysis of the QLP decomposition for real matrices. In this subsection, we extend the relevant results to quaternion matrices and give tighter error bounds.

We start with an inequality for the right eigenvalues of a sum of quaternion Hermitian matrices. In general, a square quaternion matrix has left and right eigenvalues with distinct values and properties [41], due to the non-commutativity of quaternion multiplication. However, the two kinds of eigenvalues of a quaternion Hermitian matrix coincide and are real [40], and any right eigenvalue of a quaternion Hermitian matrix A is also an eigenvalue of the symmetric matrix \(\Upsilon _{A}\) with multiplicity four. With this, we obtain the following eigenvalue inequality for quaternion Hermitian matrices.

Lemma 4.1

If \(A,B\in {\mathbb {Q}}^{n\times n}\) are quaternion Hermitian matrices, then

for \(i=1,\ldots ,n\), where \(\lambda _i(A)\) denotes the i-th largest right eigenvalue of A.

Proof

According to [9, Theorem 8.1.5], the inequality

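holds for any real symmetric matrices \({\tilde{A}},{\tilde{B}}\in {\mathbb {R}}^{n\times n}\). By Lemma 2.2, \(\Upsilon _A\) and \(\Upsilon _B\) are symmetric and \(\Upsilon _{A+B}=\Upsilon _A+\Upsilon _B\), and their eigenvalues are those of A, B, and A+B, each repeated four times. Applying this result to \(\Upsilon _A\) and \(\Upsilon _B\) therefore leads to Lemma 4.1. \(\square \)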
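The reduction used in this proof can be checked numerically: for quaternion Hermitian \(A\) and \(B\), the eigenvalues of the symmetric matrices \(\Upsilon_A\), \(\Upsilon_B\), and \(\Upsilon_{A+B}\) come in groups of four equal values, and one value per group gives the right eigenvalues. The sketch assumes that the inequality in Lemma 4.1 is the standard Weyl bound \(\lambda_i(A)+\lambda_n(B)\le \lambda_i(A+B)\le \lambda_i(A)+\lambda_1(B)\) of [9, Theorem 8.1.5]; the `(4, n, n)` storage of quaternion matrices and the helper names are our own assumptions.

```python
import numpy as np


def qct(A):
    """Conjugate transpose of a quaternion matrix stored as a (4, m, n) array (assumed layout)."""
    return np.stack([A[0].T, -A[1].T, -A[2].T, -A[3].T])


def upsilon(A):
    """Real counterpart Upsilon_A of size 4m x 4n."""
    a0, a1, a2, a3 = A
    return np.block([[a0, -a1, -a2, -a3], [a1, a0, -a3, a2],
                     [a2, a3, a0, -a1], [a3, -a2, a1, a0]])


def hermitian_eigs(H):
    """Right eigenvalues (descending) of a quaternion Hermitian H from Upsilon_H."""
    w = np.linalg.eigvalsh(upsilon(H))[::-1]                # descending, each repeated four times
    assert np.allclose(w.reshape(-1, 4), w[::4, None]), "expected multiplicity four"
    return w[::4]


rng = np.random.default_rng(4)
n = 6
X, Z = rng.standard_normal((2, 4, n, n))
A = X + qct(X)                                              # quaternion Hermitian
B = Z + qct(Z)
lam_A, lam_B, lam_AB = hermitian_eigs(A), hermitian_eigs(B), hermitian_eigs(A + B)
lower = lam_A + lam_B[-1]                                   # lambda_i(A) + lambda_n(B)
upper = lam_A + lam_B[0]                                    # lambda_i(A) + lambda_1(B)
print(np.all(lower <= lam_AB + 1e-10), np.all(lam_AB <= upper + 1e-10))
```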

For sums and products of quaternion matrices, we have the following singular value inequalities.

Lemma 4.2

Let \(A,B\in {\mathbb {Q}}^{m\times s}\) and \(C\in {{\mathbb {Q}}}^{s\times n}\). Then we have

for \(i=1,\ldots ,\min \{m,s\}\) and

for \(i=1,\ldots ,\min \{m,s,n\}\).

Proof

It is well known that

for \({\tilde{A}},{\tilde{B}}\in {\mathbb {R}}^{m\times s}\) [43, Theorem 3.3.16] and

for \({\tilde{A}}\in {\mathbb {R}}^{m\times s}\) and \({\tilde{C}}\in {\mathbb {R}}^{s\times n}\) [44, Proposition 9.6.4]. Note that each singular value of A is also a singular value of \(\Upsilon _A\), with multiplicity four. Thus Lemma 4.2 follows by applying the above relations to \(\Upsilon _A\), \(\Upsilon _B\), and \(\Upsilon _C\) and using Lemma 2.2(i). \(\square \)

Using Lemma 4.2, we obtain the following result.

Lemma 4.3

Let \(A\in {\mathbb {Q}}^{m\times n}\) and \({{{\mathcal {U}}}}_1\in {\mathbb {Q}}^{m\times s}, {{{\mathcal {V}}}}_1\in {\mathbb {Q}}^{n\times t}\) with orthonormal columns (\(s\le m, t\le n\)). Then \(\sigma _{j}(A)\ge \sigma _{j}({{{\mathcal {U}}}}_1^{*}A{{{\mathcal {V}}}}_1)\) for \(j=1,\ldots , \min \{s,t\}\).

We now analyze the interior singular value approximation errors of the QLP decomposition of a quaternion matrix. As noted in [36, 37], for the QLP decomposition, pivoting in the first QRCP is indispensable, while pivoting in the second QRCP merely avoids certain contrived counterexamples. For simplicity, we assume that there is no pivoting in the second QRCP of the QQLP decomposition for the matrix B generated by Algorithm 3.2.

Theorem 4.4

Let \(A\in {\mathbb {Q}}^{m\times n}\) and \(\sigma _{k}(A)>\sigma _{k+1}(A)\) for some \(k<\ell =\min \{m,n\}\). Let \(R_0\) be the R-factor in the QRCP of A, \(AP_{0}=Q_{0}R_{0}\), where \(Q_0\in {{\mathbb {Q}}}^{m\times m}\) and \(R_0\in {{\mathbb {Q}}}^{m\times n}\), and let \(L^*\) be the R-factor in the QR of \(R_0^*\), \(R_{0}^{*}=Q_{1}L^{*}\), where \(Q_1\in {{\mathbb {Q}}}^{n\times n}\) and \(L\in {{\mathbb {Q}}}^{m\times n}\). Partition \(R_{0}\) and L as

where \(R_{11}\), \(L_{11}\in {\mathbb {Q}}^{k\times k}\). If \(\Vert R_{22}\Vert _{2}\le \sqrt{(k+1)(n-k)}\cdot \sigma _{k+1}(A)\), \(\sigma _{k}(R_{11})\ge \frac{\sigma _{k}(A)}{\sqrt{k(n-k+1)}}\), and \(\rho =\frac{\Vert R_{22}\Vert _{2}}{\sigma _{k}(R_{11})}<1\), then for \(i=1,\ldots ,\ell -k\),

and for \(j=1,\ldots ,k\),

where \(\rho _{1}=\frac{\Vert L_{22}\Vert _{2}}{\sigma _{k}(L_{11})}\le \rho <1\).

Proof

We first show that

By assumption, \(AP_0=Q_0R_0\), where \(Q_0\) is unitary and \(P_0\) is a permutation matrix, so \(\sigma _j(A)=\sigma _j(R_0)\) for \(j=1,\ldots ,\ell \); since \(\sigma _{k}(A)>\sigma _{k+1}(A)\), we also have \(\sigma _k(R_0)>\sigma _{k+1}(R_0)\). By hypothesis, \(R_0^*=Q_1L^*\), i.e., \(R_0Q_1=L\), and hence \(R_0R_0^*=LL^*\), i.e.,

It follows from (4.4) that

By Lemma 4.1 and (4.5) we obtain

for \(j=1,\ldots ,k\). This shows that (4.3b) holds.

Also, it follows from (4.4) that

Thus,

where the second inequality follows from (4.3b). Then we obtain (4.3c).

Thus,

Therefore, we obtain (4.3d).

Again, it follows from (4.4) that

Using Lemma 4.1 we have \(\sigma _j(R_{22})\ge \sigma _j(L_{22})\) for \(j=1,\ldots ,\ell -k\). This shows that (4.3e) holds. Using (4.3b) and (4.3e), we obtain (4.3a).

Thus,

Let \(R_{11}^{-1}R_{12}\) admit the SVD: \(R_{11}^{-1}R_{12}={\widehat{U}}\widehat{\Sigma }{\widehat{V}}^*\), where \(\widehat{\Sigma }={\mathrm{diag}}(\sigma _1(R_{11}^{-1}R_{12}),\ldots ,\sigma _{s_0}(R_{11}^{-1}R_{12}))\) \(\in {{\mathbb {R}}}^{k\times (n-k)}\) with \(s_0=\min \{k, n-k\}\). By simple calculations, we obtain

where \(G:={\widehat{V}}D_G{\widehat{V}}^*\) with \(D_G:={\mathrm{diag}}(1/(1+\sigma _1^2(R_{11}^{-1}R_{12}))^{1/2},\ldots ,1/(1+\sigma _{s_0}^2(R_{11}^{-1}R_{12}))^{1/2},1,\ldots ,1)\) \(\in {{\mathbb {R}}}^{(n-k)\times (n-k)}\). This, together with Lemma 4.2 and (4.8), yields

for \(j=1,\ldots ,\ell -k\). This shows that (4.3f) holds.

Next, we consider the QLP iteration, which repeatedly computes the QR decomposition of the conjugate transpose of the R-factor produced by the previous step. With \(R_1=L^*\), the algorithm can be described by

For any \(i\ge 0\), let

where \(S_0=R_{11}\), \(H_{0}=R_{12}\), \(E_{0}=R_{22}\), \(S_1=L_{11}^*\), \(H_{1}=L_{21}^*\), and \(E_{1}=L_{22}^*\). By an argument similar to the proof of (4.3), we have

Using (4.10a)–(4.10f) and (4.3a)–(4.3f), we can establish (4.1)–(4.2) by following the arguments similar to those of [37, Theorem 3.4] under the assumptions that \(\sigma _{k}(R_{11})\ge \frac{\sigma _{k}(A)}{\sqrt{k(n-k+1)}}\) and \(\Vert R_{22}\Vert _{2}\le \sqrt{(k+1)(n-k)}\cdot \sigma _{k+1}(A)\). \(\square \)

On the maximum singular value approximation error for the QLP decomposition of a quaternion matrix, we have the following theorem.

Theorem 4.5

With the matrices \(R_0, L\) defined in Theorem 4.4, if we partition \(R_{0}\) and L as

where \(r_{11}\), \(l_{11}\in {\mathbb {Q}}\). If \(\Vert R_{22}\Vert _{2}\le \sqrt{2(n-1)}\sigma _{2}(A)\) and \(\frac{\Vert R_{22}\Vert _{2}}{|r_{11}|}<1\), then

where \(\rho _{1}=\frac{\Vert L_{22}\Vert _{2}}{|l_{11}|}<\frac{\Vert R_{22}\Vert _2}{|r_{11}|}<1\).

Proof

The proof is similar to that of Theorem 4.4, using the fact that

\(\square \)

Remark 4.6

The results indicate that the singular values of the diagonal blocks of L are good approximations to those of A, when there exists a significant gap between \(\sigma _k(A)\) and \(\sigma _{k+1}(A)\). When the quaternion data matrix degenerates to a real matrix, the bounds provided in [37, Theorem 3.4] are given by

and

We note that \(\Vert R_{11}^{-1}R_{12}\Vert _2\le \Vert R_{11}^{-1}\Vert _2\Vert R_{12}\Vert _2=\Vert R_{12}\Vert _2/ \sigma _{k}(R_{11})\), and the experimental results for the QRCP of 300 real test matrices in [45] show that \(\Vert R_{11}^{-1}R_{12}\Vert _2\) was always smaller than 10. Hence our error bounds in Theorem 4.4 are tighter. The main difference is that, in the proof of [37, Theorem 3.4], the main inequalities corresponding to (4.10a)–(4.10c) and (4.10e) were derived by exploiting the block structure and the singular value properties of the blocks of \(Q_{i+1}\) in (4.9), whereas we deduce (4.10a)–(4.10f) from \(R_iR_i^*=R_{i+1}^*R_{i+1}\) without using the singular value properties of the blocks of \(Q_{i+1}\). We also obtain new inequalities involving \(\Vert S_j^{-1}H_j\Vert _2\) in (4.10d) and (4.10f), which replace the ones in [37] involving \(\Vert S_j^{-1}\Vert _2\Vert H_j\Vert _2\).
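The comparison in Remark 4.6 is easy to reproduce for a real matrix: after a QRCP, compute \(\Vert R_{11}^{-1}R_{12}\Vert_2\) and the cruder quantity \(\Vert R_{12}\Vert_2/\sigma_k(R_{11})\). In this sketch, SciPy's column-pivoted QR (`scipy.linalg.qr` with `pivoting=True`) stands in for the QRCP used in [45], and the test matrix with singular values spread logarithmically from \(1\) to \(10^{-8}\) and the choice \(k=10\) are hypothetical.

```python
import numpy as np
from scipy.linalg import qr, solve_triangular

rng = np.random.default_rng(0)
m, n, k = 200, 100, 10
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
sigma = np.logspace(0, -8, n)                       # hypothetical singular values
A = (U * sigma) @ V.T

_, R, _ = qr(A, mode="economic", pivoting=True)     # A P0 = Q0 R (column-pivoted QR)
R11, R12 = R[:k, :k], R[:k, k:]
tight = np.linalg.norm(solve_triangular(R11, R12), 2)              # ||R11^{-1} R12||_2
loose = np.linalg.norm(R12, 2) / np.linalg.svd(R11, compute_uv=False)[-1]
print("||R11^{-1} R12||_2 =", tight, "  ||R12||_2 / sigma_k(R11) =", loose)
```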

4.2 Error Analysis of Randomized QQLP

The idea and implementation of RQQLP are simple, while the theoretical analysis is rather lengthy. In this subsection, we first state the main approximation results of RQQLP; the proofs of Theorems 4.9–4.10 are given in Sect. 4.3.

4.2.1 Matrix Approximation Error Analysis

In this subsection, we give the matrix approximation error bound for Algorithm 3.2.

On the matrix approximation error of Algorithm 3.2, we have the following results. Their proofs are similar to those of [35, Theorems 4.1–4.3], and we omit the details.

Theorem 4.7

(Average error) Let \(A\in {{\mathbb {Q}}}^{m\times n}\) admit the QSVD: \(A=U_m\Sigma V_n^* \), where \(U_m=[U_{1m}, U_{2m}]\) with \(U_{1m}\in {{\mathbb {Q}}}^{m\times k}\) and \(\Sigma ={\mathrm{diag}}(\sigma _1(A),\ldots ,\sigma _\ell (A))\in {{\mathbb {R}}}^{m\times n}\) with \(\sigma _{1}(A)\ge \sigma _{2}(A)\ge \ldots \ge \sigma _\ell (A)\ge 0\) (\(\ell =\min \{m,n\}\)). With the notations of Algorithm 3.2, if the oversampling parameter \(p\ge 2\), \(l=k+p\le \min \{m,n\}\), the power iteration number \(q=0\), and \(\Omega _1=\Omega U_{1m}\) has full column rank for the quaternion random test matrix \(\Omega \in {\mathbb {Q}}^{l\times m}\), then the expected approximation error for output matrices \(Q_l, L_l, P_l\) from Algorithm 3.2 satisfies

If the power iteration number \(q>0\), then the expected approximation error is given by

Theorem 4.8

(Deviation bound for the approximation error) With the notations of Theorem 4.7, for Algorithm 3.2, we have the following bounds for the approximation error:

with probability not less than \(1-2v^{-4p}-e^{-u^2/2}\) and

with probability not less than \(1-2v^{-4p}-e^{-u^2/2}\), where \(u,v\ge 1\) and \(\eta _{k,p}=\frac{e\sqrt{4k+4p+2}}{4p+4}\).

We observe from Theorems 4.7–4.8 that the decay rate of the singular values is a key factor governing the low-rank approximation, and that Algorithm 3.2 provides low-rank approximations within constant factors of optimal, where the constants depend on the oversampling parameter p and the gap ratio of the singular values. If the singular values decay slowly, a few power iterations (\(q\ge 1\)) may significantly reduce the matrix approximation error with high probability.

4.2.2 Singular Value Approximation Error Analysis

In this subsection, we provide the singular value approximation error bounds for Algorithm 3.2.

On the maximum and interior singular value approximation errors of Algorithm 3.2, we have the following results.

Theorem 4.9

With the notation of Algorithm 3.2, let \(R_0\) be the R-factor in the QRCP of B, \(BP_{0}=Q_{0}R_{0}\), and let \(L^*\) be the R-factor in the QRCP of \(R_0^*\), \(R_{0}^{*}=Q_{1}L^{*}\). Suppose \(\sigma _{1}(B)>\sigma _{2}(B)\) and partition \(R_{0}\) and L as

where \(r_{11}\), \(l_{11}\in {\mathbb {Q}}\). If \(\Vert R_{22}\Vert _{2}\le \sqrt{2(l-1)}\sigma _{2}(B)\) and \(\frac{\Vert R_{22}\Vert _{2}}{|r_{11}|}<1\), then we have

with probability not less than \(1-\Delta \), where \(\Delta =\exp (-\alpha ^2/2)+\frac{\pi ^{-3}}{4(p+1)(2p+3)}\gamma ^{-4(p+1)}\), \(\rho _{1}=\frac{\Vert L_{22}\Vert _{2}}{|l_{11}|}<1\), and \(\delta _1=\sqrt{1+{\mathcal {C}}_{\alpha ,\gamma }^2 \left( \frac{\sigma _{k+1}(A)}{\sigma _{1}(A)}\right) ^{4q+2}}\) with \({\mathcal {C}}_{\alpha ,\gamma }=(3\sqrt{m-k}+3\sqrt{\ell }+\alpha ) \frac{e\sqrt{4l+2}}{4(p+1)}\gamma \) for \(\alpha >0\) and \(\gamma \ge 1\).

Theorem 4.10

With the notation of Algorithm 3.2, let \(R_0\) be the R-factor in the QRCP of B, \(BP_{0}=Q_{0}R_{0}\), and let \(L^*\) be the R-factor in the QRCP of \(R_0^*\), \(R_{0}^{*}=Q_{1}L^{*}\). Suppose \(\sigma _{s}(B)>\sigma _{s+1}(B)\) for some \(1\le s< k\). Partition \(R_{0}\) and L as

where \(R_{11}\), \(L_{11}\in {\mathbb {Q}}^{s\times s}\). Assume that \(\Vert R_{22}\Vert _{2}\le \sqrt{(s+1)(l-s)}\sigma _{s+1}(B)\), \(\sigma _{s}(R_{11})\ge \frac{\sigma _{s}(B)}{\sqrt{s(l-s+1)}}\), and \(\frac{\Vert R_{22}\Vert _{2}}{\sigma _s(R_{11})}<1\). Then we have

with probability not less than \(1-\Delta \) for all \(j=1,\ldots ,s\), and

for all \(j=1,\ldots ,k-s\), where \(\rho _{1}=\frac{\Vert L_{22}\Vert _{2}}{\sigma _{s}(L_{11})}<1\), \(\delta _j=\Big (1+{\mathcal {C}}_{\alpha ,\gamma }^2 \Big (\frac{\sigma _{k+1}(A)}{\sigma _{j}(A)}\Big )^{4q+2}\Big )^{1/2}\), \(\Delta \) and \({\mathcal {C}}_{\alpha ,\gamma }\) are defined in Theorem 4.9.

4.3 Proof of Theorems 4.9–4.10

4.3.1 Setup

To study the singular value approximation error of Algorithm 3.2, we first establish some preliminary results.

Lemma 4.11

([3, Proposition 10.3]) Let \(h(\cdot )\) be a Lipschitz function on real matrices: \(|h(X)-h(Y)|\le L\Vert X-Y\Vert _F\) for all \(X, Y\in {{\mathbb {R}}}^{m\times n}\). Then for any \(m\times n\) standard real Gaussian matrix G and \(u>0\), we have \(\mathbb {P}(h(G) \ge \mathbb {E}h(G)+Lu)\le e^{-u^2/2}\).
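Lemma 4.11 turns an expectation bound into a tail bound. The sketch below illustrates it; it is not a proof. It takes \(h(G)=\Vert G\Vert _2\), which is 1-Lipschitz with respect to the Frobenius norm. It samples real Gaussian matrices and compares the empirical frequency of \(h(G)\ge \mathbb {E}h(G)+u\) with \(e^{-u^2/2}\). The sample mean stands in for \(\mathbb {E}h(G)\), and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, trials, u = 20, 10, 2000, 1.0

# h(G) = ||G||_2 is 1-Lipschitz in the Frobenius norm:
# | ||X||_2 - ||Y||_2 | <= ||X - Y||_2 <= ||X - Y||_F.
h = np.array([np.linalg.norm(rng.standard_normal((m, n)), 2) for _ in range(trials)])
mean_h = h.mean()                              # Monte Carlo stand-in for E h(G)
empirical_tail = np.mean(h >= mean_h + u)      # estimate of P(h(G) >= E h(G) + L u)
bound = np.exp(-u ** 2 / 2)                    # Lemma 4.11 with L = 1
print(f"empirical tail {empirical_tail:.4f}, bound {bound:.4f}")
```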

In the following lemma, we give some upper bounds on the spectral norm of a random quaternion test matrix defined by (3.1).

Lemma 4.12

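Let \(\Omega =\Omega _0+\Omega _1{\mathbf {i}}+\Omega _2{\mathbf {j}}+\Omega _3{\mathbf {k}}\in {\mathbb {Q}}^{s\times \ell }\), where \(\Omega _0,\Omega _1,\Omega _2,\Omega _3\in {\mathbb {R}}^{s\times \ell }\) are independent real standard Gaussian random matrices. Then

$$\begin{aligned} {\mathbb {E}}\Vert \Omega \Vert _2\le 3\left( \sqrt{s}+\sqrt{\ell }\right) , \end{aligned}$$

and

$$\begin{aligned} \Vert \Omega \Vert _2\le 3\left( \sqrt{s}+\sqrt{\ell }\right) +\alpha \end{aligned}$$

with probability not less than \(1-\exp (-\alpha ^2/2)\) for any \(\alpha >0\).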
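The bounds of Lemma 4.12 can be compared with samples. The sketch below draws quaternion Gaussian matrices \(\Omega \) through their four real components. It computes \(\Vert \Omega \Vert _2\) as the spectral norm of the complex adjoint \(\chi (\Omega )=\left[ {\begin{smallmatrix} A & B\\ -{\bar{B}} & {\bar{A}}\end{smallmatrix}}\right] \), with \(A=\Omega _0+\Omega _1 i\) and \(B=\Omega _2+\Omega _3 i\) (here i is the complex unit). This standard representation has the singular values of \(\Omega \), each repeated twice. The sketch compares the sample mean with \(3(\sqrt{s}+\sqrt{\ell })\), and counts how often \(\Vert \Omega \Vert _2\) exceeds that value plus \(\alpha \). The sizes are arbitrary choices.

```python
import numpy as np


def complex_adjoint(X0, X1, X2, X3):
    """chi(X) = [[A, B], [-conj(B), conj(A)]] for X = A + B j, A = X0 + X1 i, B = X2 + X3 i."""
    A, B = X0 + 1j * X1, X2 + 1j * X3
    return np.block([[A, B], [-B.conj(), A.conj()]])


rng = np.random.default_rng(2)
s, ell, alpha, trials = 30, 50, 2.0, 200
# chi(Omega) carries each singular value of Omega twice, so the spectral norms agree.
norms = np.array([
    np.linalg.norm(complex_adjoint(*(rng.standard_normal((s, ell)) for _ in range(4))), 2)
    for _ in range(trials)
])
bound = 3 * (np.sqrt(s) + np.sqrt(ell))
print(f"mean ||Omega||_2 = {norms.mean():.2f}, 3(sqrt(s) + sqrt(ell)) = {bound:.2f}")
print("fraction above bound + alpha:", np.mean(norms > bound + alpha))
```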

Proof

In [35, Lemma 4.15], it was shown that \({\mathbb {E}}\Vert S\Omega T\Vert _2\le 3(\Vert S\Vert _2\Vert T\Vert _F+\Vert T\Vert _2\Vert S\Vert _F)\) for any \(S\in {{\mathbb {Q}}}^{s\times s}\) and \(T\in {{\mathbb {Q}}}^{\ell \times \ell }\). Taking \(S=I_s\) and \( T=I_{\ell }\) gives the desired upper bound on \({\mathbb {E}}\Vert \Omega \Vert _2\).

We now establish the tail bound for \(\Vert \Omega \Vert _2\). Define

for all \(X=X_0+X_1{\mathbf {i}}+X_2{\mathbf {j}}+X_3{\mathbf {k}}\in {\mathbb {Q}}^{s\times \ell }\), and \(J_0=I_4\otimes I_s\), \(J_1=[-\mathbf{e}_2, \mathbf{e}_1,\mathbf{e}_4,-\mathbf{e}_3]^T\otimes I_s\), \(J_2=[-\mathbf{e}_3, -\mathbf{e}_4, \mathbf{e}_1,\mathbf{e}_2]^T\otimes I_s\), \(J_3=[-\mathbf{e}_4,\mathbf{e}_3,-\mathbf{e}_2,\mathbf{e}_1]^T \otimes I_s\), where \(\mathbf{e}_i\) is the i-th column of \(I_4\). By Lemma 2.2,

for all \(F_c=[F_0^T, F_1^T, F_2^T, F_3^T]^T\), \(G_c=[G_0^T, G_1^T, G_2^T, G_3^T]^T\in {\mathbb {R}}^{4s\times \ell }\) with \(F=F_0+F_1{\mathbf {i}}+F_2{\mathbf {j}}+F_3{\mathbf {k}}\), \(G=G_0+G_1{\mathbf {i}}+G_2{\mathbf {j}}+G_3{\mathbf {k}}\in {\mathbb {Q}}^{s\times \ell }\). This shows that \(h(\cdot )\) is a Lipschitz function with the Lipschitz constant \(L=1\). By hypothesis, \(\Omega _c=[\Omega _0^T,\Omega _1^T,\Omega _2^T,\Omega _3^T]^T\) is a \(4s\times \ell \) standard Gaussian random matrix. By Lemma 2.2 and Lemma 4.11, we have

with probability not less than \(1-e^{-\alpha ^2/2}\) for any \(\alpha >0\). \(\square \)
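The matrices \(J_0,\ldots ,J_3\) encode right multiplication by the units 1, \({\mathbf {i}}\), \({\mathbf {j}}\), \({\mathbf {k}}\). That is, \(J_tX_c\) is the stacked real form of X, \(X{\mathbf {i}}\), \(X{\mathbf {j}}\) and \(X{\mathbf {k}}\), respectively. The sketch below checks this numerically, using a hypothetical helper `qmul` for quaternion matrix products. It also checks that \(M=[J_0X_c,J_1X_c,J_2X_c,J_3X_c]\in {\mathbb {R}}^{4s\times 4\ell }\) satisfies \(Mv_c=(Xv)_c\). Since \(v\mapsto v_c\) preserves norms, it follows that \(\Vert M\Vert _2=\Vert X\Vert _2\), which the sketch compares with the complex adjoint. This illustrates why h is 1-Lipschitz. Whether M coincides with the definition of h above depends on Lemma 2.2, which is not reproduced here.

```python
import numpy as np


def qmul(A, B):
    """Product of quaternion matrices stored as 4-tuples of real components (X0, X1, X2, X3)."""
    A0, A1, A2, A3 = A
    B0, B1, B2, B3 = B
    return (A0 @ B0 - A1 @ B1 - A2 @ B2 - A3 @ B3,
            A0 @ B1 + A1 @ B0 + A2 @ B3 - A3 @ B2,
            A0 @ B2 - A1 @ B3 + A2 @ B0 + A3 @ B1,
            A0 @ B3 + A1 @ B2 - A2 @ B1 + A3 @ B0)


def j_matrices(s):
    """J_0, ..., J_3 from the proof of Lemma 4.12; e[t] is the (t+1)-th column of I_4."""
    e = np.eye(4)
    blocks = [e,
              np.vstack([-e[1], e[0], e[3], -e[2]]),
              np.vstack([-e[2], -e[3], e[0], e[1]]),
              np.vstack([-e[3], e[2], -e[1], e[0]])]
    return [np.kron(P, np.eye(s)) for P in blocks]


rng = np.random.default_rng(3)
s, ell = 4, 3
X = tuple(rng.standard_normal((s, ell)) for _ in range(4))
Xc = np.vstack(X)                                   # X_c = [X0; X1; X2; X3]
I, Z = np.eye(ell), np.zeros((ell, ell))
units = [(I, Z, Z, Z), (Z, I, Z, Z), (Z, Z, I, Z), (Z, Z, Z, I)]   # I_ell times 1, i, j, k
J = j_matrices(s)
for Jt, unit in zip(J, units):
    assert np.allclose(Jt @ Xc, np.vstack(qmul(X, unit)))       # J_t X_c = (X e_t)_c

# M = [J_0 X_c, ..., J_3 X_c] maps v_c to (X v)_c, hence ||M||_2 = ||X||_2.
M = np.hstack([Jt @ Xc for Jt in J])
A, B = X[0] + 1j * X[1], X[2] + 1j * X[3]
chi = np.block([[A, B], [-B.conj(), A.conj()]])                # complex adjoint of X
print(np.linalg.norm(M, 2), np.linalg.norm(chi, 2))
```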

For the spectral norm of the Moore-Penrose inverse of a quaternion random test matrix, we have the following result from [35].

Lemma 4.13

[35, Theorem 4.14] Let \(\Omega =\Omega _0+\Omega _1{\mathbf {i}}+\Omega _2{\mathbf {j}}+\Omega _3{\mathbf {k}}\in {\mathbb {Q}}^{s\times \ell }\) with \(s\le \ell \), where \(\Omega _0,\Omega _1,\Omega _2,\Omega _3\in {{\mathbb {R}}}^{s\times \ell }\) are independent real standard Gaussian random matrices. Then for any \(\gamma \ge 1\), we have

and

Let the matrix \(A\in {\mathbb {Q}}^{m\times n}\) and the parameters k, l and q be defined as in Algorithm 3.2. The following lemma provides lower bounds for the k largest singular values of \(B=W^*A\). Here the columns of \(W\in {\mathbb {Q}}^{m\times l}\) form an orthonormal basis of \({\mathcal {R}}((AA^*)^qA\Psi )\), and \(\Psi =\Psi _0+\Psi _1{\mathbf {i}}+\Psi _2{\mathbf {j}} +\Psi _3{\mathbf {k}} \in {\mathbb {Q}}^{n\times l}\) is a quaternion random test matrix.

Lemma 4.14

Let \(A\in {\mathbb {Q}}^{m\times n}\) admit the SVD: \(A=U_\ell \Sigma _\ell V_\ell ^*\), where \(U_\ell \in {{\mathbb {Q}}}^{m\times \ell }\) and \(V_\ell \in {{\mathbb {Q}}}^{n\times \ell }\) have orthonormal columns and \(\Sigma _\ell ={\mathrm{diag}}(\sigma _1(A),\ldots ,\sigma _\ell (A))\in {{\mathbb {R}}}^{\ell \times \ell }\) with \(\ell =\min \{m,n\}\). Suppose k, l and q are integers such that \(0<k\le l\le \ell \) and \(q\ge 0\). Let \(\Psi =\Psi _0+\Psi _1{\mathbf {i}}+\Psi _2{\mathbf {j}} +\Psi _3{\mathbf {k}} \in {\mathbb {Q}}^{n\times l}\) be a quaternion random test matrix. Partition \(V_\ell ^{*}\Psi =[\widehat{\Psi }_{1}^T, \widehat{\Psi }_{2} ^T]^T\) with \(\widehat{\Psi }_{1}\in {\mathbb {Q}}^{k\times l}\). Let \(B=W^{*}A\), where the columns of \(W\in {\mathbb {Q}}^{m\times l}\) form an orthonormal basis of \({{{\mathcal {R}}}}((AA^*)^qA\Psi )\). If \(\widehat{\Psi }_{1}\) has full row rank, then

for \(j=1,\ldots ,k\).

Proof

Using the SVD of A we have \((AA^*)^qA\Psi =U_\ell \Sigma _\ell ^{2q+1} V_\ell ^*\Psi \). By hypothesis, we have

Let

Then we obtain

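Let \(X\in {{\mathbb {Q}}}^{l\times l}\) be nonsingular. Then \({\mathcal {R}}((AA^*)^qA\Psi X)={\mathcal {R}}((AA^*)^qA\Psi )\). Let \(X=\left[ \widehat{\Psi }_1^{\dag }\Sigma _k^{-(2q+1)}, {\widehat{X}}_2 \right] ,\) where the columns of \({\widehat{X}}_2\in {{\mathbb {Q}}}^{l\times (l-k)}\) form a basis of the null space of \(\widehat{\Psi }_1\), so that \(\widehat{\Psi }_1{\widehat{X}}_2=0\). Since \(\widehat{\Psi }_1\) has full row rank, the columns of \(\widehat{\Psi }_1^{\dag }\) span the orthogonal complement of this null space, and hence X is nonsingular. In this case, we have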

where \(H_1=\Sigma _c^{2q+1}\widehat{\Psi }_2\widehat{\Psi }_1^{\dag }\Sigma _1^{-(2q+1)} \in {{\mathbb {Q}}}^{(\ell -k)\times k}\), and \(H_2=\Sigma _c^{2q+1}\widehat{\Psi }_2{\widehat{X}}_2\in {{\mathbb {Q}}}^{(\ell -k)\times (l-k)}\) has full column rank. By assumption, \({{{\mathcal {R}}}}(W)={\mathcal {R}}\left( (AA^*)^qA\Psi \right) \) with \(W^*W=I_l\) yields \(WW^*=ZZ^\dag \).

Let \({\widehat{H}}\) admit the following QR decomposition:

which implies that

Therefore,

where the first inequality follows from Lemma 4.3 with \({{{\mathcal {U}}}}_1={{{\mathcal {V}}}}_1=I(:,1:k)\), and the penultimate equality follows from (4.14).

On the other hand, by Lemma 4.2 and (4.14), we obtain

This, together with (4.15), gives rise to

From the definition of \(H_1\) in (4.13) we have

This, together with (4.16), yields

This proves Lemma 4.14 for \(j=k\). Repeating the above argument with the rank-j truncated SVD of B for each \(1\le j<k\) establishes the remaining cases. \(\square \)

We note that Lemma 4.14 can also be established by arguments similar to those in [10, Lemmas 4.1–4.2, Theorem 4.3].
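Lemma 4.14 can also be explored numerically. The sketch below is an illustration under three assumptions. First, quaternion arithmetic is emulated through the complex adjoint \(\chi \), using \(\chi (XY)=\chi (X)\chi (Y)\) and \(\chi (X^*)=\chi (X)^*\), instead of the real structure-preserving routines of Sect. 3.2. Second, the test matrix is a hypothetical product whose middle factor decays geometrically. Third, the powers \((AA^*)^q\) are applied without re-orthonormalization. The sketch prints \(\sigma _j(B)/\sigma _j(A)\) for \(j\le k\) and \(q=0,1,2\). Since \(B=W^*A\), these ratios never exceed 1. Lemma 4.14 predicts that they approach 1 as \((\sigma _{k+1}(A)/\sigma _j(A))^{4q+2}\) decreases.

```python
import numpy as np


def chi(X0, X1, X2, X3):
    """Complex adjoint of X = X0 + X1 i + X2 j + X3 k, written as X = A + B j."""
    A, B = X0 + 1j * X1, X2 + 1j * X3
    return np.block([[A, B], [-B.conj(), A.conj()]])


def random_quaternion_adjoint(rng, rows, cols):
    """chi(Omega) for Omega with independent standard Gaussian components."""
    return chi(*(rng.standard_normal((rows, cols)) for _ in range(4)))


def sketch_singular_values(chiA, chiPsi, q):
    """Singular values of B = W^* A, where W is an orthonormal basis of range((A A^*)^q A Psi).

    An orthonormal basis Qc of range(chi(Y)) gives the projector chi(W W^*), so each
    singular value of B appears twice among those of Qc^* chi(A).
    """
    Y = chiA @ chiPsi
    for _ in range(q):                      # subspace iteration, no re-orthogonalization
        Y = chiA @ (chiA.conj().T @ Y)
    Qc, _ = np.linalg.qr(Y)
    return np.linalg.svd(Qc.conj().T @ chiA, compute_uv=False)[::2]


rng = np.random.default_rng(5)
m, n, r, k, p = 80, 60, 40, 8, 5
l = k + p
D = np.kron(np.eye(2), np.diag(0.5 ** np.arange(r)))   # chi of a real diagonal matrix
chiA = random_quaternion_adjoint(rng, m, r) @ D @ random_quaternion_adjoint(rng, n, r).conj().T
sigma_A = np.linalg.svd(chiA, compute_uv=False)[::2]
chiPsi = random_quaternion_adjoint(rng, n, l)
ratios = {q: sketch_singular_values(chiA, chiPsi, q)[:k] / sigma_A[:k] for q in (0, 1, 2)}
for q, rat in ratios.items():
    print(q, np.round(rat, 4))
```

A practical implementation would re-orthonormalize between the multiplications when \(q>0\). Otherwise, rounding can wipe out the trailing directions.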

4.3.2 The Detailed Proof

Based on the preliminary results developed in Sect. 4.3.1, we give the proof of Theorems 4.9–4.10.

Proof of Theorem 4.9

Let \(A\in {\mathbb {Q}}^{m\times n}\) admit the SVD: \(A=U_\ell \Sigma _\ell V_\ell ^*\), where \(U_\ell \in {{\mathbb {Q}}}^{m\times \ell }\) and \(V_\ell \in {{\mathbb {Q}}}^{n\times \ell }\) have orthonormal columns and \(\Sigma _\ell ={\mathrm{diag}}(\sigma _1(A),\ldots ,\sigma _\ell (A))\in {{\mathbb {R}}}^{\ell \times \ell }\). Denote

By Lemma 4.3 and Lemma 4.14, we obtain, for any \(1\le j\le k\),

where \(\hat{\delta }_j=\big (1+\Vert \widehat{\Omega }_{2}\Vert _{2}^{2} \Vert \widehat{\Omega }_{1}^{\dag }\Vert _{2}^{2}\left( \frac{\sigma _{k+1}(A)}{\sigma _{j}(A)}\right) ^{4q+2}\big )^{1/2}.\) Using Lemmas 4.12–4.13 we have

with probability not less than \(1-\Delta \), where \(\Delta =\exp (-\alpha ^2/2)+\frac{\pi ^{-3}}{4(p+1)(2p+3)}\gamma ^{-4(p+1)}\). From (4.17) we have, for any \(1\le j\le k\),

with probability not less than \(1-\Delta \), where \(\delta _j=\left( 1+{\mathcal {C}}_{\alpha ,\gamma }^2\left( \frac{\sigma _{k+1}(A)}{\sigma _{j}(A)}\right) ^{4q+2}\right) ^{1/2}\). It follows from Theorem 4.5 and (4.18) with \(j=1\) that

with probability not less than \(1-\Delta \). \(\square \)
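The proof above rests on the event \(\Vert \widehat{\Omega }_{2}\Vert _{2}\Vert \widehat{\Omega }_{1}^{\dag }\Vert _{2}\le {\mathcal {C}}_{\alpha ,\gamma }\). Its pseudo-inverse factor comes from Lemma 4.13. The Monte Carlo sketch below samples \(k\times l\) quaternion Gaussian matrices through their complex adjoints and records \(\Vert \Omega ^{\dag }\Vert _2=1/\sigma _{\min }(\Omega )\). The forms of \({\mathcal {C}}_{\alpha ,\gamma }\) and \(\Delta \) suggest that Lemma 4.13 is applied with \(s=k\) and \(\ell =l=k+p\); the sketch assumes this reading. It uses \(e\sqrt{4l+2}\,\gamma /(4(p+1))\) as the threshold. The parameter values are illustrative.

```python
import numpy as np

rng = np.random.default_rng(4)
k, p, gamma, trials = 10, 5, 1.5, 500
l = k + p                                   # assumed: l = k + p columns
# Assumed reading of Lemma 4.13 with s = k, ell = l: these are the factors that
# appear in C_{alpha,gamma} and Delta of Theorem 4.9.
threshold = np.e * np.sqrt(4 * l + 2) / (4 * (p + 1)) * gamma
tail = np.pi ** -3 / (4 * (p + 1) * (2 * p + 3)) * gamma ** (-4 * (p + 1))

pinv_norms = np.empty(trials)
for t in range(trials):
    W0, W1, W2, W3 = (rng.standard_normal((k, l)) for _ in range(4))
    A, B = W0 + 1j * W1, W2 + 1j * W3
    chi = np.block([[A, B], [-B.conj(), A.conj()]])          # complex adjoint, 2k x 2l
    # For full row rank, ||Omega^+||_2 = 1 / sigma_min(Omega) = 1 / sigma_min(chi).
    pinv_norms[t] = 1.0 / np.linalg.svd(chi, compute_uv=False)[-1]
print(f"empirical P(||Omega^+||_2 > {threshold:.3f}) = {np.mean(pinv_norms > threshold):.4f}, "
      f"Delta term = {tail:.2e}")
```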

Proof of Theorem 4.10

By Theorem 4.4 and (4.17) we have, for any \(1\le j\le s\),

with probability not less than \(1-\Delta \).

On the other hand, using Theorem 4.4 and (4.17) again we have, for any \(1\le j\le k-s\),

\(\square \)

Finally, we have the following corollary from Theorem 4.10.

Corollary 4.15

Under the same assumptions as in Theorem 4.10, if \(s>k\), then

with probability not less than \(1-\Delta \) for all \(j=1,\ldots ,k\). In particular, if \(s=k\), then

with probability not less than \(1-\Delta \) for all \(j=1,\ldots ,k\).

Remark 4.16

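From Theorems 4.9–4.10 and Corollary 4.15, we see the following. If the numerical rank of A is well defined, i.e., \(\sigma _k(A)\gg \sigma _{k+1}(A)\) [46], then \(\delta _j\) is close to 1 for \(j=1,2,\ldots , k\). With high probability, the error bounds then become sharper whenever there is a significant gap in the singular values of B. Moreover, the ratio \(\sigma _{k+1}(A)/\sigma _j(A)\le 1\) enters \(\delta _j\) with exponent \(4q+2\), so increasing q also drives \(\delta _j\) toward 1.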
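The effect described in Remark 4.16 is easy to see numerically. The sketch below evaluates \(\delta _j\) from its definition for a few ratios \(\sigma _{k+1}(A)/\sigma _j(A)\) and for \(q=0,\ldots ,3\). The value \(C=300\) is an assumption. It is roughly what the formula for \({\mathcal {C}}_{\alpha ,\gamma }\) in Theorem 4.9 gives for \(m=n=400\), \(k=30\), \(p=5\), \(\alpha =3\), \(\gamma =2\).

```python
import math


def delta_j(C, ratio, q):
    """delta_j = sqrt(1 + C^2 * ratio^(4q+2)), where ratio = sigma_{k+1}(A) / sigma_j(A)."""
    return math.sqrt(1 + C ** 2 * ratio ** (4 * q + 2))


C = 300.0   # assumed size of C_{alpha,gamma}; its formula is given in Theorem 4.9
for ratio in (0.5, 0.25, 0.1):
    print(ratio, [round(delta_j(C, ratio, q), 4) for q in range(4)])
```

Even with a large constant, one or two power iterations make \(\delta _j\) close to 1 once the gap ratio is moderately small.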

5 Numerical Experiments

In this section, we report numerical results on the performance of Algorithm 3.2 for computing low-rank quaternion approximations. All numerical experiments are carried out in MATLAB R2019b on a laptop with an Intel(R) CPU i5-10210U (1.60 GHz) and 8 GB of RAM. To improve efficiency, we implement Algorithm 3.2 via real structure-preserving operations, as described in Sect. 3.2. As noted in Remark 3.2, the approximate orthonormal basis of the range of \(A^*\) in Algorithm 3.2 (i.e., V in Step 7) can be computed via either the QHQR method or the QMGS method.

For Algorithms 3.1 and 3.2, the relative matrix approximation errors are given by

and the absolute and relative singular value approximation errors are measured by

respectively. Unless otherwise specified, we set the oversampling parameter \(p=5\) and the power iteration parameter \(q=0\).
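The error measures can be sketched under the usual definitions, which are assumed here:

- a relative error \(\Vert A-{\widehat{A}}\Vert /\Vert A\Vert \) in a norm to be chosen;
- absolute and relative singular value errors \(|\sigma _j(A)-{\hat{\sigma }}_j|\) and \(|\sigma _j(A)-{\hat{\sigma }}_j|/\sigma _j(A)\).

The function names are hypothetical. For quaternion matrices, both matrix-error ratios are unchanged if A and \({\widehat{A}}\) are replaced by their complex adjoints, since \(\Vert \chi (X)\Vert _F=\sqrt{2}\Vert X\Vert _F\) and \(\Vert \chi (X)\Vert _2=\Vert X\Vert _2\).

```python
import numpy as np


def relative_matrix_error(A, A_hat, ord="fro"):
    """||A - A_hat|| / ||A|| in the chosen matrix norm ("fro" or 2)."""
    return np.linalg.norm(A - A_hat, ord) / np.linalg.norm(A, ord)


def singular_value_errors(sigma, sigma_hat):
    """Absolute errors |sigma_j - sigma_hat_j| and the same errors divided by sigma_j."""
    sigma = np.asarray(sigma, dtype=float)
    sigma_hat = np.asarray(sigma_hat, dtype=float)
    abs_err = np.abs(sigma - sigma_hat)
    return abs_err, abs_err / sigma


abs_err, rel_err = singular_value_errors([1.0, 0.5, 0.25], [0.9, 0.5, 0.2])
print(abs_err, rel_err)
```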

Example 5.1 first considers test matrices with repeated positive singular values.

Example 5.1

We consider a synthetic quaternion data matrix \(A=U_n\Sigma V_n^*\in {\mathbb {Q}}^{n\times n}\). The unitary matrices \(U_n, V_n\in {\mathbb {Q}}^{n\times n}\) are generated by the QSVD of an \(n\times n\) random quaternion matrix using the QTFM toolbox, and \(\Sigma \) takes one of the following forms:

- Polynomially decaying spectrum (pds):

$$\begin{aligned} \Sigma ={\mathrm{diag}}(\underbrace{1,\ldots ,1}_{t},2^{-s},3^{-s},\ldots ,(n-t+1)^{-s}); \end{aligned}$$

- Exponentially decaying spectrum (eds):

$$\begin{aligned} \Sigma ={\mathrm{diag}}(\underbrace{1,\ldots ,1}_{t},2^{-s},2^{-2s},\ldots ,2^{-(n-t)s}), \end{aligned}$$

where \(t>0\) controls the numerical rank of the matrix and \(s>0\) controls the singular value decay rate. We report numerical results for \(n=400\), with \(s=2\), \(t=30\) for pds and \(s=0.25\), \(t=30\) for eds.
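The two spectra are straightforward to generate. The sketch below builds the diagonals of \(\Sigma \) for pds and eds with the parameters used here. It does not reproduce the generation of \(U_n\) and \(V_n\) through QTFM, and the function names are hypothetical.

```python
import numpy as np


def pds(n, t, s):
    """Polynomially decaying diagonal: t ones, then 2^-s, 3^-s, ..., (n-t+1)^-s."""
    return np.concatenate([np.ones(t), np.arange(2, n - t + 2, dtype=float) ** -s])


def eds(n, t, s):
    """Exponentially decaying diagonal: t ones, then 2^-s, 2^-2s, ..., 2^-(n-t)s."""
    return np.concatenate([np.ones(t), 2.0 ** (-s * np.arange(1, n - t + 1))])


sig_pds = pds(400, 30, 2)
sig_eds = eds(400, 30, 0.25)
print(sig_pds[0] / sig_pds[-1], sig_eds[0] / sig_eds[-1])   # condition numbers
```

With these parameters, the condition numbers are \(371^2\approx 1.4\times 10^5\) for pds and \(2^{92.5}\approx 7\times 10^{27}\) for eds. The latter is far beyond the reciprocal of the double-precision unit roundoff. This is consistent with the difficulties of Gram-Schmidt-based orthogonalization on the eds matrix reported in Sect. 5.2.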

5.1 Comparison of Running Time

Table 1 lists the running time (in seconds) of Algorithms 3.1 and 3.2 with different target ranks for one of the test matrices in Example 5.1. Algorithm 3.1 is deterministic and obtains its low-rank approximation by truncation, so its running time does not depend on the target rank. Table 1 shows that the running time of Algorithm 3.2 increases with the target rank k. It also shows that Algorithm 3.2 is much faster than Algorithm 3.1.

5.2 Comparison of Matrix Approximation Error

Figure 1 shows the relative matrix approximation error of Algorithms 3.1 and 3.2 with different target ranks for one of the test matrices in Example 5.1. Fig. 1 (pds) shows that, for both Algorithm 3.1 and Algorithm 3.2, the matrix approximation error decreases as the target rank increases. Fig. 1 (eds) shows that both Algorithm 3.1 and Algorithm 3.2 with QHQR work effectively. In contrast, Algorithm 3.2 with QMGS performs noticeably worse. Moreover, there is no significant difference in matrix approximation error between Algorithm 3.2 and Algorithm 3.1 with QHQR. Overall, the experiments indicate that QHQR is more stable than QMGS, especially for extremely ill-conditioned matrices.
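The stability gap between QHQR and QMGS mirrors a classical fact in real arithmetic. Householder QR produces a Q that is orthonormal to working precision regardless of conditioning. By contrast, the loss of orthogonality of modified Gram-Schmidt grows roughly like \(\kappa (A)u\), where u is the unit roundoff. The real-valued sketch below illustrates this on a matrix with \(\kappa (A)=10^{10}\). It does not implement the quaternion QHQR or QMGS routines. Here `np.linalg.qr` (LAPACK Householder QR) stands in for QHQR.

```python
import numpy as np


def mgs(A):
    """Modified Gram-Schmidt (real arithmetic); returns Q with A = Q R."""
    A = np.array(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    for j in range(n):
        v = A[:, j].copy()
        for i in range(j):                  # project out q_i from the *updated* v
            v -= (Q[:, i] @ v) * Q[:, i]
        Q[:, j] = v / np.linalg.norm(v)
    return Q


rng = np.random.default_rng(6)
m, n = 100, 40
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = U @ np.diag(np.logspace(0, -10, n)) @ V.T       # condition number 1e10

losses = {}
for name, Q in (("MGS", mgs(A)), ("Householder", np.linalg.qr(A)[0])):
    losses[name] = np.linalg.norm(np.eye(n) - Q.T @ Q, 2)
    print(f"{name:12s} ||I - Q^T Q||_2 = {losses[name]:.1e}")
```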

Fig. 1 Relative matrix approximation error for Example 5.1

5.3 Comparison of Singular Value Approximation Error

Figures 2 and 3 display the singular value approximation errors of Algorithms 3.1 and 3.2 for one of the test matrices in Example 5.1. In these figures, “Index” denotes the singular value index, which runs from 1 to the target rank \(k=190\). Figs. 2 and 3 show that the singular value approximation errors of Algorithm 3.2 are comparable to those of Algorithm 3.1 in most cases, and smaller in some cases. Fig. 3 further shows that, in terms of relative error, Algorithm 3.2 is much more accurate than Algorithm 3.1 for most of the top 30 singular values of the test matrix. This may be because the first \(t=30\) singular values of the test matrix are all equal to 1.

Fig. 2 Absolute singular value error for Example 5.1

Fig. 3 Relative error of top 30 singular values for Example 5.1

Next, we give a numerical example with distinct positive singular values.

Example 5.2

In this example, we construct a test matrix with distinct positive singular values \( A=U\Sigma V^*\in {\mathbb {Q}}^{m\times n} (m\ge n), \) where the unitary matrices \(U\in {\mathbb {Q}}^{m\times m}\) and \(V\in {\mathbb {Q}}^{n\times n}\) are generated by the QSVD of two random quaternion matrices using the toolbox QTFM, and \(\Sigma =[\Sigma _1,0]^T\) with \(\Sigma _1={\mathrm{diag}}(\sigma _1(A), \ldots , \sigma _n(A))\). Here, \(\sigma _1(A)=1\), \(\frac{\sigma _{i+1}(A)}{\sigma _i(A)}=0.9\) for \(i=1, \ldots , n-1\). We report our numerical results for \(m=600\) and \(n=400\).

Fig. 4 Absolute and relative singular value error for Example 5.2

Figure 4 shows the absolute and relative singular value approximation errors of Algorithms 3.1 and 3.2 for the test matrix in Example 5.2. Fig. 4 shows no significant difference between the two algorithms in singular value approximation error. Further study is needed to explain why Algorithm 3.2 outperforms Algorithm 3.1 for matrices with repeated positive singular values, as in Example 5.1.

5.4 Application in Image Processing

In this subsection, we apply Algorithm 3.2 to image compression.

Example 5.3

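We consider the standard \(300\times 300\) test image “Building”. Its (i, j) pixel is represented by the pure quaternion

$$\begin{aligned} a_{ij}=r_{ij}{\mathbf {i}}+g_{ij}{\mathbf {j}}+b_{ij}{\mathbf {k}}, \end{aligned}$$

where \(r_{ij}\), \(g_{ij}\) and \(b_{ij}\) are the red, green and blue pixel values at location (i, j), respectively.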
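The color-image model can be sketched under the following assumptions. The image is held as three real arrays R, G, B that form the pure quaternion \(R{\mathbf {i}}+G{\mathbf {j}}+B{\mathbf {k}}\). The rank-k truncated QSVD is computed through the complex adjoint \(\chi \), not by Algorithm 3.1 or 3.2. When \(\sigma _k>\sigma _{k+1}\), the rank-2k truncation of \(\chi (A)\) equals \(\chi (A_k)\), so the quaternion components can be read back from its first block row. The random array stands in for an image, and all function names are hypothetical.

```python
import numpy as np


def to_adjoint(X0, X1, X2, X3):
    """Complex adjoint chi(X) of X = X0 + X1 i + X2 j + X3 k = (X0 + X1 i) + (X2 + X3 i) j."""
    A, B = X0 + 1j * X1, X2 + 1j * X3
    return np.block([[A, B], [-B.conj(), A.conj()]])


def from_adjoint(C, m, n):
    """Read the components (X0, X1, X2, X3) back from the first block row of chi(X)."""
    A, B = C[:m, :n], C[:m, n:]
    return A.real, A.imag, B.real, B.imag


def truncated_qsvd(R, G, B, k):
    """Rank-k approximation of the pure quaternion image R i + G j + B k.

    If sigma_k > sigma_{k+1}, the rank-2k truncation of chi(A) equals chi(A_k).
    """
    m, n = R.shape
    U, s, Vh = np.linalg.svd(to_adjoint(np.zeros_like(R), R, G, B), full_matrices=False)
    C = (U[:, :2 * k] * s[:2 * k]) @ Vh[:2 * k]
    return from_adjoint(C, m, n)      # (X0, X1, X2, X3); X0 is usually dropped for display


rng = np.random.default_rng(7)
R, G, B = (rng.random((20, 16)) for _ in range(3))   # stand-in for an RGB image
errors = {}
for k in (2, 4, 8, 16):
    X0, X1, X2, X3 = truncated_qsvd(R, G, B, k)
    errors[k] = np.sqrt(np.sum(X0 ** 2) + np.sum((X1 - R) ** 2)
                        + np.sum((X2 - G) ** 2) + np.sum((X3 - B) ** 2))
    print(k, round(errors[k], 4))
```

The approximation \(A_k\) generally has a small nonzero real part. The sketch assumes it is discarded when the image is displayed.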

Figure 5 displays the reconstructed images via Algorithm 3.1 and Algorithm 3.2 with QHQR for \(k=20, 40, 60, 80\). Fig. 6 shows the reconstructed image quality for different target ranks, where we use the peak signal-to-noise ratio defined by

where \(A\in {{\mathbb {Q}}}^{m\times n}\) denotes the original image, \({\widehat{A}}\) denotes the reconstructed image, and \(\max (A)\) represents the maximum pixel value of the original image.
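A PSNR helper is sketched below. It assumes the common color-image form \(10\log _{10}\big (3mn\max (A)^2/\Vert A-{\widehat{A}}\Vert _F^2\big )\), i.e., the squared peak value over the mean squared error across the three channels. The function name is hypothetical, and identical images (zero error) are not handled.

```python
import numpy as np


def psnr(original, reconstructed):
    """PSNR (dB) of a color image given as channel arrays (R, G, B).

    Assumed form: 10 log10(3 m n max(A)^2 / ||A - A_hat||_F^2), with the Frobenius
    norm taken over the three imaginary parts; a real part of A_hat is ignored.
    """
    A = np.stack(original).astype(float)
    A_hat = np.stack(reconstructed).astype(float)
    mse = np.mean((A - A_hat) ** 2)          # equals ||A - A_hat||_F^2 / (3 m n)
    return 10 * np.log10(A.max() ** 2 / mse)


# Every channel off by 0.1 at peak value 1: the MSE is 0.01, i.e. 20 dB.
ones = np.ones((2, 2))
print(psnr((ones, ones, ones), (ones - 0.1, ones - 0.1, ones - 0.1)))
```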

Figs. 5 and 6 show that, for the same target rank, the reconstructed image quality of Algorithm 3.2 is very close to that of Algorithm 3.1. They also show that the quality improves as the target rank increases. To illustrate the effect of the parameter q in Algorithm 3.2, Fig. 6 also shows the quality of the images reconstructed by Algorithm 3.2 for \(q = 0,1,2,3\). We see from Fig. 6 that the image quality of Algorithm 3.2 with \(q=1,2\) or 3 is much better than that of Algorithm 3.1. We also see that \(q=1\) produces significantly better image quality than \(q = 0\), while \(q=2,3\) bring no essential further improvement. Each additional power iteration adds computational cost, so we suggest \(q = 1\).

5.5 Application in Signal Denoising Problem

In this subsection, we apply Algorithm 3.2 to the signal denoising problem.

Example 5.4

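[48] The Lorenz system was first studied as a simplified model of atmospheric convection, and its solutions give rise to the Lorenz attractor. It is a three-dimensional nonlinear system of coupled ordinary differential equations:

$$\begin{aligned} \frac{dx}{dt}=\phi (y-x),\qquad \frac{dy}{dt}=x(\psi -z)-y,\qquad \frac{dz}{dt}=xy-\omega z, \end{aligned}$$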
where \(\phi , \psi , \omega >0\). To obtain chaotic behavior, we set \(\phi =10\), \(\psi =28\) and \(\omega =8/3\). In our experiment, the Lorenz system is solved by the MATLAB built-in function ode45(f(t, [x, y, z]), [0, 40], [12, 4, 0]), where \(x,y,z\in {\mathbb {R}}^{2001}\). For convenience, we take \(x_1=x_r(401:800)\), \(x_2=x_g(401:800)\), \(x_3=x_b(401:800)\) as the three true signals, where \(x_r\), \(x_g\) and \(x_b\) are the three components of the solution of the Lorenz system. We add white Gaussian noise

by the MATLAB built-in function awgn, with the signal-to-noise ratio set to \(snr=5\) (in dB). For a signal \(x=[x(1),x(2),\cdots ,x(N)]^T\), we construct a Hankel matrix as follows:

where \(s=\lfloor N/2\rfloor \). In the same way, we construct Hankel matrices \(Y_1, Y_2, Y_3\) from the signals \(y_1\), \(y_2\) and \(y_3\), respectively. We then form the pure quaternion noisy signal matrix

$$\begin{aligned} A=Y_1{\mathbf {i}}+Y_2{\mathbf {j}}+Y_3{\mathbf {k}}. \end{aligned}$$
The original and noisy signals are shown in Fig. 7(a). Fig. 7(b) shows that, apart from the few largest singular values, the singular values decrease slowly. Therefore, we choose the target rank k such that the cumulative contribution ratio of the k largest singular values reaches 80%. Noise can often be removed effectively by truncating the SVD of the noisy signal matrix. We therefore denoise the signal by applying the truncated QSVD and Algorithm 3.2 with \(q=1\) to the noisy signal matrix A; the recovered signals are shown in Fig. 8. Fig. 8 shows that Algorithm 3.2 is nearly as effective as the truncated QSVD, while being more economical as a randomized algorithm.
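The denoising pipeline can be sketched for one real channel. The sketch makes four assumptions:

- the Hankel layout \(H(i,j)=x(i+j-1)\) with \(s=\lfloor N/2\rfloor \) rows;
- a cumulative contribution ratio computed from the singular values themselves (some authors use their squares);
- anti-diagonal averaging to map the low-rank matrix back to a signal;
- a real SVD in place of the truncated QSVD or Algorithm 3.2 applied to the quaternion matrix A.

All function names are hypothetical.

```python
import numpy as np


def hankel(x):
    """s x (N - s + 1) Hankel matrix H[i, j] = x[i + j] with s = floor(N / 2) (assumed layout)."""
    x = np.asarray(x, dtype=float)
    N, s = x.size, x.size // 2
    return np.array([x[i:i + N - s + 1] for i in range(s)])


def dehankel(H):
    """Map a matrix back to a signal by averaging its anti-diagonals."""
    s, c = H.shape
    total, count = np.zeros(s + c - 1), np.zeros(s + c - 1)
    for i in range(s):
        total[i:i + c] += H[i]
        count[i:i + c] += 1
    return total / count


def choose_rank(sigma, ratio=0.8):
    """Smallest k such that the k largest singular values reach `ratio` of their sum."""
    share = np.cumsum(sigma) / np.sum(sigma)
    return int(np.searchsorted(share, ratio)) + 1


def denoise(y, ratio=0.8):
    """Real, single-channel stand-in: Hankel matrix -> truncated SVD -> signal."""
    U, s, Vh = np.linalg.svd(hankel(y), full_matrices=False)
    k = choose_rank(s, ratio)
    return dehankel((U[:, :k] * s[:k]) @ Vh[:k]), k


x = np.sin(0.1 * np.arange(400))        # its Hankel matrix has rank 2
x_rec, k = denoise(x)
print(k, np.max(np.abs(x_rec - x)))
```

The noiseless sinusoid serves as a sanity check of the mapping. For real noisy data, the 80% threshold controls how much of the slowly decaying noise spectrum is kept.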

6 Conclusions

In this paper, we have presented a randomized quaternion QLP decomposition algorithm for computing a low-rank approximation to a quaternion data matrix, and we have analyzed its matrix approximation error. To study the singular value approximation error, we first established convergence results for the quaternion QLP decomposition. These results give slightly tighter bounds than the existing ones for the real QLP decomposition. Based on this convergence analysis and quaternion probability theory, we then derived error bounds for the singular value approximation error of the proposed randomized algorithm. These theoretical results confirm that the proposed randomized algorithm can approximate the prescribed largest singular values of the data matrix with high probability. Numerical experiments, including applications to color image compression and signal denoising, illustrate the effectiveness of the proposed algorithm.

Data Availability Statement

All data generated or analysed during this study are included in this manuscript.

References

Chen, Y.Y., Guo, Y.W., Wang, Y.L., Wang, D., Peng, C., He, G.P.: Denoising of hyperspectral images using nonconvex low rank matrix approximation. IEEE Trans. Geosci. Remote Sens. 55, 5366–5380 (2017)

Ren, W.Q., Cao, X.C., Pan, J.S., Guo, X.J., Zuo, W.M., Yang, M.-H.: Image deblurring via enhanced low-rank prior. IEEE Trans. Image Process. 25, 3426–3437 (2016)

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53, 217–288 (2011)

Rokhlin, V., Szlam, A., Tygert, M.: A randomized algorithm for principal component analysis. SIAM J. Matrix Anal. Appl. 31, 1100–1124 (2009)

Muller, N., Magaia, L., Herbst, B.M.: Singular value decomposition, eigenfaces, and 3D reconstructions. SIAM Rev. 46, 518–545 (2004)

Martinsson, P.G.: A fast randomized algorithm for computing a hierarchically semi-separable representation of a matrix. SIAM J. Matrix Anal. Appl. 32, 1251–1274 (2011)

Xia, J.L., Gu, M.: Robust approximate Cholesky factorization of rank-structured symmetric positive definite matrices. SIAM J. Matrix Anal. Appl. 31, 2899–2920 (2010)

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1, 211–218 (1936)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Gu, M.: Subspace iteration randomization and singular value problems. SIAM J. Sci. Comput. 37, A1139–A1173 (2015)

Mahoney, M.: Randomized algorithms for matrices and data. Found. Trends Mach. Learn. 3, 123–224 (2011)

Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Practical sketching algorithms for low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 38, 1454–1485 (2017)

Woodruff, D.P.: Sketching as a tool for numerical linear algebra. Found. Trends Theor. Comput. Sci. 10, 1–157 (2014)

Shabat, G., Shmueli, Y., Aizenbud, Y., Averbuch, A.: Randomized LU decomposition. Appl. Comput. Harmon. Anal. 44, 246–272 (2018)

Duersch, J.A., Gu, M.: Randomized QR with column pivoting. SIAM J. Sci. Comput. 39, C263–C291 (2017)

Xiao, J.W., Gu, M., Langou, J.: Fast parallel randomized QR with column pivoting algorithms for reliable low-rank matrix approximations. 2017 IEEE 24th International Conference on High Performance Computing (HiPC), IEEE. 233–242 (2017)

Duersch, J.A., Gu, M.: Randomized projection for rank-revealing matrix factorizations and low-rank approximations. SIAM Rev. 62, 661–682 (2020)

Wu, N.C., Xiang, H.: Randomized QLP decomposition. Linear Algebra Appl. 599, 18–35 (2020)

Hamilton, W.R.: Elements of quaternions. Chelsea, New York (1969)

Scolarici, G., Solombrino, L.: Notes on quaternionic groups representation. Int. J. Theor. Phys. 34, 2491–2500 (1995)

Bihan, N.L., Sangwine, S.J.: Quaternion principal component analysis of color images. IEEE International Conference on Image Processing 1, 809–812 (2003)

Jia, Z.G., Ng, M.K., Song, G.J.: Robust quaternion matrix completion with applications to image inpainting. Numer. Linear Algebra Appl. 26, e2245 (2019)

Jia, Z.G., Ng, M.K., Wang, W.: Color image restoration by saturation-value total variation. SIAM J. Imaging Sci. 12, 972–1000 (2019)

Sangwine, S.J., Bihan, N.L.: Quaternion and octonion toolbox for Matlab. http://qtfm.sourceforge.net/

Bunse-Gerstner, A., Byers, R., Mehrmann, V.: A quaternion QR algorithm. Numer. Math. 55, 83–95 (1989)

Sangwine, S.J., Bihan, N.L.: Quaternion singular value decomposition based on bidiagonalization to a real or complex matrix using quaternion Householder transformations. Appl. Math. Comput. 182, 727–738 (2006)

Li, Y., Wei, M.S., Zhang, F.X., Zhao, J.L.: A real structure-preserving method for the quaternion LU decomposition, revisited. Calcolo 54, 1553–1563 (2017)

Wang, M.H., Ma, W.H.: A structure-preserving method for the quaternion LU decomposition in quaternionic quantum theory. Comput. Phys. Comm. 184, 2182–2186 (2013)

Li, Y., Wei, M.S., Zhang, F.X., Zhao, J.L.: Real structure-preserving algorithms of Householder based transformations for quaternion matrices. J. Comput. Appl. Math. 305, 82–91 (2016)

Jia, Z.G., Wei, M.S., Zhao, M.-X., Chen, Y.: A new real structure-preserving quaternion QR algorithm. J. Comput. Appl. Math. 343, 26–48 (2018)

Li, Y., Wei, M.S., Zhang, F.X., Zhao, J.L.: A fast structure-preserving algorithms for computing the singular value decomposition of quaternion matrix. Appl. Math. Comput. 235, 157–167 (2014)

Wang, M.H., Ma, W.H.: A structure-preserving algorithm for the quaternion Cholesky decomposition. Appl. Math. Comput. 223, 354–361 (2013)

Chen, Y.Y., Xiao, X.L., Zhou, Y.C.: Low-rank quaternion approximation for color image processing. IEEE Trans. Image Process. 29, 1426–1439 (2020)

Bahia, B., Sacchi, M.D.: Quaternionic rank-reduction methods for vector-field seismic data processing. Digit. Signal Process. 87, 178–189 (2019)

Liu, Q.H., Jia, Z.G., Ling, S.T.: Randomized quaternion singular value decomposition for low-rank approximation. SIAM J. Sci. Comput. 44, A870–A900 (2022)

Stewart, G.W.: The QLP approximation to the singular value decomposition. SIAM J. Sci. Comput. 20, 1336–1348 (1999)

Huckaby, D.A., Chan, T.F.: On the convergence of Stewart’s QLP algorithm for approximating the SVD. Numer. Algorithms 32, 287–316 (2003)

Jia, Z.G., Ng, M.K.: Structure preserving quaternion generalized minimal residual method. SIAM J. Matrix Anal. Appl. 42, 616–634 (2021)

Ma, R.-R., Jia, Z.G., Bai, Z.-J.: A structure-preserving Jacobi algorithm for quaternion Hermitian eigenvalue problems. Comput. Math. Appl. 75, 809–820 (2018)

Jia, Z.G., Wei, M.S., Ling, S.T.: A new structure-preserving method for quaternion Hermitian eigenvalue problems. J. Comput. Appl. Math. 239, 12–24 (2013)

Zhang, F.Z.: Quaternions and matrices of quaternions. Linear Algebra Appl. 251, 21–57 (1997)

Mahoney, M.W., Duchi, J.C., Gilbert, A.C.: The Mathematics of Data. AMS, IAS/Park City Mathematics Institute, and SIAM (2018)

Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

Bernstein, D.S.: Matrix Mathematics: Theory, Facts, and Formulas, 2nd edn. Princeton University Press, Princeton and Oxford (2009)

Higham, N.J.: Analysis of the Cholesky decomposition of a semi-definite matrix. In: Cox, M.G., Hammarling, S.J. (eds.) Reliable Numerical Computation, pp. 161–185. Oxford University Press, Oxford (1990)

Meier, M., Nakatsukasa, Y.: Fast randomized numerical rank estimation. preprint, arXiv: 2105.07388

Mathias, R., Stewart, G.W.: A block QR algorithm and singular value decomposition. Linear Algebra Appl. 182, 91–100 (1993)

Strogatz, S.H.: Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering. Westview Press, Boulder, CO (2001)

Acknowledgements

The authors are grateful to the handling editor and three anonymous referees for their useful comments and suggestions, which greatly improved the original presentation.

Funding

Not applicable.


Ethics declarations

Competing Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Ren, H., Ma, RR., Liu, Q. et al. Randomized Quaternion QLP Decomposition for Low-Rank Approximation. J Sci Comput 92, 80 (2022). https://doi.org/10.1007/s10915-022-01917-5


DOI: https://doi.org/10.1007/s10915-022-01917-5