Abstract

We develop and analyze a new hybridizable discontinuous Galerkin method for solving third-order Korteweg–de Vries type equations. The approximate solutions are defined by a discrete version of a characterization of the exact solution in terms of the solutions to local problems on each element, which are patched together through transmission conditions on element interfaces. We prove that the semi-discrete scheme is stable with proper choices of the stabilization function in the numerical traces. For the linearized equation, we carry out an error analysis and show that the approximations to the exact solution and its derivatives have optimal convergence rates. In numerical experiments, we use an implicit scheme for time discretization and the Newton–Raphson method for solving systems of nonlinear equations, and we observe optimal convergence rates for both the linear and the nonlinear third-order equations.

1 Introduction

In this paper, we develop and analyze a new hybridizable discontinuous Galerkin (HDG) method for the following initial-boundary value problem of the Korteweg–de Vries (KdV) type equation on a finite domain

Here \(f \in L^2(\Omega )\) and \(F(u)=\beta u^m\), where \(\beta \) is a constant and \(m\ge 0\) is an integer. The well-posedness of the problem (1.1) and the properties of its solution have been studied both theoretically and numerically; see [3,4,5, 17, 18, 30] and references therein.

KdV type equations play an important role in applications such as fluid mechanics [7, 25, 26], nonlinear optics [1, 19], acoustics [28, 33], plasma physics [6, 29, 32, 37], and Bose–Einstein condensates [21, 31], among other fields. They have also had an enormous impact on the development of nonlinear mathematical science and theoretical physics; basic research on KdV equations opened up several modern areas, such as soliton theory and the inverse scattering transform. Owing to their importance in applications and theoretical studies, there has been great interest in developing accurate and efficient numerical methods for KdV equations. In particular, there has been an ongoing effort over the last decade to develop discontinuous Galerkin (DG) methods for KdV type equations. The first DG method for the KdV equation, the local discontinuous Galerkin (LDG) method, was introduced in 2002 by Yan and Shu [36] and was further studied for the linear case in [20, 23, 34, 35]. In [10], a DG method for the KdV equation was devised by using repeated integration by parts. Recently, several conservative DG methods [2, 9, 22] were developed for KdV type equations to preserve quantities such as the mass and the \(L^2\)-norm of the solutions. When solving KdV equations, these DG methods can be used for spatial discretization together with explicit time-marching schemes if the coefficient of the third-order derivative is very small. However, when this coefficient is of order one, the time-step restriction of explicit schemes (typically \(\Delta t = O(h^3)\) for third-order problems) becomes severe, and implicit time-marching methods are often the methods of choice.

Traditional DG methods, despite prominent features such as hp-adaptivity and local conservativity, have been criticized for having a larger number of degrees of freedom than continuous finite element methods when solving steady-state problems or problems that require implicit-in-time solvers. Here, we develop an HDG method that is well suited for solving KdV equations with implicit time-marching. HDG methods [11, 13,14,15] were first introduced for diffusion problems, and they provide optimal approximations to both the potential and the flux. Since their only globally coupled degrees of freedom live on element interfaces, they are particularly advantageous for steady-state problems and for time-dependent problems that require implicit time-marching. In [8], we introduced the first family of HDG methods for stationary third-order linear equations, which allow the approximations to the exact solution u and its derivatives \(u_x\) and \(u_{xx}\) to have different polynomial degrees. We proved superconvergence properties of these methods for the projections of the errors and for the numerical traces. The numerical results there indicate that the HDG method using the same polynomial degree k for all three variables is quite robust with respect to the choice of the stabilization function and, with the fewest degrees of freedom, provides a postprocessed solution converging with order \(2k+1\). This suggests that the HDG method using the same polynomial degree for all variables is the method of choice for one-dimensional third-order problems. Therefore, in this paper we extend this HDG method to time-dependent third-order KdV type equations.

To construct the HDG method for KdV equations, we follow the approach used in [8] for stationary third-order equations. That is, given any mesh of the domain, we show that the exact solution can be obtained by solving the equation on each element with boundary data determined by transmission conditions. We then define HDG methods as a discrete version of this characterization, which ensures that the only globally coupled degrees of freedom are those associated with the numerical traces on element interfaces. In [8], it was shown that HDG methods derived by providing boundary data to the local problems in different ways are equivalent to each other when the stabilization function is finite and nonzero. Hence, we only need to consider the method that takes the numerical trace of u at both ends of the interval and the numerical trace of \(u_{xx}\) at the right end as boundary data for the local problems. Our method differs from the HDG method in [27], which was designed from an implementation point of view: that method involves two sets of numerical traces for \(u_x\), and no error analysis was provided for it.

Devising HDG methods from the characterization of the exact solution allows us to carry out stability and error analysis. We first apply an energy argument to find conditions on the stabilization function in the numerical traces under which the HDG method has a unique solution for KdV type equations. Then, by deriving four energy identities and combining them, we prove that the method provides optimal approximations to u as well as its derivatives \(u_x\) and \(u_{xx}\) for linear equations; this technique is similar to that in [35]. In the implementation, implicit time-marching schemes such as BDF or DIRK methods can be used, and at each time step a stationary third-order equation is solved by the HDG method together with the Newton–Raphson method (see “Appendix”). Owing to the one-dimensional setting, the global matrix of the HDG method that must be inverted at each time step has size only \(2N+1\), independent of the polynomial degree of the approximations, where N is the number of intervals of the mesh. Its condition number is of order \(h^{-2}\), where h denotes the mesh size.
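The size \(2N+1\) quoted above can be recovered by counting the trace unknowns that the local problems take as data, namely \(\widehat{u}_{h\,i}\) for \(i=0,\ldots,N\) and \(\widehat{p}^{\,-}_{h\,i}\) for \(i=1,\ldots,N\). The Python sketch below does this count and, for comparison only, counts the element unknowns of a DG discretization that uses \(P_k\) for each of u, \(u_x\), and \(u_{xx}\). The comparison method and both function names are illustrative assumptions, and the count ignores any reduction obtained by imposing the boundary data.

```python
def hdg_global_unknowns(n_elements: int) -> int:
    """Trace unknowns coupled globally by the HDG method: u_hat at the
    N + 1 nodes plus the right-end trace p_hat^- on each of the N elements."""
    return (n_elements + 1) + n_elements      # = 2N + 1, independent of k


def dg_element_unknowns(n_elements: int, k: int) -> int:
    """Illustrative comparison: element unknowns for (u, q, p), each in P_k."""
    return 3 * (k + 1) * n_elements


# Usage: 100 elements with cubic polynomials (k = 3).
print(hdg_global_unknowns(100), dg_element_unknowns(100, 3))  # 201 1200
```

The HDG count does not depend on k because all element unknowns are eliminated locally; only the interface traces enter the global system.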

The paper is organized as follows. In Sect. 2, we define the HDG method for third-order KdV type equations and state and discuss our main results. The details of all the proofs are given in Sect. 3. We show numerical results in Sect. 4 and some concluding remarks in Sect. 5. The details on implementation of the method are in “Appendix”.

2 Main Results

In this section, we state and discuss our main results. We begin by describing the characterization of the exact solution of which the HDG method is a discrete version. We then introduce our HDG method for KdV type equations and state our stability result and optimal a priori error estimate.

2.1 Characterizations of the Exact Solution

To display the characterizations of the exact solution we are going to work with, let us first rewrite our third-order model equation as the following first-order system:

with the initial and boundary conditions

We partition the domain \(\Omega \) as

and introduce the set of the boundaries of its elements, \(\partial {\mathcal {T}}_h:=\{ \partial I_i{:}\,i=1,\ldots ,N\}\). We also set \(\mathscr {E}_h:=\{x_i\}_{i=0}^N,\,h_i = x_i - x_{i-1}\) and \(h:=\max _{1\le i\le N} h_i\).

It is known that, when f is smooth enough, the following holds: if we prescribe the values \(\{\widehat{u}_i\}_{i=0}^N\) and \(\{\widehat{p}_i\}_{i=1}^N\) and, for each \(i=1,\ldots ,N\), solve the local problem

then (P, Q, U) coincides with the solution (p, q, u) of (2.1) if and only if the transmission conditions

and the boundary conditions

are satisfied. There are other possible characterizations of the exact solution corresponding to different choices of boundary data for the local problem; see [8]. Note that for these characterizations, the boundary data of the local problems are the unknowns of a global problem obtained from the transmission conditions and boundary conditions, and the system of equations for the global unknowns is square.

2.2 HDG Method

To define our HDG method, we first introduce the finite element spaces to be used. We let the approximations \((u_h, q_h, p_h, \widehat{u}_h, \widehat{q}_h, \widehat{p}_h)\) to \((u|_\Omega ,q|_\Omega ,p|_\Omega ,u|_{\mathscr {E}_h},q|_{\mathscr {E}_h},p|_{\mathscr {E}_h})\) be in the space \(W_h^k\times W_h^k \times W_h^k \times L^2(\mathscr {E}_h)\times L^2(\partial {\mathcal {T}}_h) \times L^2(\partial {\mathcal {T}}_h)\) where

Here \(P_k(I_i)\) is the space of polynomials of degree at most k on the domain \(I_i\). For any function \(\zeta \) lying in \(L^2(\partial {\mathcal {T}}_h)\), we denote its values on \(\partial I_i:=\{x_{i-1}^+, x_i^-\}\) by \(\zeta (x_{i-1}^+)\) (or simply \(\zeta ^+_{i-1}\)) and \(\zeta (x_i^-)\) (or simply \(\zeta ^-_i\)). Note that \(\zeta (x_{i}^+)\) is not necessarily equal to \(\zeta (x_i^-)\). In contrast, for any \(\eta \) in the space \(L^2(\mathscr {E}_h)\), its value at \(x_i\), \(\eta (x_i)\) (or simply \(\eta _i\)), is uniquely defined; in this case, both \(\eta (x_i^-)\) and \(\eta (x_{i}^+)\) simply denote \(\eta (x_i)\).

To obtain the HDG formulation, we use a discrete version of the characterization of the exact solution. Assuming that the values \(\{\widehat{u}_{hi}\}_{i=0}^N\) and \(\{\widehat{p}^{\;-}_{hi}\}_{i=1}^N\) are given, for each \(i=1,\ldots ,N\), we solve a local problem on the element \(I_i\) by using a Galerkin method. To describe it, let us introduce the following notation. By \((\varphi , v)_{I_i}\), we denote the integral of \(\varphi \) times v on the interval \(I_i\), and by \(\left\langle \varphi ,v n\right\rangle _{\partial I_i}\) we simply mean the expression \(\varphi (x_i^-)v(x_i^-)n(x_i^-) +\varphi (x_{i-1}^+)v(x_{i-1}^+)n(x_{i-1}^+)\). Here n denotes the outward unit normal to \(I_i\): \(n(x_{i-1}^+):=-1\) and \(n(x_i^-):=1\).
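The boundary term \(\left\langle \varphi ,v n\right\rangle _{\partial I_i}\) is exactly what appears when integrating by parts on a single element, \((\varphi ', v)_{I_i} = \left\langle \varphi , v n\right\rangle _{\partial I_i} - (\varphi , v')_{I_i}\). The short Python sketch below evaluates both sides for arbitrary polynomials with Gauss–Legendre quadrature, using the sign convention \(n(x_{i-1}^+)=-1\), \(n(x_i^-)=1\). The function names are hypothetical and only meant to make the notation concrete.

```python
import numpy as np
from numpy.polynomial import Polynomial
from numpy.polynomial.legendre import leggauss


def element_integral(f, a, b, n_quad=10):
    """(f, 1)_I on I = (a, b) with an n_quad-point Gauss-Legendre rule."""
    xi, w = leggauss(n_quad)
    x = 0.5 * (b - a) * xi + 0.5 * (a + b)     # map [-1, 1] -> (a, b)
    return 0.5 * (b - a) * np.sum(w * f(x))


def boundary_bracket(phi, v, a, b):
    """<phi, v n>_{dI} = phi(b^-) v(b^-) n(b^-) + phi(a^+) v(a^+) n(a^+),
    with outward normals n(a^+) = -1 and n(b^-) = +1."""
    return phi(b) * v(b) * 1.0 + phi(a) * v(a) * (-1.0)


# Usage: integration by parts on one element I_i = (a, b) for polynomials.
phi = Polynomial([1.0, -2.0, 0.5, 3.0])   # arbitrary cubic
v = Polynomial([0.0, 1.0, 4.0])           # arbitrary quadratic
a, b = 0.2, 0.7
lhs = element_integral(lambda x: phi.deriv()(x) * v(x), a, b)
rhs = boundary_bracket(phi, v, a, b) - element_integral(
    lambda x: phi(x) * v.deriv()(x), a, b)
print(lhs, rhs)   # equal up to rounding
```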

On the element \(I_i=(x_{i-1}, x_i)\), we give f and the boundary data \(\widehat{u}_{h\, i-1}, \widehat{u}_{h\,i}\) and \(\widehat{p}^{-}_{h\, i}\) and take the HDG approximate solutions \((p_h,q_h,u_h)\in P_{k}(I_i)\times P_{k}(I_i)\times P_{k}(I_i)\) to be the solution of the equations

for all \((v, z, w)\,\in \,P_{k}(I_i)\times P_{k}(I_i) \times P_{k}(I_i)\), where the remaining undefined numerical traces are given by

The functions \(\tau _{qu}, \tau _{pu}, \tau _{qp}\), and \(\tau _F(\widehat{u}_h, u_h)\) are defined on \(\partial {\mathcal {T}}_h\) and are called the components of the stabilization function; they have to be properly chosen to ensure that the above problem has a unique solution. In particular, due to the nonlinearity of F, the function \(\tau _F(\cdot ,\cdot ){:}\,\partial \mathcal {T}_h\rightarrow \mathbb {R}\) can be nonlinear in terms of \(\widehat{u}_h\) and \(u_h\). In the case of \(F=0\), we simply take \(\tau _F=0\).

It remains to impose the transmission conditions

and the boundary conditions

Here, \([\![\zeta ]\!](x_i):=\zeta (x_i^-)-\zeta (x_i^+)\). This completes the definition of the HDG methods using the characterization of the exact solution. Note that this way of defining the HDG methods immediately provides a way to implement them.

On the other hand, the above presentation of the HDG methods is not very well suited for their analysis. Thus, we now rewrite it in a more compact form using the notation

Let

The approximation provided by the HDG method, \((u_h, q_h, p_h, \widehat{u}_h, \widehat{p}_h^{\,-})\), is the element of \(W_h^{k}\times W_h^{k} \times W_h^{k} \times M_h(u_D)\times \tilde{M}_h\) which solves the equations

and

for all \((v, z, w,\mu ,\chi )\,\in \,W_h^{k}\times W_h^{k} \times W_h^{k}\times \tilde{M}_h\times M_h(0)\), where, on \(\partial {\mathcal {T}}_h\), we have

It is not difficult to define HDG methods associated with other characterizations of the exact solution, but these methods are actually the same, provided that the corresponding stabilization function allows for the transition from one characterization to the other; see [8, 16]. In fact, the choice of characterization matters for the implementation of the HDG method rather than for its definition. The implementation of the HDG method (2.2) is discussed in the “Appendix”.

When the above scheme is discretized in time, we can choose the initial approximation (\(u_h^0, q_h^0, p_h^0, \widehat{u}_h^0, \widehat{p}_h^0\)) to be the HDG approximate solution of the stationary equation \(v + v_{xxx} + F(v)_x = g,\) where \(g=u_0+(u_0)_{xxx}+F(u_0)_x\) and \(u_0\) is the initial data of the time-dependent problem (1.1); see [8] for HDG methods for stationary third-order equations. The initial approximation \((u_h^0, q_h^0, p_h^0, \widehat{u}_h^0, \widehat{p}_h^{0})\) is the element of \(W_h^{k}\times W_h^{k} \times W_h^{k} \times M_h(u_D)\times \tilde{M}_h\) which solves the equations

for all \((v, z, w,\mu ,\chi )\,\in \,W_h^{k}\times W_h^{k} \times W_h^{k}\times \tilde{M}_h\times M_h(0)\), where \(\widehat{q}_h^0, \widehat{p}_h^0\), and \(\widehat{F}_h^0\) are defined in the same way as \(\widehat{q}_h, \widehat{p}_h\), and \(\widehat{F}_h\) in (2.2e). Note that the equations above are the same as those in (2.2) except for the third one. This way of choosing initial data for time-dependent problems by solving the corresponding stationary problems has been used in [9, 12].

Next, we present our stability result and a priori error estimate of the HDG method under some conditions on the stabilization function.

2.3 Stability

To discuss the \(L^2\)-stability of the HDG method, we let

We have the following stability result.

Theorem 2.1

Assume that \(u_D=q_N=0\). If the stabilization function satisfies

then for the HDG method (2.2), we have

Note that if the nonlinear term \(F=0\), then \(\tau _F =\tilde{\tau }=0\), and the condition (2.3) in the theorem above simplifies to

If \(F(u)\ne 0\), we just need to have \(\tau _F\ge \tilde{\tau }\) and take \(\tau _{qu}^\pm , \tau _{pu}^+\) and \(\tau _{qp}^-\) to satisfy (2.4). Since

where \(J(u_h, \widehat{u}_h)=[\min \{u_h,\widehat{u}_h\}, \max \{u_h,\widehat{u}_h\}]\), the stabilization function \(\tau _F\) satisfies the condition \(\tau _F\ge \tilde{\tau }\) if

For other choices of \(\tau _F\) that satisfy the condition \(\tau _F\ge \tilde{\tau }\), see [24].
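The sufficient condition on \(\tau _F\) is not displayed above. Assuming it takes the form \(\tau _F\ge \tfrac12\max _{s\in J(u_h,\widehat{u}_h)}|F'(s)|\), which is consistent with the choice \(\tau _F=3\) made for \(F(u)=3u^2\) in Numerical Experiment 2, the Python sketch below evaluates this lower bound for the model nonlinearity \(F(u)=\beta u^m\). The function name is hypothetical.

```python
def tau_f_lower_bound(beta: float, m: int, u_h: float, u_hat: float) -> float:
    """Return 0.5 * max_{s in J} |F'(s)| for F(s) = beta * s**m, where
    J = [min(u_h, u_hat), max(u_h, u_hat)] (assumed sufficient condition)."""
    if m == 0:
        return 0.0  # F is constant, so F' vanishes
    # |F'(s)| = |beta| * m * |s|**(m - 1) grows with |s| for m >= 1, and the
    # largest |s| on an interval is attained at one of its endpoints.
    s_max = max(abs(u_h), abs(u_hat))
    return 0.5 * abs(beta) * m * s_max ** (m - 1)


# Usage: F(u) = 3u^2 with |u| <= 1 reproduces tau_F = 3 from Experiment 2.
print(tau_f_lower_bound(3.0, 2, 1.0, -0.5))  # 3.0
```

Evaluating the bound pointwise at each element boundary gives a value of \(\tau _F\) that adapts to the local size of the solution instead of a single global constant.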

2.4 A Priori Error Estimate for Linear Equations

Now we consider the convergence properties of our HDG method for linear equations in which \(F=0\). We proceed as follows. We first define an auxiliary projection and state its optimal approximation property. Then, we provide an estimate for the \(L^2\)-norm of the projections of the errors in the primary and auxiliary variables.

Let us introduce a key auxiliary projection that is tailored to the numerical traces. The projection of the function \((u,q,p)\in H^1(\mathcal {T}_h)\times H^1(\mathcal {T}_h)\times H^1(\mathcal {T}_h),\,\varPi (u, q, p) := (\varPi u, \varPi {q},\varPi p)\), is defined as follows. On an element \({I_i}=(x_{i-1}, x_i)\), the projection is the element of \({{ P }}_{k}({I_i})\times { { P }}_{k}({I_i})\times P_{k}({I_i})\) which solves the following equations:

where we use the notation \(\delta _\omega := \omega - \varPi \omega \) for \(\omega = u, q\), and p. Note that the last three equations have exactly the same structure as the numerical traces of the HDG method in (2.2e).

The following result for the optimal approximation properties of the projection \(\varPi \) was shown in [8]. To state it, we use the following notation. The \(H^s(D)\)-norm is denoted by \(\Vert \cdot \Vert _{s, D}\). We drop the first subindex if \(s=0\), and the second one if \(D=\Omega \) or \(D={\mathcal T}_h\).

Lemma 2.2

Suppose that

Then the projection \(\varPi \) in (2.5) is well defined on any interval \(I_i\). In addition, if \(\tau _{qu}^+, \tau _{qu}^-, \tau _{pu}^+\) and \(\tau _{qp}^-\) are constants, we have that, for \(\omega =u,q\) and p, there is a constant C such that

provided \(\omega \in H^{s+1}(I_i)\).

Next, we provide estimates for the \(L^2\)-norm of the projection of the errors

and deduce from them the estimates for the \(L^2\)-norm of the errors

Theorem 2.3

Suppose that \(F(u)=0\) in the problem (2.1) and the exact solution \((u,q,p)\in W^{2,\infty }((0, T]; H^{k+1}(\mathcal {T}_h))\times W^{1,\infty }((0, T]; H^{k+1}(\mathcal {T}_h))\times W^{1,\infty }((0, T]; H^{k+1}(\mathcal {T}_h))\). If the stabilization function of the HDG method (2.2) satisfies the condition

then for \(k>0\) and h small enough, we have

where C is independent of h.

It is easy to see that if the stabilization function satisfies the condition (2.7), then it also satisfies the conditions (2.4) and (2.6). Using Lemma 2.2, Theorem 2.3 and the triangle inequality, we immediately get the following \(L^2\) error estimate for the actual errors.

Theorem 2.4

Suppose that the hypotheses of Theorem 2.3 are satisfied. Then we have

where C is independent of h.

3 Proofs

In this section, we provide detailed proofs of our main results. We first prove Theorem 2.1 on the \(L^2\)-stability of the HDG method for general KdV type equations. Then we combine several energy identities to prove the error estimate in Theorem 2.3 for linear third-order equations.

3.1 \(L^2\)-Stability

Now let us prove Theorem 2.1 on the stability of the HDG method for the KdV equation. We treat the nonlinear term in a way similar to that in [24].

Proof

Taking \(w=u_h, v=-p_h\) and \(z=q_h\) in (2.2a)–(2.2c) and adding the three equations, we get

Using integration by parts and (2.2d), we have

Let G(s) be such that \(d G(s)/ds=F(s)\). It is easy to see that

Using it for the second term on the right-hand side of (3.1), we get that

where

Next, we just need to show that \(\Phi \ge 0\). Let

Using the definition of \(\widehat{F}_h\) in (2.2e), we have

By the definition of \(\widehat{p}_h\) and \(\widehat{q}_h\) in (2.2e), we get

It is easy to check that if the stabilization function satisfies the condition (2.3), then we get \(\Phi ^+\ge 0\) and \(\Phi ^-\ge 0\). This shows that

\(\square \)
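The identity involving G is not displayed above. A standard identity of this kind, valid on each element because \(F(u_h)(u_h)_x = \tfrac{d}{dx}G(u_h)\), is \((F(u_h),(u_h)_x)_{I_i} = G(u_h(x_i^-)) - G(u_h(x_{i-1}^+))\); we assume this is the type of relation used in the proof. The Python sketch below checks it for \(F(s)=\beta s^m\), for which \(G(s)=\beta s^{m+1}/(m+1)\), using a Gauss–Legendre rule that is exact for the polynomial integrand. The function name is hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial
from numpy.polynomial.legendre import leggauss


def antiderivative_identity_gap(u: Polynomial, beta: float, m: int,
                                a: float, b: float) -> float:
    """Return |(F(u), u_x)_{(a,b)} - [G(u(b)) - G(u(a))]| for F(s) = beta*s**m,
    with G(s) = beta*s**(m+1)/(m+1) so that dG/ds = F."""
    F = lambda s: beta * s**m
    G = lambda s: beta * s**(m + 1) / (m + 1)
    # F(u) * u_x is a polynomial of degree (m+1)*deg(u) - 1; an n-point
    # Gauss-Legendre rule is exact up to degree 2n - 1.
    n = (m + 1) * u.degree() // 2 + 1
    xi, w = leggauss(n)
    x = 0.5 * (b - a) * xi + 0.5 * (a + b)     # map [-1, 1] -> (a, b)
    integral = 0.5 * (b - a) * np.sum(w * F(u(x)) * u.deriv()(x))
    return abs(integral - (G(u(b)) - G(u(a))))


# Usage: a cubic u_h on one element with F(u) = 3u^2 (beta = 3, m = 2).
u_h = Polynomial([0.3, -1.0, 0.5, 2.0])
print(antiderivative_identity_gap(u_h, 3.0, 2, -0.4, 0.9))  # rounding-level
```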

3.2 Error Analysis

In this subsection, we prove the optimal error estimate for the projections of the errors in Theorem 2.3 for linear equations with \(F=0\). First, we derive the equations satisfied by the projections of the errors.

3.2.1 The Error Equations

From the equations defining the HDG method, (2.2a)–(2.2c), and the fact that the exact solution also satisfies these equations, we obtain the following error equations

for all \((v, z, w)\,\in \,W_h^{k}\times W_h^{k} \times W_h^{k}\), where \(\widehat{e}_\omega =\omega -\widehat{\omega }_h\) for \(\omega =u, q\), and p. From (2.2e) and (2.2d), it is easy to see that

and

for all \((\mu ,\chi )\in \tilde{M}_h\times M_h(0)\). Now we set

and let

Using Eqs. (2.5d)–(2.5f), after some simple algebraic manipulations, we get that

Therefore, by the definition of the projection \(\varPi \), (2.5a)–(2.5c), we easily obtain the following equations for the projections of the errors

for all \((v, z, w,\mu ,\chi )\,\in \,W_h^{k }\times W_h^{k } \times W_h^{k }\times \tilde{M}_h\times M_h(0)\).

3.2.2 Energy Identities

To prove the \(L^2\)-error estimate in Theorem 2.3, we begin by establishing a key identity involving the quantity

by energy arguments.

Lemma 3.1

We have that

where

Proof

Differentiating the error equations (3.3a)–(3.3c) with respect to t, we get

Next, we use (3.3) and (3.4) to get four energy identities.

(i) Taking \(w=\epsilon _u, v=-\epsilon _p,\) and \(z=\epsilon _q\) in (3.3) and adding the three equations together, we have

Using integration by parts, (3.3d), and the fact that

we get

(ii) Similar to (i), taking \(v={\epsilon }_q\) in (3.4a), \(z={{\epsilon }_u}_t\) in (3.3b), and \(w=-{\epsilon }_p\) in (3.3c) and adding the three equations together, we get

(iii) Taking \(v={{\epsilon }_u}_t\) in (3.4a), \(z={\epsilon }_p\) in (3.4b), and \(w=-{{\epsilon }_{q}}_t\) in (3.3c) and adding the equations together, we get

(iv) Taking \(v=-{{\epsilon }_p}_t, z= {{\epsilon }_q}_t\), and \(w={{\epsilon }_u}_t\) in (3.4a)–(3.4c) and adding the equations together, we get

The proof is completed by adding the four equations (3.5)–(3.8) together. \(\square \)

3.2.3 Proof of the \(L^2\)-Error Estimate

Using Lemma 3.1, we first get the following result.

Lemma 3.2

If the stabilization function satisfies the condition (2.7), then we have

where

and S is the same as in Lemma 3.1.

Proof

Using the definition of \(\widehat{{\epsilon }}_p^+\) and \(\widehat{{\epsilon }}_q\) in (3.2), for the \(\Psi \) term in Lemma 3.1, we have

where

and

We can rewrite the term \(\Psi ^+ \) as

where

Similarly, if we assume that \(\tau _{qu}^-\tau _{qp}^-=1\), after some calculations we get

where

So from Lemma 3.1 we get

Now we integrate Eq. (3.9) with respect to t and get

It is easy to check that if \(\tau _{qu}^\pm , \tau _{pu}^+\) and \(\tau _{qp}^-\) satisfy the condition (2.7), we have

Therefore,

where \(\Theta =\Theta _1+\Theta _2.\) \(\square \)

To prove Theorem 2.3, we also need the following lemma on the errors of the initial approximations at \(t=0\) (see Theorems 2.2 and 2.3 in [8]).

Lemma 3.3

If \(\tau _{qu}^\pm , \tau _{pu}^+, \tau _{qp}^-\) satisfy the condition (2.6), then for \(k>0\),

We also need an estimate for \({\epsilon }_{ut}\) at \(t=0\).

Lemma 3.4

If \(\tau _{qu}^\pm , \tau _{pu}^+, \tau _{qp}^-\) satisfy the condition (2.6), then for \(k>0\)

Proof

Taking \(t=0\) and \(w={{\epsilon }_u}_t(0)\) in the error equation (3.3c), we have

By the Cauchy–Schwarz, trace, and inverse inequalities, we get

Then the conclusion follows by using Lemmas 2.2 and 3.3. \(\square \)

Now let us finish the proof of Theorem 2.3 by estimating the right-hand side of the inequality in Lemma 3.2 and using Lemmas 3.3 and 3.4.

Proof

We first estimate the term \(\int _0^t\;\widehat{\epsilon }_p^{\;2}(x_N) dt\). Taking \(w\) to be \(w_1:=\frac{x-x_0}{x_N-x_0}\) in (3.3c), which is admissible because \(w_1\) is linear on \(\Omega \) and hence lies in \(W_h^k\) for \(k>0\), we get

by the fact that \(w_1(x_0)=0\) and \(w_1(x_N)=1\). Using the Cauchy–Schwarz inequality, we have

Then, by the approximation property of the projection \(\varPi \) in Lemma 2.2, we obtain

Next, we estimate the term \(|\int _0^t S \, dt|\). Let

where

Using the Cauchy–Schwarz inequality and the approximation property of the projection \(\varPi \) in Lemma 2.2, we get

Integrating \(S_2\) with respect to t, we have

By the approximation property of the projection \(\varPi \) in Lemma 2.2,

So we get

Applying (3.10) and (3.11) to Lemma 3.2, we have

Since

by Lemma 3.3 and the trace inequality, we have

using Lemmas 3.3 and 3.4. Now we use Grönwall’s inequality and get

where C depends on t but not on h. This completes the proof of Theorem 2.3. \(\square \)
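For reference, a standard integral form of Grönwall's inequality, which is the kind of statement used in the final step above, is the following; the exponential factor is why the constant C depends on t but not on h.

```latex
\text{If } y \in C([0,T]),\ y \ge 0,\ \alpha,\beta \ge 0, \text{ and }
y(t) \le \alpha + \beta \int_0^t y(s)\,ds \quad \forall\, t\in[0,T],
\text{ then } y(t) \le \alpha\, e^{\beta t} \quad \forall\, t\in[0,T].
```

Here \(\alpha \) collects the initial-error and projection-error terms, so the estimate keeps its order in h while its constant grows like \(e^{\beta t}\).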

4 Numerical Results

In this section, we carry out several numerical experiments to study the accuracy and capability of our HDG method. In the first and the second numerical experiments, we examine the orders of convergence of the method for linear and nonlinear third-order problems. In the third and the fourth experiments, we apply the method to solve some well-known dispersive wave problems. For all the experiments, we use the following second-order midpoint rule [2, 9] for time discretization. Let \(0=t_0<t_1<\cdots <t_J=T\) be a partition of the interval [0, T] and \(\Delta t_j=t_{j+1}-t_j\). For \(j=0, \ldots , J-1\) and \(\omega \in \{u_h, q_h, p_h\}\), let \(\omega ^{j+1}\in W_h^k\) be defined as

where \(\omega ^{j,1}\) is the solution of the equation

The components of the stabilization function, \((\tau _{qu}^+, \tau _{pu}^+, \tau _{qu}^-, \tau _{qp}^-)\) are taken to be \((0, -1, 1, 1)\) in all the following numerical tests.
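The defining equations of the time discretization are not displayed above. A common way to write the second-order implicit midpoint rule, and the form we assume here, is a backward-Euler half step \(\omega ^{j,1}=\omega ^j+\tfrac{\Delta t_j}{2}\,\mathcal {L}(\omega ^{j,1}, t_j+\tfrac{\Delta t_j}{2})\) followed by the extrapolation \(\omega ^{j+1}=2\omega ^{j,1}-\omega ^j\). The Python sketch below applies this two-stage form to a small linear ODE system \(y'=Ay+g(t)\), which stands in for the semi-discrete HDG system; the operator \(\mathcal {L}\), the names midpoint_step and integrate, and the test problem are assumptions for illustration. For a nonlinear problem, the linear solve in the stage would be replaced by Newton–Raphson iterations.

```python
import numpy as np


def midpoint_step(A, g, y, t, dt):
    """One implicit-midpoint step for y' = A y + g(t), written as a
    backward-Euler half step followed by linear extrapolation."""
    n = y.shape[0]
    # Stage (the only implicit solve): y1 = y + (dt/2) * (A y1 + g(t + dt/2))
    y1 = np.linalg.solve(np.eye(n) - 0.5 * dt * A, y + 0.5 * dt * g(t + 0.5 * dt))
    # Extrapolation: y^{j+1} = 2 y1 - y^j
    return 2.0 * y1 - y


def integrate(A, g, y0, T, n_steps):
    """Advance from t = 0 to t = T with n_steps uniform midpoint steps."""
    y, dt = np.asarray(y0, dtype=float), T / n_steps
    for j in range(n_steps):
        y = midpoint_step(A, g, y, j * dt, dt)
    return y


# Usage: harmonic oscillator y'' = -y, exact solution (cos t, -sin t).
A = np.array([[0.0, 1.0], [-1.0, 0.0]])
g = lambda t: np.zeros(2)
exact = np.array([np.cos(1.0), -np.sin(1.0)])
errors = [np.linalg.norm(integrate(A, g, [1.0, 0.0], 1.0, n) - exact)
          for n in (20, 40, 80)]
orders = [np.log2(errors[i] / errors[i + 1]) for i in range(2)]
print(orders)  # close to 2 for a second-order method
```

Since \(y^{j+1}=2y_1-y^j\) implies \(y_1=(y^j+y^{j+1})/2\), the two stages together are exactly the implicit midpoint rule \(y^{j+1}=y^j+\Delta t\,(A\,\tfrac{y^j+y^{j+1}}{2}+g(t_j+\tfrac{\Delta t}{2}))\).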

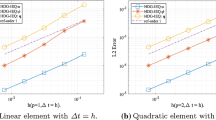

Numerical Experiment 1 In this test, we use the HDG method to solve the time-dependent third-order linear problem

where f is chosen so that the exact solution is \(u(x,t)=\sin (x+t)\) on the domain \((x,t)\in [0, 1]\times [0, 0.1]\). The initial condition is \(u_0=\sin (x)\), and the boundary conditions are \(u(0,t)=\sin (t)\), \(u(1,t)=\sin (1+t)\), and \(u_x(1,t)=\cos (1+t)\). We take \(h=2^{-n}\) for \(n=1,\ldots , 5\). The time step is \(\Delta t=0.1h^2\) for \(k=0, 1\) and \(\Delta t=0.1h^3\) for \(k=2, 3\), so that the temporal errors are very small. We compute the orders of convergence of \(u_h, q_h, p_h\) at the final time \(T=0.1\); the observed orders are listed in Table 1.
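Assuming the midpoint rule contributes a temporal error of order \(\Delta t^2\) and the spatial error behaves like \(h^{k+1}\), the time-step choices above can be checked as follows. Note that \(\Delta t=0.1h^2\) would give a temporal error of the same order \(h^4\) as the spatial error for \(k=3\), which is why the smaller step is used for \(k=2,3\).

```latex
\begin{aligned}
k=0,1:&\quad \Delta t=0.1h^2 \;\Longrightarrow\; O(\Delta t^2)=O(h^4),
  \quad\text{vs. } O(h^{k+1})\in\{O(h),\,O(h^2)\},\\
k=2,3:&\quad \Delta t=0.1h^3 \;\Longrightarrow\; O(\Delta t^2)=O(h^6),
  \quad\text{vs. } O(h^{k+1})\in\{O(h^3),\,O(h^4)\}.
\end{aligned}
```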

Our numerical results indicate that the orders of convergence of \((e_u,e_q,e_p)\) are optimal for any \(k>0\), as predicted by the error estimate in Theorem 2.4. For \(k=0\), which is not covered by our error analysis, we also observe optimal convergence in the numerical experiment.
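The orders reported in Tables 1 and 2 are presumably the usual observed orders between consecutive meshes, \(\log (e_n/e_{n+1})/\log (h_n/h_{n+1})\). The Python sketch below computes them. The errors in the usage lines are synthetic values \(0.3h^{k+1}\) with \(k=2\), chosen only to show that an optimal rate yields orders equal to \(k+1\); they are not data from the paper.

```python
import math


def observed_orders(hs, errors):
    """Observed orders log(e_n / e_{n+1}) / log(h_n / h_{n+1}) between
    consecutive meshes."""
    return [math.log(errors[n] / errors[n + 1]) / math.log(hs[n] / hs[n + 1])
            for n in range(len(hs) - 1)]


# Usage with synthetic errors e = 0.3 h^(k+1), k = 2 (not data from the paper).
k = 2
hs = [2.0 ** -n for n in range(1, 6)]
errors = [0.3 * h ** (k + 1) for h in hs]
print(observed_orders(hs, errors))   # each entry is k + 1 = 3 up to rounding
```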

Numerical Experiment 2 Now we use the HDG method to solve the nonlinear third-order equation

The function f, the initial condition, and the boundary conditions are chosen so that the exact solution is \(u(x,t)=\sin (2x+t)\) in the domain \((x,t)\in [0, \pi ]\times [0, 0.1]\). Since \(F(u)=3u^2\) and \(\frac{1}{2} |F'(u)|=3|u|\le 3\) for the exact solution u, we take the stabilization function \(\tau _F=3\). The mesh size for the HDG method is \(h=2^{-n}\) for \(n=3,\ldots , 7\). The time step is \(\Delta t=0.1h^2\) for \(k=0, 1\) and \(\Delta t=0.1h^3\) for \(k=2, 3\), so that the temporal errors are much smaller than the spatial errors. The orders of convergence of \(u_h, q_h, p_h\) at the final time \(T=0.1\) are displayed in Table 2. Our numerical results show that the orders of convergence of \((e_u,e_q,e_p)\) are also optimal for any \(k\ge 0\) for the nonlinear problem.

In the previous two tests, we have observed optimal convergence rates of the HDG method for both linear and nonlinear third-order problems. In the next two tests, we apply the method to solve the KdV equation

Numerical Experiment 3 In this test, we consider the KdV equation (4.1) in the domain \((x,t)\in [-10,0]\times [0,2]\) with the initial condition \(u_0=2{{\mathrm{sech}}}^2(x-4)\) and the boundary conditions \(u(-10, t)=2{{\mathrm{sech}}}^2(-10-4t+4), \,u(0,t)=2{{\mathrm{sech}}}^2(-4t+4), \,u_x(0, t)= -4{{\mathrm{sech}}}^2(-4t+4)\tanh (-4t+4)\). The exact solution to this initial-boundary value problem is the classical solitary-wave solution [2, 27]
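Equation (4.1) is not reproduced here. Assuming it is \(u_t+6uu_x+u_{xxx}=0\), which is consistent with \(F(u)=3u^2\) and with the amplitude 2 and speed 4 of the boundary data above, the Python sketch below checks by central finite differences that the family \(u=2\,\mathrm{sech}^2(x-4t-x_0)\) solves it on \([-10,0]\times [0,2]\). The shift \(x_0=-4\) is chosen to match the boundary data \(u(0,t)=2\,\mathrm{sech}^2(-4t+4)\); the helper names and the difference step d are illustrative assumptions.

```python
import numpy as np


def soliton(x, t, x0=0.0):
    """u = 2 sech^2(x - 4t - x0): a solitary wave with speed 4 and amplitude 2."""
    return 2.0 / np.cosh(x - 4.0 * t - x0) ** 2


def kdv_residual(u, x, t, coeff=6.0, d=1e-3):
    """Central-difference approximation of u_t + coeff*u*u_x + u_xxx at (x, t)."""
    u_t = (u(x, t + d) - u(x, t - d)) / (2.0 * d)
    u_x = (u(x + d, t) - u(x - d, t)) / (2.0 * d)
    u_xxx = (u(x + 2 * d, t) - 2.0 * u(x + d, t)
             + 2.0 * u(x - d, t) - u(x - 2 * d, t)) / (2.0 * d**3)
    return u_t + coeff * u(x, t) * u_x + u_xxx


# Usage: sample the space-time domain of Experiment 3 with x0 = -4.
X, T = np.meshgrid(np.linspace(-10.0, 0.0, 201), np.linspace(0.0, 2.0, 41))
wave = lambda x, t: soliton(x, t, x0=-4.0)
print(np.max(np.abs(kdv_residual(wave, X, T))))             # only O(d^2) error left
print(np.max(np.abs(kdv_residual(wave, X, T, coeff=1.0))))  # O(1): wrong equation
```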

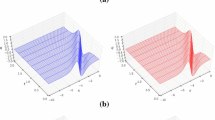

In the computation, we use 100 elements, piecewise cubic polynomials, and the time step \(\Delta t=10^{-3}\), and we take \(\tau _F=(F'(\widehat{u}_h))^2+\frac{1}{4}\) so that \(\tau _F > \frac{1}{2}|F'(\widehat{u}_h)|\). The space-time graphs of the computed solution \((u_h, q_h, p_h)\) as well as the exact solution (u, q, p) at the final time \(T=2\) are displayed in Fig. 1. We observe a good match between the approximate and the exact solutions.
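The choice of \(\tau _F\) above indeed gives a strict inequality for every value of \(a=F'(\widehat{u}_h)\), since completing the square yields

```latex
\tau_F-\tfrac{1}{2}|a| \;=\; a^2-\tfrac{1}{2}|a|+\tfrac{1}{4}
\;=\;\Bigl(|a|-\tfrac{1}{4}\Bigr)^2+\tfrac{3}{16}\;>\;0,
\qquad a=F'(\widehat{u}_h).
```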

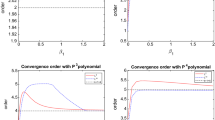

Numerical Experiment 4 In this test, we simulate the interaction of two solitary waves with different propagation speeds using our HDG method. We consider the KdV equation (4.1) in the domain \((x,t)\in [-20,0]\times [0,2]\) with the initial condition

and boundary data \(u(-20,t), u(0,t), u_x(0,t)\), which admits the solution (see [27])

In our computation, we use 50 elements, piecewise cubic polynomials, and the time step \(\Delta t=10^{-4}\). The stabilization function \(\tau _F\) is chosen as in the previous test. The space-time graphs of the HDG approximate solutions and the exact solutions are displayed in Fig. 2. The side-by-side comparison shows that the HDG solutions are good approximations to the exact solutions. Both waves move in the same direction: the faster soliton catches up with the slower one, and they overlap around \(t=0.5\). Afterwards, the faster soliton continues to propagate ahead while the slower one falls behind.

5 Concluding Remarks

In this paper, we develop a new HDG method for time-dependent third-order equations in one space dimension based on the characterization of the exact solution as the solutions to local problems that are “glued” together by transmission conditions. We find conditions on the stabilization function under which the method is \(L^2\) stable for KdV type equations, and we obtain optimal error estimates for linear third-order equations. Numerical results confirm the theoretical error analysis and show that the method accurately simulates solitary-wave solutions of the KdV equation. Future work includes developing and analyzing HDG methods for fifth-order KdV equations, for third-order equations in multiple dimensions, and for more complex systems.

References

Biswas, H.A., Rahman, A., Das, T.: An investigation on fiber optical solution in mathematical physics and its application to communication engineering. IJRRAS 6(3), 268–276 (2011)

Bona, J.L., Chen, H., Karakashian, O., Xing, Y.: Conservative, discontinuous Galerkin-methods for the generalized Korteweg–de Vries equation. Math. Comput. 82(283), 1401–1432 (2013)

Bona, J.L., Chen, H., Sun, S.-M., Zhang, B.-Y.: Comparison of quarter-plane and two-point boundary value problems: the KDV-equation. Discrete Contin. Dyn. Syst. Ser. B 7(3), 465–495 (2007)

Bona, J.L., Sun, S.-M., Zhang, B.-Y.: A nonhomogeneous boundary-value problem for the Korteweg–de Vries equation posed on a finite domain. Commun. Partial Differ. Equ. 28(7–8), 1391–1436 (2003)

Bona, J.L., Sun, S.-M., Zhang, B.-Y.: A non-homogeneous boundary-value problem for the Korteweg–de Vries equation posed on a finite domain II. J. Differ. Equ. 247, 2558–2596 (2009)

Braginskii, S.I.: Transport processes in a plasma. Rev. Plasma Phys. 1, 205 (1965)

Buckingham, M.: Theory of acoustic attenuation, dispersion, and pulse propagation in unconsolidated granular materials including marine sediments. J. Acoust. Soc. Am. 102(5), 2579–2596 (1997)

Chen, Y., Cockburn, B., Dong, B.: Superconvergent HDG methods for linear, stationary, third-order equations in one-space dimension. Math. Comput. 85, 2715–2742 (2016)

Chen, Y., Cockburn, B., Dong, B.: A new discontinuous Galerkin method, conserving the discrete \(H^2\)-norm, for third-order linear equations in one space dimension. IMA J. Numer. Anal. 36(4), 1570–1598 (2016)

Cheng, Y., Shu, C.-W.: A discontinuous Galerkin finite element method for time dependent partial differential equations with higher order derivatives. Math. Comput. 77(262), 699–730 (2008)

Cockburn, B., Dong, B., Guzmán, J.: A superconvergent LDG-hybridizable Galerkin method for second-order elliptic problems. Math. Comput. 77, 1887–1916 (2008)

Cockburn, B., Fu, Z., Hungria, A., Ji, L., Sánchez, M., Sayas, F.-J.: Stormer–Numerov methods for the acoustic wave equation (submitted)

Cockburn, B., Gopalakrishnan, J., Lazarov, R.: Unified hybridization of discontinuous Galerkin, mixed and continuous Galerkin methods for second order elliptic problems. SIAM J. Numer. Anal. 47, 1319–1365 (2009)

Cockburn, B., Gopalakrishnan, J., Sayas, F.-J.: A projection-based error analysis of HDG methods. Math. Comput. 79, 1351–1367 (2010)

Cockburn, B., Guzmán, J., Wang, H.: Superconvergent discontinuous Galerkin methods for second-order elliptic problems. Math. Comput. 78, 1–24 (2009)

Cockburn, B., Gopalakrishnan, J.: The derivation of hybridizable discontinuous Galerkin methods for Stokes flow. SIAM J. Numer. Anal. 47, 1092–1125 (2009)

Goubet, O., Shen, J.: On the dual Petrov–Galerkin formulation of the KdV equation on a finite interval. Adv. Differ. Equ. 12(2), 221–239 (2007)

Holmer, J.: The initial-boundary value problem for the Korteweg–de Vries equation. Commun. Partial Differ. Equ. 31, 1151–1190 (2006)

Horsley, S.A.R.: The KdV hierarchy in optics. J. Opt. 18, 085104 (2016)

Hufford, C., Xing, Y.: Superconvergence of the local discontinuous Galerkin method for the linearized Korteweg–de Vries equation. J. Comput. Appl. Math. 255, 441–455 (2014)

Kamchatnov, A.M., Shchesnovich, V.S.: Dynamics of Bose–Einstein condensates in cigar-shaped traps. Phys. Rev. A 70(2), 023604 (2004)

Karakashian, O., Xing, Y.: A posteriori error estimates for conservative local discontinuous Galerkin methods for the generalized Korteweg–de Vries equation. Commun. Comput. Phys. 20, 250–278 (2016)

Liu, H., Yan, J.: A local discontinuous Galerkin method for the Korteweg–de Vries equation with boundary effect. J. Comput. Phys. 215, 197–218 (2006)

Nguyen, N.C., Peraire, J., Cockburn, B.: An implicit high-order hybridizable discontinuous Galerkin method for nonlinear convection–diffusion equations. J. Comput. Phys. 228, 8841–8855 (2009)

Panda, N., Dawson, C., Zhang, Y., Kennedy, A.B., Westerink, J.J., Donahue, A.S.: Discontinuous Galerkin methods for solving Boussinesq–Green–Naghdi equations in resolving non-linear and dispersive surface water waves. J. Comput. Phys. 273, 572–588 (2014)

Phillips, O.: Nonlinear dispersive waves. Annu. Rev. Fluid Mech. 6, 93–110 (1974)

Samii, A., Panda, N., Michoski, C., Dawson, C.: A hybridized discontinuous Galerkin method for the nonlinear Korteweg–de Vries equation. J. Sci. Comput. 68, 191–212 (2016)

Schamel, H.: A modified Korteweg–de Vries equation for ion acoustic waves due to resonant electrons. J. Plasma Phys. 9(3), 377–387 (1973)

Shukla, P.K., Eliasson, B.: Colloquium: nonlinear collective interactions in quantum plasmas with degenerate electron fluids. Rev. Mod. Phys. 83(3), 885–906 (2011)

Skogestad, J.O., Kalisch, H.: A boundary value problem for the KdV equation: comparison of finite-difference and Chebyshev methods. Math. Comput. Simul. 80, 151–163 (2009)

Tagare, S.G.: Effect of ion temperature on propagation of ion-acoustic solitary waves of small amplitudes in collisionless plasma. Plasma Phys. 15(12), 1247 (1973)

Tassi, E., Morrison, P.J., Waelbroeck, F.L., Grasso, D.: Hamiltonian formulation and analysis of a collisionless fluid reconnection model. Plasma Phys. Control. Fusion 50(8), 1–29 (2008)

Tran, M.Q.: Ion acoustic solitons in a plasma—a review of their experimental properties and related theories. Phys. Scr. 20, 317–327 (1979)

Xu, Y., Shu, C.-W.: Error estimates of the semi-discrete local discontinuous Galerkin method for nonlinear convection–diffusion and KdV equations. Comput. Methods Appl. Mech. Eng. 196, 3805–3822 (2007)

Xu, Y., Shu, C.-W.: Optimal error estimates of the semidiscrete local discontinuous Galerkin methods for high order wave equations. SIAM J. Numer. Anal. 50, 79–104 (2012)

Yan, J., Shu, C.-W.: A local discontinuous Galerkin method for KdV type equations. SIAM J. Numer. Anal. 40, 769–791 (2002)

Zabusky, N.J., Kruskal, M.D.: Interaction of “Solitons” in a collisionless plasma and the recurrence of initial states. Phys. Rev. Lett. 15, 240–243 (1965)

Acknowledgements

The author would like to acknowledge the support of National Science Foundation Grant DMS-1419029.

Appendix: Implementation

To implement the HDG method (2.2), we use an implicit scheme for the discretization of the time derivative. A high-order BDF method or an implicit Runge–Kutta method could be used; here, for simplicity, we consider the backward Euler method with time step \(\Delta t\). At time level \(t_j\), inserting the definition of the numerical traces (2.2e) into (2.2a)–(2.2e), we obtain the equations

from which \((u_h, q_h, p_h)\) can be solved for locally in terms of \(f\), \(\widehat{u}_h\) and \(\widehat{p}_h^{\,-}\), and the equations

which determine the globally coupled unknowns \((\widehat{u}_h, \widehat{p}_h^{\,-})\).

Next, we apply the Newton–Raphson method to solve the above nonlinear system. Denoting the approximations at the current iteration by \((\bar{u}_h, \bar{q}_h, \bar{p}_h, \bar{\widehat{u}}_h, \bar{\widehat{p}}_h^{\,-})\in W_h^k\times W_h^k\times W_h^k\times M_h(u_D)\times \tilde{M}_h\), we want to find the increments \((\delta u_h, \delta q_h, \delta p_h, \delta \widehat{u}_h, \delta \widehat{p}_h^{\,-}) \in W_h^k\times W_h^k\times W_h^k\times M_h(0)\times \tilde{M}_h\) such that

and

for any \((v, z, w, \mu ,\chi )\,\in \,W_h^{k}\times W_h^{k} \times W_h^{k}\times \tilde{M}_h\times M_h(0)\), where

Here we have used the notation \(\bar{\tau }_F:=\tau _F(\bar{\widehat{u}}_h, \bar{u}_h)\), and \(\partial _1 \bar{\tau }_F\) (respectively, \(\partial _2\bar{\tau }_F\)) denotes the first-order partial derivative of \(\tau _F\) with respect to the first argument (respectively, second argument) evaluated at \((\bar{\widehat{u}}_h, \bar{u}_h)\).
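To make the structure of one time step concrete, here is a minimal sketch that applies backward Euler and then Newton–Raphson to a hypothetical semi-discrete system \(dU/dt + KU + \beta U^m = F\). The matrix `K`, the load `F` and the pointwise nonlinearity are illustrative stand-ins, not the HDG operators of (2.2); in particular, the sketch omits the numerical traces and the stabilization function \(\tau_F\) whose partial derivatives appear in the linearized equations. Each iteration evaluates the residual at the current iterate, solves the linearized system for an increment, and updates the iterate, which is the role played by the increments \((\delta u_h, \delta q_h, \delta p_h, \delta \widehat{u}_h, \delta \widehat{p}_h^{\,-})\).

```python
import numpy as np


def backward_euler_newton(U_old, dt, K, F, beta, m, tol=1e-10, max_iter=20):
    """One backward Euler step for the toy system dU/dt + K U + beta*U**m = F.

    The nonlinear equation for U at the new time level is solved with
    Newton-Raphson. K, F, beta, m are hypothetical inputs, not HDG matrices.
    Returns the new iterate and the history of residual norms.
    """
    n = U_old.size
    U = U_old.copy()                       # initial guess: previous time level
    history = []
    for _ in range(max_iter):
        # Residual of the backward Euler equation at the current iterate.
        R = (U - U_old) / dt + K @ U + beta * U**m - F
        history.append(np.linalg.norm(R))
        if history[-1] < tol:
            break
        # Jacobian: identity/dt + K + derivative of the pointwise nonlinearity.
        dN = beta * m * U**(m - 1) if m > 0 else np.zeros(n)
        J = np.eye(n) / dt + K + np.diag(dN)
        U = U + np.linalg.solve(J, -R)     # apply the Newton increment
    return U, history


n = 20
x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
K = 2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # illustrative linear operator
U_new, hist = backward_euler_newton(np.sin(x), dt=0.01, K=K, F=np.zeros(n), beta=1.0, m=2)
print(["%.1e" % r for r in hist])
```

Starting from the previous time level is a common, inexpensive initial guess; since the \(1/\Delta t\) term dominates the Jacobian for small time steps, Newton typically needs only a few iterations in this toy setting.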

The discretization of the system above gives rise to matrix equations of the form

and

From (5.1), we get

We emphasize that the above inverse can be computed element by element, since the matrices \(A_1, A_2, A_3, B_1, B_2\) and \(C\) are block-diagonal owing to the discontinuous nature of the approximation spaces. Substituting (5.3) into (5.2), we get the global linear system

where

and

Therefore, the only globally coupled degrees of freedom are those associated with \(\delta \widehat{u}_h\) and \(\delta \widehat{p}_h^{\,-}\), which live only on element interfaces. Because the KdV equation is posed in one space dimension, the size and the bandwidth of the global linear system are independent of the polynomial degree; they depend only on the number of subintervals in the mesh. Once \(\delta \widehat{u}_h\) and \(\delta \widehat{p}_h^{\,-}\) are obtained, \((\delta u_h, \delta q_h, \delta p_h)\) can be computed locally by using (5.3).
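The local/global split can be illustrated with a small static-condensation (Schur complement) sketch. It assumes a hypothetical 1D mesh in which each element carries \(3(k+1)\) local unknowns, standing in for the coefficients of \(\delta u_h, \delta q_h, \delta p_h\), and each node carries two trace unknowns, standing in for \(\delta \widehat{u}_h\) and \(\delta \widehat{p}_h^{\,-}\). The element matrices are random, shifted by a multiple of the identity so the solves are well conditioned; they are not the actual blocks of the method. Local unknowns are eliminated element by element, the condensed interface system is solved, the local unknowns are recovered, and the result is compared with a monolithic solve. The half-bandwidth of the condensed matrix is also reported.

```python
import numpy as np


def condensed_solve(n_elems, k, seed=0):
    """Static condensation on a hypothetical 1D mesh (random local matrices).

    Returns (size of condensed system, its half-bandwidth, max error versus
    a monolithic solve of the same system).
    """
    rng = np.random.default_rng(seed)
    n_loc = 3 * (k + 1)              # local dofs per element (u, q, p stand-ins)
    n_glob = 2 * (n_elems + 1)       # 2 trace dofs per node
    off = n_elems * n_loc            # global dofs follow all local dofs
    full = np.zeros((off + n_glob, off + n_glob))
    rhs = rng.standard_normal(off + n_glob)

    S = np.zeros((n_glob, n_glob))   # condensed (Schur complement) matrix
    g = rhs[off:].copy()             # condensed right-hand side
    saved = []                       # pieces needed for local recovery

    for e in range(n_elems):
        li = np.arange(e * n_loc, (e + 1) * n_loc)  # local dofs of element e
        tr = np.arange(2 * e, 2 * e + 4)            # trace dofs at nodes e, e+1
        A = rng.standard_normal((n_loc, n_loc)) + 2 * n_loc * np.eye(n_loc)
        B = rng.standard_normal((n_loc, 4))
        C = rng.standard_normal((4, n_loc))
        D = rng.standard_normal((4, 4)) + 10 * np.eye(4)

        # Monolithic assembly (for comparison only).
        full[np.ix_(li, li)] = A
        full[np.ix_(li, off + tr)] = B
        full[np.ix_(off + tr, li)] += C
        full[np.ix_(off + tr, off + tr)] += D

        # Eliminate local dofs: x = A^{-1}(f - B lam), element by element.
        AinvB = np.linalg.solve(A, B)
        Ainvf = np.linalg.solve(A, rhs[li])
        S[np.ix_(tr, tr)] += D - C @ AinvB
        g[tr] -= C @ Ainvf
        saved.append((li, tr, AinvB, Ainvf))

    lam = np.linalg.solve(S, g)      # globally coupled interface unknowns
    x = np.empty(off)
    for li, tr, AinvB, Ainvf in saved:
        x[li] = Ainvf - AinvB @ lam[tr]  # local recovery, element by element

    ref = np.linalg.solve(full, rhs)
    err = np.max(np.abs(np.concatenate([x, lam]) - ref))
    rows, cols = np.nonzero(S)
    return S.shape[0], int(np.max(np.abs(rows - cols))), err


for k in (1, 2, 4):
    print(k, condensed_solve(n_elems=8, k=k))
```

With `n_elems = 8`, the condensed system has 18 unknowns and half-bandwidth 3 for every `k`, and the error should sit at round-off level; only the size of the element-local solves grows with the polynomial degree.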

Cite this article

Dong, B. Optimally Convergent HDG Method for Third-Order Korteweg–de Vries Type Equations. J Sci Comput 73, 712–735 (2017). https://doi.org/10.1007/s10915-017-0437-4

Keywords

- Hybridizable discontinuous Galerkin methods

- HDG

- DG

- Korteweg–de Vries (KdV) equation

- Third-order equations