Abstract

In this paper, we study fast iterative solvers for the solution of fourth order parabolic equations discretized by mixed finite element methods. We propose to use consistent mass matrix in the discretization and use lumped mass matrix to construct efficient preconditioners. We provide eigenvalue analysis for the preconditioned system and estimate the convergence rate of the preconditioned GMRes method. Furthermore, we show that these preconditioners only need to be solved inexactly by optimal multigrid algorithms. Our numerical examples indicate that the proposed preconditioners are very efficient and robust with respect to both discretization parameters and diffusion coefficients. We also investigate the performance of multigrid algorithms with either collective smoothers or distributive smoothers when solving the preconditioner systems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Fourth order parabolic partial differential equations (PDEs) appear in many applications including the lubrication approximation for viscous thin films [49], interface morphological changes in alloy systems during stress corrosion cracking by surface diffusion [1], image segmentation, noise reduction, inpainting [13, 42], and phase separation in binary alloys [14, 22, 30], etc. There have been extensive studies on the numerical methods for solving these fourth order parabolic PDEs, including the finite difference methods [39, 56], spectral methods [44, 66], discontinuous Galerkin methods [27, 35, 65], \(C^1\)-conforming finite element methods [31, 37], nonconforming finite element methods[32, 67], and mixed finite element methods [11, 15, 29, 33, 34, 36]. The resulting large sparse linear systems of equations are typically very ill-conditioned which poses great challenges for designing efficient and robust iterative solvers. In addition, the linear systems become indefinite when mixed methods are employed. In this work, we design fast iterative solvers for the fourth order parabolic equations discretized by mixed finite element methods.

Multigrid (MG) methods are among the most efficient and robust solvers for solving the linear systems arising from the discretizations of PDEs. There have been a number of studies on multigrid methods for fourth order parabolic equations [7, 45, 47, 48, 63]. It is known that smoothers play a significant role in multigrid algorithms. In particular, for saddle point systems, there are two different types of smoothers, i.e., decoupled or coupled smoothers. For a decoupled point smoother, each sweep consists of relaxation over variables per grid point as well as relaxation over grid points. On the other hand, for a coupled point smoother, variables on each grid point are relaxed simultaneously which corresponds to solving a sequence of local problems. Distributive Gauss–Seidel (DGS) is the first decoupled smoother proposed by Brandt and Dinar [20] for solving Stokes equations. Later, Wittum [64] introduced transforming smoothers and combined it with incomplete LU factorization to solve saddle-point systems with applications in Stokes and Navier–Stokes equations. Gaspar et al. [40] studied the DGS smoother for solving poroelasticity problems. Recently, Wang and Chen [62] proposed a distributive Gauss–Seidel smoother based on the least squares commutator for solving Stokes equations and showed multigrid uniform convergence numerically. For coupled smoother, in [61], Vanka proposed a symmetrically coupled Gauss–Seidel smoother for the Navier–Stokes equations discretized by finite difference schemes on staggered grids. Later, in [53], Olshanskii and Reusken introduced an MG solver with collective smothers for the Navier–Stokes equations in rotation form discretized by conforming finite elements. They proved that W-cycle MG method with block Richardson smoother is robust for the discrete problem. Their numerical experiments show that the W-cycle MG method with the damped Jacobi smoother is robust with respect to the mesh size and problem parameters. Collective Jacobi and Gauss–Seidel smoothers have also been studied in multigrid method for elliptic optimal control problems by Lass et al. [50], and Takacs and Zulehner [57]. In our work, we investigate the performance of both coupled and decouple smoothers for the discrete fourth order problem in the mixed form.

In the literature, there are also many studies on the Krylov subspace methods and their preconditioners for solving fourth order parabolic problems. In [8, 9], Bänsch, Morin and Nochetto solved a discrete fourth order parabolic equation by applying the conjugate gradient method on the two-by-two block system in Schur complement form. In [10], the same authors proposed symmetric and nonsymmetric preconditioners based on operator splitting. Axelsson et al. [2], Axelsson and Neytcheva [3], Boyanova et al. [18] and Boyanova and Neytcheva [19] proposed a block preconditioner by adding an extra small term to the (2, 2) block and then followed by a block LU factorization which results in a preconditioned system when eigenvalues have a uniform bound. Bosch et al. [16] studied block triangular preconditioner for the mixed finite element discretization of a modified Cahn–Hilliard equation for binary image inpainting. In their method, the Schur complement is solved by algebraic multigrid method (AMG) using V-cycle and Chebyshev smoother. Moreover, the convergence rate of their method is independent of the mesh size. Same method has been used by Bosch et al. [17] to solve Cahn–Hilliard variational inequalities. In [41], Gräser and Kornhuber introduced a preconditioned Uzawa-type iterative method with multigrid solvers for subproblems to solve Cahn–Hilliard equation with an obstacle potential. Based on the Hermitian and skew-Hermitian splitting (HSS) iteration introduced in [6], Benzi and Golub [12] proposed a robust preconditioner for the generalized saddle point problems which exhibit similar two-by-two block structure as the discrete fourth order parabolic problems in mixed form. Later, Bai et al. [4] studied preconditioned modified HSS (PMHSS) iteration methods for the block linear systems. In [5], Bai, Chen, and Wang further simplified PMHSS and proposed an additive block diagonal preconditioner for a block linear system of equations arising from finite element discretization of two-phase flow problems and elliptic PDE-constrained optimization problems.

In this work, we construct preconditioners for discrete fourth order parabolic problems based on the mass lumping technique. Mass lumping technique has been widely used in solving time dependent PDEs by the finite element method [46]. It consists of replacing a consistent mass matrix by a diagonal lumped mass matrix so that its inversion at each time step becomes a simple division. The error estimates for lumped mass approximation have been studied in [23, 59, 60] which show that the order of accuracy for the discretization is preserved. On the other hand, the loss of solution accuracy associated with mass lumping has been studied by Gresho et al. [43] for advection equations and by Niclasen and Blackburn [52] for incompressible Navier–Stokes equations. It is well known that mass lumping may also induce dispersion errors when solving wave equations, see e.g., [28, 51]. These studies suggest that it is sometimes advantageous to use consistent mass matrix in the discretization schemes. In the study of fourth order parabolic equations, both consistent mass matrix and lumped mass matrix have been widely used. In this work, we choose consistent mass matrix in the finite element discretization to keep the solution accuracy and utilize lumped mass matrix to design efficient preconditioners so that the cost of inverting consistent mass matrix can be alleviated. We prove that GMRes method with mass lumping preconditioner converges when \(\tau \ge Ch^2\) for some constant C depending on the diffusion coefficients (\(\tau \), h corresponds to time and spatial discretization parameters, respectively). In a special case when the two diffusion operators A and B only differ by a scaling factor, we are able to prove uniform convergence of GMRes method for the preconditioned system without the constraint \(\tau \ge Ch^2\). Furthermore, we show that the preconditioner systems can be solved inexactly by geometric multigrid methods with the two different types of smoother discussed previously. By combining the optimality of multigrid methods with the computational efficiency of the mass lumping technique, we obtain very efficient solvers for the discrete fourth order problems.

The remainder of the paper is organized as follows. In Sect. 2, we describe the model problem and the corresponding mixed finite element discretization. Next, in Sect. 3, we describe the multigrid method and the collective Jacobi/Gauss–Seidel smoothers for our model problem. In Sect. 4, we construct two mass lumping preconditioners. Multigrid method with decoupled smoother are also introduced in this section to solve the preconditioner system approximately. The spectrum bounds of the preconditioned systems and the convergence property of GMRes method are analyzed in Sect. 5. Finally, in Sect. 6, we present numerical experiments to demonstrate the efficiency and robustness of the proposed solvers.

2 Model Problem and Discretization

2.1 Model Problem

We are interested in solving the following fourth order problem:

with boundary conditions

where \(\tau = \sqrt{\triangle t}\) and \(\triangle t\) is the time-step size, \(\varOmega \) is a bounded polyhedral domain in \(\mathbb {R}^d\), \(d\ge 1\), \(\nu \) is the unit outward normal, \(\varGamma _D\), \(\varGamma _N\) denote the Dirichlet and Neumann boundary part, respectively. We mainly study the Dirichlet boundary condition in this paper, i.e., \(\varGamma _N=\emptyset \). The diffusion coefficients a(x) and b(x) are measurable functions satisfying the following continuity and coercivity conditions

Equations (2.1) and (2.2) may arise from a time semi-discretization of the fourth order parabolic problem

In this case, \(f=u^{\text {old}}\) is the solution at the previous time step, and \(g=0\).

As an example, consider the following Cahn–Hilliard equations that model the phase separation and coarsening dynamics in a binary alloy:

where \(\varOmega \subset \mathbb {R}^3\) is a bounded domain, u represents the relative concentration of one component in a binary mixture, M(u) is the degenerate mobility, which restricts diffusion of both components to the interfacial region, e.g., \(M(u) = u(1-u)\) [11], \(F'(u)\) is the derivative of a double well potential F(u), a typical choice is

Introducing v, defined by \(v = \tau (-\epsilon \varDelta u + F'(u))\) (the chemical potential multiplied by \(\tau \)), after semi-implicit time discretization, we obtain a splitting of (2.5) into a coupled system of second order equations [33, 34, 36]

Denote \(a=M(u)\), \(b=\epsilon \), and assume \(F'(u)=0\), we can get (2.1) and (2.2), which corresponds to the linearization of the nonlinear Cahn–Hilliard equations.

The weak formulation of (2.1)–(2.2) is: find \(u,v\in \mathbb {V}\) such that

where \(\mathbb {V}\) is the subspace of \(H^1(\varOmega )\) associated with the boundary condition (2.3). The well-posedness of (2.6) follows from the Lax–Milgram lemma [54].

2.2 Finite Element Discretization

Let \(\mathcal {T}\) be a shape-regular triangulation of \(\varOmega \), \(\mathbb {V}_{\mathcal {T}}\) be the piecewise linear finite element space over \(\mathcal {T}\) satisfying the homogeneous boundary condition (2.3), and \(N_h = \mathrm{dim(\mathbb {V}_\mathcal {T})}\). The discrete problem for the PDE system (2.1)–(2.2) is: find \(u_h, v_h\in \mathbb {V}_{\mathcal {T}}\), such that \(\forall \phi , \psi \in \mathbb {V}_{\mathcal {T}}\)

In matrix form, we have

where \(\mathcal {A}\) is a matrix of size \(2N_h\times 2N_h\), M is the mass matrix, and A, B are the stiffness matrices defined by

Eliminating  in (2.7), we get the following Schur complement equation

in (2.7), we get the following Schur complement equation

In this paper, we develop efficient preconditioners for the two-by-two block linear systems (2.7); however, we will solve the Schur complement for preconditioners. In order to avoid inverting mass matrix, we employ the mass lumping technique to construct preconditioners.

3 Multigrid with Collective Smoother

A multigrid algorithm typically consists of three major components: the smoother (or relaxation scheme), the coarse grid operator, and the grid transfer operators (interpolation/restriction operators). It is well known that the efficiency of a multigrid method crucially depends on the choice of the smoother. In particular, for the block system (2.7), we observe numerically that multigrid method with point-wise Gauss–Seidel smoother does not converge uniformly. We construct a block Jacobi or Gauss–Seidel smoother that collects the degrees of freedom corresponding to variables u and v for each grid point. In other words, each block corresponds to a \(2\times 2\) matrix.

To describe these collective smoothers, we first consider the following matrix splitting, i.e.,

where \(L_A, L_B, L_M\) are strictly lower triangular parts of A, B, and M, and \(D_A, D_B, D_M\) are their diagonal parts. The collective damped Jacobi relaxation can be represented as

The collective Gauss–Seidel relaxation can be represented as

More practically, one can rearrange variables  and

and  in

in  so that \(\mathcal {A}_L+\mathcal {A}_D\) corresponds to a lower block triangular matrix. In fact, let

so that \(\mathcal {A}_L+\mathcal {A}_D\) corresponds to a lower block triangular matrix. In fact, let  with each entry

with each entry  corresponds to a pair of variables. Then, each relaxation sweep of (3.1) consists of solving a sequence of small systems

corresponds to a pair of variables. Then, each relaxation sweep of (3.1) consists of solving a sequence of small systems

and each sweep of (3.2) consists of solving

where

Note that \(\mathscr {A}_{ii}\) is invertible since \(\text {det} (\mathscr {A}_{ii}) = -\tau ^2 A_{ii} B_{ii} - M_{ii}^2 \ne 0\).

It is clear from the above form that for collective damped Jacobi relaxation, these small system can be solved in parallel, and for collective Gauss–Seidel relaxation, they are solved successively. Numerical experiments in Sect. 6 indicate that by relaxing both variables \(u_i,v_i\) corresponding to the same grid point i collectively, geometric multigrid V-cycle converges uniformly with respect to both h and \(\tau \).

The collective Gauss–Seidel smoother has been studied by Lass et al. [50] for solving elliptic optimal control problems. It is shown in [50] that the convergence rate of multigrid method with collective Gauss–Seidel smoother is independent of h. The robustness with respect to \(\tau \) is, however, hard to prove theoretically. Takacs and Zulehner [57] also investigated multigrid algorithm with several collective smoothers for optimal control problems and proved the convergence of the W-cycle multigrid with collective Richardson smoother. For convergence proof of multigrid methods using collective smoothers applied to saddle point systems, we refer to the work by Schöberl [55] and by Chen [25, 26].

4 Mass Lumping Preconditioners

In this section, we study preconditioners for the two-by-two block linear systems (2.7) for use with GMRes method. When the meshsize and time-step size are small, these systems are generally very ill-conditioned, especially when the diffusion coefficients a and b degenerate. Hence, efficient preconditioners are necessary in order to speed up the convergence of GMRes method. In [10], Bänsch, Morin, and Nochetto proposed symmetric and non-symmetric preconditioners that work well for the Schur complement system (2.8). However, the convergence rates of these methods are not uniform with respect to h or \(\tau \). Besides, the performance of those methods deteriorate for degenerate problems [10].

In the following, we design two preconditioners based on the mass lumping technique and geometric multigrid method for the system (2.7). The main focus is on the efficiency and robustness of the proposed solvers. Numerical experiments in Sect. 6 indicate that GMRes method preconditioned by the mass lumping preconditioners (solved inexactly) converges uniformly with respect to the discretization parameters and is also robust for problems with degenerate diffusion coefficients.

4.1 Preconditioner with Two Lumped Mass Matrices

The mass lumping technique has been widely used in the finite element computations, especially for time-dependent problems as it avoids inverting a full mass matrix M at each time step. The lumped-mass matrix \(\bar{M}\) is a diagonal matrix with diagonal elements equal to the row sums of M. By using the diagonal matrix \(\bar{M}\), the computational cost for the preconditioner is reduced significantly.

The mass lumping preconditioner \(\mathcal {B}\) for the block system \(\mathcal {A}\) is defined by

GMRes method is then applied to solve the preconditioned linear system

4.2 Preconditioner with One Lumped Mass Matrix

We can also use the following preconditioner \(\tilde{\mathcal {B}}\) with one lumped mass matrix for solving the block system (2.7), i.e.

The matrix \(\tilde{\mathcal {B}}\) is nonsymmetric and is a better approximation to \(\mathcal {A}\) compared with \(\mathcal {B}\). For \(\tilde{\mathcal {B}}\), we still have a block factorization which avoids inverting mass matrix M. Numerical experiments of Sect. 6.2 indicate that the performance of the two preconditioners \(\tilde{\mathcal {B}}\) and \(\mathcal {B}\) used with GMRes method are similar when solved inexactly by geometric multigrid V-cycle when \(\tau \ge Ch^2\). However, when \(\tau \) is very small, \(\tilde{\mathcal {B}}\) performs better than \(\mathcal {B}\).

4.3 Multigrid for Preconditioners

The preconditioner systems only need to be solved approximately. We can use geometric multigrid method with the coupled smoothers described in Sect. 3. In the following, we construct a decoupled smoother following the idea of the distributive Gauss–Seidel relaxation (DGS) which is suitable for the model problem.

DGS is a decoupled smoother introduced by Brandt and Dinar [20] for solving Stokes equations. The main idea of DGS is to apply standard Gauss–Seidel relaxation on decoupled equations using transformed variables. Let us consider the mass-lumping preconditioner system \(\tilde{\mathcal {B}}\) (a similar scheme can be derived for \(\mathcal {B}\)),

We introduce the following change of variables

is called the distribution matrix. Right preconditioning \(\tilde{\mathcal {B}}\) by \(\mathcal {P}\) results in an upper block triangular matrix

We construct a decoupled smoother for preconditioner (4.4) by solving

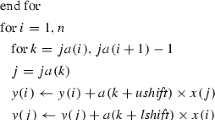

More precisely, we have the following algorithm

We can use multigrid method with the above decoupled smoother to solve the preconditioner system (4.4).

Remark 1

By using the damped Jacobi to solve (4.7), we can avoid matrix multiplication. In fact, we only need to calculate the diagonal part of \(M+\tau ^2A\bar{M}^{-1}B\). This requires \(\mathcal {O}(N_h)\) operations because \(\bar{M}\) is a diagonal matrix.

5 Convergence Analysis of Preconditioned GMRes Method

In this section, we analyze the convergence of the GMRes method preconditioned by the two preconditioners introduced in Sect. 4 for the block system (2.7). We show that the preconditioned GMRes method converges when \(\tau \ge Ch^2\) for some constant C depending on the diffusion coefficients. Numerical results in Sect. 6 indicate that the preconditioned GMRes method converges uniformly for any h and \(\tau \).

Let \(R=\mathcal {A}\mathcal {B}^{-1}\) (or \(\mathcal {A}\tilde{\mathcal {B}}^{-1}\)) with \(\mathcal {B}\) (or \(\tilde{\mathcal {B}}\)) being a right preconditioner of \(\mathcal {A}\). To solve the preconditioned system \(Rv=b\), the GMRes method starts from an initial iterate \(v_0\) and produces a sequence of iterates \(v_k\) and residuals \(r_k := b-Rv_k\), such that \(r_k = p_k(R) r_0\) for some polynomial \(p_k\in \mathcal {P}_k\), and

where \(\mathcal {P}_k\) is the space of polynomials of degree k or less and \(\Vert \cdot \Vert _2\) is the Euclidean norm. As a consequence, the convergence rate of the GMRes method can be estimated by

Since R may not be a normal matrix, we follow the approach given in [10] using the concept of \(\epsilon \)-pseudospectrum [58]. Given \(\epsilon >0\), denote by \(\sigma _{\epsilon }(R)\) the set of \(\epsilon \)-eigenvalues of R, namely those \(z\in \mathbb {C}\) that are eigenvalues of some matrix \(R+E\) with \(\Vert E\Vert \le \epsilon \). We first quote the following two results.

Lemma 1

(Pseudospectrum estimate [58]) Let \(\varSigma _\epsilon \) be a union of closed curves enclosing the \(\epsilon \)-pseudospectrum \(\sigma _{\epsilon }(R)\) of R. Then for any polynomial \(p_k\) of degree k with \(p_k(0)=1\) we have

where \(L_{\epsilon }\) is the arclength of \(\varSigma _{\epsilon }\).

Lemma 2

(Bound on the \(\epsilon \)-pseudospectrum [10]) If R is a square matrix of order n and \(0<\epsilon \le 1\), then,

where \(C_R:=n(1+\sqrt{n-p})\kappa (V)\), with \(\kappa (V)\) the condition number of V and V is a nonsingular matrix transforming R into its Jordan canonical form J, i.e. \(V^{-1}RV=J\), p is the number of Jordan blocks, and m is the size of the largest Jordan block of R.

In order to estimate the error caused by mass lumping, we introduce the following operators [23]. Let \(z_j (1\le j\le 3)\) be the vertices of a triangle \(K \in \mathcal {T}\), consider the following quadrature formula

where \(\pi _h: C^0(\bar{\varOmega })\rightarrow \mathbb {V}_{\mathcal {T}}\) is the standard nodal interpolation operator. Then, the mass lumping procedure can be interpreted as

The following results are useful for later analysis.

Lemma 3

(Quadrature error [23]) Let \(u,v\in \mathbb {V}_{\mathcal {T}}\subset H_0^1\) and \( (u,v)_h \) be the lumped inner product. Then,

where \(C_l\) is a constant independent of h.

Lemma 4

(Norm equivalence [33]) Let M be the mass matrix and \(\bar{M}\) be its lumped version. Then, for any \(u=\textstyle {\sum }_j u_j\phi _j\in \mathbb {V}_\mathcal {T}\) we have

where  and \(C_1\in (0,1)\) is a constant independent of h.

and \(C_1\in (0,1)\) is a constant independent of h.

Proof

We first show that  . Let \(\delta M = M - \bar{M}\), then

. Let \(\delta M = M - \bar{M}\), then

By direct calculations, we have

By Lemma 3 and the inverse inequality, we get

Hence, \( (u,u)_h\le (1+ C)(u,u)\). Equivalently,

which implies that the left inequality holds with \(C_1={1}/({1+ C})\) for some positive constant C. \(\square \)

We also need the following estimates for the eigenvalues of the stiffness matrices and mass matrix [21, 38].

Lemma 5

(Eigenvalue estimates) Let A, B be the stiffness matrices corresponding to diffusion coefficients a(x) and b(x), respectively, and let M be the mass matrix, then,

where \(C_A, C_B\) depend on the continuity assumption of the bilinear forms and the constant in the inverse inequality.

5.1 Eigenvalue Analysis for \(\mathcal {A}\mathcal {B}^{-1}\) with Constraint \(\tau \ge Ch^2\)

Recall

We have the following spectrum bound for the preconditioned system \(\mathcal {B}^{-1}\mathcal {A}\).

Theorem 1

(Spectral bound for \(\mathcal {B}^{-1}\mathcal {A}\)) Let h denote the meshsize and \(\tau \) denote the square root of time-step size. Then, the spectral radius of \(\mathcal {B}^{-1}\mathcal {A}\) satisfies

if \({\tau }\ge Ch^2\) for some positive constant C independent of h and \(\tau \).

Proof

Let  be a pair of eigenvalue and eigenvector of \(\mathcal {B}^{-1}\mathcal {A}\), i.e.

be a pair of eigenvalue and eigenvector of \(\mathcal {B}^{-1}\mathcal {A}\), i.e.

Equivalently,

Taking the inner product of the Eq. (5.7) with  , we obtain

, we obtain

Then, we have

Since A and B are symmetric positive definite matrices,  and

and  are nonnegative real numbers. By taking the modulus of (5.9) we get

are nonnegative real numbers. By taking the modulus of (5.9) we get

where  . Hence,

. Hence,

Let \(v={\textstyle \sum }_i v_i\phi _i\), \(u={\textstyle \sum }_i u_i\phi _i\), we get

Hence,

Let \(C = 2C_lC_aC_b\), when \(\tau \ge Ch^2\), we get \(\rho (\mathcal {B}^{-1}\mathcal {A})<2\). \(\square \)

Using the same proof of Corollary 5.11 in [10] with \(\epsilon _0={1-\rho (\mathcal {B}^{-1}\mathcal {A})/2}\), we have

Corollary 1

(Convergence rate of GMRes for \(\mathcal {A}\mathcal {B}^{-1}\)) For the preconditioned system \(\mathcal {A}\mathcal {B}^{-1}\), GMRes method converges with an asymptotic linear convergence rate bounded by

if \(\tau \ge Ch^2\). Moreover,

with \(C_0=2^{m-1}C_R^m(1+\rho (E))\mathrm{dim}\mathbb {V}_\mathcal {T}/(1-\rho (E))^m\), \(C_R, \ m\) are the parameters defined in the Lemma 2 and \(\mathbb {V}_\mathcal {T}\) is the finite element space.

Similarly, we have the following result.

Corollary 2

(Convergence rate of GMRes for \(\mathcal {A}\tilde{\mathcal {B}}^{-1}\)) The spectral radius of \(\tilde{\mathcal {B}}^{-1}\mathcal {A}\) satisfies

if \({\tau }\ge Ch^2\) for some constant C independent with h and \(\tau \). For the preconditioned system \(\mathcal {A}\tilde{\mathcal {B}}^{-1}\), GMRes method converges with an approximate linear convergence rate bounded by

5.2 Analysis Without the Constraint \(\tau \ge Ch^2\)

As we will see in Sect. 6, numerical results show uniform convergence rate of GMRes method for the preconditioned system irrespective of the relation between h and \(\tau \). A proof of this result appears elusive due to the fact that the Schur complement is nonsymmetric. In this subsection, we give a proof in this direction for the special case \(B = \alpha A\) with \(\alpha >0\) being some scaling constant in the mass lumping preconditioner \(\tilde{\mathcal {B}}\). For simplicity, we choose \(\alpha =1\) for the remaining part of this paper.

Notice that

where

Then, any eigenvalue of \(\tilde{\mathcal {B}}^{-1}\mathcal {A}\) is either 1 or \(1+\lambda \) for some \(\lambda \in \sigma (X)\) where \(\sigma (X)\) represents the spectrum of X.

To derive the bounds of \(\sigma (X)\), we first recall the Sherman-Morrison-Woodbury formula

and the following identity

Appying (5.11), we get

Lemma 6

(Spectrum of X) If \(A = B\), and \(C_1<1\) is the constant in (5.5), then

Proof

Let  be an eigenpair of the matrix X, then

be an eigenpair of the matrix X, then

\(\square \)

By Lemma 6 and the relation between \(\tilde{\mathcal {B}}^{-1} \mathcal {A}\) and X, we obtain the following result.

Theorem 2

(Spectrum of \(\tilde{\mathcal {B}}^{-1} \mathcal {A}\)) Let \(\mathcal {A}\) and \(\tilde{\mathcal {B}}\) be given by (2.7) and (4.3), respectively. If \(A=B\), we have

The following result can be proved by using the same proof for the Corollary 5.11 in [10] with \(\epsilon _0 = C_1/2\).

Corollary 3

(Convergence rate of GMRes for \(\mathcal {A}\tilde{\mathcal {B}}^{-1}\)) GMRes’s iteration converges for system \(\mathcal {A}\tilde{\mathcal {B}}^{-1}\) with an asymptotic linear convergence rate bounded by

Moreover,

with \(C_0=2^{2m-1}C^m_R\mathrm{dim}\mathbb {V}_{\mathcal {T}}/C_1^{m-1}\).

Remark 2

(Preconditioning for \(\tau < C h^2\)) By inverse inequality, we have \(\tau \Vert u\Vert _A^2 \le \tau h^{-2}\Vert u\Vert _M^2\). So if \(\tau < h^2\), then M will dominate A. For the case when \(A\ne \alpha B\) and \(\tau < Ch^2\), we rewrite the system in the following form

and consider the diagonal preconditioner

To give an estimate of the spectrum bound for the preconditioned system, we let

where

Let  be an eigenpair of \(E_d\), i.e.,

be an eigenpair of \(E_d\), i.e.,

Taking the inner product of the above equation with the vector  we obtain

we obtain

Denote \(\Vert \cdot \Vert _M^2=(M\cdot , \cdot )\), we have

Since M is symmetric positive definite,

Similarly,

Then, by Lemma 5,

if \(\tau <Ch^2\), where \(C=2C_M/(C_A+C_B)\). Hence, all the eigenvalues of the preconditioned system can be bounded in \(B(1, \rho (E_d))\) with \(\rho (E_d)<1\).

6 Numerical Experiments

In this section, we provide numerical experiments to demonstrate the performance of multigrid method and the preconditioned flexible GMRes method (without restart) using mass lumping preconditioners. The MATLAB adaptive finite element package iFEM [24] is used for all experiments. We choose the following two test problems from [10].

Consider the L-shaped domain \(\varOmega =(-1, 1)^2\backslash [0, 1)^2\), set \(g=0\), \(f=1\), and choose the Dirichlet boundary condition in both examples. For the diffusion coefficients \(a(x_1, x_2),\ b(x_1, x_2)\), we choose

-

1.

Nice problem:

$$\begin{aligned} a(x_1, x_2)=1,\quad b(x_1, x_2)=\left\{ \begin{array} {cc} 0.6, &{}\quad \text{ if }\;\, x_2<x_1,\\ 1.2, &{}\quad \text{ otherwise }.\end{array}\right. \end{aligned}$$ -

2.

Degenerate problem:

$$\begin{aligned} a(x_1, x_2)=0.1|x_1|+|x_2|, \quad b(x_1, x_2)=10+3\sin (5\pi x_1)\sin (8\pi x_2). \end{aligned}$$

The range of parameter \(\tau \in [10^{-4}, 1]\), which corresponds to the time-step size \(\varDelta t\in [10^{-8}, 1]\). The stopping criterion of the multigrid solver and GMRes iteration is chosen to be the relative residual error in \(L^2\) norm less than \(10^{-7}\). The initial guess for the iterative methods are chosen randomly. For time-dependent problems, we can use the approximation in the previous time step as the initial guess and our solvers can converge in just a few steps. All computations are done using MATLAB R2015b on a desktop computer with a 3.7 GHz Intel processor and 32 GB RAM.

6.1 Results of Multigrid Solver with Collective Smoothers

We compare the performance of multigrid method with collective Gauss–Seidel and collective Jacobi smoother (with damping parameter \(\vartheta = 0.8\)) for solving (2.7). We choose this particular Jacobi damping parameter because it gives the best performance compared with other values we have tried. We use multigrid V-cycle with one pre-smoothing and one post-smoothing (i.e., V(1, 1)) which achieves the best efficiency in terms of CPU time. The number of iterations and CPU time (in parentheses) are summarized in Tables 1 and 2. We observe that multigrid methods converge uniformly with respect to h. The convergence rate is close to uniform with respect to \(\tau \) except for some deterioration when \(\tau \) is around \(10^{-4}\) or \(10^{-5}\) as shown in Table 1. For very small \(\tau \), the block system is dominated by the two mass matrix blocks, and multigrid performs better as shown in Tables 1 and 2. Although it is not covered by our analysis in Sect. 5, numerical results in Table 2 show that multigrid methods with collective smoothers are robust for problems with degenerate diffusion coefficient.

6.2 Results of GMRes with Preconditioners Solved Inexactly by CGS-MG

In this subsection, we compare the performance of the preconditioned GMRes method using three different preconditioners \(V_{\mathcal {B}}(1,1), V_{\tilde{\mathcal {B}}}(1,1), V_{\mathcal {A}}(1,1)\). Here, we use \(V_{\mathcal {B}}(1,1), V_{\tilde{\mathcal {B}}}(1,1), V_{\mathcal {A}}(1,1)\) to represent the inexact preconditioners corresponding to \(\mathcal {B},\tilde{\mathcal {B}}\) and \(\mathcal {A}\) respectively. For example, \(V_{\mathcal {B}}(1,1)\) is one CGS-MG V-cycle with one pre-smoothing and one post-smoothing. As we can see from Tables 3 and 4 that GMRes method converges uniformly with respect to both h and \(\tau \). The CPU time for GMRes preconditioned by \(V_{\mathcal {B}}(1,1)\) is approximately half of the time when preconditioned by \(V_{\mathcal {A}}(1,1)\) which clearly demonstrate the efficiency of mass lumping.

6.3 Results of GMRes with Preconditioners Solved Inexactly by DGS-MG

In this subsection, we present the numerical results for GMRes method using inexact mass lumping preconditioners \(V_{\mathcal {B}}(1, 1)\) and \(V_{\tilde{\mathcal {B}}}(1, 1)\). Namely, the preconditioner systems \(\mathcal {B}\) and \(\tilde{\mathcal {B}}\) are solved approximately by one multigrid V-cycle using the decoupled smoother proposed in Sect. 4.3 with one pre-smoothing and one post-smoothing. Here, we choose the Jacobi damping parameter to be 0.5. Our numerical experiments show that the preconditioners have similar performance when the Jacobi damping parameter varies between 0.4 and 0.6. As we can see from Tables 5 and 6 that GMRes converges uniformly when varying h and \(\tau \).

6.4 Choice of Multigrid Parameters for Solving Preconditioner Systems

In the following, we compare the performance of the preconditioner \(\mathcal {B}\) solved by CGS-MG or DGS-MG with different types of cycles and different number of smoothing steps. From Tables 7, 8, 9 and 10, we can see that increasing the number of smoothing steps can reduce the number of GMRes iterations. However, the CPU time is not always decreasing since more smoothing steps may also increase the computational cost. The GMRes iteration numbers are similar when using W cycle or V cycle. Since W cycle is usually more expensive, its efficiency in terms of CPU time is not as good as V cycle in our experiment. For practical purposes, we suggest using V cycle with one pre- and one post-smoothing step for solving the preconditioner systems discussed in this work.

6.5 Results of GMRes with Preconditioners \(\tilde{\mathcal {B}}\) and \(\mathcal {B}_d\)

The theoretical analysis in Remark 2 shows that when \(\tau < Ch^2\), the block diagonal preconditioner \(\mathcal {B}_d\) defined by (5.13) is also good for GMRes method. In Tables 11 and 12, we demonstrate the performance of inexact \(\mathcal {B}_d\) preconditioner when \(\tau \) is very small. We use \(GS_{\mathcal {B}_d}(3)\) to represent three steps of Gauss–Seidel relaxation applied to \(\mathcal {B}_d\). It can be observed from the numerical results that \(GS_{\mathcal {B}_d}(3)\) is very efficient when \(\tau \) is very small. However, we would like to point out that from the approximation point of view, \(\tau \) should be of order \(h^2\). Hence, in practice, a reasonable choice of \(\tau \) may not be too small.

6.6 Robustness with Respect to Other Boundary Conditions

In previous numerical experiments, homogeneous Dirichlet boundary conditions are prescribed. In Tables 13 and 14, we show that the proposed preconditioners also work well for problems with other boundary conditions. In particular, we consider example 1 defined at the beginning of Sect. 6 and choose mixed homogeneous Neumann and Dirichlet boundary conditions 2.3 and 2.4 where \(\varGamma _N:=\{(x,y) | x=0, y\in (0,1)\}\cup \{(x,y) | x\in (0,1), y=0\}\) and \(\varGamma _D:=\partial \varOmega \backslash \varGamma _N\). There is a slight increase of GMRes iteration numbers when \(\tau \) is large and the preconditioned systems are solved by CGS-MG.

6.7 Spectrum Distribution of the Preconditioned Systems

In Figs. 1 and 2, we show the spectrum distribution of the preconditioned systems, \(\mathcal {B}^{-1}\mathcal {A}\) and \(\tilde{\mathcal {B}}^{-1}\mathcal {A}\), respectively, when varying h (left) or \(\tau \) (right) (with diffusion coefficients \(a(x_1,x_2)=b(x_1,x_2)=1\)). We can see that the eigenvalues are more concentrated when h decreases with fixed \(\tau \). They become more scattered when \(\tau \) gets smaller while h is fixed, but away from zero which means the original system is well conditioned. Moreover, the preconditioned system \(\tilde{\mathcal {B}}^{-1}\mathcal {A}\) has more favorable spectral property than \(\mathcal {B}^{-1}\mathcal {A}\) for GMRes method which is consistent with the numerical results reported in Sects. 6.2 and 6.3.

7 Conclusions

In this work, we propose mass lumping preconditioners for use with GMRes method to solve a class of two-by-two block linear systems which correspond to some discrete fourth order parabolic equations. Using consistent matrix in the discretization has the advantage of better solution accuracy as compared with applying mass lumping directly in the discretization. We propose to use lumped-mass matrix \(\bar{M}\) and optimal multigrid algorithm with either coupled or decoupled smoother to construct practical preconditioners which are shown to be computationally very efficient. Numerical experiments indicate that preconditioned GMRes method converges uniformly with respect to both h and \(\tau \) and is robust for problems with degenerate diffusion coefficient. We provide theoretical analysis about the spectrum bound of the preconditioned system and estimate the convergence rate of the preconditioned GMRes method.

References

Asaro, R.J., Tiller, W.A.: Interface morphology development during stress corrosion cracking: part I. Via surface diffusion. Metall. Trans. 3(7), 1789–1796 (1972)

Axelsson, O., Boyanova, P., Kronbichler, M., Neytcheva, M., Wu, X.: Numerical and computational efficiency of solvers for two-phase problems. Comput. Math. Appl. 65(3), 301–314 (2013)

Axelsson, O., Neytcheva, M.: Operator splitting for solving nonlinear, coupled multiphysics problems with application to numerical solution of an interface problem. TR2011-009 Institute for Information Technology, Uppsala University (2011)

Bai, Z.-Z., Benzi, M., Chen, F., Wang, Z.-Q.: Preconditioned MHSS iteration methods for a class of block two-by-two linear systems with applications to distributed control problems. IMA J. Numer. Anal. 33(1), 343–369 (2013)

Bai, Z.-Z., Chen, F., Wang, Z.-Q.: Additive block diagonal preconditioning for block two-by-two linear systems of skew-Hamiltonian coefficient matrices. Numer. Algorithms 62(4), 655–675 (2013)

Bai, Z.-Z., Golub, G.H., Ng, M.K.: Hermitian and skew-Hermitian splitting methods for non-Hermitian positive definite linear systems. SIAM J. Matrix Anal. Appl. 24(3), 603–626 (2003)

Baňas, L., Nürnberg, R.: A multigrid method for the Cahn–Hilliard equation with obstacle potential. Appl. Math. Comput. 213(2), 290–303 (2009)

Bänsch, E., Morin, P., Nochetto, R.H.: Surface diffusion of graphs: variational formulation, error analysis, and simulation. SIAM J. Numer. Anal. 42(2), 773–799 (2004)

Bänsch, E., Morin, P., Nochetto, R.H.: A finite element method for surface diffusion: the parametric case. J. Comput. Phys. 203(1), 321–343 (2005)

Bänsch, E., Morin, P., Nochetto, R.H.: Preconditioning a class of fourth order problems by operator splitting. Numer. Math. 118(2), 197–228 (2011)

Barrett, J.W., Blowey, J.F., Garcke, H.: Finite element approximation of the Cahn–Hilliard equation with degenerate mobility. SIAM J. Numer. Anal. 37(1), 286–318 (1999)

Benzi, M., Golub, G.H.: A preconditioner for generalized saddle point problems. SIAM J. Matrix Anal. Appl. 26(1), 20–41 (2004)

Bertozzi, A.L., Esedoglu, S., Gillette, A.: Inpainting of binary images using the Cahn–Hilliard equation. IEEE Trans. Image Process. 16(1), 285–291 (2007)

Blowey, J.F., Elliott, C.M.: The Cahn–Hilliard gradient theory for phase separation with non-smooth free energy part I: mathematical analysis. Eur. J. Appl. Math. 2(3), 233–280 (1991)

Blowey, J.F., Elliott, C.M.: The Cahn–Hilliard gradient theory for phase separation with non-smooth free energy. Part II: numerical analysis. Eur. J. Appl. Math. 3, 147–179 (1992)

Bosch, J., Kay, D., Stoll, M., Wathen, A.J.: Fast solvers for Cahn–Hilliard inpainting. SIAM J. Imaging Sci. 7(1), 67–97 (2014)

Bosch, J., Stoll, M., Benner, P.: Fast solution of Cahn–Hilliard variational inequalities using implicit time discretization and finite elements. J. Comput. Phys. 262, 38–57 (2014)

Boyanova, P., Do-Quang, M., Neytcheva, M.: Efficient preconditioners for large scale binary Cahn–Hilliard models. Comput. Methods Appl. Math. 12(1), 1–22 (2012)

Boyanova, P., Neytcheva, M.: Efficient numerical solution of discrete multi-component Cahn–Hilliard systems. Comput. Math. Appl. 67(1), 106–121 (2014)

Brandt, A., Dinar, N.: Multi-grid solutions to elliptic flow problems. Numerical Methods for Partial Differential Equations, pp. 53–147 (1979)

Brenner, S.C., Scott, R.: The Mathematical Theory of Finite Element Methods, vol. 15. Springer, Berlin (2008)

Cahn, J.W., Hilliard, J.E.: Free energy of a nonuniform system. I. Interfacial free energy. J. Chem. Phys. 28(2), 258–267 (2004)

Chen, C.M., Thomée, V.: The lumped mass finite element method for a parabolic problem. J. Aust. Math. Soc. Ser. B Appl. Math. 26(03), 329–354 (1985)

Chen, L.: iFEM: an integrated finite element methods package in MATLAB. Technical Report, University of California at Irvine (2009)

Chen, L.: Multigrid methods for constrained minimization problems and application to saddle point problems. Submitted (2014)

Chen, L.: Multigrid methods for saddle point systems using constrained smoothers. Comput. Math. Appl. 70(12), 2854–2866 (2015)

Choo, S.M., Lee, Y.J.: A discontinuous Galerkin method for the Cahn–Hilliard equation. J. Appl. Math. Comput. 18(1–2), 113–126 (2005)

Christon, M.A.: The influence of the mass matrix on the dispersive nature of the semi-discrete, second-order wave equation. Comput. Methods Appl. Mech. Eng. 173(1), 147–166 (1999)

Du, Q., Nicolaides, R.A.: Numerical analysis of a continuum model of phase transition. SIAM J. Numer. Anal. 28(5), 1310–1322 (1991)

Elliott, C.M.: The Cahn–Hilliard model for the kinetics of phase separation. In: Rodrigues, J.F. (ed.) Mathematical Models for Phase Change Problems, pp. 35–73. Springer (1989)

Elliott, C.M., French, D.A.: Numerical studies of the Cahn–Hilliard equation for phase separation. IMA J. Appl. Math. 38(2), 97–128 (1987)

Elliott, C.M., French, D.A.: A nonconforming finite-element method for the two-dimensional Cahn–Hilliard equation. SIAM J. Numer. Anal. 26(4), 884–903 (1989)

Elliott, C.M., French, D.A., Milner, F.A.: A second order splitting method for the Cahn–Hilliard equation. Numer. Math. 54(5), 575–590 (1989)

Elliott, C.M., Larsson, S.: Error estimates with smooth and nonsmooth data for a finite element method for the Cahn–Hilliard equation. Math. Comput. 58(198), 603–630 (1992)

Feng, X., Karakashian, O.: Fully discrete dynamic mesh discontinuous Galerkin methods for the Cahn–Hilliard equation of phase transition. Math. Comput. 76(259), 1093–1117 (2007)

Feng, X., Prohl, A.: Error analysis of a mixed finite element method for the Cahn–Hilliard equation. Numer. Math. 99(1), 47–84 (2004)

Feng, X., Prohl, A.: Numerical analysis of the Cahn–Hilliard equation and approximation for the Hele–Shaw problem. J. Comput. Math. 26(6), 767–796 (2008)

Fried, I.: Bounds on the spectral and maximum norms of the finite element stiffness, flexibility and mass matrices. Int. J. Solids Struct. 9(9), 1013–1034 (1973)

Furihata, D.: A stable and conservative finite difference scheme for the Cahn–Hilliard equation. Numer. Math. 87(4), 675–699 (2001)

Gaspar, F.J., Lisbona, F.J., Oosterlee, C.W., Wienands, R.: A systematic comparison of coupled and distributive smoothing in multigrid for the poroelasticity system. Numer. Linear Algebra Appl. 11(2–3), 93–113 (2004)

Gräser, C., Kornhuber, R.: On preconditioned Uzawa-type iterations for a saddle point problem with inequality constraints. In: Widlund, O.B., Keyes, D.E. (eds.) Domain Decomposition Methods in Science and Engineering XVI, Volume 55 of Lecture Notes in Computational Science Engineering, pp. 91–102. Springer, Berlin (2007)

Greer, J.B., Bertozzi, A.L.: \({H}^{1}\) solutions of a class of fourth order nonlinear equations for image processing. Discrete Contin. Dyn. Syst. 10(1/2), 349–366 (2004)

Gresho, P.M., Lee, R.L., Sani, R.L.: Advection-dominated flows, with emphasis on the consequences of mass lumping. Finite Elem. Fluids 1, 335–350 (1978)

He, Y., Liu, Y.: Stability and convergence of the spectral Galerkin method for the Cahn–Hilliard equation. Numer. Methods Partial Differ. Equ. 24(6), 1485–1500 (2008)

Henn, S.: A multigrid method for a fourth-order diffusion equation with application to image processing. SIAM J. Sci. Comput. 27(3), 831–849 (2005)

Hinton, E., Rock, T., Zienkiewicz, O.C.: A note on mass lumping and related processes in the finite element method. Earthq. Eng. Struct. Dyn. 4(3), 245–249 (1976)

Kay, D., Welford, R.: A multigrid finite element solver for the Cahn–Hilliard equation. J. Comput. Phys. 212(1), 288–304 (2006)

Kim, J., Kang, K., Lowengrub, J.: Conservative multigrid methods for Cahn–Hilliard fluids. J. Comput. Phys. 193(2), 511–543 (2004)

King, B.B., Stein, O., Winkler, M.: A fourth-order parabolic equation modeling epitaxial thin film growth. J. Math. Anal. Appl. 286(2), 459–490 (2003)

Lass, O., Vallejos, M., Borzi, A., Douglas, C.C.: Implementation and analysis of multigrid schemes with finite elements for elliptic optimal control problems. Computing 84(1–2), 27–48 (2009)

Mullen, R., Belytschko, T.: Dispersion analysis of finite element semidiscretizations of the two-dimensional wave equation. Int. J. Numer. Methods Eng. 18(1), 11–29 (1982)

Niclasen, D.A., Blackburn, H.M.: A comparison of mass lumping techniques for the two-dimensional Navier–Stokes equations.pdf. In: Twelfth Australasian Fluid Mechanics Conference, pp. 731–734. The Univesity of Sydney (1995)

Olshanskii, M.A., Reusken, A.: Navier–Stokes equations in rotation form: a robust multigrid solver for the velocity problem. SIAM J. Sci. Comput. 23(5), 1683–1706 (2002)

Quarteroni, A.: On mixed methods for fourth-order problems. Comput. Methods Appl. Mech. Eng. 24(1), 13–34 (1980)

Schöberl, J.: Multigrid methods for a parameter dependent problem in primal variables. Numer. Math. 84, 97–119 (1999)

Sun, Z.: A second-order accurate linearized difference scheme for the two-dimensional Cahn–Hilliard equation. Math. Comput. 64(212), 1463–1471 (1995)

Takacs, S., Zulehner, W.: Convergence analysis of multigrid methods with collective point smoothers for optimal control problems. Comput. Vis. Sci. 14(3), 131–141 (2011)

Trefethen, L.N., Embree, M.: Spectra and Pseudospectra: The Behavior of Nonnormal Matrices and Operators. Princeton Univeristy Press, Princeton (2005)

Ushijima, T.: On the uniform convergence for the lumped mass approximation of the heat equation. J. Fac. Sci. Univ. Tokyo 24, 477–490 (1977)

Ushijima, T.: Error estimates for the lumped mass approximation of the heat equation. Mem. Numer. Math. 6, 65–82 (1979)

Vanka, S.P.: Block-implicit multigrid solution of Navier–Stokes equations in primitive variables. J. Comput. Phys. 65, 138–158 (1986)

Wang, M., Chen, L.: Multigrid methods for the stokes equations using distributive Gauss–Seidel relaxations based on the least squares commutator. J. Sci. Comput. 56(2), 409–431 (2013)

Wise, S., Kim, J., Lowengrub, J.: Solving the regularized, strongly anisotropic Cahn–Hilliard equation by an adaptive nonlinear multigrid method. J. Comput. Phys. 226(1), 414–446 (2007)

Wittum, G.: Multigrid methods for Stokes and Navier–Stokes eqautions with transforming smoothers: algorithms and numerical results. Numer. Math. 54(5), 543–563 (1989)

Xia, Y., Xu, Y., Shu, C.W.: Local discontinuous Galerkin methods for the Cahn–Hilliard type equations. J. Comput. Phys. 227(1), 472–491 (2007)

Ye, X., Cheng, X.: The Fourier spectral method for the Cahn–Hilliard equations. Numer. Math. 171(1), 345–357 (2005)

Zhang, S., Wang, M.: A nonconforming finite element method for the Cahn–Hilliard equation. J. Comput. Phys. 229(19), 7361–7372 (2010)

Acknowledgments

B. Zheng would like to acknowledge the support by NSF Grant DMS-0807811 and a Laboratory Directed Research and Development (LDRD) Program from Pacific Northwest National Laboratory. L.P. Chen was supported by the National Natural Science Foundation of China under Grant No. 11501473. L. Chen was supported by NSF Grant DMS-1418934 and in part by NIH Grant P50GM76516. R.H. Nochetto was supported by NSF under Grants DMS-1109325 and DMS-1411808. J. Xu was supported by NSF Grant DMS-1522615 and in part by US Department of Energy Grant DE-SC0014400. Computations were performed using the computational resources of Pacific Northwest National Laboratory (PNNL) Institutional Computing cluster systems. The PNNL is operated by Battelle for the US Department of Energy under Contract DE-AC05-76RL01830.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Zheng, B., Chen, L., Hu, X. et al. Fast Multilevel Solvers for a Class of Discrete Fourth Order Parabolic Problems. J Sci Comput 69, 201–226 (2016). https://doi.org/10.1007/s10915-016-0189-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-016-0189-6