Abstract

In this paper, we propose a numerical algorithm based on the method of fundamental solutions (MFS) for the Cauchy problem in two-dimensional linear elasticity. Through the use of the double-layer potential function, we establish the invariance property for a problem with two different descriptions. To preserve this invariance property, we propose an invariant MFS, i.e., we formulate the MFS with an added constant and an additional constraint. The method combines classical Tikhonov regularization with the Morozov discrepancy principle, with the regularization parameter computed by Newton's method, to solve the inverse Cauchy problem. Several examples are given to verify numerically the efficiency of the proposed method. The numerical convergence, accuracy and stability of the method with respect to the number of source points, the distance between the pseudo-boundary and the real boundary of the solution domain, and the amount of noise added to the input data, respectively, are also analysed.


1 Introduction

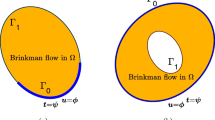

Consider an isotropic linear elastic material occupying an open, bounded, simply connected domain \(D\subset {\mathbb {R}^{2}}\). Let \(\varGamma \) be a portion of the boundary \(\partial D\) and \(\varGamma _1=\partial D\setminus \varGamma \). In the absence of body forces, the equilibrium equations for the Cauchy stress tensor \(\varvec{\sigma }(\mathbf{x })\) read
\[
\frac{\partial \sigma_{ij}}{\partial x_j}(\mathbf{x}) = 0, \qquad \mathbf{x}\in D,\quad i=1,2, \tag{1}
\]
with summation over repeated indices.

The stresses \(\varvec{\sigma }_{ij}\) are related to the strains \(\varvec{\varepsilon }_{ij}\) through the constitutive law (Hooke’s law)
\[
\sigma_{ij} = \lambda\,\delta_{ij}\,\varepsilon_{kk} + 2\mu\,\varepsilon_{ij}, \tag{2}
\]

where \(\delta _{ij}\) is the Kronecker delta, and \(\lambda \) and \(\mu \) are the Lamé constants. These are related to the shear modulus \(G\) and Poisson’s ratio \(\nu \) by \(\lambda =\frac{2G\nu }{1-2\nu }\) and \(\mu =G\).

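The strains \(\varvec{\varepsilon }_{ij}\) are defined in terms of the displacement vector \(\mathbf u (\mathbf{x })\) by
\[
\varepsilon_{ij} = \frac{1}{2}\left(\frac{\partial u_i}{\partial x_j}+\frac{\partial u_j}{\partial x_i}\right). \tag{3}
\]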

This equation is often referred to as the compatibility equation for small deformations.

Combining the three field equations (1)–(3) and eliminating \(\varvec{\varepsilon }\) and \(\varvec{\sigma }\), we find that the displacement field \(\mathbf u \) is governed by the Cauchy–Navier equation
\[
\mu\,\Delta\mathbf{u} + (\lambda+\mu)\,\nabla(\nabla\cdot\mathbf{u}) = \mathbf{0} \quad \text{in } D. \tag{4}
\]

Let \(\mathbf{n }\) be the outward unit normal to the boundary \(\partial {D}\). The traction vector \(T_n\mathbf u \) at a point \(\mathbf{x }\in \partial D\) is defined by
\[
(T_n\mathbf{u})_i(\mathbf{x}) = \sigma_{ij}(\mathbf{x})\,n_j(\mathbf{x}), \qquad i=1,2.
\]

In this paper, we consider the following Cauchy problem: given Cauchy data \(\mathbf f \) and \(\mathbf t \) on \(\varGamma \), find \(\mathbf u =(u_1,u_2)^\top \) such that \(\mathbf u \) satisfies Eq. (4) and the boundary conditions
\[
\mathbf{u} = \mathbf{f}, \qquad T_n\mathbf{u} = \mathbf{t} \qquad \text{on } \varGamma. \tag{5}
\]

We will determine both the displacement and the traction vectors on \(\varGamma _1=\partial D\backslash \varGamma \).

In the boundary conditions (5), the boundary \(\varGamma \) is overspecified because both the displacement and the traction vectors are prescribed on it. The boundary \(\partial D\setminus \varGamma \) is underspecified because both vectors are unknown there and have to be determined. This problem, termed the Cauchy problem, is much more difficult to solve, both analytically and numerically, than the direct problem, since it does not satisfy the general conditions of well-posedness. The uniqueness of the solution to such problems was established by Calderón [5]. Moreover, it is well known that these problems are ill-posed, i.e. the solution does not depend continuously on the Cauchy data, and a small perturbation in the data may cause a large change in the solution ([1, 10]).

There are many numerical methods in the literature for solving the Cauchy problem in linear elasticity. Liu [16] proposed a Lie-group integrator method for the inverse Cauchy problem in linear or nonlinear elasticity. Baranger and Andrieux [3] gave an optimization method, and Andrieux and Baranger [2] investigated the energy error minimization method for the 3D Cauchy problem. Sun et al. [33] gave an integral equation method for the 3D elastostatic Cauchy problem, in which a regularized solution of this ill-posed problem is constructed using the single-layer potential function. We refer to [19, 20] for the alternating iterative boundary element method. Comino et al. [6] applied the alternating iterative method [13] to the Cauchy problem in two-dimensional anisotropic elasticity, using the boundary element method (BEM) for the numerical implementation. Delvare et al. [7] gave a least-squares fitting method that solves the Cauchy problem through a sequence of optimization problems under equality constraints. Durand et al. [8] gave an iterative method for axisymmetric Cauchy problems in linear elasticity based on the finite element method. Marin and Johansson [29, 30] investigated relaxation procedures for alternating iterative algorithms for the Cauchy problem in linear isotropic elasticity. Turco [35] successfully combined the BEM with the conjugate gradient method and a stopping criterion based on a Monte Carlo simulation of generalized cross-validation (GCV). For other iterative methods, see [21]. Marin and Lesnic [23, 24, 26] investigated the BEM combined with singular value decomposition, regularization and Landweber iteration, respectively, and they also applied the MFS [25]. Turco [34] used the BEM to discretize the problem, together with the Tikhonov regularization method and the GCV criterion, which makes the solution process entirely automatic. The BEM was also investigated by Marin et al. [22] and Zabaras et al. [36]. 
The FEM was investigated by Maniatty et al. [17], Martin et al. [31] and Schnur and Zabaras [32]. Later, Marin and Johansson [30] applied the alternating iterative algorithm [13] to Cauchy problems in linear elasticity. Marin [27] studied the application of the MFS, in conjunction with the Tikhonov regularization method, to the numerical solution of the Cauchy problem in three-dimensional isotropic linear elasticity. For other methods, see [4, 14, 28].

The main purpose of this paper is to provide a novel MFS for the Cauchy problem in two-dimensional linear elasticity. The main idea is to approximate the solution of (6) in the following form

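where \(\mathbf a ^j=(a_j,b_j)^\top \), and \(\mathbf U (\mathbf{x })\) is the fundamental solution matrix (Kelvin’s solution) [15], given by
\[
U_{ij}(\mathbf{x}) = C_1\left(C_2\,\delta_{ij}\ln|\mathbf{x}| + \frac{x_i x_j}{|\mathbf{x}|^2}\right), \qquad i,j=1,2,
\]

with \(C_1={1}/[8\pi G(1-\bar{\nu })], ~C_2=4\bar{\nu }-3\). We set \(\bar{\nu }=\nu /(1+\nu )\) for plane stress and \(\bar{\nu }=\nu \) for plane strain.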
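The fundamental matrix can be checked numerically. The following Python sketch is our own illustration, not the authors' MATLAB code. It evaluates \(\mathbf U(\mathbf{x}-\mathbf{y})\) and uses central finite differences to verify that each column satisfies the Cauchy–Navier equation (4) away from the source point, with \(\lambda =2G\bar{\nu }/(1-2\bar{\nu })\) and \(\mu =G\). The function names, the step size `h` and the test points are illustrative assumptions.

```python
import numpy as np

def kelvin_U(x, y, G, nu_bar):
    """Fundamental matrix U(x - y) = C1 * (C2 * ln r * I + r r^T / r^2)."""
    r = np.asarray(x, float) - np.asarray(y, float)
    rho2 = r @ r                                   # |x - y|^2
    C1 = 1.0 / (8.0 * np.pi * G * (1.0 - nu_bar))
    C2 = 4.0 * nu_bar - 3.0
    # ln r is written as 0.5 * ln(r^2)
    return C1 * (0.5 * C2 * np.log(rho2) * np.eye(2) + np.outer(r, r) / rho2)

def navier_residual(u, x, G, nu_bar, h=1e-3):
    """Finite-difference value of mu*Lap(u) + (lam + mu)*grad(div u) at x."""
    mu = G
    lam = 2.0 * G * nu_bar / (1.0 - 2.0 * nu_bar)
    x = np.asarray(x, float)
    e = np.eye(2)
    H = np.zeros((2, 2, 2))                        # H[i, k, l] = d^2 u_i / dx_k dx_l
    for k in range(2):
        for l in range(2):
            H[:, k, l] = (u(x + h * e[k] + h * e[l]) - u(x + h * e[k] - h * e[l])
                          - u(x - h * e[k] + h * e[l]) + u(x - h * e[k] - h * e[l])) / (4.0 * h * h)
    laplacian = H[:, 0, 0] + H[:, 1, 1]
    grad_div = H[0, :, 0] + H[1, :, 1]             # d/dx_k (du_1/dx_1 + du_2/dx_2)
    return mu * laplacian + (lam + mu) * grad_div

# Usage: each column of U (a unit point force in direction j) should solve (4).
y = np.array([2.0, 1.5])
for j in range(2):
    col = lambda x, j=j: kelvin_U(x, y, G=1.0, nu_bar=0.34)[:, j]
    print(j, np.abs(navier_residual(col, [0.3, 0.2], G=1.0, nu_bar=0.34)).max())
```

The printed residuals are at finite-difference accuracy. If `navier_residual` is instead called with a different \(\bar{\nu }\) from the one used in `kelvin_U`, the residual becomes clearly nonzero, which shows that \(C_2=4\bar{\nu }-3\) is tied to the Lamé constants.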

The MFS is a meshless method. Unlike the BEM and the FEM, the MFS requires neither domain nor boundary meshing. This makes it extremely attractive for problems with complicated boundaries, and the method has consequently become increasingly popular. Because it handles complex boundaries with a simple implementation, the MFS is an ideal candidate for problems in which the boundary is of major importance or requires special attention, such as free boundary problems. For these reasons, the MFS has been used increasingly over the last decade for the numerical solution of inverse problems. Excellent surveys of the MFS and related methods over the past three decades have been given by Fairweather and Karageorghis [9] and Karageorghis et al. [11].

The outline of this paper is as follows. In Sect. 2, we formulate the invariance property of the solution of the boundary value problem and then present the invariant MFS. In Sect. 3, we solve the resulting linear system by the Tikhonov regularization method with the Morozov discrepancy principle. Finally, several numerical examples illustrate the effectiveness of our method and compare its accuracy with that of the classical MFS.

2 Formulation and Solution Method

2.1 The Invariance Property of the Solution

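Let \(D\subset {\mathbb {R}^{2}}\) be a bounded and connected domain with piecewise smooth boundary \(\partial {D}\). We consider the following boundary value problem: given \(\mathbf{g }\) on \(\partial D\), find \(\mathbf u \) such that \(\mathbf u \) satisfies
\[
\mu\,\Delta\mathbf{u} + (\lambda+\mu)\,\nabla(\nabla\cdot\mathbf{u}) = \mathbf{0} \ \text{in } D, \qquad \mathbf{u} = \mathbf{g} \ \text{on } \partial D. \tag{P.1}
\]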

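We also consider a second problem. Let \(D'\subset {\mathbb {R}^{2}}\) be the bounded and connected domain obtained from \(D\) by scaling, i.e., \(\mathbf{x }'=\hat{\alpha }\mathbf{x }\in D'\) for every \(\mathbf{x }\in D\), where \(\hat{\alpha }>0\). Thus \(D'\) is an enlarged (\(\hat{\alpha }>1\)) or compressed (\(\hat{\alpha }<1\)) copy of \(D\). Let \(\mathbf u '\) be the solution of the problem
\[
\mu\,\Delta\mathbf{u}' + (\lambda+\mu)\,\nabla(\nabla\cdot\mathbf{u}') = \mathbf{0} \ \text{in } D', \qquad \mathbf{u}' = \mathbf{g}' \ \text{on } \partial D'. \tag{P.2}
\]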

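If the boundary data satisfy \(\mathbf{g }({\varvec{x}})=\mathbf{g }'(\hat{\alpha }\mathbf{x })\) for \(\mathbf{x }\in \partial D\), it is well known that the two solutions are related by \(\mathbf u (\mathbf{x })=\mathbf u '(\hat{\alpha }\mathbf{x })\) for \(\mathbf{x }\in D\). Indeed, every term of the Cauchy–Navier equation is a second-order derivative. Hence \(\mathbf{v}(\mathbf{x }):=\mathbf u '(\hat{\alpha }\mathbf{x })\) satisfies the same equation in \(D\) (each term is multiplied by \(\hat{\alpha }^2\)) and the same boundary condition as \(\mathbf u \), and uniqueness gives \(\mathbf{v}=\mathbf u \). We call this the invariance under trivial coordinate changes in the problem description, such as the scaling of coordinates.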

We now show that (P.1) and (P.2) are, in fact, equivalent.

First, we discuss the solvability of problems (P.1) and (P.2). It is well known that such a problem has a unique solution in \(\mathbf{H }^{1}\). Moreover, \(\mathbf u \) has the following double-layer representation with a charge density \(\varvec{\varphi }({\varvec{x}})\in L^2(\partial D)\):

whilst \(\mathbf u '\), with a charge density \(\varvec{\phi }({\varvec{x}}')\in L^2(\partial D')\), is given by

where \(T_{n({\varvec{y}})}\mathbf U ({\varvec{x}},{\varvec{y}})=(\mathbf T _{ij})\) is given by

with \(r(\mathbf{x },\mathbf{y })=|\mathbf{x }-\mathbf{y }|\), \(C_3=-\frac{1}{4\pi (1-\bar{\nu })}\) and \(C_4=1-2\bar{\nu }\). Applying the jump relation [15, p. 18] to (8) and (9), we obtain

and

respectively. From these equations, the charge densities \(\varvec{\varphi }({\varvec{x}})\) and \(\varvec{\phi }({\varvec{x}}')\) can be determined.

By introducing the operator

we obtain the charge density

provided that \(\mathbf I -\mathbf K \) is invertible.

Theorem 1

The operator \(\mathbf I -\mathbf K \) is injective.

Proof

It suffices to prove that the homogeneous equation \((\mathbf I -\mathbf K )\varvec{\varphi }=0\) has only the trivial solution. Define the double-layer potential \(\varvec{v}\) by (8). By the jump relation [15, p. 18], \(\varvec{v}_{-}=\frac{1}{2}(\mathbf K \varvec{\varphi }-\varvec{\varphi })=0\). Therefore, \(\varvec{v}\) solves the boundary value problem in \(D\) with a homogeneous Dirichlet boundary condition, so \(\varvec{v}=0\) in \(D\). This yields \({T}_n \varvec{v}_{-}=0\). By the jump relation, \({T}_n \varvec{v}_{+}={T}_n \varvec{v}_{-}=0\) on \(\partial D\). We observe that \(\varvec{v}=\mathcal {O}(\frac{1}{|{\varvec{x}}|})\) as \(|{\varvec{x}}|\longrightarrow \infty \). The uniqueness of the solution of the exterior boundary value problem for the Cauchy–Navier equation then implies that \(\varvec{v}\) vanishes in the exterior of \(D\), i.e. \(\varvec{v}=0\) in \(\mathbb {R}^2\setminus D\). Hence, by the jump relation, \(\varvec{\varphi }=\varvec{v}_{+}-\varvec{v}_{-}=0\) on \(\partial D\), which completes the proof. \(\square \)

By Theorem 1, \(\varvec{\varphi }\) and \(\varvec{\phi }\) can be obtained from (10) and (11), respectively. We now show that \(\varvec{\varphi }({\varvec{x}})=\varvec{\phi }(\hat{\alpha } {\varvec{x}})\) for \({\varvec{x}} \in \partial D\).

To see why (6) and (7) have the invariance property, consider (9) together with the integral transform

Consequently, (11) can be rewritten in the form

From (10), (13) and Theorem 1, it follows that \(\varvec{\varphi }(\mathbf{x })=\varvec{\phi }(\hat{\alpha }\mathbf{x })\), provided that \(\mathbf{g }(\mathbf{x })=\mathbf{g }'(\hat{\alpha }\mathbf{x })\) for \(\mathbf x \in \partial D\).
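The change of variables behind (13) can be summarized as follows. This is our own sketch. It assumes, as for the standard Kelvin traction kernel [15], that \(\mathbf T({\varvec{x}},{\varvec{y}})\) equals \(C_3/r\) times a factor that depends only on the unit vector \(({\varvec{y}}-{\varvec{x}})/r\) and the normal \(\mathbf n({\varvec{y}})\). Both of these are unchanged when \(\partial D\) is scaled to \(\partial D'\), while arc length is multiplied by \(\hat{\alpha }\):

\[
\begin{aligned}
\mathbf T(\hat{\alpha}{\varvec{x}},\hat{\alpha}{\varvec{y}}) &= \hat{\alpha}^{-1}\,\mathbf T({\varvec{x}},{\varvec{y}}), \qquad \mathrm{d}s(\hat{\alpha}{\varvec{y}}) = \hat{\alpha}\,\mathrm{d}s({\varvec{y}}),\\
\int_{\partial D'} \mathbf T(\hat{\alpha}{\varvec{x}},{\varvec{y}}')\,\varvec{\phi}({\varvec{y}}')\,\mathrm{d}s({\varvec{y}}')
&= \int_{\partial D} \hat{\alpha}^{-1}\,\mathbf T({\varvec{x}},{\varvec{y}})\,\varvec{\phi}(\hat{\alpha}{\varvec{y}})\,\hat{\alpha}\,\mathrm{d}s({\varvec{y}})
= \int_{\partial D} \mathbf T({\varvec{x}},{\varvec{y}})\,\varvec{\phi}(\hat{\alpha}{\varvec{y}})\,\mathrm{d}s({\varvec{y}}).
\end{aligned}
\]

Hence the boundary integral equation for \(\varvec{\phi }\) on \(\partial D'\), evaluated at \(\hat{\alpha }{\varvec{x}}\), coincides with the equation on \(\partial D\) for the density \({\varvec{x}}\mapsto \varvec{\phi }(\hat{\alpha }{\varvec{x}})\) with data \(\mathbf g '(\hat{\alpha }{\varvec{x}})\). The same computation applies if the representation uses the transpose of \(\mathbf T\).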

From the above discussion, we conclude that, for (P.1) and (P.2),
\[
\mathbf{u}(\mathbf{x}) = \mathbf{u}'(\hat{\alpha}\mathbf{x}), \qquad \mathbf{x}\in D,
\]

provided that \(\mathbf{g }(\mathbf{x })=\mathbf{g }'(\hat{\alpha }\mathbf{x })\) for \(\mathbf{x }\in \partial D\).

2.2 The Invariant MFS

One popular method for solving problems (P.1) and (P.2) is the MFS. The classical MFS approximates \(\mathbf u \) in the form
\[
\mathbf{u}^{(M)}_C(\mathbf{x}) = \sum_{j=1}^{M} \mathbf{U}(\mathbf{x}-\mathbf{y}^j)\,\mathbf{a}^j, \qquad \mathbf{x}\in\overline{D}, \tag{14}
\]

where \(\mathbf{y }^j, j=1,\ldots ,M,\) are source points suitably chosen on the boundary of a domain \(B\) with \(\overline{D}\subset B\). The coefficients \(a_j\) and \(b_j\) are determined from the boundary conditions, which are imposed as interpolation conditions at collocation points.

However, the approximation \(\mathbf u ^{(M)}_C(\mathbf{x })\) of \(\mathbf u \) constructed in this way lacks an essential property, namely invariance under trivial coordinate changes in the problem description, such as the scaling of coordinates:

and the origin shift for the boundary data:

To be more specific, we expect that the approximation \(\mathbf u ^{(M)}_C(\mathbf{x })\) should transform as

under the transformations (15) and (16). However, \(\mathbf u ^{(M)}_C(\mathbf{x })\) does not behave in this way. Indeed, for \(\mathbf{u '}^{(M)}_C=({u'_1}^{(M)}_C,{u'_2}^{(M)}_C)^{\top }\), we have

and

Thus, we have

Since, in general, \(\sum _{j=1}^{M}\mathbf a ^j\ne 0\), it follows that \(\mathbf u '^{(M)}_C(\hat{\alpha }\mathbf{x })\ne \mathbf u ^{(M)}_C( \mathbf{x })\). The origin-shift requirement (18) is easy to satisfy, whereas the scaling requirement is not.
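The scaling defect of the classical MFS can be checked numerically. Because \(\ln|\hat{\alpha }\mathbf{x}| = \ln\hat{\alpha } + \ln|\mathbf{x}|\), the fundamental matrix satisfies \(\mathbf U(\hat{\alpha }\mathbf{x}) = \mathbf U(\mathbf{x}) + C_1C_2\ln\hat{\alpha }\,\mathbf I\). Consequently, if the coefficients are kept fixed, scaling the evaluation and source points together shifts the classical approximation (14) by \(C_1C_2\ln\hat{\alpha }\sum_j\mathbf a^j\). The Python sketch below confirms this and shows that the shift disappears once the coefficients sum to zero, as required by (21). The random coefficients, the source radius 3 and the factor \(\hat{\alpha }=2.5\) are arbitrary illustrative choices.

```python
import numpy as np

def kelvin_U(r, G=1.0, nu_bar=0.34):
    """Fundamental matrix U(r) for r = x - y."""
    rho2 = r @ r
    C1 = 1.0 / (8.0 * np.pi * G * (1.0 - nu_bar))
    C2 = 4.0 * nu_bar - 3.0
    return C1 * (0.5 * C2 * np.log(rho2) * np.eye(2) + np.outer(r, r) / rho2)

def mfs_eval(x, sources, coeffs, c=None):
    """sum_j U(x - y^j) a^j (+ c); c = None gives the classical MFS form (14)."""
    total = sum(kelvin_U(x - y) @ a for y, a in zip(sources, coeffs))
    return total if c is None else total + c

rng = np.random.default_rng(0)
M, alpha_hat = 12, 2.5
angles = 2.0 * np.pi * np.arange(M) / M
sources = 3.0 * np.column_stack([np.cos(angles), np.sin(angles)])
coeffs = rng.standard_normal((M, 2))               # arbitrary a^j with nonzero sum
x = np.array([0.4, -0.2])

# Classical MFS: scaling x and y^j shifts the value by C1*C2*ln(alpha_hat)*sum_j a^j.
C1, C2 = 1.0 / (8.0 * np.pi * (1.0 - 0.34)), 4.0 * 0.34 - 3.0
shift_classical = mfs_eval(alpha_hat * x, alpha_hat * sources, coeffs) - mfs_eval(x, sources, coeffs)
predicted = C1 * C2 * np.log(alpha_hat) * coeffs.sum(axis=0)

# Invariant MFS: enforce sum_j a^j = 0, as in (21), and add a constant c.
coeffs_inv = coeffs - coeffs.mean(axis=0)
c = np.array([0.1, -0.3])
shift_invariant = (mfs_eval(alpha_hat * x, alpha_hat * sources, coeffs_inv, c)
                   - mfs_eval(x, sources, coeffs_inv, c))
print(shift_classical, predicted, shift_invariant)
```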

From Sect. 2.1, we know that the analytic solution \(\mathbf u \) has the invariance property \(\mathbf u (\mathbf{x })=\mathbf u '(\hat{\alpha }\mathbf{x })\). We expect the meshless approximation to have this property as well. More precisely, we should have \(\mathbf u '^{(M)}_C(\hat{\alpha }\mathbf{x })=\mathbf u ^{(M)}_C( \mathbf{x })\) when the collocation points \(\mathbf{x }\rightarrow \hat{\alpha }\mathbf{x }\) and the source points \(\mathbf{y }^j\rightarrow \hat{\alpha }\mathbf{y }^j\) are stretched or compressed simultaneously by the same factor. However, (19) shows that the classical MFS approximation \(\mathbf u _C^{(M)}(\mathbf{x })\) defined by (14) does not have this property. Based on this observation, the invariant MFS uses an approximation of the form
\[
\mathbf{u}^{(M)}_I(\mathbf{x}) = \sum_{j=1}^{M} \mathbf{U}(\mathbf{x}-\mathbf{y}^j)\,\mathbf{a}^j + \mathbf{c}, \tag{20}
\]

where \(\mathbf c =(c_1,c_2)^\top \). The appended constant in (20) is not the most important ingredient. The major difference between the conventional MFS and the invariant MFS is the following constraint on the coefficients \(\mathbf a ^j\):
\[
\sum_{j=1}^{M} \mathbf{a}^j = \mathbf{0}. \tag{21}
\]

By (19), the invariant approximation \(\mathbf u _{I}^{(M)}\) enjoys the expected invariance properties under (21), since the term involving \(\sum _{j=1}^{M}\mathbf a ^j\) then vanishes.

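We now apply the invariant MFS to the Cauchy problem. In the collocation method, the coefficients are determined from the interpolation conditions at \(N\) collocation points \(\mathbf{x }^i\) uniformly distributed on \(\varGamma \). This requires solving a system of \(2N+1\) vector equations consisting of (21) and
\[
\mathbf{u}^{(M)}_I(\mathbf{x}^i) = \mathbf{f}(\mathbf{x}^i), \qquad T_n\mathbf{u}^{(M)}_I(\mathbf{x}^i) = \mathbf{t}(\mathbf{x}^i), \qquad i=1,\ldots,N. \tag{22}
\]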

We recast (21) and (22) as a system of \(4N+2\) linear algebraic equations in \(2M+2\) unknowns, which can be written generically as
\[
\fancyscript{A}\,\mathbf{d} = \mathbf{h}, \tag{23}
\]

where matrix \(\fancyscript{A}\), the unknown vector \(\mathbf d \) and the right-hand side \(\mathbf h \) are given by

All other elements of \(\fancyscript{A}\) are zero.

To determine the coefficients \(a_j\) and \(b_j\) uniquely, the number \(N\) of boundary collocation points and the number \(M\) of source points must satisfy \(4N+2\ge 2M+2\), i.e. \(2N\ge M\). However, the system of linear algebraic equations (23) cannot be solved by direct methods, such as the ordinary least-squares method, because such an approximation would produce a highly unstable solution. A regularization method is therefore required.
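A minimal Python sketch of how the \((4N+2)\times (2M+2)\) system (23) can be assembled follows. The paper's explicit entries of \(\fancyscript{A}\) are not reproduced here, so two orderings are our assumptions: the unknowns are ordered as \(\mathbf d=(a_1,b_1,\ldots ,a_M,b_M,c_1,c_2)^\top \), and the rows as displacements, then tractions, then the two scalar constraints of (21). Tractions are computed from (2), (3) and the analytic gradient of \(\mathbf U\). The function names are hypothetical.

```python
import numpy as np

def kelvin_parts(r, G, nu_bar):
    """Return U(r) and its gradient dU[i, j, k] = dU_ij/dx_k, for r = x - y."""
    rho2 = r @ r
    C1 = 1.0 / (8.0 * np.pi * G * (1.0 - nu_bar))
    C2 = 4.0 * nu_bar - 3.0
    I = np.eye(2)
    U = C1 * (0.5 * C2 * np.log(rho2) * I + np.outer(r, r) / rho2)
    dU = C1 * (C2 * np.einsum('ij,k->ijk', I, r) / rho2
               + (np.einsum('ik,j->ijk', I, r) + np.einsum('jk,i->ijk', I, r)) / rho2
               - 2.0 * np.einsum('i,j,k->ijk', r, r, r) / rho2**2)
    return U, dU

def kelvin_traction(r, n, G, nu_bar):
    """2x2 matrix whose column j is the traction sigma n of the field U e_j."""
    lam = 2.0 * G * nu_bar / (1.0 - 2.0 * nu_bar)
    _, dU = kelvin_parts(r, G, nu_bar)
    T = np.empty((2, 2))
    for j in range(2):
        grad = dU[:, j, :]                         # grad[i, k] = d(U e_j)_i / dx_k
        eps = 0.5 * (grad + grad.T)                # strain, Eq. (3)
        sigma = lam * np.trace(eps) * np.eye(2) + 2.0 * G * eps   # Hooke's law, Eq. (2)
        T[:, j] = sigma @ n
    return T

def assemble_invariant_mfs(xc, normals, ys, f, t, G, nu_bar):
    """Build A (4N+2 x 2M+2) and h for the invariant MFS Cauchy system (21)-(22)."""
    N, M = len(xc), len(ys)
    A = np.zeros((4 * N + 2, 2 * M + 2))
    h = np.zeros(4 * N + 2)
    for i in range(N):
        for j in range(M):
            U, _ = kelvin_parts(xc[i] - ys[j], G, nu_bar)
            A[2*i:2*i+2, 2*j:2*j+2] = U                          # displacement rows
            A[2*N+2*i:2*N+2*i+2, 2*j:2*j+2] = kelvin_traction(xc[i] - ys[j], normals[i], G, nu_bar)
        A[2*i:2*i+2, 2*M:2*M+2] = np.eye(2)        # constant c enters the displacements only
        h[2*i:2*i+2] = f[i]
        h[2*N+2*i:2*N+2*i+2] = t[i]
    A[4*N, 0:2*M:2] = 1.0                          # sum_j a_j = 0
    A[4*N+1, 1:2*M:2] = 1.0                        # sum_j b_j = 0
    return A, h
```

The constant \(\mathbf c\) produces no stress, so its columns vanish in the traction rows.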

3 Regularization Method for Solving the Linear Algebraic Equations

In this section, we use the Tikhonov regularization method with the Morozov discrepancy principle (see [12]) to solve the system (23).

In practice, the data are contaminated by (Gaussian) noise, so we need to consider the perturbed system
\[
\fancyscript{A}\,\mathbf{d} = \mathbf{h}^\delta. \tag{24}
\]

Here, \(\mathbf{h }^\delta \) denotes the measured noisy data satisfying
\[
\|\mathbf{h}^\delta - \mathbf{h}\| \le \delta,
\]

where \(\delta \) denotes the noise level.

The linear system (24) is, in general, severely ill-conditioned. An important tool for the analysis of rank-deficient and discrete ill-posed problems is the singular value decomposition (SVD). The SVD of the matrix \(\fancyscript{A}\) in (24) is
\[
\fancyscript{A} = \varvec{W}\varvec{\Sigma}\varvec{V}^\top = \sum_{i=1}^{2M+2} \lambda_i\,\varvec{W}_i\varvec{V}_i^\top,
\]

where \(\varvec{W}\) and \(\varvec{V}\) have dimensions \((4N+2)\times (2M+2)\) and \((2M+2)\times (2M+2)\), respectively. Their columns are the orthonormal left and right singular vectors \(\varvec{W}_i\) and \(\varvec{V}_i\), and \(\varvec{\Sigma }=\mathrm{diag}(\lambda _1,\ldots ,\lambda _{2M+2})\) is a diagonal matrix whose non-negative diagonal entries, the singular values of \(\fancyscript{A}\), are arranged in decreasing order. This decomposition makes explicit the degree of ill-conditioning of \(\fancyscript{A}\) through the ratio between the maximum and minimum singular values. It also allows the (least-squares) solution of the system (24) to be written, for nonzero singular values, in the form
\[
\mathbf{d} = \sum_{i=1}^{2M+2} \frac{\varvec{W}_i^\top\mathbf{h}^\delta}{\lambda_i}\,\varvec{V}_i.
\]

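Ill-conditioned systems usually have extremely small singular values \(\lambda _i\), so the division by \(\lambda _i\) in this expression strongly amplifies the noise in \(\mathbf h ^\delta \). This clearly shows why discrete ill-posed problems are difficult to handle. The degree of ill-conditioning is quantified by the condition number
\[
\mathrm{cond}(\fancyscript{A}) = \frac{\lambda_1}{\lambda_{2M+2}}.
\]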

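For a given regularization parameter \(\alpha >0\), the Tikhonov regularization of system (24) consists in solving
\[
(\alpha \mathbf{I} + \fancyscript{A}^\top\fancyscript{A})\,\mathbf{d}^\delta_\alpha = \fancyscript{A}^\top\mathbf{h}^\delta. \tag{25}
\]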

By introducing the regularization operator
\[
R_\alpha = (\alpha \mathbf{I} + \fancyscript{A}^\top\fancyscript{A})^{-1}\fancyscript{A}^\top,
\]

we obtain the regularized solution \(\mathbf d ^\delta _{\alpha }=R_\alpha \mathbf h ^\delta \) of (24).
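The regularized solution \(R_\alpha \mathbf h^\delta \) can be computed either from the normal equations (25) or, equivalently, from the SVD with filter factors \(\lambda _i^2/(\lambda _i^2+\alpha )\), i.e. \(\mathbf d^\delta _\alpha =\sum _i \frac{\lambda _i}{\lambda _i^2+\alpha }(\varvec{W}_i^\top \mathbf h^\delta )\varvec{V}_i\). The following minimal Python sketch implements both forms. The function names and the random test matrix are our own illustrative choices.

```python
import numpy as np

def tikhonov_normal(A, h, alpha):
    """Solve (alpha I + A^T A) d = A^T h, i.e. Eq. (25)."""
    n = A.shape[1]
    return np.linalg.solve(alpha * np.eye(n) + A.T @ A, A.T @ h)

def tikhonov_svd(A, h, alpha):
    """Same solution via the SVD: d = sum_i lambda_i / (lambda_i^2 + alpha) (W_i^T h) V_i."""
    W, s, Vt = np.linalg.svd(A, full_matrices=False)
    return Vt.T @ (s / (s**2 + alpha) * (W.T @ h))

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 22))
h = rng.standard_normal(40)
for alpha in (1e-3, 1e-1, 10.0):
    d1, d2 = tikhonov_normal(A, h, alpha), tikhonov_svd(A, h, alpha)
    print(alpha, np.linalg.norm(d1 - d2), np.linalg.norm(d1))
```

For a severely ill-conditioned \(\fancyscript{A}\), forming \(\fancyscript{A}^\top \fancyscript{A}\) squares the condition number, so the SVD form is usually the numerically safer choice.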

A suitable regularization parameter \(\alpha \) is crucial for the accuracy of the regularized solution. We refer to [18] for an investigation of regularization parameters and error estimates. A search for the best parameter is beyond the scope of this work. Instead, we choose \(\alpha \) by the Morozov discrepancy principle, i.e., as the solution \(\alpha (\delta )\) of \(\Vert \fancyscript{A}\mathbf d ^\delta _{\alpha }-\mathbf h ^\delta \Vert =\delta \). The computation of \(\alpha (\delta )\) can be carried out with Newton’s method [33]. The derivative of the mapping \(\alpha \mapsto \mathbf d ^\delta _{\alpha }\) is given by the solution of the equation \((\alpha I + \fancyscript{A}^\top \fancyscript{A})\frac{d}{d\alpha }\mathbf d ^\delta _{\alpha }=-\mathbf d ^\delta _{\alpha }\), as is easily seen by differentiating (25) with respect to \(\alpha \). Once the regularization parameter \(\alpha ^*\) has been determined in this way, we solve (24) to obtain the regularized solution \(\mathbf d ^\delta _{\alpha ^*}=R_{\alpha ^*} \mathbf h ^\delta \). Substituting this approximation of \(\mathbf d \) into (20) yields the approximation \(\mathbf u ^{(M)}_I(\mathbf{x })\).
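The following sketch implements this parameter choice with the discrepancy function \(F(\alpha )=\Vert \fancyscript{A}\mathbf d^\delta _\alpha -\mathbf h^\delta \Vert ^2-\delta ^2\). Using \(\fancyscript{A}^\top (\fancyscript{A}\mathbf d^\delta _\alpha -\mathbf h^\delta )=-\alpha \mathbf d^\delta _\alpha \) from (25) together with the derivative equation above gives \(F'(\alpha )=2\alpha \,(\mathbf d^\delta _\alpha )^\top (\alpha I+\fancyscript{A}^\top \fancyscript{A})^{-1}\mathbf d^\delta _\alpha >0\). The sketch applies Newton's method to \(\beta =1/\alpha \) rather than to \(\alpha \) itself. This variant is our choice and not necessarily the authors'. It is made because \(F\) is convex and decreasing in \(\beta \), so Newton iterates started where \(F>0\) increase monotonically towards the root. All quantities are evaluated through the SVD, and the test matrix is synthetic.

```python
import numpy as np

def morozov_newton(A, h_delta, delta, tol=1e-8, max_iter=500):
    """Tikhonov parameter solving ||A d_alpha - h_delta|| = delta, via Newton in beta = 1/alpha."""
    W, s, Vt = np.linalg.svd(A, full_matrices=False)
    w = W.T @ h_delta
    perp2 = max(h_delta @ h_delta - w @ w, 0.0)    # part of h_delta outside range(A)
    if not perp2 < delta**2 < h_delta @ h_delta:
        raise ValueError("discrepancy equation has no positive root")
    beta = 1.0 / (1e3 * s[0]**2)                   # large alpha: residual close to ||h_delta|| > delta
    for _ in range(max_iter):
        alpha = 1.0 / beta
        F = np.sum((alpha * w / (s**2 + alpha))**2) + perp2 - delta**2
        if abs(F) <= tol * delta**2:
            break
        dF = -2.0 * alpha**3 * np.sum(s**2 * w**2 / (s**2 + alpha)**3)   # dF/dbeta < 0
        beta -= F / dF                             # monotone increase of beta (F convex in beta)
    alpha = 1.0 / beta
    d = Vt.T @ (s * w / (s**2 + alpha))            # d_alpha = R_alpha h_delta
    return alpha, d

rng = np.random.default_rng(0)
m, n = 40, 30
Q1, _ = np.linalg.qr(rng.standard_normal((m, n)))
Q2, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = Q1 @ np.diag(np.logspace(0, -8, n)) @ Q2.T    # singular values 1 ... 1e-8
d_true = rng.standard_normal(n)
h = A @ d_true
e = rng.standard_normal(m)
delta = 1e-3 * np.linalg.norm(h)
h_delta = h + delta * e / np.linalg.norm(e)       # ||h_delta - h|| = delta
alpha, d = morozov_newton(A, h_delta, delta)
print(alpha, np.linalg.norm(A @ d - h_delta) / delta)
```

The printed ratio should be close to 1, which means the discrepancy principle is satisfied.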

In order to analyze the accuracy of the numerical results obtained, we introduce the errors \(err(u_i)(i=1,2)\) and \(err(t_i)(i=1,2)\) given by

and

where \(\mathbf{x }^l, l=1,\ldots ,L\), are \(L\) uniformly distributed points on the underspecified boundary \(\varGamma _1\). Here, \(\mathbf u ^{(an)}\) and \(\mathbf t ^{(an)}\) are the exact displacement and traction vectors, and \(\mathbf u ^{({\alpha })}\) and \(\mathbf t ^{({\alpha })}\) are the numerical displacement and traction vectors obtained for the value \({\alpha }\) of the regularization parameter. When \({u_i}^{(an)}=0\) or \({t_i}^{(an)}=0\), we use

or

4 Numerical Examples and Discussion

In this section, we report some examples that demonstrate the effectiveness of our algorithm, which was implemented in MATLAB. We consider an isotropic linear elastic medium characterized by the material constants \(G=3.35\times 10^{10}\,\hbox {N}/{\hbox {m}^2}\) and \(\nu =0.34\), corresponding to a copper alloy. The Lamé constants are obtained from \(\mu =G\) and \(\lambda =\frac{2G\nu }{1-2\nu }\). For plane stress, \(\nu \) is replaced by \(\frac{\nu }{1+\nu }\).
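The Lamé constants used in the examples follow directly from \(G\) and \(\nu \). The small Python helper below (our own) also applies the plane-stress substitution \(\nu \mapsto \nu /(1+\nu )\). The resulting plane-stress value coincides with the familiar expression \(2G\nu /(1-\nu )\).

```python
def lame_constants(G, nu, plane_stress=False):
    """Return (lambda, mu) with mu = G and lambda = 2 G nu_bar / (1 - 2 nu_bar)."""
    nu_bar = nu / (1.0 + nu) if plane_stress else nu
    return 2.0 * G * nu_bar / (1.0 - 2.0 * nu_bar), G

G, nu = 3.35e10, 0.34   # copper alloy, G in N/m^2
lam_strain, mu = lame_constants(G, nu)
lam_stress, _ = lame_constants(G, nu, plane_stress=True)
print(f"{lam_strain:.4e} {lam_stress:.4e} {mu:.4e}")
```

For the copper alloy this gives \(\lambda \approx 7.12\times 10^{10}\) N/m\(^2\) for plane strain and \(\lambda \approx 3.45\times 10^{10}\) N/m\(^2\) for plane stress, with \(\mu =G\) in both cases.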

In all examples except Example 3, the source points are located on the circle \(\partial B=\{{\mathbf{x }}\in \mathbb {R}^2:~x_1^2+x_2^2=25^2\}\), away from the real boundary.

Example 1

[Simply connected, smooth geometry] Consider the case in which the exact solution to the Navier equation is

In this example, \(D=\{{\mathbf{x }}\in \mathbb {R}^2:~x_1^2+x_2^2<1\}\), and \(\varGamma \) is a portion of the unit circle with the parametric representation \(\varGamma (\varTheta )=\{{\mathbf{x }}\in \partial D:~0\le \theta ({\mathbf{x }})\le \varTheta \}\). Here \(\theta ({\mathbf{x }})\) is the polar angle of \({\mathbf{x }}\), i.e. \({\mathbf{x }}=(\cos \theta , \sin \theta )\). Here, \(t_j=\sigma _0n_j\).
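For concreteness, the point sets of Example 1 can be generated as shown below. The text only states that the points are uniformly distributed, so the midpoint-type placement of the collocation and evaluation angles is our assumption, as is the number \(L=50\) of evaluation points. The outward normal on the unit circle equals the point itself, and the sources lie on \(\partial B\) with radius \(R=25\).

```python
import numpy as np

def example1_points(Theta, N, L, M, R=25.0):
    """Collocation points/normals on Gamma(Theta), evaluation points on Gamma_1, sources on dB."""
    on_circle = lambda t: np.column_stack([np.cos(t), np.sin(t)])
    theta_c = Theta * (np.arange(N) + 0.5) / N                          # 0 < theta < Theta
    theta_e = Theta + (2.0 * np.pi - Theta) * (np.arange(L) + 0.5) / L  # Theta < theta < 2*pi
    theta_s = 2.0 * np.pi * np.arange(M) / M
    x_col = on_circle(theta_c)
    normals = x_col.copy()                         # outward unit normal of the unit circle
    return x_col, normals, on_circle(theta_e), R * on_circle(theta_s)

# 20 collocation points and 40 source points, as used for Fig. 1 (Theta = pi/4).
x_col, normals, x_eval, y_src = example1_points(np.pi / 4, N=20, L=50, M=40)
print(x_col.shape, x_eval.shape, y_src.shape)   # (20, 2) (50, 2) (40, 2)
```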

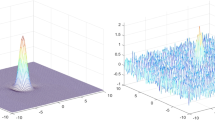

First, let \(\varTheta =\frac{\pi }{4}\), so that \(\varGamma _1=\partial D\backslash \varGamma \) is the remaining portion of the unit circle. Fig. 1 shows the numerical solutions for Example 1 with different levels of noise, using 40 source points and 20 collocation points. The numerical solutions are stable approximations of the exact solution.

Table 1 compares the accuracy errors of the invariant MFS with those of the classical MFS for Example 1 with \(\varTheta =\frac{\pi }{2}\), using 80 source points and 40 collocation points. Both the invariant MFS and the classical MFS are effective for the Cauchy problem.

To analyse the sensitivity of the method with respect to the size of the boundary portion on which Cauchy data are available, we set \(\varTheta =\frac{\pi }{2}, \pi , \frac{3\pi }{2}\). Tables 2, 3 and 4 present the regularization parameters and the relative \(L^2\) errors of the numerical solutions on the boundary \(\varGamma _1\) with 120 source points and 60 collocation points. These tables show that the numerical approximation is more stable and accurate for larger \(\varTheta \). The numerical solution also converges to the exact solution as the level of noise decreases.
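Assuming the relative \(L^2\) errors reported here are the usual discrete ones over the points \(\mathbf x^l\) on \(\varGamma _1\), a componentwise helper could look as follows. The separate measure the paper uses when the exact component vanishes is not reproduced here, so this sketch simply rejects that case. The function name is hypothetical.

```python
import numpy as np

def relative_l2_error(exact, approx):
    """Discrete relative L2 error of one field component over the L evaluation points on Gamma_1."""
    exact = np.asarray(exact, float)
    approx = np.asarray(approx, float)
    denom = np.sum(exact**2)
    if denom == 0.0:
        raise ValueError("exact component vanishes; an absolute error measure is needed instead")
    return np.sqrt(np.sum((exact - approx)**2) / denom)

print(relative_l2_error([3.0, 4.0], [3.0, 4.5]))   # approximately 0.1
```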

Moreover, to analyse the numerical stability of the proposed method with respect to the amount of noise added to the Cauchy data, Tables 5 and 6 show the numerical results with 80 source points and 40 collocation points in two cases: (i) noisy displacements and exact tractions; (ii) exact displacements and noisy tractions. The tables show that noisy displacements do not affect the numerical results for the tractions, whereas noisy tractions do affect the numerical results for the displacements. We believe the reason is that noisy tractions affect the regularization parameter. More precisely, the regularization parameter chosen by the Morozov discrepancy principle is fixed in case (i), namely \(\alpha =7.14\times 10^{-17}\), whereas in case (ii) it varies, namely \(\alpha =8.42\times 10^{-5}, 2.11\times 10^{-4}, 3.24\times 10^{-4}\).

Example 2

[Non-convex, smooth geometry] In the previous example, the exact solution is simple and \(D\) is simply the unit disk. In this example, we consider the case in which the exact solution to the Navier equation is

\(\partial D\) is a non-convex kite-shaped curve described by the parametric representation

see Fig. 2. We give the Cauchy data on \(\varGamma =\{{\mathbf{x }}\in \partial D:~0\le t\le \pi \}, \varGamma _1=\partial D\backslash \varGamma \). Here, \(t_1=\sigma _0x_2n_1, t_2=0\).

Figure 3 shows the numerical solutions for Example 2 with different levels of noise, using 120 source points and 60 collocation points. The numerical solutions are again stable approximations of the exact solution, even for this non-convex domain.

Example 3

[Doubly connected, smooth geometry] Consider the case in which the exact solution to the Navier equation is

In this example, let \(D=\{\mathbf{x }\in \mathbb {R}^2: ~1<x_1^2+x_2^2<16\}\), with the Cauchy data prescribed on the outer circle \(\varGamma =\{\mathbf{x }\in \mathbb {R}^2: ~x_1^2+x_2^2=16\}\). Then \(\varGamma _1=\partial D\backslash \varGamma =\{\mathbf{x }\in \mathbb {R}^2: ~x_1^2+x_2^2=1\}\) is the inner circle. Here, \(t_1=\sigma _0n_1, t_2=0\). The source points are located on \(\partial B=\{\mathbf{x }\in \mathbb {R}^2: ~x_1^2+x_2^2=6^2\}\cup \{\mathbf{x }\in \mathbb {R}^2: x_1^2+x_2^2=0.5^2\}\).

Figure 4 shows the numerical solutions with different levels of noise for Example 3 with 120 source points and 60 collocation points. Figure 5 gives the errors of Example 3. From these figures, it can be seen that the numerical solution converges to the exact solution as the level of noise decreases.

Example 4

[L-type piecewise smooth geometry] The exact solution to the Navier equation is

In this example, we set \(D=\{\mathbf{x }\in \mathbb {R}^2: ~-1<x_1,x_2<1\}, \varGamma =\{\mathbf{x }\in \partial D: ~x_2=-1\}\cup \{\mathbf{x }\in \partial D: ~x_1=-1\}\), and \(\varGamma _1=\partial D\backslash \varGamma \). We present the numerical results only on the side \(s=\{\mathbf{x }\in \partial D: ~x_1=1\}\). Here, \(t_1=\sigma _0x_2n_1, t_2=0\).

Figure 6 shows the numerical solutions with different levels of noise for Example 4 with 120 source points and 60 collocation points. From the figure, it can be seen that the numerical solution converges to the exact solution as the level of noise decreases.

To investigate the influence of the choice of \(B\), Fig. 7 shows the accuracy errors in the unknown boundary data as the radius \(R\) of \(\partial B\) varies, with 120 source points and 60 collocation points. The figure shows that these errors decrease as \(R\) increases.

Table 7 gives the accuracy errors in the unknown boundary data for various values of the regularization parameter for Example 4, with 200 source points and 100 collocation points. The minimum errors are attained for \(\alpha \in (10^{-8},10^{-7})\), an interval that contains the regularization parameter \({\alpha }=8.6\times {10^{-8}}\) chosen by the discrepancy principle.

Figure 8 shows the accuracy errors in the unknown boundary data with \(\alpha =4\times 10^{-7}\) as the number \(N\) of collocation points increases. Moreover, Fig. 9 shows the numerical solutions for \(N=20,~40,~60\) collocation points, with the regularization parameters chosen by the discrepancy principle. These results indicate that accurate numerical solutions for the displacement and traction vectors on the underspecified boundary can be obtained with a relatively small number \(N\).

Finally, to illustrate the ill-conditioning of this problem, we note that the condition number Cond(\(\fancyscript{A}\)) is \(3.3\times 10^{33}\). Since the minimum singular value is close to zero, the problem is ill-posed, and the introduction of a regularization parameter is necessary.
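The noise amplification behind this observation can be reproduced in miniature. The Python sketch below uses a synthetic \(30\times 20\) matrix with prescribed singular values from \(1\) to \(10^{-10}\), not the actual MFS matrix. It evaluates the unregularized SVD solution \(\sum _i (\varvec{W}_i^\top \mathbf h^\delta /\lambda _i)\varvec{V}_i\) of Sect. 3 together with \(\mathrm{cond}=\lambda _1/\lambda _{\min }\), and shows how much a data perturbation of norm \(10^{-6}\) is amplified. The sizes and the noise level are arbitrary illustrative choices.

```python
import numpy as np

def svd_solve(A, h):
    """Unregularized solution d = sum_i (W_i^T h / lambda_i) V_i and cond(A) = lambda_1 / lambda_min."""
    W, s, Vt = np.linalg.svd(A, full_matrices=False)   # thin SVD, s in decreasing order
    return Vt.T @ ((W.T @ h) / s), s[0] / s[-1]

rng = np.random.default_rng(0)
m, n = 30, 20
Q1, _ = np.linalg.qr(rng.standard_normal((m, n)))      # orthonormal columns
Q2, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = Q1 @ np.diag(np.logspace(0, -10, n)) @ Q2.T        # singular values 1 ... 1e-10
d_true = rng.standard_normal(n)
h = A @ d_true
noise = rng.standard_normal(m)
h_delta = h + 1e-6 * noise / np.linalg.norm(noise)     # ||h_delta - h|| = 1e-6

d_exact, cond = svd_solve(A, h)
d_noisy, _ = svd_solve(A, h_delta)
print(f"cond = {cond:.2e}")
print("error, exact data:", np.linalg.norm(d_exact - d_true))
print("error, noisy data:", np.linalg.norm(d_noisy - d_true))
```

A perturbation of size \(10^{-6}\) in the data produces an error many orders of magnitude larger in the coefficients. This is the behaviour that Tikhonov regularization is meant to suppress.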

5 Conclusions

In this paper, we have applied the invariant MFS, combined with the Tikhonov regularization method and the Morozov discrepancy principle, to the Cauchy problem in two-dimensional linear elasticity. Through the use of the double-layer potential function, we established the invariance property for a problem with two different descriptions. We then formulated the MFS with an added constant and an additional constraint so as to preserve this invariance property. Moreover, the invariant MFS retains the advantages of the classical MFS. The method was tested numerically on several types of domains. The examples show that both the modified MFS and the classical MFS are effective and stable. In addition, the modified MFS preserves a basic natural property of the analytic solution, namely the invariance under trivial coordinate changes in the problem description.

The method can also be used for other problems in linear elasticity, such as the inverse boundary determination problem and the boundary value problem. These applications will be reported elsewhere.

References

Alessandrini, G., Rondi, L., Rosset, E., Vessella, S.: The stability for the Cauchy problem for elliptic equations. Inverse Probl. 25, 1–47 (2009)

Andrieux, S., Baranger, T.N.: An energy error-based method for the resolution of the Cauchy problem in 3D linear elasticity. Comput. Methods Appl. Mech. Eng. 197, 902–920 (2008)

Baranger, T.N., Andrieux, S.: An optimization approach for the Cauchy problem in linear elasticity. Struct. Multidiscip. Optim. 35, 141–152 (2008)

Bilotta, A., Turco, E.: A numerical study on the solution of the Cauchy problem in elasticity. Int. J. Solids Struct. 46, 4451–4477 (2009)

Calderon, A.P.: Uniqueness in the Cauchy problem for partial differential equations. Am. J. Math. 80, 16–36 (1958)

Comino, L., Marin, L., Gallego, R.: An alternating iterative algorithm for the Cauchy problem in anisotropic elasticity. Eng. Anal. Bound. Elem. 31, 667–682 (2007)

Delvare, F., Cimetiére, A., Hanus, J.L., Bailly, P.: An iterative method for the Cauchy problem in linear elasticity with fading regularization effect. Comput. Methods Appl. Mech. Eng. 199, 3336–3344 (2010)

Durand, B., Delvare, F., Bailly, P.: Numerical solution of Cauchy problem in linear elasticity in axisymmetric situations. Int. J. Solids Struct. 48, 3041–3053 (2011)

Fairweather, G., Karageorghis, A.: The method of fundamental solutions for elliptic boundary value problems. Adv. Comput. Math. 9, 69–95 (1998)

Isakov, V.: Inverse Problems for Partial Differential Equations. Springer, New York (1998)

Karageorghis, A., Lesnic, D., Marin, L.: A survey of applications of the MFS to inverse problems. Inverse Probl. Sci. Eng. 19(3), 309–336 (2011)

Kirsch, A.: An Introduction to the Mathematical Theory of Inverse Problems. Springer, New York (1996)

Kozlov, V.A., Maz’ya, V.G., Fomin, A.V.: An iterative method for solving the Cauchy problem for elliptic equations. Zh. Vychisl. Mat. Mat. Fiz. 31, 64–74 (1991). English translation: USSR Comput. Math. Math. Phys. 31, 45–52 (1991)

Koya, T., Yeih, W.C., Mura, T.: An inverse problem in elasticity with partially overspecified boundary conditions. II. Numerical details. Trans. ASME J. Appl. Mech. 60, 591–606 (1993)

Linkov, A.M.: Boundary Integral Equations in Elasticity Theory. Springer, Russia (2002)

Liu, C.S.: To solve the inverse Cauchy problem in linear elasticity by a novel Lie-group integrator. Inverse Probl. Sci. Eng. 22, 641–671 (2014)

Maniatty, A., Zabaras, N., Stelson, K.: Finite element analysis of some elasticity problems. J. Mech. Eng. Div. ASCE 115, 1302–1316 (1989)

Maniatty, A., Zabaras, N.: Investigation of regularization parameters and error estimating in inverse elasticity problems. Int. J. Numer. Methods Eng. 37, 1039–1052 (1994)

Marin, L., Elliott, L., Ingham, D.B., Lesnic, D.: Boundary element method for the Cauchy problem in linear elasticity. Eng. Anal. Bound. Elem. 25, 783–793 (2001)

Marin, L., Elliott, L., Ingham, D.B., Lesnic, D.: Boundary element regularization methods for solving the Cauchy problem in linear elasticity. Inverse Probl. Eng. 10, 335–357 (2002)

Marin, L., Elliott, L., Ingham, D.B., Lesnic, D.: An iterative boundary element algorithm for a singular Cauchy problem in linear elasticity. Comput. Mech. 28, 479–488 (2002)

Marin, L., Háo, D.N., Lesnic, D.: Conjugate gradient-boundary element method for the Cauchy problem in elasticity. Q. J. Mech. Appl. Math. 55, 227–247 (2002)

Marin, L., Lesnic, D.: Boundary element solution for the Cauchy problem in linear elasticity using singular value decomposition. Comput. Methods Appl. Mech. Eng. 191, 3257–3270 (2002)

Marin, L., Lesnic, D.: Regularized boundary element solution for an inverse boundary value problem in linear elasticity. Commun. Numer. Methods Eng. 18, 817–825 (2002)

Marin, L., Lesnic, D.: The method of fundamental solutions for the Cauchy problem in two-dimensional linear elasticity. Int. J. Solids Struct. 41, 3425–3438 (2004)

Marin, L., Lesnic, D.: Boundary element-Landweber method for the Cauchy problem in linear elasticity. IMA J. Appl. Math. 18, 817–825 (2005)

Marin, L.: A meshless method for solving the Cauchy problem in three-dimensional elastostatics. Comput. Math. Appl. 50(1–2), 73–92 (2005)

Marin, L.: The minimal error method for the Cauchy problem in linear elasticity. Numerical implementation for two-dimensional homogeneous isotropic linear elasticity. Int. J. Solids Struct. 46, 957–974 (2009)

Marin, L., Johansson, B.T.: Relaxation procedures for an iterative MFS algorithm for the stable reconstruction of elastic fields from Cauchy data in two-dimensional isotropic linear elasticity. Int. J. Solids Struct. 47, 3462–3479 (2010)

Marin, L., Johansson, B.T.: A relaxation method of an alternating iterative algorithm for the Cauchy problem in linear isotropic elasticity. Comput. Methods Appl. Mech. Eng. 199, 3179–3196 (2010)

Martin, T.J., Haldermann, J.D., Dulikravich, G.S.: An inverse method for finding unknown surface tractions and deformations in elastostatics. Comput. Struct. 56, 825–835 (1995)

Schnur, D., Zabaras, N.: Finite element solution of two-dimensional elastic problems using spatial smoothing. Int. J. Numer. Methods Eng. 30, 57–75 (1999)

Sun, Y., Ma, F., Zhang, D.: An integral equations method combined minimum norm solution for 3D elastostatics Cauchy problem. Comput. Methods Appl. Mech. Eng. 271, 231–252 (2014)

Turco, E.: A boundary elements approach to identify static boundary conditions in elastic solids from stresses at internal points. Inverse Probl. Eng. 7, 309–333 (1999)

Turco, E.: An effective algorithm for reconstructing boundary conditions in elastic solids. Comput. Methods Appl. Mech. Eng. 190, 3819–3829 (2001)

Zabaras, N., Morellas, V., Schnur, D.: Spatially regularized solution of inverse elasticity problems using the BEM. Commun. Appl. Numer. Methods 5, 547–553 (1989)

Acknowledgments

The very constructive comments and suggestions made by the reviewers, which have enriched this paper, are to be acknowledged. Finally, we express our gratitude to the anonymous referees for pointing out a few confusing expressions in the original manuscript, and pointing to future research.


Additional information

The research was supported by the Fundamental Research Funds for the Central Universities (No: 3122014K012) and the NSFC (Nos: 11371172, 11271159, 11401574, 61403395).


Cite this article

Sun, Y., Ma, F. & Zhou, X. An Invariant Method of Fundamental Solutions for the Cauchy Problem in Two-Dimensional Isotropic Linear Elasticity. J Sci Comput 64, 197–215 (2015). https://doi.org/10.1007/s10915-014-9929-7
