Abstract

The goal of this paper is to describe a method to solve a class of time optimal control problems which are equivalent to finding the sub-Riemannian minimizing geodesics on a manifold M. In particular, we assume that the manifold M is acted upon by a group G which is a symmetry group for the dynamics. The action of G on M is proper but not necessarily free. As a consequence, the orbit space M/G is not necessarily a manifold but has the more general structure of a stratified space. The main ingredients of the method are a reduction of the problem to the orbit space M/G and an analysis of the reachable sets on this space. We give general results relating the stratified structure of the orbit space, and its decomposition into orbit types, to the optimal synthesis. We consider in more detail the case of the so-called K−P problem, where the manifold M is itself a Lie group and the group G is determined by a Cartan decomposition of M. In this case, the geodesics can be explicitly calculated and are analytic. As an illustration, we apply our method and results to the complete optimal synthesis on SO(3).

1 Introduction

In a recent paper [4], we solved the time optimal control problem for a system on SU(2) using a method which exploits the symmetries of the problem and provides an explicit description of the reachable sets at every time. In this paper, we formalize this methodology in general and give results (proved in Sections 3 and 4) linking the structure of a G-manifold (Footnote 1) to the optimal synthesis. As an example of application, we provide the complete optimal synthesis for a minimum time problem on SO(3), which complements some of the results of [7] obtained with a different method.

In order to introduce some of the ideas we shall explore, we provide a brief summary of the treatment of [4] for the problem on SU(2), in its simplest formulation, from the point of view we will take in this paper. The problem is to control in minimum time the system

to a desired final condition X f ∈S U(2), subject to a bound on the L 2 norm of the control, i.e., \({u_{x}^{2}}+{u_{y}^{2}} \leq 1\). Here, σ x and σ y are Pauli matrices:

The matrices \(i\sigma_{x}\) and \(i\sigma_{y}\) span a subspace of \(su(2)\) which is invariant under the operation of taking a similarity transformation using a diagonal matrix D in SU(2), i.e., \(A \in su(2) \rightarrow D A D^{\dagger} \in su(2)\). In this respect, the first observation is that if X := X(t) is an optimal trajectory to go from the identity to \(X_{f}\), then \(D X D^{\dagger} := D X(t) D^{\dagger}\) is an optimal trajectory from the identity to \(D X_{f} D^{\dagger}\). Therefore, once we have a minimizing geodesic leading to \(X_{f}\), we also have a minimizing geodesic for every element \(D X_{f} D^{\dagger}\) in the “orbit” of \(X_{f}\), and all such geodesics project to a unique curve in the space of orbits, SU(2)/G, where G denotes the subgroup of diagonal matrices. The second observation concerns the nature of the orbit space SU(2)/G. Since a general matrix in SU(2) can be written as

and a similarity transformation by a diagonal matrix only affects the phase of the off-diagonal elements, an orbit is uniquely determined by the complex value x, with |x|≤1, i.e., an element of the unit disc in the complex plane, which is therefore in one-to-one correspondence with the elements of SU(2)/G. With these facts, we studied in [4] the whole optimal synthesis in the unit disc. Since the problem has a K−P structure (cf. Section 4), the candidate optimal trajectories can be explicitly expressed in terms of some parameters to be determined according to the desired final condition \(X_{f}\). The number of parameters is reduced to only one if we consider the projection of these trajectories onto the unit disc. Fixing the time t and varying this parameter, we obtained, as parametric curves, the boundary of the reachable set in the unit disc, or, more precisely, the boundary of the projection of the reachable set onto the orbit space. Once an explicit description of the reachable sets is available, a method to determine the optimal controls is obtained as a consequence.
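The claim that conjugation by a diagonal matrix only changes the phase of the off-diagonal entries can be checked numerically. The following is a minimal sketch, assuming the standard parametrization of SU(2) with first row (x, y) and |x|² + |y|² = 1 (the display equation is not reproduced in this text, so this form is an assumption); the helper names and sample values are hypothetical.

```python
import cmath

def matmul(a, b):
    """Product of two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 complex matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def su2_element(x, y):
    """Assumed standard form of an SU(2) matrix; requires |x|^2 + |y|^2 = 1."""
    return [[x, y], [-y.conjugate(), x.conjugate()]]

def diagonal(phi):
    """Diagonal element diag(e^{i phi}, e^{-i phi}) of SU(2)."""
    return [[cmath.exp(1j * phi), 0j], [0j, cmath.exp(-1j * phi)]]

def conjugate_by_diagonal(X, phi):
    """Return D X D^dagger for D = diagonal(phi)."""
    D = diagonal(phi)
    return matmul(matmul(D, X), dagger(D))

# Hypothetical sample values, chosen only for illustration.
x = 0.6 * cmath.exp(0.3j)
y = 0.8 * cmath.exp(1.1j)
phi = 0.7
X = su2_element(x, y)
Y = conjugate_by_diagonal(X, phi)

# The diagonal entry x is unchanged; only the phase of y moves (by 2*phi).
print(abs(Y[0][0] - x) < 1e-12)
print(abs(Y[0][1] - cmath.exp(2j * phi) * y) < 1e-12)
```

Under this assumed parametrization, the entry x is an invariant of the orbit, which is consistent with identifying SU(2)/G with the closed unit disc.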

The study of the role of symmetries in optimal control problems is a fundamental subject in geometric control theory, important both from a conceptual point of view and a practical one as it allows us to reduce the problem to a smaller state (quotient) space. This symmetry reduction in control problems has a long history (see, e.g., [10, 13–15, 18, 21, 26] and see, in particular, [27] for a recent account). It is obtained from the application of techniques in geometric mechanics such as in [19, 20]. However, typically, translation of these results of geometric mechanics in control theory has been restricted to the case where the action of the symmetry group G on the underlying manifold M is not only proper but also free (definitions are given in Section 2). In this case the orbit space M/G is guaranteed to be a manifold. In the case where such an action is not free, the orbit space M/G is a stratified space [8]. This is the case discussed here. One example is the abovementioned (closed) unit disc which is a manifold with boundary, a special case of a stratified space.

We have kept the paper as self-contained as possible, introducing several concepts from the beginning. In particular, the paper is organized as follows: In Section 2, we give the necessary background on sub-Riemannian geometry and how it connects with the time-optimal control problem (we refer to [1, 2] and [24] for a detailed treatment). This section also contains the basic facts on Lie transformation groups, in particular the decomposition of the orbit space into orbit types (see, e.g., [8]). In Section 3, we present results linking the geometry of the orbit space with the geometry of the optimal synthesis in optimal control. In Section 4, we apply and expand these results to the case where the problem has an underlying K−P structure. As an example, we apply our results to determine the geometry of the optimal synthesis for a control system on SO(3) in Section 5.

2 Background

In the next two subsections, we summarize some basic concepts in sub-Riemannian geometry and optimal control. We refer to [1, 2, 24] for introductory monographs on the subject.

2.1 Sub-Riemannian Structures and Minimizing Geodesics

Given a Riemannian manifold, M, a sub-Riemannian structure on M is given by a sub-bundle, Δ, of the tangent bundle TM. Letting π Δ:Δ→M be the canonical projection, Δ is a vector bundle on M, whose fibers at x∈M, \({\Delta }_{x}:=\pi _{\Delta }^{-1}(x) \subseteq T_{x}M\), are assumed to have constant dimension, i.e., dimΔ x : = m independently of x. In the control theoretic setting, a sub-Riemannian structure is often described by giving a set of m, linearly independent, smooth vector fields (a frame) on M, \(\mathcal {F}:=\{X_{1},X_{2},\ldots ,X_{m}\}\), such that at every point x∈M, span {X 1(x),X 2(x),…,X m (x)}=Δ x . It is assumed that \(\mathcal {F}\) is bracket generating: the smallest Lie algebra of vector fields containing \(\mathcal {F}\), i.e., the Lie algebra generated by \(\mathcal { F}\), \(Lie \, \mathcal {F}\), is such that, at every point x∈M, \(Lie \, \mathcal {F}(x)=T_{x}M\). Since M is a Riemannian manifold, by restricting the Riemannian metric to Δ x ⊆T x M at every x∈M, we obtain a smoothly varying positive definite inner product for vectors in Δ x , which we will denote by 〈⋅,⋅〉. We shall assume that the given frame \(\mathcal {F}\) is orthonormal with respect to this inner product, that is, 〈X j (x),X k (x)〉 = δ j,k , for every x∈M.

We shall consider horizontal curves on M. A curve γ:[0,T]→M is assumed to be Lipschitz continuous and therefore differentiable almost everywhere in [0,T], with \(\dot {\gamma }\) essentially bounded. That is, there exists a constant N and a map H:[0,T]→TM, with \(H(t) \in T_{\gamma (t)}M\), such that \(\langle H(t),H(t) \rangle _{R} \leq N\), for every t∈[0,T], and such that \(H(t)=\dot \gamma (t)\), almost everywhere in [0,T]. Here, \(\langle \cdot ,\cdot \rangle _{R}\) denotes the original Riemannian metric on M from which the sub-Riemannian metric 〈⋅,⋅〉 is derived. We shall assume a curve γ to be regular, that is, \(\dot \gamma (t) \not =0\) almost everywhere in [0,T]. A curve γ is said to be horizontal if \(\dot \gamma (t) \in {\Delta }_{\gamma (t)}\) almost everywhere in [0,T]. Given the orthonormal frame \(\mathcal {F}:=\{ X_{1},\ldots ,X_{m}\}\), this implies that we can write, almost everywhere in [0,T],

$$ \dot\gamma(t)=\sum_{j=1}^{m} u_{j}(t) X_{j}(\gamma(t)), $$(4)

with the functions \(u_{j}\), j=1,…,m, given by \(u_{j}(t)=\langle X_{j}(\gamma (t)), \dot {\gamma }(t) \rangle \). We remark that, because of the smoothness of the \(X_{j}\), the continuity of γ on the compact set [0,T] and the fact that \(\dot \gamma \) is essentially bounded, the functions \(u_{j}\) are also essentially bounded. Therefore, a horizontal curve determines m essentially bounded “control” functions, \(u_{1},\ldots ,u_{m}\), satisfying Eq. 4 while, vice versa, given m essentially bounded control functions \(u_{1},\ldots ,u_{m}\), the solution of Eq. 4 gives a horizontal curve.

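A horizontal curve γ has a length, l(γ), which is given by its length in the Riemannian geometry sense, i.e., (using Eq. 4)

$$ l(\gamma):={\int_{0}^{T}} \sqrt{\langle \dot\gamma(t), \dot\gamma(t) \rangle}\, dt={\int_{0}^{T}} \sqrt{\sum_{j=1}^{m} {u_{j}^{2}}(t)}\, dt. $$(5)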

A horizontal curve γ, in the interval [0,T], is said to be parametrized by a constant if \(\langle \dot \gamma , \dot \gamma \rangle \) is constant, almost everywhere in [0,T]. It is said to be parametrized by arclength if such a constant is equal to one. The image of a curve γ in M, as well as its length, does not change if we reparametrize the time t. A reparametrization is a Lipschitz, monotone and surjective map ϕ:[0,T′]→[0,T], and a reparametrization of a curve γ is a curve \(\gamma _{\phi }:=\gamma \circ \phi \):[0,T′]→M. Given a horizontal curve γ of length L and α>0, consider the increasing map s:[0,T]→[0,αL],

$$ s(t):=\alpha {\int_{0}^{t}} \| \dot\gamma(r) \| \, dr, $$(6)

which is invertible. Let ϕ be the inverse map ϕ:[0,αL]→[0,T]. Then a standard chain rule argument shows that the reparametrization \(\gamma _{\phi }:=\gamma \circ \phi \) is parametrized by the constant \(\frac {1}{\alpha }\), and in particular it is parametrized by arclength if α=1. Vice versa, every horizontal curve is the reparametrization of a curve parametrized by a constant. We refer to [1] (Lemma 3.14 and Lemma 3.15) for details.
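The chain rule argument mentioned above can be sketched as follows. This is only an outline, assuming that the map s of Eq. 6 is the scaled arclength function written in the first line (the display is not reproduced here, so that form is an assumption) and that γ is regular, so that s is strictly increasing.

```latex
% Sketch under the assumption s(t) = \alpha \int_0^t \|\dot\gamma(r)\| dr, with \phi = s^{-1}.
\begin{align*}
s(t) &:= \alpha \int_{0}^{t} \|\dot\gamma(r)\| \, dr,
  \qquad s(T) = \alpha\, l(\gamma) = \alpha L, \\
\phi'(\tau) &= \frac{1}{s'(\phi(\tau))}
  = \frac{1}{\alpha \, \|\dot\gamma(\phi(\tau))\|}, \\
\frac{d}{d\tau}\gamma_{\phi}(\tau) &= \dot\gamma(\phi(\tau)) \, \phi'(\tau)
  \quad \Longrightarrow \quad
  \Big\| \frac{d}{d\tau}\gamma_{\phi}(\tau) \Big\| = \frac{1}{\alpha}
  \quad \text{a.e. in } [0, \alpha L].
\end{align*}
```

With α=1 the speed is one, which recovers the arclength parametrization.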

Given two points, \(q_{0}\) and \(q_{1}\), the sub-Riemannian distance between them, \(d(q_{0},q_{1})\), is defined as the infimum of the lengths of all horizontal curves γ such that \(\gamma (0)=q_{0}\) and \(\gamma (T)=q_{1}\). This is greater than or equal to the Riemannian distance between the two points, where the infimum is taken among all the Lipschitz continuous curves, not necessarily horizontal. The Chow-Rashevskii theorem states that if M is connected, in the above described situation and in particular under the bracket generating assumption for \(\mathcal {F}\), (M,d) is a metric space and its topology as a metric space is equivalent to the one of M. This theorem has several consequences, including the fact that, for any two points \(q_{0}\) and \(q_{1}\) in M, the distance \(d(q_{0},q_{1})\) is finite, i.e., there exists a horizontal curve γ joining \(q_{0}\) and \(q_{1}\) having finite length. Moreover, once \(q_{0}\) is fixed, \(d(q_{0},q_{1})\) is continuous as a function of \(q_{1}\). A minimizing geodesic γ joining \(q_{0}\) and \(q_{1}\) is a horizontal curve which realizes the sub-Riemannian distance \(d(q_{0},q_{1})\). The existence theorem says that if M is a complete metric space, and in particular if it is compact, then there exists a minimizing geodesic for any pair of points \(q_{0}\) and \(q_{1}\) in M. We shall assume this to be the case in the following.

2.2 Time Optimal Control

The problem we shall consider will be, once q 0∈M is fixed, to characterize the minimizing geodesic connecting q 0 to q 1 for any q 1∈M. This problem is related to the minimum time optimal control problem as described in the following theorem (cf., e.g., [1]).

Theorem 1

The following two facts are equivalent:

-

1.

γ:[0,T]→M is a minimizing sub-Riemannian geodesic joining q 0 and q 1 , parametrized by constant speed L.

-

2.

γ:[0,T]→M is a minimum time trajectory of Eq. 4 , subject to γ(0)=q 0 and γ(T)=q 1 , and subject to \(\| \vec u \| \leq L\) , almost everywhere.

Proof

The proof that 1→2 is obtained by contradiction. If 1 is true and 2 is not true, then there exists an essentially bounded control \(\vec {\tilde {u}} \), with \( \|\vec {\tilde {u}} \| \leq L\), and a corresponding solution of Eq. 4, \(\tilde \gamma \), with \(\tilde \gamma (0)=q_{0}\), and \(\tilde \gamma (T_{1})=q_{1}\), and T 1<T. Calculate the length of \(\tilde \gamma \),

which contradicts the fact that γ is a minimizing geodesic.
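The length estimate behind this contradiction can be written out explicitly. The following is a sketch, assuming (as in statement 1) that γ has constant speed L, so that l(γ)=LT, and using the orthonormality of the frame to identify the speed of the competing curve with the norm of its control.

```latex
% Sketch: the competing trajectory \tilde\gamma would be strictly shorter than \gamma.
\begin{equation*}
l(\tilde\gamma)
  = \int_{0}^{T_{1}} \|\dot{\tilde\gamma}(t)\| \, dt
  = \int_{0}^{T_{1}} \|\vec{\tilde u}(t)\| \, dt
  \leq L \, T_{1} < L \, T = l(\gamma).
\end{equation*}
```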

Let us prove now that 2→1. First observe that γ must be indeed parametrized by constant speed. Since the vector fields X j in Eq. 4 are orthonormal, we know that \(\| \dot \gamma \|=\| \vec u\|\) almost everywhere. However, \(\| \vec u\|\) (and therefore \(\| \dot \gamma \|\)) must be equal to L almost everywhere. In fact, assume \(\| \vec u \| < L-\epsilon \), for some 𝜖>0, on an interval of positive measure [t 1,t 2], with \(\gamma (t_{1}):=\bar q_{1}\), and \(\gamma (t_{2}):=\bar q_{2}\). Direct computation shows that with the “re-scaled” control \(\vec {u}_{R}:= \frac {L}{L-\epsilon } \vec u \left (\frac {L}{L-\epsilon } t - \frac {\epsilon }{L-\epsilon } t_{1} \right )\) , the curve \(\gamma _{R}:=\gamma \left (\frac {L}{L-\epsilon }t -\frac {\epsilon t_{1}}{L-\epsilon } \right )\) is solution of Eq. 4 with \(\gamma _{R}(t_{1})=\gamma (t_{1})=\bar q_{1}\) and \(\gamma _{R}(t_{1}+\frac {L-\epsilon }{L}(t_{2}-t_{1}))=\gamma (t_{2})=\bar q_{2}\). Therefore, \(\vec u_{R}\), which is an admissible control since its norm is bounded by L, achieves the transfer from \(\bar q_{1}\) to \(\bar q_{2}\) in time \(\frac {L-\epsilon }{L} (t_{2} - t_{1}) < (t_{2} - t_{1})\), which contradicts the optimality of \(\vec u\). Moreover, γ has to be a minimizing geodesic with constant speed L. If there was another geodesic \(\tilde {\gamma }\) with constant speed L, its length would be L T 1 which must be less than the length of γ, that is LT. This implies T 1<T and contradicts the optimality of the time T. □

In the following, we shall assume that our initial point \(q_{0}\) is fixed and we shall look for the sub-Riemannian minimizing geodesics parametrized by constant speed L, or equivalently the minimum time trajectories (cf. Theorem 1), connecting \(q_{0}\) to \(q_{1}\), for any \(q_{1} \in M\). These curves describe the so-called optimal synthesis on M. Two loci are important in the description of the optimal synthesis. The critical locus \(\mathcal {CR}(M)\) is the set of points in M where minimizing geodesics lose their optimality, i.e., \(p \in \mathcal {CR}(M)\) if and only if there exists a horizontal curve γ defined in [0,T+ε), with T>0 and ε>0, such that \(\gamma (0)=q_{0}\), γ(T)=p, γ is a minimizing geodesic joining \(q_{0}\) and γ(t) for every t in (0,T), and it is not a minimizing geodesic for t∈(T,T+ε). The cut locus \(\mathcal {CL}(M)\) is the set of points p∈M which are reached by two or more minimizing geodesics, i.e., \(p \in \mathcal {CL}(M)\) if and only if there exist two distinct horizontal curves \(\gamma _{1}\) and \(\gamma _{2}\), [0,T]→M, starting at \(q_{0}\) and with \(\gamma _{1}(T)=\gamma _{2}(T)=p\), such that both \(\gamma _{1}\) and \(\gamma _{2}\) are optimal in [0,T). Because of the existence of a minimizing geodesic, if \(p \in {\mathcal {CL}} (M)\), at least one of the curves \(\gamma _{1}\) and \(\gamma _{2}\) is optimal for p at time T. Points in the cut locus are called cut points. Regularity of minimizing geodesics (cf. [25]) has consequences for the cut and critical loci. The next proposition proves that cut points are also critical points when analyticity holds; this is the case in the K−P problem treated in Section 4. We have

Proposition 2.1

Assume that all minimizing geodesics are analytic functions of t defined in [0,∞). Then

$$ \mathcal{CL}(M) \subseteq \mathcal{CR}(M). $$(8)

Proof

Assume \(p \in \mathcal {CL}(M)\). Then, besides the minimizing geodesic for p, \(\gamma _{1}\):[0,∞)→M, with \(\gamma _{1}(T)=p\), there exists another horizontal curve \(\gamma _{2}\):[0,∞)→M, which is optimal on [0,T) and satisfies \(\gamma _{2}(T)=p\). At least one of \(\gamma _{1}\) and \(\gamma _{2}\) has to lose optimality at p, and therefore \(p \in \mathcal {CR} (M)\). If this were not the case, the concatenation of one of them until time T with the other after time T would also be optimal, which contradicts the analyticity of the minimizing geodesics. □

We shall also consider the reachable sets for system (4), with \(\| \vec u \| \leq L\). The reachable set \(\mathcal {R}(T)\) is the set of all points p∈M such that there exists an essentially bounded function \(\vec {u}\), with \(\| \vec u \| \leq L\) a.e., such that the corresponding solution γ of Eq. 4 satisfies \(\gamma (0)=q_{0}\) and γ(T)=p. We have that \(T_{1} \leq T_{2}\) implies \(\mathcal {R}(T_{1}) \subseteq \mathcal {R}(T_{2})\). Moreover, if γ=γ(t) is a time optimal trajectory on [0,T] with γ(T)=p, then p belongs to the boundary of the reachable set \(\mathcal {R}(T)\), which is a closed set since the set of values for the control is closed (cf. [12]).

2.3 Symmetries and Lie Transformation Groups

In addition to the above sub-Riemannian structure on the manifold M, we shall consider the action of a Lie transformation group G, assuming that it is a left action (Footnote 2) and a proper action (Footnote 3). We shall also assume that the action map is smooth. We shall denote by M/G the orbit space of M under the action of G, i.e., the space of equivalence classes (orbits) [p], where \(p_{1}\) is equivalent to \(p_{2}\) if and only if there exists a g∈G such that \(g p_{1}=p_{2}\). We denote by π:M→M/G the canonical projection, and M/G is endowed with the quotient topology. The study of the structure of M/G is part of the theory of Lie transformation groups. We now recall the main facts which are needed for our treatment. Details can be found in introductory monographs on the subject, such as, e.g., [8].

Two points x and y in M are said to be of the same type if their isotropy groups in G are conjugate. Recall, that the isotropy group of a point x∈M, G x , is the subgroup of elements g of G, such that g x = x. Two subgroups H 1 and H 2 are conjugate if there exists a g∈G such that the map H 1→H 2, h→g h g −1 is a group isomorphism. For any subgroup H of G, we denote by (H) the set of groups conjugate to H. The subset M (H)⊆M is the set of points of M whose isotropy group belongs to (H), or, in other words, the set of points whose isotropy group is conjugate to H. There will be only certain classes of groups (H) for which M (H) is not empty. These are called the isotropy types. It is known that M (H) is a submanifold of M (see, e.g., [23], 7.4). If two points x and y in M are on the same orbit, i.e., y = g x and h∈G y , then h y = h g x = y = g x, so that, g −1 h g∈G x . This means that G x and G y are conjugate, and therefore, x and y both belong to \(M_{(G_{x})}=M_{(G_{y})}\). A consequence of this is that M (H) is the inverse image of a set in M/G, π(M (H)) = M (H)/G, which is called the isotropy stratum of type (H). Isotropy strata have a smooth structure in M/G: They are smooth manifolds and the inclusion M (H)/G→M/G is smooth (cf. e.g., [5]). We remark that M/G itself is not in general a smooth manifold. It is a smooth manifold if the action of G on M is free that is the isotropy group G x is the trivial one given by the identity, for any x∈M. In that case, there exists only one possible (H) which contains only the trivial group composed of only the identity. Therefore, M (H)/G = M/G is a smooth manifold according to the above cited result. In general, both M and M/G have the structure of a stratified space.

Definition 2.2

A stratification of a topological space N is a partition of N into connected manifolds \(N_{i}\), i.e., \(N=\cup _{i} N_{i}\), which is locally finite, i.e., every compact set in N intersects only a finite number of the \(N_{i}\). Moreover, such a partition satisfies the frontier condition: if \(N_{i} \cap \bar N_{j} \not =\emptyset \) then \(N_{i} \subseteq \bar N_{j}\) and \(\dim (N_{i})<\dim (N_{j})\) (Footnote 4).

Consider M:=∪(H) M (H), where the union is taken over all the isotropy types and further decompose each M (H) into its connected components, so as to obtain a partition of M, M=∪ i M i . Moreover, partition M/G as M/G=∪ i π(M i ):=∪ i M i /G. Such partitions give a stratification of M and M/G, respectively (see, e.g., [22] Theorem 1.30).

On the set of isotropy types (H), a partial order is established by saying that \((H_{1}) \leq (H_{2})\) if \(H_{1}\) is conjugate to a subgroup of \(H_{2}\). This defines subsets in M and M/G: \(M_{\leq (H)}\) is defined as

$$ M_{\leq (H)}:=\cup _{(H_{1}) \leq (H)} M_{(H_{1})}, $$

with \(M_{\leq (H)}/G=\cup _{(H_{1}) \leq (H)} M_{(H_{1})}/G\). We have from the definition that (H 1)≤(H 2) implies \(M_{\leq (H_{1})} \subseteq M_{\leq (H_{2})}\) and \(M_{\leq (H_{1})}/G \subseteq M_{\leq (H_{2})}/G\). One of the fundamental results of the theory of transformation groups is the theorem of existence of minimal orbit type: There exists a unique orbit type (K) such that (K)≤(H) for every orbit type (H). Moreover, M (K)/G is a connected, locally connected, open and dense set in M/G, which is a manifold of dimension dim M (K)/G= dimM− dimG+ dimK (cf., e.g., [23]) . Notice in particular that if K is a discrete group the dimension of M (K)/G is dimM− dimG. The manifold M (K)/G (M (K)) is called the regular part of M/G (M), while M/G−M (K)/G (M−M (K)) is called the singular part.
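As a worked illustration of the dimension formula, consider the SU(2) example of the Introduction, with G the subgroup of diagonal matrices acting by conjugation. The computation below is a sketch under the assumption that a general element of SU(2) is written in the standard form with first row (x, y); it is meant only to show how the regular and singular parts arise, not to reproduce the treatment of [4].

```latex
% Assumed setting: M = SU(2), G = { diag(e^{i\phi}, e^{-i\phi}) } acting by conjugation.
\begin{align*}
X &= \begin{pmatrix} x & y \\ -\bar y & \bar x \end{pmatrix},
  \qquad |x|^{2} + |y|^{2} = 1,
  \qquad D = \mathrm{diag}(e^{i\phi}, e^{-i\phi}), \\
D X D^{\dagger} &= \begin{pmatrix} x & e^{2 i \phi} y \\ -e^{-2 i \phi} \bar y & \bar x \end{pmatrix}, \\
y \neq 0 &\;\Longrightarrow\; G_{X} = \{\pm \mathbf{1}\} =: K,
  \qquad \dim M_{(K)}/G = \dim M - \dim G + \dim K = 3 - 1 + 0 = 2, \\
y = 0 &\;\Longrightarrow\; G_{X} = G,
  \qquad M_{(G)}/G \cong \{ x \in \mathbb{C} : |x| = 1 \}.
\end{align*}
```

In this reading, the regular part corresponds to the open unit disc and the singular part to its boundary circle, in agreement with the description of SU(2)/G as the closed unit disc.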

Given the sub-Riemannian (and Riemannian) structure described in Section 2.1, with the initial point q 0∈M, we shall say that the Lie transformation group G is a group of symmetries if the following conditions are verified: (Denote by Φ g the smooth map on M which gives the action of G, Φ g x: = g x)

-

1.

q 0 is a fixed point for the action of G on M. That is,

$$ {\Phi}_g(q_0)=q_0, \qquad \forall g \in G. $$(9) -

2.

If Δ denotes the distribution which defines the sub-Riemannian structure, the action of G satisfies the following invariance property, for every p∈M,

$$ {\Phi}_{g*} {\Delta}_p= {\Delta}_{{\Phi}_g p}. $$(10) -

3.

G is a group of isometries for the sub-Riemannian metric 〈⋅,⋅〉, that is, for every p∈M, and U,V in Δ p

$$ \langle U, V \rangle=\langle {\Phi}_{g*} U, {\Phi}_{g*} V \rangle. $$(11)

In the next two sections of this paper, we investigate how the optimal synthesis is related to the orbit type decomposition in a sub-Riemannian structure where the group G is a group of symmetries for such a structure in the sense above specified.

3 Symmetries in the Time Optimal Control Problem

The following propositions clarify the role of symmetries and the corresponding orbit space decomposition in the optimal synthesis.

Proposition 3.1

Let \(q_{1}\) and \(q_{2}\) be two points in M on the same orbit, i.e., \(q_{2}=g q_{1}\) for some g∈G. Let \(\gamma _{1}\) be a minimizing sub-Riemannian geodesic parametrized by constant speed L (and therefore a minimum time trajectory for Eq. 4 subject to \(\|\vec u \| \leq L\); cf. Theorem 1), with \(\gamma _{1}(0)=q_{0}\) and \(\gamma _{1}(T)=q_{1}\). Then, \(\gamma _{2}:=g\gamma _{1}\) is a minimizing sub-Riemannian geodesic parametrized by constant speed L (and therefore a minimum time trajectory) as well.

Proof

First notice that because of property (10), for almost every t in [0,T], we have

so that γ 2 is horizontal. Moreover because of property (11), a.e.,

so that γ 2 is also parametrized by constant speed L and l(γ 2) = l(γ 1). It is the minimum length since a smaller length would contradict the minimality of γ 1. □
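The two steps of this proof can be spelled out as follows. This is a sketch of how properties (10) and (11) are presumably used; the displays referred to in the proof are not reproduced in this text, so the exact form below is an assumption consistent with the surrounding definitions.

```latex
% Step 1 (horizontality), using the invariance property (10):
\begin{equation*}
\dot\gamma_{2}(t) = \Phi_{g*}\dot\gamma_{1}(t)
  \in \Phi_{g*}\Delta_{\gamma_{1}(t)}
  = \Delta_{\Phi_{g}\gamma_{1}(t)}
  = \Delta_{\gamma_{2}(t)}.
\end{equation*}
% Step 2 (constant speed), using the isometry property (11):
\begin{equation*}
\langle \dot\gamma_{2}(t), \dot\gamma_{2}(t) \rangle
  = \langle \Phi_{g*}\dot\gamma_{1}(t), \Phi_{g*}\dot\gamma_{1}(t) \rangle
  = \langle \dot\gamma_{1}(t), \dot\gamma_{1}(t) \rangle
  = L^{2}.
\end{equation*}
```

Note also that \(\gamma _{2}(0)=g q_{0}=q_{0}\) by property (9), so \(\gamma _{2}\) joins \(q_{0}\) to \(q_{2}\).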

As a consequence of the previous proposition, we have that the space M/G is a metric space with the distance \(\bar d\) between the two orbits \(\bar q_{1}\) and \(\bar q_{2}\), defined as

where d is the sub-Riemannian distance (cf. the Chow-Rashevskii theorem). A geodesic connecting two points \(\bar q_{1}\) and \(\bar q_{2}\), in M/G is a curve that achieves such an infimum.
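One natural way to write such a distance on the orbit space is sketched below. The defining display is not reproduced in this text, so the formula is an assumption; the second equality uses only the fact that each \(\Phi _{g}\) maps horizontal curves to horizontal curves of the same length, and hence preserves d.

```latex
% Assumed form of the orbit-space distance; q_1, q_2 are any representatives.
\begin{equation*}
\bar d(\bar q_{1}, \bar q_{2})
  := \inf_{p_{1} \in \bar q_{1},\; p_{2} \in \bar q_{2}} d(p_{1}, p_{2})
  = \inf_{g \in G} d(q_{1}, g q_{2}),
  \qquad \text{since } d(g_{1} q_{1}, g_{2} q_{2}) = d(q_{1}, g_{1}^{-1} g_{2} q_{2}).
\end{equation*}
```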

Corollary 3.2

The distance \(\bar d(\bar q_{0}, \bar q_{1})\) is achieved by π(γ) where γ is a minimizing sub-Riemannian geodesic connecting q 0 to any q 1 , independently of the representative \(q_{1} \in \bar q_{1}\).

Therefore, the optimal synthesis in M is the inverse image of the optimal synthesis in M/G. Furthermore, on M/G, we can define critical locus, cut locus, and reachable sets, in terms of geodesics in exactly the same way we defined them on M. These sets are the projections of the corresponding sets in M. We have:

Proposition 3.3

-

1.

$$ \mathcal{R}(T)=\pi^{-1}(\pi(\mathcal{R}(T))). $$(15)

-

2.

$$ \mathcal{CR}(M)=\pi^{-1}(\pi(\mathcal{CR}(M))). $$(16)

-

3.

$$ \mathcal{CL}(M)=\pi^{-1}(\pi(\mathcal{CL}(M))). $$(17)

Proof

Analogously to the proof of Proposition 3.1, if q 1 and q 2 are on the same orbit and there is a horizontal curve γ 1 connecting q 0 to q 1, with control \(\vec u_{1}\), \(\|\vec u_{1} \|=\| \dot \gamma _{1} \| \leq L\) in time T, then the curve γ 2: = g γ 1, for some g∈G, corresponds to control \(\vec u_{2}\), with \(\|\vec u_{2} \|=\|\dot \gamma _{2} \|=\| \dot \gamma _{1}\| \leq L\), a.e., connecting q 0 to q 2, in the same time T. Therefore, q 1 is in \(\mathcal {R}(T)\) if and only if q 2 is in \(\mathcal {R}(T)\), which proves (15). Analogously, we can prove that if q 1 and q 2 are on the same orbit they are both in \({\mathcal {CL}}(M)\) and \({\mathcal {CR}}(M)\) or none of them is, that is, Eqs. 16 and 17 also hold. We illustrate the proof for \(\mathcal {CL}(M)\) (the proof of \(\mathcal {CR}(M)\) is similar). Assume by contradiction that \(q_{1} \in \mathcal {CL}(M)\) and \(q_{2} \notin \mathcal {CL}(M)\), with q 2 = g q 1, for g∈G. Since \(q_{1} \in \mathcal { CL}(M)\), there are two different minimizing sub-Riemannian geodesics with q 1 as their final point, γ and \(\tilde \gamma \). Then, g γ and \(g\tilde \gamma \) will be two different geodesics with q 2 as their final point. In fact, \(g \gamma (t)=g \tilde \gamma (t)\), for all t∈[0,T] would imply \(\gamma (t)=\tilde \gamma (t)\), which we have excluded. □

Proposition 3.4

Assume that \(q_{1}\) does not belong to the cut locus, i.e., \(q_{1} \notin \mathcal {CL}(M)\), and γ is a sub-Riemannian minimizing geodesic in [0,T] for \(q_{1}\). Then, for every t∈(0,T), we have the following relation for the isotropy groups

$$ G_{q_{1}} \subseteq G_{\gamma(t)}. $$(18)
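No proof of this proposition appears in this text. A possible argument for the inclusion \(G_{q_{1}} \subseteq G_{\gamma (t)}\) is sketched below, assuming that \(q_{1} \notin \mathcal {CL}(M)\) is read as uniqueness of the minimizing geodesic from \(q_{0}\) to \(q_{1}\).

```latex
% Sketch: every element of the isotropy group of q_1 fixes the whole geodesic.
\begin{align*}
g \in G_{q_{1}}
  &\;\Longrightarrow\; g\gamma \text{ is a minimizing geodesic from } g q_{0} = q_{0} \text{ to } g q_{1} = q_{1}
  && \text{(Proposition 3.1 and property (9))} \\
  &\;\Longrightarrow\; g\gamma(t) = \gamma(t) \text{ for all } t \in [0,T]
  && \text{(uniqueness, as } q_{1} \notin \mathcal{CL}(M)\text{)} \\
  &\;\Longrightarrow\; g \in G_{\gamma(t)}.
\end{align*}
```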

Under the assumption that geodesics are analytic, we can obtain for general q 1∈M the converse inclusion to Eq. 18.

Proposition 3.5

If all geodesics are analytic then, for any \(q_{1} \in M\), and any geodesic γ:[0,T]→M connecting \(q_{0}\) to \(q_{1}\), we have for any t∈(0,T)

$$ G_{\gamma(t)} \subseteq G_{q_{1}}. $$(19)

Proof

The proof uses some ideas of Lemma 3.5 in [5]. Assume there exists a \(\bar {t}\in (0,T)\) and a \(g\in G_{\gamma (\bar {t})}\) which is not in \(G_{q_{1}}\). Then, the curve, which is equal to γ between q 0 and \(\gamma (\bar {t})\) and is equal to g γ between \(\gamma (\bar {t})\) and g q 1 (which is different from q 1), has the same length as the curve g γ. Such a curve is a minimizing geodesic, according to Proposition 2.1, since it has the same length as γ, that is the minimal length. However, such a curve which is equal to γ in an interval of positive measure until time t and different from γ afterwards cannot be analytic, which contradicts the analyticity of all the geodesics. □

We collect in the following corollary some consequences of Propositions 3.4 and 3.5. The corollary describes how minimizing sub-Riemannian geodesics sit in the orbit type decomposition of M and M/G.

Corollary 3.6

If all minimizing sub-Riemannian geodesics are analytic, then for every q∈M ( \(\bar q \in M/G\) ), every minimizing geodesic γ leading to q is entirely contained in \(M_{\leq ({G_{q}})}\) ( \(M_{\leq ({G_{q}})}/G\) ) except possibly for the initial point \(q_{0}\). In particular, if \(q \in M_{(K)}\) (the regular part of M) then the whole minimizing geodesic, except possibly the initial point \(q_{0}\), is in \(M_{(K)}\). If, in addition, \(q \notin \mathcal {CL}(M)\) then

$$ G_{\gamma(t)}=G_{q}, \qquad \forall t \in (0,T), $$(20)

and the corresponding sub-Riemannian geodesic is all contained in \(M_{(G_{q})}\).

Similar “convexity” results in the Riemannian case are given in ([5] 3.4). Corollary 3.6 gives a general principle for the behavior of geodesics in the presence of a group of symmetries for the optimal control problem:

The geodesics can only go from lower ranked strata, such as the lowest \(M_{(K)}\), to higher ranked ones but not vice versa. If a geodesic touches a higher ranked point and then goes back to a lower ranked one, it means that it has lost optimality and therefore the point belongs to the critical locus.

Remark 3.7

The corollary suggests that the points in the singular part of M, \(M_{sing}:=M-M_{(K)}\), are, in general, good candidates to be in the cut locus \(\mathcal {CL}(M)\) (Footnote 5). In fact, any point \(q \in M_{sing}\) which has a geodesic with points in \(M_{(K)}\), which is an open and dense set in M, must be in \(\mathcal {CL}(M)\). Points in \(M_{sing}\) which are not in \(\mathcal {CL}(M)\) must be such that every geodesic leading to that point is entirely contained in the singular part of M, since Eq. 20 holds. In the SU(2) example of [4], it holds that \(\mathcal {CL}(M)=\mathcal {CR}(M)=M_{sing}\). However, this is not always the case and in general the situation changes by changing the group of symmetries we consider (cf. Remark 5.7 below).

4 The K−P Problem

An example of a sub-Riemannian problem with symmetries is the K−P problem discussed in [6,7]. In this section, we shall focus on this type of problems.

In the K−P problem, the manifold M is a semisimple Lie group with its Lie algebra of right invariant vector fields \(\mathcal {L}\). The Lie algebra \(\mathcal {L}\) has a Cartan decomposition, that is, a vector space decomposition

$$ \mathcal{L}=\mathcal{K} \oplus \mathcal{P}, $$(21)

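with the commutation relations (Footnote 6):

$$ [\mathcal{K},\mathcal{K}] \subseteq \mathcal{K}, \qquad [\mathcal{K},\mathcal{P}] \subseteq \mathcal{P}, \qquad [\mathcal{P},\mathcal{P}] \subseteq \mathcal{K}. $$(22)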

The Lie algebra \(\mathcal {L}\) is endowed with a (pseudo-)inner product defined by the Killing form \(\langle A,D \rangle :=Kill(A,D):=Tr(ad_{A}\, ad_{D})\), where \(ad_{A}\) is the linear operator on \(\mathcal {L}\) given by \(ad_{A}(X)=[A,X]\). A comprehensive introduction to notions of Lie theory can be found, for example, in [17]. In particular, since \(\mathcal {L}\) is assumed semisimple, Kill is nondegenerate (this is the Cartan criterion for semisimplicity). Associated with a Cartan decomposition is a Cartan involution, that is, an automorphism θ of \(\mathcal {L}\) such that \(\theta ^{2}\) is the identity, and \(\mathcal {K}\) and \(\mathcal {P}\) above are the +1 and −1 eigenspaces of θ in \(\mathcal {L}\). Moreover, \(B(A,D):=-Kill(A,\theta D)\) is a positive definite bilinear form and therefore an inner product defined on all of \(\mathcal {L}\). Notice that this, in particular, implies that \(\mathcal {K}\) is a compact subalgebra of \(\mathcal {L}\) (i.e., Kill(A,A)<0 if \(A\in \mathcal {K}\) and A≠0) and that \(\mathcal {K}\) and \(\mathcal {P}\) are orthogonal with respect to such an inner product (Footnote 7). Using the inner product B on the Lie algebra \(\mathcal {L}\), one naturally defines a Riemannian metric on M. In fact, to any point b∈M and any tangent vector \(U \in T_{b}M\), one can associate a right invariant vector field \(X_{U}\) defined as \(X_{U}|_{a}:=R_{b^{-1}a *}U\), and this association is an isomorphism of vector spaces. Then, the Riemannian metric \(\langle \cdot ,\cdot \rangle _{R}\) is defined as (with \(U,V \in T_{b}M\))

$$ \langle U,V \rangle_{R}:=B(X_{U},X_{V}). $$(23)

The K−P problem is the minimum time problem for system (4) on a Lie group M with a Cartan decomposition as described above, where the vector fields \(X_{j}\) are right-invariant vector fields (Footnote 8) on M spanning \(\mathcal {P}\) and orthonormal with respect to the inner product B. The initial point \(q_{0}\) is the identity of the group M. The problem is to steer from \(q_{0}\) to an arbitrary final condition in M in minimum time, subject to the condition \(\| \vec u \| \leq L\).
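The commutation relations of a Cartan decomposition can be checked numerically on the SU(2) example of the Introduction. The following is a minimal sketch, assuming the standard convention for the Pauli matrices and taking \(\mathcal {K}\) spanned by \(i\sigma _{z}\) (the Lie algebra of the diagonal subgroup) and \(\mathcal {P}\) spanned by \(i\sigma _{x}\) and \(i\sigma _{y}\); the helper names are hypothetical.

```python
def matmul(a, b):
    """Product of two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def commutator(a, b):
    """Matrix commutator [a, b] = ab - ba."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]

# Basis of su(2) from the Pauli matrices (standard convention, assumed).
I_SX = [[0j, 1j], [1j, 0j]]            # i * sigma_x
I_SY = [[0j, 1 + 0j], [-1 + 0j, 0j]]   # i * sigma_y
I_SZ = [[1j, 0j], [0j, -1j]]           # i * sigma_z

def coordinates(m):
    """Coefficients (a, b, c) of m = a*i*sigma_x + b*i*sigma_y + c*i*sigma_z,
    valid for m in su(2)."""
    return (m[0][1].imag, m[0][1].real, m[0][0].imag)

def in_K(m, tol=1e-12):
    """True if m (assumed in su(2)) has no component along i*sigma_x, i*sigma_y."""
    a, b, _ = coordinates(m)
    return abs(a) < tol and abs(b) < tol

def in_P(m, tol=1e-12):
    """True if m (assumed in su(2)) has no component along i*sigma_z."""
    _, _, c = coordinates(m)
    return abs(c) < tol

K_BASIS = [I_SZ]
P_BASIS = [I_SX, I_SY]

# [K,K] in K, [K,P] in P, [P,P] in K, checked on basis elements.
kk_ok = all(in_K(commutator(k1, k2)) for k1 in K_BASIS for k2 in K_BASIS)
kp_ok = all(in_P(commutator(k, p)) for k in K_BASIS for p in P_BASIS)
pp_ok = all(in_K(commutator(p1, p2)) for p1 in P_BASIS for p2 in P_BASIS)
print(kk_ok, kp_ok, pp_ok)
```

Since the commutator is bilinear, checking the relations on basis elements is enough for this small example.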

The problem can be cast in the above sub-Riemannian setting with symmetries as follows: The distribution of vector fields \(\mathcal {P}\) in \(\mathcal {L}\) defines a sub-Riemannian structure on M, with the sub-Riemannian metric at any point b defined by the restriction of \(\langle \cdot ,\cdot \rangle _{R}\) to \(\mathcal {P}|_{b}\) for any b in M. Consider now a Lie subgroup G of M (not necessarily connected) with Lie algebra \(\mathcal {K}\), which acts on M by conjugation, i.e., for p∈M, g∈G, \({\Phi }_{g}(p):=g p:=g \times p \times g^{-1}\), where × is the group operation in M. Such a (left) action induces a map on the Lie algebra \(\mathcal {L}\) given by its differential \({\Phi }_{g*}\), which is a Lie algebra automorphism. We assume that the map \({\Phi }_{g}\) is an isometry and that for every connected component j of G there exists a \(g_{j}\) such that \({\Phi }_{g_{j} *} \mathcal {P} \subseteq \mathcal {P}\). This also implies, because of the (Killing) orthogonality of \(\mathcal {K}\) and \(\mathcal {P}\), \({\Phi }_{g_{j} *} \mathcal {K} \subseteq \mathcal {K}\). Moreover, because of Eq. 22, these properties are not restricted to \(g_{j}\) but are true for every g∈G (Footnote 9). A special case is when G is the connected component containing the identity with Lie algebra \(\mathcal {K}\), in which case \(g_{j}\) can be taken equal to the identity.

In the following, we shall restrict ourselves to linear Lie groups, so that M and G will be Lie groups of matrices.Footnote 10 In the standard coordinates (inherited from the standard ones of Gl(n,R) or Gl(n,C)), the system (4) is written as

where the Lie algebra \(\mathcal {L}\) of matrices of the Lie group M has a Cartan decomposition as in Eqs. 21 and 22 and the \(B_{j}\)'s form an orthonormal basis of \(\mathcal {P}\).Footnote 11 The K−P problem is the minimum time problem with initial condition \(X_{0}=\textbf {1}\), the identity matrix, subject to \(\|\vec u\| \leq L\). The symmetries are given by the transformations \(X\rightarrow KXK^{-1}\), for K∈G, which induce the transformations \(B\rightarrow KBK^{-1}\) on the matrices \(B \in \mathcal {L}\). These are symmetries because they preserve \(\mathcal {P}\) and \(\mathcal {K}\), and the commutation relations (22).

As a special case of what we have seen in general, the minimum time control for system (24) is equivalent to that of finding minimizing geodesics on M and it can be treated on M/G. On the orbit space, we can describe the cut locus, the critical locus, and the reachable sets.

Remark 4.1

The knowledge of the reachable sets for a K−P problem of the form Eq. 24 also gives the reachable sets for the larger class of systems

with the drift AX, with \(A \in \mathcal {K}\) and the \(B_{j} \in \mathcal {P}\). In fact, consider the change of coordinates \(U(t):=e^{-At}X(t)\). A straightforward calculation gives

and since \(B\rightarrow e^{-At}Be^{At}\) is assumed to be an isometry, there exists an orthogonal matrix \(a_{j,k}:=a_{j,k}(t)\) such that \( e^{-At} B_{j} e^{At}={\sum }_{k=1}^{m} a_{j,k}(t) B_{k}\), so that we have

where \(v_{k}(t):={\sum }_{j=1}^{m} a_{j,k}(t)u_{j}(t)\), and \(\|\vec v \| \leq L\) if and only if \(\| \vec u \| \leq L\). Therefore, the reachable set of system (26) coincides with the one of Eq. 4 and knowledge of the reachable set for system (26), \(\mathcal {R}_{U}(t)\), gives the reachable set for system (25), \(\mathcal {R}_{X}(t)\), via the relation \(\mathcal { R}_{X}(t)=e^{At}\mathcal {R}_{U}(t)\).
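The "straightforward calculation" of this remark can be spelled out as follows. This is only a sketch, written under the assumption that the system with drift has the right-invariant form \(\dot X = AX + {\sum }_{j} u_{j} B_{j} X\), which is our reading of the description above; all symbols are those of the remark.

```latex
\begin{align*}
\dot U &= \frac{d}{dt}\big(e^{-At}X\big)
        = -A e^{-At} X + e^{-At}\Big(AX + \sum_{j=1}^{m} u_j B_j X\Big) \\
       &= \sum_{j=1}^{m} u_j \, e^{-At} B_j e^{At} \, \big(e^{-At} X\big)
        = \sum_{j=1}^{m} u_j \sum_{k=1}^{m} a_{j,k}(t)\, B_k \, U
        = \sum_{k=1}^{m} v_k B_k \, U .
\end{align*}
```

The drift terms cancel because A commutes with \(e^{-At}\), and \(\|\vec v\| = \|\vec u\|\) because the matrix \(a_{j,k}(t)\) is orthogonal. Since U(0) = X(0), inverting the change of coordinates gives \(X(t)=e^{At}U(t)\), which is the relation between the two reachable sets stated above.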

In the K−P problem, the equations of the Pontryagin maximum principle are explicitly integrable and give (cf. [6] and references therein) that the optimal control \(\vec u\) is such that there exist matrices \(A_{k} \in \mathcal {K}\) and \(A_{p} \in \mathcal {P}\) with

with \(\|A_{p}\| = L\). Therefore, the optimal trajectories satisfy

and the solution can be written explicitly as

The geodesics (28) are analytic curves. Therefore, all the results on the geometry of the optimal synthesis in the previous section apply. Moreover, for every geodesic in the orbit space M/G (which is the projection of a geodesic in M), we can always take a representative (28) in M with \(A_{p}:=A_{a} \in \mathcal {A}\), with \(\mathcal {A}\) a maximal Abelian (Cartan) subalgebra in \(\mathcal {P}\). To see this, recall the known property of the Cartan decomposition that if \(\mathcal {A} \subseteq \mathcal {P}\) is a maximal Abelian subalgebra in \(\mathcal {P}\), then

Therefore, we can write (27), for \(A_{a} \in \mathcal {A}\), as

for K∈G and \(\bar A_{k} \in \mathcal {K}\). Using \(A_{p}:=KA_{a}K^{-1}\), with K∈G, we have (cf. (28))

with \(\bar A_{k}:=K^{-1} A_{k} K\).
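The reduction to a representative with \(A_{a} \in \mathcal {A}\) rests only on the identity \(Ke^{M}K^{-1}=e^{KMK^{-1}}\). The following sketch assumes that the geodesic is written in the form \(e^{-A_{k}t}e^{(A_{k}+A_{p})t}\), the form that appears in the fourth step of the method below; the same computation goes through unchanged with the opposite sign convention for \(A_{k}\). Here \(A_{k}=K\bar A_{k}K^{-1}\) and \(A_{p}=KA_{a}K^{-1}\).

```latex
\begin{align*}
K\, e^{-\bar A_k t}\, e^{(\bar A_k + A_a)t}\, K^{-1}
  &= \big(K e^{-\bar A_k t} K^{-1}\big)\big(K e^{(\bar A_k + A_a)t} K^{-1}\big) \\
  &= e^{-K\bar A_k K^{-1} t}\; e^{(K\bar A_k K^{-1} + K A_a K^{-1})t}
   = e^{-A_k t}\, e^{(A_k + A_p)t}.
\end{align*}
```

Hence the geodesic with parameters \((A_{k},A_{p})\) and the one with parameters \((\bar A_{k},A_{a})\) are conjugate by K∈G at every time t, so they project to the same curve in M/G.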

The following proposition gives some restrictions on the pairs \((A_{k},A_{p})\) for points that are not on the cut locus of M. This proposition can be used to prove that a certain point is in the cut locus.Footnote 12

Proposition 4.2

Let \(X_{f} \notin \mathcal {CL}(M)\). Let H denote the isotropy group of \(X_{f}\). Then, the pair \((A_{k},A_{p})\) giving the minimizing geodesic is such that, for every \(\hat H \in H\),

for any n≥0.

Proof

We know from Propositions 3.4 and 3.5 and from formula (28) that the pairs \((A_{k},A_{p})\) satisfy the invariance property

for every t∈[0,T], where T is the minimum time associated with \(X_{f}\). Taking the n-th derivative, it is seen by induction that this implies that \(X^{n}(t)\) also satisfies the invariance property with respect to \(\hat H\), i.e.,

where \(X^{n}(t)\) is defined as

with

Using the invariance (34) of \(X^{n}(t)\) at t=0, it follows that all the matrices \(H_{n}\) are also \(\hat H\)-invariant. We want to show that this implies the invariance of

for each n≥1. For n=1, \(L_{1}=A_{p}\), and it is clear that \(L_{1}\) is invariant since \(L_{1}=H_{1}=A_{p}\). From this, we proceed by induction on n, for n≥2. We shall prove that each \(H_{n}\), n≥2, can be written, with \(l_{n}=2^{n-1}-1\), as:

with \({V_{j}^{n}}\) and \({W_{j}^{n}}\) invariant and both addends of some \(H_{s}\), with s<n. From this, since \(H_{n}\) is also invariant, we must have that \(L_{n}\) is invariant as well.

First notice that \(H_{2}={A^{2}_{p}}+ [A_{p},A_{k}] =A_{p}A_{p} +L_{2}\), so clearly the statement holds for n=2.

Assume that the statement holds for \(H_{n}\). Then we have

Letting

we can write

where the \(V_{j}^{n+1}\) are invariant, since they are products of invariant matrices, and they are addends of \(H_{n}\), and \(W_{j}^{n+1}=A_{p}\) is also invariant and is an addend of \(H_{1}\). Now we have in Eq. 37

If \({W_{j}^{n}}\) is one of the addends of \(H_{s}\) with s<n, then \([{W_{j}^{n}},A_{k}]\) is one of the addends of \(H_{s+1}\), so it is also invariant by the inductive assumption, and s+1<n+1, so \({V_{j}^{n}}[{W_{j}^{n}},A_{k}]\) is the product of two invariant factors which are addends of some \(H_{p}\) with p<n+1. The same argument applies to \([{V_{j}^{n}},A_{k}]{W_{j}^{n}}\). So the sum in the second brackets can also be rewritten in the desired form. Now it is sufficient to notice that \([L_{n},A_{k}]=L_{n+1}\). □

The previous considerations suggest a general methodology to find the optimal synthesis for time optimal control problems with symmetries and in particular for K−P problems.

The first step of the method is to identify a group of symmetries. There are in general several choices of groups, connected and not connected. In the K−P case, the natural choice is the connected Lie group corresponding to the subalgebra \(\mathcal {K}\) in the Cartan decomposition or, possibly, a nonconnected Lie group having \(\mathcal {K}\) as its Lie algebra. It is typically convenient to take the Lie group G as large as possible, so as to have a finer orbit type decomposition of M/G and an orbit space M/G of dimension as small as possible.

The second step of the procedure is to determine the nature of M/G so that the problem is effectively reduced to a lower dimensional space. This is important both from a conceptual and practical point of view since a computer solution of the problem will have to consider a smaller number of parameters. This task typically requires some analysis since not all the quotient spaces are known in the literature.Footnote 13 An analysis of the various isotropy groups of the points in M reveals the stratified structure of M/G which, as we have seen in Section 3, has consequences for the optimal synthesis.

The third step is to obtain the boundaries of the reachable sets in M/G, that is, the projections of the boundaries of the reachable sets \(\mathcal {R}(T)\) in M. In order to do this, if \(A_{a}\) is an element in the Cartan subalgebra \(\mathcal {A} \subseteq \mathcal {P}\), we write a representative of a geodesic as (cf. (31)),

with \(\bar A_{k} \in \mathcal {K}\) and \(A_{a} \in \mathcal {A}\) and \(\|A_{a}\| = L\). By fixing t and varying \(\bar A_{k} \in \mathcal {K}\) and \(A_{a} \in \mathcal {A}\), we obtain a hypersurface in M/G, part of which is the boundary of the reachable set at time t. The determination of the sets in \(\mathcal {K}\) and \(\mathcal {A}\) which are mapped to this boundary is an analysis problem to be considered on a case-by-case basis; it is simpler in low dimensional cases and requires help from computer simulations in higher dimensional cases.

The fourth step is to find the first t such that \(\pi (\mathcal {R} (t))\) contains \(\pi (X_{f})\). At this value of t, there are matrices \(\bar A_{k}\) and \(A_{a}\) such that \([e^{-\bar A_{k} t} e^{(\bar A_{k} + A_{a})t}]=\pi (X_{f})\).

Finally, the fifth step is to find K∈G such that

This gives the correct pair \((A_{k},A_{p})\) to be used in the optimal control (27): \(A_{k}=K\bar A_{k} K^{-1}\), \(A_{p}:= K A_{a} K^{-1}\). From the last two steps, it follows that the problem is effectively divided in two. Restricting ourselves to the orbit space, we first find an optimal control to drive the state of the system to the desired orbit. Then, in the fifth step, we move inside the orbit to find exactly the final condition we desire.

The treatment of the optimal synthesis on SO(3) in the following section gives an example of application of this method.

5 Optimal Synthesis for the K−P Problem on SO(3)

A basis of the Lie algebra of skew-symmetric real 3×3 matrices, so(3), is given by:

We consider the K−P Cartan decomposition of so(3) where \({\mathcal {K}}=\text {span} \{k\}\), and \({\mathcal {P}}=\text {span} \{ p_{1}, \, p_{2}\}\). There are two possible maximal groups of symmetries with Lie algebra \(\mathcal {K}\). The first is a maximal connected Lie group, \(\textbf {K}^{+}\), which is the connected component containing the identity and consists of matrices of the form

So here the upper-left 2×2 block is in SO(2). The second is a maximal nonconnected Lie group, \(\textbf {K}^{+}\cup \textbf {K}^{-}\), given by the matrices which are either of the previous type or of the type

Therefore, in Eq. 41, the upper-left 2×2 block is in O(2), with determinant equal to −1. We shall consider this second case, that is, \(G=\textbf {K}^{+}\cup \textbf {K}^{-}\). Remark 5.7 discusses what would change had we chosen \(G=\textbf {K}^{+}\).

5.1 Structure of the Orbit Space SO(3)/G

Following the second step of the procedure described in the previous section, we now describe the structure of \(M/G=SO(3)/(\textbf {K}^{+}\cup \textbf {K}^{-})\) and its isotropy strata. We use the Euler decomposition of SO(3), from which it follows that any matrix X∈SO(3) can be written as \(X=K^{+}(r_{1})H(s)K^{+}(r_{2})\), with \(K^{+}(r_{i})\) of the type (40), and \(H(s):=e^{p_{1}s}\), for some real s. Since \(K^{+}(r_{1})\in G\), conjugating X by \((K^{+}(r_{1}))^{T}\) gives \([X]=[H(s)K^{+}(r_{2})K^{+}(r_{1})]=[H(s)K^{+}(r_{1}+r_{2})]\). So, we can always choose as representatives of the orbits matrices of the type:

with s, r∈[0,2π). Moreover, we have,

which changes the sign of sin(s) as compared with Eq. 42. Thus, we can assume sin(s)≥0, so s∈[0,π]. Furthermore, we have

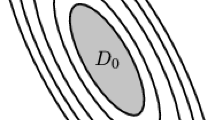

so we can also assume r∈[0,π]. It follows that each equivalence class has an element of the form Eq. 42, with r,s∈[0,π]. By equating two matrices of the form Eq. 42 for different values of the pairs (r,s), one can see that such a correspondence is one to one unless s = π. In this case, all the matrices \(H(\pi )K^{+}(r)\) (which give the set \(\textbf {K}^{-}\)) are equivalent. So if s∈[0,π) and r∈[0,π], each \(H(s)K^{+}(r)\) represents a unique orbit, while if s = π, since they are all equivalent, the choice of r is irrelevant. We can therefore represent SO(3)/G as the upper half of a disc of radius π where, if ρ and 𝜃 are the polar coordinates, we have ρ∈[0,π] with ρ = π−s, and 𝜃∈[0,π] with 𝜃 = r (see Fig. 1).

Remark 5.1

If \([X_{1}]=[X_{2}]\), then \((X_{1})_{3,3}=(X_{2})_{3,3}\), and the trace is also preserved. So, from any element X of a given equivalence class, we can compute the two parameters s, r∈[0,π] of Eq. 42, by setting:

From these values, we also obtain the two values ρ = π−s and 𝜃 = r. So there is a one to one, onto, readily computable correspondence between points in the half disc in Fig. 1 and orbits in SO(3)/G.
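As an illustration of how this correspondence can be evaluated numerically, the following Python sketch recovers (s, r) and (ρ, 𝜃) from a matrix in SO(3). It assumes that the representative has the form \(H(s)K^{+}(r)\), with \(K^{+}(r)\) a rotation by r about the third axis and H(s) a rotation by s about an axis in the plane of the first two; under that assumption, \(X_{3,3}=\cos (s)\) and \(Tr(X)=\cos (s)+\cos (r)(1+\cos (s))\). These two identities are our own derivation, and the function name `orbit_coordinates` and the tolerance `tol` are hypothetical.

```python
import math


def orbit_coordinates(X, tol=1e-12):
    """Return (s, r, rho, theta) for a 3x3 rotation matrix X (nested lists).

    Assumes the orbit representative is H(s) K+(r), so that
    X[2][2] = cos(s) and trace(X) = cos(s) + cos(r) * (1 + cos(s)).
    Both quantities are invariant under conjugation by G.
    """
    x33 = max(-1.0, min(1.0, X[2][2]))  # clamp against rounding errors
    trace = X[0][0] + X[1][1] + X[2][2]
    s = math.acos(x33)
    if 1.0 + x33 < tol:
        # s = pi: all such matrices are equivalent, so r is irrelevant.
        r = 0.0
    else:
        cos_r = (trace - x33) / (1.0 + x33)
        r = math.acos(max(-1.0, min(1.0, cos_r)))
    # Polar coordinates in the half disc: rho = pi - s, theta = r.
    return s, r, math.pi - s, r
```

For example, the identity matrix is mapped to ρ = π, 𝜃 = 0, and the matrix with diagonal entries (−1, −1, 1) is mapped to ρ = π, 𝜃 = π, in agreement with the description of the points B and A of the half disc.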

The point ρ = π and 𝜃=0 (B in Fig. 1) represents the identity matrix, while the point ρ = π and 𝜃 = π (A in Fig. 1) gives the matrix:

Both these matrices are fixed points for the action of G, so they are the only matrices in their orbit, and their isotropy group is the entire group G.

The points with ρ = π and 𝜃∈(0,π) give the matrices in \(\textbf {K}^{+}\), except for the identity 1 and the matrix J defined in Eq. 44. The matrices in \(\textbf {K}^{+}\) commute, and it holds that \(K^{-}(v)K^{+}(r)(K^{-}(v))^{T}=K^{+}(-r)\); thus, the orbits of these elements contain two matrices, and we took as representative the one with sin(r)>0. Their isotropy group is \(\textbf {K}^{+}\).

The origin, i.e., the point with ρ=0 and 𝜃 = r arbitrary, corresponds to the matrices:

These matrices are all equivalent, and their isotropy groups are all conjugate to:

which is the isotropy group of the matrix with r=0.

The matrices with 𝜃 = π and ρ∈(0,π) are the classes of the symmetric matrices in SO(3). It can be seen that their isotropy group is conjugate to the one given by

The matrices with 𝜃=0 and ρ∈(0,π) correspond to matrices in SO(3) of the type:

Their isotropy group is, again, conjugate to V, as in the symmetric case.

The matrices which are in the interior of the half disc have a trivial isotropy group, i.e., composed of only the identity matrix. This is the regular part of SO(3)/G, while the boundary of the half disc corresponds to the singular part.

Summarizing, the isotropy types of SO(3) are given by ({1}), (V), (W) in Eqs. 47 and 46, \((\textbf {K}^{+})\), and \((\textbf {K}^{+}\cup \textbf {K}^{-})\), with the partial ordering

and

\(M_{(\textbf {K}^{+} \cup \textbf {K}^{-})}\) consists of the matrices 1 and J, \(M_{(W)}\) consists of the matrices in Eq. 45, \(M_{(V)}\) consists of the matrices which are either symmetric or of the form Eq. 48, \(M_{(\textbf {K}^{+})}\) consists of the matrices in \(\textbf {K}^{+}\) except for 1 and J, and \(M_{(\{\textbf {1}\})}\) consists of all the remaining matrices. The corresponding strata on the orbit space (half disc) are indicated in Fig. 1.
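The stratification just summarized can be encoded as a small lookup on the polar coordinates (ρ, 𝜃) of the half disc. The Python sketch below follows the case-by-case description of this subsection; the function name `isotropy_type`, the string labels, and the tolerance `tol` used to decide whether a point lies on a boundary piece are hypothetical choices made for illustration.

```python
import math


def isotropy_type(rho, theta, tol=1e-9):
    """Label the isotropy type of the orbit at (rho, theta) in the half disc.

    Expects rho in [0, pi] and theta in [0, pi].
    """
    on_arc = abs(rho - math.pi) < tol          # rho = pi: matrices in K+
    at_origin = abs(rho) < tol                 # rho = 0: matrices in K-
    on_diameter = abs(theta) < tol or abs(theta - math.pi) < tol
    if on_arc and on_diameter:
        return "(K+ u K-)"   # the two fixed points 1 and J
    if on_arc:
        return "(K+)"        # open arc, theta in (0, pi)
    if at_origin:
        return "(W)"         # centre of the disc
    if on_diameter:
        return "(V)"         # open segments (O, B) and (A, O)
    return "({1})"           # interior: regular part


# Example: a point on the open segment (O, B) and a point in the interior.
labels = [isotropy_type(1.0, 0.0), isotropy_type(1.0, 1.0)]
```

Only the last label corresponds to the regular part; every other label marks a piece of the boundary of the half disc, that is, of the singular part.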

5.2 Cut Locus and Critical Locus

We shall now apply the results given in the previous two sections to determine the cut locus \(\mathcal {CL}(SO(3))\) and the critical locus \(\mathcal {CR} (SO(3))\). The cut locus was also described in [7] using a different method. Following what was suggested in Remark 3.7, we analyze the singular points first.

Proposition 5.2

All the matrices that correspond to ρ=π and 𝜃∈(0,π] (these are all the matrices in \(\textbf {K}^{+}\) except the identity 1) are in \(\mathcal {CL}(SO(3))\), and so also in \(\mathcal {CR}(SO(3))\) (cf. Proposition 2.1).

Proof

Fix a matrix \(X_{f}\in \textbf {K}^{+}\) and let \(A_{p}=\alpha p_{1}+\beta p_{2}\) be the matrix, appearing in Eq. 28, that gives the minimizing geodesic for \(X_{f}\). If \(X_{f}\) is not in \(\mathcal {CL}(SO(3))\), then, using Proposition 4.2, it must hold:

for all \(K^{+}\in \textbf {K}^{+}\), since \(\textbf {K}^{+}\) is contained in the isotropy group (indeed \(\textbf {K}^{+}\) is the isotropy group for all values of 𝜃∈(0,π), while for 𝜃 = π the isotropy group is all of G). The previous equality holds for all \(K^{+}\) if and only if \(A_{p}=0\), which is not possible since \(\|A_{p}\|=1\). So \(X_{f}\) is in the cut locus and also in the critical locus. □

The next proposition proves that all the symmetric matrices (which correspond to the segment O−A in Fig. 1) are in the cut locus.

Proposition 5.3

The matrices corresponding to ρ=0 and to ρ∈(0,π) and 𝜃=π (these are the matrices which correspond to the origin and to the segment (A,O) in Fig. 1) are in \(\mathcal {CL}(SO(3))\), and so also in \(\mathcal {CR}(SO(3))\).

Proof

Fix a symmetric matrix \(X_{f}\). Its isotropy group is conjugate either to W in Eq. 46 (if ρ=0) or to V in Eq. 47. By continuity, the geodesic from 1 to \(X_{f}\) must contain matrices whose isotropy group is different from the one of \(X_{f}\), so by using Corollary 3.6 we get that \(X_{f}\) lies in the cut locus. □

Now we will prove that all the remaining matrices, i.e., the ones corresponding to the open segment (O,B) and the regular part (the interior of the half disc), are neither in the cut locus \(\mathcal {CL}(SO(3))\) nor in the critical locus \(\mathcal {CR}(SO(3))\).

We know that the geodesics are analytic curves given by Eq. 28. Here, we may choose \(\mathcal {A}=\text {span }\{p_{1}\}\); thus, the geodesics are given by:Footnote 14

where

The next proposition gives the optimal time to reach the matrices with ρ=0, i.e., the ones corresponding to the origin of the half disc as in Eq. 45.

Proposition 5.4

The optimal geodesic to reach any \(X_{f}\) such that \([X_{f}]=\left [\left (\begin {array}{rrr} 1 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & -1 \end {array}\right )\right ]\) must have the parameter α of Eq. 49 equal to 0, and the minimum time to reach \(X_{f}\) is π.

Proof

Since the conjugation by elements of G does not change the 3,3 element, letting T be the minimum time to reach \(X_{f}\), we must have (see Eq. 49):

The previous equality can hold if and only if α=0. Moreover, we must have cos(T)=−1. Thus, the minimum time T is equal to π. □
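The reasoning behind this short proof can be made explicit as follows. The sketch assumes that the 3,3 entry of the geodesic at time t is \(\frac {C_{1}+\alpha ^{2}}{1+\alpha ^{2}}\), the expression used in the proof of Proposition 5.6, and that \(C_{1}=\cos (\sqrt {1+\alpha ^{2}}\,t)\); the latter identification is our assumption, consistent with a rotation by the angle \(\sqrt {1+\alpha ^{2}}\,t\) about an axis whose third component is \(\alpha /\sqrt {1+\alpha ^{2}}\).

```latex
\begin{align*}
\frac{\cos\!\big(\sqrt{1+\alpha^{2}}\,T\big)+\alpha^{2}}{1+\alpha^{2}} = -1
\;&\Longleftrightarrow\;
\cos\!\big(\sqrt{1+\alpha^{2}}\,T\big) = -1-2\alpha^{2}, \\
-1-2\alpha^{2} \geq -1
\;&\Longleftrightarrow\; \alpha = 0,
\qquad \text{and then} \qquad \cos(T) = -1 \;\Longrightarrow\; T_{\min} = \pi .
\end{align*}
```

Since a cosine is never smaller than −1, the right-hand side of the first line is attainable only for α=0, and the smallest positive time with cos(T)=−1 is T = π.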

The next proposition proves that the matrices in the singular part which correspond to the segment O−B in Fig. 1 are neither on the cut locus nor on the critical locus. In particular, this implies that the projection of the geodesics reaching these matrices lies entirely in the segment, since each point of these trajectories has to have the same isotropy group.

Proposition 5.5

Fix the matrix \(X_{f}\) that corresponds to 𝜃=0 and ρ=π−s, with s∈(0,π), as in Eq. 48. Then, this matrix is neither on the cut locus nor on the critical locus, and the minimum time T to reach \(X_{f}\) from 1 is T=s.

Proof

Fix a matrix \(X_{f}\) that corresponds to 𝜃=0 and ρ = π−s, with s∈(0,π), i.e., such that

These are matrices of the form Eq. 48. First, we prove that the geodesic reaching \(X_{f}\) must necessarily have α=0. Let γ(t) be a geodesic with α=0. Then, by Proposition 5.4, its projection is optimal until t = π; thus, γ(t) is optimal until t = π. Moreover, since its projection at time t = s is equal to \(H(s):=e^{p_{1}s}\), we have \(\gamma (s)=X_{f}\), and s is the minimum time, since the minimum time is the same for equivalent matrices (cf. Proposition 3.1). If there were another trajectory \(\tilde {\gamma }(t)\) reaching \(X_{f}\) optimally, with α ≠ 0, then the trajectory:

would also be an optimal trajectory to the origin, which contradicts the fact that all geodesics are analytic.

Assume now that \(X_{f}\) is on the cut locus. Then, there exist two optimal trajectories, both with α=0, so \(\gamma _{i}(t)=e^{{A_{p}^{i}}t}\), such that

Since any two maximal Abelian subalgebras in \(\mathcal {P}\) are conjugate by an element of \(\textbf {K}^{+}\), there must exist a matrix \(K^{+}\in \textbf {K}^{+}\) such that

However, since these spans are one dimensional, we must have

Thus,

In the first case, we have that \(K^{+}\) must be in the isotropy group of \(X_{f}\). On the other hand, the isotropy group of \(X_{f}\) is conjugate to the group V of Eq. 47; thus, it contains two elements: one is the identity and the other must have −1 in the 3,3 position. Thus, since \(K^{+}\) has +1 in the 3,3 position, we must necessarily have \(K^{+}=\textbf {1}\), and so \({A_{p}^{2}}={A_{p}^{1}}\). In the second case, \(X_{f}\) is conjugate via an element of \(\textbf {K}^{+}\) to \(X_{f}^{-1}={X_{f}^{T}}\). Writing the third column of the relation \(X_{f} K^{+}=K^{+} {X_{f}^{T}}\) using the formula (48) with \(K^{+}:=\left (\begin {array}{ll} K_{1}^{+} & 0 \\ 0 & 1\end {array}\right )\) as

we have that the 2×2 matrix \(K_{1}^{+} \in SO(2)\) has an eigenvalue equal to −1 (unless c and f are both equal to zero, which is to be excluded since g≠±1). Therefore,

Using this in \({A_{p}^{2}}=-K^{+} {A_{p}^{1}} (K^{+})^{T}\) and the general expression for \({A_{p}^{1}}\), we find again \({A_{p}^{2}}={A_{p}^{1}}\).

Therefore, \(X_{f}\) is not on the cut locus. Moreover, since the projection of the trajectory is optimal until t = π>s, the matrix \(X_{f}\) is not on the critical locus either. □

5.3 The Optimal Synthesis

The last proposition has characterized the minimizing geodesics for points corresponding to the interval O−B in Fig. 1, while Proposition 5.4 has given the minimizing geodesic and optimal time for points corresponding to the origin in Fig. 1, i.e., matrices in \(\textbf {K}^{-}\). We now consider the geodesics leading to the remaining pieces of the singular part of SO(3)/G. Then, we put everything together to describe the full optimal synthesis.

The geodesic curves given in Eq. 49 depend on the parameter α, which varies in R. However, both parameters ρ and 𝜃, which characterize the equivalence classes in the orbit space, are even functions of α (see Eq. 43), so in the analysis in the orbit space, we can restrict ourselves to values α≥0.

The next proposition provides the optimal time to reach any matrix with ρ = π, i.e., all the matrices in \(\textbf {K}^{+}\).

Proposition 5.6

Assume \(X_{f}\in \textbf {K}^{+}\), so that \([X_{f}]=\{X_{f},{X_{f}^{T}}\}\), and let 𝜃∈(0,π] be the value of the parameter of Eq. 42 which, together with ρ=π, gives the equivalence class \([X_{f}]\). Then, the minimum time T to reach \(X_{f}\) is given by

and the optimal value of the parameter α to reach \([X_{f}]\) is \(\alpha =\frac {2\pi -\theta }{\sqrt {\theta (4\pi -\theta )}}\).

Proof

First notice that necessarily α ≠ 0, since all the trajectories corresponding to α=0 have 𝜃=0. Since the equivalence class of \(X_{f}\) consists of only two elements (which coincide when 𝜃 = π) and these elements have 0 in the 3,1 and 3,2 positions and 1 in the 3,3 position, for t = T we must have in Eq. 49 \(C_{2}=C_{3}=0\) and \(\frac {C_{1}+\alpha ^{2}}{1+\alpha ^{2}}=1\), which implies:

thus, we must have

for some \(m\in \mathbb {N}\). Moreover, at time T, we have

which implies

for some \(p\in \mathbb {N}\). We will treat the two signs of ±𝜃 separately.

Case +1 Assume that Eq. 53 holds with the +1 sign. Since \(\sqrt {(1+\alpha ^{2})} T>\alpha T\), we must have p≤m−1. From Eqs. 52 and 53, we have

The previous equality implies

and consequently,

The value of \(T^{+}_{m,p}\), for each fixed m, is minimum when p is maximum, i.e. p = m−1, and its minimum value is

which is minimum when m=1 and we have \(T_{1,0}^{+}=\sqrt {(2\pi -\theta )(2\pi +\theta )}\).

Case −1 Assume that Eq. 53 holds with the −1 sign. Imposing again \(\sqrt {(1+\alpha ^{2})} T>\alpha T\), we now get p≤m. From Eqs. 52 and 53 we have

The previous equality implies

and consequently,

Again \(T^{-}_{m,p}\), for each fixed m is minimum when p is maximum. Therefore, we now take p = m, and we get

which is minimum when m=1 and we have \(T^{-}_{1,1}=\sqrt {\theta (4\pi -\theta )}\).

Since 𝜃≤π, we have \(T^{-}_{1,1}\leq T^{+}_{1,0}\), thus the minimum time is \(T=\sqrt {\theta (4\pi -\theta )}\) with the corresponding \(\alpha =\frac {2\pi -\theta }{\sqrt {\theta (4\pi -\theta )}}\). □
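The closed-form expressions of Proposition 5.6 are easy to evaluate and cross-check numerically. The Python sketch below computes T and α from 𝜃 and also the competing value \(T^{+}_{1,0}\) from the first case of the proof; the function names `min_time_to_K_plus` and `candidate_time_plus` are hypothetical, and only the formulas stated in the proposition and its proof are used.

```python
import math


def min_time_to_K_plus(theta):
    """Minimum time T and parameter alpha of Proposition 5.6.

    theta in (0, pi] is the polar angle of the target orbit on the arc rho = pi.
    """
    if not 0.0 < theta <= math.pi:
        raise ValueError("theta must lie in (0, pi]")
    T = math.sqrt(theta * (4.0 * math.pi - theta))
    alpha = (2.0 * math.pi - theta) / T
    return T, alpha


def candidate_time_plus(theta):
    """The value T^+_{1,0} obtained in the '+' case of the proof."""
    return math.sqrt((2.0 * math.pi - theta) * (2.0 * math.pi + theta))


# Example: the orbit of J (theta = pi) gives T = sqrt(3)*pi and alpha = 1/sqrt(3).
T_J, alpha_J = min_time_to_K_plus(math.pi)
```

A direct computation shows that \(1+\alpha ^{2}=4\pi ^{2}/(\theta (4\pi -\theta ))\), so that the returned pair always satisfies \(T=2\pi /\sqrt {1+\alpha ^{2}}\); this is the relation between T and α used in the discussion that follows.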

From the previous proposition, since 𝜃∈(0,π], we have that for \(\alpha \geq \frac {1}{\sqrt 3}\), all the geodesics are optimal until time \(T=\frac {2\pi }{\sqrt {1+\alpha ^{2}}}\), when they reach the boundary of the disc. It is clear that T is a decreasing function of α, with maximum equal to \(\pi \sqrt 3\), which corresponds to the trajectory reaching the matrix J. The trajectory corresponding to α=0 lies on the segment (O,B) and is optimal until time T = π, when it reaches the origin. The trajectories corresponding to \(\alpha \in (0,\frac {1}{\sqrt 3})\) are optimal until they reach the segment (A,O), which corresponds to the symmetric matrices. We know from Proposition 5.3 that these matrices are on the cut locus. For a given α, the time T at which the corresponding geodesic loses optimality can be numerically estimated, and it is always between π and \(\sqrt 3\pi \).

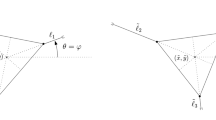

Thus, all elements are reached in time \(T\leq \sqrt 3\pi \). See Fig. 2 for the shape of the optimal trajectories: the red curve is the optimal curve with \(\alpha =\frac {1}{\sqrt 3}\), the black curves correspond to larger values of α and lose optimality at the boundary of the circle, while the blue curves correspond to smaller values of α and lose optimality at the segment (A,O).

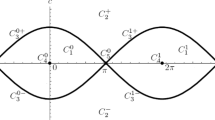

Figure 3 describes the optimal synthesis according to the third step of the procedure given in the previous section, that is, it gives the boundaries of the reachable sets at any time t. To draw these curves, for a given time T one finds the values of α such that the corresponding trajectory at time T lies on the boundary; the curves are then parametric curves with α as a parameter in the given interval. For T<π, the boundary is obtained by varying α from 0 until the boundary of the circle is reached; for T>π, the parameter α has to be varied from the value that corresponds to the segment (A,O) until the trajectory again reaches the boundary of the circle. So the behavior changes at the curve in red corresponding to T = π.
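For T<π, the construction just described can be sketched in a few lines of Python. The code assumes that the geodesic has the form \(X(t)=e^{-\alpha kt}e^{(\alpha k+p_{1})t}\), with k generating unit-speed rotations about the third axis and \(p_{1}\) unit-speed rotations about the first axis; this choice of basis and sign convention is ours, and the projection to the half disc does not depend on the sign of α. The projection formula is derived under the same assumptions, and all function names are hypothetical.

```python
import math


def rotation(axis, angle):
    """Rodrigues formula: rotation by `angle` about the unit vector `axis`."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    v = 1.0 - c
    return [
        [c + x * x * v, x * y * v - z * s, x * z * v + y * s],
        [y * x * v + z * s, c + y * y * v, y * z * v - x * s],
        [z * x * v - y * s, z * y * v + x * s, c + z * z * v],
    ]


def matmul(A, B):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def geodesic(alpha, t):
    """Assumed geodesic X(t) = exp(-alpha*k*t) exp((alpha*k + p1)*t)."""
    w = math.sqrt(1.0 + alpha * alpha)
    left = rotation((0.0, 0.0, 1.0), -alpha * t)
    right = rotation((1.0 / w, 0.0, alpha / w), w * t)
    return matmul(left, right)


def project(X, tol=1e-12):
    """Polar coordinates (rho, theta) of the orbit of X in the half disc."""
    x33 = max(-1.0, min(1.0, X[2][2]))
    rho = math.pi - math.acos(x33)
    if 1.0 + x33 < tol:          # centre of the disc: theta is irrelevant
        return rho, 0.0
    cos_r = (X[0][0] + X[1][1]) / (1.0 + x33)
    return rho, math.acos(max(-1.0, min(1.0, cos_r)))


def boundary_curve(T, samples=50):
    """Sampled boundary of the projected reachable set at a time 0 < T < pi."""
    if not 0.0 < T < math.pi:
        raise ValueError("this sketch only covers 0 < T < pi")
    # alpha_max is the value for which the geodesic reaches the arc at time T.
    alpha_max = math.sqrt((2.0 * math.pi / T) ** 2 - 1.0)
    return [project(geodesic(alpha_max * i / samples, T))
            for i in range(samples + 1)]
```

The first point of the returned list lies on the segment (O,B) at distance T from B, and the last point lies on the arc ρ = π. The case T>π would additionally require the value of α at which the geodesic meets the segment (A,O), which the text notes has to be estimated numerically; it is not covered by this sketch.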

Remark 5.7

To derive all the previous results, we have taken as symmetry group \(G=\textbf {K}^{+}\cup \textbf {K}^{-}\). We could have done a similar analysis taking as a group of symmetries only the connected component containing the identity, i.e., \(\tilde {G}=\textbf {K}^{+}\). In this case, as representatives of equivalence classes, we could again take matrices of the type (42), but now, while s∈[0,π], we may allow r∈(−π,π]. So the quotient space turns out to be the whole disc of radius π, instead of only the upper half. Here, the boundary represents the matrices in \(\textbf {K}^{+}\), which are now all fixed points, and the center represents the matrices in \(\textbf {K}^{-}\), which are again all equivalent. It is easy to see that these two sets give the singular part of \(SO(3)/\textbf {K}^{+}\), while the interior of the disc is all in the regular part. The trajectories in the quotient space are given by the trajectories we have found previously and the ones that are symmetric to them with respect to the x-axis. This can be easily seen since the two parameters s, r can be found using, as before, Remark 5.1, but while s is the same, for r we have two choices: the r given in Remark 5.1 and its opposite (see also Fig. 4).

Notes

That is, a manifold with the action of a Lie transformation group.

That is, for any p∈M, \(g_{1}\) and \(g_{2}\) in G, \((g_{2}g_{1})p=g_{2}(g_{1}p)\). Every aspect of the theory goes through for right actions with minor modifications, that is, \(g_{2}(g_{1}p)=(g_{1}g_{2})p\).

That is, the action map α:G×M→M×M defined by α(g,p)=(g p,p) is proper, that is, the preimage of any compact set is compact.

The intuitive idea of the frontier connection is that smaller dimensional manifolds in the partition are either totally detached from higher dimensional manifolds (that is the intersection with the closure is empty) or they are part of the boundary.

And therefore in the critical locus \({\mathcal {CR}}(M)\) cf. Proposition 2.1.

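If \(A\in \mathcal {K}\) and \(D \in \mathcal {P}\), then \(Kill(A,D)=Kill(\theta A,\theta D)=Kill(A,-D)=-Kill(A,D)\), so that \(Kill(A,D)=0\) and therefore \(B(A,D)=-Kill(A,\theta D)=Kill(A,D)=0\), where we have used the property of the Killing form that for every Lie algebra automorphism ϕ, \(Kill(A,D)=Kill(\phi A,\phi D)\).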

Notice that we have set up the whole treatment for right invariant vector fields, but we could have given an analogous treatment for left invariant vector fields.

Consider a connected component of G. We know that there exist right invariant vector fields \(X_{1},X_{2},\ldots ,X_{m}\) in \(\mathcal {K}\) such that, denoting by \(\sigma _{1,t},\sigma _{2,t},\ldots ,\sigma _{m,t}\) the corresponding flows, we have \(\sigma _{m,t_{m}} \circ \sigma _{{m-1},t_{m-1}} \circ {\cdots } \circ \sigma _{1,t_{1}} (g_{j})=g\). For every r=1,…,m the map \(\sigma _{r,t}\) is real analytic as a function of t. Denote \(\bar g:=\sigma _{1,t_{1}}(g_{j})\). We want to show that \({\Phi }_{\bar g * }\mathcal {P} \subseteq \mathcal {P}\); applying this m times, we then have that \({\Phi }_{g*} \mathcal {P} \subseteq \mathcal {P}\). Consider K in \(\mathcal {K}\) and \(P \in \mathcal {P}\) and the Killing inner product \(B(K, {\Phi }_{\sigma _{1, t}(g_{j}) *} P)\), which is a real analytic function of t at every point in M and is zero for t=0. By taking the k-th derivative of this function at t=0, we obtain, using the definition of the Lie derivative,

where \(ad_{X_{1}}^{k}\) denotes the k-th repeated Lie bracket with \(X_{1}\) and we have used Eq. 22.

The example of SU(2) treated in [4] and the example of SO(3) of the next section are K−P problems of this type.

Here, with minor abuse of notation, we identify \(\mathcal {K}\), \(\mathcal {L}\), and \(\mathcal {P}\) with the spaces of matrices representing the corresponding vector fields.

This is done for example in the next section in Proposition 5.3.

Typical cases in the literature look at a Lie group M where the conjugation action on M is given by M itself and not by a subgroup G of M as in our case.

Here, we use the calculation of [7] section 3.2.1.

References

Agrachev A, Barilari D, Boscain U. Introduction to Riemannian and sub-Riemannian geometry, Lecture Notes. Trieste, Italy: SISSA; 2011.

Agrachev A, Sachkov Y. Control theory from the geometric viewpoint, Encyclopaedia of Mathematical Sciences, 87. Berlin: Springer; 2004.

Albertini F, D’Alessandro D. Minimum time optimal synthesis for two level quantum systems. J Math Phys 2015;56:012106.

Albertini F, D’Alessandro D. Time optimal simultaneous control of two level quantum systems, submitted to Automatica.

Alekseevsky D, Kriegl A, Losik M, Michor P. The Riemannian geometry of orbit spaces. The metric, geodesics, and integrable systems. Publ Math Debrecen 2003; 62:247–276.

Boscain U, Chambrion T, Gauthier JP. On the K+P problem for a three-level quantum system: optimality implies resonance. J Dyn Control Syst 2002;8(4):547–572.

Boscain U, Rossi F. Invariant Carnot-Caratheodory metric on S^3, SO(3) and SL(2) and lens spaces. SIAM J Control Optim 2008;47:1851–1878.

Bredon GE. Introduction to compact transformation groups, Pure and Applied Mathematics, Vol. 46. New York: Academic Press; 1972.

D’Alessandro D, Albertini F, Romano R. Exact algebraic conditions for indirect controllability of quantum systems. SIAM J Control Optim 2015;53(3):1509–1542.

Echeverría-Enríquez A, Marín-Solano J, Muñoz-Lecanda MC, Román-Roy N. Geometric reduction in optimal control theory with symmetries. Rep Math Phys 2003;52:89–113.

Bredon GE. Introduction to compact transformation groups, Pure and Applied Mathematics, Vol. 46. New York: Academic Press; 1972.

Filippov AF. On certain questions in the theory of optimal control. SIAM J on Control 1962;1:78–84.

Grizzle J, Marcus S. The structure of nonlinear control systems possessing symmetries. IEEE Trans Automat Control 1985;30:248–258.

Grizzle J, Marcus S. Optimal control of systems possessing symmetries. IEEE Trans Automat Control 1984;29:1037–1040.

Ibort A, De la Peña TR, Salmoni R. Dirac structures and reduction of optimal control problems with symmetries, preprint; 2010.

Jacquet S. Regularity of the sub-Riemannian distance and cut locus, in nonlinear control in the year 2000. Lecture Notes Control Inf Sci 2007;258:521–533.

Knapp A. Lie groups beyond an introduction, Progress in Mathematics, Vol. 140. Boston: Birkhäuser; 1996.

Koon WS, Marsden JE. The Hamiltonian and Lagrangian approaches to the dynamics of nonholonomic systems. Rep Math Phys 1997;40:21–62.

Marsden JE, Ratiu TS. Introduction to mechanics and symmetry. New York: Springer; 1999.

Marsden JE, Weinstein A. Reduction of symplectic manifolds with symmetry. Rep Math Phys 1974;5:121–130.

Martinez E. Reduction in optimal control theory. Rep Math Phys 2004;53(1):79–90.

Meinrenken E. Group actions on manifolds (lecture notes). University of Toronto; 2003.

Michor P. Isometric actions of Lie groups and invariants, Lecture Course at the University of Vienna; 1996.

Montgomery R. A tour of sub-Riemannian geometries, their geodesics and applications, Mathematical Surveys and Monographs, Vol. 91. Providence, RI: American Mathematical Society; 2002.

Monti R. The regularity problem for sub-Riemannian geodesics. In: Stefani G, Boscain U, Gauthier J-P, Sarychev A, Sigalotti M, editors. Geometric control and sub-Riemannian geometry. Springer INdAM Series; 2014. p. 313–332.

Nijmeijer H, Van der Schaft A. Controlled invariance for nonlinear systems. IEEE Trans Automat Control 1982;27:904–914.

Ohsawa T. Symmetry reduction of optimal control systems and principal connections. SIAM J Control Optim 2013;51(1):96–120.

Acknowledgements

Domenico D’Alessandro’s research was supported by the ARO MURI grant W911NF-11-1-0268. Domenico D’Alessandro also would like to thank the Institute of Mathematics and its Applications in Minneapolis and the Department of Mathematics at the University of Padova, Italy, for kind hospitality during part of this work.

Cite this article

Albertini, F., D’Alessandro, D. On Symmetries in Time Optimal Control, Sub-Riemannian Geometries, and the K−P Problem. J Dyn Control Syst 24, 13–38 (2018). https://doi.org/10.1007/s10883-016-9351-6

DOI: https://doi.org/10.1007/s10883-016-9351-6