Abstract

The item response tree (IR-tree) model is increasingly used in personality assessment. It allows researchers to model the cognitive decision-making process as a tree structure and to evaluate conceptual accounts of individual differences in the response process. Recent research has shown the feasibility of applying IR-tree models to personality data; however, these studies have focused exclusively on honest or incumbent samples rather than on motivated samples in high-stakes situations. The goal of our research is to elucidate the internal mechanism by which respondents in different testing situations (i.e., honest and motivated test conditions) go through the decision-making process, using the three-process IR-tree model (Böckenholt, 2012). Our findings generally corroborate a direction–extremity–midpoint response sequence in both honest and motivated test settings. Additionally, samples in motivated test settings generally exhibit stronger directional and extreme response preferences but weaker midpoint preferences than samples in unmotivated test settings. Furthermore, for actual job applicants, social desirability had a substantial effect on directional, extreme, and midpoint response preferences. Our findings will help researchers and practitioners develop a nuanced understanding of test-takers’ decision-making in motivated test environments and, in turn, develop more fake-resistant personality assessments.

In personnel selection settings, various assessments (e.g., cognitive ability tests, personality tests, situational judgment tests, and biodata tests) are used to evaluate job applicants’ knowledge, skills, abilities, and other characteristics (Sackett & Lievens, 2008). Personality tests in particular are used ubiquitously because personality traits predict valued work outcomes, such as job performance (Barrick et al., 2001), training performance (Schmidt & Hunter, 1998), and leadership (Judge et al., 2002). Personality tests also provide incremental validity, reduce the adverse impact associated with cognitive ability tests, and help maximize the chances of hiring qualified and diverse candidates, a common organizational goal (Hough et al., 2001; Ployhart & Holtz, 2008). Moreover, personality tests are convenient, readily available, and relatively inexpensive to administer (Anderson et al., 2010).

Despite their merits and popularity, personality tests are often criticized because responses to them are easy to fake (Cucina et al., 2019; Ellingson et al., 2001; Mueller-Hanson et al., 2003; Ones et al., 1996; Stark et al., 2001). Faking refers to an individual’s deliberate attempt to distort information about their personality, interests, experience, or attitudes in order to be perceived more favorably (Kuncel & Borneman, 2007). To date, researchers have published various studies addressing the following research questions: 1) Can people fake? 2) Do applicants fake? and 3) Does faking matter? As a result of these studies, it is now widely accepted that people can intentionally distort their responses (Byle & Holtgraves, 2008; Grubb & McDaniel, 2007; Ziegler et al., 2007) and that applicants do fake in selection settings (Arthur et al., 2010; Fell & König, 2016; Griffith & Converse, 2012; Peterson et al., 2011; Roulin & Krings, 2020). Additionally, applicants differ in their ability and motivation to fake (Ellingson & McFarland, 2011; Griffith et al., 2011; Marcus, 2009; McFarland & Ryan, 2000; Pavlov et al., 2019; Roulin et al., 2016), and faking generally has a negative impact on the psychometric properties, decision making (e.g., rank order), and overall utility of personality tests (Birkeland et al., 2006; Donovan et al., 2014; Hough & Dilchert, 2017; Lee et al., 2017; Mueller-Hanson et al., 2003; Zickar et al., 2004).

Despite numerous research efforts, scholars have paid relatively little attention to the faking response process itself (Holtgraves, 2004; Zhang et al., 2020). We found that most papers addressing this topic took one of the following approaches: 1) examining response latency (i.e., whether faking produces faster or slower responses) (Fine & Pirak, 2016; Shoss & Strube, 2011; Vasilopoulos et al., 2000), 2) describing the cognitive process through think-aloud methods (Hauenstein et al., 2017; Holtrop et al., 2020; Robie et al., 2007), and 3) identifying differential item functioning (DIF) via item response theory (IRT) (O’Brien & LaHuis, 2011; Scherbaum et al., 2013; Stark et al., 2004; Zhang et al., 2020; Zickar & Robie, 1999).

First, the response latency approach (e.g., Fine & Pirak, 2016; Van Hooft & Born, 2012) has practical implications for personnel selection. For example, longer response times are thought to indicate faking, on the logic that responding dishonestly requires more cognitive effort and processing (Fine & Pirak, 2016). From this perspective, speeded tests have been suggested as an effective and appealing way to inhibit faking because they are easy to implement (Fine & Pirak, 2016; Komar et al., 2010; Meade et al., 2020). Second, the think-aloud approach (e.g., Holtrop et al., 2020; Robie et al., 2007) is effective for examining the cognitive process directly by asking respondents about their intentions during test-taking. Finally, the DIF approach (e.g., O’Brien & LaHuis, 2011; Scherbaum et al., 2013; Zhang et al., 2020) compares the psychometric properties of items (i.e., item parameters) across testing conditions and reveals items that function differently even for respondents at the same level of the latent trait. Although these studies contribute significantly to our knowledge of faking response processes, further study with more advanced methodology is needed to deepen our understanding of the response process underlying personality faking.

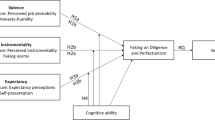

In the current study, we aimed to identify the internal mechanism that explains how respondents in two testing situations (i.e., honest and motivated test conditions) experience the decision-making process differently, using the three-process item response tree (IR-tree) model (Böckenholt, 2012). Specifically, the objectives of this study are to 1) evaluate the suitability of the agree primary process (APP) and midpoint primary process (MPP) models in honest and motivated test settings, 2) investigate the decision-making process for Likert-type items in the two test settings and better understand how the latent traits involved in each decision-making step relate to one another across settings, and 3) examine the differential impact of social desirability on each step of the decision-making process in real personnel selection settings. For this purpose, we investigated both experimental samples under honest and faking instruction conditions (within-subject design) and open-source samples of real job applicants and research-only participants (between-subject design) obtained from Anglim et al. (2017).

To assess the internal decision-making mechanism behind response category selection, we examined two distinct three-process IR-tree models: the APP and the MPP models (LaHuis et al., 2019). Additionally, we examined the sequence of decision-making stages in the response process in honest and motivated test situations using the multigroup IR-tree model (Plieninger, 2020). Finally, we included social desirability as a covariate in the IR-tree model for the actual job applicant sample to examine its impact on each step of the response process.

Theoretical Response Process Models

To date, researchers have proposed several theoretical models of the response process underlying answers to self-report surveys (e.g., Krosnick, 1999; Krosnick & Fabrigar, 1998; Schwarz & Sudman, 1996; Ziegler, 2011). For example, Krosnick (1999) proposed a four-step response process consisting of comprehension (e.g., interpreting the item, deducing its purpose, and forming a mental representation), retrieval (e.g., matching relevant memories to the item content), judgment (e.g., integrating and evaluating the retrieved information and comparing it with the mental representation), and mapping (e.g., expressing the decision on a rating scale). Tourangeau and Rasinski (1988) proposed a similar model but referred to the last step as the “editing” step. They suggested that the editing step reflects faking or socially desirable responding; that is, test-takers edit or change their responses in a more socially desirable direction during this last step.

More recently, Ziegler (2011) proposed a model of applicant faking behavior by integrating the general models of Krosnick (1999) and Tourangeau and Rasinski (1988). The main difference between Ziegler’s model and the previous models is that it incorporates interactions between person variables (e.g., test motivation, general mental ability, self-understanding) and situation variables (e.g., testing conditions, presence of test administrators) at each stage. Ziegler’s model likewise consists of four steps: comprehension, importance classification, retrieval/judgment, and mapping. In the comprehension step, test-takers are assumed to evaluate the importance of items based on the situational demand. Ziegler hypothesized that the importance classification phase originates from establishing a mental picture of the item content. In the retrieval/judgment stage, test-takers retrieve relevant memories and form a judgment based on their mental image of the item. The last stage is mapping, in which test-takers map their judgment onto the rating scale. Although these theoretical models give insight into the response process for personality items, relatively little empirical research has examined how respondents experience the cognitive response process in different testing settings. The recently proposed IR-tree models can be an effective methodological tool to this end; we describe them in the next section.

Introduction to IR-Tree Models

According to Böckenholt’s three-process IR-tree approach (Böckenholt, 2012), a respondent’s selection of a response category on a five-point Likert-type item is guided by a sequential response process that examines “whether to express or not express an opinion, whether to agree or disagree with an item, and whether to express weakly or strongly the intensity of their attitude” (Böckenholt, 2019, p. 488). That is, test-takers go through a series of processes while answering an item: i) indifference, the tendency to endorse the midpoint response category; ii) direction, the tendency to answer in the direction of the trait; and iii) intensity, the tendency to select an extreme response category. Recently, LaHuis et al. (2019) described two different IR-tree models for personality data, namely the MPP and APP models, and our research follows this approach.

MPP Model

As illustrated in Fig. 1, the MPP model consists of sequential decision-making steps: midpoint (M) captures indifference, direction (D) captures the agree/disagree tendency, and extremity (E) captures intensity. Each of the latent factors M, D, and E is referred to as a query (Böckenholt, 2012). For example, suppose a test-taker selects “1” on a five-point Likert-type personality item (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, and 5 = strongly agree). The MPP model assumes that the test-taker first decides, at query M, whether or not to explicitly express their personality characteristic. Because the test-taker chose “1,” the model assumes they expressed a clear position, so the process moves to query D, where the direction of the response (agreement or disagreement) is evaluated. Given that “1” indicates disagreement, the process proceeds to query E on the left side of the tree (i.e., disagree or strongly disagree). At query E, the model evaluates the intensity of the disagreement. Thus, the MPP model traces the response through queries M, D, and E and, in this example, ends at “strongly disagree.”

To estimate an IR-tree model, the original Likert-type responses need to be transformed into pseudo-items for each query. The underlying idea of IR-tree models is that “decisions between several alternatives can be split up into several sub-decisions that are commonly called pseudo-items” (Lang et al., 2019, p. 13). Each sub-decision is coded as a 0/1 pseudo-item depending on the outcome at the corresponding query. If a respondent (v) selects the category “1 (strongly disagree)” for an item (i), the response is evaluated in three steps in the MPP model. Because the response is “1 (strongly disagree),” the respondent is considered to have an explicit opinion, so the pseudo-item for query M is coded as 0. If the respondent had a neutral opinion, the pseudo-item for query M would be coded as 1. Decision making then proceeds to query D to evaluate whether the response is an agreement (i.e., positive direction) or a disagreement (i.e., negative direction). If the response is a disagreement, the pseudo-item for query D is coded as 0.

By contrast, if the response is an agreement, the pseudo-item for query D is coded as 1. In our example, “1 (strongly disagree)” indicates a negative direction, so the pseudo-item for query D is coded as 0. Extremity (E) is evaluated in the last step: if the response is extreme in either direction (i.e., 1 or 5), the pseudo-item for query E is coded as 1; otherwise, it is coded as 0. Therefore, category “1” is transformed into the binary vector [M, D, E] = [0, 0, 1]. Other responses can be transformed into pseudo-items with the same logic. However, if category “3” is chosen, the decision process terminates at query M. Because “3” indicates indifference (i.e., the midpoint), the pseudo-item for query M is coded as 1, and the pseudo-items for queries D and E are coded as missing because these queries are not involved in producing a response of “3.” Category “3” is therefore coded as [M, D, E] = [1, \(-\), \(-\)], where the missing entries (\(-\)) are missing at random by design.
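The coding rules above amount to a small lookup table. The sketch below is a minimal Python illustration, not the authors’ Mplus setup: the codes for categories “2” and “4” are derived from the rules stated in the text (disagree or agree, not extreme), `None` stands for a pseudo-item that is missing by design, and the helper names (`to_mpp_pseudo_items`, `recode_matrix`) are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# MPP pseudo-items ordered as (M, D, E); None = node not reached (missing by design).
PseudoItems = Tuple[Optional[int], Optional[int], Optional[int]]

MPP_CODING: Dict[int, PseudoItems] = {
    1: (0, 0, 1),        # explicit opinion, disagree, extreme
    2: (0, 0, 0),        # explicit opinion, disagree, not extreme
    3: (1, None, None),  # midpoint: queries D and E are never reached
    4: (0, 1, 0),        # explicit opinion, agree, not extreme
    5: (0, 1, 1),        # explicit opinion, agree, extreme
}


def to_mpp_pseudo_items(response: int) -> PseudoItems:
    """Map one 5-point Likert response (1-5) to MPP pseudo-items [M, D, E]."""
    if response not in MPP_CODING:
        raise ValueError(f"expected a response in 1..5, got {response!r}")
    return MPP_CODING[response]


def recode_matrix(responses: List[List[int]]):
    """Split a persons x items response matrix into M, D, and E pseudo-item matrices."""
    coded = [[to_mpp_pseudo_items(r) for r in row] for row in responses]
    m = [[c[0] for c in row] for row in coded]
    d = [[c[1] for c in row] for row in coded]
    e = [[c[2] for c in row] for row in coded]
    return m, d, e
```

For a respondent who answers 1, 3, and 5 on three items, `recode_matrix([[1, 3, 5]])` returns M = [[0, 1, 0]], D = [[0, None, 1]], and E = [[1, None, 1]]; an IR-tree program then analyzes these matrices as separate sets of binary items.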

IR-tree models typically estimate a separate latent trait for each sub-decision process (i.e., queries M, D, and E) using the Rasch model or the two-parameter probit model. For example, when the Rasch model is used, the probability \(p(Y_{vip}=1)\) is given by \(\Phi(\theta_{vp}-\beta_{ip})\), where \(\theta_{vp}\) is the person parameter of individual v for response process p and \(\beta_{ip}\) is the difficulty or extremity parameter of item i for response process p. When the two-parameter probit model is used, \(p(Y_{vip}=1)\) is given by \(\Phi(\alpha_{ip}\theta_{vp}-\beta_{ip})\), where \(\alpha_{ip}\) is the discrimination of item i for response process p. Returning to our example, category “1” is coded as [M, D, E] = [0, 0, 1], so its probability is \([1-\Phi(\alpha_{M}\theta_{M}-\beta_{M})]\times[1-\Phi(\alpha_{D}\theta_{D}-\beta_{D})]\times\Phi(\alpha_{E}\theta_{E}-\beta_{E})\). In our research, we use the two-parameter probit model to estimate the IR-tree models. Table 1 shows the category probabilities of each pseudo-item for the five categories.
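As a numerical check on these formulas, the sketch below computes all five MPP category probabilities under the two-parameter probit model. It is an illustration under stated assumptions: Φ is implemented with `math.erf`, the parameter values a caller passes in are hypothetical, and the function names are ours rather than part of any IR-tree software.

```python
import math
from typing import Dict


def phi(x: float) -> float:
    """Standard normal CDF, written with math.erf to avoid extra dependencies."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def node_prob(alpha: float, theta: float, beta: float) -> float:
    """P(pseudo-item = 1) under the two-parameter probit model: Phi(alpha*theta - beta)."""
    return phi(alpha * theta - beta)


def mpp_category_probs(theta: Dict[str, float], alpha: Dict[str, float],
                       beta: Dict[str, float]) -> Dict[int, float]:
    """Probabilities of categories 1-5 under the MPP tree (M, then D, then E).

    theta, alpha, and beta are dicts keyed by query name: "M", "D", "E".
    """
    pm, pd, pe = (node_prob(alpha[q], theta[q], beta[q]) for q in ("M", "D", "E"))
    return {
        1: (1 - pm) * (1 - pd) * pe,        # [M, D, E] = [0, 0, 1]
        2: (1 - pm) * (1 - pd) * (1 - pe),  # [0, 0, 0]
        3: pm,                              # [1, -, -]
        4: (1 - pm) * pd * (1 - pe),        # [0, 1, 0]
        5: (1 - pm) * pd * pe,              # [0, 1, 1]
    }
```

With every θ and β equal to 0 and every α equal to 1, each node probability is Φ(0) = 0.5, so category “3” receives probability 0.5 and category “1” receives 0.5 × 0.5 × 0.5 = 0.125. For any parameter values the five probabilities sum to 1, because every path through the tree ends in exactly one category.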

APP Model

Unlike the MPP model, the APP model consists of a different sequence of decision-making steps, namely direction (D), extremity (E), and midpoint (M), as shown in Fig. 2. Using the same example, the APP model assumes that test-takers first evaluate the direction of their personality response (e.g., agree or disagree) at query D. Because the selected category “1” represents disagreement, the model proceeds to query E, where test-takers are assumed to evaluate the intensity of their (dis)agreement, that is, whether or not to give an extreme response. Because category “1” is an extreme response, the decision-making process terminates at “strongly disagree.” The same logic of pseudo-item transformation and category probabilities described for the MPP model applies to the APP model. Table 2 shows the APP pseudo-item coding of the five-point Likert item based on the two-parameter probit model. Unlike in the MPP model, the response category “1” in the APP model is coded as [D, E, M] = [0, 1, \(-\)], and its probability is \([1-\Phi(\alpha_{D}\theta_{D}-\beta_{D})]\times\Phi(\alpha_{E}\theta_{E}-\beta_{E})\).
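The APP tree can be sketched the same way. Because Table 2 is not reproduced in this section, the codes for categories “2” through “5” below are our assumption, chosen to be consistent with the APP sequence described above; in particular, category “3” is coded [D, E, M] = [\(-\), 0, 1], so that the midpoint is reached from a non-extreme response regardless of direction. The generic helper `category_prob` (a hypothetical name) multiplies P(node = 1) or 1 − P(node = 1) along each path and skips nodes coded as missing; the coding table should be checked against Table 2 before reuse.

```python
import math
from typing import Dict, Optional, Tuple

NODES = ("D", "E", "M")  # APP order: direction, extremity, midpoint

# ASSUMPTION: codes for categories 2-5 inferred from the APP sequence (check against Table 2).
APP_CODING: Dict[int, Tuple[Optional[int], ...]] = {
    1: (0, 1, None),  # disagree, extreme; M not reached (matches the text)
    2: (0, 0, 0),     # disagree, not extreme, not midpoint
    3: (None, 0, 1),  # not extreme, midpoint; D treated as missing
    4: (1, 0, 0),     # agree, not extreme, not midpoint
    5: (1, 1, None),  # agree, extreme; M not reached
}


def phi(x: float) -> float:
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def category_prob(coding, node_probs: Dict[str, float]) -> float:
    """Multiply P(node = 1) or 1 - P(node = 1) along a category's path, skipping missing nodes."""
    prob = 1.0
    for node, code in zip(NODES, coding):
        if code is None:
            continue  # this node is not part of the category's path
        prob *= node_probs[node] if code == 1 else 1.0 - node_probs[node]
    return prob


def app_category_probs(theta, alpha, beta) -> Dict[int, float]:
    """Category probabilities under the APP tree with two-parameter probit nodes."""
    node_probs = {q: phi(alpha[q] * theta[q] - beta[q]) for q in NODES}
    return {cat: category_prob(code, node_probs) for cat, code in APP_CODING.items()}
```

For category “1”, `category_prob` reduces to the expression given above. Under this coding the five probabilities still sum to 1: the two extreme categories together receive P(E = 1), and the three non-extreme categories share 1 − P(E = 1).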

IR-Tree Approach to Personality Data

The strength of the IR-tree model is that it disentangles trait-based response processes (i.e., indifference, direction, and intensity) from a single latent trait and enables us to evaluate sequential decision-making steps in Likert-type responses, in contrast to the traditional IRT model, which allows only a single latent trait for a unidimensional scale (Böckenholt & Meiser, 2017; LaHuis et al., 2019; Lang et al., 2019; Zettler et al., 2016).

Despite the advantages offered by IR-tree models, only a few researchers have applied them to personality tests (LaHuis et al., 2019; Lang et al., 2019; Zettler et al., 2016). For example, Zettler et al. (2016) applied the three-process IR-tree model to HEXACO personality data and found that the indifference, direction, and intensity steps were distinct response processes. They also demonstrated that directional responding (query D) is associated with content-specific tendencies, whereas indifferent responding (query M) and extreme responding (query E) are largely related to person-specific tendencies. This result implies that query D reflects the latent trait tapped by the item content, whereas queries E and M vary with individual differences (e.g., response style, response motivation).

Later, LaHuis et al. (2019) extended Zettler et al.’s (2016) research by testing both the MPP model, which follows the original three-process order of indifference, direction, and intensity, and the APP model, which follows the alternative order of direction, intensity, and indifference. They found that the APP model fit the personality data better than the MPP model and concluded that the former is more appropriate in a personality assessment context. However, because their samples consisted entirely of job incumbents, LaHuis et al. (2019) noted that “our results may not generalize to applicant samples. We recommend that future research replicates our study using an applicant sample” (p. 1016). Therefore, the current literature may not fully capture the decision-making process of respondents who complete personality assessments in motivated test settings.

Recent studies indicate that faking is a continuous and quantitative variable, rather than a qualitatively differentiated reaction pattern (Robie et al., 2021; Ziegler et al., 2015). Given that IR-tree models treat latent traits associated with each decision-making stage as continuous and quantitative variables, they are a good methodological tool for examining the characteristics and patterns of personality faking in each response phase (Böckenholt & Meiser, 2017). Additionally, in the IR-tree models, the latent traits involved in each decision-making step can be combined with covariates (e.g., social desirability), allowing researchers to evaluate the differential impact of covariates on the latent traits involved in each decision-making step (e.g., Böckenholt, 2012, 2019; De Boeck & Partchev, 2012; Plieninger & Meiser, 2014; Zettler et al., 2016).

Research Questions

Based on these theoretical and empirical backgrounds, we formulated three research questions. First, recent research showed that the APP model fits personality assessment data better than the MPP model (LaHuis et al., 2019). As noted above, this finding was based on an incumbent sample, and it is not guaranteed that the same conclusion holds for motivated samples, such as job applicants or faking respondents. More research is needed to examine whether this result generalizes to motivated samples in high-stakes settings. Thus, the first research question is as follows:

Research Question 1 (RQ1): Does the APP model fit better than the MPP model under both honest and motivated test conditions?

Second, based on the findings of Zettler et al. (2016), directional responding is a content-specific tendency, whereas indifferent and extreme responding are person-specific tendencies. Overall, query D is generally strongly associated with raw scale scores, while the associations of queries M and E with scale scores vary across individuals. Böckenholt (2019) also supported these findings, showing that queries M and E “appear to be uncorrelated with the trait measure (scale scores), which supports the interpretation of these effects as response styles” (p. 494), whereas query D was strongly correlated with scale scores. These findings suggest that queries D, E, and M could vary across test conditions. For example, extreme responding (query E) may be strongly influenced by test motivation, and this influence could be especially pronounced in high-stakes decision making. To better understand the response process for Likert-type personality items in high-stakes settings, further research is required to examine how queries D, E, and M vary between honest and motivated test conditions. Thus, the second research question is as follows:

Research Question 2 (RQ2): How do the magnitudes and relationships of the queries D, E, and M vary between honest and motivated test conditions?

Third, prior personality IR-tree research has not examined the effect of social desirability on each response step in actual job applicant samples. Sun et al. (2021) recently applied the IR-tree model to real job applicants’ vocational interests (not personality), but they focused on faking detection rather than on the internal mechanism of decision making and the association of social desirability with each response decision-making step. By examining how social desirability affects the specific decision-making process of personality responses, we can deepen our understanding of the personality response process in high-stakes settings and develop more fake-resistant personality tests. In this vein, we formulated the third research question as follows:

Research Question 3 (RQ3): How does item-level social desirability affect the decision-making process of the queries D, E, and M under real job selection settings?

Method

Samples and Measures

In this study, we used two samples. The first sample consisted of 391 college students from a Korean university (50% men, average age = 20.9 years, standard deviation [SD] = 2.4 years) who volunteered through the university’s Psychology Research Participation System. For the Big Five Likert-type measure, we selected 100 items (5-point Likert) from the International Personality Item Pool (Goldberg, 1992) and constructed a Korean version through the standard translation and back-translation procedure (Brislin, 1970). Next, based on item discriminations, we selected 60 items (5-point Likert) assessing the Big Five dimensions, namely openness, conscientiousness, extraversion, agreeableness, and neuroticism, with each dimension measured by 12 single statements. We then administered the measure to the 391 students using a within-subject design, which allowed us to investigate differences in the decision-making process between honest and faking conditions while controlling for individual differences (e.g., Byle & Holtgraves, 2008). Half of the students completed the personality test under the “fake good” condition first (Time 1) and then under the “honest responding” condition 4 weeks later (Time 2), an interval intended to minimize practice effects. The other half completed the same test under the “honest responding” condition first, followed by the “fake good” condition 4 weeks later. Thus, the order of conditions was counterbalanced. In the honest condition, we asked students to answer by describing the way they truly see themselves and not how they wish to be seen by others. In the faking condition, we asked students to imagine themselves applying for their dream job (e.g., Mueller-Hanson et al., 2003).

We took the second sample from Anglim et al.’s (2017) open-source data (https://osf.io/9e3a9). This dataset was collected by a human resource consulting company in Australia. Job applicants for various positions completed personality tests as part of a personnel selection process, and the company collected their responses over several years. The company also collected research-only (i.e., nonapplicant) samples “as part of internal research principally designed at validating the psychometric properties of the personality measure” (Anglim et al., 2017, p. 672). The company obtained the applicant and research-only samples through a matching process to ensure comparability; specifically, stratified sampling produced similar distributions of age and gender. This achieved sample equivalence between the two samples (see Anglim et al., 2017 for details).

The final sample consisted of 1,613 real job applicants in a high-stakes setting and 1,613 research-only participants in a low-stakes setting. In the job applicant sample, 44% were male and the average age was 39.45 years (SD = 11.08). In the research-only sample, 54% were male and the average age was 45.16 years (SD = 11.51). We used the domain-level 60-item HEXACO-PI-R with a five-point Likert format, in which each of the six HEXACO dimensions (openness, conscientiousness, extraversion, agreeableness, emotionality, and honesty-humility) is measured by 10 items. Anglim et al. (2017) computed averaged standardized mean differences between the applicant and research-only samples across items and used them as indicators of social desirability. We used this social desirability index to investigate how social desirability affects the decision-making process of queries D, E, and M.
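The social desirability index rests on standardized mean differences between applicants and research-only participants. The sketch below illustrates the idea at the item level on toy data, assuming a pooled-SD Cohen’s d; Anglim et al. (2017) describe their exact computation, and the function names here are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence


def cohens_d(applicant: Sequence[float], research: Sequence[float]) -> float:
    """Standardized mean difference (applicant minus research-only), pooled-SD denominator.

    ASSUMPTION: pooled-SD Cohen's d; the original index may use a different standardizer.
    """
    n1, n2 = len(applicant), len(research)
    v1, v2 = statistics.variance(applicant), statistics.variance(research)
    pooled_sd = math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    return (statistics.fmean(applicant) - statistics.fmean(research)) / pooled_sd


def item_desirability(applicant_items: Dict[str, Sequence[float]],
                      research_items: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """One d per item; positive values mean applicants scored higher than research-only participants."""
    return {item: cohens_d(applicant_items[item], research_items[item])
            for item in applicant_items}
```

For example, `item_desirability({"C1": [3, 4, 5]}, {"C1": [1, 2, 3]})` gives `{"C1": 2.0}`, because the means differ by 2 and both groups have a variance of 1. Averaging such values across items yields a scale-level summary.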

Analytical Strategy

As a preliminary step, we identified careless responses and removed them from the analyses using the careless R package (Yentes & Wilhelm, 2021). Specifically, we used two indices, Mahalanobis distance and the longstring index, because they are known to be effective in identifying random and patterned answers (Ehlers et al., 2009; Johnson, 2005; Meade & Craig, 2012). For Mahalanobis distance, we flagged careless respondents using the mahad function with a confidence level of 0.99. For the longstring index, we computed values using the longstring function and removed respondents whose values exceeded the upper whisker (maximum value) of the boxplot. We evaluated careless responding separately for each scale. Overall, approximately 10% of each sample (the Korean sample and Anglim et al.’s [2017] open-source sample) was removed. Before fitting the MPP and APP models, we reverse-coded negatively keyed items to facilitate interpretation and transformed the raw scores into pseudo-items. We then fitted the two IR-tree models (i.e., MPP and APP models) using Mplus 7.2 (Muthén & Muthén, 2014).
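The screening and recoding steps can be approximated in a few lines of Python. This is a sketch, not the careless package’s implementation: `longstring` counts the longest run of identical consecutive answers, the boxplot rule is approximated by Tukey’s upper fence (Q3 + 1.5 × IQR, using NumPy’s percentile definition, which can differ slightly from R’s boxplot hinges), and the Mahalanobis cutoff is passed in explicitly. For a 0.99 criterion, one would typically use the 0.99 quantile of a chi-square distribution with as many degrees of freedom as items (e.g., via `scipy.stats.chi2.ppf(0.99, df)`), which we assume approximates how `mahad` flags respondents. All function names are illustrative.

```python
import numpy as np


def longstring(row) -> int:
    """Length of the longest run of identical consecutive responses in one row."""
    row = list(row)
    best = run = 1
    for prev, cur in zip(row, row[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


def upper_fence(values, coef: float = 1.5) -> float:
    """Tukey upper fence Q3 + coef * IQR (approximates the boxplot whisker rule)."""
    q1, q3 = np.percentile(values, [25, 75])
    return q3 + coef * (q3 - q1)


def mahalanobis_sq(x) -> np.ndarray:
    """Squared Mahalanobis distance of each respondent from the sample centroid."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean(axis=0)
    inv_cov = np.linalg.pinv(np.cov(x, rowvar=False))  # pinv guards against a singular covariance
    return np.einsum("ij,jk,ik->i", centered, inv_cov, centered)


def flag_careless(x, d2_cutoff: float) -> np.ndarray:
    """Flag respondents with outlying longstring values or large Mahalanobis distances."""
    x = np.asarray(x)
    ls = np.array([longstring(r) for r in x])
    return (ls > upper_fence(ls)) | (mahalanobis_sq(x) > d2_cutoff)


def reverse_code(x, n_points: int = 5) -> np.ndarray:
    """Reverse-key Likert responses: on a 5-point scale 1<->5, 2<->4, and 3 stays 3."""
    return (n_points + 1) - np.asarray(x)
```

In this sketch, screening would be run separately for each scale, keeping the rows where `flag_careless` is False; `reverse_code` would then be applied to negatively keyed items before the pseudo-item recoding.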

To answer RQ1, we compared the model-data fit of the APP and MPP models using the Akaike information criterion (AIC) and Bayesian information criterion (BIC), where lower values indicate better fit. Because IR-tree models are a recent and still-developing area of the IRT literature, they have some limitations from a psychometric perspective. LaHuis et al. (2019) highlighted this limitation as follows:

“One area of need is indices of model fit. In the present study, we used the AIC and BIC to compare models. We were not able to assess directly how well the models fit the data . . . We suspect indices of model fit will soon be developed for IR trees. (p. 1016)”

Unfortunately, this methodological issue has not yet been fully addressed, so we were unable to test the overall fit of the IR-tree models. Accordingly, following previous empirical research using IR-tree models, we compared the relative fit of the APP and MPP models using the AIC and BIC (e.g., Böckenholt, 2012, 2019; Böckenholt & Meiser, 2017; LaHuis et al., 2019; Zettler et al., 2016).

To investigate how the magnitudes and relationships of queries D, E, and M vary between honest and motivated samples (RQ2), we applied the multigroup IR-tree model. Plieninger (2020) recently proposed this multigroup version of the IR-tree model, which allows the item parameters to vary across groups or conditions. Thus, the item parameters and the means and covariances of the latent variables for queries D, E, and M can differ across groups while the scales are calibrated on the same metric. Following Plieninger (2020), the latent traits of the queries in group g follow a multivariate normal distribution, \(MVN(\boldsymbol{\mu}_{g}, \boldsymbol{\Sigma}_{g})\), where the latent mean vector of the first group (\(\boldsymbol{\mu}_{g=1}\)) is constrained to zero for scale identification. We fixed the latent means of the queries at zero for the nonmotivated samples (i.e., honest or research-only samples as the reference group) and freely estimated them for the motivated samples (i.e., faking or applicant respondents as the focal group). We then compared the means of the latent traits associated with each response process (i.e., queries D, E, and M) between the two test conditions.

To investigate how social desirability differentially affects the decision-making process of queries D, E, and M in real employee selection settings (RQ3), we incorporated the social desirability scores into the IR-tree model of the job applicant sample (Böckenholt, 2012). Specifically, we regressed queries D, E, and M on social desirability and examined the impact (i.e., significance and magnitude of the coefficients) of item-level social desirability on each response process. Our analyses followed Böckenholt and Meiser (2017) and Plieninger (2020). Example Mplus code is available in the online supplementary materials.

Results

For RQ1, we investigated the APP and the MPP models separately in both samples. Overall, the APP model fit slightly better than the MPP model across samples. For example, for the faking samples (see Table 3), the APP model for extraversion yielded slightly lower AIC and BIC values than the MPP model (AIC = 9,783 and BIC = 9,994 for the APP model vs. AIC = 9,786 and BIC = 9,997 for the MPP model). Similarly, for the honest sample, the APP model for extraversion still yielded slightly lower AIC and BIC values than the MPP (AIC = 10,950 and BIC = 11,161 for the APP model vs. AIC = 10,954 and BIC = 11,164 for the MPP model).

We observed similar patterns for the job applicant and research-only samples (see Table 4). For example, in the job applicant sample, the APP model for conscientiousness yielded slightly lower AIC and BIC values than the MPP model (AIC = 29,084 and BIC = 29,326 for the APP model vs. AIC = 29,095 and BIC = 29,338 for the MPP model). Likewise, in the research-only sample, the APP model for conscientiousness yielded slightly lower AIC and BIC values than the MPP model (AIC = 35,396 and BIC = 35,638 for the APP model vs. AIC = 35,411 and BIC = 35,653 for the MPP model).

Although the differences in AIC and BIC values between the models were small, both indices are relative fit measures for which a lower value indicates better fit. We therefore concluded that the APP model fit better than the MPP model under both honest and motivated test settings and used the APP model in subsequent analyses.
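The size of these differences can be made explicit from the reported values. The sketch below only does arithmetic on the AIC and BIC figures quoted above (the dictionary keys and helper names are hypothetical). It also computes BIC − AIC for each model: because AIC = 2k − 2 ln L and BIC = k ln n − 2 ln L, this gap equals k(ln n − 2), so near-identical gaps within a pair suggest, up to rounding, that the two trees have the same number of parameters and that the fit differences reflect differences in deviance.

```python
# (AIC, BIC) pairs as reported in the text, keyed by sample and trait.
fits = {
    "faking / extraversion": {"APP": (9783, 9994), "MPP": (9786, 9997)},
    "honest / extraversion": {"APP": (10950, 11161), "MPP": (10954, 11164)},
    "applicant / conscientiousness": {"APP": (29084, 29326), "MPP": (29095, 29338)},
    "research-only / conscientiousness": {"APP": (35396, 35638), "MPP": (35411, 35653)},
}


def deltas(pair):
    """Return (MPP - APP) differences in AIC and BIC; positive values favor APP."""
    app_aic, app_bic = pair["APP"]
    mpp_aic, mpp_bic = pair["MPP"]
    return mpp_aic - app_aic, mpp_bic - app_bic


def penalty_gap(aic_bic):
    """BIC - AIC = k * (ln(n) - 2); equal gaps imply equal parameter counts k."""
    aic, bic = aic_bic
    return bic - aic


for label, pair in fits.items():
    d_aic, d_bic = deltas(pair)
    print(f"{label}: dAIC={d_aic}, dBIC={d_bic}, "
          f"gap APP={penalty_gap(pair['APP'])}, gap MPP={penalty_gap(pair['MPP'])}")
```

On these figures, every difference favors the APP model, and the differences in the two Australian samples (11 to 15 points) are larger than those in the experimental samples (3 to 4 points).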

To address RQ2, we fitted multigroup IR-tree models comparing the honest and motivated samples. First, as shown in Table 5, participants in the faking condition showed significantly higher latent means of direction (query D) than those in the honest condition on agreeableness (m = 0.701, p < 0.001), conscientiousness (m = 1.054, p < 0.001), extraversion (m = 0.749, p < 0.001), and openness (m = 0.657, p < 0.001), and a significantly lower latent mean on neuroticism (m = -0.774, p < 0.001). Because the means of queries D, E, and M for the honest sample were fixed at 0 for identification, the values of m can be interpreted as latent mean differences between the samples.

These findings indicate that the faking sample selected more positive directional responses (query D) than the honest sample on agreeableness, conscientiousness, extraversion, and openness, but more negative directional responses on neuroticism. In addition, the latent means of query E in the faking sample were significantly higher than those in the honest sample on agreeableness (m = 0.243, p < 0.01) and conscientiousness (m = 0.422, p < 0.001), indicating that the faking sample responded more extremely on these two traits than did the honest sample. There were no significant differences in query E for extraversion, openness, and neuroticism. For query M, the faking sample showed a significantly lower preference for midpoint selection than the honest sample on agreeableness (m = -0.368, p < 0.001), conscientiousness (m = -0.545, p < 0.001), extraversion (m = -0.195, p < 0.001), and openness (m = -0.192, p < 0.001).

The real job applicant sample showed similar patterns. As shown in Table 6, the job applicant sample showed significantly higher latent means of directional preference (query D) than the research-only sample on agreeableness (m = 1.412, p < 0.001), conscientiousness (m = 1.092, p < 0.001), extraversion (m = 0.860, p < 0.001), openness (m = 0.313, p < 0.001), and honesty-humility (m = 1.154, p < 0.001), but a lower latent mean on neuroticism (m = -0.202, p < 0.001). For query E, the job applicant sample showed a significantly stronger tendency than the research-only sample to select the extreme response categories on agreeableness (m = 0.557, p < 0.01), conscientiousness (m = 0.549, p < 0.001), extraversion (m = 0.338, p < 0.001), openness (m = 0.216, p < 0.001), and honesty-humility (m = 0.603, p < 0.001), but a weaker tendency on neuroticism (m = -0.125, p < 0.05). For query M, the job applicant sample showed a significantly lower preference for midpoint selection than the research-only sample on agreeableness (m = -0.323, p < 0.001), conscientiousness (m = -0.721, p < 0.001), extraversion (m = -0.542, p < 0.001), openness (m = -0.138, p < 0.01), and honesty-humility (m = -0.406, p < 0.001), but a higher preference on neuroticism (m = 0.284, p < 0.001).

We also note that the mean differences in queries D, E, and M were often more pronounced between the job applicant and research-only samples than between the experimental samples. This was most consistent for query E: the applicant differences exceeded the faking-sample differences on agreeableness and conscientiousness and were significant on traits for which the faking sample showed none. The pattern was not uniform, however; for query D, the differences on openness and neuroticism were smaller among applicants, as were the query M differences on agreeableness and openness.
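The relative size of the setting differences can be checked directly against the latent mean differences reported for Tables 5 and 6. The sketch below is a simple tally, not a formal test (no standard errors are used), and the dictionary and function names are hypothetical. For each query, it lists the traits on which the applicant difference exceeds the faking difference in absolute value. The nonsignificant faking-sample query E differences were not reported and are left out, and honesty-humility has no experimental counterpart in the reported results, so it drops out of the comparison.

```python
# Latent mean differences (focal minus reference) as reported in the text.
faking = {
    "D": {"Agr": 0.701, "Con": 1.054, "Ext": 0.749, "Opn": 0.657, "Neu": -0.774},
    "E": {"Agr": 0.243, "Con": 0.422},  # other traits nonsignificant, not reported
    "M": {"Agr": -0.368, "Con": -0.545, "Ext": -0.195, "Opn": -0.192},
}
applicant = {
    "D": {"Agr": 1.412, "Con": 1.092, "Ext": 0.860, "Opn": 0.313, "Neu": -0.202, "HH": 1.154},
    "E": {"Agr": 0.557, "Con": 0.549, "Ext": 0.338, "Opn": 0.216, "Neu": -0.125, "HH": 0.603},
    "M": {"Agr": -0.323, "Con": -0.721, "Ext": -0.542, "Opn": -0.138, "Neu": 0.284, "HH": -0.406},
}


def larger_in_applicants(query):
    """Traits reported in both settings where |m| is larger for applicants."""
    shared = faking[query].keys() & applicant[query].keys()
    return sorted(t for t in shared if abs(applicant[query][t]) > abs(faking[query][t]))


for q in ("D", "E", "M"):
    print(q, larger_in_applicants(q))
```

On these numbers, applicants show the larger difference for query D on agreeableness, conscientiousness, and extraversion; for query E on agreeableness and conscientiousness; and for query M on conscientiousness and extraversion.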

To answer RQ3, we incorporated the social desirability ratings into the IR-tree model of the job applicant sample as a covariate. Table 7 shows the effects of social desirability on queries D, E, and M in the job applicant sample across personality traits. Social desirability had positive effects on directional preference (query D) for agreeableness (β = 0.646, p < 0.001), conscientiousness (β = 0.351, p < 0.001), extraversion (β = 0.539, p < 0.001), openness (β = 0.376, p < 0.001), and honesty-humility (β = 0.335, p < 0.001), but a negative effect for neuroticism (β = -0.267, p < 0.001). In addition, social desirability had positive effects on extreme response preference (query E) across all six dimensions: agreeableness (β = 0.628, p < 0.001), conscientiousness (β = 0.577, p < 0.001), extraversion (β = 0.588, p < 0.001), openness (β = 0.243, p < 0.001), neuroticism (β = 0.215, p < 0.001), and honesty-humility (β = 0.587, p < 0.001). These results show that higher social desirability significantly strengthened job applicants’ directional preferences (toward disagreement for neuroticism) and their extreme response preferences across all personality traits in a real selection setting. The effect of social desirability was much stronger on query E than on query D for conscientiousness (β = 0.577 vs. 0.351) and honesty-humility (β = 0.587 vs. 0.335).

Finally, social desirability had negative effects on the preference for midpoint selection (query M) across all six dimensions: agreeableness (β = -0.575, p < 0.001), conscientiousness (β = -0.533, p < 0.001), extraversion (β = -0.527, p < 0.001), openness (β = -0.342, p < 0.001), neuroticism (β = -0.248, p < 0.001), and honesty-humility (β = -0.327, p < 0.001). These results indicate that job applicants higher in social desirability tended to avoid the middle category, consistent with their stronger directional and extreme response preferences.

Discussion

An overarching goal of this study was to reveal the internal mechanism explaining how test-takers in different test environments experience decision-making processes, using the three-process IR-tree model (Böckenholt, 2012). The main findings are as follows. First, our results generally support the APP model (i.e., the direction–extremity–midpoint sequence) under both honest and motivated test settings (RQ1). Second, samples in the motivated test settings (i.e., faking and job applicant samples) generally showed stronger directional and extreme response preferences, but a weaker preference for midpoint selection, than samples in the nonmotivated test settings (i.e., honest and research-only samples) (RQ2). Third, for the job applicant sample, social desirability had significant effects on all three queries D, E, and M. A higher level of social desirability led to more positive directional responses (negative for neuroticism) and to more extreme responses across all personality traits, including neuroticism. In addition, as social desirability increased, job applicants tended to avoid endorsing middle-category responses (RQ3).

Theoretical Implications of the Current Study

These findings help deepen our understanding of personality faking. Although researchers have proposed theoretical models to explain the decision-making process for Likert-type items under motivated testing conditions (e.g., Ziegler, 2011), these models have rarely been tested. Our study generally supports Ziegler’s theoretical framework. First, Ziegler’s model begins with the processes of comprehension and importance classification. After the item content is interpreted and evaluated, respondents are assumed to classify the item as important or unimportant. In this process, respondents may decide whether they agree or disagree with the item: if they deem the item content important for presenting their personality characteristics, they would “agree”; otherwise, they would “disagree.” This process is therefore closely related to the query D step. Because Ziegler (2011) suggested that decision making can vary with situational demands (e.g., testing conditions), faking may first occur at the query D step under motivated test conditions. That is, if test-takers evaluate an item as important in a high-stakes situation, they would respond to it in a more socially desirable way to create a favorable impression, and faking would occur; if they evaluate the item as unimportant given the situational demand, they would not fake. Our results support this viewpoint: the latent means of query D differed significantly, in the socially desirable direction, for the faking and job applicant samples relative to the honest and research-only samples.

Second, Ziegler’s retrieval/judgment step (i.e., recalling memories and evaluating one’s preference) corresponds to the query E step, which involves the intensity of agreement or disagreement. Depending on situational factors (e.g., testing conditions), respondents may exaggerate self-ratings of positive personality characteristics or downplay self-ratings of negative ones in the judgment step. In motivated test settings, a second faking response may therefore occur at the query E step. Highly motivated respondents can first distort their answers in terms of directional response preference (e.g., changing from a negative to a positive attitude or opinion) and then shift their answers to a more extreme level (e.g., changing from “4-agree” to “5-strongly agree”). This two-step process assumption (i.e., faking occurs sequentially at both D and E) has also been proposed by previous researchers (Holtgraves, 2004; Leary & Kowalski, 1990; Leng et al., 2020). Most recently, for example, Leng et al. (2020) introduced “the retrieve-deceive-transfer (RDT) model that involves three latent traits: (1) information retrieval from knowledge, (2) the tendency to deceive by the use of socially desirable information, and (3) the tendency to perform transfer selection” (p. 58). They suggested that respondents may first distort the retrieved information in a socially desirable way to generate an initial opinion (e.g., agree or disagree) and then “transfer the selection from their initial opinion to other more favorable options to gain more acceptance” (p. 58). Our findings also support the two-step process assumption, indicating that, under motivated test settings, social desirability can affect not only how information is retrieved to form an initial opinion but also how that opinion is expressed in the final evaluation stage.

However, the faking response process may not be the same for all test-takers in motivated test settings. Some test-takers may fake through a two-step process, whereas others may fake through a one-step process (i.e., faking occurs only at D or only at E). Our findings are consistent with this possibility. For participants in the experimental faking condition, the latent means of directional response preference (query D) shifted significantly in the socially desirable direction on all five traits. In contrast, the latent means of extreme response preference (query E) were inflated only for agreeableness and conscientiousness, with mean differences much smaller than those for query D, and did not differ significantly for extraversion, openness, and neuroticism. Overall, this finding suggests that the experimental faking sample was more likely to engage in a one-step faking process focused on directional response preference (query D) than on extremity preference (query E). We found a different pattern for the actual job applicant sample, which showed significant mean differences for both queries D and E across all personality traits. This result suggests that actual job applicants were more likely to experience the two-step faking process via a sequence of D and E steps.

Furthermore, the job applicant sample showed stronger extreme response preferences (query E) than the experimental faking sample on most personality traits. This result is somewhat surprising in light of previous studies suggesting that the degree of faking in real job selection settings is generally considerably smaller than that found in experimental faking studies (Griffith et al., 2007; Griffith & Chet, 2013). One possible explanation is that our instructional set did not strongly motivate the experimental faking sample: we simply asked participants to imagine applying for their “dream job.” Because individuals may interpret “dream job” differently, the instruction may not have been enough to trigger the test motivation of a specific high-stakes situation. The divergent query E results for the real job applicant and experimental faking samples may suggest that situational demands (e.g., job selection settings) affect query E differently. That is, query E may be strongly influenced by an individual’s test motivation in a high-stakes context (Böckenholt, 2019; Zettler et al., 2016). However, this finding cannot be generalized from a single study, and more research is needed to validate it in both experimental and real job applicant settings. Future researchers should also examine how individual factors (e.g., narcissism, locus of control, self-monitoring) and situational factors (e.g., job market, job type, job characteristics) influence the faking response process associated with queries D and E (e.g., a two-step vs. one-step faking process).

Finally, our results showed how respondents in different settings treated the middle category. Ziegler (2011) proposed that respondents with a high level of retrieval/judgment would avoid selecting a middle category in the mapping stage. This final stage corresponds to the query M step in the APP model. In our results, respondents in the motivated test settings preferred extreme responses and tended to avoid middle-category responses. Taken together, our findings support Ziegler’s theoretical framework of the response process under motivated test conditions and extend LaHuis et al.’s (2019) recent finding (i.e., that the APP model fits personality data better than the MPP model) to faking and job applicant contexts.

Practical Considerations for Fake-Resistant Personality Tests

As noted by Böckenholt (2019), “it cannot be assumed that the response process of a person is independent of the scale format selected. Instead, it seems more likely that the scale format can influence the response process in systematic ways” (p. 496). In this vein, our findings have further implications for the response format of personality tests. This research focused on the five-point Likert scale, and its findings suggest that the Likert-type format is susceptible to faking in the response processes involving direction (query D) and extremity (query E) under motivated test conditions. Given these findings, alternative response formats should be considered to reduce faking. One promising strategy is to use a multidimensional forced-choice (MFC) format, which is known to be more fake-resistant than the Likert-type format (Cao & Drasgow, 2019; Lee et al., 2019; Lee & Joo, 2021; Martinez Gómez & Salgado, 2021; Wetzel et al., 2020).

Unlike the Likert-type format, the MFC format presents multiple statements with similar levels of social desirability within a block. Respondents are then asked to choose the “most like me” statement or to rank the statements from “most like me” to “least like me.” The two formats differ fundamentally in the cognitive processes of perceiving and selecting responses. The Likert-type format assumes that individuals make an absolute judgment to evaluate their level of (dis)agreement and decide the best response option for each single statement. By contrast, the MFC format assumes that respondents make a comparative judgment among the statements within an MFC item and choose or rank them according to their preference (Lee & Joo, 2021). This underlying difference may influence the response process in high-stakes settings; response formats based on comparative judgment could be more fake-resistant because such judgments do not involve an extremity (E) step.

Despite the merits of MFC formats in reducing faking, test-takers’ negative reactions to MFC (e.g., high cognitive load, test anxiety, lack of choice) have been a concern (e.g., Bowen et al., 2002; Converse et al., 2008). To address the perceived lack of choice in the MFC format, a graded preference format has recently been proposed as a modified version of the MFC format (Brown & Maydeu-Olivares, 2018; Dalal et al., 2021). In the graded preference format, a respondent is asked to “express the extent of their preference for one item or the other using several graded categories” (Brown & Maydeu-Olivares, 2018, p. 517). An example of the graded preference format follows, in which Statement A and Statement B measure different latent traits.

| | Much more | Slightly more | About the same | Slightly more | Much more | |
|---|---|---|---|---|---|---|
| Statement A | | | | | | Statement B |

This response format involves a two-step decision-making process. In the first step, respondents are forced to choose which of the two statements, and thus which latent trait, they prefer. In the second step, they decide the extent of their preference using graded categories, similar to the query E step. Recent research showed that the graded preference format could improve test-taker reactions by giving respondents more opportunities to present themselves through graded rating categories (Dalal et al., 2021). However, we are concerned about the fake resistance of the graded preference format in high-stakes settings, because our results showed that motivated samples inflated their latent means of query E (extremity preference): the job applicant sample on every trait except neuroticism, and the faking sample on agreeableness and conscientiousness. Test-takers may therefore still be able to fake by inflating the extent of their preference in the graded categories, for example, by intentionally choosing “much more” rather than “slightly more” to be perceived more favorably. To our knowledge, there is as yet no empirical evidence on whether this format is fakable, so future researchers should examine its effectiveness.

Additionally, our work has implications for response process models, such as the dominance and ideal point models. Our research focused mainly on the five-point Likert-type scale, which was developed under the dominance assumption. The dominance model postulates that the probability of endorsing an item increases as the respondent’s latent trait increases, implying a monotonically increasing relationship between endorsement probability and the latent trait. By contrast, the ideal point model presupposes that individuals evaluate both their own latent trait level and the item’s location when responding. Individuals would be less inclined to endorse the item if their true latent trait were located far below or far above the item’s location. As a result, the ideal point model implies an inverted-U relationship between endorsement probability and the latent trait.
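The contrast between the two response assumptions can be sketched numerically. The code below is an illustration under simplifying assumptions: the dominance curve is a two-parameter logistic function, and the ideal point curve is a simple Gaussian-shaped function of the distance between θ and the item location, not the generalized graded unfolding model (GGUM) typically used for ideal point measures. The function names and parameter values (`a`, `b`, `delta`, `width`) are hypothetical.

```python
import math


def dominance_prob(theta: float, a: float = 1.5, b: float = 0.0) -> float:
    """2PL-style dominance curve: endorsement rises monotonically with theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def ideal_point_prob(theta: float, delta: float = 0.0, width: float = 1.0) -> float:
    """Simplified ideal point curve (Gaussian-shaped, NOT the full GGUM).

    Endorsement peaks when theta equals the item location delta and falls
    off on both sides, giving an inverted-U shape.
    """
    return math.exp(-((theta - delta) ** 2) / (2.0 * width ** 2))


thetas = [-3, -2, -1, 0, 1, 2, 3]
dom = [dominance_prob(t) for t in thetas]
ideal = [ideal_point_prob(t) for t in thetas]

for t, d, i in zip(thetas, dom, ideal):
    print(f"theta={t:+d}  dominance={d:.3f}  ideal point={i:.3f}")
```

With `delta = 0`, the ideal point item behaves like an intermediate item: respondents at both ends of the continuum are unlikely to endorse it, so no single pole is obviously the “better” answer, whereas the dominance item always rewards a higher trait level.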

An important difference between the dominance and ideal point response assumptions lies in the use of intermediate items located in the middle of the latent continuum. Ideal point-based personality measures generally include large proportions of intermediate items (e.g., 60% intermediate items; Cho et al., 2015; Harris et al., 2021). As noted by Harris et al. (2021), “faking requires identifying and responding consistent with the socially desirable pole of a given trait” (p. 517). Because dominance model-based personality measures include only positively or negatively worded items that indicate a clear trait direction, the direction of all items and corresponding latent traits is transparent, and faking can easily be carried out by inflating the latent traits of queries D and E. By contrast, respondents may have difficulty faking ideal point-based items because such items do not indicate an obvious direction of the latent trait. LaPalme et al. (2017) recently found that respondents regarded an ideal point-based measure as more difficult to fake than dominance-based measures. Thus, respondents may have difficulty determining direction (query D) and extremity (query E) for a large number of intermediate items, which may help reduce faking in high-stakes settings. In this vein, ideal point-based personality measures may be more effective than dominance-based measures at reducing faking. Future researchers should investigate how the response processes for dominance-based and ideal point-based personality items differ in terms of direction (query D), midpoint (query M), and extremity (query E) between honest and motivated testing conditions.

Limitations

This study has several limitations. Although our model comparison generally supported the APP model, the AIC and BIC differences between the MPP and APP models were small, so the statistical support for the APP model is not unambiguous. Nevertheless, from an empirical and theoretical standpoint, the APP model, with its sequence of direction, extremity, and midpoint, would make more sense because test-takers in motivated test settings are likely to first evaluate whether they agree or disagree with item content in order to make a socially desirable impression. Further study is required to establish the APP model’s superiority across various test situations. We also limited our analysis to Korean and Australian samples, so our results may not generalize to other cultures or nations; cross-cultural studies should examine whether the APP model suits the personality response process under motivated test settings. Additionally, although we used the recently developed IR-tree model to explore the internal processes behind personality item responses, the IR-tree model is essentially a variant of the traditional IRT model obtained through response recoding (i.e., pseudo-item transformation). The IR-tree model therefore assumes that all respondents choosing a given response category (e.g., “5” strongly agree) engage in an identical response process. This assumption may be too strict, because respondents endorsing the same category may follow different response processes owing to various situational and individual factors. Finally, IR-tree models are limited in their ability to establish causal relationships among the mental processes involved in personality faking, so other methods, such as think-aloud protocols, remain valuable for understanding the faking process.
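The pseudo-item transformation can be illustrated with a minimal sketch. It assumes the midpoint-first three-process tree of Böckenholt (2012); the APP ordering favored in this study assigns the nodes in a different sequence and is not reproduced here, and the function name `boeckenholt_recode` is hypothetical. The point of the example is the limitation noted above: the mapping is deterministic, so every respondent who chooses, say, “5” receives exactly the same pseudo-item pattern, whatever process actually produced the answer.

```python
from typing import Optional, Tuple

# (M, D, E) pseudo-items; None marks a node the respondent never reached.
PseudoItems = Tuple[Optional[int], Optional[int], Optional[int]]


def boeckenholt_recode(response: int) -> PseudoItems:
    """Recode a 1-5 Likert response into (M, D, E) pseudo-items.

    Midpoint-first tree (Böckenholt, 2012):
      M: 1 if the midpoint (3) was chosen, else 0
      D: 1 for agreement (4, 5), 0 for disagreement (1, 2), missing for 3
      E: 1 for an extreme category (1, 5), 0 for 2 or 4, missing for 3
    """
    if response not in (1, 2, 3, 4, 5):
        raise ValueError(f"expected a response in 1..5, got {response!r}")
    if response == 3:
        # Choosing the midpoint ends the tree: D and E are structurally missing.
        return 1, None, None
    d = 1 if response >= 4 else 0
    e = 1 if response in (1, 5) else 0
    return 0, d, e


for r in range(1, 6):
    print(r, boeckenholt_recode(r))
```

Each pseudo-item column is then modeled with its own latent trait (M, D, or E), which is how a single Likert response is decomposed into separate response processes.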

Conclusion

Earlier research on personality faking has concentrated mainly on mean differences in scale scores across personality characteristics to assess faking effects (e.g., Birkeland et al., 2006; Byle & Holtgraves, 2008; Griffith et al., 2007; Hough et al., 1990; Lee et al., 2017; Stark et al., 2001; Viswesvaran & Ones, 1999). Our study is the first to disentangle trait-based response processes (i.e., indifference, direction, and intensity) from a single latent trait and to investigate the decision-making process for Likert-type personality items under honest and motivated testing conditions. We hope our findings help researchers and practitioners better understand the decision-making process of applicants who take personality tests in high-stakes environments and develop more effective fake-resistant personality assessments.

References

Anderson, N., Salgado, J. F., & Hülsheger, U. R. (2010). Applicant reactions in selection: Comprehensive meta-analysis into reaction generalization versus situational specificity. International Journal of Selection and Assessment, 18, 291–304.

Anglim, J., Morse, G., De Vries, R. E., MacCann, C., Marty, A., & Mõttus, R. (2017). Comparing job applicants to non-applicants using an item-level bifactor model on the HEXACO personality inventory. European Journal of Personality, 31, 669–684.

Arthur, W., Jr., Glaze, R. M., Villado, A. J., & Taylor, J. E. (2010). The magnitude and extent of cheating and response distortion effects on unproctored internet-based tests of cognitive ability and personality. International Journal of Selection and Assessment, 18, 1–16.

Barrick, M. R., Mount, M. K., & Judge, T. A. (2001). Personality and performance at the beginning of the new millennium: What do we know and where do we go next? International Journal of Selection and Assessment, 9, 9–30.

Birkeland, S. A., Manson, T. M., Kisamore, J. L., Brannick, M. T., & Smith, M. A. (2006). A meta-analytic investigation of job applicant faking on personality measures. International Journal of Selection and Assessment, 14, 317–335.

Böckenholt, U. (2012). Modeling multiple response processes in judgment and choice. Psychological Methods, 17, 665–678.

Böckenholt, U. (2019). Contextual responses to affirmative and/or reversed-worded items. Psychometrika, 84, 986–999.

Böckenholt, U., & Meiser, T. (2017). Response style analysis with threshold and multi-process IRT models: A review and tutorial. British Journal of Mathematical and Statistical Psychology, 70, 159–181.

Bowen, C. C., Martin, B. A., & Hunt, S. T. (2002). A comparison of ipsative and normative approaches for ability to control faking in personality questionnaires. International Journal of Organizational Analysis, 10, 240–259.

Brislin, R. W. (1970). Back-translation for cross-cultural research. Journal of Cross-Cultural Psychology, 1, 185–216.

Brown, A., & Maydeu-Olivares, A. (2018). Ordinal factor analysis of graded-preference questionnaire data. Structural Equation Modeling: A Multidisciplinary Journal, 25, 516–529.

Byle, K. A., & Holtgraves, T. M. (2008). Integrity testing, personality, and design: Interpreting the Personnel Reaction Blank. Journal of Business and Psychology, 22, 287–295.

Cao, M., & Drasgow, F. (2019). Does forcing reduce faking? A meta-analytic review of forced-choice personality measures in high-stakes situations. Journal of Applied Psychology, 104, 1347–1368.

Cho, S., Drasgow, F., & Cao, M. (2015). An investigation of emotional intelligence measures using item response theory. Psychological Assessment, 27, 1241–1252.

Converse, P. D., Oswald, F. L., Imus, A., Hedricks, C., Roy, R., & Butera, H. (2008). Comparing personality test formats and warnings: Effects on criterion-related validity and test-taker reactions. International Journal of Selection and Assessment, 16, 155–169.

Cucina, J. M., Vasilopoulos, N. L., Su, C., Busciglio, H. H., Cozma, I., DeCostanza, A. H., … Shaw, M. N. (2019). The effects of empirical keying of personality measures on faking and criterion-related validity. Journal of Business and Psychology, 34, 337–356.

Dalal, D. K., Zhu, X. S., Rangel, B., Boyce, A. S., & Lobene, E. (2021). Improving applicant reactions to forced-choice personality measurement: Interventions to reduce threats to test takers’ self-concepts. Journal of Business and Psychology, 36, 55–70.

De Boeck, P., & Partchev, I. (2012). IRTrees: Tree-based item response models of the GLMM family. Journal of Statistical Software, 48, 1–28.

Donovan, J. J., Dwight, S. A., & Schneider, D. (2014). The impact of applicant faking on selection measures, hiring decisions, and employee performance. Journal of Business and Psychology, 29, 479–493.

Ehlers, C., Greene-Shortridge, T. M., Weekley, J. A., & Zaiack, M. D. (2009). The exploration of statistical methods in detecting random responding. Poster session presented at the annual meeting for the Society for Industrial/Organizational Psychology, Atlanta, GA.

Ellingson, J. E., & McFarland, L. A. (2011). Understanding faking behavior through the lens of motivation: An application of VIE theory. Human Performance, 24, 322–337.

Ellingson, J. E., Smith, D. B., & Sackett, P. R. (2001). Investigating the influence of social desirability on personality factor structure. Journal of Applied Psychology, 86, 122–133.

Fell, C. B., & König, C. J. (2016). Cross-cultural differences in applicant faking on personality tests: A 43-nation study. Applied Psychology, 65, 671–717.

Fine, S., & Pirak, M. (2016). Faking fast and slow: Within-person response time latencies for measuring faking in personnel testing. Journal of Business and Psychology, 31, 51–64.

Goldberg, L. R. (1992). The development of markers for the Big-Five factor structure. Psychological Assessment, 4, 26–42.

Griffith, R. L., & Chet, R. (2013). Personality testing and the “F-word.” In Handbook of personality at work (pp. 253–277).

Griffith, R. L., & Converse, P. D. (2012). The rules of evidence and the prevalence of applicant faking. In M. Ziegler, C. MacCann, & R. D. Roberts (Eds.), New perspectives on faking in personality assessment (pp. 34–52). Oxford University Press.

Griffith, R. L., Chmielowski, T., & Yoshita, Y. (2007). Do applicants fake? An examination of the frequency of applicant faking behavior. Personnel Review, 36, 341–355.

Griffith, R. L., Lee, L. M., Peterson, M. H., & Zickar, M. J. (2011). First dates and little white lies: A trait contract classification theory of applicant faking behavior. Human Performance, 24, 338–357.

Grubb, W. L., III, & McDaniel, M. A. (2007). The fakability of Bar-On’s emotional quotient inventory short form: Catch me if you can. Human Performance, 20, 43–59.

Harris, A. M., McMillan, J. T., & Carter, N. T. (2021). Test-taker reactions to ideal point measures of personality. Journal of Business and Psychology, 36, 513–532.

Hauenstein, N. M., Bradley, K. M., O’Shea, P. G., Shah, Y. J., & Magill, D. P. (2017). Interactions between motivation to fake and personality item characteristics: Clarifying the process. Organizational Behavior and Human Decision Processes, 138, 74–92.

Holtgraves, T. (2004). Social desirability and self-reports: Testing models of socially desirable responding. Personality and Social Psychology Bulletin, 30, 161–172.

Holtrop, D., Hughes, A. W., Dunlop, P. D., Chan, J., & Steedman, G. (2020). Do social desirability scales measure dishonesty? A think-aloud study. European Journal of Psychological Assessment. Advance online publication. https://doi.org/10.1027/1015-5759/a000607

Hough, L., & Dilchert, S. (2017). Personality: Its measurement and validity for employee selection. In Handbook of employee selection (pp. 298–325). Routledge.

Hough, L. M., Eaton, N. K., Dunnette, M. D., Kamp, J. D., & McCloy, R. A. (1990). Criterion-related validities of personality constructs and the effect of response distortion on those validities. Journal of Applied Psychology, 75, 581–595.

Hough, L. M., Oswald, F. L., & Ployhart, R. E. (2001). Determinants, detection and amelioration of adverse impact in personnel selection procedures: Issues, evidence and lessons learned. International Journal of Selection and Assessment, 9, 152–194.

Johnson, J. A. (2005). Ascertaining the validity of individual protocols from web-based personality inventories. Journal of Research in Personality, 39, 103–129.

Judge, T. A., Bono, J. E., Ilies, R., & Gerhardt, M. W. (2002). Personality and leadership: A qualitative and quantitative review. Journal of Applied Psychology, 87, 765–780.

Komar, S., Komar, J. A., Robie, C., & Taggar, S. (2010). Speeding personality measures to reduce faking. Journal of Personnel Psychology, 9, 126–137.

Krosnick, J. A. (1999). Survey research. Annual Review of Psychology, 50, 537–567.

Krosnick, J. A., & Fabrigar, L. R. (1998). Designing good questionnaires: Insights from psychology. Oxford University Press.

Kuncel, N. R., & Borneman, M. J. (2007). Toward a new method of detecting deliberately faked personality tests: The use of idiosyncratic item responses. International Journal of Selection and Assessment, 15, 220–231.

Lang, J. W., Lievens, F., De Fruyt, F., Zettler, I., & Tackett, J. L. (2019). Assessing meaningful within-person variability in Likert-scale rated personality descriptions: An IRT tree approach. Psychological Assessment, 31, 474–487.

LaHuis, D. M., Blackmore, C. E., Bryant-Lees, K. B., & Delgado, K. (2019). Applying item response trees to personality data in the selection context. Organizational Research Methods, 22, 1007–1018.

LaPalme, M., Tay, L., & Wang, W. (2017). A within-person examination of the ideal-point response process. Psychological Assessment, 30, 567–581.

Leary, M. R., & Kowalski, R. M. (1990). Impression management: A literature review and two-component model. Psychological Bulletin, 107, 34–47.

Lee, P., & Joo, S. H. (2021). A new investigation of fake resistance of a multidimensional forced-choice measure: An application of differential item/test functioning. Personnel Assessment and Decisions, 7, 31–38.

Lee, P., Mahoney, K. T., & Lee, S. (2017). An application of the exploratory structural equation modeling framework to the study of personality faking. Personality and Individual Differences, 119, 220–226.

Lee, P., Joo, S.-H., & Fyffe, S. (2019). Investigating faking effects on the construct validity through the Monte Carlo simulation study. Personality and Individual Differences, 150, 109491.

Marcus, B. (2009). ‘Faking’ from the applicant’s perspective: A theory of self-presentation in personnel selection settings. International Journal of Selection and Assessment, 17, 417–430.

Martinez Gómez, A., & Salgado, J. F. (2021). A meta-analysis of the faking resistance of forced-choice personality inventories. Frontiers in Psychology, 4273.

McFarland, L. A., & Ryan, A. M. (2000). Variance in faking across noncognitive measures. Journal of Applied Psychology, 85, 812–821.

Meade, A. W., & Craig, S. B. (2012). Identifying careless responses in survey data. Psychological Methods, 17, 437–455.

Meade, A. W., Pappalardo, G., Braddy, P. W., & Fleenor, J. W. (2020). Rapid response measurement: Development of a faking-resistant assessment method for personality. Organizational Research Methods, 23, 181–207.

Mueller-Hanson, R., Heggestad, E. D., & Thornton, G. C., III. (2003). Faking and selection: Considering the use of personality from select-in and select-out perspectives. Journal of Applied Psychology, 88, 348–355.

Muthén, L. K., & Muthén, B. O. (1998–2014). Mplus user’s guide (7th ed.). Muthén & Muthén.

O’Brien, E., & LaHuis, D. M. (2011). Do applicants and incumbents respond to personality items similarly? A comparison of dominance and ideal point response models. International Journal of Selection and Assessment, 19, 109–118.

Ones, D. S., Viswesvaran, C., & Reiss, A. D. (1996). Role of social desirability in personality testing for personnel selection: The red herring. Journal of Applied Psychology, 81, 660–679.

Pavlov, G., Maydeu-Olivares, A., & Fairchild, A. J. (2019). Effects of applicant faking on forced-choice and Likert scores. Organizational Research Methods, 22, 710–739.

Peterson, M. H., Griffith, R. L., Isaacson, J. A., O’Connell, M. S., & Mangos, P. M. (2011). Applicant faking, social desirability, and the prediction of counterproductive work behaviors. Human Performance, 24, 270–290.

Plieninger, H. (2020). Developing and applying IR-tree models: Guidelines, caveats, and an extension to multiple groups. Organizational Research Methods. Advance online publication. https://doi.org/10.1177/1094428120911096.

Plieninger, H., & Meiser, T. (2014). Validity of multiprocess IRT models for separating content and response styles. Educational and Psychological Measurement, 74, 875–899.

Ployhart, R. E., & Holtz, B. C. (2008). The diversity–validity dilemma: Strategies for reducing racioethnic and sex subgroup differences and adverse impact in selection. Personnel Psychology, 61, 153–172.

Robie, C., Brown, D. J., & Beaty, J. C. (2007). Do people fake on personality inventories? A verbal protocol analysis. Journal of Business and Psychology, 21, 489–509.

Robie, C., Risavy, S. D., Jacobs, R. R., Christiansen, N. D., König, C. J., & Speer, A. B. (2021). An updated survey of beliefs and practices related to faking in individual assessments. International Journal of Selection and Assessment, 1–7.

Roulin, N., & Krings, F. (2020). Faking to fit in: Applicants’ response strategies to match organizational culture. Journal of Applied Psychology, 105, 130–145.

Roulin, N., Krings, F., & Binggeli, S. (2016). A dynamic model of applicant faking. Organizational Psychology Review, 6, 145–170.

Sackett, P. R., & Lievens, F. (2008). Personnel selection. Annual Review of Psychology, 59, 419–450.

Scherbaum, C. A., Sabet, J., Kern, M. J., & Agnello, P. (2013). Examining faking on personality inventories using unfolding item response theory models. Journal of Personality Assessment, 95, 207–216.

Schmidt, F. L., & Hunter, J. E. (1998). The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings. Psychological Bulletin, 124, 262–274.

Schwarz, N., & Sudman, S. (Eds.). (1996). Answering questions: Methodology for determining cognitive and communicative processes in survey research. Jossey-Bass.

Shoss, M. K., & Strube, M. J. (2011). How do you fake a personality test? An investigation of cognitive models of impression-managed responding. Organizational Behavior and Human Decision Processes, 116, 163–171.

Stark, S., Chernyshenko, O. S., Chan, K. Y., Lee, W. C., & Drasgow, F. (2001). Effects of the testing situation on item responding: Cause for concern. Journal of Applied Psychology, 86, 943–953.

Stark, S., Chernyshenko, O. S., & Drasgow, F. (2004). Examining the effects of differential item functioning and differential test functioning on selection decisions: When are statistically significant effects practically important? Journal of Applied Psychology, 89, 497–508.

Sun, T., Zhang, B., Cao, M., & Drasgow, F. (2021). Faking detection improved: Adopting a Likert item response process tree model. Organizational Research Methods. Advance online publication. https://doi.org/10.1177/10944281211002904.

Tourangeau, R., & Rasinski, K. A. (1988). Cognitive processes underlying context effects in attitude measurement. Psychological Bulletin, 103, 299–314.

Van Hooft, E. A., & Born, M. P. (2012). Intentional response distortion on personality tests: Using eye-tracking to understand response processes when faking. Journal of Applied Psychology, 97, 301–316.

Vasilopoulos, N. L., Reilly, R. R., & Leaman, J. A. (2000). The influence of job familiarity and impression management on self-report measure scale scores and response latencies. Journal of Applied Psychology, 85, 50–64.

Viswesvaran, C., & Ones, D. S. (1999). Meta-analyses of fakability estimates: Implications for personality measurement. Educational and Psychological Measurement, 59, 197–210.

Wetzel, E., Frick, S., & Brown, A. (2020). Does multidimensional forced-choice prevent faking? Comparing the susceptibility of the multidimensional forced-choice format and the rating scale format to faking. Psychological Assessment. Advance online publication. https://doi.org/10.1037/pas0000971.

Yentes, R. D., & Wilhelm, F. (2021). careless: Procedures for computing indices of careless responding (R package version 1.2.1).

Zettler, I., Lang, J. W., Hülsheger, U. R., & Hilbig, B. E. (2016). Dissociating indifferent, directional, and extreme responding in personality data: Applying the three-process model to self- and observer reports. Journal of Personality, 84, 461–472.

Zhang, B., Cao, M., Tay, L., Luo, J., & Drasgow, F. (2020). Examining the item response process to personality measures in high-stakes situations: Issues of measurement validity and predictive validity. Personnel Psychology, 73, 305–332.

Zickar, M. J., & Robie, C. (1999). Modeling faking good on personality items: An item-level analysis. Journal of Applied Psychology, 84, 551–563.

Zickar, M. J., Gibby, R. E., & Robie, C. (2004). Uncovering faking samples in applicant, incumbent, and experimental data sets: An application of mixed-model item response theory. Organizational Research Methods, 7, 168–190.

Ziegler, M. (2011). Applicant faking: A look into the black box. The Industrial and Organizational Psychologist, 49, 29–36.

Ziegler, M., Schmidt-Atzert, L., Bühner, M., & Krumm, S. (2007). Fakability of different measurement methods for achievement motivation: Questionnaire, semi-projective, and objective. Psychology Science, 49, 291–307.

Ziegler, M., Maaß, U., Griffith, R., & Gammon, A. (2015). What is the nature of faking? Modeling distinct response patterns and quantitative differences in faking at the same time. Organizational Research Methods, 18, 679–703.

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Lee, P., Joo, SH. & Jia, Z. Opening the Black Box of the Response Process to Personality Faking: An Application of Item Response Tree Models. J Bus Psychol 37, 1199–1214 (2022). https://doi.org/10.1007/s10869-022-09791-6

DOI: https://doi.org/10.1007/s10869-022-09791-6