Abstract

This study compared the effectiveness and efficiency of an error-correction procedure, response repetition, to a prompting procedure, simultaneous prompting, on the acquisition and maintenance of multiplication facts for three typically developing 3rd grade students. This study employed an adapted alternating treatments design nested in a multiple probe design across three sets of multiplication facts. Results indicated that correct responding increased upon intervention implementation for all participants. For two participants, response repetition was a more effective teaching procedure. For one participant, while both teaching procedures were effective, response repetition was more efficient in terms of sessions to mastery while simultaneous prompting was more efficient in terms of errors and seconds to mastery. Maintenance data were variable. Discussion focuses on conceptual differences between response repetition and simultaneous prompting that might have accounted for results.

Introduction

Discrete trial instruction (DTI) is a systematic approach to teaching distinct behaviors. DTI is rooted in applied behavior analysis and involves the intentional arrangement of antecedent and consequent events around carefully defined behaviors to promote skill acquisition. In DTI, learning trials involve a task instruction (i.e., the discriminative stimulus), a prompt (if needed), an opportunity for the learner to respond, and consequences provided contingent on the learner’s response (i.e., reinforcement for correct responding and error-correction for incorrect responding; Lovaas 2003). DTI is an effective way to teach a wide range of skills (Smith 2001). Two key aspects of DTI are the use of prompting and error-correction procedures, with many variations of each used in research and practice. Few studies have isolated the effectiveness and efficiency of these components of DTI, and those that exist have focused on students with disabilities (e.g., Leaf et al. 2010). The current investigation extends this literature by comparing the effectiveness and efficiency of a prompting procedure, simultaneous prompting, to an error-correction procedure, response repetition, on the acquisition and maintenance of multiplication facts among typically developing students.

Simultaneous Prompting

Simultaneous prompting (SP) is a prompting strategy related to time delay procedures (i.e., constant time delay, progressive time delay). SP involves the simultaneous delivery of a discriminative stimulus and a controlling prompt. SP has advantages over other prompting strategies in that it is efficient, requires no decision rules for prompt fading, requires few prerequisite skills, ensures that learners always access reinforcement, and reduces learner errors (Rao and Mallow 2009). However, because students lack opportunities to respond independently during instruction, probe sessions are required to assess transfer of stimulus control. During probe sessions, students can make errors without a consequence (Johnson et al. 1996), and a wide range of student error responses during probe sessions have been documented (MacFarland-Smith et al. 1993; Singleton et al. 1995). Research supports the use of SP for skill acquisition when working with individuals with developmental disabilities of all ages (see Morse and Schuster 2004 for a review). Although limited, evidence also supports the use of SP with typically developing individuals. Preschool children successfully learned nouns from classroom storybooks (Gibson and Schuster 1992), and adolescent students learned a variety of national flags (Fickel et al. 1998) and elements from the periodic table (Parker and Schuster 2002).

Response Repetition

Response repetition (RR), also known as directed rehearsal, is an intensive error-correction strategy. RR requires learners to emit multiple correct responses after a teacher model contingent on an error (Barbetta et al. 1993). RR has advantages over other error-correction strategies in that it provides a model of, and additional opportunities to practice, the correct response, and this practice occurs under appropriate stimulus conditions. Research has consistently demonstrated that increases in the number of opportunities to respond result in increases in skill acquisition (Belfiore et al. 1995; Szadokierski and Burns 2008). Learning may be less efficient with RR, however, when examining trials and errors required to reach mastery. Requiring students to engage in multiple consecutive correct responses may substantially increase the number of trials to mastery (Carroll et al. 2015; McGhan and Lerman 2013). RR has been used to teach a variety of skills including math facts (Rapp et al. 2012; Reynolds et al. 2016) and sight words (Barbetta et al. 1993; Barbetta et al. 1994; Marvin et al. 2010; Worsdell et al. 2005) for learners with and without disabilities.

Comparison of Prompting and Error-Correction Procedures

Numerous studies have demonstrated the effectiveness of SP or RR used alone (Barbetta et al. 1993; Fickel et al. 1998; Gibson and Schuster 1992; Parker and Schuster 2002; Rapp et al. 2012; Reynolds et al. 2016). However, none have compared the two procedures’ effectiveness and efficiency in promoting skill acquisition. Few studies have isolated the effectiveness and efficiency of other prompting and error-correction procedures on the acquisition and maintenance of discrete skills, and all of them have involved students with disabilities. In general, results of these studies seem to support the use of error-correction procedures over prompting procedures.

Leaf et al. (2010) compared the effectiveness of no–no-prompting (NNP) to SP on the acquisition and maintenance of conditional discrimination skills for three participants with autism spectrum disorder. NNP involved saying “no” or “try again” contingent on an error and then re-presenting the trial. The same consequence was delivered contingent on a second error. After a third error, the researchers re-presented the trial and delivered a controlling prompt. This study used a parallel treatments design across three item pairs. Results suggested that all participants mastered all targets assigned to the NNP condition; however, only one participant mastered targets assigned to the SP condition, and for only one of three item pairs. The researchers offered several possible explanations for why NNP was more effective than SP. First, there was a lack of differential consequences for targets taught via SP (i.e., participants always emitted prompted correct responses and thus always accessed reinforcement), whereas consequences in the NNP condition varied based on accuracy of responding. Second, visual attending was promoted for targets taught in the NNP condition but not in the SP condition. In other words, to maximize the rate of reinforcement in the NNP condition, participants needed to visually attend to stimuli; this was not the case in the SP condition because all trials resulted in reinforcement. Third, probe trials had no programmed consequences based on accuracy of responding, which the authors suggested might be necessary to achieve transfer of stimulus control for targets taught via SP.

Fentress and Lerman (2012) found similar support for NNP when they compared its effectiveness and efficiency to those of most-to-least (MTL) prompting on the acquisition and maintenance of a variety of basic skills for four participants with autism spectrum disorder. MTL prompting is a near-errorless learning strategy in which instruction is paired with a controlling prompt and the intrusiveness of the prompt is gradually faded (Libby et al. 2008). Performance was compared using an alternating treatments design nested in a multiple baseline design across item pairs. On average, participants met mastery criteria faster with NNP. However, fewer errors were made and maintenance was better for skills taught in the MTL condition (Fentress and Lerman 2012). The researchers suggested that increased opportunities to respond accounted for the relative effectiveness of NNP in promoting skill acquisition, but that the same factor may have accounted for poorer maintenance relative to MTL.

In a more recent study, Leaf et al. (2014a) compared the effectiveness and efficiency of MTL prompting to an error-correction procedure on the acquisition and maintenance of stating the names of Muppet or comic book characters for two students with autism spectrum disorder. The error-correction procedure used in this study was similar to RR: contingent on an error, the researchers modeled the correct response and required participants to engage in one remedial trial (compared to multiple remedial trials in most RR research). This study employed an adapted alternating treatments design nested in a multiple probe design across item pairs. Results suggested that error-correction was slightly more effective and efficient than MTL prompting.

When flexible prompt fading (i.e., a prompting technique that relies on the teacher’s judgment to decide whether to prompt a student and which type of prompt to use) was compared to error-correction for teaching children with autism spectrum disorder to state the names of Muppet characters, both teaching procedures were effective. However, flexible prompt fading was more efficient in terms of total number of trials and sessions, as well as the total amount of time participants needed to learn all targeted skills (Leaf et al. 2014b).

While it is important to understand how aspects of DTI differentially contribute to the effectiveness of skill acquisition, it is equally important to understand their efficiency. Instructional efficiency can be computed by examining the number of trials or sessions to mastery, the total amount of time needed for mastery, and the number of errors made before mastery. In terms of sessions and trials to mastery, three of the four comparison studies described above (Fentress and Lerman 2012; Leaf et al. 2010, 2014a) support the use of error-correction procedures over prompting strategies; the exception is the comparison of flexible prompt fading to error-correction (Leaf et al. 2014b). Additional data are needed to understand the efficiency of aspects of DTI when used with typically developing students.

The Current Study

The purpose of this study was to compare RR to SP on the acquisition and maintenance of multiplication facts among typically developing students. Research indicates that both RR and SP promote acquisition and maintenance of a variety of skills when evaluated in isolation. Research directly comparing prompting and error-correction procedures has generally supported the relative effectiveness and efficiency of error-correction procedures; however, more comparison research is needed. There are many variations of prompting and error-correction used in research and practice, and their relative effectiveness and efficiency are unknown. RR and SP have not previously been compared. While one might expect both to lead to skill acquisition, it is unclear which would be more efficient. Relying exclusively on SP could slow skill acquisition for the reasons discussed by Leaf et al. (2010). On the other hand, relying exclusively on RR, which allows students to make errors, could hinder skill acquisition by leading to additional errors or disruptive behavior (Leaf et al. 2014a, b). This study was undertaken to answer the following research questions:

1. Is RR or SP more effective in promoting multiplication fact acquisition and maintenance among typically developing elementary school students? This will be evaluated by determining whether participants met the mastery criterion for item sets.

2. Is RR or SP more efficient in promoting multiplication fact acquisition and maintenance among typically developing elementary school students? This will be evaluated by determining which procedure led to mastery in fewer sessions, errors, and seconds.

Method

Participants and Setting

Potential participants were identified via performance on a single-skill multiplication fact curriculum-based measurement (CBM) probe, and consultation with teachers helped the team identify final participants. Participants were three 9-year-old female 3rd graders attending a rural elementary school in the Midwestern region of the USA. All participants were Caucasian. Anna, Bailey, and Rachel answered 4, 7, and 15 digits correct per minute, respectively, well below the instructional criterion of 24–49 digits correct per minute for 3rd graders (Burns et al. 2006). Participants did not receive free or reduced-price lunch and did not have documented or observed intellectual disabilities or sensory impairments.

The participants’ school served 574 K-5th grade students. The student population of the school was 89% Caucasian and 34% were eligible for the federal free or reduced price lunch. Approximately 79% of the students scored in the proficient range on the most recent state test in mathematics. School psychology graduate students conducted probe and instructional sessions in a private room. Sessions were video recorded to permit a second observer to assess interobserver agreement (IOA) and procedural integrity. Parent consent and student assent were documented, and procedures were carried out in accordance with the second author’s Institutional Review Board.

Materials

Pretesting

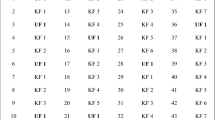

Participants were pretested with 126 multiplication facts (0 × 0 through 12 × 12). Instructors presented multiplication facts printed vertically on 3″ × 5″ index cards in random order. To identify unknown facts (i.e., facts in the acquisition phase; Haring and Eaton 1978), participants were given 4 s to respond and had two opportunities per fact; other studies allow 2 s to respond and a single opportunity (e.g., Burns 2005). Facts answered incorrectly on both opportunities were considered unknown. For facts from the same family (e.g., 2 × 6 and 6 × 2), the fact with the smaller multiplicand was removed from the list of unknowns to limit practice effects or carryover. The remaining facts were used to construct training stimuli. Bailey, Anna, and Rachel had 34, 28, and 26 unknowns, respectively.
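The pretest screening rule can be expressed as a short filter. The sketch below is a minimal illustration, not the authors' procedure: the function name `identify_unknowns`, the data layout (each fact mapped to its two correct/incorrect opportunities), and the reading of the first factor as the multiplicand are all assumptions.

```python
from typing import Dict, List, Tuple

# A fact is (first factor, second factor); (6, 2) stands for "6 x 2".
Fact = Tuple[int, int]


def identify_unknowns(pretest: Dict[Fact, List[bool]]) -> List[Fact]:
    """Return unknown facts, keeping one member of each fact family.

    `pretest` maps each fact to its two opportunities, where True means a
    correct answer within the 4 s response window.
    """
    # Unknown = answered incorrectly on both opportunities.
    unknown = {fact for fact, trials in pretest.items() if not any(trials)}
    kept = []
    for a, b in sorted(unknown):
        # Assumption: the first factor is the multiplicand. If both members of
        # a family (e.g., 2 x 6 and 6 x 2) are unknown, drop the one whose
        # multiplicand is smaller.
        if a < b and (b, a) in unknown:
            continue
        kept.append((a, b))
    return kept


# Hypothetical pretest records for illustration only.
pretest = {
    (2, 6): [False, False],
    (6, 2): [False, False],
    (3, 4): [True, False],   # correct once, so not unknown
    (7, 7): [False, False],
    (8, 9): [False, False],
}
print(identify_unknowns(pretest))  # [(6, 2), (7, 7), (8, 9)]
```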

Training Stimuli

Three sets of training stimuli were created (Sets A, B, and C). Twenty-four unknown facts were taught during instructional sessions so that each set and each condition contained an equal number of unknowns for all participants. There were eight multiplication facts in each item set: four were assigned to the RR condition and four to the SP condition. To obtain the final 24 training stimuli, × 0 and × 1 facts were removed, as were × 11 and × 12 facts with larger factors. Facts were randomly assigned to Sets A, B, and C and then systematically assigned to RR or SP. During systematic assignment, × 10, × 11, and × 12 facts and squared facts (e.g., 6 × 6) were equally distributed across conditions.
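As an illustration of the two-stage assignment (random assignment to sets, then systematic assignment to conditions), the following sketch splits 24 facts into three sets and then alternates RR and SP down each set after listing the facts that need balancing first. The alternation rule, the seed, and the names `needs_balancing` and `assign_sets` are hypothetical; the article does not specify how the systematic assignment was carried out, and balancing within each set is only one possible reading.

```python
import random
from typing import Dict, List, Tuple

Fact = Tuple[int, int]


def needs_balancing(fact: Fact) -> bool:
    """True for x10, x11, x12 facts and squared facts (e.g., 6 x 6)."""
    a, b = fact
    return a == b or a in (10, 11, 12) or b in (10, 11, 12)


def assign_sets(facts: List[Fact], seed: int = 0) -> Dict[str, Dict[str, List[Fact]]]:
    """Randomly split 24 facts into Sets A-C, then split each set 4/4 into RR and SP."""
    if len(facts) != 24:
        raise ValueError("expected 24 training facts")
    rng = random.Random(seed)
    shuffled = facts[:]
    rng.shuffle(shuffled)  # random assignment to sets
    design: Dict[str, Dict[str, List[Fact]]] = {}
    for i, name in enumerate("ABC"):
        item_set = shuffled[i * 8:(i + 1) * 8]
        # Hypothetical systematic step: list facts needing balance first
        # (sorted is stable), then alternate RR/SP down the list so those
        # facts split as evenly as possible between the two conditions.
        ordered = sorted(item_set, key=lambda f: not needs_balancing(f))
        design[name] = {"RR": ordered[0::2], "SP": ordered[1::2]}
    return design
```

Because the alternation starts with the facts that need balancing, the two conditions in a set never differ by more than one such fact, and each condition always receives exactly four facts.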

Response Measurement

Instructors collected data on correct and incorrect responses during probe trials and instructional trials. A correct response was defined as accurately stating the answer to the multiplication fact within 3 s of the experimenter presenting the flashcard (e.g., when presented with 4 × 6, the participant stated “24” within 3 s). An incorrect response was recorded if the participant stated an inaccurate answer or did not respond within 3 s. The primary dependent variable was the percentage of probe trials answered correctly across baseline, intervention, and maintenance conditions. Participants were considered to have mastered a set of multiplication facts in either the RR or SP condition once they responded correctly to 100% of the probe trials across three consecutive probe sessions. A discontinue criterion was predetermined for cases in which participants mastered facts taught under one condition (RR or SP) but not the other. Once facts taught under one condition were mastered, the corresponding facts in the other condition remained in the intervention phase for three additional sessions. At that point, the intervention phase was discontinued to avoid spending time on an ineffective teaching procedure and detracting from classroom time (Leaf et al. 2014a, b). To assess the efficiency of these procedures, the number of sessions, the number of errors, and the seconds to meeting the mastery criterion for each set were also recorded.
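The mastery and discontinue rules interact, so a small sketch may help make the decision logic concrete. It assumes aligned per-session probe percentages for the RR and SP facts within one set; the function names, the session-counting convention, and the example data are hypothetical and are not taken from the study's results.

```python
from typing import Dict, List, Optional


def mastery_session(percent_correct: List[float], run: int = 3) -> Optional[int]:
    """1-based probe session at which 100% correct was reached on `run`
    consecutive probe sessions, or None if the criterion was never met."""
    streak = 0
    for session, pct in enumerate(percent_correct, start=1):
        streak = streak + 1 if pct == 100 else 0
        if streak == run:
            return session
    return None


def set_outcome(rr: List[float], sp: List[float], extra: int = 3) -> Dict[str, str]:
    """Apply the mastery and discontinue rules to one item set.

    `rr` and `sp` are aligned per-session probe percentages for the facts
    assigned to each condition within the set.
    """
    series = {"RR": rr, "SP": sp}
    met = {cond: mastery_session(data) for cond, data in series.items()}
    reached = [m for m in met.values() if m is not None]
    if not reached:
        return {cond: "in progress" for cond in series}
    # The lagging condition stays in intervention for `extra` more sessions.
    cutoff = min(reached) + extra
    outcome = {}
    for cond, m in met.items():
        if m is not None and m <= cutoff:
            outcome[cond] = "mastered"
        elif len(series[cond]) < cutoff:
            outcome[cond] = "in progress"  # three-session window not yet used up
        else:
            outcome[cond] = "discontinued"
    return outcome


# Hypothetical probe data (four facts per condition, so 25% steps).
rr = [50, 75, 100, 100, 100, 100, 100, 100]
sp = [25, 50, 50, 75, 75, 75, 100, 100]
print(set_outcome(rr, sp))  # {'RR': 'mastered', 'SP': 'discontinued'}
```

With these hypothetical data, the RR facts meet the criterion at the fifth session, the SP facts receive three more sessions without reaching it, and the SP condition is therefore discontinued for that set.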

Procedure

Data were collected on an average of 3.57 days per week for about 10 weeks for each participant, excluding the school district’s spring break. After facts in all sets were mastered or met the discontinue criterion, data were collected one to two times per week for an additional 4 weeks. Following the collection of baseline data via full probe sessions, each day of intervention began with a probe session to assess participant performance independent of the two teaching procedures compared in the study. A short break followed, and then the first of two instructional sessions began. The order of the two instructional sessions was determined by a new coin flip each day before instruction began, and a short break was provided between them.

Probe Trials

Probe trials were used throughout the study to assess performance on facts not yet introduced in intervention (baseline), those currently being taught (intervention), and those already mastered by participants (maintenance). Probe trials allowed participants to demonstrate responses independent of the two teaching procedures compared in this study. During probe trials, researchers presented the training stimuli on 3″ × 5″ flashcards for 3 s. Researchers did not read the problem, and there were no programmed consequences based on accuracy of responses during probe sessions. Probe trials were deployed within full probe sessions and daily probe sessions, though they were implemented identically across session types. Full probe sessions and daily probe sessions varied only in the number of sets of training stimuli presented to participants.

Full Probe Sessions

Full probe sessions assessed all training stimuli (N = 24) across Sets A, B, and C, allowing the researchers to assess performance simultaneously on facts not yet introduced in intervention (baseline), those currently being taught (intervention), and those already mastered (maintenance). Full probe sessions were administered when all item sets were in baseline, when a phase change was being considered because student performance was approaching the discontinue or mastery criterion, or when it was the first session of the week. Researchers administered three blocks of eight probe trials, one block per set (Sets A, B, and C). Participants were first presented with the eight facts assigned to Set A in random order; identical procedures followed for the facts assigned to Sets B and C, for a total of 24 probe trials per full probe session. In the figures, data from full probe sessions can be identified where data are graphed for all three item sets in a session.
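The choice between full and daily probe sessions, and the block structure of each, can be sketched as follows. The function names are hypothetical, and shuffling the eight facts within a daily probe is an assumption, since the article specifies random order only for the blocks of a full probe.

```python
import random
from typing import Dict, List, Tuple

Fact = Tuple[int, int]


def use_full_probe(all_sets_in_baseline: bool, phase_change_considered: bool,
                   first_session_of_week: bool) -> bool:
    """Any one of the three stated conditions triggers a full probe."""
    return all_sets_in_baseline or phase_change_considered or first_session_of_week


def build_probe(sets: Dict[str, List[Fact]], current_set: str, full: bool,
                rng: random.Random) -> List[Fact]:
    """Return the ordered probe trials for one session.

    Full probe: one block per set, A then B then C, each block shuffled (24 trials).
    Daily probe: only the set currently in intervention (8 trials).
    """
    names = ["A", "B", "C"] if full else [current_set]
    trials: List[Fact] = []
    for name in names:
        block = sets[name][:]
        rng.shuffle(block)  # random order within the block
        trials.extend(block)
    return trials
```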

Daily Probe Sessions

Daily probe sessions assessed training stimuli (n = 8) currently being taught. There were 8 probe trials per daily probe session. Data from daily probe sessions are apparent in the figures when there are graphed data only for the item set currently in the intervention phase.

Instructional Trials

Following each day’s probe session, instructional trials were used to teach the training stimuli in the intervention phase (n = 8). To encourage acquisition of unknown facts, both conditions were implemented daily. Unlike in probe trials, researchers read the problem aloud, and there were programmed consequences based on accuracy of responses. There were 24 instructional trials per day in total: the four facts in each condition were presented in random order in three blocks, for 12 trials per condition. Instructional trials were delivered within the RR and SP conditions described in more detail below.
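A day's instructional sequence (a coin flip for condition order, then three randomly ordered blocks of four facts per condition) can be sketched as below. The function name and the use of a seeded pseudo-random generator in place of a physical coin are assumptions for illustration; the breaks between sessions are not modeled.

```python
import random
from typing import List, Tuple

Fact = Tuple[int, int]


def daily_instruction(rr_facts: List[Fact], sp_facts: List[Fact],
                      rng: random.Random) -> List[Tuple[str, Fact]]:
    """Return the day's 24 instructional trials as (condition, fact) pairs."""
    sessions = [("RR", rr_facts), ("SP", sp_facts)]
    if rng.random() < 0.5:  # "coin flip": which condition is taught first today
        sessions.reverse()
    schedule: List[Tuple[str, Fact]] = []
    for condition, facts in sessions:
        for _ in range(3):  # three blocks of the four facts = 12 trials
            block = facts[:]
            rng.shuffle(block)  # random order within each block
            schedule.extend((condition, fact) for fact in block)
    return schedule
```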

RR

After ensuring that the participant was attending (i.e., making eye contact), instructors introduced this condition by stating, “For these facts, I will read the problem and you will have a chance to answer it on your own. If you answer incorrectly, that’s okay. I will give you the correct answer then ask you to read the problem and answer five times.” The instructor exposed the first flashcard, read the problem, and waited up to 3 s for the participant to respond. Verbal praise was contingent on correct responses, and the RR procedure was contingent on incorrect responses. After an incorrect response, the instructor delivered an instructional no (e.g., “No.” or “Try again.”) in a neutral tone and modeled the correct response. Next, the instructor asked the participant to recite the multiplication fact and its correct answer five times. Five repetitions were selected to be consistent with other studies of response repetition (Worsdell et al. 2005; Reynolds et al. 2016). The instructor continued to expose the flashcard throughout the RR procedure.
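The RR contingency for a single instructional trial can be sketched as a function that returns the sequence of instructor and participant events. This is a hypothetical simulation for clarity, not software used in the study; the function name and event labels are invented, and a missing response within 3 s is represented as `None`.

```python
from typing import List, Optional, Tuple


def rr_trial(fact: Tuple[int, int], answer: Optional[int],
             repetitions: int = 5) -> Tuple[bool, List[str]]:
    """Simulate one RR instructional trial.

    `answer` is the participant's response, or None if no response came
    within 3 s (scored as incorrect).
    """
    a, b = fact
    product = a * b
    events = [f"show card and read: {a} x {b}"]
    if answer == product:
        events.append("praise")
        return True, events
    # Error correction: instructional "No," a model of the correct answer,
    # then repeated practice while the card stays visible.
    events.append("instructional no")
    events.append(f"model: {a} x {b} = {product}")
    events.extend([f"participant recites: {a} x {b} = {product}"] * repetitions)
    return False, events


print(rr_trial((4, 6), 24))  # (True, ['show card and read: 4 x 6', 'praise'])
```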

SP

Prior to presenting trials, instructors ensured that the participant was attending (i.e., making eye contact) and said, “For these facts, I will first read the problem and give you the answer. Then I will read the problem and ask you to provide the answer on your own.” The instructor exposed the first flashcard, read the problem, and immediately stated the correct response. The instructor then continued to expose the flashcard, reread the problem, and waited up to 3 s for the participant to respond. Verbal praise was contingent on each correct response. Incorrect responses were ignored.
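An SP instructional trial can be sketched in the same event-sequence style. The function name and event labels are hypothetical; the sketch highlights that the controlling prompt (the answer) is delivered before the participant responds and that incorrect responses produce no programmed consequence.

```python
from typing import List, Optional, Tuple


def sp_trial(fact: Tuple[int, int], answer: Optional[int]) -> Tuple[bool, List[str]]:
    """Simulate one SP instructional trial (answer None = no response within 3 s)."""
    a, b = fact
    product = a * b
    # The controlling prompt (the answer) is delivered with the problem,
    # before the participant has a chance to respond.
    events = [f"show card and read: {a} x {b}", f"state answer: {product}",
              f"reread: {a} x {b}"]
    if answer == product:
        events.append("praise")
        return True, events
    # Incorrect or absent responses are ignored: no error correction follows.
    return False, events
```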

Reliability and Procedural Integrity

A second researcher watched video recordings of sessions to assess interobserver agreement (IOA) on full and daily probe sessions (35% of sessions) and procedural integrity (28% of sessions). For IOA, the second researcher determined whether the participant responded correctly or incorrectly on each trial of the probe session; 24 trials were compared for a full probe session and 8 for a daily probe session. IOA was calculated on a trial-by-trial basis by dividing the number of agreements by the number of agreements plus disagreements and multiplying by 100. An agreement was defined as both researchers scoring a response as correct or both scoring it as incorrect. A disagreement was defined as one researcher scoring a response as correct and the other scoring it as incorrect. Mean IOA across participants and sessions was 97% (range 88–100%).
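The trial-by-trial IOA formula can be written as a short function. The name `trial_by_trial_ioa` and the representation of each observer's record as a list of correct/incorrect scores are assumptions for illustration.

```python
from typing import Sequence


def trial_by_trial_ioa(primary: Sequence[bool], secondary: Sequence[bool]) -> float:
    """Percent agreement: agreements / (agreements + disagreements) x 100.

    Each sequence holds one observer's correct (True) / incorrect (False)
    score for every probe trial in the session, in the same order.
    """
    if len(primary) != len(secondary) or len(primary) == 0:
        raise ValueError("both observers must score the same, non-empty set of trials")
    agreements = sum(p == s for p, s in zip(primary, secondary))
    disagreements = len(primary) - agreements
    return agreements / (agreements + disagreements) * 100


# A daily probe session (8 trials) with one disagreement.
print(trial_by_trial_ioa([True] * 8, [True] * 7 + [False]))  # 87.5
```

For example, a daily probe session with one disagreement yields 7 / 8 × 100 = 87.5% agreement.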

Procedural integrity data were collected with a 24-item checklist. A second researcher observed recorded sessions and indicated, on an item-by-item basis, whether each step was implemented as intended. The percentage of items implemented correctly was calculated for each session. Mean integrity across participants was 99% (range 89–100%).

Experimental Design

This study nested an adapted alternating treatments design within a multiple probe design (Leaf et al. 2014a, b). Because training stimuli were taught in sets, this design required two special considerations. First, as described above, we systematically equated the difficulty of the facts assigned to the two conditions within each set. Second, as noted above, a discontinue criterion was predetermined for cases in which participants mastered facts taught under one condition (RR or SP) but not the other.

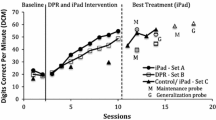

Results

Figures 1, 2 and 3 show the results from probe sessions for the three participants. Table 1 presents efficiency data on sessions, errors, and seconds to meeting the discontinue or mastery criteria. Figure 1 shows results for Bailey. During baseline, correct responding ranged from 0 to 50%, but there were no apparent systematic differences in correct responding between conditions and no discernible trend toward mastery of facts. Correct responding increased from baseline levels when intervention was implemented across item sets and conditions. Bailey met the mastery criterion for facts in the RR condition for all item sets, but she met the mastery criterion for facts in the SP condition only for Set B; the discontinue criterion was met for SP facts in Sets A and C. For Bailey, RR was the more effective teaching procedure. For Set B, as indicated in Table 1, Bailey met the mastery criterion for facts assigned to RR in three fewer sessions than for those assigned to SP. However, she made fewer errors and spent less time learning facts assigned to SP. During maintenance, average correct responding was 48% in the RR condition and 64% in the SP condition. This result was surprising given that Bailey did not meet the mastery criterion for two sets in the SP condition. Bailey did not appear to consistently maintain facts acquired in the intervention phase.

Anna’s results are shown in Fig. 2. During baseline, correct responding on probe sessions ranged from 0 to 25% across conditions and item sets, with no apparent systematic differences between conditions and no trend toward mastery of facts. Correct responding increased upon introduction of the intervention across item sets and conditions. Anna met the mastery criterion for facts in both conditions for all item sets. As shown in Table 1, she met the mastery criterion for facts in the RR condition one session sooner than for those in the SP condition for each item set. Thus, for Anna, while both procedures were effective, RR was slightly more efficient in terms of sessions to mastery, whereas SP was more efficient in terms of errors and seconds to mastery. During maintenance, average correct responding was 78% in the RR condition and 63% in the SP condition. Except for facts in Set A, Anna appeared to consistently maintain facts acquired during the intervention phase. Correct responding for facts in Set A was characterized by initial maintenance, followed by sharp declines, and finally recovery toward the end of data collection.

Figure 3 shows results for Rachel. During baseline, Rachel answered between 0 and 50% of probe trials correctly across conditions and item sets. For Set A, correct responding remained at 0% across conditions. However, for Sets B and C, correct responding was typically higher for facts assigned to the RR condition. Despite this difference, there did not appear to be a discernible trend toward mastery in either condition. Correct responding increased from baseline levels when intervention was implemented, except for facts assigned to SP in Set C. Rachel met the mastery criterion for facts in the RR condition for all item sets and met the discontinue criterion for facts in the SP condition for all item sets. For Rachel, RR was more effective than SP. During maintenance, average correct responding was 84% in the RR condition and 25% in the SP condition, which is unsurprising given that she met the discontinue criterion for facts in the SP condition for all item sets. Rachel appeared to consistently maintain facts acquired during the intervention phase.

Discussion

This study compared the effectiveness and efficiency of RR to SP on multiplication fact acquisition and maintenance among three typically developing 3rd grade students. Results indicated that correct responding increased upon intervention implementation for all participants. Effectiveness of the two procedures was compared on whether participants met the mastery criterion for facts assigned to each condition for each item set. RR was an effective procedure for all participants. SP was effective for one participant. Bailey failed to meet the mastery criterion for facts assigned to SP for two item sets. Rachel, similarly, failed to meet the mastery criterion for facts assigned to SP for all item sets. When both procedures were effective within a given item set, efficiency of the two procedures was compared on sessions to mastery, errors to mastery, and time to mastery. RR was more efficient in terms of sessions to mastery, but SP was associated with fewer errors and less time to mastery. Correct responding during the maintenance phase was variable, but participants usually maintained facts mastered in the intervention phase to some extent.

Consistent with other studies (Reynolds et al. 2016; Worsdell et al. 2005), the current investigation supports the use of RR for skill acquisition. The current investigation extends the literature by showing that an error-correction procedure (RR) was, in general, more effective than a near-errorless learning strategy (SP) for skill acquisition in typically developing students. This was surprising given SP has been effective in teaching a variety of skills to learners with and without disabilities. However, this is not the first study where SP was ineffective for teaching discrete skills. Leaf et al. (2010), for example, showed that three participants with autism spectrum disorder failed to master at least some skills taught via SP and mastered all skills taught via NNP.

There are two important themes to discuss based on this study’s results. First, hypotheses should be explored for why SP did not lead to skill acquisition for two of three participants. Second, why, in this study, was RR generally more effective than SP? Perhaps the simplest explanation for why two participants did not acquire skills taught via SP is procedural: participants may not have been given enough time to meet the mastery criterion for facts in the SP condition. Except for Set C for Rachel, correct responding clearly increased from baseline levels on the unmastered item sets taught via SP. Our discontinue criterion was consistent with previous research, but we might have considered adjusting it based on participant performance.

It is also important to consider conceptual differences between RR and SP that might have partially accounted for differences in effectiveness. There are at least four conceptual factors that might account for differences in effectiveness. First, in SP, each instructional trial resulted in reinforcement because participants did not make any errors (Leaf et al. 2010). In RR, however, consequences differed based on accuracy of responding. Importantly, incorrect responses led to contingent error-correction. Previous research shows error-correction may promote skill acquisition by incorporating additional practice under relevant stimulus conditions and/or functioning as an aversive stimulus in the context of an avoidance contingency (Rodgers and Iwata 1991; Worsdell et al. 2005). This factor may have accounted for some of the differences in performance across conditions in the current study.

Second, as noted by Leaf et al. (2010), error-correction procedures (e.g., NNP or RR) may be more effective than near-errorless procedures (e.g., SP) because they require attending to the visual components of the discriminative stimulus to maximize the rate of reinforcement during instructional trials. In the current study, participants needed to attend to both the auditory (i.e., the instructor reading the math fact) and visual (i.e., the written math fact) components of the discriminative stimulus during instructional trials only in the RR condition. In the SP condition, participants could attend solely to the auditory component and still access reinforcement on every trial. This may have reduced response effort in SP (i.e., participants did not need to attend to the visual component of the discriminative stimulus), which may in turn have affected the subsequent transfer of stimulus control during probe sessions, where the auditory component of the discriminative stimulus was not presented.

Third, one advantage of RR is that it provides additional opportunities to respond correctly under the appropriate stimulus conditions, and increasing opportunities to respond promotes learning (Belfiore et al. 1995; Szadokierski and Burns 2008). In the current investigation, students had to recite the multiplication fact five times contingent on an error. Given that all students made errors, this provided substantially more opportunities to respond to each fact in the RR condition than to facts in the SP condition. Consistent with previous comparative studies, this appears to be one mechanism by which error-correction can be more effective and efficient than prompting procedures (Leaf et al. 2010; Fentress and Lerman 2012). Future comparative studies might attempt to equate opportunities to respond across conditions.

Finally, SP might have been less effective in this study than in others because there were no consequences for incorrect responding during probe trials. A wide range of error responses during probe sessions has been documented (MacFarland-Smith et al. 1993; Singleton et al. 1995). Other studies of SP (e.g., Akmanoglu and Batu 2004) provided praise for correct responses and no consequence for incorrect responses during probe trials. Future investigations might consider delivering consequences based on response accuracy during probe trials to enhance transfer of stimulus control.

This study had several limitations. First, the present results do not indicate how many additional SP teaching trials would have been required to meet the mastery criterion. Though correct responding increased relative to baseline for all participants, it is unknown how many additional sessions would have been needed for Bailey to master the facts assigned to SP in Sets A and C, and for Rachel to master all facts assigned to SP. The discontinue criterion was established to be consistent with previous research and to avoid spending unproductive time on an ineffective teaching procedure, which would have detracted from classroom time (Leaf et al. 2014a, b). Because two of three participants did not meet the mastery criterion for all facts assigned to the SP condition, we could not compare the efficiency of RR and SP to the extent we had hoped. Second, this study lacked social validity data: no data were collected on students’ preferences for the RR or SP strategy. Without this information, we could not explore whether students’ preference for one of the two strategies was related to its effectiveness. Third, we did not use an assessment to determine whether a less intrusive prompt would have functioned as a controlling prompt. Finally, as is inherent in adapted alternating treatments designs, it is possible that the items assigned to one condition within a set were more difficult than those assigned to the other.

Further research comparing near-errorless and error-correction procedures for teaching skills to learners with and without disabilities is needed to clarify the relative effectiveness and efficiency of these two approaches. Given the limited research in this area, a number of procedures might be compared. Based on the results of the current study, it might be useful to compare a near-errorless procedure that incorporates differential consequences (e.g., progressive time delay) to various, possibly less intensive and intrusive, error-correction strategies. In addition, some researchers have shown that error-correction procedures can evoke interfering behaviors and have argued that instructors should rely on near-errorless strategies for this reason (Weeks and Gaylord-Ross 1981). This argument is sensible; however, anecdotally, there did not appear to be differences in interfering behaviors across conditions in the current study. Moreover, there are few empirical data comparing the interfering behaviors learners exhibit when learning skills via near-errorless procedures versus error-correction procedures. Future research might code the interfering behavior of participants learning skills in each condition to provide insight into this question.

References

Akmanoglu, N., & Batu, S. (2004). Teaching pointing to numerals to individuals with autism using simultaneous prompting. Education and Training in Developmental Disabilities, 39, 326–336. Retrieved from http://www.jstor.org/stable/23880212.

Barbetta, P. M., Heron, T. E., & Heward, W. L. (1993). Effects of active student response during error correction on the acquisition, maintenance, and generalization of sight words by students with developmental disabilities. Journal of Applied Behavior Analysis, 26, 111–119. https://doi.org/10.1901/jaba.1993.26-111.

Barbetta, P. M., Heward, W. L., Bradley, D. M., & Miller, A. D. (1994). Effects of immediate and delayed error correction on the acquisition and maintenance of sight words by students with developmental disabilities. Journal of Applied Behavior Analysis, 27, 177–178. https://doi.org/10.1901/jaba.1994.27-177.

Belfiore, P. J., Skinner, C. H., & Ferkis, M. A. (1995). Effects of response and trial repetition on sight-word training for students with learning disabilities. Journal of Applied Behavior Analysis, 28, 347–348. https://doi.org/10.1901/jaba.1995.28-347.

Burns, M. K., VanDerHeyden, A. M., & Jiban, C. L. (2006). Assessing the instructional level for mathematics: A comparison of methods. School Psychology Review, 35, 401–418. Retrieved from http://www.nasponline.org/publications/periodicals/spr/volume-35/volume-35-issue-3/assessing-the-instructional-level-for-mathematics-a-comparison-of-methods.

Carroll, R. A., Joachim, B. T., St. Peter, C. C., & Robinson, N. (2015). A comparison of error correction procedures on skill acquisition during discrete-trial instruction. Journal of Applied Behavior Analysis, 48, 257–273. https://doi.org/10.1002/jaba.205.

Fentress, G. M., & Lerman, D. C. (2012). A comparison of two prompting procedures for teaching basic skills to children with autism. Research in Autism Spectrum Disorders, 6, 1083–1090. https://doi.org/10.1016/j.rasd.2012.02.006.

Fickel, K. M., Schuster, J. W., & Collins, B. C. (1998). Teaching different tasks using different stimuli in a heterogeneous small group. Journal of Behavioral Education, 8, 219–244. https://doi.org/10.1023/A:1022887624824.

Gibson, A. N., & Schuster, J. W. (1992). The use of simultaneous prompting for teaching expressive word recognition to preschool children. Topics in Early Childhood Special Education, 12, 247–267. https://doi.org/10.1177/027112149201200208.

Haring, N. G., & Eaton, M. D. (1978). Systematic instructional technology: An instructional hierarchy. In N. G. Haring, T. C. Lovitt, M. D. Eaton, & C. L. Hansen (Eds.), The fourth R: Research in the classroom (pp. 23–40). Columbus, OH: Merrill.

Johnson, P., Schuster, J., & Bell, J. K. (1996). Comparison of simultaneous prompting with and without error correction in teaching science vocabulary words to high school students with mild disabilities. Journal of Behavioral Education, 6, 437–458. https://doi.org/10.1007/BF02110516.

Leaf, J. B., Leaf, J. A., Alcalay, A., Dale, S., Kassardjian, A., Tsuji, K., et al. (2014a). Comparison of most-to-least to error correction to teach tacting to two children diagnosed with autism. Evidence-Based Communication Assessment and Intervention, 7, 124–133. https://doi.org/10.1080/17489539.2014.884988.

Leaf, J. B., Leaf, R., Taubman, M., McEachin, J., & Delmolino, L. (2014b). Comparison of flexible prompt fading to error correction for children with autism spectrum disorder. Journal of Developmental and Physical Disabilities, 26, 203–224. https://doi.org/10.1007/s10882-013-9354-0.

Leaf, J. B., Sheldon, J. B., & Sherman, J. A. (2010). Comparison of simultaneous prompting and no-no prompting in two-choice discrimination learning with children with autism. Journal of Applied Behavior Analysis, 43, 215–228. https://doi.org/10.1901/jaba.2010.43-215.

Libby, M. E., Weiss, J. S., Bancroft, S., & Ahearn, W. H. (2008). A comparison of most-to-least and least-to-most prompting on the acquisition of solitary play skills. Behavior Analysis in Practice, 1, 37–43. https://doi.org/10.1007/BF03391719.

Lovaas, O. I. (2003). Teaching individuals with developmental delays: Basic intervention techniques. Austin, TX: PRO-ED.

MacFarland-Smith, J., Schuster, J. W., & Stevens, K. B. (1993). Using simultaneous prompting procedures to teach expressive object identification to preschoolers with developmental delays. Journal of Early Intervention, 17, 1–10. https://doi.org/10.1177/105381519301700106.

Marvin, K. L., Rapp, J. T., Stenske, M. T., Rojas, N. R., Swanson, G. J., & Bartlett, S. M. (2010). Response repetition as an error-correction procedure for sight-word reading: A replication and extension. Behavioral Interventions, 25, 109–127. https://doi.org/10.1002/bin.299.

McGhan, A. C., & Lerman, D. C. (2013). An assessment of error-correction procedures for learners with autism. Journal of Applied Behavior Analysis, 46, 626–639. https://doi.org/10.1002/jaba.65.

Morse, T. E., & Schuster, J. W. (2004). Simultaneous prompting: A review of the literature. Education and Training in Developmental Disabilities, 39, 153–168. Retrieved from http://www.jstor.org/stable/23880063.

Parker, M. A., & Schuster, J. W. (2002). Effectiveness of simultaneous prompting on the acquisition of observational and instructive feedback stimuli when teaching a heterogeneous group of high school students. Education and Training in Mental Retardation and Developmental Disabilities, 37, 89–104. Retrieved from http://www.jstor.org/stable/23879585.

Rao, S., & Mallow, L. (2009). Using simultaneous prompting procedure to promote recall of multiplication facts by middle school students with cognitive impairment. Education and Training in Developmental Disabilities, 44, 80–90. Retrieved from http://www.jstor.org/stable/24233465.

Rapp, J. T., Marvin, K. L., Nystedt, A., Swanson, G. J., Paananen, L., & Tabatt, J. (2012). Response repetition as an error-correction procedure for acquisition of math facts and math computation. Behavioral Interventions, 27, 16–32. https://doi.org/10.1002/bin.342.

Reynolds, J. L., Drevon, D. D., Schafer, B., & Schwartz, K. (2016). Response repetition as an error-correction strategy for teaching subtraction facts. School Psychology Forum: Research in Practice, 10, 349–358. Retrieved from http://www.nasponline.org/resources-and-publications/periodicals/spf-volume-10-issue-4-(winter-2016)/response-repetition-as-an-error-correction-strategy-for-teaching-subtraction-facts.

Rodgers, T. A., & Iwata, B. A. (1991). An analysis of error-correction procedures during discrimination training. Journal of Applied Behavior Analysis, 24, 775–781. https://doi.org/10.1901/jaba.1991.24-775.

Singleton, K. C., Schuster, J. W., & Ault, M. J. (1995). Simultaneous prompting in a small group arrangement. Education and Training in Mental Retardation and Developmental Disabilities, 30, 218–230. Retrieved from http://www.jstor.org/stable/23889173.

Smith, T. (2001). Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities, 16, 86–92. https://doi.org/10.1177/108825760101600204.

Szadokierski, I., & Burns, M. K. (2008). Analogue evaluation of the effects of opportunities to respond and ratios of known items within drill rehearsal of Esperanto words. Journal of School Psychology, 46, 593–609. https://doi.org/10.1016/j.jsp.2008.06.004.

Weeks, M., & Gaylord-Ross, R. (1981). Task difficulty and aberrant behavior in severely handicapped students. Journal of Applied Behavior Analysis, 14, 449–463. https://doi.org/10.1901/jaba.1981.14-449.

Worsdell, A. S., Iwata, B. A., Dozier, C. L., Johnson, A. D., Neidert, P. L., & Thomason, J. L. (2005). Analysis of response repetition as an error-correction strategy during sight-word reading. Journal of Applied Behavior Analysis, 38, 511–527. https://doi.org/10.1901/jaba.2005.115-04.

Acknowledgements

The authors would like to acknowledge the assistance of Denise Cai, Nicole Dailey, and Brienne Riebe with intervention implementation and data collection.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Human and Animal Rights

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from parents of all individual participants included in the study.

Cite this article

Drevon, D.D., Reynolds, J.L. Comparing the Effectiveness and Efficiency of Response Repetition to Simultaneous Prompting on Acquisition and Maintenance of Multiplication Facts. J Behav Educ 27, 358–374 (2018). https://doi.org/10.1007/s10864-018-9298-7

DOI: https://doi.org/10.1007/s10864-018-9298-7