Abstract

This study sought to examine the relationship of implicit emotional judgments with experiential avoidance (EA) and social anxiety. A sample of 61 college students completed the Emotional Judgment – Implicit Relational Assessment Procedure (EJ-IRAP) as well as a public speaking challenge. Implicit judgments were related to greater self-reported EA, anxiety sensitivity, emotional judgments and social anxiety as well as lower performance ratings and willingness in the public speaking challenge. Effects differed by trial type with “Anxiety is bad” biases related to greater EA/anxiety, while “calm is bad” biases related to lower EA/anxiety (“Good” biases were generally unrelated to outcomes). Implicit emotional judgments moderated the relationship of heart rate during the speech with speech time and willingness, such that increases in heart rate were only related to lower speech time and willingness among those high in implicit judgments. Implicit judgments predicted social anxiety above and beyond self-report EA measures. Implicit emotional judgments appear to have a functional role in EA and anxiety that warrants further research.

Over the past few decades, research has shown experiential avoidance (EA) to be a key pathological process in anxiety disorders (Bluett et al. 2014; Hooper and Larsson 2015). EA refers to rigid patterns of behavior which seek to avoid, escape, or otherwise change thoughts, feelings, and other inner experiences, despite the harmful consequences that might result (Hayes et al. 1996). Although other EA measures have recently been developed (e.g., Gámez et al. 2011), research on EA thus far has primarily focused on variants of the Acceptance and Action Questionnaire (AAQ; Hayes et al. 2004) and AAQ-II (Bond et al. 2011). A meta-analysis with 63 such studies found a correlation of r = .45 between the AAQ and anxiety measures, with similar significant correlations found across a range of specific disorders (Bluett et al. 2014). EA has been found to predict anxiety disorders up to 2 years later (Spinhoven et al. 2014), to predict a range of specific anxiety disorders over and above general distress measures (e.g., Levin et al. 2014b) and over and above other known predictors such as anxiety sensitivity (e.g., Gloster et al. 2011).

Although research has demonstrated a global link between self-report measures of EA and anxiety, there is a lack of empirical research regarding the specifics of how and in what ways EA contributes to anxiety disorders. For example, the conditions under which EA is particularly problematic remain unclear (e.g., when experiencing distress, rigid and inflexible patterns of EA), as does whether specific EA behaviors (either overt or private) are more or less harmful. Furthermore, EA is distinguished from traditional behavioral concepts of avoidance/escape due to the role of verbal processes, but what verbal processes are problematic, in what contexts, and through what mechanisms has not been thoroughly studied to date. Conducting this more detailed research is key for further testing and refining the theoretical model as well as informing novel interventions seeking to treat or prevent problems through targeting EA.

Thus far, research on EA has relied heavily on global self-report measures, particularly the AAQ. In addition to EA, the AAQ is often conceptualized as measuring the broader construct of psychological inflexibility, which refers to the variety of pathological processes by which behavior is rigidly guided by internal psychological experiences rather than values or direct contingencies (Bond et al. 2011). Adding to this complexity, newer attempts to measure EA (e.g., the Multidimensional Experiential Avoidance Questionnaire; MEAQ) suggest EA itself can be viewed as a multifaceted construct. Factor analytic findings with the MEAQ indicated six subscales assessing distinct forms of avoidance (i.e., distraction and suppression, procrastination, behavioral avoidance, repression and denial) and psychological features (i.e., distress aversion, distress endurance) (Gámez et al. 2011). More precise measures and research are now needed to understand exactly how EA contributes to anxiety disorders.

The verbal processes involved in EA may be a particularly useful focus for such research efforts. Theoretically, EA is supported by a learning history in which the verbal processes involved in evaluating and problem solving generalize to inner experiences such as emotions (Hayes et al. 2012; Levin et al. 2012). In other words, people begin to judge their emotions in terms of whether they are good or bad in the same way they would with external objects and events. These emotional judgments elicit avoidant behaviors seeking to eliminate “bad” emotions and increase “good” emotions. Although this approach may be effective with external problems (i.e., figure out what is wrong and fix it), it may lead to maladaptive behaviors when applied to emotional experiences that cannot be directly eliminated or changed in the same ways. A classic example of this is thought suppression, in which deliberate attempts to suppress unwanted thoughts actually lead to a “rebound effect” where the thoughts occur more frequently over time (Wenzlaff and Wegner 2000). These emotional judgments may also help explain how EA persists despite negative consequences, as individuals develop maladaptive rules specifying that certain emotions are bad and must be avoided at all costs (i.e., insensitivity to direct contingencies with rule-governed behavior; Hayes 1989). Similarly, emotional judgments can help account for how EA generalizes to new stimuli and contexts without the requisite direct learning histories, as novel external and internal experiences can all become related to the occurrence of potential “bad” emotions to be avoided (Levin et al. 2012).

Although emotional judgments represent a key verbal component of EA, there has been limited research on their role in EA and psychopathology. The nonjudgmental subscale of the Five Facet Mindfulness Questionnaire (FFMQ-NJ; Baer et al. 2006) is the only measure of which we are aware that assesses judgments of inner experiences. Research using the FFMQ-NJ has found it relates to a range of problems including depression, anxiety, eating, and substance use disorders (Desrosier et al. 2013; Lavender et al. 2011; Levin et al. 2014a). However, self-report methods have significant limitations, which has also been an issue for research using the AAQ. Most notably, self-report requires individuals to have the requisite awareness and insight to identify these phenomena, which may not be the case when assessing processes such as EA. Self-report is also susceptible to response biases that can skew results. For example, respondents who are judgmental and avoidant of inner experiences may underreport such struggles as a form of EA itself.

Measures of implicit cognition could help overcome limitations with self-report methods. Implicit cognitions refer to the automatic, immediate cognitions that occur in response to stimuli, which are difficult to control and potentially even occur outside of one’s awareness. These implicit cognitions can be measured with tools such as the Implicit Relational Assessment Procedure (IRAP; Barnes-Holmes et al. 2010). The IRAP, and similar methods such as the Implicit Association Test (IAT; Greenwald et al. 1998), assume that differences in latency on time-pressured responses to stimuli provide information about an individual’s automatic, initial reactions toward those stimuli (Barnes-Holmes et al. 2010). These implicit cognition measures can thus assess biases without relying on conscious awareness and while preventing participants from modulating their responses (McKenna et al. 2007).

The IRAP has been successfully used in several past studies to examine the role of implicit cognition in psychopathology. A meta-analysis of 15 studies indicated the IRAP predicts a range of clinical outcomes (e.g., depression, anxiety, addiction, OCD, eating disorders) with an average correlation coefficient of r = .45 (Vahey et al. 2015). Preliminary research using the IRAP to study facets of EA specifically has also found promising results. For example, one IRAP study tested implicit cognitions related to whether it is better to accept or avoid negative emotions, finding this measure was sensitive to the effects of a mindfulness versus suppression manipulation (Hooper et al. 2010). Of most relevance to the current study, a pilot IRAP study examined a preliminary measure of implicit emotional judgments, with results finding those who are higher in EA are more likely to judge “Hate” as bad and “Love” as good (Levin et al. 2010). However, this study did not find effects with other emotional stimuli (i.e., happy, cheerful, sad, anxious), possibly due to the broad set of emotion words used in the IRAP and lack of specific criterion measures besides the AAQ to examine.

The IRAP provides the methodological advantage of being able to decompose overall implicit biases to examine more specific implicit effects. For example, in addition to testing whether an overall emotional judgment bias contributes to EA (i.e., “that anxiety is bad and not good, and that being calm is good and not bad”), the IRAP can test isolated biases (e.g., just the “anxiety is bad” bias or “calm is good” bias). This feature is critical to testing the role of emotional judgments in EA. The more obvious judgments that may lead to EA and anxiety problems are those in which anxiety is judged as bad, which can be specifically tested in isolation using the IRAP (i.e., only examining effects for Anxiety-Bad trials). However, overly judging other emotions as good may lead to striving for positive emotions and ineffective rules that produce similar EA patterns (e.g., “It’s good to be calm and if I’m not, then I should avoid”). To test this, the IRAP can similarly be used to examine the effects of “calm is good” implicit biases in isolation (i.e., only examining effects for Calm-Good trials). Finally, patterns indicating opposing judgments (“anxiety is good” and “calm is bad”) have additional theoretical implications. For example, it is unclear whether those low in EA might even show opposing emotional judgments, such as a belief that it’s good to be anxious, given anxiety can have useful functions in some contexts/levels. Each of these biases can be tested separately with the IRAP.

Implicit emotional judgments represent the more automatic, spontaneous verbal responses one might immediately make in relation to emotions, which capture a unique stream of verbal behavior that differs from global self-report measures (representing more elaborated and complex cognitive responses). Theoretically, a stronger bias towards immediately responding to anxiety (or predicted anxiety) as bad would lead to a greater likelihood of other experientially avoidant responses. These immediate responses may be particularly relevant in moments where one is experiencing anxiety, serving to transform various experiences (e.g., rapid heartbeat) into more aversive stimuli to be avoided. Thus, implicit emotional judgments may capture a key aspect of EA, which leads to more elaborated cognitions (e.g., rules that bad emotions are dangerous and need to be avoided) and overt behavioral responses (e.g., escaping situations where anxiety arises). This implicit effect on behavior may occur even if subsequent elaborated cognitions oppose it (e.g., “I can persist and I shouldn’t avoid”), particularly in contexts where individuals are unaware or taxed with other demands. Thus, implicit judgments theoretically represent a key facet of the verbal processes that contribute to EA and could lead to anxiety problems.

The current study sought to develop a new emotional judgment IRAP measure (EJ-IRAP) and examine its relationship to EA and social anxiety. The first aim was to examine the validity of the newly developed EJ-IRAP by exploring its relation to relevant constructs including self-report measures of emotional judgment (FFMQ-NJ), EA (MEAQ; AAQ-II), and fear of anxiety (Anxiety Sensitivity Index-3 [ASI-3] Taylor et al. 2007). Given that emotional judgments are theorized to be a key facet of EA, an implicit measure of emotional judgments would be expected to correlate such that both greater “anxiety is bad” and “calm is good” biases would relate to greater self-reported EA, emotional judgments and anxiety sensitivity. If successful, this could lead to a new implicit measure of a specific, key facet of EA, while avoiding limitations with global self-report measures.

The second aim was to examine how this implicit judgment facet of EA relates to outcomes in the domain of social anxiety. Although emotional judgments may apply to a broad range of anxiety disorders and psychological problems more broadly, this study focused on social anxiety, particularly in the context of a public speaking challenge, in order to provide a more precise examination of how implicit emotional judgments impact anxiety and EA. Public speaking is the most commonly reported fear among individuals with a social phobia (Ruscio et al. 2008) and estimated to elicit at least some level of anxiety in the majority (85 %) of the general population (Motley 1995). Well-validated public speaking tasks and measures have been used in psychological research (e.g., England et al. 2012; Hofmann et al. 2009), which allow for assessment of a wide range of domains relevant to implicit emotional judgments, including anxiety, persistence and willingness in the speech, performance, and so on.

For the second aim, the study hypothesized that greater implicit judgments (both “anxiety is bad” and “calm is good”) would relate to greater self-reported social anxiety and more anxious/avoidant responding to the public speaking challenge. Given that implicit judgments may alter how one responds to anxiety in the moment, the study also hypothesized a moderation effect such that greater anxiety during the speech would be more strongly related to avoidance behavior (i.e., quitting early, being unwilling to give another speech) among those with stronger implicit judgments. Lastly, EJ-IRAP scores were hypothesized to provide incremental validity in predicting these outcomes over and above self-report measures of EA.
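
The moderation hypothesis can be written as a regression with a product term. The notation below is an illustrative assumption, not a model specification taken from the study: $Y_i$ stands for an avoidance-related outcome (e.g., speech time or willingness), $\Delta\text{HR}_i$ for the change in heart rate during the speech relative to baseline, and $\text{EJ}_i$ for the EJ-IRAP score. A binary outcome such as willingness would typically call for a logistic link instead of the linear form shown.

```latex
Y_i = \beta_0 + \beta_1\,\Delta\text{HR}_i + \beta_2\,\text{EJ}_i
      + \beta_3\,\bigl(\Delta\text{HR}_i \times \text{EJ}_i\bigr) + \varepsilon_i,
\qquad
\frac{\partial Y_i}{\partial\,\Delta\text{HR}_i} = \beta_1 + \beta_3\,\text{EJ}_i
```

Under this sketch, the hypothesis corresponds to $\beta_3 < 0$: the slope relating heart rate increases to speech time or willingness becomes more negative as implicit judgments strengthen.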

Methods

Participants

The sample consisted of 77 college students, 18 years of age or older, participating for extra credit in a psychology course. Four participants were removed because they were well acquainted with the researcher running the experiment and an additional 12 participants (16 %) did not pass the EJ-IRAP practice trials and so were excluded from analyses (criteria for failing the practice trials are described below). Thus, there was a final sample of 61 participants, with 59 completing all study procedures (two dropped out prior to the public speaking challenge due to medical issues/emergency that were unrelated to participation in the study).

The final sample (n = 61) was 59 % female with a mean age of 21.02 (SD = 5.18, Mode = 18). The vast majority were White (92 %), with 5 % identifying as mixed race, 2 % as Asian, and 2 % as Hispanic. Based on empirically derived cutoff scores from the Liebowitz Social Anxiety Scale (LSAS; Liebowitz 1987), 18 % fell within the non-anxious range, 49 % within nongeneralized social anxiety range (moderate anxiety), and 33 % within more severe, generalized social anxiety (severe anxiety). These rates of social anxiety are high enough to question the validity of the cutoffs in this non-clinical sample, but at least suggest a sample that is likely to show the necessary variance in anxiety/EA during the public speaking challenge to examine relevant effects.

Procedures

The study procedures were completed in a single 1.5-h appointment in the laboratory. After providing informed consent, participants completed the EJ-IRAP measure, followed by baseline self-report measures, then a public speaking challenge, and lastly post-speech measures.

Emotion Judgment IRAP (EJ-IRAP)

Participants began the study by completing the EJ-IRAP task in a small room on a desktop computer using the IRAP program (2012 version). The EJ-IRAP task involved completing a series of testing trials on the computer in which respondents indicated the relationship between pairs of stimuli (a target emotional stimulus and a categorical judgment stimulus) as quickly and accurately as possible. Target stimuli in this study included three emotion words related to anxiety (i.e., nervous, anxious, afraid) and four emotion words related to being calm/non-anxious (i.e., calm, relaxed, comfortable, content). Two categorical stimuli “good” and “bad” were used for positive and negative judgments. These categorical stimuli were selected given positive preliminary findings with implicit judgments using “good” and “bad” (Levin et al. 2010) and because they mirror a related item on the AAQ (i.e., “anxiety is bad”).

Each testing trial presented a randomly selected target and categorical stimulus (e.g., “anxiety” and “bad”), along with response options (“True” or “False”) at the bottom corners of the screen (see Fig. 1). Participants responded by pressing the “d” key to indicate “True” (“anxiety” is “bad”) or the “k” key to indicate “False” (“anxiety” is not “bad”). A correct response cleared the screen for 400 milliseconds (ms) before the next trial began. Incorrect responses resulted in corrective feedback (a red “X” appeared), which remained until a correct response was made. Response latency was recorded as the time between the onset of a trial and a correct response. To help maintain fast responding, a message reading “too slow” appeared if participants took longer than 2000 ms to respond. Although the computer keyboard keys were not marked, the prompts indicating “Press ‘d’ for True” and “Press ‘k’ for False” were presented onscreen for every trial, including practice trials, and use of these response options was covered during the introductory training provided by the experimenter.

Implicit effects were identified by determining whether participants were faster at relating target/categorical stimuli in one way versus another (e.g., are participants faster at responding “true” “anxiety” is “bad” versus “false” “anxiety” is “bad”). These comparisons were made by having participants alternate between two different rules across testing blocks. Participants were instructed in one block of trials to respond under the first set of rules (i.e., as if anxiety words are bad and calm words are good). In the next block of trials participants were instructed to respond under the second set of rules (i.e., as if anxiety words are good and calm words are bad). Testing blocks (each with 48 trials) alternated between these two sets of rules, with corrective feedback provided according to the rules of the block. The response latencies on these two types of trial blocks (anxiety is bad/calm is good vs. anxiety is good/calm is bad) were then compared to determine implicit effects.

Given the complexity of the IRAP, a research assistant first described the task and completed one practice block in front of participants. Participants then completed a series of practice blocks, which used the same categorical and target stimuli as the subsequent testing blocks, but were not included as part of the test data. Practice was used to establish quick and accurate responding under the two sets of rules, a requisite feature for detecting IRAP effects (Barnes-Holmes et al. 2010). Participants had to score 80 % accuracy or better with a median response latency of 2 s or less per block on 2 consecutive blocks to proceed to the testing blocks. Those unable to reach this criterion after 6 practice blocks were excused from the IRAP portion of the study. This procedure has been used successfully in previous IRAP research to ensure participants respond quickly and accurately enough to provide valid test data before proceeding to the actual IRAP test (Barnes-Holmes et al. 2010; Nicholson and Barnes-Holmes 2012).
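
The practice criterion can be sketched in code. This is a minimal illustration rather than the IRAP program's own logic: the function names and data layout are hypothetical, the latency criterion is assumed to mean a median of at most 2 s, and "2 consecutive blocks" is assumed to mean any two adjacent practice blocks.

```python
from statistics import median

ACCURACY_MIN = 0.80       # at least 80 % correct within a block
LATENCY_MAX_MS = 2000     # assumed ceiling for the block's median latency
MAX_PRACTICE_BLOCKS = 6   # participants were excused after six blocks
CONSECUTIVE_NEEDED = 2    # passing blocks required in a row


def block_meets_criteria(correct_flags, latencies_ms):
    """Return True if one practice block is both accurate and fast enough."""
    accuracy = sum(correct_flags) / len(correct_flags)
    return accuracy >= ACCURACY_MIN and median(latencies_ms) <= LATENCY_MAX_MS


def passes_practice(blocks):
    """Check the practice criterion.

    blocks: list of (correct_flags, latencies_ms) tuples in completion order.
    Returns True once two adjacent blocks meet both criteria within the
    first six practice blocks, otherwise False.
    """
    streak = 0
    for correct_flags, latencies_ms in blocks[:MAX_PRACTICE_BLOCKS]:
        if block_meets_criteria(correct_flags, latencies_ms):
            streak += 1
        else:
            streak = 0  # a failed block resets the run of passing blocks
        if streak >= CONSECUTIVE_NEEDED:
            return True
    return False
```

For example, a participant who fails the first block and then passes the next two would proceed to testing, whereas one who passes only non-adjacent blocks across all six would be excluded.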

Following successful completion of the practice blocks, participants completed a series of 6 test blocks (each with 48 trials), alternating between the rules (anxiety is bad/calm is good vs. anxiety is good/calm is bad). These test blocks were nearly identical to the practice blocks except participants no longer received feedback at the end of each block on their accuracy/speed.

Public Speaking Task

After completing the IRAP, participants completed a series of self-report measures assessing social anxiety symptomatology and variables related to EA. A baseline phase was then collected for heart rate. Participants were hooked up to a Bluetooth heart rate monitor watch, Mio Alpha, to obtain a baseline heart rate reading over 3 min while sitting quietly with their eyes closed.

Participants were then told they would give an impromptu 10-min speech about a controversial topic in front of a video camera placed at eye level. In order to further elicit anxiety, the researcher added that the videotape would be reviewed by other researchers who would rate the participants’ speech quality and level of observed/physiological anxiety. Participants were directed to choose one of six controversial topics (i.e., abortion, health care system changes, the death penalty, gun rights, immigration, gay rights) adapted from those used in previous studies (Hofmann et al. 1995). Participants were allowed 3 min to plan their speech while the researcher recorded their heart rate again.

Participants then stood in front of a camera and gave their speech until the 10-min time limit had been reached or until choosing to quit. The instructions were designed to provide a clear expectation that participants persist for the entire 10 min: “I would like for you to speak for as long as you possibly can. If you make it to the 10-min mark, I will tell you that you can stop. If you decide to stop the speech prematurely, please say ‘I want to stop’ or hold up the ‘STOP’ card that I handed you earlier. We ask that you try to continue the speech if you can, even if you aren’t sure what to say. However, you can stop if needed using the card if you are experiencing significant anxiety and can’t go on.” These instructions are similar to those used in other studies that examined length of speech time as a measure of behavioral avoidance (e.g., England et al. 2012) and are consistent with a high demand characteristic condition to support persisting in the speech (Matias and Turner 1986). Heart rate was continuously measured during the speech phase. The researcher remained in the room during the speech, but outside of direct view.

Once the speech concluded, participants completed a measure of perceived performance. The recovery phase for heart rate was collected while sitting quietly with their eyes closed. Participants were then asked if they would be willing to return the following week to complete a second speech for the study, providing a measure of self-reported willingness. The exact script for this willingness question was “Our study is also interested in looking at people’s experiences with public speaking over time. We’d like to invite you to return sometime in the next week or two to do a second recorded speech. Would you be willing to come back to the lab and complete a second speech?” We did not specifically reference whether returning would be compensated with additional research credits (for course credit/extra credit), but it is likely that students assumed such given typical expectations for completing laboratory research tasks in this context. Lastly, participants were debriefed and informed they would not return for another speech.

EA-Related Measures

Five Facet Mindfulness Questionnaire – Nonjudgmental Subscale (FFMQ-NJ; Baer et al. 2006)

The 8-item FFMQ-NJ subscale was used to assess self-reported tendency to judge one’s thoughts and feelings. Although the FFMQ-NJ assesses judgments more broadly than the EJ-IRAP’s focus on anxiety, this represents a highly related construct that would be expected to correlate with implicit emotional judgments. Items are rated on a 5-point scale ranging from 1 “never or very rarely true” to 5 “very often or always true.” The FFMQ-NJ has been found to be reliable and valid in past studies (Baer et al. 2006) with an internal consistency of α = .92 in the current study.

Acceptance and Action Questionnaire-II (AAQ-II; Bond et al. 2011)

The 7-item AAQ-II was included as a self-report measure of global EA and psychological inflexibility more broadly. Items are rated on a 7-point scale ranging from 1 “never true” to 7 “always true.” The AAQ-II has been found to have adequate reliability and validity in past studies with college students (Bond et al. 2011) with an internal consistency of α = .93 in the current study. The AAQ-II and its variations represent the most broadly studied measure of EA to date (Hooper and Larsson 2015), although measures have since been developed that assess EA more specifically and precisely (e.g., MEAQ).

Multidimensional Experiential Avoidance Questionnaire (MEAQ; Gámez et al. 2011)

The 62-item MEAQ was also used to assess EA. This measure was designed to comprehensively assess the specific construct of EA and includes subscales measuring aspects of EA including behavioral avoidance, distress aversion, procrastination, distraction and suppression, repression and denial, and distress endurance (although only the total score was used given the aims of this study and number of variables being analyzed). Items are rated on a 6-point scale ranging from 1 “strongly disagree” to 6 “strongly agree.” The MEAQ has been found to be reliable and valid in past studies (Gámez et al. 2011). The internal consistency for the MEAQ total score was adequate in the current study (α = .89).

Anxiety Sensitivity Index-3 (ASI-3; Taylor et al. 2007)

The ASI was used to measure fear of anxiety symptoms. This represents a construct highly related to EA, and emotional judgments more specifically, within the domain of anxiety disorders. The 18-item ASI includes subscales assessing physical, cognitive, and social fears related to anxiety symptoms, although again only the total score was used given the aims and variables included in the study. Items are rated on a 5-point scale ranging from 0 “very little” to 4 “very much.” The ASI has been found to have adequate reliability and validity in past studies (Taylor et al. 2007). The internal consistency for the ASI total score was adequate in the current study (α = .86).

Anxiety Measures

Liebowitz Social Anxiety Scale (LSAS; Liebowitz 1987)

The 24-item self-report version of the LSAS was used to assess social anxiety symptomatology. The LSAS assesses fear and avoidance of various social situations including performance situations. Each social situation is rated on 4-point scales for degree of fear (0 “none” to 3 “severe”) and degree of avoidance (0 “never” to 3 “usually”). Clinical cutoff scores have been identified with the LSAS for classifying individuals with social anxiety disorder (Rytwinski et al. 2009). The internal consistency for the LSAS was adequate in the current study (α = .95).

Social Performance Scale-Self Report Version (SPS-SR; Rapee and Lim 1992)

The SPS was provided after the public speaking challenge to assess perceived speech performance. The scale includes 17 items assessing features of performance, including whether the content was understandable, eye contact, fidgeting and voice clarity. Each item is rated on a 5-point scale from 0 “not at all” to 4 “very much.” The SPS has been found to be a reliable and valid measure in past research (Rapee and Lim 1992) with an internal consistency of α = .92 in the current study.

Behavioral Avoidance

Duration of the speech (in seconds) during the public speaking challenge was used as a behavioral measure of avoidance. This variable provides a maximum score of 600 s, indicating that a participant spoke for the entire time. Theoretically, ending the task prior to 600 s can be conceptualized as an attempt to escape anxiety elicited through the speech. To support this, a clear expectation was placed for participants to speak as long as possible, but with an option to quit if they became too uncomfortable. Although quitting early might also be due to simply not having more to say, attempts were made to reduce the alternate source of quitting by explicitly requesting participants continue the speech even if they do not know what to say. Speech duration has been used as a behavioral avoidance measure in previous research using similar procedures, although it is worth noting that there are mixed findings with some studies showing it is sensitive to intervention effects (e.g., Seim et al. 2010) and other intervention studies failing to show any changes (e.g., England et al. 2012) or expected relations to anxiety measures (Matias and Turner 1986).

Willingness to Repeat Speech

Participants were asked at the end of the study whether they would be willing to return and complete another speech, as a measure of willingness. This was presented as a genuine request to participate further in the study, although participants were informed at the end of the study that there would not be a second appointment. Similar measures of willingness have been used in past research testing acceptance-based interventions (e.g., Levitt et al. 2004).

Heart Rate (HR)

Heart rate data were collected as a proxy for physiological indicators of anxiety at several points: after completing the baseline self-report measures, while preparing for the speech, during the speech, and recovering after the speech. Data were collected through the Mio Alpha smartwatch, which was worn by the participant in a manner similar to a standard wristwatch. The Mio Alpha smartwatch consists of an optical heart rate module (OHRM) that utilizes photoplethysmography (PPG) to measure continuous heart rate alongside an accelerometer unit to measure and correct for movement artifacts (Valenti and Westerterp 2013). Accelerometer data assessing the user’s movement are entered into an algorithm that compensates for movement artifacts in the optical signal. A quality index is calculated based on this information for each measurement point at each second (Valenti and Westerterp 2013). Mio Alpha utilizes proprietary smoothing algorithms to identify poor quality data points (e.g., due to movement artifacts) as well as outliers (heart rate ± 1/3 of previous heart rate), which are automatically detected and replaced by the average of the previous two heartbeats (Teohari et al. 2014). The raw data provided estimated beats per minute each second, which were subsequently averaged for each participant by experiment phase (i.e., average beats per minute at baseline, preparing for speech, during speech, recovering after speech). Change scores were calculated comparing average beats per minute in each phase relative to baseline (e.g., increased heart rate during the speech relative to baseline).
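
The averaging and change-score steps can be illustrated with a short sketch. The function names, the phase labels, and the `(phase, bpm)` data layout are hypothetical choices made for this example, and the device's proprietary smoothing and outlier replacement are not reproduced.

```python
from statistics import mean


def phase_means(samples):
    """Average per-second heart rate readings within each experiment phase.

    samples: iterable of (phase, bpm) pairs, one reading per second.
    Returns a dict mapping each phase label to its mean beats per minute.
    """
    by_phase = {}
    for phase, bpm in samples:
        by_phase.setdefault(phase, []).append(bpm)
    return {phase: mean(values) for phase, values in by_phase.items()}


def change_from_baseline(means, baseline="baseline"):
    """Subtract the baseline mean from the mean of every other phase."""
    base = means[baseline]
    return {
        phase: value - base
        for phase, value in means.items()
        if phase != baseline
    }
```

A positive change score for the speech phase would indicate a higher average heart rate during the speech than at baseline.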

The Mio Alpha’s PPG technology was previously validated against a standard electrocardiogram (ECG) (Maeda et al. 2011a) and has displayed more accurate measurement of heart rate in conjunction with an accelerometer compared to an infrared heart rate system when compensating for movement artifacts (Maeda et al. 2011b). Furthermore, the particular OHRM technology within the Mio Alpha smartwatch was validated against a traditional chest-strap heart rate monitor and ECG device, in which the Mio Alpha displayed overall high performance with non-significant error, as well as higher accuracy (−0.1 bpm vs 0.3 bpm, p < 0.001), but lower precision (3.0 bpm vs 2.0 bpm, p < 0.001), compared to the chest-strap device (Valenti and Westerterp 2013). However, most of the issues associated with precision occurred when users were running at high speed (12.43 mph) (Valenti and Westerterp 2013). The current study required that participants stand while engaging in the public speaking task, thus protecting against most precision issues associated with movement artifacts.

A self-report measure of anxiety was also collected (State-Trait Anxiety Inventory-State [STAI]; Spielberger 1972), but was excluded from analyses because the critical speech anxiety data were not collected until after the speech had ended (i.e., no self-reported anxiety data were collected during the speech). This was done to avoid interrupting the speech, but led to a decrease in anxiety at this time point (pre-speech STAI M = 51.08, post-speech STAI M = 43.22), suggesting the measure was more likely capturing relief from finishing the speech challenge.

Data Analysis Plan

EJ-IRAP scores were calculated by comparing response latencies between “anxiety is bad and calm is good” test blocks and “anxiety is good and calm is bad” blocks. The D-IRAP scoring method was used, which is an adaptation of Cohen’s d that similarly compares the difference in average response latencies between test blocks, divided by the pooled standard deviation of those latencies (Hussey et al. 2015). A total EJ-IRAP score provides an overall summary of implicit effects across all trial types, with higher scores indicating an overall bias for “anxiety is bad/not good” and “calm is good/not bad.” However, a key benefit of using the IRAP is that it allows one to parse these overall implicit effects by trial type, providing a more precise examination of implicit effects for specific sets of stimuli: anxiety-bad, anxiety-good, calm-bad, calm-good. These trial type scores are reported such that higher scores indicate affirmative emotional biases (i.e., that the emotion is related to the judgment): anxiety is bad, anxiety is good, calm is good, and calm is bad. For example, positive (as opposed to negative) EJ-IRAP scores on the anxiety-bad trial type indicate participants provided faster responses when instructed to answer with “True” to “Anxiety” and “Bad” than when responding “False” to “Anxiety” and “Bad.” Similarly, positive EJ-IRAP scores on the calm-bad trial type indicate faster responding when instructed to answer with “True” to “Calm” and “Bad” than when responding “False” to “Calm” and “Bad.” Note that this subscale scoring, adopted in order to more clearly interpret reported effects, differs from the calculated total score (“anxiety is bad and not good, calm is good and not bad”), in which subscales were reversed for anxiety-good and calm-bad. Analyses were conducted with both the total EJ-IRAP score as well as each trial type separately given the theoretical importance of both positive and negative emotional judgments in EA and anxiety.
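The core of the scoring logic described above, a latency difference between block types scaled by a standard deviation, can be sketched as follows. This is a simplified illustration and not the full D-IRAP algorithm: the function name and latencies are hypothetical, the "pooled" standard deviation is assumed here to be the standard deviation of all latencies from both block types combined, and any further preprocessing steps of the published algorithm are omitted.

```python
from statistics import mean, stdev


def d_score(consistent_ms, inconsistent_ms):
    """Latency difference between block types, scaled by the SD of all latencies.

    Positive values mean faster responding in the "consistent" blocks
    (here taken to be "anxiety is bad and calm is good").
    Assumption: the scaling SD is computed over both block types combined.
    """
    combined_sd = stdev(list(consistent_ms) + list(inconsistent_ms))
    return (mean(inconsistent_ms) - mean(consistent_ms)) / combined_sd


# Made-up latencies in milliseconds for one trial type (illustrative only).
consistent = [1200, 1300, 1250, 1350]
inconsistent = [1500, 1600, 1450, 1550]
score = d_score(consistent, inconsistent)
```

Swapping the two arguments flips the sign of the score, which mirrors how reversing a trial type changes the direction of the reported bias.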

Preliminary analyses examined the reliability and descriptive statistics for the EJ-IRAP. Split-half reliability was examined by running correlations between even and odd numbered trials. Descriptive statistics for speech time and changes in heart rate from the speech were also examined to determine whether the task elicited anxiety and was challenging to persist in. One-sample t-tests were conducted for each EJ-IRAP trial type to determine whether there were significant overall implicit biases across all participants (e.g., whether differences in average latency between responding “true, anxiety is bad” and “false, anxiety is bad” were significantly different from zero).
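As a rough sketch of the two preliminary checks described above, the following computes a Pearson correlation between scores from odd and even trials and a one-sample t statistic against zero. The function names and data are hypothetical; p-values are not computed, and no reliability correction is applied since none is described here.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def one_sample_t(scores, mu=0.0):
    """t statistic for the mean of `scores` against `mu` (df = n - 1)."""
    n = len(scores)
    return (mean(scores) - mu) / (stdev(scores) / sqrt(n))


# Made-up per-participant scores from odd and even trials (illustrative only).
odd_half = [0.10, 0.35, -0.05, 0.50, 0.20]
even_half = [0.15, 0.25, 0.00, 0.40, 0.30]
split_half_r = pearson_r(odd_half, even_half)

# Made-up trial-type scores tested against zero (illustrative only).
t_stat = one_sample_t([0.2, 0.4, 0.1, 0.5, 0.3])
```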

The final step for the first aim of this study was to examine the convergent validity of the EJ-IRAP with self-report measures of relevant constructs. A series of correlational analyses tested whether EJ-IRAP scores were correlated with self-reported emotional judgments (FFMQ-NJ), EA (AAQ-II, MEAQ), and anxiety sensitivity (ASI).

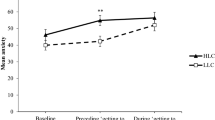

The second aim of the study was to examine the relationship of the EJ-IRAP to social anxiety. Correlational analyses examined whether EJ-IRAP scores related to the LSAS as a global self-report measure of social anxiety. MANOVA compared EJ-IRAP scores between participants with severe social anxiety (LSAS ≥60, n = 20) relative to those with low to moderate social anxiety (LSAS <60, n = 41) using validated clinical cutoff scores (Rytwinski et al. 2009). Low and moderate social anxiety groups were combined given the lack of participants indicating low social anxiety (n = 11) and the similarity in EJ-IRAP scores between these groups. One-sample t-tests were conducted within each group on EJ-IRAP scores (relative to zero) to further explore patterns of implicit biases between those with and without severe social anxiety.

Regression analyses examined whether EJ-IRAP scores predicted social anxiety over and above global self-report measures of EA, emotional judgments, and anxiety sensitivity. A hierarchical regression approach was taken with each self-report measure entered as a predictor in the first step, followed by adding the total combined EJ-IRAP scores in a second step. Each self-report measure was tested in a separate model to reduce the effects of collinearity due to the high correlations between these related measures. Analyses were limited to the LSAS total score due to the lack of relationship between self-reported EA measures and other anxiety outcomes.
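The incremental question in a two-step hierarchical regression is how much R² changes when a second predictor is added. For the special case of one predictor per step, both R² values can be obtained from the three pairwise correlations, as in the sketch below. The variable names and data are hypothetical stand-ins (e.g., `self_report` for a first-step measure and `irap` for the second-step score); significance testing of the change is not included.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def incremental_r2(y, x1, x2):
    """Return (R2 step 1, R2 step 2, change) for y ~ x1, then y ~ x1 + x2."""
    r_y1 = pearson_r(y, x1)
    r_y2 = pearson_r(y, x2)
    r_12 = pearson_r(x1, x2)
    r2_step1 = r_y1 ** 2
    # Two-predictor R^2 expressed through the pairwise correlations.
    r2_step2 = (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)
    return r2_step1, r2_step2, r2_step2 - r2_step1


# Made-up scores (illustrative only).
outcome = [40, 55, 62, 70, 48, 81]
self_report = [20, 25, 30, 33, 22, 38]
irap = [-0.1, 0.2, 0.0, 0.3, 0.1, 0.4]
step1, step2, delta = incremental_r2(outcome, self_report, irap)
```

Running each self-report measure in its own model, as described above, corresponds to calling `incremental_r2` once per first-step measure.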

Analyses were then conducted to examine the relationship of EJ-IRAP scores to the public speaking challenge. Correlational analyses tested the relationship between EJ-IRAP scores and self-rated speech performance, time in speech, and heart rate in preparation for, during, and recovering from the speech. A between group analysis tested for differences in EJ-IRAP scores between those who were willing (n = 50) or unwilling to return to complete a second speech (n = 8). Given differences in sample size between groups, a Mann–Whitney U test was used as a nonparametric test to compare these groups on EJ-IRAP scores.
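A minimal sketch of the Mann–Whitney U statistic and its normal approximation is given below. It is a generic illustration rather than the SPSS routine used in the study; the function names are hypothetical, and the z approximation omits any tie correction.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """U for group_a: its rank sum minus the smallest rank sum it could have."""
    n_a = len(group_a)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    return rank_sum_a - n_a * (n_a + 1) / 2


def u_to_z(u, n_a, n_b):
    """Normal approximation to U (no tie correction)."""
    mu = n_a * n_b / 2
    sigma = sqrt(n_a * n_b * (n_a + n_b + 1) / 12)
    return (u - mu) / sigma
```

With group sizes of 50 and 8, `u_to_z(109, 50, 8)` has an absolute value of about 2.05 and `u_to_z(105, 50, 8)` of about 2.14, in line with the z values reported in the Results.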

Finally, moderation analyses were conducted to examine whether EJ-IRAP scores moderated the relationship of heart rate during the speech to speech outcomes. Linear regression was used for testing moderation with continuous outcomes (speech time and performance), while logistic regression was used for testing the dichotomous outcome of willingness to give another speech. The MODPROBE macro (Hayes and Matthes 2009) was used in SPSS to examine significant moderation effects at different levels of EJ-IRAP scores (M, 1 SD above M, 1 SD below M).
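Probing an interaction at the mean and at 1 SD above and below the mean amounts to evaluating a conditional slope. In a model of the form outcome = b0 + b1·(heart rate) + b2·(moderator) + b3·(heart rate × moderator), the effect of heart rate at a given moderator value w is b1 + b3·w. The sketch below shows only this arithmetic; it is not a reimplementation of MODPROBE, the coefficients are hypothetical rather than the study's estimates, and standard errors are not computed.

```python
def conditional_slope(b_focal, b_interaction, moderator_value):
    """Effect of the focal predictor at a given moderator value: b1 + b3 * w."""
    return b_focal + b_interaction * moderator_value


def probe_levels(moderator_mean, moderator_sd):
    """Moderator values at 1 SD below the mean, the mean, and 1 SD above."""
    return {
        "low": moderator_mean - moderator_sd,
        "mean": moderator_mean,
        "high": moderator_mean + moderator_sd,
    }


# Hypothetical coefficients and moderator distribution (illustrative only).
b_heart_rate = 1.0
b_interaction = 20.0
levels = probe_levels(moderator_mean=0.0, moderator_sd=0.35)
slopes = {
    label: conditional_slope(b_heart_rate, b_interaction, w)
    for label, w in levels.items()
}
```

A pattern in which the slope is clearly nonzero at one level of the moderator and near zero at another is what the conditional effects in a moderation analysis are meant to reveal.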

Results

Preliminary analyses

A total of 12 participants (16 %) did not pass the practice trial criteria (80 % accuracy and ≤2000 ms median latency) and were excluded from all analyses. The average error rate for participants in EJ-IRAP test trials was 11 % (SD = 5 %, range = 3–22 %). In terms of split-half reliability, even and odd trials for overall IRAP effects were significantly correlated, r(61) = .46, p < .001. With anxiety stimuli, the split-half reliability score was similar, r(61) = .46, p < .001, but it was somewhat lower for calm stimuli, r(61) = .29, p = .02.

The mean EJ-IRAP scores by trial type were: calm is good (M = .38, SD = .32), calm is bad (M = .09, SD = .33), anxiety is good (M = .00, SD = .41), anxiety is bad (M = −.02, SD = .35), and total EJ-IRAP (M = .09, SD = .22). One-sample t-tests indicated significant (relative to zero) implicit biases for “calm is good”, t(60) = 9.13, p < .001, but also, surprisingly, for “calm is bad”, t(60) = 2.04, p = .046. This suggests that, on average, participants were faster at responding “true” than “false” to both calm-good and calm-bad trials. There was no overall implicit bias across participants for anxiety-bad or anxiety-good trials. However, the total EJ-IRAP score was significant, suggesting an overall implicit bias for “anxiety is bad and not good, calm is good and not bad.” Taken in combination with the standard deviations, these results suggest likely heterogeneity in the sample with regard to implicit biases, which might be further elucidated in examining the relation to EA and anxiety. It is worth noting that a common implicit bias across all participants was not hypothesized per se; rather, the hypotheses concerned how variability in EJ-IRAP scores predicts EA and anxiety symptomatology.

Preliminary analyses indicated the public speaking challenge was effective in inducing anxiety and avoidant behaviors. The average speaking time was 6.33 min (SD = 3.32) with only 34 % completing the full 10-min speech. However, the majority of participants (86 %) reported being willing to return to complete a second speech. Almost all participants (97 %) demonstrated an increase in heart rate from baseline to preparation (M = 10.79, SD = 9.07) and from baseline to during the speech (95 %, M = 17.63, SD = 11.16).

Aim 1: Do Implicit Emotional Judgments Demonstrate Convergent Validity?

Anxiety-bad EJ-IRAP scores were significantly and positively correlated with every measure, such that greater “anxiety is bad” bias was related to greater EA, emotional judgments and anxiety sensitivity (see Table 1). Negative correlations were also found between calm-bad trial scores and self-report measures, such that a greater bias for “calm is bad” was related to lower EA, anxiety sensitivity, and emotional judgments. A more mixed pattern was found for the total EJ-IRAP score, likely due to the lack of relation between anxiety-good and calm-good scores with self-report measures.

Thus, although the overall sample demonstrated a significant “calm is good” bias, this was unrelated to EA and similar constructs. However, the surprising “calm is bad” bias in the overall sample was found to correlate with lower EA. Similarly, although there was no “anxiety is bad” bias at a group level, variation in this implicit bias was consistently correlated with greater EA.

Aim 2: Do Implicit Emotional Judgments Relate to Social Anxiety?

A series of correlations indicated that social anxiety symptoms correlated with anxiety-bad, calm-bad and total EJ-IRAP scores, such that a greater “anxiety is bad” bias was related to greater social anxiety while a greater “calm is bad” bias was related to lower social anxiety (see Table 1). The LSAS did not correlate with anxiety-good or calm-good EJ-IRAP scores.

MANOVA compared EJ-IRAP scores between participants falling within the more severe, generalized social anxiety range (LSAS ≥60, n = 20) versus those in the nonanxious and moderately socially anxious ranges (LSAS <60, n = 41). A marginally significant effect was found for the overall model, F(5, 55) = 2.38, Wilks’ Λ = .82, p = .05. Post hoc analyses indicated significant differences for anxiety-bad, calm-bad and total EJ-IRAP scores (see Table 2). One-sample t-tests within each subgroup indicated that only those with low to moderate social anxiety demonstrated a significant “calm is bad” bias and only those with severe social anxiety demonstrated a significant “anxiety is bad” bias. These results again suggest that “anxiety is bad” biases might only occur among a subgroup of individuals struggling with anxiety and EA, while the opposing “calm is bad” bias might serve a protective function against anxiety and EA. It is also worth noting that those with low to moderate social anxiety demonstrated both a significant “calm is bad” and “calm is good” bias.

Do Implicit Emotional Judgments Predict Social Anxiety Over and Above Self-Report Measures?

A series of regression analyses were conducted to examine whether total EJ-IRAP scores predict social anxiety over and above EA self-report measures (see Table 3). Hierarchical regressions indicated that total EJ-IRAP scores significantly predicted LSAS total scores even after controlling for the AAQ-II and for the MEAQ. The EJ-IRAP score was only a statistical trend when predicting over and above the ASI, a strong predictor of anxiety (Taylor et al. 2007). The FFMQ-NJ was not a significant predictor of social anxiety symptoms.

Do Implicit Emotional Judgments Relate to Public Speaking Outcomes?

Anxiety-bad, calm-good and total EJ-IRAP scores were correlated with self-rated performance in the speech (see Table 1), such that greater “anxiety is bad” and “calm is good” biases were related to worse performance ratings. Only calm-good was correlated with time in speech, such that greater “calm is good” biases were related to ending the speech sooner. EJ-IRAP scores were not correlated with any changes in heart rate during speech preparation, speech, or recovery following speech phases (p > .10).

Mann–Whitney U tests compared EJ-IRAP scores between participants who were willing to complete another speech (n = 50) and those who were not willing (n = 8). Significant differences were found for anxiety-good (U = 109.00, z = 2.05, p = .04, Willing M = .06, Non-Willing M = −.25) and calm-bad EJ-IRAP scores (U = 105.00, z = 2.14, p = .03, Willing M = .14, Non-Willing M = −.21). Those who were unwilling had an “anxiety is not good and calm is not bad” bias, while those who were willing showed a “calm is bad” bias. There were no differences between groups on anxiety-bad, calm-good or total EJ-IRAP scores.

Do Implicit Emotional Judgments Interact with Increases in Heart Rate?

Moderation analyses tested whether EJ-IRAP scores moderated the relationship between changes in heart rate (from baseline to during the speech) and speaking outcomes (speech time, willingness, performance). There was a significant interaction between heart rate and anxiety-bad scores in predicting speech time, β = 20.62, t(54) = 2.24, p = .03. Conditional effects indicated heart rate was only related to speech time among those high (>1 SD above M) on EJ-IRAP scores, β = 8.24, t = 2.15, p = .04, such that greater increases in heart rate were related to less time in the speech only among those with a strong “anxiety is bad” bias (see Fig. 2). The relationship of heart rate and speech time was near zero among those with average EJ-IRAP scores (no “anxiety is bad” bias), β = .79, t = .34, p = .73, and was nonsignificant among those with low (<1 SD below M) EJ-IRAP scores (i.e., an opposite “anxiety is not bad” bias), β = −6.65, t = 1.56, p = .13. Statistical trends in predicting speech time were also found for interactions between heart rate and anxiety-good, β = 10.81, t(54) = 1.94, p = .06, and total EJ-IRAP scores, β = 16.79, t(54) = 1.69, p = .098, which showed a similar pattern with conditional effects moderated by “anxiety is good” and an overall bias.

Fig. 2 Graph of Anxiety-Bad EJ-IRAP scores moderating the relationship between heart rate and speaking time. Relationships are plotted for high EJ-IRAP scores (1 SD above mean representing “anxiety is bad” implicit bias), mean EJ-IRAP (near zero and representing no bias), and low EJ-IRAP scores (1 SD below mean representing an opposite “anxiety is not bad” bias). Heart rate is calculated as the average increase in beats per minute during speech relative to baseline.

A statistical trend was found for an interaction between heart rate and anxiety-bad scores in predicting speech willingness using logistic regression, β = .28, z = 1.72, p = .06. The interaction was such that increasing heart rate during the speech was related to less willingness to give another speech only among those with a strong “anxiety is bad” bias, β = .12, z = 1.90, p = .06, but not among those with average scores (i.e., no bias), β = .02, z = .54, p = .59, or low scores (i.e., “anxiety is not bad” bias), β = −.08, z = 1.08, p = .28. A statistical trend in predicting willingness was also found with an interaction between heart rate and calm-good scores, β = .25, z = 1.66, p = .097, which showed a similar pattern with conditional effects moderated by “calm is good” bias. There were no significant interactions in predicting speech performance (p > .10).

Discussion

The current study sought to 1) validate a new measure of implicit emotional judgments and 2) examine its relation to social anxiety. Results for the first aim generally supported the reliability and validity of the EJ-IRAP. Convergent validity was found for EJ-IRAP scores in relation to self-reported EA, emotional judgments and anxiety sensitivity. Results were generally consistent with predictions for the second aim, indicating implicit emotional judgments predict social anxiety, even after controlling for self-reported EA, and response to a public speaking challenge (i.e., performance, willingness). Although some results were only statistical trends, moderation effects suggested that implicit emotional judgments interacted with increases in heart rate during the speech in predicting speech time and willingness to give another speech. Results differed by trial type, with greater “anxiety is bad” implicit biases generally predicting greater EA and social anxiety, while greater “calm is bad” biases predicted lower EA and social anxiety. There were few significant effects from implicit good judgments of emotions.

These results suggest the EJ-IRAP is a valid and reliable measure of implicit emotional judgments in the context of anxiety. The measure demonstrated adequate split-half reliability and most participants were able to meet the accuracy/speed criteria for practice blocks. Results with EJ-IRAP scores, particularly anxiety-bad and calm-bad trials, suggested convergent validity with measures of EA, concurrent validity with measures of social anxiety, known groups validity in predicting social anxiety group status, predictive validity with responses to a public speaking challenge, and incremental validity in predicting outcomes above and beyond self-reported EA.

The results supported theoretical predictions that implicit emotional judgments contribute to social anxiety and responses to a public speaking task. As would be expected, EJ-IRAP scores were related to severe social anxiety and poorer self-rated performance in the speech. Theoretically, a greater tendency to judge one’s emotions as good and bad would increase EA behaviors that exacerbate social anxiety and behavioral avoidance. Surprisingly, only one of the two behavioral measures of EA (willingness to complete a second speech) was consistently related to EJ-IRAP scores. Although speech time has been used as a behavioral measure of avoidance in past studies, research has found this to be an inconsistent measure in detecting treatment effects (e.g., England et al. 2012; Seim et al. 2010) and correlating with anxiety (Matias and Turner 1986). This may be due in part to variability in what such persistence or lack thereof represents in a lab-based setting, which is likely affected by within participant variables (e.g., level of anxiety) and experiment-wide variables (e.g., level of demand characteristics). For example, we attempted to provide high demand characteristics for persisting, while allowing the option to quit. However, previous research suggests such high demand characteristics may actually alter the impact of anxiety on persistence, such that individuals persist despite high levels of anxiety that might typically lead to escape behavior (Matias and Turner 1986). In either case, the moderation analyses suggest that implicit emotional judgments impact behavioral avoidance in the most relevant context in which one is experiencing high levels of anxiety.

A key advantage in using the IRAP is the ease this method provides in examining more specific trial type implicit effects. The total EJ-IRAP score was generally related to relevant outcomes, suggesting an overall implicit judgment of “anxiety is bad and calm is good” relates to EA and social anxiety. However, when examining specific trial type effects it became clear that these relations were primarily carried by the role of “bad” judgments, with very few significant relations found with “good” implicit biases.

“Anxiety is bad” biases were most consistently related to greater EA and anxiety. This is consistent with theoretical predictions, in which the tendency to judge anxiety as bad contributes to further avoidant behaviors that exacerbate anxiety symptomatology (Levin et al. 2012). Part of why the term EA is used rather than the traditional escape/avoidance terms for such negatively reinforced behavior is the theorized role of verbal behavior. As a form of rule-governed behavior (Hayes 1989), individuals may continue to engage in EA based on emotional judgments, while being insensitive to direct contingencies that might otherwise extinguish these behaviors. These emotional judgments may also help expand EA by eliciting avoidance behaviors with stimuli that have never been directly experienced as aversive through derived relational responding (Hayes et al. 2001). Future research should be conducted to test these predictions regarding emotional judgments supporting persistence in EA and generalization to new behaviors/contexts.

Contrary to predictions, implicit positive judgments were generally unrelated to outcomes, despite the presence of these biases in the sample. Instead, it appeared that those who struggle less with anxiety and EA actually judge calm emotions as bad in addition to being good. For example, both low and high socially anxious participants had a significant “calm is good” bias, but those low in social anxiety also had a contrasting “calm is bad” bias at the same time. The IRAP allows for the detection of such effects as biases in each trial type can be calculated separately. This pattern suggests that although people generally have an implicit bias towards calm being good, what is more important is whether they are flexible such that they also judge being calm as bad (i.e., “being calm is both good and bad”). This more flexible way of relating to emotions is largely consistent with acceptance-based therapies, which seek to reduce rigid and unhelpful functions of cognition, while enhancing contextually sensitive responding (i.e., being calm might have varying degrees of both good and bad functions depending on context).

The use of an implicit measure of emotional judgments helps to overcome the limitations found in explicit self-report measures of EA (i.e., response bias, limitations with insight). Typically EA research has used explicit self-report measures that assess elaborated cognitions, which involve more complex, extended verbal reflections influenced by factors such as response biases (Barnes-Holmes et al. 2010). In contrast, implicit cognitions capture the more immediate, spontaneous and automatic verbal responses that occur right after encountering stimuli such as emotions (Barnes-Holmes et al. 2010; De Houwer and Moors 2010). For example, if someone began to feel anxious while giving a speech, the immediate thought might be “anxiety is bad” which could elicit EA. Subsequent, elaborated cognitions could further support EA (e.g., “I shouldn’t feel this way. It really is bad. I have to get rid of it.”) or not (e.g., “I’m thinking anxiety is bad, but it will pass eventually and I can continue to speak.”). Failing to assess implicit cognitions may leave out a key aspect of this verbal phenomenon, the initial, automatic verbal response, which might not be reported after more deliberation in explicit self-report. This study suggests these more immediate and automatic cognitions can in fact play a key role in EA and symptomatology above and beyond self-report EA measures. In some cases these implicit cognitions even predicted speaking outcomes that self-report EA measures failed to predict at all.

The moderation effects between implicit emotional judgments and heart rate provide a key example of why these automatic, immediate verbal responses are important for understanding EA. The results suggest that those who have a high propensity to implicitly judge emotions, particularly anxiety as bad, are more likely to stop speaking and be unwilling to complete another speech in response to physiological anxiety. In other words, these automatic judgments affect how individuals respond to anxious sensations in the moment.

How these implicit cognitions are conceptualized and addressed differs from explicit self-report in important ways that inform EA theory. First, implicit cognitions can affect behavior even when one is unaware of them or when they conflict with more elaborated cognitions and values (Greenwald et al. 2009). Second, implicit cognitions are defined in part by being difficult to modulate in assessment paradigms, suggesting this is a set of verbal processes that are difficult to directly control. From an EA perspective, deliberately trying to suppress or alter implicit judgments may simply elicit further judgments of judgments (e.g., “anxiety is bad”, “it’s bad to think that”) and ineffective EA strategies (e.g., thought suppression). The combination of being relatively automatic, difficult to control, and outside of one’s awareness, while still influencing behavior, suggests the potential benefits of an acceptance-based approach. For example, research on decoupling effects (Levin et al. 2015) suggests that acceptance and mindfulness can change the function of implicit cognitions so they no longer influence behavior (see Ostafin et al. 2012 for an example). Increasing mindful awareness of cognitive biases, from a defused and accepting perspective, could allow one to choose how to effectively respond in a given context and decouple the direct effect of implicit judgments on EA.

It is worth noting, however, that cognitive therapy (CT) models similarly theorize the role of implicit cognitions in maladaptive behaviors (Teachman and Woody 2004). A CT perspective, though, might conceptualize the causal connection between cognition and behavior as more stable, and instead focus on directly altering implicit cognitions to reduce maladaptive behaviors (Teachman and Woody 2004). Although implicit cognitions are automatic and difficult to control, research indicates implicit biases can be changed through cognitive behavioral methods (e.g., Teachman and Woody 2003). Future research might use the EJ-IRAP to compare the mechanisms of change between ACT and CT with regard to changing versus decoupling implicit judgments.

This study is an attempt to use multimodal and laboratory methods to conduct more refined research on the theoretical predictions of EA. A variety of methods are likely needed to test these more specific aspects of the theory. For example, intensive longitudinal research has begun to test whether psychopathology is related to more rigid, de-contextualized use of EA (e.g., Shahar and Herr 2011) and what contextual factors moderate the impact of EA on anxiety symptoms (e.g., Kashdan et al. 2014). Laboratory-based research has shown how EA relates to broader difficulties with persisting in distressing tasks (e.g., Zettle et al. 2012). Implicit research has also begun to test other facets of EA, such as beliefs about avoidant versus accepting strategies with negative emotions (Hooper et al. 2010) and being willing versus trying to get rid of positive and negative emotions (Drake et al. 2016). More research testing specific predictions and using sophisticated measurement methods is needed with EA.

There were some notable limitations with this study. First of all, a non-clinical sample was recruited and future research is needed to examine how the EJ-IRAP functions among those with diagnosable anxiety disorders. The levels of social anxiety based on the LSAS were surprisingly high, suggesting there was at least adequate variance in symptomatology to examine anxiety outcomes despite the non-clinical sample. Further, the study used a convenience sample of college students who were homogeneous on factors including age and race. Thus, it is unclear how well these findings might generalize to other populations (e.g., minority groups, older populations, populations with varied levels of cognitive functioning).

The measures of anxiety during the public speaking challenge were also limited. Although attempts were made to collect self-report data related to the speech, they were excluded because no data were collected at the primary time point during the actual speech. The data collected immediately after the speech, intended to represent speech anxiety, tended to be lower than pre-speech scores, likely due to the relief following speech completion. Although heart rate data were collected during the speech as a proxy for physiological arousal/anxiety, this was a fairly limited assessment method and future research would benefit from more extensive physiological measures of anxiety. The procedures used for assessing whether a participant would be willing to return for a second speech were limited by the lack of clear information regarding whether the return visit would be compensated. This may have led to variability among participants in responding, some of whom likely assumed this second visit would lead to additional research credits for courses, while others may have considered this to be uncompensated additional volunteering. Lastly in terms of measures, the study did not include any variables to assess divergent validity to further assess the precision and bounds of constructs the EJ-IRAP is related to.

Finally, this study conducted a fairly large number of analyses, particularly in examining convergent validity with each EJ-IRAP trial type, which increased the potential for Type I error. Adjustments to the criteria for statistical significance were considered to adjust for family-wise error (e.g., Bonferroni correction), but were not used given the study was not adequately powered for this more conservative testing approach. Given the lack of power, and given that the tests being conducted are related and based on a key set of theoretical predictions, such a correction would be overly conservative and would increase the potential for Type II error. However, the interpretation of the results throughout this manuscript has emphasized overall patterns of findings, rather than more isolated effects, in an attempt to address this issue. Ultimately, these findings need to be replicated in more diverse and clinical populations with adequate power to control for such methodological issues.

The current study sought to examine a key theoretical facet of EA, implicit emotional judgments. The results were largely supportive of theory, suggesting that immediate, automatic judgments of anxiety as being bad may contribute to EA and anxiety symptomatology, while judgments of “calm is bad” may serve as a protective factor. Implicit emotional judgments appear to be an important phenomenon to assess for furthering our understanding of the negative effects of EA and its amelioration. These implicit emotional judgments may represent a key aspect of EA to target in treatments for anxiety disorders and future research is now needed to test if and how such biases can be targeted.

Notes

Preliminary analyses with emotion stimuli indicated that IRAP effects with one of the anxiety stimuli (“worried”) did not correlate with the other anxiety stimuli (r coefficients ranging between −.16 and .15). This stimulus was therefore removed from all analyses.

References

Baer, R. A., Smith, G. T., Hopkins, J., Krietemeyer, J., & Toney, L. (2006). Using self-report assessment methods to explore facets of mindfulness. Assessment, 13, 27–45.

Barnes-Holmes, D., Barnes-Holmes, Y., Stewart, I., & Boles, S. (2010). A sketch of the Implicit Relational Assessment Procedure (IRAP) and the Relational Elaboration and Coherence (REC) model. The Psychological Record, 60, 527–542.

Bluett, E. J., Homan, K. J., Morrison, K. L., Levin, M. E., & Twohig, M. P. (2014). Acceptance and commitment therapy for anxiety and OCD spectrum disorders: an empirical review. Journal of Anxiety Disorders, 6, 612–624.

Bond, F. W., Hayes, S. C., Baer, R. A., Carpenter, K., Orcutt, H. K., Waltz, T., & Zettle, R. D. (2011). Preliminary psychometric properties of the Acceptance and Action Questionnaire-II: a revised measure of psychological flexibility and acceptance. Behavior Therapy, 42, 676–688.

De Houwer, J., & Moors, A. (2010). Implicit measures: Similarities and differences. In Handbook of implicit social cognition: Measurement, theory, and applications (pp. 176–193). New York: Guilford Press.

Desrosiers, A., Klemanski, D. H., & Nolen-Hoeksema, S. (2013). Mapping mindfulness facets onto dimensions of anxiety and depression. Behavior Therapy, 44, 373–384.

Drake, C. E., Timko, C. A., & Luoma, J. B. (2016). Exploring an implicit measure of acceptance and experiential avoidance of anxiety. The Psychological Record.

England, E. L., Herbert, J. D., Forman, E. M., Rabin, S. J., Juarascio, A., & Goldstein, S. P. (2012). Acceptance-based exposure therapy for public speaking anxiety. Journal of Contextual Behavioral Science, 1, 66–72.

Gámez, W., Chmielewski, M., Kotov, R., Ruggero, C., & Watson, D. (2011). Development of a measure of experiential avoidance: the Multidimensional Experiential Avoidance Questionnaire. Psychological Assessment, 23, 692–713.

Gloster, A. T., Klotsche, J., Chaker, S., Hummel, K. V., & Hoyer, J. (2011). Assessing psychological flexibility: what does it add above and beyond existing constructs? Psychological Assessment, 23, 970–982.

Greenwald, A. G., McGhee, D. E., & Schwartz, J. L. K. (1998). Measuring individual differences in implicit cognition: the implicit association test. Journal of Personality and Social Psychology, 74, 1464–1480.

Greenwald, A. G., Poehlman, T. A., Uhlmann, E. L., & Banaji, M. R. (2009). Understanding and using the Implicit Association Test: III. Meta-analysis of predictive validity. Journal of Personality and Social Psychology, 97, 17–41.

Hayes, S. C. (1989). Rule-governed behavior: Cognition, contingencies, and instructional control. New York: Plenum.

Hayes, A. F., & Matthes, J. (2009). Computational procedures for probing interactions in OLS and logistic regression: SPSS and SAS implementations. Behavior Research Methods, 41, 924–936.

Hayes, S. C., Wilson, K. G., Gifford, E. V., Follette, V. M., & Strosahl, K. (1996). Experiential avoidance and behavioral disorders: a functional dimensional approach to diagnosis and treatment. Journal of Consulting and Clinical Psychology, 64(6), 1152–1168.

Hayes, S. C., Barnes-Holmes, D., & Roche, B. (Eds.). (2001). Relational frame theory: A post-Skinnerian account of human language and cognition. New York: Plenum.

Hayes, S. C., Strosahl, K. D., Wilson, K. G., Bissett, R. T., Pistorello, J., Toarmino, D., & McCurry, S. M. (2004). Measuring experiential avoidance: a preliminary test of a working model. The Psychological Record, 54, 553–578.

Hayes, S. C., Strosahl, K. D., & Wilson, K. G. (2012). Acceptance and commitment therapy: The process and practice of mindful change (2nd ed.). New York: Guilford Press.

Hofmann, S. G., Newman, M. G., Ehlers, A., & Walton, R. (1995). Psychophysiological differences between subgroups of social phobia. Journal of Abnormal Psychology, 104, 224–231.

Hofmann, S. G., Heering, S., Sawyer, A. T., & Asnaani, A. (2009). How to handle anxiety: the effects of reappraisal, acceptance, and suppression strategies on anxious arousal. Behaviour Research and Therapy, 47, 389–394.

Hooper, N., & Larsson, A. (2015). The research journey of acceptance and commitment therapy (ACT). Palgrave Macmillan.

Hooper, N., Villatte, M., Neofotistou, E., & McHugh, L. (2010). The effects of mindfulness versus thought suppression on implicit and explicit measures of experiential avoidance. International Journal of Behavioral Consultation and Therapy, 6, 233–244.

Hussey, I., Thompson, M., McEnteggart, C., Barnes-Holmes, D., & Barnes-Holmes, Y. (2015). Interpreting and inverting with less cursing: a guide to interpreting IRAP data. Journal of Contextual Behavioral Science, 4, 157–162.

Kashdan, T. B., Goodman, F. R., Machell, K. A., Kleiman, E. M., Monfort, S. S., Ciarrochi, J., & Nezlek, J. B. (2014). A contextual approach to experiential avoidance and social anxiety: evidence from an experimental interaction and daily interactions of people with social anxiety disorder. Emotion, 14, 769–781.

Lavender, J. M., Gratz, K. L., & Tull, M. T. (2011). Exploring the relationship between facets of mindfulness and eating pathology in women. Cognitive Behaviour Therapy, 40, 174–182.

Levin, M. E., Hayes, S. C., & Waltz, T. (2010). Creating an implicit measure of cognition more suited to applied research: a test of the Mixed Trial – Implicit Relational Assessment Procedure (MT-IRAP). International Journal of Behavioral Consultation and Therapy, 6, 245–262.

Levin, M. E., Hayes, S. C., & Vilardaga, R. (2012). Acceptance and commitment therapy: Applying an iterative translational research strategy in behavior analysis. In G. Madden, G. P. Hanley, & W. Dube (Eds.), APA handbook of behavior analysis (Vol. 2). Washington, DC: American Psychological Association.

Levin, M. E., Dalrymple, K., & Zimmerman, M. (2014a). Which facets of mindfulness predict the presence of substance use disorders in an outpatient psychiatric sample? Psychology of Addictive Behaviors, 28, 498–506.

Levin, M. E., MacLane, C., Daflos, S., Pistorello, J., Hayes, S. C., Seeley, J., & Biglan, A. (2014b). Examining psychological inflexibility as a transdiagnostic process across psychological disorders. Journal of Contextual Behavioral Science, 3, 155–163.

Levin, M. E., Luoma, J. B., & Haeger, J. (2015). Decoupling as a mechanism of action in mindfulness and acceptance: A literature review. Behavior Modification, 39, 870–911.

Levitt, J. T., Brown, T. A., Orsillo, S. M., & Barlow, D. H. (2004). The effects of acceptance versus suppression of emotion on subjective and psychophysiological response to carbon dioxide challenge in patients with panic disorder. Behavior Therapy, 35, 747–766.

Liebowitz, M. R. (1987). Social phobia. Modern Problems of Pharmacopsychiatry, 22, 141–173.

Maeda, Y., Sekine, M., & Tamura, T. (2011a). The advantages of wearable green reflected photoplethysmography. Journal of Medical Systems, 35(5), 829–834.

Maeda, Y., Sekine, M., & Tamura, T. (2011b). Relationship between measurement site and motion artifacts in wearable reflected photoplethysmography. Journal of Medical Systems, 35(5), 969–976.

Matias, R., & Turner, S. M. (1986). Concordance and discordance in speech anxiety assessment: the effects of demand characteristics on the tripartite assessment method. Behaviour Research and Therapy, 24, 537–545.

McKenna, I. M., Barnes-Holmes, D., Barnes-Holmes, Y., & Stewart, I. (2007). Testing the fake-ability of the Implicit Relational Assessment Procedure (IRAP): The first study. International Journal of Psychology and Psychological Therapy, 7, 253–268.

Motley, M. T. (1995). Overcoming your fear of public speaking: A proven method. New York: McGraw-Hill.

Nicholson, E., & Barnes-Holmes, D. (2012). The Implicit Relational Assessment Procedure (IRAP) as a measure of spider fear. The Psychological Record, 62, 263–277.

Ostafin, B. D., Bauer, C., & Myxter, P. (2012). Mindfulness decouples the relation between automatic alcohol motivation and heavy drinking. Journal of Social and Clinical Psychology, 31(7), 729–745.

Rapee, R. M., & Lim, L. (1992). Discrepancy between self- and observer ratings of performance in social phobics. Journal of Abnormal Psychology, 101, 728–731.

Ruscio, A. M., Brown, T. A., Sareen, J., Stein, M. B., & Kessler, R. C. (2008). Social fears and social phobia in the United States: results from the National Comorbidity Survey Replication. Psychological Medicine, 38, 15–28.

Rytwinski, N. K., Fresco, D. M., Heimberg, R. G., Coles, M. E., Liebowitz, M. R., Cissell, S., & Hofmann, S. G. (2009). Screening for social anxiety disorder with the self-report version of the Liebowitz Social Anxiety Scale. Depression and Anxiety, 26, 34–38.

Seim, R. W., Waller, S. A., & Spates, C. R. (2010). A preliminary investigation of continuous and intermittent exposures in the treatment of public speaking anxiety. International Journal of Behavioral and Cognitive Therapies, 6, 84–94.

Shahar, B., & Herr, N. R. (2011). Depressive symptoms predict inflexibly high levels of experiential avoidance in response to daily negative affect: a daily diary study. Behaviour Research and Therapy, 49, 676–681.

Spielberger, C. D. (1972). Anxiety: Current trends in theory and research: I. New York: Academic.

Spinhoven, P., Drost, J., de Rooij, M., van Hemert, A. M., & Penninx, B. W. (2014). A longitudinal study of experiential avoidance in emotional disorders. Behavior Therapy, 45, 840–850.

Taylor, S., Zvolensky, M. J., Cox, B. J., Deacon, B., Heimberg, R. G., Ledley, D. R., & Stewart, S. H. (2007). Robust dimensions of anxiety sensitivity: development and initial validation of the Anxiety Sensitivity Index-3. Psychological Assessment, 19, 176–188.

Teachman, B. A., & Woody, S. R. (2003). Automatic processing in spider phobia: implicit fear associations over the course of treatment. Journal of Abnormal Psychology, 112, 100–109.

Teachman, B. A., & Woody, S. R. (2004). Staying tuned to research in implicit cognition: relevance for clinical practice with anxiety disorders. Cognitive and Behavioral Practice, 11, 149–159.

Teohari, V. M., Ungureanu, C., Bui, V., Arends, J., & Aarts, R. M. (2014). Epilepsy seizure detection app for wearable technologies. Eindhoven: Paper presented at the 2014 European Conference on Ambient Intelligence.

Vahey, N. A., Nicholson, E., & Barnes-Holmes, D. (2015). A meta-analysis of criterion effects for the implicit relational assessment procedure (IRAP) in the clinical domain. Journal of Behavior Therapy and Experimental Psychiatry, 48, 59–65.

Valenti, G., & Westerterp, K. R. (2013). Optical heart rate monitoring module validation study. Las Vegas: Paper presented at the 2013 IEEE International Conference on Consumer Electronics (ICCE).

Wenzlaff, R. M., & Wegner, D. M. (2000). Thought suppression. Annual Review of Psychology, 51, 59–91.

Zettle, R. D., Barner, S. L., Gird, S. R., Boone, L. T., Renollet, D. L., & Burdsal, C. A. (2012). A psychological biathlon: the relationship between level of experiential avoidance and perseverance on two challenging tasks. The Psychological Record, 62, 433–445.

Ethics declarations

Ethical Approval

All procedures performed in the study involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Conflict of Interest

Dr. Levin is a research associate with Contextual Change LLC, a small business involved in developing commercial web-based Acceptance and Commitment Therapy programs. Dr. Smith and Mr. Haeger declare having no conflicts of interest.

Experiment Participants

Informed consent was obtained from all individual participants included in the study.

Cite this article

Levin, M.E., Haeger, J. & Smith, G.S. Examining the Role of Implicit Emotional Judgments in Social Anxiety and Experiential Avoidance. J Psychopathol Behav Assess 39, 264–278 (2017). https://doi.org/10.1007/s10862-016-9583-5

DOI: https://doi.org/10.1007/s10862-016-9583-5