Abstract

Although the order of the time steps in which the non-uniform sampling (NUS) schedule is implemented when acquiring multi-dimensional NMR spectra is of limited importance when sample conditions remain unchanged over the course of the experiment, it is shown to have major impact when samples are unstable. In the latter case, time-ordering of the NUS data points by the normalized radial length yields a reduction of sampling artifacts, regardless of the spectral reconstruction algorithm. The disadvantage of time-ordered NUS sampling is that halting the experiment prior to its completion will result in lower spectral resolution, rather than a sparser data matrix. Alternatively, digitally correcting for sample decay prior to reconstruction of randomly ordered NUS data points can mitigate reconstruction artifacts, at the cost of somewhat lower sensitivity. Application of these sampling schemes to the Alzheimer’s amyloid beta (Aβ1–42) peptide at an elevated concentration, low temperature, and 3 kbar of pressure, where approximately 75% of the peptide reverts to an NMR-invisible state during the collection of a 3D 15N-separated NOESY spectrum, highlights the improvement in artifact suppression and reveals weak medium-range NOE contacts in several regions, including the C-terminal region of the peptide.


Introduction

Non-uniform sampling (NUS) of the indirect dimensions of multi-dimensional NMR spectra is a natural generalization of the original idea of exponential sampling (Barna et al. 1987) and offers considerable savings in the minimal amount of time required for recording high resolution spectra (Rovnyak et al. 2004; Mobli et al. 2012; Coggins et al. 2012; Bermel et al. 2012; Orekhov and Jaravine 2011; Billeter 2017). Provided that the reconstruction algorithm does not introduce significant residual point-spread-function (PSF) “noise” in the final spectrum, NUS can even yield an increase in signal-to-noise per unit of time by concentrating most of the time domain sampling at earlier time points (Barna et al. 1987; Hyberts et al. 2013; Palmer et al. 2015), albeit at the cost of a potential decrease in accuracy of lineshape and resolution (Ying et al. 2017). The latter disadvantage can be mitigated by co-processing the data with a reference spectrum, using the multi-dimensional decomposition (MDD) approach (Mayzel et al. 2014) provided that such a spectrum is available. Numerous different algorithms have been introduced for reconstructing a frequency domain spectrum from the sparsely sampled time domain data, each with inherent advantages and disadvantages among many defining factors of spectral reconstruction performance such as speed of reconstruction, linearity of the reconstructed spectral intensities, fidelity of peak positions and linewidths, and achievable dynamic range (Barna et al. 1987; Hyberts et al. 2012, 2013; Ying et al. 2017; Delsuc and Tramesel 2006; Mobli et al. 2007; Balsgart and Vosegaard 2012; Hoch et al. 2014; Kazimierczuk and Orekhov 2011; Bostock et al. 2012; Holland et al. 2011). Efforts at an objective comparison of the performance of the various algorithms have also been initiated, in order to clarify their relative effectiveness in common applications (2018).

Early implementations of NUS simply deleted time points from a regular, full sampling schedule, and such ordered schedules will here be referred to as “conventionally ordered”. The advantages and disadvantages of random ordering versus conventional ordering of the sampling schedule in NUS data collection have been discussed by Hyberts et al. (2014). Various home-written procedures use the conventionally ordered sampling schedule, and some versions of Varian’s Biopack software enabled this mode of sampling schedule selection by means of a “sequential” checkbox in one of their protocols for generating a sampling list. More recent protocols appear to favor random ordering, and this is currently the only displayed option in standard commercial Bruker software, unless users generate their own ordered sampling list. A particularly attractive feature of randomly ordered NUS is the ability to predefine the desired spectral resolution and then periodically monitor the signal-to-noise ratio of an NMR spectrum during data collection (Hyberts et al. 2014). Signal-to-noise in such applications represents the aggregate of the PSF noise and the intrinsic signal-to-noise encapsulated in the time domain data, where the latter approximately scales with the square root of the number of transients collected. The PSF noise results from failure to optimally reconstruct the frequency domain spectrum from a sparse set of time domain data points and typically decreases rapidly as the sampling of the time domain data becomes less sparse (Hyberts et al. 2014). In such applications, the minimal amount of time needed to acquire a multi-dimensional NMR data set can be defined “on the fly”, before jumping to the collection of a different type of spectrum, frequently on the same sample (Eghbalnia et al. 2005).

Other important applications of NUS involve collection of NMR data on unstable samples, where it is known a priori that a chemical reaction will limit the available amount of time to a duration less than would be required for full sampling of the indirect time domain. Examples include the study of protein folding or unfolding (Schanda et al. 2007; Schlepckow et al. 2008), enzymatic turnover of substrate (Kern et al. 1999), and the common case of samples that are inherently unstable due to aggregation or fibril formation, as well as proteins or nucleic acids subject to auto-cleavage. Stability of samples in the latter applications typically scales inversely with concentration. For such studies, application of NUS is particularly advantageous as it permits fast collection of high-resolution multi-dimensional spectra that can be of high sensitivity provided that sample concentration is adequate and that the PSF “noise” can be contained to a level lower than the thermal noise. Previous applications of NUS to the study of unstable samples either exploited multi-dimensional decomposition, which effectively limits the reconstructed spectra to time domain sections that were recorded in narrow bands of time (Mayzel et al. 2014), or the co-addition of multiple NUS spectra from a series of dilute samples (Miljenovic et al. 2017).

Here, we describe two alternate, user-friendly, and more generally applicable strategies for the application of NUS to transient (or time-sensitive) samples. Specifically, by time-ordering of the sparse set of collected time-domain data points according to the normalized radial length of each time point, sample degradation will primarily manifest as an additional decay constant of the time domain data. This essentially results in some additional line broadening in the indirectly sampled dimensions, analogous to what would be observed in a fully sampled data set. In contrast, typical application of NUS with random ordering of the time domain data results in a noise-like decay of the signal amplitude that, in the absence of special adaptations to the reconstruction algorithm, can strongly elevate the level of PSF-like noise. To the best of our knowledge, this limitation applies to all currently known NUS reconstruction algorithms but can be eliminated by time-ordered sampling. However, this approach is not without drawbacks since time-ordered sampling requires the total time duration of data collection to be defined prior to starting the experiment and also introduces additional line broadening to all indirectly acquired spectral dimensions by amounts that are determined by the decay rate of the time-sensitive sample.

A second strategy, limited to samples that decay with a uniform time constant, incorporates 1D reference spectra in the time domain matrix. In this manner, the decay of sample concentration with time can be reversed digitally by upscaling of the signals recorded at later time points, before conventional NUS processing. Although this latter strategy retains the advantage that an acquisition can be halted whenever a satisfactory signal-to-noise level is achieved, it is intrinsically of lower sensitivity than that of time-ordered NUS since weaker signals, acquired after the sample has partially decayed, require upscaling prior to conventional NUS processing, thereby amplifying the impact of thermal noise.

To test the capability of these NUS strategies on transient samples, we applied both approaches to a sample of the Aβ1–42 peptide undergoing significant decay of the soluble, monomeric species. For this, we prepared a high concentration (1.2 mM) sample of Aβ1–42 in a buffer of high ionic strength and acidic pH. Importantly, it is known that these sample conditions promote aggregation and fibril formation of this peptide and, hence, disappearance of the NMR signals of the monomeric observable species. To slow down the effects of aggregation, NMR spectra were collected at 3 kbar of hydrostatic pressure and low temperature (280 K), resulting in an approximate exponential decay of the soluble peptide with a time constant of ~ 1 day. High resolution 3D NOESY spectra with NUS sampling were then collected in order to probe the potential presence of transient oligomeric species. Although these proved undetectable in our measurements, some weak medium-range NOEs indicative of transient formation of local structure could be observed. The absence of a detectable concentration dependence of the Aβ1–42 1H–15N HSQC spectrum suggests that these weak (≤ ~ 0.5%) NOEs are either intramolecular or result from transient binding to the growing fraction of aggregated peptides, previously observed by DEST experiments (Fawzi et al. 2011).

Materials and methods

Vector construction, expression and purification of Aβ1–42

The Aβ1–42 peptide was generated from an expression construct consisting of a 6His tag followed by the immunoglobulin binding domain B1 of protein G (GB1), Avi-Tag (Avidity, LLC), TEV protease cleavage site, and Aβ1–42. The last residue (G/S) in the TEV protease recognition sequence (ENLYFQG/S) was omitted such that upon cleavage the Aβ1–42 peptide starts with the native N-terminal D residue spanning the sequence D1AEFRHDSGY EVHHQKLVFF AEDVGSNKGA IIGLMVGGVV IA42.

The DNA insert was cloned into the pJ414 vector (ATUM) and transformed into E. coli BL21-DE3 cells (Thermo Fisher Scientific). Cells were grown in minimal medium with 15N ammonium chloride as the sole source of nitrogen and the Aβ1–42 fusion protein was expressed using established protocols. Following cell harvesting, high speed centrifugation, and acquisition of the cell lysate, the fusion protein was purified under denaturing conditions by Ni–NTA affinity chromatography. This was followed by size-exclusion chromatography (SEC) on a Superdex-75 column (GE Healthcare) to remove minor high molecular weight contaminants and urea, and to exchange the buffer for one suitable for cleavage by TEV protease. Upon cleavage, as monitored by SDS-PAGE, Aβ1–42 collected in the flow-through from the Ni–NTA column was dialyzed extensively against 20 mM ammonium hydroxide and 1.2 mg aliquots were lyophilized and stored at − 70 °C. Purity was confirmed both by SDS-PAGE and HPLC. The mass of the recombinant 15N-labeled Aβ1–42 (4568.1) matched the calculated mass (4568.8) as verified by electrospray ionization mass spectrometry. This method yielded ca 18 mg of pure peptide per liter of culture.

The autoproteolysis resistant (S219V) catalytic domain of TEV protease (27 kDa) containing a 6His tag at its N terminus was expressed as previously described (Lucast et al. 2001). Purification steps included Ni–NTA affinity chromatography, followed by SEC using TEV protease storage buffer. The stock solution (~ 70 µM) of the enzyme was stored in 1-mL aliquots at − 70 °C.

Aβ1–42 NMR sample preparation

High (1.2 mM) and low (0.02 mM) concentration Aβ1–42 samples for NMR experiments were prepared as follows. A concentrated stock solution of pure Aβ1–42 was prepared by dissolving 1.2 mg of the dry peptide in 153 µL of 50 mM NaOH. This solution (150 µL) was neutralized with 15 µL of 0.5 M HCl that had been pre-mixed with 55 µL of 0.5 M sodium phosphate pH 6, to attain a high concentration of Aβ1–42 peptide in a total sample volume of 220 µL. A second, dilute NMR sample was prepared from the stock solution (3 µL) by adding 147 µL of 50 mM NaOH, followed by neutralization and dilution as described above.
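The volumes quoted above can be checked with a short calculation. The sketch below is only an arithmetic illustration: it assumes that the full 1.2 mg is peptide (no residual salt or water) and uses the measured mass of 4568.1 as the molar mass; the variable names are ours.

```python
# Arithmetic check of the two sample concentrations (inputs taken from the text).
MOLAR_MASS = 4568.1      # g/mol, measured mass of 15N-labeled Abeta(1-42)
peptide_mg = 1.2         # assumed to be pure peptide
stock_volume_ul = 153.0  # 50 mM NaOH used to dissolve the peptide
final_volume_ul = 220.0  # 150 uL peptide/NaOH + 15 uL HCl + 55 uL phosphate

# Stock concentration in mM: mol / L * 1000
stock_mM = (peptide_mg * 1e-3 / MOLAR_MASS) / (stock_volume_ul * 1e-6) * 1e3

high_mM = stock_mM * 150.0 / final_volume_ul  # 150 uL of stock in 220 uL
low_mM = stock_mM * 3.0 / final_volume_ul     # 3 uL of stock in 220 uL

# Acid/base balance of the neutralization step, in nmol
naoh_nmol = 150.0 * 50.0   # 150 uL of 50 mM NaOH
hcl_nmol = 15.0 * 500.0    # 15 uL of 0.5 M HCl

print(f"stock {stock_mM:.2f} mM, high {high_mM:.2f} mM, low {low_mM:.3f} mM")
```

Under these assumptions the high and low samples come out near 1.2 mM and 0.02 mM, and the added HCl is equimolar with the NaOH.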

Data acquisition and processing

All Aβ1–42 NMR spectra were recorded under 3 kbar of static pressure at 280 K on a 900 MHz Bruker Avance III spectrometer, operated by Topspin 2.1 software, and equipped with a TCI cryogenic probe with a z-axis gradient and a high-pressure accessory (Daedalus Innovations), including a ceramic high-pressure tube (Peterson and Wand 2005) with an outer diameter of 5.0 mm and an inner diameter of 2.8 mm, requiring a sample volume of 220 µL prior to solvent compression.

Each 3D NOESY-HSQC spectrum was acquired using an NOE mixing time of 150 ms while using standard Rance-Kay (Palmer et al. 1991; Kay et al. 1992) and States-TPPI (Marion et al. 1989) quadrature detection methods for the indirect 15N and 1H dimensions, respectively.

In the directly detected 1H dimension, 1300 complex points were recorded, corresponding to an acquisition time of 120.1 ms. For the indirect dimensions, t1max and t2max values were 53.8 ms and 30.7 ms for 15N and 1H, respectively, with spectral windows set to 20.4 ppm (15N) and 9.26 ppm (1H), using a sparsity level of 12%, or 3072 hypercomplex (t1, t2) sets of 4 FIDs per 3D data set, with 4 scans per FID used for phase cycling to remove axial peaks. Total measuring time for two interleaved (see below) NOESY-HSQC NUS data sets was ca 38 h.
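As a short worked check of the sparsity level, and assuming the 100* × 256* indirect grid quoted in the processing description is the full sampling grid:

\[
\frac{3072}{100 \times 256} = \frac{3072}{25\,600} = 0.12, \qquad 3072 \times 4 = 12\,288 \ \text{FIDs per 3D data set}.
\]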

Evaluation of the effect of the sampling order on the NUS spectra was carried out by interleaving two independent, 3D NOESY-HSQC spectra. For this, two random NUS schedules containing the same set of 3072 full quadrature time points (4 FIDs for each (t1, t2) time point) but ordered differently were used. No deviations from random sampling, such as T2 weighting (Barna et al. 1987) or Poisson gap sampling (Hyberts et al. 2012) were applied in either schedule. For the first set, the order of the sampling schedule was fully random. For the second sampling scheme, the schedule was obtained by sorting the first set according to the normalized radial length of the sampling vectors, calculated as \(\sqrt{\sum_{i=1,2} (k_i/N_i)^2}\), where \(N_i\) is the maximum increment number in the i-th dimension and \(k_i\) = 0, 1, 2, …, \(N_i - 1\). This yielded the time-ordered sampling schedule consisting of the same randomly sampled data points.
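The sorting step described above can be sketched in a few lines. This is a minimal illustration, not the script used for the experiments: the function names, the random seed, and the use of plain (k1, k2) tuples are assumptions, while the grid size (100 × 256) and the number of points (3072) are taken from the text.

```python
import math
import random

def normalized_radial_length(point, sizes):
    """sqrt(sum_i (k_i / N_i)^2) for one sampling point (k_1, k_2)."""
    return math.sqrt(sum((k / n) ** 2 for k, n in zip(point, sizes)))

def random_schedule(sizes, n_points, seed=0):
    """Draw n_points distinct grid points in random order (no weighting)."""
    rng = random.Random(seed)
    grid = [(k1, k2) for k1 in range(sizes[0]) for k2 in range(sizes[1])]
    return rng.sample(grid, n_points)

def time_ordered(schedule, sizes):
    """Same points as the input, sorted by increasing normalized radial length."""
    return sorted(schedule, key=lambda p: normalized_radial_length(p, sizes))

sizes = (100, 256)                        # (N_1, N_2)
randomly_ordered = random_schedule(sizes, 3072)
ordered = time_ordered(randomly_ordered, sizes)
```

Because Python's sort is stable, points with equal radial length keep their relative random order, so the two lists contain exactly the same points and differ only in acquisition order.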

To record two data sets under identical conditions, except for the sampling schedules, the collection of both data sets was carried out in an interleaved manner, using a single NUS list file. This mode of acquisition does not require modifications of the pulse sequence or experimental set-up but does require that the two sampling list files be merged in an interleaved manner before starting the experiment, and that the acquired time domain data be separated into the time-ordered and randomly ordered NUS data sets before processing and reconstruction. In addition, two single quadrature time points, corresponding to the 15N echo and anti-echo FIDs of the t1 = t2 = 0 time point, were included in the interleaved sampling list after completion of every 166 full quadrature time points (664 FIDs). These t1 = t2 = 0 data points serve to monitor the sample decay every hour.
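The merging and later separation of the two lists can be sketched as follows. The text does not state the exact interleaving pattern, so point-by-point alternation is an assumption here, as are the tags "A", "B", and "spike" and the function names; the real list would also need to encode the echo and anti-echo FIDs of the spiked point.

```python
def interleave_with_spikes(schedule_a, schedule_b, block=166):
    """Alternate the points of two schedules and append a (0, 0) reference
    point after every `block` regular points of the merged list."""
    if len(schedule_a) != len(schedule_b):
        raise ValueError("schedules must have the same length")
    merged = []
    n_regular = 0
    for point_a, point_b in zip(schedule_a, schedule_b):
        for tag, point in (("A", point_a), ("B", point_b)):
            merged.append((tag, point))
            n_regular += 1
            if n_regular % block == 0:
                merged.append(("spike", (0, 0)))
    return merged

def separate(merged):
    """Split a merged list back into schedule A, schedule B, and spike positions."""
    sched_a = [p for tag, p in merged if tag == "A"]
    sched_b = [p for tag, p in merged if tag == "B"]
    spike_positions = [i for i, (tag, _) in enumerate(merged) if tag == "spike"]
    return sched_a, sched_b, spike_positions
```

For two schedules of 3072 points each, this layout yields 37 reference points, consistent with roughly one per hour over a ca 38 h acquisition.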

Following completion, the interleaved data sets were separated into two data sets containing an equal number of FIDs using the NMRPipe software (Delaglio et al. 1995). Duplicate FIDs from the t1 = t2 = 0 time points can be automatically averaged during NMRPipe NUS data expansion. However, to prevent the first (t1, t2 = 0, 0) FID from being impacted by sample decay, an in-house C program was used to remove the spiked duplicate data prior to NUS reconstruction by the SMILE program (Ying et al. 2017). Next, the time domain matrix was expanded to a uniformly sampled data set using nusExpand.tcl in the NMRPipe software suite, thereby filling data points corresponding to non-sampled FIDs with zeros, before conversion into the NMRPipe data format. SMILE NUS reconstruction, within NMRPipe, starts with conventional processing of the directly detected 1H dimension, followed by iterative detection of the most intense peaks after 3D Fourier transformation, analysis of their lineshape, and generation and subsequent subtraction of the best-fitted synthetic signals from the input experimental data (Ying et al. 2017).
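The idea behind the expansion step can be illustrated with NumPy. This sketch is not nusExpand.tcl and does not reproduce the NMRPipe data format; it treats each (k1, k2) point as a single complex FID (ignoring the four quadrature components) and, as an assumption that mirrors the removal of spiked duplicates, keeps only the first FID recorded for a repeated point.

```python
import numpy as np

def expand_nus(fids, schedule, sizes):
    """Place sampled FIDs on the full (N_1, N_2) grid; unsampled points stay zero.

    fids     : array of shape (n_sampled, n_direct), in acquisition order
    schedule : list of (k1, k2) tuples, one per FID
    sizes    : (N_1, N_2) of the full indirect grid
    Returns the expanded array and a boolean mask of sampled points.
    """
    fids = np.asarray(fids)
    full = np.zeros(tuple(sizes) + fids.shape[1:], dtype=fids.dtype)
    sampled = np.zeros(tuple(sizes), dtype=bool)
    for fid, (k1, k2) in zip(fids, schedule):
        if sampled[k1, k2]:
            continue          # duplicate point: keep the earliest FID only
        full[k1, k2] = fid
        sampled[k1, k2] = True
    return full, sampled
```

The returned mask records which grid points carry measured data, which is the information a reconstruction algorithm needs alongside the zero-filled matrix.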

In the directly detected (t3) dimension, the time domain data was apodized with a Lorentzian to Gaussian transformation function, \(\exp(19 t_3 - 910 t_3^2)\), where t3 is in seconds. This is equivalent to the application of the nmrPipe GM processing function with g1 and g2 set to 6 and 16 Hz, respectively. No extrapolation of the indirectly sampled time domains was used during NUS reconstruction, and the expanded (t1, t2) time domain matrix consisted of 100* × 256* complex points in the indirect dimensions. Both t1 and t2 dimensions were apodized by a cosine function, extending from 0° to 86.4°. Data were analyzed using NMRDraw (Delaglio et al. 1995).
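The stated equivalence between the window function and the GM parameters can be checked numerically. The sketch assumes the GM window has the form exp(π·g1·t − (0.6·π·g2·t)²) with the Gaussian centered at t = 0; this is our reading of the NMRPipe convention and should be verified against the NMRPipe documentation.

```python
import math

def gm_exponents(g1_hz, g2_hz):
    """Return (a, b) such that the window is exp(a*t - b*t**2), t in seconds.

    Assumed form: exp(pi*g1*t - (0.6*pi*g2*t)**2), Gaussian centered at t = 0.
    """
    a = math.pi * g1_hz
    b = (0.6 * math.pi * g2_hz) ** 2
    return a, b

a, b = gm_exponents(6.0, 16.0)
print(f"a = {a:.1f} 1/s, b = {b:.0f} 1/s^2")
```

With g1 = 6 Hz and g2 = 16 Hz the two coefficients round to the 19 and 910 quoted in the text.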

To evaluate the effect of time ordering on the quality of the reconstructed spectra by other commonly used methods, both time-ordered and randomly ordered NUS data sets were also processed using the IST algorithm implemented in hmsIST (Hyberts et al. 2012) as well as the IRLS and MDD algorithms within MDDNMR (Kazimierczuk and Orekhov 2011; Orekhov et al. 2001). The same apodization, zero filling, and phasing parameters as for SMILE were used in these reconstructions, and no extrapolation of the indirect dimensions was employed. The reconstruction was performed using the resources available on NMBox (Maciejewski et al. 2017).

Results and discussion

Spectral simulations

The effect of randomizing the collection of time domain data for a decaying sample is first shown for a fully sampled synthetic data set, focusing on the interferogram, S(t1, ω2), obtained for a signal in a 15N–1H HSQC spectrum that is on-resonance in the 15N dimension (Fig. 1). A fully sampled set is used for generating the simulated data, such that NUS reconstruction artifacts or the use of any specific algorithm do not play a role. Exponential decay of the sample, with a time constant that corresponds to 50% signal loss when the last FID is sampled, manifests as a faster apparent signal decay rate in the t1 dimension when signals are sampled sequentially (compare Fig. 1a with b). By contrast, when the order of sampling of the t1 domain is randomized (Fig. 1c), the amplitude of any given interferogram data point will be attenuated by an amount of up to 50% that depends on the temporal position of the sampled FID data point relative to the start of the experiment. As expected, Fourier transformation of the resulting time domain signal shows noise-like features (Fig. 1f), even though the synthetic time domain signal was noise free.
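The behavior described above is easy to reproduce qualitatively. The following sketch uses the duration (116 ms), intrinsic T2 (87 ms), and 50% final sample fraction quoted for the simulation; the number of increments (128), the random seed, and the function names are assumptions.

```python
import numpy as np

def decayed_interferogram(order, at=0.116, t2=0.087, final_fraction=0.5):
    """On-resonance, noise-free interferogram with exponential sample decay.

    order[j] is the t1 increment index recorded in the j-th acquisition slot.
    """
    order = np.asarray(order)
    n = order.size
    t1 = np.arange(n) * at / n
    slot = np.empty(n)
    slot[order] = np.arange(n)                 # acquisition slot of each increment
    sample_decay = final_fraction ** (slot / (n - 1))
    return t1, np.exp(-t1 / t2) * sample_decay

def spectrum(fid, zero_fill=4):
    """Cosine-bell apodization, zero filling, and Fourier transformation."""
    n = fid.size
    window = np.cos(0.5 * np.pi * np.arange(n) / n)
    return np.fft.fft(fid * window, zero_fill * n).real

n = 128
rng = np.random.default_rng(0)
_, sequential = decayed_interferogram(np.arange(n))
_, randomized = decayed_interferogram(rng.permutation(n))
```

With sequential acquisition the logarithm of the interferogram remains a straight line, that is, a single faster exponential decay. With a random acquisition order the same sample decay appears as point-to-point amplitude scatter, which transforms into noise-like baseline features.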

Fig. 1 Simulation of the impact of sample decay on a–c the time domain in the indirect dimension of a fully sampled 2D experiment, and d–f corresponding frequency domain NMR data. a Interferogram, S(t1, ω2) of a fully sampled, noise-free, on-resonance signal of 116 ms duration and an intrinsic T2 of 87 ms, in the absence of sample decay. b Simulated time domain data, recorded conventionally, while the sample exponentially degrades with a time constant that results in a decrease in sample concentration by 50% at the end of data collection. c Simulated time domain decay when the ordering in the t1 dimension is randomized, as applies to common NUS data collection. d–f Fourier transforms of the time domain signals of a–c, after apodization with a cosine bell function and zero filling. Red boxes mark segments of the baseline that are upscaled tenfold

As illustrated here using a fully sampled indirect time domain, where sampling was ordered and sequential, the sample decay manifests as faster signal decay and therefore additional line broadening. The amplitude modulation introduced by random ordering (Fig. 1c) equally applies for non-uniformly sampled data, which typically uses random ordering of the time domain data points.

Time-ordering of fully sampled data converts signal decay into an additional apparent decay constant in the indirectly sampled dimensions. For NUS data, time-ordered sampling typically retains a very small degree of spurious amplitude modulation as a result of the uniform sample decay between sequentially sampled t1 durations, whereas the spacing on the sampling grid is non-uniform. In practice, the t1 noise introduced by this imperfection is typically far below the thermal noise.

Time ordering of 3D and 4D data

The principle of time ordering the collection of the FIDs equally applies to the recording of 2D, 3D, 4D, or higher dimensional data sets. The data collection can be ordered in a number of ways, and the effect of such ordering on the lineshape was assessed by simulations of 3D fully sampled data. Use of fully sampled instead of NUS data sets prevented the evaluation from being impacted by the NUS reconstruction algorithm. Specifically, a noise-free 3D hypercomplex time domain data set was generated by the simTimeND routine in NMRPipe, yielding a data matrix of 191* × 200* × 30* hypercomplex points, corresponding to acquisition times of 63.7 ms, 30.8 ms, and 53.6 ms for the t3, t2, and t1 dimensions, respectively. The t3 acquisition time was chosen to be 2 × T2, while the acquisition times for t2 and t1 were set to the transverse decay times, T2, of the simulated data. This data set was used to first evaluate the conventional ordering method, i.e., “sorted” first by k1 and then by k2, where k1 and k2 are the increment numbers in the t1 and t2 dimensions, respectively. Subsequently, a 100% sampling schedule containing a full grid of the data points in t2 and t1 dimensions in a random order was generated, which served to simulate the effect of random ordering. A number of different, time-ordered schedules were then derived from sorting the random full schedule by either the values of \(\sum_{i=1,2} k_i\), \(\sum_{i=1,2} (k_i/N_i)\), \(\sqrt{\sum_{i=1,2} k_i^2}\), or \(\sqrt{\sum_{i=1,2} (k_i/N_i)^2}\), where again \(N_i\) is the maximum increment number in the i-th dimension and \(k_i\) = 0, 1, 2, …, \(N_i - 1\). These ordering methods are hereafter referred to as sum, normalized sum, radial length, and normalized radial length, respectively.
Although the simulated data was uniformly and fully sampled, the NUS utility script nusCompress.tcl, included in the NMRPipe distribution, allowed arrangement of the t3 FIDs in the simulated data to that of the above random and ordered sampling schedules. Using these sampling schedules, amplitudes of the FIDs were multiplied by an exponentially decaying function, such that the last FID in the NUS data sets was downscaled to 25% of its original value, thereby simulating the effect of sample loss on the recorded signal of an experiment. Each data set (except the one with conventional ordering) was then sorted using the nusExpand.tcl script within NMRPipe, followed by conventional NMRPipe processing. Note that nusExpand.tcl was only used to reorder the fully sampled array before conventional processing and not for its primary function of data expansion. The (F2, F1) plane containing the simulated on-resonance (F3) peak was then used to evaluate the impact of sample degradation and time ordering, with results described below. Although the simulation was performed for a 3D data set, the results equally apply to NUS data acquisition of higher dimensional spectra.
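The four ordering methods and the decay scaling can be sketched without NMRPipe. This is an illustration only: it builds the schedules and per-FID scale factors but does not generate or process time domain data. The grid size (30 × 200 for t1 × t2) is from the text, the decay follows exp{−1.4(k−1)/N} as given in the caption of the corresponding figure, and the names and random seed are assumptions.

```python
import math
import random

SIZES = (30, 200)  # (N_1, N_2) of the simulated t1 and t2 dimensions

# Sort keys for a point k = (k1, k2)
ORDER_KEYS = {
    "sum": lambda k: k[0] + k[1],
    "normalized sum": lambda k: k[0] / SIZES[0] + k[1] / SIZES[1],
    "radial length": lambda k: math.hypot(k[0], k[1]),
    "normalized radial length": lambda k: math.hypot(k[0] / SIZES[0], k[1] / SIZES[1]),
}

def decay_factors(schedule, exponent=1.4):
    """Scale factor exp(-exponent*(k-1)/N) for the k-th acquired FID (k = 1..N)."""
    n_total = len(schedule)
    return {point: math.exp(-exponent * j / n_total)
            for j, point in enumerate(schedule)}   # j = k - 1

rng = random.Random(1)
random_full = [(k1, k2) for k1 in range(SIZES[0]) for k2 in range(SIZES[1])]
rng.shuffle(random_full)                           # 100% schedule in random order

schedules = {name: sorted(random_full, key=key) for name, key in ORDER_KEYS.items()}
factors = {name: decay_factors(s) for name, s in schedules.items()}
```

Each schedule contains the same 6000 grid points; only the acquisition position, and hence the decay factor applied to each FID, differs between ordering methods.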

It is readily apparent that all evaluated time ordering schemes (Fig. 2b–f) strongly reduce the reconstruction noise in comparison to random ordering (Fig. 2a). This is also reflected in the much smoother interferograms, S(ω1, t2, ω3) and S(t1, ω2, ω3) (Fig. 2g, h). Essentially, whenever Δt1/A1 differs from Δt2/A2, where Δti and Ai are the increment and acquisition time in the i-th dimension, respectively, ordering by simply taking the sum, k1 + k2 (Fig. 2b, SI Fig. S1a), is less effective at smoothing the amplitude distortion resulting from sample decay, and therefore is excluded as a sampling approach. Conventional ordering of the fully sampled data set yields the lowest impact of sample degradation on the signal decay in the t2 dimension but at the expense of the largest increase in the t1 dimension (Fig. 2g, h). The interferograms for this mode of ordering retain a more mono-exponential decay than the other ordering approaches (Fig. 2g, h), resulting in a lineshape closest to Lorentzian. However, the linewidth is impacted very differently in the two indirect dimensions, with significant broadening in F1 but minimal broadening in F2 (Fig. 2d). This results from the loop structure of the conventional 3D experiment, where the t2 incrementation loop is nested within the outer t1 incrementation loop. Conventional ordering therefore can result in an undesirable imbalance in spectral resolution. The strongest sample decay artifacts in the other ordering protocols (Fig. 2c, e, f) are more than three orders of magnitude lower than the peak intensity, and therefore will stay well below the thermal noise in most practical applications.
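The condition on Δti/Ai can be made explicit with a short worked step, assuming the acquisition time in each dimension is \(A_i = N_i \Delta t_i\):

\[
\frac{\Delta t_i}{A_i} = \frac{1}{N_i}, \qquad \frac{k_1}{N_1} + \frac{k_2}{N_2} = \frac{1}{N_1}\left(k_1 + \frac{N_1}{N_2}\,k_2\right).
\]

Sorting by the plain sum \(k_1 + k_2\) therefore gives the same order as sorting by the normalized sum only when \(N_1 = N_2\); otherwise the plain sum weights the two dimensions unequally relative to their acquisition times.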

Fig. 2 Impact of time ordering on the apparent noise level, lineshape, and peak intensity when reconstructing a noise-free simulated time-domain signal, on-resonance in the F1 and F2 dimensions. Sample decay is accounted for by an exponential decrease of the amplitude of the FIDs by exp{−1.4(k−1)/N}, where N is the total number of acquired FIDs, and k is the FID number (k = 1 for the first FID, and k = N for the last FID). The length of the simulated time domain data is set to the transverse relaxation time in both the t1 and t2 dimensions (53.6 ms, t1; 30.8 ms, t2). The 2D planes are (F2, F1) cross sections taken at the center of the peak in the F3 dimension for spectra with different FID acquisition order. a Randomly ordered, or sorted by b the sum: \(\sum_{i=1,2} k_i\); c the normalized sum: \(\sum_{i=1,2} (k_i/N_i)\); d conventional ordering (k1 first and then k2), e radial length: \(\sqrt{\sum_{i=1,2} k_i^2}\), and f normalized radial length: \(\sqrt{\sum_{i=1,2} (k_i/N_i)^2}\). A low first contour level (0.2% of the peak height) is used for panels (b–f), while a four times higher level is used for a. g S(ω1, t2, ω3) and h S(t1, ω2, ω3) interferograms, corresponding to the six modes of ordering of the detected FIDs. Plots of panels b, c, e, and f at four times lower contour levels are shown in SI Fig. S1

The t2 and t1 interferograms, S(ω1, t2, ω3) and S(t1, ω2, ω3) (Fig. 2g, h), show that the amplitudes at t2 = 0 (Fig. 2g) or t1 = 0 (Fig. 2h) are different, even though the amplitudes at S(t1 = 0, t2 = 0, ω3) are the same for all datasets. The ti = 0 value of an interferogram is impacted by the decay rate in the other indirectly sampled dimensions. For example, the S(t1 = 0, ω2, ω3) of the conventionally ordered data set has the highest intensity, but decays the fastest (Fig. 2h). As shown in Fig. 2f, ordering by the normalized radial length is preferred since it represents the best compromise of peak intensity, lineshape and artifact levels, despite the noticeable deviation of its interferograms from being strictly exponential (Fig. 2g, h) and therefore a peak lineshape deviation from Lorentzian.

Application of time-ordered NUS to 3D HSQC-NOESY of Aβ1–42

Effectiveness of the time-ordered versus randomly ordered NUS sampling was evaluated for a concentrated sample of the Aβ1–42 peptide. High sample concentration of this peptide was used to potentially increase the population of transient dimeric and/or oligomeric species, and to identify the structure of such species using NOEs. However, although high sample concentrations of Aβ1–42 dramatically increase the signal to noise (S/N) attainable per unit of time in the NOESY spectrum, there is a detrimental cost on available measurement time due to the strong concentration dependence of the irreversible aggregation kinetics of Aβ1–42 which leads to the formation of amyloid fibrils. As demonstrated by Akasaka and co-workers for a disulfide-deficient variant of hen lysozyme, amyloid formation can also be inhibited and even reversed by using high hydrostatic pressure (Kamatari et al. 2005). Work by the Kalbitzer group demonstrated that high pressure can resolubilize Aβ1–40 amyloid fibrils (Munte et al. 2013; Cavini et al. 2018), presumably because the mature fibrils contain voids that result in a fibril volume that is higher than that of the corresponding disordered peptides. It might be expected that the number of water-inaccessible void volumes in lower order oligomeric species is substantially less than those of mature fibrils and that high hydrostatic pressure will have a correspondingly smaller adverse effect on the formation of such species (Roche et al. 2012). However, although we find that the use of high pressure and low temperature dramatically lowers the rate of Aβ1–42 aggregation, the peptide eventually aggregates to form pressure-resistant fibrils. Under the conditions used, specifically, at 3 kbar and 280 K, the soluble fraction of our ca 1.2 mM Aβ1–42 sample decayed approximately fourfold during the collection of two interleaved 3D NUS NOESY-HSQC spectra (Fig. 3).

Fig. 3 Plot of the Aβ1–42 signal intensity decay during the interleaved 3D NOESY-HSQC experiment. Blue circles represent the normalized intensity measured from the 1D spectra, corresponding to t1, t2 = 0, 0 time points, spiked every hour during the 3D data collection. The red line represents a bi-exponential fit, I(t) = 0.191 exp(−t/5.1) + 0.815 exp(−t/33.4) to the intensity decay, where t is the time in units of hours after the start of the measurement
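The fitted decay curve directly gives the scale factors needed for digital decay correction. The sketch below evaluates the bi-exponential fit and its inverse; the assumption that the 6144 interleaved time points are spread evenly over 38 h, and the function names, are ours.

```python
import numpy as np

def fitted_intensity(t_hours):
    """Bi-exponential fit to the normalized signal intensity (t in hours)."""
    t = np.asarray(t_hours, dtype=float)
    return 0.191 * np.exp(-t / 5.1) + 0.815 * np.exp(-t / 33.4)

def correction_factors(t_hours):
    """Upscaling factor applied to an FID recorded at time t."""
    return 1.0 / fitted_intensity(t_hours)

# Assumed timing: FIDs recorded at evenly spaced times over ca 38 h
t = np.linspace(0.0, 38.0, 6144)
scale = correction_factors(t)

# Thermal noise is scaled together with the signal, so the RMS of the
# factors estimates the overall increase in thermal noise.
rms_noise_gain = float(np.sqrt(np.mean(scale ** 2)))
print(f"I(38 h) = {fitted_intensity(38.0):.3f}, RMS noise gain = {rms_noise_gain:.2f}")
```

The fit drops to about 0.26 after 38 h, consistent with the approximately fourfold decay of the soluble fraction. Under the even-timing assumption, the RMS scale factor lies between 2 and 3, the same range as the noise increase of ca 2.4 quoted for the decay-corrected spectrum.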

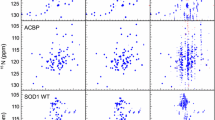

As expected, SMILE processing of the randomly ordered 3D NUS NOESY-HSQC spectrum results in a high level of apparent sampling “noise”, obscuring many of the weak NOE interactions present in this intrinsically disordered peptide (Fig. 4a). By contrast, processing of the simultaneously acquired time-ordered 3D NUS NOESY-HSQC data set yields a high-quality 3D spectrum. Figure 4 compares “skyline projections” over a ca. 4 ppm range in the 15N dimension, which encompasses about half of the residues in Aβ1–42, and illustrates the strong spectral improvement obtained by time-ordering of the NUS data. One-dimensional cross sections, taken through the 3D spectra at the amide of V39 further demonstrate this improvement (Fig. 5). We note that the amplitude modulation introduced by a decaying sample while using random ordering of its NUS sampling points adversely impacts all common NUS reconstruction protocols, which then yield spectra that become dominated by this amplitude “noise” to comparable extents (SI Fig. S3).

Fig. 4 Skyline-projected regions of 3D NOESY-HSQC spectra on the 1H–1H plane. The projection was limited to the 120.27–124.17 ppm region of 15N chemical shifts and therefore only includes amide signals of residues resonating in this region (depicted between the two dashed lines in SI Fig. S2). Two separate but fully interleaved sets of 3D time domain data were recorded, using duplicates of a single sampling list, but with the order of these sampling points randomized for a and sorted for b. c Reconstructed from the same experimental data as used for a, but with upscaling of each FID by the inverse of the fitted, bi-exponential decay function of Fig. 3. a, b are plotted at the same contour levels, while the lowest contour level is 1.6 times higher in c, reflecting the increase of the peak intensity by a factor of ca 1.6 resulting from the signal upscaling, which increases the intrinsic thermal noise level by a factor of ca 2.4. Individual cross sections through the 3D spectrum are compared in Fig. 5

Fig. 5 Cross sections taken through the 3D spectra for which projected regions are shown in Fig. 4. F1 cross sections for V39 are taken at (F2, F3) = (120.56, 8.08) ppm from the SMILE-reconstructed 3D NOESY-HSQC spectra acquired using a the normalized radial length ordered and b the randomly ordered sampling schedule, containing the same time points and a sparsity of 12% for each spectrum. c The same cross section obtained from the randomly ordered data set but with FID intensities corrected for sample decay prior to NUS reconstruction. In all panels, black traces are scaled to display the full amplitude of the diagonal resonance; red traces are upscaled tenfold.

In our time-ordered NUS 3D spectrum, NOE cross peaks with intensities greater than ca. 0.7% of the diagonal peak are reliably detected. Under the recording conditions, sequential 1HN–1HN cross peaks with intensities ranging between 2 and 3.5% are obtained for all sequential amides that are not obscured by resonance overlap (Fig. 6; SI Fig. S4). Intraresidue HN–Hα intensities are mostly in the 4–6% range relative to the diagonal intensity, and sequential \(\mathrm{H}^{\alpha}_{i-1}\) to HN NOEs are approximately two times stronger. These NOE intensity ratios are quite typical for an intrinsically disordered polypeptide (Mantsyzov et al. 2014), and at first glance seem fully compatible with prior conclusions that the Aβ1–42 peptide lacks significant populations of turns or meta-stable secondary structure elements that have lifetimes greater than about 1 ns (Roche et al. 2016). Virtually all of the βN(i, i + 1) connectivities observed in the non-oxidized form of Aβ1–40 (Hou et al. 2004), in addition to many not previously noted, could be unambiguously identified in the 3D spectrum (SI Fig. S4). Under different sample conditions, a number of long-range NOEs have also been reported for Aβ1–42, putatively reporting on transiently structured forms of the polypeptide (Kotler et al. 2015). Of those involving amide protons, the F4-HN to A21-Hβ cross peak could not be confirmed unambiguously because the A21 and A2 methyl protons perfectly overlap under our sample conditions. The presence of a weak G25-HN to D23-Hα NOE (SI Fig. S4) is consistent with earlier data (Kotler et al. 2015).

Fig. 6 Strip plot of the time-ordered 3D NOESY-HSQC for the last 9 residues of Aβ1–42. Sequential NOEs are marked by blue arrowed lines, unless there is a longer-range NOE passing through the sequential one. Red lines represent medium-range NOEs, with the inter-residue peaks circled in red. Noise and reconstruction artifacts above the contour threshold, as well as off-strip peaks, are marked ×. Each strip is taken from the 1H–1H plane and labeled with its HN chemical shift and residue. A complete strip plot is presented in SI Fig. S4, with the corresponding 1H–15N HSQC spectrum shown in SI Fig. S2.

Several weak NOEs, not commonly seen in intrinsically disordered polypeptides, can also be identified in our 3D NOESY-HSQC spectrum (Fig. 6). These include a weak, symmetric NOE between V36-HN and V39-HN, and a weak NOE between I41-HN and M35-Hα, which becomes more pronounced in spectra recorded at pH 8 (SI Fig. S5). Such interactions suggest the transient formation of a turn-type structure in this region of the polypeptide, which requires the additional two residues in Aβ1–42 compared to Aβ1–40. The transient presence of such a turn-containing motif in Aβ1–42, but not in Aβ1–40, could explain the small but significant amide chemical shift and 3JHNHα differences observed for residues M35-G38 between the two peptides (Roche et al. 2016).

As described previously (Ying et al. 2017), SMILE reconstructs the NMR signals using exponentially decaying sinusoidal functions. Since the interferograms of the time-ordered NUS data in Fig. 2g, h deviate from mono-exponential decay, the 3D time-ordered NOESY data serve as a good test for the robustness of the SMILE algorithm when the Lorentzian lineshape assumption is not strictly satisfied. For this purpose, the SMILE reconstruction of the time-ordered NOESY spectrum is compared to reconstructions by other widely used algorithms, including hmsIST (Hyberts et al. 2012), IRLS (Kazimierczuk and Orekhov 2011), and MDD (Orekhov et al. 2001). As shown for a representative cross section in SI Fig. S6, SMILE retains the ability to detect the weakest G37-V39 HN–HN NOE. Of the other programs, only the computationally expensive IRLS algorithm performs comparably to SMILE. This result demonstrates that the Lorentzian lineshape assumption in SMILE does not limit its performance in practical situations, even for time-ordered data that clearly deviate from exponential decay. The primary reason for this robustness is that SMILE effectively approximates the non-exponential decay of strong signals (which dominate the PSF noise) as a sum of exponentially decaying functions during successive iterations of the algorithm. The weakest peaks are either well approximated as a single exponentially decaying sinusoid within the available thermal signal-to-noise, or are never recognized by the SMILE algorithm, as they contribute minimally to the PSF noise, in which case they retain the lineshape encoded in the decay of the sampled time domain data.

Compensation for decay in randomly ordered NUS data

As illustrated above (Fig. 4a), standard NUS reconstruction of randomly ordered data results in a high degree of t1-noise-like features. The amplitude modulation of the collected time domain data induced by the progressive sample decay is intimately coupled to the use of a random sampling order. However, if the rate at which the sample degrades is known, this decay in sample intensity can be reversed. For a mono-exponential decay, this simply requires multiplying each acquired FID by exp(t/Td), where t is the time after starting data acquisition, and Td is the decay constant of the sample.
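The scaling step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `correct_decay`, the list-of-lists FID layout, and the toy numbers are hypothetical and are not taken from the processing scripts used for the spectra shown here; a single decay constant Td is assumed to be known.

```python
import math

def correct_decay(fids, start_times, td):
    """Scale each FID by exp(t/td) to undo a mono-exponential sample decay.

    fids        : list of FIDs, each a list of complex data points
    start_times : time at which each FID was acquired, measured from the
                  start of the experiment (same units as td)
    td          : decay constant of the sample (assumed known)
    """
    if len(fids) != len(start_times):
        raise ValueError("need one acquisition time per FID")
    corrected = []
    for fid, t in zip(fids, start_times):
        gain = math.exp(t / td)  # inverse of the attenuation exp(-t/td)
        corrected.append([gain * point for point in fid])
    return corrected

# Toy data (hypothetical): the second FID was recorded one decay constant
# into the run, so it was attenuated by exp(-1) relative to the first.
td = 10.0
fids = [[1 + 0j, 0.5j],
        [math.exp(-1) * (1 + 0j), math.exp(-1) * 0.5j]]
restored = correct_decay(fids, [0.0, 10.0], td)
print(restored[1])
```

Note that the gain multiplies the thermal noise in each FID as well as the signal, so late FIDs re-enter the reconstruction with amplified noise.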

Insertion of multiple (t1, t2) = (0, 0) time points in the sampling list of the experiment permits straightforward monitoring of the sample decay during the NUS data collection process (Fig. 3). For Aβ1–42, the sample decay is well fitted by a bi-exponential function, which is then inverted and used to upscale all acquired FIDs accordingly. This procedure negates the intensity variation induced by the random ordering of the acquired time domain data. However, it also amplifies the noise, and thereby the weight, of the FIDs acquired towards the end of the experiment, where signals are weakest. The procedure therefore decreases the attainable sensitivity, an effect clearly visible in the spectrum reconstructed using this approach (Figs. 4c, 5c). This decrease in sensitivity is pronounced for our Aβ1–42 spectrum because the monomer concentration had decreased about fourfold by the time the last FID was collected. For samples that decay by less than a factor of two, the corresponding sensitivity loss becomes much smaller, and inverting the effect of sample decay prior to reconstruction of randomly ordered NUS data becomes a viable option. For the time-ordered data, digitally reversing the sample decay generally is not needed: when normalized radial ordering of the sampling list is used, the noise originating from the very small amplitude modulation that results from the variability in the time steps will generally be far below the thermal noise in typical NUS experiments (Fig. 2f–h). The primary consequence of digitally reversing the effect of sample decay then becomes comparable to the result of apodizing conventional, fully sampled time domain data with a positive exponential, line-narrowing function: adversely impacting sensitivity while (modestly) improving spectral resolution.
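To make the sensitivity cost of this upscaling concrete, the following sketch estimates how much the signal and the thermal noise grow when every FID is divided by a bi-exponential decay curve. It rests on simplifying assumptions that are ours, not the paper's: FIDs are collected at evenly spaced times, the thermal noise is uncorrelated and of equal variance in every FID, and peak height scales with the mean FID amplitude. The function names and the decay parameters are hypothetical and are not the fitted values of Fig. 3.

```python
import math

def biexp(t, a, tau_fast, tau_slow):
    """Fraction of the initial signal remaining at time t (bi-exponential model)."""
    return a * math.exp(-t / tau_fast) + (1.0 - a) * math.exp(-t / tau_slow)

def upscaling_cost(times, a, tau_fast, tau_slow):
    """Return (signal_gain, noise_gain) for inverse-decay upscaling.

    signal_gain : restored amplitude relative to the mean uncorrected amplitude
    noise_gain  : RMS of the per-FID gains, i.e. the growth of uncorrelated,
                  equal-variance thermal noise
    """
    remaining = [biexp(t, a, tau_fast, tau_slow) for t in times]
    gains = [1.0 / r for r in remaining]
    signal_gain = 1.0 / (sum(remaining) / len(remaining))
    noise_gain = math.sqrt(sum(g * g for g in gains) / len(gains))
    return signal_gain, noise_gain

# Hypothetical decay parameters and a 60 h experiment sampled every 0.1 h.
times = [0.1 * i for i in range(601)]
sig, noise = upscaling_cost(times, a=0.4, tau_fast=8.0, tau_slow=80.0)
print(f"signal gain {sig:.2f}, noise gain {noise:.2f}, "
      f"relative sensitivity {sig / noise:.2f}")
```

Under these assumptions the noise gain can never be smaller than the signal gain, so the relative sensitivity is at most 1 and approaches 1 only when the sample barely decays, in line with the remark that the correction is most attractive for samples that lose less than half of their signal.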

Concluding remarks

Slow degradation of a sample during the collection of a conventional multi-dimensional NMR spectrum manifests itself as additional line broadening in the indirectly sampled frequency dimensions, most severely in the dimension that is incremented last. Analogously, fluctuations in sample temperature, amplifier stability, shimming, or other instrumental parameters that are slow on the time scale over which an individual FID is signal-averaged will result in deviations from ideality of the frequency domain line shapes. On the other hand, if such fluctuations occur on time scales comparable to the collection of individual FIDs, they manifest themselves as t1-noise (Mehlkopf et al. 1984). If the order of the recorded sample points is random in the indirect dimensions, as commonly practiced in NUS data collection, then the subsequent re-ordering, which is required prior to Fourier transformation, redistributes the gradual changes in amplitude, phase, or frequency of the collected FIDs. This re-ordering therefore transforms these slow variations into changes that appear random and uncorrelated between sequential FIDs in the ordered time domain matrix, thereby resulting in t1-noise-like artifacts rather than the line-shape distortions that would be seen in fully sampled, conventionally acquired multi-dimensional NMR spectra.
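The effect of re-ordering can be illustrated with a small toy calculation. The sketch below is a deliberately simplified model and not a simulation of the experiments reported here: it considers a single, fully sampled indirect dimension, assumes the sample amplitude drops linearly with acquisition position, and uses a hypothetical helper `envelope_roughness` that measures the mean amplitude jump between neighbouring t1 points after the data have been sorted back into t1 order.

```python
import random

def envelope_roughness(acq_order, n_points, total_decay=0.75):
    """Mean absolute amplitude step between neighbouring t1 points.

    acq_order[j] is the t1 index recorded as the j-th FID. The sample
    amplitude is assumed to fall linearly from 1 to 1 - total_decay over
    the course of the acquisition (a simplification).
    """
    amp = [0.0] * n_points
    for j, k in enumerate(acq_order):
        amp[k] = 1.0 - total_decay * j / (n_points - 1)
    steps = [abs(amp[k + 1] - amp[k]) for k in range(n_points - 1)]
    return sum(steps) / len(steps)

n = 200
sequential = list(range(n))      # t1 incremented in conventional order
shuffled = sequential[:]
random.Random(0).shuffle(shuffled)  # randomly ordered acquisition

rough_seq = envelope_roughness(sequential, n)
rough_rand = envelope_roughness(shuffled, n)
print(f"time-ordered: {rough_seq:.4f}   random order: {rough_rand:.4f}")
```

With time-ordered acquisition the decay appears as a smooth envelope along t1, which corresponds to extra line broadening. With random ordering the same total decay is scattered over neighbouring t1 points as large, uncorrelated amplitude jumps, which is the origin of the t1-noise-like artifacts.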

For multi-dimensional NMR data collected with randomly ordered NUS, the distortions from instabilities and sample degradation superimpose on the collected data. Re-ordering of the collected data prior to SMILE reconstruction then redistributes the distortions in the same way as would happen for a fully sampled N-dimensional spectrum with random ordering of the indirect time domain points and therefore adds an element of t1-noise to the randomly ordered NUS data. This t1-noise interferes with faithful reconstruction of the frequency domain and is most severe for slices in the indirect dimensions that contain intense resonances. Keeping the ordering of the indirectly sampled dimensions conventional during NUS data collection will strongly reduce the t1-noise-like artifacts during spectral reconstruction. On the other hand, slow fluctuations in line shape in the directly detected dimension, due to changes in shimming, temperature, or other instrumental parameters, which would manifest as t1-noise in conventional (randomly ordered) NUS, will instead result in line shape distortions. When NUS data are collected in a time-ordered fashion, monotonic sample decay will mainly manifest itself as additional line broadening. The precise protocol used for ordering the time points in the indirect dimensions of a q-D NMR spectrum is not particularly critical, but the ordering is preferably carried out on the basis of the length of the normalized indirect time domain vector, \(\sqrt{\sum_{i=1}^{q-1} \left( k_i/N_i \right)^2}\).
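Sorting an existing sampling list by this normalized radial length can be sketched as follows. The interpretation of the symbols is an assumption made for the example: k_i is taken to be the increment index of a sampling point in indirect dimension i and N_i the number of increments of the full grid in that dimension. The function names and the small schedule are hypothetical.

```python
import math

def normalized_radial_length(point, sizes):
    """sqrt(sum_i (k_i / N_i)^2) for one sampling point (k_1, ..., k_{q-1})."""
    return math.sqrt(sum((k / n) ** 2 for k, n in zip(point, sizes)))

def time_order(schedule, sizes):
    """Return the schedule sorted by increasing normalized radial length.

    sorted() is stable, so points of equal length keep their input order.
    """
    return sorted(schedule, key=lambda p: normalized_radial_length(p, sizes))

# Hypothetical schedule for a 3D experiment (two indirect dimensions).
schedule = [(5, 1), (0, 0), (2, 7), (0, 3), (4, 4)]
sizes = (8, 16)  # assumed full-grid sizes N_1, N_2
ordered = time_order(schedule, sizes)
print(ordered)
```

Because only the acquisition order changes, the set of sampled points, and hence the point-spread function of the schedule, is the same before and after sorting.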

Despite the superficial similarity to the concentric ring/shell sampling methods (Marion 2006; Coggins and Zhou 2008; Coggins et al. 2010), it is important to realize that our time ordering approach is not a sampling method, but instead uses the normalized radial length to sort the schedule generated by any of the common sampling methods. Therefore, use of a time-ordered schedule does not cause the artifact patterns associated with concentric ring/shell sampling, unless the time-ordered sampling scheme itself is generated by such a method.

It is worth pointing out that the time-ordering of the sampling schedule is applicable to both real-time and constant-time evolution. However, in cases where the experiment includes a constant-time evolution period, one could order the time points such that the constant-time dimension is more broadened by the sample decay, thereby leading to more balanced line widths in the indirect dimensions. Alternatively, using conventional ordering with the constant-time dimension incremented first will optimize sensitivity at the expense of some loss of resolution in the dimension that is incremented last.

As reported previously (Ying et al. 2017), for data processed with the SMILE program, exponentially weighting a sampling schedule or using weighted Poisson-gap sampling (Hyberts et al. 2012) decreases the accuracy of peak positions and also lowers the spectral resolution. It is worth noting that normalized radial length ordering effectively favors the data points for which t1 and t2 are short, because these data points are collected before the sample has decayed significantly. It is important to take this factor into account when designing deviations from regular, fully randomly spaced sampling schemes.

Clearly, in addition to the choice of a sampling schedule when recording NUS data, the ordering of the sampling schedule can have important consequences for the final spectral characteristics. It therefore is important to specify both the sampling schedule and the ordering method used when presenting NUS spectra, in particular when recorded on unstable samples.

Application of the time-ordered NUS protocol to the collection of a 3D NOESY-HSQC spectrum on a highly concentrated Aβ1–42 sample, kept at 3 kbar of pressure and 280 K to minimize fibril formation and concomitant signal loss, resulted in a very high-quality spectrum. Unless obscured by resonance overlap, all conventional sequential NOE connectivities, normally seen in disordered polypeptides, were clearly observed, including a full set of sequential HN–HN NOEs. Several less common NOE interactions were also observed, however. These include several medium-range NOEs for the C-terminal region, as well as multiple weak NOEs for residues D23-K28 that are not normally seen in linear unstructured peptides. None of these interactions appear spatially proximate in the intact fibril, whose structure was recently determined by solid-state NMR spectroscopy (Colvin et al. 2016), indicating that the presence of unusual NOE contacts in solution at high pressure does not foreshadow the structure adopted in the amyloid state. Despite the high concentration used, we also do not find evidence for intermolecular interactions. In particular, the exceptionally close agreement between chemical shifts measured at concentrations that differ by a factor of 60 (Fig. S2) strongly indicates the absence of significantly populated intermolecular interactions under the conditions where we carried out our experiments. Numerous other medium and long-range NOEs, mostly involving sidechain–sidechain interactions, have previously been reported by others at atmospheric pressure (Ball et al. 2011). Our result therefore suggests that the use of high pressure adversely impacts the formation of transient long-range interactions, which could correlate with the much lower rate at which fibrils form under such conditions.

References

Ball KA et al (2011) Homogeneous and heterogeneous tertiary structure ensembles of amyloid-beta peptides. Biochemistry 50:7612–7628

Balsgart NM, Vosegaard T (2012) Fast forward maximum entropy reconstruction of sparsely sampled data. J Magn Reson 223:164–169

Barna JCJ, Laue ED, Mayger MR, Skilling J, Worrall SJP (1987) Exponential sampling, an alternative method for sampling in two-dimensional NMR experiments. J Magn Reson 73:69–77

Bermel W et al (2012) Speeding up sequence specific assignment of IDPs. J Biomol NMR 53:293–301

Billeter M (2017) Non-uniform sampling in biomolecular NMR. J Biomol NMR 68:65–66

Bostock MJ, Holland DJ, Nietlispach D (2012) Compressed sensing reconstruction of undersampled 3D NOESY spectra: application to large membrane proteins. J Biomol NMR 54:15–32

Cavini IA et al (2018) Inhibition of amyloid Aβ aggregation by high pressures or specific D-enantiomeric peptides. Chem Commun (Cambridge UK) 54:3294–3297

Coggins BE, Zhou P (2008) High resolution 4-D spectroscopy with sparse concentric shell sampling and FFT-CLEAN. J Biomol NMR 42:225–239

Coggins BE, Venters RA, Zhou P (2010) Radial sampling for fast NMR: concepts and practices over three decades. Prog Nucl Magn Reson Spectrosc 57:381–419

Coggins BE, Werner-Allen JW, Yan A, Zhou P (2012) Rapid protein global fold determination using ultrasparse sampling, high-dynamic range artifact suppression, and time-shared NOESY. J Am Chem Soc 134:18619–18630

Colvin MT et al (2016) Atomic resolution structure of monomorphic A beta(42) amyloid fibrils. J Am Chem Soc 138:9663–9674

Delaglio F et al (1995) NMRPipe: a multidimensional spectral processing system based on UNIX pipes. J Biomol NMR 6:277–293

Delsuc MA, Tramesel D (2006) Application of maximum-entropy processing to NMR multidimensional datasets, partial sampling case. CR Chim 9:364–373

Eghbalnia HR, Bahrami A, Tonelli M, Hallenga K, Markley JL (2005) High-resolution iterative frequency identification for NMR as a general strategy for multidimensional data collection. J Am Chem Soc 127:12528–12536

Fawzi NL, Ying J, Ghirlando R, Torchia DA, Clore GM (2011) Atomic-resolution dynamics on the surface of amyloid-beta protofibrils probed by solution NMR. Nature 480:268

Hoch JC, Maciejewski MW, Mobli M, Schuyler AD, Stern AS (2014) Nonuniform sampling and maximum entropy reconstruction in multidimensional NMR. Acc Chem Res 47:708–717

Holland DJ, Bostock MJ, Gladden LF, Nietlispach D (2011) Fast multidimensional NMR spectroscopy using compressed sensing. Angew Chem Int Ed 50:6548–6551

Hou LM et al (2004) Solution NMR studies of the A beta(1–40) and A beta(1–42) peptides establish that the met35 oxidation state affects the mechanism of amyloid formation. J Am Chem Soc 126:1992–2005

Hyberts SG, Milbradt AG, Wagner AB, Arthanari H, Wagner G (2012) Application of iterative soft thresholding for fast reconstruction of NMR data non-uniformly sampled with multidimensional Poisson Gap scheduling. J Biomol NMR 52:315–327

Hyberts SG, Robson SA, Wagner G (2013) Exploring signal-to-noise ratio and sensitivity in non-uniformly sampled multi-dimensional NMR spectra. J Biomol NMR 55:167–178

Hyberts SG, Arthanari H, Robson SA, Wagner G (2014) Perspectives in magnetic resonance: NMR in the post-FFT era. J Magn Reson 241:60–73

Kamatari YO, Yokoyama S, Tachibana H, Akasaka K (2005) Pressure-jump NMR study of dissociation and association of amyloid protofibrils. J Mol Biol 349:916–921

Kay LE, Keifer P, Saarinen T (1992) Pure absorption gradient enhanced heteronuclear single quantum correlation spectroscopy with improved sensitivity. J Am Chem Soc 114:10663–10665

Kazimierczuk K, Orekhov VY (2011) Accelerated NMR spectroscopy by using compressed sensing. Angew Chem Int Ed 50:5556–5559

Kern D et al (1999) Structure of a transiently phosphorylated switch in bacterial signal transduction. Nature 402:894–898

Kotler SA et al (2015) High-resolution NMR characterization of low abundance oligomers of amyloid-beta without purification. Sci Rep 5:11811

Lucast LJ, Batey RT, Doudna JA (2001) Large-scale purification of a stable form of recombinant tobacco etch virus protease. Biotechniques 30:544–544

Maciejewski MW et al (2017) NMRbox: a resource for biomolecular NMR computation. Biophys J 112:1529–1534

Mantsyzov AB et al (2014) A maximum entropy approach to the study of residue-specific backbone angle distributions in alpha-synuclein, an intrinsically disordered protein. Protein Sci 23:1275–1290

Marion D (2006) Processing of ND NMR spectra sampled in polar coordinates: a simple Fourier transform instead of a reconstruction. J Biomol NMR 36:45–54

Marion D, Ikura M, Tschudin R, Bax A (1989) Rapid recording of 2D NMR spectra without phase cycling. Application to the study of hydrogen exchange in proteins. J Magn Reson 85:393–399

Mayzel M, Rosenlow J, Isaksson L, Orekhov VY (2014) Time-resolved multidimensional NMR with non-uniform sampling. J Biomol NMR 58:129–139

Mehlkopf AF, Korbee D, Tiggelman TA, Freeman R (1984) Sources of t1 noise in two-dimensional NMR. J Magn Reson 58:315–323

Miljenovic T, Jia XY, Lavrencic P, Kobe B, Mobli M (2017) A non-uniform sampling approach enables studies of dilute and unstable proteins. J Biomol NMR 68:119–127

Mobli M, Maciejewski MW, Gryk MR, Hoch JC (2007) Automatic maximum entropy spectral reconstruction in NMR. J Biomol NMR 39:133–139

Mobli M, Maciejewski MW, Schuyler AD, Stern AS, Hoch JC (2012) Sparse sampling methods in multidimensional NMR. Phys Chem Chem Phys 14:10835–10843

Munte CE, Erlach MB, Kremer W, Koehler J, Kalbitzer HR (2013) Distinct conformational states of the Alzheimer-amyloid peptide can be detected by high-pressure NMR spectroscopy. Angew Chem Int Ed 52:8943–8947

Orekhov VY, Jaravine VA (2011) Analysis of non-uniformly sampled spectra with multi-dimensional decomposition. Prog Nucl Magn Reson Spectrosc 59:271–292

Orekhov VY, Ibraghimov IV, Billeter M (2001) MUNIN: a new approach to multi-dimensional NMR spectra interpretation. J Biomol NMR 20:49–60

Palmer AG, Cavanagh J, Wright PE, Rance M (1991) Sensitivity improvement in proton-detected 2-dimensional heteronuclear correlation NMR-spectroscopy. J Magn Reson 93:151–170

Palmer MR et al (2015) Sensitivity of nonuniform sampling NMR. J Phys Chem B 119:6502–6515

Peterson RW, Wand AJ (2005) Self-contained high-pressure cell, apparatus, and procedure for the preparation of encapsulated proteins dissolved in low viscosity fluids for nuclear magnetic resonance spectroscopy. Rev Sci Instrum 76(9):094101

Roche J et al (2012) Cavities determine the pressure unfolding of proteins. Proc Natl Acad Sci USA 109:6945–6950

Roche J, Shen Y, Lee JH, Ying J, Bax A (2016) Monomeric A beta(1–40) and A beta(1–42) peptides in solution adopt very similar Ramachandran map distributions that closely resemble random coil. Biochemistry 55:762–775

Rovnyak D et al (2004) Accelerated acquisition of high resolution triple-resonance spectra using non-uniform sampling and maximum entropy reconstruction. J Magn Reson 170:15–21

Schanda P, Forge V, Brutscher B (2007) Protein folding and unfolding studied at atomic resolution by fast two-dimensional NMR spectroscopy. Proc Natl Acad Sci USA 104:11257–11262

Schlepckow K, Wirmer J, Bachmann A, Kiefhaber T, Schwalbe H (2008) Conserved folding pathways of alpha-lactalbumin and lysozyme revealed by kinetic CD, fluorescence, NMR, and interrupted refolding experiments. J Mol Biol 378:686–698

Schuyler AD, Hoch JC (2018) NUScon, nonuniform sampling and reconstruction challenge in NMR spectroscopy. https://nuscon.org/

Ying J, Delaglio F, Torchia DA, Bax A (2017) Sparse multidimensional iterative lineshape-enhanced (SMILE) reconstruction of both non-uniformly sampled and conventional NMR data. J Biomol NMR 68:101–118

Acknowledgements

This work was supported by the Intramural Research Program of the National Institute of Diabetes and Digestive and Kidney Diseases. We acknowledge use of the NIDDK Mass Spectrometry facility and thank Annie Geronimo and Dr. Marielle Waelti for technical support, Dr. Dennis A. Torchia, Dr. Frank Delaglio, Dr. Andy Byrd, and Dr. Hsiau-Wei Lee for valuable discussions, and Professor Vladislav Orekhov for his kind help with optimizing the MDDNMR processing scripts used to reconstruct some of the data presented in SI Figs. S3 and S6. These reconstructions were performed by making use of NMRbox: National Center for Biomolecular NMR Data Processing and Analysis, a Biomedical Technology Research Resource (BTRR), which is supported by NIH Grant P41GM111135 (NIGMS).

Funding

Funding was provided by National Institute of Diabetes and Digestive and Kidney Diseases (Grant No. DK075141-02).

Additional information

Dedicated to Dennis A. Torchia on the occasion of his 80th Birthday.

Cite this article

Ying, J., Barnes, C.A., Louis, J.M. et al. Importance of time-ordered non-uniform sampling of multi-dimensional NMR spectra of Aβ1–42 peptide under aggregating conditions. J Biomol NMR 73, 429–441 (2019). https://doi.org/10.1007/s10858-019-00235-7

DOI: https://doi.org/10.1007/s10858-019-00235-7