Abstract

In this paper, we introduce a generalized asymmetric fronts propagation model based on the geodesic distance maps and the Eikonal partial differential equations. One of the key ingredients for the computation of the geodesic distance map is the geodesic metric, which can govern the action of the geodesic distance level set propagation. We consider a Finsler metric with the Randers form, through which the asymmetry and anisotropy enhancements can be taken into account to prevent the fronts leaking problem during the fronts propagation. These enhancements can be derived from the image edge-dependent vector field such as the gradient vector flow. The numerical implementations are carried out by the Finsler variant of the fast marching method, leading to very efficient interactive segmentation schemes. We apply the proposed Finsler fronts propagation model to image segmentation applications. Specifically, the foreground and background segmentation is implemented by the Voronoi index map. In addition, for the application of tubularity segmentation, we exploit the level set lines of the geodesic distance map associated with the proposed Finsler metric providing that a thresholding value is given.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Fronts propagation models have been considerably developed for the applications of image segmentation and boundary detection since the original level set framework proposed by Osher and Sethian [44]. Guaranteed by their solid mathematical background, the fronts propagation models lead to strong abilities in a wide variety of computer vision tasks such as image segmentation and boundary detection [11, 12, 35, 55]. In their basic formulation, the boundaries of an object are modeled as closed contours, each of which can be obtained by evolving an initial closed curve in terms of a speed function till the stopping criteria reached. A typical speed function usually involves a curve regularity penalty, for instance the curvature, and an image data term. The use of curve evolution scheme for image segmentation can be backtrack to the original active contour model [28] which has inspired a amount of approaches [16, 18, 20, 30, 38, 53].

Let \(\varOmega \subset \mathbb {R}^2\) be an open bounded domain. Based on the level set framework [44], a closed contour \(\gamma \) can be retrieved by identifying the zero-level set line of a scalar function \(\phi :\varOmega \rightarrow \mathbb {R}\) such that \(\gamma :=\{\mathbf {x}\in \varOmega ;\,\phi (\mathbf {x})=0\}\). By this curve representation, the curve evolution can be carried out by evolving the function \(\phi \)

where \(\xi :\varOmega \rightarrow \mathbb {R}\) is a speed function and t denotes the time. At any time t, the curve \(\gamma \) can be recovered by identifying the zero-level set line of the function \(\phi \). Using the level set evolutional equation in Eq. (1), the contours splitting and merging can be adaptively handled. The main drawback of the level set-based fronts propagation method is its expensive computational burden. In order to alleviate this problem, Adalsteinsson and Sethian [1] suggested to restrict the computation for the update of the level set function \(\phi \) within a narrow band. In this case, only the values of \(\phi \) at the points nearby the zero-level set lines are updated according to Eq. (1). Moreover, the distance-preserving level set method [32] is able to avoid level set reinitialization by enforcing the level set function \(\phi \) as a signed Euclidean distance function from the current curves during the evolution.

Despite the efforts devoted to the reduction in the computation burden, the classical level set-based fronts propagation scheme (1) is still impractical especially for real-time applications. In order to solve this issue, Malladi and Sethian [36] proposed a new geodesic distance-based fronts propagation model for real-time image segmentation. It relies on a geodesic distance map \(\mathcal {U}_\mathfrak {s}:\varOmega \rightarrow \mathbb {R}^+\cup \{0\}\) associated with a set \(\mathfrak {s}\) of source points. The value of \(\mathcal {U}_\mathfrak {s}(\mathbf {x})\) at a point \(\mathbf {x}\) in essence is equivalent to the minimal geodesic curve length between the point \(\mathbf {x}\) and a source point \(\mathbf {s}\in \mathfrak {s}\) in the sense of an isotropic Riemannian metric, which is dependent on a potential function \(P:\varOmega \rightarrow \mathbb {R}^+\). The geodesic distance map \(\mathcal {U}_\mathfrak {s}\) coincides with the viscosity solution to the Eikonal equation, which can be efficiently computed by the fast marching methods [40,41,42, 49, 51], leading to a possible real-time solution to the segmentation problem. In the context of segmentation, the potential function usually has small values in the homogeneous region and large values near the boundaries. Based on the geodesic distance map \(\mathcal {U}_\mathfrak {s}\), a curve can be denoted by the T-level set of the distance map \(\mathcal {U}_\mathfrak {s}\), where \(T>0\) is a geodesic distance thresholding value. In other words, a curve \(\gamma \) can be characterized by the distance value T such that

By assigning large values to the potential function P around image edges, the basic idea behind [36] is to use the curve \(\gamma \) defined in Eq. (2) to delineate the boundaries of interesting objects. One difficulty suffered by the geodesic distance-based fronts propagation scheme is that the fronts may leak outside the targeted regions before all the points of these regions have been visited by the fronts. The leakages sometimes occur near the boundaries close to the source positions or in weak boundaries, especially when dealing with long and thin structures. The main reason for this leaking problem is the positivity constraint required by the metric (potential) functions for the Eikonal equation. In order to solve this problem, Cohen and Deschamps [19] suggested an adaptive freezing scheme for tubular structure segmentation. They took into account a Euclidean curve length criterion to prevent the fast marching fronts to travel outside the tubular structures in order to avoid the leaking problem. The main difficulty of this model lies at the choice of a suitable Euclidean curve length thresholding value. Chen and Cohen [13] considered an anisotropic Riemannian metric for vessel tree segmentation, where the vessel orientations are taken into account to mitigate the leaking problem. Li and Yezzi [33] proposed a dual fronts propagation model for active contours evolution, where the geodesic metric includes both edge and region statistical information. The basic idea of [33] is to propagate the fronts simultaneously from the exterior and interior boundaries of the narrowband. The optimal contours can be recovered from the positions where the two fast marching fronts meet. These meeting interfaces also correspond to the boundaries of the adjacent Voronoi regions. Arbeláez and Cohen [3, 4] and Bai and Sapiro [5] made use of the Voronoi index map and the Voronoi region for interactive image segmentation, both of which can be constructed through the geodesic distance maps associated with the pseudopath metrics. In their formulation, these models [3,4,5] allow the values of the metrics to be zero and to be dependent on path orientations. The image segmentation can be characterized by the Voronoi regions, each of which involves all the points labeled by the same Voronoi index. In this case, the contours indicating the tagged object boundaries are no longer the level sets of the geodesic distance map, but the common boundaries of the adjacent Voronoi regions. Other interesting geodesic distance map-based image segmentation methods include [10, 21, 45].

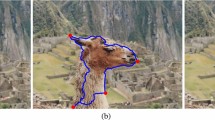

Fronts propagation for interactive image segmentation through different geodesic metrics. a Original image and the seeds, where blue and green brushes indicate the seeds which are placed in the foreground and background, respectively. b–d Segmentation results via the isotropic Riemannian metric, the anisotropic Riemannian metric and the Finsler metric. The blue curves represent the segmented foreground boundaries (Color figure online)

A common point of the Eikonal fronts propagation models mentioned above is that the segmentation procedure is carried out by using the geodesic distance map itself. Finding geodesics through the gradient descent on the geodesic distance map is an alternative way of using the Eikonal equation framework for practical applications. Since the original work by Cohen and Kimmel [20], a broad variety of minimal path models have been proposed to solve various image analysis problems [7, 8, 15, 29, 34, 39]. Recently, a significant contribution to the minimal path framework lies at the development of the curvature-penalized geodesic models such as [6, 16, 23, 37]. In the basic formulations of [14, 16, 23], the curve length values of the minimal geodesics with curvature penalization can be approximately measured by strongly anisotropic Riemannian metrics or Finsler metrics established in an orientation-lifted space. As a result, the geodesic distance maps associated with these metrics can be efficiently and accurately estimated by the Hamiltonian fast marching method [43]. The curvature-penalized geodesics can be recovered via a gradient descent scheme on the associated geodesic distance map.

In this paper, we extend the geodesic distance map-based fronts propagation framework to a Finsler case, where both the edge anisotropy and asymmetry are taken into account simultaneously. Our model thus relies on the geodesic distance map itself instead of minimal paths. Moreover, we also present two ways to construct the Finsler metrics with respect to the applications of foreground and background object segmentation and tubularity segmentation. In order to quickly find suitable and reliable solutions in various situations, it is important for the fronts propagation models with a single-pass manner to be robust against to the leaking problem. The existing fronts propagation approaches invoking either Riemannian metrics [13, 19] or pseudopath metrics [3,4,5] do not take into account the edge asymmetry information. This may increase the risk of the leaking problem especially when the provided seeds are close to the targeted boundaries. We show an example of the leaking problem in Fig. 1. In Fig. 1a, the source points inside the foreground and background are indicated by green and blue brushes, respectively. The contours in Fig. 1b, c obtained from the Riemannian metrics pass through the boundaries before the whole object has been covered by the fast marching fronts. In contrast, the segmented contour derived from the proposed Finsler metric can avoid such problem as shown in Fig. 1d.

1.1 Paper Outline

The remaining of this paper is organized as follows: In Sect. 2, we introduce the geodesic distance map associated with a general Finsler metric, the Voronoi regions and the relevant numerical tool. Sect. 3 presents the construction of the asymmetric Finsler metrics associated with different image segmentation applications. The numerical considerations for the Finsler metrics-based fronts propagation are introduced in Sect. 4. The experimental results and the conclusion are, respectively, presented in Sects. 5 and 6.

2 Background on Geodesic Distance Map

A Finsler geodesic metric \(\mathcal {F}:\varOmega \times \mathbb {R}^2\rightarrow [0,+\infty ]\) is a continuous function over the domain \(\varOmega \times \mathbb {R}^2\). For each fixed point \(\mathbf {x}\in \varOmega \), the geodesic metric \(\mathcal {F}(\mathbf {x},\mathbf {v})\) can be characterized by an asymmetric norm of \(\mathbf {v}\in \mathbb {R}^2\). In other words, the Finsler geodesic metric \(\mathcal {F}\) is convex and 1-homogeneous on its second argument. It is also potentially asymmetric such that \(\exists \mathbf {x}\in \varOmega \) and \(\exists \mathbf {v}\in \mathbb {R}^2\), the following inequality is held

The geodesic curve length associated with the metric \(\mathcal {F}\) along a Lipschitz continuous curve \(\mathcal {C}\) can be expressed by

where \(\mathcal {C}^\prime (s)=\frac{d}{\mathrm{d}s}\mathcal {C}(s)\) is the first-order derivative of the curve \(\mathcal {C}\) and s is the arc length parameter of the curve \(\mathcal {C}\).

Letting \(\mathfrak {s}\subset \varOmega \) be a set which involves all the source points. The minimal curve length from \(\mathbf {y}\) to \(\mathbf {x}\) with respect to the Finsler metric \(\mathcal {F}\) is defined by

where \(\mathcal {A}_{\mathbf {y},\mathbf {x}}\) is the set of all the Lipschitz continuous curves linking from a point \(\mathbf {y}\) to \(\mathbf {x}\in \varOmega \).

The geodesic distance map \(\mathcal {U}_\mathfrak {s}\) associated with the geodesic metric \(\mathcal {F}\) can be defined in terms of the minimal curve length \(\mathcal {D}_\mathcal {F}\) in Eq. (5) such that

The geodesic distance map \(\mathcal {U}_{\mathfrak {s}}\) is the unique viscosity solution to the Eikonal equation [16, 41]

where \(\nabla \mathcal {U}_\mathfrak {s}\) denotes the standard Euclidean gradient of \(\mathcal {U}_\mathfrak {s}\) and \(\langle \cdot ,\cdot \rangle \) is the Euclidean scalar product in the Euclidean space \(\mathbb {R}^2\).

The Eikonal equation in Eq. (7) can be interpreted by the Bellman’s optimality principle which states that

where \(\varLambda (\mathbf {x})\subset \varOmega \) is a neighborhood of point \(\mathbf {x}\) and \(\partial \varLambda (\mathbf {x})\) is the boundary of \(\varLambda (\mathbf {x})\). This interpretation is a key ingredient for the numerical computation of the geodesic distance map via the fast marching method [41].

Course of the fast marching fronts propagation for the computation of the geodesic distance map \(\mathcal {U}_\mathfrak {s}\). a Original image. The blue brush indicates the source point set \(\mathfrak {s}\) such that \(\mathcal {U}_\mathfrak {s}(\mathbf {x})=0,\,\forall \mathbf {x}\in \mathfrak {s}\). b–e Course of the fast marching fronts propagation (Color figure online)

2.1 Voronoi Index Map

In this section, we consider a more general case for which a family of source point sets are provided. Suppose that these source point sets are denoted by \(\mathfrak {s}_k\) and are indexed by \(k\in \{1,2,\cdots ,n\}\) with n the total number of source point sets. For the sake of simplicity, we note \(\mathfrak {s}=\cup _{k=1}^n\mathfrak {s}_k\).

For a given geodesic metric \(\mathcal {F}\), we can compute the respective geodesic distance map \(\mathcal {U}_k\) associated with each source point set \(\mathfrak {s}_k\) by Eq. (6). A Voronoi index map is a function \(\mathcal {L}:\varOmega \rightarrow \{1,2,\cdots ,n\}\) such that for any source point \(\mathbf {x}\in \mathfrak {s}_k\)

and for any domain point \(\mathbf {x}\in \varOmega \backslash \mathfrak {s}\), the Voronoi index \(\mathcal {L}(\mathbf {x})\) is identical to the index of the closest source point set in the sense of the geodesic distance [4, 7]. One can construct a Voronoi index map \(\mathcal {L}\) in terms of the geodesic distance maps \(\mathcal {U}_k\) (\(1\le k\le n\)) by

By the Voronoi index map \(\mathcal {L}\), the whole domain \(\varOmega \) can be partitioned into n Voronoi regions \(\mathcal {V}_k\subset \varOmega \)

The common boundary \(\varGamma _{i,j}:=\partial \mathcal {V}_i\cap \partial \mathcal {V}_j\) of two adjacent Voronoi regions \(\mathcal {V}_i\) and \(\mathcal {V}_j\) is comprised of a collection of equidistant points to the source point sets \(\mathfrak {s}_i\) and \(\mathfrak {s}_j\), i.e.,

Finally, we consider a geodesic distance map \(\mathcal {U}_\mathfrak {s}\) associated with the set \(\mathfrak {s}=\cup _k\mathfrak {s}_k\) which can be expressed by

where \(\mathcal {U}_k\) is the geodesic distance map with respect to the source point set \(\mathfrak {s}_k\) indexed by k.

2.2 Fast Marching Method

Many approaches [9, 48, 52, 54] can be used to estimate the geodesic distance map \(\mathcal {U}_\mathfrak {s}\). Among them, the fast marching method [49, 51] solves the Eikonal equation in a very efficient way. It has a similar distance estimation scheme with Dijkstra’s graph-based shortest path algorithm [22]. One crucial ingredient of the fast marching method is the construction of the stencil map \(\varLambda \), where \(\varLambda (\mathbf {x})\) defines the neighborhood of a grid point \(\mathbf {x}\). The isotropic fast marching methods [49, 51] are established on an orthogonal 4-connectivity neighborhood system, which suffers some difficulties for the distance computation associated with asymmetric Finsler metrics [41]. In order to find accurate solutions to the Finsler Eikonal equation, more complicated neighborhood systems are taken into account [40, 41, 43, 50]. These neighborhood systems or stencils are usually constructed depending on the geodesic metrics. In this paper, we adopt the Finsler variant of the fast marching method proposed by Mirebeau [41]. It invokes a geometry tool of anisotropic stencil refinement and leads to a highly accurate solution, but requires a low computation complexity.

2.2.1 Hopf–Lax Operator for Local Distance Update

The Finsler variant of the fast marching method [41] estimates the geodesic distance map on a regular discretization grid \(\mathbb {Z}^2\) of the domain \(\varOmega \). It makes use of the Hopf–Lax operator to approximate (8) by

where \(\varLambda (\mathbf {x})\) denotes the stencil of \(\mathbf {x}\) involving a set of vertices in \(\mathbb {Z}^2\) and \({{\mathrm{\mathbb {I}}}}_{\varLambda (\mathbf {x})}\) is a piecewise linear interpolation operator in the neighborhood \(\varLambda (\mathbf {x})\). The estimate of the quality and order for the solution to (14) can be found in [41].

The Hopf–Lax operator is first introduced for the geodesic distance computation by Tsitsiklis [51] from an optimal control point of view. The minimal curve length \(\mathcal {D}_\mathcal {F}\) of a short geodesic from \(\mathbf {y}\) to \(\mathbf {x}\) is approximated by the length \(\mathcal {F}(\mathbf {x},\mathbf {x}-\mathbf {y})\) of a line segment \(\overline{\mathbf {x}\mathbf {y}}\). The geodesic distance value \(\mathcal {U}_\mathfrak {s}(\mathbf {y})\) in Eq. (8) is estimated by a piecewise linear interpolation operator \({{\mathrm{\mathbb {I}}}}_{\varLambda (\mathbf {x})}\) at \(\mathbf {y}\) located at the stencil boundary \(\partial \varLambda (\mathbf {x})\). It is comprised of a set \(\mathcal {T}_\mathbf {x}\) of one-dimensional simplexes or line segments. Each simplex \({\mathbb {T}}_i\in \mathcal {T}_\mathbf {x}\) connects two adjacent vertices which are involved in the stencil \(\varLambda (\mathbf {x})\). The solution \(\mathcal {U}_\mathfrak {s}\) to the Hopf–Lax operator (14) can be attained by

where \(U_i\) is the solution to the minimization problem

For each simplex \({\mathbb {T}}_i\in \mathcal {T}_\mathbf {x}\) which joins two vertices \(\mathbf {z}_1\) and \(\mathbf {z}_2\), the minimization problem (16) can be approximated by Tsitsiklis’ theorem [51] such that

where \(\varvec{\lambda }=(\lambda _1,\lambda _2)\) subject to \(\lambda _1,\lambda _2\ge 0\) and \(\sum ^2_i\lambda _i=1\).

2.2.2 Fast Marching Fronts Propagation Scheme

The fast marching method estimates the geodesic distance map \(\mathcal {U}_{\mathfrak {s}}\) in a wave fronts propagation manner. We demonstrate the course of the fast marching fronts propagation in Fig. 2 on a synthetic image. In this figure, we invoke a Finsler metric for the computation of the geodesic distance map \(\mathcal {U}_\mathfrak {s}\), where the metric will be presented in Sect. 4. The fast marching fronts propagation is coupled with a procedure of label assignment operation, during which all the grid points are classified into three categories:

-

Accepted points, for which the values of \(\mathcal {U}_\mathfrak {s}\) have been estimated and frozen.

-

Far points, for which the values of \(\mathcal {U}_\mathfrak {s}\) are unknown.

-

Trial points, which are the remaining grid points in \(\mathbb {Z}^2\) and form the fast marching fronts. A Trial point will be assigned a label of Accepted if it has the minimal geodesic distance value among all the Trial points.

In the course of the geodesic distance estimation, each grid point \(\mathbf {x}\in \mathbb {Z}^2\backslash \mathfrak {s}\) will be visited by the monotonically advancing fronts which expand from the source points involved in \(\mathfrak {s}\). The values of \(\mathcal {U}_\mathfrak {s}\) for all the Trial points are stored in a priority queue in order to quickly find the point with minimal \(\mathcal {U}_\mathfrak {s}\). The label assignment procedureFootnote 1 can be carried out by a binary map \(b:\mathbb {Z}^2\rightarrow \{Accepted ,\,Far ,\,Trial \}\).

Suppose that \(\mathfrak {s}=\cup _k\mathfrak {s}_k\) with \(\mathfrak {s}_k\) a source point set. The geodesic distance map \(\mathcal {U}_\mathfrak {s}\) and the Voronoi index map \(\mathcal {L}\) can be simultaneously computed [7, 17], where the computation scheme in each iteration can be divided into two steps.

Voronoi Index Update In each geodesic distance update iteration, among all the Trial points, a point \(\mathbf {x}_{\mathrm{min}}\) that globally minimizes the geodesic distance map \(\mathcal {U}_\mathfrak {s}\) is chosen and tagged as Accepted. We set \(\mathcal {L}(\mathbf {x}_{\mathrm{min}})=k\) if \(\mathbf {x}_{\mathrm{min}}\in \mathfrak {s}_k\). Otherwise, the geodesic distance value \(\mathcal {U}_\mathfrak {s}(\mathbf {x}_{\mathrm{min}})\) can be estimated in the simplex \({\mathbb {T}}^*\in \mathcal {T}_{\mathbf {x}_\mathrm{min }}\) [see Eq. (15)], where the vertices relevant to \({\mathbb {T}}^*\) are respective \(\mathbf {z}_1\) and \(\mathbf {z}_2\). This is done by finding the solution to (17) with respect to the simplex \({\mathbb {T}}^*\), where the corresponding minimizer is \(\varvec{\lambda }^*=(\lambda ^*_1,\lambda ^*_2)\). Then the Voronoi index map \(\mathcal {L}\) can be computed by

Local Geodesic Distance Update For a grid point \(\mathbf {x}\), we denote by \(\varLambda _\star (\mathbf {x}):=\{\mathbf {z}\in \mathbb {Z}^2;\mathbf {x}\in \varLambda (\mathbf {z})\}\) the reverse stencil. The remaining step in this iteration is to update \(\mathcal {U}_{\mathfrak {s}}(\mathbf {z})\) for each grid point \(\mathbf {z}\) such that \(\mathbf {z}\in \varLambda _\star (\mathbf {x}_{\mathrm{min}})\) and \(b(\mathbf {z})\ne \) Accepted through the solution \(\hat{\mathcal {U}}_\mathfrak {s}(\mathbf {z})\) to the Hopf–Lax operator (14). This is done by assigning to \(\mathcal {U}_\mathfrak {s}(\mathbf {z})\) the smaller value between the solution \(\hat{\mathcal {U}}_\mathfrak {s}(\mathbf {z})\) and the current geodesic distance value of \(\mathcal {U}_\mathfrak {s}(\mathbf {z})\). Note that the solution \(\hat{\mathcal {U}}_{\mathfrak {s}}(\mathbf {z})\) to (14) is attained using the stencil \(\varLambda (\mathbf {z})\) [41].

The algorithm for the fast marching method is described in Algorithm 1. In this algorithm, the computation of a map \(\mathfrak {C}_{\mathrm{dyn}}\) in Line 12 of Algorithm 1 is not necessary for the general fast marching fronts propagation scheme, but required by our method as discussed in Sect. 4.3.

Computation Complexity The complexity estimate for the isotropic fast marching methods [49, 51] established on the 4-neighborhood system is bounded by \({\mathcal {O}}(N\ln N)\), where N denotes the cardinality \(N:=\#\mathbb {Z}^2\) of the discrete domain \(\mathbb {Z}^2\cap \varOmega \). The complexity estimates for the Finsler variant cases [41, 50] with adaptive stencil system rely on the anisotropic ratio \(\kappa (\mathcal {F})\) of the geodesic metric \(\mathcal {F}\). The estimate for the ordered upwind method [50] is bounded by \({\mathcal {O}}(\kappa (\mathcal {F}) N\ln N)\), which is impractical for image segmentation application. In contrast, for the method [41] used in this paper, the complexity bound reduces to \({\mathcal {O}}(N\ln \kappa (\mathcal {F})+N\ln N)\). Note that the anisotropic ratio \(\kappa (\mathcal {F})\) is defined by

3 Finsler Metric Construction

Definition 1

Let \(S^+_2\) be the collection of all the positive definite symmetric matrices with size \(2\times 2\). For any matrix \(M\in S_2^+\), we define a norm \(\Vert \mathbf {u}\Vert _M=\sqrt{\langle \mathbf {u},\,M\mathbf {u}\rangle },\,\forall \mathbf {u}\in \mathbb {R}^2\).

3.1 Principles for Finsler Metric Construction

In this section, we present the construction method of the Finsler metric which is suitable for fronts propagation and image segmentation. Suppose that a vector field \(\mathfrak {g}:\varOmega \rightarrow \mathbb {R}^2\) has been provided such that \(\mathfrak {g}(\mathbf {x})\) points to the object edges at least when \(\mathbf {x}\) is nearby them. In this case, the orthogonal vector field \(\mathfrak {g}^\perp \) indicates the tangents of the edges.

Basically, the Eikonal equation-based fronts propagation models [36] perform the segmentation scheme through a geodesic distance map. In order to find a good solution for image segmentation, the used geodesic metric should be able to reduce the risk of front leaking problem. For this purpose, we search for a direction-dependent metric \(\mathcal {F}_\mathfrak {g}\) satisfying the following inequality

Recall that for an edge point \(\mathbf {x}\), both the feature vectors \(\mathfrak {g}^\perp (\mathbf {x})\) or \(-\mathfrak {g}^\perp (\mathbf {x})\) are propositional to the tangent of the edge at \(\mathbf {x}\) . When the fast marching front arrives at the vicinity of image edges, it prefers to travel along the edge feature vectors \(\mathfrak {g}^\perp (\mathbf {x})\) and \(-\mathfrak {g}^\perp (\mathbf {x})\), instead of passing through the edges, i.e., prefers to travel along the direction \(-\mathfrak {g}(\mathbf {x})\).

The inequality (20) requires the geodesic metric \(\mathcal {F}_\mathfrak {g}\) to be anisotropic and asymmetric with respect to its second argument. Thus, we consider a Finsler metric with a Randers form [46] involving a symmetric quadratic term and a linear asymmetric term for any \(\mathbf {x}\in \mathbb {R}^2\) and any vector \(\varvec{u}\in \mathbb {R}^2\)

where \(\mathcal {M}_\mathfrak {g}:\varOmega \rightarrow S^+_2\) is a positive symmetric definite tensor field and \(\varvec{\omega }_\mathfrak {g}:\varOmega \rightarrow \mathbb {R}^2\) is a vector field that is sufficiently small. The function \(\mathfrak {C}:\varOmega \rightarrow \mathbb {R}^+\) is a positive scalar-valued potential which gets small values in the homogeneous regions and large values around the image edges. It can be derived from the image data such as the coherence measurements of the image features, which will be discussed in detail in Sect. 4.3.

The tensor field \(\mathcal {M}_\mathfrak {g}\) and the vector field \(\varvec{\omega }_\mathfrak {g}\) should satisfy the constraint

in order to guarantee the positiveness [41] of the Randers metric \(\mathcal {F}\).

We reformulate the Randers metric \(\mathcal {F}_\mathfrak {g}\) in Eq. (21) as

where \(\mathcal {G}_\mathfrak {g}:\varOmega \times \mathbb {R}^2\rightarrow [0,\infty ]\) is still a Randers metric which can be formulated by

The remaining part of this section will be devoted to the construction of the Randers metric \(\mathcal {G}_\mathfrak {g}\) in terms of the vector field \(\mathfrak {g}\) which is able to characterize the directions orthogonal to the image edges.

Let us define a new vector field \(\bar{\mathfrak {g}}:\varOmega \rightarrow \mathbb {R}^2\) by

The tensor field \(\mathcal {M}_\mathfrak {g}\) used in Eq. (21) can be constructed dependently on two scalar-valued coefficient functions \(\eta _1\) and \(\eta _2\) such that

where \(\bar{\mathfrak {g}}^\perp (\mathbf {x})\) is the orthogonal vector of \(\bar{\mathfrak {g}}(\mathbf {x})\) and \(\otimes \) denotes the tensor product, i.e., \(\mathbf {u}\otimes \mathbf {u}=\mathbf {u}\mathbf {u}^T\). Note that the eigenvalues of \(\mathcal {M}_\mathfrak {g}(\mathbf {x})\) are \(\eta _1^2(\mathbf {x})/\Vert \mathfrak {g}(\mathbf {x})\Vert ^2\) and \(\eta _2(\mathbf {x})/\Vert \mathfrak {g}(\mathbf {x})\Vert ^2\), respectively, corresponding to the eigenvectors \(\mathfrak {g}(\mathbf {x})/\Vert \mathfrak {g}(\mathbf {x})\Vert \) and \(\mathfrak {g}^\perp (\mathbf {x})/\Vert \mathfrak {g}(\mathbf {x})\Vert \).

The vector \(\varvec{\omega }_\mathfrak {g}(\mathbf {x})\) is positively collinear to field \(\mathfrak {g}(\mathbf {x})\) for all \(\mathbf {x}\in \varOmega \)

where \(\tau :\varOmega \rightarrow \mathbb {R}\) is a scalar-valued coefficient function.

Control sets \(\mathcal {B}(\mathbf {q})\) for different geodesic metrics associated with different values of \(\psi _\mathrm{f}(\mathbf {q})\) and \(\psi _\mathrm{b}(\mathbf {q})\). The blue arrow indicates the direction \(\mathfrak {g}(\mathbf {q})=(\cos (\pi /4),\sin (\pi /4))^T\). The blue dots and the contours denote the origins and the boundaries of these control sets, respectively. a Control sets for Randers metrics associated with \(\psi _\mathrm{f}(\mathbf {q})=5\) and different values of \(\psi _\mathrm{b}(\mathbf {q})\). b Control sets for anisotropic Riemannian metrics with \(\psi _\mathrm{f}(\mathbf {q})=\psi _\mathrm{b}(\mathbf {q})>1\). c Control set for an isotropic Riemannian metric with \(\psi _\mathrm{f}(\mathbf {q})=\psi _\mathrm{b}(\mathbf {q})=1\) (Color figure online)

Geodesic distance maps associated with the Randers metric \(\mathcal {G}_\mathfrak {g}\) with different values of \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\). The red arrow indicate the vector \((\cos (\pi /4),\sin (\pi /4))^T\). The white dots are the source points. Each white curve indicates a level set line of the respective geodesic distance map. a Geodesic distance map associated with \(\psi _\mathrm{f}\equiv 3\) and \(\psi _\mathrm{b}\equiv 8\). b Geodesic distance map associated with \(\psi _\mathrm{f}\equiv 3\) and \(\psi _\mathrm{b}\equiv 3\). c Geodesic distance map associated with \(\psi _\mathrm{f}\equiv 8\) and \(\psi _\mathrm{b}\equiv 3\). The color bars are on the top of each figure (Color figure online)

We estimate the coefficient functions \(\eta _1\), \(\eta _2\) and \(\tau \) through two cost functions \(\psi _\mathrm{f},\,\psi _\mathrm{b}:\varOmega \rightarrow (1,\,+\infty )\), which assign the cost values \(\psi _\mathrm{f}(\mathbf {x})\), \(\psi _\mathrm{b}(\mathbf {x})\) and 1 to the Randers metric \(\mathcal {G}_\mathfrak {g}\), respectively, along the directions \(\mathfrak {g}(\mathbf {x})\), \(-\mathfrak {g}(\mathbf {x})\) and \(\mathfrak {g}^\perp (\mathbf {x})\) for any point \(\mathbf {x}\in \varOmega \) such that

Combining Eqs. (25) and (27) yields that

for any \(\mathbf {x}\in \varOmega \).

The positive symmetric definite tensor field \(\mathcal {M}_\mathfrak {g}\) and the vector field \(\varvec{\omega }_\mathfrak {g}\) thus can be, respectively, expressed in terms of the cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) by

and

Based on the tensor field \(\mathcal {M}_\mathfrak {g}\) and the vector field \(\varvec{\omega }_\mathfrak {g}\), respectively, formulated in Eqs. (29) and (30), the positiveness constraint (22) is satisfied due to the assumption that \(\psi _\mathrm{f}(\mathbf {x})>1\) and \(\psi _\mathrm{b}(\mathbf {x})>1,\,\forall \mathbf {x}\in \varOmega \). The cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) can be derived from the image edge information such as the image gradients, which will be discussed in Sect. 4.

Note that if we set \(\psi _\mathrm{f}\equiv \psi _\mathrm{b}\), the vector field \(\varvec{\omega }_\mathfrak {g}\) will vanish, i.e., \(\varvec{\omega }_\mathfrak {g}\equiv \mathbf 0\) [see Eq. (30)]. In this case, one has \(\langle \varvec{\omega }_\mathfrak {g}(\mathbf {x}),\mathbf {u}\rangle = 0\) for any point \(\mathbf {x}\in \varOmega \) and any vector \(\mathbf {u}\in \mathbb {R}^2\), leading to a special form of the Randers metric \(\mathcal {G}_\mathfrak {g}\). This special form is a symmetric (potentially anisotropic) Riemannian metric \(\mathcal {R}(\mathbf {x},\mathbf {u})=\Vert \mathbf {u}\Vert _{\mathcal {M}_{\mathfrak {g}}(\mathbf {x})}\) which depends only on the tensor field \(\mathcal {M}_{\mathfrak {g}}\).

Remark 1 (Randers Eikonal Equation) The Eikonal equation for a general Finsler metric can be found in Eq. (7). Associated with the Randers metric \(\mathcal {G}_{\mathfrak {g}}\) defined in (24), the general Eikonal Eq. (7) gets to be the following form

where \(\mathcal {U}_\mathfrak {s}\) is the geodesic distance map and \(\mathfrak {s}\) is the source point set.

3.2 Tissot’s Indicatrix

A basic tool for studying and visualizing the geometry distortion induced from a geodesic metric is the Tissot’s indicatrix defined as the collection of control sets in the tangent space [16]. For an arbitrary geodesic metric \(\mathcal {F}:\varOmega \times \mathbb {R}^2\rightarrow [0,\infty ]\), the control set \(\mathcal {B}(\mathbf {x})\) for any point \(\mathbf {x}\in \varOmega \) is defined as the unit ball centered at \(\mathbf {x}\) such that

We demonstrate the control sets \(\mathcal {B}(\mathbf {q})\) in Fig. 3 for the Randers metric \(\mathcal {G}_\mathfrak {g}(\mathbf {q},\cdot )\) with different values of \(\psi _\mathrm{f}(\mathbf {q})\) and \(\psi _\mathrm{b}(\mathbf {q})\) at a point \(\mathbf {q}\in \varOmega \). The vector \(\mathfrak {g}(\mathbf {q})\) is set as

In Fig. 3a, we show the control sets for the Randers metric \(\mathcal {G}_\mathfrak {g}\) with respect to different values of \(\psi _\mathrm{b}(\mathbf {q})\) and a fixed value \(\psi _\mathrm{f}(\mathbf {q})=5\). One can point out that the common origin of these control sets have shifted from the original center of the ellipsesFootnote 2 due to the asymmetric property as formulated in Eq. (3). In Fig. 3b, the control sets for the Randers metric \(\mathcal {G}_\mathfrak {g}\) associated with \(\psi _\mathrm{f}(\mathbf {q})=\psi _\mathrm{b}(\mathbf {q})>1\) are demonstrated, where \(\mathcal {G}_\mathfrak {g}(\mathbf {q},\cdot )\) gets to be anisotropic and symmetric on its second argument. When \(\psi _\mathrm{f}(\mathbf {q})=\psi _\mathrm{b}(\mathbf {q})=1\), the values of the Randers metric \(\mathcal {G}_\mathfrak {g}(\mathbf {q},\varvec{u})\) turn to be invariant with respect to \(\varvec{u}\) as shown in Fig. 3c. In this case, the tensor \(\mathcal {M}_\mathfrak {g}(\mathbf {q})\) is propositional to the identity matrix.

In Fig. 4, we show the geodesic distance maps associated with \(\mathcal {G}_\mathfrak {g}\) with different values of the cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\). The vector field \(\mathfrak {g}\) is set to

In Fig. 4a, c, we can see that the geodesic distance maps have strongly asymmetric and anisotropic appearance. In Fig. 4b, we observe that the geodesic distance map appears to be symmetric and strongly anisotropic. This is because the respective propagation speed of the fast marching fronts along the directions \((\cos (\pi /4),\sin (\pi /4))^T\) and \(-(\cos (\pi /4),\sin (\pi /4))^T\) is identical to each other.

4 Data-Driven Randers Metrics Construction

4.1 Cost Functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) for the Application of Foreground and Background Segmentation

In this section, we present the computation method for the cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) using the image edge information, based on which the image data-driven Randers metric \(\mathcal {G}_{\mathfrak {g}}\) can be obtained.

Let \({\mathbf {I}}=(I_1,I_2,I_3):\varOmega \rightarrow \mathbb {R}^3\) be a vector-valued image in the chosen color space and let \(G_\sigma \) be a Gaussian kernel with variance \(\sigma \). (We set \(\sigma =1\) through all the experiments of this paper.) The gradient of the image \({\mathbf {I}}\) at each point \(\mathbf {x}=(x,y)\) is a \(2\times 3\) Jacobian matrix

involving the Gaussian-smoothed first-order derivatives along the axis directions x and y

Let \(\rho :\varOmega \rightarrow \mathbb {R}\) be an edge saliency map. It has high values in the vicinity of image edges and low values inside the flatten regions. For each domain point \(\mathbf {x}\in \varOmega \), the value of \(\rho (\mathbf {x})\) can be computed by the Frobenius norm of the Jacobian matrix \(\nabla {\mathbf {I}}_\sigma (\mathbf {x})\)

For a gray-level image \(I:\varOmega \rightarrow \mathbb {R}\), the edge saliency map \(\rho \) can be simply computed by the norm of the Euclidean gradient of the image I such that

Construction of the Vector Field \(\mathfrak {g}\) We use the gradient vector flow method [53] to compute the vector field \(\mathfrak {g}\) for the construction of the Randers metric \(\mathcal {F}_\mathfrak {g}\). This can be done by minimizing the following functional \({\mathcal {E}}_{\mathrm{gvf}}\) with respect to a vector field \(\varvec{h}=(h_1,h_2)^T:\varOmega \rightarrow \mathbb {R}^2\), where \({\mathcal {E}}_{\mathrm{gvf}}\) can be expressed as

where \(\epsilon \in \mathbb {R}^+\) is a constant and

The parameter \(\epsilon \) controls the balance between the regularization term \({\mathcal {E}}_{\mathrm{reg}}\) and the data fidelity term \({\mathcal {E}}_{\mathrm{data}}\). As discussed in [53], the values of \(\epsilon \) should depend on the image noise levels such that a large value of \(\epsilon \) is able to suppress the effects from image noise. We set \(\epsilon =0.1\) through all the numerical experiments of this paper. The minimization of the functional \({\mathcal {E}}_{\mathrm{gvf}}\) can be carried out by solving the Euler–Lagrange equations of the functional \({\mathcal {E}}_{\mathrm{gvf}}\) with respect to the components \(h_1\) and \(h_2\). The gradient vector flow \(\varvec{h}\) is more dense and smooth than the original gradient filed \(\nabla \rho \). In the vicinity of edges, the values of \(\Vert \nabla \rho \Vert \) are large and one has the approximation \(\varvec{h}\approx \nabla \rho \) due to the effects of the data fidelity term \({\mathcal {E}}_{\mathrm{data}}\), while in the flatten regions where the gradient \(\nabla \rho \) nearly vanishes, the vector field \(\varvec{h}\) is forced to keep smooth (i.e., slowly varying), because within these regions the minimization of the regularization term \({\mathcal {E}}_{\mathrm{reg}}\) plays a dominating role for the computation of \(\varvec{h}\). More details for gradient vector flow method can be found from [53].

The vector field \(\mathfrak {g}\) for the construction of the Randers metric \(\mathcal {G}_\mathfrak {g}\) can be obtained by normalizing the vector field \(\varvec{h}\)

The cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) used in Eq. (27) for the foreground and background segmentation application can be expressed for any \(\mathbf {x}\in \varOmega \) by

where \(\alpha _\mathrm{f}\) and \(\alpha _\mathrm{b}\) are nonnegative constants dominating how anisotropic and asymmetric the Randers metric \(\mathcal {G}_\mathfrak {g}\) is.

Once the cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) have been computed by Eqs. (40) and (41), we can construct the tensor filed \(\mathcal {M}_\mathfrak {g}\) and the vector field \(\varvec{\omega }_\mathfrak {g}\), respectively, via Eqs. (29) and (30). Indeed, one has \(\psi _\mathrm{f}(\mathbf {x})\approx \psi _\mathrm{b}(\mathbf {x})\approx 1\) for the points \(\mathbf {x}\) located in the homogeneous region of the image \({\mathbf {I}}\) where \(\rho (\mathbf {x})\approx 0\). In this case, the data-driven Randers metric \(\mathcal {G}_\mathfrak {g}(\mathbf {x},\cdot )\) expressed in Eq. (24) approximates to be an isotropic Riemannian metric. For each point \(\mathbf {x}\) around the image edges such that the value of \(\rho (\mathbf {x})\) is large, the Randers metric \(\mathcal {G}_\mathfrak {g}(\mathbf {x},\cdot )\) will appear to be strongly anisotropic and asymmetric.

Fronts propagation results associated with the Randers metric \(\mathcal {F}_{\mathfrak {g}}\) with different values of \(\beta _\mathrm{d}\). a Original image with source points, respectively, placed in the foreground (green brush) and background (blue brush) regions. b–d Fronts propagation for the values of \(\beta _\mathrm{d}=0,\,5\) and 10, respectively (Color figure online)

4.2 Cost Functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) for Tubularity Segmentation

In this section, we take into account a feature vector field \(\mathbf {p}:\varOmega \rightarrow \mathbb {R}^2\) which extracts local orientation features from the image. A feature vector \(\mathbf {p}(\mathbf {x})\) characterizes the orientation that a tubular structure should have at a point \(\mathbf {x}\) belonging to this structure. The feature vector field \(\mathbf {p}\) can be estimated by the steerable filters [24, 26, 27, 31].

Based on the cost functions \(\psi _\mathrm{f}\) and \(\psi _\mathrm{b}\) in Eqs. (39) and (40), we are able to build a positive symmetric definite tensor field \(\mathcal {M}_\mathfrak {g}\) for the Randers metric \(\mathcal {G}_\mathfrak {g}\). For each point \(\mathbf {x}\) inside the tubular structures where the gray levels vary slowly, the value of the gradient norm \(\rho (\mathbf {x})\) nearly vanishes, leading to \(\psi _\mathrm{b}(\mathbf {x})\approx \psi _\mathrm{f}(\mathbf {x})\approx 1\). In this case, the Randers metric \(\mathcal {G}_{\mathfrak {g}}(\mathbf {x},\mathbf {u})\) is approximately independent of the directions \(\mathbf {u}\) (see Sect. 4.1). However, with respect to tubular structure segmentation application, we expect that inside the tubular structure the fast marching fronts travel faster along the directions \(\mathbf {p}(\cdot )\) or \(-\mathbf {p}(\cdot )\) than along the directions \(\mathbf {p}^\perp (\cdot )\) or \(-\mathbf {p}^\perp (\cdot )\) in order to reduce the risk of the front leakages. For this purpose, we make use of a new tensor field \({\tilde{\mathcal {M}}}_\mathfrak {g}:\varOmega \rightarrow S_2^+\) based on \(\mathcal {M}_\mathfrak {g}\) in Eq. (29) such that

where \(\mu \in \mathbb {R}^+\) is a constant. It is relevant to the anisotropy property of the tensor field \({\tilde{\mathcal {M}}}_{\mathfrak {g}}\). In the numerical experiments of this paper, we set \(\mu =\Vert \psi _\mathrm{f}\Vert ^2_\infty \).

In practice, we observe that inside the tubular structures, the feature vector field \(\mathbf {p}\) can be well approximated by the vector field \(\mathfrak {g}^\perp \) which is the orthogonal vector field of \(\mathfrak {g}\) derived from Eqs. (36) and (39). In order to reduce the computation time, we construct the tensor field \({\tilde{\mathcal {M}}}_\mathfrak {g}\) by the vector field \(\mathfrak {g}\) such that

In this case, we obtain a new Randers metric \({\tilde{\mathcal {G}}}_\mathfrak {g}\) for the application of tubularity segmentation, which depends on the tensor field \({\tilde{\mathcal {M}}}_{\mathfrak {g}}\) and can be formulated as

Note that we build \({\tilde{\mathcal {G}}}_\mathfrak {g}\) by using the same vector field \(\varvec{\omega }_\mathfrak {g}\) with the Randers metric \(\mathcal {G}_\mathfrak {g}\). Based on the new Randers metric \({\tilde{\mathcal {G}}}_\mathfrak {g}\), inside the tubular structures the fast marching fronts will travel fast along the directions \(\mathbf {p}(\cdot )\) or \(-\mathbf {p}(\cdot )\), but slow when the fronts arrive at the boundaries and slower when the fronts tend to pass through them.

4.3 Computing the Potential

We present the computation methods for the potential function \(\mathfrak {C}\) used by the data-driven Randers metric that is formulated in Eq. (23). Basically, the potential function \(\mathfrak {C}\) should have small values in the homogeneous regions and large values in the vicinity of the image edges.

4.3.1 Foreground and Background Segmentation

For foreground and background segmentation application, the potential function \(\mathfrak {C}_{\mathrm{FB}}:=\mathfrak {C}\) has a form of

where \(\beta _\mathrm{s}\) is a positive constant and \(\rho \) is the edge saliency map defined in Eqs. (34) or (35). The term \(\exp (\beta _\mathrm{s}\,\rho (\mathbf {x}))\) which depends only on the edge saliency map \(\rho \) will keep invariant during the fast marching fronts propagation. The dynamic potential function \(\mathfrak {C}_{\mathrm{dyn}}\) relies on the positions of the fronts. It will be updated in the course of the geodesic distances computation in terms of some consistency measure of image features [5]. Basically, the values of the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) should be small in the homogeneous regions. We use a feature map \(\mathfrak {F}:\varOmega \rightarrow \mathbb {R}^n\) with n the dimensions of the feature vector to establish the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\). The feature map \(\mathfrak {F}\) can be the image color vector (\(n=3\)), the image gray level (\(n=1\)) or the scalar probability map (\(n=1\)) as used in [5].

Recall that in each fast marching distance update iteration, the latest Accepted point \(\mathbf {x}_{\mathrm{min}}\) is chosen by searching for a Trial point with minimal distance value \(\mathcal {U}_\mathfrak {s}\) (\(\mathfrak {s}\) is the set of the source points), i.e.,

Then the value of \(\mathfrak {C}_{\mathrm{dyn}}(\mathbf {z})\) for each point \(\mathbf {z}\in \mathbb {Z}^2\backslash \mathfrak {s}\) such that \(\mathbf {x}_{\mathrm{min}}\in \varLambda (\mathbf {z})\) and \(b(\mathbf {z})\ne \) Accepted can be updated by evaluating the Euclidean distance between \(\mathfrak {F}(\mathbf {z})\) and \(\mathfrak {F}(\mathbf {x}_{\mathrm{min}})\) (see Line 12 of Algorithm 1). In other words, one can compute the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) in each fast marching update iteration by

for all grid points \(\mathbf {z}\in \mathbb {Z}^2\backslash \mathfrak {s}\) such that \(\mathbf {x}_{\mathrm{min}}\in \varLambda (\mathbf {z})\) and \(b(\mathbf {z})\ne \) Accepted, where \(\beta _\mathrm{d}\) is a positive constant. Note that we initialize the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) by

Based on the potential \(\mathfrak {C}_{\mathrm{FB}}\) in Eq. (44) and the Randers metric \(\mathcal {G}_\mathfrak {g}\) (see Sect. 4.1), the data-driven Randers metric \(\mathcal {F}_\mathfrak {g}\) for the foreground and background segmentation application can be expressed for any point \(\mathbf {x}\in \varOmega \) and any vector \(\mathbf {u}\in \mathbb {R}^2\) by

Finally, we show the effects of the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) in Eq. (46) in the foreground and background segmentation application. The fronts propagation results are shown in Fig. 5 with respect to the Randers metric \(\mathcal {F}_{\mathfrak {g}}\). In this experiment, we choose different values for the parameter \(\beta _\mathrm{d}\) that is used by the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) and a fixed parameter \(\beta _\mathrm{s}=10\) to compute the edge-based potential \(\mathfrak {C}_{\mathrm{FB}}\). The values of \(\alpha _\mathrm{f}\) and \(\alpha _\mathrm{b}\) are set to be 2 and 3, respectively. Indeed, one can point out that the dynamic potential is able to help the fronts propagation scheme to avoid leakages.

4.3.2 Tubularity Segmentation

We assume that the gray levels inside the tubular structures are stronger than outside. For tubularity segmentation, we consider a potential function \(\mathfrak {C}_\mathrm{T}:=\mathfrak {C}\) involving a static term and a dynamic term \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\) such that

where \(\zeta :\varOmega \rightarrow [0,1]\) is a feature map that characterizes the tubularity appearance, i.e., the value of \(\zeta (\mathbf {x})\) indicates the probability of a point \(\mathbf {x}\) belonging to a tubular structure. In practice, the map \(\zeta \) can be specified as the image gray levels or as a normalized vesselness map derived from a tubularity detector such as [24, 25, 31].

The dynamic potential \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\) is estimated in the course of the fast marching fronts propagation. The computation scheme of \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\) is almost identical to the dynamic potential \(\mathfrak {C}_{\mathrm{dyn}}\) presented in Sect. 4.3.1, except that the dynamic potential \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\) for tubularity segmentation is dependent on the feature map \(\zeta \).

We also initialize the dynamic potential by \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}(\mathbf {x})=1,~\forall \mathbf {x}\in \mathfrak {s}\). In each geodesic distance update iteration, we suppose \(\mathbf {x}_{\mathrm{min}}\) be the latest Accepted point. For each grid point \(\mathbf {z}\in \mathbb {Z}^2\backslash \mathfrak {s}\) such that \(\mathbf {x}_{\mathrm{min}}\in \varLambda (\mathbf {z})\) and \(b(\mathbf {z})\ne \) Accepted, we update the dynamic potential \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\) by

The reason for the use of (49) is that if the latest Accepted point \(\mathbf {x}_{\mathrm{min}}\) belongs to a tubular structure, then the inequality \(\zeta (\mathbf {z})>\zeta (\mathbf {x}_{\mathrm{min}})\) means that the neighbor point \(\mathbf {z}\) also belongs to this structure. Therefore, we assign a small value to \(\tilde{\mathfrak {C}}_{\mathrm{dyn}}\). In this paper, we set the map \(\zeta \) as the normalized image gray levels, i.e., \(\zeta (\mathbf {x})\in [0,1],~\forall \mathbf {x}\in \varOmega \).

The data-driven Randers metric \({\tilde{\mathcal {F}}}_\mathfrak {g}\) for tubularity segmentation can be formulated by

where the potential \(\mathfrak {C}_\mathrm{T}\) and the metric \({\tilde{\mathcal {G}}}_\mathfrak {g}\) are expressed in Eqs. (48) and (43), respectively.

a A synthetic image. The blue dots indicate two sampled points. The arrows indicate the directions \(\mathfrak {g}(\mathbf {x})\) and \(\mathfrak {g}(\mathbf {y})\). b, c Plots of the cost values of \(\mathcal {G}^{(0,0)}_\mathfrak {g}\), \(\mathcal {G}^{(2,0)}_\mathfrak {g}\) and \(\mathcal {G}^{(2,1)}_\mathfrak {g}\) at points \(\mathbf {x}\) and \(\mathbf {y}\) along different directions (Color figure online)

a A synthetic image. The blue dots indicate two sampled points. The arrows indicate the directions of \(\mathfrak {g}(\mathbf {x})\) and \(\mathfrak {g}(\mathbf {y})\). b, c Plots of the cost values of \({\tilde{\mathcal {G}}}^{(0,0)}_\mathfrak {g}\), \({\tilde{\mathcal {G}}}^{(2,0)}_\mathfrak {g}\) and \({\tilde{\mathcal {G}}}^{(2,1)}_\mathfrak {g}\) at points \(\mathbf {x}\) and \(\mathbf {y}\) along different directions (Color figure online)

5 Experimental Results

5.1 Implementation Details

Parameter Setting The anisotropy and asymmetry of the Randers metrics \(\mathcal {G}_\mathfrak {g}\) and \({\tilde{\mathcal {G}}}_\mathfrak {g}\) are determined by the parameters \(\alpha _\mathrm{f}\) and \(\alpha _\mathrm{b}\) [see Eqs. (40) and (41)]. We, respectively, denote by \(\mathcal {G}_\mathfrak {g}^{\varvec{\alpha }}\) and \({\tilde{\mathcal {G}}}_\mathfrak {g}^{\varvec{\alpha }}\) the data-driven Randers metrics \(\mathcal {G}_{\mathfrak {g}}\) and \({\tilde{\mathcal {G}}}_\mathfrak {g}\) with a pair of parameters \(\varvec{\alpha }=(\alpha _\mathrm{f},\alpha _\mathrm{b})\). In this case, the corresponding Randers metrics \(\mathcal {F}_{\mathfrak {g}}\) in Eq. (47) and \({\tilde{\mathcal {F}}}_\mathfrak {g}\) in (50) can be noted by \(\mathcal {F}^{\varvec{\alpha }}_{\mathfrak {g}}\) and \({\tilde{\mathcal {F}}}^{\varvec{\alpha }}_\mathfrak {g}\), respectively. The potential functions \(\mathfrak {C}_{\mathrm{FB}}\) and \(\mathfrak {C}_\mathrm{T}\) rely on two parameters \(\beta _\mathrm{s}\) and \(\beta _\mathrm{d}\). We fix \(\beta _\mathrm{d}=10\) through all the experiments, except in Fig. 8 for which we set \(\beta _\mathrm{d}=5\). The values of \(\beta _\mathrm{s}\) are individually set for each experiment.

Note that the parameter \(\varvec{\alpha }=(0,0)\) indicates that the geodesic metrics \(\mathcal {G}^{(0,0)}_\mathfrak {g}\) and \({\tilde{\mathcal {G}}}^{(0,0)}_\mathfrak {g}\) are isotropic with respect to the second argument. Furthermore, when \(\varvec{\alpha }=(a,0)\) with \(a\in \mathbb {R}^+\), the metrics \(\mathcal {G}_\mathfrak {g}^{(a,0)}\) and \({\tilde{\mathcal {G}}}_\mathfrak {g}^{(a,0)}\) get to be the anisotropic Riemannian casesFootnote 3.

Image Segmentation Schemes The interactive foreground and background segmentation task can be converted to the problem of identifying the Voronoi index map or Voronoi regions in terms of geodesic distance [4, 5]. Let \(\mathfrak {s}_1\) and \(\mathfrak {s}_2\) be the sets of source points which are, respectively, located at the foreground and background regions. The Voronoi regions \(\mathcal {V}_1\) and \(\mathcal {V}_2\), indicating foreground and background regions, respectively, can be determined by the Voronoi index map \(\mathcal {L}\) through Eq. (11) such that

With respect to the foreground and background segmentation, the complexity for the geodesic distance computation is consistent with the used fast marching method [41], which is bounded by \({\mathcal {O}}(N\ln \kappa (\mathcal {F}_\mathfrak {g})+N\ln N)\) with N the number of the grid points in \(\mathbb {Z}^2\).

For tubularity segmentation, all the user-provided seeds are supposed to be placed inside the targeted structures. We make use of the \(T_h\)-level set of the geodesic distance map \(\mathcal {U}_\mathfrak {s}\) as the boundaries of the targeted tubular structures. We denote by \(N_{\mathrm{accepted}}\) the number of the grid points in \(\mathbb {Z}^2\) tagged as Accepted, i.e.,

In order to reduce the computation time of the fast marching method, we terminate the fronts propagation once the number \(N_{\mathrm{accepted}}\) of the grid points tagged as Accepted reaches the given thresholding value \(N_{\mathrm{th}}\). In this case, the tubular structures can be recovered by the regions which are comprised of all the Accepted points. Let \(N_{\mathrm{trial}}\) be the number of Trial grid points when \(N_{\mathrm{accepted}}\) points have been tagged as Accepted and let \(N_{\mathrm{used}}=N_{\mathrm{accepted}}+N_{\mathrm{trial}}\) be the total number of grid points for which the distance values have been updated. The computation complexity for this application is bounded by \({\mathcal {O}}(N_{\mathrm{used}}\ln \kappa ({\tilde{\mathcal {F}}}_\mathfrak {g})+N_{\mathrm{used}}\ln N_{\mathrm{used}})\), since only \(N_{\mathrm{used}}\) grid points are visited by the fast marching fronts.

Image segmentation via different geodesic metrics on a synthetic image. Column 1 shows the initializations, where the green and blue brushes indicating the seeds in different regions. Columns 2–4 show the segmentation results by the fronts propagation associated with the isotropic Riemannian metric \(\mathcal {F}^{(0,0)}_{\mathfrak {g}}\), the anisotropic Riemannian metric \(\mathcal {F}^{(2,0)}_\mathfrak {g}\) and the Randers metric \(\mathcal {F}_{\mathfrak {g}}^{(2,3)}\), respectively. The red curves are the segmented boundaries of the Voronoi regions (Color figure online)

Image segmentation via different geodesic metrics on real images. Column 1 shows the initializations, where the green and blue brushes are the seeds indicate the background and foreground regions. Columns 2–4 show the segmentation results by the metrics \(\mathcal {F}^{(0,0)}_\mathfrak {g}\), \(\mathcal {F}^{(2,0)}_\mathfrak {g}\) and \(\mathcal {F}_\mathfrak {g}^{(2,3)}\), respectively. The red curves are the segmented boundaries of the respective Voronoi regions (Color figure online)

Tubularity segmentation results via different geodesic metrics on a Spiral. Column 1 shows the initializations with the blue brushes indicating the seeds. Columns 2–4 show the segmentation results through the geodesic metrics \({\tilde{\mathcal {F}}}^{(0,0)}_\mathfrak {g}\), \({\tilde{\mathcal {F}}}^{(2,0)}_\mathfrak {g}\) and \({\tilde{\mathcal {F}}}_\mathfrak {g}^{(2,3)}\), respectively (Color figure online)

Tubularity segmentation results via different geodesic metrics on a tubular tree. Column 1 shows the initializations with the blue brushes indicating the seeds. Columns 2–4 show the segmentation results through the geodesic metrics \({\tilde{\mathcal {F}}}^{(0,0)}_\mathfrak {g}\), \({\tilde{\mathcal {F}}}^{(2,0)}_\mathfrak {g}\) and \({\tilde{\mathcal {F}}}_\mathfrak {g}^{(2,3)}\), respectively (Color figure online)

5.2 Advantages of Using Anisotropy and Asymmetry Enhancements

Let us consider a synthetic image as shown in Fig. 6a with two sampled points \(\mathbf {x}\) and \(\mathbf {y}\) indicated by blue dots. The arrows, respectively, indicate the directions of \(\mathfrak {g}(\mathbf {x})\) and \(\mathfrak {g}(\mathbf {y})\), where \(\mathbf {x}\) is near the edges and \(\mathbf {y}\) is located inside the homogeneous region. In Fig. 6b, we plot the cost values of the metrics \(\mathcal {G}_{\mathfrak {g}}^{(0,0)}(\mathbf {x},\varvec{u}_j)\), \(\mathcal {G}_{\mathfrak {g}}^{(2,0)}(\mathbf {x},\varvec{u}_j)\) and \(\mathcal {G}_{\mathfrak {g}}^{(2,1)}(\mathbf {x},\varvec{u}_j)\), along different directions \(\varvec{u}_j\in \mathbb {R}^2\). The directions \(\varvec{u}_j\) are obtained by rotation such that

where \(\theta _\mathrm{s}=\pi /36\) is the angle resolution and \(M(j\,\theta _\mathrm{s})\) is a rotation matrix with angle \(j\,\theta _\mathrm{s}\) in a counterclockwise order. In Fig. 6c, we plot the cost values for the metrics \(\mathcal {G}^{(0,0)}_\mathfrak {g}(\mathbf {y},\varvec{v}_j)\), \(\mathcal {G}^{(2,0)}_\mathfrak {g}(\mathbf {y},\varvec{v}_j) \) and \(\mathcal {G}^{(2,1)}_\mathfrak {g}(\mathbf {y},\varvec{v}_j)\) with

In Fig. 6b, we can see that all of the three metrics get low values around the directions \(M(\pi /2)\,\mathfrak {g}(\mathbf {x})\) and \(M(3\pi /2)\,\mathfrak {g}(\mathbf {x})\), which are orthogonal to the direction \(\mathfrak {g}(\mathbf {x})\). However, around the direction \(-\mathfrak {g}(\mathbf {x})\), the Randers metric \(\mathcal {G}^{(2,1)}_\mathfrak {g}\) attains much larger values than the Riemannian cases \(\mathcal {G}_\mathfrak {g}^{(0,0)}\) and \(\mathcal {G}_\mathfrak {g}^{(2,0)}\). Such an asymmetric property is able to reduce the risk of front leakages.

In Fig. 7, we plot the cost values for the metrics \({\tilde{\mathcal {G}}}^{(0,0)}_\mathfrak {g}\), \({\tilde{\mathcal {G}}}^{(2,0)}_\mathfrak {g}\) and \({\tilde{\mathcal {G}}}^{(2,1)}_\mathfrak {g}\) at the points \(\mathbf {x}\) and \(\mathbf {y}\) along different rotated directions. In Fig. 7b, the cost values (indicated by the red curve) of the Randers metric \({\tilde{\mathcal {G}}}_\mathfrak {g}^{(2,1)}\) at the point \(\mathbf {x}\) near the edges and outside the tubular structure show strongly asymmetric property. In Fig. 7c, we can see that along all of the rotated directions, the cost values for both the Randers metric \({\tilde{\mathcal {G}}}_\mathfrak {g}^{(2,1)}\) are equivalent to the anisotropic Riemannian metric \({\tilde{\mathcal {G}}}_\mathfrak {g}^{(2,0)}\). This anisotropic property is able to force the fast marching fronts travel faster along the tubularity orientations which are approximated by the vector field \(\mathfrak {g}^\perp \).

5.3 Experiments on Synthetic and Real Images

In Fig. 8, we show the fronts propagation results on a synthetic image. In the first column of Fig. 8, we initialize the sets of the source points in different locations, which are indicated by green and blue brushes. The columns 2 to 4 of Fig. 8 are the segmentation results from the isotropic Riemannian metric \(\mathcal {F}_\mathfrak {g}^{(0,0)}\), the anisotropic Riemannian metric \(\mathcal {F}^{(2,0)}_\mathfrak {g}\) and the Randers metric \(\mathcal {F}^{(2,3)}_\mathfrak {g}\), respectively. For the purpose of fair comparisons, the three metrics used in this experiment share the same potential function \(\mathfrak {C}\) defined in Eq. (44). One can point out that the results from the metrics \(\mathcal {F}^{(0,0)}_\mathfrak {g}\) and \(\mathcal {F}^{(2,0)}_\mathfrak {g}\) suffer from the leaking problem, while the final boundaries (red curves) associated with the proposed Randers metric \(\mathcal {F}^{(2,3)}_\mathfrak {g}\) are able to catch the expected edges thanks to the asymmetric enhancement. In this experiment, we choose \(\beta _\mathrm{d}=5\).

In Fig. 9, we compare the interactive image segmentation results via different geodesic metrics on real images which are obtained from the Weizmann dataset [2] and the Grabcut dataset [47]. The final segmentation results are derived from the boundaries of the corresponding Voronoi index maps. In column 1, we show the initial images with seeds indicating by green and blue brushes, respectively, inside the foreground and background regions. In columns 2 to 4 of Fig. 9, we demonstrate the segmentation results obtained via the isotropic Riemannian metric \(\mathcal {F}_\mathfrak {g}^{(0,0)}\), the anisotropic Riemannian metric \(\mathcal {F}_\mathfrak {g}^{(2,0)}\) and the Randers Metric \(\mathcal {F}_\mathfrak {g}^{(2,3)}\). For the results from the isotropic and anisotropic Riemannian metrics (shown in columns 2 and 3), the final contours leak into the background regions. In contrast, the segmentation contours associated with the Randers metric \(\mathcal {F}^{(2,3)}\) are able to follow the desired object boundaries.

In Fig. 10, we compare the tubularity segmentation results on a Spiral, respectively, using the isotropic Riemannian metric \({\tilde{\mathcal {F}}}^{(0,0)}_\mathfrak {g}\), the anisotropic Riemannian metric \({\tilde{\mathcal {F}}}^{(2,0)}_\mathfrak {g}\) and the Randers metric \({\tilde{\mathcal {F}}}^{(2,3)}_\mathfrak {g}\). The tubularity boundaries (red curves) are computed through the level set lines of the respective geodesic distance maps with an identical thresholding value of \(N_{\mathrm{th}}\). The shadow regions indicate the segmented regions involving all the points tagged as Accepted. In the first column of Fig. 10, for each row the respective source points are placed at the end of the Spiral. One can point out that the final segmentation contours corresponding to the isotropic Riemannian metric \({\tilde{\mathcal {F}}}_\mathfrak {g}^{(0,0)}\) (shown in column 2) and the anisotropic Riemannian metric \({\tilde{\mathcal {F}}}^{(2,0)}_\mathfrak {g}\) (shown in column 3) leak into the background before the whole tubular structure has been covered by the fast marching fronts. Indeed, using the anisotropy enhancement can improve the segmentation results such that the leakages for the contours from the anisotropic Riemannian metric \({\tilde{\mathcal {F}}}_\mathfrak {g}^{(2,0)}\) only occur at the locations far from the seeds. Finally, the segmentation contours shown in column 4 resulted by the Randers metric \(\mathcal {F}^{(2,3)}\) with both anisotropic and asymmetric enhancements are able to delineate the desired tubularity boundaries.

In Fig. 11, we perform the fast marching fronts propagation on a tubular tree structure. We again observe the leaking problem that occurs in the segmentation results derived from the isotropic Riemannian metric \({\tilde{\mathcal {F}}}^{(0,0)}_\mathfrak {g}\) and the anisotropic Riemannian metric \({\tilde{\mathcal {F}}}_\mathfrak {g}^{(2,0)}\), which are shown in columns 2 and 3, respectively, while in column 4, the fronts are able to pass through the whole tubular tree structure before they leak into the background.

6 Conclusion

In this paper, we extend the fronts propagation framework from the Riemannian case to a general Finsler case with applications to image segmentation. The Finsler metric with a Randers form allows us to take into account the asymmetric and anisotropic image features in order to reduce the risk of the leaking problem during the fronts propagation. We presented a method for the construction of the Finsler metric with a Randers form using a vector field derived from the image edges. This metric can also integrate with a feature coherence penalization term updated in the course of the fast marching fronts propagation. We applied the fronts propagation model associated with the proposed Randers metrics to foreground and background segmentation and tubularity segmentation. Experimental results show that the proposed model indeed produces promising results.

Notes

Initially, each source point \(\mathbf {x}\in \mathfrak {s}\) is tagged as Trial and the remaining grid points are tagged as Far.

These ellipses are the boundaries of the control sets.

Note that metrics \(\mathcal {G}_\mathfrak {g}^{\varvec{\alpha }}\) and \({\tilde{\mathcal {G}}}_\mathfrak {g}^{\varvec{\alpha }}\) have the identical anisotropy and asymmetry properties to \(\mathcal {F}_{\mathfrak {g}}^{\varvec{\alpha }}\) and \({\tilde{\mathcal {F}}}_{\mathfrak {g}}^{\varvec{\alpha }}\), respectively.

References

Adalsteinsson, D., Sethian, J.A.: A fast level set method for propagating interfaces. J. Comput. Phys. 118(2), 269–277 (1995)

Alpert, S., Galun, M., Brandt, A., Basri, R.: Image segmentation by probabilistic bottom-up aggregation and cue integration. IEEE Trans. Pattern Anal. Mach. Intell. 34(2), 315–327 (2012)

Arbeláez, P., Cohen, L.: Constrained image segmentation from hierarchical boundaries. In: Proceedings of CVPR, pp. 1–8 (2008)

Arbeláez, P.A., Cohen, L.D.: Energy partitions and image segmentation. J. Math. Imaging Vis. 20(1), 43–57 (2004)

Bai, X., Sapiro, G.: Geodesic matting: a framework for fast interactive image and video segmentation and matting. Int. J. Comput. Vis. 82(2), 113–132 (2009)

Bekkers, E.J., Duits, R., Mashtakov, A., Sanguinetti, G.R.: A PDE approach to data-driven sub-Riemannian geodesics in SE (2). SIAM J. Imaging Sci. 8(4), 2740–2770 (2015)

Benmansour, F., Cohen, L.D.: Fast object segmentation by growing minimal paths from a single point on 2D or 3D images. J. Math. Imaging Vis. 33(2), 209–221 (2009)

Benmansour, F., Cohen, L.D.: Tubular structure segmentation based on minimal path method and anisotropic enhancement. Int. J. Comput. Vis. 92(2), 192–210 (2011)

Bornemann, F., Rasch, C.: Finite-element discretization of static Hamilton–Jacobi equations based on a local variational principle. Comput. Vis. Sci. 9(2), 57–69 (2006)

Cardinal, M.H., Meunier, J., et al.: Intravascular ultrasound image segmentation: a three-dimensional fast-marching method based on gray level distributions. IEEE Trans. Med. Imaging 25(5), 590–601 (2006)

Caselles, V., Catté, F., Coll, T., Dibos, F.: A geometric model for active contours in image processing. Numer. Math. 66(1), 1–31 (1993)

Caselles, V., Kimmel, R., Sapiro, G.: Geodesic active contours. Int. J. Comput. Vis. 22(1), 61–79 (1997)

Chen, D., Cohen, L.D.: Vessel tree segmentation via front propagation and dynamic anisotropic riemannian metric. In: Proceedings of ISBI, pp. 1131–1134 (2016)

Chen, D., Mirebeau, J.M., Cohen, L.D.: Global minimum for curvature penalized minimal path method. In: Proceedings of BMVC (2015)

Chen, D., Mirebeau, J.M., Cohen, L.D.: Finsler geodesic evolution model for region-based active contours. In: Proceedings of BMVC (2016)

Chen, D., Mirebeau, J.M., Cohen, L.D.: Global minimum for a Finsler elastica minimal path approach. Int. J. Comput. Vis. 122(3), 458–483 (2017)

Cohen, L.: Multiple contour finding and perceptual grouping using minimal paths. J. Math. Imaging Vis. 14(3), 225–236 (2001)

Cohen, L.D.: On active contour models and balloons. CVGIP: Image Underst. 53(2), 211–218 (1991)

Cohen, L.D., Deschamps, T.: Segmentation of 3D tubular objects with adaptive front propagation and minimal tree extraction for 3D medical imaging. Comput. Methods Biomech. Biomed. Eng. 10(4), 289–305 (2007)

Cohen, L.D., Kimmel, R.: Global minimum for active contour models: a minimal path approach. Int. J. Comput. Vis. 24(1), 57–78 (1997)

Criminisi, A., Sharp, T., Blake, A.: Geos: Geodesic image segmentation. In: Proceedings of ECCV, pp. 99–112 (2008)

Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1(1), 269–271 (1959)

Duits, R., Meesters, S.P., Mirebeau, J.M., Portegies, J.M.: Optimal paths for variants of the 2D and 3D Reeds-Shepp car with applications in image analysis. (2016, submitted)

Frangi, A.F., Niessen, W.J., Vincken, K.L., Viergever, M.A.: Multiscale vessel enhancement filtering. In: Proceedings of MICCAI, pp. 130–137 (1998)

Franken, E., Duits, R.: Crossing-preserving coherence-enhancing diffusion on invertible orientation scores. Int. J. Comput. Vis. 85(3), 253 (2009)

Freeman, W.T., Adelson, E.H., et al.: The design and use of steerable filters. IEEE Trans. Pattern Anal. Mach. Intell. 13(9), 891–906 (1991)

Jacob, M., Unser, M.: Design of steerable filters for feature detection using canny-like criteria. IEEE Trans. Pattern Anal. Mach. Intell. 26(8), 1007–1019 (2004)

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 1(4), 321–331 (1988)

Kaul, V., Yezzi, A., Tsai, Y.: Detecting curves with unknown endpoints and arbitrary topology using minimal paths. IEEE Trans. Pattern Anal. Mach. Intell. 34(10), 1952–1965 (2012)

Kimmel, R., Bruckstein, A.M.: Regularized laplacian zero crossings as optimal edge integrators. Int. J. Comput. Vis. 53(3), 225–243 (2003)

Law, M.W., Chung, A.C.: Three dimensional curvilinear structure detection using optimally oriented flux. In: Proceedings of ECCV, pp. 368–382 (2008)

Li, C., Xu, C., Gui, C., Fox, M.D.: Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 19(12), 3243–3254 (2010)

Li, H., Yezzi, A.: Local or global minima: flexible dual-front active contours. IEEE Trans. Pattern Anal. Mach. Intell. 29(1), 1–14 (2007)

Li, H., Yezzi, A.: Vessels as 4-D curves: global minimal 4-D paths to extract 3-D tubular surfaces and centerlines. IEEE Trans. Med. Imaging 26(9), 1213–1223 (2007)

Malladi, R., Sethian, J., Vemuri, B.C.: Shape modeling with front propagation: a level set approach. IEEE Trans. Pattern Anal. Mach. Intell. 17(2), 158–175 (1995)

Malladi, R., Sethian, J.A.: A real-time algorithm for medical shape recovery. In: Proceeding of ICCV, pp. 304–310 (1998)

Mashtakov, A., Duits, R., Sachkov, Y., Bekkers, E.J., Beschastnyi, I.: Tracking of Lines in Spherical Images via Sub-Riemannian Geodesics in SO(3). J. Math. Imaging Vis. 58(2), 239–264 (2017)

Melonakos, J., Pichon, E., Angenent, S., Tannenbaum, A.: Finsler active contours. IEEE Trans. Pattern Anal. Mach. Intell. 30(3), 412–423 (2008)

Mille, J., Bougleux, S., Cohen, L.D.: Combination of piecewise-geodesic paths for interactive segmentation. Int. J. Comput. Vis. 112(1), 1–22 (2015)

Mirebeau, J.M.: Anisotropic fast-marching on cartesian grids using lattice basis reduction. SIAM J. Numer. Anal. 52(4), 1573–1599 (2014)

Mirebeau, J.M.: Efficient fast marching with Finsler metrics. Numer. Math. 126(3), 515–557 (2014)

Mirebeau, J.-M.: Anisotropic fast-marching on cartesian grids using voronoi’s first reduction of quadratic forms (2017). https://hal.archives-ouvertes.fr/hal-01507334/file/HamiltonFM.pdf

Mirebeau, J.-M.: Fast-marching methods for curvature penalized shortest paths. J. Math. Imaging Vis. (2017). https://doi.org/10.1007/s10851-017-0778-5

Osher, S., Sethian, J.A.: Fronts propagating with curvature-dependent speed: algorithms based on Hamilton–Jacobi formulations. J. Comput. Phys. 79(1), 12–49 (1988)

Price, B.L., Morse, B., Cohen, S.: Geodesic graph cut for interactive image segmentation. In: Proceedings of CVPR, pp. 3161–3168 (2010)

Randers, G.: On an asymmetrical metric in the four-space of general relativity. Phys. Rev. 59(2), 195 (1941)

Rother, C., Kolmogorov, V., Blake, A.: Grabcut: Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 23(3), 309–314 (2004)

Rouy, E., Tourin, A.: A viscosity solutions approach to shape-from-shading. SIAM J. Numer. Anal. 29(3), 867–884 (1992)

Sethian, J.A.: Fast marching methods. SIAM Rev. 41(2), 199–235 (1999)

Sethian, J.A., Vladimirsky, A.: Ordered upwind methods for static Hamilton–Jacobi equations: theory and algorithms. SIAM J. Numer. Anal. 41(1), 325–363 (2003)

Tsitsiklis, J.N.: Efficient algorithms for globally optimal trajectories. IEEE Trans. Autom. Control 40(9), 1528–1538 (1995)

Weber, O., Devir, Y.S., Bronstein, A.M., Bronstein, M.M., Kimmel, R.: Parallel algorithms for approximation of distance maps on parametric surfaces. ACM Trans. Graph. 27(4), 104 (2008)

Xu, C., Prince, J.L.: Snakes, shapes, and gradient vector flow. IEEE Trans. Image Process. 7(3), 359–369 (1998)

Yatziv, L., Bartesaghi, A., Sapiro, G.: O (N) implementation of the fast marching algorithm. J. Comput. Phys. 212(2), 393–399 (2006)

Yezzi, A., Kichenassamy, S., Kumar, A., Olver, P., Tannenbaum, A.: A geometric snake model for segmentation of medical imagery. IEEE Trans. Med. Imaging 16(2), 199–209 (1997)

Acknowledgements

The authors would like to thank all the anonymous reviewers for their detailed remarks that helped us improve the presentation of this paper. The authors thank Dr. Jean-Marie Mirebeau from Université Paris-Sud for his fruitful discussion and creative suggestions. The first author also thanks Dr. Gabriel Peyré from ENS Paris for his financial support. This work was partially supported by the European Research Council (ERC project SIGMA-Vision).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chen, D., Cohen, L.D. Fast Asymmetric Fronts Propagation for Image Segmentation. J Math Imaging Vis 60, 766–783 (2018). https://doi.org/10.1007/s10851-017-0776-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-017-0776-7