Abstract

Manufacturing process monitoring systems are evolving from centralised bespoke applications to decentralised reconfigurable collectives. The resulting cyber-physical systems are made possible through the integration of high-power computation, collaborative communication, and advanced analytics. This digital age of manufacturing aims to yield the next generation of innovative intelligent machines. The focus of this research is the design and development of a cyber-physical process monitoring system, whose components form an advanced signal processing chain for the semi-autonomous process characterisation of a CNC turning machine tool. The novelty of this decentralised system lies in its modularity, reconfigurability, openness, scalability, and unique functionality. The decentralised system produces performance criteria via spindle vibration monitoring, which are correlated to the occurrence of sequential process events via motor current monitoring. Performance criteria enable the establishment of the normal operating response of machining operations and, more importantly, the identification of abnormalities or trends in the sensor data that can provide insight into the quality of the ongoing process. The function of each component in the signal processing chain is reviewed and investigated in an industrial case study.

Introduction

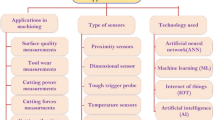

Performance measurement is imperative to manufacturing production because, if the efficiency of an activity cannot be measured, it cannot be effectively controlled (Hon 2005). A wealth of knowledge has been generated to achieve intelligent manufacturing monitoring systems in a centralised way (Teti et al. 2010). Recent trends towards decentralised architectures via cloud-based technology have the potential to incorporate this knowledge universally, while enabling reconfigurability and extensibility (Gao et al. 2015). These Cyber Physical Systems (CPS) represent the collective collaboration between decentralised cyber computation and physical devices (Calvo et al. 2012). CPS enables manufacturing process monitoring systems to be viewed as interactive colonies, similar to the decentralised design paradigms of Agent-based design and Holonic systems (Giret and Botti 2004), as illustrated in Fig. 1. The fundamental process monitoring steps (measurement, acquisition, signal processing, decision support, and loop control) can now be distributed within a virtually limitless space.

Unique benefits of these cyber physical process monitoring systems include: (1) Dynamic customisation: system functionalities can be added or removed freely, as collaboration between components is provided via services. (2) Extensive application: many different processes can utilise the same monitoring tools, customised through plug-and-play components and reconfigurable software attributes. (3) Ubiquitous data: open data interoperability enables the integration of heterogeneous manufacturing inputs and outputs, e.g. sensors, communication protocols, and control equipment (Morgan and O’Donnell 2014a). (4) Sensor fusion: the decentralised environment promotes sensor fusion analysis (Mitchell 2007), which has the potential to generate previously unexplored process insights. (5) Resilience: the open interconnection of applications enables the integration of redundancy mechanisms, e.g. duplication, to enhance the reliability of critical systems in the event of component failure (Zhang and van Luttervelt 2011).

The realisation of this new era is taking place through the convergence of a multitude of research areas, including the decentralised paradigms of ubiquitous and cloud computing (Ferreira et al. 2013), Service Oriented Architecture (SOA) (Wang et al. 2012), and the Internet-of-Things (IoT) (Liu et al. 2014). Examples of intelligent manufacturing through these research mediums include: cloud robotics (Kehoe et al. 2015), which provides access to “Big Data” for machine learning, parallel computation, and crowd sourcing of production tasks; sensor clouds, which collect heterogeneous data for reconfigurable diagnostics (Neto et al. 2015; Morgan et al. 2013; Izaguirre et al. 2011); large-scale sensor networks for manufacturing resource monitoring (Alahakoon and Yu 2015; Tao et al. 2014); production control and management via Enterprise Resource Planning (ERP) optimisation (Savio et al. 2008); decision support for path planning, production scheduling, and preventative maintenance (Pinto et al. 2009); intelligent machining (Li et al. 2015); distributed production orchestration (Colombo et al. 2012); and wireless control (Abrishambaf et al. 2011).

Key to these research areas are the core communication technologies that facilitate data interoperability. This industrial internet has the potential to bring substantial transformation to global industry (Evans and Annunziata 2012). Another embodiment of this global phenomenon is ‘Industry 4.0’, in which manufacturing Cyber-Physical Production Systems (CPPS) have the potential to define a fourth industrial revolution. CPPS research and development challenges (Monostori 2014) include: context-adaptive and autonomous systems, cooperative production systems, identification and prediction of dynamical systems, robust scheduling, fusion of real and virtual systems, and human-machine symbiosis.

Examples of CPPS research and challenges have primarily focused on management, planning, and Supervisory Control And Data Acquisition (SCADA). Initial cloud analytics research has consisted of closed-ended one-to-one relationships enabled through client-to-server communication. Client-to-server analytics enable the parallel processing of multiple signals. However, signal processing and decision support systems are more intricate, as data transitions between states, e.g. signal pre-processing, feature extraction, and feature selection. Furthermore, abstracting signal processing functions into cloud-based architectures via Agent/Holonic design requires orchestration amongst entities. Achieving the required output from these decentralised signal processing chains therefore requires open-ended one-to-many relationships and methodical orchestration/configuration.

A dynamic, multi-scalable signal processing chain realised via CPS has not yet been demonstrated. Such a realisation requires migrating fundamental signal processing techniques to a cloud/agent-based architecture to form a cyber-physical process monitoring system. The focus of this work is therefore the development of such a system. The signal processing chain incorporates the process monitoring steps of data acquisition, signal processing, and process analytics. The resultant system is utilised to continuously and semi-autonomously characterise the performance of a manufacturing turning machine tool.

Cyber physical process monitoring system design

Prologue

Vijayaraghavan and Dornfeld (2010) proposed a framework for cloud-based process characterisation of manufacturing machine tools. This work focuses on the dynamic generation of process events from multiple process data types, e.g., machine controller data and measured phenomena. These events enable a process to be characterised into machining operations via a Complex Event Processing (CEP) application. Identifying process states facilitates the correlation of process data to machining operations, allowing context-specific analysis to be achieved.

Examples of context-specific analysis of machine operations have been identified by multiple authors. Dias et al. (2009) identify the machining power usage of different machine components, e.g., controller, servo, and coolant pump. Eckstein and Mankova (2012) utilise Numerical Controller (NC) state data to identify machine movements with defined feed rates in order to examine multiple process variables, e.g., torque, effective power, and feed force. Brazel et al. (2013) correlate machining tool path position with cutting power data to identify the magnitude of feature-specific power consumption over a part's machining cycle time. Other simulated examples of context-specific analysis through process state acquisition and cross-referencing are visualised in Vijayaraghavan and Dornfeld (2010), Schmitt et al. (2011) and He et al. (2012). Evidently, it is paramount to identify process states in order to establish relevant windows of analysis.

The cyber physical process monitoring system produced in this work realises a process characterisation system inspired by Vijayaraghavan and Dornfeld’s proposed framework, and demonstrates the operation of a decentralised signal processing chain.

Design requirements

Utilising a top-down approach, the following must be defined in order to develop a decentralised signal processing chain: (1) global goal of the processing chain, (2) localised goals of the chain components, and (3) interoperability architecture.

(1) Global goal

In this work, the global or collaborative goal of the processing chain is to characterise the performance of a monitored process. This is achieved via the sequential identification of the process operations, which provides windows of analysis for performance characterisation. The process monitored in this case is an OKUMA LT15-M CNC turning machine tool, see Fig. 2. Process performance is inferred from spindle vibration, measured via a tri-axial accelerometer mounted on the left spindle housing, and tool force, measured via a dynamometer that encompasses the tool holder. Process operations are digitally represented by active machine variables; previous examples obtained these from machine operation state data acquired via the machine's controller. However, this is not always possible, owing to the decentralisation of process control and the limited functional capability of some industrial technologies. This obstacle can be overcome by measuring peripheral machining variables in connection with signal processing. In this case, machine motor current is monitored on multiple movement axes and on the spindle via Current Transformers (CT). Process states can then be identified from motor movement through AC-to-DC conversion.

(2) Local goals

Local goals represent the functional requirements of each component in the processing chain. The current functionality of the processing chain includes: data acquisition, data storage, signal processing, analogue-to-digital event conversion, event correlation, and performance characterisation. Multi-source data acquisition acquires spindle vibration, tool force, and motor currents. Data storage correlates the multi-source data streams and efficiently stores data for post-process analysis. Parallel signal processing extracts features from process variables. Analogue-to-digital conversion produces digital events from analogue signals via set limits. Event correlation identifies the machine's operating sequence from multiple event inputs. The sequence iterations form windows of analysis for performance characterisation. Performance characterisation is achieved via time and frequency domain analytics that are correlated to the sequential machine operations.

(3) Interoperability architecture

The cloud interoperability architecture defines the interactive capabilities between the processing chain components. Selection of a manufacturing field-level interoperability medium depends on the desired attributes of the whole decentralised system (Morgan and O’Donnell 2015). The required attributes in this case are: high data rates (in the kHz range for vibration measurement); high communication speed (\(\le\) 1 ms for an industrial setting); an open data model for custom data throughput; and local area network data interoperability. Consequently, the Acquire Recognise Cluster (ARC) architecture (Morgan and O’Donnell 2014b) was selected, as it meets these requirements. The ARC utilises the National Instruments Shared Variable Engine (SVE) to provide interoperability between software applications. Furthermore, the ARC utilises a binary message representation for effective data structuring and efficient data transmission.

Signal processing chain components

The resultant decentralised signal processing chain is shown in Fig. 3. The signal processing chain is composed of discrete data processing software applications, which are reconfigurable to provide custom data processing across multiple data streams. Data transitions through the following stages: (1) raw signal, (2) pre-processing, (3) feature extraction, (4) feature selection, (5a) spectral analysis, and (5b) wave analysis. The following software applications have been created to facilitate the signal processing chain: acquisition-adaptor, database-client, signal-processing-agent, fuzzy-agent, CEP-agent, SANS-frequency-domain-client, and SANS-time-domain-client. The functionality of each application is derived from the literature and integrated into a cloud-based heterarchy, or Holonic Holarchy (Trentesaux 2009).

(1) Data acquisition

The acquisition-adaptor is the starting point of the processing chain. It acquires the sensor data in discrete increments, formats the data to the ARC Binary Message Model (BMM) (Morgan and O’Donnell 2014b), and communicates the data package to the ARC data cloud for distribution, as illustrated in Fig. 4. The data is time-stamped via the computer's CPU clock. Multiple acquisition-adaptors can reference this same clock, enabling their signals to be correlated.
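The ARC BMM layout is defined in Morgan and O’Donnell (2014b) and is not reproduced here. As a loose illustration of the idea only, the following Python sketch time-stamps one acquired increment with the host clock and packs it into a compact binary message. The header layout and the names `HEADER`, `pack_increment`, and `unpack_increment` are hypothetical and are not the BMM format.

```python
import struct
import time
from typing import List, Optional, Tuple

# Hypothetical header (not the ARC BMM): timestamp (s), sample rate (Hz),
# channel index, sample count. "<" = little-endian, no padding: 8 + 8 + 2 + 4 = 22 bytes.
HEADER = struct.Struct("<ddHI")


def pack_increment(samples: List[float], sample_rate: float, channel: int,
                   timestamp: Optional[float] = None) -> bytes:
    """Time-stamp one acquired increment and serialise it as header + float64 samples."""
    if timestamp is None:
        # Host clock; adaptors on the same computer share it, so streams can be aligned.
        timestamp = time.time()
    header = HEADER.pack(timestamp, sample_rate, channel, len(samples))
    body = struct.pack(f"<{len(samples)}d", *samples)
    return header + body


def unpack_increment(message: bytes) -> Tuple[float, float, int, List[float]]:
    """Inverse of pack_increment: recover the header fields and the samples."""
    timestamp, sample_rate, channel, count = HEADER.unpack_from(message, 0)
    samples = list(struct.unpack_from(f"<{count}d", message, HEADER.size))
    return timestamp, sample_rate, channel, samples
```

Because every adaptor on one host stamps its increments from the same clock, a consumer can align packages from different sensors by their timestamps.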

(2) Signal processing

The signal-processing-agent acquires the raw signal data from the ARC data cloud, subjects the data to layered signal processing functions in parallel to form unique filtered signals, and communicates these processed signals to the SVE data cloud, as illustrated in Fig. 5. This process represents signal pre-conditioning, which is required to improve the signal-to-noise ratio and extract useful information from raw data (Vachtsevanos et al. 2007). Examples of available pre-processing functions include frequency domain high-pass, low-pass, and band-pass filters (Paarmann 2003). Additional processing functions include the time domain mean, standard deviation, variance, RMS, peak-to-valley amplitude, etc. (Teti et al. 2010). All of these functions are reconfigurable in their operating properties and in their sequence of operation within the function stack; this approach enables a truly dynamic, multi-scalable signal pre-processing tool.
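The idea of reconfigurable function stacks can be sketched in a few lines of Python. This is a hypothetical illustration, not the agent's implementation: a stack is simply an ordered list of functions, several named stacks process the same raw increment independently, and reconfiguration amounts to editing the `stacks` dictionary. Only time domain functions are shown; the frequency domain filters are omitted, and the stacks run one after another here, although they are independent and could be dispatched concurrently.

```python
import math
import statistics
from typing import Any, Callable, Dict, List, Sequence

Stage = Callable[[Any], Any]


def rms(x: Sequence[float]) -> float:
    """Root mean square of one increment."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def peak_to_valley(x: Sequence[float]) -> float:
    """Difference between the largest and the smallest sample."""
    return max(x) - min(x)


def run_stack(data: Any, stack: List[Stage]) -> Any:
    """Apply the stages of one function stack in their configured order."""
    for stage in stack:
        data = stage(data)
    return data


def process_increment(raw: Sequence[float], stacks: Dict[str, List[Stage]]) -> Dict[str, Any]:
    """Run every configured stack on the same raw increment, producing one output per stack."""
    return {name: run_stack(raw, stack) for name, stack in stacks.items()}


# Hypothetical configuration: add, remove, or reorder stages to reconfigure the agent.
stacks: Dict[str, List[Stage]] = {
    "rms": [rms],
    "p2v": [peak_to_valley],
    "std_sqrt": [statistics.pstdev, math.sqrt],
}
```

For a raw increment `[2.0, -2.0, 2.0, -2.0]`, the three stacks yield an RMS of 2.0, a peak-to-valley amplitude of 4.0, and the square root of the standard deviation, √2.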

(3) Fuzzy events

The fuzzy-agent acquires the filtered frequency data from the ARC data cloud, subjects the signal to a fuzzy logic inference mechanism to form Boolean states, and communicates these unique state signals to the ARC data cloud, as illustrated in Fig. 6. This process represents signal feature extraction, which is the process of extracting and classifying distinguishable features of a signal (Vachtsevanos et al. 2007). In this case, fuzzy logic is utilised as the classification means to identify the occurrence of specific process phenomena, i.e. events. Fuzzy logic is a generalisation of conventional logic that utilises a set of rules to specify how decisions are made (Lilly 2010). It provides an intuitive way to characterise a process in a coherent, expressive format. The fuzzy-agent utilises basic fuzzy logic principles with ‘crisp’ sets, i.e. the input value either belongs or does not belong to the set. By defining limits on the analogue signal, the output is either above or below the limit: the event is either active or inactive, or in Boolean terms ‘True’ or ‘False’. Other fuzzy systems (Song et al. 2015) utilise a multi-state output, where the degree to which the input belongs to different fuzzy sets is determined. A multi-state output is, however, not required in this instance: each output is required to represent the occurrence of a single machine operational state phenomenon, for utilisation further down the processing chain.
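Crisp-set event detection reduces to one comparison per processed signal. The Python sketch below is illustrative only: it assumes an event is active when the value lies strictly above its limit, borrows the event limits used in the case study (spindle 20 A, z-axis rapid movement 5 A), and uses the hypothetical names `crisp_event`, `fuzzy_agent`, and `LIMITS`.

```python
from typing import Dict


def crisp_event(value: float, limit: float) -> bool:
    """Crisp set membership: the value either lies above the limit (True) or it does not (False)."""
    return value > limit


def fuzzy_agent(features: Dict[str, float], limits: Dict[str, float]) -> Dict[str, bool]:
    """Convert each processed analogue feature into a Boolean event using its own limit."""
    return {name: crisp_event(features[name], limit) for name, limit in limits.items()}


# Limits taken from the case study; the strict ">" comparison is an assumption.
LIMITS = {"spindle": 20.0, "z_rapid": 5.0}
```

A multi-state fuzzy system would instead return a degree of membership between 0 and 1 for each set; the Boolean output is what allows each event to stand for exactly one machine state.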

(4) Sequence identification

The CEP-agent acquires multiple state signals from the ARC data cloud and subjects the signals to a correlated sequential Boolean logic inference mechanism to identify the current state of the process/machine operation, as illustrated in Fig. 7. This process represents signal feature selection, which corresponds to seeking features that possess properties of particular objective distinction and detection (Vachtsevanos et al. 2007). In this case, feature selection is represented by a Complex Event Processing (CEP) application, which utilises process state data to identify the operation sequence of a process/machine. Cloud-based CEP research has been well represented through the EU AESOP initiative (Izaguirre et al. 2011; Lindgren et al. 2013; Pietrzak et al. 2012). Other examples of CEP systems include T-REX (Cugola and Margara 2012) and SAP’s unified management system (Walzer et al. 2008). Fundamentally, CEP systems utilise a logic rule base to determine the occurrence of a complex event. Similarly, the CEP-agent utilises a logical rule base consisting of Boolean logic sets and time-frame reference modes, e.g., states are equal in parallel, in serial sequence, or randomly. Each state set represents a different sequence operation, and the application searches the sets in succession. For example, if sequence set N is active, then sequence set N \(+\) 1 is actively searched for. Once sequence set N \(+\) 1 is identified, it becomes the active state, sequence set N \(+\) 2 becomes the active search set, and so on. Once all sequence sets have been found, the search resets to the first sequence set, unless specified otherwise.
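The successive search can be sketched as a small state machine. This Python sketch illustrates the described behaviour under assumptions and is not the CEP-agent itself: only the "states equal in parallel" mode is modelled, missing events count as inactive, a set is treated as identified only when its pattern newly becomes true (so a persisting event does not advance the search twice), and the names `SequenceSet` and `SequenceSearcher` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class SequenceSet:
    """One operating sequence: event states that must hold simultaneously ('parallel' mode)."""
    name: str
    pattern: Dict[str, bool]

    def matches(self, states: Dict[str, bool]) -> bool:
        # Missing events are treated as inactive (False).
        return all(states.get(event, False) == value for event, value in self.pattern.items())


class SequenceSearcher:
    """While sequence set N is active, only set N + 1 is searched for; wraps to the first set."""

    def __init__(self, sets: List[SequenceSet]) -> None:
        self.sets = sets
        self.active: Optional[int] = None     # index of the active set; None before the first match
        self.previous: Dict[str, bool] = {}   # event states seen at the previous update

    def update(self, states: Dict[str, bool]) -> Optional[str]:
        candidate = 0 if self.active is None else (self.active + 1) % len(self.sets)
        target = self.sets[candidate]
        # Identify the set only on a new match, so a persisting event does not advance twice.
        if target.matches(states) and not target.matches(self.previous):
            self.active = candidate
        self.previous = dict(states)
        return None if self.active is None else self.sets[self.active].name
```

For example, with the sets start = {spindle, rapid}, cut 1 = {rapid}, and stop = {spindle inactive}, a rapid-movement event that stays on keeps the searcher in "start"; only when the event drops and reappears does "cut 1" become active, and deactivating the spindle then moves the search to "stop".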

(5) Sequence analysis: frequency domain

The Sequence ANalySis (SANS)-frequency-domain-client acquires a waveform and the current operating sequence from the ARC data cloud, subjects the waveform to frequency spectrum analysis, and correlates the results to different machining operations to achieve context-specific analysis, as illustrated in Fig. 8. The analytics are focused on the frequency domain using Fast Fourier Transforms (FFTs). Fourier transforms allow a signal to be represented by its frequency components in the frequency domain (Tan and Jiang 2013). An efficient way to achieve this is through an FFT algorithm, which computes the Fourier transform coefficients with reduced computational complexity. Frequency analysis is fundamental to vibration monitoring for fault diagnosis and prognostics, where the condition of a kinematic system can be accurately determined (Vachtsevanos et al. 2007). The SANS-frequency-domain-client enables the generation of power or energy spectrums. The configured analysis type, sample size, and sensor sensitivity characterise the resulting spectrum. These spectrums are correlated to the sequence operation produced by the CEP-agent. The resultant sequence-specific frequency spectrums form metrics for performance characterisation.
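A sketch of sequence-specific spectral analysis follows, assuming NumPy, a rectangular (unwindowed) FFT window of even length, and a one-sided power spectrum scaled so that its bins sum to the mean square of the window. `power_spectrum` and `SequenceSpectra` are hypothetical names; averaging all spectra that fall within one operating sequence is one plausible way to obtain a power spectrum per sequence, not necessarily the client's method.

```python
from typing import Dict, Tuple

import numpy as np


def power_spectrum(window: np.ndarray, fs: float) -> Tuple[np.ndarray, np.ndarray]:
    """One-sided power spectrum of a real, even-length window (rectangular window)."""
    n = len(window)
    if n % 2:
        raise ValueError("even window length expected")
    power = np.abs(np.fft.rfft(window)) ** 2 / n**2
    power[1:-1] *= 2.0  # fold in negative frequencies; DC and Nyquist bins appear once
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, power


class SequenceSpectra:
    """Averages the power spectra of all windows that fall within each operating sequence."""

    def __init__(self, fs: float) -> None:
        self.fs = fs
        self.sums: Dict[str, np.ndarray] = {}
        self.counts: Dict[str, int] = {}

    def add(self, sequence: str, window: np.ndarray) -> None:
        _, power = power_spectrum(window, self.fs)
        self.sums[sequence] = self.sums.get(sequence, 0.0) + power
        self.counts[sequence] = self.counts.get(sequence, 0) + 1

    def mean_spectrum(self, sequence: str) -> np.ndarray:
        return self.sums[sequence] / self.counts[sequence]
```

With this scaling, a sinusoid of amplitude A that completes a whole number of cycles in the window concentrates a power of A²/2 in a single bin, which makes sequence-to-sequence comparisons of peak power straightforward.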

(6) Sequence analysis: time domain

The Sequence ANalySis (SANS)-time-domain-client acquires a waveform and the current operating sequence from the ARC data cloud, subjects the waveform to time domain analytics, and correlates the results to different machining operations to achieve context specific analysis, as illustrated in Fig. 9. Time domain analytics include: mean, standard deviation, maximum, and sum. These values are processed in discrete increments and correlated to the sequence operation that is produced by the CEP-agent. The SANS-time-domain-client can acquire multiple waveforms for parallel data processing.
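The per-sequence time domain statistics can be accumulated incrementally, one increment at a time. The Python sketch below is an illustration under assumptions: it uses Welford's update for the mean and population standard deviation, keeps the maximum and sum alongside, and keys one accumulator by (waveform, sequence) so that several waveforms can be analysed side by side. `RunningStats` and `SequenceTimeStats` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class RunningStats:
    """Welford accumulator for mean and population standard deviation, plus max and sum."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0
    maximum: float = -math.inf
    total: float = 0.0

    def update(self, samples: Iterable[float]) -> None:
        for x in samples:
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
            self.maximum = max(self.maximum, x)
            self.total += x

    def summary(self) -> Dict[str, float]:
        std = math.sqrt(self.m2 / self.n) if self.n else 0.0
        return {"mean": self.mean, "std": std, "max": self.maximum, "sum": self.total}


class SequenceTimeStats:
    """One accumulator per (waveform, sequence) pair, fed with discrete increments."""

    def __init__(self) -> None:
        self.stats: Dict[Tuple[str, str], RunningStats] = {}

    def add(self, waveform: str, sequence: str, samples: Iterable[float]) -> None:
        self.stats.setdefault((waveform, sequence), RunningStats()).update(samples)

    def summary(self, waveform: str, sequence: str) -> Dict[str, float]:
        return self.stats[(waveform, sequence)].summary()
```

Welford's update avoids the cancellation error of the naive sum-of-squares formula, which matters when a long cutting sequence accumulates many samples with a large mean.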

Performance characterisation testing

Introduction

An experimental investigation was set up to validate the functionality of the decentralised signal processing chain. In this investigation, the OKUMA CNC turning machine is monitored via tool force, spindle vibration, and single phase motor current. These process signals are processed throughout the decentralised signal processing chain to achieve process performance characterisation via sequence-oriented correlation inferred from motor current activity. Time and frequency domain analyses are undertaken to provide contrasting performance metrics. The objectives of this phase-1 investigation are to: validate the functionality of each signal processing chain component; validate the collaborative goal of the signal processing chain to characterise a process; and demonstrate the operation of a decentralised cyber-physical process monitoring system.

Setup

The cyber-physical process monitoring system's topology is shown in Fig. 10. The OKUMA CNC turning machine is monitored through: tool force, via a turret-mounted tri-axial dynamometer; spindle vibration, via a magnetically mounted tri-axial accelerometer; and single phase motor current, via clip-on Current Transformers (CT) attached to the spindle and the turret X and Z axis motor windings. Each sensor is attached to an individual data acquisition device and connected to a Next Unit of Computing (NUC) computer through different communication mediums. The NUCs have a small form factor, 116.6 mm \(\times\) 112.0 mm \(\times\) 34.5 mm, which minimises their impact on the industrial environment. Furthermore, the ARC-SVE enables data interoperability across a network, so multiple NUCs can be networked together to allow for high-capacity decentralised computation. NUC#1 hosts all the data acquisition adaptors, the signal processing agent, the fuzzy agent, and a database client for post-process analysis. NUC#2 hosts the CEP agent and both the time and frequency domain SANS client applications. All applications share data via the ARC-SVE, which ensures effective and efficient data distribution.

The machining operation for this experiment is dry single point oblique cutting of a bright steel (BS 970-1:1991) workpiece, 42 mm in diameter and 200 mm in length. The workpiece protrudes 100 mm from the chuck and is pre-machined to a diameter of 33 mm over a length of 50 mm. A rough cutting cycle is selected due to its repetitive operation, as illustrated in Fig. 11. The machining length of cut is 30 mm, with a depth of cut of 1 mm. Constant surface speed is utilised and set to 100 m/min. Three cutting cycles with feed rates of 0.1, 0.2, and 0.3 mm/rev represent the machining operation. The varying feed rates allow process performance to be compared in this initial phase-1 investigation.

Signal processing and process characterisation

The decentralised signal processing chain is a semi-autonomous process performance characterisation mechanism. Initial configuration is required to specify the data acquisition parameters, signal processing function settings, fuzzy event limits, CEP sequence logic, and SANS analysis criteria. After initial configuration, the signal processing chain operates autonomously, continuously processing data and performing sequence-specific performance characterisation.

A 3-step methodology is utilised to initially orchestrate/configure the signal processing chain:

(1) Primary data acquisition

Firstly, the data acquisition-adaptors need to be configured. These applications provide the raw data that is utilised at every step of the process. This step identifies the sensing parameters, data acquisition and cloud distribution rates, and SVE addresses. For example, spindle Y-axis vibration: Max/Min 5 V, IEPE excitation 4.0 mA, AC coupling, 12 kHz sampling, 12 Hz cloud distribution @ 1000 samples/package, SVE address: NUC1/ARC_Adaptor1/V1, Index 0. Once this has been set up for all process variables, primary data can be acquired from the process and stored for post-process analysis via the database-client.
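The adaptor settings in the example above can be captured as a configuration record. This minimal Python sketch uses hypothetical class and field names; it also shows that the 12 Hz cloud distribution rate follows directly from 12 kHz sampling divided by 1000 samples per package.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdaptorConfig:
    """Hypothetical record of one acquisition-adaptor's settings."""
    channel: str
    input_limit_v: float        # "Max/Min" input range setting
    iepe_excitation_ma: float   # IEPE excitation current
    coupling: str               # e.g. "AC"
    sample_rate_hz: float
    samples_per_package: int
    sve_address: str
    sve_index: int

    @property
    def distribution_rate_hz(self) -> float:
        """Packages sent to the cloud per second."""
        return self.sample_rate_hz / self.samples_per_package


SPINDLE_Y_VIBRATION = AdaptorConfig(
    channel="spindle-Y-axis vibration",
    input_limit_v=5.0,
    iepe_excitation_ma=4.0,
    coupling="AC",
    sample_rate_hz=12_000.0,
    samples_per_package=1000,
    sve_address="NUC1/ARC_Adaptor1/V1",
    sve_index=0,
)
```

Deriving the distribution rate rather than storing it separately keeps the two settings from drifting out of step when the sampling rate or package size is reconfigured.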

(2) Alpha configuration

Alpha configuration involves the experimental configuration of signal processing chain components, with the primary data acquired previously, to achieve local goals. In this work, alpha configuration involves the configuration of the signal-processing-agent, fuzzy agent, and CEP-agent. This process represents signal pre-processing, feature extraction, and feature selection.

Spindle and axis motor currents need to be processed to provide the CEP-agent with process event data. The primary data of both the spindle and z-axis motor currents are represented in Fig. 12-1. Both signals are AC and are composed of multiple CNC actions. Spindle motor current varies in amplitude due to inrush currents (Trout 2010), back EMF (Pople 1999), spindle acceleration, and steady velocity. Axis motor current varies in amplitude due to varying axis acceleration and steady-state velocity. Axis motor current (4 kW motor) has a considerably lower amplitude than the spindle motor current (15 kW motor). Consequently, the axis current signal is more influenced by noise, given the minimum CT sensing capacity of 1 A. However, both signals have a variety of features that could be utilised for process characterisation.

In this experiment, spindle rotation and z-axis rapid movements are utilised for process characterisation, as seen in Fig. 12-2. Spindle rotation is a constant signal indicating the various activities of the spindle within an active state. Spindle rotation is extracted with a Root Mean Square (RMS) function. Rapid movements have a strong influence on the signal, are a common occurrence in different part machining processes, and are resistant to variation in machining parameters. Rapid movements are extracted from the raw signal with a standard deviation function to convert AC to DC; alternatively, an RMS function can be utilised. Finally, a Square Root function is utilised to further magnify the event occurrence. These processed signals are the inputs to the fuzzy-agent.
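The AC-to-DC conversion can be illustrated on a synthetic current trace. In this Python sketch, `ac_to_dc` is a hypothetical helper that computes, per non-overlapping window, the RMS (used for spindle rotation), the population standard deviation (used for rapid movements), and its square root, mirroring the functions named above. The window length is an assumed parameter; the real sampling rate and window of the current channels are not given here.

```python
import math
import statistics
from typing import Dict, List, Sequence


def ac_to_dc(signal: Sequence[float], window: int) -> List[Dict[str, float]]:
    """Convert an AC current trace into one DC level per non-overlapping window."""
    levels = []
    for start in range(0, len(signal) - window + 1, window):
        chunk = signal[start:start + window]
        rms = math.sqrt(sum(v * v for v in chunk) / window)  # spindle rotation feature
        std = statistics.pstdev(chunk)                        # rapid-movement feature
        levels.append({"rms": rms, "std": std, "sqrt_std": math.sqrt(std)})
    return levels
```

As a usage example under assumed values (1 kHz sampling, a 50 Hz supply, 100-sample windows), an idle window followed by a sinusoid with a peak of 30√2 A gives an RMS of 0 A and then 30 A. Because the sinusoid is zero-mean, the standard deviation equals the RMS, which is why either function can serve for the rapid-movement feature.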

Converting both signals from AC to DC and extracting signal features enables the identification of process events that are either active or inactive, ‘on’ or ‘off’. Fuzzy events are characterised by event limits, e.g., spindle active above 20 A and z-axis rapid movement active above 5 A. The resultant Boolean fuzzy events are identified in Fig. 12-3. These event occurrences are the inputs to the CEP-agent, which identifies the operating sequence of the process.

Process operating sequences are identified via the occurrence of sequential event logic. In this experiment, the ‘start’ sequence is identified by the occurrence of both spindle rotation and the initial rapid movement. Each cutting cycle is identified by the occurrence of a rapid movement after the ‘start’ sequence is active; the rapid movements effectively divide the machining operations. The ‘stop’ sequence is identified by the occurrence of a final rapid movement and the deactivation of the spindle. In total there are five process sequences: start, cut 1, cut 2, cut 3, and stop, as illustrated in Fig. 12-4. These sequences are the windows of analysis within which the SANS clients correlate data. The current sequence is communicated to the SVE every time there is a change in event, and at regular intervals of 100 ms.
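The reporting rule in the last sentence (send on change, otherwise repeat at a fixed interval) can be sketched as follows. This Python sketch assumes that "change" refers to a change in the reported sequence value, takes timestamps as explicit arguments so that it is deterministic, and uses a hypothetical `publish` callback in place of the actual SVE write.

```python
from typing import Any, Callable, Optional


class SequencePublisher:
    """Publishes the current sequence when it changes and, otherwise, at a fixed interval."""

    def __init__(self, publish: Callable[[Any, float], None], interval_s: float = 0.1) -> None:
        self.publish = publish          # hypothetical stand-in for writing to the SVE
        self.interval_s = interval_s    # 100 ms heartbeat
        self.last_value: Any = None
        self.last_time: Optional[float] = None

    def update(self, value: Any, now: float) -> None:
        changed = value != self.last_value
        due = self.last_time is None or now - self.last_time >= self.interval_s
        if changed or due:
            self.publish(value, now)
            self.last_value = value
            self.last_time = now
```

The periodic repeat lets a client that joins mid-process, or misses one update, learn the current sequence within one interval without waiting for the next change.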

(3) Beta configuration

Beta configuration involves the configuration of signal processing chain components, with real-time data, that is either actively acquired or simulated, to achieve global goals. In this work beta configuration involves the configuration of the SANS frequency and time domain clients. Furthermore, this step provides real-time testing of all previously configured signal processing chain components. The signal processing chain utilises spindle vibration and tool force to establish process performance characterisation.

The SANS time domain client utilises both vibration and force data. In this investigation the focus is on the cutting axis of the machining process, which is represented by the y-axis spindle vibration and the x-axis tool force, as seen in Fig. 13-1. Both signals require pre-processing. Spindle vibration is an alternating signal, so a Mean Square (MS) function is required to identify its power. Tool force is represented as a negative value, is subject to drift, and needs to be scaled from volts to newtons. To monitor tool force, the following signal processing functions are therefore required: time series detrend, multiplication scaling, and negation. The resultant pre-processed signals are represented in Fig. 13-2, and their correlation to the sequence iterations is represented in Fig. 13-3.
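The pre-processing functions named above can be sketched with NumPy. The detrend here is an assumed least-squares linear fit over the whole record (the detrend method used in the chain is not specified), and `VOLTS_TO_NEWTONS` is a hypothetical calibration constant whose real value depends on the dynamometer and amplifier settings. Note that a linear detrend also removes the record mean, so force differences are preserved while the absolute baseline is not.

```python
import numpy as np

VOLTS_TO_NEWTONS = 250.0  # hypothetical calibration constant (N/V)


def preprocess_tool_force(volts, scale: float = VOLTS_TO_NEWTONS) -> np.ndarray:
    """Detrend (linear fit), scale from volts to newtons, then negate."""
    volts = np.asarray(volts, dtype=float)
    t = np.arange(len(volts), dtype=float)
    slope, intercept = np.polyfit(t, volts, 1)     # least-squares straight line
    detrended = volts - (slope * t + intercept)    # removes drift (and the record mean)
    return -(detrended * scale)                    # negative voltage -> positive force


def mean_square(x) -> float:
    """Mean square of one vibration increment, a measure of signal power."""
    x = np.asarray(x, dtype=float)
    return float(np.mean(x * x))
```

For a record with a linear drift of 1 mV per sample and a −0.4 V cutting pulse in its middle half, the drift is removed and the force during the cut sits 100 N above the non-cutting level with the assumed constant.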

The SANS frequency domain client utilises raw vibration data and does not require any pre-processing. Configuration for frequency spectra analysis includes: spectrum type: power spectrum; Nyquist frequency: 6000 Hz; sensor sensitivity: 6000; and window size: 600. This produces a power spectrum with 300 bins, each representing a 20 Hz bandwidth, resulting in a 6000 Hz frequency range, as seen in Fig. 14. This power spectrum is highly reactive to the process, producing 12 spectrums per second. The 3D power spectra provide key frequency response information for a changing process; this data is primarily utilised for offline analysis. To achieve performance characterisation, limits must be set within which the data can be correlated. The integration of the operating sequence provides these limits and results in a power spectrum per sequence.
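The frequency grid follows from the configured values: with 12 kHz sampling and a 600-sample window, the bin width is 12000 / 600 = 20 Hz and the Nyquist frequency is 6000 Hz. The Python sketch below checks this arithmetic with NumPy's `rfftfreq`. NumPy's one-sided grid includes both the DC and Nyquist points, giving 301 points, whereas the text counts 300 bins of 20 Hz spanning 0 to 6000 Hz. Relating the 12 spectrums per second to the 12 Hz package rate is an assumption.

```python
import numpy as np

fs = 12_000.0                # sampling rate (Hz), from the acquisition example
window = 600                 # FFT window size (samples)
samples_per_package = 1000   # cloud distribution package size

nyquist = fs / 2                               # highest resolvable frequency
bin_width = fs / window                        # frequency resolution of one bin
freqs = np.fft.rfftfreq(window, d=1.0 / fs)    # 0 Hz, 20 Hz, ..., 6000 Hz
packages_per_second = fs / samples_per_package # assumed to set the spectrum rate
```

Doubling the window to 1200 samples would halve the bin width to 10 Hz at the cost of a longer time span per spectrum, which is the usual resolution trade-off when choosing the window size.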

Sequence analysis

(1) Frequency domain

The resultant sequence correlated power spectrums are represented in Fig. 15. The spindle cutting axis frequency response has resulted in two primary peaks. The Peak 1 frequency band is induced by machining, and the Peak 2 frequency is responsive to the spindle rotation, workpiece rotation, and machining. These peaks vary in both power and frequency in response to a changing feed rate. Peak 1 increases incrementally in power between cuts, and undergoes a peak frequency shift at a feed rate of 0.3 mm/rev. Peak 2 maintains peak frequency but varies in power.

The results show how varying the cutting parameters can change the frequency response of the machining process. Peak spectrum analysis is key to identifying contributing vibration sources. By providing a base reference, any deviation in peak power or frequency and the occurrence of new frequency peaks can be highlighted.

Furthermore, the power spectrums can be simplified into one-dimensional statistics, as seen in Fig. 16. The square root of the sum of the power spectrum gives the RMS of the total signal (Herlufsen et al. 2008). These statistics provide a summary of total signal power and energy for each sequence. The results show that increasing the feed rate increases the cutting axis vibration power, but also that the reduced cutting duration results in a decrease in total vibration energy.
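The relation between the power spectrum and the RMS (Parseval's theorem) can be checked numerically. This Python sketch assumes a one-sided power spectrum normalised so that its bins sum to the mean square of the window (rectangular window, no averaging); summing the bins and taking the square root then reproduces the time domain RMS. This is why a frequency domain route and a time domain route can arrive at corresponding statistics.

```python
import numpy as np


def one_sided_power(x: np.ndarray) -> np.ndarray:
    """One-sided power spectrum whose bins sum to the mean square of x (rectangular window)."""
    n = len(x)
    power = np.abs(np.fft.rfft(x)) ** 2 / n**2
    if n % 2 == 0:
        power[1:-1] *= 2.0   # even n: DC and Nyquist bins are not mirrored
    else:
        power[1:] *= 2.0     # odd n: only the DC bin is not mirrored
    return power


def rms_from_spectrum(x: np.ndarray) -> float:
    """Square root of the summed power spectrum."""
    return float(np.sqrt(one_sided_power(x).sum()))


def rms_time_domain(x: np.ndarray) -> float:
    """RMS computed directly from the samples."""
    return float(np.sqrt(np.mean(np.square(x))))
```

For any real signal the two functions agree to floating-point precision; in practice, differences between the two routes come from windowing, averaging, or different sample spans rather than from the transform itself.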

(2) Time domain

The resultant sequence correlated time domain spindle y-axis vibration statistics are represented in Fig. 17-1. These values are nearly identical ( 1 % deviation) to the statistics achieved previously through power spectrum accumulation. This identifies the successful operation of both SANS client applications—by unanimously achieving corresponding performance metrics—through different methods which have different degrees of collaboration.


Uniquely, the SANS time domain client can provide other statistical metrics, such as the standard deviation and maximum value, as seen in Fig. 17-2. These values add further dimensions to process performance characterisation. The SANS time domain client also enables multiple waveforms to be analysed in parallel, so signal analysis is not limited to alternating signals. Other process variables, such as tool force, can be correlated to sequential process operations, as seen in Fig. 18. Increasing the feed rate raises the average power, maximum power, and standard deviation of both spindle vibration and tool force. However, the two signals react to the process in different ways. Tool force responds only to the machining process, while spindle vibration combines machining operations and machine actions. Consequently, vibration can also be used to monitor the condition of spindle rotation and axis motion outside of the cutting process.
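The per-sequence, multi-channel statistics described above can be sketched as a loop over sequence boundaries and named channels. This is a minimal illustration under stated assumptions: channels are sample lists, sequence boundaries are already known as sample-index ranges, "average power" and "maximum power" are taken as the mean and maximum of the squared samples, and the names `sequence_statistics`, `vibration`, and `force` are hypothetical.

```python
import statistics


def sequence_statistics(channels, boundaries):
    """Summarise each channel within each process sequence.

    channels:   dict mapping a channel name to a list of samples
    boundaries: list of (sequence_id, start, end) sample indices, end exclusive
    Returns {sequence_id: {channel: {"mean_power", "max_power", "std"}}}.
    """
    result = {}
    for seq_id, start, end in boundaries:
        summary = {}
        for name, samples in channels.items():
            window = samples[start:end]
            squared = [x * x for x in window]
            summary[name] = {
                "mean_power": sum(squared) / len(squared),  # assumed: mean of x^2
                "max_power": max(squared),                  # assumed: peak of x^2
                "std": statistics.pstdev(window),           # population std dev
            }
        result[seq_id] = summary
    return result
```

Because every channel is cut by the same boundaries, signals of different kinds (an alternating vibration signal and a largely steady force signal) end up summarised side by side for each sequence.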

Discussion

The cyber-physical signal processing chain is a complex analysis system. However, through the separation of functional requirements into interactive applications, the task is simplified into a step-by-step process.

Throughout this investigation, the functionality of each signal processing chain component has been validated. Multi-source data was acquired and distributed throughout the data interoperability cloud. Parallel, customised signal pre-processing, feature extraction, and feature selection were encapsulated in multiple interactive applications with reconfigurable controls. These subsystems were applied to enable process analytics, through pre-processing, and to identify sequential process operations, through analogue to digital conversion. The resulting digital process states formed the metrics for sequence identification through programmable logic CEP. The end of the signal processing chain integrated sequence identification data, process performance data, and time and frequency domain analytics. The result was a context-specific performance analysis of a CNC turning operation.
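The analogue-to-digital step and rule-based sequence identification can be illustrated with a threshold (with optional hysteresis) that turns motor current into on/off states, followed by a single rule over those states. This is a simplified stand-in written in plain Python, not the programmable logic CEP engine used in the study; the thresholds, the spindle/feed channel pairing, and all function names are hypothetical.

```python
def to_digital(samples, threshold, hysteresis=0.0):
    """Convert an analogue signal into on/off states.

    The state switches on above threshold + hysteresis and off below
    threshold - hysteresis, which suppresses chatter near the threshold.
    """
    state = False
    states = []
    for x in samples:
        if not state and x > threshold + hysteresis:
            state = True
        elif state and x < threshold - hysteresis:
            state = False
        states.append(state)
    return states


def edges(states):
    """Yield (index, "rise" or "fall") each time the digital state changes."""
    prev = False
    for i, s in enumerate(states):
        if s != prev:
            yield i, ("rise" if s else "fall")
        prev = s


def identify_sequences(spindle_on, feed_active):
    """Hypothetical rule: a cutting sequence is any interval in which the
    spindle is running AND a feed axis is moving. Returns (start, end)
    index pairs, end exclusive."""
    combined = [a and b for a, b in zip(spindle_on, feed_active)]
    sequences = []
    start = None
    for i, kind in edges(combined):
        if kind == "rise":
            start = i
        else:
            sequences.append((start, i))
            start = None
    if start is not None:  # sequence still open at the end of the record
        sequences.append((start, len(combined)))
    return sequences
```

Separating the thresholding from the rule mirrors the chain described above: the digital states are produced once and can then feed any number of identification rules.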

Context-specific analysis was only achievable through the collaboration present in the signal processing chain. The external configuration of these digital resources enables the system to meet its global goal: characterising a process. The results provide a quantified measure of sequential machining operations from spindle vibration and tool force. This measurement is a first step towards insight into process and sub-process behaviour, and provides a basis for comparing successive sequence iterations.

This phase-1 investigation used an initial, simple process with varying machining parameters. The variation in machining parameters was intended to induce variation in the performance metrics, since identifying variation is key to achieving preventative maintenance. Future studies or applications could involve hundreds of events; the capacity of the signal processing chain is dynamic and can scale to handle this increased load. Furthermore, the events in this experiment are 100 % process driven. Future applications could also incorporate human-driven inputs for human-to-machine collaboration. Alternatively, more advanced control systems could be integrated to provide a more direct process sequence identifier, for example a direct output from the controller indicating the current operation.

The successor to this work will be a phase-2 investigation focusing on natural variations in manufacturing machining, such as tool wear, motor faults, and chatter.

Conclusion

This work successfully demonstrated the operation of a cyber-physical process monitoring system. On the micro scale, each software application consists of abstract reconfigurable functions, which execute accurately and systematically in soft real time. On the macro scale, these functions become reconfigurable cyber building blocks on a physical, networked computation foundation. The arrangement of these function blocks manipulates signals in a specific way to meet the global goals of a signal processing chain, and reconfiguration on either the micro or the macro scale can drastically change the output of the chain. This enables a process monitoring system to be reconfigurable to suit multiple processes, flexible to change with a process over time, and extensible for future capacity and capability requirements.
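The idea of reconfigurable function blocks whose arrangement determines the output of the chain can be sketched as a pipeline built from a configuration list. The block types and the `REGISTRY` below are hypothetical examples, not components of the system described here; the point is only that the same blocks in a different order yield a different result.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Signal = List[float]
Block = Callable[[Signal], Signal]


def make_gain(k: float) -> Block:
    """Block that scales every sample by k."""
    return lambda x: [k * v for v in x]


def make_offset(c: float) -> Block:
    """Block that adds a constant c to every sample."""
    return lambda x: [v + c for v in x]


def make_rectify() -> Block:
    """Block that takes the absolute value of every sample."""
    return lambda x: [abs(v) for v in x]


# Hypothetical registry mapping block names to factories.
REGISTRY: Dict[str, Callable[..., Block]] = {
    "gain": make_gain,
    "offset": make_offset,
    "rectify": make_rectify,
}


def build_chain(config: Sequence[Tuple]) -> Block:
    """Instantiate blocks from (name, *args) entries and run them in order."""
    blocks = [REGISTRY[name](*args) for name, *args in config]

    def run(x: Signal) -> Signal:
        for block in blocks:
            x = block(x)
        return x

    return run
```

With the input [-1.0, 0.5], the configuration [("offset", -1.0), ("rectify",)] yields [2.0, 0.5], whereas [("rectify",), ("offset", -1.0)] yields [0.0, -0.5]. Keeping the chain as data rather than code is what allows it to be reconfigured without rewriting the blocks.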

The idea that these systems will create the next generation of innovative intelligent machines is based on the concepts of abstraction, simplification, and free data. New innovative solutions will be enabled by tools that exist in a borderless, collaborative computational space. Engineers previously unable to access these resources because of high skill requirements are now presented with reconfigurable tools, for direct use or custom modification.

References

Abrishambaf, R., Hashemipour, M., & Bal, M. (2011). Integration of wireless sensor networks into the distributed intelligent manufacturing within the framework of IEC 61499 function blocks. In 2011 IEEE international conference on systems, man, and cybernetics (SMC). doi:10.1109/ICSMC.2011.6084204.

Alahakoon, D., & Yu, X. (2015). Smart electricity meter data intelligence for future energy systems: A survey. IEEE Transactions on Industrial Informatics. doi:10.1109/TII.2015.2414355.

Brazel, E., Hanley, R., Cullinane, R., & O’Donnell, G. E. (2013). Position-oriented process monitoring in freeform abrasive machining. The International Journal of Advanced Manufacturing Technology, 69(5–8), 1443–1450. doi:10.1007/s00170-013-5111-x.

Calvo, I., Etxeberria-Agiriano, I., & Noguero, A. (2012). Distribution middleware technologies for cyber physical systems. In 2012 9th international conference on remote engineering and virtual instrumentation (REV). doi:10.1109/REV.2012.6293151.

Colombo, A. W., Mendes, J. M., Leitao, P., & Karnouskos, S. (2012). Service-oriented SCADA and MES supporting petri nets based orchestrated automation systems. In IECON 2012—38th annual conference on ieee industrial electronics society. doi:10.1109/IECON.2012.6389076.

Cugola, G., & Margara, A. (2012). Complex event processing with T-REX. Journal of Systems and Software, 85, 1709–1728. doi:10.1016/j.jss.2012.03.056.

Diaz, N., Helu, M., Jarvis, A., Tönissen, S., Dornfeld, D., & Schlosser, R. (2009). Strategies for minimum energy operation for precision machining. The Proceedings of MTTRF 2009 Annual Meeting, 1, 6. doi:10.1007/978-3-642-19692-8.

Eckstein, M., & Mankova, I. (2012). Monitoring of drilling process for highly stressed aeroengine components. Procedia CIRP, 1(1), 587–592. doi:10.1016/j.procir.2012.04.104.

Evans, P., & Annunziata, M. (2012). Industrial internet: Pushing the boundaries of minds and machines. General Electric. http://www.ge.com/docs/chapters/Industrial_Internet.pdf.

Ferreira, L., Putnik, G., Cunha, M., Putnik, Z., Castro, H., Alves, C., et al. (2013). Cloudlet architecture for dashboard in cloud and ubiquitous manufacturing. Procedia CIRP, 12, 366–371. doi:10.1016/j.procir.2013.09.063.

Gao, R., Wang, L., Teti, R., Dornfeld, D., Kumara, S., Mori, M., et al. (2015). Cloud-enabled prognosis for manufacturing. CIRP Annals-Manufacturing Technology. doi:10.1016/j.cirp.2015.05.011.

Giret, A., & Botti, V. (2004). Holons and agents. Journal of Intelligent Manufacturing. doi:10.1023/B:JIMS.0000037714.56201.a3.

He, Y., Liu, B., Zhang, X., Gao, H., & Liu, X. (2012). A modeling method of task-oriented energy consumption for machining manufacturing system. Journal of Cleaner Production, 23, 167–174. doi:10.1016/j.jclepro.2011.10.033.

Herlufsen, H., Gade, S., & Zaveri, H. K. (2008). Analyzers and signal generators. Handbook of Noise and Vibration Control, 470–485. doi:10.1002/9780470209707.ch40.

Hon, K. K. B. (2005). Performance and evaluation of manufacturing systems. CIRP Annals-Manufacturing Technology, 54(2), 139–154. doi:10.1016/S0007-8506(07)60023-7.

Izaguirre, J. A. G., Lobov, A., & Lastra, J. L. M. (2011). OPC-UA and DPWS interoperability for factory floor monitoring using complex event processing. In 2011 9th IEEE international conference on industrial informatics (INDIN). Glendale, AZ: IEEE. doi:10.1109/INDIN.2011.6034874.

Kehoe, B., Patil, S., Abbeel, P., & Goldberg, K. (2015). A survey of research on cloud robotics and automation. IEEE Transactions on Automation Science and Engineering, 12(2), 1–12. doi:10.1109/TASE.2014.2376492.

Li, Y., Liu, Q., Xiong, J., & Wang, J. (2015). Research on data-sharing and intelligent CNC machining system. In 2015 IEEE international conference on mechatronics and automation (ICMA). doi:10.1109/ICMA.2015.7237557.

Lilly, J. H. (2010). Fuzzy control and identification. Hoboken: Wiley.

Lindgren, P., Pietrzak, P., & Makitaavola, H. (2013). Real-time complex event processing using concurrent reactive objects. In 2013 IEEE international conference on industrial technology (ICIT). Cape Town: IEEE. doi:10.1109/ICIT.2013.6505984.

Liu, M., Ma, J., Lin, L., Ge, M., Wang, Q., & Liu, C. (2014). Intelligent assembly system for mechanical products and key technology based on internet of things. Journal of Intelligent Manufacturing, 1–29. doi:10.1007/s10845-014-0976-6.

Mitchell, H. B. (2007). Multi-Sensor data fusion: An introduction. doi:10.1007/978-3-540-71559-7.

Monostori, L. (2014). Cyber-physical production systems: Roots, expectations and R&D challenges. Procedia CIRP, 17, 9–13. doi:10.1016/j.procir.2014.03.115.

Morgan, J., & O’Donnell, G. E. (2014a). The cyber physical implementation of cloud manufacturing monitoring systems. In 9th CIRP conference on intelligent computation in manufacturing engineering. Capri: Elsevier.

Morgan, J., & O’Donnell, G. E. (2014b). A service oriented reconfigurable process monitoring system-enabling cyber physical systems. Journal of Machine Engineering, 14(2), 116–129.

Morgan, J., & O’Donnell, G. E. (2015). Enabling a ubiquitous and cloud manufacturing foundation with field-level service-oriented architecture. International Journal of Computer Integrated Manufacturing. doi:10.1080/0951192X.2015.1032355.

Morgan, J., O’Driscoll, E., & O’Donnell, G. E. (2013). Data interoperability for reconfigurable manufacturing process monitoring systems. Journal of Machine Engineering, 13(1), 64–79.

Neto, L., Reis, J., Guimaraes, D., & Goncalves, G. (2015). Sensor cloud: Smart component framework for reconfigurable diagnostics in intelligent manufacturing environments. In 2015 IEEE 13th international conference on industrial informatics (INDIN). doi:10.1109/INDIN.2015.7281991.

Paarmann, L. (2003). Design and analysis of analog filters: A signal processing perspective. Dordrecht: Kluwer.

Pietrzak, P., Lindgren, P., & Makitaavola, H. (2012). Towards a lightweight CEP engine for embedded systems. In IECON 2012—38th annual conference on IEEE industrial electronics society. doi:10.1109/IECON.2012.6389134.

Pinto, J., Marco Mendes, J., Leitão, P., Colombo, A. W., Bepperling, A., & Restivo, F. (2009). Decision support system for Petri nets enabled automation components. In IEEE international conference on industrial informatics (INDIN) (pp. 289–294). doi:10.1109/INDIN.2009.5195819.

Pople, S. (1999). Electromagnetic induction. In S. Pople (Ed.), Advanced physics through diagrams (pp. 78–79). Oxford: Oxford University Press.

Savio, D., Karnouskos, S., Wuwer, D., & Bangemann, T. (2008). Dynamically optimized production planning using cross-layer SOA. In 32nd annual IEEE international computer software and applications, 2008. COMPSAC ’08. Turku: IEEE. doi:10.1109/COMPSAC.2008.219.

Schmitt, R., Bittencourt, J. L., & Bonefeld, R. (2011). Modelling machine tools for self-optimisation of energy consumption. In Glocalized solutions for sustainability in manufacturing: Proceedings of the 18th CIRP international conference on life cycle engineering (pp. 253–257). doi:10.1007/978-3-642-19692-8_44.

Song, K., Seniuk, G. T. G., Kozinski, J. A., Zhang, W., & Gupta, M. M. (2015). An innovative fuzzy-neural decision analyzer for qualitative group decision making. International Journal of Information Technology & Decision Making, 14(03), 659–696. doi:10.1142/S0219622015500029.

Tan, L., & Jiang, J. (2013). Digital signal processing systems, basic filtering types, and digital filter realizations. In Digital signal processing: Fundamentals and applications. doi:10.1016/B978-0-12-415893-1.00006-8.

Tao, F., Zuo, Y., Da Xu, L., & Zhang, L. (2014). IoT-based intelligent perception and access of manufacturing resource toward cloud manufacturing. IEEE Transactions on Industrial Informatics. doi:10.1109/TII.2014.2306397.

Teti, R., Jemielniak, K., O’Donnell, G., & Dornfeld, D. (2010). Advanced monitoring of machining operations. CIRP Annals-Manufacturing Technology, 59, 717–739.

Trentesaux, D. (2009). Distributed control of production systems. Engineering Applications of Artificial Intelligence, 22(7), 971–978. doi:10.1016/j.engappai.2009.05.001.

Trout, C. M. (2010). Essentials of electric motors and controls. Burlington: Jones and Bartlett Publishers.

Vachtsevanos, G., Lewis, F., Roemer, M., Hess, A., & Wu, B. (2007). Intelligent fault diagnosis and prognosis for engineering systems. doi:10.1002/9780470117842.

Vijayaraghavan, A., & Dornfeld, D. (2010). Automated energy monitoring of machine tools. CIRP Annals-Manufacturing Technology, 59(1), 21–24. doi:10.1016/j.cirp.2010.03.042.

Walzer, K., Rode, J., Wünsch, D., & Groch, M. (2008). Event-driven manufacturing: Unified management of primitive and complex events for manufacturing monitoring and control. In IEEE international workshop on factory communication systems—Proceedings, WFCS (pp. 383–391). doi:10.1109/WFCS.2008.4638734.

Wang, X., Wong, T. N., & Wang, G. (2012). Service-oriented architecture for ontologies supporting multi-agent system negotiations in virtual enterprise. Journal of Intelligent Manufacturing, 23(4), 1331–1349. doi:10.1007/s10845-010-0469-1.

Zhang, W. J., & van Luttervelt, C. A. (2011). Toward a resilient manufacturing system. CIRP Annals-Manufacturing Technology, 60(1), 469–472. doi:10.1016/J.Cirp.2011.03.041.

Acknowledgments

This research was funded under the Graduate Research Education Programme in Engineering (GREP-Eng) which is a PRTLI Cycle 5 funded programme and is co-funded under the European Regional Development Fund. The author would like to thank his work colleagues at Trinity College Dublin for their assistance in collating this publication.


Cite this article

Morgan, J., O’Donnell, G.E. Cyber physical process monitoring systems. J Intell Manuf 29, 1317–1328 (2018). https://doi.org/10.1007/s10845-015-1180-z


DOI: https://doi.org/10.1007/s10845-015-1180-z