Abstract

We recently designed two calculi as stepping stones towards superposition for full higher-order logic: Boolean-free \(\lambda \)-superposition and superposition for first-order logic with interpreted Booleans. Stepping on these stones, we finally reach a sound and refutationally complete calculus for higher-order logic with polymorphism, extensionality, Hilbert choice, and Henkin semantics. In addition to the complexity of combining the calculus’s two predecessors, new challenges arise from the interplay between \(\lambda \)-terms and Booleans. Our implementation in Zipperposition outperforms all other higher-order theorem provers and is on a par with an earlier, pragmatic prototype of Booleans in Zipperposition.


1 Introduction

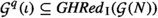

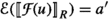

Superposition is a leading calculus for first-order logic with equality. We have been wondering for some years whether it would be possible to gracefully generalize it to extensional higher-order logic and use it as the basis of a strong higher-order automatic theorem prover. Towards this goal, we have, together with colleagues, designed superposition-like calculi for three intermediate logics between first-order and higher-order logic. Now we are finally ready to assemble a superposition calculus for full higher-order logic. The filiation of our new calculus from Bachmair and Ganzinger’s standard first-order superposition is as follows:

Our goal was to devise an efficient calculus for higher-order logic. To achieve it, we pursued two objectives. First, the calculus should be refutationally complete. Second, the calculus should coincide as much as possible with its predecessors \(\hbox {o}\)Sup and \(\lambda \)Sup on the respective fragments of higher-order logic (which in turn essentially coincide with Sup on first-order logic). Achieving these objectives is the main contribution of this article. We made an effort to keep the calculus simple, but often the refutational completeness proof forced our hand to add conditions or special cases.

Like \(\hbox {o}\)Sup, our calculus \(\hbox {o}\lambda \)Sup operates on clauses that can contain Boolean subterms, and it interleaves clausification with other inferences. Like \(\lambda \)Sup, \(\hbox {o}\lambda \)Sup eagerly \(\beta \eta \)-normalizes terms, employs full higher-order unification, and relies on a fluid subterm superposition rule (FluidSup) to simulate superposition inferences below applied variables—i.e., terms of the form \(y\>t_1\dots t_n\) for \(n \ge 1\).

In addition to the issues discussed previously and the complexity of combining the two approaches, we encountered the following main challenges.

First, because \(\hbox {o}\)Sup contains several superposition-like inference rules for Boolean subterms, our completeness proof requires dedicated fluid Boolean subterm hoisting rules (FluidBoolHoist, FluidLoobHoist), which simulate Boolean inferences below applied variables, in addition to FluidSup, which simulates superposition inferences.

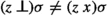

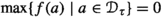

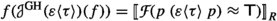

Second, due to restrictions related to the term order that parameterizes superposition, it is difficult to handle variables bound by unclausified quantifiers if these variables occur applied or in arguments of applied variables. We solve the issue by replacing such quantified terms \(\forall y.\>t\) or \(\exists y.\>t\) by equivalent terms \((\lambda y.\>t) \approx (\lambda y.\>\pmb {\top })\) or \((\lambda y.\>t) \not \approx (\lambda y.\>\pmb {\bot })\) in a preprocessing step. We leave all other quantified terms intact so that we can process them more efficiently.

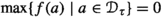

Third, like other higher-order calculi that support Booleans, our calculus must include some form of primitive substitution [1, 11, 21, 34]. For example, given the clauses \({\textsf {a} } \not \approx {\textsf {b} }\) and  , it is crucial to find the substitution  , which does not arise through unification. Primitive substitution accomplishes this by blindly substituting logical connectives and quantifiers; here it would apply  to the second clause, where \(y\) and \(y'\) are fresh variables. In the context of superposition, this is problematic because the instantiated clause is subsumed by the original clause and could be discarded. Our solution is to immediately clausify the introduced logical symbol, yielding a clause that is no longer subsumed.

The core of this article is the proof of refutational completeness. To keep it manageable, we structure it in three levels. The first level proves completeness of a calculus in first-order logic with Booleans, essentially relying on the completeness of \(\hbox {o}\)Sup. The second level lifts this result to a ground version of our calculus by transforming the first-order model constructed in the first level into a higher-order model, closely following \(\lambda \)Sup. The third level lifts this result further to the nonground level by showing that each ground inference on the second level corresponds to an inference of our calculus or is redundant. This establishes refutational completeness of our calculus.

We implemented our calculus in the Zipperposition prover and evaluated it on TPTP and Sledgehammer benchmarks. The new Zipperposition outperforms all other higher-order provers and is on a par with an ad hoc implementation of Booleans in the same prover by Vukmirović and Nummelin [39].

This article is structured as follows. Section 2 introduces syntax and semantics of higher-order logic and other preliminaries of this article. Section 3 presents our calculus, proves it sound, and discusses its redundancy criterion. Section 4 contains the detailed proof of refutational completeness. Section 5 discusses our implementation in Zipperposition. Section 6 presents our evaluation results.

An earlier version of this article is contained in Bentkamp’s PhD thesis [7], and parts of it were presented at CADE-28 [9]. This article extends the conference paper with more explanations and detailed soundness and completeness proofs. We phrase the redundancy criterion in a slightly more general way to make it more convenient to use in our completeness proof. However, we are not aware of any concrete examples where this additional generality would be useful in practice. We also present a concrete term order fulfilling our abstract requirements as well as additional evaluation results.

2 Logic

Our logic is higher-order logic (simple type theory) with rank-1 polymorphism, Hilbert choice, Henkin semantics, and functional and Boolean extensionality. It closely resembles Gordon and Melham’s HOL [20] and the TPTP TH1 standard [25].

Although standard semantics is commonly considered the foundation of the HOL systems, Henkin semantics is also compatible with the notion of provability employed by the HOL systems. By admitting nonstandard models, Henkin semantics is not subject to Gödel’s first incompleteness theorem, allowing us to claim refutational completeness of our calculus.

On top of the standard higher-order terms, we install a clausal structure that allows us to formulate calculus rules in the style of first-order superposition. It does not restrict the flexibility of the logic because an arbitrary term t of Boolean type can be written as the clause \(t \approx \pmb {\top }\).

2.1 Syntax

We use the notation \(\bar{a}_n\) or \(\bar{a}\) to stand for the tuple \((a_1,\dots ,a_n)\) or product \(a_1 \times \dots \times a_n\), where \(n \ge 0\). We abuse notation by applying an operation on a tuple when it must be applied elementwise; thus, \(f(\bar{a}_n)\) can stand for \((f(a_1),\dots ,f(a_n))\), \(f(a_1) \times \dots \times f(a_n)\), or \(f(a_1, \dots , a_n)\), depending on the operation f.

As a basis for our logic’s types, we fix an infinite set \(\mathscr {V}_{{\textsf {ty} }}\) of type variables. A set \(\Sigma _{{\textsf {ty} }}\) of type constructors with associated arities is a type signature if it contains at least one nullary Boolean type constructor \(\hbox {o}\) and a binary function type constructor \(\rightarrow \). A type, usually denoted by \(\tau \) or \(\upsilon \), is inductively defined to either be a type variable \(\alpha \in \mathscr {V}_{{\textsf {ty} }}\) or have the form \(\kappa (\bar{\tau }_n)\) for an n-ary type constructor \(\kappa \in \Sigma _{{\textsf {ty} }}\) and types \(\bar{\tau }_n\). We write \(\kappa \) for \(\kappa ()\) and \(\tau \rightarrow \upsilon \) for \({\rightarrow }(\tau ,\upsilon )\). A type declaration is an expression \(\Pi \bar{\alpha }_m.\>\tau \) (or simply \(\tau \) if \(m = 0\)), where all type variables occurring in \(\tau \) belong to \(\bar{\alpha }_m\).

To define our logic’s terms, we fix a type signature \(\Sigma _{{\textsf {ty} }}\) and a set \(\mathscr {V}\) of term variables with associated types, written as \({\textit{x}}:\tau \) or \({\textit{x}}\). We require that there are infinitely many variables for each type.

A term signature is a set \(\Sigma \) of (function) symbols, usually denoted by \({\textsf {a} }\), \({\textsf {b} }\), \({\textsf {c} }\), \({\textsf {f} }\), \({\textsf {g} }\), \({\textsf {h} }\), each associated with a type declaration, written as \({\textsf {f} } : \Pi \bar{\alpha }_m.\>\tau \). We require the presence of the logical symbols \(\pmb {\top }, \pmb {\bot } : \hbox {o}\); \(\pmb {\lnot } : \hbox {o}\rightarrow \hbox {o}\); \(\pmb {\wedge }, \pmb {\vee }, \pmb {\rightarrow } : \hbox {o}\rightarrow \hbox {o}\rightarrow \hbox {o}\); \(\pmb {\forall }, \pmb {\exists } : \Pi \alpha .\>(\alpha \rightarrow \hbox {o})\rightarrow \hbox {o}\); and \(\pmb {\approx }, \pmb {\not \approx } : \Pi \alpha .\>\alpha \rightarrow \alpha \rightarrow \hbox {o}\). Moreover, we require the presence of the Hilbert choice operator \({\varepsilon } : \Pi \alpha .\>(\alpha \rightarrow \hbox {o})\rightarrow \alpha \). The logical symbols are printed in bold to distinguish them from the notation used for clauses below. Although \({\varepsilon }\) is interpreted in our semantics, we do not consider it to be a logical symbol. The reason is that our calculus will enforce the semantics of \({\varepsilon }\) by an axiom, whereas the semantics of the logical symbols will be enforced by inference rules.

In the following, we also fix a term signature \(\Sigma \). A type signature and a term signature form a signature.

We will define terms in three layers of abstraction: raw \(\lambda \)-terms, \(\lambda \)-terms, and terms; where \(\lambda \)-terms will be \(\alpha \)-equivalence classes of raw \(\lambda \)-terms and terms will be \(\beta \eta \)-equivalence classes of \(\lambda \)-terms.

We write \(t :\tau \) for a raw \(\lambda \)-term t of type \(\tau \). The set of raw \(\lambda \)-terms and their associated types is defined inductively as follows. Every \(x :\tau \in \mathscr {V}\) is a raw \(\lambda \)-term of type \(\tau \). If \({\textsf {f} } : \Pi \bar{\alpha }_m.\>\tau \in \Sigma \) and \(\bar{\upsilon }_m\) is a tuple of types, called type arguments, then \({\textsf {f} }{\langle \bar{\upsilon }_m\rangle }\) (or simply \({\textsf {f} }\) if \(m = 0\)) is a raw \(\lambda \)-term of type \(\tau \{\bar{\alpha }_m \mapsto \bar{\upsilon }_m\}\). If \(x:\tau \) and \(t:\upsilon \), then the \(\lambda \)-expression \(\lambda x.\> t\) is a raw \(\lambda \)-term of type \(\tau \rightarrow \upsilon \). If \(s:\tau \rightarrow \upsilon \) and \(t:\tau \), then the application \(s\>t\) is a raw \(\lambda \)-term of type \(\upsilon \). Using the spine notation [17], raw \(\lambda \)-terms can be decomposed in a unique way as a nonapplication head t applied to zero or more arguments: \(t \> s_1\dots s_n\) or \(t \> \bar{s}_n\) (abusing notation). For the symbols \(\pmb {\approx }\) and \(\pmb {\not \approx }\), we will typically use infix notation and omit the type argument.
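The typing rule for symbols with type arguments amounts to instantiating the bound type variables of a declaration. The following Python fragment is a minimal sketch of that step, not part of the calculus or of Zipperposition: the names `TVar`, `TCon`, `fun`, and `instantiate` are hypothetical, and the declaration \(\Pi \alpha .\>\alpha \rightarrow \alpha \rightarrow \hbox {o}\) used in the usage lines is only an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TVar:
    """A type variable such as alpha (hypothetical encoding)."""
    name: str


@dataclass(frozen=True)
class TCon:
    """An n-ary type constructor applied to n argument types."""
    name: str
    args: tuple = ()


def fun(a, b):
    """The function type a -> b, encoded as the binary constructor '->'."""
    return TCon("->", (a, b))


def instantiate(type_vars, body, type_args):
    """Compute body{type_vars -> type_args} for a declaration Pi type_vars. body."""
    if len(type_vars) != len(type_args):
        raise ValueError("wrong number of type arguments")
    mapping = dict(zip(type_vars, type_args))

    def go(t):
        if isinstance(t, TVar):
            return mapping.get(t.name, t)
        return TCon(t.name, tuple(go(a) for a in t.args))

    return go(body)


# Usage: a symbol declared as Pi alpha. alpha -> alpha -> o, applied to the
# type argument iota, has type iota -> iota -> o.
o = TCon("o")
iota = TCon("iota")
decl_body = fun(TVar("alpha"), fun(TVar("alpha"), o))
instance = instantiate(["alpha"], decl_body, [iota])
```

Keeping type variables and constructors as separate classes mirrors the inductive definition of types given above.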

A raw \(\lambda \)-term s is a subterm of a raw \(\lambda \)-term t, written \(t = t[s]\), if \(t = s\), if \(t = (\lambda x.\>u[s])\), if \(t = (u[s])\>v\), or if \(t = u\>(v[s])\) for some raw \(\lambda \)-terms u and v. A proper subterm of a raw \(\lambda \)-term t is any subterm of t that is distinct from t itself. A variable occurrence is free in a raw \(\lambda \)-term if it is not bound by a \(\lambda \)-expression. A raw \(\lambda \)-term is ground if it is built without using type variables and contains no free term variables.

The \(\alpha \)-renaming rule is defined as \((\lambda x.\>t) \longrightarrow _{\alpha } (\lambda y.\>t\{x \mapsto y\})\), where y does not occur free in t and is not captured by a \(\lambda \)-binder in t. Two terms are \(\alpha \)-equivalent if they can be made equal by repeatedly \(\alpha \)-renaming their subterms. Raw \(\lambda \)-terms form equivalence classes modulo \(\alpha \)-equivalence, called \(\lambda \)-terms. We lift the above notions on raw \(\lambda \)-terms to \(\lambda \)-terms.

A substitution \(\rho \) is a function from type variables to types and from term variables to \(\lambda \)-terms such that it maps all but finitely many variables to themselves. We require that it is type correct, i.e., for each \(x :\tau \in \mathscr {V}\), \(x\rho \) is of type \(\tau \rho \). The letters \(\theta ,\rho ,\sigma \) are reserved for substitutions. Substitutions \(\alpha \)-rename \(\lambda \)-terms to avoid capture; for example, \((\lambda x.\> y)\{y \mapsto x\} = (\lambda x'\!.\> x)\). The composition \(\rho \sigma \) applies \(\rho \) first: \(t\rho \sigma = (t\rho )\sigma \). The notation \(\sigma [\bar{x}_n \mapsto \bar{s}_n]\) denotes the substitution that replaces each \(x_i\) by \(s_i\) and that otherwise coincides with \(\sigma \).

The \(\beta \)- and \(\eta \)-reduction rules are specified on \(\lambda \)-terms as \((\lambda x.\>t)\>u \longrightarrow _{\beta } t\{x \mapsto u\}\) and \((\lambda x.\>t\>x) \longrightarrow _{\eta } t\). For \(\beta \), bound variables in t are implicitly renamed to avoid capture; for \(\eta \), the variable x may not occur free in t. Two terms are \(\beta \eta \)-equivalent if they can be made equal by repeatedly \(\beta \)- and \(\eta \)-reducing their subterms. The \(\lambda \)-terms form equivalence classes modulo \(\beta \eta \)-equivalence, called \(\beta \eta \)-equivalence classes or simply terms.
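To make capture-avoiding substitution and \(\beta \eta \)-reduction concrete, here is a minimal Python sketch on untyped raw \(\lambda \)-terms. It is an illustration under simplifying assumptions, not the representation used in any prover: types and type arguments are omitted, the class and function names (`Var`, `Sym`, `Lam`, `App`, `subst`, `normalize`) are hypothetical, results are compared syntactically rather than modulo \(\alpha \)-equivalence, and `normalize` is only guaranteed to terminate on terms that could be simply typed.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Sym:
    name: str


@dataclass(frozen=True)
class Lam:
    var: str
    body: object


@dataclass(frozen=True)
class App:
    fun: object
    arg: object


def free_vars(t):
    """Names of the variables occurring free in t."""
    if isinstance(t, Var):
        return {t.name}
    if isinstance(t, Sym):
        return set()
    if isinstance(t, Lam):
        return free_vars(t.body) - {t.var}
    return free_vars(t.fun) | free_vars(t.arg)


def fresh(base, avoid):
    """First name of the form base1, base2, ... that is not in avoid."""
    return next(f"{base}{i}" for i in count(1) if f"{base}{i}" not in avoid)


def subst(t, x, u):
    """Capture-avoiding substitution t{x -> u}."""
    if isinstance(t, Var):
        return u if t.name == x else t
    if isinstance(t, Sym):
        return t
    if isinstance(t, App):
        return App(subst(t.fun, x, u), subst(t.arg, x, u))
    if t.var == x:  # x is shadowed by the binder, nothing to replace
        return t
    if t.var in free_vars(u):  # alpha-rename the binder to avoid capture
        new = fresh(t.var, free_vars(u) | free_vars(t.body) | {x})
        t = Lam(new, subst(t.body, t.var, Var(new)))
    return Lam(t.var, subst(t.body, x, u))


def normalize(t):
    """Beta-eta-normal form, computed bottom-up."""
    if isinstance(t, (Var, Sym)):
        return t
    if isinstance(t, Lam):
        body = normalize(t.body)
        # eta: (lambda x. s x) -> s, provided x is not free in s
        if (isinstance(body, App) and body.arg == Var(t.var)
                and t.var not in free_vars(body.fun)):
            return body.fun
        return Lam(t.var, body)
    fun, arg = normalize(t.fun), normalize(t.arg)
    if isinstance(fun, Lam):  # beta: (lambda x. s) u -> s{x -> u}
        return normalize(subst(fun.body, fun.var, arg))
    return App(fun, arg)


# Usage: the renaming example (lambda x. y){y -> x} from the text.
renamed = subst(Lam("x", Var("y")), "y", Var("x"))
```

With this naming scheme, `renamed` is `Lam("x1", Var("x"))`, which corresponds to \(\lambda x'\!.\> x\).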

We use the following nonstandard normal form: The \(\beta \eta {\textsf {Q} }_{\eta }\)-normal form \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) of a \(\lambda \)-term t is obtained by applying \(\longrightarrow _{\beta }\) and \(\longrightarrow _{\eta }\) exhaustively on all subterms and finally applying the following rewrite rule \({\textsf {Q} }_{\eta }\) exhaustively on all subterms:

\[{\textsf {Q} }{\langle \tau \rangle }\>t \;\longrightarrow _{{\textsf {Q} }_{\eta }}\; {\textsf {Q} }{\langle \tau \rangle }\>(\lambda x.\>t\>x)\]

where t is not a \(\lambda \)-expression. Here and elsewhere, \({\textsf {Q} }\) stands for either \(\pmb {\forall }\) or \(\pmb {\exists }\).

We lift all of the notions defined on \(\lambda \)-terms to terms:

Convention 1

When defining operations that need to analyze the structure of terms, we use the \(\beta \eta {\textsf {Q} }_{\eta }\)-normal representative as the default representative of a \(\beta \eta \)-equivalence class. Nevertheless, we will sometimes write \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) for clarity.

Many authors prefer the \(\eta \)-long \(\beta \)-normal form [22, 24, 29], but in a polymorphic setting it has the drawback that instantiating a type variable with a functional type can lead to \(\eta \)-expansion. Below quantifiers, however, we prefer \(\eta \)-long form, which is enforced by the \({\textsf {Q} }_{\eta }\)-rule. The reason is that our completeness theorem requires arguments of quantifiers to be \(\lambda \)-expressions.

A literal is an equation \(s \approx t\) or a disequation \(s \not \approx t\) of terms s and t. In both cases, the order of s and t is not fixed. We write \(s \mathrel {\dot{\approx }}t\) for a literal that can be either an equation or a disequation. A clause \(L_1 \vee \dots \vee L_n\) is a finite multiset of literals \(L_{\!j}\). The empty clause is written as \(\bot \).
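The two structural points of this definition, that the sides of a literal are unordered and that a clause is a multiset, can be sketched in a few lines of Python. This is an illustrative encoding only: terms are plain strings, and the names `Literal`, `lit`, and `clause` are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Literal:
    sides: tuple    # the two terms, stored in sorted order
    positive: bool  # True for an equation, False for a disequation


def lit(s, t, positive=True):
    """Build a literal; sorting the sides makes s = t and t = s coincide."""
    return Literal(tuple(sorted((s, t))), positive)


def clause(*literals):
    """A clause is a finite multiset of literals; Counter keeps multiplicities."""
    return Counter(literals)


# Usage: literal and clause equality ignore order but respect multiplicity.
eq_ab = lit("a", "b")
neq_bc = lit("b", "c", positive=False)
same_clause = clause(eq_ab, neq_bc) == clause(neq_bc, lit("b", "a"))
empty = clause()  # the empty clause
```

A sorted pair is used instead of a set so that a trivial literal such as \(a \approx a\) still has two sides.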

Our calculus does not allow nonequational literals. These must be encoded as \(t \approx \pmb {\top }\) or \(t \approx \pmb {\bot }\). We even considered excluding negative literals by encoding them as \((s \mathrel {\pmb {\approx }} t) \approx \pmb {\bot }\), following \(\mathrel {\leftarrow \rightarrow }\)Sup [19]. However, this approach would make the conclusion of the equality factoring rule (EFact) too large for our purposes. Regardless, the simplification machinery will allow us to reduce negative literals of the form \(t \not \approx \pmb {\bot }\) and \(t \not \approx \pmb {\top }\) to \(t \approx \pmb {\top }\) and \(t \approx \pmb {\bot }\), respectively, thereby eliminating redundant representations of literals.

A complete set of unifiers on a set X of variables for two terms s and t is a set U of unifiers of s and t such that for every unifier \(\theta \) of s and t there exists a member \(\sigma \in U\) and a substitution \(\rho \) such that \(x\sigma \rho = x\theta \) for all \(x \in X\). We let \({{\,\textrm{CSU}\,}}_X(s,t)\) denote an arbitrary (preferably, minimal) complete set of unifiers on X for s and t. We assume that all \(\sigma \in {{\,\textrm{CSU}\,}}_X(s,t)\) are idempotent on X, i.e., \(x\sigma \sigma = x\sigma \) for all \(x \in X\). The set X will consist of the free variables of the clauses in which s and t occur and will be left implicit. To compute \({{\,\textrm{CSU}\,}}(s,t)\), Huet-style preunification [21] is not sufficient, and we must resort to full unification procedures [23, 38].

2.2 Semantics

A type interpretation \(\mathscr {I}_{{\textsf {ty} }} = (\mathscr {U}, \mathscr {J}_{{\textsf {ty} }})\) is defined as follows. The universe \(\mathscr {U}\) is a collection of nonempty sets, called domains. We require that \(\{0,1\} \in \mathscr {U}\). The function \(\mathscr {J}_{{\textsf {ty} }}\) associates a function \(\mathscr {J}_{{\textsf {ty} }}(\kappa ) : \mathscr {U}^n \rightarrow \mathscr {U}\) with each n-ary type constructor \(\kappa \), such that \(\mathscr {J}_{{\textsf {ty} }}(\hbox {o}) = \{0,1\}\) and for all domains \(\mathscr {D}_1, \mathscr {D}_2 \in \mathscr {U}\), the set \(\mathscr {J}_{{\textsf {ty} }}({\rightarrow })(\mathscr {D}_1, \mathscr {D}_2)\) is a subset of the function space from \(\mathscr {D}_1\) to \(\mathscr {D}_2\). The semantics is standard if \(\mathscr {J}_{{\textsf {ty} }}({\rightarrow })(\mathscr {D}_1, \mathscr {D}_2)\) is the entire function space for all \(\mathscr {D}_1, \mathscr {D}_2\). A type valuation \(\xi \) is a function that maps every type variable to a domain. The denotation of a type for a type interpretation \(\mathscr {I}_{{\textsf {ty} }}\) and a type valuation \(\xi \) is recursively defined by \([\![\alpha ]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi } = \xi (\alpha )\) and \([\![\kappa (\bar{\tau })]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi } = \mathscr {J}_{{\textsf {ty} }}(\kappa )([\![\bar{\tau }]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi })\).

A type valuation \(\xi \) can be extended to be a valuation by additionally assigning an element \(\xi (x) \in [\![\tau ]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi }\) to each variable \(x :\tau \). An interpretation function \(\mathscr {J}\) for a type interpretation \(\mathscr {I}_{{\textsf {ty} }}\) associates with each symbol \({\textsf {f} } : \Pi \bar{\alpha }_m.\>\tau \) and domain tuple \(\bar{\mathscr {D}}_m \in \mathscr {U}^m\) a value \(\mathscr {J}({\textsf {f} }, \bar{\mathscr {D}}_m) \in [\![\tau ]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi }\), where \(\xi \) is a type valuation that maps each \(\alpha _i\) to \(\mathscr {D}_i\). We require that

-

(I1)

-

(I2)

-

(I3)

-

(I4)

-

(I5)

-

(I6)

-

(I7)

-

(I8)

-

(I9)

-

(I10)

-

(I11)

for all \(a,b\in \{0,1\}\),  ,  , and  .

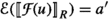

The comprehension principle states that every function designated by a \(\lambda \)-expression is contained in the corresponding domain. Loosely following Fitting [18, Sect. 2.4], we initially allow \(\lambda \)-expressions to designate arbitrary elements of the domain, to be able to define the denotation of a term. We impose restrictions afterwards using the notion of a proper interpretation, enforcing comprehension.

A \(\lambda \)-designation function \(\mathscr {L}\) for a type interpretation \(\mathscr {I}_{{\textsf {ty} }}\) is a function that maps a valuation \(\xi \) and a \(\lambda \)-expression of type \(\tau \) to an element of \([\![\tau ]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi }\). A type interpretation, an interpretation function, and a \(\lambda \)-designation function form an interpretation \(\mathscr {I} = (\mathscr {I}_{{\textsf {ty} }}, \mathscr {J}, \mathscr {L})\).

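For an interpretation \(\mathscr {I}\) and a valuation \(\xi \), the denotation of a term is defined as \([\![x]\!]_{\mathscr {I}}^{\xi } = \xi (x)\), \([\![{\textsf {f} }{\langle \bar{\tau }\rangle }]\!]_{\mathscr {I}}^{\xi } = \mathscr {J}({\textsf {f} }, [\![\bar{\tau }]\!]_{\mathscr {I}_{{\textsf {ty} }}}^{\xi })\), \([\![s\>t]\!]_{\mathscr {I}}^{\xi } = [\![s]\!]_{\mathscr {I}}^{\xi }([\![t]\!]_{\mathscr {I}}^{\xi })\), and \([\![\lambda x.\>t]\!]_{\mathscr {I}}^{\xi } = \mathscr {L}(\xi , \lambda x.\>t)\). For ground terms t, the denotation does not depend on the choice of the valuation \(\xi \), which is why we sometimes write \([\![t]\!]_{\mathscr {I}}\) for \([\![t]\!]_{\mathscr {I}}^{\xi }\).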

An interpretation \(\mathscr {I}\) is proper if \([\![\lambda x.\>t]\!]_{\mathscr {I}}^{\xi }(a) = [\![t]\!]_{\mathscr {I}}^{\xi [x\mapsto a]}\) for all \(\lambda \)-expressions \(\lambda x.\>t\) and all valuations \(\xi \). If a type interpretation \(\mathscr {I}_{{\textsf {ty} }}\) and an interpretation function \(\mathscr {J}\) can be extended by a \(\lambda \)-designation function \(\mathscr {L}\) to a proper interpretation \((\mathscr {I}_{{\textsf {ty} }}, \mathscr {J}, \mathscr {L})\), then this \(\mathscr {L}\) is unique [18, Proposition 2.18]. Given an interpretation \(\mathscr {I}\) and a valuation \(\xi \), an equation \(s\approx t\) is true if \([\![s]\!]_{\mathscr {I}}^{\xi }\) and \([\![t]\!]_{\mathscr {I}}^{\xi }\) are equal and it is false otherwise. A disequation \(s\not \approx t\) is true if \(s \approx t\) is false. A clause is true if at least one of its literals is true. A clause set is true if all its clauses are true. A proper interpretation \(\mathscr {I}\) is a model of a clause set N, written \(\mathscr {I} \models N\), if N is true in \(\mathscr {I}\) for all valuations \(\xi \).

2.3 Skolem-Aware Interpretations

Some of our calculus rules introduce Skolem symbols—i.e., symbols representing objects mandated by existential quantification. We define a Skolem-extended signature that contains all Skolem symbols that could possibly be needed by the calculus rules.

Definition 2

Given a term signature \(\Sigma \), let the Skolem-extended term signature \(\Sigma _{\textsf {sk} }\) be the smallest signature that contains all symbols from \(\Sigma \) and a symbol \({\textsf {sk} }_{\Pi \bar{\alpha }.\>\forall \bar{x}.\>\exists z.\>t\>z} : \Pi \bar{\alpha }.\>\bar{\tau }\rightarrow \upsilon \) for all types \(\upsilon \), variables \(z:\upsilon \), and terms \(t:\upsilon \rightarrow \hbox {o}\) over the signature \((\Sigma _{{\textsf {ty} }}, \Sigma _{\textsf {sk} })\), where \(\bar{\alpha }\) are the free type variables occurring in t and \(\bar{x}:\bar{\tau }\) are the free term variables occurring in t in order of first occurrence.

Interpretations as defined above can interpret the Skolem symbols arbitrarily. For example, an interpretation \(\mathscr {I}\) does not necessarily interpret the symbol  as  . Therefore, an inference producing  from  is unsound w.r.t. \(\models \). As a remedy, we define Skolem-aware interpretations as follows:

Definition 3

We call a proper interpretation over a Skolem-extended signature Skolem-aware if for all Skolem symbols  , where \(\bar{\alpha }\) are the free type variables and \(\bar{x}\) are the free term variables occurring in t in order of first occurrence. An interpretation is a Skolem-aware model of a clause set N, written \(\mathscr {I} \mathrel {|\!\approx } N\), if \(\mathscr {I}\) is Skolem-aware and \(\mathscr {I} \models N\).

3 The Calculus

The inference rules of our calculus \(\hbox {o}\lambda \)Sup have many complex side conditions that may appear arbitrary at first sight. They are, however, directly motivated by our completeness proof and are designed to restrict inferences as much as possible without compromising refutational completeness.

The \(\hbox {o}\lambda \)Sup calculus closely resembles \(\lambda \)Sup, augmented with rules for Boolean reasoning that are inspired by \(\hbox {o}\)Sup. As in \(\lambda \)Sup, superposition-like inferences are restricted to certain first-order-like subterms, the green subterms. Our completeness proof allows for this restriction because it is based on a reduction to first-order logic.

Definition 4

(Green subterms and positions) A green position of a \(\lambda \)-term is a finite sequence of natural numbers defined inductively as follows. For any \(\lambda \)-term t, the empty sequence \(\varepsilon \) is a green position of t. For all symbols \({\textsf {f} } \in \Sigma \setminus \{\pmb {\forall }, \pmb {\exists }\}\), types \(\bar{\tau }\), and \(\lambda \)-terms \(\bar{u}\), if p is a green position of \(u_i\), then i.p is a green position of \({\textsf {f} }{\langle \bar{\tau }\rangle }\>\bar{u}\).

The green subterm of a \(\lambda \)-term at a given green position is defined inductively as follows. For any \(\lambda \)-term t, t itself is the green subterm of t at green position \(\varepsilon \). For all symbols \({\textsf {f} } \in \Sigma \setminus \{\pmb {\forall }, \pmb {\exists }\}\), types \(\bar{\tau }\), and \(\lambda \)-terms \(\bar{u}\), if t is the green subterm of \(u_i\) at some green position p for some i, then t is the green subterm of \({\textsf {f} }{\langle \bar{\tau }\rangle }\>\bar{u}\) at green position i.p.

For positions in clauses, natural numbers are not appropriate because clauses and literals are unordered. A green position in a clause C is a tuple L.s.p where \(L = s \mathrel {\dot{\approx }}t\) is a literal in C and p is a green position in s. The green subterm of C at green position L.s.p is the green subterm of s at green position p. A green position is top level if it is of the form \(L.s.\varepsilon \).

We write \(s|_p\) to denote the green subterm at position p in s. A position p is at or below a position q if q is a prefix of p. A position p is below a position q if q is a proper prefix of p.

For example, the green subterms of  are the term itself,  , and \(\lambda x.\>{\textsf {h} }\>{\textsf {b} }\).
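The inductive definition of green positions translates directly into a recursive enumeration. The Python sketch below is a hedged illustration: terms are encoded as tuples `("sym", name, args)`, `("var", name, args)`, or `("lam", x, body)`, which is a hypothetical encoding that ignores types, and the `blocked` parameter, which stops the descent below selected head symbols such as quantifiers, is an assumption of this sketch. The sample term is likewise made up for illustration.

```python
def green_subterms(t, blocked=("forall", "exists")):
    """Yield (position, subterm) pairs for all green subterms of t.

    Positions are tuples of 1-based argument indices; () is the empty position.
    The descent continues only below symbol heads that are not in `blocked`,
    so arguments of variables and bodies of lambda-expressions are never entered.
    """
    yield (), t
    if t[0] == "sym" and t[1] not in blocked:
        for i, arg in enumerate(t[2], start=1):
            for p, s in green_subterms(arg, blocked):
                yield (i,) + p, s


# Usage on the hypothetical term  f (g a) (y b) (lambda x. h b).
a = ("sym", "a", [])
b = ("sym", "b", [])
term = ("sym", "f", [
    ("sym", "g", [a]),
    ("var", "y", [b]),
    ("lam", "x", ("sym", "h", [b])),
])
positions = [p for p, _ in green_subterms(term)]
```

For this term, the enumeration reaches `g a`, `a`, `y b`, and the whole \(\lambda \)-expression, but neither the `b` below the applied variable `y` nor anything inside the \(\lambda \)-body.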

Definition 5

(Green contexts) We write  to denote a \(\lambda \)-term s with the green subterm u at position p and call  a green context; we omit the subscript p if there are no ambiguities.

The notions of green positions, subterms, and contexts are lifted to \(\beta \eta \)-equivalence classes via the \(\beta \eta {\textsf {Q} }_{\eta }\)-normal representative.

3.1 Preprocessing

Our completeness theorem requires that quantified variables do not appear in certain higher-order contexts. Quantified variables in these higher-order contexts are problematic because they have no clear counterpart in first-order logic and our completeness proof is based on a reduction to first-order logic. We use preprocessing to eliminate such problematic occurrences of quantifiers.

Definition 6

The rewrite rules \(\forall _{\approx }\) and \(\exists _{\approx }\), which we collectively denote by \({\textsf {Q} }_{\approx }\), are defined on \(\lambda \)-terms as

where the rewritten occurrence of \({\textsf {Q} }{\langle \tau \rangle }\) is unapplied, has an argument that is not a \(\lambda \)-expression, or has an argument of the form \(\lambda x.\>v\) such that x occurs free in a nongreen position of v.

If either of these rewrite rules can be applied to a given term, the term is \({\textsf {Q} }_{\approx }\)-reducible; otherwise, it is \({\textsf {Q} }_{\approx }\)-normal. We lift this notion to \(\beta \eta \)-equivalence classes via the \(\beta \eta {\textsf {Q} }_{\eta }\)-normal representative. A clause or clause set is \({\textsf {Q} }_{\approx }\)-normal if all contained terms are \({\textsf {Q} }_{\approx }\)-normal.

For example, the term  is \({\textsf {Q} }_{\approx }\)-normal. A term may be \({\textsf {Q} }_{\approx }\)-reducible because a quantifier appears unapplied (e.g.,  ); a quantified variable occurs applied (e.g.,  ); a quantified variable occurs inside a nested \(\lambda \)-expression (e.g.,  ); or a quantified variable occurs in the argument of a variable, either a free variable (e.g.,  ) or a bound variable (e.g.,  ).

We can also characterize \({\textsf {Q} }_{\approx }\)-normality as follows:

Lemma 7

Let t be a term with spine notation \(t = s\>\bar{u}_n\). Then t is \({\textsf {Q} }_{\approx }\)-normal if and only if \(\bar{u}_n\) are \({\textsf {Q} }_{\approx }\)-normal and

-

(i)

s is of the form \({\textsf {Q} }{\langle \tau \rangle }\), \(n=1\), and \(u_1\) is of the form \(\lambda y.\>u'\) such that y occurs free only in green positions of \(u'\); or

-

(ii)

s is a \(\lambda \)-expression whose body is \({\textsf {Q} }_{\approx }\)-normal; or

-

(iii)

s is neither of the form \({\textsf {Q} }{\langle \tau \rangle }\) nor a \(\lambda \)-expression.

Proof

This follows directly from Definition 6. \(\square \)
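The characterization in Lemma 7 translates almost literally into a recursive check. The following Python sketch is an illustration only: the term classes are a hypothetical representation, type arguments are ignored, the input is taken as a raw \(\lambda \)-term (no \(\beta \eta \)-normalization is performed), and we assume that green positions pass only through arguments of non-quantifier symbols.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Sym:
    name: str

@dataclass(frozen=True)
class Lam:
    var: str
    body: object

@dataclass(frozen=True)
class App:
    head: object   # a Var, Sym, or Lam
    args: tuple    # nonempty tuple of arguments

QUANTIFIERS = {"forall", "exists"}  # hypothetical names for the quantifiers

def spine(t):
    """Return (head, arguments) of t in spine notation."""
    return (t.head, t.args) if isinstance(t, App) else (t, ())

def occurs_free(x, t):
    if isinstance(t, Var):
        return t.name == x
    if isinstance(t, Sym):
        return False
    if isinstance(t, Lam):
        return t.var != x and occurs_free(x, t.body)
    return occurs_free(x, t.head) or any(occurs_free(x, u) for u in t.args)

def only_green(x, t):
    """True if every free occurrence of x in t is at a green position."""
    if isinstance(t, (Var, Sym)):
        return True
    head, args = spine(t)
    if isinstance(head, Sym) and head.name not in QUANTIFIERS and args:
        return all(only_green(x, u) for u in args)
    # Below lambdas, applied variables, and quantifiers nothing is green.
    return not occurs_free(x, t)

def is_q_normal(t):
    head, args = spine(t)
    if not all(is_q_normal(u) for u in args):
        return False
    if isinstance(head, Sym) and head.name in QUANTIFIERS:   # case (i)
        return (len(args) == 1 and isinstance(args[0], Lam)
                and only_green(args[0].var, args[0].body))
    if isinstance(head, Lam):                                 # case (ii)
        return is_q_normal(head.body)
    return True                                               # case (iii)
```

An unapplied quantifier fails case (i) because it has no argument, and a quantified variable that occurs applied fails the green-position test, matching the informal list of reasons for reducibility given above.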

In the following lemmas, our goal is to show that \({\textsf {Q} }_{\approx }\)-normality is invariant under \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization; that is, if a \(\lambda \)-term t is \({\textsf {Q} }_{\approx }\)-normal, then so is \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\). However, \({\textsf {Q} }_{\approx }\)-normality is not invariant under arbitrary \(\beta \eta \)-conversions. Clearly, a \(\beta \)-expansion can easily introduce \({\textsf {Q} }_{\approx }\)-reducible terms, e.g.,  .

Lemma 8

If t and v are \({\textsf {Q} }_{\approx }\)-normal \(\lambda \)-terms, then \(t\>v\) is a \({\textsf {Q} }_{\approx }\)-normal \(\lambda \)-term.

Proof

We prove this by induction on the structure of t. Let \(s\>\bar{u}_n = t\) be the spine notation of t. By Lemma 7, \(\bar{u}_n\) are \({\textsf {Q} }_{\approx }\)-normal and one of the lemma’s three cases applies. Since t is of functional type and \({\textsf {Q} }_{\approx }\)-normal, s cannot be of the form \({\textsf {Q} }{\langle \tau \rangle }\), excluding case (i). Cases (ii) and (iii) are independent of \(\bar{u}_n\), and hence appending v to that tuple does not affect the \({\textsf {Q} }_{\approx }\)-normality of t. \(\square \)

Lemma 9

If t is a \({\textsf {Q} }_{\approx }\)-normal \(\lambda \)-term and \(\rho \) is a substitution such that \(x\rho \) is \({\textsf {Q} }_{\approx }\)-normal for all x, then \(t\rho \) is \({\textsf {Q} }_{\approx }\)-normal.

Proof

We prove this by induction on the structure of t. Let \(s\>\bar{u}_n = t\) be its spine notation. Since t is \({\textsf {Q} }_{\approx }\)-normal, by Lemma 7, \(\bar{u}_n\) are \({\textsf {Q} }_{\approx }\)-normal and one of the following cases applies:

Case (i): s is of the form \({\textsf {Q} }{\langle \tau \rangle }\), \(n = 1\), and \(u_1\) is of the form \(\lambda y.\>u'\) such that y occurs free only in green positions of \(u'\). Since our substitutions avoid capture, \(y\rho = y\) and y does not appear in \(x\rho \) for all other variables x. It is clear from the definition of green positions (Definition 4) that since y occurs free only in green positions of \(u'\), y also occurs free only in green positions of \(u'\rho \). Moreover, by the induction hypothesis, \(u_1\rho \) is \({\textsf {Q} }_{\approx }\)-normal. Hence, \(t\rho = {\textsf {Q} }{\langle \tau \rho \rangle }\>(u_1\rho ) = {\textsf {Q} }{\langle \tau \rho \rangle }\>(\lambda y.\>u'\rho )\) is also \({\textsf {Q} }_{\approx }\)-normal.

Case (ii): s is a \(\lambda \)-expression whose body is \({\textsf {Q} }_{\approx }\)-normal. Then \(t\rho = (\lambda y.\>s'\rho )\>(\bar{u}_n\rho )\) for some \({\textsf {Q} }_{\approx }\)-normal \(\lambda \)-term \(s'\). By the induction hypothesis, \(s'\rho \) and \(\bar{u}_n\rho \) are \({\textsf {Q} }_{\approx }\)-normal. Therefore, \(t\rho \) is also \({\textsf {Q} }_{\approx }\)-normal.

Case (iii): s is neither of the form \({\textsf {Q} }{\langle \tau \rangle }\) nor a \(\lambda \)-expression. If s is of the form \({\textsf {f} }{\langle \bar{\tau }\rangle }\) for some  , then \(t\rho = {\textsf {f} }{\langle \bar{\tau }\rho \rangle }\>(\bar{u}_n\rho )\). By the induction hypothesis, \(\bar{u}_n\rho \) are \({\textsf {Q} }_{\approx }\)-normal, and therefore \(t\rho \) is also \({\textsf {Q} }_{\approx }\)-normal. Otherwise, s is a variable x and hence \(t\rho = x\rho \>(\bar{u}_n\rho )\). Since \(x\rho \) is \({\textsf {Q} }_{\approx }\)-normal by assumption and \(\bar{u}_n\rho \) are \({\textsf {Q} }_{\approx }\)-normal by the induction hypothesis, it follows from (repeated application of) Lemma 8 that \(t\rho \) is also \({\textsf {Q} }_{\approx }\)-normal. \(\square \)

Lemma 10

Let t be a \(\lambda \)-term of functional type that does not contain the variable x. If \(\lambda x.\>t\>x\) is \({\textsf {Q} }_{\approx }\)-normal, then t is \({\textsf {Q} }_{\approx }\)-normal.

Proof

Since \(\lambda x.\>t\>x\) is \({\textsf {Q} }_{\approx }\)-normal, \(t\>x\) is also \({\textsf {Q} }_{\approx }\)-normal. Let \(s\>\bar{u}_n = t\). Since \(t\>x\) is \({\textsf {Q} }_{\approx }\)-normal and x is not a \(\lambda \)-expression, s cannot be a quantifier by Lemma 7. Cases (ii) and (iii) are independent of \(\bar{u}_n\), and hence removing x from that tuple does not affect \({\textsf {Q} }_{\approx }\)-normality. Thus, \(t\>x\) being \({\textsf {Q} }_{\approx }\)-normal implies that t is \({\textsf {Q} }_{\approx }\)-normal. \(\square \)

Lemma 11

Let t be a \(\lambda \)-term and x a variable occurring free only in green positions of t. Let \(t'\) be a term obtained via a \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step from t. Then x occurs free only in green positions of \(t'\).

Proof

By induction on the structure of t. If x does not occur free in t, the claim is obvious. If \(t = x\), there is no possible \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step because for these steps the head of the rewritten term must be either a \(\lambda \)-expression or a quantifier. So we now assume that x does occur free in t and that \(t \not = x\). Then, by the assumption that x occurs free only in green positions, t must be of the form \({\textsf {f} }{\langle \bar{\tau }\rangle }\>\bar{u}\) for some  , some types \(\bar{\tau }\) and some \(\lambda \)-terms \(\bar{u}\). The \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step must take place in one of the \(\bar{u}\), yielding \(\bar{u}'\) such that \(t'= {\textsf {f} }{\langle \bar{\tau }\rangle }\> \bar{u}'\). By the induction hypothesis, x occurs free only in green positions of \(\bar{u}'\) and therefore only in green positions of \(t'\). \(\square \)

Lemma 12

Let t be \({\textsf {Q} }_{\approx }\)-normal and let \(t'\) be obtained from t by a \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step. If it is an \(\eta \)-reduction step, we assume that it happens not directly below a quantifier. Then \(t'\) is also \({\textsf {Q} }_{\approx }\)-normal.

Proof

By induction on the structure of t. Let \(s\>\bar{u}_n = t\). By Lemma 7, \(\bar{u}_n\) are \({\textsf {Q} }_{\approx }\)-normal, and one of the following cases applies:

Case (i): s is of the form \({\textsf {Q} }{\langle \tau \rangle }\), \(n = 1\), and \(u_1\) is of the form \(\lambda y.\>v\) such that y occurs free only in green positions of v. Then the normalization cannot happen at t, because s is of the form \({\textsf {Q} }{\langle \tau \rangle }\) and \(u_1\) is a \(\lambda \)-expression already. It cannot happen at \(u_1\) by the assumption of this lemma. So it must happen in v, yielding some \(\lambda \)-term \(v'\). Then \(t' = s \> (\lambda y.\>v')\). The \(\lambda \)-term \(v'\) is \({\textsf {Q} }_{\approx }\)-normal by the induction hypothesis and hence \((\lambda y.\>v')\) is \({\textsf {Q} }_{\approx }\)-normal. Since y occurs free only in green positions of v, by Lemma 11, y occurs free only in green positions of \(v'\). Thus, \(t'\) is \({\textsf {Q} }_{\approx }\)-normal.

Cases (ii) and (iii): s is a \(\lambda \)-expression whose body is \({\textsf {Q} }_{\approx }\)-normal; or s is neither of the form \({\textsf {Q} }{\langle \tau \rangle }\) nor a \(\lambda \)-expression.

If the \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step happens in some \(u_i\), yielding some \(\lambda \)-term \(u_i'\), then \(u_i'\) is \({\textsf {Q} }_{\approx }\)-normal by the induction hypothesis. Thus, \(t' = s\>u_1\>\cdots \>u_{i-1}\>u_{i}'\>u_{i+1}\cdots \>u_n\) is also \({\textsf {Q} }_{\approx }\)-normal.

Otherwise, if \(s = \lambda x.\>v\) and the \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step happens in v, yielding some \(\lambda \)-term \(v'\), then \(v'\) is \({\textsf {Q} }_{\approx }\)-normal by the induction hypothesis. Thus, \(t' = (\lambda x.\>v')\>\bar{u}_n\) is also \({\textsf {Q} }_{\approx }\)-normal.

Otherwise, the \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization step happens at \(s\>\bar{u}_m\) for some \(m \le n\), yielding some \(\lambda \)-term \(v'\). Then \(t' = v'\>u_{m+1}\>\cdots \>u_n\). The \(\lambda \)-terms s and \(\bar{u}_m\) are \({\textsf {Q} }_{\approx }\)-normal and by repeated application of Lemma 8, \(s\>\bar{u}_m\) is also \({\textsf {Q} }_{\approx }\)-normal. The \(\lambda \)-term \(v'\) is \({\textsf {Q} }_{\approx }\)-normal by Lemma 9 (for \(\beta \)-reductions) or Lemma 10 (for \(\eta \)-reductions). The normalization step cannot be a \({\textsf {Q} }_{\eta }\)-normalization because s is not a quantifier. Since \(\bar{u}_n\) are also \({\textsf {Q} }_{\approx }\)-normal, by repeated application of Lemma 8, \(t' = v'\>u_{m+1}\>\cdots \>u_n\) is also \({\textsf {Q} }_{\approx }\)-normal. \(\square \)

A direct consequence of this lemma is that \({\textsf {Q} }_{\approx }\)-normality is invariant under \(\beta \eta {\textsf {Q} }_{\eta }\)-normalization, as we wanted to show:

Corollary 13

If t is a \({\textsf {Q} }_{\approx }\)-normal \(\lambda \)-term, then \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is also \({\textsf {Q} }_{\approx }\)-normal.

As mentioned above, the converse does not hold. Therefore, following our convention, \({\textsf {Q} }_{\approx }\)-normality is defined on terms (i.e., \(\beta \eta \)-equivalence classes) via \(\beta \eta {\textsf {Q} }_{\eta }\)-normal forms. It follows that \({\textsf {Q} }_{\approx }\)-normality is well-behaved under applications of terms as well:

Lemma 14

If t and v are \({\textsf {Q} }_{\approx }\)-normal terms where t is of functional type, then \(t\>v\) is also \({\textsf {Q} }_{\approx }\)-normal.

Proof

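Since \({\textsf {Q} }_{\approx }\)-normality is defined via \(\beta \eta {\textsf {Q} }_{\eta }\)-normal forms, we must show that if \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) and \(v{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) are \({\textsf {Q} }_{\approx }\)-normal, then \((t\>v){\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is \({\textsf {Q} }_{\approx }\)-normal. By Lemma 8, \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\>v{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is \({\textsf {Q} }_{\approx }\)-normal. By Corollary 13, \((t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\>v{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}){\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is \({\textsf {Q} }_{\approx }\)-normal. \(\square \)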

A preprocessor \({\textsf {Q} }_{\approx }\)-normalizes the input problem. It clearly terminates because each \({\textsf {Q} }_{\approx }\)-step reduces the number of quantifiers. The \({\textsf {Q} }_{\approx }\)-normality of the initial clause set of a derivation will be a precondition of the completeness theorem. Although inferences may produce \({\textsf {Q} }_{\approx }\)-reducible clauses, we do not \({\textsf {Q} }_{\approx }\)-normalize during the derivation process itself. Instead, \({\textsf {Q} }_{\approx }\)-reducible ground instances of clauses will be considered redundant by the redundancy criterion. Thus, clauses whose ground instances are all \({\textsf {Q} }_{\approx }\)-reducible can be deleted. However, there are \({\textsf {Q} }_{\approx }\)-reducible clauses, such as  , that nevertheless have \({\textsf {Q} }_{\approx }\)-normal ground instances. Such clauses must be kept because the completeness proof relies on their \({\textsf {Q} }_{\approx }\)-normal ground instances.

In principle, we could omit the side condition of the \({\textsf {Q} }_{\approx }\)-rewrite rules and eliminate all quantifiers. However, the calculus (especially, the redundancy criterion) performs better with quantifiers than with \(\lambda \)-expressions, which is why we restrict \({\textsf {Q} }_{\approx }\)-normalization as much as the completeness proof allows. Extending the preprocessing to eliminate all Boolean terms as in Kotelnikov et al. [27] does not work for higher-order logic because Boolean terms can contain variables bound by enclosing \(\lambda \)-expressions.

3.2 Term Orders and Selection Functions

The calculus is parameterized by a strict and a nonstrict term order, a literal selection function, and a Boolean subterm selection function. These concepts are defined below.

Definition 15

(Strict ground term order) A well-founded strict total order \(\succ \) on ground terms is a strict ground term order if it satisfies the following criteria, where \(\succeq \) denotes the reflexive closure of \(\succ \):

-

(O1)

compatibility with green contexts: \(s' \succ s\) implies  ;

-

(O2)

green subterm property:  ;

-

(O3)

 for all terms  ;

-

(O4)

\({\textsf {Q} }{\langle \tau \rangle }\> t \succ t\>u\) for all types \(\tau \), terms t, and terms u such that \({\textsf {Q} }{\langle \tau \rangle }\>t\) and u are \({\textsf {Q} }_{\approx }\)-normal and the only Boolean green subterms of u are \(\top \) and \(\bot \).

Given a strict ground term order, we extend it to literals and clauses via the multiset extensions in the standard way [2, Sect. 2.4].
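As an illustration of the multiset extension, the following Python sketch compares two finite multisets given a strict order on their elements. It uses the usual characterization for strict partial orders: M is larger than N if the two differ and every element that N has in excess is dominated by some element that M has in excess. The function name and the use of hashable elements are assumptions of the sketch, and the encoding of literals and clauses as multisets from [2, Sect. 2.4] is not reproduced here.

```python
from collections import Counter

def multiset_greater(m, n, greater):
    """Multiset extension of the strict order `greater`.

    m and n are iterables of hashable elements, read as multisets;
    greater(a, b) must return True iff a is strictly larger than b.
    """
    m, n = Counter(m), Counter(n)
    only_m = m - n   # elements M has in excess of N (with multiplicity)
    only_n = n - m   # elements N has in excess of M
    if not only_m and not only_n:
        return False  # the multisets are equal
    # Every excess element of N must lie below some excess element of M.
    return all(any(greater(a, b) for a in only_m) for b in only_n)
```

For instance, with the usual order on integers, the multiset {3, 1} is larger than {2, 2, 1}, because the single 3 dominates both copies of 2.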

Remark 16

The property \({\textsf {Q} }{\langle \tau \rangle }\> t \succ t\>u\) from (O4) cannot be achieved in general, a fact that Christoph Benzmüller made us aware of. Without further restrictions, it would imply that \({\textsf {Q} }{\langle \tau \rangle }\> (\lambda x.\> x) \succ (\lambda x.\> x)\> ({\textsf {Q} }{\langle \tau \rangle }\> (\lambda x.\> x)) = {\textsf {Q} }{\langle \tau \rangle }\> (\lambda x.\> x)\), contradicting irreflexivity of \(\succ \). Restricting the Boolean green subterms of u to be only \(\top \) and \(\bot \) resolves this issue. A second issue is that (O4) without further restrictions would imply \({\textsf {Q} }{\langle \tau \rangle }\> (\lambda y.\> y\>{\textsf {a} }) \succ (\lambda y.\> y\>{\textsf {a} })\> (\lambda x.\>{\textsf {Q} }{\langle \tau \rangle }\> (\lambda y.\> y\>{\textsf {a} })) = {\textsf {Q} }{\langle \tau \rangle }\> (\lambda y.\> y\>{\textsf {a} })\), again contradicting irreflexivity. The restriction on the Boolean green subterms of u does not apply here because the Boolean subterms of \(\lambda x.\>{\textsf {Q} }{\langle \tau \rangle }\> (\lambda y.\> y\>{\textsf {a} })\) are not green. The restriction to \({\textsf {Q} }_{\approx }\)-normal terms resolves this second issue, but it forces us to preprocess the input problem.

Definition 17

(Strict term order) A strict term order is a relation \(\succ \) on terms, literals, and clauses such that its restriction to ground entities is a strict ground term order and such that it is stable under grounding substitutions (i.e., \(t \succ s\) implies \(t\theta \succ s\theta \) for all substitutions \(\theta \) grounding the entities t and s).

Definition 18

(Nonstrict term order) Given a strict term order \(\succ \) and its reflexive closure \(\succeq \), a nonstrict term order is a relation \(\succsim \) on terms, literals, and clauses such that \(t \succsim s\) implies \(t\theta \succeq s\theta \) for all \(\theta \) grounding the entities t and s.

Although we call them orders, a strict term order \(\succ \) is not required to be transitive on nonground entities, and a nonstrict term order \(\succsim \) does not need to be transitive at all. Normally, \(t \succeq s\) should imply \(t \succsim s\), but this is not required either. A nonstrict term order \(\succsim \) allows us to be more precise than the reflexive closure \(\succeq \) of \(\succ \). For example, we cannot have \(y\>{\textsf {b} } \succeq y\>{\textsf {a} }\), because \(y\>{\textsf {b} } \not = y\>{\textsf {a} }\) and \(y\>{\textsf {b} } \not \succ y\>{\textsf {a} }\) by stability under grounding substitutions (with \(\{y \mapsto \lambda x.\>{\textsf {c} }\}\)). But we can have \(y\>{\textsf {b} } \succsim y\>{\textsf {a} }\) if \({\textsf {b} } \succ {\textsf {a} }\). In practice, the strict and the nonstrict term order should be chosen so that they can compare as many pairs of terms as possible while being computable and reasonably efficient.

Definition 19

(Maximality) An element x of a multiset M is \(\unrhd \)-maximal for some relation \(\unrhd \) if for all \(y \in M\) with \(y \unrhd x\), we have \(y = x\). It is strictly \(\unrhd \)-maximal if it is \(\unrhd \)-maximal and occurs only once in M.
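Definition 19 can be read off directly as code. The sketch below is a minimal Python rendering in which a multiset is represented as a list and the relation \(\unrhd \) as a two-argument predicate; both function names are hypothetical.

```python
def is_maximal(x, multiset, rel):
    """x is rel-maximal in the multiset if every y with rel(y, x) equals x."""
    return all(y == x for y in multiset if rel(y, x))

def is_strictly_maximal(x, multiset, rel):
    """x is strictly rel-maximal if it is rel-maximal and occurs only once."""
    return is_maximal(x, multiset, rel) and list(multiset).count(x) == 1
```

With the relation `>=` on integers, 3 is maximal but not strictly maximal in the multiset [3, 3, 1], whereas it is strictly maximal in [3, 1].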

Definition 20

(Literal selection function) A literal selection function is a mapping from each clause to a subset of its literals. The literals in this subset are called selected. The following restrictions apply:

-

A literal must not be selected if it is positive and neither side is  .

-

A literal  must not be selected if \(y\> \bar{u}_n\), with \(n \ge 1\), is a \(\succeq \)-maximal term of the clause.

Definition 21

(Boolean subterm selection function) A Boolean subterm selection function is a function mapping each clause C to a subset of the green positions with Boolean subterms in C. The positions in this subset are called selected in C. Informally, we also say that the Boolean subterms at these positions are selected. The following restrictions apply:

-

A subterm  must not be selected if \(y\> \bar{u}_n\), with \(n \ge 1\), is a \(\succeq \)-maximal term of the clause.

-

A subterm must not be selected if it is \(\top \) or \(\bot \) or a variable-headed term.

-

A subterm must not be selected if it is at the top-level position on either side of a positive literal.

3.3 The Core Inference Rules

Let \(\succ \) be a strict term order, let \(\succsim \) be a nonstrict term order, let \(\textit{HLitSel}\) be a literal selection function, and let \(\textit{HBoolSel}\) be a Boolean subterm selection function. The calculus rules depend on the following auxiliary notions.

Definition 22

(Eligibility) A literal L is (strictly) \(\unrhd \)-eligible w.r.t. a substitution \(\sigma \) in C for some relation \(\unrhd \) if it is selected in C or there are no selected literals and no selected Boolean subterms in C and \(L\sigma \) is (strictly) \(\unrhd \)-maximal in \(C\sigma .\)

The \(\unrhd \)-eligible positions of a clause C w.r.t. a substitution \(\sigma \) are inductively defined as follows:

-

(E1)

Any selected position is \(\unrhd \)-eligible.

-

(E2)

If a literal  is either \(\unrhd \)-eligible and negative or strictly \(\unrhd \)-eligible and positive, then \(L.s.\varepsilon \) is \(\unrhd \)-eligible.

-

(E3)

If the position p is \(\unrhd \)-eligible and the head of \(C|_p\) is not  or  , the positions of all direct green subterms are \(\unrhd \)-eligible.

-

(E4)

If the position p is \(\unrhd \)-eligible and \(C|_p\) is of the form  or  , then the position of s is \(\unrhd \)-eligible if  and the position of t is \(\unrhd \)-eligible if  .

If \(\sigma \) is the identity substitution, we leave it implicit.

We define deeply occurring variables as in \(\lambda \)Sup, but exclude \(\lambda \)-expressions directly below quantifiers:

Definition 23

(Deep occurrences) A variable occurs deeply in a clause C if it occurs free inside an argument of an applied variable or inside a \(\lambda \)-expression that is not directly below a quantifier.

For example, x and z occur deeply in  , whereas y does not occur deeply. Intuitively, deep occurrences are occurrences of variables that can be caught in \(\lambda \)-expressions by grounding.

Fluid terms are defined as in \(\lambda \)Sup, using the \(\beta \eta {\textsf {Q} }_{\eta }\)-normal form:

Definition 24

(Fluid terms) A term t is called fluid if (1) \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is of the form \(y\>\bar{u}_n\) where \(n \ge 1\), or (2) \(t{\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is a \(\lambda \)-expression and there exists a substitution \(\sigma \) such that \(t\sigma {\downarrow }_{\beta \eta {\textsf {Q} }_{\eta }}\) is not a \(\lambda \)-expression (due to \(\eta \)-reduction).

Case (2) can arise only if t contains an applied variable. Intuitively, fluid terms are terms whose \(\eta \)-short \(\beta \)-normal form can change radically as a result of instantiation. For example, \(\lambda x.\> y\> {\textsf {a} }\> (z\> x)\) is fluid because applying \(\{z \mapsto \lambda x.\>x\}\) makes the \(\lambda \) vanish: \((\lambda x.\> y\> {\textsf {a} }\> x) = y\> {\textsf {a} }\). Similarly, \(\lambda x.\> {\textsf {f} }\>(y\>x)\>x\) is fluid because \((\lambda x.\> {\textsf {f} }\>(y\>x)\>x)\{y \mapsto \lambda x.\>{\textsf {a} }\} = (\lambda x.\> {\textsf {f} }\>{\textsf {a} }\>x) = {\textsf {f} }\>{\textsf {a} }\). In Sect. 5, we will discuss how fluid terms can be overapproximated in an implementation.
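One simple way to overapproximate fluidity, suggested by the remark that case (2) can arise only if t contains an applied variable, is to treat every \(\lambda \)-expression containing an applied free variable as fluid. The Python sketch below illustrates this idea; it is our own illustration and not necessarily the approximation used in the implementation. The term classes are hypothetical, and the input is assumed to be in \(\beta \eta {\textsf {Q} }_{\eta }\)-normal form already.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Sym:
    name: str

@dataclass(frozen=True)
class Lam:
    var: str
    body: object

@dataclass(frozen=True)
class App:
    head: object   # a Var, Sym, or Lam
    args: tuple    # nonempty tuple of arguments

def has_applied_free_var(t, bound=frozenset()):
    """True if some free variable of t occurs applied to an argument."""
    if isinstance(t, Lam):
        return has_applied_free_var(t.body, bound | {t.var})
    if isinstance(t, App):
        if isinstance(t.head, Var) and t.head.name not in bound:
            return True
        return (has_applied_free_var(t.head, bound)
                or any(has_applied_free_var(u, bound) for u in t.args))
    return False

def maybe_fluid(t):
    """Overapproximation of fluidity for a term in normal form."""
    # Case (1): an applied variable y u_1 ... u_n with n >= 1.
    if isinstance(t, App) and isinstance(t.head, Var):
        return True
    # Case (2), overapproximated: a lambda containing an applied free variable.
    return isinstance(t, Lam) and has_applied_free_var(t)
```

Both example terms above are classified as fluid by this check, while a \(\lambda \)-expression without applied free variables is not.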

The rules of our calculus are stated as follows. The superposition rule strongly resembles the one of \(\lambda \)Sup but uses our new notion of eligibility, and the new conditions 9 and 10 stem from the Sup rule of \(\hbox {o}\)Sup:

-

1.

u is not fluid;

-

2.

u is not a variable deeply occurring in C;

-

3.

variable condition: if u is a variable y, there must exist a grounding substitution \(\theta \) such that \(t\sigma \theta \succ t'\sigma \theta \) and \(C\sigma \theta \prec C''\sigma \theta \), where \(C'' = C\{y\mapsto t'\}\);

-

4.

\(\sigma \in {{\,\textrm{CSU}\,}}(t,u)\);

-

5.

\(t\sigma \not \precsim t'\sigma \);

-

6.

the position of u is \(\succsim \)-eligible in C w.r.t. \(\sigma \);

-

7.

\(C\sigma \not \precsim D\sigma \);

-

8.

\(t \approx t'\) is strictly \(\succsim \)-eligible in D w.r.t. \(\sigma \);

-

9.

\(t\sigma \) is not a fully applied logical symbol;

-

10.

if  , the position of the subterm u is at the top level of a positive literal.

The second rule is a variant of Sup that focuses on fluid green subterms. It stems from \(\lambda \)Sup.

with the following side conditions, in addition to Sup’s conditions 5 to 10:

-

1.

u is a variable deeply occurring in C or u is fluid;

-

2.

z is a fresh variable;

-

3.

\(\sigma \in {{\,\textrm{CSU}\,}}(z\>t{,}\;u)\);

-

4.

\((z\>t')\sigma \not = (z\>t)\sigma \).

The ERes and EFact rules are copied from \(\lambda \)Sup. As a minor optimization, we replace the \(\succsim \)-eligibility condition of \(\lambda \)Sup’s EFact by a \(\succsim \)-maximality condition and a condition that nothing is selected. The new conditions are not equivalent to the old one because positive literals of the form  can be selected.

For ERes: \(\sigma \in {{\,\textrm{CSU}\,}}(u,u')\) and \(u \not \approx u'\) is \(\succsim \)-eligible in C w.r.t. \(\sigma \). For EFact: \(\sigma \in {{\,\textrm{CSU}\,}}(u,u')\), \(u\sigma \not \precsim v\sigma \), \((u \approx v)\sigma \) is \(\succsim \)-maximal in \(C\sigma \), and nothing is selected in C.

Argument congruence—the property that \(t \approx s\) entails \(t\>z \approx s\>z\)—is embodied by the rule ArgCong, which is identical with the rule of \(\lambda \)Sup:

where \(n > 0\) and \(\sigma \) is the most general type substitution that ensures well-typedness of the conclusion. In particular, if s accepts k arguments, then ArgCong will yield k conclusions—one for each \(n \in \{1,\ldots , k\}\)—where \(\sigma \) is the identity substitution. If the result type of s is a type variable, ArgCong will yield infinitely many additional conclusions—one for each \(n > k\)—where \(\sigma \) instantiates the result type of s with \(\alpha _1 \rightarrow \cdots \rightarrow \alpha _{n-k} \rightarrow \beta \) for fresh \(\bar{\alpha }_{n-k}\) and \(\beta \). Moreover, the literal \(s \approx s'\) must be strictly \(\succsim \)-eligible in C w.r.t. \(\sigma \), and \(\bar{x}_n\) is a tuple of distinct fresh variables.

The following rules are concerned with Boolean reasoning and originate from \(\hbox {o}\)Sup. They have been adapted to support polymorphism and applied variables.

-

1.

\(\sigma \) is a type unifier of the type of u with the Boolean type \(\hbox {o}\) (i.e., the identity if u is Boolean or \(\alpha \mapsto \hbox {o}\) if u is of type \(\alpha \) for some type variable \(\alpha \));

-

2.

u is neither variable-headed nor a fully applied logical symbol;

-

3.

the position of u is \(\succsim \)-eligible in C;

-

4.

the occurrence of u is not at the top level of a positive literal.

1.  ; 2. \(s \approx s'\) is strictly \(\succsim \)-eligible in C w.r.t. \(\sigma \).

-

1.

 , or  , respectively;

-

2.

x, y, and \(\alpha \) are fresh variables;

-

3.

the position of u is \(\succsim \)-eligible in C w.r.t. \(\sigma \);

-

4.

if the head of u is a variable, it must be applied and the affected literal must be of the form  , or \(u \approx v\) where v is a variable-headed term.

-

1.

\(\sigma \in {{\,\textrm{CSU}\,}}(t,u)\) and \((t, t')\) is one of the following pairs:

where y is a fresh variable;

-

2.

u is not a variable;

-

3.

the position of u is \(\succsim \)-eligible in C w.r.t. \(\sigma \);

-

4.

if the head of u is a variable, it must be applied and the affected literal must be of the form  , or \(u \approx v\) where v is a variable-headed term.

1. […] and […], respectively, where \(\beta \) is a fresh type variable, y is a fresh term variable, \(\bar{\alpha }\) are the free type variables and \(\bar{x}\) are the free term variables occurring in \(y\sigma \) in order of first occurrence;
2. u is not a variable;
3. the position of u is \(\succsim \)-eligible in C w.r.t. \(\sigma \);
4. if the head of u is a variable, it must be applied and the affected literal must be of the form […], or \(u \approx v\) where v is a variable-headed term;
5. for ForallRw, the indicated occurrence of u is not in a literal […], and for ExistsRw, the indicated occurrence of u is not in a literal […].

In principle, the subscript of the Skolems above could be normalized using Boolean tautologies to share as many Skolem symbols as possible. This is an extension of our calculus that we did not investigate any further.

Like Sup, the Boolean rules must also be simulated in fluid terms. The following rules are Boolean counterparts of FluidSup:

1. u is fluid;
2. z and x are fresh variables;
3. \(\sigma \in {{\,\textrm{CSU}\,}}(z\>x{,}\;u)\);
4. […];
5. […] and […];
6. the position of u is \(\succsim \)-eligible in C w.r.t. \(\sigma \).

Same conditions as FluidBoolHoist, but […] is replaced by […] in condition 4.

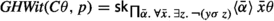

In addition to the inference rules, our calculus relies on two axioms, below. Axiom (Ext), from \(\lambda \)Sup, embodies functional extensionality; the expression \({\textsf {diff} }{\langle \alpha ,\beta \rangle }\) abbreviates […]. Axiom (Choice) characterizes the Hilbert choice operator \(\varepsilon \).

3.4 Rationale for the Rules

Most of the calculus’s rules are adapted from its precursors. Sup, ERes, and EFact are already present in Sup, with slightly different side conditions. Notably, as in \(\lambda \)fSup and \(\lambda \)Sup, Sup inferences are required only into green contexts. Other subterms are accessed indirectly via ArgCong and (Ext).

The rules BoolHoist, EqHoist, NeqHoist, ForallHoist, ExistsHoist, FalseElim, BoolRw, ForallRw, and ExistsRw, concerned with Boolean reasoning, stem from \(\hbox {o}\)Sup, which was inspired by \(\mathrel {\leftarrow \rightarrow }\)Sup. Except for BoolHoist and FalseElim, these rules have a condition stating that “if the head of u is a variable, it must be applied and the affected literal must be of the form […], or \(u \approx v\) where v is a variable-headed term.” The inferences at variable-headed terms permitted by this condition are our form of primitive substitution [1, 21], a mechanism that blindly substitutes logical connectives and quantifiers for variables z with a Boolean result type.

Example 25

Our calculus can prove that Leibniz equality implies equality (i.e., if two values behave the same for all predicates, they are equal) as follows:

The clause […] describes Leibniz equality of \({\textsf {a} }\) and \({\textsf {b} }\); if a predicate z holds for \({\textsf {a} }\), it also holds for \({\textsf {b} }\). The clause \({\textsf {a} } \not \approx {\textsf {b} }\) is the negated conjecture. The EqHoist inference, applied on \(z\>{\textsf {b} }\), illustrates how our calculus introduces logical symbols without a dedicated primitive substitution rule. Although […] does not appear in the premise, we still need to apply EqHoist on \(z\>{\textsf {b} }\) with […]. Other calculi [1, 11, 21, 34] would apply an explicit primitive substitution rule instead, yielding essentially […]. However, in our approach this clause is subsumed and could be discarded immediately. By hoisting the equality to the clausal level, we bypass the redundancy criterion.

Next, BoolRw can be applied to […] with […]. The two FalseElim steps remove the […] literals. Then Sup is applicable with the unifier \(\{w\mapsto \lambda x_1\, x_2\, x_3.\> x_2\}\in {{\,\textrm{CSU}\,}}({\textsf {b} }{,}\; w\>{\textsf {a} }\>{\textsf {b} }\>{\textsf {b} })\), and ERes derives the contradiction.
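That the substitution used in the Sup step is indeed a unifier of \({\textsf {b} }\) and \(w\>{\textsf {a} }\>{\textsf {b} }\>{\textsf {b} }\) can be checked by \(\beta \)-reducing the instantiated term. The following worked reduction uses only the terms named above:

\[ (\lambda x_1\, x_2\, x_3.\> x_2)\>{\textsf {a} }\>{\textsf {b} }\>{\textsf {b} } \;\rightarrow _\beta \; (\lambda x_2\, x_3.\> x_2)\>{\textsf {b} }\>{\textsf {b} } \;\rightarrow _\beta \; (\lambda x_3.\> {\textsf {b} })\>{\textsf {b} } \;\rightarrow _\beta \; {\textsf {b} } \]

Since the other side of the unification problem is \({\textsf {b} }\) itself and contains no variables, both sides coincide after instantiation.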

This mechanism resembling primitive substitution is not the only way our calculus can instantiate variables with logical symbols. Often, the correct instantiation can also be found by unification with a logical symbol that is already present:

Example 26

The following derivation shows that there exists a function y that is equivalent to the conjunction of \({\textsf {p} }\>x\) and \({\textsf {q} }\>x\) for all arguments x:

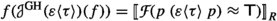

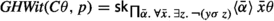

Here, \({\textsf {sk} }\) stands for […]. First, we use the rule ExistsHoist to resolve the existential quantifier, using the unifier […] for fresh variables \(\alpha \), \(y'\), and z. Then ForallRw skolemizes the universal quantifier, using the unifier […] for fresh variables \(\beta \) and \(z'\). The Skolem symbol takes \(y'\) as argument because it occurs free in […]. Then BoolRw applies because the terms \(y'\>({\textsf {sk} }\>y')\) and […] are unifiable and thus […] is unifiable with […]. Finally, two FalseElim inferences lead to the empty clause.

As in \(\lambda \)Sup, the FluidSup rule is responsible for simulating superposition inferences below applied variables, other fluid terms, and deeply occurring variables. Complementarily, FluidBoolHoist and FluidLoobHoist simulate the various Boolean inference rules below fluid terms. Initially, we considered adding a fluid version of each rule that operates on Boolean subterms, but we discovered that FluidBoolHoist and FluidLoobHoist suffice to achieve refutational completeness.

Example 27

The following clause set demonstrates the need for the rules FluidBoolHoist and FluidLoobHoist:

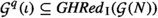

The set is unsatisfiable because the instantiation […] produces the clause […], which is unsatisfiable in conjunction with \({\textsf {a} }\not \approx {\textsf {b} }\).

The literal selection function can select either literal in the first clause. ERes is applicable in either case, but the unifiers […] and […] do not lead to a contradiction. Instead, we need to apply FluidBoolHoist if the first literal is selected or FluidLoobHoist if the second literal is selected. In the first case, the derivation is as follows:

The FluidBoolHoist inference uses the unifier \(\{y \mapsto \lambda u.\> z'\>u\>(x'\> u),\ z \mapsto \lambda u.\> z'\>{\textsf {b} }\>u,\ x \mapsto x'\>{\textsf {b} }\} \in {{\,\textrm{CSU}\,}}(z\>x{,}\; y\>{\textsf {b} })\). We apply ERes to the first literal of the resulting clause, with unifier […]. Next, we apply EqHoist with the unifier […] to the literal created by FluidBoolHoist, effectively performing a primitive substitution. The resulting clause can superpose into \({\textsf {a} } \not \approx {\textsf {b} }\) with the unifier \(\{x'' \mapsto \lambda u.\> u\} \in {{\,\textrm{CSU}\,}}(x''\>{\textsf {b} }{,}\; {\textsf {b} })\). The two sides of the interpreted equality in the first literal can then be unified, allowing us to apply BoolRw with the unifier […]. Finally, applying ERes twice and FalseElim once yields the empty clause.
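The two unifiers spelled out in this example can be sanity-checked mechanically by applying them to both sides and comparing \(\beta \)-normal forms. The following is only a minimal sketch under stated assumptions: it works on untyped \(\lambda \)-terms, the term encoding and all function names (`var`, `const`, `lam`, `app`, `subst`, `apply_subst`, `normalize`) are hypothetical helpers introduced for illustration, and it has nothing to do with how Zipperposition computes complete sets of unifiers. It checks only that the given substitutions are unifiers, not that they belong to a particular CSU.

```python
from itertools import count

# Terms: ("var", name) | ("const", name) | ("lam", name, body) | ("app", fun, arg)
def var(name): return ("var", name)
def const(name): return ("const", name)
def lam(name, body): return ("lam", name, body)

def app(fun, *args):
    """Left-nested application: app(f, a, b) encodes (f a) b."""
    for arg in args:
        fun = ("app", fun, arg)
    return fun

_fresh = count()

def free_vars(t):
    tag = t[0]
    if tag == "var":
        return {t[1]}
    if tag == "const":
        return set()
    if tag == "lam":
        return free_vars(t[2]) - {t[1]}
    return free_vars(t[1]) | free_vars(t[2])

def subst(t, name, repl):
    """Capture-avoiding substitution t[name := repl]."""
    tag = t[0]
    if tag == "var":
        return repl if t[1] == name else t
    if tag == "const":
        return t
    if tag == "app":
        return ("app", subst(t[1], name, repl), subst(t[2], name, repl))
    bound, body = t[1], t[2]
    if bound == name:
        return t  # name is shadowed below this binder
    if bound in free_vars(repl):
        renamed = f"{bound}_{next(_fresh)}"  # rename the binder to avoid capture
        body = subst(body, bound, var(renamed))
        bound = renamed
    return ("lam", bound, subst(body, name, repl))

def apply_subst(t, sigma):
    """Apply bindings one after another; adequate here because no
    replacement term mentions a variable from the domain of sigma."""
    for name, repl in sigma.items():
        t = subst(t, name, repl)
    return t

def normalize(t):
    """Beta-normalize; terminates on the simply typed terms used here."""
    tag = t[0]
    if tag in ("var", "const"):
        return t
    if tag == "lam":
        return ("lam", t[1], normalize(t[2]))
    fun = normalize(t[1])
    if fun[0] == "lam":
        return normalize(subst(fun[2], fun[1], t[2]))
    return ("app", fun, normalize(t[2]))

b = const("b")

# Unifier stated for the FluidBoolHoist step: z x versus y b.
sigma = {
    "y": lam("u", app(var("z'"), var("u"), app(var("x'"), var("u")))),
    "z": lam("u", app(var("z'"), b, var("u"))),
    "x": app(var("x'"), b),
}
lhs = normalize(apply_subst(app(var("z"), var("x")), sigma))
rhs = normalize(apply_subst(app(var("y"), b), sigma))

# Unifier stated for the Sup step: x'' b versus b.
sup_lhs = normalize(apply_subst(app(var("x''"), b), {"x''": lam("u", var("u"))}))

print(lhs == rhs, sup_lhs == b)
```

Both instantiated sides of the first problem reduce to \(z'\>{\textsf {b} }\>(x'\>{\textsf {b} })\), and the second reduces to \({\textsf {b} }\). Normal forms are compared syntactically, which suffices here because they contain no \(\lambda \)-abstractions; a general checker would compare up to \(\alpha \)-equivalence.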

Remarkably, none of the provers that participated in the CASC-J10 competition can solve this two-clause problem within a minute. Satallax finds a proof after 72 s and LEO-II after over 7 minutes. The CASC-28 version of our new Zipperposition implementation solves it in 3 s.

3.5 Soundness

All of our inference rules and axioms are sound w.r.t. […] and the ones that do not introduce Skolem symbols are also sound w.r.t. \(\models \). Any derivation using our inference rules and axioms is satisfiability-preserving w.r.t. both \(\models \) and […] if the initial clause set does not contain \({\textsf {sk} }\) symbols. The preprocessing is sound w.r.t. both \(\models \) and […]:

Theorem 28

(Soundness and completeness of […]-normalization) […]-normalization preserves denotations of terms and truth of clauses w.r.t. proper interpretations.

Proof

It suffices to show that

for all types \(\tau \), proper interpretations […], and all valuations \(\xi \).

Let f be a function from […] to \(\{0,1\}\). Then

By the definition of proper interpretations (Sect. 2.2), we have

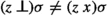

Thus, it remains to show that […] if and only if f is constantly 1. This holds because by the definition of term denotation, […] and because […] by properness of the interpretation, for all […]. The case of […] is analogous. \(\square \)

To show soundness of the inferences, we need the substitution lemma for our logic:

Lemma 29

(Substitution lemma) Let […] be a proper interpretation. Then

for all terms t, all types \(\tau \), and all substitutions \(\rho \), where […] for all type variables \(\alpha \) and […] for all term variables x.

Proof

Analogous to Lemma 18 of \(\lambda \)Sup [10]. \(\square \)

It follows that a model of a clause is also a model of its instances:

Lemma 30

If […] for some interpretation […] and some clause C, then […] for all substitutions \(\rho \).

Proof