Abstract

Young children with autism spectrum disorder (ASD) present with a broad range of spoken language abilities, as well as delays in precursor skills such as gesture production and joint attention. While standardized assessments describe language strengths, the Communication and Symbolic Behavior Scales Developmental Profile (CSBS-DP) is a particularly robust measure because it additionally characterizes precise aspects of social communication. This study provides a unique contribution by assessing the interactional effects of CSBS-DP Social Composite performance with early language samples on later language outcomes. Our results indicate that multiple social communication elements significantly interact with early spoken language to predict later language. Our findings also highlight the transactional relationship between early spoken vocabulary and social communication skills that bolsters language development.

Introduction

Autism spectrum disorder (ASD) is characterized by impaired social interactions and atypical restricted and repetitive behaviors (American Psychiatric Association 2013); however, a wide spectrum of social functioning can be seen in children with ASD, and their language abilities are similarly heterogeneous (e.g., Arunachalam and Luyster 2016; Fein et al. 2013; Kjelgaard and Tager-Flusberg 2001; Tager-Flusberg 2004; Yoder et al. 2015). That is, children with ASD may have language abilities on one end of the spectrum characterized by minimal to no language, while others present with an intact, comprehensive spoken language system on the other end (Jones et al. 2014; Tager-Flusberg and Kasari 2013; Talbott et al. 2018; Tek et al. 2014). Overall, there is a large body of research exploring factors that may help us understand or even predict language outcomes in this population, particularly in regard to nonverbal communication. Gesture use is one such area, as gestures are strong predictors of current and future language skills in both typically developing (TD) children (e.g., Iverson and Goldin-Meadow 2005) and children with ASD (e.g., Charman et al. 2003). Joint attention behaviors, which include shared eye gaze and affect with reference to an object or scene, also have been demonstrated to predict current and future language in TD children and children with ASD (Mundy et al. 1986, 2007; Siller and Sigman 2008). The current study aims to better understand the interactive relationship between early language abilities and various nonverbal communication factors, including gesture and joint attention, in predicting later language use.

Nonverbal Predictors of Language Outcomes in ASD

Gesture

A major focus of research on language outcomes in autism has been the role that early gesture use plays in later language abilities. Gesture is a broad term that encompasses many different types, such as deictic gestures (e.g., showing, giving, pointing, reaching), symbolic gestures (e.g., actions carried out on an object to depict the object and its function), conventional or representational gestures (e.g., waving hello, shaking head “no”), and iconic gestures (e.g., when a form stands in for a referent, such as flapping arms to represent a bird; Capone and McGregor 2004). Early gesture use is associated with later language abilities and is considered to be a critical precursor to early language production in TD children (Bates and Dick 2002; Iverson and Goldin-Meadow 2005).

Not surprisingly, gestures reflect children’s social communication functioning, and limited gesture use is included in the diagnostic criteria for ASD under the DSM-5 (American Psychiatric Association 2013). The social communication deficits specific to autism inherently restrict the development of early gesture and early language skills, and typically also include limited use of shared attention and eye gaze (Heymann et al. 2018; Ozonoff et al. 2010; Parlade and Iverson 2015; Sowden et al. 2013; Wetherby et al. 2004; Wetherby et al. 2007). Although considered a hallmark deficit of autism, the use of gestures in children with ASD is variable (Choi et al. 2019; Dimitrova et al. 2016; Morett et al. 2016). Some children with ASD may produce gestures, but not integrate them with their nonverbal and verbal communication, while other children with ASD may present with a total absence of gesture use (Manwaring et al. 2018; Ramos-Cabo et al. 2019). Children with autism generally exhibit a unique gesture profile including a lower rate of gesture use (Colgan et al. 2006; Iverson et al. 2018; Mitchell et al. 2006; Parlade and Iverson 2015).

Several empirical studies have documented that variability in early gesture use is associated with variability in later language abilities in children with ASD (e.g., Charman et al. 2003; Luyster et al. 2008; Veness et al. 2014). These associations have been primarily based on gestural data from parent reports, using the MacArthur-Bates Communicative Development Inventories (M-CDI; Charman et al. 2003; Fenson et al. 1994; Luyster et al. 2008) or the M-CDI in combination with the Communication and Symbolic Behavior Scales – Developmental Profile (CSBS-DP) Infant Toddler Checklist (Veness et al. 2012; Wetherby and Prizant 2002). Recently, Manwaring et al. (2017) used structural equation modeling with a large sample of children (n = 197) that included both TD children and those with ASD and developmental delays. Their study provided strong empirical support for a robust correlation between early gesture and concurrent overall receptive and expressive language skills. Importantly, their study included both parent report and direct observation of children’s actual behavior and gesture use. Their results also indicated that fine motor ability, as included in their construct of gesture use, contributed to this relationship between gesture and language abilities.

Joint Attention

Joint attention is another area of nonverbal communication that has been explored for its relationship with language outcomes. Prior to the emergence of joint attention skills, both TD children and children with social communication delays begin alternating visual attention between caregivers and objects with gaze shifts (Braddock et al. 2015; Franchini et al. 2017; Heymann et al. 2018; Hobson 2005). Shared attention and continuous monitoring of a social partner’s interest in the context of joint attention provide one of the earliest opportunities for episodes of shared positive affect, which have also been linked to early expressive language growth (Adamson et al. 2019; Laake and Bridgett 2014; Poon et al. 2012; Yoder et al. 2015) and early social skills (Heymann et al. 2018; Lee and Schertz 2019; Parlade and Iverson 2015; Rollins 2018; Thorup et al. 2018). Joint attention supports language development in many ways, but particularly word learning, as it allows children to match linguistic input to referents in their environment. Studies have indicated that the emerging ability to coordinate attention between a social partner and an object of mutual interest provides the child with the foundation for later symbolically mediated conversations (e.g., Carpenter et al. 1998; Hurwitz and Watson 2016; Jones et al. 2017; Mundy and Newell 2007; Romero et al. 2018; Tomasello 1988; Vivanti et al. 2017; Wong and Kasari 2012).

There is also ample empirical evidence supporting the contribution of joint attention to language development in both TD and disordered populations. For example, Mundy and Gomes (1998) reported that TD toddlers’ frequency of following an adult’s gaze and points was a significant predictor of their receptive language abilities 4 months later. This basic effect has been replicated with new TD samples (e.g., Farrant and Zubrick 2012; Lieven 2017; Markus et al. 2000), and with joint attention coded from naturalistic play sessions (e.g., Abdelaziz et al. 2018; Kelty-Stephen et al. 2014; Naigles et al. 2016; Rollins and Snow 1998; Tomasello and Farrar 1986). Further, children with ASD engage in joint attention with others much less frequently and for shorter periods of time than their TD peers (e.g., Charman et al. 1998; Mundy et al. 1994; Mundy et al. 2009; Naigles 2013). Empirical evidence suggests that such an impairment affects their language skills (e.g., Carpenter et al. 1998; Morales et al. 2000; Mundy and Gomes 1998; Stone and Yoder 2001). Given the established contributions of gesture and joint attention to language development, in the current study we sought to disentangle discrete interactions by comparing gesture and joint attention, among other social communication measures, as moderators of language growth in children with ASD. The choice of measures for this comparison is not inconsequential, and below we explicate the categories and content of language assessments.

Assessment of Nonverbal Communication in ASD

Assessing nonverbal communicative behaviors such as gesture, joint attention, and positive affect requires a systematic approach in order to inform developmental language outcomes (e.g., Carpenter et al. 1998; Iverson and Goldin-Meadow 2005; Manwaring et al. 2017; Morales et al. 2000; Mundy and Gomes 1998; Rowe and Goldin-Meadow 2009; Stone and Yoder 2001). Traditional standardized assessments of language do not always account for nonverbal communicative attempts (e.g., Courchesne et al. 2015), nor do interventions focused on nonverbal communication consistently measure language growth (Kasari et al. 2008; LeBarton et al. 2015). Systematic evaluation of nonverbal communication is especially pivotal for children who are minimally verbal (Bal et al. 2016; Capone and McGregor 2004; Crais et al. 2009; Kasari et al. 2013), as these behaviors may reveal more communicative intent than is shown in their vocalizations.

Nonverbal measures of communication can include caregiver report, such as the M-CDI, in which caregivers complete a checklist of behaviors that includes pointing, waving, and other kinds of gestures. While historical use of the M-CDI in particular has indicated that caregivers are fairly accurate reporters, reliance on caregiver report alone introduces risk for biased reporting and inconsistencies in caregiver sensitivity (Braddock et al. 2015; Franchini et al. 2018; Iverson et al. 2018; Szatmari et al. 2016). Direct observations of nonverbal communication can also be recorded, via the Mullen Scales of Early Learning (MSEL; Mullen 1995) or the Early Social Communication Scales (ESCS; Mundy et al. 2003); however, many such standardized behavioral measures fail to denote nonverbal communicative behaviors during in-person social interactions (Ozcaliskan et al. 2016).

A well-established assessment for early communication, the CSBS-DP, is particularly sensitive to examining gesture, joint attention, and shared affect profiles, and evaluates a variety of verbal and nonverbal communication behaviors, such as gaze shifts, conventional and distal gestures, and rate of communication (Wetherby and Prizant 2002). While many communication measures yield vocabulary and gesture inventories, the CSBS-DP Social Composite also captures information about joint attention and intentional communicative attempts, as they are foundational communicative behaviors (see Table 1 for a review of the clinical behaviors included in the Social Composite). Depicting this variety of social behavior provides pivotal supplemental information essential for describing a child’s communicative strengths and weaknesses. Thus, we suggest that the CSBS-DP is an ideal assessment tool for evaluating the wide range of nonverbal communication skills in children with autism, including gestures, eye gaze, joint attention, and social responsiveness to interactions.

While the CSBS-DP accounts for prevalence of observed communicative acts, including communicative act means and function, scoring is primarily completed by noting absence or presence of a behavior within each of the six sampling opportunities. In contrast, scoring for the previous edition of this assessment, the Communication and Symbolic Behavior Scales Normed Edition (CSBS; Wetherby and Prizant 1993), also tabulates the total frequency of each type of communicative act. In this study, we use the CSBS-DP, as well as the frequencies of behaviors captured in the Social Composite, including gestural and social-affective signaling acts, to compare different types of nonverbal communicative predictors of language outcomes (see Table 2 for a review of the Communication Scales included in the CSBS).

Assessment of Language Outcomes in ASD

In the research cited above suggesting a relationship between nonverbal communication and language, most studies have used broad measures of language, such as the MSEL, or measures that focus specifically on parent-reported vocabulary, like the M-CDI. However, assessment of language in autism is inherently challenging: many standardized assessments require particular behavioral responses to stimuli, and these demands may negatively impact performance because children with ASD often struggle to participate in adult-led activities. Additionally, there is the potential for children to demonstrate floor level performance (i.e., a standard score < 50), specifically those who are nonverbal or minimally verbal (Volden et al. 2016). While standardized measures of language remain useful tools for understanding a child’s unique developmental profile, comprehensive analysis of communication abilities, expressive skills in particular, necessitates supplemental observations of spontaneous language use (Goffman and Leonard 2000; Kim et al. 2014; Tommerdahl and Kilpatrick 2015; Tribushinina et al. 2013). Language samples that are collected during play-based, child-led interactions are an ideal opportunity to capture expressive language in children with ASD (e.g., Condouris et al. 2003; Kover et al. 2014; Kover et al. 2012; Tager-Flusberg et al. 2009; Tek et al. 2014; Wittke et al. 2017). Language samples provide the opportunity to examine a number of expressive language skills. Vocabulary is of particular interest for children with ASD, as it is well-documented that children with autism acquire fewer words than their TD age-matched peers, although the amount and types of words they use are similar to younger TD language-matched peers (see Naigles and Chin 2015; Tek and Landa 2012). 
Some of this slower vocabulary development can be attributed to the children with ASD’s difficulties with word learning strategies such as the shape bias; that is, while TD children show a preference to extend labels to same-shape objects, children with ASD do not (Potrzeba et al. 2015; Tek et al. 2008). Because word learning and word use are so varied in children with ASD (Kover et al. 2014; Tek and Landa 2012), their word production within a naturalistic interaction provides a meaningful language outcome for the current research question concerning the roles of nonverbal communication behaviors in later language abilities.

Current Study

The current study aims to investigate the interactional, moderating effects of nonverbal communication factors on vocabulary growth in young children with a diagnosis of ASD. Moderation analysis allows us not only to examine a causal or predictive link between the variables of interest, but also to assess the conditions under which an effect occurs.

In particular, this study aims to ascertain whether a significant interaction between word use and social communication behaviors in a heterogeneous group of toddlers diagnosed with ASD contributes to changes in lexical diversity and complexity observed approximately three years later. To date, no studies have combined a comprehensive measure of nonverbal communication with a language sampling assessment, which is more sensitive to the range of possible language abilities seen across the spectrum.

Due to the naturalistic context of our language samples, we chose to use total word types for our measure of word use, as opposed to word tokens, in order to avoid the inflation of language performance appraisal that can occur when samples include words used with a high frequency (Sperry et al. 2014). The following research questions are examined:

(1) How do the Social Composite subscales of the CSBS-DP each interact with word use and therefore moderate growth in vocabulary over three years?

(2) How do the frequencies of the aforementioned behaviors each interact with word use and thus moderate growth in vocabulary?

Methods

Participants

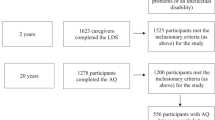

This study utilizes a subset of participants (total N = 54, 42 males, mean age at initial visit = 33.9 months, SD = 5.5) from the Autism Phenome Project (APP), a longitudinal study completed through the MIND (Medical Investigation of Neurodevelopmental Disorders) Institute at the University of California, Davis. The APP includes collection of genetic, behavioral, language, and neurobiological features of children with autism, in order to better define the broad autism phenotype. Children were recruited for the APP from the northern California region close to the time of initial ASD diagnosis, around three years of age (Time 1). Participants were invited to return one and two years later (Time 3) in order to enable examination of developmental trajectory patterns. While the APP is a large-scale project that included 189 children in its initial phase (collected between 2007 and 2011), we use a subset of children for the current project based on inclusion criteria that support our current aims. Inclusion criteria are limited to participants with available spontaneous language samples at Time 1 and Time 3, as well as CSBS-DP scores from Time 1. All participants also had confirmed ASD diagnoses, supported by scores in the autism range on the Autism Diagnostic Observation Schedule (ADOS; Lord et al. 1999). See Table 3 for characteristics of the sample and performance on developmental measures at Time 1 and Time 3.

Materials

The CSBS-DP Behavior Sample was administered to all participants at Time 1 and video was recorded for subsequent scoring. This measure was administered in a child-friendly laboratory play room by trained researchers who had demonstrated experience with young children and test administration of standardized measures. The behaviors were elicited based on six communicative temptation activities including a wind-up toy, balloon, bubbles, jar of snacks, shared book reading, and pretend play. Raw scores yielded three subscales that include communication, gesture, and emotion and eye gaze (see Table 1). Additionally, frequency data for the comparable social elements assessed in the previous version of the CSBS were also tabulated. These frequency variables included communication forms: conventional gestures, distal gestures, gestures with vocalization, gaze shifts, shared positive affect, and episodes of negative affect. Frequency coding was also completed for communication functions: behavior regulation, social interaction, joint attention, respondent acts, and repair strategies. That is, each communicative act was coded for both form and function and is thereby accounted for with two different frequency variables. The reciprocity cluster score is measured in the CSBS, but not the CSBS-DP, and includes rate of communicative acts, respondent acts, and repair strategies. Respondent acts indicate a child responded to an adult’s communicative act within 2–3 seconds by continuing the same topic or focus of attention, such as answering a question or following an adult’s point with a gaze shift. A repair strategy was observed if a child promptly repeated or modified a previously unsuccessful communicative act. For example, a modified act could include using a different form of communication like combining gesture and sound or changing the quality of a signal such as increasing loudness.

Spontaneous language samples were obtained at both Time 1 and Time 3, using video recordings of behavioral testing from those time points. Spontaneous speech samples at Time 1 were collected in the context of the CSBS-DP behavior sampling opportunities. Similarly, speech samples at Time 3 were collected in the context of the ADOS. Specific tasks on the ADOS were chosen that afforded the most spontaneous and unprompted language production. Although the exact tasks varied slightly depending on the ADOS Module that the child was administered, the following tasks were included in the language sample transcriptions: Free Play, Birthday Party, Bubble Play, Snack, Make-Believe Play, Conversation, Description of a Picture, Telling a Story from a Book, Cartoons, and Creating a Story. For more specific detail and information regarding the selection criteria and transcription procedure for eligible participants at Time 3, please see Wittke et al. (2017).

Both sets of spontaneous speech samples (from the CSBS-DP at Time 1 and the ADOS at Time 3) were transcribed by the first two authors and trained research assistants using Computerized Language Analysis (CLAN) software in CHAT format (MacWhinney 2008). Samples from Time 1 were analyzed by the first author and two trained research assistants, while samples from Time 3 were analyzed by the second author and one research assistant for a previous research study (Wittke et al. 2017); both authors established coding reliability with line-by-line agreement exceeding 90%. The transcription team used a consensus coding procedure consistent with Shriberg et al. (1984) and Wittke et al. (2017). All discrepancies were discussed by the transcription team until at least 90% inter-rater agreement (range of 92–98%) was achieved; if agreement on a line could not be reached, the utterance was coded as unintelligible.
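As a rough illustration of line-by-line agreement, the sketch below computes the percentage of transcript lines on which two transcribers match. The function name `line_agreement`, the example utterances, and the exact-match rule (after trimming whitespace and lowercasing) are assumptions of this example and are not the procedure described by Shriberg et al. (1984).

```python
def line_agreement(transcript_a, transcript_b):
    """Percentage of aligned lines that two transcribers coded identically."""
    if len(transcript_a) != len(transcript_b):
        raise ValueError("Transcripts must be aligned line by line.")
    if not transcript_a:
        raise ValueError("Transcripts must not be empty.")
    # Assumption: two lines agree when they match after trimming and lowercasing.
    matches = sum(
        a.strip().lower() == b.strip().lower()
        for a, b in zip(transcript_a, transcript_b)
    )
    return 100.0 * matches / len(transcript_a)


# Hypothetical utterances, for illustration only.
rater_1 = ["more bubbles", "ball", "open it", "go"]
rater_2 = ["more bubbles", "ball", "open", "go"]
agreement = line_agreement(rater_1, rater_2)  # 3 of 4 lines match
```

Under this simplified rule, a transcript pair would meet a 90% criterion only if at most one line in ten differed.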

Once all spontaneous language transcripts were double-scored and both raters achieved line-by-line reliability, the total number of word types (TWT) was calculated in CLAN for each participant’s language sample. As described earlier, TWT, rather than total word tokens, was used as the more sensitive measure of vocabulary in these children’s expressive language.
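The distinction between word types and word tokens can be made concrete with a small sketch. It is not CLAN's procedure: the tokenization rule (runs of letters or apostrophes, case-insensitive) and the function name `type_token_counts` are assumptions chosen for illustration.

```python
import re


def type_token_counts(utterances):
    """Return (word types, word tokens) for a list of transcribed utterances."""
    # Assumption: a word is a run of letters or apostrophes, case-insensitive.
    words = [w for u in utterances for w in re.findall(r"[a-z']+", u.lower())]
    return len(set(words)), len(words)


# Hypothetical sample: "more" is repeated, so tokens exceed types.
sample = ["more bubbles", "more more", "bubbles pop"]
types, tokens = type_token_counts(sample)
print(types, tokens)  # 3 6
```

A child who repeats one word many times gains tokens but not types, which is why a type count is less prone to inflation from high-frequency words.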

Results

Preliminary Analyses

A series of moderation models was completed with the intent of assessing interactions between early language status and summative social communication skills, communication patterns, affect and gaze behavior, and early gesture skills. A preliminary basic data screening was completed to ensure data entry accuracy and assess distribution normality. This subset of participants was selected based on availability of data for all variables of interest; therefore, no missing data solutions were required. The dependent variable for all analyses was TWT at Time 3, as predicted by the independent variable TWT at Time 1. A simple regression was calculated to predict TWT at Time 3 based on TWT at Time 1; the model was significant (F(1, 52) = 51.153, p < 0.001), with \({R}^{2}\) = 0.496, supporting continuation with the moderation analyses.
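The link between \({R}^{2}\) and the overall F statistic can be checked with a short calculation. The sketch below assumes a single predictor and N = 54, which gives degrees of freedom of 1 and 52; the function name `f_from_r2` is hypothetical.

```python
def f_from_r2(r2, n, k):
    """Overall F statistic and degrees of freedom for an OLS model with k predictors."""
    df1, df2 = k, n - k - 1
    f_stat = (r2 / df1) / ((1.0 - r2) / df2)
    return f_stat, (df1, df2)


# R^2 = 0.496 is taken from the text; n = 54 and one predictor are assumed.
f_stat, df = f_from_r2(0.496, n=54, k=1)
print(round(f_stat, 2), df)  # 51.17 (1, 52)
```

The result is close to the reported value of 51.153; the small gap is consistent with \({R}^{2}\) having been rounded to three decimals.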

To determine whether gender or receptive-expressive language variables at Time 1 needed to be included as covariates in the main analysis, gender and T-scores from the MSEL expressive and receptive subtests were also individually incorporated in a subsequent series of hierarchical multiple regression analyses. Although higher receptive language scores showed a positive trend toward predicting greater TWT at Time 3, the proportion of variance accounted for by these predictors declined consistently and significantly in all moderation models that included covariates. Therefore, we did not include any covariates in the main analyses.

Moderator Analyses

All analyses were conducted using SPSS Version 25, with moderation analysis conducted using the SPSS macro PROCESS (Hayes 2018). A series of ordinary least squares regression models was run with proposed moderators including the CSBS-DP Social Communication subscales (see Table 1), as well as frequency data for the following social elements: conventional gestures, distal gestures, gestures with vocalization, gaze shifts, shared positive affect, episodes of negative affect, behavior regulation, joint attention, social interaction, respondent acts, rate of communicative acts, and repair strategies (see Table 2). Separate hierarchical multiple regression analyses were performed for each potential moderator variable. In Step 1, the predictor TWT at Time 1 was entered into the model. In Step 2 of each model, the potential moderator was added as an additional predictor. In Step 3, a two-way interaction term between Time 1 TWT and the moderator was added to the model. Differences in \({R}^{2}\) between nested regression models with and without the interaction term were compared to evaluate the proportion of variance uniquely explained by the moderating two-way interaction term and to prompt further investigation of conditional interactions. In order to probe significant interaction effects, the Johnson-Neyman technique (Bauer and Curran 2005; Johnson and Neyman 1936; Johnson and Fay 1950; Rogosa 1980) was implemented to identify transition points in the range of moderator values at which the effect of TWT at Time 1 on TWT at Time 3 changed between significant and non-significant.
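The three-step procedure can be sketched in a few lines. This is not the PROCESS macro: it is a minimal pure-Python illustration on synthetic data, and the helper names `r_squared` and `f_change`, the simulated coefficients, and the random seed are all assumptions of the example.

```python
import random


def r_squared(X, y):
    """R^2 of an OLS fit; each row of X must start with 1 for the intercept."""
    k = len(X[0])
    # Augmented normal equations (X'X | X'y), solved by Gauss-Jordan elimination.
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda row: abs(A[row][c]))
        A[c], A[p] = A[p], A[c]
        pivot = A[c][c]
        A[c] = [v / pivot for v in A[c]]
        for r in range(k):
            if r != c:
                f = A[r][c]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[c])]
    b = [row[k] for row in A]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - sum(bj * xj for bj, xj in zip(b, r))) ** 2
                 for r, yi in zip(X, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def f_change(r2_full, r2_reduced, n, k_full, k_added=1):
    """F test for the R^2 gained by adding k_added predictors to a nested model."""
    df2 = n - k_full - 1
    f_stat = ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / df2)
    return f_stat, (k_added, df2)


# Synthetic data only; none of these numbers come from the study.
random.seed(0)
n = 54
x = [random.gauss(0, 1) for _ in range(n)]  # stand-in for Time 1 TWT
w = [random.gauss(0, 1) for _ in range(n)]  # stand-in for a moderator
y = [2 + 1.5 * xi + 0.5 * wi - 0.8 * xi * wi + random.gauss(0, 1)
     for xi, wi in zip(x, w)]               # stand-in for Time 3 TWT

r2_step1 = r_squared([[1, xi] for xi in x], y)                           # Step 1
r2_step2 = r_squared([[1, xi, wi] for xi, wi in zip(x, w)], y)           # Step 2
r2_step3 = r_squared([[1, xi, wi, xi * wi] for xi, wi in zip(x, w)], y)  # Step 3
delta_r2 = r2_step3 - r2_step2
f_stat, df = f_change(r2_step3, r2_step2, n, k_full=3)
```

With 54 cases and three predictors in the full model, the change test has 1 and 50 degrees of freedom, and `delta_r2` is the share of variance attributable to the interaction term alone.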

Multiple moderation models indicated significant interaction effects, demonstrating a change in the magnitude or direction of the relationship between TWT at Time 1 and Time 3. For the CSBS-DP, a significant moderating interaction was identified for the Emotion and Eye Gaze Scale (Δ\({R}^{2}\)= 0.0550, F(3; 50) = 6.1208, p = 0.0168) and the Communication Scale (Δ\({R}^{2}\)= 0.0754, F(3; 50) = 6.1208, p = 0.0035); however, no significant moderation effect was found for the Gesture Scale (Δ\({R}^{2}\)= 0.0218, F(3; 50) = 2.2608, p = 0.1390). The focal predictor, TWT at Time 1, had a significant effect across the entire range of values for the Emotion and Eye Gaze Scale, and for Communication Scale standard scores below 15.6229 (88.89%; 17 is the maximum). The interaction effect sizes and Johnson-Neyman threshold values for all completed moderation models are presented in Table 4. If the interaction between TWT at Time 1 and the moderator remained significant across all values of the moderator, no significant transition points are noted. However, if interaction significance was limited to a range of observed moderator values, the threshold value for significance is presented as well as the percentage of observed moderator values above and below the threshold value. Thus, variance in the Communication scale moderated T3 TWT outcomes at all but the highest scores. The non-mean centered and mean-centered models with CSBS-DP subscale standard scores as moderators are presented in Online Tables 5 and 6. In each of these tables, Model 1 reflects Time 1 TWT as a significant predictor of Time 3 TWT and Model 2 incorporates the Emotion and Eye Gaze (see Online Resource 1, Table 1) or Communication (see Online Resource 1, Table 2) subscale standard scores as an additional predictor. Model 3 presents a non-mean centered moderation model, expanding upon Model 2 by including the interaction term between the subscale standard scores and Time 1 TWT. 
Model 4 includes all predictors reflected in Model 3, but with a mean-centered interaction term: the interaction term mean (W) is subtracted from each value of the interaction term to produce a new variable (W′) with a mean of zero and a standard deviation equal to the standard deviation of W. Therefore, mean-centered Model 4 has the same model fit as Model 3 with reparameterization applied, estimating the effect of Time 1 TWT on Time 3 TWT when W′ = 0.
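The claim that centering reparameterizes the model without changing its fit can be verified numerically. The sketch below assumes that centering is applied to the moderator W before the product term is formed, which is one common convention and may differ in detail from the step described above; the data are synthetic and the helper names `ols` and `design` are hypothetical.

```python
import random


def ols(X, y):
    """Return (coefficients, R^2) for an OLS fit; rows of X start with 1."""
    k = len(X[0])
    # Augmented normal equations (X'X | X'y), solved by Gauss-Jordan elimination.
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda row: abs(A[row][c]))
        A[c], A[p] = A[p], A[c]
        pivot = A[c][c]
        A[c] = [v / pivot for v in A[c]]
        for r in range(k):
            if r != c:
                f = A[r][c]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[c])]
    b = [row[k] for row in A]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - sum(bj * xj for bj, xj in zip(b, r))) ** 2
                 for r, yi in zip(X, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return b, 1.0 - ss_res / ss_tot


def design(x, w):
    """Design matrix with intercept, focal predictor, moderator, and product."""
    return [[1, xi, wi, xi * wi] for xi, wi in zip(x, w)]


# Synthetic data only; none of these numbers come from the study.
random.seed(1)
n = 54
x = [random.gauss(5, 2) for _ in range(n)]
w = [random.gauss(3, 1) for _ in range(n)]
y = [1 + 2.0 * xi + 1.0 * wi - 0.3 * xi * wi + random.gauss(0, 1)
     for xi, wi in zip(x, w)]

mean_w = sum(w) / n
w_centered = [wi - mean_w for wi in w]  # W' has mean zero and the same spread

b_raw, r2_raw = ols(design(x, w), y)
b_cen, r2_cen = ols(design(x, w_centered), y)
# b_cen[1] is the effect of x when W' = 0, i.e. at the mean of W.
```

In this setup the interaction coefficient and \({R}^{2}\) are unchanged, while the coefficient on the focal predictor shifts from the effect at W = 0 to the effect at the mean of W.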

The same moderation model procedure was applied to the frequency data for the social, gestural, and emotional display elements described earlier (see Table 3 for raw data). Frequency of conventional gestures (Δ\({R}^{2}\)= 0.000, F(3; 50) = 0.007, p = 0.9785) and frequency of distal gestures (Δ\({R}^{2}\)= 0.0017, F(3; 50) = 0.1663, p = 0.6852) were not significant moderating variables. In contrast, frequency of gestures paired with vocalization was a significant moderator (Δ\({R}^{2}\)= 0.0731, F(3; 50) = 8.5710, p = 0.0051). The focal predictor, TWT at Time 1, had a significant interaction effect for gestures with vocalization when the observed frequency of this behavior was 22.5089 or lower, which includes 81.48% of the sample participants. That is, for individuals with more than 22.5089 observed occurrences of gestures paired with vocalization (18.52% or approximately 10 individuals in this sample), the T1-T3 TWT trajectory was not moderated by interaction effects. The non-mean centered and mean-centered models for this measure are presented in Online Resource 1, Table 3.

Further, a significant moderation effect was observed for frequency of gaze shifts (Δ\({R}^{2}\)= 0.0780, F(3; 50) = 10.2496, p = 0.0023), but not for frequency of shared positive affect (Δ\({R}^{2}\)= 0.0176, F(3; 50) = 1.9178, p = 0.1723) or episodes of negative affect (Δ\({R}^{2}\)= 0.0377, F(3; 50) = 4.4287, p = 0.0404). The focal predictor, T1 TWT, had a significant interactional effect for gaze shift frequencies below 16.8401 (87.04%; max of 25). The non-mean centered and mean-centered models for frequency of gaze shifts are presented in Online Resource 1, Table 4.

Additionally, examination of behavior regulation (Δ\({R}^{2}\)= 0.0592, F(3; 50) = 6.9780, p = 0.0110) and joint attention (Δ\({R}^{2}\)= 0.0813, F(3; 50) = 11.9276, p = 0.0011) frequency counts indicated a significant moderation effect for frequencies lower than 29.5289 and 14.9705, respectively (with maximums of 34 and 24, respectively). However, frequency of communicative acts with a social interaction function was non-significant (Δ\({R}^{2}\)= 0.0007, F(3; 50) = 0.0775, p = 0.7818). Regarding reciprocity, frequency of respondent acts (Δ\({R}^{2}\)= 0.0476, F(3; 50) = 6.1555, p = 0.0165) had a significant moderating effect for frequencies lower than 34.3175 (with a max of 46), whereas frequency of repair strategies was non-significant (Δ\({R}^{2}\)= 0.0081, F(3; 50) = 0.8738, p = 0.3544). Evaluation of communication rate, or average number of communicative acts per minute, did not indicate a significant moderation effect (Δ\({R}^{2}\)= 0.0023, F(3; 50) = 0.2390, p = 0.6271) (see Online Resource 1, Tables 5, 6, 7 for the depicted models).

Finally, further inquiry into moderation effects was carried out by plotting interaction terms. Interaction plots for all significant moderators indicate three consistent patterns of interest based on low, medium, or high moderator values, as presented in Figs. 1, 2, 3, 4 and 5. The low, medium, and high moderator values in each of these interaction plots reflect the moderator values at the 16th, 50th, and 84th percentiles, respectively; that is, these plots describe interactions observed at specific moderator values (rather than describing subgroups of participants, as that exceeds the scope of this analysis). The low and high values on the x-axis represent TWT one standard deviation below and above the mean at Time 1. For example, in Fig. 1 Part (b): if children were observed to have a Communication subscale score at the 16th percentile of this sample, those with lower than average TWT scores at Time 1 also had low TWT scores at Time 3. A similar pattern was observed for children with a Communication subscale score at the 84th percentile: children with higher than average TWT at Time 1 were observed to produce greater TWT at Time 3 than children with lower than average TWT at Time 1. In contrast, if children were observed to have a Communication subscale score at the 16th percentile but also presented with higher than average TWT scores at Time 1, they also had somewhat low TWT at Time 3. Another contrasting pattern was observed for Communication subscale values at the 50th percentile of this sample. That is, children with this observed moderator value paired with lower than average TWT at Time 1 had relatively high TWT at Time 3, and children with higher than average TWT at Time 1 had relatively low TWT at Time 3. These three general interaction trends were observed, with subtle differences in scale, for the significant moderators of Emotion and Eye Gaze and Communication subscale standard scores (Fig. 1), frequency of gestures with vocalization (Fig. 2), frequency of gaze shifts (Fig. 3), frequency of behavior regulation and joint attention (Fig. 4), and frequency of respondent acts (Fig. 5).
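As an illustration only, the plotting values described above can be sketched in a few lines of Python. The sketch assumes a simple moderation model with no covariates, y_hat = b0 + b1*x + b2*w + b3*x*w, where x is Time 1 TWT and w is the moderator; the function name `interaction_grid` and all argument names are hypothetical, and this is not the analysis code used in the study.

```python
from statistics import mean, stdev, quantiles


def interaction_grid(b0, b1, b2, b3, x, w):
    """Predicted outcome at low/high x for low/medium/high moderator values.

    Assumed model (illustrative): y_hat = b0 + b1*x + b2*w + b3*x*w
    x: observed predictor values (e.g., Time 1 TWT)
    w: observed moderator values (e.g., a CSBS-DP subscale score)
    """
    # Predictor levels: one standard deviation below and above the mean.
    x_bar, x_sd = mean(x), stdev(x)
    x_levels = {"low": x_bar - x_sd, "high": x_bar + x_sd}

    # Moderator levels: 16th, 50th, and 84th percentiles of the observed values.
    cuts = quantiles(w, n=100, method="inclusive")  # 99 cut points
    w_levels = {"low": cuts[15], "medium": cuts[49], "high": cuts[83]}

    # One predicted value per (moderator level, predictor level) pair.
    return {
        (w_name, x_name): b0 + b1 * x_val + b2 * w_val + b3 * x_val * w_val
        for w_name, w_val in w_levels.items()
        for x_name, x_val in x_levels.items()
    }
```

Each pair of "low" and "high" predictions at a given moderator level corresponds to one line in an interaction plot of this kind; the coefficients would come from the fitted regression model.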

Fig. 1 Modeled Time 3 total word types (TWT) as a function of the interaction between Time 1 TWT and CSBS-DP moderators: (a) Emotion and Eye Gaze Scale standard scores and (b) Communication Scale standard scores. Designated low, medium, and high moderator values are indicative of the 16th, 50th, and 84th percentiles, respectively

Fig. 4 Modeled Time 3 total word types (TWT) as a function of the interaction between Time 1 TWT and CSBS-DP moderators: (a) frequency of communicative acts with a behavior regulation function and (b) frequency of communicative acts with a joint attention function. Designated low, medium, and high moderator values are indicative of the 16th, 50th, and 84th percentiles, respectively

Conditional moderation effects yielded with the Johnson-Neyman technique (see Table 4) were further evaluated. The thresholds and ranges of moderator values in which significant moderating effects were observed are presented in a series of conditional effect plots (see Figs. 6, 7, 8, 9, 10). Combined, significant conditional interaction effects were observed for at least 80% of all observed values, with any non-significant regions occurring at the upper limits of observed value ranges.
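For readers unfamiliar with the Johnson-Neyman technique, the following Python sketch shows the general calculation under the same assumed model, y_hat = b0 + b1*x + b2*w + b3*x*w. The conditional effect of x at moderator value w is b1 + b3*w, with squared standard error var(b1) + 2*w*cov(b1, b3) + w^2*var(b3); the region-of-significance boundaries are the moderator values at which the ratio of effect to standard error equals the critical t value. Function and argument names are hypothetical, the critical t value is supplied by the user, and this is a sketch of the general method rather than the software used in the study.

```python
import math


def conditional_effect(b1, b3, var_b1, var_b3, cov_b1_b3, w):
    """Conditional effect of x on y at moderator value w, with its standard error."""
    effect = b1 + b3 * w
    se = math.sqrt(var_b1 + 2 * w * cov_b1_b3 + w ** 2 * var_b3)
    return effect, se


def johnson_neyman_bounds(b1, b3, var_b1, var_b3, cov_b1_b3, t_crit):
    """Moderator values at which |effect / se| equals t_crit.

    Solves (b1 + b3*w)^2 = t_crit^2 * (var_b1 + 2*w*cov + w^2*var_b3) for w.
    Returns a sorted list with zero, one, or two boundary values.
    """
    a = b3 ** 2 - t_crit ** 2 * var_b3
    b = 2 * (b1 * b3 - t_crit ** 2 * cov_b1_b3)
    c = b1 ** 2 - t_crit ** 2 * var_b1

    if a == 0:
        # The equation is linear (or has no w term at all).
        return [] if b == 0 else [-c / b]

    disc = b ** 2 - 4 * a * c
    if disc < 0:
        # No real boundary: the effect is significant everywhere or nowhere.
        return []
    root = math.sqrt(disc)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])
```

The boundaries returned would then be compared against the observed range of the moderator to determine what share of observed values falls in the region of significance.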

Fig. 6 Conditional interaction effects of CSBS-DP moderators and total word types (TWT) at Time 1 on TWT at Time 3. Moderators include: (a) Social Composite standard scores, (b) Emotion and Eye Gaze Scale standard scores, and (c) Communication Scale standard scores. Shaded regions indicate moderator values at which interaction effects were non-significant; significant moderator value thresholds are indicated along with percentage of observed moderator values in region of significance

Fig. 7 Conditional interaction effects of CSBS-DP moderators and total word types (TWT) at Time 1 on TWT at Time 3. Moderator is: (a) frequency of gestures with vocalization. Shaded regions indicate moderator values at which interaction effects were non-significant; significant moderator value thresholds are indicated along with percentage of observed moderator values in region of significance

Fig. 8 Conditional interaction effects of CSBS-DP moderators and total word types (TWT) at Time 1 on TWT at Time 3. Moderator is: (a) frequency of gaze shifts. Shaded regions indicate moderator values at which interaction effects were non-significant; significant moderator value thresholds are indicated along with percentage of observed moderator values in region of significance

Fig. 9 Conditional interaction effects of CSBS-DP moderators and total word types (TWT) at Time 1 on TWT at Time 3. Moderators include: (a) frequency of communicative acts with a behavior regulation function and (b) frequency of communicative acts with a joint attention function. Shaded regions indicate moderator values at which interaction effects were non-significant; significant moderator value thresholds are indicated along with percentage of observed moderator values in region of significance

Fig. 10 Conditional interaction effects of CSBS-DP moderators and total word types (TWT) at Time 1 on TWT at Time 3. Moderator is: (a) frequency of respondent communicative acts. Shaded regions indicate moderator values at which interaction effects were non-significant; significant moderator value thresholds are indicated along with percentage of observed moderator values in region of significance

Discussion

This study aimed to examine whether and which nonverbal communication behaviors moderate the relationship between very early spoken language and later spoken language for children with ASD. By combining spontaneous language samples collected in a naturalistic context with comprehensive observational measures of nonverbal communication behaviors, we were able to critically evaluate the conditional interactional effects of nonverbal communication factors on word use trajectories during a fundamental period of social communication and language development. Empirical support for collective interactional effects was indicated for the CSBS-DP Emotion and Eye Gaze subscale and the Communication subscale; however, significant interactional effects were not found for the Gesture subscale. These findings prompted further analysis using frequency counts of relevant social communication behaviors as captured in the prior CSBS measure. Significant conditional interaction effects emerged for gestures paired with vocalization, gaze shifts, joint attention (JA), behavior regulation, and respondent acts, implying the presence of a dynamic relationship between early word use and specific forms of social communication. We identified three distinct and consistent interaction patterns across all significant moderation models: consistently low word use across time, moderate word use with variable growth, and high word use with steady growth. One trend described children who demonstrated low social communication measure performance at T1 and ended up with low word use at Time 3 regardless of how low or high their word use was at Time 1. Another observed pattern included children with high scores on social communication measures, who ended up with low or high word use at Time 3 consistent with their low or high word use observed at Time 1. 
Of greatest interest is the final observed trend: children with social communication measure performance in the median range and low word use at Time 1 who produced a high number of TWT at Time 3. We discuss these findings with respect to our question about the relative strengths of gesture and joint attention as social communication moderators of language growth in children with ASD.

Gestures and Language Growth: Where’s the Link?

There were no significant interactional effects between early language use and the Gesture subscale of the CSBS-DP, although follow-up analyses with frequency counts for specific gesture scales revealed one significant moderator. While frequency of conventional gestures and distal gestures did not significantly moderate the relationship between early and later language abilities, gestures paired with vocalization did. This is consistent with findings from Parlade and Iverson (2015), which indicated that gesture-vocalization coordination develops differently in children with ASD compared to children with language delays. More specifically, coordination of gestures with vocalization occurs at a slower growth rate and contributes to subsequent discrepant patterns in social and language development. Other studies have corroborated this finding (Iverson et al. 2018; Veness et al. 2012), showing that children with ASD are qualitatively different from children with language delay alone on the basis of their slow gesture growth. Thus, it is not surprising that the children who used more gestures paired with vocalizations demonstrated better language outcomes in our sample. Our findings suggest that the function of communication (e.g., use of a gesture or vocalization for behavior regulation or joint attention) is contributing to discrepant communication patterns.

Our finding regarding non-significant moderation effects for gesture independent of vocalizations (conventional gesture use and distal gesture use, as measured by the CSBS) corroborates evidence from Braddock and Armbrecht (2016), indicating that early gesture use does not predict communication growth. While Braddock et al. (2015) identified significant associations between gesture and language abilities, this was specific to number of gesture types rather than gesture frequency. Combined with our findings, this suggests gesture may be more meaningful when assessed as a repertoire of forms similar to a vocabulary inventory. This is corroborated by Hughes et al. (2019) in their recommendation to comprehensively profile very young children’s gesture function rather than frequency during early assessment and intervention. Similarly, Leezenbaum et al. (2014) found maternal input in response to gesture production to be variable based on infants’ gestural forms: mothers were more likely to provide verbal translations in response to point or show gestures compared to reach or give gestures. This, along with our findings, again supports the view that gesture may be more meaningful when measured as a repertoire of varied forms rather than by frequency.

Joint Attention and Language Growth: Significant Moderators

While the Gesture Scale of the CSBS-DP was not significant, the Communication subscale did have a significant interactional effect between early and later language outcomes. More precise inspections of these contributions with frequency count data specifically underscored the interactional effects of joint attention and behavior regulation, but not communication rate or social interaction. In other words, communication serving the purpose of directing others (i.e., protesting, reacting to an object of shared attention) appears to be playing a unique conditional role in shaping language growth. Similarly, the Emotion and Eye Gaze subscale was also a significant moderator, but this effect was driven by the frequency of gaze shifts, as the other two scales included in that measure (frequency of shared positive affect and negative affect) were not. More subtle nonverbal communicative behaviors like gaze shifts appear to play a conditional, superior role in language growth (McDaniel et al. 2018; Morett et al. 2016; So et al. 2015).

These findings, that early spoken language is bolstered by pivotal skills like joint attention, self-regulation, and gaze shifts, point to the intertwined nature of social interest and volitional use of communication. Particularly, the ability to allocate attention to a shared object or person of interest while also actively attempting to shape others’ behavior through verbal or nonverbal means likely creates positive reciprocal interactions. Reciprocal interactions require efficient attentional processes, such as visual perception, motor coordination, verbal memory, and spatial memory (Chu et al. 2014; Goldin-Meadow 2009; Heimann et al. 2016; Hostetter and Alibali 2007; Kuhn et al. 2014; Sassenberg et al. 2011; Schmalenbach et al. 2017). Further, these attentional processes for reciprocal interactions are known to be more compromised in young children with ASD within social contexts such as engaging with humans compared to objects (Chawarska et al. 2013; Elsabbagh et al. 2009; Morett et al. 2016; Mundy 2018). Our findings are particularly meaningful when connected to research on children with ASD who are minimally verbal. Several research studies have affirmed that minimally verbal children with ASD make progress with speech and language intervention when a certain threshold of joint attention skill is present (Abdelaziz et al. 2018; Dawson et al. 2004; Mundy et al. 1990; Paul et al. 2008, 2013; Watt et al. 2006).

Interaction Patterns in Language Growth

Interaction plots for the significant moderators discussed above revealed distinct interaction patterns in this heterogeneous sample of children with ASD. One pattern demonstrated how low social communication measure performance at Time 1 resulted in low word use at Time 3 regardless of children's word use at Time 1. That is, it did not seem to matter how much they were talking at a younger age; rather, if they presented with poor nonverbal social communication skills early on, their later language skills were poorer. Another observed pattern included children with high scores on social communication measures, who ended up with low or high word use at Time 3 consistent with their low or high word use observed at Time 1. This pattern showed that for some children, better nonverbal social communication skills did not seem to have an effect on language growth over time. Thus, these two patterns indicate that for some children, early social communication abilities are most predictive whereas for other children, early language abilities are most predictive; both of these patterns are consistent with the literature we have reviewed above (see also Hoff and Naigles 2002). However, the most notable finding is the final observed pattern: children with social communication measure performance in the median range and low word use at Time 1 who produced a high number of TWT at Time 3. In other words, higher performance on the aforementioned social communication measures interacted with early language abilities to propel later language abilities above and beyond early language alone.

As indicated in Figs. 6, 7, 8, 9 and 10, across all social communication elements exerting influence, conditional effects were observed for the majority of observed values with some fading of effects for values in the upper limits. Interactional effects remained significant for at least 80% of all moderator values, ranging from 81 to 100% of the sample between moderators. For children with social communication measure performance at T1 in the highest 10–20% of our sample, moderation effects did not remain significant for all communication measures. Perhaps this indicates that children demonstrating the greatest strength with social communication measures are progressing in language growth at a relatively stable rate compared to children with modest early social communication measure performance. Another plausible explanation for this fading of effects could be that these children are persistently repeating communicative acts, albeit with a limited variety of forms. That is, a child could present with a high frequency of acts for the purpose of behavior regulation but these acts might recur similarly to a restricted and repetitive behavior. In sum, these findings nonetheless emphasize interactional effects are present for the majority of observed values, and further investigation of social communication measures with confirmed conditional effects is recommended.

Clinical Implications

The clinical implications of these findings may be best described by the role of communication partners in children’s language growth. The intended outcome for these significant moderators (gestures paired with vocalization, gaze shifts, joint attention, behavior regulation, and respondent acts) is to initiate, respond to, and sustain social communicative interactions. In other words, these nonverbal behaviors help to elicit more language, and possibly more complex language, from one’s communication partner. For that reason, the bidirectionality of parent-mediated communication and language is a critical component in understanding the early trajectories of language development (e.g., Adamson et al. 2001; Althoff et al. 2019; Bradshaw et al. 2017; Bradshaw et al. 2015; Chiang et al. 2016; Fusaroli et al. 2019; Haebig et al. 2013; Heymann et al. 2018; Leezenbaum et al. 2014; Ozcaliskan and Goldin-Meadow 2005; Sameroff 2010; Siller et al. 2013). That is, parents strategically direct children’s attention and provide language input while continuously monitoring their child’s interest. Further, parents contingently respond to children based on situational factors like proximity, but more notably parent responses are contingent on communicative context. For instance, Heymann et al. (2018) emphasize the need for early promotion of parents imitating and expanding child communication attempts paired with gaze shifts during naturalistic interactions, principally encouraging the pairing of gaze shifts with ambiguous gestures and non-speech vocalizations. For example, even if a child’s communicative intent is unclear, such as a wavering point or a grunt, an attempt paired with a gaze shift is a potential opportunity for parents to acknowledge it and shape it into a communicative exchange. 
Such responses provide exposure to progressive variations in children’s demonstrated communicative forms and foster coordination of multiple communicative behaviors and joint attention, which are pivotal skills that can be honed in prevention and intervention contexts (Goldstein et al. 2010; Schreibman et al. 2015). Not surprisingly, infants at risk for ASD were less likely to pair gaze shifts with more overt communicative acts like speech or point and show gestures, thus resulting in fewer word-learning opportunities in a social parent–child context. Given the influence that these nonverbal communication skills have on language growth, these findings highlight the importance of contingent responsiveness to communicative attempts in early intervention contexts that bolster language skills.

Limitations

While we were able to confirm several proposed moderation interactions in the present study, we were limited by missing data in the sample. Our sample selected from the larger APP dataset was limited by missing data factors including inconsistencies in the baseline assessments administered (5%), selective invitation of participants to return at T3 (50%), and missing or damaged DVDs and video playback issues that limited retrospective analysis of the language samples after Times 1 and 3 (23%; i.e., distorted sound, camera placement issues), all of which are consistent with longitudinal studies of this nature. While we anticipate the observed interaction patterns and reported results would remain consistent with a larger sample size, it is possible the interaction threshold values might shift slightly with a smaller margin of error. In addition, the frequency counts in our analysis were not independent of each other since communicative acts were coded for both form and function. That is, a child could have produced an act that was first scored by form as a conventional gesture and additionally scored by function as social interaction. Additionally, although the CSBS-DP is norm-referenced for children with a chronological age of 6 months to 6 years, standard scores for all children exceeding a chronological age of 24 months are converted using the same table. The developmental discrepancies between chronological age and developmental age based on our sample characteristics may have influenced variation in scores.

Conclusion

In summary, our results affirm the presence of a significant interaction between very early spoken language production and social communication behaviors including gaze shifts, speech-gesture coordination, behavior regulation, and joint attention. Notably, our findings specify the conditional influences of particular communication forms and functions on language growth, emphasizing the role of how and why children with ASD communicate rather than how often. The contribution of gesture use frequency to language growth was limited to gestures paired with vocalization, suggesting delays in early gesture production do not necessarily impede later spoken language skills. Thus, intervention targeting language growth should incorporate a focus on underlying nonverbal and social cognition elements directly, as indirect growth effects in language may co-occur (Hampton and Kaiser 2016; Heymann et al. 2018). Further, nonverbal expressions of social interest with joint attention and eye gaze interact with early vocabulary status to impact language growth, above and beyond gesture use. Future research studies should critically assess the contributions of intentional communicative acts, both verbal and nonverbal, in unison with underlying social interest when describing the strengths of children with ASD. Additionally, the CSBS-DP provides an optimal context for describing social communication skills to inform the language form, function, and frequency that underlie early language risk in young children with autism. Further, pairing the social communication profile observed with the CSBS-DP with the more fine-grained analysis of communicative acts with the CSBS can provide a comprehensive portrayal of complex social communication behaviors and their alignment with overall language development profiles.

References

Abdelaziz, A., Kover, S., Wagner, M., & Naigles, L. (2018). The shape bias in children with ASD: Potential sources of individual differences. Journal of Speech, Language, and Hearing Research, 61, 2685–2702.

Adamson, L., Bakeman, R., Deckner, D., & Nelson, D. (2012). Rating parent–child interactions: Joint engagement, communication dynamics, and shared topics in autism, Down Syndrome, and typical development. Journal of Autism and Developmental Disorders, 42(12), 2622–2635.

Adamson, L., Bakeman, R., Suma, K., & Robins, D. (2019). An expanded view of joint attention: Skill, engagement, and language in typical development and autism. Child Development, 90(1), E1–E18.

Adamson, L. B., McArthur, D., Markov, Y., Dunbar, B., & Bakeman, R. (2001). Autism and joint attention: Young children's responses to maternal bids. Journal of Applied Developmental Psychology, 22(4), 439–453.

Althoff, C., Dammann, C., Hope, S., & Ausderau, K. (2019). Parent-mediated interventions for children with autism spectrum disorder: A systematic review. The American Journal of Occupational Therapy: Official Publication of the American Occupational Therapy Association, 73(3), 1–13.

American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders. Arlington, VA: American Psychiatric Association.

Arunachalam, S., & Luyster, R. (2016). The integrity of lexical acquisition mechanisms in autism spectrum disorders: A research review. Autism Research, 9(8), 810–828.

Bal, V., Katz, T., Bishop, S., & Krasileva, K. (2016). Understanding definitions of minimally verbal across instruments: Evidence for subgroups within minimally verbal children and adolescents with autism spectrum disorder. Journal of Child Psychology and Psychiatry, 57(12), 1424–1433.

Bates, E., & Dick, F. (2002). Language, gesture, and the developing brain. Developmental Psychobiology, 40(3), 293–310.

Bauer, D., & Curran, P. (2005). Probing interactions in fixed and multilevel regression: Inferential and graphical techniques. Multivariate Behavioral Research, 40(3), 373–400.

Braddock, B. A., & Armbrecht, E. S. (2016). Symbolic communication forms in young children with autism spectrum disorder. Communication Disorders Quarterly, 37(2), 67–76.

Braddock, B., Pickett, C., Ezzelgot, J., Sheth, S., Korte-Stroff, E., Loncke, F., et al. (2015). Potential communicative acts in children with autism spectrum disorders. Developmental Neurorehabilitation, 18(4), 260–271.

Bradshaw, J., Koegel, L., & Koegel, R. (2017). Improving functional language and social motivation with a parent-mediated intervention for toddlers with autism spectrum disorder. Journal of Autism and Developmental Disorders, 47(8), 2443–2458.

Bradshaw, J., Steiner, A., Gengoux, M., & Koegel, G. (2015). Feasibility and effectiveness of very early intervention for infants at-risk for autism spectrum disorder: A systematic review. Journal of Autism and Developmental Disorders, 45(3), 778–794.

Capone, N. C., & McGregor, K. K. (2004). Gesture development: A review for clinical and research practices. Journal of Speech, Language, and Hearing Research, 47(1), 173–186.

Carpenter, M., Nagell, K., & Tomasello, M. (1998). Social cognition, joint attention, and communicative competence from 9 to 15 months of age. Monographs of the Society for Research in Child Development, 63(4), 143.

Charman, T., Drew, A., Baird, C., & Baird, G. (2003). Measuring early language development in preschool children with autism spectrum disorder using the MacArthur Communicative Development Inventory (Infant Form). Journal of Child Language, 30(1), 213–226.

Charman, T., Swettenham, J., Baron-Cohen, S., Cox, A., Baird, G., & Drew, A. (1998). An experimental investigation of social-cognitive abilities in infants with autism: Clinical implications. Infant Mental Health Journal, 19(2), 260–275.

Charman, T., Taylor, E., Drew, A., Cockerill, H., Brown, J., & Baird, G. (2005). Outcome at 7 years of children diagnosed with autism at Age 2: Predictive validity of assessments conducted at 2 and 3 years of age and pattern of symptom change over time. Journal of Child Psychology and Psychiatry, 46(5), 500–513.

Chawarska, K., Macari, S., & Shic, F. (2013). Decreased spontaneous attention to social scenes in 6-month-old infants later diagnosed with autism spectrum disorders. Biological Psychiatry, 74(3), 195–203.

Chenausky, K., Nelson, C., & Tager-Flusberg, H. (2017). Vocalization rate and consonant production in toddlers at high and low risk for autism. Journal of Speech, Language, and Hearing Research, 60(4), 865–876.

Chiang, C., Chu, C., & Lee, T. (2016). Efficacy of caregiver-mediated joint engagement intervention for young children with autism spectrum disorders. Autism: The International Journal of Research and Practice, 20(2), 172–182.

Choi, B., Shah, P., Rowe, M. L., Nelson, C. A., & Tager-Flusberg, H. (2019). Gesture development, caregiver responsiveness, and language and diagnostic outcomes in infants at high and low risk for autism. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-019-03980-8.

Chu, M., Meyer, A., Foulkes, L., & Kita, S. (2014). Individual differences in frequency and saliency of speech-accompanying gestures: The role of cognitive abilities and empathy. Journal of Experimental Psychology: General, 143(2), 694–709.

Colgan, S., Lanter, E., McComish, C., Watson, L., Crais, E., & Baranek, G. (2006). Analysis of social interaction gestures in infants with autism. Child Neuropsychology: A Journal on Normal and Abnormal Development in Childhood and Adolescence, 12(4–5), 307–319.

Condouris, K., Meyer, E., & Tager-Flusberg, H. (2003). The relationship between standardized measures of language and measures of spontaneous speech in children with autism. American Journal of Speech-Language Pathology, 12(3), 349–358.

Courchesne, V., Meilleur, A., Poulin-Lord, M., Dawson, M., & Soulieres, I. (2015). Autistic children at risk of being underestimated: School-based pilot study of a strength-informed assessment. Molecular Autism, 6, 12.

Crais, E. R., Watson, L. R., & Baranek, G. T. (2009). Use of gesture development in profiling children's prelinguistic communication skills. American Journal of Speech-Language Pathology, 18(1), 95–108.

Dawson, G., Toth, K., Abbott, R., Osterling, J., Munson, J., Estes, A., et al. (2004). Early social attention impairments in autism: Social orienting, joint attention, and attention to distress. Developmental Psychology, 40(2), 271–283.

Dimitrova, N., Özçaliskan, S., & Adamson, L. B. (2016). Parents' translations of child gesture facilitate word learning in children with autism, Down Syndrome and typical development. Journal of Autism and Developmental Disorders, 46(1), 221–231.

Elsabbagh, M., Volein, A., Holmboe, K., Tucker, L., Csibra, G., Baron-Cohen, S., et al. (2009). Visual orienting in the early broader autism phenotype: Disengagement and facilitation. Journal of Child Psychology and Psychiatry, 50(5), 637–642.

Farrant, B., & Zubrick, S. (2012). Early vocabulary development: The importance of joint attention and parent-child book reading. First Language, 32(3), 343–364.

Fein, D., Barton, M., Eigsti, I., Kelley, E., Naigles, L., Schultz, R. T., et al. (2013). Optimal outcome in individuals with a history of autism. Journal of Child Psychology and Psychiatry, 54(2), 195–205.

Fenson, L., Dale, P. S., Reznick, J. S., Bates, E., Thal, D. J., & Pethick, S. J. (1994). Variability in early communicative development. Monographs of the Society for Research in Child Development, 59(5), 1–173.

Franchini, M., Duku, E., Armstrong, V., Brian, J., Bryson, S., Garon, N., et al. (2018). Variability in verbal and nonverbal communication in infants at risk for autism spectrum disorder: Predictors and outcomes. Journal of Autism and Developmental Disorders, 48(10), 3417–3431.

Franchini, M., Glaser, B., Gentaz, E., Wood, H., Eliez, S., & Schaer, M. (2017). The effect of emotional intensity on responses to joint attention in preschoolers with an autism spectrum disorder. Research in Autism Spectrum Disorders, 35, 13–24.

Fusaroli, R., Weed, E., Fein, D., & Naigles, L. (2019). Hearing me hearing you: Reciprocal effects between child and parent language in autism and typical development. Cognition, 183, 1–18.

Goffman, L., & Leonard, J. (2000). Growth of language skills in preschool children with specific language impairment: Implications for assessment and intervention. American Journal of Speech Language Pathology, 9(2), 151–161.

Goldin-Meadow, S. (2009). How gesture promotes learning throughout childhood. Child Development Perspectives, 3(2), 106–111.

Goldstein, M., Schwade, J., Briesch, J., & Syal, S. (2010). Learning while babbling: Prelinguistic object-directed vocalizations indicate a readiness to learn. Infancy, 15(4), 362–391.

Haebig, E., McDuffie, A., & Ellis, W. S. (2013). Brief report: Parent verbal responsiveness and language development in toddlers on the autism spectrum. Journal of Autism and Developmental Disorders, 43(9), 2218–2227.

Hampton, L. H., & Kaiser, A. P. (2016). Intervention effects on spoken-language outcomes for children with autism: A systematic review and meta-analysis. Journal of Intellectual Disability Research, 60(5), 444–463.

Hayes, A. F. (2018). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. New York: The Guilford Press.

Heimann, M., Nordqvist, E., Strid, K., Connant Almrot, J., & Tjus, T. (2016). Children with autism respond differently to spontaneous, elicited and deferred imitation. Journal of Intellectual Disability Research, 60(5), 491–501.

Heymann, P., Northrup, J., West, K., Parladé, M., Leezenbaum, N., & Iverson, J. (2018). Coordination is key: Joint attention and vocalisation in infant siblings of children with Autism Spectrum Disorder. International Journal of Language & Communication Disorders, 53(5), 1007–1020.

Hobson, R. P. (2005). What puts the jointness into joint attention? In N. Eilan, C. Hoerl, T. McCormack, & J. Roessler (Eds.), Consciousness and self-consciousness. Joint attention: Communication and other minds: Issues in philosophy and psychology (pp. 185–204). Oxford: Clarendon Press/Oxford University Press.

Hoff, E., & Naigles, L. (2002). How children use input to acquire a lexicon. Child Development, 73(2), 418–433.

Hostetter, A. B., & Alibali, M. W. (2007). Raise your hand if you're spatial: Relations between verbal and spatial skills and gesture production. Gesture, 7(1), 73–95.

Hughes, K. R., Hogan, A. L., Roberts, J. E., & Klusek, J. (2019). Gesture frequency and function in infants with Fragile X Syndrome and infant siblings of children with autism spectrum disorder. Journal of Speech, Language, and Hearing Research, 62(7), 2386–2399.

Hurwitz, S., & Watson, L. (2016). Joint attention revisited: Finding strengths among children with autism. Autism: The International Journal of Research and Practice, 20(5), 538–550.

Iverson, J., & Goldin-Meadow, S. (2005). Gesture paves the way for language development. Psychological Science, 16(5), 367–371.

Iverson, J., Northrup, M., Leezenbaum, J., Parladé, B., Koterba, N., & West, M. (2018). Early gesture and vocabulary development in infant siblings of children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 48(1), 55–71.

Iverson, J. M., & Wozniak, R. H. (2016). Transitions to intentional and symbolic communication in typical development and in autism spectrum disorder. In D. Keen, H. Meadan, N. C. Brady, & J. W. Halle (Eds.), Prelinguistic and minimally verbal communicators on the autism spectrum (pp. 51–72). Singapore: Springer.

Johnson, P., & Fay, O. (1950). The Johnson-Neyman technique, its theory and application. Psychometrika, 15(4), 349–367.

Johnson, P., & Neyman, J. (1936). Tests of certain linear hypotheses and their applications to some educational problems. Statistical Research Memoirs, 1, 57–93.

Jones, E. J., Gliga, T., Bedford, R., Charman, T., & Johnson, M. H. (2014). Developmental pathways to autism: A review of prospective studies of infants at risk. Neuroscience & Biobehavioral Reviews, 39, 1–33.

Jones, R., Southerland, M., Hamo, A., Carberry, A., Bridges, C., Nay, C., et al. (2017). Increased eye contact during conversation compared to play in children with autism. Journal of Autism and Developmental Disorders, 47(3), 607–614.

Kasari, C., Brady, N., Lord, C., & Tager-Flusberg, H. (2013). Assessing the minimally verbal school-aged child with autism spectrum disorder. Autism Research, 6(6), 479–493.

Kasari, C., Paparella, T., Freeman, S., & Jahromi, L. (2008). Language outcome in autism: Randomized comparison of joint attention and play interventions. Journal of Consulting and Clinical Psychology, 76(1), 125–137.

Kelty-Stephen, E., Tek, S., Fein, D., & Naigles, L. (2014). Specific effects of joint attention on language development in children with autism spectrum disorders. In W. Orman & M. Valleau (Eds.), Proceedings of the 38th Annual Boston University Conference on Language Development (pp. 205–215). Somerville, MA: Cascadilla Press.

Kim, S. H., Junker, D., & Lord, C. (2014). Observation of Spontaneous Expressive Language (OSEL): A new measure for spontaneous and expressive language of children with autism spectrum disorders and other communication disorders. Journal of Autism and Developmental Disorders, 44(12), 3230–3244.

Kjelgaard, M. M., & Tager-Flusberg, H. (2001). An investigation of language impairment in autism: Implications for genetic subgroups. Language and Cognitive Processes, 16(2–3), 287–308.

Kover, S. T., Davidson, M. M., Sindberg, H. A., & Weismer, S. E. (2014). Use of the ADOS for assessing spontaneous expressive language in young children with ASD: A comparison of sampling contexts. Journal of Speech, Language, and Hearing Research, 57(6), 2221–2233.

Kover, S. T., McDuffie, A., Abbeduto, L., & Brown, W. T. (2012). Effects of sampling context on spontaneous expressive language in males with Fragile X Syndrome or Down Syndrome. Journal of Speech, Language, and Hearing Research, 55(4), 1022–1038.

Kuhn, L., Willoughby, M., Wilbourn, M., Vernon-Feagans, L., & Blair, C. (2014). Early communicative gestures prospectively predict language development and executive function in early childhood. Child Development, 85(5), 1898–1914.

Laake, L., & Bridgett, D. (2014). Happy babies, chatty toddlers: Infant positive affect facilitates early expressive, but not receptive language. Infant Behavior and Development, 37(1), 29–32.

LeBarton, E., Goldin-Meadow, S., & Raudenbush, S. (2015). Experimentally induced increases in early gesture lead to increases in spoken vocabulary. Journal of Cognition and Development, 16(2), 199–220.

Lee, K., & Schertz, H. (2019). Brief report: Analysis of the relationship between turn taking and joint attention for toddlers with autism. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-019-03979-1.

Leezenbaum, N. B., Campbell, S. B., Butler, D., & Iverson, J. M. (2014). Maternal verbal responses to communication of infants at low and heightened risk of autism. Autism: The International Journal of Research and Practice, 18(6), 694–703.

Lieven, E. (2017). Is language development dependent on early communicative development? In N. J. Enfield (Ed.), Dependencies in language (pp. 85–96). Berlin: Language Science Press.

Lord, C., Rutter, M., DiLavore, P. C., & Risi, S. (1999). Autism Diagnostic Observation Schedule-Generic (ADOS-G). Los Angeles, CA: Western Psychological Services.

Luyster, R. J., Kadlec, M. B., Carter, A., & Tager-Flusberg, H. (2008). Language assessment and development in toddlers with autism spectrum disorders. Journal of Autism and Developmental Disorders, 38(8), 1426–1438.

MacWhinney, B. (2008). Trends in language acquisition research: Enriching CHILDES for morphosyntactic analysis. In H. Behrens (Ed.), Corpora in language acquisition research: History, methods, perspectives (pp. 165–197). Amsterdam: John Benjamins Publishing.

Manwaring, S. S., Mead, D. L., Swineford, L., & Thurm, A. (2017). Modelling gesture use and early language development in autism spectrum disorder. International Journal of Language & Communication Disorders, 52(5), 637–651.

Manwaring, S. S., Stevens, A. L., Mowdood, A., & Lackey, M. (2018). A scoping review of deictic gesture use in toddlers with or at-risk for autism spectrum disorder. Autism & Developmental Language Impairments, 3, 1–27.

Markus, J., Mundy, P., Morales, M., Delgado, C., & Yale, M. (2000). Individual differences in infant skills as predictors of child-caregiver joint attention and language. Social Development, 9(3), 302–315.

Mastrogiuseppe, M., Capirci, O., Cuva, S., & Venuti, P. (2015). Gestural communication in children with autism spectrum disorders during mother-child interaction. Autism: The International Journal of Research and Practice, 19(4), 469–481.

McDaniel, J., Yoder, P., Woynaroski, T., & Watson, L. R. (2018). Predicting receptive-expressive vocabulary discrepancies in preschool children with autism spectrum disorder. Journal of Speech, Language, and Hearing Research, 61(6), 1426–1439.

Mitchell, S., Brian, J., Zwaigenbaum, L., Roberts, W., Szatmari, P., Smith, I., et al. (2006). Early language and communication development of infants later diagnosed with autism spectrum disorder. Journal of Developmental & Behavioral Pediatrics, 27(2), S69.

Morales, M., Mundy, P., Delgado, C., Yale, M., Messinger, D., Neal, R., et al. (2000). Responding to joint attention across the 6- through 24-month age period and early language acquisition. Journal of Applied Developmental Psychology, 21(3), 283–298.

Morett, L. M., O'Hearn, K., Luna, B., & Ghuman, A. S. (2016). Altered gesture and speech production in ASD detract from in-person communicative quality. Journal of Autism and Developmental Disorders, 46(3), 998–1012.

Mullen, E. M. (1995). Mullen scales of early learning (AGS ed.). Circle Pines, MN: American Guidance Service Inc.

Mundy, P. (2018). A review of joint attention and social-cognitive brain systems in typical development and autism spectrum disorder. European Journal of Neuroscience, 47(6), 497–514.

Mundy, P., Block, J., Delgado, C., Pomares, Y., Van Hecke, A., & Parladé, M. (2007). Individual differences and the development of joint attention in infancy. Child Development, 78(3), 938–954.

Mundy, P., Delgado, C., Block, J., Venezia, M., Hogan, A., & Seibert, J. (2003). A manual for the Abridged Early Social Communication Scales (ESCS). Coral Gables, FL: University of Miami.

Mundy, P., & Gomes, A. (1998). Individual differences in joint attention skill development in the second year. Infant Behavior and Development, 21(3), 469–482.

Mundy, P., & Newell, L. (2007). Attention, joint attention, and social cognition. Current Directions in Psychological Science, 16(5), 269–274.

Mundy, P., Sigman, M., & Kasari, C. (1990). A longitudinal study of joint attention and language development in autistic children. Journal of Autism and Developmental Disorders, 20(1), 115–128.

Mundy, P., Sigman, M., & Kasari, C. (1994). Joint attention, developmental level, and symptom presentation in autism. Development and Psychopathology, 6(3), 389–401.

Mundy, P., Sigman, M., Ungerer, J., & Sherman, T. (1986). Defining the social deficits of autism: The contribution of non-verbal communication measures. Journal of Child Psychology and Psychiatry, 27(5), 657–669.

Mundy, P., Sullivan, L., & Mastergeorge, A. (2009). A parallel and distributed-processing model of joint attention, social cognition and autism. Autism Research, 2(1), 2–21.

Naigles, L. (2013). Input and language development in children with autism. Seminars in Speech and Language, 34(4), 237–248.

Naigles, L., Cheng, M., Rattanasone, N. X., Tek, S., Khetrapal, N., Fein, D., et al. (2016). “You’re telling me!” Prevalence and predictors of pronoun reversals in children with ASD and typical development. Research in Autism Spectrum Disorders, 27, 11–20.

Naigles, L. R., & Chin, I. (2015). Language in children with autism spectrum disorders. In E. L. Bavin & L. R. Naigles (Eds.), The Cambridge handbook of child language (pp. 637–658). Cambridge, UK: Cambridge University Press.

Özçalışkan, S., Adamson, L. B., & Dimitrova, N. (2016). Early deictic but not other gestures predict later vocabulary in both typical development and autism. Autism: The International Journal of Research and Practice, 20(6), 754–763.

Özçalışkan, S., Adamson, L. B., Dimitrova, N., & Baumann, S. (2017). Early gesture provides a helping hand to spoken vocabulary development for children with autism, Down Syndrome, and typical development. Journal of Cognition and Development, 18(3), 325–337.

Özçalışkan, S., Adamson, L. B., Dimitrova, N., & Baumann, S. (2018). Do parents model gestures differently when children’s gestures differ? Journal of Autism and Developmental Disorders, 48(5), 1492–1507.

Özçalışkan, S., & Goldin-Meadow, S. (2005). Gesture is at the cutting edge of early language development. Cognition, 96(3), B101–B113.

Ozonoff, S., Iosif, A. M., Baguio, F., Cook, I. C., Hill, M. M., Hutman, T., et al. (2010). A prospective study of the emergence of early behavioral signs of autism. Journal of the American Academy of Child & Adolescent Psychiatry, 49(3), 256–266.

Parladé, M. V., & Iverson, J. M. (2015). The development of coordinated communication in infants at heightened risk for autism spectrum disorder. Journal of Autism and Developmental Disorders, 45(7), 2218–2234.

Paul, R., Campbell, D., Gilbert, K., & Tsiouri, I. (2013). Comparing spoken language treatments for minimally verbal preschoolers with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43(2), 418–431.

Paul, R., Chawarska, K., Cicchetti, D., & Volkmar, F. (2008). Language outcomes in toddlers with ASD: A 2 year follow-up. Autism Research, 1, 97–107.

Poon, K. K., Watson, L. R., Baranek, G. T., & Poe, M. D. (2012). To what extent do joint attention, imitation, and object play behaviors in infancy predict later communication and intellectual functioning in ASD? Journal of Autism and Developmental Disorders, 42(6), 1064–1074.

Potrzeba, E., Fein, D., & Naigles, L. (2015). Investigating the shape bias in typically developing children and children with autism spectrum disorders. Frontiers in Psychology, 6, 446.

Ramos-Cabo, S., Vulchanov, V., & Vulchanova, M. (2019). Gesture and language trajectories in early development: An overview from the autism spectrum disorder perspective. Frontiers in Psychology, 10, 1–9.

Rogosa, D. (1980). Comparing nonparallel regression lines. Psychological Bulletin, 88(2), 307–321.

Rollins, P. R. (2018). Setting the stage: Creating a social pragmatic environment for toddlers with ASD and their caregivers. Revista de Logopedia, Foniatría y Audiología, 38, 14–23.

Rollins, P., & Snow, C. (1998). Shared attention and grammatical development in typical children and children with autism. Journal of Child Language, 25(3), 653–673.

Romero, V., Fitzpatrick, P., Roulier, S., Duncan, A., Richardson, M. J., & Schmidt, R. C. (2018). Evidence of embodied social competence during conversation in high functioning children with autism spectrum disorder. PLoS ONE, 13(3), e0193906.

Rowe, M., & Goldin-Meadow, S. (2009). Differences in early gesture explain SES disparities in child vocabulary size at school entry. Science, 323(5916), 951–953.

Sameroff, A. (2010). A unified theory of development: A dialectic integration of nature and nurture. Child Development, 81(1), 6–22.

Sassenberg, U., Foth, M., Wartenburger, I., & Van Der Meer, E. (2011). Show your hands – Are you really clever? Reasoning, gesture production, and intelligence. Linguistics, 49(1), 105–134.

Schmalenbach, S., Billino, J., Kircher, T., Van Kemenade, B., & Straube, B. (2017). Links between gestures and multisensory processing: Individual differences suggest a compensation mechanism. Frontiers in Psychology, 8, 1828.

Schreibman, L., Dawson, G., Stahmer, A. C., Landa, R., Rogers, S. J., McGee, G. G., et al. (2015). Naturalistic developmental behavioral interventions: Empirically validated treatments for autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 2411–2428.

Shriberg, L. D., Kwiatkowski, J., & Hoffman, K. (1984). A procedure for phonetic transcription consensus. Journal of Speech and Hearing Research, 27, 456–465.

Siller, M., Hutman, T., & Sigman, M. (2013). A parent-mediated intervention to increase responsive parental behaviors and child communication in children with ASD: A randomized clinical trial. Journal of Autism and Developmental Disorders, 43(3), 540–555.

Siller, M., & Sigman, M. (2008). Modeling longitudinal change in the language abilities of children with autism: Parent behaviors and child characteristics as predictors of change. Developmental Psychology, 44(6), 1691–1704.

So, W., Wong, M. K., Lui, M., & Yip, V. (2015). The development of co-speech gesture and its semantic integration with speech in 6- to 12-year-old children with autism spectrum disorders. Autism: The International Journal of Research and Practice, 19(8), 956–968.

Sowden, H., Clegg, J., & Perkins, M. (2013). The development of co-speech gesture in the communication of children with autism spectrum disorders. Clinical Linguistics & Phonetics, 27(12), 922–939.

Sperry, D., Miller, P. J., Dyson, A., Fisher, C., Haight, W., & Koven, M. (2014). Listening to all of the words: Reassessing the verbal environments of young working-class and poor children. ProQuest Dissertations and Theses.

Stone, W., & Yoder, P. (2001). Predicting spoken language level in children with autism spectrum disorders. Autism, 5(4), 341–361.

Szatmari, P., Chawarska, K., Dawson, G., Georgiades, S., Landa, R., Lord, C., et al. (2016). Prospective longitudinal studies of infant siblings of children with autism: Lessons learned and future directions. Journal of the American Academy of Child & Adolescent Psychiatry, 55(3), 179–187.

Tager-Flusberg, H. (2004). Strategies for conducting research on language in autism. Journal of Autism and Developmental Disorders, 34(1), 75–80.

Tager-Flusberg, H., & Kasari, C. (2013). Minimally verbal school-aged children with autism spectrum disorder: The neglected end of the spectrum. Autism Research, 6(6), 468–478.