Abstract

We conducted a meta-analysis of 31 studies, spanning 30 years, utilizing the WCST in participants with autism. We calculated Cohen’s d effect sizes for four measures of performance: sets completed, perseveration, failure-to-maintain-set, and non-perseverative errors. The average weighted effect size ranged from 0.30 to 0.74 for each measure, all statistically greater than 0. No evidence was found for reduced impairment when WCST is administered by computer. Age and PIQ predicted perseverative error rates, while VIQ predicted non-perseverative error rates, and both perseverative and non-perseverative error rates in turn predicted number of sets completed. No correlates of failure-to-maintain set errors were found; further research is warranted on this aspect of WCST performance in autism.

Introduction

This study aims to explain the variability in performance, as well as quantify the magnitude of impairment, for persons with autism on a widely used test of executive function, the Wisconsin Card Sort Test (WCST; Grant and Berg 1948; Milner 1963). Executive function is a broad conceptualization applied to frontal lobe functions and includes skills such as inhibitory control, working memory, and cognitive flexibility (Miyake et al. 2000). Several reviews of executive function in autism (Hill 2004; Geurts et al. 2009; Russo et al. 2007) highlight impaired performance on the WCST for participants with autism; however, conflicting reports of the nature and magnitude of impairment persist. We sought to examine factors underlying these inconsistencies, at the level of both participant variables and task variables, in order to elucidate the nature of executive dysfunction in autism.

The WCST is a well-known and widely used neuropsychological test that broadly assesses executive function. The WCST consists of a pack of four stimulus cards and 64 (or 128) response cards (Grant and Berg 1948; Heaton et al. 1993). The stimulus cards differ in color, form, and number, while the response cards combine these dimensions such that a response card can match different stimulus cards on different dimensions (see Fig. 1). The four stimulus cards are placed in front of the participant as targets, and the participant is presented with the response cards one at a time and told to sort them according to a rule that the participant must figure out on the basis of feedback. The participant then sorts the cards one at a time into the four piles and for each card is told whether the response was “correct” or “incorrect”. Unbeknownst to the participant, once the participant achieves 10 consecutive correct responses, the examiner shifts the sorting strategy. For example, a participant might stumble onto the correct sorting strategy of color after a few trials, but then after 10 correctly sorted cards, the participant, unaware of the change, sorts again according to color but is told that the answer was incorrect, and the participant must figure out the new rule (form). This continues, following the prescribed order of color-form-number (repeat), until the stack of cards is complete (Footnote 1). A number of measures of performance may be collected, reflecting performance efficiency; these are described at length in the administration manual (Heaton et al. 1993). The most frequently used are the number of sets completed, a set defined as a series of 10 consecutive correct responses triggering a rule switch, and various error measurements.
Errors are classified as perseverative when the participant continues with the previously correct rule despite negative feedback, or as failure to maintain set (FMS), when the participant makes several correct responses, then commits an error despite positive feedback on the previous trials. Perseverative errors are thought to reflect difficulties in cognitive flexibility (Hughes et al. 1994; Ozonoff et al. 2004; Ozonoff and Jensen 1999; Ozonoff and Strayer 2001; Ozonoff et al. 1994; Russell et al. 1999), whereas FMS errors are thought to reflect difficulties in sustained attention (Chelune and Baer 1986; Sullivan et al. 1993). Other errors may be recorded as well, but as the nature of the task requires a certain amount of trial and error in discovering the rule, these other errors, often referred to as random or non-perseverative errors, may simply reflect efficiency in rule discovery, which is also captured by the measure of sets completed. As executive function is a complex complement of skills, it is not surprising that the WCST is also criticized for the complexity of underlying skills, and inconsistencies in the factor structure are reported depending on the number of trials and stoppage rule used (Greve et al. 2005). While the WCST is purported to be sensitive to frontal lobe impairment, researchers have also criticized the test as lacking specificity in patients with traumatic brain injury (Shallice and Burgess 1991; Gioia and Isquith 2004), although despite these criticisms it is extensively used.

WCST Performance in Autism

Executive function deficits in autism have been reviewed elsewhere in great depth, with researchers converging on the conclusion that the most consistent finding is impaired performance on the WCST (Geurts et al. 2009; Russo et al. 2007; Van Eylen et al. 2011). The WCST has been criticized for both complexity of task demands and poor ecological validity (Shallice and Burgess 1991; Gioia and Isquith 2004), thus understanding the nature of impairments on this task will advance our understanding of autism. While the autism deficits in WCST performance are primarily described as cognitive inflexibility or set-shifting in nature, these same reviews highlight the inconsistencies with respect to other tasks aimed at measuring cognitive flexibility, standardized or experimental. Despite being the most consistent finding, a variety of conflicting results are reported for participants with autism on the WCST. Some studies found impairment in their participants with autism (e.g. Pascualvaca et al. 1998; Tsuchiya et al. 2005) while others did not (e.g. Liss et al. 2001; Minshew et al. 1992). Moreover, some studies found impairment in specific measures of task performance such as the number of sets (e.g. Rumsey and Hamburger 1988; Shu et al. 2001; Sumiyoshi et al. 2011), while other studies did not (e.g. Goldstein et al. 2001; Kaland et al. 2008; Liss et al. 2001). The greatest consistency is that most studies reported an impairment characterized by increased perseveration (e.g. Ciesielski and Harris 1997; Greibling et al. 2010; Kilinçaslan et al. 2010). However, two studies conducted by Kaland and colleagues (2008) and Minshew and colleagues (1992) did not find increased perseveration. Inconsistencies were also found in reports of FMS (Kaland et al. 2008; Pascualvaca et al. 1998; Lopez et al. 2005; Bennetto et al. 1996; Rumsey 1985) and in non-perseverative errors (e.g. Shu et al. 2001; Pascualvaca et al. 1998; Rumsey 1985; Tsuchiya et al. 2008; Kaland et al. 2008; Greibling et al. 2010; Minshew et al. 1992; Prior and Hoffmann 1990). We designed this meta-analysis to examine the factors across studies that might help explain these inconsistencies. These factors fall broadly into two categories: participant variables and task variables.

Discrepancies in previous findings are potentially due to cognitive or age-related factors. In such cases, significant impairments in WCST performance are hard to interpret as they may simply reflect the differences between groups in developmental level, age, or IQ. For example, developmental level and age go hand in hand with perseveration, as younger and less developmentally mature children often cannot switch rules and become stuck using the original rule in several sorting tasks (e.g. Zelazo et al. 2003). Participants with autism, who may have uneven IQ profiles, may appear impaired if compared to a more developmentally advanced comparison group with a more even IQ profile. For example, a researcher may aim to match participants one-to-one on age and full-scale IQ, but participants with autism have high rates of verbal:non-verbal IQ discrepancies, which vary by chronological age (Ankenman et al. 2014), and if the researcher attempts to match on either verbal or non-verbal IQ, the other measure is often significantly different. Another participant variable is the change in ASD diagnostic criteria over the past three decades. That is, earlier studies with participants diagnosed under DSM-III or earlier could represent samples that differ from those diagnosed under DSM-IV, which broadened ASD to include more higher-functioning children with milder presentations (e.g. Asperger’s), although symptom severity was rarely quantified in the literature. Furthermore, improvements in early diagnosis, intervention, and inclusive education practices could also contribute to group-wise differential performance over time.

A key task variable is the use of computerized versions of the task, which hold considerable appeal over a face-to-face manual testing procedure when testing participants with autism. Researchers using the computer version have claimed several advantages over the manual version (Shu et al. 2001). These advantages include improvements in reliability and more efficient use of resources than the manual version (Tien et al. 1996). Furthermore, children with autism may perform better on the computerized format due to the social demands of face-to-face manual administration and the disadvantage thus created for participants with autism (Ozonoff 1995; Schopler and Mesibov 1995; Kenworthy et al. 2008). However, the equivalence of the two formats is questionable. Feldstein and colleagues (1999) conducted a meta-analysis comparing the manual version with four different computer versions in typical populations. While researchers operate on the assumption that the computerized versions are equivalent to the manual version, Feldstein and colleagues claimed that this assumption was false. The versions differed significantly from the established norms set by Heaton and colleagues (1993) for the original WCST in central tendency, dispersion of WCST variables, and shape of distribution of WCST variables. Furthermore, the four computer versions (mouse click, mouse auto, keyboard, and touch screen) were not equivalent when compared to each other. For example, the mouse click version differed from the norms set by Heaton and colleagues (1993) in the variability and shape of distribution of the FMS measure, and the keyboard version was found to be different in all assessment measures (central tendency, variability, and shape of distribution) when compared to the original manual version (Feldstein et al. 1999).

Many studies of WCST performance in autism used a computerized version of the task (Geurts et al. 2004; Kaland et al. 2008; Kilinçaslan et al. 2010; Nyden et al. 1999; Robinson et al. 2009; Shu et al. 2001; Tsuchiya et al. 2005; Winsler et al. 2007) and based their conclusions on the norms and assumptions of the original manual version. The deficits that were found in these studies are valid, as performance was considered relative to a control group and not published norms; however, the underlying assumptions about what the computerized version measures remain unverified.

Current Study

The goal of this meta-analysis is to explain the variability in the reported literature and quantify the magnitude of impairment across measures of WCST performance in autism. In our review of the literature, we have identified three key issues to examine. First, we examined the inconsistencies in the literature with respect to different metrics of performance and conclusions of impairment. Second, we examined the variability in participant populations and the potential confounds these create in interpreting impaired performance. Third, we examined the inconsistencies in task administration, specifically between the original manual version and newer computerized versions. We compiled data across studies using effect size measures to examine these three issues. Where possible, we obtained raw data for more in-depth analysis.

Methods

Sample of Studies

A literature search was conducted using PubMed and the search terms “Autism” AND “executive function” OR “card sort” for articles published prior to February 2013, resulting in 267 articles. Of these, 186 studies were excluded, as they were not experiments containing the WCST. A further 33 studies were excluded, as they did not include at least one participant group diagnosed with autism spectrum disorder. Eighteen studies were excluded, as they were literature reviews and did not provide any data of their own. Four studies were excluded, as they did not provide the necessary information to use for this meta-analysis (i.e. raw data to show improvement on the WCST, only reporting reaction time, reporting the number of trials instead of the number of sets completed). Three studies (Prior and Hoffmann 1990; Teunisse et al. 2012; Williams et al. 2013) were excluded on the basis that their modified version of the WCST used six cards instead of 10 for each rule category.

Sixteen studies from this literature search were found to report data from children or adults with autism spectrum disorder on one or more of the variables of the WCST. We identified a further nine studies from two review articles (Hill 2004; Edgin and Pennington 2005), and six more studies were identified from the reference lists of other included studies. We then replicated our search using additional search engines (Google Scholar and PsycINFO); no additional studies were identified. Authors of studies published after 1990 were contacted in an attempt to identify “file drawer” data and to request raw data. The final number of studies included was 31, including five raw data sets.

Dependent Measures

We examined the articles for key components. These included a control group (typically developing, learning or intellectual disability, or language impaired) and ASD group. We also examined matching criteria such as age, gender, IQ, education level, mental age, socioeconomic status, handedness, and parental education. We recorded year of publication and diagnostic criteria used for participant inclusion. Finally, the four outcome variables of interest in the WCST were the number of sets completed, perseverative errors and responses (‘perseveration’), non-perseverative errors, and FMS. Both authors reviewed all included studies to ensure coding and data entry accuracy.

Procedure

Once we had a final list of articles, we recorded and organized the data. We began by looking at the population in each study. We recorded the characteristics of the experimental and control group(s) focusing on the composition of the control group (e.g. typically developing, learning or intellectually disabled, or language-impaired individuals) as well as the diagnostic criteria and label (ASD subtype), the functioning level of the autism group (high or low functioning), IQ measures, and matching criteria. We also recorded any screening measures or comorbidities described.

Next, we recorded the outcomes on the four test measures of the WCST: number of sets completed, perseveration, non-perseverative errors, and FMS. These measures of performance were chosen as they are the most frequently reported outcome measures of the WCST. Many studies reported only one or two of the WCST measures, and in different combinations. For those studies that reported both “perseverative errors” and “perseverative responses” (4/31 studies), we included the “perseverative errors” data; however, as the majority of studies reported only one of the potential measures of perseveration, we will simply refer to this measure as “perseveration”. Finally, we recorded whether the authors of each study had deemed the autism group “impaired” or not, and calculated the effect size for each WCST measure within each study. In the case of a study in which participants with autism were compared to more than one relevant comparison group, both effect sizes are reported; however, we were primarily interested in comparisons to typical development, or mental-age matched developmental or learning disabled groups, not other clinical groups. The effect size measure used was Cohen’s d, which is computed by dividing the difference between group means by a pooled standard deviation weighted by sample size. The formulae for calculating d, the standard error of d, and the weighting of d can be found in Lipsey and Wilson (2001) and Card (2011). We used the following criteria to interpret Cohen’s d: no effect (0–0.19), small effect (0.2–0.49), medium effect (0.5–0.79), and large effect (0.8+).
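The effect size computation described above can be sketched as follows. This is a minimal illustration, assuming the standard pooled-standard-deviation form of Cohen’s d and the usual inverse-variance weight; the function names and the example group statistics are hypothetical and are not taken from any included study.

```python
import math

def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (d, se_d, weight) for two independent groups.

    Assumption: group A is the autism group and the measure is scored so
    that a positive d indicates greater impairment in group A.
    """
    # Pooled variance, weighting each group's variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    d = (mean_a - mean_b) / math.sqrt(pooled_var)
    # Standard error of d and the inverse-variance weight used when averaging.
    se_d = math.sqrt((n_a + n_b) / (n_a * n_b) + d ** 2 / (2 * (n_a + n_b)))
    weight = 1.0 / se_d ** 2
    return d, se_d, weight

def interpret(d):
    """Map the magnitude of d onto the interpretation bands given in the text."""
    size = abs(d)
    if size < 0.2:
        return "no effect"
    if size < 0.5:
        return "small effect"
    if size < 0.8:
        return "medium effect"
    return "large effect"

# Hypothetical perseverative-error summary statistics for two groups of 20.
d, se_d, weight = cohens_d(30.0, 10.0, 20, 24.0, 10.0, 20)
print(round(d, 2), round(se_d, 3), interpret(d))
```

For measures where a lower score indicates impairment (such as number of sets completed), the sign of the mean difference would need to be reversed before pooling so that all effect sizes point in the same direction.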

Results

Broader Meta-analysis

We separately analyzed the four key outcome measures of the WCST: number of sets, perseveration, FMS, and non-perseverative errors. These data are summarized in Tables 1–5.

First we calculated the mean raw and weighted effect sizes for each outcome measure. These are presented in Table 1, along with the percent of studies for which an autism impairment was concluded. While authors were more likely than not to conclude that participants with autism were impaired on the number of sets completed and perseverative errors measures, and more likely than not to conclude no impairment on FMS and non-perseverative errors measures, the effect sizes support autism impairment on all measures.
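As an illustration of how a weighted mean effect size and its test against 0 can be obtained, the sketch below uses fixed-effect inverse-variance weighting. The text does not state whether a fixed- or random-effects model was used, so that choice is an assumption here, and the input values are invented for the example.

```python
import math

def weighted_mean_effect(effects):
    """Fixed-effect inverse-variance mean of (d, se_d) pairs.

    Returns (mean_d, se_mean, z, ci95), where ci95 is a (lower, upper) tuple.
    """
    weights = [1.0 / se ** 2 for _, se in effects]
    total_w = sum(weights)
    mean_d = sum(w * d for w, (d, _) in zip(weights, effects)) / total_w
    se_mean = math.sqrt(1.0 / total_w)
    z = mean_d / se_mean  # z test of the null hypothesis that the mean d is 0
    ci95 = (mean_d - 1.96 * se_mean, mean_d + 1.96 * se_mean)
    return mean_d, se_mean, z, ci95

# Two hypothetical studies: (d, standard error of d).
mean_d, se_mean, z, ci95 = weighted_mean_effect([(0.5, 0.25), (1.0, 0.5)])
print(round(mean_d, 2), round(z, 2), [round(x, 2) for x in ci95])
```

A mean effect size is "statistically greater than 0" in this sense when the lower bound of the confidence interval lies above 0.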

In our next stage of analyses, we examined factors related to the magnitude of the effect size. First, we conducted exploratory analyses to examine whether any of the demographic measures could help explain the differences between studies that did and did not conclude impairment in their autism sample. A Pearson correlation was run between the mean age of each autism sample and the effect size for each of the four measures of WCST performance; none reached statistical significance (all ps > 0.14). These correlations should be interpreted with caution, as there was variability in the age ranges represented in different studies, thus mean age as reported may not adequately represent the samples; sixteen of the studies included very wide age ranges spanning childhood, adolescence, and adulthood. The published data make it difficult to determine if there is any systematic effect size variance due to sample age.

In terms of functioning level, most studies described their sample as a high-functioning and/or Asperger’s syndrome group, with explicitly restricted or lower bounds on IQ as inclusion criteria, and only one identified the sample as lower functioning. Twenty-four studies matched to a typically developing group on the basis of chronological age (CA) and IQ, one matched on mental age to typically developing children who differed in CA and IQ, and six matched to a clinical comparison group (including a group with dyslexia, a group with developmental language disorder, and four heterogeneous clinical samples). The aggregate effect sizes are shown in Table 6. A one-way ANOVA was used to test the association between comparison group and each of the outcome measures. Only perseveration was found to be significantly associated, F(2,28) = 10.16, p < 0.001; the magnitude of impairment was attenuated in high-functioning autism relative to CA and IQ matched typical comparison groups, and may be exaggerated in lower functioning individuals matched on mental age to typically developing individuals or heterogeneous clinical groups.

In addition to examining comparison group make-up, we further coded matching “quality” as follows: Group 0 consisted of studies that did not provide sufficient details about participant IQs, including those that did not provide sufficiently detailed data to reliably code matching quality and those that did not test typically developing comparison participant IQs; Group 1 consisted of studies with less than ideal matching, including participant groups that matched on CA but did not match on IQ, or participant mean IQs matched but reported ranges did not match; Group 2 consisted of studies with the best-matched comparison groups, including studies in which participants were matched one-to-one. Twenty-seven percent of studies fell into Group 0, 19 % in Group 1, and 54 % in Group 2. Quality rating was not associated with date of publication. The aggregate effect sizes are also shown in Table 6. One-way ANOVAs were used to test the association between matching quality code and the outcome measures; no significant associations were found. If we restrict to those in Group 2, Cohen’s d estimates are similar to the weighted full-set estimates, and remain significantly above 0 for number of sets, perseveration, and non-perseverative errors. For FMS, with only 6 studies coded as Group 2, the estimate is heavily influenced by the single study in which participants with autism were found to have significantly fewer FMS errors than the (heterogeneous clinical) comparison group, yielding a moderate negative Cohen’s d value. As most studies had restricted participation on the basis of IQ, the range of reported mean IQs was too limited to enter into a correlational analysis (see online Supplemental Table).

Next, we analyzed the year of publication to test for changing diagnostic criteria leading to different sample compositions over time by correlating year of publication with effect size. We found no significant correlations (all ps > 0.29). The transition from DSM-III to DSM-IV criteria occurred in publications at the turn of the century, with minimal overlap of criteria in publications from 1999 to 2001. Two studies used ICD-10 criteria, and nearly all studies from English-speaking countries published since 1999 also used ADI. Fewer reported use of ADOS than ADI. We divided studies into three groups: those using DSM-III-R or earlier criteria, those using DSM-IV or ICD-10 criteria without ADIs, and those with ADIs. These data are presented in Table 6; perseveration effect sizes were significantly affected by diagnostic criteria, F(2,28) = 4.32, p = 0.023. It appears that a distinction can be made from DSM-III to DSM-IV, supporting the hypothesis that participants diagnosed under older criteria may have been more severely affected or lower-functioning as a group than those diagnosed under later criteria.

Next, we examined the mode of administration, computer or manual. Independent samples t-tests were conducted to test whether the effect size measures were affected by the version of WCST used (computer vs. manual). There were no significant differences (largest t = 0.94). These results indicate that the testing modality did not significantly affect the level of impairment observed across studies using the WCST.

Subsample

We were able to obtain raw data sets from five of the 31 studies, which included 191 participants with ASD tested around the world. While this represents a small fraction of the complete corpus, we combined these data sets in order to examine individual differences in performance. The participant variables available to examine were chronological age (N = 191), full-scale IQ (N = 164), verbal IQ (N = 84), performance IQ (N = 84), and ADI scores (N = 83). A verbal IQ-performance IQ discrepancy score was also calculated. First, we performed bivariate Pearson correlations with the participant variables and the four WCST outcome measures. These are presented in Table 7. Of note, chronological age was negatively correlated with perseveration, but also negatively correlated with ADI scores, which were positively correlated with perseveration. As neither age nor ADI was significantly correlated with any other WCST metrics, we used multiple regression to tease apart this relationship. All regressions were performed with listwise exclusion for missing data. Age and ADI were entered simultaneously in the model with perseveration as the dependent variable. The model predicted 20 % of the variance; however, this was predominantly due to the strong relationship with age (β = −0.358, p = 0.001), as ADI was not a significant independent contributor (β = 0.159, p = 0.064). The model was robust against tests of collinearity (tolerance = 0.94, VIF = 1.06). Partial correlations also confirm that the association between perseveration and age with ADI partialled is robust, r(79) = −0.36, p = 0.001, while the association between perseveration and ADI with age partialled is less robust, r(79) = 0.21, p = 0.064. Perseveration was also negatively associated with both full-scale and performance IQ (neither of which was correlated with age or ADI scores, see Table 7).
Perseveration thus appears to be driven independently by participant age and performance IQ, with both younger and lower IQ participants with autism prone to increased perseveration.
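The partial correlations and collinearity statistics reported above follow standard formulas, sketched here for illustration. The first-order partial correlation is computed from the three bivariate correlations, and the variance inflation factor (VIF) is the reciprocal of tolerance. The function names are hypothetical, and the bivariate correlations passed in the example are placeholders rather than values from Table 7.

```python
import math

def partial_corr(r_xy, r_xz, r_yz):
    """Correlation between x and y with z partialled out of both."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

def vif_from_tolerance(tolerance):
    """Variance inflation factor, defined as 1 / tolerance."""
    return 1.0 / tolerance

# Placeholder correlations: x = perseveration, y = age, z = ADI score.
print(round(partial_corr(-0.40, 0.25, -0.25), 2))

# The tolerance values reported in the text reproduce the reported VIFs.
print(round(vif_from_tolerance(0.94), 2))  # 1.06
print(round(vif_from_tolerance(0.90), 2))  # 1.11
```

With two predictors, tolerance is one minus the squared correlation between them, which is why a single tolerance value applies to both predictors in the two-predictor models.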

In contrast, IQ measures alone, but not the verbal-performance discrepancy, appear to be related to other metrics of WCST performance, specifically the number of sets and non-perseverative errors. Number of sets was correlated with both perseverative and non-perseverative error types and with both FSIQ and PIQ measures, but not VIQ. Non-perseverative errors were correlated with IQ measures, but not with other error types. Verbal and Performance IQ were entered simultaneously in a linear regression model with non-perseverative errors as the dependent measure. The model predicted 21 % of the variance, and VIQ (β = −0.33, p = 0.006) not PIQ (β = −0.232, p = 0.051) drove this relationship. The model is robust against tests of collinearity (tolerance = 0.90, VIF = 1.11). Partial correlations also confirm that the association between non-perseverative errors and VIQ with PIQ partialled is robust, r(64) = −0.33, p = 0.006, while the association between non-perseverative errors and PIQ with VIQ partialled is less robust, r(64) = −0.16, p = 0.051.

Our final linear multiple regression model tested number of sets as the dependent measure with PIQ and both perseverative and non-perseverative errors entered simultaneously as predictors. The model predicted 28 % of variance. Performance IQ (β = 0.003, p = 0.98) did not significantly predict number of sets above and beyond the perseverative and non-perseverative errors committed by participants. The model was robust against tests of collinearity (tolerance range 0.84–0.94, VIF range 1.06–1.18). As perseverative and non-perseverative errors were not correlated with each other, it thus appears that number of sets is independently predicted by both perseverative (β = −0.34, p = 0.003) and non-perseverative errors (β = −0.36, p = 0.002). Failure to maintain set was not significantly correlated with any measures.

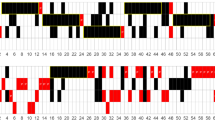

Publication Bias

We addressed the issue of publication bias with two approaches. First, we examined the funnel plots of our four outcome measures. Funnel plots show the association between the sample size and the effect size and are expected to form a “funnel” shape if there is no publication bias, with small-sample studies spread equally to either side of the large-sample studies. Second, we calculated the tolerance for future null (Rosenthal 1984, p. 109), which estimates how many unpublished studies with null findings would have to exist in order to bring the meta-analysis results below the significance threshold. The funnel plots are presented in Fig. 2, showing the association between sample size and Cohen’s d; these plots suggest the potential for publication bias on the number of sets variable only, as studies with large samples tended to find smaller effect sizes than studies with small sample sizes, whereas the funnel shape for the other outcome measures suggests the literature is representative. The tolerance for future null values were 350, 428, 49, and 89 for number of sets, perseverative errors, FMS, and non-perseverative errors, respectively. Thus, the large number of unpublished null studies that would have to exist offsets the potential file-drawer problem for the number of sets measure.
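The tolerance for future null can be illustrated with Rosenthal’s fail-safe N, sketched below under the assumption of a one-tailed criterion of p = 0.05 (critical z = 1.645). The function name and the per-study z scores are hypothetical; the values reported in the text were computed from the actual study data, which are not reproduced here.

```python
def failsafe_n(z_scores, z_crit=1.645):
    """Rosenthal's fail-safe N.

    Number of additional studies averaging z = 0 needed to pull the
    combined (Stouffer) z below z_crit: (sum of z)^2 / z_crit^2 - k.
    """
    k = len(z_scores)
    return (sum(z_scores) ** 2) / (z_crit ** 2) - k

# Ten hypothetical studies, each with z = 2.0.
print(round(failsafe_n([2.0] * 10)))
```

A result that is large relative to the number of included studies suggests that an implausible number of unpublished null findings would be required to overturn the pooled result.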

Discussion

We conducted a meta-analysis to examine factors underlying WCST performance in autism. We were able to compile data from 31 published studies spanning nearly 30 years. We found that of the four most frequently reported measures of performance, only two were reported by most studies: number of sets completed and perseveration. A minority of studies reported the measures of non-perseverative errors (11/31) and FMS (9/31); however, the average Cohen’s d effect sizes were all significantly greater than 0, and thus a conclusion of impaired performance is justified for all four measures.

The WCST is a complex task measuring a multi-faceted cognitive skill. The multitude of potential outcome measures contributes to that complex picture. However, the vast majority of published studies have only reported on two facets of performance: number of sets completed and perseveration. The number of sets completed measure gives a global performance score; there is compelling evidence that performance is impaired in autism, replicated across a number of participant samples, and not explained by any reported methodological variability between studies. The analysis of five raw data sets revealed that the number of sets is a function of both perseverative and non-perseverative error types, independent of one another; perseverative errors are predicted by PIQ and chronological age, while non-perseverative errors are predicted primarily by VIQ. A trend was also noted for severity of symptoms to influence perseveration, and indirectly number of sets completed. Thus, older children and adults may be less symptomatic and commit fewer perseverative errors, as well as participants with higher PIQs, whereas participants with higher VIQs may commit fewer non-perseverative errors. Number of sets, then, becomes a composite of performance, based on both types of error.

Perseveration is the most widely studied facet of WCST impairment in autism, with compelling evidence that is replicated across a number of participant samples and is not explained by any reported methodological variability between studies. Perseveration on the WCST is believed to reflect the specific skill of cognitive flexibility (Hughes et al. 1994; Ozonoff et al. 2004; Ozonoff and Jensen 1999; Ozonoff and Strayer 2001; Ozonoff et al. 1994; Russell et al. 1999). The theoretical impetus to examine perseverative errors over non-perseverative errors may be contributing to a self-fulfilling bias in the literature. The lesser-reported facets of performance, FMS and non-perseverative errors, were reported infrequently in the literature and are absent from the largest studies, leading many to conclude that WCST performance in autism reflects perseveration alone. Our analysis of five combined raw data sets suggests that perseveration may be a function of participant performance IQ, chronological age, and to a lesser extent, symptom severity. This is an interesting finding given that we also report a decline in the mean effect size for perseveration as a function of changing diagnostic criteria from the third to fourth editions of the DSM, supporting the notion that DSM-III criteria for infantile autism as well as PDD-NOS were applied to a generally lower-functioning and more severely impaired population as a whole, relative to the DSM-IV classifications that brought Asperger’s and more higher-functioning and mildly impaired individuals into the autism spectrum. We should note that neither the ADI nor ADOS is intended to provide a metric of severity for the purposes of such statistical analysis. Calibrated scores would be more appropriate; however, we could not compute calibrated scores for this sample as we did not have access to the child’s expressive language level at assessment (Hus and Lord 2013).

The WCST is a quintessential but also complex multi-factorial executive functioning task, requiring the individual to effectively use feedback, monitor current and past context, and flexibly adapt to changing events. As such, it theoretically describes the behavior required on a daily basis for all social and many non-social activities, yet it has been criticized for poor ecological validity in practice (Shallice and Burgess 1991; Gioia and Isquith 2004). Understanding the mechanisms by which this executive functioning exercise falls apart for individuals with autism remains a critical goal for intervention at all ages. Perseveration appears to be the larger challenge, but non-perseverative errors, which were a function of both verbal and non-verbal IQ, and FMS, which remains unexplained, also contribute. Further investigation of these facets of executive function may help resolve what Geurts and colleagues (2009) referred to as the “paradox” of cognitive flexibility in autism.

FMS errors are considered to reflect the specific skill of attention within the task (Chelune and Baer 1986; Sullivan et al. 1993), as a participant committing such an error was using the correct rule and receiving positive feedback but suddenly lost track. On the basis of the published literature, in which only 27 % of studies reported impaired performance (and one reported significantly better performance than the heterogeneous clinical comparison group), there was an assumption that this was not an area of difficulty in autism. While the FMS impairment is not as pronounced as the perseveration impairment, we found that the average Cohen’s d effect size for FMS was 0.30, nearly one third of a standard deviation. When the analysis is restricted to only the best-matched studies, the estimate is heavily influenced by the single study reporting enhanced performance for the ASD group relative to a clinical comparison group matched on CA and IQ (Ozonoff et al. 1991); removing it would change the estimate from d = 0.38 [95 % CI −0.27 to 1.02] to d = 0.59 [95 % CI 0.11–1.06]. What is interesting about this particular “outlier” is that it represents a single instance of a moderately sized, significant effect in the opposite direction, which also warrants further inquiry. Only two studies with clinical comparison groups reported FMS, and these groups were heterogeneous and contained unspecified numbers of children with ADHD; it could therefore be the case that individuals with autism make more FMS errors than typically developing individuals but fewer than other diagnostic groups. Among the studies included in this meta-analysis, only one provided data on ADHD comorbidity for participants with autism: Kilinçaslan and colleagues (2010) reported that 9 of the 21 participants in their ASD group had comorbid ADHD, and did not find increased FMS errors relative to typically developing participants (d = 0.14), whereas performance was impaired on other measures (d = 0.89–0.99). Kilinçaslan and colleagues also compared performance within their autism group on the basis of ADHD comorbidity and found no differences on any WCST measures, although this would have been a comparison of small and imbalanced groups. We conclude that it would be premature to dismiss the FMS aspect of performance as unaffected in autism; it warrants further investigation with additional convergent and divergent measures, as well as with more appropriate sample sizes. Conceptually, FMS is also consistent with descriptions of working memory as an updating/maintenance function. This approach has not been explored in autism, due perhaps in part to the complexities of understanding working memory function in autism (Russo et al. 2007).
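
For readers less familiar with how a Cohen’s d and its confidence interval are obtained from the group summaries reported in a single study, the following is a minimal sketch. It assumes the pooled-standard-deviation form of d and a common large-sample approximation to its standard error; the function names and the example values are hypothetical and are not drawn from any study in this meta-analysis, and the sketch is not necessarily the exact procedure used here.

```python
import math
from statistics import NormalDist


def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Standardized mean difference (group A minus group B), pooled SD."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)


def d_confidence_interval(d, n_a, n_b, level=0.95):
    """Approximate CI for d (assumption: large-sample normal approximation)."""
    se = math.sqrt((n_a + n_b) / (n_a * n_b) + d ** 2 / (2 * (n_a + n_b)))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d - z * se, d + z * se


# Hypothetical error counts: group A mean 10 (SD 2), group B mean 8 (SD 2),
# 25 participants per group. These numbers are illustrative only.
d = cohens_d(10.0, 2.0, 25, 8.0, 2.0, 25)
low, high = d_confidence_interval(d, 25, 25)
print(f"d = {d:.2f} [95% CI {low:.2f} to {high:.2f}]")
```

With groups of this size the interval spans more than a full standard deviation, which illustrates why estimates based on a handful of small studies can shift substantially when one study is removed.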

We conclude that the non-perseverative errors measure, with a Cohen’s d of 0.56, likewise warrants further investigation, as the implications of this error are not well understood and in many cases it could be refined into more distinct error types. For example, the comparison groups of Pascualvaca and colleagues (1998) and Kaland and colleagues (2008) were both well-matched, and yet the effect sizes differ threefold (0.9 vs 0.3). Ambery and colleagues (2006) found a near-zero effect size (0.07) in their sample of adults with Asperger syndrome; the sample covered a very wide adult age range as well as a wide IQ range, but the comparison group was similar in spread. There are clearly unanswered questions regarding non-perseverative errors in autism.

We found no evidence for reduced impairment on computer-administered versions of the task. This is surprising given the widespread assertions that the reduced social demands of computerized administration should be facilitatory for participants with autism (e.g. Kenworthy et al. 2008). The only study to compare administration modes directly was performed by Ozonoff (1995); however, the conclusion that computerized administration reduces impairment was reached only in Study 3, not in Study 2, in which the participants with autism were not impaired on either administration. Notably, despite small sample sizes (10–12 participants in each group), the effect sizes in Ozonoff’s Study 2 were considerably smaller (all <0.16) than in her Study 3 (all >0.5). Moreover, the “unimpaired” performance on the computerized administration in Study 3 is arguably a Type II error, as the effect sizes were 0.53 and 0.84 for sets and perseveration respectively. Across all studies included in the meta-analysis, there did not appear to be any systematic differences in sample sizes, participant ages, or matching quality between manual and computerized administrations. Only one study using computer administration used DSM-III diagnostic criteria. Sample IQs from the larger set could not be calculated due to inconsistencies in reporting, and within the subset for which we obtained raw data, four of the five were computerized administrations.

The average Cohen’s d effect size of each of the four measures considered in this meta-analysis, as shown in Table 1, was between 0.30 and 0.74. These are not negligible effect sizes, but a quick glance at Cohen’s power tables (Cohen 1988, p. 55) reveals that the sample size of many studies was simply too small: the sample size necessary to detect a medium effect size of 0.5 with power of 0.80 and alpha of 0.05 is 64 participants in each group. To detect an effect of 0.8 magnitude with power of 0.80 and alpha of 0.05, one must have a sample of 25 participants in each group; the average sample size of the 31 published WCST studies was 25. In general, the effect sizes of studies finding impairment were large (Cohen’s d > 0.8), whereas the effect sizes of studies finding no impairment were much smaller (Cohen’s d ≈ 0.4). This suggests that sample size is a substantial consideration in evaluating the literature; however, the wide variability in observed effect sizes suggests that it is not the only explanation.
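
The sample-size figures above can be approximated without tables. The sketch below assumes a two-tailed, two-sample comparison with equal group sizes and uses the normal approximation to the power function; the function name is hypothetical. Because it ignores the t distribution, it can fall a participant or so short of tabled values (for example, it gives 63 rather than 64 for d = 0.5).

```python
import math
from statistics import NormalDist


def n_per_group(d, power=0.80, alpha=0.05):
    """Approximate participants needed per group to detect effect size d.

    Assumptions: two-tailed test, two independent groups of equal size,
    normal approximation (slightly optimistic relative to t-based tables).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-tailed test
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


for effect in (0.5, 0.8):
    print(effect, n_per_group(effect))
# 0.5 63
# 0.8 25
```

Required sample size grows with the inverse square of the effect size, so the smaller effects observed for FMS would demand far larger groups than those typical of this literature.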

We sought to explain variance in Cohen’s d effect sizes as a function of reported methodological and demographic characteristics of participant samples. We were able to obtain five raw data sets, which, when combined, helped explain some of the individual differences in perseveration, non-perseverative errors, and sets completed, but not FMS. It appears that effect size may diminish with age. This is speculative, given the small number of studies, but would be explained if typically developing participants reach adult levels of performance, at least on some measures, at an earlier age. The WCST is typically not appropriate for children under the age of six, and adult-like performance is achieved in early adolescence (Chelune and Baer 1986; Rosselli and Ardila 1993); thus mental age may be an important consideration. The relationship between WCST performance and IQ measures, including the distinction between verbal and non-verbal IQ, needs to be clarified, especially with respect to populations on the autism spectrum.

Limitations

While meta-analytic techniques have certain advantages, there are also notable limitations. The first is that given the 30-year span of these publications, it would be unreasonable to expect authors to be able to share raw data for the vast majority of the studies. The second is that meta-analysis is prone to skew due to publication bias and the file-drawer problem. We have addressed this issue with the use of funnel plots and the tolerance for future null calculations. Given the nature of the task and the population, we contend that publication bias may be minimized as demonstrating group effects in the opposite direction would be very novel and publishable, and null effects on one or more variables of interest were frequently published—nearly one third of studies published null findings for the number of sets and perseveration measures, and more than half of studies published null findings for FMS and non-perseverative errors. Given the frequency with which null findings are published for the measures of interest, we assert that our estimates of the true population effect size are not substantially inflated.

Future Directions and Recommendations

The WCST has a long history of use in the measurement of executive dysfunction in a wide array of clinical populations, and there remain many unanswered questions. In 30 years of research, we have conclusively demonstrated that increased perseveration is an area of impairment in autism; however, we seem to have made little progress in understanding why, or how to intervene. In the process, we have also overlooked the other areas of WCST performance that contribute to overall diminished performance, namely failure to maintain set and non-perseverative errors. As such, we as researchers have been perseverative.

Nevertheless, we assert that the WCST remains relevant to understanding autism, so long as researchers ask new questions. Norms exist, but the question of whether participants with autism perform below those norms or below a given comparison group has been answered. New questions should address the developmental trajectory of performance on the task in participants with autism, and its correlates over time. The WCST could also serve as a useful metric in school-aged, adolescent, and adult intervention studies. It is also an excellent model task that could be modified to test various cognitive neuroscience hypotheses about neurodevelopment in autism that take increased non-perseverative errors and the nuances of failure-to-maintain-set into account (e.g. learning potential within the task; Calero et al. 2015). Critically, measures of non-perseverative error need to break errors down into more useful metrics than the current dichotomy of perseverative versus non-perseverative, and a new metric to examine breaks in set should be established. The WCST also includes other potentially useful metrics rarely reported for autism, such as trials to first set or conceptual level responses, which could serve as secondary measures of rule maintenance complementary to breaks in rule set. Computerized administration offers the advantage of reducing human error in scoring. However, researchers should be aware that the absolute number of trials affects the underlying factor structure: latent factors subdividing executive function are found only when all 128 cards are used, whereas shorter versions and stoppage rules yield only a single executive function factor (Greve et al. 2005).

Conducting research in clinical populations is challenging, and we do not fault research groups for small sample sizes and heterogeneous groups. Our goal in conducting this meta-analysis was to combine across studies; however, our greatest challenge has been incomplete reporting of data. In the spirit of “the new statistics” (Cumming 2014) and open online repositories for data sharing, we hope that diverse research groups will make more comprehensive details, if not complete data sets, available so that future meta-analyses can combine data sets and overcome these issues.

Notes

Two potential stoppage rules may be used, either six sets achieved or all cards administered. The total cards used may be 64 or 128. These variations have been criticized (see Greve et al. 2005), and may also have implications for interpretation in special populations such as autism, however this information was frequently omitted from the studies included in this meta-analysis.

References

(Articles included in the meta-analysis are denoted with *)

*Ambery, F. Z., Russell, A. J., Perry, K., Morris, R., & Murphy, D. G. M. (2006). Neuropsychological functioning in adults with Asperger syndrome. Autism, 10, 551–564. doi:10.1177/1362361306068507.

Ankenman, K., Elgin, J., Sullivan, K., Vincent, L., & Bernier, R. (2014). Nonverbal and verbal cognitive discrepancy profiles in autism spectrum disorders: Influence of age and gender. American Journal on Intellectual and Developmental Disabilities, 119, 84–99.

*Bennetto, L., Pennington, B. F., & Rogers, S. J. (1996). Intact and impaired memory functions in autism. Child Development, 67, 1816–1835. doi:10.1111/j.1467-8624.1996.tb01830.x.

Calero, M. D., Mata, S., Bonete, S., Molinero, C., & Gómez-Pérez, M. M. (2015). Relations between learning potential, cognitive and interpersonal skills in Asperger children. Learning and Individual Differences. doi:10.1016/j.lindif.2015.07.004.

Card, N. A. (2011). Applied meta-analysis for social science research. New York: Guilford Publications.

Chelune, G. J., & Baer, R. A. (1986). Developmental norms for the Wisconsin card sorting test. Journal of Clinical and Experimental Neuropsychology, 8, 219–228. doi:10.1080/01688638608401314.

*Ciesielski, K. T., & Harris, R. J. (1997). Factors related to performance failure on executive tasks in autism. Child Neuropsychology, 3, 1–12. doi:10.1080/09297049708401364.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. New York: Psychology Press.

Cumming, G. (2014). The new statistics: Why and how. Psychological Science, 25, 7–29. doi:10.1177/0956797613504966.

Edgin, J. O., & Pennington, B. F. (2005). Spatial cognition in autism spectrum disorders: Superior, impaired, or just intact? Journal of Autism and Developmental Disorders, 35, 729–745. doi:10.1007/s10803-005-0020-y.

Feldstein, S. N., Keller, F. R., Portman, R. E., Durham, R. L., Klebe, K. J., & Davis, H. P. (1999). A comparison of computerized and standard versions of the Wisconsin card sorting test. The Clinical Neuropsychologist, 13, 303–313. doi:10.1076/clin.13.3.303.1744.

Geurts, H. M., Corbett, B., & Solomon, M. (2009). The paradox of cognitive flexibility in autism. Trends in Cognitive Sciences, 13, 74–82.

*Geurts, H. M., Verte, S., Oosterlaan, J., Roeyers, H., & Sergeant, J. A. (2004). How specific are executive functioning deficits in attention deficit hyperactivity disorder and autism? Journal of Child Psychology and Psychiatry, 45, 836–854. doi:10.1111/j.1469-7610.2004.00276.x.

Gioia, G. A., & Isquith, P. K. (2004). Ecological assessment of executive function in traumatic brain injury. Developmental Neuropsychology, 25, 135–158.

*Goldstein, G., Johnson, C. R., & Minshew, N. J. (2001). Attentional processes in autism. Journal of Autism and Developmental Disorders, 31, 433–440. doi:10.1023/A:1010620820786.

Grant, D. A., & Berg, E. A. (1948). A behavioral analysis of degree of reinforcement and base of shifting to new responses in a Weigl-type card-sorting problem. Journal of Experimental Psychology, 38, 404–411. doi:10.1037/h0059831.

*Greibling, J., Minshew, N. J., Bodner, K., Libove, R., Bansai, R., et al. (2010). Dorso-lateral prefrontal cortex MRI measurements and cognitive performance in autism. Journal of Child Neurology, 25, 856–863. doi:10.1177/0883073809351313.

Greve, K. W., Stickle, T. R., Love, J. M., Bianchini, K. J., & Stanford, M. S. (2005). Latent structure of the Wisconsin card sorting test: A confirmatory factor analytic study. Archives of Clinical Neuropsychology, 20, 355–364.

Heaton, R. K., Chelune, G. J., Talley, J. L., Kay, G. G., & Curtiss, G. (1993). Wisconsin card sorting test manual revised and expanded. Odessa, FL: Psychological Assessment Resources.

Hill, E. L. (2004). Evaluating the theory of executive dysfunction in autism. Developmental Review, 24, 189–233. doi:10.1016/j.dr.2004.01.001.

Hughes, C., Russell, J., & Robbins, T. W. (1994). Evidence for executive dysfunction in autism. Neuropsychologia, 32, 477–492. doi:10.1016/0028-3932(94)90092-2.

Hus, V., & Lord, C. (2013). Effects of child characteristics on the Autism Diagnostic Interview-Revised: Implications for use of scores as a measure of ASD severity. Journal of Autism and Developmental Disorders, 43, 371–381.

*Kaland, N., Smith, L., & Mortensen, E. L. (2008). Brief report: Cognitive flexibility and focused attention in children and adolescents with Asperger syndrome or high-functioning autism as measured on the computerized version of the Wisconsin card sorting test. Journal of Autism and Developmental Disorders, 38, 1161–1165. doi:10.1007/s10803-007-0474-1.

Kenworthy, L., Yerys, B. E., Anthony, L. G., & Wallace, G. L. (2008). Understanding executive control in autism spectrum disorders in the lab and in the real world. Neuropsychology Review, 18, 320–338.

*Kilinçaslan, A., Nahit, M. M., Kucukyazici, G. S., & Gurvit, H. (2010). Assessment of executive/attentional performance in Asperger’s disorder. Turkish Journal of Psychiatry, 21, 289–299.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Thousand Oaks: Sage.

*Liss, M., Fein, D., Allen, D., Dunn, M., Feinstein, C., et al. (2001). Executive functioning in high-functioning children with autism. Journal of Child Psychology and Psychiatry, 42(2), 262–270. doi:10.1017/S0021963001006679.

*Lopez, B. P., Lincoln, A. J., Ozonoff, S., & Lai, Z. (2005). Examining the relationship between executive functions and restricted, repetitive symptoms of autistic disorder. Journal of Autism and Developmental Disorders, 35, 445–460. doi:10.1007/s10803-005-5035-x.

Milner, B. (1963). Effects of different brain lesions on card sorting. Archives of Neurology, 9, 101–110. doi:10.1001/archneur.1963.00460070100010.

*Minshew, N. J., Meyer, J., & Goldstein, G. (2002). Abstract reasoning in autism: A dissociation between concept formation and concept identification. Neuropsychology, 16, 327–334. doi:10.1037/0894-4105.16.3.327.

*Minshew, N. J., Muenz, L. R., Goldstein, G., & Payton, J. B. (1992). Neuropsychological functioning in nonmentally retarded autistic individuals. Journal of Clinical and Experimental Neuropsychology, 14, 749–761. doi:10.1080/01688639208402860.

Miyake, A., Friedman, N. P., Emerson, M. J., Witzki, A. H., Howerter, A., & Wager, T. D. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology, 41, 49–100.

*Nyden, A., Gillberg, C., Hjelmquist, E., & Heiman, M. (1999). Executive function/attention deficits in boys with Asperger syndrome, attention disorder and reading/writing disorder. Autism, 3, 213–228. doi:10.1177/1362361399003003002.

*Ozonoff, S. (1995). Reliability and validity of the Wisconsin card sorting test in studies of autism. Neuropsychology, 9(4), 491.

Ozonoff, S., Cook, I., Coon, H., Dawson, G., Joseph, R. M., et al. (2004). Performance on Cambridge Neuropsychological Test Automated Battery subtests sensitive to frontal lobe function in people with autistic disorder: evidence from the Collaborative Programs of Excellence in Autism network. Journal of Autism and Developmental Disorders, 34, 139–150. doi:10.1023/B:JADD.0000022605.81989.cc.

*Ozonoff, S., & Jensen, J. (1999). Brief Report: Specific executive function profiles in three neurodevelopmental disorders. Journal of Autism and Developmental Disorders, 29, 171–177. doi:10.1023/A:1023052913110.

*Ozonoff, S., & McEvoy, R. E. (1994). A longitudinal study of executive function and theory of mind development in autism. Development and Psychopathology, 6, 415–431. doi:10.1017/S0954579400006027.

*Ozonoff, S., Pennington, B. F., & Rogers, S. J. (1991). Executive function deficits in high-functioning autistic individuals: Relationship to theory of mind. Journal of Child Psychology and Psychiatry, 32, 1081–1105. doi:10.1111/j.1469-7610.1991.tb00351.x.

Ozonoff, S., & Strayer, D. L. (2001). Further evidence of intact working memory in autism. Journal of Autism and Developmental Disorders, 31, 257–263. doi:10.1023/A:1010794902139.

Ozonoff, S., Strayer, D. L., McMahon, W. M., & Filloux, F. (1994). Executive function abilities in autism and Tourette syndrome: An information processing approach. Journal of Child Psychology and Psychiatry, 35, 1015–1032. doi:10.1111/j.1469-7610.1994.tb01807.x.

*Pascualvaca, D. M., Fantie, B. D., Papageorgiou, M., & Mirsky, A. F. (1998). Attentional capacities in children with autism: Is there a general deficit in shifting focus? Journal of Autism and Developmental Disorders, 28, 467–478. doi:10.1023/A:1026091809650.

Prior, M., & Hoffmann, W. (1990). Brief report: Neuropsychological testing of autistic children through an exploration with frontal lobe tests. Journal of Autism and Developmental Disorders, 20, 581–590. doi:10.1007/BF02216063.

*Robinson, S., Goddard, L., Dritschel, B., Wisley, M., & Howlin, P. (2009). Executive functions in children with autism spectrum disorders. Brain and Cognition, 71, 362–368. doi:10.1016/j.bandc.2009.06.007.

Rosenthal, R. (1984). Meta-analytic procedures for social science research. Thousand Oaks: Sage.

Rosselli, M., & Ardila, A. (1993). Developmental norms for the Wisconsin card sorting test in 5- to 12-year-old children. The Clinical Neuropsychologist, 7, 145–154. doi:10.1080/13854049308401516.

*Rumsey, J. M. (1985). Conceptual problem-solving in highly verbal, nonretarded autistic men. Journal of Autism and Developmental Disorders, 15, 23–36. doi:10.1007/BF01837896.

*Rumsey, J. M., & Hamburger, S. D. (1988). Neuropsychological findings in high-functioning men with infantile autism, residual state. Journal of Clinical and Experimental Neuropsychology, 10, 201–221. doi:10.1080/01688638808408236.

*Rumsey, J. M., & Hamburger, S. D. (1990). Neuropsychological divergence of high-level autism and severe dyslexia. Journal of Autism and Developmental Disorders, 20, 155–168. doi:10.1007/BF02284715.

Russell, J., Jarrold, C., & Hood, B. (1999). Two intact executive capacities in children with autism: Implications for the core executive dysfunctions in the disorder. Journal of Autism and Developmental Disorders, 29, 103–112. doi:10.1023/A:1023084425406.

Russo, N., Flanagan, T., Iarocci, G., Berringer, D., Zelazo, P. D., & Burack, J. A. (2007). Deconstructing executive deficits among persons with autism: Implications for cognitive neuroscience. Brain and Cognition, 65, 77–86.

*Schneider, S. G., & Asarnow, R. F. (1987). A comparison of cognitive/neuropsychological impairments of nonretarded autistic and schizophrenic children. Journal of Abnormal Child Psychology, 15, 29–46. doi:10.1007/BF00916464.

Schopler, E., & Mesibov, G. B. (1995). Executive functions in autism. Learning & cognition in autism. New York: Plenum Press.

Shallice, T., & Burgess, P. W. (1991). Deficits in strategy application following frontal lobe damage in man. Brain, 114, 727–741.

*Shu, B. C., Tien, A. Y., & Chen, B. C. (2001). Executive function deficits in non-retarded autistic children. Autism, 5, 165–174. doi:10.1177/1362361301005002006.

*Steel, J. G., Gorman, R., & Flexman, J. E. (1984). Case report: Neuropsychiatric testing in an autistic mathematical idiot-savant: Evidence for nonverbal abstract capacity. Journal of the American Academy of Child Psychiatry, 23, 704–707. doi:10.1016/S0002-7138(09)60540-9.

Sullivan, E. V., Mathalon, D. H., Zipursky, R. B., Kersteen-Tucker, Z., Knight, R. T., & Pfefferbaum, A. (1993). Factors of the Wisconsin card sorting test as measures of frontal-lobe function in schizophrenia and in chronic alcoholism. Psychiatry Research, 46, 175–199. doi:10.1016/0165-1781(93)90019-D.

*Sumiyoshi, C., Kawakubo, Y., Suga, M., Sumiyoshi, T., & Kasai, K. (2011). Impaired ability to organize information in individuals with autism spectrum disorders and their siblings. Neuroscience Research, 69, 252–257. doi:10.1016/j.neures.2010.11.007.

*Szatmari, P., Tuff, L., Finlayson, A. J., & Bartolucci, G. (1990). Asperger’s syndrome and autism: Neurocognitive aspects. Journal of the American Academy of Child & Adolescent Psychiatry, 29, 130–136. doi:10.1097/00004583-199001000-00021.

Teunisse, J. P., Roelofs, R. L., Verhoeven, E. W., Cuppen, L., Mol, J., & Berger, H. J. (2012). Flexibility in children with autism spectrum disorders (ASD): Inconsistency between neuropsychological tests and parent-based rating scales. Journal of Clinical and Experimental Neuropsychology, 34, 714–723. doi:10.1080/13803395.2012.670209.

Tien, A., Spevack, T. V., Jones, D. W., Pearlson, G. D., Schleapfer, T. E., & Strauss, M. E. (1996). Computerized Wisconsin card sorting test: Comparison with manual administration. Kaohsiung Journal of Medical Science, 12, 479–485.

*Tsuchiya, E., Oki, J., Yahara, N., & Fujieda, K. (2005). Computerized version of the Wisconsin card sorting test in children with high-functioning autistic disorder or attention-deficit/hyperactivity disorder. Brain & Development, 27, 233–236. doi:10.1016/j.braindev.2004.06.008.

Van Eylen, L., Boets, B., Steyaert, J., Evers, K., Wagemans, J., & Noens, I. (2011). Cognitive flexibility in autism spectrum disorder: Explaining the inconsistencies? Research in Autism Spectrum Disorders, 5, 1390–1401.

*Voelbel, G. T., Bates, M. E., Buckman, J. F., Pandina, G., & Hendren, R. L. (2006). Caudate nucleus volume and cognitive performance: Are they related in childhood psychopathology? Biological Psychiatry, 60, 942–950. doi:10.1016/j.biopsych.2006.03.071.

Williams, D. M., Lind, S. E., Boucher, J., & Jarrold, C. (2013). Time-based and event-based prospective memory in autism: The roles of executive function, theory of mind, and time estimation. Journal of Autism and Developmental Disorders, 43, 1555–1567. doi:10.1007/s10803-012-1703-9.

*Winsler, A., Abar, B., Feder, M. A., Schunn, C. D., & Rubio, D. A. (2007). Private speech and executive functioning among high-functioning children with autistic spectrum disorders. Journal of Autism and Developmental Disorders, 37, 1617–1635. doi:10.1007/s10803-006-0294-8.

*Yang, J., Zhou, S., Yao, S., Su, L., & McWhinnie, C. (2009). The relationship between theory of mind and executive function in a sample of children from Mainland China. Child Psychiatry & Human Development, 40, 169–182. doi:10.1007/s10578-008-0119-4.

Zelazo, P. D., Müller, U., Frye, D., Marcovitch, S., Argitis, G., et al. (2003). The development of executive function in early childhood. Monographs of the Society for Research in Child Development. doi:10.1111/j.0037-976X.2003.00266.x.

Acknowledgments

The authors would like to thank Sura Muscati, Christopher Degagne, Philippe Chouinard, Jake Burack, and Natalie Russo for comments on the manuscript, and all the researchers who facilitated this meta-analysis by tracking down colleagues and sharing raw data.

Author Contributions

OL conceived of the study, participated in its design and coordination, performed measurement and statistical analysis, interpretation of the data, and drafted the manuscript; SA participated in the design and coordination of the study, performed measurement and statistical analysis, and helped to draft the manuscript. Both authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Landry, O., Al-Taie, S. A Meta-analysis of the Wisconsin Card Sort Task in Autism. J Autism Dev Disord 46, 1220–1235 (2016). https://doi.org/10.1007/s10803-015-2659-3

DOI: https://doi.org/10.1007/s10803-015-2659-3