Abstract

During a disaster, a large number of disaster-related social media posts are widely disseminated. Only a small percentage of disaster-related information is posted by eyewitnesses. The post of a disaster eyewitness offers an accurate depiction of the disaster. Therefore, the information posted by the eyewitness is preferred over the other source of information as it is more effective at helping organize rescue and relief operations and potentially saving lives. In this work, we propose a multi-channel convolutional neural network (MCNN) that uses three different word-embedding vectors together to classify disaster-related tweets into eyewitness, non-eyewitness, and don’t know classes. We compared the performance of the proposed multi-channel convolutional neural network with several attention-based deep-learning models and conventional machine learning-models such as recurrent neural network, gated recurrent unit, Long-Short-Term-Memory, convolutional neural network, logistic regression, support vector machine, and gradient boosting. The proposed multi-channel convolutional neural network achieved an F1-score of 0.84, 0.88, 0.84, and 0.86 with four disaster-related datasets of floods, earthquakes, hurricanes, and wildfires, respectively. The experimental results show that the training MCNN model with different word embedding together performs better than the conventional machine-learning models and several other deep-learning models.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Social media has been found to play a vital role in disaster relief operations. It has shown its usefulness by potentially saving lives in several instances (Imran et al., 2015; Kumar & Singh, 2019; Mendon et al., 2021; Bandyopadhyay et al., 2018). For example, a woman was rescued from a hurricane when she asked for help on Twitter because the 911 emergency number was not reachable.Footnote 1 She posted, “I have 2 children with me and tge [sic],water is swallowing us up. Please send help. 11115 Sageview, Houston,Tx, 911 is not responding!!!!!!”. Due to its potential usages during a disaster, more and more people are posting and forwarding disaster-related information on social media such as Twitter and Facebook (Imran et al., 2015; Kumar et al., 2020; Palshikar et al., 2018; Beydoun et al., 2018; Mirbabaie et al., 2021; Mendon et al., 2021). These disaster-related social media posts contain information about affected individuals, injured and missing people, and damage related to infrastructure (Kumar et al., 2019). It has been found that several disaster-related events have first appeared on social media instead of traditional news channels (Zahra et al., 2020). For example, the news of the Westgate Mall attack in Kenya in 2013 was first reported on Twitter, before it was reported by any TV channels (Zahra et al., 2020). Similarly, an airplane crash over the Hudson River was first reported on Twitter (Sakaki et al., 2012).

Although social media posts can be posted rapidly and contain useful information related to disasters, they are equally filled with redundant and false information which limits their authenticity and usages (Kumar et al., 2020; Tanev et al., 2017; Doggett & Cantarero, 2016; Stefan et al., 2019; Fang et al., 2016; Morstatter et al., 2014). The posts of local citizens and eyewitnesses of disasters convey more authentic information related to the disaster (Zahra et al., 2020). Information provided by the eyewitness can give a better perspective on the disaster (Zahra et al., 2020). Therefore, the content posted by the eyewitness is preferred over all the other sources of information (Zahra et al., 2020; Stefan et al., 2019). Hence, extracting the contents shared by the eyewitness of a disaster is very important, but it is equally challenging due to its limited volume in the massive social media posts (Zahra et al., 2020; Morstatter et al., 2014; Tanev et al., 2017). In an analysis of 26 different events, Olteanu et al. (2015) found that only 9% of messages were posted by the eyewitness. One straightforward approach for finding posts of people from the disaster-hit area is through geotagged tweets (Kumar et al., 2017). But tweets with geotagged information are rare, as only 1-3% of the total tweets are geo-tagged (Imran et al., 2020; Zahra et al., 2020; Zola et al., 2020). The second option is to use the user’s home location to decide the people belonging from the disaster-hit area, but this home location field on the Twitter user’s account is very noisy and inaccurate (Kumar et al., 2017). As the geotagged tweets are limited in numbers and users’ home location information is noisy, the textual content of the tweet can be used to identify eyewitness tweets using natural language processing techniques (Zahra et al., 2020; Tanev et al., 2017).

Recently, researchers (Zahra et al., 2020; Stefan et al., 2019; Tanev et al., 2017; Fang et al., 2016; Morstatter et al., 2014) have proposed Natural Language Processing (NLP) based approaches using textual features of the messages to identify eyewitness messages from social media. Zahra et al. (2020) applied the Random Forest classifier with uni-gram and bi-gram TF-IDF (Term Frequency-Inverse Document Frequency) features along with several domain-specific features to identify eyewitness tweets. Tanev et al. (2017) used Naive Bayes, Support Vector Machine, and Random Forest classifiers on textual features and meta-data information of tweets to find eyewitness tweets. Morstatter et al. (2014) extracted several features such as uni-gram, bi-gram, crisis-sensitive features, and preposition phrases to train a Naive Bayes classifier. Fang et al. (2016) extracted linguistic features, meta-data features, and word-embedding vectors to train decision tree, random forest, and support vector machine (SVM) classifiers. Most of these works used handcrafted features with conventional machine-learning-based classifiers to identify eyewitness messages, but their models achieved very limited performance. Recently, Stefan et al. (2019) proposed dense neural network and Long-Short-Term-Memory (LSTM) network-based models for the identification of eyewitness messages using bag-of-words and pre-trained GloVe word-embedding vectors. But they tested their models on a very limited number of data samples.

Most of the deep neural network-based models operate over word-embedding vectors. Word-embedding represents each word as a real-valued vector that captures syntactic and semantic information, and thus they are very useful in many natural language processing (NLP) tasks (Kumar and Singh, 2019; Yu et al., 2019). Nowadays, many pre-trained word embeddings are available over the web (Qiu et al., 2020), but different word embeddings may encode different aspects of the language (Levy & Goldberg, 2014; Qiu et al., 2020). Zhang et al. (2016) and Pham and Le (2018) explored the idea of integrating different word-embedding vectors and proposed a convolutional neural network-based model that achieved state-of-the-art performance for sentence classification tasks. Motivated by their works, in this study, we are proposing a multi-channel convolutional neural network (MCNN) that uses three different word embeddings’ vectors together for eyewitness tweet identification. The proposed MCNN model uses GloVeFootnote 2, CrisisFootnote 3, and Word2vec embedding vectors together. GloVe and Crisis are the pre-trained models with GloVe being trained on the 27 billion tokens of tweets whereas Crisis is trained on the crisis-related corpus. For word2vec embedding, we trained it on the disaster-specific dataset using GensimFootnote 4. Irrespective of this, we are also exploring the suitability of deep neural network-based models such as Recurrent Neural Network with attention, Gated Recurrent Unit with attention, Long-short-Term-Memory with attention, and Convolutional Neural Network (CNN) where we used GloVe-embedding vector for the identification of disaster-related eyewitness tweets. The proposed system is validated on four different disasters related to floods, earthquakes, hurricanes, and wildfires. The overall contributions of the paper can be summarized below:

-

Proposing a multi-channel convolutional neural network (MCNN) for the identification of disaster-related eyewitness tweets. The proposed MCNN model performed better than the existing methods by achieving an F1-score of 0.84, 0.88, 0.84, and 0.86 for flood, earthquake, hurricane, and wildfire events respectively.

-

The proposed MCNN uses three different word embeddings to capture the better contextual meaning of the tweets.

-

The performance of the proposed MCNN model is compared with several popular machine-learning and deep-learning models.

The rest of the sections are organized as follows: Section 2 discusses the related work, Section 3 discusses the detailed methodology used to perform the experiments. Section 4 lists the experimental results, Section 5 discusses the findings of the proposed model with some future directions and, finally, Section 6 concludes the paper.

2 Related Works

Disaster-related social media content is effectively utilized by several researchers (Kumar et al., 2020; Kumar et al., 2019; Kumar & Singh, 2019; Stefan et al., 2019; Zahra et al., 2020; Nguyen et al., 2017b; Liu & Xu, 2018) to propose different methods to provide better situational awareness in citizens, to perform better rescue and relief operations, assess the damage automatically, and find the location information of events and posts. Identification of disaster-related informative content from social media has been a very active area of research around the globe (Kumar et al., 2020; Kumar et al., 2019; Singh et al., 2019; Nguyen et al., 2017a; Caragea et al., 2016; Imran et al., 2014). This informative content contains posts of people from disaster-hit areas as well as from outside the disaster regions. As the posts of local citizens and eyewitnesses of disaster convey more authentic information and can give a better perspective on the disaster, several researchers (Stefan et al., 2019; Zahra et al., 2018; Zahra et al., 2020; Tanev et al., 2017; Morstatter et al., 2014) have shown a strong interest in finding eyewitnesses’ social media content, i.e., content posted from the disaster-hit area. Therefore, for the better organization of related literature, this section is divided into two subsections: (i) Informative disaster content identification from social media, and (ii) Eyewitness disaster content identification from social media.

2.1 Informative Disaster Content Identification from Social Media

This section briefly discusses some of the potential works for the identification of informative disaster-related content from social media. The convolutional neural network-based model was used by Caragea et al. (2016), Nguyen et al. (2017a), Kersten et al. (2019), and Madichetty and Sridevi (2019) to classify informative and non-informative disaster-related tweets. Yu et al. (2019) used convolutional neural network-based model to do a fine grain classification of informative disaster-related tweets in caution and advice, casualties and damage, and donation and aid classes. Singh et al. (2019) extracted several features from the textual content of tweets to experiment with support vector machine, random forest, and gradient-boosting classifiers to classify high-priority and low-priority tweets. Nguyen et al. (b) implemented a deep neural network to first classify disaster-related informative and non-informative tweets. They further used informative tweets to identify tweets related to affected individuals, infrastructure and utility damage, and sympathy and support. Kumar et al. (2019) performed a comparative analysis of different machine-learning and deep-learning techniques to classify informative tweets into further classes such as affected individuals, infrastructure and utility damage, injured or dead people, missing or found people, rescue volunteering or donation effort, and vehicle damage. They found that deep-learning-based models perform better than the several popular machine-learning classifiers. Roy et al. (2020) used SVM to classify cyclone fani tweets into informative and non-informative classes. They further summarized informative tweets to get better information regarding the disaster. Kumar et al. (2020) built a multi-modal system to classify informative and non-informative tweets of disaster using LSTM and VGG-16 for tweet text and images respectively. Madichetty and Sridevi (2021) proposed a model that uses low-level lexical, syntactic, and top-most frequency features to find damage assessment tweets during a disaster. Mohanty et al. (2021) proposed four different models: a geospatial model, an image model, a user model, and a text model to provide situational awareness from social media data during disaster events.

One group of researchers (Alam et al., 2017; Nguyen et al. a; a; Daly and Thom, 2016; Lagerstrom et al., 2016) developed models to filter informative social media images related to disaster to help humanitarian organizations. Alam et al. (2017) proposed a framework to identify relevant images of Queensland Australian Cyclone, 2017, from social media. Nguyen et al. (a) used transfer learning for irrelevant image filtering and the perceptual hashing technique for redundant image filtering from disaster-related social media content. Chaudhuri and Bose (2019) used a convolutional neural network for the identification of human body parts from the earthquake-related images whereas Daly and Thom (2016) and Lagerstrom et al. (2016) developed models to identify fire-related disaster images.

2.2 Eyewitness Disaster Content Identification from Social Media

Kumar et al. (2013) proposed a method to identify the subset of users from social media who can provide quick and relevant information related to an event. They reported that the selected users generate more quality information compared to other social media users. Morstatter et al. (2014) analyzed the Boston Marathon bombing and Hurricane Sandy events and found that several linguistic differences exist between the tweets posted from disaster-affected areas and outside the affected areas. They used features such as uni-gram, bi-gram, crisis-sensitive features, preposition phrases to train a Naive Bayes classifier. They found the best F1-score of 0.83 and 0.88 for the Boston bombing and Hurricane Sandy event respectively to classify tweets posted from the disaster-hit area or outside area. Truelove et al. (2015) observed that the eyewitness accounts had fewer location name references but more personal location information such as “my home” by analyzing bushfire events. Different filtering rules for events such as shooting, protests and police activity were used by Doggett and Cantarero (2016) to filter eyewitness tweets. The presence of first-person pronouns, immediate temporal words like just and now, locative markers such as school, here, and exclamative or emotive punctuation were used while defining their filtering rules. Features such as linguistic, crisis-sensitive, conversational, meta-data were utilized by Fang et al. (2016) to classify eyewitness social media accounts. They used machine-learning classifiers such as decision tree, random forest, and support vector machine (SVM). They found that SVM performed best with an F1-score of 0.90 when it was trained on linguistic features, meta-data features, and word-embedding vectors. Tanev et al. (2017) used uni-gram, bi-gram, semantic features, and metadata information of the earthquake, wildfire, landslide, and flood tweets to classify them into eyewitness and non-eyewitness classes. They used English and Italian tweets with the Naive Bayes, Support Vector Machine, and Random Forest classifiers. The best F1-score of 0.79 for English language tweets and 0.69 for Italian language tweets were achieved with Random Forest and Naive Bayes classifiers respectively.

Zahra et al. (2018) analyzed the different disaster-related tweets and labeled them into three different classes: (i) eyewitness, (ii) non-eyewitness, and (iii) don’t know. They further labeled eyewitness tweets into three different classes: direct eyewitness, indirect eyewitness, and vulnerable direct eyewitness. They observed that tweets expressing terms like feeling, seeing, and hearing appeared often in direct eyewitness tweets, whereas prayers, emotions, and thoughts appeared frequently in indirect eyewitness tweets. Zahra et al. (2020) classified flood, earthquake, hurricane, and wildfire tweets into eyewitness, non-eyewitness, and don’t know classes. They used uni-gram, bi-gram TF-IDF, and several domain-specific features. They reported that the Random Forest classifier outperformed the other machine-learning classifiers in identifying eyewitness tweets by achieving an F1-score of 0.57, 0.92, 0.60, and 0.40 for flood, earthquake, hurricane, and wildfire events respectively. Stefan et al. (2019) applied several classifiers such as Naive Bayes, logistic regression, and neural network-based approach to classifying tweets into eyewitness and non-eyewitness classes. They manually labeled datasets related to the California wildfire, Iran-Iraq earthquake, and Hurricane Harvey. But, their data size was limited having only one thousand tweets. Their best-performed models achieved an average precision of 0.79, 1.00, and 0.89 for the California wildfire, Iran-Iraq earthquake, and Hurricane Harvey respectively. Pekar et al. (2020) applied several machine-learning classifiers such as SVM, K-nearest neighbor, Naive Bayes, and ensembles methods on four different tasks: identification of eyewitness reports, detection of relevant messages, informative messages, and topical classification of messages. They performed experiments in several different settings to combine the disaster-specific dataset into one to test the performance of models in case of heterogeneous disaster events. They stated that the identification of eyewitness tweets is very hard and found that none of the models achieved F1-score of more than 50%. Truelove et al. (2017) extracted bag-of-visual-words features from the images and applied several machine-learning classifiers such as decision tree, Naive Bayes, random forest, linear support vector machine to classify images posted from a witness account or not. Their implemented linear support vector machine classifiers performed best with an accuracy of 90.17%. The summary of some of the potential works for the identification of eyewitness social media content can be seen in Table 1.

Most of the earlier works used hand-crafted features and trained conventional machine-learning classifiers to identify eyewitness messages. In this work, we are exploring different recurrent neural network-based models with attention mechanism, convolutional neural network, and multi-channel convolutional neural network with three different word-embedding techniques such as GloVe, Crisis, and Word2vec to classify tweets into eyewitness, non-eyewitness, and don’t know classes. The detailed description of the proposed models and word-embedding techniques can be seen in Section 3.

3 Methodology

The extensive experiments were performed with seven conventional machine-learning models and five deep-learning models. The conventional machine-learning classifiers are: (i) Support Vector Machine (SVM), (ii) Naive Bayes (NB), (iii) K-Nearest Neighbors (KNN), (iv) Logistic Regression (LR), (v) Decision Tree (DT), (vi) Random Forest (FT), and (vii) Gradient Boosting (GB). The deep-learning models are (i) Recurrent Neural Network with Attention (RNN-A), (ii) Gated Recurrent Unit with Attention (GRU-A), (iii) Long-Short-Term-Memory with Attention (LSTM-A), (iv) Convolutional Neural Network (CNN), and (v) Multichannel Convolutional Neural Network (MCNN).

3.1 Data Description

The proposed work uses the datasetFootnote 5 published by Zahra et al. (2020). The dataset includes tweets related to four different disasters, such as earthquakes, floods, fires, and hurricanes. Three different levels were assigned to tweets, such as eyewitness, non-eyewitness, and don’t know. Two sets of datasets were provided. In set-1, the datasets were labeled by authors and their groups. In set 2, the labeling was done using crowdsourcing. Out of a total of four different disaster-related events such as flood, earthquake, hurricane, and wildfire, the wildfire event dataset was labeled by crowdsourcing only, whereas for other events both crowdsourcing and authors manual labeling was done. We merged the event-specific dataset of manual analysis and crowdsourcing into one for earthquake, flood, and hurricane. The overall statistic of the dataset used in this study can be seen in Table 2. To perform the pre-processing of tweets, special characters such as “!, #, %, &, ?, @” and extra space, as well as tab between the words, were removed. Finally, tweets were transformed to lowercase, which is then employed by several conventional machine-learning and deep-learning models.

3.2 Representation of Tweets

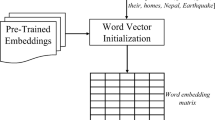

In the case of conventional machine-learning models, tweets are represented using TF-IDF vectors. For the calculation of TF-IDF vectors, uni-gram, bi-gram, and tri-gram words of the tweets are used. In the case of deep-learning models, tweets are represented using the word-embedding technique. The word-embedding technique represents each word of the tweet corpus in a real-value fixed-dimensional vector. The word-embedding generates a similar word vector for words having a similar meaning. This work uses three different word-embedding vectors: (i) GloVeFootnote 6, (ii) CrisisFootnote 7, and (iii) Word2vec. GloVe and Crisis are the pre-trained models with GloVe being trained on the 27 billion tokens of tweets whereas Crisis is trained on the crisis-related corpus. For Word2vec embedding, we trained it on the disaster-specific dataset using Gensim CBOW strategyFootnote 8. We have used 100-dimensional, 300-dimensional, and 100-dimensional word vectors for GloVe, Crisis, and Word2vec embedding respectively. The complete tweet matrix after embedding each word of the tweet into a pre-defined fixed dimension of the real-valued vector can be represented by Ti.

where Ti represents the embedding matrix of the tweets having m words. The tweets that have fewer than m words are padded to make it of the dimension of m and the tweets with greater than m words are curtailed to make it of the dimension of m. For word, Wm, embedding is represented by the vector [rm1rm2.....rmk], where k represents the embedding dimension of the word. The length of the tweet (m) is fixed at 30 for the experiments because most of the tweets in the dataset had at most 30 words.

3.3 Convolutional Neural Network

The convolutional neural network uses different n-gram filters to extract features from the tweet matrix followed by the pooling operation. The overall architecture of the convolutional neural network can be seen in Fig. 1. The convolution process applies a filter with the dimension of (w × k), where w is the length of the words and k is the embedding dimension of the word. The filter applied on the tweet matrix first performs element-wise multiplication with the first w words of the tweets and then the summation of all the multiplied values is passed through an activation function to produce a feature. This filter is again applied to the w words of the tweets by moving one step down, which means the filter is applied on the w words of the tweet matrix by leaving the first column of the tweet matrix. A simple convolution operation on the tweet matrix with the filter size of (w = 3) can be represented as:

where, F(x) = max(0, x) represents the ReLu activation function. The ReLu activation function converts negative values to zero and for the positive value it returns x itself. The feature map after the convolution operation is represented by [cf1,cf2,cf3,.....,cf(m−w+ 1)]. After getting a feature map from the convolution process, max-pooling operation is performed, which pools the maximum value from a window size of p, as given in Eq. 1.

In this work, two different convolutional neural network-based models were implemented. In the case of a single-channel convolutional neural network (CNN), only GloVe embedding was used whereas in the case of the multi-channel convolutional neural network (MCNN), three different channels were used with three different word-embedding vectors. The overall architecture of the multi-channel CNN can be seen from Fig. 1. Three different word-embedding vectors GloVe, Crisis, and Word2vec are used at the three-channel of multi-channel CNN, as shown in Fig. 1. Three different tweet matrixes were obtained with the size of (30 × 100), (30 × 100), and (30 × 300) for GloVe, Word2vec, and Crisis embedding respectively. We applied 128 filters of 2-gram, 3-gram, and 4-gram on the tweet matrix to extract relevant features from them. After the convolution operation, a max-pooling operation was performed with a window size of 5. The vector after the max-pooling from separate channels is then concatenated with each other as shown in Fig. 1. The second convolution operation is then applied to the concatenated feature maps with 128 filters of 1-gram followed by max-pooling operation with the windows size of 5. The feature maps obtained from the second convolutional operation are flattened and passed through a dense layer having 128 neurons. Finally, the dense layers having 128 neurons were passed through a softmax layer to calculate the probability of the tweets belonging to eyewitness, non-eyewitness, and don’t know classes. We used categorical cross-entropy as a loss function to calculate loss between true and predicted values and Adam (Kingma and Ba, 2014) as the optimizer to back-propagate the loss in the network. The categorical cross-entropy is defined by Eq. 2.

Where, Vc represents the classes into the one-hot vector form, and \(\hat {y_{i}}\) is the predicted class probability of the model for the ith training sample.

3.4 Attention-Based Recurrent Neural Network Models

The attention-based techniques performed well in several natural language-processing tasks (Yang et al., 2016; Wang et al., 2016) that motivated us to use this technique with RNN, GRU, and LSTM networks. Attention-based techniques can identify eyewitness tweets efficiently as it is sensitive to distinctive textual features. The attention layer averages the weights of the input sequence and propagates these weights through the softmax layers to obtain the importance of the words. A detailed explanation of the attention-based mechanism can be seen in Vaswani et al. (2017). The detailed explanation of the working of RNN, GRU, and LSTM models can be seen in Hochreiter and Schmidhuber (1997), Chung et al. (2014), and Mikolov et al. (2010).

During experiments with conventional machine-learning classifiers, the default parameters provided in the SklearnFootnote 9 library are utilized, whereas deep-learning models require a comprehensive sensitivity analysis since they are extremely sensitive to the chosen hyper-parameters. Therefore, extensive testing was conducted to find the proper hyper-parameters for deep-learning models. Table 3 lists the best-suited hyper-parameters for deep-learning models. Section 3.5 provides a complete description of selecting the best-suited hyper-parameters.

3.5 Hyper-Parameter Selection for Deep Neural Network Models

The proposed multi-channel CNN model consists of many hyper-parameters such as the number of filters, filter size, max-pooling window size, learning rate, batch size, activation functions, number of epochs, number of CNN layers, number of dense layers, number of neurons in dense layer and others. As the performance of the deep-learning models is very sensitive to the selected parameters, therefore the right choice of hyper-parameter selection is very important. To get the best set of hyper-parameters, first, we fixed the batch size of 80, max-pooling size of 5, and the learning rate to its default value i.e., 0.001, then started experiments with 2 layers of CNN and 2 layers of dense (128 and 3 neurons respectively at dense layers) where different combinations of 1-gram, 2-gram, 3-gram, and 4-gram filters were applied on each of the CNN layers by varying the number of filters. The extensive experiments were performed for 100 epochs, we found 128 filters of 2-gram, 3-gram, and 4-gram filters at the first CNN layer, and 128 filters of 1-gram at the second CNN layer performed best in the case of the multi-channel CNN model. Next, experiments were performed with different combinations of batch size and learning rate where the batch sizes of 80, 100, and 120 and learning rates of 0.01, 0.001, and 0.0001 were used. The multi-channel CNN performed best with a batch size of 100 and a learning rate of 0.001. Next, with the best-obtained batch size, learning rate, number of filters, and filter size, we experimented with one layer of multi-channel CNN, two layers of multi-channel CNN, and three layers of multi-channel CNN. The best performance was achieved in the case of two layers of multi-channel CNN. The detailed hyper-parameters for the multi-channel CNN model can be seen in Table 3.

As the best result was found with the multi-channel CNN with the batch size of 100, the learning rate of 0.001, we fixed this value for other experiments. In the case of single-channel CNN (CNN), the same set of experiments were performed with the number of filters and filter size. The best-performed hyper-parameters can be seen in Table 3. In the case of RNN with attention (RNN-A), GRU with attention (GRU-A), and LSTM with attention (LSTM-A) experiments were performed by varying the number of layers and number of hidden output units. The best performance was achieved with 2 layers having 256 and 128 hidden outputs units for each of the models. The detailed hyper-parameters for the RNN-A, GRU-A, and LSTM-A models can be seen in Table 3.

4 Results

All of the experiments were carried out on the Google CollaboratoryFootnote 10 platform, which includes an Nvidia K80/T4 GPU and 16 GB of RAM. KerasFootnote 11 Python deep-learning library with TensorflowFootnote 12 as a backend is used to create deep-learning models, whereas SklearnFootnote 13 Python library is used to implement conventional machine-learning classifiers. The extensive experiments were performed with 10-fold cross-validation (Kohavi et al., 1995) for both conventional machine-learning and deep-learning models. In 10-fold cross-validation, the entire data sample is randomly divided into 10 subsamples, in which one subsample is used to validate the system while the system is trained with nine subsamples. This process is repeated 10 times, with each of the ten subsamples being used only once as the validation data. The results from each of the folds are averaged to estimate the overall system performance. The performance of the models is evaluated using precision, recall, F1-score, accuracy, and Area Under Curve (AUC). The detailed description of each of the evaluation metrics can be seen in Powers (2011).

First, the experimentation was performed with seven different conventional machine-learning classifiers. The uni-gram, bi-gram, and tri-gram TF-IDF features of the tweets were used to classify tweets. The weighted averages of the precision, recall, and F1-score for each of the classifiers are listed in Table 4. In the case of flood, earthquake, and hurricane, the logistic regression (LR) classifier performed best with the weighted F1-score of 0.75, 0.86, 0.77, respectively. In the case of wildfire, the gradient boosting (GB) classifier performed better with a weighted F1-score of 0.80.

Next, the experimentation was performed with different deep neural network models. The range of the weighted average of F1-score for 10-fold cross-validation for different events is plotted using the box-whisker plot. The box-whisker plot of the RNN-A, GRU-A, LSTM-A, CNN, and MCNN models for flood, earthquake, hurricane, and wildfire events can be seen from Figs. 2, 3, 4, and 5 respectively.

For the flood event, the results of the different deep-learning models are listed in Table 5. The multi-channel CNN (MCNN) and signal channel CNN (CNN) both achieved a weighted F1-score of 0.84 and AUC of 0.93, which is better than other deep-learning models and conventional machine-learning models. Among all the implemented deep-learning models, the multi-channel CNN (MCNN) performed best for the eyewitness class with an F1-score of 0.70. For the earthquake event, the results of the different deep-learning models are listed in Table 6. Among all the implemented deep-learning models, GRU-A, LSTM-A, and MCNN performed equally well and achieved a weighted F1-score of 0.88 and AUC of 0.95. The CNN model performed comparatively well with a weighted F1-score of 0.88 and AUC of 0.96. For the eyewitness class, all models GRU-A, LSTM-A, CNN, and MCNN achieved an F1-score of 0.90. For hurricane event, the results of the different deep-learning models are listed in Table 7. The multi-channel CNN outperformed all the deep-learning and conventional machine-learning classifiers with the weighted F1-score of 0.84 and AUC of 0.94.

For the wildfire event, the results of the different deep-learning models are listed in Table 8. Among all the implemented deep-learning models, GRU-A, LSTM-A performed well with a weighted F1-score of 0.87 and AUC of 0.92, whereas the single-channel CNN and multi-channel CNN achieved an F1-score of 0.86 and AUC of 0.93. For the eyewitness class, LSTM-A performed best with an F1-score of 0.67 whereas multi-channel CNN achieved an F1-score of 0.60. The performance of the models for the eyewitness class is comparatively low. The possible reason for this can be a lower number of eyewitness samples (189 samples) were available (as can be seen from Table 2) for the wildfire event.

5 Discussion

The major finding of this research is that the multi-channel convolutional neural network (MCNN) is a better model to segregate eyewitness and non-eyewitness posts from Twitter compared to conventional machine-learning models and several other deep-learning models. The use of three different word-embedding vectors, GloVe, Crisis, and Word2vec in the multi-channel CNN, performed well as the features extracted from three different channels of CNN using 2-gram, 3-gram, and 4-gram filters provide robust features for the identification of eyewitness tweets. As the proposed system automatically identifies the most suitable features in their hidden layers for the identification of eyewitnesses, it does not need any feature engineering. In the case of the flood event, the F1-score of multi-channel CNN was improved by 9% in comparison to the logistic regression model of conventional machine-learning models whereas it performed comparatively well in the comparison of other deep-learning models. In the case of the earthquake event, the F1-score of multi-channel CNN was improved by 2% in comparison to the best-performed logistic regression model of conventional machine-learning models. In the case of hurricane event, the F1-score of multi-channel CNN improved by 7% in comparison to the best-performed logistic regression model of conventional machine-learning models. In the case of wildfire event, the F1-score of multi-channel CNN improved by 6% in comparison to the best-performed gradient-boosting model of conventional machine-learning models.

Our proposed multi-channel CNN outperformed the earlier work by Zahra et al. (2020) by a significant margin. For a fair comparison, we implemented our proposed MCNN model with the same set of datasets (without oversampling) used by Zahra et al. (2020). A comparative F1-scores for the classes eyewitness, non-eyewitness, and don’t know of the proposed MCNN models with Zahra et al. (2020) is listed in Table 9. In the case of the flood event, the proposed MCNN model achieved an F1-score of 0.72, 0.77, and 0.84 for the eyewitness, non-eyewitness, and don’t know classes, which is better than the earlier reported result by Zahra et al. (2020) on the same dataset. Similarly, for the earthquake event, MCNN achieved an F1-score of 0.94, 0.79, 0.39 for eyewitness, non-eyewitness, and don’t know classes, respectively. In the case of the hurricane, MCNN achieved an F1-score of 0.67, 0.87, and 0.66 for eyewitness, non-eyewitness, and don’t know classes, respectively. In the case of a wildfire event, MCNN achieved an F1-score of 0.60, 0.92, 0.79 for eyewitness, non-eyewitness, and don’t know classes, respectively. In all the events, the proposed MCNN model outperformed the earlier results reported by Zahra et al. (2020) by a significant margin.

5.1 Theoretical Contributions

During a disaster, a lot of irrelevant and redundant information is posted by people that is not useful for humanitarian aid (Kumar et al., 2020; Nguyen et al., 2017a). Therefore, the proposed system requires a filtering system (Kumar et al., 2020; Singh et al., 2019; Nguyen et al., 2017a; Caragea et al., 2016) at the top of it to first filter informative disaster-related tweets. Informative disaster-related tweets are those which are useful for humanitarian aid (Kumar et al., 2020). Then the proposed system can be used to classify these informative tweets into eyewitness, non-eyewitness, and don’t know classes to get a better perspective of the disaster. The development of a multi-channel convolutional neural network that employs three different word-embedding vectors to identify disaster-related eyewitness tweets is one of the proposed system’s significant theoretical contributions. The usage of three separate word-embedding vectors performs exceptionally well in terms of extracting robust features. Another theoretical contribution is that the suggested multi-channel convolutional neural network extract features automatically in their hidden layers, the proposed system does not require any human effort to extract significant features.

5.2 Implications for Practice

The suggested approach identifies eyewitness tweets only based on their textual content, making it simple to incorporate with any social media site. An android application based on the proposed MCNN might be developed to recognise eyewitness disaster tweets from live Twitter streams, allowing individuals to become more situationally aware during a crisis. The proposed system can be easily used by humanitarian organisations to locate disaster eyewitness information for better decision-making and to provide immediate assistance to victims because the posts of local citizens and disaster eyewitnesses convey more authentic information and provide a better perspective on the disaster. The predicted eyewitness social media posts can also be used by the location reference extractor model (Kumar and Singh, 2019; Dutt et al., 2018) to extract the location references mentioned in the social media posts, which can help to locate the victims.

Our proposed multi-channel convolutional neural network identified “Earthquake in Shinjuku, could feel the entire hotel moving. #earthquake #earthquakeintokyo #massiveearthqauke #japan” and “So a small earthquake woke me up.... I’m fine btw! A couple things fell in the streets. Never experienced one before so it was something” as the eyewitness tweet of earthquake event whereas “Hosman Church needs your help! our ceiling collapsed due to a water flood in the apartment upstairs. Thank you! https://t.co/wbAYbsn5rb” and “Our friends at Mechanic suffered some horrible flood damage last night. They are great guys. Consider helping them https://t.co/GLOhCNY4IY” as the eyewitness tweets of flood event. The first eyewitness tweet of the earthquake incident includes a crucial situational awareness statement as well as event location references such as Shinjuku and Japan. Similarly, the third eyewitness tweet about the flood event has details about individuals pleading for aid by giving their location. A similar scenario can be observed in the fourth eyewitness tweet, in which a person requests assistance for a friend during a flood disaster by mentioning their location details. In the case of the second earthquake eyewitness tweet, it can be observed that the eyewitness of the earthquake was simply reporting a minor earthquake by noting his own status, implying that the devastation caused by the earthquake will be low at that location. Therefore, humanitarian and government organisations may use the outcomes of the current work to make better decisions during disasters. The suggested approach may also be used efficiently in a variety of applications such as better event detection, finding credible information, and obtaining useful first-hand information in journalism.

5.3 Limitations and Future Directions

The limitation of this work is that the proposed system is trained with English language tweets only, but during the disaster, people also post a considerable amount of tweets in their regional languages. Therefore, the proposed system may not perform equally in those cases. In the proposed work, we did not check the authenticity of the posted tweets which is another limitation of the proposed work. Therefore, in the future, a system can be made to check the authenticity of the posted tweets and a multilingual system can be made to solve the issue of multilinguality. In this work, we have only used textual content of the tweets by discarding images that can contain important visual clues in identifying eyewitness posts. Therefore, a multi-modal system can also be made in the future to get more insight into identifying eyewitness tweets.

6 Conclusion

The identification of eyewitness tweets during the disaster provides the reality on the ground. But the identification of eyewitness tweets is very challenging, as a very small fraction of geotagged tweets is available. Identifying eyewitness tweets from their textual content is difficult as tweets are of short length and do not provide enough context about the event. In this work, we have proposed a multi-channel convolutional neural network (MCNN) model to classify tweets into eyewitness, non-eyewitness, and don’t know classes. The proposed model overcomes the need for handcrafted features. The proposed MCNN model performs better than the conventional machine-learning models and several other deep-learning models by achieving an F1-score of 0.84, 0.88, 0.84, and 0.86 for flood, earthquake, hurricane, and wildfire respectively. The proposed system can be integrated with any social media platform as it uses only textual information for training the model. As the availability of eyewitness messages is limited, a generative adversarial neural network-based model can be made to deal with the situation of the data imbalance problem.

Notes

References

Alam, F., Imran, M., & Ofli, F. (2017). Image4act: Online social media image processing for disaster response. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2017 (pp. 601–604). ACM.

Bandyopadhyay, A., Ganguly, D., Mitra, M., Saha, S.K., & Jones, G.J. (2018). An embedding based ir model for disaster situations. Information Systems Frontiers, 20, 925–932.

Beydoun, G., Dascalu, S., Dominey-Howes, D., & Sheehan, A. (2018). Disaster management and information systems: Insights to emerging challenges. Information Systems Frontiers, 20, 649–652.

Caragea, C., Silvescu, A., & Tapia, A.H. (2016). Identifying informative messages in disaster events using convolutional neural networks. In International conference on information systems for crisis response and management (pp. 137–147).

Chaudhuri, N., & Bose, I. (2019). Application of image analytics for disaster response in smart cities. In Proceedings of the 52nd Hawaii International Conference on System Sciences.

Chung, J., Gulcehre, C., Cho, K., & Bengio, Y. (2014). Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv:1412.3555

Daly, S., & Thom, J.A. (2016). Mining and classifying image posts on social media to analyse fires. In ISCRAM (pp. 1–14). Citeseer.

Doggett, E., & Cantarero, A. (2016). Identifying eyewitness news-worthy events on Twitter. In Proceedings of The Fourth International Workshop on Natural Language Processing for Social Media (pp. 7–13).

Dutt, R., Hiware, K., Ghosh, A., & Bhaskaran, R. (2018). Savitr: a system for real-time location extraction from microblogs during emergencies. In Companion Proceedings of the The Web Conference, (Vol. 2018 pp. 1643–1649).

Fang, R., Nourbakhsh, A., Liu, X., Shah, S., & Li, Q. (2016). Witness identification in Twitter. In Proceedings of the Fourth International Workshop on Natural Language Processing for Social Media (pp. 65–73).

Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9, 1735–1780.

Imran, M., Castillo, C., Diaz, F., & Vieweg, S. (2015). Processing social media messages in mass emergency: a survey. ACM Computing Surveys (CSUR), 47, 1–38.

Imran, M., Castillo, C., Lucas, J., Meier, P., & Vieweg, S. (2014). Aidr: Artificial intelligence for disaster response. In Proceedings of the 23rd International Conference on World Wide Web (pp. 159–162).

Imran, M., Ofli, F., Caragea, D., & Torralba, A. (2020). Using AI and social media multimodal content for disaster response and management: Opportunities, challenges, and future directions. Information Processing & Management 57. https://doi.org/10.1016/j.ipm.2020.102261

Kersten, J., Kruspe, A., Wiegmann, M., & Klan, F. (2019). Robust Filtering of crisis-related tweets. In Z. Franco J.H. Canós (Eds.) ISCRAM. https://elib.dlr.de/127586/

Kingma, D.P., & Ba, J. (2014). Adam: A method for stochastic optimization. arXiv:1412.6980

Kohavi, R., et al. (1995). A study of cross-validation and bootstrap for accuracy estimation and model selection. In Ijcai, (Vol. 14 pp. 1137–1145). Montreal: Canada.

Kumar, A., & Singh, J.P. (2019). Location reference identification from tweets during emergencies: a deep learning approach. International Journal of Disaster Risk Reduction, 33, 365–375.

Kumar, A., Singh, J.P., Dwivedi, Y.K., & Rana, N.P. (2020). A deep multi-modal neural network for informative Twitter content classification during emergencies. Annals of Operations Research, pp. 1–32. https://doi.org/10.1007/s10479-020-03514-x

Kumar, A., Singh, J.P., & Rana, N.P. (2017). Authenticity of geo-location and place name in tweets. In Proceedings of the 23rd Americas Conference on Information Systems (pp. 1–10).

Kumar, A., Singh, J.P., & Saumya, S. (2019). A comparative analysis of machine learning techniques for disaster-related tweet classification. In IEEE Region 10 Humanitarian Technology Conference (pp. 222–227).

Kumar, S., Morstatter, F., Zafarani, R., & Liu, H. (2013). Whom should I follow? Identifying relevant users during crises. In Proceedings of the 24th ACM conference on hypertext and social media (pp. 139–147).

Lagerstrom, R., Arzhaeva, Y., Szul, P., Obst, O., Power, R., Robinson, B., & Bednarz, T. (2016). Image classification to support emergency situation awareness. Frontiers in Robotics and AI, 3, 54.

Levy, O., & Goldberg, Y. (2014). Dependency-based word embeddings. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, (Vol. 2, Short Papers pp. 302–308).

Liu, F., & Xu, D. (2018). Social roles and consequences in using social media in disasters: a structurational perspective. Information Systems Frontiers, 20, 693–711.

Madichetty, S., & Sridevi, M. (2019). Detecting informative tweets during disaster using deep neural networks. In 2019 11th International Conference on Communication Systems & Networks (COMSNETS) (pp. 709–713). IEEE.

Madichetty, S., & Sridevi, M. (2021). A novel method for identifying the damage assessment tweets during disaster. Future Generation Computer Systems, 116, 440–454.

Mendon, S., Dutta, P., Behl, A., & Lessmann, S. (2021). A hybrid approach of machine learning and lexicons to sentiment analysis: enhanced insights from Twitter data of natural disasters. Information Systems Frontiers, pp. 1–24.

Mikolov, T., Karafiát, M., Burget, L., Cernockỳ, J., & Khudanpur, S. (2010). Recurrent neural network-based language model. In Interspeech, Makuhari, (Vol. 2 pp. 1045–1048).

Mirbabaie, M., Ehnis, C., Stieglitz, S., Bunker, D., & Rose, T. (2021). Digital nudging in social media disaster communication. Information Systems Frontiers, 23, 1097–1113.

Mohanty, S.D., Biggers, B., Sayedahmed, S., Pourebrahim, N., Goldstein, E.B., Bunch, R., Chi, G., Sadri, F., McCoy, T.P., & Cosby, A. (2021). A multi-modal approach towards mining social media data during natural disasters - a case study of hurricane irma. International Journal of Disaster Risk Reduction, 54, 102032.

Morstatter, F., Lubold, N., Pon-Barry, H., Pfeffer, J., & Liu, H. (2014). Finding eyewitness tweets during crises. In Proceedings of the ACL 2014 Workshop on Language Technologies and Computational Social Science. Baltimore, MD, USA: Association for Computational Linguistics.

Nguyen, D., Al Mannai, K.A., Joty, S., Sajjad, H., Imran, M., & Mitra, P. (2017a). Robust classification of crisis-related data on social networks using convolutional neural networks. In Proceedings of the International AAAI Conference on Web and Social Media, (Vol. 11 pp. 632–635).

Nguyen, D.T., Alam, F., Ofli, F., & Imran, M. (a). Automatic image filtering on social networks using deep learning and perceptual hashing during crises. arXiv:1704.02602

Nguyen, D.T., Joty, S., Imran, M., Sajjad, H., & Mitra, P. (b). Applications of online deep learning for crisis response using social media information. arXiv:1610.01030

Nguyen, D.T., Ofli, F., Imran, M., & Mitra, P. (2017b). Damage assessment from social media imagery data during disasters. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, (Vol. 2017 pp. 569–576).

Olteanu, A., Vieweg, S., & Castillo, C. (2015). What to expect when the unexpected happens: Social media communications across crises. In Proceedings of the 18th ACM conference on computer supported cooperative work & social computing (pp. 994–1009).

Palshikar, G.K., Apte, M., & Pandita, D. (2018). Weakly supervised and online learning of word models for classification to detect disaster reporting tweets. Information Systems Frontiers, 20, 949–959.

Pekar, V., Binner, J., Najafi, H., Hale, C., & Schmidt, V. (2020). Early detection of heterogeneous disaster events using social media. Journal of the Association for Information Science and Technology, 71, 43–54.

Pham, D.-H., & Le, A.-C. (2018). Exploiting multiple word embeddings and one-hot character vectors for aspect-based sentiment analysis. International Journal of Approximate Reasoning, 103, 1–10.

Powers, D.M.W. (2011). Evaluation: from precision, recall and f-measure to roc, informedness, markedness and correlation. https://doi.org/10.48550/ARXIV.2010.16061

Qiu, X., Sun, T., Xu, Y., Shao, Y., Dai, N., & Huang, X. (2020). Pre-trained models for natural language processing: a survey. Science China Technological Sciences, 63, 1872–1897.

Roy, S., Mishra, S., & Matam, R. (2020). Classification and summarization for informative tweets. In 2020 IEEE International Students’ Conference on Electrical, Electronics and Computer Science (SCEECS) (pp. 1–4). IEEE.

Sakaki, T., Okazaki, M., & Matsuo, Y. (2012). Tweet analysis for real-time event detection and earthquake reporting system development. IEEE Transactions on Knowledge and Data Engineering, 25, 919–931.

Singh, J.P., Dwivedi, Y.K., Rana, N.P., Kumar, A., & Kapoor, K.K. (2019). Event classification and location prediction from tweets during disasters. Annals of Operations Research, 283, 737–757. https://doi.org/10.1007/s10479-017-2522-3

Stefan, I., Rebedea, T., & Caragea, D. (2019). Classification of eyewitness tweets in emergency situations. In RoCHI (pp. 46–52).

Tanev, H., Zavarella, V., & Steinberger, J. (2017). Monitoring disaster impact: detecting micro-events and eyewitness reports in mainstream and social media. In ISCRAM.

Truelove, M., Khoshelham, K., McLean, S., Winter, S., & Vasardani, M. (2017). Identifying witness accounts from social media using imagery. ISPRS International Journal of Geo-Information, 6, 120.

Truelove, M., Vasardani, M., & Winter, S. (2015). Towards credibility of micro-blogs: characterising witness accounts. GeoJournal, 80, 339–359.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In Advances in neural information processing systems (pp. 5998–6008).

Wang, Y., Huang, M., Zhu, X., & Zhao, L. (2016). Attention-based LSTM for aspect-level sentiment classification. In Proceedings of the 2016 conference on empirical methods in natural language processing (pp. 606–615).

Yang, Z., Yang, D., Dyer, C., He, X., Smola, A., & Hovy, E. (2016). Hierarchical attention networks for document classification. In Proceedings of the 2016 conference of the North American chapter of the Association for Computational Linguistics: human language technologies (pp. 1480–1489).

Yu, M., Huang, Q., Qin, H., Scheele, C., & Yang, C. (2019). Deep learning for real-time social media text classification for situation awareness – using hurricanes Sandy, Harvey and Irma as case studies. International Journal of Digital Earth, 0, 1–18. https://doi.org/10.1080/17538947.2019.1574316

Zahra, K., Imran, M., & Ostermann, F.O. (2020). Automatic identification of eyewitness messages on Twitter during disasters. Information Processing & Management, 57, 102107.

Zahra, K., Imran, M., Ostermann, F.O., Boersma, K., & Tomaszewski, B. (2018). Understanding eyewitness reports on Twitter during disasters. In Proceedings of the of the ISCRAM, (Vol. 2018 pp. 687–695).

Zhang, Y., Roller, S., & Wallace, B.C. (2016). MGNC-CNN: A simple approach to exploiting multiple word embeddings for sentence classification. In Proceedings of NAACL-HLT (pp. 1522–1527).

Zola, P., Ragno, C., & Cortez, P.A. (2020). Google Trends spatial clustering approach for a worldwide Twitter user geolocation. Information Processing & Management, 57, 102312.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Kumar, A., Singh, J.P., Rana, N.P. et al. Multi-Channel Convolutional Neural Network for the Identification of Eyewitness Tweets of Disaster. Inf Syst Front 25, 1589–1604 (2023). https://doi.org/10.1007/s10796-022-10309-x

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10796-022-10309-x