Abstract

This paper engages with the emerging field of Artificial Intelligence (AI) governance and seeks to contribute to the relevant literature from three angles, grounded in international human rights law, Law and Technology, Science and Technology Studies (STS) and theories of technology. First, focusing on the shift from ethics to governance, it offers a bird's-eye overview of the developments in AI governance, comparing ethical principles with binding rules for the governance of AI, and critically reviewing the latest regulatory developments. Secondly, focusing on the role of human rights, it takes the argument that human rights offer a more robust and effective framework a step further, arguing for the necessity to extend human rights obligations to also directly apply to private actors in the context of AI governance. Finally, it offers insights for AI governance borrowing from the Internet Governance history and the broader technology governance field.

1 Introduction

During the last two decades, Artificial Intelligence (AI) and various kinds of algorithms have rapidly become integral for numerous sectors and industries, from recommendation services, online content moderation, and advertising to the provision of healthcare, and policy-making (Aizenberg & van den Hoven, 2020; Cath, 2018; Gerards, 2019). Bearing the promise of swift, rational, objective, and efficient decision-making, they are often employed to inform or make critical decisions in a constantly growing number of central and socially consequential domains, including but not limited to the justice system (Giovanola & Tiribelli, 2022; Yeung et al., 2019). From this angle, enabling data-driven, automated decision-making, advanced reasoning and processing features, AI and algorithms are considered to offer new opportunities for individuals, and the society at large, to improve and augment their capabilities and wellbeing (Floridi et al., 2018; Smuha, 2021b; Tegmark, 2017). They are also expected to contribute to global productivity (Agrawal et al., 2019), the achievement of sustainable development goals (Pedemonte, 2020; Vinuesa et al., 2020), as well as broader environmental objectives, from green technologies (Elshafei & Negm, 2017; Mishra et al., 2021) to climate change (Cowls et al., 2021).

However, apart from the benefits they offer, we have gradually come to realise that AI systems and algorithms also pose a wide range of risks (Gerards, 2019; Radu, 2021; Taeihagh, 2021) and ethical challenges (Stahl, 2021). Instances of discrimination and bias (Binns, 2017; Borgesius, 2018; Lambrecht & Tucker, 2018), online disinformation and opinion manipulation (Allen & Massolo, 2020; Cadwalladr, 2020), private censorship (Gillespie, 2014, 2018), pervasive monitoring (Feldstein, 2019a; Kambatla et al., 2014), as well as adverse job market effects (Agrawal et al., 2019; Vochozka et al., 2018), have raised serious concerns. For example, algorithmic processes and automated decision-making may reinforce and widen social inequalities (Chander & Pasquale, 2016; O’Neil, 2016; Risse, 2018), as the same key functionalities that lead to more accurate and informed decisions may also perpetuate bias and discrimination (Floridi et al., 2018; Miller, 2020a, b; Murray et al., 2020), either due to fragmented or non-representative databases or because algorithms tend to reproduce the prejudices already existing in our societies (Miller, 2020a, b). Additionally, AI may have a considerable impact on how individuals deliberate and act, affecting our autonomy (Laitinen & Sahlgren, 2021; Vesnic-Alujevic et al., 2020), or even undermining self-determination (Danaher, 2018). In this way, AI systems and algorithms may also foster repression and authoritarian practices (Feldstein, 2019b). Consequently, it is widely recognised that if such technologies “are poorly designed, developed or misused, they can be highly disruptive to both individuals and society” (Fukuda-Parr & Gibbons, 2021).

Simultaneously, as AI systems are increasingly becoming embedded in several social contexts, they also become highly relevant for human rights (Aizenberg & van den Hoven, 2020; Gerards, 2019). For instance, algorithms are implemented to make or inform critical decisions that define individuals’ suitability for, or entitlement to, life-affecting opportunities and/or benefits (McGregor et al., 2019; Yeung et al., 2019). They are used to screen applicants’ CVs and make recommendations for study or job openings. They are also employed to assess individuals’ income and credit scores to determine their access to the credit system, or their eligibility for state subsidies. Such decisions affect or even interfere with a wide array of human rights, such as freedom from discrimination, the right to work, and the right to education (Latonero, 2018; Raso et al., 2018a). Additionally, algorithms used for online content moderation have been frequently accused of private censorship, or opinion-shaping (Borgesius, 2018; Gillespie, 2020; Gorwa et al., 2020), while mass biometric surveillance and facial recognition algorithms threaten our privacy in an unprecedented way (Smith & Miller, 2021). Finally, AI and algorithms have also been introduced to the justice system, giving rise to concerns that, either in the form of Law Tech (Kennedy, 2021) or as prediction and risk assessment mechanisms, they may affect or interfere with the right to equality before the law, fair trial, freedom from arbitrary arrest, detention, and exile, or even with the rights to liberty and personal security (Asaro, 2019b; Završnik, 2020).

The accelerating pervasiveness and ubiquity of AI systems, combined with the growing public concern about their ethical, social, and human rights implications, have brought to the forefront questions related to the steering of such technologies towards socially beneficial ends (Floridi et al., 2018; Stahl et al., 2021a, b; Taddeo & Floridi, 2018). Initially, the main response to concerns about the negative aspects of AI and algorithms has been a turn to ethics (Smuha, 2021a), or as Boddington describes it, “a rush to produce codes of ethics for AI, as well as detailed technical standards” (Boddington, 2020). Governments, intergovernmental organisations, public actors, civil society groups, and private sector stakeholders turned to value-based norms in search of “ethical AI” or “responsible AI” (Fukuda-Parr & Gibbons, 2021; Smuha, 2021a), to ensure the ethical and societally beneficial design, development, and deployment of AI (AI-DDD). Currently, it seems that we are at a turning point, as governments and intergovernmental organisations have taken several initiatives toward developing specific regulatory instruments. Essentially, the focus on steering AI and algorithms toward ethical and societally beneficial ends is shifting from primarily relying on ethical codes to gradually introducing binding legislation (Maas, 2022; Smuha, 2021b; Taeihagh, 2021). However, although several modes of governance have been suggested (Almeida et al., 2021; Smuha, 2021b), AI governance remains a relatively underdeveloped field of academic research and policy practice (Cihon et al., 2020; Taeihagh, 2021).

In this context, human rights are highly relevant and have been frequently discussed. The impacts and implications of AI, algorithms and automated decision-making have emerged as important areas of human rights concern during the last decade. Moreover, human rights have been proposed as a source of ethical standards (Gerards, 2019; Yeung et al., 2019) or design principles (Aizenberg & van den Hoven, 2020; Umbrello, 2022). They have also been suggested as a better alternative to ethics in terms of an accountability framework (McGregor et al., 2019). Additionally, some researchers argue that a more direct application of human rights law can provide clarity and guidance in identifying potential solutions to the AI challenges (Stahl et al., 2021a, b). From a similar point of view, human rights have been suggested as governance principles, to “underlie, guide, and fortify” an AI governance model (Smuha, 2021a). Most notably, they have been identified by the High-Level Expert Group on AI (AI HLEG) as offering “the most promising foundations for identifying abstract ethical principles and values, which can be operationalised in the context of AI” (AI HLEG, 2019b). Similarly, the United Nations (UN) has highlighted the role of the Universal Declaration of Human Rights (UDHR) in offering a basis for AI principles (Hogenhout, 2021).

Considering the turn to governance in conjunction with the growing relevance of human rights in the AI-DDD and AI governance discourse, this paper engages with the question “how should we regulate AI?” from a Law and Technology and Science and Technology Studies (STS) point of view. Grounded in international human rights law theory, and business and human rights scholarship, the study seeks to supplement the emerging AI governance literature from three angles. First, it focuses on the shift from steering AI through ethics and means of soft regulation to AI governance through particular national and intergovernmental binding instruments. In that context, it offers a bird's-eye overview of the developments in AI governance, comparing ethical principles with binding rules for the governance of AI, and critically reviewing the latest regulatory developments.

Secondly, turning to the role of human rights in steering AI, through this paper I wish to take a step further the argument that human rights offer a more robust and effective framework to ensure the AI-DDD for the benefit of society. More specifically, building upon human rights and business discourse, I argue that human rights may offer more than aspirational and normative guidance in AI-DDD as well as in AI governance, if they are employed as concrete legal obligations that directly apply to both public and private actors. In my view, in an increasingly AI-mediated world the direct application of human rights obligations to private actors in terms of AI governance or through a new human rights treaty for the private sector and AI, constitutes a critical step to adequately protect human rights. Moreover, it is a meaningful way to ensure that such technologies will contribute to the flourishing of society (Gibbons, 2021; Stahl et al., 2021a, b). This aspect of direct human rights application in AI governance is not yet addressed in the relevant literature. This way, I offer a new perspective to AI governance and human rights research, providing arguments from the direct horizontality discourse and the business and human rights field.

Finally, looking beyond human rights, I offer insights to the emerging AI governance scholarship building upon the rich tradition of theories of technology, technology governance and the Internet Governance (IG) history. I start from the observation that whereas the field of AI raises very deep and broad philosophical questions, the ethical and governance questions are not necessarily new (Niederman & Baker, 2021), nor exclusively inherent to AI (Stahl et al., 2021a, b). Additionally, the discussion regarding the steering of new and disruptive technologies towards ethical and societally beneficial ends is part of a broader discourse on the relationship between technology and society (Benedek et al., 2017; Bucchi, 2009; Strobel & Tillberg-Webb, 2009) that is almost as old as human history (Black & Murray, 2019). From this angle, I argue for the necessity to examine what is at stake in AI governance and seek insights by studying the governance trajectory of other major disruptive technologies, such as the Internet.

The rest of the paper is structured as follows: In Section 2 I discuss the definition of the key terms and concepts. In Section 3, I focus on the turn from ethics to governance. I argue that although ethics are vital to steer AI-DDD to the benefit of society they are not sufficient to ensure it. Moreover, regardless of the positive steps in AI governance adequate human rights protection is not necessarily ensured yet. In Section 4, I aim to advance the discourse regarding the role of human rights, suggesting the direct application of human rights obligations to private actors in the context of AI governance. Before offering my closing remarks (Section 6), in Section 5 I ask the question of what AI governance can learn from IG and the broader field of technology governance, addressing the relevance of IG as a source of insights, and three critical points of consideration building upon the IG experience.

2 Key terms and concepts

Given that conceptual clarity and terminological consistency are yet to be achieved in AI literature (Collins et al., 2021; Larsson, 2020; Surden, 2020), it is essential to start by clarifying the way the key terms will be used. Moreover, as will be further discussed in Sections 3 and 5, the way AI systems are defined is critical for policymaking (Bhatnagar et al., 2018; Collins et al., 2021). Additionally, the field of international human rights may be significantly complex. The focus here is on the UN human rights system, which comprises the UN human rights principles along with the institutional mechanism to encourage and monitor compliance by the states (Buergenthal, 2006).

2.1 Artificial Intelligence

Like almost any other term that is shared across several different disciplines and also receives a fair share of public and media attention, AI is riddled with multiple interpretations (Scherer, 2016), covering different aspects (Haenlein & Kaplan, 2019), functions, and functionalities, ranging from the capabilities of a smartphone to those of self-operating vehicles, or robots (Ertel, 2017; Risse, 2018). Through their systematic literature review of the field, Collins et al. (2021) identified a large variety of different definitions. According to their findings, apart from the lack of cohesion, a noteworthy observation is that most definitions tend to focus on what AI systems are capable of, instead of what AI actually is. The term can be traced back to 1956 when AI was described by John McCarthy as “the science and engineering of making intelligent machines” (McCarthy, 2018). More recent definitions describe it as “the ability of a machine to perform cognitive functions that we associate with human minds, such as perceiving, reasoning, learning, interacting with the environment, problem-solving, decision-making, and even demonstrating creativity” (Rai et al., 2019) or the process that “enables the machine to exhibit human intelligence, including the ability to perceive, reason, learn, and interact, etc.” (Russel & Norvig, 2020).

Outside academia, the EU Commission defined it as “a collection of technologies that combine data, algorithms and computing power” (European Commission, 2020), while the HLEG described it as “software (and possibly also hardware) systems designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information, derived from this data and deciding the best action(s) to take to achieve the given goal. AI systems can either use symbolic rules or learn a numeric model, and they can also adapt their behaviour by analysing how the environment is affected by their previous actions” (AI HLEG, 2019). Using human cognition as a point of reference, and highlighting the wide range of forms and applications, Szczepański (2019), in his report to the EU Parliament, defined it as the “term used to describe machines performing human-like cognitive processes such as learning, understanding, reasoning and interacting. It can take many forms, including technical infrastructure (i.e. algorithms), a part of a (production) process, or an end-user product.”

The common thread amongst the majority of the descriptions and proposed definitions is the increasing capacity of machines to perform specific cognitive functions, roles and tasks currently performed by humans (Dwivedi et al., 2021). Thus, a key component of the definition of AI is the element of intelligence. Within the AI discourse, intelligence is a somewhat elusive term (Bryson & Theodorou, 2019; Ray, 2021). Although researchers suggest that in the context of AI intelligence should not be understood in terms of human intelligence (Bryson & Theodorou, 2019; Collins et al., 2021), the mere reference to intelligence almost automatically brings to mind human-related and human-premised associations, and it is commonly connected with notions such as sentience, sensibility, consciousness, self-awareness, or intentionality (Ertel, 2017). While such an anthropocentric approach is an integral part of human cognition, it may still be rather misleading or even unsuitable (Sætra, 2021). As Russel and Norvig (2020) note, even if a certain degree of anthropomorphism is not only expected but also descriptively helpful, the emphasis on “human-like” intelligence may be deceiving. Stemming from this observation, Bryson argues that adopting a simple definition of intelligence is of utmost importance for developing the appropriate governance mechanisms (Bryson, 2020).

Yet, AI is not a single nor a stand-alone technology but “a deeply technical family of cognitive technologies” (Kuziemski & Misuraca, 2020), which include various techniques, subfields and applications (Gasser & Almeida, 2017), from machine learning, and natural language processing, to robotics and systems of super-intelligence (Raso et al., 2018a, b; Stahl et al., 2021a, b). In turn, the term “artificial intelligence” is a collective noun, an “umbrella term” employed to describe a cluster of technologies and applications (Dubber et al., 2020; Latonero, 2018), linked to and embedded in other technologies (Stahl et al., 2021a, b). The relevance of a variety of different technologies, processes, and procedures makes it difficult to clearly delineate artificial intelligence in a single way and across all contexts, particularly as often distinguishing between them is considerably hard (Stahl, 2021). Moreover, due to the impressive pace at which formerly cutting-edge innovations become mundane, “losing the privilege of being categorized as AI”, it is not always clear which technologies can be labelled as AI (Raso et al., 2018a).

In this paper, AI is perceived and studied as an enhanced computation process, contextualised in time and space, which is intentionally designed, based on predefined rules and data input (Bryson, 2020). I use the term to denote the various forms of software (and their physical carrier whenever relevant) designed to perform such enhanced computational functions, including problem-solving, pattern recognition, analysis, recommendation and decision-making (Yavar, 2018). Studying the ethical and societally beneficial AI-DDD, I embrace AI as a general-purpose technology (Dafoe, 2018; Trajtenberg, 2018), the disruptive effects of which may be “as transformative” as the industrial revolution (Gruetzemacher & Whittlestone, 2022). I focus equally on the externalities of AI systems and algorithmic procedures (i.e. their impact and effects, including unintended outcomes), and the impactful role of the actors involved in their design, development, and deployment, wishing to highlight the centrality of their agency.

2.2 Human Rights

The UN initiated the drafting of the first major international instrument containing a specific set of rights reserved for all human beings, in response to the atrocities of the Second World War (Kanalan, 2014; see also the preamble to the UDHR). Adopted on 10 December 1948, the UDHR constitutes a foundational text in the history of human rights. Combined with the two “twin” covenants, the International Covenant on Economic, Social and Cultural Rights (ICESCR) and the International Covenant on Civil and Political Rights (ICCPR), it constitutes the core of the “UN human rights system.” The rights enshrined in these instruments, serving as the basic moral and legal entitlements of every human being, have a dual function, both as legal requirements under international law, and as norms encapsulating and reflecting moral, ethical, and social values (Bilchitz, 2016a; Tasioulas, 2013). Today, human rights are deeply rooted in contemporary politics and law, recognised in political practice and legal institutions globally (Etinson, 2018). In this context, the UN human rights system constitutes the key benchmark of international human rights protection, as most of the 193 Member-States of the UN have ratified at least one major human rights treaty, including the UDHR.

Thus, even though not uncontroversial (Hopgood, 2018), nor equally applied universally (Tharoor, 2000), human rights represent a rare sum of principles and norms that are widely shared and institutionalised globally. Under the ‘tripartite typology of human rights obligations,’ the subjects of the international human rights obligations ought to ‘respect, protect and fulfil’ human rights (Asbjørn, 1987). In short, “respecting” human rights entails the obligation to refrain from taking any action that would infringe upon the enjoyment of these rights; “protecting” human rights refers to the duty to prevent violations of human rights by taking concrete measures; while “fulfilling” human rights relates to the duty to facilitate the realisation and enjoyment of human rights (Alston & Quinn, 2017). Yet, who is the subject of these obligations? The answer to this question is closely related to a crucial and increasingly debated characteristic of international human rights that is also a key point of this article.

Human rights are vertical in nature. The term “vertical” implies that the state, placed on a higher level than the individual, is the obligation-holder, while the individual is the right-holder (Lane, 2018a). The rationale behind the vertical application, which is closely related to the historical trajectory of human rights (Witte, 2009), reflects the view that the state is the key violator of individuals’ rights and freedoms, particularly given the far greater power it possesses. Building upon the power asymmetry between the state and the individuals, human rights are intended to serve as a shield, protecting people from the power of the state (Dawn & Fedtke, 2008). The “vertical nature/effect” is also the result of the fundamental principles of international law. Under international law, for an actor to be directly bound by international human rights it should be recognised as a subject of international law (Alston, 2005; Clapham, 2006; Kanalan, 2014). Given that the states constitute the original subjects and primary actors of international law (Bilchitz, 2016a; Kampourakis, 2019; Lane, 2018b), only the states are directly bound by international human rights law and treaties.

Due to the vertical application of international human rights law, and the so-called “state-centric model” (de Aragão & Roland, 2017), private law relationships are broadly considered to be immune from direct human rights effects (Cherednychenko, 2007). Therefore, the protection of human rights between actors that lie on the same level, in other words between private individuals or entities, depends on domestic legislation and the extent to which the states have translated human rights into their national legal order, establishing the necessary framework and the structures required to ensure they are sufficiently protected. The degree to which this model is adequate and effective in the contemporary world is contested and will be addressed in Section 4. For now, it suffices to note that the increasing power asymmetries between individuals and corporations, most prominently Transnational Corporations (TNCs), and the shift in the balance of power to negatively affect human rights, combined with the failure of states to foster meaningful mechanisms to protect human rights at a national level and to safeguard access to meaningful remedy and redress, have intensified the discussions over a paradigm shift in international human rights law (Kampourakis, 2019; Zamfir, 2018).

3 Steering AI: From Ethics to Governance

3.1 The rush to ethics

3.1.1 Ethical principles and guidelines as the early response to AI challenges

As mentioned in the Introduction, the early response to the growing recognition that AI and algorithms may also have adverse impacts has been a rush to develop and promote a wide range of value-based norms, ethical codes, and declarations (Boddington, 2020; Radu, 2021; Smuha, 2021a). Even though Floridi and Cowls (2019) rightfully observe that the ethical debate is almost as old as the emergence of AI as a field of research, there has been a remarkable proliferation of ethical principles related to AI since 2016 (Winfield et al., 2019), as the application of AI and algorithms drastically increased during the mid-2010s (Bryson, 2019). From that moment on, harnessing the potential of AI while mitigating, or at least balancing, its negative effects and harmful consequences became a pressing priority, centred around the need to make AI more “ethical”. Thus, AI Ethics came into the limelight, since almost all the major stakeholders eagerly engaged in an unofficial competition to develop and publish their own set of ethical norms as soon as ethical concerns related to AI gained momentum. Governments and intergovernmental organisations formed ad hoc expert committees, tasked to offer policy recommendations. Simultaneously, ethical guidelines have been developed by several private actors, companies, research entities, think tanks, and policy bodies (Jobin et al., 2019).

Hence, the term AI Ethics practically refers to the field of moral principles, ethical guidelines, codes, frameworks and declarations, intended to inform, guide and secure the ethical AI-DDD across several different sectors (Muller, 2020a, b; Whittlestone et al., 2019). At a regional level, the EU appointed the HLEG (AI HLEG, 2019a, b) to gather expert input from diverse stakeholder groups and produce guidelines for the ethical use of artificial intelligence, emphasising the key role of the EU Charter of Fundamental Rights for informing and guiding AI development. Similarly, in February 2018 the Council of Europe (CoE) established a Task Force on AI within the European Commission for the Efficiency of Justice (CEPEJ) to “lead the drafting of guidelines for the ethical use of algorithms within justice systems, including predictive justice”, and an Expert Committee on human rights dimensions of automated data processing and different forms of artificial intelligence (MSI-AUT). Outside Europe, the UN established the Ad Hoc Expert Group on the Ethics of AI (Hogenhout, 2021), while the Organisation for Economic Co-operation and Development (OECD) appointed the expert group on AI in Society (OECD, 2019). Each of these expert groups produced a distinct set of principles and ethical guidelines for the steering of AI and algorithms towards ethical, and by extension, societally beneficial ends.

At a national level, more than thirty countries, such as the UK, France, Germany, China, Japan, Canada, Finland, and the United Arab Emirates (UAE), have started drafting or even implementing national AI strategies (Dutton, 2018), centred around notions such as “ethical implementation” and “good and trustworthy AI” (Jobin et al., 2019; UK Government, 2021). Additionally, professional bodies, civil society organisations, and various think-tanks developed ethical principles and guidelines, aimed at guiding practitioners, and shaping AI-DDD to the benefit of individuals and society at large. The Future of Life Institute developed “The Asilomar AI Principles” in January 2017. At the same time, the Association for Computing Machinery US Public Policy Council (USACM) published “The Statement on algorithmic transparency and accountability”, while in March 2017 the Institute of Electrical and Electronics Engineers (IEEE) provided “The IEEE Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems.” Slightly later the same year, the University of Montreal published “The Montreal Declaration for Responsible AI.” Several non-state actors, and most prominently a handful of major technology companies, have also issued their own ethical declarations, principles, and ethical codes, expressing their commitment to the ethical and responsible development and deployment of AI and algorithms, either individually (see for example Facebook, 2020; IBM, 2020; Microsoft, 2017; Pichay, 2018; Pinjušić, 2022) or jointly (Partnership on AI, 2020).

Reviewing the reaction to concerns over the negative implications of AI, it seems that the need for some basic rules to guide AI-DDD was widely recognised, while ethics have largely dominated the discussions (Radu, 2021). Currently, Algorithm Watch’s AI Ethics Global Inventory includes more than 170 guidelines (Algorithm Watch, 2020), as, regardless of the policy initiatives, ethical codes remain the most elaborated response to the challenges of AI and algorithms. But are ethics the most appropriate way to ensure the ethically sound and socially beneficial AI-DDD? To answer this question, one needs to consider the function of ethical norms as modalities of technology governance, as well as the merits and drawbacks of AI Ethics specifically.

3.1.2 The promises and limitations of ethical codes and guidelines

Ongoing historical and sociological research on the role of ethics and values-based norms in technology development has demonstrated that ethical codes and moral principles are valuable for informing and shaping the research and development of technologies in a responsible way (Basart & Serra, 2013; Doorn, 2012). They raise awareness and contribute to the ethical education of professionals while setting the key criteria against which unethical behaviour may be censured (Stark & Hoffmann, 2019). They are also vital, underpinning design and standardization, becoming translated into technical requirements, informing engineering studies and technical decisions (Floridi, 2016; Lloyd, 2009), and supplementing or substituting formal regulation (Hildebrandt, 2017). Moreover, ethical principles assist in summarising a variety of complex ethical issues and challenging moral questions into a few central elements, which in turn can be clearly understood, and reflected upon by people from diverse fields and different backgrounds. This way, they facilitate the development of a common ground, which “can form a basis for more formal commitments in professional ethics, internationally agreed standards, and regulation” (Whittlestone et al., 2019). By the same token, the softer approach they offer may buy valuable time for research, political, social, and legal inquiry to catch up with the developments (Larsson, 2020).

Particularly in the context of AI, the proliferation of various ethical guidelines and principles reframed the meaning and metrics of progress in terms of AI-DDD. It essentially shifted the attention from a purely technical assessment, focused on performance criteria, to a definition of progress that is also premised on the ethical and social aspects of AI (Scantamburlo et al., 2020). This way, ethics-informed approaches complemented the conceptualisation of progress in AI and significantly contributed to making the ethical and socially beneficial design, development and deployment the benchmark of progress in AI, and a crucial objective in AI-DDD. In turn, the fact that progress in such a transformative and all-purpose technology (Dafoe, 2018; Gruetzemacher & Whittlestone, 2022; Taeihagh et al., 2021) is defined in terms of ethics instead of technocratic criteria introduces a human-centric element that is valuable to ensure that AI will be used for the benefit of society at large, and the flourishing of humanity.

Furthermore, although steering, regulation and governance are commonly associated with the normative functions of law (Hildebrandt, 2018), the law does not enjoy a monopoly in governing human behaviour. In fact, legislation is only one of the governance modalities, namely the factors that shape and affect human actions. Social norms, including principles of ethics and morality, the market, as well as design characteristics equally shape human conduct (Lessig, 1999). In daily life, for example, we abstain from acting in certain ways not necessarily because it is prohibited by law, but because specific types of behaviour may entail ethical or social disgrace, disrepute, or isolation. From this angle, social norms and ethical codes are a significant and impactful way to affect and channel human behaviour. This has led some researchers to suggest that soft-law approaches, ethical principles, and guidelines offer a better solution for AI governance (Floridi, 2018; Floridi & Cowls, 2019; Taddeo & Floridi, 2018).

However, although ethical requirements affect our behaviour and often overlap with legal rules and existing legislation, they are not binding (Horner, 2003). Practically, the extent to which social or ethical norms affect our actions and shape our behaviour is contingent on the consequences and the deterrent mechanisms, namely on how we evaluate the negative impacts in comparison to whatever benefit we may gain. Hence, the normative power of social, moral and ethical norms depends considerably on the impact of the attached consequences in each context (Hagendorff, 2020). In turn, without established and meaningful mechanisms providing concrete incentives for principles to become practice, the normative effect of ethics is rather limited (Whittlestone et al., 2019). Based on this observation, it is suggested that ethics do not necessarily lead to an actionable chain of steps that can effectively establish the much-needed set of rules in AI-DDD (Saslow & Lorenz, 2019). As Hagendorff (2020) remarks in his acute but insightful criticism of the dominance of deontological AI ethics, “the enforcement of ethical principles may involve reputational losses […], or restrictions on memberships in certain professional bodies, yet all together, these mechanisms are rather weak and pose no imminent threat.” Similarly, Black and Murray (2019) stress that “if we are to seek to control the way corporates and governments use AI, then ethics cannot substitute for law or other forms of formal regulation.”

Apart from problematic enforcement, the limitations of ethics become clearer if we examine their normative function, applicability, and effects, as well as the motives behind them. The broader domain of AI-DDD is largely dominated by a handful of American, Chinese and some EU companies, such as Google, Amazon, Facebook, Apple, IBM, Atos, Microsoft, Baidu, Alibaba and Tencent, which have all been remarkably eager to develop and adopt ethical guidelines, in most cases before governments and intergovernmental organisations engaged with AI Ethics (see for example the initiatives undertaken individually and jointly by Google, Facebook, IBM, Apple, Atos etc. mentioned in Section 3.1). The impressive variety of principles and declarations, in conjunction with the commonly vague language in which ethical codes are drafted, has led researchers to suggest that there is a lack of conceptual clarity and concrete direction, which significantly hampers their practical impact (Asaro, 2019a; Yeung et al., 2019). Even though there is a common core, as all declarations and guidelines include a set of shared themes, such as fairness, privacy, accountability, safety and security, transparency and explainability, non-discrimination and human oversight, and the key discursive tools are relatively shared, they are rarely concretely defined (Fjeld et al., 2020; Scantamburlo et al., 2020), and there can be overwhelming differences in how the principles are interpreted and materialised.

Critics remark that the majority of AI principles “are too broad to be action-guiding” (Vesnic-Alujevic et al., 2020; Whittlestone et al., 2019), adding that the vague way principles and guidelines are drafted, combined with the close connection between ethical guidelines and morality, makes them open to various interpretations and contingent on cultural differences, undermining their effectiveness and universality (Asaro, 2019b; Whittlestone et al., 2019; Yeung et al., 2019). For instance, the UN Special Rapporteur Philip Alston argues that framing guidelines as “ethics” renders them meaningless, hindering their normative impact, noting that “as long as you are focused on ethics, it’s mine against yours.” Similarly, Binns notes that whereas everyone may agree on the centrality of fairness, what exactly it entails, as well as how it is to be achieved, may vary considerably among different individuals or groups (Binns, 2017), while different stakeholders may value fairness differently, especially in comparison to other values and objectives. Cultural differences may also add to the difficulty of translating such abstract values into concrete measures that can be easily assessed regarding their effectiveness and their objective capacity to serve as guidelines for practitioners. Thus, the fact that there is no consensus between the major players in the field leaves developers and designers with little guidance and the wider public with no clear view of what “ethical AI” means in practice, which is particularly problematic considering that AI is largely globalised and transborder (Saslow & Lorenz, 2019).

Moreover, codes on ethical AI-DDD tend to predominantly focus on particular ethical issues, features, functions or consequences (Larsson, 2019; Stahl et al., 2021a, b; Vesnic-Alujevic et al., 2020). Reviewing the major ethical issues in emerging technologies, Stahl et al. suggested that they can be broadly divided into two main categories, namely issues with individual impacts, such as privacy, autonomy, treatment of humans, identity and security, and issues with broader societal impacts, such as digital divides, collective human identity and the good life, responsibility, surveillance, and cultural differences (Stahl et al., 2017). Building upon this classification, Vesnic-Alujevic et al. (2020) arrived at a similar categorisation, observing that “ethical debates about AI mainly focus on individual rights only” while the dimension of societal challenges and implications is often overlooked or marginally addressed. Thus, although the development and deployment of AI may be consequential and impactful to a diverse set of fundamental questions, the core principles and guidelines for AI Ethics tend to focus on a limited number of concerns (Fukuda-Parr & Gibbons, 2021; Vesnic-Alujevic et al., 2020). Similarly, the “noise” created by the big technology companies around their own set of rules may marginalise the voice of citizens and civil society groups, which have significantly fewer resources to support and equally distribute their views of ethical AI (Saslow & Lorenz, 2019).

Some scholars have noted that apart from the acknowledgement of the ethical challenges of AI and algorithms, these private-driven initiatives may also conceal further objectives (Bietti, 2019; Slee, 2020; Yeung et al., 2019). Firstly, by developing their own set of principles, the leading technology companies in particular may seek to set the narrative of ethical AI on their own terms. By defining what the ethical development and employment of AI systems and algorithms entail in practice, such companies not only promote their own interpretation of “ethical and responsible AI” but also establish the criteria for assessing AI-DDD. In turn, shaping how ethical AI is perceived in a way that serves their interests and reflects their priorities may mitigate the risk of reputational cost. Similarly, in this way private actors may reserve for themselves a privileged position, establishing themselves as pioneers in the field (Slee, 2020).

Additionally, such self-declared commitments may in practice be merely proclamatory, invoked for ‘ethics washing’ (Bietti, 2019; Metzinger, 2019; Muller, 2020a, b), promoted to avoid scrutiny, criticism or even direct regulation (Black & Murray, 2019; Rességuier & Rodrigues, 2020), or used for branding, or reasons related to corporate social responsibility (CSR) (Wettstein, 2012). For instance, Asaro contends that the primary purpose of ethical declarations and of ethical rules self-developed and adopted by large corporations is to prevent the introduction of legally binding obligations and “foster a brand image for the company as socially benevolent and trustworthy” (Asaro, 2019b). Similarly, Bietti observes that AI Ethics are currently “weaponized in support of deregulation, self-regulation or hands-off governance” (Bietti, 2019). The emphasis on industry-led ethical codes and commitments may lead to the assumption that the authority and responsibility to steer and control such technologies “can be devolved from state authorities and democratic institutions upon the respective sectors of science or industry” (Hagendorff, 2020). From this angle, self-promulgated and adopted ethical codes are invoked to avoid the introduction of binding legal rules, introducing a framework of self-regulation instead of concrete, specific and binding rules (Asaro, 2019b; Daly et al., 2020; Wagner, 2018). This way they serve as a shield from direct regulation (Wagner, 2018), and a vehicle to introduce and establish self-governance.

This challenges not only the actual normative impact of ethical guidelines but also the premise and intentions behind these voluntarily adopted codes. Taking this argument a step further, Hao (2019) observes that it is doubtful whether such declarations produce tangible and auditable outcomes in terms of AI-DDD, while Black and Murray (2019) suggest that there are empirical and normative reasons against the reliance on such soft forms of governance for AI.

Yet, even if such commitments genuinely stem from the best of intentions, it is still fair to doubt whether industry actors can actually form adequate norms for the ethical development and deployment of such technologies, and be trusted to enforce and police adherence to them. Particularly in cases in which their monetary interests are at stake, the implementation of ethical requirements without supervision is at least questionable (Hagendorff, 2020). Furthermore, considering that engineers and developers typically lack systematic ethical training (Bednar et al., 2019; Martin et al., 2021), and that they are not actively encouraged to reflect upon the ethical aspects of their work (Bairaktarova & Woodcock, 2017; Hagendorff, 2020; Slee, 2020), especially in corporate environments (Lloyd, 2009; Troxell & Troxell, 2017), it is questionable whether, in the absence of binding rules and requirements of accountability and transparency, the ethical commitments will indeed guide the development of AI and algorithms. Moreover, considering that AI-DDD often entails balancing risks and benefits, or conflicting rights and interests, it is questionable whether private actors without proper guidance are capable of successfully engaging with such delicate and complex tasks.

Finally, turning to their actual normative impact, the absence of specific enforcement tools and the reliance on self-commitment and reputational costs put their effectiveness in question. Looking at the individual level, a survey focusing on the effects of the ethical code developed by the ACM on decision-making processes found that the impact, in the absence of other incentives, was rather trivial (McNamara et al., 2018). Moreover, considering that a handful of giant technology companies, including Amazon, Google, IBM, and Microsoft, have the leading role in AI, algorithms, and machine learning (Maguire, 2021; Nemitz, 2018), reputational costs can arguably be counterbalanced relatively easily through the substantial resources available for public relations and other corrective actions that may significantly reduce any damage to the company image. Thus, as the reputational costs seem to be rather insufficient, or at least negligible, ethical principles and guidelines have a rather limited normative capacity to meaningfully ensure ethical and socially beneficial AI-DDD. From this angle, Rességuier and Rodrigues (2020) argue that while promising, ethical codes in AI are also equally problematic, as not only is their effectiveness yet to be demonstrated but also “they are particularly prone to manipulation, especially by industry.”

Thus, the current codes and ethical guidelines can guide AI-DDD only partially. They do add to the awareness around the ethical implications of AI systems and algorithms, but remain silent or at least abstract on the specific role of the companies in avoiding, mitigating and remedying these implications, and set no accountability and redress mechanisms. Moreover, overly relying on ethics, without other structures and governance benchmarks, may ultimately reduce them to a mere checklist, turning fundamental values into a box you simply need to tick to be on the safe side (Hagendorff, 2020). Yet, the salience of AI Ethics arguably reflects the recognition that algorithms and AI are not simply “another utility that needs to be regulated once it is mature” (Floridi et al., 2018), rendering the question of how these principles can be translated into practice through governance (Winfield & Jirotka, 2018) both immediate and demanding.

3.2 The race to governance

3.2.1 The need for governance and different approaches to AI governance

Given the limitations of ethics and the shortcomings of soft-law instruments as means of governance, several scholars have emphatically stressed the urgency to turn from soft to hard-law solutions, and from steering models premised on ethics to governance models based on law and binding obligations (Black & Murray, 2019; Bryson, 2020; Nemitz, 2018). Arguing for the need for regulation, they highlighted the transformative power of AI, its relevance for human rights, and the risks it poses on an individual and social level, but also the disruptive effects it may have on social structures (Cihon et al., 2020; Crawford, 2021; LaGrandeur, 2021). On the policy level, the wide range of ethically important and societally impactful implications of AI systems and algorithms, as well as the adverse effects of such technologies on an individual and social level, has led governments and intergovernmental organisations to progressively shift their attention from the promulgation of ethical codes and principles to specific legal instruments (see for example the "Proposal for a Regulation laying down harmonised rules on Artificial Intelligence", by the EU Commission). This shift of attention combined with a sense of urgency has created what Smuha describes as a race for AI governance since national, regional, international, and supranational organisations are in the process of considering and assessing “the desirability and necessity of new or revised regulatory measures” (Smuha, 2021b).

Looking to mitigate the risks, while enhancing trust and legal certainty, international public and private stakeholders have engaged in a competition to draft and promote AI governance models (Radu, 2021; Taeihagh, 2021). Hence, the need and urgency to regulate AI, placing the design, development and deployment of AI systems and algorithms within a specific regulatory environment, now seem undeniable (Almeida et al., 2021; Gasser & Almeida, 2017).

However, how to regulate AI remains an open question, as disruptive technologies tend to challenge traditional governance paradigms (Cath, 2018; Maas, 2022). Thus, whereas the literature provides a large number of proposed governance models, most of which are still largely premised on ethics or remain primarily expressed in the language of ethics (Black & Murray, 2019; Whittlestone et al., 2019), we do not yet have “a functional model that is able to encompass all areas of knowledge that are necessary to deal with the required complexity” (Almeida et al., 2021). Regulatory proposals for AI governance vary from building upon existing norms and instruments (Scherer, 2016), and public international law (Kunz and Héigeartaigh, 2020; Yeung et al., 2019), to the establishment of completely new, specialised institutions (Kemp et al., 2019), and from centralised to international alternatives (Cihon et al., 2020, 2021; Erdélyi & Goldsmith, 2018). At the same time, government initiatives, at both the national and international level, for the time being seem to be either “technology-centric”, focusing mostly on individual AI applications, or “law-centric”, focusing on the effects of AI applications on specific legal fields (Maas, 2022), principally adopting a risk-based approach (Pery et al., 2021; Scantamburlo et al., 2020).

“Technology-centric” approaches engage with specific applications of AI technology, singling out particular use-cases that regulation should focus on, such as autonomous cars, drones, robotics etc. However, Maas (2022) stresses that such an approach “emphasizes visceral edge cases, and is therefore easily lured into regulating edge-case challenges or misuses of the technology (e.g. the use of DeepFakes for political propaganda) at a cost of addressing far more common but less visceral use cases.” Additionally, it leads to patchwork regulation and fragmentary regulatory responses, as it promotes an ad-hoc, problem-solving orientation (Liu & Maas, 2021). Conversely, the law-centric approach does not focus on the individual application, but on the relevant legal doctrine, exploring how AI applications may change or challenge the scope or assumptions of existing legislation (Crootof & Ard, 2020; Petit, 2017). Instead of starting from the technology and its applications, here the point of departure is the legal system. The problem with this approach is that it leads to the segmentation of the regulatory responses, as it ties the regulatory reaction to specific legal doctrines, such as privacy, contract law, consumer protection etc., while the effects of a specific AI application may be relevant to more than one domain of law. Furthermore, focusing only on the law, it may neglect other means of regulation, such as design and standardisation. Therefore, Maas (2022) remarks that both approaches represent somewhat “siloed policy responses”.

Nevertheless, AI and algorithms are neither developed nor deployed in a vacuum. Some of the challenges posed by AI systems and algorithms are subject to already existing legislation (Black & Murray, 2019; Cannarsa, 2021). For example, consumer protection law, anti-discrimination legislation, as well as privacy and data protection rules already apply to several AI applications. Yet, it is still essential to review the regulatory framework and critically evaluate it, considering also the unpredictable outcomes of AI and taking steps towards the establishment of specific governance structures when necessary. Simultaneously, in sectors lacking regulation, it is urgent to identify and assess the risks and potential harms, considering also the sometimes unpredictable outcomes of AI systems (Reed, 2018). There is also a growing volume of literature that argues for the need to develop new means of governance and regulatory instruments, as the existing structures cannot successfully meet the challenges and respond to the issues AI raises (Stahl et al., 2021a, b; Taeihagh, 2021). However, the governance of such an all-purpose (Dafoe, 2018; Trajtenberg, 2018), transformative (Gruetzemacher & Whittlestone, 2022), widely disruptive, highly complicated, and still emerging technology is far from a simple task (Radu, 2021; Smuha, 2021b).

3.2.2 Designing a model for AI governance

Challenges in governing AI arise from various sources. They range from the choice of the most suitable and appropriate approach, the proper combination of modalities of governance, and the identification and engagement of all the relevant stakeholders, to the very definition and conceptualisation of AI and algorithmic processes (Taeihagh, 2021). Moreover, questions regarding how we should regulate “a changing technology with changing uses, in a changing world” (Maas, 2022) as well as what exactly it is that we seek to regulate (Black & Murray, 2019; Maas, 2022) represent crucial aspects of AI governance that we need to consider. Simultaneously, it is essential for legislation to strike a balance between protecting society from potential harms and allowing AI technologies to develop and advance for the benefit of society. As Floridi et al. (2018) remark, “avoiding the misuse and underuse of these technologies” is both challenging and critical in ensuring that AI will serve society.

Now, if we wanted to put the key challenges for AI governance in some sort of order, from a legal and regulatory point of view, the very first challenge for AI governance would be the lack of conceptual clarity. As in every other domain and particularly so in the field of technology governance, it is of paramount significance to have proper knowledge of what is to be regulated (Kooiman, 2003; Larsson, 2013a, 2020; Reed, 2018). Thus, framing AI is an integral part of formulating adequate governance structures and responses (Perry & Uuk, 2019), given that “the definition is in itself a form of conceptual control” with significant impacts on the governance discourse (Larsson, 2013a, b, 2020). However, as mentioned in Section 2, AI is riddled with multiple interpretations and competing definitions (Almeida et al., 2021; Haenlein & Kaplan, 2019), while, beyond the shared points Collins et al. (2021) have found between the various descriptions and conceptualisations, there is no consensus (Stahl et al., 2021a, b).

Whereas the existing definitions may be sufficient to offer an idea of the broader scope of AI and the issues at stake, allowing us to discuss AI governance and explore the priorities, they are arguably not specific and detailed enough to allow the application of governance structures (Stahl et al., 2021a, b). Moreover, without a common framework and an agreed-upon starting point, it is hard for policy-makers to determine which aspects of AI and algorithm applications are desirable and which are not (Bhatnagar et al., 2018; Larsson, 2020), and to take the appropriate regulatory measures. Additionally, the fact that AI is not a single technology, but a collection of technologies and applications (Gasser & Almeida, 2017; Latonero, 2018; Raso et al., 2018a), the relevance of a variety of processes, procedures, and components, the nonlinear way in which algorithms and machine learning work (Robbins, 2019), combined with the rapid pace at which the technologies that are considered to be part of AI change and become replaced by others (Raso et al., 2018a) further obscure the picture, making governance particularly challenging (Radu, 2021).

Furthermore, to promote a governance model that will foster “AI for human flourishing” (Stahl et al., 2021a, b), it is important to also critically examine the black-box approach, the innate opacity and inherent unexplainability, as well as the unpredictable nature of algorithms. Such features, often repeated with a sense of truism as necessary components of the AI definition, may diminish the accountability of AI designers, owners and operators, and reduce the contestability of their decisions (Edwards & Veale, 2017; Hildebrandt, 2016), rendering the justification of the outcome impossible or unnecessary, even in cases of damaging, unfair or discriminatory results (Bayamlioglu, 2018). Unless we find a meaningful way to address these issues and challenge their premise and entailments, effective governance through law may remain particularly difficult (Leenes et al., 2017; Santoni de Sio & Mecacci, 2021). To that end, Bryson (2020) and Bryson and Theodorou (2019) suggest that policy-making should be premised on a human-centric approach, based on an understanding that embraces technologies as end-products of design, choice and intentionality, which can be transparent, documented and explainable, at least to the extent necessary for accountability reasons, if so mandated by the law.

Beyond the difficulties in framing AI, a subsequent challenge involves engaging all the key stakeholders, balancing the asymmetries between them, and deciding upon the most suitable and appropriate governance model. For a long time, AI research remained far from the interests of governments and the public (Smuha, 2021a, b), as technology companies have been in charge of AI development so far (Jang, 2017). Thus, the field became largely dominated by private entities, which had at their disposal not only state-of-the-art equipment and ample proprietary data but also leading researchers and sufficient discretion. This way, the field was principally industry-driven and self-governed, primarily through ethical codes and declarations. However, lately, there has been an ever-increasing interest on behalf of governments, intergovernmental and supranational organisations, non-governmental organisations, research institutions, civil society groups, and the public at large (Radu, 2021; Taeihagh, 2021). Currently, the AI governance field constitutes a global arena, in which multiple stakeholders from various fields, with divergent resources, interests, motives, and familiarity with the topic, compete for power and authority to influence AI governance (Butcher & Beridze, 2019; Dafoe, 2018). Moreover, the “race for AI regulation” (Smuha, 2021b) has politicized the area (Radu, 2021), inducing competition among the stakeholders that try to steer the governance quest to their benefit, or reserve for themselves a leading position.

In this context, the traditionally leading role of the state is significantly challenged as private entities, and particularly a handful of technology companies leading the field, enjoy considerable informational and resource advantages compared to national governments. They arguably have enhanced familiarity with the field (Guihot et al., 2017; Taeihagh et al., 2021), and it is questionable whether they will be willing to share their insights, while any such sharing will most probably be a quid pro quo. Additionally, as the key role of private entities is hardly in question, governments and intergovernmental organisations need to remain cautious of the risks involved in over-delegating power and authority to private hands, considering that technology companies can be notoriously difficult to control and supervise (Chenou & Radu, 2019). Building on this observation, Nemitz (2018) warns that in the context of technology governance IG has set a rather dangerous culture of “lawlessness and irresponsibility,” permitting extensive discretion, accumulation of substantive power, and ultimately allowing a handful of private technology companies to become the de facto governors (Suzor, 2019). Thus, adequately engaging private actors, delegating to them the tasks that they are better equipped to fulfil while ensuring that they will not abuse their power, authority, and privileged position, entails designing and deciding upon the most appropriate governance model, as well as introducing the necessary checks and balances to private and public power.

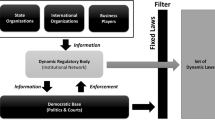

From a similar point of view, creating the appropriate model for governance and assigning roles to the different actors and stakeholders is also rather tricky, and equally crucial for the success of the AI governance regime. There have been several suggestions aiming to solve the governance mode puzzle, proposing innovative governance approaches, such as decentralized multistakeholder models, and hybrid or adaptive forms of governance (Brundage et al., 2018; Dafoe, 2018; Radu, 2021; Smuha, 2021b), as well as different approaches towards governance (Cihon et al., 2020, 2021; Kemp et al., 2019), aimed at combining the competences and de facto governance power of the relevant stakeholders.

In this context, it is necessary to ensure the balance between the competing governments, as well as the adequate representation of smaller countries and the developing world. So far, the race for AI governance is led by the countries hosting some of the key technology companies with a leading role in AI development, such as the US, China or Japan, and by governments and intergovernmental organisations that seek to proactively develop thorough and prospective governance models to secure a leading role in the AI future, as the EU and several European governments have been doing since the mid-2010s. The rest of the world, and particularly the less advanced countries, struggle to set their objectives and priorities, and to have a place at the table (Radu, 2021). Given that AI governance is essentially the quest of setting the foundations for the future of a disruptive technology that is expected to change the world, it is arguably of utmost importance to secure that all countries will have a place at the table, and ensure that they will have a voice in the negotiations. Consequently, one of the most significant questions relates to defining and prescribing the role of non-state actors, as well as balancing the power, information, and resource asymmetries between private and public actors, and between countries (Nemitz, 2018; Taeihagh, 2021; Taeihagh et al., 2021).

Finally, the appropriate combination of modalities of governance and the specific role of law in AI governance remain open issues (Almeida et al., 2021; Cath, 2018), bearing significant consequences for the overall form of governance as well as for the stakeholders involved (Smuha, 2021b). Law has a famously complicated relationship with technology. Its competence and suitability in terms of technology governance have been repeatedly contested, and the context of AI and algorithms is no exception. As happened previously in the context of Internet regulation, the capacity of law to serve as an effective vehicle of governance for AI is debated. Yet, we need to remind ourselves that the law has successfully sustained numerous “revolutionary innovations”, adapting and remaining relevant. Nonetheless, there are still some noteworthy challenges. For example, the pace of technological advances is a fairly obvious one (Larsson, 2020; Perry & Uuk, 2019). Additionally, the reference to a variety of different technologies, often hard to tell apart, can be a further challenge. Moreover, the choice of rules, the balance between over-regulation and regulatory vacuum, as well as the different domains of law that are relevant and applicable require carefully assessing the existing instruments, the necessity for intervention, the impacts, and potential spill-over effects without losing sight of coherence (Smuha, 2021b).

3.2.3 The steps we have taken and the path ahead

Although the focus on ethics has somewhat overshadowed the translation of principles into concrete regulation, and the concretization of guidelines into specific binding requirements (Radu, 2021), the landscape is rapidly changing, as governments and intergovernmental organisations are increasingly moving towards the introduction of specific and binding rules to govern AI. In this context, when discussing AI governance, a reference to the GDPR seems an inevitable commonplace, both because the Regulation is one of the most visible and well-known pieces of legislation relevant to AI (Almeida et al., 2021; Stahl et al., 2021a, b), and because it is widely embraced and celebrated as efficiently and effectively tackling several of the critical issues, particularly related to privacy, transparency, explainability, and documentation of algorithmic processes and automated decision-making procedures.

Although not explicitly referring to AI systems and algorithms, a set of specific GDPR provisions affect not only the collection and processing of personal data by AI and algorithms but also the design and deployment of AI and algorithms, as well as algorithm-based decisions (see for example Articles 4, 6, 9, 22, 25, 35). For example, regarding the opacity of algorithms and the problematic accountability of automated decision-making, the Regulation introduces the principles of transparency and explainability, and an enhanced accountability model jointly with the requirement for detailed documentation (Article 30). Moreover, although not necessarily purporting to solve all the challenges of AI, data protection laws offer suitable responses to several AI issues, as the right to privacy crucially relates to a number of other rights and freedoms, such as equality and non-discrimination (Hildebrandt, 2019), which are relevant in terms of AI.

However, the GDPR does not cover all of the negative or challenging AI ethical and societal implications (Busacca & Monaca, 2020; for an interesting discussion of AI and the GDPR see also Mitrou, 2019), while privacy and data protection are only part of the AI-related concerns. Sometimes the scope of critical provisions for AI is too narrow (see for example Article 22), while the Regulation offers limited guidance on how to achieve a balance between the obligations and requirements of the GDPR and the objective of promoting AI research and applications that respect these obligations (Sartor & Lagioia, 2020). Explainability remains challenging and obscure, also due to the relatively vague way in which the requirement to provide explanations is phrased in the Regulation (Hamon et al., 2021, 2022). Issues of discrimination are only partially addressed, as Article 9 on special categories of personal data does not include “categories of colour, language, membership of a national minority, property” which may also lead to discriminatory outcomes through AI and algorithms (Ufert, 2020). Additionally, critics have underlined that “paying high fines instead of complying with the GDPR could be a preferable path for major digital companies”, which in turn limits the actual effectiveness of the provisions (Vesnic-Alujevic et al., 2020). Finally, even though the extraterritorial effect significantly expands the reach of the Regulation, the GDPR is hardly a global instrument.

Another noteworthy instrument is the Proposed EU Regulation of AI, the Artificial Intelligence Act (AIA). The Act aims to foster an “ecosystem of trust that should give citizens the confidence to take up AI applications and give companies and public organisations the legal certainty to innovate using AI.” Notably, it constitutes the first-ever attempt to enact a horizontal regulation of AI, through an instrument that is specifically intended to govern AI, signifying the decisive step from soft to hard law. It is also indicative of the EU strategy to become a pioneer in AI governance by introducing a framework premised on the EU values and the key EU regulatory principles. The proposed legal framework focuses on the specific utilisation of AI systems, adopting a risk-based approach, and will also enjoy extraterritorial jurisdiction (Pery et al., 2021). It applies to all providers, assigns responsibility to users, importers, distributors, and operators, and seeks to ensure compliance with fines that go well beyond those of the GDPR. In Article 3, AI systems are defined as “software that is developed with [specific] techniques and approaches [listed in Annex 1] and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.” As noted for the GDPR, the Proposed Act does not constitute a global solution, yet if adopted it will arguably serve as a blueprint for similar instruments.

Yet, the AIA Proposal is currently far from ratification, while several of the key points of the proposed legislation have attracted criticism and are open to debate. For example, Smuha et al. (2021) remark that the Act fails to accurately recognise the risks and harms associated with different kinds of AI and future AI applications. It is suggested that for “trustworthy AI” it is essential to establish a mechanism that will allow the Commission to expand the list of prohibited AI systems and propose banning existing manipulative AI systems such as DeepFakes, social scoring and some biometric identification systems. They also stress that in many cases the proposal does not provide sufficient protection for fundamental rights, nor effective redress mechanisms or a meaningful framework for the enforcement of legal rights and duties, while public participation is not adequately protected and promoted (Smuha et al., 2021). Similarly, Ebers et al. (2021), while celebrating the innovative elements of the proposed Act, also emphasise the absence of effective enforcement mechanisms, criticising the self-enforcement structure for raising concerns of under-regulation. They argue that without external oversight and meaningful ways to ensure access to remedy for the affected parties, the risk-based approach does not adequately protect individuals’ rights (Ebers et al., 2021). From the same angle, Veale and Borgesius (2021) highlight that obligations on users of AI systems may fail to protect individuals, given that the draft Act does not provide a mechanism for complaint or judicial redress available to them.

Reviewing the most recent regulatory initiatives, it becomes apparent that AI governance constitutes a pressing priority. Nevertheless, although positive steps have been taken, there is still a long way ahead. Hopefully, the growing public attention will lead to wider and deeper discussions about the most appropriate responses to AI challenges. Yet considering the ever-increasing penetration of AI systems and algorithms in contemporary society, and their relevance to human rights, a human-centric, rights-based approach ought to underpin the governance initiatives and any regulatory instruments. To that end, additional research and further negotiation are also necessary to ensure greater inclusivity and diversity, fair participation, and meaningful representation of all the views, as well as to explore the role key actors should have, considering their place in the AI governance ecosystem along with their interests and agenda (de Almeida et al., 2021; Larsson, 2020; Perry & Uuk, 2019). However, broad public debate and democratic deliberation are still lagging behind technological development and policy-making in the context of AI governance (Vesnic-Alujevic et al., 2020).

4 Human Rights and AI Governance

4.1 Human rights in AI Ethics and the AI governance discourse

Human rights are highly relevant within the AI Ethics and AI governance discourse from multiple angles. First, AI and algorithms have emerged as a key area of human rights concern during the last decade, as their adverse effects on human rights became increasingly apparent and alarming (Bachelet, 2021; Fukuda-Parr & Gibbons, 2021). As noted in the introduction, AI systems and algorithms are routinely employed in ways particularly relevant and commonly impactful for human rights (Latonero, 2018; Risse, 2018; Yeung et al., 2019). The ubiquitous role of AI in our daily lives across public and private contexts could adversely impact the rights and freedoms of citizens all over the world on a scale and in ways not always clearly foreseeable (Saslow & Lorenz, 2019). Whereas privacy, data protection, and discrimination are often discussed when considering the negative implications of AI systems for human rights, McGregor et al. (2019) stress that there is also a variety of human rights issues that are less apparent and less studied, while the bias and discrimination that are repeatedly reinforced by AI systems may lead to further adverse impacts on human rights.

For example, the wide employment of Amazon’s face recognition technology “Rekognition” by US law enforcement and immigration services, as well as by private companies in search of employees, has created a number of human rights-related controversies, as it tended to falsely match the images of women with darker skin colour with those of arrested people to a disproportionate degree (Godfrey, 2020). The bias of the system discriminated against these women, affecting their access to work or, most importantly, their rights to life, liberty and security. Similarly, automated credit scoring may affect employment and housing rights, or the rights to work and access to education, in ways that are not always obvious ex-ante. Moreover, “the increasing use of algorithms to inform decisions on access to social security potentially impacts a range of social rights” (McGregor et al., 2019) including family life, as algorithmic bias in identifying children at risk may have devastating effects on already vulnerable families. Additionally, as Rachovitsa and Johann (2022) remark, the employment of AI systems in digital welfare state initiatives often falls short of meeting basic requirements of legality.

Human rights are also highly relevant in the AI discourse as, in the quest for ethical and societally beneficial AI-DDD, they are commonly invoked, either as guidelines for AI Ethics or as principles for AI governance (Fukuda-Parr & Gibbons, 2021; Muller, 2020a, b; Smuha, 2021a). They are mentioned in most of the ethical principles and guidelines developed by national and intergovernmental organisations and research groups. For example, both the EU and the UN have identified human rights as forming “the most promising foundation for identifying abstract ethical principles and values” and as central to the effort to ensure the development and employment of AI for the benefit of society (AI HLEG, 2019b; Hogenhout, 2021). Similarly, the CoE has emphasised the vital need to safeguard human rights along with their relevance in informing and shaping AI Ethics (Mijatović, 2018). Moreover, the Toronto Declaration clearly states that it builds upon “the relevant and well-established framework of international human rights law and standards.” Respect for human rights is also the first principle of the IEEE ethical framework for AI (The IEEE Global Initiative, 2017) and of several other AI Ethics codes developed by research groups and think tanks (Algorithm Watch, 2020).

Human rights are also commonly mentioned in the AI ethical guidelines and principles of several private entities and technology companies working on AI-DDD (Asaro, 2019a). For example, human rights are invoked among the guiding principles within Facebook’s Five Pillars of Responsible AI (Pesenti, 2021). Human rights and the UDHR are also explicitly noted in the Microsoft Global Human Rights Statement (Microsoft, 2020). However, as Alston (2019) remarks, the “token references” to human rights, and the self-proclaimed commitment to respect human rights as a stand-alone principle in private-sector AI Ethics codes, are commonly ornamental. The codes rarely provide a comprehensive list of rights that individuals may invoke against the company, nor a redress system in case of violations. Access to remedy is implied and not safeguarded, while external auditing or any kind of human rights monitoring is rarely mentioned. This is not necessarily surprising, as, in such codes, human rights are not perceived as legal rights, but merely as ethical principles (Hagendorff, 2020).

Building on this observation, a number of researchers and human rights advocates have suggested building “ethical” and “responsible” AI on the basis of human rights, essentially premising AI governance on human rights instead of ethics (Saslow & Lorenz, 2019; Smuha et al., 2021; Yeung et al., 2019). It is argued that human rights can not only establish and reaffirm the human-centric nature AI-DDD ought to have, but also introduce actionable standards and binding rules, complementing and expanding upon ethics (Saslow & Lorenz, 2019). Developing AI governance models and rules with human rights standards as a premise, while holding AI designers, developers and operators accountable for protecting individuals’ fundamental rights and freedoms, may effectively address and overcome many of the limitations of ethics (Pizzi et al., 2020; Saslow & Lorenz, 2019; Yeung et al., 2019). Human rights provide a deeper and more thorough framework to analyse the overall effect of algorithmic decision-making, determine harm and address accountability (McGregor et al., 2019). Moreover, anchoring AI governance to international human rights law can offer a more robust, comprehensive and widespread framework for AI governance (Cath, 2018; Smuha, 2021a; Yeung et al., 2019), providing “aspirational and normative guidance to uphold human dignity and the inherent worth of every individual, regardless of country or jurisdiction” (Latonero, 2018). Furthermore, as Stahl et al. (2021a, b) suggest, “it seems plausible that a more direct application of human rights legislation to AI can provide some clarity on related issues and point the way to possible solutions.”

Due to their dual nature as legal and ethical entitlements, human rights are indeed both relevant and suitable to be the foundations for controlling and steering AI and algorithms. Unlike the multiplicity of ethical principles and self-adopted guidelines, the support of the UN human rights system is substantial on a global scale (Risse, 2018). Serving as the basic moral entitlements of every human being, human rights are deeply rooted in contemporary politics and law, recognised in political practice and legal institutions globally (Etinson, 2018). Thus, contrary to ethics, human rights are universal, offering a common set of principles that can be applied globally (Smuha, 2021a). Considering the global reach of a variety of AI systems and algorithms, along with the calls for a governance system of international nature, this is a considerable benefit, as human rights provide a globally legitimate and comprehensive framework. Of course, the human rights system is not flawless. It has several limitations; however, it evolves over time, reacting to the developments and the challenges in society. The UN has established a rigorous and robust system of Special Rapporteurs and Ombudspeople who have identified and set in motion a variety of initiatives aimed at improving the level of human rights protection globally, as well as responding to the challenges posed by digital technologies (Fukuda-Parr & Gibbons, 2021; Pizzi et al., 2020).