Abstract

While i-vectors with probabilistic linear discriminant analysis (PLDA) can achieve state-of-the-art performance in speaker verification, the mismatch caused by acoustic noise remains a key factor affecting system performance. In this paper, a fusion system that combines a multi-condition signal-to-noise ratio (SNR)-independent PLDA model and a mixture of SNR-dependent PLDA models is proposed to make speaker verification systems more noise robust. First, the whole range of SNR in which a verification system is expected to operate is divided into several narrow ranges. Then, a set of SNR-dependent PLDA models, one for each narrow SNR range, is trained. During verification, the SNR of the test utterance is used to determine which of the SNR-dependent PLDA models is used for scoring. To further enhance performance, the SNR-dependent and SNR-independent models are fused using linear and logistic regression fusion. The performance of the fusion system and the SNR-dependent system is evaluated on the NIST 2012 speaker recognition evaluation for both noisy and clean conditions. Results show that a mixture of SNR-dependent PLDA models performs better than the SNR-independent PLDA baseline in both clean and noisy conditions. It was also found that the fusion system is more robust than the conventional i-vector/PLDA systems under noisy conditions.

1 Introduction

In practical situations, the performance of speaker verification systems is often degraded by variations in the acoustic environment. Much research has been devoted to compensating for the effect of these variations, resulting in a number of methods that work in either the front-end (Pelecanos and Sridharan 2001; Saeidi and van Leeuwen 2012) or the backend (Ming et al. 2007; Sadjadi et al. 2012; Shao and Wang 2008; Li and Huang 2010; Mallidi et al. 2013) of the verification process. It has been found that backend techniques are more promising, especially when the joint factor analysis (JFA; Kenny et al. 2008) and i-vector/PLDA (probabilistic linear discriminant analysis) frameworks (Dehak et al. 2011; Kenny 2010) are employed.

The i-vector is a low-dimensional vector that represents both speaker and channel characteristics of an utterance. The low dimensionality of i-vectors facilitates the use of classical statistical techniques such as LDA (Bishop 2006), within-class covariance normalization (WCCN; Hatch et al. 2006) and PLDA (Prince and Elder 2007) to suppress channel variability (Dehak et al. 2011; Rao and Mak 2013; McLaren et al. 2012). PLDA performs factor analysis on the i-vector space by grouping the i-vectors derived from the same speaker in order to find a subspace with minimal channel variability. PLDA is one of the most promising techniques in speaker verification.

Based on the i-vector/PLDA framework, more advanced approaches have been proposed for noise-robust i-vector extraction. For example, Yu et al. (2014) propagated the uncertainty of noisy acoustic features into the i-vector extraction process in an attempt to marginalize out the effect of noise. This is achieved by expressing the posterior density of an i-vector in terms of the joint density of the clean and noisy acoustic features, where the uncertainties of the noisy features are represented through the variances of the joint density. To account for all possible clean features, the joint density is marginalized over the clean acoustic features. The marginalized density is then plugged back into the posterior density of i-vectors, and the noise-robust i-vector is taken as its posterior mean. This modified i-vector extraction method has shown potential for improving the robustness of speaker recognition, especially in low signal-to-noise ratio (SNR) conditions.

Hasan and Hansen (2013) proposed an acoustic factor analysis (AFA) scheme, which is essentially a mixture-dependent feature transformation that integrates dimensionality reduction, de-correlation, normalization and enhancement together. It was demonstrated that this transformation method can remove the need for hard feature clustering and avoid retraining of the universal background model (UBM) from the new features. In Hasan and Hansen (2014), the AFA concept was further enhanced by replacing the UBM with a mixture of factor analyzers and a new i-vector extractor was proposed.

Lei et al. (2013) proposed a noise-robust i-vector extractor using vector Taylor series (VTS). The method adapts the UBM to speech signals contaminated with additive and convolutive noise and then extracts the noise-compensated i-vector based on the sufficient statistics collected from the adapted UBM. To reduce the computational burden of the VTS approach, Lei et al. (2014) further proposed an efficient approximation, called simplified-VTS, which collects sufficient statistics and whitens them using the VTS-synthesized UBM. As an alternative to VTS, an unscented transform was presented in Martinez et al. (2014) to approximate the nonlinearities between clean and noisy speech models in the cepstral domain. The unscented transform is expected to be more accurate than VTS when the distortions are far from locally linear.

Noting that the convolution and max-pooling operations in convolutional neural networks (CNNs) can reduce distortion caused by noise, McLaren et al. (2013) proposed using a CNN to estimate the posterior probabilities of senones and used these posteriors to replace the zero-order statistics extracted from the UBM. The resulting sufficient statistics are then used for estimating the i-vectors. It was found that the performance of this CNN/i-vector framework is comparable to that of the UBM/i-vector framework and that fusion of the two frameworks is very promising.

There is also much research concentrating on the backend PLDA stage. For example, McLaren et al. (2013) developed a multiple-system fusion approach that uses multiple streams of noise-robust features for i-vector fusion and score fusion. I-vector fusion concatenates the stream-dependent i-vectors into a single vector, and score fusion combines the scores obtained from the i-vector fusion system and the single-feature i-vector systems. Many systems use LDA and WCCN to pre-process the length-normalized i-vectors before presenting them to the PLDA model for scoring. Noting that the actual distortion of i-vectors may not be Gaussian, Sadjadi et al. (2014) replaced LDA by non-parametric discriminant analysis (NDA), which uses the nearest-neighbor rule to estimate the between- and within-speaker scatter matrices. They found that NDA is more effective than conventional LDA under noisy and channel-degraded conditions.

In Leeuwen and Saeidi (2013), Lei et al. (2012), Hasan et al. (2013), and Rajan et al. (2013, 2014) multi-condition training, in which a PLDA model is trained by pooling clean and noisy utterances together, was employed to enhance noise robustness. Garcia-Romero et al. (2012) trained a collection of PLDA models, each for a specific condition, and found that the pooled-PLDA is more appealing due to its good performance as well as the small number of parameters.

Unlike Garcia-Romero et al. (2012), where the verification score is a convex combination of the scores of the individual PLDA models weighted by the posterior probability of the test condition (Eq. 4 of Garcia-Romero et al. 2012), the SNR-dependent PLDA models proposed in this paper compute the verification scores based on the SNR of test utterances. Specifically, hard-decision SNR-dependent PLDA chooses one of the SNR-dependent PLDA models based on the SNR of the test utterance, whereas soft-decision SNR-dependent PLDA weights the individual PLDA models by the posterior probabilities of the test utterance's SNR. Motivated by the performance improvement obtained with multi-condition training, we developed a fusion system combining a mixture of SNR-dependent PLDA models and a multi-condition PLDA model.

One of the challenges in speaker verification is to maintain performance under adverse acoustic conditions. For the i-vector/PLDA framework, approaches such as advanced transformation (Hasan and Hansen 2013), noise-robust i-vector extraction (Lei et al. 2013), and multi-condition PLDA (Leeuwen and Saeidi 2013; Lei et al. 2012; Hasan et al. 2013; Rajan et al. 2013, 2014) have shown promise in improving the robustness of speaker verification systems. However, none of these methods explores the noise robustness of SNR-dependent PLDA models. This paper aims to fill this gap by extending our earlier work on SNR-dependent models (Pang and Mak 2014) on the following three fronts:

(1) investigating both hard- and soft-decision strategies for the SNR-dependent PLDA;

(2) conducting additional experiments on clean phone call speech (common condition 2) and interview speech (common conditions 1 and 3) for both male and female speakers in the NIST 2012 speaker recognition evaluation (SRE);

(3) performing more analysis on fusion systems with respect to decision thresholds, decision strategies, fusion methods, and fusion weights.

The paper is organized as follows. Section 2 outlines the i-vector/PLDA framework for speaker verification. Sections 3 and 4 describe hard- and soft-decision SNR-dependent PLDA models and fusion systems, respectively. In Sects. 5 and 6, we report evaluations based on NIST 2012 SRE (2012). Section 7 concludes the findings.

2 The i-vector/PLDA framework

2.1 I-vector extraction

The i-vector approach (Dehak et al. 2011) defines a low-dimensional total variability space that encompasses both speaker and channel variabilities. In this space, each utterance is represented by the latent factor in a factor analysis model:

\[\mathbf{m}_{x} = \mathbf{m} + \mathbf{T}\mathbf{x} \tag{1}\]

where \(\mathbf{m}\) is the speaker- and channel-independent Gaussian mixture model (GMM) supervector formed by stacking the mean vectors of the UBM (Reynolds et al. 2000), \(\mathbf{m}_{x}\) is the speaker- and channel-dependent (i.e., utterance-dependent) supervector, \({{\mathbf {T}}}\) is a low-rank total variability matrix, and \(\mathbf{x}\) is the low-dimensional latent factor. Given an utterance, the posterior mean of the latent factor is the utterance's i-vector. The training of the total variability matrix \({{\mathbf {T}}}\) is similar to the training of the eigenvoice matrix in JFA (Kenny et al. 2007), except that the speaker labels are ignored.
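To make the posterior-mean computation concrete, here is a minimal NumPy sketch of the standard closed form \(\mathbf{x} = (\mathbf{I} + \mathbf{T}^{\textsf{T}}\varvec{\Sigma}_{\mathrm{ubm}}^{-1}\mathbf{N}\mathbf{T})^{-1}\mathbf{T}^{\textsf{T}}\varvec{\Sigma}_{\mathrm{ubm}}^{-1}\tilde{\mathbf{f}}\). It assumes diagonal UBM covariances \(\varvec{\Sigma}_{\mathrm{ubm}}\), per-component zeroth-order statistics \(\mathbf{N}\), and first-order statistics \(\tilde{\mathbf{f}}\) already centred on the UBM means; the function name and array layout are illustrative, not the authors' implementation.

```python
import numpy as np

def extract_ivector(T, ubm_var, N, F_centred):
    """Posterior mean of x in m_x = m + T x (illustrative sketch).

    T         : (C*D, R) total variability matrix
    ubm_var   : (C*D,) diagonal UBM covariances, stacked component by component
    N         : (C,) zeroth-order statistics (soft frame counts per component)
    F_centred : (C*D,) first-order statistics centred on the UBM means
    """
    CD, R = T.shape
    D = CD // N.shape[0]
    N_sv = np.repeat(N, D)                  # expand counts to supervector length
    Sinv_T = T / ubm_var[:, None]           # Sigma^{-1} T for a diagonal Sigma
    # Posterior precision: I + T' Sigma^{-1} N T
    precision = np.eye(R) + T.T @ (N_sv[:, None] * Sinv_T)
    # Posterior mean: precision^{-1} T' Sigma^{-1} F
    return np.linalg.solve(precision, Sinv_T.T @ F_centred)
```

With the 1024 mixtures, 60-dimensional features and 500 factors of Sect. 5.3, \(\mathbf{T}\) would have 61,440 rows; a common optimization is to precompute the per-component products \(\mathbf{T}_c^{\textsf{T}}\varvec{\Sigma}_c^{-1}\mathbf{T}_c\) instead of expanding \(\mathbf{N}\) as this sketch does for clarity.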

2.2 PLDA model

PLDA (Garcia-Romero and Espy-Wilson 2011; Kenny 2010; Prince and Elder 2007) treats the i-vectors of utterances as observations of a generative model. Specifically, assuming there are R utterances from a speaker s and denoting by \(\mathbf{x}_{sr}\,(r=1,\ldots ,R)\) the corresponding i-vectors, the PLDA model decomposes i-vector \(\mathbf{x}_{sr}\) into:

\[\mathbf{x}_{sr} = \varvec{\mu} + \mathbf{V}\mathbf{z}_{s} + \varvec{\epsilon}_{sr} \tag{2}\]

where \(\varvec{\mu }\) is the global offset, \(\mathbf{V}\) defines the bases of the speaker subspace, \(\mathbf{z}_{s}\) contains the speaker factors, and \(\varvec{\epsilon }_{sr}\) is the residual noise, assumed to follow a Gaussian distribution with zero mean and diagonal covariance \(\varvec{{\Sigma }}.\) An expectation-maximization (EM) algorithm (Prince and Elder 2007) is applied to estimate the parameters of the factor analyzer (Eq. 2).

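Given a test i-vector \(\mathbf{x}_{t}\) and a target speaker's i-vector \(\mathbf{x}_{s},\) a verification score can be computed as a log-likelihood ratio of two Gaussian distributions (Garcia-Romero and Espy-Wilson 2011):

\[S_{\mathrm{LR}}(\mathbf{x}_{s},\mathbf{x}_{t}) = \log \frac{p(\mathbf{x}_{s},\mathbf{x}_{t}\,|\,\mathcal{H}_{1})}{p(\mathbf{x}_{s}\,|\,\mathcal{H}_{0})\,p(\mathbf{x}_{t}\,|\,\mathcal{H}_{0})} \tag{3}\]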

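where the hypotheses \(\mathcal{H}_{1}\) and \(\mathcal{H}_{0}\) denote that the two i-vectors come from the same speaker and from different speakers, respectively. By assuming that the i-vectors (after length normalization) and the latent factor \(\mathbf{z}\) follow Gaussian distributions, the verification score is (Garcia-Romero and Espy-Wilson 2011):

\[S_{\mathrm{LR}}(\mathbf{x}_{s},\mathbf{x}_{t}) = \tfrac{1}{2}\bar{\mathbf{x}}_{s}^{\textsf{T}}\mathbf{Q}\bar{\mathbf{x}}_{s} + \tfrac{1}{2}\bar{\mathbf{x}}_{t}^{\textsf{T}}\mathbf{Q}\bar{\mathbf{x}}_{t} + \bar{\mathbf{x}}_{s}^{\textsf{T}}\mathbf{P}\bar{\mathbf{x}}_{t} + \mathrm{const} \tag{4}\]

where \(\bar{\mathbf{x}} = \mathbf{x} - \varvec{\mu}\) denotes a mean-removed i-vector,

\[\mathbf{P} = \varvec{\Sigma}_{\mathrm{tot}}^{-1}\varvec{\Sigma}_{\mathrm{ac}}\left(\varvec{\Sigma}_{\mathrm{tot}} - \varvec{\Sigma}_{\mathrm{ac}}\varvec{\Sigma}_{\mathrm{tot}}^{-1}\varvec{\Sigma}_{\mathrm{ac}}\right)^{-1}, \qquad \mathbf{Q} = \varvec{\Sigma}_{\mathrm{tot}}^{-1} - \left(\varvec{\Sigma}_{\mathrm{tot}} - \varvec{\Sigma}_{\mathrm{ac}}\varvec{\Sigma}_{\mathrm{tot}}^{-1}\varvec{\Sigma}_{\mathrm{ac}}\right)^{-1} \tag{5}\]

and

\[\varvec{\Sigma}_{\mathrm{tot}} = \mathbf{V}\mathbf{V}^{\textsf{T}} + \varvec{\Sigma}, \qquad \varvec{\Sigma}_{\mathrm{ac}} = \mathbf{V}\mathbf{V}^{\textsf{T}} \tag{6}\]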
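The closed-form score can be sanity-checked numerically. The NumPy sketch below, with hypothetical function names, builds \(\mathbf{P}\) and \(\mathbf{Q}\) from \(\mathbf{V}\) and \(\varvec{\Sigma}\) via \(\varvec{\Sigma}_{\mathrm{ac}} = \mathbf{V}\mathbf{V}^{\textsf{T}}\) and \(\varvec{\Sigma}_{\mathrm{tot}} = \mathbf{V}\mathbf{V}^{\textsf{T}} + \varvec{\Sigma}\) (the usual two-covariance form of Gaussian PLDA) and evaluates the log-likelihood ratio of Eq. 4 up to its additive constant, which does not depend on the i-vectors.

```python
import numpy as np

def plda_score_matrices(V, Sigma):
    """Return (P, Q) for the two-covariance form of Gaussian PLDA."""
    S_ac = V @ V.T                       # across-class (speaker) covariance
    S_tot = S_ac + Sigma                 # total covariance of an i-vector
    tot_inv = np.linalg.inv(S_tot)
    inner_inv = np.linalg.inv(S_tot - S_ac @ tot_inv @ S_ac)
    P = tot_inv @ S_ac @ inner_inv
    Q = tot_inv - inner_inv
    return P, Q

def plda_llr(x_s, x_t, mu, P, Q, const=0.0):
    """PLDA log-likelihood ratio; the additive constant defaults to 0."""
    a, b = x_s - mu, x_t - mu            # remove the global offset
    return 0.5 * a @ Q @ a + 0.5 * b @ Q @ b + a @ P @ b + const
```

Because the constant is shared by all trials, omitting it does not change EER or minDCF; it matters only when calibrated log-likelihood ratios are required.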

3 SNR-dependent PLDA

Classical Gaussian PLDA assumes that i-vectors follow a Gaussian distribution. However, a single Gaussian is rather limiting, especially in noisy environments with a wide range of SNR. In this situation, a group of SNR-dependent PLDA models, each responsible for a narrow range of SNR, is more suitable. Specifically, the parameters of each SNR-dependent PLDA model are estimated independently by an EM algorithm (Prince and Elder 2007) using training data contaminated with a different level of background noise.

3.1 Hard-decision SNR-dependent PLDA

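For the hard-decision SNR-dependent system, one SNR-dependent PLDA model is chosen for each test i-vector. During verification, the SNR of the test utterance determines which of the SNR-dependent PLDA models, and which category (6 dB, 15 dB or clean) of the target speaker's i-vectors, should be used for scoring:

\[S_{\mathrm{hard}}(\mathbf{x}_{s},\mathbf{x}_{t}) = \begin{cases} S_{\mathrm{LR}}^{(6\,\mathrm{dB})}\big(\mathbf{x}_{s}^{(6\,\mathrm{dB})},\mathbf{x}_{t}\big) & \text{if } \ell_{t} < \eta_{1}\\ S_{\mathrm{LR}}^{(15\,\mathrm{dB})}\big(\mathbf{x}_{s}^{(15\,\mathrm{dB})},\mathbf{x}_{t}\big) & \text{if } \eta_{1} \le \ell_{t} < \eta_{2}\\ S_{\mathrm{LR}}^{(\mathrm{cln})}\big(\mathbf{x}_{s}^{(\mathrm{cln})},\mathbf{x}_{t}\big) & \text{if } \ell_{t} \ge \eta_{2} \end{cases} \tag{7}\]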

where \(\ell _{t}\) is the SNR of the test utterance, and \(\eta _{1}\) and \(\eta _{2}\) are decision thresholds. A disadvantage of this hard-decision approach is that it is necessary to determine \(\eta _{1}\) and \(\eta _{2}\) and that their optimal values depend on the SNR of test utterances. Instead of finding their optimal values using a held-out set, in this work, we varied their values and investigated how they affect performance.
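The model selection in Eq. 7 amounts to a threshold test on the test utterance's SNR. The sketch below assumes the boundary convention \(\ell_t < \eta_1\) for the 6 dB model and \(\eta_1 \le \ell_t < \eta_2\) for the 15 dB model (how ties at the thresholds are broken is an assumption); the labels, function names and the callable-based interface are hypothetical.

```python
def select_snr_model(snr_db, eta1, eta2):
    """Choose the SNR-dependent PLDA model for a test utterance.

    Assumed convention: snr < eta1 -> "6dB"; eta1 <= snr < eta2 -> "15dB";
    otherwise "clean". eta1 = float("-inf") disables the 6 dB model.
    """
    if snr_db < eta1:
        return "6dB"
    if snr_db < eta2:
        return "15dB"
    return "clean"

def hard_decision_score(snr_db, score_fns, eta1, eta2):
    """Score with the selected model only.

    score_fns maps each label to a zero-argument callable (hypothetical) that
    scores the test i-vector against the target's i-vectors of that SNR category
    and applies the matching Z-norm, so unselected models are never evaluated.
    """
    return score_fns[select_snr_model(snr_db, eta1, eta2)]()

# Thresholds reported in Sect. 6.2 for CC2, CC4 and CC5: eta1 = 3, eta2 = 20
print(select_snr_model(10.0, 3, 20))   # prints 15dB
```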

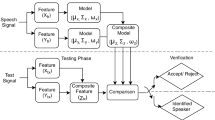

Figure 1 illustrates the data flow of hard-decision SNR-dependent PLDA scoring. A test i-vector is fed to one of the SNR-dependent PLDA models and is scored against the corresponding i-vectors of the target speaker. Because the three PLDA models produce scores in different ranges, the scores should be normalized before computing the equal error rate (EER) and minimum decision cost function (minDCF). We applied SNR-dependent Z-norm to the PLDA scores, with the three sets of Z-norm parameters found independently using the training files contaminated with different levels of background noise. In theory, Z-norm is not necessary if the PLDA scores are well calibrated (Brümmer and de Villiers 2011). However, we found that it is not easy to achieve perfect calibration without a held-out set that has the same characteristics as the test data. Therefore, we opted for the more conventional Z-norm in this step.

3.2 Soft-decision SNR-dependent PLDA

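In the soft-decision SNR-dependent system, the posterior probabilities of the SNR of a test utterance are used to combine the scores of the different PLDA models. Specifically, denoting the SNR of a test utterance as \(\ell _{t},\) the posterior probability of SNR class \(\omega_{j}\) is

\[P(\omega_{j}\,|\,\ell_{t}) = \frac{P(\omega_{j})\,p(\ell_{t}\,|\,\mu_{j},\sigma_{j})}{\sum_{k} P(\omega_{k})\,p(\ell_{t}\,|\,\mu_{k},\sigma_{k})} \tag{8}\]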

where the \(\omega _{j}\)'s are class labels corresponding to the clean, 15 dB and 6 dB PLDA models, \(P(\omega _{j})\) is the prior probability of \(\omega _{j}\), and \(p(\ell _{t}|\mu _{j},\sigma _{j})\) is the (Gaussian) probability density function of \(\ell _{t}\) with mean \(\mu _{j}\) and standard deviation \(\sigma _{j}.\) To implement Eq. 8, we trained a one-dimensional GMM with three components on the SNRs of the training utterances. See Sect. 5.4 for details.

In the hard-decision SNR-dependent system, the SNR of the test utterance directly determines which SNR-dependent PLDA model is used, so only one model scores the test i-vector, as Eq. 7 describes. In the soft-decision system, by contrast, all three SNR-dependent PLDA models and the SNR-dependent i-vectors of the target speakers are used for scoring, and the posterior probabilities obtained from Eq. 8 determine how much each of the three scores contributes to the overall score. Specifically, the overall score is:

\[s_{d} = P_{1}\,s_{cln} + P_{2}\,s_{15\,\mathrm{dB}} + P_{3}\,s_{6\,\mathrm{dB}} \tag{9}\]

where \(s_{cln},\,s_{15\,\mathrm{dB}}\) and \(s_{6\,\mathrm{dB}}\) are the normalized scores from the clean, 15 dB and 6 dB SNR-dependent PLDA models, respectively, \(P_{1},\,P_{2}\) and \(P_{3}\) are \(P(\mathrm{clean}|\ell _{t}),\,P(15\,\mathrm{dB}|\ell _{t})\) and \(P(6\,\mathrm{dB}|\ell _{t})\) in Eq. 8, respectively, and \(s_{d}\) is the score of the soft-decision SNR-dependent system. Figure 2 illustrates the data flow of soft-decision SNR-dependent PLDA scoring. In the figure, a test i-vector is fed to the three SNR-dependent PLDA models simultaneously and scored against the corresponding i-vectors of the target speaker. The three normalized scores are then linearly combined to obtain the overall score \(s_{d},\) as Eq. 9 describes.
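Eqs. 8 and 9 can be sketched together: the posteriors of a one-dimensional SNR mixture act as weights on the Z-normed scores. This is a minimal NumPy sketch with hypothetical function names; the mixture parameters in the usage line are placeholders, not the values estimated in this work, and the component order (clean, 15 dB, 6 dB) is assumed to match \(P_1, P_2, P_3\).

```python
import numpy as np

def snr_posteriors(snr_db, priors, means, stds):
    """P(omega_j | snr) for a 1-D Gaussian mixture over utterance SNR."""
    priors, means, stds = (np.asarray(v, dtype=float) for v in (priors, means, stds))
    # log[P(omega_j) * N(snr; mu_j, sigma_j)], kept in the log domain for stability
    log_joint = (np.log(priors) - np.log(stds) - 0.5 * np.log(2 * np.pi)
                 - 0.5 * ((snr_db - means) / stds) ** 2)
    post = np.exp(log_joint - log_joint.max())
    return post / post.sum()

def soft_decision_score(snr_db, znormed_scores, priors, means, stds):
    """Posterior-weighted sum of the Z-normed scores (same order as the components)."""
    weights = snr_posteriors(snr_db, priors, means, stds)
    return float(weights @ np.asarray(znormed_scores, dtype=float))

# Placeholder mixture in the order (clean, 15 dB, 6 dB); not values from this work
weights = snr_posteriors(12.0, [1 / 3] * 3, [30.0, 15.0, 6.0], [3.0] * 3)
```

The same functions handle the two-component case used for interview speech, since nothing in them fixes the number of components.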

4 Fusion of SNR-dependent PLDA

The fusion system combines the SNR-dependent system and the SNR-independent system. We investigated linear fusion and logistic regression fusion in this work. Figure 3 illustrates the fusion system. In the figure, the upper part is the SNR-independent system, whose PLDA model is trained by pooling the training data with variable noise levels. The lower part is the SNR-dependent system, which can use either hard- or soft-decision SNR-dependent PLDA. The fusion block can be either linear fusion or logistic regression fusion. For linear fusion, the test scores are used to determine the best fusion weight, whereas for logistic regression fusion, training scores are used to compute the fusion parameters. The following two subsections describe these two fusion methods.

4.1 Linear fusion

Figure 4 shows the test scores of impostors and true speakers obtained by the SNR-dependent and SNR-independent PLDA models. In this two-dimensional score space, the true-speaker and impostor scores are largely separable by a straight line. Therefore, a simple way to fuse the two systems is to linearly combine their scores:

\[s = w\,s_{i} + (1-w)\,s_{d} \tag{10}\]

where \(s_{i}\) is the normalized score from the SNR-independent system, \(s_{d}\) is the normalized score from the SNR-dependent system, and w is the combination weight.
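A minimal sketch of the linear fusion, assuming the convex form \(w\,s_i + (1-w)\,s_d\) for the combination, together with a simple threshold-sweep estimate of the EER for comparing systems. Function names are hypothetical, and the EER routine is an approximation rather than the NIST scoring tool.

```python
import numpy as np

def linear_fusion(s_indep, s_dep, w):
    """Fused score w * s_i + (1 - w) * s_d (assumed convex form)."""
    return w * np.asarray(s_indep, dtype=float) + (1.0 - w) * np.asarray(s_dep, dtype=float)

def equal_error_rate(target_scores, impostor_scores):
    """Approximate EER by sweeping the decision threshold over all observed scores."""
    tgt = np.asarray(target_scores, dtype=float)
    imp = np.asarray(impostor_scores, dtype=float)
    thresholds = np.sort(np.concatenate([tgt, imp]))
    p_miss = np.array([(tgt < t).mean() for t in thresholds])   # targets rejected
    p_fa = np.array([(imp >= t).mean() for t in thresholds])    # impostors accepted
    i = np.argmin(np.abs(p_miss - p_fa))
    return 0.5 * (p_miss[i] + p_fa[i])
```

Evaluating `equal_error_rate` on `linear_fusion(s_i, s_d, w)` for a grid of `w` values is one way to carry out the search for the fusion weight on test scores described in this section.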

As described earlier, the Z-norm parameters represented by \(\mu\) and \(\sigma\) in Figs. 1 and 2 were derived independently from the i-vectors used for training the PLDA models. The scores obtained from the SNR-independent system are also normalized so that they are consistent with those obtained from the SNR-dependent system. The Z-norm equation is as follows:

\[s_{i} = \frac{\tilde{s}_{i} - \mu_{multi}}{\sigma_{multi}} \tag{11}\]

where \(\tilde{s}_{i}\) and \(s_{i}\) are the scores of the SNR-independent system before and after normalization, respectively, and \(\mu _{multi}\) and \(\sigma _{multi}\) are the normalization parameters shown in Fig. 3.
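Z-norm reduces to subtracting a mean and dividing by a standard deviation. The sketch below assumes the parameters are the mean and standard deviation of a set of scores computed on training i-vectors (which trials form that set is an assumption here, beyond the data sources listed in Sect. 5.5); function names are hypothetical.

```python
import numpy as np

def znorm_params(cohort_scores):
    """Estimate Z-norm (mean, standard deviation) from a set of cohort scores."""
    s = np.asarray(cohort_scores, dtype=float)
    return s.mean(), s.std()

def znorm(raw_score, mu, sigma):
    """Shift and scale raw scores so the cohort has zero mean and unit variance."""
    return (np.asarray(raw_score, dtype=float) - mu) / sigma
```

One parameter pair would be kept per PLDA model (clean, 15 dB, 6 dB and multi-condition), so that each model's scores are mapped to a common scale before they are compared or combined.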

Fig. 4 Test score distributions of impostors and true speakers of SNR-independent (pooling) and hard-decision SNR-dependent PLDA in CC4 of NIST 2012 SRE (male speakers). The decision thresholds \(\eta _{1}\) and \(\eta _{2}\) in Eq. 7 were set to 3 and 20, respectively

4.2 Logistic regression fusion

In logistic regression fusion (Bishop 2006; Brümmer 2014), the fused score is also a linear combination of the scores of N subsystems:

\[s = \alpha_{0} + \sum_{n=1}^{N} \alpha_{n}\,s_{n} \tag{12}\]

where \(\{\alpha _{1},\, \alpha _{2}, \ldots , \alpha _{N}\}\) are the fusion weights for the corresponding subsystems and \(\alpha _{0}\) is a bias term used for calibrating the fused scores. The fusion weights \(\{\alpha _{0},\, \alpha _{1},\, \alpha _{2}, \ldots , \alpha _{N}\}\) can be obtained by the learning algorithm of logistic regression (Brümmer 2014). In this work, we used the data that had been used for training the PLDA models to estimate the fusion weights in Eq. 12. Given the i-vectors of a set of training speakers, we computed the intra- and inter-speaker PLDA scores from the SNR-independent and SNR-dependent PLDA models (both soft- and hard-decision). These scores serve as the target-speaker and impostor samples of \(s_{1},\,s_{2},\) and \(s_{3}\) in Eq. 12. The vectors \([s_{1}\,s_{2}\,s_{3}]^\textsf {T}\) are then used as three-dimensional training vectors of a logistic regression classifier (Brümmer 2014).
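As an illustration of how the weights in Eq. 12 can be learned, the NumPy sketch below fits plain logistic regression by Newton's method on labelled training scores. It is a sketch under stated assumptions, not the toolkit used here: calibration toolkits typically optimize a prior-weighted version of this loss, whereas this version is unweighted and adds a small L2 penalty (an assumption) to keep the Hessian well conditioned. Function names are hypothetical.

```python
import numpy as np

def train_lr_fusion(scores, labels, n_iter=50, l2=1e-3):
    """Estimate [alpha_0, alpha_1, ..., alpha_N] by Newton's method.

    scores: (M, N) array, one column per subsystem.
    labels: (M,) array, 1 for target (same-speaker) trials, 0 for impostor trials.
    """
    X = np.hstack([np.ones((scores.shape[0], 1)), scores])   # bias column for alpha_0
    y = np.asarray(labels, dtype=float)
    alpha = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ alpha))                  # sigmoid of fused score
        grad = X.T @ (p - y) + l2 * alpha
        hess = X.T @ (X * (p * (1.0 - p))[:, None]) + l2 * np.eye(X.shape[1])
        alpha -= np.linalg.solve(hess, grad)
    return alpha

def lr_fuse(scores, alpha):
    """Fused score alpha_0 + sum_n alpha_n * s_n (a log-odds, not a probability)."""
    return alpha[0] + np.asarray(scores, dtype=float) @ alpha[1:]
```

Unlike the linear fusion weight, these parameters come entirely from training trials, so no test scores are needed to set them.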

5 Experiments

5.1 Speech data and acoustic features

Both the phone call speech and the interview speech in the core set of NIST 2012 SRE (2012) were used for performance evaluation. Table 1 (NIST 2012) summarizes the conditions of the test segments in the evaluation. In this paper, we use the terms “segment” and “utterance” interchangeably. Figure 6 shows the SNR distributions of test utterances for male speakers in the evaluation (the distributions for female speakers are similar). It shows that the noisy test utterances cover a wide range of SNR, especially in CC4. To make the SNR distribution of the training segments comparable with that of the test segments, we added babble noise from the PRISM dataset to the training files at 6 and 15 dB to create the training data for three SNR-dependent PLDA models: 6 dB, 15 dB, and clean (using the original sound files). Figure 5 shows the SNR distributions of telephone (tel) and microphone (mic) speech files after adding noise.

The training segments comprise phone call speech and interview speech of variable length. We removed the 10-s segments and the summed-channel segments from the training segments but ensured that all target speakers have at least one utterance for enrollment. The speech files in NIST 2005–2010 SREs were used as development data for training gender-dependent UBMs, total variability matrices, LDA–WCCN projection matrices, PLDA models and Z-norm parameters.

Speech regions in the speech files were extracted by using a two-channel voice activity detector (VAD; Mak and Yu 2013). For each frame, 19 MFCCs together with energy plus their first- and second-derivatives were extracted from the speech regions, followed by cepstral mean normalization and feature warping (Pelecanos and Sridharan 2001) with a window size of 3 s. A 60-dimensional acoustic vector was extracted every 10 ms, using a Hamming window of 25 ms.
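Feature warping (Pelecanos and Sridharan 2001) maps each feature dimension, within a sliding window, onto a standard normal distribution by ranking. The sketch below is a direct, unoptimized version assuming a centred window of 300 frames (3 s at the 10 ms frame shift above) that is truncated at utterance edges; the edge handling, tie-breaking and function name are assumptions, and the double loop is slow for long files.

```python
import numpy as np
from statistics import NormalDist

def feature_warp(features, window=300):
    """Short-time Gaussianization of each feature dimension (illustrative sketch).

    features: (num_frames, dim) array. Each value is replaced by the standard-normal
    quantile of its rank within the window centred on the current frame.
    """
    num_frames, dim = features.shape
    half = window // 2
    inv_cdf = NormalDist().inv_cdf
    warped = np.empty_like(features, dtype=float)
    for t in range(num_frames):
        lo, hi = max(0, t - half), min(num_frames, t + half + 1)
        block = features[lo:hi]
        n = hi - lo
        for d in range(dim):
            # rank of the current value within the window (1 = smallest)
            rank = 1 + np.sum(block[:, d] < features[t, d])
            warped[t, d] = inv_cdf((rank - 0.5) / n)
    return warped
```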

5.2 Creating noise contaminated utterances

For each clean training file, we randomly selected one of the 30 noise files from the PRISM dataset (Ferrer et al. 2011) and added the noise waveform to the file at SNRs of 6 and 15 dB using the FaNT tool.
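The noise-adding step can be approximated in a few lines. Unlike FaNT, which supports frequency weighting and P.56-based speech-level measurement, this sketch uses plain mean power over the whole file, so it only approximates the tool's behaviour; the function name and the looping of short noise files are assumptions.

```python
import numpy as np

def add_noise_at_snr(speech, noise, snr_db, rng=None):
    """Mix a random excerpt of `noise` into `speech` at a target SNR (simplified)."""
    rng = np.random.default_rng() if rng is None else rng
    speech = np.asarray(speech, dtype=float)
    noise = np.asarray(noise, dtype=float)
    if len(noise) < len(speech):
        # loop a short noise file until it covers the speech (an assumption)
        noise = np.tile(noise, int(np.ceil(len(speech) / len(noise))))
    start = rng.integers(0, len(noise) - len(speech) + 1)
    segment = noise[start:start + len(speech)]
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(segment ** 2)
    # choose the gain so that p_speech / (gain^2 * p_noise) = 10^(snr_db / 10)
    gain = np.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return speech + gain * segment
```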

To measure the “actual” SNR of speech files (including the original and noise-contaminated ones), we used the voltmeter function of FaNT and the speech/non-speech decisions of our VAD (Mak and Yu 2013; Yu and Mak 2011) as follows. Given a speech file, we passed the waveform through the G.712 frequency weighting filter in FaNT and then estimated the speech energy using the voltmeter function (sv-p56.c from the ITU-T software tool library; Neto 1999). Then, we extracted the non-speech segments based on the VAD's decisions and passed them to the voltmeter function to estimate the noise energy. The difference between the signal and noise energies in the log domain gives the measured SNR of the file. While the measured SNR is close to the target SNR, the two are not exactly the same, which explains why the SNR distributions in Fig. 5 are continuous. Because we used our VAD (which is more robust than the VAD in sv-p56.c) to determine the background segments, we are able to measure the SNR even for very noisy files.
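The measurement procedure above can be sketched with a VAD mask. This simplified version omits the G.712 filter and replaces the P.56 voltmeter with plain mean power, so its values will differ from FaNT's; the function name and the boolean-mask interface are assumptions.

```python
import numpy as np

def measure_snr_db(waveform, speech_mask, eps=1e-12):
    """Approximate SNR (dB) from VAD decisions (simplified; not ITU-T P.56).

    waveform: 1-D array of samples. speech_mask: boolean array of the same length,
    True where the VAD marks speech. Speech power is measured on speech samples and
    noise power on non-speech samples; the SNR is their ratio in the log domain.
    """
    x = np.asarray(waveform, dtype=float)
    mask = np.asarray(speech_mask, dtype=bool)
    speech_power = np.mean(x[mask] ** 2)
    noise_power = np.mean(x[~mask] ** 2)
    return 10.0 * np.log10((speech_power + eps) / (noise_power + eps))
```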

5.3 I-vector extraction and PLDA-model training

The i-vector systems are based on gender-dependent UBMs with 1024 mixtures and total variability matrices with 500 total factors. Microphone and tel utterances (without adding noise) from NIST 2005–2008 SREs were used for training the UBMs and total variability matrices. Following McLaren et al. (2012), WCCN (Hatch et al. 2006) and i-vector length normalization (Garcia-Romero and Espy-Wilson 2011) were applied to the 500-dimensional i-vectors. Then, LDA (Bishop 2006) and WCCN were applied to reduce the dimension to 200 before training the PLDA models with 150 latent variables.
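Two of the building blocks in this pipeline, i-vector length normalization and WCCN, are sketched below in NumPy; LDA is omitted, and the function names are hypothetical. WCCN here uses the average per-speaker covariance and returns a Cholesky factor \(\mathbf{B}\) with \(\mathbf{B}\mathbf{B}^{\textsf{T}}\) equal to the inverse within-speaker covariance, which is one standard way to build the projection.

```python
import numpy as np

def length_normalize(X):
    """Scale each i-vector (row) to unit Euclidean length."""
    return X / np.linalg.norm(X, axis=1, keepdims=True)

def wccn_projection(X, speaker_ids):
    """Cholesky factor B with B B' = W^{-1}, W = average within-speaker covariance."""
    speaker_ids = np.asarray(speaker_ids)
    dim = X.shape[1]
    W = np.zeros((dim, dim))
    speakers = np.unique(speaker_ids)
    for spk in speakers:
        Xs = X[speaker_ids == spk]
        centred = Xs - Xs.mean(axis=0)
        W += centred.T @ centred / len(Xs)
    W /= len(speakers)
    return np.linalg.cholesky(np.linalg.inv(W))

# Rows are i-vectors, so the projection is applied as Y = X @ B; after it, the
# average within-speaker covariance of Y is the identity matrix.
```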

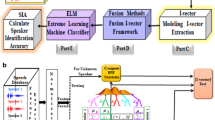

Considering that CC1 and CC3 contain interview speech and that CC2, CC4 and CC5 contain phone call speech, their PLDA models were trained separately. Specifically, for CC2, CC4 and CC5, both SNR-independent and SNR-dependent PLDA models were trained. For the former (Fig. 7a), we pooled the 6 dB (mic + tel), 15 dB (mic + tel), and original (mic + tel) speech files, excluding speakers with fewer than two utterances, into a single training set. Two SNR-independent PLDA models with 150 factors were then trained, one for each gender. For SNR-dependent PLDA, the 6 dB (mic + tel), 15 dB (mic + tel), and original (mic + tel) speech files were independently used to train three PLDA models, each with 150 factors (Fig. 7c).

According to the left panels in Fig. 5, the SNR distributions of clean mic, 15 dB mic and 6 dB mic overlap with each other. Therefore, for CC1 and CC3, we used only clean mic and 15 dB mic to train the SNR-independent PLDA (Fig. 7b) and SNR-dependent PLDA (Fig. 7d).

5.4 PLDA scoring

The scoring procedures for SNR-independent and SNR-dependent models are different. For SNR-independent PLDA models, each of the test i-vectors was scored against the target-speakers’ i-vectors derived from the tel/mic sessions of original (clean) speech files using the conventional PLDA scoring function (Eq. 4).

For hard-decision SNR-dependent PLDA, as Eq. 7 describes, one of the SNR-dependent PLDA models was chosen to score against the corresponding target’s i-vectors based on the SNR of the test utterance. Figure 6 shows the SNR distributions of male test utterances in CC1–5 in 2012 SRE. Based on the distributions, the decision thresholds (Eq. 7) for hard-decision SNR-dependent PLDA were set.

For the soft-decision SNR-dependent system, for each test i-vector, three scores were computed using three SNR-dependent PLDA models and the corresponding target’s i-vectors independently. Then, the posterior probability of SNR of the test utterance was used to determine the weights for combining the scores produced by the SNR-dependent PLDA models, as Eq. 9 describes. The posterior probability (Eq. 8) is based on a one-dimensional GMM that models the SNR of training utterances.

For CC2, CC4 and CC5, whose test segments were from phone call speech, the SNRs of the original (tel), 15 dB (tel) and 6 dB (tel) training utterances were used to train a three-component GMM. For CC1 and CC3, whose test segments were from interview speech, the SNRs of the original (mic) and 15 dB (mic) training utterances were used to train a two-component GMM.
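The text does not state how the one-dimensional GMM was estimated, so the following is only a plausible sketch: standard EM for a 1-D Gaussian mixture, initialized with one mean per SNR class (the initialization, iteration count and variance floor are assumptions). Its output can be passed directly to a posterior computation of the form of Eq. 8.

```python
import numpy as np

def fit_snr_gmm(snrs, init_means, n_iter=100):
    """EM for a 1-D Gaussian mixture on utterance SNRs (illustrative sketch).

    init_means: one initial mean per component, e.g. near the nominal
    clean, 15 dB and 6 dB levels. Returns (priors, means, stds).
    """
    x = np.asarray(snrs, dtype=float)
    means = np.asarray(init_means, dtype=float).copy()
    K = len(means)
    priors = np.full(K, 1.0 / K)
    stds = np.full(K, x.std())
    for _ in range(n_iter):
        # E-step: responsibilities gamma[i, k] = P(component k | x_i)
        log_p = (np.log(priors) - np.log(stds) - 0.5 * np.log(2 * np.pi)
                 - 0.5 * ((x[:, None] - means) / stds) ** 2)
        log_p -= log_p.max(axis=1, keepdims=True)
        gamma = np.exp(log_p)
        gamma /= gamma.sum(axis=1, keepdims=True)
        # M-step: re-estimate mixture weights, means and standard deviations
        Nk = gamma.sum(axis=0)
        priors = Nk / len(x)
        means = gamma.T @ x / Nk
        stds = np.sqrt((gamma * (x[:, None] - means) ** 2).sum(axis=0) / Nk) + 1e-6
    return priors, means, stds
```

For the tel data, where each noisy file is derived from one original file, the estimated mixture weights should come out close to equal, consistent with the observation about Fig. 8 below.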

The upper panels of Figs. 8 and 9 show the histograms and probability density functions of the SNR of the tel and mic training sessions, respectively, for male speakers. Figure 8 shows that the three mixture components derived from the three sets of tel training files are well separated and have equal mixture coefficients, because the 15 and 6 dB noisy training files were derived from the original training files by adding noise at different SNRs. On the other hand, Fig. 9 shows that the SNRs of the original and 15 dB mic training files are not well separated. The lower panels of Figs. 8 and 9 show the posterior probabilities of SNR obtained from Eq. 8 for male speakers; those of female speakers are similar. During verification, the test utterance's SNR determines \(P_{1},\,P_{2}\) and \(P_{3}\) in Eq. 9 through the posterior probabilities in Figs. 8 and 9.

5.5 Fusion of SNR-dependent PLDA models

In linear fusion, the fused scores are a linear combination of the SNR-independent and SNR-dependent scores (Eq. 10). Either the hard-decision or the soft-decision SNR-dependent system can be fused with the SNR-independent system. In logistic regression fusion, according to Eq. 12, N subsystems can be combined. In particular, the SNR-independent system can be combined with either the hard- or the soft-decision SNR-dependent system, or all three systems can be combined. The fusion parameters \(\{\alpha _{0},\, \alpha _{1},\, \alpha _{2}, \ldots , \alpha _{N}\}\) in the logistic regression fusion were derived from the training scores obtained from the original, 15 dB and 6 dB i-vectors used for training the PLDA models.

As described in Sects. 3 and 4, score normalization is necessary for both SNR-independent and SNR-dependent systems. The Z-norm parameters represented by \(\mu\) and \(\sigma\) in Figs. 1, 2 and 3 were derived independently from the i-vectors used for training the PLDA models. Specifically, \(\mu _{cln}\) and \(\sigma _{cln}\) were derived from the original i-vectors, \(\mu _{15\,\mathrm{dB}}\) and \(\sigma _{15\,\mathrm{dB}}\) were derived from both the original and 15 dB i-vectors, and \(\mu _{6\,\mathrm{dB}}\) and \(\sigma _{6\,\mathrm{dB}}\) were derived from the original, 15 dB and 6 dB i-vectors. The reason for this arrangement is to ensure that the scores produced by the three PLDA models in the SNR-dependent system have comparable ranges. In addition, \(\mu _{multi}\) and \(\sigma _{multi}\) were derived by pooling the original, 15 dB and 6 dB i-vectors.

6 Results and discussions

6.1 Performance analysis of SNR-dependent systems

Table 2 shows the EER and minDCF (\(\min C_\mathrm{Primary}\) as defined in NIST 2012) achieved by the SNR-independent (pooled) PLDA and by the hard- and soft-decision SNR-dependent PLDA in CC2, CC4 and CC5 for both male and female speakers in NIST 2012 SRE. We consider the SNR-independent PLDA as the baseline. Table 3 shows the performance of the same systems in CC1 and CC3. The term “mix” in the tables denotes that the PLDA model was trained on both tel and mic data, and the term “mic” denotes that the PLDA model was trained on mic data only. In the following, we refer to the PLDA model trained on mic data only as mic PLDA. Likewise, we refer to the model trained on both mic and tel data as mix PLDA.

6.1.1 Hard-decision SNR-dependent systems

Different values of thresholds (\(\eta _{1}\) and \(\eta _{2}\) in Eq. 7) have been tried in the experiments and the best combination is reported in Table 2. It shows that hard-decision SNR-dependent PLDA with appropriate thresholds generally outperforms SNR-independent PLDA (baseline) especially in terms of EER.

Table 3 shows the performance of SNR-dependent PLDA in CC1 and CC3. As mentioned in Sect. 5, the SNR distributions of the clean mic, 15 dB mic and 6 dB mic training data overlap with each other (see Fig. 5, left panels), so only clean mic and 15 dB mic were used for training the SNR-dependent PLDA for CC1 and CC3. Therefore, we set \(\eta _{1}\) to \({-}\infty ,\) so that the 6 dB model is never selected. Similar to CC2, CC4 and CC5, hard-decision SNR-dependent PLDA performs better than SNR-independent PLDA (baseline).

6.1.2 Soft-decision SNR-dependent system

Figures 8 and 9 show the distribution and posterior probability of SNR of tel and mic training utterances for male speakers. Based on the posterior probability distribution of SNR of training utterances, the posterior probabilities of SNR of test utterances can be derived (Eq. 8). In particular, the posterior probabilities obtained in Fig. 8 were used for CC2, CC4 and CC5, and those obtained from Fig. 9 were used for CC1 and CC3. Then, based on Eq. 9, the scores of soft-decision SNR-dependent PLDA can be determined.

According to Table 2, the performance of soft-decision SNR-dependent PLDA, hard-decision SNR-dependent PLDA, and clean PLDA (trained on clean mic and tel) in CC2 and CC5 is comparable. This is mainly because both soft- and hard-decision SNR-dependent PLDA in CC2 and CC5 depend heavily on the clean PLDA model (Fig. 6, Eqs. 8, 9 and Fig. 8, lower panel).

On the other hand, in CC4, the performance of soft-decision SNR-dependent PLDA is poorer than that of the hard-decision counterpart. This is mainly because the soft-decision PLDA relies on the SNR posterior distributions to determine the weights for combining the scores from the three PLDA models. According to Fig. 8 (lower panel), the posterior probabilities (i.e., combination weights) for the 6 and 15 dB models cross over at around 7 dB. Moreover, according to Fig. 6, a fairly large number of utterances in CC4 have SNR below this crossover point, which means that the scores from the 6 dB model are heavily weighted for some of the noisy utterances. While the whole idea of SNR-dependent PLDA is to maximize the match between the SNRs of test and training utterances, we conjecture that if a test utterance is not too noisy, it is more appropriate to use the 15 dB PLDA model rather than the 6 dB one. Unfortunately, for the soft-decision PLDA, we have no control over the crossover point, and thus over the posterior probabilities. For hard-decision PLDA, however, we have full control over the decision threshold \(\eta _{1},\) which allows us to choose a threshold such that only very noisy utterances use the 6 dB model.

From Table 3, soft- and hard-decision SNR-dependent PLDA perform similarly in CC1 and CC3 for female speakers. However, in CC1 and CC3 for male speakers, soft-decision SNR-dependent PLDA performs worse than its hard-decision counterpart.

Performance of (1) SNR-independent (pooled) PLDA, (2) hard-decision SNR-dependent PLDA, (3) soft-decision SNR-dependent PLDA and (4) fusion systems in NIST 2012 SRE (core set) for male speakers (results for female speakers show similar patterns). Linear and LR denote linear fusion and logistic regression fusion described in Sect. 4, respectively. Sys1: fusion of SNR-independent and hard-decision SNR-dependent systems. Sys2: fusion of SNR-independent and soft-decision SNR-dependent systems. Sys3: fusion of SNR-independent, hard- and soft-decision SNR-dependent systems.

6.2 Performance analysis of fusion systems

Figure 10 shows the performance of linear fusion and logistic regression fusion. For the former, as described in Eq. 10, only two systems can be fused, and the fusion weight w must be adjusted based on the test scores. For logistic regression fusion, as described in Sect. 4.2 and Eq. 12, multiple systems can be combined, and the fusion parameters \(\{\alpha _{0},\, \alpha _{1},\, \alpha _{2}, \ldots , \alpha _{N}\}\) were derived from the training scores. The linear fusion weight w in Eq. 10 was set to 0.5. For CC2, CC4 and CC5, the decision thresholds used in the hard-decision SNR-dependent system were set to \(\eta _{1}=3\) and \(\eta _{2}=20.\) For CC3 (male), \(\eta _{1}={-}\infty\) and \(\eta _{2}=10.\) For CC1 (both genders) and CC3 (female), \(\eta _{1}={-}\infty\) and \(\eta _{2}=0.\) The decision thresholds were chosen empirically according to the histogram of the SNR distribution shown in Fig. 9.
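The two fusion schemes can be sketched as follows. The linear form assumes that w weights the SNR-dependent score and \(1-w\) the SNR-independent score; Eq. 10 may assign the weight the other way round. For logistic regression fusion, the sketch assumes the affine form \(s=\alpha _{0}+\sum _{i=1}^{N}\alpha _{i}s_{i}\) and fits the parameters on labeled development trials by plain batch gradient descent on the unweighted cross-entropy. Toolkits such as FoCal (Brümmer 2014) and BOSARIS (Brümmer and de Villiers 2011) instead optimize a prior-weighted objective. All function names are hypothetical.

```python
import numpy as np


def linear_fusion(s_indep, s_dep, w=0.5):
    """Two-system linear fusion; assumed form w * s_dep + (1 - w) * s_indep."""
    return w * np.asarray(s_dep, float) + (1.0 - w) * np.asarray(s_indep, float)


def train_lr_fusion(scores, labels, n_iter=2000, lr=0.1, l2=1e-4):
    """Fit alpha_0..alpha_N of s = alpha_0 + sum_i alpha_i * s_i by logistic regression.

    scores: (n_trials, N) array of development scores from N systems.
    labels: 1 for target trials, 0 for non-target trials.
    Unweighted cross-entropy with a small L2 penalty on alpha_1..alpha_N
    (an assumption; prior-weighted variants are common in practice).
    """
    scores = np.asarray(scores, float)
    X = np.hstack([np.ones((scores.shape[0], 1)), scores])  # prepend bias column
    y = np.asarray(labels, float)
    alpha = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ alpha))          # P(target | scores)
        grad = X.T @ (p - y) / len(y) + l2 * np.r_[0.0, alpha[1:]]
        alpha -= lr * grad
    return alpha


def apply_lr_fusion(scores, alpha):
    """Fused score for each trial: alpha_0 + scores @ alpha_1..N."""
    return alpha[0] + np.asarray(scores, float) @ alpha[1:]
```

Unlike the linear scheme, the logistic regression version accepts any number of subsystem score columns, which is what allows Sys3 (three subsystems) to be built.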

Figure 10 shows that the fusion systems perform significantly better than both the SNR-independent PLDA and the SNR-dependent PLDA. The remainder of this section analyzes the performance of the fusion systems with respect to fusion methods, fusion weights, decision strategies and decision thresholds (Sects. 6.2.1–6.2.4), and with respect to the Z-norm parameters (Sect. 6.3).

6.2.1 Performance with respect to fusion methods

Figure 11 shows the detection error tradeoff (DET) curves (Martin et al. 1997) for male speakers in CC4 using both linear and logistic regression fusion. The SNR-dependent PLDA subsystems in these two fusion systems use hard decisions. Figures 10 and 11 suggest that logistic regression fusion performs similarly to linear fusion. However, whereas the optimal linear fusion weight was determined from the test data, the logistic regression fusion weights were determined from development data. Logistic regression fusion is therefore more practical.
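A DET curve plots the miss probability against the false-alarm probability as the decision threshold is swept, and the EER is the operating point at which the two are equal. The sketch below, with hypothetical function names, computes both rates at every distinct score threshold and approximates the EER at the threshold where they are closest. It is a simple illustration, not the interpolated or convex-hull-based EER used by some toolkits. On an actual DET plot, both axes are warped by the inverse standard normal CDF (e.g., `scipy.stats.norm.ppf`).

```python
import numpy as np


def det_points(target_scores, nontarget_scores):
    """Miss and false-alarm rates at every distinct threshold (accept if score >= t)."""
    tar = np.asarray(target_scores, float)
    non = np.asarray(nontarget_scores, float)
    thresholds = np.unique(np.concatenate([tar, non]))   # sorted ascending
    p_miss = np.array([(tar < t).mean() for t in thresholds])
    p_fa = np.array([(non >= t).mean() for t in thresholds])
    return thresholds, p_miss, p_fa


def equal_error_rate(target_scores, nontarget_scores):
    """Approximate EER: mean of P_miss and P_fa at the threshold where they are closest."""
    _, p_miss, p_fa = det_points(target_scores, nontarget_scores)
    i = np.argmin(np.abs(p_miss - p_fa))
    return 0.5 * (p_miss[i] + p_fa[i])
```

For perfectly separated scores, such as targets `[3, 4, 5]` and non-targets `[0, 1, 2]`, the function returns an EER of 0; when target and non-target scores are identically distributed, it approaches 0.5.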

6.2.2 Performance with respect to fusion weights

Table 4 lists the performance of the fusion systems with different fusion weights under CC4. It can be observed that larger fusion weights tend to achieve better performance. On the other hand, logistic regression fusion can achieve comparable performance without this kind of exhaustive search for the fusion weight.
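The exhaustive search over the linear fusion weight can be sketched as a grid search that keeps the w giving the lowest EER on a set of labeled scores. The sketch assumes the fused score \(w\,s_\mathrm{dep}+(1-w)\,s_\mathrm{indep}\) and a 0.1 grid step; the function names and the grid are hypothetical. Selecting w this way on the evaluation trials is exactly the test-data dependence that logistic regression fusion avoids.

```python
import numpy as np


def eer(tar, non):
    """Approximate EER (accept if score >= threshold)."""
    thr = np.unique(np.concatenate([tar, non]))
    p_miss = np.array([(tar < t).mean() for t in thr])
    p_fa = np.array([(non >= t).mean() for t in thr])
    i = np.argmin(np.abs(p_miss - p_fa))
    return 0.5 * (p_miss[i] + p_fa[i])


def grid_search_weight(tar_indep, non_indep, tar_dep, non_dep, weights=None):
    """Exhaustive search over w for s = w * s_dep + (1 - w) * s_indep, scored by EER."""
    tar_indep, non_indep = np.asarray(tar_indep, float), np.asarray(non_indep, float)
    tar_dep, non_dep = np.asarray(tar_dep, float), np.asarray(non_dep, float)
    if weights is None:
        weights = np.round(np.arange(0.0, 1.01, 0.1), 2)   # 0.0, 0.1, ..., 1.0
    results = {}
    for w in weights:
        tar = w * tar_dep + (1.0 - w) * tar_indep
        non = w * non_dep + (1.0 - w) * non_indep
        results[float(w)] = eer(tar, non)
    best_w = min(results, key=results.get)
    return best_w, results
```

Each grid point requires scoring all trials again, and the chosen w is tied to the particular trial list; learning the weights once on development data sidesteps both issues.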

6.2.3 Performance with respect to decision strategies

Figure 12 shows the DET curves for male speakers in CC4 using both hard and soft decisions. The thresholds were set to \(\eta _{1}=3\) and \(\eta _{2}=20\) for hard-decision SNR-dependent PLDA, and the fusion weight was set to \(w=0.7.\) The results in this figure are consistent with those in Table 2(a). Evidently, the fusion systems outperform both subsystems. Despite the performance difference between the hard- and soft-decision SNR-dependent PLDA, Sys1 and Sys2 perform similarly.

6.2.4 Performance with respect to decision thresholds

As shown in Tables 2 and 3, the performance of hard-decision SNR-dependent PLDA is affected by the choice of the thresholds (\(\eta _{1}\) and \(\eta _{2}\)). This subsection investigates the effect of different thresholds on the fusion of SNR-independent PLDA and hard-decision SNR-dependent PLDA. The results on CC4 are shown in Table 5. It can be observed that the performance is comparable across different values of \(\eta _{1}\) and \(\eta _{2}.\) In Table 2, when \(\eta _{1}=5\) and \(\eta _{2}=25,\) the hard-decision SNR-dependent PLDA performs poorly in CC4. However, as shown in Table 5, the fusion system using \(\eta _{1}=5\) and \(\eta _{2}=25\) performs similarly to the fusion systems using other thresholds. This suggests that the fusion operation makes the hard-decision PLDA system less sensitive to \(\eta _{1}\) and \(\eta _{2}.\)

DET performance of SNR-independent PLDA, hard- and soft-decision SNR-dependent PLDA, and the fusion of SNR-independent PLDA with hard- or soft-decision SNR-dependent PLDA. The decision thresholds \(\eta _{1}\) and \(\eta _{2}\) in Eq. 7 were set to 3 and 20, respectively. The linear fusion weight w in Eq. 10 was set to 0.7.

6.3 Sensitivity analysis of Z-norm parameters

The Z-norm parameters are an important factor affecting the performance of the SNR-dependent systems and the fusion systems. An experiment was performed to investigate the sensitivity of system performance to the Z-norm parameters. In the experiment, the Z-norm parameters (\(\mu _\mathrm{cln},\,\sigma _\mathrm{cln}\)) and (\(\mu _{15\,\mathrm{dB}},\, \sigma _{15\,\mathrm{dB}}\)) in Fig. 1 were first obtained from the scores of the test utterances. Then, the values of \(\mu _\mathrm{cln}\) and \(\mu _{15\,\mathrm{dB}}\) were perturbed by \({\pm }0.1\sigma _\mathrm{cln}\) and \({\pm }0.1\sigma _{15\,\mathrm{dB}},\) respectively. Figure 13 shows that the performance of SNR-dependent PLDA remains better than that of SNR-independent PLDA even when the Z-norm parameter \(\mu _\mathrm{cln}\) is perturbed by \({\pm }0.1\sigma _\mathrm{cln}.\) This suggests that the fusion systems are fairly robust to deviations of the Z-norm parameters. Similar results were also obtained by perturbing \(\mu _{15\,\mathrm{dB}}.\)
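Z-norm maps a raw score \(s\) to \((s-\mu )/\sigma\). The sketch below, with hypothetical helper names, shows what the perturbation in this experiment does. Shifting \(\mu\) by \(+0.1\sigma\) lowers every normalized score produced by that PLDA model by exactly 0.1 (and a shift of \(-0.1\sigma\) raises it by 0.1). The perturbation therefore offsets one model's scores by a constant relative to the scores of the other SNR-dependent models.

```python
import numpy as np


def znorm_params(scores):
    """Mean and (population) standard deviation of a set of scores."""
    s = np.asarray(scores, float)
    return s.mean(), s.std()


def znorm(score, mu, sigma):
    """Z-normalized score (s - mu) / sigma."""
    return (np.asarray(score, float) - mu) / sigma


def perturbed_znorm(scores, mu, sigma, delta=0.1):
    """Z-norm with the mean perturbed by delta * sigma (sensitivity check)."""
    return znorm(scores, mu + delta * sigma, sigma)
```

Since \((s-(\mu +0.1\sigma ))/\sigma =(s-\mu )/\sigma -0.1\), the perturbed and unperturbed normalized scores differ by exactly 0.1 for every trial.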

7 Conclusions

In this paper, the fusion of SNR-dependent PLDA models was presented. Both the SNR-dependent PLDA models and their fusion were evaluated on the core set of NIST 2012 SRE. The performance of SNR-dependent PLDA depends on the decision strategy, the decision thresholds, the Z-norm parameters and the degree of mismatch between the SNR of the test utterances and the SNR-dependent PLDA models. SNR-dependent PLDA outperforms the baseline in 9 out of 10 conditions (CC1–5 for both male and female speakers), especially in terms of EER.

Fusion of SNR-dependent and SNR-independent PLDA models brings benefits regardless of the decision strategy, decision thresholds and Z-norm parameters. The fusion operation makes the hard-decision PLDA system less sensitive to \(\eta _{1}\) and \(\eta _{2},\) and the fusion systems are fairly robust to deviations of the Z-norm parameters. Moreover, while logistic regression fusion achieves performance comparable to that of linear fusion, it does not require a brute-force search for the fusion weight. Fusion of SNR-independent PLDA and soft-decision SNR-dependent PLDA with logistic regression, which is the most favoured configuration since it does not need any prior information about the test utterances, brings benefits in 8 out of 10 conditions.

References

Bishop, C. (2006). Pattern recognition and machine learning. New York: Springer.

Brümmer, N. (2014). FoCal. https://sites.google.com/site/nikobrummer/focal.

Brümmer, N., & de Villiers, E. (2011). The Bosaris toolkit user guide: Theory, algorithms and code for binary classifier score processing. Documentation of Bosaris toolkit. https://sites.google.com/site/bosaristoolkit/

Dehak, N., Kenny, P., Dehak, R., Dumouchel, P., & Ouellet, P. (2011). Front-end factor analysis for speaker verification. IEEE Transactions on Audio, Speech, and Language Processing, 19(4), 788–798.

Ferrer, L., Bratt, H., Burget, L., Cernocky, H., Glembek, O., Graciarena, M., et al. (2011). Promoting robustness for speaker modeling in the community: The PRISM evaluation set. In Proceedings of NIST 2011 workshop.

Garcia-Romero, D., & Espy-Wilson, C. (2011). Analysis of i-vector length normalization in speaker recognition systems. In Proceedings of interspeech (pp. 249–252).

Garcia-Romero, D., Zhou, X., & Espy-Wilson, C. (2012). Multicondition training of Gaussian PLDA models in i-vector space for noise and reverberation robust speaker recognition. In 2012 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 4257–4260).

Hasan, T., & Hansen, J. (2013). Acoustic factor analysis for robust speaker verification. IEEE Transactions on Audio, Speech, and Language Processing, 21(4), 842–853.

Hasan, T., & Hansen, J. (2014). Maximum likelihood acoustic factor analysis models for robust speaker verification in noise. IEEE Transactions on Audio, Speech, and Language Processing, 22(2), 381–391.

Hasan, T., Sadjadi, S. O., Liu, G., Shokouhi, N., Boril, H., & Hansen, J. H. L. (2013). CRSS system for 2012 NIST speaker recognition evaluation. In 2013 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 6783–6787).

Hatch, A., Kajarekar, S., & Stolcke, A. (2006). Within-class covariance normalization for SVM-based speaker recognition. In Proceedings of the 9th international conference on spoken language processing, Pittsburgh, PA, USA (pp. 1471–1474).

Kenny, P. (2010). Bayesian speaker verification with heavy-tailed priors. In Proceedings of Odyssey 2010: The speaker and language recognition workshop, Brno, Czech Republic.

Kenny, P., Boulianne, G., Ouellet, P., & Dumouchel, P. (2007). Joint factor analysis versus eigenchannels in speaker recognition. IEEE Transactions on Audio, Speech and Language Processing, 15(4), 1435–1447.

Kenny, P., Ouellet, P., Dehak, N., Gupta, V., & Dumouchel, P. (2008). A study of inter-speaker variability in speaker verification. IEEE Transactions on Audio, Speech and Language Processing, 16(5), 980–988.

Leeuwen, D. A., & Saeidi, R. (2013). Knowing the non-target speakers: The effect of the i-vector population for PLDA training in speaker recognition. In 2013 IEEE international conference on acoustics, speech and signal processing (ICASSP), Vancouver, BC, Canada (pp. 6778–6782).

Lei, Y., Burget, L., Ferrer, L., Graciarena, M., & Scheffer, N. (2012). Towards noise-robust speaker recognition using probabilistic linear discriminant analysis. In 2012 IEEE international conference on acoustics, speech and signal processing (ICASSP), Kyoto, Japan (pp. 4253–4256).

Lei, Y., Burget, L., & Scheffer, N. (2013). A noise robust i-vector extractor using vector Taylor series for speaker recognition. In 2013 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 6788–6791).

Lei, Y., Mclaren, M., Ferrer, L., & Scheffer, N. (2014). Simplified VTS-based i-vector extraction in noise-robust speaker recognition. In 2014 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 4065–4069).

Li, Q., & Huang, Y. (2010). Robust speaker identification using an auditory-based feature. In 2010 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 4514–4517).

Mak, M. W., & Yu, H. B. (2013). A study of voice activity detection techniques for NIST speaker recognition evaluations. Computer Speech and Language, 28(1), 295–313.

Mallidi, S., Ganapathy, S., & Hermansky, H. (2013). Robust speaker recognition using spectro-temporal autoregressive models. In Proceedings of interspeech.

Martin, A., Doddington, G., Kamm, T., Ordowski, M., & Przybocki, M. (1997). The DET curve in assessment of detection task performance. In Proceedings of Eurospeech’97 (pp. 1895–1898).

Martinez, D., Burget, L., Stafylakis, T., Lei, Y., Kenny, P., & Lleida, E. (2014). Unscented transform for i-vector-based noisy speaker recognition. In 2014 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 4070–4074).

McLaren, M., Mandasari, M., & Leeuwen, D. (2012). Source normalization for language-independent speaker recognition using i-vectors. In Proceedings of Odyssey 2012: The speaker and language recognition workshop (pp. 55–61).

McLaren, M., Scheffer, N., Graciarena, M., Ferrer, L., & Lei, Y. (2013). Improving speaker identification robustness to highly channel-degraded speech through multiple system fusion. In 2013 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 6773–6777).

Ming, J., Hazen, T., Glass, J., & Reynolds, D. (2007). Robust speaker recognition in noisy conditions. IEEE Transactions on Audio, Speech and Language Processing, 15(5), 1711–1723.

Neto, S. F. D. C. (1999). The ITU-T software tool library. International Journal of Speech Technology, 2(4), 259–272.

NIST. (2012). The NIST year 2012 speaker recognition evaluation plan. http://www.nist.gov/itl/iad/mig/sre12.cfm.

Pang, X. M., & Mak, M. W. (2014). Fusion of SNR-dependent PLDA models for noise robust speaker verification. In ISCSLP’2014 (pp. 619–623).

Pelecanos, J., & Sridharan, S. (2001). Feature warping for robust speaker verification. In Proceedings of Odyssey 2001: The speaker and language recognition workshop, Crete, Greece (pp. 213–218).

Prince, S., & Elder, J. (2007). Probabilistic linear discriminant analysis for inferences about identity. In IEEE 11th international conference on computer vision, 2007 (ICCV 2007, pp. 1–8).

Rajan, P., Afanasyev, A., Hautamäki, V., & Kinnunen, T. (2014). From single to multiple enrollment i-vectors: Practical PLDA scoring variants for speaker verification. Digital Signal Processing Online. doi:10.1016/j.dsp.2014.05.001.

Rajan, P., Kinnunen, T., & Hautamäki, V. (2013). Effect of multicondition training on i-vector PLDA configurations for speaker recognition. In Proceedings of interspeech (pp. 3694–3697).

Rao, W., & Mak, M. W. (2013). Boosting the performance of i-vector based speaker verification via utterance partitioning. IEEE Transactions on Audio, Speech and Language Processing, 21(5), 1012–1022.

Reynolds, D. A., Quatieri, T. F., & Dunn, R. B. (2000). Speaker verification using adapted Gaussian mixture models. Digital Signal Processing, 10(1–3), 19–41.

Sadjadi, S. O., Hasan, T., & Hansen, J. (2012). Mean Hilbert envelope coefficients (MHEC) for robust speaker recognition. In Proceedings of interspeech (pp. 1696–1699).

Sadjadi, S., Pelecanos, J., & Zhu, W. (2014). Nearest neighbor discriminant analysis for robust speaker recognition. In Proceedings of interspeech (pp. 1860–1864).

Saeidi, R., & van Leeuwen, D. A. (2012). The Radboud University Nijmegen submission to NIST SRE-2012. In Proceedings of the NIST speaker recognition evaluation workshop.

Shao, Y., & Wang, D. (2008). Robust speaker identification using auditory features and computational auditory scene analysis. In 2008 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 1589–1592).

Yu, C., Liu, G., Hahm, S., & Hansen, J. (2014). Uncertainty propagation in front end factor analysis for noise robust speaker recognition. In 2014 IEEE international conference on acoustics, speech and signal processing (ICASSP, pp. 4045–4049).

Yu, H., & Mak, M. (2011). Comparison of voice activity detectors for interview speech in NIST speaker recognition evaluation. In Proceedings of interspeech (pp. 2353–2356).

Acknowledgments

This work was in part supported by The Hong Kong Research Grant Council (Grant Nos. PolyU 152117/14E and PolyU 152068/15E) and The Hong Kong Polytechnic University (Grant No. 4-ZZCX).


Cite this article

Pang, X., Mak, MW. Noise robust speaker verification via the fusion of SNR-independent and SNR-dependent PLDA. Int J Speech Technol 18, 633–648 (2015). https://doi.org/10.1007/s10772-015-9310-8
