Abstract

This long-term single-group study was conducted with pre-service special education elementary teachers taking a required graduate-level course on integrating technology into mathematics and science instruction at a public university in New York City. The purpose of this study was to explore whether Technological Knowledge (TK), Pedagogical Knowledge (PK), Content Knowledge in mathematics and science (CKM and CKS), and Technological Pedagogical Content Knowledge (TPACK) are independent constructs in the TPACK framework, and to develop instruments for assessing each basic domain of the theoretical TPACK framework. Exploratory and confirmatory factor analyses of the developed instruments suggest that the TPACK construct is independent from TK, PK, CKM and CKS. Further analysis using multiple linear regression showed that TK, PK, and CK are not predictors of TPACK. These findings provide an opportunity for independent assessment of the different types of teacher knowledge defined by the TPACK framework. This could help teacher preparation programs evaluate the effectiveness of courses that prepare teachers to integrate technology.


1 Introduction

New advances in educational technologies, the increasing adoption of technologies in schools, and a strong emphasis on the importance of technological tools in recently implemented standards (Common Core State Standards for Mathematics, http://www.corestandards.org/Math/; Next Generation Science Standards, https://www.nextgenscience.org/) have placed new demands on classroom teachers to be knowledgeable and effective at teaching with technology and assessing the learning of students who use technology (Hutchison and Reinking 2011). Research shows that many teacher education graduates feel unprepared to use technology to support student learning as they transition to teaching (Dawson 2008; Ottenbreit-Leftwich et al. 2010). This places higher demands on teacher preparation programs to ensure that novice teachers are capable of selecting, evaluating, and using appropriate technologies and resources to create experiences that advance student engagement and learning (U.S. Department of Education 2017). Teacher educators have increasingly looked to the theoretical framework of Technological Pedagogical Content Knowledge (TPACK), which describes what teachers need to know to effectively integrate technology into their teaching practice (Mishra and Koehler 2006). In the last decade, the TPACK framework has quickly become a widely referenced conceptual framework within teacher education, particularly as teacher education programs redesign their curricula to prepare teachers to use technology effectively (Chai et al. 2010; Niess 2011; Kaplon-Schilis and Lyublinskaya 2015).

The TPACK framework defines seven domains of teacher knowledge: TK (Technological Knowledge), PK (Pedagogical Knowledge), CK (Content Knowledge), PCK (Pedagogical Content Knowledge), TCK (Technological Content Knowledge), TPK (Technological Pedagogical Knowledge), and TPACK (Technological Pedagogical Content Knowledge). The TPACK domain, as defined by Mishra and Koehler (2006), describes the nature of the knowledge that teachers require to teach with technology while addressing the complex nature of teacher knowledge for specific subject areas (e.g., mathematics or science) within a specific context. However, some researchers argue that it may be difficult to distinguish the boundaries between the different knowledge components of the TPACK framework (Graham 2011). Archambault and Barnett (2010) argue further that TPACK domains are not separate from each other and that measuring these domains is “complicated and convoluted” (p. 1656), and therefore it may be difficult to create instruments for measuring and assessing the TPACK domain.

In order to address the effectiveness of teacher education programs, TPACK constructs need to be precisely defined, and reliable instruments need to be created “for measuring and assessing TPACK in a variety of context” (Shinas et al. 2013, p. 340). According to Niess (2008), providing the TPACK framework is not sufficient. Frameworks need to be tested in the real world, and the only way to do so is by developing appropriate instruments that are both “consistent with the theory and measure what they set out to measure” (Koehler et al. 2011, p. 17). Since the development of the TPACK framework by Mishra and Koehler (2006), researchers have been developing a variety of instruments to measure pre-service and in-service teachers’ TPACK. Abbitt (2011) argues that the task of developing an efficient, reliable and valid instrument is difficult, more so when trying to measure “how teacher’s knowledge influences actual teaching practices” (p. 288). Researchers question what the best approach to developing pre-service teachers’ TPACK would be, and what kind of knowledge base pre-service teachers need in order to use appropriate technology tools to effectively facilitate all students’ learning of specific content (Brantley-Dias and Ertmer 2013). Some researchers debate whether it is sufficient for pre-service teachers’ instruction to focus only on the construction of a “unique body of TPACK knowledge” (Cherner and Smith 2016). Others argue that teacher preparation programs should focus on training pre-service teachers separately in technology (TK), pedagogy (PK), and content (CK), and assume spontaneous integration of these domains into TPACK knowledge (Hughes 2008). However, there is insufficient research on how TK, PK, and CK affect TPACK.

In order to analyze whether TK, PK and CK have an effect on TPACK, we need to determine whether the theoretical domains defined by the TPACK framework can be measured independently. Therefore, the purpose of the study was to develop instruments that could measure each basic theoretical TPACK domain independently and to analyze whether TK, PK, and CK are predictors of the TPACK of pre-service special education elementary school teachers. The following research question guided the study: Is there a relationship between the basic domains of the TPACK framework (TK, PK, and CK) and the TPACK domain of pre-service special education elementary school teachers?

1.1 TPACK Framework

TPACK was developed as a conceptual framework for including technological knowledge in Shulman’s (1986) framework of “Pedagogical Content Knowledge (PCK)”. Mishra and Koehler (2006) expanded Shulman’s framework by adding knowledge of technology as a separate domain, since technology, especially digital technology, has changed (or can change) the nature of the classroom. The TPACK framework is identified with the knowledge that teachers need for teaching with technology in their specific content areas and within a specific context (Niess 2008). The TPACK framework includes seven domains (see Fig. 1) that can be further classified as basic or secondary. The basic domains are TK, knowledge of and proficiency with technology tools (Shinas et al. 2013); PK, knowledge of the educational theories and instructional methodologies needed to develop appropriate instruction; and CK, knowledge of the subject matter. The secondary domains are TCK, knowledge of using technology tools to support specific content matter; PCK, knowledge needed to develop and deliver effective content-specific instruction; TPK, knowledge of how technology can support teaching and learning; and TPACK, knowledge that supports a teacher’s ability to integrate content, pedagogy and technology in a unique context (subject matter, grade level, teachers, school factors, demographics, culture, learning environment, etc.).

Fig. 1 The components of the TPACK framework

Niess et al. (2009) developed a schema with detailed qualitative descriptors for the five levels of TPACK (i.e., recognizing, accepting, adapting, exploring, and advancing), and for each of the four components of TPACK (overarching conception, student understanding, curriculum, and instructional strategies) adapted from Grossman’s (1989) four components of PCK (Fig. 2). This qualitative schema was used to analyze the development of pre-service teachers’ TPACK in teaching mathematics with spreadsheets (Niess et al. 2010).

1.2 TK, PK, CK and TPACK Domains

“Teachers, who have TPACK, act with an intuitive understanding of the complex interplay between the three basic components of knowledge CK, PK and TK” (Baran et al. 2011, p. 371). Recent studies focusing on the interaction between the basic and secondary domains of the TPACK framework have produced mixed results. The question of whether the basic domains of the TPACK framework affect teachers’ TPACK domain has not yet been answered.

The most commonly used method for assessing pre-service teachers’ TPACK is self-report, collected through surveys. According to Dong et al. (2015), the basic domains of the TPACK framework (TK, PK, CK) and the secondary domains (TPK, TCK, and PCK) “should act as epistemic resources to support the teacher’s development of TPACK” (p. 159). Some researchers argue that the basic domains of the TPACK framework are predictors of teachers’ TPACK, with PK having the largest impact on pre-service teachers’ TPACK (Chai et al. 2010). Other researchers suggest that although TK, PK, and CK are correlated with TPACK, TK is not a significant predictor of TPACK (Kartal and Afacan 2017). Mishra and Koehler (2006) propose that the basic domains of the TPACK framework might predict TCK, PCK, TPK and TPACK, but also indicate the need for further investigation. Koh et al. (2014) show that although TK and PK positively affect TPACK, CK does not. Another study with pre-service teachers proposes that TK, PK, and CK have only indirect effects on TPACK, occurring through “the second layer of knowledge domains: TPK, TKC, and PCK” (Chai et al. 2013, p. 41). Pamuk et al. (2015) also suggest that the second-level knowledge domains (TPK, TCK, PCK) have a stronger impact on predicting pre-service teachers’ TPACK development than the basic domains (TK, PK and CK). A study of pre-service science teachers’ perceptions of TPACK suggests that TK, PK, CK, TCK, TPK, and PCK all correlate positively with TPACK (Lin et al. 2013). Kaplon-Schilis and Lyublinskaya (2015) used external assessment to collect data for analyzing changes in the TK, PK, CK and TPACK of pre-service special education elementary teachers. They found that the basic domains of TPACK were not influenced by a technology-based pedagogical course for teaching mathematics and science, while TPACK significantly improved. This could indicate the independence of the TPACK domain from the basic domains of the TPACK framework. To further examine whether the basic domains affect teachers’ TPACK, we needed to investigate whether the basic domains of the TPACK framework can be measured independently from the TPACK domain and, if so, what instruments could be used to measure teachers’ TK, PK, CK, and TPACK.

2 Assessing TPACK

Assessing the complex construct of TPACK has been a challenge for the educational community. As a result, various types of data have been used in research to assess teachers’ TPACK. According to several reviews of the current TPACK studies (Tondeur et al. 2012; Mouza 2016), the commonly used techniques for measuring pre-service teachers’ TPACK include analysis of teaching artifacts, classroom observations, interviews, self-assessment surveys, and questionnaires. The TPACK measurement instruments can be categorized into two types: self-assessment (most commonly used across TPACK studies) and external assessment (based on analysis of observed behavior and teaching artifacts). Studies focusing on issues related to measuring TPACK can be further categorized as qualitative (Archambault 2016) and quantitative (Koh et al. 2014).

Self-assessment (or self-report) data are mostly collected through surveys in which teachers report perceptions of their own TPACK. In most studies, researchers used surveys to measure teachers’ knowledge in the seven domains of the TPACK framework (TK, PK, CK, TPK, TCK, PCK, and TPACK) using five- to seven-point Likert scales (Chai et al. 2010; Archambault 2016; Shinas et al. 2013). One of the commonly used surveys was developed and validated by Schmidt et al. (2009) to examine changes in the perceived TPACK of pre-service teachers during a semester of an introductory instructional technology course. Another commonly used survey, developed and validated by Lee and Tsai (2010), allows assessment of teachers’ self-efficacy in terms of their TPACK. Although self-assessment instruments for measuring TPACK are easy to use, cost-effective, and able to reach a large sample of participants, they capture teachers’ perceptions of their TPACK, which could differ significantly from their actual TPACK.

The advantage of external assessment is its higher objectivity compared to self-reports. External assessment of TPACK can be performed based on observed behavior and teaching artifacts. Most studies that analyzed teachers’ observed behavior used various qualitative techniques to measure TPACK based on meetings and interviews (Koehler et al. 2011), instructional observations (Mueller 2010; Hofer and Grandgenett 2012), and team conversations (Mishra et al. 2009). Fewer studies that focus on observed behaviors use quantitative analysis. Lyublinskaya and Tournaki (2012) developed a scoring procedure for scripted narratives of instructional observations and teacher presentations at professional development meetings in order to measure teachers’ TPACK.

Another way of assessing teachers’ TPACK is by using teaching artifacts such as teachers’ electronic portfolios (Niess et al. 2010) and lesson plans (Harris et al. 2010; Lyublinskaya and Tournaki 2014). Harris et al. (2010) developed the first quantitative measure of TPACK for lesson plans, which assessed TPK, TCK, and TPACK. Lyublinskaya and Tournaki (2012) developed and validated the TPACK levels rubric based on Niess’ schema for teacher growth for technology integration (Niess et al. 2009). This rubric was used in this study to assess pre-service teachers’ TPACK based on their lesson plans.

While all these studies suggest using various assessment instruments for measuring the different domains of teacher knowledge identified in the TPACK framework, the question of whether the basic domains of the TPACK framework affect teachers’ TPACK is still not addressed. Moreover, it is still not clear whether the TPACK domain can be measured independently from the basic domains. Therefore, the purpose of this study was to analyze whether the technological knowledge (TK), pedagogical knowledge (PK), content knowledge (CK) in mathematics and science, and TPACK of pre-service special education elementary school teachers could be independently measured, and then to explore the relationship between these domains.

3 The Study Context

Data were collected from all pre-service special education elementary school teachers enrolled in a required graduate course on integrating technology at a public university in New York City over a period of four consecutive semesters. The objective of the course was to develop pre-service teachers’ TPACK for teaching mathematics and science to students with disabilities. The course met weekly for 15 two-hour sessions, each with a one-hour lab session; all sections had the same instructor. Outside of the class meetings, pre-service teachers were expected to spend 3–5 h per week on computers to complete course assignments.

The course examined the use of traditional and emerging instructional technologies that are necessary to foster inquiry, enhance learning and reduce the achievement gap for students with learning disabilities. Pre-service teachers learned how to use various digital tools in the elementary mathematics and science classroom (e.g., Microsoft Office: advanced features of Word, PowerPoint, and Excel; SMART Board and SMART Notebook software: interactive features and the lesson activities toolkit; Geometer’s Sketchpad: dynamic geometry software for teaching and learning mathematics in grades 3–12; data collection: sensors/probes with various interfaces and platforms; calculators; and Web 2.0 tools such as blogs). Within the course, pre-service teachers began to understand what role digital technology can play in teaching and learning mathematics and science in inclusion classrooms. Pre-service teachers used specific instructional technology tools to develop mathematics or science activities and lesson plans that could be used by all students, including those with learning disabilities. After learning how to differentiate instruction with technology, how to assess students’ learning using technology, how to use technology to adapt instruction for learners with disabilities, and how to engage and include all types of learners while using various instructional technologies, pre-service teachers developed and taught two lessons, one in mathematics and one in science, in a variety of urban elementary classrooms.

4 Participants

During this study, a total of 116 pre-service teachers completed this required pedagogy course. Males comprised 7% of the group and females 93%. The majority of participants (67%) were between the ages of 23 and 26, 7% were between the ages of 18 and 22, 15% were between the ages of 27 and 32, and 11% were 33 years of age or older. The participants were considered pre-service teachers since they were enrolled in an initial certification program for special education; however, 39% of participants held a childhood teaching license, 15% an early childhood license, 3% a special education childhood license, 3% a special education adolescent license, and 40% did not have a teaching license. The study sample was representative of the population that annually enrolls in this special education childhood education Master’s program.

On the tests used in this study the participants scored 60% on the test assessing PK, 70% on the test assessing TK, 80% on the test assessing CKM, and 73% on the test assessing CKS. These results are representative of the population involved in this study (Kaplon-Schilis and Lyublinskaya 2015).

5 Instruments

5.1 TKT: Technological Knowledge Test

In order to develop a basic technology literacy test aligned to the course curriculum, seven specific content areas were identified in accordance with the course textbook (O’Bannon and Puckett 2010). These areas included (1) hardware and software, (2) the Internet, (3) word processing, (4) digital images and diagrams, (5) databases, (6) spreadsheets, and (7) web authoring and multimedia (Table 1). Initially, 51 multiple-choice questions assessing knowledge in these seven areas were selected from the test bank provided by the publisher of the textbook. In order to analyze construct validity, the test was sent to three experts in technology and technological literacy. Based on their comments, 5 items were eliminated due to ambiguity, resulting in a total of 46 questions requiring 30 min to complete. The experts also confirmed the distribution of questions into the seven identified content areas. The cumulative scores for each content area were defined as variables TKA–TKG and used for further analysis.

5.2 PKT: Pedagogical Knowledge Test

The Pedagogical Knowledge Test was composed of multiple-choice items from the New York State Teacher Certification Exam (NYSTCE) practice test for the Elementary Assessment of Teaching Skills (http://www.nystce.nesinc.com). The validation process for the New York State Teacher Certification Examinations™ (NYSTCE®) tests followed professionally accepted procedures for the validation of certification tests and was monitored by the New York State Education Department. New York Education Law, the Commissioner’s Regulations concerning teacher certification, the New York State Learning Standards, and the input of thousands of practicing public school educators and college and university faculty were all considered in establishing the content validity of the tests. Validity evidence was gathered pertaining to the overall purpose of the tests, the content to be measured, and the specific test questions measuring test content (http://www.nystce.nesinc.com/content/docs/NYSTCE_Validation_Reliability.pdf). Reliability information is accumulated with each NYSTCE test administration. Estimates of reliability for the NYSTCE tests required of all candidates for certification are typically in the range of 0.95–0.98.

The test included 19 multiple-choice questions that illustrate the objectives of the test—one question for each objective—in three distinct content areas: (1) student development and learning, (2) instruction and assessment, and (3) professional environment (Table 1). The test required 30 min to complete. The cumulative scores for each content area were defined as variables PKA–PKC and used for further analysis.

5.3 CKMT: Content Knowledge in Mathematics Test

The CKMT was composed of released items from the 2011 National Assessment of Educational Progress (NAEP) Grade 4 mathematics test, assembled using the NAEP online test creation tool (http://nces.ed.gov/nationsreportcard/). The validity and reliability of the 2011 NAEP Grade 4 mathematics test have been verified based on nationally representative samples of 209,000 fourth-graders. The Pearson correlation, r, was used to assess scoring reliability. Correlations ranged from .85 to 1.00, indicating high scoring reliability. Inter-rater agreement was analyzed for each constructed-response item. Cohen’s Kappa for the constructed-response items ranged from .75 to .99. The percentage of exact matches between the first and second scores ranged between 87% and 100%, indicating a high degree of agreement (https://nces.ed.gov/nationsreportcard/tdw/analysis/).

The NAEP mathematics framework directs that questions be based on the following five content areas: (1) number properties and operations; (2) measurement; (3) geometry; (4) data analysis, statistics, and probability; and (5) algebra. The framework also specifies that each of these content areas should occupy a certain proportion of the assessment (https://nces.ed.gov/nationsreportcard/mathematics/). Table 2 shows the percentage distribution of items by content area in the CKMT in comparison with the NAEP test.

The CKMT included 34 multiple-choice, short and extended response items. The test required 30 min to complete. The cumulative scores for each content area were defined as variables CKMA–CKME (Table 1) and used for further analysis.

5.4 CKST: Content Knowledge in Science Test

The CKST was composed of released items from the 2009 National Assessment of Educational Progress (NAEP) Grade 4 science test, assembled using the NAEP online test creation tool (http://nces.ed.gov/nationsreportcard/). The validity and reliability of the 2009 NAEP Grade 4 science test have been verified based on nationally representative samples of 156,500 fourth-graders. The Pearson correlation, r, was used to assess scoring reliability. Correlations ranged from .78 to .98, indicating high scoring reliability. Inter-rater agreement was analyzed for each constructed-response item. Cohen’s Kappa for the constructed-response items ranged from .90 to 1.00. The percentage of exact matches between the first and second scores ranged between 83% and 100%, indicating a high degree of agreement (https://nces.ed.gov/nationsreportcard/tdw/analysis/).

The NAEP Science Framework describes the assessment content and how students’ responses are evaluated. The assessment was designed to measure students’ knowledge of three broad content areas reflecting the science content students are generally exposed to across the K-12 curriculum: (1) physical science, (2) life science, and (3) Earth and space sciences (https://nces.ed.gov/nationsreportcard/science). The framework recommends an approximately equal distribution of questions across the three content areas at grade four. In addition to science content, four science practices describe how students use their science knowledge by measuring what they are able to do with the science content: identifying science principles, using science principles, using scientific inquiry, and using technological design. Sixty percent of the 2009 assessment focused on conceptual understanding (i.e., identifying and using science principles), 30% focused on scientific inquiry, and 10% focused on using technological design.

The CKST included 25 multiple-choice and constructed-response questions that required 30 min to complete. The questions were almost equally divided among the three content areas; 60% of the questions focused on identifying and using science principles, 36% on scientific inquiry, and 4% on using technological design. The cumulative scores for each content area were defined as variables CKSA–CKSC (Table 1) and used for further analysis.

5.5 TPACK Levels Rubric (Lyublinskaya and Tournaki 2012)

The TPACK levels rubric is designed to measure pre-service teachers’ level of TPACK in four specific components of TPACK: Conception, Students, Curriculum, and Instruction (Table 1). Two performance indicators have been developed for each level of each component, consistent with the qualitative descriptors developed by Niess et al. (2009) and the principles for effective technology use (Goldenberg 2000). The range of possible scores for each component is from 0 to 5, where the component score can be an integer (both performance indicators are met) or a half-integer (one of the two performance indicators is met). The score is assigned for each component independently. In order to achieve a particular level of TPACK, the pre-service or in-service teacher must meet both indicators of that level for each component. Thus, the lowest score across all four components determined the teacher’s total TPACK score. This score provided a conservative measure of the teacher’s level of TPACK development. The rubric was tested for reliability and validity. Content validity was ensured by employing two TPACK experts, both researchers who were involved in the initial development of the TPACK conceptual framework. They reviewed the rubric and provided written comments in response to three specific free-response questions about the rubric. The Pearson correlation, r, was used to assess scoring reliability. Correlations ranged from .61 to .68, indicating acceptable scoring reliability. Confirmatory Factor Analysis using varimax rotation with Kaiser Normalization was performed on two sets of 150 lesson plans collected from pre-service special education elementary school teachers. The procedure confirmed the four factors corresponding to the four components of TPACK for each set of lesson plans (Lyublinskaya and Tournaki 2014).
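The scoring rule described above (half-point component scores from 0 to 5, with the lowest component score taken as the total) can be sketched as follows. This is a minimal illustration, not the authors' scoring software; the function name and the example scores are hypothetical.

```python
# Component names follow the rubric description; everything else is illustrative.
COMPONENTS = ("Conception", "Students", "Curriculum", "Instruction")


def total_tpack_score(component_scores):
    """Return the total TPACK score as the lowest of the four component scores."""
    for name in COMPONENTS:
        score = component_scores[name]
        # Valid scores run from 0 to 5 in half-point steps.
        if not (0 <= score <= 5) or score * 2 != int(score * 2):
            raise ValueError(f"invalid score for {name}: {score}")
    return min(component_scores[name] for name in COMPONENTS)


# Hypothetical lesson-plan scores, for illustration only.
example = {"Conception": 3.0, "Students": 2.5, "Curriculum": 3.5, "Instruction": 3.0}
print(total_tpack_score(example))  # 2.5
```

Taking the minimum rather than the mean is what makes the total a conservative measure: a single weak component caps the overall level.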

6 Data Analysis

Pohlmann (2004) suggests using factor analysis in evaluating the construct validity of new testing instruments. In order to analyze whether 22 variables defined in this study (see Table 1) form factors that load into five TPACK domains and therefore could be measured independently, we first tested assumptions of exploratory factor analysis (EFA).

6.1 Testing Assumptions

Correlation analysis performed on the 22 variables indicated that most items correlated with small to medium r with at least one other item, suggesting reasonable factorability (Field 2013). Bartlett’s test of sphericity was significant (χ² = 1119, df = 231, p < .001), confirming that the R-matrix is not an identity matrix.

Only one correlation, between the variables Conceptions and Students, was larger than the suggested cutoff value of 0.9 that could indicate multicollinearity issues. However, an earlier study confirmed that these two variables were indeed independent (Lyublinskaya and Tournaki 2014). Further, the determinant of the correlation matrix was 0.00002825 > 0.000001, supporting the assumption that the R-matrix was not singular.
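As a consistency check, Bartlett's statistic can be recomputed from the reported determinant using the standard formula χ² = −[(n − 1) − (2p + 5)/6] · ln|R| with df = p(p − 1)/2. The sketch below assumes n = 116 participants and p = 22 variables as stated in the text; the function name is illustrative.

```python
import math


def bartlett_sphericity(n, p, det_r):
    """Bartlett's chi-square and degrees of freedom from the determinant of R."""
    chi_square = -((n - 1) - (2 * p + 5) / 6) * math.log(det_r)
    df = p * (p - 1) // 2
    return chi_square, df


# Values reported in the text: N = 116, 22 variables, det(R) = 0.00002825.
chi_square, df = bartlett_sphericity(n=116, p=22, det_r=0.00002825)
print(round(chi_square), df)  # 1119 231
```

The recomputed values agree with the reported χ² = 1119 and df = 231.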

The variables in this study were measured using different scales. However, according to Field (2013), using the correlation matrix in factor analysis extraction ensures that the differences in measurement scales are accounted for.

With a sample of N = 116, it was important to test whether the sample size was adequate for the factor analysis. The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was 0.73, which indicates that the sample size was adequate for the analysis (Hutcheson and Sofroniou 1999). Further, the diagonals of the anti-image matrix were above 0.5 for all variables except PKC, and the non-diagonal values of the anti-image matrix were relatively small. Field (2013) suggests removing a variable with a low KMO value and checking whether that affects the adequacy of the sample. We verified that removing PKC did not affect the testing of the factor analysis assumptions, and therefore PKC was kept for further analysis. Finally, the communalities ranged from .493 to .919, with an average of 0.683, well above the suggested cutoff value of 0.3 (Child 2006). According to Field (2013), as communalities become higher, the importance of sample size decreases.

6.2 Exploratory Factor Analysis

A Principal Component Analysis (PCA) was conducted on the 22 variables using varimax rotation with Kaiser normalization, suppressing loadings less than 0.4. It led to the extraction of seven factors with eigenvalues greater than one. Initial eigenvalues indicated that the first three extracted factors explained 20, 16, and 9% of the variance, respectively. The fourth, fifth, sixth and seventh factors explained 6.8, 6.3, 5.3, and 4.6% of the variance, respectively, for a total of 68% of the variance explained by the extracted factors. The Kaiser criterion is known to work well when there are fewer than 30 variables (22 in our study) and communalities are larger than .7 (Field 2013). However, only seven of the 22 variables had communalities larger than 0.7, and therefore the Kaiser criterion most likely overestimated the number of factors. Therefore, we also analyzed the scree plot (see Fig. 3).
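Because the extraction is based on the correlation matrix, the total variance equals the number of variables, so each reported percentage can be converted back to an approximate eigenvalue. The sketch below does this for the seven retained factors. The percentages are the rounded values from the text, so the eigenvalues are approximate, and the variable names are illustrative.

```python
N_VARIABLES = 22
# Percent of variance explained by the seven extracted factors, as reported.
pct_variance = [20, 16, 9, 6.8, 6.3, 5.3, 4.6]

# With standardized variables, eigenvalue = (percent / 100) * number of variables.
eigenvalues = [pct / 100 * N_VARIABLES for pct in pct_variance]

# An eigenvalue of 1 corresponds to this share of the total variance.
kaiser_cutoff_pct = 100 / N_VARIABLES

retained = [ev for ev in eigenvalues if ev > 1]
print([round(ev, 2) for ev in eigenvalues])
print(round(kaiser_cutoff_pct, 2), len(retained), round(sum(pct_variance), 1))
```

Under these assumptions the seventh factor sits only just above the Kaiser cutoff (about 4.6% against a threshold of roughly 4.55%), which fits the authors' concern that the criterion may overestimate the number of factors.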

As can be seen from the scree plot, the point of inflexion occurs at the 5th data point (factor), so according to Cattell (1966) we should retain four factors. The scree plot provides a fairly reliable criterion for factor selection when the sample size is 200 or more participants (Stevens 2002), but in the case of this study (N = 116) it might underestimate the number of factors.

Based on the Kaiser criterion and the scree plot, the number of factors should be between four and seven. Since in this study we assessed five types of knowledge (TK, PK, CKM, CKS, and TPACK), confirmatory factor analysis (CFA) was conducted with five factors.

6.3 Confirmatory Factor Analysis

The purpose of the CFA was to verify the factor structure of the observed variables and to confirm that the observed variables load onto the theoretical constructs defined by the TPACK framework. A CFA with five factors was conducted on the 22 variables using varimax rotation with Kaiser normalization, suppressing loadings less than 0.4 (see Table 3).

As can be seen from Table 3, except for two variables (CKMA, which had cross-loadings, and TKE, which did not load onto any of the factors), all variables loaded onto five factors that represent the five domains of TPACK. Therefore, we omitted these two variables from the original 22 and repeated the CFA procedure with 20 variables (Table 4). The CFA yielded five independent factors with loadings ranging from 0.428 to 0.957, corresponding to five domains: TPACK, TK, PK, CKM and CKS. According to George and Mallery (2001), this range of loadings is considered acceptable to excellent. In addition, the reproduced correlation matrix showed that 37% of residuals were greater than 0.05, which is below the 50% level that would raise concern and therefore supported the good fit of the model with five factors (Field 2013).

The internal consistency and reliability of each individual instrument were tested using Cronbach’s α, and the results of the analysis are shown in Table 4. The TPACK levels rubric, CKMT and CKST had high reliabilities, with α = .96, α = .81, and α = .80, respectively. However, TKT and PKT had relatively low reliability, with α = .62 and α = .40, respectively (Table 4).
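For readers unfamiliar with the statistic, Cronbach's α for k items is α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The sketch below implements this textbook formula. The data are invented for illustration, and the text does not specify which scores were treated as items for each instrument, so this is not a reproduction of the values in Table 4.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha.

    item_scores: list of per-item score lists, each with one entry per respondent.
    """
    k = len(item_scores)
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_var_sum = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical scores of five respondents on three items.
toy_items = [
    [2, 4, 3, 5, 1],
    [3, 5, 3, 4, 2],
    [2, 5, 4, 4, 1],
]
print(round(cronbach_alpha(toy_items), 2))  # 0.94
```

A low α, such as the .40 reported for the PKT, indicates that the item variances account for most of the variance in the total score, meaning the items vary largely independently of one another.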

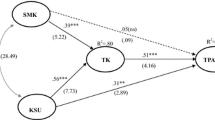

6.4 Multiple Linear Regression

In order to explore whether TK, PK, CKM and CKS are predictors for TPACK, we used multiple linear regression analysis. According to Green (1991), the sample size (N = 116 > 109) is adequate for testing individual predictors using regression analysis. Analysis of bivariate correlations between variables indicated that there were no multicollinearity threats: the highest correlation was between CKM and CKS (r = .395, p < 0.001). At the same time, no significant correlations were observed between TPACK and the four variables to be analyzed.

The Durbin-Watson statistic value was 2.006, so the assumption of independent errors was met. The data do not have collinearity issues, as confirmed by the fact that all individual VIF values were a little over 1, with an average VIF of 1.15 and a tolerance of .87. In addition, each variable had most of its variance loading onto a different dimension (PK had 81% of variance loaded onto dimension 2, TK had 74% onto dimension 5, CKM had 66% onto dimension 3, and CKS had 94% onto dimension 4), which supports the non-collinearity assumption. There were 5 cases with standardized residuals less than − 2 or larger than 2, which is 4% of the sample and within the allowed 5%.
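The diagnostics reported above can be illustrated with a short sketch, assuming NumPy. The variance inflation factor of a predictor is 1/(1 − R²), where R² comes from regressing that predictor on the others, and tolerance is its reciprocal; the Durbin-Watson statistic is the sum of squared successive residual differences divided by the sum of squared residuals. The helper names and the toy inputs are hypothetical and do not reproduce the study's data.

```python
import numpy as np

def vif(predictors):
    """Variance inflation factor for each column of an (n, k) predictor matrix."""
    X = np.asarray(predictors, dtype=float)
    n, k = X.shape
    factors = []
    for j in range(k):
        y = X[:, j]
        # Regress predictor j on an intercept plus all remaining predictors.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        residuals = y - others @ beta
        r_squared = 1 - residuals.var() / y.var()
        factors.append(1 / (1 - r_squared))
    return np.array(factors)

def durbin_watson(residuals):
    """Durbin-Watson statistic; values near 2 suggest uncorrelated errors."""
    e = np.asarray(residuals, dtype=float)
    return float(np.sum(np.diff(e) ** 2) / np.sum(e ** 2))

# Hypothetical predictors that are mutually uncorrelated, so every VIF is 1.
X_demo = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]])
vifs = vif(X_demo)

# Hypothetical residuals that alternate in sign (negative autocorrelation).
dw_alternating = durbin_watson([1, -1, 1, -1])

# Tolerance corresponding to the average VIF reported in the text.
tolerance = 1 / 1.15
print(vifs, dw_alternating, round(tolerance, 2))
```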

The model produced an R² value of 0.005; that is, only 0.5% of the variance in TPACK is accounted for by TK, PK, CKM and CKS. ANOVA confirmed that the model is not a significant fit [F(4,111) = .150, p = .962]. Analysis of the coefficients of the model further supported the conclusion that TK, PK, CKM and CKS are not predictors for TPACK (Table 5).
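The link between the reported R² and the F statistic can be checked with the standard formula F = (R²/k) / ((1 − R²)/(N − k − 1)). The sketch below uses plain Python; the function name is hypothetical, and the inputs are the rounded values reported in the text.

```python
def f_from_r_squared(r_squared, n, k):
    """Overall regression F statistic and its degrees of freedom."""
    df_model = k
    df_residual = n - k - 1
    f_value = (r_squared / df_model) / ((1 - r_squared) / df_residual)
    return f_value, df_model, df_residual

# N = 116 participants, k = 4 predictors (TK, PK, CKM, CKS), R^2 = 0.005.
f_value, df_model, df_residual = f_from_r_squared(0.005, n=116, k=4)
print(f"F({df_model},{df_residual}) = {f_value:.3f}")  # approximately 0.139
```

With the rounded R² the formula gives F ≈ 0.14 on (4, 111) degrees of freedom, close to the reported .150; a gap of this size is what rounding R² to three decimals could produce.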

7 Discussion

7.1 Instruments and Domains

The goal of this study was to analyze the relationship between the basic domains of the TPACK framework (TK, PK, and CK) and the TPACK of pre-service elementary special education teachers, and therefore it required instruments that could measure these domains independently. Examination of the existing instruments revealed that while there were plenty of reliable self-reported surveys used to evaluate the basic domains of the TPACK framework, there were no reliable external assessment tools. Therefore, the study first focused on the development of such instruments for TK, PK, and CK in mathematics and science.

The Technology Knowledge Test (TKT) needed to assess pre-service teachers’ “basic technological literacy” without connecting it to pedagogical or content knowledge. The questions were selected to address specific areas of technological literacy indicated in the course’s textbook and confirmed by experts in technology and technological literacy (Table 1). The variables were defined based on these content areas; however, confirmatory factor analysis showed that one of the variables, measuring knowledge of databases, did not load onto any of the factors and therefore was eliminated. One possible explanation is that this instrument was tested with a population of pre-service elementary school teachers who did not have any experience with databases. Even though the construct validity of the variables was confirmed by technology experts, the TKT scale showed relatively weak internal consistency (Cronbach’s α of .62) and needs further validation with larger samples.

In developing instruments to measure pre-service teachers’ PK and CK (in mathematics and science), questions were selected from released items with confirmed validity and reliability. The questions for assessing CKM and CKS came from released items of NAEP, and the values of Cronbach’s α showed high reliabilities for CKMT and CKST, confirming good internal consistency of these two tests. However, the PKT, which was developed from a practice test for the NYSTCE, had much lower reliability than expected (α = 0.40), even though official documentation reports reliability estimates for the NYSTCE tests in the range of 0.95–0.98. According to Tavakol and Dennick (2011), Cronbach’s α is sensitive to the number of items in a test, and a smaller number of items very often results in a smaller α. The number of items on the PKT was 19, about a quarter of the number of items on the actual certification exam (80 multiple-choice questions). According to the Spearman–Brown prophecy formula (Nunnally and Bernstein 1994), a reliability of .95 on the full test should have led to a value of at least .8 for α on the PKT. Nevertheless, the three variables that defined PK loaded onto one factor, suggesting that this factor assessed pedagogical knowledge.
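The Spearman–Brown estimate above can be reproduced directly. The formula predicts the reliability of a test whose length is changed by a factor n as nρ / (1 + (n − 1)ρ), where ρ is the reliability of the original test. The sketch below is plain Python; the function name is hypothetical, and the inputs are the figures quoted in the text (19 of 80 items, full-test reliability between .95 and .98).

```python
def spearman_brown(reliability, length_ratio):
    """Predicted reliability after changing test length by `length_ratio`."""
    return length_ratio * reliability / (1 + (length_ratio - 1) * reliability)

length_ratio = 19 / 80  # PKT items relative to the full certification exam

predicted_low = spearman_brown(0.95, length_ratio)   # approximately 0.819
predicted_high = spearman_brown(0.98, length_ratio)  # approximately 0.921
print(round(predicted_low, 3), round(predicted_high, 3))
```

Both predictions are well above the observed α = 0.40, so test length alone does not appear to account for the low reliability of the PKT.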

Decisions on the number of factors should be based on theory and empirical research (Chai et al. 2010). The results of the EFA suggested that the number of factors could be between 4 and 7. The theory suggests that TK, PK, CKM, CKS, and TPACK should be considered as different constructs (Mishra and Koehler 2006), and therefore five factors were used for the CFA. The loadings ranged from 0.428 to 0.957. According to George and Mallery (2001), this range is considered acceptable to excellent.

As a result of the CFA, another variable, CKMA, was removed because it loaded onto two factors. The decision to remove this variable is supported by Schonrock-Adema et al. (2009), who state that a variable that loads on more than one factor should be removed if the cross-loading is greater than 0.40. Analysis of the correlation matrix shows that CKMA had statistically significant correlations ranging from .384 to .467 (p < .001) with all three variables for CKS, and that led to the cross-loading. CKMA measured knowledge in the content area of number properties and operations. Further analysis is needed to interpret why this variable loaded almost equally onto the mathematics and science factors.

Based on the results of the final CFA after two variables were removed, it can be concluded that the four basic domains of TPACK and the TPACK domain itself could be independently measured using this study’s instruments. Further analysis using multiple linear regression showed that there were no significant correlations between TPACK and TK, PK and CK (in mathematics and science). The testing of the model also confirmed that TK, PK, and CK (in mathematics and science) were not predictors for TPACK.
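The two removal rules applied in this study (a variable with no loading above the cutoff, and a variable with loadings above the cutoff on more than one factor) can be sketched as a simple screening step. The example assumes NumPy; the variable names, loadings, and the function `flag_variables` are hypothetical and are not the values from Table 3.

```python
import numpy as np

def flag_variables(loadings, names, cutoff=0.40):
    """Flag variables with no salient loading or with cross-loadings above the cutoff."""
    flags = {}
    for name, row in zip(names, np.abs(np.asarray(loadings, dtype=float))):
        salient = int(np.sum(row > cutoff))
        if salient == 0:
            flags[name] = "no salient loading"
        elif salient > 1:
            flags[name] = "cross-loading"
    return flags

# Hypothetical rotated loadings for three variables on two factors.
names = ["VAR_A", "VAR_B", "VAR_C"]
loadings = [[0.75, 0.10],   # loads cleanly on factor 1
            [0.45, 0.48],   # loads on both factors
            [0.20, 0.15]]   # loads on neither factor

flags = flag_variables(loadings, names)
print(flags)
```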

The practical application of this study is the validation of instruments to measure the TK, PK, and CK (in mathematics and science) of pre-service special education elementary school teachers. The results of the factor analysis are a good indication that the developed instrument is a reliable measure of the TK, PK, and CK (in mathematics and science) of graduate-level pre-service elementary school teachers. The results of the multiple regression also suggest that the basic domains of TPACK (TK, PK and CK) are not predictors for TPACK and therefore can be developed and measured independently. According to Koehler and Mishra (2009), TPACK is an emergent form of knowledge that develops from the interaction between TK, PK and CK and goes beyond these basic components. TPACK is different from knowledge of content, pedagogy and technology individually. TK, PK and CK are independent types of knowledge; developing each of them independently does not guarantee the development of pre-service teachers’ TPACK.

Based on the results, by increasing pre-service teachers’ TK, PK, and CK, schools of education do not necessarily increase pre-service teachers’ TPACK. Therefore, teacher preparation programs need to focus on developing teachers’ TPACK in addition to developing teachers’ basic knowledge, such as TK, PK and CK, in order to help new teachers gain the knowledge and skills to effectively integrate technology in their classrooms.

8 Significance of the Study

The rapid transition to digital learning in America’s schools challenges teacher preparation programs to prepare pre-service teachers to use instructional technology. At the same time, many pre-service teachers do not feel adequately prepared to use technology effectively in their classrooms after they graduate (Gray et al. 2010). The TPACK framework may provide guidance in redesigning teacher preparation programs to ensure that they integrate technology (Bos 2011; Özgün-Koca et al. 2010; Kaplon-Schilis and Lyublinskaya 2015), but there is a need for instruments that make it possible to measure the effectiveness of these programs by assessing pre-service teachers’ knowledge of using technology in the classroom.

This study developed instruments that could independently measure each basic domain of TPACK. To the best of our knowledge, this is the first study that developed and validated an instrument for external assessment of the TK, PK, CK (in mathematics and science), and TPACK of pre-service special education elementary school teachers. Furthermore, to the best of our knowledge, this study is the first to provide initial evidence that TPACK is an independent construct from TK, PK, and CK in the context of integrating technology into the teaching of mathematics and science by special education pre-service elementary school teachers. Study results also suggest that the four basic domains of TPACK are not predictors for TPACK.

The limitations of the study are the relatively small sample size (N = 116) and the specific population selected for the study. The study instruments were designed with a specific purpose in mind: examining the basic knowledge of pre-service elementary school special education teachers and their development of TPACK. The population of special education elementary school teachers used in the study consisted mostly of females (93%). According to the Schools and Staffing Survey conducted by the US Department of Education, while 89.3% of all teachers in public elementary schools were female, females composed 96% of special education elementary teachers, which is consistent with the population used in this study (Goldring et al. 2013). These findings need to be further confirmed with a larger sample and different populations. The use of the instruments in different settings and contexts needs to be further investigated (e.g. with elementary school general education teachers). Future studies will also focus on determining the factors that contribute to pre-service teachers’ TPACK development, including exploring the relationships among all seven domains of the TPACK framework.

References

Abbitt, J. T. (2011). Measuring technological pedagogical content knowledge in preservice teacher education: A review of current methods and instruments. Journal of Research on Technology in Education, 43(4), 281–300.

Archambault, L. (2016). Exploring the use of qualitative methods to examine TPACK. In Handbook of technological pedagogical content knowledge for educators (pp. 65–86).

Archambault, L. M., & Barnett, J. H. (2010). Revisiting technology pedagogical content knowledge: Exploring the TPACK framework. Computers & Education, 55, 1656–1662.

Baran, E., Chuang, H. H., & Thompson, A. (2011). TPACK: An emerging research and development tool for teacher educators. TOJET: The Turkish Online Journal of Educational Technology, 10(4), 370–377.

Bos, B. (2011). Professional development for elementary teachers using TPACK. Contemporary Issues in Technology & Teacher Education, 11(2), 167–183.

Brantley-Dias, L., & Ertmer, P. A. (2013). Goldilocks and TPACK: Is the construct ‘just right?’. Journal of Research on Technology in Education, 46(2), 103–128.

Cattell, R. B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1, 245–276.

Chai, C. S., Koh, J. H. L., & Tsai, C. C. (2010). Facilitating preservice teachers’ development of technological, pedagogical, and content knowledge (TPACK). Educational Technology & Society, 13(4), 63–73.

Chai, C. S., Ng, E. M., Li, W., Hong, H. Y., & Koh, J. H. (2013). Validating and modelling technological pedagogical content knowledge framework among Asian preservice teachers. Australasian Journal of Educational Technology, 29(1), 41–53.

Cherner, T., & Smith, D. (2016). Reconceptualizing TPACK to meet the needs of twenty-first-century education. The New Educator. https://doi.org/10.1080/1547688x.2015.1063744.

Child, D. (2006). The essentials of factor analysis. New York, NY: Continuum.

Dawson, V. (2008). Use of information and communication technology by early career science teachers in Western Australia. International Journal of Science Education, 30(2), 203–219.

Dong, Y., Chai, C. S., Sang, G. Y., Koh, J. H. L., & Tsai, C. C. (2015). Exploring the profiles and interplays of pre-service and in-service teachers’ technological pedagogical content knowledge (TPACK) in China. Journal of Educational Technology & Society, 18(1), 158–169.

Field, A. (2013). Discovering statistics using SPSS. Thousand Oaks, CA: Sage Publications.

George, D., & Mallery, P. (2001). SPSS for windows. Needham Heights, MA: Allyn & Bacon.

Goldenberg, E. P. (2000). Thinking (and talking) about technology in math classrooms. Issues in Mathematics Education, 1–8.

Goldring, R., Gray, L., & Bitterman, A. (2013). Characteristics of public and private elementary and secondary school teachers in the United States: Results from the 2011–12 Schools and Staffing Survey (NCES 2013-314). U.S. Department of Education. Washington, DC: National Center for Education Statistics. Retrieved December 23, 2018 from http://nces.ed.gov/pubsearch.

Graham, C. R. (2011). Theoretical considerations for understanding Technological Pedagogical Content Knowledge (TPACK). Computers & Education, 57(3), 1953–1969.

Gray, L., Thomas, N., & Lewis, L. (2010). Teachers’ use of educational technology in US public schools: 2009. First Look. NCES 2010-040. National Center for Education Statistics.

Green, S. B. (1991). How many subjects does it take to do a regression analysis? Multivariate Behavioral Research, 26, 499–510.

Grossman, P. L. (1989). A study in contrast: Sources of pedagogical content knowledge for secondary English. Journal of Teacher Education, 40(5), 24–31.

Harris, J., Grandgenett, N., & Hofer, M. J. (2010). Testing a TPACK-based technology integration assessment rubric. Society for Information Technology and Teacher Education.

Hofer, M., & Grandgenett, N. (2012). TPACK development in teacher education: A longitudinal study of preservice teachers in a secondary MA Ed. program. Journal of Research on Technology in Education, 45(1), 83–106.

Hughes, J. (2008). In-service teachers’ use and development of TPCK within technology inquiry groups. In Symposium paper presented at the annual American educational research association conference, New York, USA.

Hutcheson, G., & Sofroniou, N. (1999). The multivariate social scientist. London: Sage.

Hutchison, A., & Reinking, D. (2011). Teachers’ perceptions of integrating information and communication technologies into literacy instruction: A national survey in the U.S. Reading Research Quarterly, 46(4), 308–329.

Kaplon-Schilis, A., & Lyublinskaya, I. (2015). Exploring changes in technological knowledge (TK), pedagogical knowledge (PK), content knowledge (CK) and TPACK of pre-service special education teachers taking technology-based pedagogical course. In Proceedings of society for information technology & teacher education international conference 2015 (pp. 2936–2943). Chesapeake, VA: Association for the Advancement of Computing in Education (AACE).

Kartal, T., & Afacan, Ö. (2017). Examining Turkish pre-service science teachers’ Technological Pedagogical Content Knowledge (TPACK) based on demographic variables. Journal of Turkish Science Education, 14(1), 1–22.

Koehler, M. J., & Mishra, P. (2009). What is technological pedagogical content knowledge? Contemporary Issues in Technology and Teacher Education, 9(1), 60–70.

Koehler, M. J., Shin, T. S., & Mishra, P. (2011). How do we measure TPACK? Let me count the ways. In R. Ronau, C. Rakes, & M. Niess (Eds.), Educational technology, teacher knowledge, and classroom impact: A research handbook on frameworks and approaches (pp. 16–31). Hershey, PA: IGI Global.

Koh, J. H. L., Chai, C. S., & Tsai, C. C. (2014). Demographic factors, TPACK constructs, and teachers’ perceptions of constructivist-oriented TPACK. Journal of Educational Technology & Society, 17(1), 185–196.

Lee, M. H., & Tsai, C. C. (2010). Exploring teachers’ perceived self-efficacy and technological pedagogical content knowledge with respect to educational use of the World Wide Web. Instructional Science, 38(1), 1–21.

Lin, T. C., Tsai, C. C., Chai, C. S., & Lee, M. H. (2013). Identifying science teachers’ perceptions of technological pedagogical and content knowledge (TPACK). Journal of Science Education and Technology, 22(3), 325–336.

Lyublinskaya, I., & Tournaki, N. (2012). The effects of teacher content authoring on TPACK and on student achievement in algebra: Research on instruction with the TI-Nspire handheld. In R. Ronau, C. Rakes, & M. Niess (Eds.), Educational technology, teacher knowledge, and classroom impact: A research handbook on frameworks and approaches (pp. 295–322). Hershey, PA: IGI Global.

Lyublinskaya, I., & Tournaki, N. (2014). A study of special education teachers’ TPACK development in mathematics and science through assessment of lesson plans. Journal of Technology and Teacher Education, 22(4), 449–470.

Mishra, P., & Koehler, M. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. The Teachers College Record, 108(6), 1017–1054.

Mishra, P., Koehler, M. J., & Kereluik, K. (2009). Looking back to the future of educational technology. TechTrends, 53(5), 48–53.

Mouza, C. (2016). Developing and assessing TPACK among pre-service teachers. Handbook of technological pedagogical content knowledge (TPACK) for educators (pp. 169–188).

Mueller, J. (2010, March). Observational measures of technological, pedagogical, content knowledge (TPACK) in the integration of laptop computers in elementary writing instruction. In Society for information technology & teacher education international conference (pp. 3907–3910). Association for the Advancement of Computing in Education (AACE).

Niess, M. L. (2005). Preparing teachers to teach science and mathematics with technology: Developing a technology pedagogical content knowledge. Teaching and Teacher Education, 21(5), 509–523.

Niess, M. L. (2008). Knowledge needed for teaching with technologies–Call it TPACK. AMTE Connections, 17(2), 9–10.

Niess, M. L. (2011). Investigating TPACK: Knowledge growth in teaching with technology. Journal of Educational Computing Research, 44(3), 299–317.

Niess, M. L., Ronau, R. N., Shafer, K. G., Driskell, S. O., Harper, S. R., Johnston, C., et al. (2009). Mathematics teacher TPACK standards and development model. Contemporary Issues in Technology and Teacher Education, 9(1), 4–24.

Niess, M. L., van Zee, E. H., & Gillow-Wiles, H. (2010). Knowledge growth in teaching mathematics/science with spreadsheets: Moving PCK to TPACK through online professional development. Journal of Digital Learning in Teacher Education, 27(2), 42–52.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory. New York, NY: McGraw-Hill.

O’Bannon, B. W., & Puckett, K. (2010). Preparing to use technology: A practical guide to curriculum integration. Boston, MA: Pearson.

Ottenbreit-Leftwich, A., Glazewski, K., Newby, T., & Ertmer, P. (2010). Teacher value beliefs associated with using technology: Addressing professional and student needs. Computers & Education, 55(3), 1321–1335.

Özgün-Koca, S., Meagher, M., & Edwards, M. (2010). Preservice teachers’ emerging TPACK in a technology-rich methods class. Mathematics Educator, 19(2), 10–20.

Pamuk, S., Ergun, M., Cakir, R., Yilmaz, H. B., & Ayas, C. (2015). Exploring relationships among TPACK components and development of the TPACK instrument. Education and Information Technologies, 20(2), 241–263.

Pohlmann, J. T. (2004). Use and interpretation of factor analysis in The Journal of Educational Research: 1992–2002. The Journal of Educational Research, 98(1), 14–23.

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK) the development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123–149.

Schonrock-Adema, J., Heijne-Penninga, M., Van Hell, E. A., & Cohen-Schotanus, J. (2009). Necessary steps in factor analysis: enhancing validation studies of educational instruments. Medical Teacher, 31, e226–e232.

Shinas, V. H., Yilmaz-Ozden, S., Mouza, C., Karchmer-Klein, R., & Glutting, J. J. (2013). Examining domains of technological pedagogical content knowledge using factor analysis. Journal of Research on Technology in Education, 45(4), 339–360.

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14.

Stevens, J. P. (2002). Applied multivariate statistics for the social sciences (4th Ed.). Hillsdale, NJ: Erlbaum.

Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s alpha. International Journal of Medical Education, 2, 53–55.

Tondeur, J., van Braak, J., Sang, G., Voogt, J., Fisser, P., & Ottenbreit-Leftwich, A. (2012). Preparing pre-service teachers to integrate technology in education: A synthesis of qualitative evidence. Computers & Education, 59(1), 134–144.

U.S. Department of Education. (2017). Reimagining the role of technology in higher education: A supplement to the National Education Technology Plan. Washington, D.C.: Author, Office of Educational Technology. Retrieved January 31, 2018 from https://tech.ed.gov/files/2017/01/Higher-Ed-NETP.pdf

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. For this type of study formal consent is not required.

Cite this article

Kaplon-Schilis, A., Lyublinskaya, I. Analysis of Relationship Between Five Domains of TPACK Framework: TK, PK, CK Math, CK Science, and TPACK of Pre-service Special Education Teachers. Tech Know Learn 25, 25–43 (2020). https://doi.org/10.1007/s10758-019-09404-x

DOI: https://doi.org/10.1007/s10758-019-09404-x