Abstract

In today’s manufacturing industry more than one performance criteria are considered for optimization to various degrees simultaneously. To deal with such hard competitive environments it is essential to develop appropriate multicriteria scheduling approaches. In this paper consideration is given to the problem of scheduling n independent jobs on a single machine with due dates and objective to simultaneously minimize three performance criteria namely, total weighted tardiness (TWT), maximum tardiness and maximum earliness. In the single machine scheduling literature no previous studies have been performed on test problems examining these criteria simultaneously. After positioning the problem within the relevant research field, we present a new heuristic algorithm for its solution. The developed algorithm termed the hybrid non-dominated sorting differential evolution (h-NSDE) is an extension of the author’s previous algorithm for the single-machine mono-criterion TWT problem. h-NSDE is devoted to the search for Pareto-optimal solutions. To enable the decision maker for evaluating a greater number of alternative non-dominated solutions, three multiobjective optimization approaches have been implemented and tested within the context of h-NSDE: including a weighted-sum based approach, a fuzzy-measures based approach which takes into account the interaction among the criteria as well as a Pareto-based approach. Experiments conducted on existing data set benchmarks problems show the effect of these approaches on the performance of the h-NSDE algorithm. Moreover, comparative results between h-NSDE and some of the most popular multiobjective metaheuristics including SPEA2 and NSGA-II show clear superiority for h-NSDE in terms of both solution quality and solution diversity.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Single machine scheduling with due dates performance criteria has attracted the research attention for over than 50 years thanks to numerous practical implications. Especially, the combination of tardiness and earliness performance criteria in a compound objective function seems to be quite natural since it reflects the just-in-time (JIT) objective. JIT is a production planning and control philosophy that seeks to eliminate waste. Completing a job before or after the due date incurs additional cost, which is a waste of resources. What is desirable is to complete each job as close to its due date as possible, neither too early nor too late. Early jobs result in inventory holding costs, while late jobs result to penalties such as loss of customer goodwill and loss of orders. Hence, a major objective in the context of JIT approach is to minimize both earliness and tardiness costs. Scheduling problems with such objective have found numerous real-world applications in various areas including manufacturing (Pinedo 2016), supply chain management (Kojima et al. 2008), real-time computer systems (Jozefowska 2007) and building construction (Polat and Arditi 2005).

Three of the most frequently due dates performance criteria appear in the literature are: total weighted tardiness (∑wjTj), maximum tardiness (Tmax) and maximum earliness (Emax). The first two are regular criteria, that is, their value cannot be decreased by inserting idle time in the schedule; while the latter is a non regular criterion. When we deal with non regular criteria such as the maximum earliness or the total (weighted) earliness it might be interesting to insert machine idle time into the schedule since this could result to lower values of the objective function (Baker 1974; Baker and Trietsch 2009; Pinedo 2016). As far as a single criterion in the objective function is concerned, it is known that the problems 1||Tmax (i.e., minimizing maximum tardiness on a single machine) and 1||Emax (minimizing maximum earliness on a single machine) are both very easily solved using the earliest due date (EDD) and minimum slack (MS) rules respectively (Baker 1974). EDD sequence the jobs in non-decreasing order of their due date, while MS sequence the jobs in non-decreasing order of their slack time. Besides, when the objective is to minimize total weighted tardiness (TWT) the problem becomes NP-hard (Lawer 1977; Lenstra et al. 1977). Hence, the combination of TWT in the objective function with any other criterion results to an intractable combinatorial problem and therefore the best way to proceed is by the means of heuristics.

This paper considers a multicriteria version of the single-machine TWT scheduling problem which combines TWT with maximum tardiness and maximum earliness in the objective function. Formally the problem can be written as \( 1| \, |F\left( {\sum {w_{j} T_{j} } ,T_{{\rm max} } ,E_{{\rm max} } } \right) \). Denoting the problem of minimizing a composite objective function of the three criteria where the only foreknowledge is that this function is nondecreasing in regard to all the arguments (Hoogeveen 2005). In other words, neither criterion can be improved upon without making the others worse. We are interested for a solution approach devoted to the determination of the Pareto-optimal solutions of this tricriteria problem. Considering these three performance measures simultaneously is of great importance in a number of ways. Let us discuss such an application scenario for a company in the textiles industry (this scenario is an adaptation of the problems discussed in Woolsey 1992; Chen and Bulfin 1994; Pinedo 2016). When the salespeople of the company take customer orders, they promise the job will be ready on a specific date. Salespeople are paid commissions on their orders; full commissions are paid for on-time orders, but the commission decreases to some minimum value as the tardiness increases. Some orders are more critical than others and need more careful consideration. These orders are given higher priority. From the perspective of the sales force, minimizing maximum tardiness will be the fairest measure, since the person penalized the most for a tardy job is hurt as little as possible. However, such a schedule could have large sum of (weighted) tardiness due to the many tardy jobs, which is not attractive from a customer perspective. Minimizing the total (weighted) tardiness makes as many customers happy as possible, but quite likely, some orders are very tardy, resulting in some very unhappy salespeople and customers. From the company side perspective however there is also another important concern. Early completion of an order is not desirable because it results to holding inventory costs. In this case, the finished goods of the early job (order) need to be kept in the warehouses for a number of days equal to the earliness of the job. Due to the high unary holding costs of the finished goods per period, minimizing maximum earliness is also a fundamental objective for the company, since the total holding cost for the most early job is as little as possible. Unfortunately, schedules which perform well with respect to the tardiness measures do poorly with respect to the earliness measure and vice versa.

To the best of our knowledge, this is the first study dealing with this particular scheduling problem. This observation stems from the literature review performed (see Sect. 2) with the aim of positioning the problem within the relevant research field. The outcome of this review is summarized in Table 1. Each paper is categorized according to the performance criteria considered for optimization. This review allowed us to draw the following general observations: First, the majority of the existing research papers addressed bicriteria problems in which the primary objective is the minimization of one of the three criteria under consideration and secondary objective the minimization of the number of tardy jobs. Second, some works studied the problem of minimizing both Tmax and Emax. Third, no previous work in the literature studied either Tmax or Emax in combination with TWT.

In this context, we provide an adaptation of our previous heuristic algorithm appeared in (Nearchou 2012) to the new multicriteria environment termed to herein as h-NSDE (the hybrid non-dominated differential evolution) algorithm. The algorithm developed by Nearchou (2012) termed as DE-VNS (Differential Evolution with Variable Neighborhood Search) constitutes one of the best existing solution algorithms for the TWT single-machine scheduling problem. In particular, DE-VNS showed a remarkable high solution quality performance over the most famous TWT benchmarks scheduling problems (Beasley 1990)Footnote 1 which include three data set instances with 40, 50 and 100 jobs. Each data set contains 125 test instances ranging from less to more restricted against jobs’ due dates. DE-VNS found to be a powerful optimization heuristic for the TWT problem able to obtain in reasonable short computational times all the existing best known solutions for these benchmarks. Briefly, the DE component of the algorithm (a population heuristic for optimization over continuous search spaces) is used as global optimizer for solution evolution guiding the search toward the optimal regions of the search space. The VNS component (a random local search heuristic based on the systematic change of neighborhood) is used as a local optimizer performing a sequence of local changes on individual DE solutions until a local optimum is found.

The remainder of the paper is organized as follows: Sect. 2 provides a comprehensive state-of-the-art literature review on the related research field. Section 3 states the problem and describes the multiobjective optimization approaches employed to tackle the problem. Section 4 presents and analyzes the developed h-NSDE solution algorithm. Computational results concerning the performance of h-NSDE and comparisons with three well-known multiobjective evolutionary algorithms namely, SPEA2, NSGA-II and L-NSGA respectively are provided (together with a statistical performance analysis) in Sect. 5. It is highlighted that, the latter three algorithms have never before applied to the problem under consideration. Finally, conclusions and directions for future work are pointed out in Sect. 6.

2 Literature review

This section provides a comprehensive review about previous works on earliness/tardiness multicriteria scheduling on a single machine. We concentrated our bibliographic search in journal papers and conferences proceedings. The review is limited to works considering TWT, Tmax and Emax performance criteria. Moreover, the problem addressed involves the scheduling of a set of independent jobs simultaneously available for processing with known processing times and known (general) due dates. Jobs are processed one at a time without interruption. Machine setup times are sequence independent and are included in the jobs’ processing times. In the analysis we start with the TWT problem and continue with the discussion of critical works concerning combinations of the three criteria.

The single-machine TWT scheduling problem is considered to be intractable i.e., requirements in computer solution time increase exponentially with the number of the jobs to be sequenced. Lawer (1977) and Lenstra et al. (1977) have proven the NP-hardness of the problem. In particular, problem instances with more than 50 jobs cannot be solved to optimality with implicit enumerative techniques such as dynamic programming and branch-and-bound algorithms (Abdul-Razaq et al. 1990; Du and Leung 1990). In the last four decades several heuristic techniques have been proposed for solving the problem characterized as either constructive or improvement. Constructive heuristics (Smith 1956; Baker 1974; Morton et al. 1984; Potts and Van Wassenhove 1991) generate a schedule from scratch by fixing a job in a position at each step according to some simple or complex rule. Due to the poor performance of simple constructive methods especially for large-sized weighted tardiness problems the research interest have been focused (after the decade of 1980s) on improvement heuristics (Potts and Van Wassenhove 1991). Improvement heuristics start from a solution generated by some sequence generating technique and using suitable schemes try to improve this solution with the hope of approaching the global optimum. Improvement methods are generally based on modern heuristics (known as metaheuristics) such as, simulated annealing (Matsuo et al. 1989; Potts and Van Wassenhove 1991; Crauwels et al. 1998), genetic algorithms (GAs) (Crauwels et al. 1998; Kellegoz et al. 2008; Chou 2009), tabu search (Crauwels et al. 1998; Bilge et al. 2007), ant-colony optimization (Bauer et al. 1999), iterated local search (Congram et al. 2002), particle swarm optimization (Tasgetiren et al. 2006), VNS (Wang and Tang 2009) and hybrid solution methods such as the DE-VNS algorithm proposed by Nearchou (2012). An interesting survey on heuristic methods on both weighted and un-weighted tardiness scheduling problems can be found in (Sen et al. 2003). While more comprehensive, but somewhat dated review is given in (Abdul-Razaq et al. 1990). To our knowledge, no other more recent surveys on TWT problem are reported in the literature.

Various bicriteria tardiness/earliness scheduling problems have been addressed using the idea of Pareto-optimal schedule. In an early work, Sen and Gupta (1983) considered the scheduling problem of minimizing a linear combination of flow times and Tmax on a single machine and presents a branch-and-bound technique to arrive at an optimal solution. Nelson et al. (1986) proposed a multicriteria treatment of solution procedures which utilize branch-and-bound method to minimize Tmax as well as mean flow time and number of tardy jobs. Wu et al. (2000) studied the problem involving minimization of the total earliness and Tmax. Four dominant properties for the precedence relationship between jobs in a search for an optimal solution were proposed. The dominance properties were implemented in a branch-and-bound algorithm to facilitate the search for an optimal schedule. Furthermore, a heuristic algorithm was developed to overcome the inefficiency of the branch-and-bound algorithm when addressing large problem instances. Huo et al. (2007) considered the problem of minimizing the weighted Tmaxand the number of tardy jobs. The authors first gave NP-hardness proofs for the scheduling problems when either one of the two criteria is the primary criterion and the other one is the secondary criterion. Then, they gave polynomial-time algorithms for some special cases and proposed heuristics for the general case.

The first result involving minimizing simultaneously Tmax and Emax is due to Garey et al. (1988). The authors presented an O(nlogn) algorithm to check whether for a given threshold value y there exists a feasible schedule such that each job j is executed in the interval \( \left[ {d_{j} - p_{j} - y, \, d_{j} + y} \right] \). With pj and dj being the processing time and the due date of job j respectively. Hoogeveen (1996) also studied a combination of Tmax and Emax on a single machine. The author generalizes Emax to a criterion termed promptness \( P_{{\rm max} } = \hbox{max} \left( {st^{\prime}_{j} - st_{j} ,0} \right) \) where \( st^{\prime}_{j} \) is a preferred start time for job j and stj its actual start time in the generated schedule. Later, Sourd (2001) studied a similar minimization problem in which Tmax is generalized to a maximum cost, and maximum promptness is generalized to hmax = max{hj(stj)}. Each of the functions hj is assumed to be nonincreasing in stj. In addition, much more research studies have been performed on the problem of minimizing some function of total earliness and total tardiness. Du and Leung (1990) have shown that \( 1| \, |\sum {T_{j} } \) is NP-hard. As a consequence, all the earliness/tardiness problems without simplifying assumptions are NP-hard too. Therefore, the determination of the correct sequence to execute the jobs is now not enough since it may be advantageous to insert idle time in the schedule. Some researchers have focused on solving this sub-problem.

A number of researchers considered the dual objective of minimizing Emax and number of tardy jobs. The first results are due to Guner et al. (1998). A branch-and-bound algorithm was presented which solves the problem (with instances up to 25 jobs) to optimality by connecting Moore’s algorithm. Azizoglu et al. (2003) addressed the problem under the context that idle time inserted in the schedule is not allowed. First, they examined the problem of minimizing Emax while keeping the number of tardy jobs to its minimum value. Afterwards, they developed a general procedure to find an efficient schedule minimizing a composite function of the two criteria by evaluating only a small fraction of the efficient solutions. Jolai et al. (2007) addressed the problem through the use of GAs. Four versions of the standard GA were evaluated differ in the way the individual solutions of the initial population are created. A mechanism was employed for seeding the initial population with improved solutions generated by some known simple constructive rules. Molaee et al. (2010) developed two procedures namely a heuristic algorithm based on the beam search strategy and an exact method based on the branch-and-bound algorithm to tackle the problem. Later, Molaee et al. (2011) studied the same problem in the context of an unavailability period. To determine the Pareto frontier the authors developed a solution method that combines a heuristic algorithm together with a branch-and-bound technique based on a binary search tree.

The problem of minimizing Emax and total flow time was studied by Koksalan et al. (1998) and Koksalan and Keha (2003). Koksalan et al. (1998) addressed the problem by the means of heuristics. In particular, they developed heuristic procedures for generating all the efficient sequences for the cases where machine idle time is either allowed or not allowed. Koksalan and Keha (2003) used a GA to solve two different versions of the bi-criteria scheduling problem: minimizing total flow time and Emax, and minimizing total flow time and number of tardy jobs. For the first problem, the authors developed a simple heuristic that produces an approximately efficient solution for each possible value the number of tardy jobs can take over the set of efficient solutions. The second problem was addressed by a GA that further improves the solutions generated by the simple heuristic.

Recently, the most appealing performance criterion in the relevant research field seems to be TWT. This criterion has been examined in accordance to several other secondary optimization criteria including, number of tardy jobs, total weighted flow time, makespan as well as overtime utilization capacity. Wan and Yen (2009) studied the single machine problem with the aim of minimizing TWT subject to the minimum number of tardy jobs. They developed a heuristic algorithm and an exact branch-and-bound approach that could optimally solve problems rather quickly with up to 30 jobs. Tavakkoli-Moghaddam et al. (2006) presented a fuzzy goal programming approach for solving a mixed-integer model of a single-machine scheduling problem minimizing TWT and total flow time. Their solution method was constructed based on the desirability of the decision maker and tolerances considered on goal values. Tavakkoli-Moghaddam et al. (2010) addressed a fuzzy bi-criteria TWT problem with objective to minimize TWT and makespan simultaneously. A fuzzy multi-objective linear programming method was applied with respect to the overall acceptable degree of the decision maker satisfaction. Matsuo (1988) addressed the problem of simultaneously determining overtime utilization and job sequencing over a finite planning horizon in a single machine job shop environment. The goal was to find an overtime utilization level and job sequence that yields a “good” tradeoff between overtime cost and TWT. Yang et al. (2004) studied a similar problem in which the machine can process jobs in two modes: regular time and overtime at different costs per unit time. The objective was to minimize TWT and resource overtime costs. The authors presented a pseudopolynomial time algorithm for determining the optimal usage of regular time and overtime for any fixed sequence of jobs. This algorithm was then used by a local search algorithm for heuristically solving the general scheduling problem. In a recent work, Jaramillo and Erkoc (2017) considered a similar problem under a more general case where preemption is allowed. The aim was to minimize a composite cost function of TWT and overtime capacity utilization through a heuristic solution methodology. Various scenarios were studied concerning overtime usage and delays of the schedule without altering the TWT of the jobs.

The last decade, several researchers have turned their attention to metaheurstics approaches as a means for solving multiobjective single machine scheduling problems with late criteria. The majority of these works concern special variants and extensions of the problem discussed here and therefore we will not proceed with their presentation. These studies (to mention some of them) include problems with a common due date for the jobs (Guan et al. 2011; Giannopoulos and Nearchou 2018), with sequence dependent job setup times (Arroyo et al. 2011; Sioud et al. 2012), with the jobs being processed as a batch on the machine (Kashan et al. 2010), with allowable preemption (Khorshidian et al. 2011), with unequal release times and idle times inserted (Mahnam et al. 2013), with controllable task times (Kayvanfar et al. 2013) etc. More about related multicriteria issues can be found in the critical state-of-the-art reviews of Baker and Scudder (1990), Sen et al. (2003), Hoogeveen (2005) and Lei (2009) as well as in the nice book of T’kindt and Billaut (2006). Table 1 gives a synopsis of the literature related to the single-machine scheduling problem considered.

3 Problem formulation

In this section we present and discuss a general formulation for the \( 1| \, |F\left( {\sum {w_{j} T_{j} } ,T_{{\rm max} } ,E_{{\rm max} } } \right) \) scheduling problem. We also present and analyze the multiobjective optimization approaches employed to handle the problem. The performance of these approaches is investigated (later in the experimental section of the paper) in the context of the h-NSDE solution algorithm. In addition, we provide some comments about the computational complexity of the problem. In our analysis we make use of the following notation:

n | Number of jobs. |

|---|---|

j | Index for jobs, j ∊ {1, 2, …, n} |

p j | Processing time of job j |

d j | Due date for completion of job j |

w j | A positive weight related to the importance of job j |

st j | Starting time of job j |

C j | Completion time of job j |

E j | Earliness of job j |

T j | Tardiness of job j |

T max | Maximum tardiness |

E max | Maximum earliness |

σ | A schedule solution; represented by permutation on the set {1, 2, …, n} of jobs’ indices |

3.1 Formal problem definition

The problem can be formally defined as follows: a set of n independent jobs simultaneously available at time zero must be sequenced on a single machine. The machine is continuously available from time zero onwards for processing of the jobs and it is never idle. The machine can handle one job at a time. Each job j (j = 1, …, n) has a positive (constant) processing time pj which means that it has to be processed for a period of length pj. Furthermore, for each job j a due date dj and a positive weight wj is defined. The former value indicates the preferred completion time for the job while the latter express its importance. Preemption of a job is not allowed. That is, we must finish the job without interruption once it has been started. For a given schedule σ of the n jobs, the starting time of job j in σ is denoted by stj(σ) while its completion time is denoted by Cj(σ). Since preemption is not allowed, then Cj(σ) = stj(σ) + pj. The tardiness of job j is defined as Tj(σ) = max {0, Cj(σ) − dj} and denotes the lateness of the job if it fails to meet its due date. The earliness of job j (which is the opposite of its tardiness) is defined as Ej(σ) = max {0, dj − Cj(σ)}. The objective is to determine an efficient schedule solution which simultaneously minimizes the following three performance criteria:

Total weighted tardiness,

Maximum tardiness,

Maximum earliness,

where [j] in the above equations denotes the job in the jth position of schedule σ. Hence, for example, [6] = 2 means that the sixth job in σ is job 2. Consequently, T[6](σ) refers to the tardiness of the sixth job in σ, that is, the tardiness of job 2. In the rest of the paper we will refer to maximum tardiness and maximum earliness with Tmax and Emax respectively, for short.

3.2 Complexity of the problem

The objectives given in Eqs. (1) and (2) are clearly conflicting with the objective of Eq. (3). Moreover, as we explained in the Introduction section, there may be also interaction between the two tardiness objectives. In particular, minimizing Tmax may result to a schedule with many tardy jobs. This consequently could produce a large TWT value (especially in the case where the tardy jobs carry large priority weights). There may be several schedules which minimize Tmax differ to the TWT value. So, it seems reasonable to choose the one with the lowest TWT value. If instead, TWT is minimized, it is less likely that the waiting of any job will be unacceptably long, however, some jobs could be very tardy which also may be unacceptable in practice. Therefore, it seems quite critical to consider efficient schedules with respect to both tardiness measures.

Obviously, a solution minimizing all the three objectives is impossible to achieve. An ‘acceptable solution’ in such cases is called an efficient solution; which is a solution where the improvement in one objective must worsen some other objective. Bäck (1996) showed that finding the global optimum to a multicriteria optimization problem is NP-complete. Furthermore, taking into account the NP-hardness of the TWT problem one can easily realize the computational difficulties associated for the solution of the tricriteria problem under consideration. In general, an exact solution to such problems at which all decision variables satisfy the associated constraints and all of the individual objective functions have reached their associated optimal values, may not even exist. Hence, the various developed solution algorithms, are in fact, in a situation where, their attempt is to optimize each individual objective to the greatest possible extend. Usually, there is no single optimal solution to these problems but rather a set of optimal solutions known as Pareto-optimal solutions. The solutions in this set are such that they are non-dominated by any other solution of the search space of the given problem when all the objectives are considered, and moreover, they do not dominate each other in the set. Our attempt in this study is to determine a set of Pareto-optimal solutions for the \( 1| \, |F\left( {\sum {w_{j} T_{j} } ,T_{{\rm max} } ,E_{{\rm max} } } \right) \) problem.

3.3 Handling the multiobjective optimization problem

Three different approaches for multiobjective optimization are considered: The first one is the well-known weighted-sum approach which consists of adding all the objective functions (criteria) together into a single function using different weighting coefficients for each one of them. The second approach is based on the Choquet integral method (Marichal 2000) (also known as the fuzzy measures approach) to aggregate the interacting criteria in order to compute the evaluation score of a solution alternative. The third approach is a Pareto-based approach which takes into account the dominance relationship between the individual solutions. Below we describe the investigated multiobjective optimization approaches in detail.

3.3.1 The weighted-sum approach

According to this approach the various performance criteria are compound into a single objective function using an aggregated operator (Yager 1988). Weights (weighting coefficients) are assigned to each separate criterion and the compound objective function is set equal to the sum of the weighted criteria. Hence, using this approach the objective function (to be minimized) for our problem is formulated as:

Traditionally, the proper values for the weights are chosen once (either using a prior knowledge of the criteria or randomly) and remain fixed during the running of the solution algorithm. However, recent works in evolutionary computation field proposed other weight-adjusted methods to fully utilize the power of the population-based evolutionary search. Two such methods are the random-weight method introduced by Murata et al. (1996) and the adaptive-weight method proposed by Gen and Cheng (2000). Therefore, the three weighted-sum models for the objective function are:

Model 1

Weighted-sum function with fixed weights wq (q = 1, 2, 3)

Model 2

Weighted-sum function with random weights estimated by the relation given below. Note that, each weight wq (q = 1, 2, 3) is first assigned a random number in the range (0,1) and then recomputed by Eq. (6).

Model 3

Weighted-sum function with adaptive weights estimated by Eq. (7) below:

where z max q and z min q are respectively the maximum and the minimum values for the qth objective in the population of the individual solutions.

3.3.2 The fuzzy measures approach

The concept of fuzzy measures is a very important field in mathematics with many applications related to decision making (Murofushi and Sugeno 1989, 2000). One such major application of determining fuzzy measures is that they can provide weights for representing synergies between multiple criteria in decision making (Grabisch 1996). In this sub-section we present how fuzzy measures can be used in our multicriteria problem. We start by a short description of the basic mathematics behind fuzzy measures. We try to be self-contained as far as possible giving the most necessary definitions adapted for multicriteria decision making. More insides about this mathematical tool can be found in the excellent work of (Grabisch 1996) as well as in our previous work (Giannopoulos et al. 2012).

Let B = {B1, B2,…, Bm} be a set of alternatives solutions among which the decision maker must choose. In addition, let us consider a set of q criteria Cr = {Cr1, Cr2,…, Crq} and an association among every member of B to Cr: \( x^{i} = \left( {x_{1}^{i} ,x_{2}^{i} , \ldots ,x_{q}^{i} } \right) \in \Re^{q} \), i = 1,…, m.

Definition 1

A fuzzy measure over Cr is a monotonic set function \( u:2^{Cr} \to \left[ {0,1} \right] \) with \( u\left( \emptyset \right) = 0 \) and u(Cr) = 1.

Fuzzy measures have two main features: (a) they use weight factors for every criterion separately as well as for all the combinations among the criteria of Cr. (b) they use the discrete Choquet integral (Eq. (8)), an aggregation operator which generalizes the weighted arithmetic mean:

Definition 2

The discrete Choquet integral Cu with respect to u is defined by:

where, u ∊ FCr with FCr being the set of all fuzzy measures on Cr. (·) indicates a permutation of Cr such that xj ≤ ··· ≤ xq, and A(Crj) = {Crj,…, Crq}, \( A\left( {Cr_{q + 1} } \right) = \emptyset \).

Definition 3

The importance index or Shapley value of criterion Cri with respect to u is defined by:

where, |W| indicates the cardinal of W; and W is formed by all combinations on \( Cr\backslash \left\{ {Cr_{i} } \right\} \). These values are normalized having the property \( \sum {\varphi \left( {u,Cr_{j} } \right)} = 1 \) with which is easier for the decision maker to indicate the importance of each criterion.

Definition 4

The average interaction index between two criteria Cri and Crj with respect to the fuzzy measure u is defined by:

The interaction between two criteria is sufficiently found by the sign of \( u\left( {\left\{ {Cr_{i} ,Cr_{j} } \right\}} \right) - u\left( {\left\{ {Cr_{i} } \right\}} \right) - u\left( {\left\{ {Cr_{j} } \right\}} \right) \). If the sign is negative (positive), the criteria Cri, Crj presents a positive (negative) correlation which expresses a negative (positive) interaction. If Cri, Crj are negatively correlated then if one of them has a good value then the other usually presents a bad value and vice versa. If Cri, Crj are positively correlated then if one of them has a good value then the other usually presents a good value. If \( u\left( {\left\{ {Cr_{i} ,Cr_{j} } \right\}} \right) = u\left( {\left\{ {Cr_{i} } \right\}} \right) + u\left( {\left\{ {Cr_{j} } \right\}} \right) \) the criteria are independent. A proper modeling of interaction should take into consideration all the combinations on Cr. According to the above theory, the criteria set Cr for our multicriteria problem is defined as:

In this case, 2Cr − 2 = 6 fuzzy measures must be addressed since the fuzzy measures \( u\left( \emptyset \right) = 0 \) and u(Cr) = 1. First, the fuzzy measures \( u\left( {\left\{ {TWT} \right\}} \right), \, u\left( {\left\{ {T_{{\rm max} } } \right\}} \right), \, u\left( {\left\{ {E_{{\rm max} } } \right\}} \right) \) for each criterion, must be addressed that shows the importance for each of them. Then, the fuzzy measures for the interaction between criteria should be addressed in order to satisfy specific characteristics. In this case, the alternative solutions that will present low values for TWT, Tmaxand Emax will be favored. Therefore, the fuzzy measures for the interaction of the criteria are:

According to Eq. (12) there is strong synergy between TWT and Emax as well as between Tmaxand Emax. The objective function is therefore, determined using the discrete Choquet integral:

where \( x_{j}^{i} \) is a profile of an alternative solution \( x^{1} = \left( {x_{1}^{1} ,x_{2}^{1} ,x_{3}^{1} } \right) = \left( {x_{TWT}^{1} ,x_{{T_{max} }}^{1} ,x_{{E_{{\rm max} } }}^{1} } \right) \) and \( x_{TWT}^{1} < x_{{T_{max} }}^{1} < x_{{E_{{\rm max} } }}^{1} \). The sets for the calculation of the score according to formula (13) are shown in Table 2.

Without loss of generality, in this paper, TWT and Tmax are considered to be more important than Emax. Hence, the fuzzy measures values of these criteria should be higher than that of Emax criterion as it is shown in Table 3. Assuming no interaction between TWT and Tmax the fuzzy measure of the set {TWT, Tmax} is set equal to the sum of the fuzzy measures of each element. In addition, since there is a synergy between TWT and Emax the value of the fuzzy measure of the set {TWT, Emax} is greater than the sum of the fuzzy measures of each element and bounded in \( \left( {0.75, \, 1} \right) \). The same rule is applied for Tmax and Emax criteria.

3.3.3 The Pareto-based approach

According to this approach the objective function of each individual solution is estimated by a scheme which takes into account the properties of the dominance relation between this solution and the remaining solutions in the population. Each solution in the entire population is compared to all the others in terms of domination. There are three possible outcomes of the dominance check between two solutions say x1 and x2:

-

(a)

x1 dominates x2,

-

(b)

x1 is dominated by x2, or

-

(c)

the solutions x1 and x2 do not dominate each other.

A solution x1 is said to dominate solution x2if both the following conditions are true: (i) x1is no worse than x2 in all objectives. (ii) x1 is strictly better than x2 in at least one objective. If any of these two conditions is violated, then solution x1 does not dominate solution x2. Following this logic the fifth function assignment model employed is as follows:

Model 5

For an individual solution xi the Pareto-based objective function is estimated by:

where, a is the number of individuals dominated by xi. β is the number of individuals which dominate xi, while γ is the number of all the individual solutions in the population.

4 The h-NSDE solution algorithm

In this section, we present h-NSDE for the solution of \( 1| \, |F\left( {\sum {w_{j} T_{j} } ,T_{{\rm max} } ,E_{{\rm max} } } \right) \) scheduling problem. h-NSDE effectively constitutes an adaptation of the DE-VNS algorithm proposed by Nearchou (2012) in the new multicriteria environment. The new algorithm classified as multiobjective metaheuristic (see Carlos and Coello 2000; Jones et al. 2002 for a survey on these algorithms) combines DE to perform global exploration among a population of individual solutions, and the local improvements abilities of VNS to perform local exploitation around selected DE solutions before injecting them into the population of the next generation. The general steps of the algorithm are given below. We first present briefly the operation of the standard DE algorithm as well as the basic VNS method. Then, we give the pseudo-code of the algorithm and discuss the general logic underlying its operation.

4.1 The standard differential evolution (DE) algorithm

DE introduced by Storn and Price (1997) for optimization over continuous spaces and since then has been applied with high success on many numerical optimization problems (Ali and Törn 2004; Kaelo and Ali 2006). Recently, its application has been extended to complex manufacturing combinatorial optimization problems (COPs) with discrete decision variables, such as, the assembly line balancing problem (Nearchou 2008), and machine scheduling problems (Nearchou and Omirou 2006; Onwubolu and Davendra 2006; Tasgetiren et al. 2006).

DE utilizes NS, D-dimensional parameter vectors xi,k, i∈{1…NS} (k denotes the iteration number of the algorithm.) as a population to search Ω. The initial population (k = 0),

is taken to be uniformly distributed in Ω. At each iteration, all vectors in S are targeted for replacement. Therefore, NS competitions are held to determine the members of Sfor the next iterations. This is achieved by using mutation, crossover and acceptance operators. In the mutation phase, for each target vector \( {\mathbf{x}}_{i,k} \), i∈{1, 2, …, NS}, a mutant vector \( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \) is obtained by

where α, β, γ, i ∈{1, 2, …, NS} are mutually distinct random indices and are also different from the current target index i. \( {\mathbf{x}}_{\alpha ,k} \) is known as the base vector and F > 0 is a scaling parameter. Equation (16) is just one way to create the mutant vectors. Another quite popular variation scheme for the mutant vectors is given by,

with α, β, γ, δ being different integers within {1,2,…, NS} and \( {\mathbf{x}}_{best,k} \) denoting the best element in S at iteration k. In Eq. (17) the mutant vector is generated using four different vectors randomly selected from the population together with the population best vector.

The crossover operator is then applied to obtain the trial vector \( {\varvec{\uppsi}}_{i, \, k} \) from \( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \) and \( {\mathbf{x}}_{i,k} \). The crossover is defined by

where Ii is a randomly chosen integer in the set I, i.e., Ii ∈ Ι = {1, 2, …, D}; the superscript j represents the jth component of respective vectors; rnd ∈ (0,1) is drawn randomly for each j. The ultimate aim of the crossover rule is to obtain the trial vector \( {\varvec{\uppsi}}_{i, \, k} \) with components coming from the components of the target vector \( {\mathbf{x}}_{i,k} \) and the mutated vector \( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \). This is ensured by introducing CR and the set Ι. Notice that for CR = 1 the trial vector \( {\varvec{\uppsi}}_{i, \, k} \) is the replica of the mutated vector \( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \). The targeting process (mutation and crossover) continues until all members of S are considered. After all NS \( {\varvec{\uppsi}}_{i, \, k} \) vectors have been generated, acceptance is applied. In the acceptance phase, the cost function value at the trial vector \( f({\varvec{\uppsi}}_{i, \, k} ) \), is compared to \( f({\mathbf{x}}_{i,k} ) \), the value at the target vector and the target vector is updated using

Reproduction (mutation and crossover) and acceptance continues until some stopping conditions are met.

4.2 The variable neighborhood search (VNS) method

VNS introduced by Mladenovic and Hansen (1997) as a general metaheuristic for solving COPs. Since its invention an increased number of researchers applied VNS on a variety of complex COPs (see Hansen and Mladenovic 2001 for a comprehensive survey). The main difference behind VNS and the traditional local search methods is the use of two or more neighborhoods and the systematic change of the neighborhood during the search procedure. The general mechanism of the basic VNS undergoes the steps given below. Further modifications to the basic VNS are analyzed and discussed in (Hansen and Mladenovic 2001).

- Step 1:

-

Initialization

Select the set of neighborhood structures Nz, for z = 1, …, zmax that will be used in the search. Determine an initial solution x to start with, and set a stopping condition.

- Step 2:

-

Repeat the following until the stopping condition is met:

-

1)

Set z = 1;

-

2)

Repeat the following steps until z = zmax:

-

i.

Shaking Generate a point x′ at random from the zth neighborhood of x (x′ ∊ Nz(x));

-

ii.

Local search Apply some local search method on x′ to generate a new local optimum solution x″;

-

iii.

Move or not If x″ is better than the incumbent solution x, move there (x = x″), and continue the search with z = 1; otherwise, set z = z + 1;

-

i.

-

1)

Shaking is the procedure of generating a new solution in the neighborhood of the current solution. In particular, a random point x′ is selected from the Nz neighborhood of the current point x. There are many ways to model a neighborhood search. Two such popular neighborhood models are the adjacent pairwise interchange scheme, and swap (or exchange) scheme. In the former, two adjacent terms in x are randomly selected and interchanged; in the latter scheme, two (non adjacent) terms are randomly selected and interchanged. The solution x′ generated during shaking step is then being improved using a suitable local search heuristic (local search step). Finally, the new solution x″ created by local search heuristic is compared to that of the original solution x and accepted when its quality is higher. VNS is terminated when an appropriate user-defined stopping condition is satisfied. This condition may be a maximum permitted number of iterations, or a maximum processing time allowed, or a maximum number of iterations between two improvements, etc.

4.3 The general logic of h-NSDE

We now present and discuss the h-NSDE algorithm. h-NSDE utilizes two populations: an internal main population S with solutions created by DE and improved by VNS iteratively, and a secondary external population Q for holding the non-dominated solutions. At each iteration the two populations are combined together into a larger temporary population R. Then, a non-dominated sorting is carried out to classify the solutions in R. After this sorting, the non-dominated of them constitutes the new Pareto solutions in Q. Note that, all previous solutions in Q are deleted and replaced by the new elite solutions. Additionally, h-NSDE differs from the standard DE (see Storn and Price 1997) as well as from our previous DE-VNS algorithm (see Nearchou 2012) in the way mutation and acceptance operators are implemented. In particular, mutant vectors are created by mixing the parameters of population vectors randomly chosen from the combined population R and not only from S as traditionally. Hence, α, β, γ in Eq. (16) are integers randomly chosen so that the vectors \( {\mathbf{x}}_{\alpha ,k} ,{\mathbf{x}}_{\beta ,k} ,{\mathbf{x}}_{\gamma ,k} \in S \cup Q \, \forall k = 1,2, \ldots \) Turning to the acceptance operator, the following idea was applied: instead of performing a one-to-one comparison between each target vector \( {\mathbf{x}}_{i,k} \) (the old vector) and its associated trial vector \( {\varvec{\uppsi}}_{i, \, k} \) (the new vector) (see Eq. (18)); in h-NSDE all trials and targets vectors are combined together into a large temporary population and sorted in ascending order of their cost. The first NS vectors of the sorted temporary population constitute the vectors of the new population S. The pseudo-code of the algorithm in step-by-step format is given below. Although we have tried to avoid most mathematical formalism, the following additional notation is necessary for presenting the developed heuristic.

S | Main population of the individual solutions |

Q | Secondary population maintaining the Pareto-set solutions |

N S | Number of individual solutions in S (size of the main population) |

N Q | Maximum permitted number of solutions in Q (Pareto size) |

k | Iteration (generation) number |

k max | Maximum number of iterations |

R | Temporary population used in the mutation phase of the algorithm, R = S ∪ Q |

Φk | Temporary population used in the acceptance phase of the algorithm. Φk holds all the trial vectors of the current iteration k |

\( {\mathbf{x}}_{i,k} \) | The i-th target vector solution in S at iteration k |

\( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \) | The i-th mutant vector corresponding to \( {\mathbf{x}}_{i,k} \) at iteration k |

\( {\varvec{\uppsi}}_{i, \, k} \) | The i-th trial vector corresponding to \( {\mathbf{x}}_{i,k} \) at iteration k |

\( \sigma ({\mathbf{x}}_{i,k} ) \) | Schedule solution corresponding to the i-th target vector solution in S at iteration k |

p vns | Probability of applying VNS method on a particular solution in population S |

Algorithm 1: h-NSDE

-

Input: n, pj, dj, wj (for all j = 1, …, n), NS, NQ (NQ ≤ NS), kmax.

-

Output: Q.

begin

-

Step 1: Set iteration counter, k = 0.

-

Step 2: Generate a random initial population S of \( {\mathbf{x}}_{i,k} \) (i = 1, …, NS) solutions.

-

Step 3: Create an initial empty Pareto population, Q = {∅}.

-

Step 4: Evaluate the solutions in S.

-

Step 5: Combine S and Q populations and create population R = S ∪ Q.

-

Step 6: Perform a non-dominated sorting of the solutions in R and copy the non-dominated of them to population Q.

-

Step 7: For each target vector solution \( {\mathbf{x}}_{i,k} \)(i = 1, …, NS) in S generate a mutant vector \( {\mathbf{\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{x} }}_{i,k} \) using Eq. (17) and a trial vector \( {\varvec{\uppsi}}_{i, \, k} \) using Eq. (18). Choose vectors \( {\mathbf{x}}_{\alpha ,k} ,{\mathbf{x}}_{\beta ,k} ,{\mathbf{x}}_{\gamma ,k} ,{\mathbf{x}}_{\delta ,k} \) in Eq. (17) randomly from population R. Save each trial vector \( {\varvec{\uppsi}}_{i, \, k} \)(i = 1, …, NS) into a temporary population Φk.

-

Step 8: Evaluate the solutions in Φk.

-

Step 9: Combine S and Φk populations into a single temporary population \( \Phi^{\prime} = S \cup {\Phi}_k \).

-

Step 10: Create S of the new iteration k + 1 from Φ′ as follows: first sort the solutions in Φ′ in ascending order of their cost. Then, copy the top NS solutions of Φ′ into S.

-

Step 11: Alter each solution in S by VNS heuristic with probability pvns.

-

Step 12: if k ≤ kmaxthen go to Step 5.

-

Step 13: Return population Q

end;

Algorithm 1 starts (Steps 1–3) by generating two initial populations of individual solutions termed S and Q respectively. The former is the actual evolving population of the algorithm. That is, it is the active pool with the genetic material (floating-point vectors) for creating new more robust vector solutions from iteration to iteration. Initially, the solutions in S are generated randomly. Population Q serves as a storage area for maintaining the non-dominated solutions from S. In Step 4 the solutions \( {\mathbf{x}}_{i,k} \) of the main population S are evaluated. As we have already explained each \( {\mathbf{x}}_{i,k} \) is a floating-point vector. Evaluating an individual solution corresponds to the computation of its cost (a measure related to the schedule solution \( \sigma ({\mathbf{x}}_{i,k} ) \) corresponding to vector \( {\mathbf{x}}_{i,k} \) and the associated values of the three performance criteria:∑ wjTj, Tmax, Emax). Note that, every floating-point vector is mapped to an actual schedule solution using the sub-range encoding method appeared in Nearchou (2012). This method is demonstrated in the “Appendix” of this study. The cost of each schedule solution is estimated using one of the cost function models formulation described in Sect. 3.3.

A cycle of iterations is then applied to S (Steps 5-11) as in the following. at each iteration, first S and Q populations are combined into a single set R. Then, a non-dominated sorting of the solutions in R is performed. In this sorting each solution i ∊ R is compared with every other solution in R for non-domination. The non-dominated solutions of the sorted population R constitute the non-dominated set Q (Step 6). Algorithm 2 below describes the way population Q is updated in each iteration.

In Step 7 a set of trial vectors are created and maintained in a temporary population Φk. Here, we should report that, the appropriate settings for F and CR control parameters (necessary in Eqs. (17) and (18)) are dynamically estimated during the evolution of the algorithm using a suitable self-adapted control scheme (described in Sect. 5.2 below). The solutions in \( {\Phi}_k \) are then evaluated (Step 8) and combined (Step 9) with the solutions of the current population S to create the solutions of the new S of the next iteration k + 1 (Step 10). Finally, (Step 11) each member of the new S undergoes improvement using a VNS procedure. VNS is applied to each floating-point vector solution in S with probability pvns. This probability is dynamically estimated in the genotypic level of the algorithm being a component (a gene) of the genotype (the vector solution) and adjusted by means of evolution. Details on the VNS procedure employed can be found in our previous work (see Nearchou 2012).

Algorithm 2: Non-dominated sorting

Input: R, NR (NR denotes the size of R).

Output: Q.

begin

-

Step 1. Create an empty temporary population Q′.

-

Step 2. Set population member counter, i = 1.

-

Step 3. For each solution j ∊ R (but j ≠ i) check if solution jdominates solution i. If yes, go to Step 6.

-

Step 4. Set j = j + 1.

-

Step 5. If j ≤ NR then go to Step 3; otherwise if there is empty space in Q′, insert solution i in Q′.

-

Step 6. Set i = i + 1.

-

Step 7. If i ≤ NR then go to Step 3; otherwise replace Q by Q′.

-

Step 8. Return population Q.

end;

5 Computational study

In this section we present computational results obtained for assessing the quality of the h-NSDE algorithm together with a discussion of its implications. The experiments were carried out on known benchmarks (concerning the TWT problem) available by ORLIB library (http://people.brunel.ac.uk/~mastjjb/jeb/orlib/wtinfo.html). The benchmarks include three data set instances with n = 40, 50 and 100 jobs. Each data set contains 125 different test instances providing for each job j (j = 1, …, n) three integers: a processing time pj, a weight wj and a due date dj. Note that, optimal and best known solution values concern the TWT objective (for more details on these benchmarks please see the work of Crauwels et al. 1998).

5.1 Investigation setting

A two-phased numerical investigation was carried out. The first phase aimed at evaluating the performance of five variants of the h-NSDE algorithm, differ in cost function assignment modeling (as described in Sect. 3.3). We will refer to these versions with the abbreviations:

-

h-NSDE-f: uses the weighted-sum approach with fixed weights in the compound objective function, see Eq. (5).

-

h-NSDE-r: uses the weighted-sum approach with random weights in the compound objective function, estimated by Eq. (6).

-

h-NSDE-a: uses the weighted-sum approach with adaptive weights in the compound objective function, estimated by Eq. (7).

-

h-NSDE-fm: uses the fuzzy-measures based approach to build the objective function thus taking into account the interactions between the examined performance criteria, see Eq. (13).

-

h-NSDE-p: uses the Pareto-based method to build the objective function for each individual solution, see Eq. (14).

The second investigation phase aimed at evaluating the best two h-NSDE variants (found in the first phase) against three existing in the literature general-purpose multiobjective evolutionary algorithms namely, SPEA2, NSGA-II and L-NSGA. All the experiments were performed on a PC with 3.2 GHz Intel Core i5 CPU, 6 GB RAM under Windows 7 operating system. SPEA2 (the strength Pareto evolutionary algorithm-2) introduced by Zitzler et al. (2002) and NSGA-II (the non-dominated sorting genetic algorithm-II) proposed by Deb et al. (2002) are very popular in the Evolutionary Computation community as effective multiobjective optimizers and due to their efficiency often constitute test beds against any new multiobjective algorithm. L-NSGA (the Lorenz non-dominated sorting genetic algorithm) developed by Dugardin et al. (2010) is a rather new variant of the NSGA-II algorithm which uses the Lorenz dominance relationship instead of the Pareto dominance with the aim to provide a stronger selection of the non-dominated solutions. Roughly speaking, the Lorenz dominance relationship tries to provide a better domination area by rejecting the solutions founded on the extreme sides of the Pareto front. This can speed up the exploration of the solution space by focusing on the promising solution set. For more information on this topic see Dugardin et al. (2010), Li et al. (2011), Moghaddam et al. (2015).

The performance of the examined algorithms was quantified through the use of three performance indices. The first two indices measures the ‘closeness’ of the obtained solutions to the Pareto-optimal set. These indices are classified as solution quality indices. The third index measures the diversity of the obtained solutions in regard to the non-dominated front. This index is classified as solution diversity index. The employed indices are described below.

-

1.

The quality ratio (P*/P) as a percentage. The larger the value for this ratio for a given algorithm say x the higher its performance. P denotes the total number of Pareto solutions generated by x over a specific test instance. While P* (P* ≤ P) denotes the number of x’s solutions non dominated by any solution of the other algorithms. In particular, since some (or all) Pareto solutions obtained by x may be dominated by other algorithms, all the obtained solutions are compared to each other and the non dominated among them are selected. This final non dominated set of solutions corresponds to the Pareto-best front for the specific test instance. Obviously, when P* = P, then x has a quality ratio equal to 100% meaning that all the solutions obtained by x belong to the Pareto-best front.

-

2.

The Zitzler index (Zitzler and Thiele 1999) here denoted as Z(x, y) which represents the percentage of the Pareto solutions in algorithm x dominated by at least one Pareto solution of algorithm y. Since this measure is not symmetrical, it is also necessary to calculate Z(y, x). Therefore, if Z(x, y) < Z(y, x) then x is better than y.

-

3.

The spread index (Δ) (Deb 2001) is a metric that considers the extent of spread in the obtained solutions using the following relation:

$$ \Delta = \frac{{\sum\nolimits_{r = 1}^{q} {d_{r}^{e} } + \sum\nolimits_{i = 1}^{Q} {\left| {d_{i} - \bar{d}} \right|} }}{{\sum\nolimits_{r = 1}^{q} {d_{r}^{e} + \left| Q \right| \cdot \bar{d}} }} $$(20)where, q denotes the number of objectives. |Q| is the total number of solution in the Pareto-solution set Q. di is a distance measure between neighboring solutions in the objective space and \( \bar{d} \) is the mean value of all these di measures. The parameter d e r is the distance between the extreme solutions corresponding to the rth objective function and any other solution in Q. di is estimated as the minimum distance in the objective space between the ith solution and any other solution h ∊ Q. That is, \( d_{i} = \mathop {\hbox{min} }\limits_{h \in Q \wedge h \ne i} \sum\limits_{r = 1}^{q} {\left| {{\text{f}}_{r}^{i} - {\text{f}}_{r}^{h} } \right|} \) with \( {\text{f}}_{r}^{i} \) being the rth objective function value for the ith member in Q.

Δ takes a zero value for an ideal distribution, only when d e r = 0 and all di values are identical to their mean \( \bar{d} \). As the solutions get clustered more and more closer from the ideal distribution Δ value increases from zero towards greater values. Hence, an algorithm finding smaller Δ value is able to find a better diverse set of non-dominated solutions.

5.2 Control parameters’ settings

All the algorithms were defined to evolve a fixed size population of NS = n individual solutions (with n being the number of the jobs) and run for a maximum of 0.5n CPU seconds. This means, a maximum running time equal to 20 s for 40-job problems, 25 s for 50-job and 50 s for 100-job problems. The maximum size of the Pareto population maintained by each heuristic was defined to be equal to NQ = n solutions. To determine the final Pareto set solutions for each algorithm we applied the following procedure: each one of the algorithms was run 10 times over every test instance starting each time from a different random number seed. The Pareto solutions generated in every different run were combined into a single union set. Then, a non-dominating sorting was performed and the elite solutions of this union set constituted the final Pareto set for the particular algorithm. This means that, for the 125 instances included in each one of the three problem classes, every heuristic was run 3 × 125 × 10 times = 3750 times in total.

To estimate the correct settings for CR and F used within the h-NSDE algorithms a dynamic self-adapted control scheme was adopted previously proposed by Brest et al. (2006). According to this scheme for each new parent vector solution a new pair of CR and F values is estimated using the following relations:

where, Fl = 0.1, Fu = 0.9, r1 = r2 = 0.1, and randj (j∈{1,2,3,4}) are uniform random numbers in the range [0,1]. This dynamic scheme was found superior (in terms of solution quality) to various existing static control schemes examined in preliminary experimental studies. At the start, CR and Fare set to 0.9 and 0.5 respectively for all the members of the initial population.

SPEA2, NSGA-II, and L-NSGA were implemented according to their description in the literature. The individuals solutions (chromosomes) are represented as floating-point vectors (as in h-NSDE) while, chromosomes’ encoding to actual schedule solutions is performed using the well known random-keys method (Bean 1994). To determine the appropriate crossover and mutation rates for these algorithms we used the following experimental framework: we set the mutation rate to a fix value within the discrete range {0.0, 0.01, 0.1} and experimented with various crossover rates in the range {0.3, 0.6, 0.8, 0.9, 1.0}. The influence of each different combination of crossover and mutation rates on the performance of the algorithms was assessed using the notion of non-dominance in relation to the Zitzler and quality ratio metrics (given in Sect. 5.1). The best crossover and mutation rates obtained after the termination of these preliminary runs were 0.8 and 0.1 respectively.

5.3 Discussion of the results

Table 4 summarizes the results corresponding to the first phase of the investigation for all test problems considered in regard to the mean (P*/P) quality ratio. This ratio is averaged over the 125 test instances included in each class of problems. The higher the value of this ratio for a particular algorithm the greater its performance. For example, a quality ratio equal to 15.02% (see the first cell in Table 4) means that circa 15% of the solutions obtained by the specific algorithm (here h-NSDE-f) are non-dominated by any Pareto solution of the other algorithms. Hence, as one can see from Table 4, h-NSDE-a achieved the highest quality ratio with a mean score equal to 73.71%; which means that circa 74% of the solutions obtained by this heuristic are non-dominated by any Pareto solution created by the remaining four algorithms. The second best performance was attained by h-NSDE-fm with a mean quality ratio equal to 50.78%.

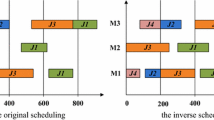

Turning now to the Zitzler index, Table 5(a) shows the values of this performance metric obtained over the seventh instance included in the 40-job data set problems. Recall that, Zitzler index is estimated through an algorithm-to-algorithm comparison iterated procedure. That is, each one of the algorithms is compared against to all the others. Observe for example cell (x, y) = (h-NSDE-f, h-NSDE-a) in the first line (forth column) of Table 5(a). The value 33.3% in this cell is the Zitzler index of h-NSDE-f against h-NSDE-a and means that circa 33% of the solutions generated by h-NSDE-f are dominated by at least one h-NSDE-a solution. The Zitzler index is not symmetric and therefore we must also examine the value in cell (y, x) = (h-NSDE-a, h-NSDE-f) in order to make a safe conclusion concerning the efficiency of the two algorithms. As we can see Z(h-NSDE-a, h-NSDE-f) = 0%. Hence, we can conclude that h-NSDE-a is definitely superior to h-NSDE-f for this particular test instance. Counting the number of y’s dominated by a particular x gives us the overall performance of the latter. This information is reported in the last column of Table 5(b) and typically constitutes a ranking of the five algorithms based on the Zitzler index. Therefore, as we can see, h-NSDE-fm outperforms all the other heuristics attained the smallest Zitzler index; this is depicted with a score 4 (maximum possible score) in the last column of Table 5(a). The second best performance was achieved by h-NSDE-a with an overall score equal to 3 (which means that, h-NSDE-a outperforms all the other heuristics except from h-NSDE-fm). In particular, half of the solutions generated by h-NSDE-a are being dominated by at least one h-NSDE-fm solution (see the related Zitzler index value in the table which is equal to 50%). Figure 1 displays the Pareto solutions obtained by the five algorithms for this single test problem. The P*/P ratio for the algorithms for this particular instance is depicted in Table 5(b). As we can see from this table, h-NSDE-fm reports a quality ratio equal to 100% which means that all its solutions belong to the final Pareto (near-) optimal front obtained. The second best performance in regard to this index is reported by h-NSDE-f (= 66.7%) denoting that circa 67% of h-NSDE-f solutions are non-dominated by any solution of the other algorithms. A deep observation of Fig. 1 verifies this analysis.

Obviously, we cannot give in a single table the detailed Zitzler values for the five algorithms over all the instances of the examined data set. However, we can report the total number of instances in which x wins y. This information is depicted in Table 6. Let us take for example the case where, x = h-NSDE-f and y = h-NSDE-r over the 40-jobs instances. As one can see (first line of Table 6), x wins y in 70 out the 125 in total instances; while in 45 of the remaining instances y wins x (second line first cell in Table 6). So, we can conclude that x is better than y As we can easily realize from Table 6, x didn’t find able to win the other three algorithms. Summing up for each x the number of times it became the winner (in an algorithm-to-algorithm comparison) we have the results shown in the last column of Table 6. Summarizing, for the instances with 40- and 50-jobs, h-NSDE-a showed the highest performance outperforming all the others (this is depicted by a score equal to 4). However, in the most difficult class of instances (that with 100-jobs) h-NSDE-fm outperformed all the others. Moreover, the latter attained the second best performance in all the instances with 40- and 50-jobs.

Tables 7 and 8 summarize the main results concerning the second investigation phase. Here, we compare the best two h-NSDE variants found in the initial investigation phase, i.e., h-NSDE-a and h-NSDE-fm against to SPEA2, NSGA-II and L-NSGA. As far as the quality ratio index is concerned (see Table 7) best results are due to the two h-NSDE variants as well as SPEA2. Specifically, circa 84.5% (in average) of the solutions generated by h-NSDE-a, about 47.5% of the solutions created by h-NSDE-fm and 29.5% of the SPEA2 solutions are non dominated by any solution of the other algorithms. Obviously, NSGA-II and L-NSGA showed much lower performance with the latter being the weakest. For illustration, we demonstrate in Fig. 2 the non-dominated solutions obtained by the five algorithms over the 7th instance included in the 40-job problems (also mentioned in the first investigation phase in accordance with Fig. 1).

Considering now the results related to the Zitzler index (Table 8) we can make a final conclusion about the relative performance of the algorithms. Recall that, the entries in Table 8 indicate the total number of the test instances in which algorithm x outperform y. Comparing for example h-NSDE-a with SPEA2 over the 40-jobs instances the results are favourable for the former. Particularly, in 73 instances out the 125 in total h-NSDE-a outperformed SPEA2. The latter outperformed h-NSDE-a in 39 of the remaining instances. Moreover, we can easily observe from Table 8 that h-NSDE-a outperformed all the other algorithms; a fact that is indicated with a score 4 in the last column (Ranking) of Table 8. The second best performance was achieved by SPEA2 with a score 3 which means that the latter outperformed all the others except from h-NSDE-a. Similarly, h-NSDE-a was also found superior in the case of the 50-jobs instances with SPEA2 being the second best optimizer and h-NSDE-fm the third. Highly noticeable is however the performance of h-NSDE-fm which attained the highest performance in the 100-jobs instances (these are the most difficult instances in the examined data set).

Considering the implications of Tables 7 and 8 on the performance of the algorithms, we can conclude that the two h-NSDE proposals demonstrate a remarkable high quality performance for the goal of progressing towards the Pareto-optimal front. Especially, h-NSDE-a proposal, a parameter-less algorithm seems to be a very promising and attractive multiobjective optimizer for use in scheduling problems. Turning to the remaining algorithms, SPEA2 is definitely the best. While the efficiency of both NSGA-II and L-NSGA markedly inferior from that of all other algorithms, renders these algorithms poor candidate for the scheduling problem considered in this study.

Turning to the spread index, Table 9 shows the mean and standard deviation values for this diversity metric obtained by all five algorithms. As can be seen, h-NSDE-fm performs the best in all the test problems in terms of Δ. Remember, an algorithm finding a smaller Δ value is able to find a better diverse set of non-dominated solutions. Next comes the performance observed with h-NSDE-a. In all cases with the two h-NSDE variants, the standard deviation of Δ in 10 runs over the 125 test instances included in each problem class is rather small (< 0.15) but always larger than that obtained by the other three algorithms (SPEA2, NSGA-II, L-NSGA).

5.4 Statistical analysis

The results of the second investigation phase were further tested for statistical significance. To that purpose, the experimental process described in Sect. 5.2 (i.e., 10 runs of each algorithm over each test instance followed by a non-dominating sorting to determine the final Pareto set) was repeated five times (so that to get five samples) for each algorithm. Considering the spread index (Δ) of each algorithm as a random variable, we first observed that these variables violated the required conditions for normality. Consequently, we conducted the classical Friedman non-parametric test for detecting differences across multiple samples (see Derrac et al. 2011 for a recent application of the test in algorithms ranking). Like many non-parametric tests, the Friedman test uses data ranks as opposed to absolute data values. Similar statistical observations were determined for the case of the quality ratio (P*/P). Table 10 summarizes the comparative results in regard to both spread and quality ratio indices over all the examined test instances. Each entry of this table represents the average ranking of the corresponding algorithm with respect to both indices whereas the last column reports the corresponding p values, namely the probability for the differences across the five algorithms to be due to chance.

The p values shown in the last column of Table 10 indicate that there are highly statistically significant differences between all algorithms in all three problem classes in regard to both performance metrics. In order to examine where the differences actually occur, we conducted a post hoc analysis. In particular, we performed a separate Wilcoxon signed-rank test for all possible pairs of algorithms. Since we made multiple comparisons, we used the Bonferroni adjustment on the results obtained from the Wilcoxon tests in order to get statistically valid results. The essence of the Bonferroni adjustment is to divide the original significance level (in our case, 0.05) by the number of comparisons we are running (in our case, 10). Hence, the new significance level is 0.005. Considering the 40- and 50-job problems, all tests concluded that there are statistically significant differences for all possible pairs of algorithms and that the ranking of the five algorithms with respect to the spread index is as depicted in Table 11(a). The results are similar in the case of 100 jobs, the only exception being that there are no statistically significant differences between h-NSDE-a and NSGA-II. Consequently, the ranking of the algorithms in this case is shown in the last column of Table 11(a).

As far as the quality ratio is concerned (see Table 11(b)), considering the 40-job problems, the tests concluded that there are no statistically significant differences between h-NSDE-a and h-NSDE-fm as well as between NSGA-II and L-NSGA (see the first column of Table 11(b)). In the case of the 50-job problems, the tests concluded that there are no statistically significant differences between h-NSDE-a, SPEA2 and NSGA-II. Finally, for 100-job problems, there are no statistically significant differences between algorithms h-NSDE-a and SPEA2 as well as between NSGA-II and L-NSGA.

As a final comment, the ranks of Table 11 definitely indicate the superiority of h-NSDE-fm in terms of both the solution quality ratio index and the spread index. As far as the spread index is concerned, the second best performance is due to h-NSDE-a, while in terms of solution quality SPEA2 and h-NSDE-a algorithms come second, showing similar performance. NSGA-II and L-NSGA seem to achieve similar performance in terms of solution quality while in terms of diversity quality these algorithms outperformed SPEA2.

6 Conclusions

A single machine scheduling problem with total weighted tardiness, maximum tardiness and maximum earliness objectives was considered. After a comprehensive discussion for positioning the problem within the existing multicriteria scheduling research agenda, we focused on two particular issues. The development of a new efficient algorithm aiming to determine a set of Pareto-optimal solutions to the problem and the numerical investigation of its performance on existing difficult benchmarks test problems. The proposed algorithm termed h-NSDE (the hybrid non-dominated sorting differential evolution) combines a differential evolution (DE) algorithm for global solutions exploration together with a variable neighborhood search (VNS) heuristic for efficient local improvement around selected DE solutions. h-NSDE is parameters’ tuning free since it uses a self-adapted control mechanism that allows the dynamic estimation of the appropriate control parameters settings during its evolution.

Two investigation phases were carried out: in the first phase five variants of h-NSDE were studied which differ in the way the objective (cost) function assignment to the individual solutions is employed. In the second investigation phase the best two h-NSDE variants were compared to existing popular multiobjective population-based algorithms. The investigation study (supported by a statistical performance analysis) showed h-NSDE to be a much promising optimization algorithm for searching for Pareto-optimal solutions in the context of multicriteria scheduling.

Since the study of h-NSDE is still in its initial stages there is till much ground for further research with respect to both new problem areas application and solutions improvement. The next step is to apply the algorithm on more complex scheduling problems such as resource-constrained project scheduling, workforce scheduling, and transportation scheduling problems. Turning to research issues related to the solutions quality and diversity improvement, attention will be paid on the implementation of a more robust diversity preventing strategy. Special schemes borrowed from the Evolutionary Computation field will be studied. A first idea is to investigate the notions of niching and clustering within the context of h-NSDA. Since the dimension of Pareto-optimal set increase with the number of objectives, it is quite interesting to study and implement fast enough robust mechanisms for distance computations and identifications of neighboring solutions. Furthermore, sizing and initializing the main evolving population in h-NSDE is another interesting future direction. In its present form h-NSDE uses a pure random initial population. Our intuition is that seeding the population with solutions generated by some highly disruptive search operators could improve the population diversity and make faster moves toward the true Pareto-optimal front of the examined problems.

Notes

Available on the public OR-Library via http://people.brunel.ac.uk/~mastjjb/jeb/info.html.

References

Abdul-Razaq, T.S., Potts, C.N., Van Wassenhove, L.N.: A survey of algorithms for the single machine total weight tardiness scheduling problem. Discrete Appl. Math. 26, 235–253 (1990)

Ali, M.M., Törn, A.: Population set-based global optimization algorithms: some modifications and numerical studies. Comput. Oper. Res. 31, 1703–1725 (2004)

Arroyo, J.E.C., Ottoni, R., Oliveira, A.: Multi-objective variable neighborhood search algorithms for a single machine scheduling problem with distinct due windows. Electron. Notes Theor. Comput. Sci. 281, 5–19 (2011)

Azizoglu, M., Kondakci, S., Köksalan, M.: Single machine scheduling with maximum earliness and number tardy. Comput. Ind. Eng. 45(2), 257–268 (2003)

Bäck, T.: Evolutionary Algorithms in Theory and Practice. Oxford University Press, New York (1996)

Baker, K.R.: Introduction to Sequencing and Scheduling. Wiley, New York (1974)

Baker, K.R., Trietsch, D.: Principles of Sequencing and Scheduling. Wiley, Hoboken, NJ (2009)

Baker, K.R., Scudder, G.D.: Sequencing with earliness and tardiness penalties: a review. Oper. Res. 38(1), 22–36 (1990)

Bauer A., Bullnheimer B., Hartl R.F., Strauss C.: An ant colony optimization approach for the single machine total tardiness problem. In: Proceedings of CEC’99, pp. 1445–1450. IEEE Press, Piscataway (1999)

Bean, J.: Genetics and random keys for sequencing and optimization. ORSA J. Comput. 6(2), 154–160 (1994)

Beasley, J.E.: OR-library: distributing test problems by electronic mail. J. Oper. Res. Soc. 41(11), 1069–1072 (1990)

Bilge, U., Kurtulan, M., Kırac, F.: A tabu search algorithm for the single machine total weighted tardiness problem. Eur. J. Oper. Res. 176, 1423–1435 (2007)

Brest, J., Greiner, S., Boskovic, B., Mernik, M., Zumer, V.: Self-adapting control parameters in differential evolution: a comparative study on numerical benchmark problems. IEEE Trans. Evolut. Comput. 10(6), 646–657 (2006)

Carlos, A., Coello, C.: An updated survey of GA-based multiobjective optimization techniques. ACM Comput. Surv. 32(2), 109–143 (2000)

Chen, C.-L., Bulfin, R.L.: Scheduling a single machine to minimize two criteria: maximum tardiness and number of tardy jobs. IIE Trans. 26(5), 76–84 (1994)

Chou, F.-D.: An experienced learning genetic algorithm to solve the single machine total weighted tardiness scheduling problem. Expert Syst. Appl. 36(2), 3857–3865 (2009)

Congram, R.K., Potts, C.N., Van de Velde, S.L.: An iterated dynasearch algorithm for the single-machine total weighted tardiness scheduling problem. INFORMS J. Comput. 14(1), 52–67 (2002)

Crauwels, H.A.J., Potts, C.N., Van Wassenhove, L.N.: Local search heuristics for the single machine total weighted tardiness scheduling problem. INFORMS J. Comput. 10, 341–350 (1998)

Deb, K.: Multi-objective Optimization Using Evolutionary Algorithms. Wiley-Interscience Series in Systems and Optimization. John Wiley & Sons (2001)

Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evolut. Comput. 6(2), 182–197 (2002)

Derrac, J., García, S., Molina, D., Herrera, F.: A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evolut. Comput. 1, 3–18 (2011)

Du, J., Leung, J.Y.T.: Minimizing total tardiness on one machine is NP-hard. Math. Oper. Res. 15, 483–495 (1990)