Abstract

We develop a mathematical formalism that allows us to study decoherence with a great level of generality, so as to make it appear as a geometrical phenomenon between reservoirs of dimensions. It enables us to give quantitative estimates of the level of decoherence induced by a purely random environment on a system according to their respective sizes, and to exhibit some links with entanglement entropy.


1 Introduction

The theory of decoherence is arguably one of the greatest advances in fundamental physics of the past forty years. Without adding anything new to the quantum mechanical framework, and considering that the Schrödinger equation is universally valid, it explains why quantum interferences virtually disappear at macroscopic scales. Since the pioneering papers [1, 2], a wide variety of models have been designed to understand decoherence in different specific contexts (see the review [3] or [4] and the numerous references therein). In this paper, we would like to embrace a more general point of view and understand the mathematical reason why decoherence is so ubiquitous between quantum mechanical systems.

We start by introducing general quantities and notations to present as concisely as possible the idea underlying the theory of decoherence (§2). We then build two simple but very general models to reveal the mathematical mechanisms that make decoherence so universal, thereby justifying why quantum interferences disappear due to the Schrödinger dynamics only (§3 and §4). We recover in §3.2 and §4.1 the well-known typical decay of the non-diagonal terms of the density matrix in \(n^{-\frac{1}{2}}\), with n the dimension of the Hilbert space describing the environment. The most important result is Theorem 3.3, proved in §3.3, giving estimates for the level of decoherence induced by a random environment on a system of given sizes. We conclude in §3.4 that even very small environments (of typical size at least \(N_{\mathcal {E}} = \ln (N_{\mathcal {S}})\) with \(N_{\mathcal {S}}\) the size of the system) suffice, under assumptions discussed in §3.5. We also give a general formula estimating the level of classicality of a quantum system in terms of the entropy of entanglement with its environment (§4.2, proved in the Annex A), and propose alternative ways of quantifying decoherence in §5.

2 The Basics of Decoherence

The theory of decoherence sheds light on the reason why quantum interferences disappear when a system gets entangled with a macroscopic one, for example an electron in a double-slit experiment that no longer interferes once entangled with a detector. According to Di Biagio and Rovelli [5], the deep difference between classical and quantum is the way probabilities behave: all classical phenomena satisfy the total probability formula, written here in its standard form for two variables A and B,

$$\begin{aligned} {\mathbb {P}}(B=y) = \sum _{x} {\mathbb {P}}(A=x) \, {\mathbb {P}}(B=y \mid A=x), \end{aligned}$$

relying on the fact that, even though the actual value of the variable A is not known, one can still assume that it has a definite value among the possible ones. This, however, is not correct for quantum systems. It is well-known that the diagonal elements of the density matrix account for the classical behavior of a system (they correspond to the terms of the total probability formula) while the non-diagonal terms are the additional interference terms. As a reminder, this is because the probability to obtain an outcome x is:
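As a sketch in our own notation (the symbols \(\rho _{ij}\) and \({|{x}\rangle }\) are assumptions, not taken from the text above): writing \(\rho = \sum _{i,j} \rho _{ij} {|{i}\rangle }{\langle {j}|}\) in an orthonormal basis \(({|{i}\rangle })\), and \({|{x}\rangle }\) for the unit eigenvector associated with the outcome x, the Born rule gives

$$\begin{aligned} {\mathbb {P}}(x) = \textrm{tr}\big ( \rho \, {|{x}\rangle }{\langle {x}|} \big ) = \underbrace{\sum _{i} \rho _{ii} \, |{\langle {x \vert i}\rangle } |^2}_{\text {total probability formula}} + \underbrace{\sum _{i \ne j} \rho _{ij} \, {\langle {x \vert i}\rangle } {\langle {j \vert x}\rangle }}_{\text {interferences}}. \end{aligned}$$

The first sum only involves the diagonal entries, read as \({\mathbb {P}}(i) \, {\mathbb {P}}(x \mid i)\), while the second sum collects the non-diagonal ones.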

Here is the typical situation encountered in decoherence studies. Consider a system \(\mathcal {S}\), described by a Hilbert space \(\mathcal {H}_{\mathcal {S}}\) of dimension d, that interacts with an environment \(\mathcal {E}\) described by a space \(\mathcal {H}_{\mathcal {E}}\) of dimension n, and let \(\mathcal {B} = ({|{i}\rangle })_{1\leqslant i \leqslant d}\) be an orthonormal basis of \(\mathcal {H}_{\mathcal {S}}\). In the sequel, we will say that each \({|{i}\rangle }\) corresponds to a possible history of the system in this basis (this expression will be given its full meaning in a future article dedicated to the measurement problem). Let’s also assume that \(\mathcal {B}\) is a conserved basis during the interaction with \(\mathcal {E}\). When \(\mathcal {E}\) is a measurement apparatus for the observable A, the eigenbasis of \(\hat{A}\) is clearly a conserved basis; in general, the eigenbasis of any observable such that \(\hat{A} \otimes \mathbbm {1}\) commutes with the interaction Hamiltonian is suitable (but the existence of such an observable is not guaranteed, unless \(\hat{H}_{int}\) takes the form \(\sum _i {\hat{\Pi }}^{\mathcal {S}}_i \otimes \hat{H}^{\mathcal {E}}_{i}\), where \(({\hat{\Pi }}^{\mathcal {S}}_i)_{1 \leqslant i \leqslant d}\) is a family of commuting orthogonal projectors).

We further suppose that \(\mathcal {S}\) and \(\mathcal {E}\) are initially non entangled, allowing us to write \({|{\Psi }\rangle } = \left( \sum _{i=1}^d c_i {|{i}\rangle } \right) \otimes {|{\mathcal {E}_0}\rangle }\) as the initial state before interaction. After a time t, due to its Schrödinger evolution in the conserved basis, the total state becomes \({|{\Psi (t)}\rangle } = \sum _{i=1}^d c_i {|{i}\rangle } \otimes {|{\mathcal {E}_i(t)}\rangle }\) for some unit vectors \(({|{\mathcal {E}_i(t)}\rangle })_{1\leqslant i \leqslant d}\). Define \(\eta (t) = \displaystyle \max _{i \ne j} \; |{\langle {\mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } |\). If \(({|{e_k}\rangle })_{1\leqslant k \leqslant n}\) denotes an orthonormal basis of \(\mathcal {H}_{\mathcal {E}}\), the state of \(\mathcal {S}\), obtained by tracing out the environment, is:
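Taking the partial trace of \({|{\Psi (t)}\rangle }{\langle {\Psi (t)}|}\) in the basis \(({|{e_k}\rangle })\) and using \(\sum _k {\langle {e_k \vert \mathcal {E}_i(t)}\rangle } {\langle {\mathcal {E}_j(t) \vert e_k}\rangle } = {\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle }\), one obtains (a reconstruction from the definitions above):

$$\begin{aligned} \rho _{\mathcal {S}}(t)&= \sum _{k=1}^n {\langle {e_k \vert \Psi (t)}\rangle } {\langle {\Psi (t) \vert e_k}\rangle } = \sum _{i,j=1}^d c_i \overline{c_j} \, {\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle } \, {|{i}\rangle }{\langle {j}|} \\&= \underbrace{\sum _{i=1}^d |c_i |^2 \, {|{i}\rangle }{\langle {i}|}}_{\rho _{\mathcal {S}}^{(d)}} + \underbrace{\sum _{i \ne j} c_i \overline{c_j} \, {\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle } \, {|{i}\rangle }{\langle {j}|}}_{\rho _{\mathcal {S}}^{(q)}(t)}, \end{aligned}$$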

where \(\rho _{\mathcal {S}}^{(d)}\) stands for the (time independent) diagonal part of \(\rho _{\mathcal {S}}(t)\) (which corresponds to the total probability formula), and \(\rho _{\mathcal {S}}^{(q)}(t)\) for the remaining non-diagonal terms responsible for the interferences between the possible histories. It is not difficult to show (see Annex A) that \(|\hspace{-1.111pt}|\hspace{-1.111pt}| \rho _{\mathcal {S}}^{(q)}(t)|\hspace{-1.111pt}|\hspace{-1.111pt}| \leqslant \eta (t)\), where \(|\hspace{-1.111pt}|\hspace{-1.111pt}|M|\hspace{-1.111pt}|\hspace{-1.111pt}|\) stands for the usual operator norm on matrices, i.e. \(|\hspace{-1.111pt}|\hspace{-1.111pt}|M|\hspace{-1.111pt}|\hspace{-1.111pt}| = \sup _{\Vert {|{\Psi }\rangle } \Vert =1} \; \Vert M {|{\Psi }\rangle } \Vert\). Therefore \(\eta\) measures how close the system is to being classical because, as shown in Annex A, we have for all subspaces \(F \subset \mathcal {H}_{\mathcal {S}}\) (recall that, in the quantum formalism, probabilistic events correspond to subspaces):

In other words, \(\eta (t)\) estimates how decohered the system is. Note that it is only during an interaction between \(\mathcal {S}\) and \(\mathcal {E}\) that decoherence can occur; any future internal evolution U of \(\mathcal {E}\) leaves \(\eta\) unchanged since \({\langle {U \mathcal {E}_j \vert U \mathcal {E}_i}\rangle } = {\langle {\mathcal {E}_j \vert \mathcal {E}_i}\rangle }\). Also, a more precise definition for \(\eta\) could be \(\displaystyle \max _{\begin{array}{c} i \ne j \\ c_i, c_j \ne 0 \end{array}} \; |{\langle {\mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } |\) with, by convention, \(\eta = 0\) when only one \(c_i\) is non-zero, so that \(\rho _{\mathcal {S}}\) being diagonal in a basis becomes equivalent to \(\eta =0\) in this basis. This way \(\eta\) really quantifies the interferences between possible histories (of non-zero probability). This is not true with the definition above, as is clear for example for the trivial interaction \({|{\Psi (t)}\rangle } = \sum _{i=1}^d c_i {|{i}\rangle } \otimes {|{\mathcal {E}_0}\rangle }\): here \(\rho _{\mathcal {S}}\) is diagonal (i.e. no interferences) in any orthonormal basis containing the vector \(\sum _{i=1}^d c_i {|{i}\rangle }\), but the simpler definition yields \(\eta = 1\) in any basis.

The aim of the theory of decoherence is to explain why \(\eta (t)\) rapidly goes to zero when n is large, so that the state of the system almost immediately (see Footnote 1) evolves from \(\rho _{\mathcal {S}}\) to \(\rho _{\mathcal {S}}^{(d)}\) in the conserved basis. As recalled in the introduction, a lot of different models already explain this phenomenon in specific contexts. In this paper, we shall build two (excessively) simple but quite universal models that highlight the fundamental reason why \(\eta (t) \rightarrow 0\) so quickly, and that will allow us to determine the typical size of an environment needed to entail proper decoherence on a system.

3 First Model: Purely Random Environment

When no particular assumption is made to specify the type of environment under study, the only reasonable behaviour to assume for \({|{\mathcal {E}_i(t)}\rangle }\) is that of a Brownian motion on the sphere \({\mathbb {S}}^{n} = \{ {|{\Psi }\rangle } \in \mathcal {H}_{\mathcal {E}} \mid \Vert {|{\Psi }\rangle } \Vert = 1 \} \subset \mathcal {H}_{\mathcal {E}} \simeq {\mathbb {C}}^n \simeq {\mathbb {R}}^{2n}\). It boils down to representing the environment as a purely random system with no preferred direction of evolution. This choice will be discussed in §3.5. Another bold assumption would be the independence of the \(({|{\mathcal {E}_i(t)}\rangle })_{1\leqslant i \leqslant d}\); we will dare to make this assumption anyway.

3.1 Convergence to the Uniform Measure

We will first show that the probabilistic law of each \({|{\mathcal {E}_i(t)}\rangle }\) converges exponentially fast to the uniform probability measure on \({\mathbb {S}}^{n}\). To make things precise, endow \({\mathbb {S}}^{n}\) with its Borel \(\sigma\)-algebra \(\mathcal {B}\) and with the canonical Riemannian metric g, which induces the uniform measure \(\mu\) that we suppose normalized to a probability measure. Let \(\nu _t\) be the law of the random variable \({|{\mathcal {E}_i(t)}\rangle }\), that is \(\nu _t(B) = {\mathbb {P}}\big ({|{\mathcal {E}_i(t)}\rangle } \in B \big )\) for all \(B \in \mathcal {B}\). Denote by \(\Delta f = \frac{1}{\sqrt{g}} \partial _i (\sqrt{g} g^{ij} \partial _j f)\) the Laplacian operator on \(\mathcal {C}^\infty ({\mathbb {S}}^{n})\), which can be extended to \(L^2({\mathbb {S}}^{n})\), the completion of \(\mathcal {C}^\infty ({\mathbb {S}}^{n})\) for the scalar product \((f,h) = \int _{{\mathbb {S}}^{n}} f(x) h(x) \textrm{d}\mu\). The Hille-Yosida theory allows one to define the Brownian motion on the sphere as the Markov semigroup of stochastic kernels generated by \(\Delta\). In particular, this implies that if \(p_t\) is the density after a time t, i.e. \(\nu _t(\textrm{d}x) = p_t(x) \mu (\textrm{d}x)\), then \(p_t = e^{t \Delta }p_0\). Of course, the law \(\nu _0\) of the deterministic variable \({|{\mathcal {E}_i(0)}\rangle } = {|{\mathcal {E}_0}\rangle }\) corresponds to a Dirac distribution, which is not strictly speaking in \(L^2({\mathbb {S}}^{n})\), but we can rather consider it as given by a sharply peaked density (with respect to \(\mu\)) \(p_0 \in L^2({\mathbb {S}}^{n})\). Finally, recall that the total variation norm of a measure defined on \(\mathcal {B}\) is given by \(\Vert \sigma \Vert _{TV} = \underset{B \in \mathcal {B} }{\sup }|\sigma (B) |\).

Proposition 3.1

We have \(\Vert \nu _t - \mu \Vert _{TV} \underset{t \rightarrow +\infty }{\longrightarrow }0\) exponentially fast. Moreover, if \(T({\mathbb {S}}^{n}) = \inf \{ t>0 \mid \Vert \nu _t - \mu \Vert _{TV} \leqslant \frac{1}{e} \}\) denotes the characteristic time to equilibrium for the Brownian diffusion on \({\mathbb {S}}^{n}\), then \(T({\mathbb {S}}^{n}) \underset{n \rightarrow +\infty }{\sim }\frac{\ln (2n)}{4n}\).

Proof

See [7] for a precise proof of this proposition. The overall idea is to decompose the density of the measure \(\nu _t\) in an eigenbasis of the Laplacian, so that the Brownian motion (which is generated by \(\Delta\)) will exponentially kill all modes but the one associated with the eigenvalue 0, that is the constant one. The estimate of \(T({\mathbb {S}}^{n})\) is then obtained by examining how fast each mode (multiplied by its multiplicity) is killed. Interestingly enough, the convergence is faster as n increases since \(T({\mathbb {S}}^{n}) \underset{n \rightarrow \infty }{\longrightarrow }0\). \(\square\)

3.2 Most Vectors are Almost Orthogonal

Consequently, we are now interested in the behavior of the scalar products between random vectors uniformly distributed on the complex n-sphere \({\mathbb {S}}^{n}\). The first thing to understand is that, in high dimension, most pairs of unit vectors are almost orthogonal.

Proposition 3.2

Denote by \(S = {\langle { \mathcal {E}_1 \vert \mathcal {E}_2}\rangle } \in {\mathbb {C}}\) the random variable where \({|{\mathcal {E}_1}\rangle }\) and \({|{\mathcal {E}_2}\rangle }\) are two independent uniform random variables on \({\mathbb {S}}^{n}\). Then \({\mathbb {E}}(S) = 0\) and \({\mathbb {V}}(S) = {\mathbb {E}}(|S |^2) = \frac{1}{n}\).

Proof

Clearly, \({|{\mathcal {E}_1}\rangle }\) and \(-{|{\mathcal {E}_1}\rangle }\) have the same law, hence \({\mathbb {E}}(S) = {\mathbb {E}}(-S) = 0\). What about its variance? One can rotate the sphere to impose for example \({|{\mathcal {E}_1}\rangle } = (1,0, \dots , 0)\), and by independence \({|{\mathcal {E}_2}\rangle }\) still follows a uniform law. Such a uniform law can be achieved by generating 2n independent normal random variables \((X_i)_{1 \leqslant i \leqslant 2n }\) following \(\mathcal {N}(0,1)\), and by considering the random vector \({|{\mathcal {E}_2}\rangle } = \left( \frac{X_1+ iX_2}{\sqrt{X_1^2 + \dots + X_{2n}^2 }}, \dots , \frac{X_{2n-1} + i X_{2n}}{ \sqrt{X_1^2 + \dots + X_{2n}^2 }} \right)\). Indeed, for any continuous function \(f: {\mathbb {S}}^{n} \rightarrow {\mathbb {R}}\) (with \(\textrm{d}\sigma ^n\) denoting the measure induced by Lebesgue’s on \({\mathbb {S}}^{n}\)):

which means that \({|{\mathcal {E}_2}\rangle }\) defined this way follows indeed the uniform law.

In these notations, \(|S |^2 =\frac{X_1^2 + X_2^2}{X_1^2 + \dots + X_{2n}^2 }\). Since each \(X_i^2\) follows a \(\chi ^2\) law, it is then a classical lemma to show that \(|S |^2\) follows a \(\beta _{1,n-1}\) distribution, whose mean equals \(\frac{1}{n}\). For a more elementary argument, note that, up to relabelling the variables, we have \(\forall k \in \llbracket 1,n \rrbracket , \; {\mathbb {E}} \left( \frac{X_1^2 + X_2^2}{X_1^2 + \dots + X_{2n}^2 } \right) = {\mathbb {E}}\left( \frac{X_{2k-1}^2 + X_{2k}^2}{X_1^2 + \dots + X_{2n}^2} \right)\) and so:
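Summing this identity over k, the numerators add up to the common denominator, so that (a short reconstruction of the elementary argument):

$$\begin{aligned} n \, {\mathbb {E}}(|S |^2) = \sum _{k=1}^n {\mathbb {E}}\left( \frac{X_{2k-1}^2 + X_{2k}^2}{X_1^2 + \dots + X_{2n}^2} \right) = {\mathbb {E}}(1) = 1, \quad \text {hence} \quad {\mathbb {V}}(S) = {\mathbb {E}}(|S |^2) = \frac{1}{n}. \end{aligned}$$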

Alternatively, had we worked on the real sphere \(\subset {\mathbb {R}}^{2n}\) endowed with the real scalar product, the variance would have been \(\frac{1}{2n}\). This highlights the fact that the real and complex spheres are indeed isomorphic as topological or differential manifolds, but not as Riemannian manifolds.

The same result would have been recovered if, instead of picking randomly a pair of vectors, we had chosen uniformly the unitary evolution operators \((U^{(i)}(t))_{1\leqslant i \leqslant d}\) such that \({|{\mathcal {E}_i(t)}\rangle } = U^{(i)}(t) {|{\mathcal {E}_0}\rangle }\), resulting from the interaction Hamiltonian. Again, if no direction of evolution is preferred, it is reasonable to consider the law of each \(U^{(i)}(t)\) to be given by the Haar measure \(\textrm{d}U\) on the unitary group \(\mathcal {U}_n\). If moreover they are independent, then \(U^{(i)}(t)^\dagger U^{(j)}(t)\) also follows the Haar measure for all i, j so that, using [8, (112)]:

\(\square\)
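Proposition 3.2 can be checked numerically. The following is a minimal Monte Carlo sketch using only the Python standard library; it samples uniform vectors with the Gaussian construction used in the proof. The function names, sample sizes and seed are our own illustrative choices, not part of the original argument.

```python
import math
import random


def random_unit_vector(n, rng):
    """Uniform point on the unit sphere of C^n, built from 2n real Gaussians."""
    xs = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    norm = math.sqrt(sum(abs(x) ** 2 for x in xs))
    return [x / norm for x in xs]


def inner(u, v):
    """Hermitian scalar product <u|v>, antilinear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def mean_square_overlap(n, samples=2000, seed=0):
    """Empirical estimate of E(|S|^2) for two independent uniform vectors."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        u = random_unit_vector(n, rng)
        v = random_unit_vector(n, rng)
        total += abs(inner(u, v)) ** 2
    return total / samples


if __name__ == "__main__":
    # The empirical mean should be close to 1/n for each dimension n.
    for n in (2, 8, 32):
        print(n, mean_square_overlap(n), 1.0 / n)
```

The empirical mean of \(|S |^2\) should stay close to \(\frac{1}{n}\), up to statistical fluctuations that shrink with the number of samples.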

Therefore, \(|{\langle {\mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } |\) is, after a very short time, of order \(\sqrt{{\mathbb {V}}(S)} = \frac{1}{\sqrt{\dim (\mathcal {H}_\mathcal {E})}}\), which is a well-known estimate already obtained by Zurek in [9]. When \(d=2\), if \(\mathcal {E}\) is composed of \(N_{\mathcal {E}}\) particles and each of them is described by a p-dimensional Hilbert space, then very rapidly:
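Under these assumptions \(n = \dim (\mathcal {H}_\mathcal {E}) = p^{N_{\mathcal {E}}}\), so the estimate above reads (a direct rewriting, the only pair being \(i=1, j=2\)):

$$\begin{aligned} \eta (t) \sim \frac{1}{\sqrt{n}} = p^{-N_{\mathcal {E}}/2}, \end{aligned}$$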

which is virtually zero for macroscopic environments, therefore decoherence is guaranteed. Of course, this is not true anymore if d is large, because there will be so many pairs that some of them will inevitably become non-negligible, and so will \(\eta\). We would like to determine a condition between n and d under which proper decoherence is to be expected. In other words, what is the minimal size of an environment needed to decohere a given system?

3.3 Direct Study of \(\eta\)

To answer this question, we should be more precise and consider directly the random variable \(\eta _{n,d} = \displaystyle \max _{i \ne j} \; |{\langle {\mathcal {E}_i \vert \mathcal {E}_j}\rangle } |\) where the \(({|{\mathcal {E}_i}\rangle })_{1\leqslant i \leqslant d}\) are d random vectors uniformly distributed on the complex n-sphere \({\mathbb {S}}^{n}\). In the following, we fix \(\varepsilon \in \left]0,1 \right[\) as well as a threshold \(s \in [0,1[\) close to 1, and define \(d_{max}^{\varepsilon , s}(n) = \min \{ d \in {\mathbb {N}} \mid {\mathbb {P}}(\eta _{n,d} \geqslant \varepsilon ) \geqslant s \}\), so that if \(d_{max}^{\varepsilon , s}(n)\) points or more are placed randomly on \({\mathbb {S}}^{n}\), it is very likely (with probability \(\geqslant s\)) that at least one of the scalar products will be greater than \(\varepsilon\) in modulus.

Theorem 3.3

The following asymptotic estimates hold:

1. \(\displaystyle d_{max}^{\varepsilon , s}(n) \underset{n \rightarrow \infty }{\sim }\ \sqrt{-2 \ln (1-s)} \left( \frac{1}{1-\varepsilon ^2} \right) ^{\frac{n-1}{2}}\)

2. \(\boxed { \eta _{n,d} \underset{n \text { or } d \rightarrow \infty }{\overset{{\mathbb {P}}}{ \xrightarrow {\hspace{0.8cm}} }} \sqrt{1-d^{-\frac{2}{n}}} }\).

To derive these formulas, we first need the following geometrical lemma.

Lemma 3.4

Let \(A_n = |{\mathbb {S}}^{n} |\) be the area of the complex n-sphere for \(\textrm{d}\sigma ^n\) (induced by Lebesgue’s measure), \(C_{n}^{\varepsilon }(x) = \{ u \in {\mathbb {S}}^{n} \mid |{\langle {u \vert x }\rangle } |\geqslant \varepsilon \}\) the ‘spherical cap’ (see Footnote 2) centered at x of parameter \(\varepsilon\), and \(A_n^{\varepsilon } = |C_n^{\varepsilon } |\) the area of any spherical cap of parameter \(\varepsilon\). Then for all \(n\geqslant 1\):
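In agreement with the \(\beta _{1,n-1}\) law invoked in the proof and with the expression of \(f_{n,d}\) used in the proof of Theorem 3.3, the identity in question is:

$$\begin{aligned} \frac{A_n^{\varepsilon }}{A_n} = (1-\varepsilon ^2)^{n-1}. \end{aligned}$$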

Proof of Lemma

This result can be directly obtained from the fact that, as noticed in the proof of Proposition 3.2, \(|{\langle { \mathcal {E}_1 \vert \mathcal {E}_2}\rangle } |^2\) follows a \(\beta _{1,n-1}\) distribution when \({|{\mathcal {E}_1}\rangle }\) and \({|{\mathcal {E}_2}\rangle }\) are chosen uniformly and independently on \({\mathbb {S}}^{n}\). We can then write:
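Since a \(\beta _{1,n-1}\) variable has density \((n-1)(1-x)^{n-2}\) on [0, 1] (for \(n \geqslant 2\)), the normalized area of a cap is the probability that \(|S |^2 \geqslant \varepsilon ^2\):

$$\begin{aligned} \frac{A_n^{\varepsilon }}{A_n} = {\mathbb {P}}\big ( |{\langle { \mathcal {E}_1 \vert \mathcal {E}_2}\rangle } |^2 \geqslant \varepsilon ^2 \big ) = \int _{\varepsilon ^2}^1 (n-1)(1-x)^{n-2} \, \textrm{d}x = (1-\varepsilon ^2)^{n-1}. \end{aligned}$$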

A more ‘physicist-friendly’ proof can also be given, based on an appropriate choice of coordinates on the n-sphere. Recall that \({\mathbb {S}}^{n} \subset {\mathbb {C}}^n \simeq {\mathbb {R}}^{2n}\) can be seen as a real manifold of dimension \(2n-1\). Consider the set of coordinates \((r,\theta , \varphi _1, \dots , \varphi _{2n-3})\) on \({\mathbb {S}}^{n}\) defined by the chart

This amounts to choosing the modulus r and the argument \(\theta\) of \(x_1+ix_2\), and then describing the remaining parameters using the standard spherical coordinates on \({\mathbb {S}}^{n-1}\), seen as a sphere of real dimension \(2n-3\), including a radius factor \(\sqrt{1-r^2}\). The advantage of these coordinates is that \(C_{n}^{\varepsilon }(1, 0,\dots ,0)\) simply corresponds to the set of points for which \(r \geqslant \varepsilon\).

The metric in these coordinates happens to be diagonal, given by:

- \(g_{rr} = {\langle {e_r \vert e_r}\rangle } = 1+\frac{r^2}{1-r^2}\)

- \(g_{\theta \theta } = {\langle {e_\theta \vert e_\theta }\rangle } = r^2\)

- \(g_{\varphi _i \varphi _i} = (1-r^2) [g_{\varphi _i \varphi _i}]\) with [g] the metric corresponding to the spherical coordinates on \({\mathbb {S}}^{n-1}\).

It is now easy to compute the desired quantity:
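With the diagonal metric above, the volume element is \(\sqrt{g} = \frac{1}{\sqrt{1-r^2}} \cdot r \cdot (1-r^2)^{\frac{2n-3}{2}} \sqrt{[g]} = r (1-r^2)^{n-2} \sqrt{[g]}\). A sketch of the resulting computation, for \(n \geqslant 2\) and with \(A_{n-1}\) the area of \({\mathbb {S}}^{n-1}\), is:

$$\begin{aligned} A_n^{\varepsilon } = \int _{\varepsilon }^1 \textrm{d}r \int _0^{2\pi } \textrm{d}\theta \int \sqrt{g} \; \textrm{d}\varphi _1 \dots \textrm{d}\varphi _{2n-3} = 2 \pi A_{n-1} \int _{\varepsilon }^1 r (1-r^2)^{n-2} \, \textrm{d}r = \frac{\pi A_{n-1}}{n-1} (1-\varepsilon ^2)^{n-1} \end{aligned}$$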

and, finally,
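Since the total area \(A_n\) corresponds to \(\varepsilon = 0\), and the dependence on \(\varepsilon\) comes only from \(\int _{\varepsilon }^1 r (1-r^2)^{n-2} \, \textrm{d}r = \frac{(1-\varepsilon ^2)^{n-1}}{2(n-1)}\), the prefactors cancel in the ratio (for \(n \geqslant 2\)):

$$\begin{aligned} \frac{A_n^{\varepsilon }}{A_n} = (1-\varepsilon ^2)^{n-1}. \end{aligned}$$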

\(\square\)

We are now ready to prove the theorem.

Proof of Theorem 3.3

For this proof, we find some inspiration in [10], but eventually obtain sharper bounds with simpler arguments. Another major reference concerning spherical caps is [11]. We say that a set of vectors on a sphere are \(\varepsilon\)-separated if all scalar products between any pairs among them are not greater than \(\varepsilon\) in modulus. Denote \(\textrm{d}{\overline{\sigma }}^n\) the normalized Lebesgue’s measure on \({\mathbb {S}}^{n}\), that is \(\textrm{d}{\overline{\sigma }}^n = \frac{\textrm{d}\sigma ^n}{A_n}\), and consider the following events:

- \(A: \forall k \in \llbracket 1, d-1 \rrbracket , |{\langle { \mathcal {E}_d \vert \mathcal {E}_k }\rangle } |\leqslant \varepsilon\)

- \(B: ({|{\mathcal {E}_k}\rangle })_{1\leqslant k \leqslant d-1}\) are \(\varepsilon\)-separated

so as to write \({\mathbb {P}}(\eta _{n,d} \leqslant \varepsilon ) = {\mathbb {P}}(A \mid B) {\mathbb {P}}(B) = \frac{ {\mathbb {P}}(A \cap B)}{ {\mathbb {P}}(B)} {\mathbb {P}}(\eta _{n,d-1} \leqslant \varepsilon )\), with:
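Conditionally on the first \(d-1\) vectors, \({|{\mathcal {E}_d}\rangle }\) is uniform on the sphere, so the event A fails exactly when \({|{\mathcal {E}_d}\rangle }\) falls in one of the caps \(C_{n}^{\varepsilon }({|{\mathcal {E}_k}\rangle })\), up to a boundary of measure zero. This suggests the following expression, which is consistent with the bounds derived next:

$$\begin{aligned} {\mathbb {P}}(A \mid B) = 1 - {\mathbb {E}}\left( \frac{ \left| \bigcup _{k=1}^{d-1} C_{n}^{\varepsilon }({|{\mathcal {E}_k}\rangle }) \right| }{A_n} \Bigg \vert B \right) . \end{aligned}$$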

We need to find bounds on the latter quantity. Obviously, \({\mathbb {E}}\left( \frac{ \left| \bigcup _{k=1}^{d-1} C_{n}^{\varepsilon }({|{\mathcal {E}_k}\rangle }) \right| }{A_n} \Bigg \vert B \right) \leqslant (d-1) \frac{A_n^{\varepsilon }}{A_n}\), corresponding to the case when all the caps are disjoint. For the lower bound, define the sequence \(u_d = {\mathbb {E}}\left( \frac{ \left| \bigcup _{k=1}^{d} C_{n}^{\varepsilon }({|{\mathcal {E}_k}\rangle }) \right| }{A_n} \right)\), which clearly satisfies \(u_d \leqslant {\mathbb {E}}\left( \frac{ \left| \bigcup _{k=1}^{d} C_{n}^{\varepsilon }({|{\mathcal {E}_k}\rangle }) \right| }{A_n} \Bigg \vert B \right)\), because conditioning on the vectors being separated can only decrease the overlap between the different caps. First observe that \(u_1 = \frac{A_n^{\varepsilon }}{A_n} \equiv \alpha\), and compute:
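Writing \(x_1, \dots , x_d\) for the random vectors and y for the integration variable on the sphere (our notation), and using the symmetry \(y \in C_{n}^{\varepsilon }(x_d) \Leftrightarrow x_d \in C_{n}^{\varepsilon }(y)\), the computation can be sketched as follows, with \(\textrm{d}{\overline{\sigma }}^n\) the normalized measure:

$$\begin{aligned} u_d - u_{d-1}&= \int \textrm{d}{\overline{\sigma }}^n(x_1) \dots \textrm{d}{\overline{\sigma }}^n(x_d) \int \textrm{d}{\overline{\sigma }}^n(y) \; \mathbbm {1}_{y \in C_{n}^{\varepsilon }(x_d)} \, \mathbbm {1}_{y \notin \bigcup _{k=1}^{d-1} C_{n}^{\varepsilon }(x_k)} \\&= \int \textrm{d}{\overline{\sigma }}^n(x_1) \dots \textrm{d}{\overline{\sigma }}^n(x_{d-1}) \int \textrm{d}{\overline{\sigma }}^n(y) \; \mathbbm {1}_{y \notin \bigcup _{k=1}^{d-1} C_{n}^{\varepsilon }(x_k)} \underbrace{\int \textrm{d}{\overline{\sigma }}^n(x_d) \; \mathbbm {1}_{x_d \in C_{n}^{\varepsilon }(y)}}_{= \alpha } \\&= \alpha (1-u_{d-1}), \end{aligned}$$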

where the main trick was to invert the integrals on \(x_d\) and on y. This result is actually quite intuitive: it states that when adding a new cap, only a fraction \(1-u_{d-1}\) of it on average will be outside the previous caps and contribute to the new total area covered by the caps. Hence \(u_d = 1- (1-\alpha )^d\), and the recurrence relation becomes:
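Recalling that \({\mathbb {P}}(A \mid B)\) is one minus the conditional expectation bounded above, and combining \(u_{d-1} = 1-(1-\alpha )^{d-1}\) with the upper bound \((d-1)\alpha\), the recurrence can be written as (a reconstruction consistent with the bounds \(f_{n,d}\) and \(g_{n,d}\) introduced below):

$$\begin{aligned} \big ( 1-(d-1)\alpha \big ) \, {\mathbb {P}}(\eta _{n,d-1} \leqslant \varepsilon ) \; \leqslant \; {\mathbb {P}}(\eta _{n,d} \leqslant \varepsilon ) \; \leqslant \; (1-\alpha )^{d-1} \, {\mathbb {P}}(\eta _{n,d-1} \leqslant \varepsilon ). \end{aligned}$$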

Applying the lemma, we get by induction:
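With \(\alpha = (1-\varepsilon ^2)^{n-1}\) and \({\mathbb {P}}(\eta _{n,1} \leqslant \varepsilon ) = 1\), iterating the recurrence from 2 to d yields the two bounds that are compared in the rest of the proof:

$$\begin{aligned} \prod _{k=1}^{d-1} \big ( 1-k (1-\varepsilon ^2)^{n-1} \big ) \; \leqslant \; {\mathbb {P}}(\eta _{n,d} \leqslant \varepsilon ) \; \leqslant \; \big ( 1- (1-\varepsilon ^2)^{n-1} \big )^{\frac{d(d-1)}{2}}. \end{aligned}$$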

Note that the left inequality is valid only as long as \(d \leqslant \big ( \frac{1}{1-\varepsilon ^2} \big )^{n-1}\), but when d is larger than this critical value, the right-hand side becomes very small (of order \(e^{-\frac{1}{2(1-\varepsilon ^2)^{n-1}}}\)), so we may take 0 as a good lower bound in this case. The two bounds are in fact extremely close to each other, and get closer as n or d grows larger. To quantify this precisely, let’s denote \(f_{n,d}(\varepsilon ) = (1- (1-\varepsilon ^2)^{n-1})^{\frac{d(d-1)}{2}}\), \(g_{n,d}(\varepsilon ) = \prod _{k=1}^{d-1} (1-k (1-\varepsilon ^2)^{n-1} )\), and let’s show that \(|f_{n,d}(\varepsilon ) - g_{n,d}(\varepsilon ) |\underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 0\). Two cases have to be considered.

- First case: if \(d \geqslant d_c \equiv \left( \frac{1}{1-\varepsilon ^2}\right) ^{\frac{3}{5} (n-1)}\), then \(f_{n,d}(\varepsilon )\) is small so we can write:

$$\begin{aligned} |f_{n,d}(\varepsilon ) - g_{n,d}(\varepsilon ) |&\leqslant f_{n,d}(\varepsilon ) = e^{\frac{d(d-1)}{2} \ln (1- (1-\varepsilon ^2)^{n-1})} \\&\leqslant e^{-\frac{(d-1)^2}{2} (1-\varepsilon ^2)^{n-1}} \quad \quad \quad \text {(since } \ln (1-x) \leqslant -x) \\&\leqslant e^{ -\frac{1+ o(1)}{2 (1-\varepsilon ^2)^{\frac{n-1}{5}}}} \quad \quad \quad \text {(using } d \geqslant d_c) \\&\leqslant e^{-\frac{d^{1/3}}{2} (1+o(1))}, \end{aligned}$$

where \(1+o(1) = \left( \frac{d-1}{d} \right) ^2 \underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 1\).

- Second case: if \(d \leqslant d_c\), first note that \(\forall k \in \llbracket 1,d \rrbracket , \forall x \in [ 0,\frac{1}{d^{5/3}}[\),

$$\begin{aligned} 1 \leqslant \frac{(1-x)^k}{1-kx} \leqslant \frac{1- kx + \frac{k(k-1)}{2}x^2}{1-kx} \leqslant 1+ \frac{k(k-1)}{2} \frac{x^2}{(1-x^{2/5})}. \end{aligned}$$

Therefore,

$$\begin{aligned} \left|\ln (f_{n,d}(\varepsilon )) - \ln (g_{n,d}(\varepsilon )) \right|=&\left|\sum _{k=1}^{d-1} \Big ( k \ln (1- (1-\varepsilon ^2)^{n-1}) - \ln (1-k (1-\varepsilon ^2)^{n-1}) \Big ) \right|\\ \leqslant&\sum _{k=1}^{d-1} \left|\ln \left( 1+ \frac{k(k-1)}{2} \frac{(1-\varepsilon ^2)^{2(n-1)}}{1- (1-\varepsilon ^2)^{\frac{2}{5}(n-1)}} \right) \right|\quad \\&\quad \text {(applying the inequality for } x = (1-\varepsilon ^2)^{n-1}) \\ \leqslant&\frac{(1-\varepsilon ^2)^{2(n-1)}}{1- (1-\varepsilon ^2)^{\frac{2}{5}(n-1)}} \underbrace{\sum _{k=1}^{d-1} \frac{k(k-1)}{2}}_{=\frac{d^3}{6} - \frac{d^2}{2} + \frac{d}{3} \leqslant \frac{d^3}{6} } \\ \leqslant&\frac{ (1-\varepsilon ^2)^{\frac{n-1}{5}} }{ 6 (1-(1-\varepsilon ^2)^{\frac{2}{5}(n-1)}) } \quad \quad \quad \text {(using } d \leqslant d_c) \\ \leqslant&\frac{d^{-\frac{1}{3}} }{ 6 (1-d^{-\frac{2}{3}}) }. \end{aligned}$$

Hence:

$$\begin{aligned}&\frac{g_{n,d}(\varepsilon )}{f_{n,d}(\varepsilon )} \in \Big [ \exp \left( -\frac{ (1-\varepsilon ^2)^{\frac{n-1}{5}} }{ 6 (1-(1-\varepsilon ^2)^{\frac{2}{5}(n-1)}) } \right) , 1 \Big ] \\ \Rightarrow \quad&|f_{n,d}(\varepsilon ) - g_{n,d}(\varepsilon ) |\leqslant \left( 1 - \exp \left( - \frac{ (1-\varepsilon ^2)^{\frac{n-1}{5}} }{ 6 (1-(1-\varepsilon ^2)^{\frac{2}{5}(n-1)}) } \right) \right) f_{n,d}(\varepsilon ) \\&\quad \leqslant \frac{ (1-\varepsilon ^2)^{\frac{n-1}{5}} }{ 6 (1-(1-\varepsilon ^2)^{\frac{2}{5}(n-1)}) } \quad \quad \quad \text {(since } 1-e^{-x} \leqslant x \text { and } f_{n,d}(\varepsilon ) \leqslant 1) \\&\quad \leqslant \frac{d^{-\frac{1}{3}} }{ 6 (1-d^{-\frac{2}{3}}) }. \end{aligned}$$
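The closeness of the two bounds can also be observed numerically. Below is a minimal sketch, using only the Python standard library, that evaluates \(f_{n,d}(\varepsilon )\) and \(g_{n,d}(\varepsilon )\) from their definitions; the function names are ours, and the lower bound is replaced by 0 as soon as one factor of the product is non-positive, following the convention mentioned above.

```python
import math


def cap_fraction(n, eps):
    """alpha = (1 - eps^2)^(n - 1), the normalized area of a spherical cap."""
    return (1.0 - eps ** 2) ** (n - 1)


def upper_bound_f(n, d, eps):
    """f_{n,d}(eps) = (1 - alpha)^(d(d-1)/2), computed through logarithms."""
    alpha = cap_fraction(n, eps)
    if alpha >= 1.0:
        # Degenerate case eps = 0: the bound is 0 as soon as there is a pair.
        return 1.0 if d < 2 else 0.0
    return math.exp(0.5 * d * (d - 1) * math.log1p(-alpha))


def lower_bound_g(n, d, eps):
    """g_{n,d}(eps) = prod_{k=1}^{d-1} (1 - k alpha), set to 0 once a factor is <= 0."""
    alpha = cap_fraction(n, eps)
    log_g = 0.0
    for k in range(1, d):
        factor = 1.0 - k * alpha
        if factor <= 0.0:
            return 0.0
        log_g += math.log(factor)
    return math.exp(log_g)


if __name__ == "__main__":
    n, eps = 40, 0.5
    for d in (10, 100, 1000):
        f = upper_bound_f(n, d, eps)
        g = lower_bound_g(n, d, eps)
        print(d, g, f, f - g)
```

For the illustrative values \(n = 40\), \(\varepsilon = 0.5\) and \(d = 100\) (so that \(d \leqslant d_c\)), the gap \(f-g\) stays well below the bound \(\frac{d^{-1/3}}{6(1-d^{-2/3})}\) obtained in the second case.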

We have thus shown that the difference between the two bounds \(f_{n,d}(\varepsilon )\) and \(g_{n,d}(\varepsilon )\) can be controlled by a quantity that can be expressed solely in terms of either n or d, and that vanishes in any case when either n or d tends to infinity. If we call \(\xi\) this vanishing term, it is straightforward to see that:

and after some work, this implies:

hence \(\displaystyle d_{max}^{\varepsilon , s}(n) \underset{n \rightarrow \infty }{\sim }\ \sqrt{-2 \ln (1-s)} \left( \frac{1}{1-\varepsilon ^2} \right) ^{\frac{n-1}{2}}\), which is the first part of the theorem.

The intuition concerning the second statement comes from the following observation. We know that \({\mathbb {P}}(\eta _{n,d} \leqslant \varepsilon ) \simeq f_{n,d}(\varepsilon )\), and this function happens to be almost constant equal to 0 in the vicinity of \(\varepsilon =0\), almost 1 in the vicinity of \(\varepsilon =1\), and to have a very sharp step between the two; this step sharpens as n or d grows larger. This explains why the mass of probability is highly peaked around a critical value \(\varepsilon _c\), so that \(\displaystyle \eta _{n,d} \simeq {\mathbb {E}}(\eta _{n,d})\) converges to a deterministic variable when n or d \(\rightarrow \infty\). This is certainly due to the averaging effect of considering the maximum of a set of \(\frac{d(d-1)}{2}\) scalar products. The critical value \(\varepsilon _c\) satisfies:

Now, the precise proof of the convergence of \(\eta _{n,d}\) in probability goes as follows. Let \(\delta >0\). We have to show that \({\mathbb {P}}\left( \left|\eta _{n,d} - \sqrt{1-d^{-\frac{2}{n}}} \right|\leqslant \delta \right) \underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 1\). It is equivalent but easier to show that \({\mathbb {P}}\left( \sqrt{1-d^{-\frac{2}{n}} - \delta } \leqslant \eta _{n,d} \leqslant \sqrt{1-d^{-\frac{2}{n}} +\delta } \right) \underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 1\). Taking \(f_{n,d}\) as an approximation for the distribution function of \(\eta _{n,d}\), we can write:

where o(1) stands for a quantity that goes to zero when either n or d goes to infinity (bounded by \(\xi\)), and where the max appears because if \(d^{-2/n} \leqslant \delta\), \({\mathbb {P}}\left( \eta _{n,d} \leqslant \sqrt{1-d^{-\frac{2}{n}} +\delta } \right)\) is simply equal to 1. Clearly, \({\mathbb {P}}\left( \eta _{n,d} \leqslant \sqrt{1-d^{-\frac{2}{n}} +\delta } \right) \underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 1\), and similarly, one shows that \({\mathbb {P}}\left( \eta _{n,d} \leqslant \sqrt{1-d^{-\frac{2}{n}} - \delta } \right) \underset{n \text { or } d \rightarrow \infty }{\longrightarrow } 0\), which completes the proof. \(\square\)

During the reviewing process, we discovered that the formula \(\sqrt{1-d^{-\frac{2}{n}}}\) had already been obtained in [12]. However, this work only deals with the maximum of the d scalar products between, say, the north pole and a set of d independent random vectors. This situation is easier to treat, in particular because the d scalar products are then independent random variables, which is certainly not the case for our \(\frac{d(d-1)}{2}\) scalar products.

3.4 Comparison with Simulation and Consequences

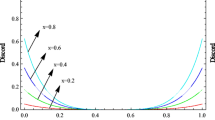

The above expressions actually give incredibly good estimations for \(d_{max}^{\varepsilon , s}(n)\) and \(\eta _{n,d}\), as shown in Figs. 1 and 2.
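A comparison of this kind can be reproduced with a few lines of code. The following is a minimal Monte Carlo sketch (standard library only) that samples d uniform vectors on the complex n-sphere, computes \(\eta _{n,d}\) as the maximal overlap over all pairs, and compares its empirical mean with \(\sqrt{1-d^{-2/n}}\). The function names, the values of n and d, the number of trials and the seed are illustrative assumptions; this is not the code used for the figures.

```python
import math
import random


def random_unit_vector(n, rng):
    """Uniform point on the unit sphere of C^n, built from 2n real Gaussians."""
    xs = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    norm = math.sqrt(sum(abs(x) ** 2 for x in xs))
    return [x / norm for x in xs]


def eta(n, d, rng):
    """One sample of eta_{n,d}: the largest |<E_i|E_j>| over all pairs i < j."""
    vectors = [random_unit_vector(n, rng) for _ in range(d)]
    best = 0.0
    for i in range(d):
        for j in range(i + 1, d):
            overlap = abs(sum(a.conjugate() * b
                              for a, b in zip(vectors[i], vectors[j])))
            best = max(best, overlap)
    return best


def predicted_eta(n, d):
    """Asymptotic value sqrt(1 - d^(-2/n)) from Theorem 3.3."""
    return math.sqrt(1.0 - d ** (-2.0 / n))


def mean_eta(n, d, trials=10, seed=0):
    """Empirical mean of eta_{n,d} over independent trials."""
    rng = random.Random(seed)
    return sum(eta(n, d, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    for n, d in ((10, 20), (20, 50)):
        print(n, d, mean_eta(n, d), predicted_eta(n, d))
```

Even for such moderate values of n and d, the empirical mean is expected to lie close to the asymptotic prediction.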

This theorem has a strong physical consequence. Indeed, \(\mathcal {E}\) induces proper decoherence on \(\mathcal {S}\) as long as \(\eta _{n,d} \ll 1\), that is when \(d^{-2/n}\) is very close to 1, i.e. when \(d \ll e^{n/2}\). Going back to physically meaningful quantities, we write as previously \(n = p^{N_\mathcal {E}}\) and \(d = p^{N_\mathcal {S}}\) where \(N_\mathcal {E}\) and \(N_\mathcal {S}\) stand for the number of particles composing \(\mathcal {E}\) and \(\mathcal {S}\). The condition becomes: \(2 \ln (p)N_\mathcal {S} \ll p^{N_\mathcal {E}}\) or simply:
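Taking logarithms and dropping the constants (a rough rewriting, assuming p is of order one), the condition can be summarized as:

$$\begin{aligned} \ln (N_\mathcal {S}) \ll N_\mathcal {E}. \end{aligned}$$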

A more precise condition can be obtained using \(d_{max}\), because \(\mathcal {E}\) induces proper decoherence on \(\mathcal {S}\) as long as \(d \leqslant d_{max}^{\varepsilon , s}(n)\) for an arbitrary choice of \(\varepsilon\) close to 0 and s close to 1. This rewrites: \(2\ln (p)N_\mathcal {S} \leqslant \ln (\sqrt{-2 \ln (1-s)}) + \ln \left( \frac{1}{1-\varepsilon ^2} \right) p^{N_\mathcal {E}} \simeq \varepsilon ^2 p^{N_\mathcal {E}}\) or simply: \(\ln (N_\mathcal {S}) \leqslant 2 \ln (\varepsilon ) + \ln (p) N_\mathcal {E}\). Thus, for instance, a gas composed of thousands of particles will lose most of its coherence if it interacts with only a few external particles. It is rather surprising that so many points can be placed randomly on an n-sphere before the maximum of the scalar products becomes non-negligible. It is this property that makes decoherence an extremely efficient high-dimensional geometrical phenomenon.
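To get a feeling for the orders of magnitude, the asymptotic value \(\sqrt{1-d^{-2/n}}\) can be evaluated directly from the particle numbers. The sketch below (standard library only) assumes \(n = p^{N_\mathcal {E}}\) and \(d = p^{N_\mathcal {S}}\) as above and works with logarithms to avoid overflow; the function name and the numerical values are illustrative choices.

```python
import math


def predicted_eta(p, n_system, n_environment):
    """sqrt(1 - d^(-2/n)) with d = p^N_S and n = p^N_E, evaluated via logarithms."""
    log_d = n_system * math.log(p)
    # 2 ln(d) / n, written so that a huge n underflows gracefully to 0.
    ratio = 2.0 * log_d * math.exp(-n_environment * math.log(p))
    # 1 - exp(-ratio) is computed with expm1 to stay accurate when ratio is tiny.
    return math.sqrt(-math.expm1(-ratio))


if __name__ == "__main__":
    # A system of 1000 two-level particles facing environments of growing size.
    for n_env in (5, 10, 15, 20, 25):
        print(n_env, predicted_eta(2, 1000, n_env))
```

For a system of 1000 two-level particles, the predicted \(\eta\) is essentially 1 with 5 environment particles and drops to roughly 0.04 with 20 of them, which illustrates how few external particles are needed in this model.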

3.5 Discussing the Hypotheses

On the one hand, this result could be seen as a worst case scenario for decoherence, since realistic Hamiltonians are far from random and actually discriminate even better the different possible histories. This is especially true if \(\mathcal {E}\) is a measurement apparatus for example, whose Hamiltonian is by construction such that the \(({|{\mathcal {E}_i(t)}\rangle })_{1\leqslant i \leqslant d}\) evolve quickly and deterministically towards orthogonal points of the sphere.

On the other hand, pursuing such a high level of generality led us to abstract and unphysical assumptions. First, realistic dynamics are not isotropic on the n-sphere (some transitions are more probable than others). Second, the assumption that each \({|{\mathcal {E}_i(t)}\rangle }\) can explore indistinctly all the states of \(\mathcal {H}_{\mathcal {E}}\) is highly questionable. As explained in [13]:

‘...the set of quantum states that can be reached from a product state with a polynomial-time evolution of an arbitrary time-dependent quantum Hamiltonian is an exponentially small fraction of the Hilbert space. This means that the vast majority of quantum states in a many-body system are unphysical, as they cannot be reached in any reasonable time. As a consequence, all physical states live on a tiny submanifold.’

It would then be more accurate in our model to replace \({\mathbb {S}}^{n}\) by this submanifold. But what does it look like geometrically, and what is its dimension? If it were a subsphere of \({\mathbb {S}}^{n}\) of exponentially smaller dimension, then n should be replaced everywhere by something like \(\ln (n)\) in what precedes, so the condition would rather be \(N_\mathcal {S} \ll N_\mathcal {E}\), which is a completely different conclusion. Some clues to better grasp the submanifold are found in [14, §3.4]:

‘...one can prove that low-energy eigenstates of gapped Hamiltonians with local interactions obey the so-called area-law for the entanglement entropy. This means that the entanglement entropy of a region of space tends to scale, for large enough regions, as the size of the boundary of the region and not as the volume. (...) In other words, low-energy states of realistic Hamiltonians are not just ‘any’ state in the Hilbert space: they are heavily constrained by locality so that they must obey the entanglement area-law.’

More work is needed in order to draw precise conclusions that take these physical remarks into account.

4 Second Model: Interacting Particles

4.1 The Environment Feels the System

Let us now specify the nature of the environment more precisely. Suppose that the energy of interaction dominates the evolution of the whole system \(\mathcal {S} + \mathcal {E}\) and can be expressed in terms of the positions \(x_1, \dots , x_N\) of the N particles composing the environment, together with the state of \(\mathcal {S}\) (this is the typical regime for macroscopic systems which decohere in the position basis [15, §III.E.2.]). If the latter is \({|{i}\rangle }\), denote this energy by \(H(i, x_1 \dots x_N)\). The initial state \({|{\Psi }\rangle } = \left( \sum _{i=1}^d c_i {|{i}\rangle } \right) \otimes \int f( x_1 \dots x_N) {|{ x_1 \dots x_N}\rangle } \textrm{d}x_1 \dots \textrm{d}x_N\) evolves into:

\[ {|{\Psi (t)}\rangle } = \sum _{i=1}^d c_i {|{i}\rangle } \otimes \underbrace{\int f( x_1 \dots x_N) \, e^{-\frac{i}{\hbar } H(i, x_1 \dots x_N) t} {|{ x_1 \dots x_N}\rangle } \textrm{d}x_1 \dots \textrm{d}x_N}_{= {|{\mathcal {E}_i(t)}\rangle }} \]

Therefore:

\[ {\langle { \mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } = \int |f( x_1 \dots x_N) |^2 \, e^{-\frac{i}{\hbar } \Delta (i,j, x_1 \dots x_N) t} \, \textrm{d}x_1 \dots \textrm{d}x_N \]

where \(\Delta (i,j, x_1 \dots x_N) = H(j, x_1 \dots x_N) - H(i, x_1 \dots x_N)\) is a spectral gap between eigenvalues of the Hamiltonian, measuring how much the environment feels the transition of \(\mathcal {S}\) from \({|{i}\rangle }\) to \({|{j}\rangle }\) in a given configuration of the environment. Over a time interval \([-T,T]\), the mean value \(\frac{1}{2T} \int _{-T}^T {\langle { \mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } \textrm{d}t\) equals \(\int |f( x_1 \dots x_N) |^2 \textrm{sinc}(\frac{\Delta (i,j, x_1 \dots x_N)}{\hbar } T) \textrm{d}x_1 \dots \textrm{d}x_N\), which is close to zero for all \(i \ne j\) as soon as \(T > \frac{\pi \hbar }{\displaystyle \min _{i \ne j, x_1 \dots x_N} |\Delta (i,j, x_1 \dots x_N) |}\). This threshold is likely to be small if \(\mathcal {E}\) is a macroscopic system, for the energies involved will be much greater than \(\hbar\). Similarly, the empirical variance is:

\[ \frac{1}{2T} \int _{-T}^T |{\langle { \mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } |^2 \textrm{d}t = \int |f( x_1 \dots x_N) |^4 \, \textrm{d}x_1 \dots \textrm{d}x_N \]

plus terms that go to zero after a short time. Note that the variables \(x_1 \dots x_N\) could be discretized to take p possible values, in which case \(n = \dim (\mathcal {H}_\mathcal {E}) = p^N\), and the integral becomes a finite sum. For a delocalized initial state with constant f, this sum is equal to \(p^{-N}\), and we recover the previous estimate (2) if \(d=2\): \(\eta \sim p^{-N/2}\). This model teaches us that the more the environment feels the difference between the possible histories, the more they decohere.
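
The discretized estimate can be checked with a small simulation. This is a rough sketch under stated assumptions, not the paper's computation: the gaps \(\Delta /\hbar\) are drawn uniformly in [1, 2] (an arbitrary, hypothetical choice), f is constant, the time average is approximated by sampling random times in \([0, T]\), and the function name `mean_squared_overlap` is illustrative.

```python
import cmath
import random


def mean_squared_overlap(p=2, n_particles=6, t_max=1.0e4, samples=2000, seed=0):
    """Time-averaged |<E_i|E_j>(t)|^2 for constant f on p**n_particles configurations."""
    rng = random.Random(seed)
    n = p ** n_particles
    # Hypothetical gaps Delta(i, j, x) / hbar, one per configuration x of the environment.
    gaps = [rng.uniform(1.0, 2.0) for _ in range(n)]
    total = 0.0
    for _ in range(samples):
        t = rng.uniform(0.0, t_max)
        # <E_i|E_j>(t) = sum_x |f(x)|^2 exp(-i Delta(x) t / hbar), with |f(x)|^2 = 1/n.
        overlap = sum(cmath.exp(-1j * g * t) for g in gaps) / n
        total += abs(overlap) ** 2
    return total / samples, n


variance, n = mean_squared_overlap()
# Compare the sampled variance with p^{-N}, and its square root with p^{-N/2}.
print(variance, 1.0 / n, variance ** 0.5, n ** -0.5)
```

For \(p = 2\) and \(N = 6\) the sampled variance should come out close to \(p^{-N} = 1/64\), so that its square root is of the order of \(p^{-N/2}\), as in the estimate above.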

4.2 Entanglement Entropy as a Measure of Decoherence

What precedes suggests the following intuition: the smaller \(\eta\) is, the more information the environment has stored about the system, because the \(({|{\mathcal {E}_i(t)}\rangle })_{1\leqslant i \leqslant d}\) are then more distinguishable (i.e. closer to orthogonal); on the other hand, the smaller \(\eta\) is, the fewer quantum interferences occur. This motivates the search for a general relationship between entanglement entropy (defined as the von Neumann entropy of the reduced density matrix of either \(\mathcal {S}\) or \(\mathcal {E}\), i.e. how much \(\mathcal {E}\) knows about \(\mathcal {S}\) or vice versa) and the level of classicality of a system. Such results have already been derived for specific environments [4, (3.76)] [16, 17] but not, to our knowledge, in the general case. The following formula is proved in Annex A when S stands for the linear entropy (or purity defect) \(1-{\text {tr}}(\rho ^2)\), and some justifications are given when S denotes the entanglement entropy:

5 Alternative Definitions for \(\eta\)

Lemma 3.4 allows for another way to quantify decoherence: for each possible history i, one can ask what fraction \(F^\varepsilon _{n,d}\) of the other possible histories it interferes with significantly, that is, how many indices j satisfy \(|{\langle {\mathcal {E}_i \vert \mathcal {E}_j}\rangle } |\geqslant \varepsilon\). As remarked in [18], this quantity is simply given by:

\[ F^\varepsilon _{n,d} = \frac{1}{d-1} \sum _{j \ne i} h_{ij} \]

By the law of large numbers, we immediately deduce that \(F^\varepsilon _{n,d} \underset{d \rightarrow \infty }{\overset{\text {a.s.}}{ \longrightarrow }} \mathbb {P}(h_{ij} = 1) = (1- \varepsilon ^2)^{n-1}\), and we recover once again in this expression that the typical level of decoherence is \(\varepsilon \sim \frac{1}{\sqrt{n}}\). More interesting, perhaps, could be to quantify decoherence using the ‘expectation’ of the scalar products \(|{\langle {\mathcal {E}_i \vert \mathcal {E}_j}\rangle } |^2\) for \(i\ne j\), weighted by their quantum probabilities \(\frac{|c_i |^2|c_j |^2}{\sum _{i\ne j} |c_i |^2|c_j |^2} = \frac{|c_i |^2|c_j |^2}{1 - \sum _i |c_i |^4}\). One could then define:

based on a computation made in Annex A.2. The great advantage of this definition is that it can naturally be extended to the infinite-dimensional case, unlike \(\displaystyle \max _{i \ne j} \; |{\langle {\mathcal {E}_i(t) \vert \mathcal {E}_j(t)}\rangle } |\) (if the scalar products vary continuously, their supremum is necessarily 1). Our proposal for quantifying decoherence in infinite dimension is therefore:

6 Conclusion

We introduced, in a mathematically rigorous way, general quantities that can be relevant for any study on decoherence, in particular the parameter \(\eta (t)\) that quantifies the level of decoherence at a given instant. Two simple models were then presented, designed to give a more intuitive grasp of the general process of decoherence. Most importantly, our study revealed the mathematical reason why the latter is so fast and universal, namely that surprisingly many points can be placed randomly on an n-sphere before the maximum of their scalar products becomes non-negligible. We also learned that decoherence is neither perfect nor everlasting, since \(\eta\) is not expected to be exactly 0 and will eventually become large again (according to the Borel-Cantelli lemma for the first model, and by finding particular times such that all the exponentials are almost real in the second), much like the ink drop in a glass of water will re-form due to Poincaré’s recurrence theorem, even though the recurrence time can easily exceed the lifetime of the universe for realistic systems [9]. Finally, decoherence can be estimated by entanglement entropy because \(\eta\) is linked to what the environment knows about the system.

Further work could include the search for a description of the submanifold of reachable states mentioned in §3.5; a generalization to the cases where the initial environment is not in a pure state or the interaction admits no conserved basis; and the study of the infinite-dimensional case. Another interesting question would be to investigate how \(\eta\) depends on the basis in which decoherence is considered: quantum interferences are indeed suppressed in the conserved basis, but how strong are they in the other bases?

Notes

It is actually very important that the decoherence process (in particular a measurement) is not instantaneous. Otherwise, it would be impossible to explain why an unstable nucleus continuously measured by a Geiger counter is not frozen due to the quantum Zeno effect. See the wonderful model of [6, §8.3 and §8.4] that quantifies the effect of continuous measurement on the decay rate.

We use the quotation marks because, on \({\mathbb {S}}^{n}\) equipped with its complex scalar product, this set doesn’t look like a cap as it does in the real case. QM is nothing but a geometrical way of calculating probabilities (in which the total probability formula is not true, so that it looks like all possible histories interfere), but the geometry in use is quite different from the intuitive one given by the familiar real scalar product. It is noteworthy that the universe, through its quantum statistics, obeys very precisely the geometry of the complex scalar product, and more generally the geometry induced by its canonical extension on tensor products of Hilbert spaces.

References

Zeh, H.D.: On the interpretation of measurement in quantum theory. Found. Phys. 1, 69–76 (1970). https://doi.org/10.1007/BF00708656

Zurek, W.H.: Pointer basis of quantum apparatus: Into what mixture does the wave packet collapse? Phys. Rev. D 24, 1516 (1981). https://doi.org/10.1103/PhysRevD.24.1516

Zurek, W.H.: Decoherence, Einselection, and the quantum origins of the classical. Rev. Modern Phys. 75, 715 (2003). https://doi.org/10.1103/RevModPhys.75.715

Joos, E.: Decoherence through interaction with the environment. In: Decoherence and the appearance of a classical world in quantum theory, pp. 35–136. Springer (1996). https://doi.org/10.1007/978-3-662-03263-3

Di Biagio, A., Rovelli, C.: Stable facts, relative facts. Found. Phys 51, 1–13 (2021). https://doi.org/10.1007/s10701-021-00429-w

Fonda, L., Ghirardi, G., Rimini, A.: Decay theory of unstable quantum systems. Rep. Progr. Phys. 41, 587 (1978). https://doi.org/10.1088/0034-4885/41/4/003

Saloff-Coste, L.: Precise estimates on the rate at which certain diffusions tend to equilibrium. Math. Zeitschrift 217, 641–677 (1994). https://doi.org/10.1007/BF02571965

Spengler, C., Huber, M., Hiesmayr, B.C.: Composite parameterization and Haar measure for all unitary and special unitary groups. J. Math. Phys. 53, 013501 (2012). https://doi.org/10.1063/1.3672064

Zurek, W.H.: Environment-induced superselection rules. Phys. Rev. D 26, 1862 (1982). https://doi.org/10.1103/PhysRevD.26.1862

Moran, P.: The closest pair of n random points on the surface of a sphere. Biometrika 66, 158–162 (1979). https://doi.org/10.1093/BIOMET/66.1.158

Rankin, R.A.: The closest packing of spherical caps in n dimensions. Glasgow Math. J. 2, 139–144 (1955)

Zhang, K.: Spherical cap packing asymptotics and rank-extreme detection. IEEE Trans. Inf. Theor. 63, 4572–4584 (2017)

Poulin, D., Qarry, A., Somma, R., Verstraete, F.: Quantum simulation of time-dependent Hamiltonians and the convenient illusion of Hilbert space. Phys. Rev. Lett. 106, 170501 (2011). https://doi.org/10.1103/PhysRevLett.106.170501

Orús, R.: A practical introduction to tensor networks: Matrix product states and projected entangled pair states. Ann. Phys. 349, 117–158 (2014). https://doi.org/10.1016/j.aop.2014.06.013

Schlosshauer, M.: Decoherence, the measurement problem, and interpretations of quantum mechanics. Rev. Modern Phys. 76, 1267 (2005). https://doi.org/10.1103/RevModPhys.76.1267

Le Hur, K.: Entanglement entropy, decoherence, and quantum phase transitions of a dissipative two-level system. Ann. Phys. 323, 2208–2240 (2008). https://doi.org/10.1016/j.aop.2007.12.003

Merkli, M., Berman, G., Sayre, R., Wang, X., Nesterov, A.I.: Production of entanglement entropy by decoherence. Open Sys. Inf. Dyna. 25, 1850001 (2018). https://doi.org/10.1142/S1230161218500014

Wyner, A.D.: Random packings and coverings of the unit n-sphere. Bell Syst. Techn. J. 46, 2111–2118 (1967)

Acknowledgements

I would like to gratefully thank my PhD supervisor Dimitri Petritis for the great freedom he grants me in my research, while being nevertheless always present to guide me. I also thank my friends Dmitry Chernyak and Matthieu Dolbeault for illuminating discussions.

Funding

No funding was received for conducting this study.

Contributions

Antoine Soulas wrote the whole manuscript.

Ethics declarations

Competing interest

The author has no competing interests to declare that are relevant to the content of this article.

Annex: Decoherence Estimated by the Entanglement Entropy with the Environment

We establish here formula (3): we first derive inequality (1), and then look for a relation between \(\eta\) and the linear entropy or the entanglement entropy. Inserting the second into the first directly yields (3).

A.1 Relation Between \(\eta\) and the Level of Classicality

Let us keep the notations of §2, where we defined \(\rho _{\mathcal {S}}^{(q)}(t) = \sum _{i \ne j} c_i \overline{c_j} {\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle } {|{i}\rangle }{\langle {j}|}\). We have \(|\hspace{-1.111pt}|\hspace{-1.111pt}| \rho _{\mathcal {S}}^{(q)}(t)|\hspace{-1.111pt}|\hspace{-1.111pt}| \leqslant \eta (t)\) because for all vectors \({|{\Psi }\rangle } = \sum _k \alpha _k {|{k}\rangle } \in \mathcal {H}_{\mathcal {S}}\) of norm 1,

Now, if F is a subspace of \(\mathcal {H}_{\mathcal {S}}\) (i.e. a probabilistic event), let \((\varphi _k)_k\) be an orthonormal basis of F. We have:

In a nutshell: \({\mathbb {P}}_{\text {quantum}} = {\mathbb {P}}_{\text {classical}} + \mathcal {O}(\eta )\).
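
This statement can be probed numerically for a one-dimensional event F. The sketch below assumes random environment states (as in the first model) and a random unit vector \(\varphi\) spanning F; for such an event, the norm bound stated above implies \(|{\mathbb {P}}_{\text {quantum}} - {\mathbb {P}}_{\text {classical}} |\leqslant \eta\). All function names are hypothetical.

```python
import math
import random


def random_unit_vector(n, rng):
    """Random point on the unit sphere of C^n (normalised complex Gaussian vector)."""
    v = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    norm = math.sqrt(sum(abs(z) ** 2 for z in v))
    return [z / norm for z in v]


def inner(u, v):
    """Scalar product <u|v>, antilinear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def probability_gap(d=4, n=64, seed=1):
    """Return (|P_quantum - P_classical|, eta) for a random one-dimensional event F."""
    rng = random.Random(seed)
    c = random_unit_vector(d, rng)                        # amplitudes c_i of the system
    env = [random_unit_vector(n, rng) for _ in range(d)]  # environment states |E_i>
    phi = random_unit_vector(d, rng)                      # unit vector spanning F
    eta = max(abs(inner(env[i], env[j])) for i in range(d) for j in range(i + 1, d))
    # <phi| rho^(q) |phi>: only the off-diagonal (interference) terms i != j contribute.
    interference = 0j
    for i in range(d):
        for j in range(d):
            if i != j:
                rho_ij = c[i] * c[j].conjugate() * inner(env[j], env[i])
                interference += phi[i].conjugate() * rho_ij * phi[j]
    return abs(interference), eta


gap, eta = probability_gap()
print(gap, eta)
```

The first printed number is the deviation of the quantum probability of F from its classical value, the second is \(\eta\); the deviation never exceeds \(\eta\), whatever the seed.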

A.2 Relation Between \(\eta\) and the Linear Entropy

We define the linear entropy (or purity defect) of a state \(\rho\) to be \(S_{\text {lin}}(\rho ) = 1 - {\text {tr}}(\rho ^2)\). Since \(\mathcal {S}\) is initially in a pure state, the quantity \(\frac{S_{\text {lin}}(\rho _{\mathcal {S}}(t))}{ S_{\text {lin}}(\rho _{\mathcal {S}}^{(d)})}\) goes from 0 at \(t=0\) to almost 1 when \(t \rightarrow +\infty\). It measures the ratio of purity that has already been lost compared to its final ideal value. Recall that \(\rho _{\mathcal {S}}(t) = \sum _{i=1}^d |c_i |^2 {|{i}\rangle }{\langle {i}|} + \sum _{1 \leqslant i \ne j \leqslant d} c_i \overline{c_j} {\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle } {|{i}\rangle }{\langle {j}|}\), so that:

\[ \frac{S_{\text {lin}}(\rho _{\mathcal {S}}(t))}{ S_{\text {lin}}(\rho _{\mathcal {S}}^{(d)})} = \frac{1 - \sum _i |c_i |^4 - \sum _{i \ne j} |c_i |^2 |c_j |^2 |{\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle } |^2}{1 - \sum _i |c_i |^4} \geqslant 1 - \eta ^2(t) \, \frac{\sum _{i \ne j} |c_i |^2 |c_j |^2}{1 - \sum _i |c_i |^4} = 1 - \eta ^2(t) \]

since the last fraction always equals 1 because \(1 = (\sum _i |c_i |^2)(\sum _i |c_i |^2) = \sum _i |c_i |^4 + \sum _{i \ne j} |c_i |^2 |c_j |^2\). Note that, for any given time t, this inequality is actually an equality for the initial state \({|{\Psi _{\mathcal {S}}(0)}\rangle } = c_{i_0} {|{i_0}\rangle } + c_{j_0} {|{j_0}\rangle }\) where \(i_0\) and \(j_0\) denote two indices such that \(\eta (t) = |{\langle {\mathcal {E}_{i_0}(t) \vert \mathcal {E}_{j_0}(t)}\rangle } |\). Thus:

A.3 Relation Between \(\eta\) and the Entanglement Entropy

The entanglement entropy is always much harder to manipulate. We were not able to prove in the general case a similar result when the linear entropy \(S_{\text {lin}}\) is replaced by the entanglement entropy S, but numerical simulations tend to indicate that the same formula is still (almost) true and that there exists a deep link between the quantity \(1 - \eta ^2(t)\) and the ratio \(\frac{S(\rho _{\mathcal {S}}(t))}{S(\rho _{\mathcal {S}}^{(d)})}\). Here are some considerations in support of this claim.

In dimension \(d=2\), if one denotes \(f(t) = {\langle {\mathcal {E}_2(t) \vert \mathcal {E}_1(t)}\rangle }\), one can write \(\rho _{\mathcal {S}}(t) = \begin{pmatrix} |c_1 |^2 &{} c_1 \overline{c_2} f(t) \\ \overline{c_1} c_2 {\overline{f}}(t) &{} |c_2 |^2 \end{pmatrix}\), whose eigenvalues are \(\text {ev}_{\pm } = \frac{1}{2}(1 \pm \sqrt{( |c_1 |^2 - |c_2 |^2)^2 + 4 |f(t) |^2 |c_1 |^2 |c_2 |^2 })\). At large times, \(|f |\ll 1\) and after some calculations we get at leading order:

The ratio preceding \(\eta ^2\) is a one-parameter real function of \(|c_1 |^2\) (since \(|c_2 |^2 = 1 - |c_1 |^2\)) defined on [0, 1]; it turns out that it takes only values in \([-1, -0.7]\) and tends to \(-1\) only when \(|c_1 |^2\) tends to 0 or 1. Therefore, in dimension 2, we still have (at least at leading order):

In higher dimension, if we suppose that one of the \({\langle {\mathcal {E}_j(t) \vert \mathcal {E}_i(t)}\rangle }\) decreases much more slowly than the others (assume without loss of generality that it is \({\langle {\mathcal {E}_2(t) \vert \mathcal {E}_1(t)}\rangle }\), still denoted f(t)), then after some time \(\rho _{\mathcal {S}}(t)\) is not very different from:

Using the previous inequality in dimension 2:

and, once again, this bound is attained for an appropriate choice of the \((c_i)_{1\leqslant i \leqslant d}\).
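
The two-dimensional case lends itself to a direct numerical comparison. The sketch below is only illustrative and is not the simulation referred to in the text: it takes \(a = |c_1 |^2\) and \(|f(t) |\) as free inputs (the function names are hypothetical), computes the spectrum of \(\rho _{\mathcal {S}}(t)\) from the eigenvalue formula, and prints both entropy ratios next to \(1 - |f |^2\).

```python
import math


def entropy(probs):
    """Von Neumann entropy -sum p ln p of a spectrum (with the convention 0 ln 0 = 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def ratios(a, f_abs):
    """Linear and von Neumann entropy ratios for d = 2, with a = |c_1|^2 and f_abs = |f(t)|."""
    b = 1.0 - a
    root = math.sqrt((a - b) ** 2 + 4.0 * f_abs ** 2 * a * b)
    spectrum = [0.5 * (1.0 + root), 0.5 * (1.0 - root)]  # eigenvalues of rho_S(t)
    # Linear entropy of rho_S(t) over that of the diagonal state diag(a, b).
    lin = (1.0 - sum(p * p for p in spectrum)) / (1.0 - a * a - b * b)
    # Same ratio with the von Neumann entropy.
    von_neumann = entropy(spectrum) / entropy([a, b])
    return lin, von_neumann


for a in (0.5, 0.2):
    for f_abs in (0.1, 0.5, 0.9):
        lin, vn = ratios(a, f_abs)
        print(a, f_abs, 1.0 - f_abs ** 2, lin, vn)
```

In dimension 2 the linear-entropy ratio coincides with \(1 - |f |^2\), which is the equality case noted in A.2, while the von Neumann ratio can be read off the last column and compared with it for each choice of \(|c_1 |^2\).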

About this article

Cite this article

Soulas, A. Decoherence as a High-Dimensional Geometrical Phenomenon. Found Phys 54, 11 (2024). https://doi.org/10.1007/s10701-023-00740-8

DOI: https://doi.org/10.1007/s10701-023-00740-8