Abstract

The advancement of science, as well as scientific careers, depends upon good and clear scientific writing. Science is the most democratic of human endeavours because, in principle, anyone can replicate a scientific discovery. For this to continue, writing must be clear enough for others to understand the work well enough to replicate it, either in principle or in fact. In this paper I present data on the publication process in Evolutionary Ecology, use them to illustrate some of the problems in scientific papers, make some general remarks about writing scientific papers, summarise two new paper categories in the journal which will fill gaps that appear to be widening in the literature, and summarise new journal policies to help mitigate existing problems. Most of the suggestions about writing apply to any scientific journal.


Introduction

The advancement of science, as well as scientific careers, depends upon good and clear scientific writing. Science is the most democratic of human endeavours because, in principle, anyone can replicate a scientific discovery. For this to continue, writing must be clear enough for others to understand the work well enough to replicate it, either in principle or in fact. Having been an editor (of Evolutionary Ecology, and before that Evolution) and an associate editor for many years, I have noticed many common problems in scientific writing and some emerging gaps in the literature, hence this article. I will first present data on the publication process in Evolutionary Ecology, use them to illustrate some of the problems, make some general remarks about writing scientific papers, and then present two new categories of papers designed to fill emerging gaps in scientific publication, at least in our field. Most of the suggestions about writing apply to any scientific journal, not just Evolutionary Ecology.

Background: publication data for Evolutionary Ecology

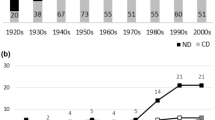

I took over as Editor-in-Chief of Evolutionary Ecology in 2010 and expanded the editorial board. At the time the journal was somewhat unfocussed and subject to large fluctuations in submissions (Fig. 1a). I therefore gave the journal a clear focus, defining and publishing its central aim: to seek papers which should yield significant new insights into the effects of evolutionary processes on ecology and/or the effects of ecological processes on evolution. A clear statement of a journal’s aims tells potential authors whether or not their paper matches the journal, and also makes editorial decisions easier. Since 2010 the submission rate has remained at around 230 manuscripts per year. The acceptance rate has declined steadily from about 43 % in 2009 to about 22 % in 2014 (Fig. 1a). The increase in overall rejections is primarily due to the increasing fraction of rejections without review; the rate of rejection after review has remained fairly constant since 2006, apart from a temporary increase in the year I took over as Editor (Fig. 1b). Concomitant with the rise in rejections without review has been a decline in requests for both major and minor revisions (Fig. 1c). I encouraged the Associate Editors to reject without review those papers which were unlikely to pass the review process because of obvious flaws, which needed so much work that rejection would encourage the authors to do a better job of analysis and writing, or which did not fulfil the journal’s aims.

Fig. 1 History of numbers of papers and decisions in Evolutionary Ecology, 2005–2014. a Numbers submitted and percent accepted. b Fraction of all submitted manuscripts rejected after review and without review. c Fraction with requests for revision or accepted as first decision. d Fraction of requested revisions which were major

Before I started in 2010 there had been a catastrophic drop in the number of papers accepted without any revision, and such acceptances are now very rare (Fig. 1c). When I was editor of Evolution in the 1980s–1990s, simple acceptances were more common (about 15 %) than now (Fig. 1), and minor revisions were much more common (31 %) than major revisions (about 16 %; Table 2 of Endler 1992). Now the pattern is reversed, and major revisions make up about 75 % of all requested revisions (Fig. 1d).

An examination of the time taken by each component of the publication sequence reveals more patterns. Since I took over, I have managed to shorten all components of the publication sequence significantly (Figs. 2, 3). Most importantly, the time to first decision and the processing time (handling + external review times) dropped substantially between 2009 and 2011 (Fig. 2). Because the times are close to log-normally distributed (Fig. 2c, d), the data are plotted on a log (base 10) scale. Back-transforming the log-scale values ($10^{x}$, where $x$ is the value in $\log_{10}$ days) for the periods before (2005–2009) and after I took over as editor (2011–2014), the time to first decision went from about 68 to 18 days and the processing time from 57 to 16 days, while the time with authors for revisions (minor + major) remained similar at 40 and 42 days (Fig. 2); the total time (process + revisions) went from 102 to 24 days. The overall improvement is also apparent within each editorial decision category, with much less skewed distributions (Fig. 3). For example, the time to first decision went from 94 to 40 days for minor revisions, from 110 to 49 days for major revisions, from 86 to 50 days for rejections after review, and from 16 to 2.6 days for rejections without review. The long left tail in the log times for all manuscripts (Fig. 2) results from rejections without review, and disappears when each decision category is considered separately (Fig. 3). Clearly, publication speed in Evolutionary Ecology has improved substantially.
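Back-transforming a mean taken on the log10 scale (raising 10 to that power) gives the geometric mean. The sketch below assumes that is the kind of summary being back-transformed, and uses hypothetical decision times (not journal data) to show why such a summary is far less dominated by a long right tail than the arithmetic mean. The function name `back_transformed_mean` is illustrative only.

```python
import math
import statistics


def back_transformed_mean(days):
    """Mean of log10(days), back-transformed to days (i.e. the geometric mean)."""
    log_days = [math.log10(d) for d in days]
    return 10 ** statistics.fmean(log_days)


# Hypothetical times to first decision, in days (illustrative, not journal data).
times = [10, 20, 40, 80, 400]

arithmetic = statistics.fmean(times)
back_transformed = back_transformed_mean(times)

print(f"arithmetic mean:           {arithmetic:.1f} days")
print(f"back-transformed log mean: {back_transformed:.1f} days")
```

Here the single 400-day value pulls the arithmetic mean up to 110 days, while the back-transformed log mean is about 48 days, much closer to the bulk of the data.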

Fig. 2 History and distribution of decision times. Data are in log10 (days). a Time to first decision, 2005–2014. The vertical lines around 2010 indicate the transition to a new editor (John A. Endler), who took over on 1 January 2010. These are box plots in which the box represents the inter-quartile range, the horizontal line within it the median, the vertical dashed lines the range, and the isolated + symbols possible outliers. If the paired triangles (‘notches’) do not overlap between boxes, then the medians are significantly different. b As for a, but the data are processing time: the time from submission to receipt of reviews, plus the time from requested revisions to receipt of the revised manuscript. c Distribution of first decision times for the previous editor (2005–2009). d Distribution of first decision times for the current editor (2011–2014), after the transition year. The elongated boxes (a, b) and larger left tail after 2010 result from the increasing number of rejections without review (Fig. 1b)
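The notch comparison in the caption can be sketched as follows. The sketch assumes the widely used approximation median ± 1.58 × IQR / √n (the convention used, for example, by R's `boxplot`); the exact notch formula used for the figure is not stated, and the two samples below are hypothetical log10(days) values.

```python
import math
import statistics


def notch_interval(values):
    """Approximate notch: median +/- 1.58 * IQR / sqrt(n)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    median = statistics.median(values)
    half_width = 1.58 * (q3 - q1) / math.sqrt(len(values))
    return median - half_width, median + half_width


def notches_overlap(a, b):
    """True if the notch intervals of two samples overlap."""
    low_a, high_a = notch_interval(a)
    low_b, high_b = notch_interval(b)
    return low_a <= high_b and low_b <= high_a


# Hypothetical log10(days) to first decision for two periods.
before = [1.60, 1.70, 1.75, 1.80, 1.80, 1.85, 1.90, 1.95, 2.00, 2.10]
after = [1.10, 1.15, 1.18, 1.20, 1.20, 1.22, 1.25, 1.28, 1.30, 1.35]

print("before notch:", notch_interval(before))
print("after notch: ", notch_interval(after))
print("medians differ (notches do not overlap):", not notches_overlap(before, after))
```

Non-overlapping notches are an informal, approximate guide to a difference in medians rather than a formal test.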

Fig. 3 History of times to first decision, separated by kind of decision. Notice the more symmetrical boxes compared to Fig. 2a. a Time to minor revision request. b Time to major revision request. c Time to reject after review. d Time to reject without review. All show significant improvement

Beware of comparing publication times with those of other journals, for four reasons: (1) they use arithmetic means when the raw (days) distributions tend to be skewed, either negative exponential or log-normal; (2) they combine all classes of decisions; (3) their means include the reject-without-review times, which make the averages even smaller; and (4) many of them reject without review and/or reject when they actually mean major revisions. The incorrect scaling makes comparisons invalid, and the other three practices make the times appear shorter. To illustrate, the overall mean time to first decision in Evolutionary Ecology is about 18 days, but when each category of decision is kept separate, the first decision times other than for rejection without review are about 50 days, longer because of the time needed to obtain reviews. Interestingly, the time taken by authors to revise is also about 50 days, leading to total times (process + revisions) of about 80 days for minor revisions and 139 days for major revisions, compared to 50 days for rejections after review. This makes it obvious why journals wishing to appear to have fast turnaround times reject almost all manuscripts, either before or after review, rather than ask for revisions. There are similar patterns in processing time and total time depending on whether or not the decision categories are pooled. It would be valuable if journals published statistics like these so that accurate comparisons could be made, rather as banks in some countries must do with interest rates.
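The effect of pooling decision categories can be illustrated with a small sketch. The times below are invented for illustration and are not Evolutionary Ecology data; the point is only that including many very fast rejections without review drags a pooled average far below the time a reviewed manuscript actually takes.

```python
import statistics

# Hypothetical times to first decision (days), by decision category.
times_by_category = {
    "minor revision": [35, 42, 50, 58, 65],
    "major revision": [40, 48, 52, 60, 70],
    "reject after review": [38, 45, 50, 55, 62],
    "reject without review": [1, 2, 2, 3, 4, 2, 3, 1, 2, 5],
}

# Pooling every decision, as a single headline "mean time to decision" does.
pooled = [t for times in times_by_category.values() for t in times]

# Keeping only manuscripts that were actually sent for review.
reviewed = [
    t
    for category, times in times_by_category.items()
    if category != "reject without review"
    for t in times
]

for category, times in times_by_category.items():
    print(f"{category:>22}: median {statistics.median(times)} days")
print(f"pooled mean:   {statistics.fmean(pooled):.1f} days")
print(f"reviewed mean: {statistics.fmean(reviewed):.1f} days")
```

With these made-up numbers the pooled mean is 31.8 days, shorter than the time taken by any reviewed manuscript (minimum 35 days), whose mean is about 51 days.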

Figure 4 shows the distributions of log(times) for various components of the publishing process in Evolutionary Ecology. Not surprisingly, the times to first decision for accepting the rare near-perfect papers and for rejecting without review are very short, about as short as possible. Also not surprisingly, the time with authors is longer for major than for minor revisions. The times to first decision are about the same for all reviewed manuscripts. Interestingly, it takes significantly longer to process manuscripts which are ultimately rejected after review. This pattern is not reflected in the total times because revision itself takes time, and revisions increase the total time to publication for papers requiring major revisions.

Fig. 4 Times for each component of publishing a paper, separated by decision type. a Time to first decision (submission to decision email). b Days with author (time from decision to receipt of revisions; if more than one revision, the sum of each set of days with author). c Processing time (time between submission and final decision, minus time with author). d Total time (time between submission and publication or rejection)

Papers rejected after review take the longest to process because we very commonly have trouble getting reviewers for them. For a large fraction of ultimately rejected papers we have to ask three or even more reviewers (the record is 14!) before we either get two reviews or have to give up with only one plus the associate editor’s review. (If there are delays, we ask a third and eventually an additional reviewer within 2 weeks of the last unfulfilled request.) Some reviewers whom I have queried told me that they originally agreed to review the paper, but the manuscript was so bad that they gave up reading it, and either told us that they no longer had time to review it, or procrastinated about telling us that they did not want to bother, so that we had to cancel their review. This definitely inflates the processing time of rejected papers relative to papers requiring revision. With the proliferation of journals, and hence of demands on a finite number of potential reviewers, this pattern is likely to get worse. The cure is to submit more carefully prepared papers which are easier to read.

Problems illustrated by the Evolutionary Ecology publication data

The decline in acceptance rate, the steady increase in rejections without review, and the preponderance of major over minor revisions are a joint function of our raising the scientific quality criteria for acceptance, the more focussed nature of the journal, and the massive worldwide increase in the pressure to publish papers, particularly on Ph.D. students. About 80 % of the requests for major revisions and rejections involve lack of clarity. In these cases neither the reviewers, the Associate Editor, nor I can figure out what the author actually did. The lack of clarity involves (1) the fundamental question or purpose of the paper, (2) the justification and detail of data collection methods, (3) the justification and detail of the statistical and/or mathematical analysis of the data, (4) the logic of the conclusions drawn from the data, and (5) the discussion and the answer to the fundamental question of the paper. These problems appear to result from authors being in far too much of a hurry to submit, and consequently submitting papers which are far from ready to be examined by reviewers. This rush results not only in inferior writing and manuscript preparation but also in superficial conclusions and discussion. It is frequently also associated with poor scholarship, with the authors missing many important papers on the same subject.

Many authors whose papers have faults in all aspects of clarity and scholarship have not yet finished their Ph.D.s and have been either strongly encouraged or forced to publish before they finish. I know of several institutions worldwide which will not even grant a student a Ph.D. unless he/she has published one or more papers. This is a very poor policy for a number of reasons. (1) It ensures the lowest quality papers, because there is not enough time both to think about the results and prepare a manuscript properly and to complete a Ph.D. thesis in 3–5 years. The problem is even more severe for Masters students, because a Masters degree takes only a year or two. Oddly, requiring publication is more common in institutions with 3- and 4-year Ph.D. programmes than in those allowing more time. It is a serious mistake to force students to publish quickly during their Ph.D., because there may not be enough time to mature their ideas, to do a proper analysis, and to organise their thoughts. (2) It is really bad for an author’s reputation. If a paper is poorly written and presented, this implies that the science is also sloppy. This not only leads to very low expectations from editors and reviewers, but also makes rejection without review even more likely in the future. (3) In addition to affecting how others see them, this practice ruins the self-confidence of young scientists. In my own experience, the best students lack self-confidence because they are more critical than pedestrian students, and rejection makes this worse. (4) Because there is insufficient time to prepare a decent manuscript before finishing a Ph.D., Ph.D. and Masters students are forced to publish in minor or purely local journals, because their suboptimal papers are likely to be rejected by international journals. This further reduces or destroys the self-respect of young scientists. (5) It reduces the average quality of science, making science more vulnerable to public and government criticism, to say nothing of the increased likelihood of retractions. This is not to say that Ph.D. students cannot write excellent papers; the point is that not all can do so right away, and every student should be given a reasonable time to think about what they are doing and its implications.

The next biggest class of reasons for rejection and major revision is poor analysis and/or poor experimental or sampling design. This is the classical reason for rejection or major revision. My impression is that it is not much more common than it was 30 years ago, although the specific reasons have shifted as both design and statistical methods have evolved. The remedy is to get better advice on both experimental/sampling design and statistics before doing the study, or at least before submitting the manuscript. Many new statistical methods are available, and authors should make an effort to use them. Many of the older methods (ANOVA, etc.) have implicit assumptions which have been violated in publications for decades, and the newer methods (GLMM, etc.) provide ways around many of these old problems. We now reject, or request major revisions for, invalid or unvalidated statistical analyses. On the other hand, if authors validate their analysis by testing all the assumptions of an older method, this is perfectly acceptable, but all assumptions must be tested. Even the new methods require validation; for a summary of validation methods, see Zuur et al. (2010).

Another class of problems is that submitted papers do not match the journal’s aims: they contain little or no evolution or little or no ecology, or do not relate the two if both are present. Most of these are rejected without review. Such papers belong in other journals, such as those devoted to behavioural ecology, ecology, evolution, population genetics, or conservation biology. In these cases I often make specific suggestions about which journals the paper should be submitted to, and some have been published in higher impact journals as a result. This outcome is much better than the author waiting for the reviews to find out that the paper is not appropriate. In a very few cases I send for review papers which might be inappropriate for Evolutionary Ecology; I do this when I think there is a chance that they can be made relevant. If the reviewers like such a paper but it cannot be made relevant, I offer the author the choice of my sending both the manuscript and the reviews to a journal of my or the author’s choice (I also did this when I was the editor of Evolution). Almost all of these transferred papers were published in more appropriate journals, and this eliminates all or most of what would otherwise be additional reviewing time. But even more time would be saved if the authors had read the aims of Evolutionary Ecology.

Suggestions for writing a scientific paper

In view of the frequent problems in manuscript preparation, it is a good idea to review some guidelines for writing a scientific paper. My comments are not about good experimental design, but about reporting what you did. It is in fact possible for a paper with a terrible experimental or sampling design and faulty conclusions to be perfectly written. Problems in design should be avoided before the project is started, and there are many books and papers on this topic.

Before writing

Decide on the major point of the paper before you start writing. A good paper will make one or possibly two major points. Don’t put in too much or it will be confusing, or never read. Always remember that the major purpose of a paper is to communicate new insights about natural phenomena. But it has to be obvious from the start just what you will communicate.

Abstract or summary

Write this last; you will find it much easier that way. The process of writing tends to clarify your ideas, and you will think more clearly about what you have done after the paper is written, so you will write a better summary after the rest of the paper is finished. The summary should contain the general and specific question, a concise description of the results, and what the results tell us. It is usually 100–200 words. Basically, if you cannot describe the central ideas and conclusion in 100 words, then you are not thinking clearly, and your paper will be unreadable. You may also find, even after you have finished the paper and written the summary, that the further clarification of your thoughts means that you have to rewrite the introduction and discussion sections. This is normal and always makes the paper better.

Introduction

Within the first paragraph, ask yourself, and write accordingly: What is the broad question you are asking, and answering, in this paper? Why is the question interesting and important; what is the context? It is annoying to have to read several pages before getting to the point of the paper, and this will put the reviewers (and readers, if published) into a bad mood, making it less likely that your paper will be appreciated. Within the second or third paragraph, ask and write accordingly: What is the specific question you are asking, and what is its context? Briefly, how will you answer it? This is the place to state your hypotheses and the predictions they generate. Cite the relevant literature which led up to your question and which puts it in context. Be scholarly and find all previous papers on the subject. It is extremely annoying to read a paper which unknowingly repeats earlier work, or misses important papers which place it in context. Bad scholarship is also a disservice to science as a whole.

Materials and methods

This section should be written clearly enough that anyone can replicate your study; this is the very basis of science. It is surprising how often papers are rejected or major revisions are requested because of unclear methods of data collection and analysis. Apart from being poor communication, unclear methods make it difficult to judge the quality of the research, and also make the entire paper seem dubious. Given that unclear methods are the most frequent reason for rejection, it is particularly important to ensure clarity of writing in this section.

Cite other papers for details of methods if you used their methods. If you modified their methods, cite them and then say how your own methods differed.

When deciding what methods, data and analysis to include, always ask yourself ‘what is the minimum critical evidence needed to make my point unambiguously’, and leave everything else out (see Endler 1992). This avoids the other frequent problem of verbosity, which leads to reader boredom, and less (or no) appreciation of the paper. Only put in the details which are directly relevant for this study, even if it is part of a larger study (as it usually is).

Discarded data

Suppose you started with 20 animals, but only 15 behaved, so you collected data on only those 15. The sample size really is 15, not 20. However, someone doing a meta-analysis (comparing the results from many papers) may be interested to know how often animals do not behave, die, or otherwise spoil an experiment, so there is nothing wrong with a single sentence saying that you started with x and used only y, so long as the reader is clear about the discard criteria and what the actual sample size is. This is separate from, and additional to, how to deal with statistical outliers, and there are many standard statistical methods for identifying outliers objectively. My personal preference is to keep outliers unless something really obvious happened during that data collection, for example, calibration was lost on a machine, or a large vehicle drove by during sound recording. Both sample size and outlier criteria should always be made explicit.
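One standard way to make an outlier criterion explicit is Tukey's fences (values more than 1.5 × IQR beyond the quartiles). The sketch below assumes that criterion purely for illustration, not as the author's method: it flags outliers for reporting but keeps them in the data, in line with the preference above, and reports both the starting and the actual sample size. The measurements are hypothetical.

```python
import statistics


def tukey_fences(values, k=1.5):
    """Lower and upper fences: quartiles -/+ k * IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def flag_outliers(values, k=1.5):
    """Return values outside the fences (flagged for reporting, not removed)."""
    low, high = tukey_fences(values, k)
    return [v for v in values if v < low or v > high]


animals_started = 20
# Hypothetical measurements from the 15 animals that behaved.
measurements = [4.1, 4.3, 3.9, 4.0, 4.2, 4.4, 3.8, 4.1,
                4.0, 4.3, 4.2, 3.9, 4.1, 4.0, 9.5]

outliers = flag_outliers(measurements)
print(f"Started with {animals_started} animals; data from {len(measurements)} "
      f"(n = {len(measurements)}).")
print(f"Outliers by Tukey's fences (k = 1.5), retained in analysis: {outliers}")
```

Whatever rule is used, stating k (or its equivalent) and whether flagged values were kept is what makes the choice replicable.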

Statistical analysis

The description of the statistical analysis should begin by explaining the general statistical approach taken in the paper; frequently this is not obvious. It is also essential to ensure that the statistical tests actually address both the specific and fundamental questions and hypotheses proposed in the manuscript. In addition to the common problem of missing sampling and/or experimental details, the description of the statistical methods is frequently inadequate. Again, the details should be specific enough that anyone could replicate the results. For example, if correcting for type I error, give the details and the critical threshold. If fitting statistical models, explain exactly how it was done, and exactly how the best models were chosen. All methods must be clear enough to be replicable.
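As one illustration of stating exactly how the best models were chosen, the sketch below ranks candidate models by AIC (2k - 2 ln L) and reports ΔAIC and Akaike weights. AIC is only one possible criterion and is an assumption here, not something the text prescribes; the model names, log-likelihoods and parameter counts are hypothetical, and a paper would also state its decision rule (for example, how close to the best model a model must be to count as comparably supported).

```python
import math


def aic_table(models):
    """Rank models by AIC = 2k - 2 ln L.

    models maps a model name to (log_likelihood, number_of_parameters).
    Returns (name, AIC, delta_AIC, Akaike_weight) tuples, best model first.
    """
    aic = {name: 2 * k - 2 * loglik for name, (loglik, k) in models.items()}
    best = min(aic.values())
    relative = {name: math.exp(-0.5 * (value - best)) for name, value in aic.items()}
    total = sum(relative.values())
    rows = [(name, aic[name], aic[name] - best, relative[name] / total) for name in aic]
    return sorted(rows, key=lambda row: row[1])


# Hypothetical fitted models: (log-likelihood, number of estimated parameters).
candidates = {
    "null": (-120.0, 2),
    "habitat": (-110.0, 3),
    "habitat + predation": (-109.5, 4),
}

table = aic_table(candidates)
for name, value, delta, weight in table:
    print(f"{name:<20} AIC={value:6.1f}  dAIC={delta:5.1f}  weight={weight:.3f}")
```

Reporting the whole table, not just the winning model, lets readers see that the two habitat models are close (ΔAIC = 1) while the null model is far behind.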

Results

As for the methods section, always ask yourself ‘what is the minimum critical evidence needed to make my point unambiguously’, and leave everything else out, while avoiding selective reporting of data. When in doubt, put it in the online appendix. Avoid long paragraphs with excessive numbers of statistical tests, etc.; put these in a table. Tables can be used to make a number of points, but don’t make them too complicated. Never duplicate the same results in both a table and a figure; use figures whenever possible, because they communicate much more quickly and concisely than tables. Use multiple panels per figure for similar kinds of graphs, because this takes up less space. Never draw a line through your data unless the regression is significantly different from zero. Use histograms with error bars when the data are continuous, and bar charts when the bars refer to discontinuous variables or categories. However, instead of histograms or bars it is better to use box plots or other newer methods in order to give readers an idea of the amount of variation (Weissgerber et al. 2015). For statistical tests, report the statistic, degrees of freedom, P value (or equivalent Bayesian statistics), and the effect size for each test. Put these in the text if there are only a few, but if there are more than 5, put them in a table. Be aware of, and explicitly correct for, biased results arising from multiple statistical tests of the same hypothesis, especially if all use the same data set (Nakagawa 2004; Glickman et al. 2014).
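One common way to correct for multiple tests is to control the false discovery rate with the Benjamini-Hochberg procedure, which Glickman et al. (2014) recommend as an alternative to Bonferroni-type adjustments. The sketch below is a minimal, dependency-free implementation shown alongside a Bonferroni adjustment for comparison; the P values are hypothetical, and in practice an established statistics package would normally be used.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted P values: p * m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted P values (q values), in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down, keeping adjusted values monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical P values from four tests of the same hypothesis.
p = [0.01, 0.04, 0.03, 0.005]
alpha = 0.05

for label, adjusted in [("Bonferroni", bonferroni(p)),
                        ("Benjamini-Hochberg", benjamini_hochberg(p))]:
    significant = [q <= alpha for q in adjusted]
    print(f"{label:<18} adjusted={[round(q, 3) for q in adjusted]} "
          f"significant={significant}")
```

With these numbers Bonferroni keeps two of the four tests at the 0.05 level, whereas Benjamini-Hochberg keeps all four; whichever procedure is used, it and its threshold should be reported.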

Discussion

Avoid a repetition of the results section. Discuss each result which affects your conclusion and if and how it answers your questions and affects your hypotheses. Then put it in perspective for the field as a whole. Finally, discuss the implications of your results. When deciding what to put in the discussion, as for the previous sections, always ask yourself ‘what is the minimum critical evidence needed to make my point unambiguously’, and leave everything else out. Limited speculation towards the end of the discussion is permissible, provided it only requires a few new sets of data. Speculative points are only justified if you are about 95 % of the way towards proving them.

Citing relevant literature

Try to cite all the most critical references, and always give credit to other people by citing their papers whenever the papers are relevant. Make an effort to be complete and scholarly. Not doing so is a disservice to science in general, and failing to cite relevant references will also have unfortunate effects on your reputation, even if the paper is rejected.

General

For every aspect of the paper ask yourself ‘what is the minimum critical evidence needed to make my point unambiguously’, and leave everything else out (Endler 1992). Get to the point quickly, make it clear what you want to demonstrate, make it clear what you have demonstrated, and explain why it is interesting and important (the context). Always check the logic of your statements and the logic of the entire paper. A frequent fault of papers as first submitted is that they are illogical, or leave out critical things, which makes the logic faulty. You always need to give the full evidence for anything you say. Avoid excess verbiage, and, to humorously paraphrase Strunk and White (1999), omit ornamental rhetoric. Use plain English and avoid jargon whenever possible. Always use the first person (I or we); it makes sentence structure simpler, and of course authors should take full responsibility for the paper. Every paragraph should make a single point, usually in the first sentence.

It is a very good idea to look at papers in the better scientific journals for examples. Note that if a published paper is hard to read, it may not be your fault, but an artifact of bad writing.

Rewriting

Be prepared to rewrite your paper several times until you are satisfied with it; this is normal. As I mentioned earlier, you should definitely rewrite the abstract and introduction after finishing the rest of the paper, because writing will have clarified your ideas. In addition, ask your peers to read it and tell you about anything which is unclear, illogical, or otherwise faulty. Do not wait for reviewers’ comments before rewriting; this will likely make your paper take longer to review, makes rejection much more likely, and is a disservice to reviewers, who are asked to review many papers per month.

If English is not your native language, then it is a very good idea to have a native speaker or very experienced English speaker go over your manuscript, give you comments, and then you should rewrite the paper in view of those comments. We try not to confuse bad English with bad logic or bad experimental design, but bad English may make it more difficult to understand what the author did, and certainly gives the author an unfair disadvantage during the review process.

New paper categories in Evolutionary Ecology, and some policy changes

In observing the flow of papers in Evolutionary Ecology and in other journals for which I am an Associate Editor, and also in discussing the rejections of papers by many friends and colleagues, I have realised that current (2015) trends in publishing actually discriminate against two forms of papers, to the considerable detriment of the advancement of science. As a result Evolutionary Ecology now invites submissions to two new paper categories in addition to the ordinary categories: New Tests of Existing Ideas and Natural History. The first category is designed for tests of apparently well-established ideas which are based upon what are now regarded as insufficient data or methods, or which have poor generality beyond the original taxa tested. These papers will also be valuable to people performing meta-analyses (Nakagawa and Poulin 2012). The second category is designed for the ultimate source of all hypotheses, Natural History.

New Tests of Existing Ideas

As Parker and Nakagawa (2014) discuss, it is becoming apparent that many existing ideas may be based upon insufficient evidence and may be unreliable, and examples of failed ideas in the media tend to discredit science in the public’s mind. Although every scientist agrees upon the importance of replication, current incentives from high impact journals, granting agencies, and ignorant university administrators appear to be creating a slavish devotion to “novelty” which actively devalues and discourages new tests of old ideas. Novelty is a good idea and is indeed the basis of scientific progress, but if carried to excess it actively undermines the reliability of our existing understanding of phenomena. If our understanding of existing phenomena is wrong, and future work is based upon it, then even apparent novelty will fail.

The problem is that many old ideas were not tested adequately, either because the older methods were insufficient or because they were tested before more critical tests using modern methods were possible. Moreover, many apparently established ideas are based upon small sample sizes. Although these may have been acceptable at the time, small sample sizes can lead to Type I error (false positive results). This is a particularly serious problem when the study has not been replicated, because there is no way of knowing whether the results were real or a sampling accident; replication of studies allows us to separate accidents from robust results (see discussion in Parker and Nakagawa 2014). A lack of replication is a problem even with large sample sizes. Well-established phenomena are often based upon data from a single species, or are confined to a single clade (e.g. just vertebrates or just birds), and therefore suffer from a lack of replication and hence of generality. The lack of replication in the same taxa and, more importantly, in unrelated taxa, may also be associated with a high rate of Type I error, and this is particularly problematic with small sample sizes (Parker and Nakagawa 2014).
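The value of replication for separating accidents from robust results can be illustrated with a small simulation. This is a sketch under simplifying assumptions chosen only to keep it self-contained (normally distributed data, known standard deviation, a two-sided z test, and no true effect): a single study gives a false positive at about the nominal 5 % rate, but an original study and an independent replicate are both falsely significant only about 0.25 % (0.05 squared) of the time.

```python
import random
from statistics import NormalDist, fmean


def z_test_p(sample, sigma=1.0):
    """Two-sided P value for H0: mean = 0, assuming a known standard deviation."""
    z = fmean(sample) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(n=10, trials=20_000, alpha=0.05, seed=1):
    """Simulate studies with no true effect; return (single-study, both-studies) rates."""
    rng = random.Random(seed)
    single = both = 0
    for _ in range(trials):
        p_original = z_test_p([rng.gauss(0.0, 1.0) for _ in range(n)])
        p_replicate = z_test_p([rng.gauss(0.0, 1.0) for _ in range(n)])
        single += p_original < alpha
        both += p_original < alpha and p_replicate < alpha
    return single / trials, both / trials


single_rate, replicated_rate = false_positive_rates()
print(f"false positives, single study:         {single_rate:.4f}")
print(f"false positives, original + replicate: {replicated_rate:.4f}")
```

Real studies rarely meet these idealised assumptions, but the contrast between the two rates is the reason an independent replication carries so much weight.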

How many of our assumptions about phenomena are in fact based upon what we now realise are poor data or analysis? An unrecognised lack of reliability in supposedly well-established concepts can lead to failures of application, or to massive amounts of money and other resources being wasted in attempts at, for example, conservation biology. This is unhealthy for the advancement of science and immoral in conservation biology, and a potential lack of reliability of apparently well-established ideas can progressively reduce or even eliminate the public’s respect and support for science. For this reason Evolutionary Ecology will henceforth have a new class of papers entitled “New Tests of Existing Ideas”.

“New Tests of Existing Ideas” will actively contribute to changing this unhealthy scientific culture by positively encouraging very careful replication of studies and validation of ideas and concepts. In the past, several researchers have advocated that journals should set aside a special section for replication studies. However, no journals in ecology and evolution have taken such an initiative, perhaps because, in some people’s minds, such a section could inadvertently label a replication study as non-novel and even as second-class. Our new paper category should avoid this kind of stigmatization; instead, the new category values and highlights replication studies which validate existing ideas and allow for more serious generalisation; we aim to raise the status of replication work. As described in Parker and Nakagawa (2014), systematic efforts to fund, publish and provide incentives for replications are underway in medical and social sciences. Evolutionary Ecology should lead our field by establishing “New Tests of Existing Ideas”, providing a unique place for studies which replicate, validate, and allow generalization of existing ideas.

To make this new section really special and highly regarded, Evolutionary Ecology will provide a special award for papers published in this section. We will call it the R. A. Fisher Prize, after R. A. Fisher, who made significant and thorough contributions to both statistics and evolutionary biology. The prize includes not only recognition of a scientific contribution but also books from Evolutionary Ecology’s publisher, Springer-Verlag.

Before formally submitting your paper to this section of the journal, please discuss it directly with the editor. Please explain explicitly why your new test of old ideas is appropriate and valuable. For example, does it have a larger sample size, use newer methods of data collection, use better statistics, or study new taxa unrelated to those of the original well-established study? The key question is whether or not the replication makes the old conclusion more reliable and general; of course, it is also possible that the generalisation will fail in the replicated test. Both outcomes are valuable and interesting.
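Why a larger, independent replication is informative can be sketched with a simple simulation. This is an illustration under stated assumptions, not a method prescribed by the journal: 20% of original hypotheses are assumed to be true (standardized effect 0.5), original studies use n = 10, replications use n = 100, and every test is a two-sided z-test at alpha = 0.05. The helper names z_statistic and replication_filter are hypothetical. A finding counts as replicated only if the new test is significant in the same direction as the original.

```python
import math
import random


def z_statistic(rng, mu, n):
    """z statistic of a one-sample z-test on n simulated N(mu, 1) observations.

    The mean of n independent N(mu, 1) values is N(mu, 1/n), so it is drawn
    directly rather than simulating every observation.
    """
    mean = rng.gauss(mu, 1.0 / math.sqrt(n))
    return mean * math.sqrt(n)


def replication_filter(n_original=10, n_replication=100, n_studies=20000,
                       prior_true=0.2, effect=0.5, z_crit=1.96, seed=2):
    """Return (false-positive share among significant originals,
    false-positive share among findings that also replicate).

    All parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)
    orig_false = orig_true = kept_false = kept_true = 0
    for _ in range(n_studies):
        is_real = rng.random() < prior_true
        mu = effect if is_real else 0.0
        z1 = z_statistic(rng, mu, n_original)
        if abs(z1) <= z_crit:
            continue  # non-significant original: never becomes "established"
        if is_real:
            orig_true += 1
        else:
            orig_false += 1
        # Independent, larger replication; it counts only if it is
        # significant in the same direction as the original result.
        z2 = z_statistic(rng, mu, n_replication)
        if abs(z2) > z_crit and (z2 > 0) == (z1 > 0):
            if is_real:
                kept_true += 1
            else:
                kept_false += 1
    return (orig_false / (orig_false + orig_true),
            kept_false / (kept_false + kept_true))


before, after = replication_filter()
print(f"false positives among original findings:   {before:.3f}")
print(f"false positives among replicated findings: {after:.3f}")
```

Under these assumptions roughly a third of the original significant findings are expected to be false positives, but only a small fraction of those that survive replication are, because a chance result rarely recurs in the same direction in an independent, well-powered test. Findings that fail to replicate are informative in their own right.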

Evolutionary ecology natural history

Natural History is the fundamental source of all hypotheses in Evolution, Ecology, Behavioural Ecology and Evolutionary Ecology, yet it is being badly neglected. The only journal which presently has a section specifically devoted to Natural History is The American Naturalist. Unfortunately that section has diverged from its original intent, probably because of the pressure to publish experiments. More generally, a slavish devotion to Popperism has selected against purely empirical observations. There appears to be no place to publish very careful empirical observations which lead to new hypotheses. Consequently Evolutionary Ecology now has a new class of papers entitled “Evolutionary Ecology Natural History”. Papers in this category should contain very well documented and well replicated observations which generate new ideas and/or hypotheses, or which are otherwise impossible to explain within the framework of our current understanding of evolutionary ecology. In addition, papers must fulfill the goals of Evolutionary Ecology: they should yield significant new data on the effects of evolutionary processes on ecology and/or the effects of ecological processes on evolution. If you wish to submit to this category, please email the editor first to ensure that your paper is appropriate for the journal.

General policy changes

It is becoming obvious that there is great value in publishing the data along with a paper, for three excellent reasons: (1) it allows readers to assess the validity and logic of the paper more deeply than when the raw data are absent; (2) it allows the data to be used directly in meta-analyses. Meta-analyses are becoming more common and sophisticated (Nakagawa and Poulin 2012), and their reliability will increase as more raw data become available, because raw data yield far better generalisations than data extracted from figures and tables; (3) it encourages collaborations and makes them much easier. For example, some people wrote to me about data I collected on guppies in the 1970s and 1980s, and I had to find and dig out the data in order to share them. This resulted in an interesting paper (Egset et al. 2011), but the collaboration would have been far more efficient if I had published the data, making them easily available.
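As an illustration of how published raw data feed directly into a meta-analysis, the Python sketch below computes a bias-corrected standardized mean difference (Hedges' g) and its approximate sampling variance from two groups of raw observations, then combines studies with a fixed-effect, inverse-variance weighted mean. This is one common approach, assumed here for illustration only; it is not a method described in this article, and in ecology a random-effects model is often more appropriate. The function names and example data are hypothetical.

```python
import math
import statistics


def hedges_g(group_a, group_b):
    """Bias-corrected standardized mean difference (Hedges' g) and its
    approximate sampling variance, computed from raw observations."""
    n1, n2 = len(group_a), len(group_b)
    # Pooled standard deviation from the two sample variances (n - 1 denominators).
    pooled_sd = math.sqrt(((n1 - 1) * statistics.variance(group_a)
                           + (n2 - 1) * statistics.variance(group_b))
                          / (n1 + n2 - 2))
    d = (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))
    return j * d, j ** 2 * var_d


def fixed_effect_pool(estimates):
    """Inverse-variance weighted mean of (effect, variance) pairs and its standard error."""
    weights = [1 / var for _, var in estimates]
    pooled = sum(w * g for w, (g, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1 / sum(weights))


# Hypothetical raw data from two small two-group studies.
study_1 = hedges_g([5, 6, 7, 8, 9], [4, 5, 6, 7, 8])
study_2 = hedges_g([12.1, 10.4, 11.8, 13.0, 12.6, 11.2],
                   [10.9, 10.1, 11.5, 10.0, 11.7, 10.6])
pooled, se = fixed_effect_pool([study_1, study_2])
print(round(pooled, 3), round(se, 3))
```

With raw data in hand, the effect size and its variance are computed exactly rather than estimated from digitised figures or rounded summary statistics in tables.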

One issue that emerges when considering the lack of replication is the nature of the data used to reach conclusions. In some cases there is conscious or unconscious data selection rather than reporting on the entire data set. For example, in addition to the removal of outliers, perhaps only the most interesting data subsets were used or, more subtly, some data or some variables may have been discarded without being mentioned in the paper. If data were selected for any reason, the selection methods should be reported with justification, so that readers can assess the data selection along with the rest of the paper. For a discussion, see Simmons et al. (2011) and Parker and Nakagawa (2014).
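A minimal simulation shows why undisclosed selection among variables matters. The Python sketch below assumes, as a deliberate simplification of the scenarios discussed by Simmons et al. (2011), that a study with no true effect measures k independent outcome variables, tests each with a two-sided z-test at alpha = 0.05, and reports the study as "significant" if any one outcome is. The function name false_positive_rate and all settings are hypothetical.

```python
import random


def false_positive_rate(k_outcomes, n_studies=20000, z_crit=1.96, seed=3):
    """Share of null studies that report at least one 'significant' outcome.

    Assumes k independent outcome variables, each tested with a two-sided
    z-test, and no true effect on any of them.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Under the null hypothesis each z statistic is standard normal.
        z_values = [rng.gauss(0.0, 1.0) for _ in range(k_outcomes)]
        if any(abs(z) > z_crit for z in z_values):
            hits += 1
    return hits / n_studies


for k in (1, 3, 5):
    expected = 1 - 0.95 ** k  # analytic rate for k independent tests at alpha = 0.05
    print(k, round(false_positive_rate(k), 3), round(expected, 3))
```

With one pre-specified outcome the false-positive rate stays near the nominal 5%, but quietly choosing among three or five outcomes raises it to roughly 14% or 23%. Reporting the full data set, and any selection made from it, lets readers judge this inflation for themselves.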

Consequently there are three policy changes that apply to every class of paper in Evolutionary Ecology (original papers, new tests of existing ideas, and evolutionary ecology natural history): (1) Authors should confirm that they are reporting on their full data set and, if not, state exactly how and why they chose a subset of the data. (2) Authors are strongly requested to publish the data used to reach their conclusions, either as an online appendix or in a data archive such as Dryad. Unlike at some journals, this is not required, because the publisher (Springer) will not pay for Dryad submissions, and some authors may wish to use their data in future publications and may therefore wish to keep the data confidential. However, archives such as Dryad allow an embargo date to be specified. (3) For the two new classes of papers, please email the editor before submitting to confirm that the paper is appropriate for Evolutionary Ecology; this will avoid rejection without review.

References

Egset CK, Bolstad GH, Rosenqvist G, Endler JA, Pélabon C (2011) Geographic variation in allometry in the guppy (Poecilia reticulata). J Evol Biol 24:2631–2638

Endler JA (1992) Editorial on publishing papers in evolution. Evolution 46:1984–1989

Glickman ME, Rao SR, Schultz MR (2014) False discovery rate control is a recommended alternative to Bonferroni-type adjustments in health studies. J Clin Epidemiol 67:850–857

Nakagawa S (2004) A farewell to Bonferroni: the problems of low statistical power and publication bias. Behav Ecol 15:1044–1045

Nakagawa S, Poulin R (eds) (2012) Meta-analytic insights into evolutionary ecology, special issue. Evol Ecol 26:1085–1276

Parker TH, Nakagawa S (2014) Mitigating the epidemic of type I error: ecology and evolution can learn from other disciplines. Front Ecol Evol 2:1–3. doi:10.3389/fevo.2014.00076

Simmons JP, Nelson LD, Simonsohn U (2011) False-positive psychology: undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychol Sci 22:1359–1366. doi:10.1177/0956797611417632

Strunk W Jr, White EB (1999) The elements of style, 4th edn. Longmans, London

Weissgerber TL, Milic NM, Winham SJ, Garovic VD (2015) Beyond bar and line graphs: time for a new data presentation paradigm. PLoS Biol 13(4):e1002128. doi:10.1371/journal.pbio.1002128

Zuur AF, Ieno EN, Elphick CS (2010) A protocol for data exploration to avoid common statistical problems. Methods Ecol Evol 1:3–14. doi:10.1111/j.2041-210X.2009.00001.x

Acknowledgments

I am very grateful for interesting discussions with Shinichi Nakagawa and for excellent and useful comments and suggestions from Carolien de Kovel, Shinichi Nakagawa and Tom Reader.

Cite this article

Endler, J.A. Writing scientific papers, with special reference to Evolutionary Ecology. Evol Ecol 29, 465–478 (2015). https://doi.org/10.1007/s10682-015-9773-8

DOI: https://doi.org/10.1007/s10682-015-9773-8