Abstract

This study was performed in order to improve the estimation accuracy of atmospheric ammonia (NH3) concentration levels in the Greater Houston area during extended sampling periods. The approach is based on selecting the appropriate penalty coefficient C and kernel parameter σ 2. These parameters directly influence the regression accuracy of the support vector machine (SVM) model. In this paper, two artificial intelligence techniques, particle swarm optimization (PSO) and a genetic algorithm (GA), were used to optimize the SVM model parameters. Data regarding meteorological variables (e.g., ambient temperature and wind direction) and the NH3 concentration levels were employed to develop our two models. The simulation results indicate that both PSO-SVM and GA-SVM methods are effective tools to model the NH3 concentration levels and can yield good prediction performance based on statistical evaluation criteria. PSO-SVM provides higher retrieval accuracy and faster running speed than GA-SVM. In addition, we used the PSO-SVM technique to estimate 17 drop-off NH3 concentration values. We obtained forecasting results with good fitting characteristics to a measured curve. This proved that PSO-SVM is an effective method for estimating unavailable NH3 concentration data at 3, 4, 5, and 6 parts per billion (ppb), respectively. A 4-ppb NH3 concentration had the optimum prediction performance of the simulation results. These results showed that the selection of the set-point values is a significant factor in compensating for the atmospheric NH3 dropout data with the PSO-SVM method. This modeling approach will be useful in the continuous assessment of NH3 sensor discrete data sources.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The determination of the ammonia (NH3) abundance in the atmosphere is important because NH3 leads to the formation of particulate matter, such as ammonium sulfate (NH4)2SO4, ammonium bisulfate (NH4HSO4), ammonium nitrate (NH4NO3), and ammonium chloride (NH4Cl) aerosols, via relevant chemical reactions [78]. In recent decades, industrial developments, motor vehicle activities, and electric utilities have contributed to increased ammonia concentration levels in urban areas [24, 34, 39]. Major NH3 emission sources also include dairy operations, livestock facilities, and lagoons [5, 18, 23, 38, 66, 67, 71, 84]. There exist substantial uncertainties in temporal and spatial variations of NH3 levels across the globe due to the limited ground-based observations, satellite measurements, and large-scale modeling efforts. With the rapid economic expansion and population growth in many countries, global atmospheric NH3 levels are expected to increase in the future largely due to enhanced anthropogenic emissions. To provide sufficient data to researchers in the field of atmospheric chemistry and physics, long-term atmospheric NH3 concentration measurements and predictions are important. To date, NH3 has not been regulated under the National Ambient Air Quality Standards by the US Environmental Protection Agency.

There are many measurement techniques for ambient concentrations of atmospheric NH3 including autonomous and manual wet chemistry detection techniques, such as bulk denuder techniques [22, 41], wet effluent diffusion denuder (WEDD) techniques [22], and NH3online analyzers [9, 20, 72, 73, 85]. The main drawbacks of manual methods include low temporal resolution, low sampling frequency, and manual handling errors during the periods of specimen preparation, analysis, and the storage process.

Newly developed NH3 spectroscopic instruments include quantum cascade laser absorption spectrometers (QCLASs) [81], photo-acoustic spectrometers (PAS) [32], cavity ring down spectrometers (CRDS) [55], chemical ionization mass spectrometers (CIMS) [61], ion mobility spectrometers (IMS) [56], and open-path Fourier transform infrared (OP-FTIR) spectrometers [26]. The precision of NH3 measurements ranges from 0.018 to 0.94 ppb [10].

It is important to take into account several sensor factors that exist due to NH3 adhesion properties by material surfaces [87], resulting in a slow inlet response time of the various spectrometers mentioned above. Furthermore, NH3 is present in the gaseous, particulate, and liquid phases, which adds further complexity to its measurement [80]. The measurement technique should be specific to the gas phase and should not modify the gas-aerosol equilibrium that depends on environmental conditions. Hence, some NH3 measurement data may be missed or incorrect during continuous long-term monitoring. In addition, to predict and track the trend of NH3 concentration levels, an accurate measurement model is desirable due to the close relationship between NH3 and environmental factors such as ambient temperature, wind direction, wind speed, and relative humidity. Long-term and online atmospheric NH3 concentration detection is challenging due to its uncertain, nonlinear, and dynamic characteristics. Therefore, estimating atmospheric NH3 concentration levels accurately and modeling the correlation between NH3 and environmental factors are essential to investigate air pollution sources.

Recent research proved that artificial neural networks (ANNs) have parallel processing capabilities with high error tolerance and noise filtering. ANN has been applied to establish nonlinear models [15, 30, 54, 64]. For example, in atmospheric chemistry and physics, there are numerous ANN applications with various algorithms reported in the literature [1, 2, 8, 19, 43, 44, 64, 77, 82, 83]. However, reports regarding the ANN approach for prediction of NH3 emission are still limited. Plochl [65] presented the ANN method for estimating parameters E max and K m which determined the nonlinear Michaelis-Menten-like function. With this function, the accumulated NH3 emission level was demonstrated for manure field applications. The same study suggested that an accuracy assessment of the NH3 emission could be obtained from field-applied manure using the feedforward back-propagation ANN method [45]. A recent paper addressed applying ANN as a predictive instrument for modeling NH3 emissions released in sewage sludge [11]. Previous ANN-based NH3 predictions primarily focus on the characterization of NH3 emissions from agricultural activities such as fertilizer applications. In this study, the reported work simulates the temporal variation of NH3 levels in the atmosphere rather than near strong emission sources. In addition, an ANN nonlinear model may be influenced by getting trapped in local minima, over-fitting, complex architecture structure, and random initializing weights [60, 63].

Support vector machine (SVM) is a machine learning method that adopts the structure of a risk minimization principle, whereas ANN implements empirical risk minimization to reduce the errors in the training data. The SVM model produced smaller maximum absolute error (MAE) values compared to ANN [52]. SVM seeks to minimize the upper bound of the generalization error instead of the empirical error, as in conventional neural networks [25, 37, 50, 51]. SVM contains fewer free parameters compared to the ANN model. Thus, SVM based on statistical learning theory has been used in many applications of machine learning because of its high accuracy and good generalization capabilities. An optimal SVM forecasting model is obtained by choosing a kernel function, setting the kernel parameters σ 2, and determining a soft margin constant s and a loss parameter ε [49]. The selection of these parameters has a significant impact on the forecasting accuracy [53].

Most optimization techniques, such as grid algorithm and genetic algorithm (GA), have been used to make the numerical output more accurate with suitable SVM parameters. The grid algorithm is slow and hence does not compare well with GA.

In contrast, the GA is fast and can yield the optimal solution [6, 58]. In addition to GA, the PSO algorithm has been used to optimize the SVM parameters [3, 47, 59]. The PSO method is initialized with a population of random solutions, called particles, for the optimization process. In PSO, each particle searches the space at a velocity that is dynamically adjusted according to its present characteristic behavior and its historical behavior. It was demonstrated that SVM has a better prediction performance than ANN in forecasting ambient air pollution trends, but a relevant investigation for atmospheric NH3 concentration levels using this approach was not available in the published literature. Thus, this work introduces PSO and GA as optimization techniques to simulate and optimize the SVM parameters to obtain an accurate NH3 concentration model.

2 Materials and Methods

2.1 Data Collection and Sampling Site

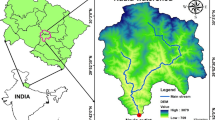

The sampling site for the reported atmospheric NH3 measurements is located atop the University of Houston (UH) Moody Tower (29° 71′ 76″ N, 95° 34′ 13″ W, as shown in Fig. 1), with a height of ~70 m as shown in Fig. 2. Our NH3 sensor was located at the UH Moody Tower monitoring site, which is administrated by the UH and Texas Commission on Environmental Quality (TCEQ). NH3 measurements were conducted from July 22 to September 22, 2012.

During the measurement period, a sensor system based on a 10.4-μm external cavity quantum cascade laser (EC-QCL) and PAS was used [28, 29]. Hourly averaged data are reported in the following sections. The meteorological parameters (e.g., temperature, wind direction, wind velocity, and relative humidity) as well as mixing ratios of other important air pollutants (e.g., oxides of nitrogen (NOx), total reactive nitrogen species (NOy), carbon monoxide (CO), and sulfur dioxide (SO2)) are measured routinely by the instrumentation at the UH Moody Tower monitoring site. Relevant data and associated information can be downloaded from the UH database and are also provided by the Texas Air Monitoring Information System (TAMIS) Web Interface (http://www5.tceq.state.tx.us/tamis/index.cfm?fuseaction=home.welcome).

2.2 Support Vector Machines

SVM was introduced by Vapnik in 1995, based on the statistic learning theory (SLT) and structural risk minimization (SRM). The SVM is one of the best especially for binary classification, which can also be applied to regression algorithms [86].

A set of training data S n = {(x 1, y 1), (x 2, y 2), …, (x n , y n )} is given, where x i ∈ R p is a p dimensional vector of input variables, y i is the desired output value, and n denotes the total number of samples in the data set. To implement the regression calculation, the SRM principle constructs the following optimum decision function in the higher dimensional feature space:

where w = (w 1, w 2, ⋯ w p ) is a p dimensional vector of weights in feature space and b is a scalar threshold. 〈*, *〉 denotes the inner product. A nonlinear function estimation in the original space becomes a linear function estimation in feature space via mapping of the inner product.

The regression function is the solution of a mathematical programming problem. We obtained the following optimized solution:

The corresponding constraints are

A linear ε-insensitive loss function is selected in the standard SVM regression algorithm. Resulting in possible errors and introducing two slack variables ζ i , ζ * i ≥ 0 ⋅ (i = 1, 2, ⋯, l),, the optimization regression function transforms into the following:

Minimize

subject to \( \left\{\begin{array}{l}{y}_i-\left\langle w,x\right\rangle -b\le \varepsilon +{\zeta}_i\\ {}\left\langle w,x\right\rangle +b-{y}_i\le \varepsilon +{\zeta}_i^{*}\\ {}{\zeta}_i,{\zeta}_i^{*}\ge 0\end{array}\right.. \) where ζ i , ζ * i are the slack variables.

The optimized solution of Eq. (4) is given by the Lagrangian saddle point:

where a i , a * i , η i , and η * i are the Lagrangian multipliers.

For the optimal conditions,

Substituting the results of Eq. (6) into Eq. (5), the maximum objective functionals are given as

subject to \( {\displaystyle \sum_{i=1}^n\left({a}_i-{a}_i^{*}\right)=0} \) and 0 ≤ a i , a * i ≤ c.

Only a few of the a i and a * i terms are not equal to zero. We call the samples x i support vectors. Equation (1) can be combined with Eq. (7) to yield

Based on the Mercer’s condition [76], the kernel function k(x i , x) for 〈x i , x〉 in Eq. (7) allows us to reformulate the SVM algorithm by a nonlinear paradigm as with

under constraint conditions \( {\displaystyle \sum_{i=1}^n\left({a}_i-{a}_i^{*}\right)=0} \) and 0 ≤ a i , a * i ≤ c.

From Eq. (1), we have

The kernel functions treated by SVMs are functions with linear, polynomial, or Gaussian radial basis; exponential radial basis; and splines [4, 7, 12, 17, 48, 62, 68–70, 74, 79]. The Gaussian radial basis function that was used in this study can be defined as

where σ is the Gaussian radial basis kernel function width.

The advantages of Gaussian radial basis kernel function are that it is computationally simpler than the other function types and nonlinearly maps the training data into an infinite-dimensional space. Optimizing parameters will become complex polynomial basis function or sigmoid function because two variables need determination in polynomial basis function and sigmoid function, respectively. Thus, it can handle situations in which the relation between input and output variables is nonlinear [21, 46, 62, 74].

2.3 Particle Swarm Optimization

Kennedy and Eberhart [40] developed the first PSO algorithm. In PSO, a set of potential solutions, named the population, searches the optimal outputs in multidimensional space. Each potential solution, called a particle (individual), is given a random velocity and position. The particle has a memory mechanism to keep track of its previous best position (called the p best ) and its degree of fit. In the evolution process of particles, the agent with greatest fitness is called the global best (g best ). The velocity of each particle is represented as V i = (v i1, v i2, ⋯ v iD ) and is updated according to the inertia motion.

The velocity of a particle gradually approaching p best and g best can be calculated according to Eq. (12). The current position (searching point in solution space) can be modified by Eq. (13) where v k id and x k id are the velocity and position of the particle i at iteration k, respectively; v k + 1 id and x k + 1 id are the modified velocity and position at iteration k + 1, respectively; rand are the random variables with range [0,1]; p id is the p best of particle i while p gd is the g best among all particles in the population; the inertia weight w represents the impact of the previous velocity of the particle on its current one; and k denotes the iteration times. The optimization process is illustrated in Fig. 3.

The acceleration coefficients c 1, c 2 control the maximum step size that pushes each particle toward between p best and g best positions. For low values of c 1 and c 2, particles will migrate far away from target regions before being pulled back. On the other hand, particles show abrupt movement toward or past the target regions for high c 1, c 2 values. Hence, the acceleration constants c 1 and c 2 are often set to be 2.0 as empirical values. The selection of c 1 and c 2 was described in detail in two publications [16, 36].

2.4 Genetic Algorithm

The GA is considered to be a heuristic, stochastic, and combinatorial optimization technique based on biological processes of natural evolution developed by Holland (1975) [33]. Goldberg [27] and Vandernoot [75] discussed the mechanism and robustness of GA in solving nonlinear optimization problems. GAs have been successfully applied to many fields such as optimization design, fuzzy logic control, neural networks, expert systems, and scheduling [13, 14, 31, 42]. The heuristic processes of reproduction, crossover, and mutation are applied probabilistically to discrete decision variables that are coded into binary or real number strings. In this study, the GA is utilized effectively to determine suitable parameters in SVM. The GA has two main processing stages. It starts with coding a set of random solutions as some individual chromosomes represent a part of the solution space. Then, the optimal solution is obtained through multiple iterations. During this stage, mutation, crossover, and recombination are applied to obtain a new generation of chromosomes in which the expected value over all the chromosomes is improved compared to the previous generation. This process continues until the termination criterion (predefined maximum iterations or error accuracy) is met. The most accurate chromosome of the last generation is reported as the final solution.

3 Estimation of Atmospheric NH3 Concentration with Particle Swarm Optimization -Support Vector Machine and Genetic Algorithm-Support Vector Machine

3.1 Modeling Architecture

Assuming atmospheric NH3 concentration data A t at a sampling time t from our sensor system, A t − 1, A t − 2 and A t − 3 denote three lagging variables prior to A t . T t represents the ambient temperature measured by a platinum resistance thermometer (Campbell scientific HMP45C [29]). W t represents the wind direction obtained from a wind monitor (Campbell scientific 05103 R.M. [29]). The input variables in SVM consist of the above-stated five variables. The output is the estimated value A * t which corresponds to the forecasting model output of the NH3 concentration.

The input and output data are normalized to prevent the model from being dominated by variables with large values. The normalization processes of all data were carried out using Eq. (14) and Eq. (15):

where x i(NP), y i(NP) are the input and output values of the normalization process; x i , y i are the original input and output data; x max, x min are the maximum and minimum values of the original input data x i ; and y max, y min are the maximum and minimum values of the original output data y i .

As mentioned above, (A t − 1, A t − 1, A t − 3) NP are normalized by A t − 1, A t − 2 and A t − 3, denoting three lagged atmospheric NH3 data points. The normalized temperature value (T t ) NP and wind direction value (W t ) NP are applied to the SVM modeling architecture, as depicted in Fig. 4.

The measurement data sets are divided into a training set and a testing set. Therefore, PSO-SVM and GA-SVM modeling consists of two procedures: a training model and a testing model. During the training model (Fig. 4a), the data from the training set are applied to the SVM model and its output is computed. Furthermore, the c, σ, and ε parameters are updated using the PSO and GA optimization algorithm. The training procedure continues until the error is reduced to a preset minimum limitation 0.01. The c, σ, and ε parameters of SVM are saved to verify the performance and forecast the model output. In the testing model (Fig. 4b), the stored c, σ, and ε parameters are uploaded into the SVM model. Hence, the estimated output is calculated with the testing data set.

3.2 Support Vector Machine-Based Particle Swarm Optimization for Estimation of NH3 Concentration

The penalty coefficient c and kernel parameters σ affect the modeling accuracy, which must be selected manually. In the simulation process, one particle vector has two components, parameters c and σ of the SVM model. The searching ranges are [10, 10,000] for the penalty coefficient c and [10, 1000] for the kernel parameter σ, respectively. The PSO-SVM is computed in the following steps:

-

1.

Selection of the training and testing sample data. The input parameters include ambient temperature, wind direction, and the delayed atmospheric NH3 concentration. The output value is the predicted NH3 concentration level. The delay time m = 3 is set to reconstruct the phase space, and the insensitive loss function constant ε = 0.2 is determined. The total sample data set includes 250 data points. Each point represents hourly averaged NH3 concentration levels measured during the period from 3 p.m. (local time), July 20, 2012, to 1 a.m., July 31, 2012. The first 60 points are treated as the training data, and the remaining 190 points are treated as the testing data.

-

2.

Selection of the PSO parameters. The particle swarm scale is set to 10, the maximum number of iterations is 50, the dimension space is 2, and the acceleration coefficients are c = 1.6 and c 2 = 1.5. The inertia weight ranges from 0.6 (w min) to 0.9 (w max). The particle initial position x i (0) and the speed v i (0) are random.

-

3.

Definition of the fitness function as in Eq. (16),

where A i is the observational NH3 data, Ã i is the predicted NH3 concentration level, and l is the number of the data points.

-

4.

Iterative optimization to renew location and velocity of each particle in accordance with Eq. (12). If the current position of a particle is better than its previous best position, updating is necessary. Particles are moved to their new positions according to Eq. (13).

-

5.

Training of the PSO-SVM model and evaluating the fitness according to Eq. (16).

-

6.

Calculation of the best particle g best according to the particle’s previous best positions p best .

-

7.

Determination of the terminal criteria. Either the maximum iteration number has been reached or the resultant solution stays constant, which stops the iteration process. The solution space g best is the output when the maximum Fitness best is obtained by comparing all the degree of fitness. The model exits and takes step (8); otherwise, it returns to step (4) until the stopping criteria are met.

-

8.

Acquisition of the optimal parameters of the NH3 concentration PSO-SVM model and the predicted output using the test data.

3.3 Support Vector Machine-Based Genetic Algorithm for Estimating NH3 Concentration

The same sampling data are selected as the PSO method in order to compare the simulation performance between GA and PSO described in Sect. 3.2. The steps of optimizing the SVM with GA are described as follows.

-

1.

Initialization of GA. The population size is selected as 10, the maximum number of iterations is 30, and the vector dimension is 2.

-

2.

Encoding of the SVM parameters. The values of c and σ are represented by a chromosome that consists of binary random numbers. Each bit of the chromosome represents whether the corresponding feature is selected or not. A “1” in each bit refers to the selected feature, whereas a “0” means that no feature is selected. The length of bit strings representing c and σ can be selected according to the required precision.

-

3.

Definition of the searching range of c and σ which are the same as for the PSO-SVM.

-

4.

Setting of the fitness function. A global optimal solution was evaluated, and the accuracy regression result was given by the fitness function (Eq. 16).

-

5.

GA operation and computation. GA uses selection, crossover, and mutation operators to generate the offspring of the existing population. Selection is performed to select excellent chromosomes to reproduce. Based on a fitness function, chromosomes with higher fitness values are more likely to yield offspring in the next generation. Crossover is performed randomly to exchange genes between two chromosomes by means of a single-point crossover principle; the rate of crossover is set to P c = 0.9. Mutation is performed according to the adaptive mutation probability P m = 0.1 − [1 : 1 : M] × 0.01/M.

-

6.

Acquisition of optimal SVM parameters. Offspring replace a previous population and form a new population by the three operations mentioned in step (5). The evolutionary process proceeds until one of the stop conditions, the predefined maximum iterations or the minimum error accuracy, is satisfied. The output optimal values are saved and applied to the testing model to forecast the NH3 concentration level.

3.4 Performance Analysis of Particle Swarm Optimization -Support Vector Machine and Genetic Algorithm-Support Vector Machine

The iteration processes of the fitness function for PSO-SVM and GA-SVM are shown in Fig. 5.

Figure 5a shows that the iteration process for PSO-SVM is terminated when the evolution step number reaches 30. An optimized fitness value (best F) is calculated for each iteration time. The best fitness is 1.7312 at the 20th step of the 30 results. Similarly, the evolution generation for GA-SVM is also 30, as shown in Fig. 5b, which is analogous to the PSO-SVM optimization process. The best fitness parameter is 1.5 at the 16th step, which is deduced by comparing all 30 fitness parameters.

The estimation curves for PSO-SVM and GA-SVM are depicted in Fig. 6. The first 60 data points are selected as modeling training samples, and the remaining 190 data points are applied to the testing model. The estimated desired data points are consistent with the inputs. From the simulation outcome, it is possible to conclude that both PSO-SVM and GA-SVM have good estimating performance because the model outputs match closely with the measured NH3 data.

The absolute error is shown in Fig. 7, which indicates the prediction errors in the two algorithms. The difference of each sample point between the estimated and measured values is described directly by the absolute error curve. It is obvious that the estimated error of GA-SVM is larger than that of PSO-SVM during both the training and testing periods.

Absolute errors between the measured and estimated NH3 concentration levels. The selected intervals with green frame are further shown in detail in Fig. 8a, b

The error bars between the measured NH3 concentrations and the simulation outputs are shown in Fig. 8a. This graph represents the error variability and uses a distributing region to indicate the error or uncertainty. The sample data points for the training model range from 20 to 60. The two graphs in Fig. 8a indicate the accuracy of the measurement or, conversely, how far the range is from the true value.

The pink area of error bar demonstrates how the estimated errors vary around the NH3 measurement data considered as the true value. Figure 8a (I) indicates this for the PSO-SVM algorithm, which shows improved accuracy with a narrow, convergent, and centralized error bar compared to that for the GA-SVM shown in Fig. 8a (II). Furthermore, the PSO-SVM performance is better than GA-SVM during the testing modeling (Fig. 8b), and the error bar curves of total sample data varying 0 to 250 are shown in Fig. 8c. Figure 8 proves that the PSO-SVM has the advantages of stability, high approximation accuracy, and good generalization performance.

The numerical simulation results and detailed performance behavior comparison are listed in Table 1. The penalty coefficient c is 5823, and the kernel parameter σ 2 is 395.98 for PSO-SVM. The penalty coefficient c is 1489, and the kernel parameter σ 2 is 73.87 for GA-SVM for the same prerequisites. With the optimal parameters, the training and testing times of PSO-SVM are 8.83 and 0. 55 s, respectively, which are faster than those of GA-SVM. For the PSO-SVM prediction accuracy, the evaluation indices (root-mean-square error (RMSE), maximum error, and minimum error) are 0.8966, 2.5826, and 0.0452 ppb, respectively, which are superior values compared to GA-SVM. Based on the analysis above, PSO-SVM provides a higher retrieval accuracy with a faster running speed than GA-SVM.

4 Forecasting Missing Data Points of Atmospheric NH3 Concentration Levels with Particle Swarm Optimization-Support Vector Machine

4.1 Sample Data Selection and Simulation

Data collected by the NH3 sensor during the sampling period from 7 p.m. July 30, 2012, to 3 a.m. August 8, 2012, are selected. Due to central wave number shift and electromagnetic perturbation of the laser-based sensor system, 17 data points of NH3 concentration were missing during the period from 7 p.m. August 2, 2012, to 5 p.m. August 3, 2012. The PSO-SVM method with a high estimation accuracy and fast operating speed is used to retrieve the missing data points based on the analysis described in Sect. 3.

The first 60 data points of the 200 sample values acquired during the sampling period were used as support vector samples and in the training model to determine the PSO-SVM interior parameters. The output of the test model was used to compensate the missing values and compared with the previous 140 concentration values measured by the NH3 sensor. The missing points were replaced by 3-, 4-, 5-, and 6-ppb NH3 concentration values, respectively. A statistical analysis of the measurement values shows that 5 ppb is in the middle of the range from 0 to 10 ppb where 98 % of the 200 data points are located.

As shown in the Fig. 9a, the simulation results indicate an estimated curve with a good consistency to the measured curve. This validates that the SVM machine learning algorithm optimized by PSO is an effective tool to retrieve unknown data with less samples. Figure 9b shows the scatter plot of predicted NH3 concentration levels versus measurement data from which the fitting formula and coefficient of determination R 2 can be calculated directly. The same simulations are carried out when the drop-off points are set to 3, 4, and 6 ppb, respectively.

The absolute error curves for the NH3 measurement data and the PSO-SVM prediction values are shown in Fig. 10. The prediction errors have a similar variation for 3, 4, and 5 ppb (see Fig. 10a). The missing data points selected as 4 ppb have minimum absolute errors relative to 5 ppb (Fig. 10b). Under this condition, the optimum estimation accuracy is achieved with a higher coefficient of determination R 2 (Fig. 9b). The absolute errors have the same magnitude but opposite phase when the missing data points were set to 5 and 6 ppb. Based on the analysis described above, the different set values have a significant impact on forecasting atmospheric NH3 concentration values with the PSO-SVM algorithm.

4.2 Evaluation of the Model Behavior

The performance criteria in terms of the RMSE, the weighted mean absolute percentage error (WMAPE), the maximum determination coefficient (R 2), adjusted determination coefficient (adj. R 2) and Nash-Sutcliffe coefficient (NS) are used to evaluate the PSO-SVM model behavior (Eqs. 17, 18, 19, and 20). The RMSE measures the residual between the model prediction values and the observed data. WMAPE is the weighted mean absolute percentage error of the prediction. The coefficient of determination, denoted by R 2, is used to describe how well a regression line fits a data set. R 2 is a number between 0 and 1.0. An adjusted R 2 evaluates the linear relation between desired and forecast data. NS assesses the capability of the model in simulating the output data as compared to mean statistics [57, 79].

where n is the number of data points; A t is the measured NH3 concentration level in the t th period; Â t is the estimated output of the NH3 concentration prediction data in the t th period; Ā and \( \overline{\widehat{A}} \) are the means of the measurement data and forecast data, respectively; and p is the number of the model input. In this study, p was selected to be 5 for A t − 1, A t − 2, A t − 3, T t , W t . The statistical performance indices were calculated and are listed in Table 2. The comparison shows that 4 ppb selected as drop-off point results in the best prediction performance.

5 Conclusions

In this work, we demonstrated the capability and applicability of PSO-SVM for predicting atmospheric NH3 concentrations. In PSO-SVM, the PSO algorithm is used to select a penalty coefficient c and the kernel parameter σ 2 that are key parameters that impact the estimation accuracy of SVM. Furthermore, the GA was investigated to optimize SVM for obtaining suitable interior parameters. Data for five meteorological variables (A t − 1, A t − 2, A t − 3, T t , W t ) were used to develop PSO-SVM and GA-SVM models to estimate the NH3 concentration levels in the Greater Houston area. According to the numerical simulation results, we found that PSO-SVM has good prediction performance because the estimation values were consistent with the observed NH3 data. By comparing the model performance criteria, we concluded that PSO-SVM outperformed GA-SVM for RMSE, MAE, and convergence velocity. An increasing global searching ability of the algorithm during the training and testing periods also was found in PSO-SVM.

Furthermore, we used PSO-SVM to retrieve 17 drop-off points during the sampling period from 7 p.m. July 30, 2012, to 3 a.m. August 8, 2012. Assuming missing NH3 concentration levels at 3, 4, 5, and 6 ppb, the simulation results show an estimation curve that has good fitting characteristics with the measured curve. This confirms that the SVM optimized by PSO can estimate unknown data. The PSO-SVM model with set points of 4 ppb resulted in RMSE and WMAPE reductions, and R 2, adj. R 2, and NS increases relative to the 3, 5, and 6 ppb. From the analysis of simulation results, the selections of the different set values are the major influencing factors in forecasting atmospheric NH3 concentrations based on the PSO-SVM algorithm.

This study indicated that the PSO-SVM algorithm is a feasible robust data mining method to amend imperfect data collected by trace gas sensors and to deduce continuous values between sample points. The general algorithms for robust optimization in data mining, such as partial least squares, linear discriminant analysis, principal component analysis, and SVMs, are reported [35, 86]. We will apply these robust data mining algorithms to improve the uncertainty handling for NH3 sensors in future research. Furthermore, our study has extended the applicability of our NH3 prediction model compared to other models such as the environmental data from publicly assessable input files. This modeling approach will be useful in the continuous assessment of air pollution sources.

References

Agirre-Basurko, E., Ibarra-Berastegi, G., & Madariaga, I. (2006). Regression and multilayer perceptron-based models to forecast hourly O3 and NO2 levels in the Bilbao area. Environmental Modelling and Software, 21(4), 430–446.

Al-Alawi, S. M., Abdul-Wahab, S. A., & Bakheit, C. S. (2008). Combining principal component regression and artificial neural networks for more accurate predictions of ground-level ozone. Environmental Modelling and Software, 23(4), 396–403.

AlRashidi, M. R., & EL-Naggar, K. M. (2010). Long term electric load forecasting based on particle swarm optimization. Applied Energy, 87(1), 320–326.

Anandhi, A., Srinivas, V. V., Nanjundiah, R., & Kumar, N. D. (2008). Downscaling precipitation to river basin in India for IPCC SRES scenarios using support vector machine. International Journal of Climatology, 28(3), 401–420.

Aneja, V. P., Bunton, B., Walker, J. T., & Malik, B. P. (2001). Measurement and analysis of atmospheric ammonia emissions from anaerobic lagoons. Atmospheric Environment, 35(11), 1949–1958.

Antanasijević, D., Pocajt, V., Povrenović, D., Perić-Grujić, A., & Ristić, M. (2013). Modelling of dissolved oxygen content using artificial neural networks: Danube River, North Serbia, case study. Environmental Science and Pollution Research, 20(12), 9006–9013.

Ayat, N. E., Cheriet, M., & Suen, C. Y. (2005). Automatic model selection for the optimization of SVM kernels. Pattern Recognition, 38(10), 1733–1745.

Berastegi, G. I., Elias, A., Barona, A., Saenz, J., Ezcurra, A., & Argandoña, J. D. (2008). From diagnosis to prognosis for forecasting air pollution using neural networks: air pollution monitoring in Bilbao. Environmental Modelling & Software, 23(5), 622–637.

Blatter, A., Neftel, A., Dasgupta, P. K., & Simon, P. K. (1994). A combined wet effluent denuder and mist chamber system for deposition measurements of NH3, NH4, HNO-3 and NO3. In G. Angeletti & G. Restelli (Eds.), Physicochemical behaviour of atmospheric pollutants (pp. 767–772). Brussels: European Commission.

Bobrutzki, K. V., Braban, C. F., Famulari, D., Jones, S. K., Blackall, T., Smith, T. E. L., Blom, M., Coe, H., Gallagher, M., Ghalaieny, M., McGillen, M. R., Percival, C. J., Whitehead, J. D., Ellis, R., Murphy, J., Mohacsi, A., Pogany, A., Junninen, H., Rantanen, S., Sutton, M. A., & Nemitz, E. (2010). Field inter-comparison of eleven atmospheric ammonia measurement techniques. Atmospheric Measurement Techniques, 3, 91–112.

Boniecki, P., Dach, J., Pilarski, K., & Piekarska-Boniecka, H. (2012). Artificial neural networks for modeling ammonia emissions released from sewage sludge composting. Atmospheric Environment, 57(9), 49–54.

Bray, M., & Han, D. (2004). Identification of support vector machines for runoff modeling. Journal of Hydroinformatics, 6, 265–280.

Breban, S., Saudemont, C., Vieillard, S., & Robyns, B. (2013). Experimental design and genetic algorithm optimization of a fuzzy-logic supervisor for embedded electrical power systems. Mathematics and Computers in Simulation, 91(5), 91–107.

Caldas, L. G., & Norford, L. K. (2002). A design optimization tool based on a genetic algorithm. Automation in Construction, 11(2), 173–184.

Chelani, A. B., Chalapati Rao, C. V., Phadke, K. M., & Hasan, M. Z. (2002). Prediction of sulphur dioxide concentration using artificial neural networks. Environmental Modelling and Software, 17(2), 159–166.

Cherkassky, V., & Ma, Y. (2004). Practical selection of SVM parameters and noise estimation for SVM regression. Neural networks, 17(1), 113–126.

Cimen, M. (2008). Estimation of daily suspended sediments using support vector machines. Hydrological Sciences Journal, 53(3), 656–666.

Clarisse, L. D., Hurtmans, A. J., Prata, F., Karagulian, C., Clerbaux, M. D., & Mazière, P. F. (2010). Retrieving radius, concentration, optical depth, and mass of different types of aerosols from high-resolution infrared nadir spectra. Applied Optic, 49(19), 3713–3722.

Dutot, A. L., Rynkiewicz, J., Steiner, F. E., & Rude, J. (2007). A 24-h forecast of ozone peaks and exceedance levels using neural classifiers and weather predictions. Environmental Modelling & Software, 22(9), 1261–1269.

Erisman, J. W., Hensen, A., Otjes, R., Jongejan, P., Moels, H., Slanina, J., Khlystov, A., & Bulk, P. v. (2001). Instrument development and application in studies and monitoring of ambient ammonia. Atmospheric Environment, 35, 1913–1922.

Fei, S. W., Wang, M. J., Miao, Y. B., Tu, J., & Liu, C. L. (2009). Particle swarm optimization-based support vector machine for forecasting dissolved gases content in power transformer oil. Energy Conversion and Management, 50, 1604–1609.

Ferm, M. (1979). Method for determination of atmospheric ammonia. Atmospheric Environment, 13(10), 1385–1393.

Ferm, M., De Santis, F., & Varotsos, C. (2005). Nitric acid measurements in connection with corrosion studies. Atmospheric Environment, 39, 6664–6672.

Fraser, M., & Cass, G. (1998). Detection of excess ammonia emissions from in use vehicles and the implications for fine particle control. Environ. Sci. Technol, 32(8), 1053–1057.

Gao, C., Bompard, E., Napoli, R., & Cheng, H. (2007). Price forecast in the competitive electricity market by support vector machine. Physica A: Statistical Mechanics and its Applications, 382(1), 98–113.

Gelle, G., Colas, M., & Delaunay, G. (2000). Blind sources separation applied to rotating machines monitoring by acoustical and vibrations analysis. Mechanical Systems and Signal Processing, 14(3), 427–442.

Goldberg, D. E. (1989). Genetic algorithm in search, optimization and machine learning. Harlow, England: Addison-Wesley.

Gong, L. (2013). Atmospheric ammonia measurements and implications for particulate matter formation in urban and suburban areas of Texas. Ph.D Thesis, Rice University.

Gong, L., Lewicki, R., Griffin, R. J., Flynn, J. H., Lefer, B. L., & Tittel, F. K. (2011). Atmospheric ammonia measurements in Houston, TX using an external-cavity quantum cascade laser-based sensor. Atmospheric Chemistry and Physics, 11, 9721–9733.

Grivas, G., & Chaloulakou, A. (2006). Artificial neural network models for predictions of PM10 hourly concentrations in greater area of Athens. Atmospheric Environment, 40(7), 1216–1229.

Hamid, S., & Mirhosseyni, L. (2009). A hybrid fuzzy knowledge-based expert system and genetic algorithm for efficient selection and assignment of material handling equipment. Expert Systems with Applications, 36(9), 11875–11887.

Harren, F. J. M., Cotti, G., Oomens, J., & Lintel Hekkert, S. (2000). Photoacoustic spectroscopy. In R. A. Meyers (Ed.), Trace gas monitoring. Encyclopedia of analytical chemistry (pp. 2203–2226). Chichester: John Wiley & Sons Ltd.

Holland, J. H. (1975). Adoption in neural and artificial systems. Ann Arbor, MI, USA: The University of Michigan Press.

Hsieh, L. T., & Chen, T. C. (2010). Characteristics of ambient ammonia levels measured in three different industrial parks in southern Taiwan. Aerosol and Air Quality Research, 10, 596–608.

Huan, X., Constantine, C., & Shie, M. (2009). Robustness and regularization of support vector machines. Journal of Machine Learning Research, 10, 1485–1510.

Huang, C. L., & Dun, J. F. (2008). A distributed PSO-SVM hybrid system with feature selection and parameter optimization. Applied Soft Computing, 8(4), 1381–1391.

Kanevski, M., Parkin, R., Pozdnukhov, A., Timonin, V., Maignan, M., Demyanov, V., & Canu, S. (2004). Environmental data mining and modeling based on machine learning algorithms and geostatistics. Environmental Modelling & Software, 19(9), 845–855.

Kawashima, S., & Yonemura, S. (2001). Measuring ammonia concentration over a grassland near livestock facilities using a semiconductor ammonia sensor. Atmospheric Environment, 35(22), 3831–3839.

Kean, A. J., Littlejohn, D., Ban-Weiss, G. A., Harley, R. A., Kirchstetter, T. W., & Lunden, M. M. (2009). Trends in on-road vehicle emissions of ammonia. Atmospheric Environment, 43(8), 1565–1570.

Kennedy, J., & Eberhart, R. C. (1995). Particle swarm optimization. In IEEE International Conference on Neural Networks, 4 (pp. 1942–1948).

Keuken, M. P., Schoonebeek, C. A. M., Wensveen-Louter, A. V., & Slanina, J. (1988). Simultaneous sampling of NH3, HNO3, HCl, SO2 and H2O2 in ambient air by wet annular denuder system. Atmospheric Environment, 22(11), 2541–2548.

Kim, H. J., & Shin, K. S. (2007). Hybrid approach based on neural networks and genetic algorithms for detecting temporal patterns in stock markets. Applied Soft Computing, 7(2), 569–576.

Kolehmainen, M., Martikainen, H., Hiltunen, T., & Ruuskanen, J. (2000). Forecasting air quality parameters using hybrid neural network modeling. Environmental Monitoring and Assessment, 65, 277–286.

Kukkonen, J., Partanen, L., Karpinen, A., Ruuskanen, J., Junninen, H., Kolehmainen, M., Niska, H., Dorling, S., Chatterton, T., Foxall, R., & Cawley, G. (2003). Extensive evaluation of neural networks models for the prediction of NO2 and PM10 concentrations, compared with a deterministic modeling system and measurements in central Helsinki. Atmospheric Environment, 37(32), 4539–4550.

Lim, Y., Moon, Y. S., & Kim, T. W. (2007). Artificial neural network approach for prediction of ammonia emission from field-applied manure and relative significance assessment of ammonia emission factors. European Journal of Agronomy, 26(4), 425–434.

Lin, J. Y., Cheng, C. T., & Chau, K. W. (2006). Using support vector machines for long-term discharge prediction. Hydrological Sciences Journal, 51(4), 599–612.

Lin, S. W., Ying, K. C., Chen, S. C., & Lee, Z. J. (2008). Particle swarm optimization for parameter determination and feature selection of support vector machines. Expert Systems with Applications, 35(4), 1817–1824.

Liong, S. Y., & Sivapragasam, C. (2002). Flood stage forecasting with support vector machines. Journal of the American Water Resources Association, 38(1), 173–186.

Liu, C. C., & Chuang, K. W. (2009). An outdoor time scenes simulation scheme based on support vector regression with radial basis function on DCT domain. Image and Vision Computing, 27(10), 1626–1636.

Liu, L. X., Zhuang, Y. Q., & Xue, Y. L. (2011). Tax forecasting theory and model based on SVM optimized by PSO. Expert Systems with Applications, 38(1), 116–120.

Lu, C. J., Lee, T. S., & Chiu, C. C. (2009). Financial time series forecasting using independent component analysis and support vector regression. Decision Support Systems, 47(2), 115–125.

Lua, W. Z., & Wang, W. J. (2005). Potential assessment of the “support vector machine” method in forecasting ambient air pollutant trends. Chemosphere, 59(5), 693–701.

Mahdevari, S., Haghighat, H. S., & Torabi, S. R. (2013). A dynamically approach based on SVM algorithm for prediction of tunnel convergence during excavation. Tunnelling and Underground Space Technology, 38, 59–68.

Maier, H. R., & Dandy, G. C. (2000). Neural networks for the prediction and forecasting of water resources variables: a review of modelling issues and applications. Environmental Modelling and Software, 15(1), 101–124.

Manne, J., Jager, W., & Tulip, J. (2006). Pulsed quantum cascade laser-based cavity ring-down spectroscopy for ammonia detection in breath. Applied Optics, 45(36), 9230–9237.

Myles, L., Meyers, T. P., & Robinson, L. (2006). Atmospheric NH3 measurement with an ion mobility spectrometer. Atmospheric Environment, 40(30), 5745–5752.

Nayak, P. C., Sudheer, K. P., Rangan, D. M., & Ramasastri, K. S. (2004). Short-term flood forecasting with a neurofuzzy model. Water Resources Research, 41, W04004. doi:10.1029/2004WR003562.

Niska, H., Heikkinen, M., & Kolehmainen, M. (2006). Genetic algorithms and sensitivity analysis applied to select inputs of a multi-layer perceptron for the prediction of air pollutant time-series. Lecture Notes in Computer Science, 4224, 224–231.

Niu, D., Li, J., Li, J., & Liu, D. (2009). Middle-long power load forecasting based on particle swarm optimization. Computers & Mathematics with Applications, 57(11–12), 1883–1889.

Noori, R., Khakpour, A., Omidvar, B., & Farokhnia, A. (2010). Comparison of ANN and principal component analysis-multivariate linear regression models for predicting the river flow based on developed discrepancy ratio statistic. Expert Systems with Applications, 37, 5856–5862.

Nowak, J. B., Huey, L. G., Russell, A. G., Tian, D., Neuman, J. A., Orsini, D., Sjostedt, S. J., Sullivan, A. P., Tanner, D. J., Nenes, A., Edgerton, E., & Fehsenfeld, F. C. (2006). Analysis of urban gas phase ammonia measurements from the 2002 Atlanta Aerosol Nucleation and Real-Time Characterization Experiment (ANARChE). Journal of Geophysical Research: Atmospheres, 111, D17308. doi:10.1029/2006JD007113.

Okkan, U. (2012). Performance of least squares support vector machine for monthly reservoir inflow prediction. Fresenius Environmental Bulletin, 21(3), 611–620.

Okkan, U., & Serbes, Z. A. (2012). Rainfall-runoff modeling using least squares support vector machines. Environmetrics, 23, 549–564.

Ordieres, J. B., Vergara, E. P., Capuz, R. S., & Salazar, R. E. (2005). Neural network prediction model for fine particulate matter (PM2.5) on the US-Mexico border in El Paso (Texas) and Ciudad Juarez (Chihuahua). Environmental Modelling and Software, 20(5), 547–559.

Plöchl, M. (2001). Neural network approach for modelling ammonia emission after manure application on the field. Atmospheric Environment, 35(33), 5833–5841.

Pryor, S. C., Barthelmie, R. J., Sørensen, L. L., & Jensen, B. (2001). Ammonia concentrations and fluxes over a forest in the midwestern USA. Atmospheric Environment, 35(32), 5645–5656.

Rumburg, B., Mount, G. H., Filipy, J., Lamb, B., Westberg, H., Yonge, D., Kincaid, R., & Johnson, K. (2008). Measurement and modeling of atmospheric flux of ammonia from dairy milking cow housing. Atmospheric Environment, 42(14), 3364–3379.

Samui, P. (2008). Slope stability analysis: a support vector machine approach. Environmental Geology, 56, 255–267.

Samui, P. (2011). Application of least square support vector machine (LSSVM) for determination of evaporation losses in reservoirs. Scientific Research, Engineering, 3, 431–434.

Samui, P. (2011). Least square support vector machine and relevance vector machine for evaluating seismic liquefaction potential using SPT. Natural Hazards, 59, 811–822.

Sarwar, G., Corsi, R. L., Kinney, K. A., Banks, J. A., Torres, V. M., & Schmidt, C. (2005). Measurements of ammonia emissions from oak and pine forests and development of a non-industrial ammonia emissions inventory in Texas. Atmospheric Environment, 39(37), 7137–7153.

Simon, P. K., Dasgupta, P. K., & Vecera, Z. (1991). Wet effluent denuder coupled liquid/ion chromatography systems. Analytical Chemistry, 63(13), 1237–1242.

Simon, P. K., & Dasgupta, P. K. (1993). Wet effluent denuder coupled liquid/ion chromatography systems: annular and parallel plate denuders. Analytical Chemistry, 65(9), 1134–1139.

Tripathi, S., Srinivas, V. V., & Nanjundiah, R. S. (2006). Downscaling of precipitation for climate change scenarios: a support vector machine approach. Journal of Hydrology, 330, 621–640.

VanderNoot, T. J., & Abrahams, I. (1998). The use of genetic algorithms in the non-linear regression of immittance data. Journal of Electro Analytical Chemistry, 448, 17–23.

Vapnik, V. (1999). The nature of statistical learning theory. New York: Springer–Verlag.

Voukantsis, D., Karatzas, K., Kukkonen, J., Räsänen, T., Karppinen, A., & Kolehmainen, M. (2011). Intercomparison of air quality data using principal component analysis, and forecasting of PM10 and PM2.5 concentrations using artificial neural networks, in Thessaloniki and Helsinki. Science of the Total Environment, 409, 1266–1276.

Walker, J. T., Whitall, D. R., Robarge, W., & Paerl, H. W. (2004). Ambient ammonia and ammonium aerosol across a region of variable ammonia emission density. Atmospheric Environment, 38(9), 1235–1246.

Wang, C. W., Chau, K. W., Cheng, C. T., & Qiu, L. (2009). A comparison of performance of several artificial intelligence methods for forecasting monthly discharge time series. Journal of Hydrology, 374, 294–306.

Warneck, P. (1988). Chemistry of the natural atmosphere. New York: Academic Press.

Whitehead, J. D., Longley, I. D., & Gallagher, M. W. (2007). Seasonal and diurnal variation in atmospheric ammonia in an urban environment measured using a quantum cascade laser absorption spectrometer. Water, Air, and Soil Pollution, 183, 317–329.

Wieland, R., & Mirschel, W. (2008). Adaptive fuzzy modeling versus artificial neural networks. Environmental Modelling & Software, 23(2), 215–224.

Wieland, R., Mirschel, W., Zbell, B., Groth, K., Pechenick, A., & Fukuda, K. (2010). A new library to combine artificial neural networks and support vector machines with statistics and a database engine for application in environmental modeling. Environmental Modelling & Software, 25(4), 412–420.

Wilson, S. M., & Serre, M. L. (2007). Use of passive samplers to measure atmospheric ammonia levels in a high-density industrial hog farm area of eastern North Carolina. Atmospheric Environment, 41(28), 6074–6086.

Wyers, G. P., Otjes, R. P., & Slanina, J. (1993). A continuous flow denuder for the measurement of ambient concentrations and surface fluxes of NH3. Atmospheric Environment, 27(13), 2085–2090.

Xanthopoulos, P., Pardalos, P. M., & Trafalis, T. B. (2013). Robust data mining. Springer.

Yokelson, R. J., Bertschi, I. T., Christian, T. J., Hobbs, P. V., Ward, D. E., & Hao, W. M. (2002). Trace gas measurements in nascent, aged, and cloud processed smoke from African savanna fires by airborne Fourier transform infrared spectroscopy (AFTIR). Geophysical Research: Atmospheres, 108(D13), 8472. doi:10.1029/2002JD002100.

Acknowledgments

This study was supported by the Mid-InfraRed Technologies for Health and the Environment (MIRTHE) Center and National Science Foundation (NSF) under grant no. EEC-0540832. It was also supported by the Natural Science Foundation of China (Grant No. 31470715 and Grant No. 31470714) and international advanced forestry science and technology plan (2013-4-58). The authors gratefully acknowledge Texas Commission on Environmental Quality for supplying the relevant data (http://www5.tceq.state.tx.us/tamis/index.cfm?fuseaction=home.welcome) used in this publication.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Author Contributions

J. Zhang and F.K. Tittel designed the research plan and the preparation of this manuscript. R.J. Griffin and L. Gong provided environmental and atmospheric background knowledge. R. Lewicki developed the EC-QCL sensor architecture to measure atmospheric NH3 concentration levels. J. Zhang and W. Jiang compiled the model coding and carried out numerical simulations. M. Li and B. Jiang provided programming support.

Rights and permissions

About this article

Cite this article

Zhang, J., Tittel, F.K., Gong, L. et al. Support Vector Machine Modeling Using Particle Swarm Optimization Approach for the Retrieval of Atmospheric Ammonia Concentrations. Environ Model Assess 21, 531–546 (2016). https://doi.org/10.1007/s10666-015-9495-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10666-015-9495-x