Abstract

In large-scale collaborative software development, building a team of software practitioners can be challenging, mainly due to the overwhelming number of candidate members available to fill each role. Furthermore, having to understand all members’ diverse backgrounds and anticipate team compatibility could significantly complicate and slow down the team formation process. Current solutions that aim to automatically suggest software practitioners for a task merely target particular roles, such as developers, reviewers, and integrators. While these existing approaches could alleviate issues presented by choice overload, they fail to address team compatibility while members collaborate. In this paper, we propose RECAST, an intelligent recommendation system that suggests team configurations that satisfy not only the role requirements, but also the necessary technical skills and teamwork compatibility, given a task description and a task assignee. Specifically, RECAST uses Max-Logit to intelligently enumerate and rank teams based on their team-fitness scores. Machine learning algorithms are adapted to generate a scoring function that learns from heterogeneous features characterizing effective software teams in large-scale collaborative software development. RECAST is evaluated against a state-of-the-art team recommendation algorithm using three well-known open-source software project datasets. The evaluation results are promising, illustrating that our proposed method outperforms the baselines in terms of team recommendation, with a 646% improvement in Mean Reciprocal Rank (MRR) under the exact-match evaluation protocol.

1 Introduction

Collaborative software development has become a new norm in the industry as software systems become larger and more complex, requiring not only teams of software practitioners with various technical backgrounds, but also a systematic and managerial approach to track progress and issues (Gharehyazie and Filkov 2017; Mistrík et al. 2010). To facilitate such processes, online software tracking tools have been developed, such as Jira, Backlog, and Bugzilla, that not only enable practical, systematic software development tracking, but also develop and encourage growing communities of software engineers and developers. Often, such communities of software practitioners can become so massive that it presents challenges for task assignees needing to choose potential team members to work on a particular software development task (e.g., building new features, resolving bugs, and integrating software).

Research has shown that ineffective software teams could lead to delayed or unsuccessful projects. In particular, assigning software development tasks to ineffective team members (e.g., developer, tester, and reviewer) may lead to low-quality outcomes and extra rework costs (i.e., issue reopening) (Assavakamhaenghan et al. 2019). In a small community, assigning appropriate team members to complete software development tasks is trivial, as task assignees tend to be familiar with each person’s technical background, relevant experience, and potential compatibility with other team members. However, manually building a team in a large community of software practitioners with diverse technical backgrounds, experience, and social compatibility is challenging. This calls for the ability to intelligently form and automatically recommend suitable teams whose members not only have the right skill set, but also are socially compatible with one another. Such an ability not only would assist decision-makers (e.g., task assignees and project managers) in choosing the right team members for the tasks, but also could be a key enabler in large-scale community-based collaborative software development ecosystems.

Recently, several recommendation methods have been proposed that automatically suggest software practitioners for a given task. However, such previous algorithms merely focus on making recommendations for particular common roles such as developers (Surian et al. 2011; Xia et al. 2015; Yang et al. 2016; Zhang et al. 2020b), peer reviewers (Zanjani et al. 2015; Thongtanunam et al. 2015; Ouni et al. 2016; Yu et al. 2016), and integrators (de Lima Júnior et al. 2018). An example of such single-role recommendation methods includes DevRec (Xia et al. 2013), a system for recommending developers for bug resolution. DevRec computes the affinity of each developer to a given bug report based on the characteristics of bug reports that have been previously fixed by the developer. While code bugs represent an aspect of software development tasks that can be handled by developers, a software task may involve diverse functional responsibilities such as gathering requirements, coding, reviewing, testing, integrating, and deploying, which require an ensemble of software practitioners each of whom is tasked with a distinct role. While one could use a set of different single-role recommenders to suggest potential members for each different role, such improvisation of single-role recommenders could overlook certain limitations, such as dynamic definitions and responsibility of roles, fine-grained skills, and anticipated teamwork compatibility (Gharehyazie et al. 2015; Moe et al. 2010).

While the above single-role recommenders have been proposed for software engineering tasks, each of which tends to be accomplished by one software practitioner, there are cases where a software task requires multiple experts with various technical backgrounds working together to reach a successful solution. For example, a task that aims to implement a stock-price forecasting functionality in a financial management mobile application may require a front-end Swift language developer, a machine learning engineer who can deploy TensorFlow models as services, a computer network programmer to engineer the data communication, and a code tester. This particular scenario would urge the task assignee to select team members that satisfy not only the necessary skills (i.e. Swift, TensorFlow, Python, and socket programming), but also the role requirements (i.e. three developers and one tester). The problem can become more aggravated if there are many choices of team members to choose from, which prevalently characterizes large open-source software systems, and could hinder the ability of the task leader to form an effective team. Such an issue, therefore, behooves an automated system that is capable of narrowing down the set of candidate software practitioners that also satisfy the skill and role requirements. In non-software engineering domains, systems capable of generating and recommending team configurations have been proposed in collaborative photography (Hupa et al. 2010), research collaboration (Datta et al. 2011; Liu et al. 2014), spatial crowdsourcing (Gao et al. 2017), business processes (Cabanillas et al. 2015), and collaborative learning (Ferreira et al. 2017), utilizing collaboration history, trust, and member skill information when deciding which teams to recommend. However, these techniques were not specifically developed for collaborative software development purposes, and hence cannot directly be applied to our problem.

A limited set of studies have investigated the ability to automatically recommend team configurations for collaborative software development. To the best of our knowledge, we are the first to explore this problem, and specifically investigate the following research questions:

1. Is it possible to quantify the ability of a software team to successfully resolve a given software development task?
2. Is it possible to recommend software teams that comply with the role requirements and are suitable for a given software development task?
3. Can the proposed software team recommendation method be adopted for single-role recommendation tasks?

Specifically, we propose RECAST (RECommendation Algorithm for Software Teams), a software team recommendation method for large-scale collaborative software development. The proposed method utilizes the Max-Logit algorithm to intelligently enumerate candidate teams that approximately maximize the team-fitness score, without having to exhaustively generate all possible team combinations. Teams are scored at the task (i.e., issue) level because resolving an issue usually involves several roles and team members. We propose a set of features that characterize effective software teams, given task requirements and a task assignee (i.e., team leader). In this work, we define an effective team as a team that successfully resolves a task (e.g., an issue) without changing team members or re-opening the task. We also adopt features proposed by Liu et al. (2014) that capture the collaboration history and experience of team members. A set of machine learning algorithms is validated and used as the team-fitness scoring function. RECAST is validated on the ability to recommend both whole teams and particular single roles. A state-of-the-art team recommendation algorithm proposed by Liu et al. (2014), along with a naive randomization-based baseline, is used to validate the efficacy of our method on three real-world collaborative software project datasets: Apache, Atlassian, and Moodle, using the standard evaluation protocol for recommendation systems. According to the Mean Reciprocal Rank (MRR), the proposed RECAST achieves an improvement of 646% on average over the best baseline for the exact-match evaluation protocol. With such promising evaluation results, it would be possible to extend RECAST to a real application that helps task assignees find the right team configurations for their software tasks. Concretely, this paper presents the following key contributions:

1. We establish the problem of automatic team recommendation in the software engineering context. While automatic team formation has been studied in the past, its applicability in collaborative software development has been limited.
2. We propose a learning-based method, RECAST, that recommends software teams, given a task description and assignee. The algorithm utilizes the Max-Logit algorithm to intelligently enumerate teams that approximately maximize the team-fitness score. A machine learning algorithm is used as the scoring function, which learns the features that characterize effective software teams. These features are derived from heterogeneous knowledge graphs that encode collaboration history, task similarity, and team members’ skills, generated from real-world open source software project datasets.
3. We empirically validate our proposed team recommendation algorithm, RECAST, against a state-of-the-art team recommendation algorithm and a randomization-based baseline using the standard evaluation protocol for recommendation systems.
4. We make the datasets and source code available for research purposes at: https://github.com/NoppadolAssava/RECAST.

The remainder of the paper is organized as follows. Section 2 provides the mathematical definition and a real-world example of the problem. Section 3 explains relevant background concepts utilized by our proposed method. Section 4 provides the details of our proposed method. Section 5 discusses datasets, experiment protocols, evaluation, and results. Section 6 discusses potential threats to validity. Section 7 reviews previous studies related to our problem. Section 8 concludes the paper.

2 Problem Description

Our goal is to develop an algorithm for recommending software teams for a given task and its role requirements. An example issue presented in Fig. 1, taken from the Moodle Tracker, is a software patch that introduces a new chart API and library to the current system. The usernames are anonymized. Carefully investigating the issue’s comments and logs, we have identified four main roles, involving five team members. Note that, though there were more users involved in this issue, most of them were merely component watchers and non-active members (no activities); therefore, we only identified the active ones based on their comments and activities. Each of the team’s active members was responsible for a different aspect of the task. For example, UserA was both the assignee and the main developer. UserB integrated the solutions. UserC created test cases and tested the solutions. UserD surveyed different chart plugins and found that Chart.js was the most suitable. UserE added a patch to the donut charts. This particular example illustrates the need for teamwork with diversified skills and responsibilities to accomplish a software task.

Mathematically, let \(\mathbb {I}=\{i_{1}, i_{2}, i_{3}, ...\}\) be the set of tasks (i.e., issues), \(\mathbb {U}=\{u_{1}, u_{2}, u_{3}, ...\}\) the set of users (potential team members), \(\mathbb {R}=\{r_{1}, r_{2}, r_{3}, ...\}\) the set of roles, and \(\mathbb {S}=\{s_{1}, s_{2}, s_{3}, ...\}\) the set of skills. Furthermore, we make a minimal assumption that each task, \(i = \left \langle u_{0}, T, d \right \rangle \), is a triplet of a task assignee \(u_{0} \in \mathbb {U}\), a team \(T\), and a textual task description \(d\). A team \(T = \{\langle r_{1}, u_{1} \rangle , \langle r_{2}, u_{2} \rangle , ...\}\) is a set of pairs of a role and its corresponding user. From the example Moodle issue in Fig. 1, the software team comprises one integrator: UserB, one tester: UserC, and three developers: UserA, UserD, and UserE. In this case, this team would be represented as follows:

\[T = \{\langle \text{Integrator}, \text{UserB} \rangle , \langle \text{Tester}, \text{UserC} \rangle , \langle \text{Developer}, \text{UserA} \rangle , \langle \text{Developer}, \text{UserD} \rangle , \langle \text{Developer}, \text{UserE} \rangle \}\]

Given a task \(i^{*}\) and assignee \(u_{0}\), the objective of RECAST is to return a ranked list of K teams, \(T(i^{*})=\left \langle T_{1}, T_{2}, ... , T_{K} \right \rangle \), that best suit the given task and role requirements, while ensuring technical skills and teamwork compatibility.
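To make the notation concrete, the following minimal sketch encodes a task triplet and the example Moodle team as plain Python data. The names `Task`, `Team`, and `role_requirements` and the short description string are illustrative assumptions, not part of RECAST's published code.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

# A team is a set of (role, user) pairs, mirroring T = {<r1, u1>, <r2, u2>, ...}.
Team = FrozenSet[Tuple[str, str]]


@dataclass(frozen=True)
class Task:
    """A task i = <u0, T, d> (hypothetical container for illustration)."""
    assignee: str      # u0, the task assignee
    team: Team         # T, the set of (role, user) pairs
    description: str   # d, the textual task description


def role_requirements(team):
    """Count how many members each role requires, e.g. {"Developer": 3}."""
    counts = {}
    for role, _user in team:
        counts[role] = counts.get(role, 0) + 1
    return counts


# The example team from the Moodle issue described in the text.
moodle_team = frozenset({
    ("Integrator", "UserB"),
    ("Tester", "UserC"),
    ("Developer", "UserA"),
    ("Developer", "UserD"),
    ("Developer", "UserE"),
})
task = Task(assignee="UserA", team=moodle_team,
            description="Introduce a new chart API and library")
requirements = role_requirements(task.team)
```

Representing the team as a frozen set keeps the pairs unordered and hashable, which matches the set-based definition above.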

3 Background

This section presents relevant background concepts and knowledge. Developing a recommendation algorithm for software teams requires an understanding of collaborative software development processes, which can be tracked by software development tracking platforms such as the Jira issue tracking system developed by Atlassian. Furthermore, since our proposed method uses sentiment analysis to extract features related to the social aspects of team collaboration, and Latent Dirichlet Allocation (LDA) to quantify the similarity between two tasks, we also provide an overview of these techniques.

3.1 Sentiment Analysis

Sentiment analysis refers to the use of natural language processing (NLP) and artificial intelligence techniques to automatically quantify subjectivity in the information. Specifically, sentiment analysis techniques extract meaningful subjective information, opinions, and emotions about the subject from written or spoken language. The primary function of sentiment analysis is to identify the sentiment polarity of a given text, namely positive, negative, or neutral. Advanced sentiment analysis techniques can understand emotional states such as happy, angry, and sad.

Typical sentiment analysis methodology includes five steps as shown in Fig. 2.

1. Data Collection: Raw data (typically text) is collected from the sources. A unit of data, referred to as a document, may contain not only meaningful textual content, but also noise such as abbreviations, URLs, slang, and meaningless, undefined words.
2. Text Preparation: Irrelevant information, non-text, and noise are eliminated. The raw text can be prepared by removing web addresses and URLs, stop words, symbols, and punctuation. This preparation step differs across data sources and target languages.
3. Sentiment Detection: The sentiment is detected from the input text. A document usually contains both objective statements describing facts and subjective statements that imply the author’s sentiment. The sentiment detection algorithm then aims to identify those subjective statements to be used in the next step.
4. Sentiment Classification: Each identified subjective statement in the document is classified into one of the sentiment classes (e.g., good/bad, positive/negative). Various techniques have been proposed to classify sentiment levels, such as rule-based methods and machine learning based methods.
5. Output Representation: The last step is to present the output in a meaningful way that fits the application (e.g., quantifying sentiment scores in user reviews) (Baj-Rogowska 2017).

Teamwork often involves communication among team members, which can be used to infer the team’s coherence (Hogan and Thomas 2005). Studies have shown that ineffective communication could be an early indication of a premature team failure (Petkovic et al. 2014). Sentiment exhibited in communication can therefore serve as a useful signal. For example, praising messages (positive sentiment) could lift the collaboration willingness among team members (Grigore and Rosenkranz 2011). Furthermore, messages that convey criticism (often detected as negative sentiment due to negative keywords) can enable the team to efficiently pinpoint the issues to be solved. The ability to mathematically extract such sentiment from past communication among members could be useful when choosing suitable teams for future tasks.

We conjecture that effective teamwork is characterized by not only the members’ skills and experience but also a high-level of positive (e.g. praises and congratulations) and criticizing (e.g. critiques on features and bug reports) communication. Hence, sentiment analysis is used to identify the intent (as positive or criticizing) of the communication between two users in the same team, that is later used to compute the interaction-based features. Note that sentiment analysis is merely used to extract some “soft signal” in combination with other features to train machine learning models to characterize effective teams, and is not intended to be used as the absolute criteria to identify desirable software teams.

3.2 Latent Dirichlet Allocation (LDA)

Latent Dirichlet Allocation (LDA) is an unsupervised topic modeling algorithm proposed and widely used in the information retrieval field (Blei et al. 2003). Specifically, LDA utilizes a probabilistic technique to model different topics from a set of documents, where each topic is mathematically represented by a probability distribution of terms. The inference algorithm allows a document to be represented with a probability distribution over different topics. The basic intuition behind LDA is that the author has a set of topics in mind while composing a document, which is represented as a mixture of topics. Mathematically, the LDA model is described as follows:

\[P(t_{i}|d) = \sum _{j=1}^{|Z|} P(t_{i}|z_{i}=j)\, P(z_{i}=j|d)\]

where \(P(t_{i}|d)\) is the probability of term \(t_{i}\) appearing in document \(d\), \(z_{i}\) is a latent (hidden) topic, and \(|Z|\) is the number of all topics. Note that \(|Z|\) needs to be predefined. \(P(t_{i}|z_{i}=j)\) is the probability of the term \(t_{i}\) being in topic \(j\). \(P(z_{i}=j|d)\) is the probability of choosing a term from topic \(j\) in the document \(d\).

After the topics are modeled, the distribution of topics can be computed for a given document using statistical inference (Asuncion et al. 2009). A document can then be represented with a vector of real numbers, each of which represents the probability of the document belonging to the corresponding topic.
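As a small worked example of the mixture that LDA assumes, the sketch below computes the probability of a term in a document as the sum, over topics, of the term's probability under each topic weighted by the document's probability for that topic. The three-topic probabilities are made-up toy values, and `term_probability` is a hypothetical helper name.

```python
def term_probability(term_given_topic, topic_given_doc):
    """Return P(t|d) = sum_j P(t|z=j) * P(z=j|d) for one term and one document."""
    if len(term_given_topic) != len(topic_given_doc):
        raise ValueError("both lists must have one entry per topic")
    return sum(p_t * p_z for p_t, p_z in zip(term_given_topic, topic_given_doc))


# Toy values for |Z| = 3 topics (assumed for illustration only).
p_term_given_topic = [0.10, 0.02, 0.00]   # P(t | z = j) for j = 1..3
p_topic_given_doc = [0.5, 0.3, 0.2]       # P(z = j | d), sums to 1

# 0.10 * 0.5 + 0.02 * 0.3 + 0.00 * 0.2 = 0.056
p_term_in_doc = term_probability(p_term_given_topic, p_topic_given_doc)
```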

LDA has been used in software engineering tasks in various applications such as analyzing the topical composition of repositories (Chen et al. 2016), logs (Li et al. 2018), and Stack Overflow articles (Rosen and Shihab 2016). One application of LDA is to measure the similarity between two documents, similar to the work by Al-Subaihin et al. (2019), who used LDA to quantify the similarity between mobile apps’ textual descriptions. Particularly, two documents can be represented with topical distribution vectors where cosine can be used to measure the level of topical similarity between them. Note that, while simpler methods for quantifying document similarity exist such as Jaccard similarity and TF-IDF cosine similarity (Manning et al. 2008), studies have shown that LDA-based similarity yields better results particularly due to its ability to capture deep semantics compared to bag-of-words models such as TF-IDF and Jaccard based methods (Tuarob et al. 2015; Misra et al. 2008). Furthermore, projecting a document into a lower-dimensional space enables LDA to handle the polysemy, synonymy, and high-dimensionality problems that typically pose huge challenges for the bag-of-words based approaches (Alharbi et al. 2017).

In this research, LDA is used to quantify the similarity between two tasks (i.e., issues). Specifically, task descriptions are treated as textual documents from which LDA is first applied to learn the topics. To quantify the similarity between two tasks, their topic distribution vectors are then inferred from the learned topics. The cosine similarity between the two vectors (ranged [0,1]) is then used as the level of similarity between the two tasks.
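The similarity computation described above can be sketched as follows, assuming the topic distribution vectors have already been inferred by some LDA implementation. The four-topic vectors are toy values rather than real Moodle data, and `cosine_similarity` is written out by hand to keep the example self-contained.

```python
import math


def cosine_similarity(p, q):
    """Cosine similarity between two topic distribution vectors.

    Because topic probabilities are non-negative, the result lies in [0, 1].
    """
    if len(p) != len(q):
        raise ValueError("vectors must cover the same topics")
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    if norm_p == 0.0 or norm_q == 0.0:
        return 0.0  # an all-zero vector carries no topical information
    return dot / (norm_p * norm_q)


# Toy topic distributions over 4 topics (assumed values).
issue_a = [0.7, 0.2, 0.1, 0.0]
issue_b = [0.6, 0.3, 0.1, 0.0]   # topically close to issue_a
issue_c = [0.0, 0.0, 0.1, 0.9]   # dominated by a different topic

sim_ab = cosine_similarity(issue_a, issue_b)
sim_ac = cosine_similarity(issue_a, issue_c)
```

With these toy vectors, `sim_ab` is much larger than `sim_ac`, mirroring the kind of contrast the text attributes to topically similar versus dissimilar tasks.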

As an illustrative example, Fig. 3 shows the topic distributions of three Moodle issues, namely A, B, and C. The corresponding textual content (title + description) of the three issues is displayed on the left-hand side, and the corresponding probability distributions of the 300 topics, inferred from a learned LDA model, are on the right-hand side. Visually, the distributions of Issue A and Issue B appear to be similar, with mutually high probabilities on Topics 23, 50, 195, and 278. However, the distribution of Issue C is much different from the other two. To interpret these results, even though Issues A and B are labeled as different components (Administration for Issue A and Gradebook for Issue B), they are both related to the user interface of the system that concerns the “course category” features (Issue A is an improvement, while Issue B is a bug). On the other hand, Issue C is about the backup mechanism of the system, which is not closely related to Issues A and B. As a result, the cosine similarity of the topic distributions of Issues A and B is 0.79 (topically similar), while that of Issues A and C is only 0.01 (topically different), signifying that Issue B is more similar to Issue A than Issue C is, which aligns with the interpretation from the textual content of the three issues.

Furthermore, Table 1 lists the top 10 topics ranked by the corresponding probabilities of Issues A, B, and C. The topic numbers with ∗ are those overlapped with the top 10 topics of Issue A. From this particular example, Issue B has five overlapped topics with those of Issue A, while Issue C has none. Generalizing from this particular example, if we could construct the topic distribution for all the tasks’ textual description, then it would be possible to quantify the topical similarity between each pair of tasks using cosine similarity. Such a method for measuring document similarity has been used widely in the information retrieval domain. For example, Tuarob et al. (2015) used LDA to find similar documents to retrieve and recommend useful tags (keywords) in a document annotation task.

3.3 Potential Game Theory and Max-Logit algorithm

In the game theory literature, a potential game consists of a set of players and a set of possible actions for each player, with an assumption that there is one global potential function that represents all the players’ incentive (Monderer and Shapley 1996). Each player then chooses an action that increases the overall incentive. In every potential game, there exists at least one Nash equilibrium (Osborne and Rubinstein 1994), where a player no longer has an incentive to change the action. Such a concept can be applied to the team recommendation problem where each player is a role, and each player’s action set is the set of candidate members for each role (Liu et al. 2014). At each iteration, a role changes its candidate to minimize a global cost function (equivalent to maximizing the potential function). This iterative process continues until the team reaches a Nash equilibrium.

Max-Logit (Song et al. 2011) is a Nash equilibrium finding algorithm, which can be used to simulate potential games. Compared to other Nash equilibrium finding algorithms, Max-Logit can not only escape from trivial local optima (using randomness), but also converge quickly to the best Nash equilibrium (Liu et al. 2014). In this research, enumerating all possible team combinations can be prohibitively expensive because large-scale software development communities yield large candidate sets. We therefore use Max-Logit to enumerate teams that iteratively decrease the global cost function. The Max-Logit algorithm for team recommendation contains two major steps: constructing a new team and deciding whether to accept it as the best team (Liu et al. 2014). We have modified Max-Logit to fit our software team recommendation problem, as discussed in Section 4.
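The two steps named above (construct a new team, then decide whether to accept it) can be illustrated with the following minimal sketch. It is not the modified algorithm of Section 4: the acceptance rule `min(1, exp(-beta * (new_cost - old_cost)))` is one common way to write a Max-Logit style update and is an assumption here, as are the function name `max_logit_search`, the parameters `beta`, `iterations`, and `seed`, and the toy cost function. The sketch also does not prevent one user from filling two roles.

```python
import math
import random


def max_logit_search(roles, candidates, cost, iterations=500, beta=5.0, seed=0):
    """Search for a low-cost team with a Max-Logit style random walk.

    roles:      list of role slots (the "players" of the potential game)
    candidates: dict mapping each role to the users eligible for it
    cost:       function mapping a list of users (one per slot) to a number
    """
    rng = random.Random(seed)
    current = [rng.choice(candidates[role]) for role in roles]
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    for _ in range(iterations):
        # Step 1: construct a new team by letting one role switch its candidate.
        slot = rng.randrange(len(roles))
        proposal = list(current)
        proposal[slot] = rng.choice(candidates[roles[slot]])
        proposal_cost = cost(proposal)
        # Step 2: always accept improvements; accept a worse team only with a
        # probability that shrinks as the cost increase grows (assumed rule).
        if proposal_cost <= current_cost:
            accept_probability = 1.0
        else:
            accept_probability = math.exp(-beta * (proposal_cost - current_cost))
        if rng.random() < accept_probability:
            current, current_cost = proposal, proposal_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
    return best, best_cost


# Toy problem: the cost is one minus the mean of made-up per-user fitness values.
fitness = {"u1": 0.9, "u2": 0.4, "u3": 0.7, "u4": 0.2}


def toy_cost(team):
    return 1.0 - sum(fitness[user] for user in team) / len(team)


roles = ["Developer", "Tester"]
candidates = {"Developer": ["u1", "u2"], "Tester": ["u3", "u4"]}
best_team, best_cost = max_logit_search(roles, candidates, toy_cost)
```

Occasionally accepting a worse team is what lets the walk leave a poor local optimum, while tracking `best` separately ensures the lowest-cost team seen is what gets returned.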

4 Methodology

Figure 4 illustrates the overall framework of RECAST. The data of software projects are collected from Jira issue trackers (see Section 4.1). The collected data are then preprocessed (e.g., removing incomplete issues). The common software issue-related (e.g., issue description) and software team-related (e.g., role) data are extracted from the preprocessed data (see Section 4.2). In this work, we also use heterogeneous knowledge graphs to capture relationships among users, tasks, and skills, which we discuss in Section 4.3. Several features are then extracted from these networks. Such features capture different aspects of team members’ history of collaboration and task characteristics that are commonly used in team formation, such as technical experience, skill diversity, and past collaboration among candidates (see Section 4.4).

We use machine learning techniques that learn the characteristics of “good” teams from past tasks, which will be used to quantify the effectiveness of a given team (see Section 4.5).

In the last step, to recommend software teams, our modified version of the Max-Logit algorithm is employed to enumerate candidate teams that maximize the team-fitness score. The top K candidate teams that achieve the highest team-fitness scores are returned as the recommended teams (see Section 4.6).

4.1 Data Collection

RECAST aims to recommend team combinations that are both tailored to the assignees’ preferences and conducive to the task’s success. Hence, the knowledge graphs and the team-fitness scoring function must be generated and trained from real-world project-based software development data. Fortunately, most large-scale software projects are collaborative, and their development information is recorded in software project tracking tools such as Jira (issue tracking system), Backlog (task tracking system), and Bugzilla (bug tracking system). Such software development tracking systems are often used by software practitioners to plan their projects, track progress, and communicate among team members. Some tools such as Jira allow projects and tasks to have a hierarchical structure and dependencies among them.

Real-world software project data is critical to train machine learning algorithms so that they can accurately predict the team combination suitable for a given task in the project. In this research, among different popular software tracking tools, Jira is chosen for our case studies due to the following reasons:

1. Open-source projects hosted by Jira can be publicly accessible on the Internet. This makes it possible to scrape public data using traditional web crawling techniques. Furthermore, Jira also provides a REST API (e.g., Moodle’s API) to facilitate information retrieval.
2. Jira has been used as the main software tracking tool for many open-source large-scale software systems such as Atlassian, Apache, and Moodle, which host several collaborative projects suitable for our case studies.
3. Jira has GitHub integration, which allows us to investigate commit activity logs. Such logs are crucial for the identification of team members who are developers.
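As an illustration of retrieving issues through the REST API mentioned in the first reason, the sketch below only builds a paginated search URL and performs no network request. It assumes the standard Jira REST API v2 search endpoint (`/rest/api/2/search`) with its `jql`, `startAt`, `maxResults`, and `expand` query parameters. The base URL, the project key `MDL`, and the helper name `build_search_url` are assumptions for illustration and may not match the crawler actually used.

```python
from urllib.parse import urlencode


def build_search_url(base_url, jql, start_at=0, max_results=50):
    """Build a Jira issue search URL that also requests each issue's changelog."""
    params = {
        "jql": jql,                  # Jira Query Language filter
        "startAt": start_at,         # pagination offset
        "maxResults": max_results,   # page size
        "expand": "changelog",       # include status history for each issue
    }
    return f"{base_url.rstrip('/')}/rest/api/2/search?{urlencode(params)}"


# Assumed tracker address and project key, used only to show the URL shape.
url = build_search_url("https://tracker.moodle.org",
                       "project = MDL AND status = Closed")
```

Requesting the changelog alongside each issue is convenient because the status history is needed later to check whether an issue was ever reopened.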

4.2 Data Preprocessing and Extraction

While Jira is chosen for our research, the raw data is preprocessed to retain only information that is not platform-specific. Although different software tracking platforms organize projects and tasks in their own ways, here we define a task to be a piece of collaborative work that is part of a software development project. For example, Jira provides different features for different types of issues representing software development tasks, such as new features, bugs, and implementation.

We assume that a task is composed of a task description, a task assignee, and a team. Furthermore, additional information such as a task resolution and interaction among team members is also collected.

4.2.1 Task Description

A task description is usually represented as a simple text document. Hence, any attributes that describe a task are concatenated to produce a continuous chunk of text. For example, Jira provides the following features to describe an issue: summary and description. The textual contents corresponding to these attributes would be concatenated to produce the task’s description.
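A minimal sketch of this concatenation is shown below, assuming each issue is available as a dictionary with `summary` and `description` keys. The helper name `build_task_description` and the handling of missing fields are illustrative choices, not details given in the text.

```python
def build_task_description(issue):
    """Concatenate an issue's summary and description into one text document."""
    parts = [issue.get("summary") or "", issue.get("description") or ""]
    # Skip empty or missing attributes so no stray separators are produced.
    return " ".join(part.strip() for part in parts if part.strip())


example_issue = {
    "summary": "Add chart API",
    "description": "Introduce a new chart library for reports.",
}
task_text = build_task_description(example_issue)
```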

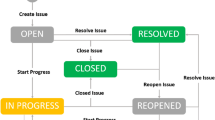

4.2.2 Task Status Resolution

RECAST’s goal is to recommend teams that are not only tailored for the task assignee’s preference, but also functionally effective. Hence, the training data used to train our models must come from tasks that are deemed successful. In this research, a successful task is a task (issue) that is resolved without being re-opened. In Jira, this means that a successful issue must have status “Closed” and resolution “Resolved” without any history of reopening. Furthermore, we track whether a task has been reopened from the issue changelog. Issues that do not meet such a definition are discarded from the training set.
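The success criterion above can be expressed as a simple filter. The sketch below assumes each issue has been flattened into a dictionary with `status`, `resolution`, and a `changelog` list of status changes; these field names and the `"Reopened"` status label are assumptions about the preprocessed data, not Jira's raw response format.

```python
def is_successful(issue):
    """Return True if the issue is Closed/Resolved and was never reopened."""
    if issue.get("status") != "Closed" or issue.get("resolution") != "Resolved":
        return False
    for change in issue.get("changelog", []):
        # Any past transition into "Reopened" disqualifies the issue.
        if change.get("field") == "status" and change.get("to") == "Reopened":
            return False
    return True


issues = [
    {"key": "A-1", "status": "Closed", "resolution": "Resolved", "changelog": []},
    {"key": "A-2", "status": "Closed", "resolution": "Resolved",
     "changelog": [{"field": "status", "to": "Reopened"},
                   {"field": "status", "to": "Closed"}]},
    {"key": "A-3", "status": "Open", "resolution": None, "changelog": []},
]
training_keys = [issue["key"] for issue in issues if is_successful(issue)]
```

Only the first toy issue survives: the second was reopened before being closed again, and the third is still open.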

Note that, while the definition of a successful task given above can be subjective, a study has shown that re-opened tasks are likely to be caused by ineffective team configurations (Assavakamhaenghan et al. 2019). Hence, to ensure that the models learn from high-quality samples (i.e., teams that lead to the resolution of issues), only tasks that were resolved without any major interruption that may have been caused by flawed teams are used as the training dataset. Such a definition of a successful task only has a direct effect on the generation of the team-fitness scoring function. Hence, if future studies devise a different definition of a “good team,” then the training data can be relabeled without affecting the rest of the methodology pipeline.

4.2.3 Task Dependency

Most modern software tracking tools provide the capability for users to specify explicit relations among tasks. For example, Jira provides the “Issue Link” mechanism so that the relation between two issues can be explicitly and systematically specified. Examples of such issue relations include Relates to, Duplicates, Blocks, and Clones. In this research, to align with our generalized assumption about task hierarchical structure, such issue links are collected to represent the dependency relations between two tasks.

4.2.4 Team Members

Besides the task assignee, we also collect the username and role of each team member working on the task. Because Jira focuses on the implementation aspect of software, the following roles related to issue resolution can be identified: Reporter, Assignee, Developer, Tester, Peer Reviewer, and Integrator.

4.2.5 User Interaction

Social aspects are involved throughout the collaborative development of software, which can be reflected by communication. In this research, we investigate features that characterize team coherence (i.e., the ability for a team to productively work together). Many studies have shown that communication and teamwork are among the key factors for successful software projects (Sudhakar 2012; Lindsjørn et al. 2016).

In this research, communication information among team members is collected from the comments on issue reports. Specifically, only direct comments from one member to others are collected. An example of a direct comment from the Moodle project is shown in Fig. 5, where User A, the peer reviewer, shows gratitude to User B, the assignee, after finishing the code review. While this particular example alone may not be enough to conclude that these two members exhibit a strong characteristic of good teamwork, hundreds of such direct messages between the two users from several previous issues could be revealing.

4.2.6 Sentiment Analysis

In every issue, team members communicate with each other using comments. For example, they can use comments to praise milestones reached or to criticize others’ work. These comments carry sentiment that could be a useful signal. Therefore, all comments are analyzed to extract sentiment using SentiStrength, a Python library for sentiment analysis (Thelwall et al. 2010; see Footnote 5). Specifically, the following steps are taken to extract sentiment from a comment:

-

1.

Text Preprocessing: All comments are cleaned by removing stop-words, punctuation, URLs, white-spaces, and numbers. Special symbols used to represent emoji are not removed to preserve emotional expression. A textual comment is then tokenized using the NLTK tokenization tool.

-

2.

Sentiment Detection: The SentiStrength library outputs two values representing positive and negative sentiment. The reason for having two sentiment scores instead of just one (with a –/+ sign representing negative/positive sentiment) is that research findings have determined that positive and negative sentiments can coexist (Fox 2008). Note that, from our observation, while positive comments are those related to praise and thanks, the negative ones do not stem from hate speech or offensive language, as negative scores are traditionally interpreted. Often, negative comments express criticism of software functionalities or explain bugs (which necessitates negative words), rather than expressing the authors’ emotions.

-

3.

Trust Propagation: According to Kale et al. (2007), trust and distrust can be propagated from one person to another in the person-person network. In their work, they quantified trust and distrust from the text surrounding each URL link in a blog, that leads to another user’s blog. They computed the link sentiment polarity by quantifying the sentiment of the text surrounding it. The positive sentiment represents trust and the negative sentiment represents distrust. They spread trust links to increase the density of the network so that they could detect trust communities in the network.

Similarly, in our work, the interaction among users is not dense enough. Hence, we adopt Kale et al.’s idea to increase the number of interacting pairs. However, we do not define positive sentiment as trust and negative sentiment as distrust, because, in software development, a comment containing negative words usually criticizes software features so that developers can effectively understand the requirements. Instead, we use Kale et al.’s trust propagation model to propagate positivity and criticism within the interaction network. Mathematically, link polarity can be propagated using the following matrix operation:

$$ C = \alpha_{1}B + \alpha_{2}B^{T}B + \alpha_{3}B^{T} + \alpha_{4}BB^{T} \tag{2} $$

where C is an atomic propagation operator and B is the initial belief between users i and j. For the α vector, Kale et al. (2007) reported that {0.4, 0.4, 0.1, 0.1} yields the most accurate result. The belief can be computed iteratively: the belief after propagation step i + 1, \(B_{i+1}\), is obtained using the following equation:

$$ B_{i+1} = B_{i} * C_{i} \tag{3} $$
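To make Eqs. 2 and 3 concrete, the following is a minimal sketch of one way to carry out the propagation on a small belief matrix. It rests on assumptions that the text does not state: the operator C is computed once from the initial belief matrix, the `*` in Eq. 3 is read as a matrix product, and the function names (`transpose`, `matmul`, `atomic_operator`, `propagate`) are hypothetical helpers, not part of any library used in this work.

```python
def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two list-of-rows matrices."""
    cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def atomic_operator(b, alphas=(0.4, 0.4, 0.1, 0.1)):
    """Eq. 2: C = a1*B + a2*B^T B + a3*B^T + a4*B B^T."""
    bt = transpose(b)
    terms = [b, matmul(bt, b), bt, matmul(b, bt)]
    n = len(b)
    return [[sum(a * t[r][c] for a, t in zip(alphas, terms)) for c in range(n)]
            for r in range(n)]


def propagate(b0, steps=1, alphas=(0.4, 0.4, 0.1, 0.1)):
    """Eq. 3, assuming C is fixed and '*' denotes a matrix product."""
    c = atomic_operator(b0, alphas)
    b = b0
    for _ in range(steps):
        b = matmul(b, c)
    return b
```

With a chain of links from user 0 to user 1 and from user 1 to user 2, one propagation step yields a non-zero entry for the pair (0, 2), which is the densification effect the propagation is meant to achieve.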

4.3 Network Generation and Indexing

Effective software teams are typically characterized by extensive experience with relevant tasks, the necessary skills, and a good history of working together. Hence, the ability to automatically quantify such characteristics could prove useful to a software team recommendation system. Here, we conjecture that such team-related information can be extracted from the heterogeneous networks that represent the relationships among past tasks, users, and skills. The following subsections define the different types of information networks used in this research and explain how they are generated from the collected dataset.

4.3.1 Task Similarity Network

The task similarity network \(\mathbb {G}_{TS}=\langle \mathbb {I}, E_{TS} \rangle \) is an undirected graph where a node \(i \in \mathbb {I}\) is a task, and the weight of each edge \(e \in E_{TS}\) represents the similarity between the two tasks. We assume that an issue description is represented with a textual document without any available ontology; hence, the task similarity must be quantified using the textual content derived from the issue descriptions.

In this research, task (i.e., issue) descriptions are represented with topical distributions inferred from trained Latent Dirichlet Allocation (LDA) models. Both the traditional non-labeled LDA and labeled LDA (Ramage et al. 2009) are validated for their ability to model latent topics for each corpus of task descriptions. We use the Mallet implementation of these LDA algorithms (see Footnote 6). The number of topics is tuned to optimize the local pointwise mutual information (PMI). The similarity between two tasks is calculated as the cosine similarity between their topical distribution vectors. Because topic distributions are non-negative, each edge weight falls into the range [0,1], where 0 represents no similarity and 1 represents perfect similarity.
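As an illustration of the edge weight described above, the sketch below computes the cosine similarity between two topic distribution vectors. The function name `cosine_similarity` and the convention of returning 0 for an all-zero vector are assumptions made for this example; the topic vectors themselves would come from the trained LDA model.

```python
import math


def cosine_similarity(p, q):
    """Cosine similarity between two equal-length topic distribution vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in q))
    # Assumption: an all-zero vector is treated as having no similarity.
    return dot / norm if norm else 0.0
```

Two tasks with identical topic distributions receive a weight of 1, and two tasks whose distributions share no topic receive a weight of 0.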

4.3.2 Task Dependency Network

The task dependency network \(\mathbb {G}_{TD}=\langle \mathbb {I}, E_{TD} \rangle \) is a heterogeneous directed graph, where each node \(i \in \mathbb {I}\) is a task, and each directed edge \(e(i_{x}, i_{y}, r)\) represents that task \(i_{x}\) depends on task \(i_{y}\) with type r, where the type of this edge can be either Relates, Blocks, or Clones. The dependency relationship between two tasks is extracted from the “Issue Link(s)” attribute in a Jira issue page.

4.3.3 User Collaboration Network

The user collaboration network \(\mathbb {G}_{UC}=\langle \mathbb {U}, E_{UC} \rangle \) is an undirected network where each node is a user \(u \in \mathbb {U}\) and each edge weight represents the frequency of tasks on which the two users have collaborated. In Jira, the collaboration relationship is directly extracted from the issue information page where all the collaborators are listed. Specifically, two users collaborate if they are identified as the team members of the same issue. This user collaboration network allows us to understand the collaboration history of the members in a given team, and also enables quantification of team coherence.
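The construction of the edge weights can be sketched as follows. This is only an illustration: the input format (a mapping from issue key to the list of team members) and the function name `build_collaboration_network` are assumptions, and the issue keys used in the usage note are made up.

```python
from collections import Counter
from itertools import combinations


def build_collaboration_network(issue_teams):
    """Count, for every unordered pair of users, the issues they share.

    issue_teams: dict mapping an issue key to an iterable of usernames.
    Returns a Counter keyed by (user_a, user_b) with user_a < user_b.
    """
    edges = Counter()
    for members in issue_teams.values():
        # Deduplicate members so a user holding two roles is counted once.
        for a, b in combinations(sorted(set(members)), 2):
            edges[(a, b)] += 1
    return edges
```

For example, if `alice` and `bob` appear together in two issues, the edge `("alice", "bob")` receives a weight of 2.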

4.3.4 User Interaction Network

The history of interaction among users can be a useful signal for understanding the dynamics within a software team. Ideally, an interaction would be a personal message sent directly to one or more other team members. However, since direct message mechanisms are not available in Jira, we treat a direct comment posted on an issue page (see the example in Fig. 5) as an interaction between the message poster and the mentioned users.

Emotions play a big role in teamwork. We observe that effective teams are characterized not only by a high level of communication, but also by meaningful and constructive interaction. Specifically, meaningful comments such as praise, encouragement, and congratulations can raise one’s motivation and morale. Also, constructive feedback about one’s work can lead to efficient and productive improvement and resolution. For example, in Fig. 6, User A made a direct comment to User B stating that his code still contained certain issues, with a detailed explanation. As a result, User B knew exactly how to proceed to address these comments, and was able to resolve and close the task. While these constructive feedback comments may sound professional and are not intended to offend the target user, the sentiment quantified by traditional sentiment analysis tools is often negative. This is due to the negative keywords used to describe components in the code, such as issues, serious, and scary. Note that, owing to the professional and mature community of software developers, we have witnessed only a few negative comments (as reported by the sentiment analysis tool) that turn out to be offensive or to show that the authors had negative sentiment when writing them. Hence, we assume that positive comments are those intended to praise, encourage, or congratulate team members, while negative ones are typically constructive feedback.

The user interaction network \(\mathbb {G}_{UI}=\langle \mathbb {U}, E_{UI} \rangle \) is hence a directed heterogeneous graph where each node is a user \(u \in \mathbb {U}\), and each edge \(e(u_{i}, u_{j}, polarity)\) represents the direction of communication (i.e., from \(u_{i}\) to \(u_{j}\)), where the edge type polarity can be either positive (+) or negative (-) sentiment. The weight of each edge is the sentiment level, in the range [0,1]. Note that we keep both the positive and negative edges, instead of combining the positive and negative weights into one single value, because our work interprets negative sentiment differently from its original definition. Specifically, here we treat both positive and negative comments as good characteristics of effective teams.

4.3.5 User Expertise Network

Forming a team that has not only the necessary technical skills but also diverse expertise is crucial to the task’s success. The user expertise network captures the skill set that each user possesses based on his/her past task experience. Mathematically, the user expertise network is a bipartite directed graph \(\mathbb {G}_{UE}=\langle \mathbb {U}, \mathbb {S}, E_{UE} \rangle \) where a node can be either a user \(u \in \mathbb {U}\) or a skill \(s \in \mathbb {S}\), and the weight of each edge \(e(u,s)\) represents the level of skill s that user u possesses. In Jira, there is no direct way to retrieve the explicit software skills (e.g., Java, Python, Deep Learning) of a particular user. Hence, we use the system components (e.g., Checklist, TurnItIn tool, Application Form) to represent the set of skills. The reason behind this is that each software system already has a well-defined set of system components. A user who has worked on particular components is said to have acquired the necessary skills and experience on them. The weight of each edge \(e(u,s)\) is then quantified by the number of tasks related to component s whose teams include user u.

4.4 Feature Extraction

The proposed method relies on a machine learning based scoring function that outputs the team-fitness score given task description, a task assignee, and a candidate team. To build such a scoring function, machine learning based algorithms are trained with features extracted from teams whose tasks are deemed successful. Specifically, these features are designed to characterize teams that are suitable for a given task and task assignee. A majority of the features are extracted from the heterogeneous knowledge networks discussed in Section 4.3. We also incorporate features proposed by Liu et al. (2014), as they can be additionally useful. Table 2 lists all the features.

4.4.1 Features Adopted from (Liu et al. 2014)

Liu et al. (2014) proposed a recommendation algorithm for scientific collaboration. In this work, we find that the features used in their work could be useful to our problem. These previously proposed features include:

-

1.

Experience reflects the team’s experience in handling overall tasks. We use the number of tasks in which a member has been involved to reflect experience. This feature is normalized with Min-Max normalization. To calculate the overall team experience, the average is used.

-

2.

Role Experience captures the team’s experience in different roles. We use the number of tasks in which a person participated in a particular role to compute this feature. This feature is also normalized using Min-Max normalization. To calculate the overall role experience of the team, the average is used.

-

3.

Win Experience represents overall experience with successful tasks. This feature is computed using the number of successfully resolved issues that a person participated in, normalized with the Min-Max normalization. To calculate the overall win experience of the team, the average is used.

-

4.

Win Rate is the ratio of Win Experience to Experience. To calculate the overall win rate of the team, the average is used.

-

5.

Closeness quantifies the overall collaboration history among the members in a team T, and is calculated using the equation below (Liu et al. 2014).

$$ Closeness=\frac{2}{|T|\times(|T|-1)}\underset{u_{i},u_{j}\in T; i<j}{\sum}\frac{1}{ShortestPath(u_{i},u_{j})} \tag{4} $$

where |T| is the cardinality of the team. ShortestPath is calculated on the graph \(\mathbb {G}_{UC} = \langle \mathbb {U},E_{UC} \rangle \), whose vertex set \(\mathbb {U}\) is the set of users. An edge \(e_{ij} \in E_{UC}\) links \(u_{i} \in \mathbb {U}\) and \(u_{j} \in \mathbb {U}\), with a weight representing the number of tasks on which \(u_{i}\) and \(u_{j}\) have worked together. If there is no path between \(u_{i}\) and \(u_{j}\), \(|\mathbb {U}|\) is used in place of \(ShortestPath(u_{i},u_{j})\).

-

6.

Connection represents overall level of the team’s communication activities, and is described as follows:

$$ Connection=\frac{2}{|T|\times(|T|-1)}\underset{u_{i},u_{j}\in T; i<j}{\sum} e_{ij} \tag{5} $$

Liu et al. (2014) represent \(e_{ij}\) with the number of connections between \(u_{i}\) and \(u_{j}\). In our research, we use the number of interactions (tagged users in comments) between \(u_{i}\) and \(u_{j}\), derived from the user interaction graph \(\mathbb {G}_{UI}\).
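The Closeness and Connection features (Eqs. 4 and 5) can be sketched as below. The sketch assumes that the shortest path is measured in hops (edge weights are ignored), which the text does not specify, and that teams contain at least two distinct members. The adjacency format and the names `hop_distance`, `closeness`, and `connection` are hypothetical.

```python
from collections import deque
from itertools import combinations


def hop_distance(adj, src, dst):
    """Breadth-first search hop count from src to dst, or None if unreachable."""
    if src == dst:
        return 0
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        for nxt in adj.get(node, ()):
            if nxt == dst:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


def closeness(team, adj, n_users):
    """Eq. 4; unreachable pairs use n_users (|U|) as the path length."""
    pairs = list(combinations(team, 2))
    total = 0.0
    for a, b in pairs:
        dist = hop_distance(adj, a, b)
        total += 1.0 / (dist if dist is not None else n_users)
    # 2 / (|T| * (|T| - 1)) equals 1 / (number of unordered pairs).
    return total / len(pairs)


def connection(team, interactions):
    """Eq. 5; interactions maps frozenset({u_i, u_j}) to an interaction count."""
    pairs = list(combinations(team, 2))
    return sum(interactions.get(frozenset(p), 0) for p in pairs) / len(pairs)
```

A team whose members are all direct collaborators obtains a closeness of 1, and the value shrinks toward 1/|U| as members become disconnected.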

4.4.2 Proposed Features

While the features proposed by Liu et al. (2014), described in the previous section, can be useful for characterizing the team’s overall experience and collaboration, we find that they are not sufficient to capture certain characteristics of teams that work on software projects, including task requirements, skills, and collaborative compatibility. Hence, we define additional software-development-specific features as follows:

-

1.

Task Familiarity indicates the team’s experience dealing with similar tasks in the past, derived from the task similarity graph \(\mathbb {G}_{TS}\), where each task is represented as a vector of topic distribution inferred from a trained topic model using LDA. The similarity between two tasks is then computed using the cosine similarity of their topic distribution vectors. Mathematically, the Task Familiarity is calculated as follows:

$$ Task\ Familiarity = \frac{1}{|T|}\underset{u \in T}{\sum} \max_{i \in I_{u}}\left (cosinesim \left (i^{*} , i\right )\right ) \tag{6} $$

where T is the set of team members, u is a user, \(i^{*}\) is the target task, and \(I_{u}\) is the set of tasks in which u was involved.

-

2.

Task Proximity reflects the team’s overall experience working on closely related tasks and is derived from the task dependency network \(\mathbb {G}_{TD}\). It is calculated from the inverse of the average of the minimum shortest path between each pair of tasks, one done by the task assignee and one by another team member. Note that we only compute the task proximity between the task assignee and each team member, instead of between every possible pair of team members, because we would like this feature to capture team members working on closely related tasks that could be beneficial to the target task initiated by the task assignee. Furthermore, a person may often be involved in more than 1,000 tasks, so calculating the shortest paths between all of this user’s tasks and those of others is extremely computationally expensive. While one may argue that this feature might be better captured between the target task \(i^{*}\) (instead of the assignee’s past tasks) and the team members’ tasks, in practice, the task dependency is not always established during task initiation, but during task execution. Hence, we do not assume that such task dependency information between the target task and the previous tasks is available. Concretely, the task proximity feature is computed as follows:

$$ Task\ Proximity =\frac{|T|\times(|T|-1)}{\alpha + {\sum}_{u \in T, u \neq u_{0}}\min_{i_{u_{0}}\in I_{u_{0}}, i_{u} \in I_{u}}(ShortestPath_{N}(i_{u_{0}}, i_{u}))} \tag{7} $$

where T is the set of team members, u is a member, \(u_{0}\) is the task assignee, i is a task, \(I_{u}\) is the set of tasks in which u was involved, \(ShortestPath_{N}(i_{u_{0}}, i_{u})\) is the shortest path between the tasks involving \(u_{0}\) and u, with a maximum length of N, and α is the smoothing factor that prevents a zero-division error. In our research, we use α = 1 and N = 1,000.

-

3.

Team Coherence reflects the collaboration history of the team members, derived from the user collaboration graph \(\mathbb {G}_{UC}\). It is calculated using the inverse of the average shortest path between each pair of members. A longer path implies less coherence.

$$ Team\ Coherence = \frac{|T|\times(|T|-1)}{\alpha + {\sum}_{u_{i}, u_{j} \in T; i < j}(ShortestPath_{N}(u_{i}, u_{j}))} \tag{8} $$

where T is the set of team members, u is a user, \(ShortestPath_{N}(u_{i},u_{j})\) is the shortest path from \(u_{i}\) to \(u_{j}\) with a maximum length of N, and α is the smoothing factor that prevents a zero-division error. In our research, we use α = 1 and N = 1,000.

-

4.

Relatedness to Task Assignee (\(Relatedness_{u_{0}}\)) is similar to team coherence. However, this feature focuses on the coherence between the task assignee and the rest of the team.

$$ Relatedness_{u_{0}} = \frac{|T|\times(|T|-1)}{\alpha + {\sum}_{u \in T}(ShortestPath_{N}(u_{0}, u))} \tag{9} $$

where T is the set of team members, u is a member, \(ShortestPath_{N}(u_{0},u)\) is the shortest path from the task assignee \(u_{0}\) to each member u with a maximum length of N, and α is the smoothing factor that prevents a zero-division error. In our research, we use α = 1 and N = 1,000.

-

5.

Team Positivity reflects how positively team members have interacted in the past, derived from the user interaction network \(\mathbb {G}_{UI}\), considering only positive edges. We adopt the trust propagation method (Kale et al. 2007) to expand the set of related pairs, as previously discussed in Section 4.2.6.

$$ Team\ Positivity = \frac{1}{|T|\times(|T|-1)}\underset{u_{i}, u_{j} \in T; i < j}{\sum} I^{+} (u_{i},u_{j}) \tag{10} $$

where T is the set of team members, \(u_{i}\) and \(u_{j}\) are a pair of team members, and \(I^{+}(u_{i},u_{j})\) is the average weight of the positive edges between \(u_{i}\) and \(u_{j}\) in the user interaction graph \(\mathbb {G}_{UI}\) after applying the trust propagation model.

-

6.

Team Criticism reflects how team members express constructive criticism towards each other. It is derived the same way as the team positivity discussed previously, but only uses the negative edges in the user interaction network.

$$ Team\ Criticism = \frac{1}{|T|\times(|T|-1)}\underset{u_{i}, u_{j} \in T; i < j}{\sum} I^{-} (u_{i},u_{j}) \tag{11} $$

where T is the set of team members, \(u_{i}\) and \(u_{j}\) are a pair of team members, and \(I^{-}(u_{i},u_{j})\) is the average weight of the negative edges between \(u_{i}\) and \(u_{j}\) after applying the trust propagation model.

-

7.

Positivity towards Task Assignee is similar to Team Positivity, but only considers the positivity (e.g., praises, gratitude, and congratulations) expressed by team members towards the task assignees.

$$ Positivity \rightarrow Task\ Assignee = \frac{1}{|T|}\underset{u \in T}{\sum} I^{+}(u, u_{0}) \tag{12} $$

where T is the set of team members, u is a team member, \(u_{0}\) is the task assignee, and \(I^{+}(u,u_{0})\) is the positive interaction score from u to \(u_{0}\).

-

8.

Criticism towards Task Assignee is similar to Team Criticism, but only focuses on the criticism made towards the task assignee.

$$ Criticism \rightarrow Task\ Assignee = \frac{1}{|T|}\underset{u \in T}{\sum} I^{-}(u, u_{0}) \tag{13} $$

where T is the set of team members, u is a team member, \(u_{0}\) is the task assignee, and \(I^{-}(u,u_{0})\) is the negative interaction score from u to \(u_{0}\).

-

9.

Skill Competency quantifies the team’s ability (skills) to satisfy the target task’s requirements. Each member’s skills are derived from the user expertise network \(\mathbb {G}_{UE}\). Mathematically, this feature is computed using a set-overlap between the team’s skills and the required skills.

$$ Skill\ Competency = \frac{|S_{T} \cap S_{r}|}{|S_{r}|} \tag{14} $$

where \(S_{T}\) is the set of team members’ skills and \(S_{r}\) is the set of required skills.

-

10.

Skill Diversity reflects the diversity of the team members. Teams with high skill diversity tend to comprise individuals who are experts in particular skills, rather than a few persons who have abundant but shallow skills. Hence, this feature, when working together with the skill competency, can distinguish teams comprising experts with necessary skills, and prevent the scoring function from giving high scores to teams comprising only a few persons who can do a little of everything. Skill diversity is measured by the number of unique, required skills that a team can offer (no duplicate skills), divided by the sum of required skills offered by each team member.

$$ Skill\ Diversity = \frac{|S_{T} \cap S_{r}|}{{\sum}_{u \in T}|S_{u} \cap S_{r}|} \tag{15} $$

where T is the set of team members, \(S_{T}\) is the set of team skills, \(S_{r}\) is the set of required skills, and \(S_{u}\) is the skill set of u.

-

11.

Skill Experience computes the experience of the team with respect to each required skill. It considers each member’s past tasks that require the same set of skills as those of the target task.

$$ Skill\ Experience = \frac{1}{|T||S_{r}|}\underset{u \in T}{\sum}\underset{s \in S_{u} \cap S_{r}}{\sum} |P_{u}(s)| \tag{16} $$

where T is the set of team members, u is a user, \(S_{r}\) is the set of required skills, \(S_{u}\) is the set of skills that u has experienced, and \(P_{u}(s)\) is the set of tasks that require skill s and involve user u.

-

12.

Team Contribution directly quantifies the team’s activeness, considering the collective history of the team members’ activities in past tasks. Activities include changing issue status, making comments, and updating code in the repository, which can be collected from each task’s log (i.e., the changelog of an issue report). The contribution of a member is defined as the average ratio of the member’s activities in his/her previous tasks.

$$ Team\ Contribution = \frac{1}{|T|}\underset{u\in T}{\sum}\frac{{\sum}_{i\in I_{u} }c_{u,i}}{|I_{u}|} \tag{17} $$

where T is the set of team members, u is a user, \(I_{u}\) is the set of tasks in which u participated, and \(c_{u,i}\) is the contribution of member u in task i, which can be calculated using the following formula:

$$ c_{u,i} = \frac{|Activities\ of\ u\ in\ task\ i|}{|All\ activities\ in\ task\ i|} \tag{18} $$

-

13.

Domain Experience reflects team members’ experience on the tasks within the same domain of the target task. This is different from the Experience feature discussed in Section 4.4.1 which quantifies the team experience from all the past tasks. Domain experience can be calculated if the software tracking platforms allow tasks to have a hierarchical structure. In Jira, a software system is divided into different domains (i.e. Jira projects), each of which is used to categorize tasks into groups.

$$ Domain\ Experience = \frac{1}{|T|}\underset{u \in T}{\sum} |D_{i^{*}}(u)| \tag{19} $$

where T is the set of team members, u is a user, \(i^{*}\) is the target task, and \(D_{i^{*}}(u)\) is the set of tasks that are in the same domain as \(i^{*}\) and involve u.

-

14.

Co-Task Frequency reflects how often the team members work together, derived from the average number of tasks co-worked by each pair of team members.

$$ Co\text{-}Task\ Frequency = \frac{1}{|T|\times(|T|-1)}\underset{u_{x}, u_{y} \in T; x < y}{\sum} |I_{u_{x},u_{y}}| \tag{20} $$

where T is the set of team members, u is a user, and \(I_{u_{x}, u_{y}}\) is the set of tasks on which \(u_{x}\) and \(u_{y}\) worked together.
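Several of the features above reduce to simple set operations. As an illustration, the following sketch computes Skill Competency (Eq. 14) and Skill Diversity (Eq. 15) for a candidate team. The input format (a mapping from username to a set of component names) and the function names are assumptions, as is the choice to return 0 for Skill Diversity when no member offers any required skill, a case the equation leaves undefined.

```python
def skill_competency(member_skills, required):
    """Eq. 14: share of required skills covered by the team as a whole.

    member_skills: dict mapping a username to a set of skills (components).
    required: non-empty set of skills required by the target task.
    """
    team_skills = set().union(*member_skills.values())
    return len(team_skills & required) / len(required)


def skill_diversity(member_skills, required):
    """Eq. 15: unique required skills offered, divided by the per-member total."""
    team_skills = set().union(*member_skills.values())
    offered = sum(len(skills & required) for skills in member_skills.values())
    # Assumption: a team offering no required skill gets a diversity of 0.
    return len(team_skills & required) / offered if offered else 0.0
```

A team of specialists whose required skills do not overlap reaches a diversity of 1, whereas members who duplicate each other’s required skills lower the value.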

4.5 Scoring Function Generation

This section describes the method for choosing the best machine learning algorithms to use as the team-fitness scoring function. The function is used to compute scores for candidate teams, allowing them to be ranked. Mathematically, the team-fitness scoring function \(F_{fitness}(d,u_{0},t) \in [0,1]\) quantifies the chance that a given team t would be a suitable team for a task described by d and led by user \(u_{0}\).

We frame team-fitness quantification as a binary classification problem, where a classifier predicts whether a given team is effective or non-effective. An effective team is defined as a team that leads to task success. Therefore, for a given task, if the corresponding team completes the task successfully (a successful task is defined in Section 4.2.2), the team is considered effective. The probability output by such a classifier is then used as the team-fitness score. To train the scoring function, teams from successful tasks (see Section 5.1) are treated as positive samples. For each positive sample, 100 negative samples are synthesized by filling each role with a random (role-compatible) user. Such a data labeling method allows the classifier to better recognize the characteristics of teams that lead to task success. For each task, the task description, task assignee, and team members are extracted and used to compute the team features discussed in Section 4.4.2.
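The negative-sample synthesis described above could be sketched as follows. The details are assumptions made for illustration: teams are represented as a mapping from role to username, a synthesized team identical to the positive team is discarded, duplicates among the negatives are allowed, and the function name `synthesize_negatives` and its parameters are hypothetical.

```python
import random


def synthesize_negatives(positive_team, role_candidates, n_negatives=100, seed=0):
    """Build negative teams by filling each role with a random compatible user.

    positive_team: dict mapping a role to the user who actually filled it.
    role_candidates: dict mapping a role to the list of users able to fill it.
    """
    rng = random.Random(seed)
    negatives = []
    attempts = 0
    # Cap the attempts so the loop ends even if almost no alternative exists.
    while len(negatives) < n_negatives and attempts < 100 * n_negatives:
        attempts += 1
        candidate = {role: rng.choice(role_candidates[role]) for role in positive_team}
        if candidate != positive_team:  # assumption: never reuse the positive team
            negatives.append(candidate)
    return negatives
```

Each returned team is then labeled as non-effective and passed through the same feature extraction as the positive team.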

A number of machine learning classification models drawn from diverse families of classification algorithms are considered, including Logistic Regression (LR), Decision Tree (DT), Random Forest (RF), Naive Bayes (NB), Quadratic Discriminant Analysis (QDA), k-Nearest Neighbors (kNN), and Artificial Neural Network (ANN). These traditional machine learning classification models have been widely used to validate the predictability of many classification tasks in many reputable papers (Tuarob et al. 2020; Tantithamthavorn et al. 2018; Jiarpakdee et al. 2020; McIntosh et al. 2019; Choetkiertikul et al. 2017). Regardless, the proposed framework is highly configurable in the sense that other classifiers could be tested without much change to the existing code.

Five-fold cross-validation is used to validate each model, using ROC as the main criterion. The best model for each dataset is then chosen as the team-fitness scoring function and is used together with the Max-Logit algorithm to recommend teams.

4.6 Team Recommendation

To recommend software teams, RECAST takes a task description, a task assignee, and the required roles as inputs, and outputs a list of K recommended teams, ranked by their team-fitness scores. Here, the variable K is configurable. A naive method would be to enumerate all the possible candidate teams from the given roles, compute the team-fitness score for each candidate team, and then rank all the teams based on the assigned scores. However, the number of possible team combinations can become exponentially large, especially for large-scale software communities that house thousands of software practitioners. To intelligently select a subset of all the possible team combinations for which to compute the team-fitness scores, we adopt the Max-Logit algorithm (Dai et al. 2015). This Nash-equilibrium-finding algorithm narrows down the subset of candidate teams by iteratively searching for a better team in each iteration. Max-Logit has also been used by Liu et al. (2014) to prune the search space for team recommendation. However, according to Liu et al. (2014), the original Max-Logit implementation was designed to output only one approximately best team after the algorithm terminates.

In this work, since we would like to recommend more than one candidate team, the original Max-Logit algorithm is modified to also keep track of enumerated teams and their corresponding scores, so that these teams can be part of the recommendation, as outlined in Algorithm 1. In Line 8, the newly enumerated teams and their costs are retained in RecTeams, so that they can be sorted, selected, and returned in Lines 15 and 16. The cost function Cost(T) is used to compute the cost of a given candidate team T, and is defined as the inverse of the team-fitness score. Interested readers are encouraged to consult the original definition of the Max-Logit algorithm by Monderer and Shapley (1996).
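The idea of retaining every enumerated team can be illustrated with the simplified sketch below. It is not a reproduction of Algorithm 1: the acceptance rule (always accept a cheaper team, accept a costlier one with probability exp(−β·Δcost)) is our reading of a Max-Logit-style update, and the parameter names (`beta`, `iterations`, `seed`), the random initial team, and the `fitness` callable are all assumptions made for this example.

```python
import math
import random


def recommend_teams(roles, candidates, fitness, k=10, iterations=1000, beta=1.0, seed=0):
    """Return up to k (team, cost) pairs, cheapest first.

    roles: list of role names; candidates: dict role -> list of users;
    fitness: callable mapping a team dict (role -> user) to a score in [0, 1].
    """
    rng = random.Random(seed)

    def cost(team):
        # Cost is the inverse of the team-fitness score (guarded against zero).
        return 1.0 / max(fitness(team), 1e-9)

    current = {role: rng.choice(candidates[role]) for role in roles}
    current_cost = cost(current)
    seen = {tuple(sorted(current.items())): current_cost}  # every enumerated team
    for _ in range(iterations):
        role = rng.choice(roles)  # change one role at a time
        proposal = dict(current)
        proposal[role] = rng.choice(candidates[role])
        proposal_cost = cost(proposal)
        seen[tuple(sorted(proposal.items()))] = proposal_cost
        delta = proposal_cost - current_cost
        accept = 1.0 if delta <= 0 else math.exp(-beta * delta)
        if rng.random() < accept:
            current, current_cost = proposal, proposal_cost
    ranked = sorted(seen.items(), key=lambda item: item[1])
    return [(dict(team), team_cost) for team, team_cost in ranked[:k]]
```

Because rejected proposals are also recorded, the returned list can contain teams that the search visited only briefly, which is what allows more than one team to be recommended.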

4.7 Evaluation Metrics for Team Recommendation

Precision@K, Mean Average Precision (MAP), Mean Reciprocal Rank (MRR), Mean Rank (MR), and Mean Rank of Hits (Hit MR) are used as the evaluation metrics. These metrics, when used in combination, have been shown to be effective for evaluating recommendation systems (Zhang et al. 2020b; Ragkhitwetsagul and Krinke 2019; Tuarob et al. 2015). Let Q be the set of test tasks (i.e., the query set), \(T(p \in Q) = \langle T_{1},T_{2},\ldots ,T_{K} \rangle \) be the ranked list of K recommended teams for task p, \(T_{0}\) be the actual team, and \(H(T_{0},T_{i})\) be a binary function that returns 1 if \(T_{i}\) is a match with \(T_{0}\) (i.e., a hit), and 0 (i.e., a miss) otherwise. In this research, two definitions of \(H(T_{0},T_{i})\) are used:

-

1.

Exact-Match (\(H_{EXACT}(T_{0},T_{i})\)): \(T_{i}\) is said to be an exact-match with \(T_{0}\) if the set of team members in \(T_{i}\) is the same as that in \(T_{0}\).

-

2.

Half-Match (\(H_{HALF}(T_{0},T_{i})\)): \(T_{i}\) is said to be a half-match with \(T_{0}\) if at least half of the team members of \(T_{i}\) overlap with those of \(T_{0}\).

The evaluation metrics are then defined as:

4.7.1 Precision@K and Mean Average Precision (MAP)

Precision is a well-known metric in the information retrieval literature, traditionally used to quantify how precise the predicted answers are. Traditional precision does not take the ordering of correct answers into account. Hence, for the recommendation task, precision is computed over the top K recommendations for each value of K, to identify the K that best balances the preciseness and variety of the recommended results.

The reported precision@K is the average of the individual precision values from all the test samples in Q.

Mean average precision (MAP) is the average of the precision@K, where K = 1, ..., 10.
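A minimal sketch of these two metrics under the two matching protocols is given below. It assumes that teams are compared as sets of usernames, that the precision of a single query at K is the fraction of hits among its top K teams, and that MAP averages the mean precision@K over K = 1, ..., 10 as stated above. All function names are hypothetical.

```python
def exact_match(actual, recommended):
    """H_EXACT: the recommended team has exactly the actual team's members."""
    return set(actual) == set(recommended)


def half_match(actual, recommended):
    """H_HALF: at least half of the recommended members are in the actual team."""
    rec = set(recommended)
    return 2 * len(rec & set(actual)) >= len(rec)


def precision_at_k(actual, ranked_teams, k, hit=exact_match):
    """Fraction of hits among the top-k recommended teams of one query."""
    return sum(1 for team in ranked_teams[:k] if hit(actual, team)) / k


def mean_precision_at_k(queries, k, hit=exact_match):
    """Average precision@k over (actual_team, ranked_teams) pairs."""
    return sum(precision_at_k(a, r, k, hit) for a, r in queries) / len(queries)


def mean_average_precision(queries, hit=exact_match, max_k=10):
    """Average of the mean precision@k for k = 1, ..., max_k."""
    return sum(mean_precision_at_k(queries, k, hit) for k in range(1, max_k + 1)) / max_k
```

Passing `hit=half_match` switches every metric from the exact-match to the half-match protocol without any other change.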

4.7.2 Mean Reciprocal Rank (MRR)

MRR takes the order of the first correct answer into account and is a reliable accuracy-based metric for the exact-match protocol. The reciprocal rank of a query is the multiplicative inverse of the rank of the first correct recommended team. The mean reciprocal rank is then the average of the reciprocal ranks over the query set Q. Formally, given the testing set Q, let \(rank_{p}\) be the rank of the first correct answer for the query p ∈ Q; then the MRR of the query set Q is defined as:

$$ MRR = \frac{1}{|Q|}\underset{p \in Q}{\sum}\frac{1}{rank_{p}} $$

If the set of recommended teams does not contain a match, then \(\frac {1}{rank_{p}}\) is defined to be 0.

4.7.3 Mean Rank (MR) and Mean Rank of Hits (Hit MR)

MR quantifies the average rankings of the first matched teams of the query set Q. If the ranking of the first matched team is larger than 100, its rank is set to 101 to avoid including heavy outliers into the computation. Hit MR is similar to MR, but only considers recommended lists that contain a matched team. Note that, since the Max-Logit algorithm does not enumerate all the possible combinations of the teams, there may be cases where the algorithm terminates (i.e. reaches the maximum number of iterations) without discovering a matched team.
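The rank-based metrics can be sketched from the rank of the first matched team of each query. The sketch assumes that a query with no matched team is represented by `None`, that such a query is counted as rank 101 in MR (the text only states the cap for ranks above 100), and that Hit MR applies the same cap. The function names are hypothetical.

```python
def mean_reciprocal_rank(ranks):
    """MRR; a query without a match (None) contributes 0."""
    return sum(1.0 / r if r is not None else 0.0 for r in ranks) / len(ranks)


def mean_rank(ranks, cap=100):
    """MR; ranks above the cap, and misses (an assumption), count as cap + 1."""
    capped = [cap + 1 if r is None or r > cap else r for r in ranks]
    return sum(capped) / len(capped)


def hit_mean_rank(ranks, cap=100):
    """Hit MR; only queries whose recommended list contains a matched team."""
    hits = [min(r, cap + 1) for r in ranks if r is not None]
    return sum(hits) / len(hits) if hits else None
```

For the first-hit ranks `[1, 2, None, 250]`, MRR is about 0.376, MR is 51.25, and Hit MR is about 34.67.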

5 Experiment, Results, and Discussion

This section presents the datasets used for model validation, evaluation protocols, experimental results, and related discussion. The evaluation is conducted in two parts. The first part is to identify the most suitable classification algorithm for the team-fitness scoring function. The latter validates the efficacy of the proposed software team recommendation mechanism. We focus on the following research questions:

- RQ1: Is it possible to quantify the ability of a software team to successfully resolve a given software development task? Every ranking-based recommendation algorithm relies on the ability to quantify the relevance of items, so they can be ranked and recommended (Zhang et al. 2020b). In the context of our research, such items are software team configurations. Hence, the ability to accurately quantify such a relevance score (i.e. the team-fitness score) for a given software team, so that more suitable teams are ranked higher than less suitable ones, is crucial for the further development of the software team recommendation algorithm.

- RQ2: Is it possible to recommend software teams that comply with the role requirements and are suitable for a given software development task? Once RQ1 is satisfactorily answered, the next question is how to integrate the team-fitness scoring function with a recommendation framework that takes the role requirements and the task description from the task assignee, and suggests a ranked list of top software teams for the task.

- RQ3: Can the proposed software team recommendation method be adopted for single-role recommendation tasks? A natural question is whether the proposed software team recommendation algorithm could be modified such that it recommends members only for particular roles, instead of the whole team. For example, the task assignee may want to choose a particular member for the Developer role, and let the recommender suggest suitable members for the Reviewer role. This research question explores the possibility of doing so.

5.1 Datasets

Three real-world software development datasets from three well-known open-source software systems (i.e. Moodle, Apache, and Atlassian) hosted on the Jira platform are used to validate our method. Table 3 summarizes the statistics of these datasets in terms of task sizes, components, roles, and data collection periods. A successful task is defined in Section 4.2.2. Note that Atlassian has a significantly smaller proportion of successful tasks (1.2%). This is because most Atlassian tasks do not clearly specify the role of each member. Hence, these are filtered out due to incompatibility with our assumption that a team member must have a role. On the other hand, most Moodle issues explicitly label each member with a role, and Moodle therefore has the largest proportion of successful teams among the three datasets. The successful issues of each dataset are separated chronologically into 80% training and 20% testing sets. The split is chronological to prevent the model from learning from future issues. The 80% training data is used to generate the knowledge graphs and evaluate the team-fitness scoring functions using 5-fold cross validation. The other 20% of the data is used to validate the team recommendation methods.
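The chronological 80/20 split described above can be sketched as follows. The task representation (a dictionary with a `resolved` date) and the function name are hypothetical and serve only to illustrate the idea; the paper does not state which timestamp is used for ordering.

```python
from datetime import date, timedelta


def chronological_split(tasks, train_fraction=0.8):
    """Order tasks by time and cut once, so no training task is newer than a test task."""
    ordered = sorted(tasks, key=lambda t: t["resolved"])  # "resolved" is an assumed field
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


# Ten toy tasks resolved on consecutive days, deliberately given in reverse order.
toy_tasks = [
    {"key": f"TASK-{i}", "resolved": date(2020, 1, 1) + timedelta(days=i)}
    for i in reversed(range(10))
]
train, test = chronological_split(toy_tasks)
```

Unlike a random split, this keeps every test task later than every training task, which mirrors the setting of recommending teams for future tasks.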

In the Moodle dataset, besides the assignee, the reviewer, tester, and integrator roles are explicitly labeled. In the Apache dataset, only the reviewer roles are explicitly given. In the Atlassian dataset, the developer, reviewer, and tester roles are explicitly annotated. Note that the developer roles in some systems such as Moodle and Apache are not explicitly labeled. To identify these developers, we assume that a team member who has committed code to the corresponding GitHub repository is a developer. The auto-generated GitHub commit logs can be easily extracted and parsed from a Jira issue.

Figure 7 illustrates the distribution of successful and non-successful tasks of the three datasets over time. Note that, while there are more non-successful tasks than successful ones at any given time, this does not mean that the non-successful tasks failed; they simply do not match the definition of a successful task in Section 4.2.2. Furthermore, successful tasks in the Apache and Atlassian datasets start to emerge quite late compared to the non-successful ones. This is because, before these periods, each task was either small or did not have sufficient information to identify the necessary members’ roles, and hence is treated as a non-successful task.

Figure 8 illustrates the distributions of successful and non-successful teams in terms of the team size (number of members including the task assignee) of the three datasets. A task with zero team size means that it does not have a designated task assignee and members’ roles cannot be identified.

5.2 Evaluation of Scoring Function

This section reports the empirical results of the proposed team-fitness scoring function, which answer RQ1: Is it possible to quantify the ability of a software team to successfully resolve a given software development task?

The probability output by a binary classifier, which predicts whether a candidate team is effective or not, is used as the team-fitness score. The score ranges in [0,1] where 1/0 indicates that the candidate team is perfectly suitable/unsuitable for the given task description and task assignee. Different machine learning classification algorithms are evaluated based on their ability to fit the training data, where positive samples are generated from actual successful teams, and the negative samples are synthesized by randomizing team members.
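As an illustration of how negative samples might be synthesized by randomizing team members, the sketch below redraws every role of a successful team from a pool of role-compatible candidates and rejects a draw that reproduces the actual team. The team representation (a role-to-member dictionary), the candidate pools, and the rejection step are assumptions for this example; the exact sampling procedure used in the paper may differ.

```python
import random


def synthesize_negative(team, candidates_by_role, rng, max_tries=100):
    """Return a randomized team with the same roles as `team`, or None if none is found."""
    for _ in range(max_tries):
        # Draw one role-compatible member per role (hypothetical candidate pools).
        fake = {role: rng.choice(candidates_by_role[role]) for role in team}
        if fake != team:  # a negative must differ from the real successful team
            return fake
    return None


actual_team = {"developer": "alice", "reviewer": "bob"}
pools = {
    "developer": ["alice", "carol", "dave"],
    "reviewer": ["bob", "erin", "frank"],
}
negative = synthesize_negative(actual_team, pools, random.Random(0))
```

Each positive team can be paired with one or more such negatives before training the binary classifier whose output probability serves as the team-fitness score.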

5-fold stratified cross-validation is performed on the training data. The area under the receiver operating characteristic curve (i.e. ROC-AUC) is used as the main evaluation metric, due to its ability to quantify the model fitness to the data, regardless of the cut-off probability. Furthermore, traditional classification metrics such as precision, recall, F1 of the positive class (successful teams), and MCC (Matthews Correlation Coefficient) are also reported. A ROC-AUC value of 0.5 is equivalent to random guessing, while a value of 1.0 indicates a perfect fit.
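For reference, the cut-off dependent metrics named above can be computed from the confusion-matrix counts of the positive class (successful teams). The sketch below uses the standard textbook definitions; the function name and the convention of returning 0 when a denominator vanishes are choices made for this example.

```python
import math


def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, and F1 of the positive class, plus MCC, from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}


# Toy imbalanced example: 10 successful teams among 100 candidates.
metrics = classification_metrics(tp=8, fp=2, fn=2, tn=88)
```

Because MCC uses all four cells of the confusion matrix, it is less flattered by a large number of true negatives than accuracy would be, which matters for imbalanced data.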

Table 4 summarizes the average ROC-AUC, precision, recall, F1, and MCC values of each classification model on the three datasets. In terms of precision, RF is the top performer for the Moodle and Apache datasets, while ANN yields the highest precision for the Atlassian dataset. It is worth noting that some classifiers, namely LR, NB, and QDA, have relatively poor precision in general. We suspect that this may be caused by the class imbalance issues in the training data that bias the models towards the majority class (i.e. non-successful). In terms of recall, LR gives the highest recall for the Moodle and Atlassian datasets, while DT does so for the Apache dataset.

F1 is a single metric that reflects both precision and recall. We found that RF yields the highest F1 for both the Moodle and Atlassian datasets, with F1 of 0.890 and 0.460 respectively, while DT does so for Apache with F1 of 0.991. Table 5 summarizes the p-values from the Mann-Whitney U Test between RF and the other classification algorithms in terms of ROC, precision, recall, and F1. While DT yields the top F1 for the Apache dataset (i.e. F1 = 0.991), it exceeds that of RF (i.e. F1 = 0.986) by a negligible 0.5%. The statistical tests in Table 5 also confirm that the F1 values of RF and DT are not significantly different, since the corresponding p-values are greater than 0.05. Hence, RF can be regarded as the best classifier for all the datasets in terms of F1.

It is worth noting that while DT, RF, kNN, and ANN yield reasonably high F1 (i.e. > 0.8 for Moodle and Apache, and > 0.4 for Atlassian), the other classifiers have noticeably poor F1. Most of these poor-F1 classifiers give high recall but very low precision. This may be caused by the imbalance of the datasets, which leads to a bias towards the majority class and could be mitigated using data balancing techniques such as SMOTE and under-sampling of the majority classes (Tantithamthavorn et al. 2018). However, from a preliminary investigation, we found that such data balancing techniques, while marginally improving the performance of some classifiers, did not improve the best classification results yielded by RF. Table 6 compares the classification results using different data-balancing methods, including SMOTE, under-sampling of the majority class, and over-sampling of the minority class, using RF as the classifier and the 5-fold cross-validation protocol. According to the results, not employing any data-balancing method on the training data (i.e. None) yields the highest performance in almost all the metrics, except for recall, where the under-sampling method gives a better value. A careful investigation of the impact of data imbalance is left for future work, as it does not affect the research objectives of this paper.
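To make one of the compared data-balancing options concrete, random under-sampling of the majority class can be sketched as follows. This is a generic illustration with hypothetical names; it is not the configuration used to produce Table 6, and SMOTE or over-sampling would require a different procedure.

```python
import random


def random_undersample(samples, labels, rng):
    """Randomly drop majority-class samples until both classes have the same size."""
    pos = [s for s, y in zip(samples, labels) if y == 1]
    neg = [s for s, y in zip(samples, labels) if y == 0]
    if len(pos) <= len(neg):
        minority, minority_label, majority = pos, 1, neg
    else:
        minority, minority_label, majority = neg, 0, pos
    kept = rng.sample(majority, len(minority))  # sampling without replacement
    balanced = [(s, minority_label) for s in minority]
    balanced += [(s, 1 - minority_label) for s in kept]
    rng.shuffle(balanced)
    return [s for s, _ in balanced], [y for _, y in balanced]


# Toy data: 2 positive and 10 negative samples, identified by their index.
toy_samples = list(range(12))
toy_labels = [1, 1] + [0] * 10
balanced_x, balanced_y = random_undersample(toy_samples, toy_labels, random.Random(0))
```

Balancing should only ever be applied to the training folds, so that the test folds keep the original class distribution.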

The area under the ROC curve (i.e. ROC) measures the overall fitness of the classification model to the training data regardless of the cut-off probability. While precision, recall, and F1 have been used as the main criteria for selecting classifiers, in our proposed method, we do not utilize the final classification results (i.e. effective vs. non-effective teams), but rather use the raw probability, which is the primary output of the classification algorithm, for ranking purposes. In other words, changing the cut-off probability affects precision, recall, and F1, but not the ROC. Therefore, ROC is used as the main evaluation metric to select the classifier for the team-fitness scoring function. Note that ROC has also been used as the main criterion for many classification tasks to measure the overall discriminative power of the model rather than its final classification results (Hassan et al. 2017; Tuarob et al. 2020). Furthermore, if the model is used for ranking purposes, even with the presence of imbalanced data, the ordering of what the model perceives as positive cases is preserved and is unaffected by the choice of cut-off probability (Jeni et al. 2013). From the experimental results in Table 4, RF gives the best ROC for all three datasets, with ROC values of 0.993, 0.994, and 0.981 for Moodle, Apache, and Atlassian respectively. It is worth noting that ROC values appear to be high even for generally ineffective classifiers such as LR, NB, and QDA. This is expected due to the highly imbalanced nature of the datasets. Hence, other classification metrics such as precision, recall, and F1 are also used as secondary criteria for the model selection.
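The point that the cut-off probability changes precision and recall but not the ROC can be checked on a toy example. The sketch below computes ROC-AUC through its rank interpretation, i.e. the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one. The scores, labels, and function names are invented for illustration.

```python
def roc_auc(scores, labels):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def precision_recall(scores, labels, cutoff):
    """Precision and recall of the positive class at a given cut-off probability."""
    predicted = [1 if s >= cutoff else 0 for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(predicted, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(predicted, labels) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


toy_scores = [0.9, 0.8, 0.35, 0.3, 0.2, 0.1]  # invented team-fitness scores
toy_truth = [1, 1, 0, 1, 0, 0]                # 1 = successful team

auc = roc_auc(toy_scores, toy_truth)                    # no cut-off involved
strict = precision_recall(toy_scores, toy_truth, 0.5)   # higher cut-off
lenient = precision_recall(toy_scores, toy_truth, 0.25) # lower cut-off
```

Here the stricter cut-off gives perfect precision with lower recall and the lenient one gives the reverse, while the AUC is a single value that depends only on the ordering of the scores.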

From the above experimental results, it is evident that RF yields the highest ROC for all the datasets. Furthermore, in terms of classification performance, RF also achieves relatively high F1 compared with other classifiers even with the presence of imbalanced data. This could be due to the following reasons:

- RF is built with a multitude of decision trees, which is suitable for rule-extracted features, each of which indicates a soft signal that represents the class attribute (Breiman 2001). Since all of our features are computed using a set of rules and mathematical expressions, rather than using natural representations such as term-weights (for documents) or RGB values (for images), it is not surprising that tree-based classifiers such as RF and DT would be suitable for the features characterizing our datasets.

- RF has the capability to avoid over-fitting issues due to the randomness mechanism (Yi et al. 2019). This allows RF to achieve better recall while maintaining high precision.

- RF has a built-in automatic feature-selection mechanism (Zhang et al. 2020a). Specifically, randomly selecting a subset of features to construct a decision tree enables the algorithm to recognize important features, resulting in overall high classification performance.

- RF has been reported to have the ability to tolerate class imbalance without having to rely on data balancing techniques (Khoshgoftaar et al. 2007). Such capability is especially crucial to handle our imbalanced datasets.

This analysis also aligns with previous studies that found RF to be a suitable classifier for tasks where features are rule-extracted and datasets are imbalanced (Tuarob et al. 2018; Tuarob et al. 2020). Therefore, RF is used to generate the team-fitness scoring functions for the subsequent team recommendation evaluation.

It is also worth noting that classification performance for the Atlassian dataset is much lower than that of the other two datasets in terms of precision, recall, and F1. This could be due to the insufficient training data of the Atlassian dataset with only 2,917 positive samples (due to excessive removal of incomplete software teams), compared to Moodle and Apache with 27,284 and 43,196 positive samples respectively. Therefore, as part of possible future work, we could harvest more samples from such a software system, or investigate semi-supervised techniques to make use of unlabelled samples (Zhang et al. 2017).

5.3 Evaluation of Team Recommendation

This section explains the evaluation protocol and discusses the empirical results of the proposed software team recommendation algorithm, which answer RQ2: Is it possible to recommend software teams that comply with the role requirements and are suitable for a given software development task?

In the software team recommendation task, RECAST takes a task description, task assignee, and required team roles as input, and recommends a ranked list of K teams based on the team-fitness scores. To evaluate the efficacy of this task, standard evaluation protocols for recommender systems are employed. Specifically, the method is validated based on its ability to guess the team configurations of the future (successful) tasks in the testing set. For each dataset (see Section 5.1), the 20% most recent tasks are allocated for testing, while the other 80% is used for generating the required knowledge networks as discussed in Section 4.3 and training the scoring function (see Section 4.5).
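The ranking and matching step of this protocol can be sketched as follows, under simplifying assumptions: the candidate teams are already given as a list, a team is a role-to-member dictionary, and a recommended team counts as a match only when every role is filled by the same member as in the actual team. The sketch sorts a fixed candidate list and does not reproduce the Max-Logit enumeration; the scoring function here is a stand-in lookup table, not the learned team-fitness model.

```python
from typing import Callable, Dict, List, Optional

Team = Dict[str, str]  # hypothetical representation: role -> member id


def top_k_teams(candidates: List[Team], score: Callable[[Team], float], k: int) -> List[Team]:
    """Rank candidate teams by descending team-fitness score and keep the best k."""
    return sorted(candidates, key=score, reverse=True)[:k]


def first_match_rank(recommended: List[Team], actual: Team) -> Optional[int]:
    """1-based rank of the first recommended team identical to the actual team."""
    for rank, team in enumerate(recommended, start=1):
        if team == actual:
            return rank
    return None


toy_candidates = [
    {"developer": "alice", "reviewer": "bob"},
    {"developer": "alice", "reviewer": "erin"},
    {"developer": "carol", "reviewer": "bob"},
]
# Stand-in scores keyed by (developer, reviewer); purely illustrative values.
toy_scores = {("alice", "bob"): 0.70, ("alice", "erin"): 0.90, ("carol", "bob"): 0.20}
score_fn = lambda team: toy_scores[(team["developer"], team["reviewer"])]

ranked = top_k_teams(toy_candidates, score_fn, k=2)
rank = first_match_rank(ranked, {"developer": "alice", "reviewer": "bob"})
```

The resulting rank per test task (or `None` when the top-K list contains no match) is the quantity that rank-based metrics such as MRR aggregate.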

5.3.1 Baseline Algorithms

To the best of our knowledge, no previously proposed team recommendation algorithm is specifically designed for collaborative software development. Hence, in terms of recommendation performance, we compare our proposed algorithm with a state-of-the-art team recommendation algorithm proposed by Liu et al. (2014) (i.e. Liu), and a baseline that randomly selects role-compatible users to fill in each required role (i.e. Random).