Abstract

Forestry best management practices (BMPs) have proven to be very effective in protecting adjacent stream water quality at the plot scale. However, our knowledge is incomplete about the effectiveness of forestry BMPs in large watersheds where industrial forests are intensively managed. In this study, we compared long-term concentrations and loadings of total suspended solids (TSS), nitrate/nitrite nitrogen (NO3NO2–N), total Kjeldahl nitrogen (TKN), and total phosphorus (TP) before (1978–1988) and after extensive implementation of forestry BMPs (1994–2008) at the outlet of a 5000-km2 river basin that is predominately covered by intensively managed pine forests in Central Louisiana, USA. Our study shows that after extensive BMP implementation, both concentrations and loads of TSS in the basin outlet decreased significantly from 34 to 25 mg L−1 and from 55,000 to 36,700 t year−1, respectively. However, no significant difference was found in NO3NO2–N, TKN, and TP concentrations between the two periods. The results of nutrient loadings varied, whereby the annual nitrogen loading declined without significant differences (from 1790 to 1600 t year−1 for TKN and from 176 to 158 t year−1 for NO3NO2–N, respectively) but the annual TP loading increased significantly (from 152 to 192 t year−1) after BMP implementation. The increase in TP loading is likely due to an increased application of phosphorus fertilizer, which offset BMPs’ effects especially during high-flow conditions. These results strongly suggest that current forestry BMPs in this region are effective in reducing sediment loading, but current BMP guidelines for fertilization and nutrient management need to be reviewed and improved.

Introduction

The degradation of water quality in various water bodies is one of the world’s most concerning environmental problems. Several decades of research have demonstrated that water quality degradation is strongly associated with land-use activities, such as intensive agriculture and forest practices (Hewlett et al. 1984; Likens et al. 1970), urbanization (Foley et al. 2005), and building and road construction (Kreutzweiser et al. 2005). Agricultural and forestry activities can increase erosion and sediment loads, and leach nutrients and chemicals to groundwater, streams, and rivers (Carpenter et al. 1998; Foley et al. 2005; McCoy et al. 2015). Urbanization substantially degrades water quality, especially where wastewater treatment is absent (Foley et al. 2005). The resulting degraded water quality has led to dissolved oxygen depletion (Giri and Qiu 2016; Ice and Sugden 2003), increased algal blooms (Tsegaye et al. 2006), impairments to water supplies (Foley et al. 2005), and an increase in waterborne disease (Bennett et al. 2001; Townsend et al. 2003).

Waters from forested landscapes are generally considered of high quality, but that may not be the case with industrial forest land (Anderson and Lockaby 2011; Ice et al. 1997). Forest operations, such as harvesting, yarding, road construction, and site preparation, can have adverse impacts on the water quality of adjacent water bodies (Appelboom et al. 2002; Morris et al. 2015; Wear et al. 2013). Surface soil erosion has been cited as the most important water quality concern related to forest practices in the USA (Binkley and Brown 1993; Yoho 1980). It not only has profound effects on stream headwater environments but may also have substantial effects on areas far downstream, because suspended sediments can travel exceptionally long distances (Hotta et al. 2007). Nutrient pollution caused by silvicultural activities in forest waters is also an issue. Early experimental studies of nutrient responses to timber harvest reported dramatic nutrient increases in forest waters after harvesting (Likens et al. 1970). Since forest watersheds provide 80% of freshwater resources in the USA (Ice and Binkley 2003; US EPA 2000), water quality in forested watersheds has received increasing attention in recent decades. As a result, forestry best management practices (BMPs) are implemented to prevent or reduce the adverse impacts of forest activities on water quality while permitting the intended forest management activities to occur (Wang et al. 2004).

There have been significant research efforts aimed at verifying the effectiveness of forestry BMPs, although most of them were conducted at the plot scale and/or for short terms (Anderson and Lockaby 2011; Aust and Blinn 2004; Cristan et al. 2016). Forestry BMPs are usually region specific, but most of them share similar prescriptions. Most previous studies (e.g., Cristan et al. 2016) have demonstrated that when constructed correctly and in adequate numbers, forestry BMPs reduced negative impacts on water quality, including surface erosion (Arthur et al. 1998; Brown et al. 2013; Lang et al. 2015) and nutrient runoff (Dahlgren 1998; Hewlett et al. 1984; McBroom et al. 2008; Secoges et al. 2013; Stednick 2008; Wynn et al. 2000), and helped protect stream biologic integrity (DaSilva et al. 2013; Vowell 2001). Implementation rates and quality are critical to BMP effectiveness, which can be enhanced with pre-operation planning and with the involvement of registered professionals (Cristan et al. 2016). However, in contrast to the large number of detailed studies on the short-term effectiveness of BMPs at the plot scale, only limited attention has been given to the long-term performance of BMPs at a basin scale.

This is especially the case for the Southern Coastal Plain region of the USA, home to some of the most productive forests in the country, which are intensively managed for forest production. Southern forests are a vital factor in maintaining and improving water quality in the South, and their watersheds have consistently been shown to have lower sediment and nutrient yields with better aquatic biological conditions than non-forested watersheds (West 2002). However, the wide application of forestry management operations in combination with the abundant water resources in the region increases the potential for water quality impacts (Grace 2005). It has been reported that forest operations accounted for 5900 km of impaired rivers and streams in the South, with the majority of more serious water quality problems located in Louisiana, Mississippi, and Oklahoma (West 2002; NASF and SAF 2000). In this context, the South would likely be the optimal region to evaluate the extent and nature of the water quality impacts of forest operations. As discussed above, numerous short-term and/or plot-scale studies have been undertaken to evaluate the effectiveness of forestry BMPs, but their results do not necessarily reflect those of a watershed-scale or river-basin-scale study. In addition, some effects found in those plot-scale and/or short-term studies are not necessarily observable in long-term, basin-scale cases. To our knowledge, very few studies have examined the long-term effectiveness of forestry BMPs in the Gulf Coastal Plain region, and none exists for the state of Louisiana at a basin scale.

Therefore, to gain a better understanding of the effectiveness of forestry BMPs in the South and Southeast USA, this study was conducted in a forestry-dominated basin in central Louisiana to assess the long-term effectiveness of forestry BMPs. According to the manual of recommended forestry BMPs for Louisiana, forestry BMP compliance in the state was below 10% before 1989, and increased steadily to more than 80% by 1994 (LDAF and LDEQ 2000). Based on this information, this study compared the long-term response of stream water quality to industrial forest operations before (1978–1988) and after (1994–2008) the extensive implementation of forestry BMPs. The goal of this study was to determine whether the current forestry BMPs are effective in reducing sediment, nitrogen, and phosphorus levels and loads at a basin scale.

Methods

Site description

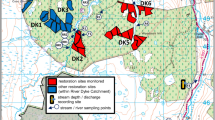

In this study, we gathered 1978–2008 historical records on water quality of the Little River, which drains approximately 5000 km2 of land in central Louisiana, USA. The Little River is formed by the confluence of the Dugdemona River and Castor Creek, which together drain approximately two thirds of the Little River basin, at a geographical location of 92° 21′ 46″ W and 31° 47′ 48″ N. The river flows initially southeastwardly in north-central Louisiana and turns east-northeastwardly into Catahoula Lake (Fig. 1). The basin is composed of rounded hills in the North, flat-lying deposits in the Central region, and dissected terrace deposits in the South. Specifically, the uppermost part of the Little River above Catahoula Lake flows through a mixed oak-gum bottomland forest interspersed with stands of bald cypress (Gaydos et al. 1973; LDEQ 2002). Climate in the Little River Basin can be classified as humid subtropical with long hot summers and short mild winters. The long-term annual precipitation is about 1470 mm, of which about one quarter travels as surface runoff to streams (Gaydos et al. 1973).

BMP implementation and separation of two analysis periods

Headwaters of the Little River are mostly forested and mainly affected by intensively managed industrial forest activities (DaSilva et al. 2013; Mast and Turk 1999). Voluntary forestry BMPs were developed by the State of Louisiana in 1988 (Bourland 1988) and revised in 2000 (LDAF and LDEQ 2000) to protect water quality during forestry operations. Since the implementation of forestry BMPs, the Louisiana Department of Agriculture and Forestry has completed eight surveys, with BMP compliance rates reported as < 10, 51, 80, 83, 96, 96, 96, and 74% (95%) in 1988, 1991, 1994, 1997, 2000, 2002, 2003, and 2009, respectively. It is worth noting that although the 2009 BMP survey (Kaller 2009) reported a decline in full BMP implementation rates (74%), over 95% of individually rated BMPs met or exceeded minimum BMP requirements, which is comparable with previous surveys. Therefore, in this study, we divided the 1978–2008 timespan into two periods: a pre-regulation period from 1978 to 1988 and a post-regulation period from 1994 to 2008. We did not include data from 1989 to 1993 in this study in order to avoid possible interference from the transitional time from no BMP implementation to full BMP implementation. Additionally, the variability in land-use changes was relatively small during the study period (Mast and Turk 1999), and annual production of sawtimber was reported to remain relatively constant in the regulation years (Nature Conservancy 2007). Based on those governmental reports and the confirmation from the landowner of the forestland (Plum Creek Timber, Inc.), it was assumed that changes in variability of harvest intensity were not significant during the studied period.

Louisiana’s forestry best management practices include a series of guidelines for forest operations during the entire rotation, from timber harvesting to site preparation, reforestation, fertilization, and other silvicultural treatments. Streamside management zones (SMZs) were identified and delineated during pre-harvesting planning and flagged and marked adjacent to all perennial and intermittent streams before harvesting. In the studied region, SMZs were generally 10.7 m for intermittent streams, and 15.2 and 30.5 m for perennial streams that were less and more than 6.1 m in width, respectively. Harvesting could occur within the SMZs of perennial streams, and precautions were taken along perennial streams to protect the remaining trees within the SMZs. Roads were located outside the SMZs. During harvesting, trees were felled directionally away from water bodies. Log landings were located where skidding would avoid road ditches and sensitive sites to have a minimal impact on the natural drainage pattern. For regeneration, intensive site preparation activities were avoided on steep slopes or highly erosive soils, and were not allowed to enter SMZs or cross stream channels (Brown 2010; LDAF and LDEQ 2000).

Data collection

Daily precipitation records were collected from three weather stations administered by the National Oceanic and Atmospheric Administration, including LA Winnfield 3 N (ID: USC00169803), LA Jonesboro 4 ENE (ID: USC00164732), and LA Columbia Lock (ID: USC00161979) during 1978–2008 (Fig. 1). Monthly and annual precipitation for each station was calculated separately first, and then the averages from the three stations were used to represent the precipitation level in the studied region for further analysis.

Daily river discharge data were obtained for the study period from the United States Geological Survey (USGS) at the station of Little River near Rochelle, LA (USGS station No.: 07372200). Water quality data were obtained for the same time period from the Louisiana Department of Environmental Quality (LDEQ) for the Little River south of Rogers (LDEQ site No. LA081602_00) (Fig. 1). Water quality data were collected on a monthly basis, including a variety of field and laboratory parameters. Total suspended solids (TSS), total Kjeldahl nitrogen (TKN, the sum of organic nitrogen and ammonia nitrogen), nitrate plus nitrite nitrogen (NO3NO2–N), and total phosphorus (TP) were used in this study. Although discharge data and water quality data were not measured at the exact same location, there was no major input or output between the USGS and LDEQ sites; therefore, the discharge at the USGS site was used to compute nutrient and sediment mass loading using the LDEQ’s water quality data. Details in methods for field sample collections and laboratory analysis can be found in LDEQ’s Quality Assurance Project Plan for the Ambient Water Quality Monitoring Network (LDEQ 2014).

Data analysis

The relationship between the frequency and magnitude of daily streamflow was depicted through the Weibull plotting position formula, which was adopted as the standard plotting position method by the US Water Resources Council in 1981 (Water Resources Council 1981). This method plots the full range of streamflow conditions measured at a monitoring location against the probability of exceeding a given flow rate for a given time period (Vogel and Fennessey 1995). In this study, the exceedance probability was calculated separately for the two periods (before and after the wide implementation of forestry BMPs). All data used for calculation were based on measured daily discharge (in m3 s−1) from the USGS station. In the generated flow duration curves (FDC, Fig. 2), flow conditions were classified as “high flows,” “moist conditions,” “mid-range flows,” “dry conditions,” and “low flows,” which corresponded to exceedance probabilities less than 10, 10–40, 40–60, 60–90, and greater than 90%, respectively. Samples for all parameters of interest at the LDEQ site were collected across all five flow conditions, and in a comparable manner for both the pre-regulation and post-regulation periods.
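
The flow duration curve construction described above can be sketched in plain Python. This is a minimal illustration, not the procedure actually run in the study: the function names are hypothetical, tied flows are simply ranked in sorted order, and the treatment of values falling exactly on a class boundary (10, 40, 60, or 90%) is an assumption.

```python
def flow_duration_curve(daily_discharge):
    """Return (discharge, exceedance %) pairs using the Weibull plotting position.

    P = 100 * m / (n + 1), where m is the rank of a flow (1 = largest)
    and n is the number of daily values in the period.
    """
    ranked = sorted(daily_discharge, reverse=True)
    n = len(ranked)
    return [(q, 100.0 * m / (n + 1)) for m, q in enumerate(ranked, start=1)]


def flow_condition(exceedance_pct):
    """Map an exceedance probability (%) to one of the five flow classes.

    Boundary handling (which class owns exactly 10, 40, 60, or 90%) is an
    assumption; the text only gives the ranges.
    """
    if exceedance_pct < 10:
        return "high flows"
    if exceedance_pct < 40:
        return "moist conditions"
    if exceedance_pct < 60:
        return "mid-range flows"
    if exceedance_pct <= 90:
        return "dry conditions"
    return "low flows"


if __name__ == "__main__":
    # Hypothetical daily discharges in m3 s-1, for illustration only.
    flows = [3.2, 150.0, 41.5, 0.9, 220.0, 67.0, 12.4, 5.1, 88.0]
    for q, p in flow_duration_curve(flows):
        print(f"{q:8.1f}  {p:5.1f}%  {flow_condition(p)}")
```

Running the curve separately on the 1978–1988 and 1994–2008 discharge records would give the two curves compared in Fig. 2.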

Studies have found a close positive relationship of riverine TSS, TKN, NO3NO2–N, and TP loads with the river discharge. The general log-linear regression model can be described in an equation as below (Xu 2013; Joshi and Xu 2015):
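
A log-linear rating curve of this kind is commonly written in the following form, assuming natural logarithms and using the coefficient names $a_i$ and $b_i$ as assumed notation:

$$
\ln S_i(t) = a_i + b_i \ln Q_{day} + \varepsilon(t) \tag{1}
$$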

where Q_day represents daily discharge in liters, S_i(t) represents daily loads in grams, i is the type of element, and ε(t) is an error term assumed to be normally distributed. To account for changes caused by the application of BMPs, rating curves were developed for each element (i.e., TSS, TKN, NO3NO2–N, and TP) separately for the pre-BMP implementation period (1978–1988) and the post-BMP implementation period (1994–2008). The potential log bias in the transformation procedure was checked with the correction factor (CF) given by Duan (1983) and modified by Gray et al. (2015):
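
In its standard form for a regression fitted in natural logarithms (an assumption about the log base used here), Duan's smearing estimator is:

$$
CF = \frac{1}{n} \sum_{i=1}^{n} \exp(e_i)
$$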

where e_i is the difference between the ith field observation and the model estimate, and n is the total number of samples used in the given rating curve. The fitted parameters and the statistical measures of fitness are summarized in Table 1. However, since the application of the CF did not result in better estimates (Table 1) and due to the previous arguments regarding the reliability of the correction factors (Khaleghi et al. 2015), CF was not used in this study for load estimates.
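
The rating-curve fit and the log-bias check can be sketched as follows. This is an illustrative reimplementation under stated assumptions, not the study's SAS code: it assumes natural logarithms, a simple linear fit of ln(load) on ln(discharge) by ordinary least squares, and the standard Duan smearing factor without any later modification. All function names are hypothetical.

```python
import math


def fit_rating_curve(discharge, load):
    """Ordinary least squares fit of ln(load) = a + b * ln(discharge).

    Returns (a, b, residuals), with residuals in log space.
    """
    x = [math.log(q) for q in discharge]
    y = [math.log(s) for s in load]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    residuals = [yi - (a + b * xi) for xi, yi in zip(x, y)]
    return a, b, residuals


def smearing_factor(residuals):
    """Duan (1983) smearing factor: mean of exp(e_i) over log-space residuals."""
    return sum(math.exp(e) for e in residuals) / len(residuals)


def predict_load(discharge, a, b, cf=1.0):
    """Back-transform the fitted curve; cf=1.0 means no bias correction."""
    return cf * math.exp(a + b * math.log(discharge))


if __name__ == "__main__":
    # Hypothetical paired samples (daily discharge, daily load), illustration only.
    q = [5.0, 20.0, 60.0, 150.0, 400.0]
    s = [12.0, 95.0, 410.0, 1500.0, 6200.0]
    a, b, resid = fit_rating_curve(q, s)
    cf = smearing_factor(resid)
    print(f"a={a:.3f} b={b:.3f} CF={cf:.4f}")
    print(f"uncorrected load at Q=100: {predict_load(100.0, a, b):.1f}")
```

Leaving `cf` at its default of 1.0 mirrors the choice reported above, where the correction factor was computed but not applied to the load estimates. Fitting each period separately means calling `fit_rating_curve` once on the 1978–1988 samples and once on the 1994–2008 samples.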

Long-term monthly river discharge and monthly concentrations of TSS, TKN, NO3NO2–N, and TP were calculated based on the field measurements from USGS and LDEQ. Daily mass transports of TSS, TKN, NO3NO2–N, and TP during “high flow” conditions and annual mass transports of all four parameters were estimated based on Eq. (1). In this study, if more than 15 days of data (any 15 days) were missing in a year, the total mass transport for that year was considered invalid and excluded from the results (5 years were removed). Annual nitrogen to phosphorus molar ratios were also calculated based on mass transports of total nitrogen (TN, the sum of NO3NO2–N and TKN) and TP to understand possible changes in nutrient sources.
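
The annual aggregation rule and the molar ratio can be illustrated with a short sketch. The function names are hypothetical, and summing only the available days when 15 or fewer are missing is an assumption, since the text does not say how short gaps were handled.

```python
N_MOLAR_MASS = 14.007  # g mol-1, nitrogen
P_MOLAR_MASS = 30.974  # g mol-1, phosphorus


def annual_load(daily_loads, max_missing_days=15):
    """Sum daily loads for one year; None marks a missing day.

    Returns None (year excluded) when more than max_missing_days are missing.
    Summing the remaining days without gap filling is an assumption.
    """
    missing = sum(1 for value in daily_loads if value is None)
    if missing > max_missing_days:
        return None
    return sum(value for value in daily_loads if value is not None)


def tn_tp_molar_ratio(tkn_load, nox_load, tp_load):
    """Molar TN/TP ratio from mass loads given in the same mass unit.

    TN is the sum of TKN and nitrate plus nitrite nitrogen.
    """
    tn_load = tkn_load + nox_load
    return (tn_load / N_MOLAR_MASS) / (tp_load / P_MOLAR_MASS)


if __name__ == "__main__":
    # Mean annual loads (t) reported for 1978-1988: TKN 1790, NO3NO2-N 176, TP 152.
    print(round(tn_tp_molar_ratio(1790.0, 176.0, 152.0), 1))
```

Applied to the mean pre-BMP loads, this gives a ratio of about 28.6, close to the reported pre-BMP value of 28. The two need not match exactly, because a ratio of mean loads differs from a mean of annual ratios.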

The Student’s t test was used to determine statistically significant differences between 1978–1988 and 1994–2008 in long-term annual and seasonal precipitation and river discharge. A one-way analysis of covariance (ANCOVA) was conducted to compare the effectiveness of forestry BMPs on TSS, TKN, NO3NO2–N, and TP concentrations and loads while controlling for precipitation and river discharge. Specifically, for annual and seasonal concentrations, the covariate was river discharge; for annual and seasonal loads, daily mass transports during “high flow” conditions, and TN/TP ratios, the covariate was precipitation. Levene’s test and normality checks were carried out to make sure that assumptions were met. For all presented results, in addition to p values, standard errors and 95% confidence intervals were provided as evidence for a difference (Nuzzo 2014; Sterne and Smith 2001).

The Mann–Kendall trend model was used to detect trends in the time series of the annual values of TSS, TKN, NO3NO2–N, and TP concentrations for both pre-regulation and post-regulation periods (Kendall 1975; Mann 1945), which is derived statistically as:
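
In its standard form, with $n$ (an assumed symbol) denoting the number of years in the series, the statistic is:

$$
S = \sum_{k=1}^{n-1} \sum_{j=k+1}^{n} \operatorname{sgn}(x_j - x_k),
\qquad
\operatorname{sgn}(x_j - x_k) =
\begin{cases}
+1 & \text{if } x_j - x_k > 0 \\
0 & \text{if } x_j - x_k = 0 \\
-1 & \text{if } x_j - x_k < 0
\end{cases}
$$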

where S is the Mann–Kendall test value, and x_j and x_k are the annual median values in years j and k (j > k), respectively. Annual median values instead of mean values were used for calculation to avoid the disturbance from any outliers that may be caused by the dilution effect of river discharge.

The Mann–Kendall test was used to identify positive (increasing) or negative (decreasing) trends in concentrations of TSS, TKN, NO3NO2–N, and TP. To estimate the true slope of an existing trend, Sen’s nonparametric method (1968) was used, which was calculated as:
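
Sen's method assumes a linear trend, conventionally written as:

$$
f(t) = Qt + B
$$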

where Q is the slope and B is a constant. Q is calculated as:
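
The conventional pairwise slope estimate is:

$$
Q_i = \frac{X_j - X_k}{j - k} \tag{6}
$$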

In Eq. (6), i = 1, 2, 3, …, N, and X_j and X_k are the data values at times j and k (j > k), respectively. The median of those N values of Q_i is Sen’s estimator, given as:
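
In the conventional form, with the $N$ slopes ranked from smallest to largest:

$$
Q =
\begin{cases}
Q_{[(N+1)/2]} & \text{if } N \text{ is odd} \\
\frac{1}{2}\left( Q_{[N/2]} + Q_{[(N+2)/2]} \right) & \text{if } N \text{ is even}
\end{cases}
$$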

All analyses were performed with the SAS Statistical Software package (SAS 1996).
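
For readers without access to SAS, the Mann–Kendall statistic and Sen's slope described above can be sketched in plain Python. This is an illustrative reimplementation, not the procedure used in the study: function names are hypothetical, the series is assumed to be equally spaced with one value per year, and the variance used for the normal approximation omits the correction for tied values.

```python
import math
from statistics import median


def _sign(value):
    """Return +1, 0, or -1 according to the sign of value."""
    return (value > 0) - (value < 0)


def mann_kendall_s(series):
    """Mann-Kendall S: sum of sgn(x_j - x_k) over all pairs with j > k."""
    n = len(series)
    return sum(
        _sign(series[j] - series[k])
        for k in range(n - 1)
        for j in range(k + 1, n)
    )


def mann_kendall_z(series):
    """Normal-approximation Z score for S (no tie correction, an assumption)."""
    n = len(series)
    s = mann_kendall_s(series)
    variance = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        return (s - 1) / math.sqrt(variance)
    if s < 0:
        return (s + 1) / math.sqrt(variance)
    return 0.0


def sen_slope(series):
    """Sen's estimator: median of (x_j - x_k) / (j - k) over all pairs j > k."""
    n = len(series)
    slopes = [
        (series[j] - series[k]) / (j - k)
        for k in range(n - 1)
        for j in range(k + 1, n)
    ]
    return median(slopes)


if __name__ == "__main__":
    # Hypothetical annual median TSS concentrations (mg L-1), illustration only.
    annual_medians = [31.0, 28.5, 29.0, 26.0, 24.5, 25.0, 22.0, 21.5, 20.0, 19.0]
    print(mann_kendall_s(annual_medians))
    print(round(mann_kendall_z(annual_medians), 3))
    print(round(sen_slope(annual_medians), 3))
```

A negative S and a negative slope indicate a decreasing trend. Significance would then be judged by comparing |Z| with the normal critical value for the chosen level (1.96 for a two-sided test at 0.05).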

Results

Precipitation and river hydrological conditions

Annual precipitation ranged from 960 to 1872 mm with an average of 1450 mm during the 1978–1988 period, and fluctuated from 1004 to 1724 mm with an average of 1410 mm during the 1994–2008 period (Fig. 3 above). In general, the interannual variation in precipitation during these two periods seemed to be very similar (p = 0.69). Long-term monthly average precipitation had similar trends for both periods, which decreased from early spring to late summer and then increased in subsequent months until the end of a year (Fig. 3 below). Long-term monthly average precipitation fluctuated from 83 to 173 mm and from 84 to 147 mm during 1978–1988 and 1994–2008, respectively. Precipitation was relatively lower in May and from October to December during 1994–2008 compared to 1978–1988 (Fig. 3 below). However, no significant difference was found between the two periods for monthly precipitation (p = 0.52).

During the period from 1978 to 1988, annual discharge of the Little River averaged 67 m3 s−1, ranging between 0.27 and 3002 m3 s−1. From 1994 to 2008, the river had an average annual discharge of 61 m3 s−1, varying from 0.19 to 2144 m3 s−1. Overall, the variation of discharge seemed to have decreased after the full implementation of forestry BMPs, especially in the 2000s (Fig. 4(a)). Seasonally, the Little River had high flows in the winter months with its highest in February, and low flows in the summer months with the lowest in August for both periods (Fig. 4(b)). Long-term monthly average discharge varied from 3 to 141 m3 s−1 during 1978–1988 and from 2 to 149 m3 s−1 during 1994–2008 (Fig. 4(b)). Similar to the long-term seasonal precipitation, a decrease in average monthly discharge could be found in May and during October–December in 1994–2008 compared to 1978–1988. However, no significant difference was found in the long-term annual discharge (p = 0.66) and in the monthly average discharge (p = 0.75) between the two periods.

Long-term annual concentrations and loads

After controlling for discharge, average long-term annual TSS concentration in the river significantly decreased from 34 to 25 mg L−1 after the wide implementation of BMPs (p = 0.038, Table 2). In general, measured TSS concentrations fluctuated from 2 to 122 mg L−1 during 1978–1988 and from 2 to 158 mg L−1 during 1994–2008. Specifically, annual TSS concentrations varied widely during the pre-BMP period and seemed to be more stable during the 1990s. However, the variation of annual TSS concentrations seemed to increase again in the 2000s (Fig. 5(a)). Annual TSS loads ranged from 8690 to 109,000 metric tons (t) with a mean of 55,000 t in the pre-BMP period and from 18,000 to 65,000 t, averaging 36,700 t, in the post-BMP period. The variations of TSS loads were similar to those of discharge (Figs. 4(a) and 6(a)) with relatively less fluctuation during 1994–2008. There was a significant difference before and after the use of BMPs in terms of annual TSS loads while controlling for precipitation (p = 0.024, Table 3).

Fig. 6 Long-term annual loads of (a) total suspended solids, (b) total Kjeldahl nitrogen, (c) nitrate and nitrite nitrogen, and (d) total phosphorus of the Little River during 1978–2008. Lines represent simulations estimated through Eq. (1), and dots are field measurements.

Unlike TSS, annual TKN concentrations fluctuated throughout both the pre-regulation and post-regulation periods with less variability in 2006–2008 (Fig. 5(b)). TKN concentrations averaged 0.97 mg L−1, ranging from 0.27 to 3.59 mg L−1, before the implementation of BMPs, and averaged 1.04 mg L−1, varying from 0.02 to 2.32 mg L−1, during 1994–2008. There was no significant difference found in TKN concentration after controlling for discharge between these two periods (p = 0.68, Table 2). Long-term TKN loads corresponded closely with discharge (Figs. 4(a) and 6(b)). Large variation can be found in annual TKN loads: from 292 to 3540 t year−1 during 1978–1988 (averaging 1790 t) and from 751 to 2660 t year−1 during 1994–2008 (averaging 1600 t). After controlling for precipitation, there was also no significant difference between these two periods in TKN loads (p = 0.88, Table 3).

Long-term NO3NO2–N concentrations showed less variation in annual concentrations with few exceptions (Fig. 5(c)). In the pre-BMP period, measured NO3NO2–N concentrations averaged 0.12 mg L−1, fluctuating from 0.01 to 1.02 mg L−1. Similarly, from 1994 onward, NO3NO2–N concentrations varied from 0.02 to 1.08 mg L−1, averaging 0.12 mg L−1. Annual loads of NO3NO2–N averaged 176 t (from 25 to 358 t) and 158 t (from 74 to 262 t) during 1978–1988 and 1994–2008, respectively. The trend of NO3NO2–N loads closely corresponded to annual discharge as well (Figs. 4(a) and 6(c)). No statistically significant differences between these two periods were found in loads while controlling for precipitation (p = 0.94, Table 3) or in annual concentration while controlling for discharge (p = 0.57, Table 2).

Similar to NO3NO2–N, except for a few measurements, long-term annual TP concentrations had relatively small variations for both 1978–1988 and 1994–2008 (Fig. 5(d)). Average annual TP concentrations ranged from 0.02 to 0.90 mg L−1 before 1988 and from 0.01 to 1.03 mg L−1 since 1994 in the studied period. After controlling for discharge, no significant difference in annual TP concentration (p = 0.50, Table 2) was found between the pre-BMP (averaging 0.12 mg L−1) and post-BMP periods (averaging 0.11 mg L−1). Like other nutrients, TP loads had a similar trend to the discharge (Figs. 4(a) and 6(d)), and there was a significant difference between 1978–1988 and 1994–2008 after controlling for precipitation (p = 0.024, Table 3). Annual loads of TP varied from 31 to 280 t, averaging 152 t, in the pre-BMP period, while post-BMP period loads ranged from 90 to 320 t, averaging 192 t.

After controlling for precipitation, the TN/TP ratio decreased significantly from 28 to 21 after the wide implementation of forestry BMPs (p < 0.01, Table 3). Also, interannual TN/TP ratios were much less variable during 1994–2008.

Long-term seasonal concentrations and loads

Average monthly TSS concentrations ranged from 23 to 43 mg L−1 and 12 to 56 mg L−1 for 1978–1988 and 1994–2008, respectively. The seasonal trends of long-term monthly TSS concentrations for the two periods were similar: both decreased from highs in February to lows in early summer, increased to relatively high levels in fall, and then decreased in December and remained low until the next peak (Fig. 7(a)). However, it was also obvious that the seasonal variation was greater after extensive BMP implementation. Nine out of 12 months showed an equal or decreased long-term monthly TSS concentration during 1994–2008 compared to the pre-BMP period (Fig. 7(a)). Specifically, average monthly TSS concentrations were significantly lower from March to June (p = 0.01, Table 4) and from September to December (p < 0.01, Table 4) after controlling for discharge. However, no year-round significant difference was found between the two periods in long-term seasonal trends of TSS concentration (p = 0.14).

Average monthly TKN concentrations changed from 0.71 to 1.28 mg L−1 and from 0.73 to 1.40 mg L−1 before and after extensive implementation of BMPs, respectively. Like TSS, fluctuations of seasonal trends for the two periods were similar, during both of which TKN increased in spring, went down in early summer, went up again in the fall, and decreased in winter (Fig. 7(b)). Five out of 12 months had an equal or lower measurement of TKN concentration. After controlling for discharge, there was no statistical difference between the pre-regulation and post-regulation periods in terms of long-term monthly TKN concentration (p = 0.41).

Average monthly NO3NO2–N concentrations fluctuated from 0.07 to 0.21 mg L−1 before 1988 and from 0.06 to 0.29 mg L−1 since 1994. Seasonal trends for long-term monthly NO3NO2–N concentration were also similar for the two studied periods (Fig. 7(c)). For 8 months of the year, long-term monthly NO3NO2–N concentrations were lower after BMPs. However, no significant difference was observed in long-term monthly NO3NO2–N concentrations while controlling for discharge between the two periods (p = 0.76).

In 1978–1988, average monthly TP concentrations varied from 0.07 to 0.19 mg L−1 while in 1994–2008 they varied from 0.08 to 0.24 mg L−1. In 1978–1988, long-term monthly TP concentrations seemed to be lower in winter months and higher in summer months. In comparison, long-term average TP concentrations during 1994–2008 did not show a clear seasonal trend besides a pulse in November (Fig. 7(d)). After the extensive implementation of BMPs, lower long-term monthly TP concentrations were observed 9 months out of the year. Specifically, average monthly TP concentrations in 1994–2008 were significantly lower from May to October after controlling for discharge (p < 0.01, Table 4). However, there was no significant difference between pre-BMP and post-BMP periods for long-term year-round TP concentrations (p = 0.31).

Long-term monthly loads of TSS, TKN, NO3NO2–N, and TP in the Little River showed a seasonal trend closely correlated to river discharge (Figs. 4(b) and 8). From 1978 to 1988, average amounts of monthly TSS, TKN, NO3NO2–N, and TP from the Little River to Catahoula Lake ranged from 244 to 9240 t, from 8 to 301 t, from 1 to 30 t, and from 1 to 25 t, respectively. In comparison, from 1994 to 2008, the mean amounts of monthly TSS, TKN, NO3NO2–N, and TP entering Catahoula Lake varied from 101 to 8050 t, from 5 to 326 t, from 0.5 to 32 t, and from 1 to 40 t, respectively. In general, after extensive implementation of forestry BMPs, long-term monthly loads seemed to be lower for TSS, higher for TP, and relatively the same for nitrogen, which was most evident during months with greater discharge in winter and spring (Fig. 8). No significant difference was found for long-term seasonal loads of TSS, TKN, NO3NO2–N, and TP after controlling for precipitation (p = 0.65, 0.73, 0.74, and 0.14, respectively).

Streamflow frequency and magnitude

Flow duration curves (FDCs) of the daily discharge generated from the two studied periods were very similar (Fig. 2). Based on FDCs, daily discharge that was greater than 195 and 196 m3 s−1 could be considered as “high flows” for 1978–1988 and 1994–2008, respectively (Fig. 2). During “high flow” conditions, average daily loads of TSS decreased from 877 to 702 t after the extensive implementation of forestry BMPs, while controlling for precipitation (p < 0.01, Table 5). In comparison, no significant differences were found for average daily TKN (p = 0.94, Table 5) and NO3NO2–N (p = 0.37, Table 5) loads during 1994–2008. Contrary to TSS, significant increases were found for average daily TP loads since 1994 after controlling for precipitation (from 2.2 t in 1978–1988 to 3.4 t in 1994–2008, p < 0.01, Table 5).

Trend analysis of annual median concentrations

For TSS, TKN, NO3NO2–N, and TP concentrations, Sen’s slope estimates (Q) were −1.7, 0.023, 0.001, and −0.005 for the 1978–1988 period, and were −0.838, 0.020, 0.004, and 0.001 during the 1994–2008 period (Table 6). A significant decreasing trend was detected only in annual median concentrations of TSS in the post-regulation period (1994–2008, p < 0.05, Table 6), while a marginally significant increasing trend was detected for annual median concentrations of TKN in the pre-regulation period (0.05 < p < 0.1, Table 6). No other significant trend was found based on the Mann–Kendall model. It is also worth noting that TP changed its annual trend from a decreasing direction during 1978–1988 to an increasing direction during 1994–2008, although neither trend was statistically significant. These results were in agreement with ANCOVA results calculated from annual average concentrations.

Discussion

BMP effectiveness on sediment runoff

The TSS reduction found in this study from 1994 to 2008 demonstrates that long-term BMPs have the capacity to mitigate impacts of forest operations on water quality regarding surface erosion at a river basin scale. This fills the knowledge gap in the verification of the overall effects of forest activities on water quality in terms of sediment transport. Previous studies have frequently cited forest road activities as one of the major contributors to surface soil runoff on forestlands, and most of these investigations focused on soil erosion (Grace 2005). In the Coastal Plain region, erosion rate studies have been conducted extensively as well (Beasley and Granillo 1988; Blackburn et al. 1986; Grace 2002). However, few of them have related observed erosion rates to the quantity of sediment delivered to water systems or to water quality. Sediment delivery to forest streams does not necessarily mirror erosion losses observed upslope since downslope sediment trapping characteristics are also influential (Grace 2005). Also, even though forested SMZs are considered effective in trapping sediment and reducing stream TSS concentrations (Cristan et al. 2016), very little effort has been made to assess the frequency of SMZ breakthroughs for the Coastal Plain region. In this context, this study provides direct evidence that BMPs have favorable impacts on the water that drains forests in terms of reducing sediment runoff, and those positive effects are especially pronounced during “high flow” conditions.

Deciphering the relative importance of implemented forest operations leading to the reduction in sediment runoff is difficult due to the complex interactions of land use, landform, and disturbance. In general, BMP implementation was very high for harvesting operations (96.4%) and site preparation treatments (95.8%) (Kaller 2009), which may play a bigger role in surface erosion control since previous studies in the same region have reported significant increases in sediment concentrations in the water draining from areas affected by those treatments without BMP implementation (Beasley 1979). In contrast, more attention may be needed for forest road construction and maintenance in the studied area, which has been recognized as one of the primary risk areas in relation to sediment loss from forest management activities. According to the Louisiana Department of Agriculture and Forestry, road construction within SMZs had significantly lower BMP implementation (50%) than any other SMZ activity (Kaller 2009). In addition, due to the flat watershed topography in Louisiana and the resulting sluggish stream flow, the effectiveness of BMP implementation may be aided since sediments could be stored within the stream channel through deposition.

Measured TSS concentrations in the Little River Basin are well within the range of other published data in the Coastal Plain region (Beasley and Granillo 1988; Keim and Schoenholtz 1999; Riekerk 1982, 1983; Williams and Askew 1988), and the result of this study is consistent with other publications on the long-term performance of BMPs for sediment reduction in other regions. Reiter et al. (2009) assessed long-term data on stream discharge, suspended sediment, and turbidity to determine the impacts of sediment control practices (BMPs) on water quality over 30 years in the Pacific Northwest (western Washington). The authors found that turbidity declined at all monitoring sites in their study. When evaluated for the entire watershed, turbidity levels still declined, even with continued active forest management. They concluded that the decline was directly related to reduced sediment production from roads and to minimizing the amount of road runoff reaching stream channels (Reiter et al. 2009). In another 15-year paired watershed study on the effects of BMPs on controlling nonpoint source pollution in the Ridge and Valley of central Pennsylvania, Lynch and Corbett (1990) found significant, though relatively small, increases in turbidity 2 years post-harvest. They concluded that BMPs were effective because no serious alterations of water quality occurred.

BMP effectiveness on nutrient runoff

Phosphorus

BMPs do not seem to have any discernible long-term effect on phosphorus concentrations at a basin scale in this study. Phosphorus, especially phosphate, is of concern because elevated concentrations can result in eutrophication of freshwater lakes. A phosphate concentration standard of 0.5 mg L−1 has been established to protect freshwater lakes (MacDonald et al. 1991). Changes in phosphorus levels in forest streams are usually associated with the transport of soil particles carried by overland flow (Barling and Moore 1994; Gravelle et al. 2009), and harvesting (Grace 2005) and site preparation (Johnson et al. 1986) are often cited as having the potential to increase phosphorus concentrations. In this study, long-term BMP implementation seemed to be very effective in trapping sediments, especially during “high flow” conditions. However, similar positive effects could not be found for TP. The TN/TP ratio showed a significant decrease during 1994–2008, indicating that external phosphorus sources may have been present after the wide implementation of forestry BMPs. Therefore, the effects of forestry BMPs on phosphorus control may be offset by other factors such as fertilization.

Fertilization practices in the Southern USA have been changing, with an increasing area of pine plantations fertilized annually since 1991 (Fox et al. 2004; Fox et al. 2007). In the lower Coastal Plain and Gulf Coast, poorly drained, clayey Ultisols tend to be severely phosphorus deficient (Fisher and Garbett 1980). Along the Gulf Coast, well-drained, clayey to loamy soils on the Citronelle and associated geological formations have also been found to be phosphorus deficient (Allen and Lein 1998). Therefore, forest fertilization is usually included in silvicultural regimes to increase plantation growth (Allen 1987). In the South, from 1978 to 1991, fertilized forested land increased around 80 ha year−1, while beginning in 1991, the area of fertilized forestland doubled approximately every 2 years through 1999, when fertilization peaked at 640,000 ha. Since 1999, fertilized forested land has averaged 570,000 ha year−1 (Albaugh et al. 2007). As a result, before 1990, less than 80,000 ha of southern pine plantations were fertilized, whereas over 500,000 ha were fertilized in 2004 (Forest Nutrition Cooperative 2005). During this period, fertilizer applications were mainly phosphorus, which was applied to at least 80% of the fertilized forestland in all but 1 year. These applications were intended to address insufficient phosphorus availability in soils for tree demand throughout the rotation and phosphorus deficiencies that develop soon after seedlings are planted (Albaugh et al. 2007; Fox et al. 2007; Pritchett and Comerford 1982). Specifically, from 2000 to 2004, at least 80% of the fertilized area in the South was in the Coastal Plain region, with an average of 237,000 t fertilizer year−1 applied to forests (Albaugh et al. 2007).

The effect of phosphorus fertilization would be most apparent in phosphorus exports during large or intense storm events. Results of this study show that during “high flow” conditions, TP loadings increased significantly, by 55%, in the post-BMP implementation era (Table 5). This may be because changes in TP concentrations and loads in forest waters are usually determined by the rapid addition of phosphorus during fertilization and by the slow movement of phosphorus into the stream over time from soils near the stream. Since flow duration curves for the two periods are similar, it is very likely that TP concentrations were higher during “high flow” conditions after the increased use of phosphorus fertilizer. This may indicate that although forestry BMPs were significantly effective in TSS reduction, in large or intense storm events the added phosphorus from fertilizer overwhelmed the effects of BMPs, leading to an increase in the TP concentration in the river. By contrast, in drier conditions, the significant effect of BMPs in trapping sediments may dominate, which could greatly reduce the movement of phosphorus and decrease the TP concentration in forest waters. As a result, TP loadings in 1994–2008 could increase while the average TP concentrations for the two periods remained generally the same (Tables 2 and 3), since phosphorus exports in large or intense storm events could account for the majority of the total TP mass transport. This could also explain why average TP concentrations were lower from May to October (Fig. 7(d)) and why monthly average TP loads were much greater from January to April in 1994–2008 (Fig. 8(d)).
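
The reasoning above, that loads can rise while average concentrations stay about the same, can be illustrated with a small Python sketch. It is not the load-estimation method used in this study. It simply treats load as concentration times discharge summed over two flow regimes, and every number in it (days, discharges, concentrations) is hypothetical and chosen only for illustration.

```python
SECONDS_PER_DAY = 86_400


def load_tonnes(regimes):
    """Total load in tonnes from (days, flow in m3/s, concentration in mg/L) tuples.

    1 mg/L equals 1 g/m3, so days * seconds * m3/s * g/m3 gives grams.
    """
    grams = sum(days * SECONDS_PER_DAY * q * c for days, q, c in regimes)
    return grams / 1e6  # grams to tonnes


def time_weighted_mean_conc(regimes):
    """Mean concentration weighted by the number of days in each flow regime."""
    total_days = sum(days for days, _, _ in regimes)
    return sum(days * c for days, _, c in regimes) / total_days


# Hypothetical regimes: (days per year, discharge m3/s, TP concentration mg/L).
# Both periods share the same flows, mirroring similar flow duration curves.
pre_period = [(73, 200.0, 0.08), (292, 30.0, 0.08)]   # uniform concentration
post_period = [(73, 200.0, 0.12), (292, 30.0, 0.07)]  # higher at high flow, lower at low flow

for name, regimes in (("pre", pre_period), ("post", post_period)):
    print(
        f"{name}: mean TP = {time_weighted_mean_conc(regimes):.3f} mg/L, "
        f"load = {load_tonnes(regimes):.1f} t/year"
    )
```

In this toy case both periods have the same time-weighted mean concentration, yet the second period exports more phosphorus because the higher concentration coincides with the high discharge that carries most of the mass.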

Nitrogen

Study findings indicate that the long-term effectiveness of BMPs in nitrogen reduction is also limited at the basin scale. Previous studies have reported that harvesting and site preparation typically lead to increased water yields, soil moisture, and solar radiation on the soil surface due to the removal of forest vegetation. As a result, nitrogen concentrations, especially nitrate and nitrite, in forest waters may increase after timber harvest because of decreased plant uptake, increased flushing by shallow flow pathways, and increased soil temperatures that increase mineralization and nitrification rates (Martin et al. 1984; Marchman et al. 2015). Those conclusions are mostly derived from short-term studies and may not be valid over the long term. Although some forestry studies have shown short-term increases in stream nitrogen levels after harvesting (e.g., Likens et al. 1970; Pardo et al. 1995), many other studies have noted that nitrogen exports returned to pre-harvest levels a few years after harvesting because of uptake by re-growing vegetation while soil nitrification returned to pre-disturbance rates (Edwards and Williard 2010). The efficiency of nitrogen self-regulating mechanisms in forests over longer periods of time may explain why no change was found in nitrogen concentrations over the 30-year span of this basin-scale study.

In addition, forestry BMPs are not designed to control nitrogen transport through all mechanisms. Nitrogen, mainly nitrate and nitrite, is transported primarily through leaching of soluble species. Much of the TKN is also transported as dissolved organic nitrogen. Forestry BMPs are designed to control nitrogen transport mainly by minimizing the incidence of Horton overland flow moving from harvesting areas to streams, and SMZs are often considered one of the most functional components of BMPs, retaining nitrogen and promoting denitrification (Lowrance et al. 2000). However, most forestry BMPs do not have the capacity to affect subsurface processes, except by encouraging infiltration of surface flows to the extent possible. Therefore, dissolved nutrients such as nitrate, which commonly travel by subsurface pathways, cannot be substantially influenced by BMPs. This is supported in this study by similar amounts of nitrogen mass transport during “high flow” conditions in the two study periods (Table 5). Based on the discussion above, limited long-term effectiveness of forestry BMPs on nitrogen control may be expected at the basin scale.

Comparisons with other studies in the Southern/Southeastern Coastal Plain region

The nitrogen and phosphorus levels observed in this study are within the range of those reported in other publications for forest streams in the Southern/Southeastern Coastal Plain region. For instance, in a study of decadal nutrient inputs of coastal rivers, He and Xu (2015) reported similar ranges of annual concentrations for TKN, NO3NO2–N, and TP over the past 30 years in the Sabine River (0.52–1.49, 0.04–0.10, and 0.04–0.11 mg L−1, respectively) and the Calcasieu River (0.42–1.22, 0.07–0.24, and 0.06–0.16 mg L−1, respectively), whose river basins are forest-pasture dominated (54 and 51%, respectively) in coastal Louisiana. In another study focusing on the effects of forest harvesting and BMPs on nutrient concentrations in the Upper Coastal Plain, the authors observed median concentrations ranging from 0.01 to 0.05 mg L−1 for TP and from 0.44 to 2.89 mg L−1 for total nitrogen (TN) during the pre-harvest period, and from 0.008 to 0.03 mg L−1 for TP and from 0.41 to 2.99 mg L−1 for TN in the post-harvest period, across all their monitoring sites in small headwater streams in Southwest Georgia (Marchman et al. 2015).

Reported effectiveness of forestry BMPs in adjusting phosphorus and nitrogen levels in water draining from forested watersheds is variable for short-term and/or plot-scale cases in the Southern Coastal Plain region (Edwards and Williard 2010; Marchman et al. 2015; Wynn et al. 2000). In comparison, very few studies have reported the long-term effectiveness of forestry BMPs on nutrient runoff at the basin scale (Cristan et al. 2016). Taking the discussion above collectively, we suggest that more studies be undertaken to test the long-term effectiveness of forestry BMPs on nutrient runoff at a large watershed scale.

Strengths and limitations of long-term watershed-scale BMP evaluation

In plot-scale/short-term studies, the effectiveness of forestry BMPs may be overestimated or underestimated depending on the flow conditions that occur during monitoring. For example, most suspended sediment transport occurs during large or intense storm conditions (Beasley 1979), which occur infrequently and randomly. Therefore, even if sediment is delivered to a channel and remains relatively available, it may not be flushed from the watershed during the period of stream water monitoring. This would result in an overestimate of BMP efficiency because the temporary storage would be interpreted as better BMP effectiveness than actually occurred. In contrast, long-term watershed-scale studies could largely overcome this type of shortcoming and better represent the collective effectiveness of forestry BMPs.

However, large watersheds tend to have myriad sinks that may delay or attenuate the solute signal from the land. For instance, some eroded sediment originating from management activities may be stored on the hillside or in the channel. If the area of storage is a riparian buffer and the storage is permanent, then attributing the reduction in constituent delivery to BMP effectiveness is fully appropriate. If the storage is not in a riparian buffer or is not permanent, substantial amounts of sediment delivered to a stream channel can be stored for decades and perhaps longer before being flushed from the watershed (Trimble 1981), leading to BMP efficiencies being incorrectly evaluated in some situations. Additionally, forestry equipment and approaches may change over a long period of time, and some of these changes are hard to track and include in the statistical analysis. Therefore, it should be noted that limitations exist in evaluating long-term BMP effectiveness in a large watershed.

Conclusions

This study assessed long-term trends of total suspended solids, nitrate/nitrite nitrogen, total Kjeldahl nitrogen, and total phosphorus concentrations and loads in an intensively managed forested watershed in the Southern USA. The goal of the study was to examine the effectiveness of forestry BMPs in protecting water quality at a basin scale and over the long term. Based on the results, we conclude that forestry BMPs are effective in reducing sediment runoff from the intensively managed forested watershed. Although stream sediment loading was strongly reduced following BMP implementation, TP loading at the basin outlet increased by 27%, which was probably caused by a drastic increase in the application of phosphorus fertilizer after 1991. Stream nitrogen concentrations and loading in the post-BMP implementation era showed little change compared to those in the pre-BMP implementation period. The results suggest that current BMP guidelines for fertilization and nutrient management need to be reviewed and improved. This study filled a knowledge gap in the long-term effectiveness of forestry BMPs at a basin scale in the Southern Coastal Plain region of the USA. Since forestry BMPs are region specific, we suggest that future studies be conducted in other regions at a large watershed scale.

References

Albaugh, T. J., Allen, H. L., & Fox, T. R. (2007). Historical patterns of forest fertilization in the southeastern United States from 1969 to 2004 (article). Southern Journal of Applied Forestry, 31(3), 129–137.

Allen, H. L. (1987). Forest fertilizers (article). Journal of Forestry, 85(2), 37–46.

Allen, H. L., & Lein, S. (1998). Effects of site preparation, early fertilization, and weed control on 14-year old loblolly pine. Proceedings - Southern Weed Science Society, 51, 104–110.

Anderson, C. J., & Lockaby, B. G. (2011). The effectiveness of forestry best management practices for sediment control in the southeastern United States: a literature review (review). Southern Journal of Applied Forestry, 35(4), 170–177.

Appelboom, T. W., Chescheir, G. M., Skaggs, R. W., & Hesterberg, D. L. (2002). Management practices for sediment reduction from forest roads in the coastal plains (article). Transactions of the ASAE, 45(2), 337–344.

Arthur, M. A., Coltharp, G. B., & Brown, D. L. (1998). Effects of best management practices on forest streamwater quality in eastern Kentucky. Journal of the American Water Resources Association, 34(3), 481–495. https://doi.org/10.1111/j.1752-1688.1998.tb00948.x

Aust, W. M., & Blinn, C. R. (2004). Forestry best management practices for timber harvesting and site preparation in the eastern United States: an overview of water quality and productivity research during the past 20 years (1982–2002). Water, Air, & Soil Pollution, 4(1), 5–36. https://doi.org/10.1023/B:WAFO.0000012828.33069.f6

Barling, R. D., & Moore, I. D. (1994). Role of buffer strips in management of waterway pollution: a review (review). Environmental Management, 18(4), 543–558. https://doi.org/10.1007/bf02400858

Beasley, R. S. (1979). Intensive site preparation and sediment losses on steep watersheds in the Gulf Coastal Plain (article). Soil Science Society of America Journal, 43(2), 412–417. https://doi.org/10.2136/sssaj1979.03615995004300020036x

Beasley, R. S., & Granillo, A. B. (1988). Sediment and water yields from managed forests on flat coastal plain sites (Article). Water Resources Bulletin, 24(2), 361–366. https://doi.org/10.1111/j.1752-1688.1988.tb02994.x

Bennett, E. M., Carpenter, S. R., & Caraco, N. F. (2001). Human impact on erodable phosphorus and eutrophication: a global perspective (article). Bioscience, 51(3), 227–234. https://doi.org/10.1641/0006-3568(2001)051[0227:hioepa]2.0.co;2

Binkley, D., & Brown, T. C. (1993). Forest practices as nonpoint sources of pollution in North America (Article). Water Resources Bulletin, 29(5), 729–740. https://doi.org/10.1111/j.1752-1688.1993.tb03233.x

Blackburn, W. H., Wood, J. C., & Dehaven, M. G. (1986). Storm flow and sediment losses from site-prepared forestland in east Texas (article). Water Resources Research, 22(5), 776–784. https://doi.org/10.1029/WR022i005p00776

Bourland, T. (1988). Recommended forestry best management practices for Louisiana. Louisiana Department of Agriculture and Forestry. 15 p.

Brown, K. R. (2010). Effectiveness of forestry best management practices in minimizing harvesting impacts on streamflow and sediment loading in low-gradient headwaters of the Gulf Coastal Plain. M.S. thesis, Louisiana State University, Baton Rouge, Louisiana, U.S.A.

Brown, K. R., Aust, W. M., & McGuire, K. J. (2013). Sediment delivery from bare and graveled forest road stream crossing approaches in the Virginia Piedmont (article). Forest Ecology and Management, 310, 836–846. https://doi.org/10.1016/j.foreco.2013.09.031

Carpenter, S. R., Caraco, N. F., Correll, D. L., Howarth, R. W., Sharpley, A. N., & Smith, V. H. (1998). Nonpoint pollution of surface waters with phosphorus and nitrogen (article). Ecological Applications, 8(3), 559–568. https://doi.org/10.2307/2641247

Cristan, R., Aust, W. M., Bolding, M. C., Barrett, S. M., Munsell, J. F., & Schilling, E. (2016). Effectiveness of forestry best management practices in the United States: literature review (review). Forest Ecology and Management, 360, 133–151. https://doi.org/10.1016/j.foreco.2015.10.025

Dahlgren, R. (1998). Effects of forest harvest on stream-water quality and nitrogen cycling in the Caspar Creek watershed. In: Proceedings of the Conference on Coastal Watersheds: the Caspar Creek Story. USDA Forest Service Gen. Tech. Rep. PSW-GTR-168, pp. 45–53.

DaSilva, A., Xu, Y. J., Ice, G., Beebe, J., & Stich, R. (2013). Effects of timber harvesting with best management practices on ecosystem metabolism of a low gradient stream on the United States Gulf Coastal Plain (article). Water, 5(2), 747–766. https://doi.org/10.3390/w5020747

Duan, N. (1983). Smearing estimate: a nonparametric retransformation method. Journal of the American Statistical Association, 78(383), 605–610. https://doi.org/10.1080/01621459.1983.10478017

Edwards, P. J., & Williard, K. W. J. (2010). Efficiencies of forestry best management practices for reducing sediment and nutrient losses in the Eastern United States (article). Journal of Forestry, 108(5), 245–249.

Fisher, R. F., & Garbett, W. S. (1980). Response of semi-mature slash and loblolly-pine plantations to fertilization with nitrogen and phosphorus (article). Soil Science Society of America Journal, 44(4), 850–854. https://doi.org/10.2136/sssaj1980.03615995004400040039x

Foley, J. A., DeFries, R., Asner, G. P., Barford, C., Bonan, G., Carpenter, S. R., et al. (2005). Global consequences of land use (review). Science, 309(5734), 570–574. https://doi.org/10.1126/science.1111772.

Forest Nutrition Cooperative. (2005). Summary of operational fertilization in the southeastern United States: 2004 update. FNC Res. Note 21, Forest Nutrition Cooperative, North Carolina State Univ., Raleigh, NC, and Virginia Polytechnic Institute and State Univ., Blacksburg, VA. 7 p.

Fox, T. R., Jokela, E., & Allen, H. L. (2004). The evolution of pine plantations in the southern United States, P. 63–82 in Southern forest science: Past, present, future. USDA For. Serv. Gen. Tech. Rep. SRS-75. 394 p.

Fox, T. R., Allen, H. L., Albaugh, T. J., Rubilar, R., & Carlson, C. A. (2007). Tree nutrition and forest fertilization of pine plantations in the southern United States (article). Southern Journal of Applied Forestry, 31(1), 5–11.

Gaydos, M. W., Rogers, J. E., & Smith, R. P. (1973). Water resources of the Little River basin (pp. 1–57). Louisiana: U.S. Government Printing Office.

Giri, S., & Qiu, Z. Y. (2016). Understanding the relationship of land uses and water quality in twenty first century: a review (review). Journal of Environmental Management, 173, 41–48. https://doi.org/10.1016/j.jenvman.2016.02.029

Grace, J. M. (2002). Effectiveness of vegetation in erosion control from forest road sideslopes (article). Transactions of the ASAE, 45(3), 681–685.

Grace, J. M. (2005). Forest operations and water quality in the south (article; proceedings paper). Transactions of the ASAE, 48(2), 871–880. https://doi.org/10.13031/2013.18295

Gravelle, J. A., Ice, G., Link, T. E., & Cook, D. L. (2009). Nutrient concentration dynamics in an inland Pacific Northwest watershed before and after timber harvest (article). Forest Ecology and Management, 257(8), 1663–1675. https://doi.org/10.1016/j.foreco.2009.01.017

Gray, A. B., Pasternack, G. B., Watson, E. B., Warrick, J. A., & Goni, M. A. (2015). Effects of antecedent hydrologic conditions, time dependence, and climate cycles on the suspended sediment load of the Salinas River, California. Journal of Hydrology, 525, 632–649. https://doi.org/10.1016/j.jhydrol.2015.04.025

He, S., & Xu, Y. J. (2015). Three decadal inputs of nitrogen and phosphorus from four major coastal rivers to the summer hypoxic zone of the Northern Gulf of Mexico. Water Air and Soil Pollution, 226(9). https://doi.org/10.1007/s11270-015-2580-6

Hewlett, J. D., Post, H. E., & Doss, R. (1984). Effect of clear-cut silviculture on dissolved ion export and water yield in the Piedmont (article). Water Resources Research, 20(7), 1030–1038. https://doi.org/10.1029/WR020i007p01030

Hotta, N., Kayama, T., & Suzuki, M. (2007). Analysis of suspended sediment yields after low impact forest harvesting (article). Hydrological Processes, 21(26), 3565–3575. https://doi.org/10.1002/hyp.6583

Ice, G., & Binkley, D. (2003). Forest streamwater concentrations of nitrogen and phosphorus: a comparison with EPA’s proposed water quality criteria (article). Journal of Forestry, 101(1), 21–28.

Ice, G., & Sugden, B. (2003). Summer dissolved oxygen concentrations in forested streams of northern Louisiana (article). Southern Journal of Applied Forestry, 27(2), 92–99.

Ice, G. G., Stuart, G. W., Waide, J. B., Irland, L. C., & Ellefson, P. V. (1997). 25 years of the clean water act: how clean are forest practices (vol 95, pg 9, 1997) (correction, addition). Journal of Forestry, 95(11), 2–2.

Johnson, D. W., Cole, D. W., Vanmiegroet, H., & Horng, F. W. (1986). Factors affecting anion movement and retention in 4 forest soils (article). Soil Science Society of America Journal, 50(3), 776–783. https://doi.org/10.2136/sssaj1986.03615995005000030042x

Joshi, S., & Xu, Y. J. (2015). Assessment of suspended sand availability under different flow conditions of the lowermost Mississippi River at Tarbert Landing during 1973-2013 (article). Water, 7(12), 7022–7044. https://doi.org/10.3390/w7126672

Kaller, M. D. (2009). Forestry best management practice. http://www.ldaf.state.la.us/wp-content/uploads/2014/04/BMPsummary2009.pdf. Accessed 2 September 2016.

Keim, R. F., & Schoenholtz, S. H. (1999). Functions and effectiveness of silvicultural streamside management zones in loessial bluff forests (article). Forest Ecology and Management, 118(1–3), 197–209. https://doi.org/10.1016/s0378-1127(98)00499-x

Kendall, M. G. (1975). Rank correlation methods (4th ed., pp. 202–231). London: Charles Griffin.

Khaleghi, M. R., Varvani, J., Kamyar, M., Gholami, V., Ghaderi, M. (2015). An evaluation of bias correction factors in sediment rating curves: a case study of hydrometric stations in Kalshor and Kashafroud watershed, Khorasan Razavi Province, Iran. International Bulletin of Water Resources and Development. I–X.

Kreutzweiser, D. P., Capell, S. S., & Good, K. P. (2005). Effects of fine sediment inputs from a logging road on stream insect communities: a large-scale experimental approach in a Canadian headwater stream (article). Aquatic Ecology, 39(1), 55–66. https://doi.org/10.1007/s10452-004-5066-y

Lang, A. J., Aust, W. M., Bolding, M. C., Barrett, S. M., McGuire, K. J., & Lakel, W. A. (2015). Streamside management zones compromised by stream crossings, legacy gullies, and over-harvest in the Piedmont (Article). Journal of the American Water Resources Association, 51(4), 1153–1164. https://doi.org/10.1111/jawr.12292

LDAF and LDEQ. (2000). Recommended forestry best management practices for Louisiana. http://www.ldaf.state.la.us/wp-content/uploads/2014/04/BMP.pdf. Accessed 2 September 2016.

LDEQ. (2002). 2002 water quality inventory report. http://www.deq.louisiana.gov/portal/tabid/2200/Default.aspx. Accessed 2 September 2016.

LDEQ. (2014). Quality assurance project plan for the ambient water quality monitoring network. http://www.deq.louisiana.gov/portal/Portals/0/planning/QAPPs/QAPP_1004_r08%202014%20FINAL%2010-14-14.pdf. Accessed 2 September 2016.

Likens, G. E., Bormann, F. H., Johnson, N. M., Fisher, D. W., & Pierce, R. S. (1970). Effects of Forest cutting and herbicide treatment on nutrient budgets in Hubbard Brook Watershed Ecosystem (article). Ecological Monographs, 40(1), 23–47. https://doi.org/10.2307/1942440

Lowrance, R., Hubbard, R. K., & Williams, R. G. (2000). Effects of a managed three zone riparian buffer system on shallow groundwater quality in the Southeastern Coastal Plain (article). Journal of Soil and Water Conservation, 55(2), 212–220.

Lynch, J. A., & Corbett, E. S. (1990). Evaluation of best management practices for controlling nonpoint pollution from silvicultural operations (Article). Water Resources Bulletin, 26(1), 41–52. https://doi.org/10.1111/j.1752-1688.1990.tb01349.x

MacDonald, L. H., Smart, A. W., & Wissmar, R. C. (1991). Monitoring guidelines to evaluate effects of forestry activities on streams in the Pacific Northwest and Alaska. US Environmental Protection Agency report EPA 910/9-91-001.

Mann, H. B. (1945). Non-parametric test against trend (article). Econometrica, 13(3), 245–259. https://doi.org/10.2307/1907187.

Marchman, S. C., Miwa, M., Summer, W. B., Terrell, S., Jones, D. G., Scarbrough, S. L., & Jackson, C. R. (2015). Clearcutting and pine planting effects on nutrient concentrations and export in two mixed use headwater streams: Upper Coastal Plain, Southeastern USA (article). Hydrological Processes, 29(1), 13–28. https://doi.org/10.1002/hyp.10121.

Martin, C. W., Noel, D. S., & Federer, C. A. (1984). Effects of forest clearcutting in New England on stream chemistry (article). Journal of Environmental Quality, 13(2), 204–210. https://doi.org/10.2134/jeq1984.00472425001300020006x

Mast, M. A., & Turk, J. T. (1999). Environmental characteristics and water quality of Hydrologic Benchmark Network stations in the West-Central United States, 1963–95. U.S. Geological Survey Circular 1173–B, 130 p.

McBroom, M. W., Beasley, R. S., Chang, M., & Ice, G. G. (2008). Water quality effects of clearcut harvesting and forest fertilization with best management practices (article). Journal of Environmental Quality, 37(1), 114–124. https://doi.org/10.2134/jeq2006.0552

McCoy, N., Chao, B., & Gang, D. D. (2015). Nonpoint source pollution (article). Water Environment Research, 87(10), 1576–1594. https://doi.org/10.2175/106143015x14338845156263

Morris, B. C., Aust, W. M., & Bolding, M. C. (2015). Effectiveness of forestry BMPS for stream crossing sediment reduction using rainfall simulation. In: Proceedings 17th Biennial Southern Silvicultural Research Conference. Shreveport, Louisiana.

NASF and SAF. (2000). A review of waterbodies listed as impaired by silvicultural operations. https://forested.remote-learner.net/pluginfile.php/200/mod_resource/content/0/SAF%20Report.Review%20of%20Waterbodies%20Listed%20as%20Impaired%20by%20Silvicultural%20Operations%202000.pdf. Accessed July 18th, 2017.

Nature Conservancy. (2007). Proposed Louisiana Forest Legacy Program—assessment of need. http://www.ldaf.state.la.us/wp-content/uploads/2014/04/laforestaonassessmentofneed.pdf. Accessed July 18th, 2017.

Nuzzo, R. (2014). Statistical errors (news item). Nature, 506(7487), 150–152. https://doi.org/10.1038/506150a

Pardo, L. H., Driscoll, C. T., & Likens, G. E. (1995). Patterns of nitrate loss from a chronosequence of clear-cut watersheds (article; proceedings paper). Water Air and Soil Pollution, 85(3), 1659–1664. https://doi.org/10.1007/bf00477218

Pritchett, W. L., & Comerford, N. B. (1982). Long-term response to phosphorus fertilization on selected southeastern coastal-plain soils (article). Soil Science Society of America Journal, 46(3), 640–644. https://doi.org/10.2136/sssaj1982.03615995004600030038x

Reiter, M., Heffner, J. T., Beech, S., Turner, T., & Bilby, R. E. (2009). Temporal and spatial turbidity patterns over 30 years in a managed forest of Western Washington (Article). Journal of the American Water Resources Association, 45(3), 793–808. https://doi.org/10.1111/j.1752-1688.2009.00323.x

Riekerk, H. (1982). Water quality management in flatwoods of Florida. In S. S. Coleman et al. (Eds.), Impacts of intensive forest management practices, proc (pp. 53–57). Gainesville: Univ. of Florida.

Riekerk, H. (1983). Impacts of silviculture on flatwoods runoff, water quality, and nutrient budgets (Article). Water Resources Bulletin, 19(1), 73–79. https://doi.org/10.1111/j.1752-1688.1983.tb04559.x

SAS/STAT. (1996). User’s Guide, Version 6.12 (4th ed.). Cary, NC, USA: SAS Institute Inc.

Secoges, J. M., Aust, W. M., Seiler, J. R., Dolloff, C. A., & Lakel, W. A. (2013). Streamside management zones affect movement of silvicultural nitrogen and phosphorus fertilizers to Piedmont Streams (article). Southern Journal of Applied Forestry, 37(1), 26–35. https://doi.org/10.5849/sjaf.11-032

Sen, P. K. (1968). Estimates of the regression coefficient based on Kendall’s tau (article). Journal of the American Statistical Association, 63(324), 1379–1389.

Stednick, J. D. (2008). Long-term water quality changes following timber harvesting. In Hydrol. Biol. Responses For Practices (pp. 157–170). Springer.

Sterne, J. A. C., & Smith, G. D. (2001). Sifting the evidence - what’s wrong with significance tests? (article). British Medical Journal, 322(7280), 226–231. https://doi.org/10.1136/bmj.322.7280.226

Townsend, A. R., Howarth, R. W., Bazzaz, F. A., Booth, M. S., Cleveland, C. C., Collinge, S. K., Dobson, A. P., Epstein, P. R., Holland, E. A., Keeney, D. R., Mallin, M. A., Rogers, C. A., Wayne, P., & Wolfe, A. H. (2003). Human health effects of a changing global nitrogen cycle (review). Frontiers in Ecology and the Environment, 1(5), 240–246. https://doi.org/10.2307/3868011.

Trimble, S. W. (1981). Changes in sediment storage in the Coon Creek Basin, Driftless Area, Wisconsin, 1853 to 1975. Science, 214(4517), 181–183. https://doi.org/10.1126/science.214.4517.181

Tsegaye, T., Sheppard, D., Islam, K. R., Johnson, A., Tadesse, W., Atalay, A., et al. (2006). Development of chemical index as a measure of in-stream water quality in response to land-use and land cover changes. Water Air and Soil Pollution, 174(1–4), 161–179. https://doi.org/10.1007/s11270-006-9090-5

US EPA, Office of Water. (2000). Achieving cleaner waters across America: supporting effective programs to prevent water pollution from forestry operations. EPA 841-F-002. Washington, DC.

Vogel, R. M., & Fennessey, N. M. (1995). Flow duration curves 2: a review of applications in water-resources planning. Water Resources Bulletin, 31(6), 1029–1039. https://doi.org/10.1111/j.1752-1688.1995.tb03419.x

Vowell, J. L. (2001). Using stream bioassessment to monitor best management practice effectiveness. Forest Ecology and Management, 143(1–3), 237–244. https://doi.org/10.1016/s0378-1127(00)00521-1

Wang, J. X., McNeel, J., & Milauskas, S. (2004). Logging sediment control act and forestry best management practices in West Virginia: a review. Northern Journal of Applied Forestry, 21(2), 93–99.

Water Resources Council. (1981). Guidelines for determining flood flow frequency. https://water.usgs.gov/osw/bulletin17b/dl_flow.pdf. Accessed 2 March 2017.

Wear, L. R., Aust, W. M., Bolding, M. C., Strahm, B. D., & Dolloff, C. A. (2013). Effectiveness of best management practices for sediment reduction at operational forest stream crossings. Forest Ecology and Management, 289, 551–561. https://doi.org/10.1016/j.foreco.2012.10.035

West, B. (2002). Water quality in the South. In D. N. Wear & J. Greis (Eds.), Southern Forest Resource Assessment (pp. 455–477). General Technical Report SRS-53. Asheville: USDA Forest Service, Southern Research Station.

Williams, T. M., & Askew, G. R. (1988). Impact of drainage and site conversion of pocosin wetlands on water quality. In D. D. Hook et al. (Eds.), The ecology and management of wetlands. Vol. 2. Management, use and value of wetlands (pp. 213–218). Portland: Timber Press.

Wynn, T. M., Mostaghimi, S., Frazee, J. W., McClellan, P. W., Shaffer, R. M., & Aust, W. M. (2000). Effects of forest harvesting best management practices on surface water quality in the Virginia coastal plain. Transactions of the ASAE, 43(4), 927–936. https://doi.org/10.13031/2013.2989

Xu, Y. J. (2013). Transport and retention of nitrogen, phosphorus and carbon in North America’s Largest River Swamp Basin, the Atchafalaya River Basin. Water, 5(2), 379–393. https://doi.org/10.3390/w5020379

Yoho, N. S. (1980). Forest management and sediment production in the South: a review. Southern Journal of Applied Forestry, 4(1), 27–36.

Acknowledgements

The authors acknowledge the US Geological Survey and the Louisiana Department of Environmental Quality for making the long-term river discharge records and water quality data available for this study.

Funding

During this study, Zhen Xu was financially supported through a US Department of Agriculture McIntire Stennis grant and a grant from the National Fish and Wildlife Foundation (project no.: 8004.12.036402).

Cite this article

Xu, Z., Xu, Y.J. Assessing effectiveness of long-term forestry best management practices on stream water quality at a basin scale—a case study in Southern USA. Environ Monit Assess 190, 108 (2018). https://doi.org/10.1007/s10661-018-6497-6

DOI: https://doi.org/10.1007/s10661-018-6497-6