Abstract

Navarro and Manly (Popul Ecol 51:505–512, 2009) (NM) have proposed a randomization protocol for null model analysis of species occurrences at discrete locations based on probability distributions and generalized linear models. In the NM method, presences-absences are governed by independent Bernoulli random variables. In addition, a non-observable non-negative random variable (“quasi-abundance”) from either Poisson, Binomial or Negative Binomial distributions are log-linearly related to the qualitative effects of species and location. By connecting the probability of occurrence of each species on each location and the quasi-abundance distributions, one generalized linear model for the observed presences-absences is selected by profile deviance, and the resulting fitted probabilities of the null model with minimum deviance is used to generate random matrices via parametric bootstrap. This work contributes with a unified theoretical formulation of the NM method, based on Faddy distributions, to allow general distributions of over-dispersed and under-dispersed discrete random variables. For a subset of the Faddy models, the log concave property of the inverse link function guarantees convergence to a global minimum deviance thus providing unique estimates for the linear parameters of the models. The method is illustrated using presence-absence data of island lizard communities. Interpretations of this combined GLM-parametric bootstrap protocol are discussed, highlighting the way fitted probabilities under the chosen null model are related to the row and column totals of the observed table. Additional properties of the probabilistic NM protocol, with possible avenues of future research, are also discussed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Statistical methods based on randomization tests have been useful to determine whether a random process can explain a particular observed pattern of species occurrences at discrete locations. This strategy has contributed to the development of null model analysis of presence-absence (1–0) data in community ecology. Depending on the assumptions made in a particular situation, randomization tests for presence-absence matrices may rely on simulation protocols where the row and column totals of the randomised matrices are allowed to vary. This is the case when the null model assumes that the presence of species k on site l is a Bernoulli-distributed random variable with parameter \(\pi_{kl}\) (the “cell” probability of occurrence of species k on location l, \(\pi_{kl}\), k = 1,.., t; l = 1,…,s). Following ideas used in contingency table analysis by Whittam and Siegel-Causey (1981), Gilpin and Diamond (1982) pioneered this strategy to deliberately construct a null model from which they could test whether species co-occurrences are non-randomly structured, to provide support to Diamond’s (1975) assembly rules. Gilpin and Diamond raised a discussion about the suitability of using an empirical formula for a cell probability versus a modelling approach, by fitting a logistic model. Although these authors did not favour the idea in using logistic regression for the estimation of cell probabilities, other attempts supported that the modelling approach is not unrealistic (Ryti and Gilpin 1987). On this line of thinking, Navarro and Manly (2009) (NM) suggested that null model analyses of presence-absence data require a definition of statistical independence and developed several possible variations of the logistic function for modelling the presence and absence of species on discrete locations, and its further application in null model analysis of species occurrences. In particular, the estimated probabilities \(\hat{\pi }_{kl}\) resulting from those fitted null models naturally induced randomization protocols for the generation of null matrices.

The work of Navarro and Manly (2009) has been cited in varied contexts as plant communities (Götzenberger et al. 2012), associations in microbial communities (Pascual-García et al. 2014) and evaluation of chemical risks (Tornero-Velez et al. 2012); it has also been cited and suggested by methodologists who have evaluated the performance of (“frequentist”) algorithms for the analysis of presence-absence matrices (Veech 2013; Kallio 2016). The NM method assumes a log-linear model for the so-called “quasi-abundance” (unobserved counts) as a function of additive qualitative species and location effects. By linking the probability structures of i.i.d. non-negative quasi-abundances and i.i.d Bernoulli presences-absences, NM were able to produce null scenarios from three families of generalized linear models (GLMs) for \(\pi_{kl}\) when quasi-abundances follow (1) Poisson, (2) Negative Binomial and (3) Binomial distributions. This array of candidate (null) models was put to compete one to each other (using profile deviance), such that the model attaining the minimum deviance could be selected as the “best” null model for the estimation of species occurrences on discrete locations. This best model makes cell probabilities available for simulation in a randomization protocol seeking to detect non-random patterns in the observed presence-absence matrix. All those competitor null models are characterized by two main assumptions. First, the occurrences of different cells in the presence–absence matrix are independent. Second, the models include only additive qualitative effects of species and locations as explanatory variables. These assumptions provide minimal restrictions about each species–location combination for the purpose to apply a probabilistic approach in null model analyses of presence-absence data.

The motivations for our formal analysis of presence-absence data comes from classic literature on over and under dispersed count data. However, the resulting analysis will also apply to many situations where the presences/absence data is fundamentally binary, including the Case (1986) lizard data set analysed in this paper. A question that can be raised now is whether only three families of distributions for count data are sufficient to support the existence of an “optimal” null model for the randomization protocol. A guidance for finding the answer is the relationship between the expected value and the variance of the assumed distribution for the quasi-abundance. When this is Poisson, both the expected value and the variance are equal, while when it is Negative-Binomially-distributed the former is larger than the latter (showing over-dispersion relative to the Poisson distribution), and the expected value will be smaller than the variance when assuming a binomially distributed random variable (showing under-dispersion relative to the Poisson distribution). The Negative Binomial and the Binomial distribution are not the only over-dispersed and under-dispersed distributions, respectively. Thus, it would be worth including other over-dispersed and under-dispersed distributions in the null model selection process. To achieve this goal, we consider a family of distributions proposed by Faddy (1997) which offered a unified approach to over-dispersed and under-dispersed non-negative discrete random variables, based on extended Poisson process modelling. The purpose of this paper is to provide a theoretical formulation of the original Navarro and Manly (2009) protocol for null model analysis that will allow the extension of quasi-abundance distributions, using Faddy’s (1997) method such that an ampler array of candidate null models can be evaluated. It is also discussed that the choice of predictors (e.g., variables associated to species and location effects), and the null-model selection process are important steps for the generation of null scenarios and, in general, for a proper interpretation of results under any probabilistic approach in null model analysis.

2 Estimation of the probability of occurrence of species on discrete locations with GLMs

2.1 Null models using species and locations as qualitative predictors

Assume that t species on s locations are of interest, and the abundance of species k on location l, has been registered for each of the t × s species-location combination (denoted as \(n_{kl}\)). The NM protocol assumed that the \(n_{kl}\)’s are realizations of t × s non-negative independent discrete random variables \(N_{kl}\), with probability mass function (p.m.f.) \(f_{kl} (n_{kl} )\). A procedure for modelling the dependence of the expected value of \(N_{kl}\) on suitable covariates in null models based on \(N_{kl}\) suggests the use of qualitative effects of species and locations, in a similar fashion found in a t × s factorial arrangement without replication, i.e., to define a non-interaction model involving row (species) and column (location) effects. Now, abundance (count) data, are typically studied with log-linear models. Therefore, the expected value of the abundance or mean intensity \(\mu_{kl} = E(N_{kl} )\) can be modelled using a multiplicative relationship through the log-linear model:

here, \(\gamma\) = constant effect, \(\alpha_{k}\) = qualitative species effect and \(\beta_{l}\) = qualitative site effect. Very often \(N_{kl}\) is unknown and the only information available is the presence and absence of each species in the locations, the statistical problem would be reduced to estimating the probability of occurrence of species k on location l, \(\pi_{kl}\). The absence of information about \(N_{kl}\) forces us to refer it as the “quasi-abundance”. The null-model proposed by NM assumes that families of quasi-abundance models are shared for all species, and that it is possible to associate the estimation problem for \(\pi_{kl}\) with a particular quasi-abundance distribution, when the only information available is a binary matrix.

Let \(y_{kl}\) be the (k, l)-th entry of an observed t × s binary matrix, where \(y_{kl} = 1\) if species k occurs in location l, and \(y_{kl} = 0\) otherwise, k = 1,…,t, l = 1,…,s. Let \(R_{k} = \sum\nolimits_{l = 1}^{s} {y_{kl} }\) the total number of locations where species k was found, \(C_{l} = \sum\nolimits_{k = 1}^{t} {y_{kl} }\) the total number of species occurring in location l. Under the NM null model, the \(y_{kl}\)’s are realizations of t × s independent binary random variables \(Y_{kl}\) with p.m.f.’s Bernoulli-distributed with parameter \(\pi_{kl} = P(Y_{kl} = 1)\). Here, each \(\pi_{kl}\) is regarded as the probability of occurrence of species k on site l and, simultaneously, as the expected value of \(Y_{kl}\). The expected row or column totals are the sum of the probabilities of the corresponding row or column:

The dependence of \(E(Y_{kl} ) = \pi_{kl}\) on the species and island effects can be modelled by connecting the probability structures of \(N_{kl}\) and \(Y_{kl}\), under the following premises:

-

1.

Species k is absent on island l if and only if its associated quasi-abundance is nil.

-

2.

Species k is present on island l if and only if its associated quasi-abundance is greater than 0.

The null model also assumes that

which will be called the assumption of continuity, in accordance with premises (1) and (2). The estimation of all probabilities of occurrence \(E(Y_{kl} ) = \pi_{kl}\) under the null model uses the two expressions in (2):

Then,

where \(f_{kl} ( \cdot )\) is the p.m.f. of \(N_{kl}\) with mean \(\mu_{kl}\). The function \(f_{kl} (0)\) in (3) may depend on a parameter vector \({{\varvec{\upzeta}}}_{(k,l)} = (\zeta_{1(k,l)} ,\zeta_{2(k,l)} , \ldots ,\zeta_{r(k,l)} )\) for some r. Without loss of generality, we set \(\zeta_{1(k,l)} = \mu_{kl}\) as one of the parameters on which \(f_{kl} (0)\) depends. Thus, we express \(f_{kl} (0)\) as

where \({{\varvec{\upzeta}}}^{\prime}_{(k,l)} = (\zeta_{2(k,l)} , \ldots ,\zeta_{r(k,l)} )\). Therefore:

2.2 Estimation of the probability of occurrence of species on discrete locations using qualitative predictors

Equations (1) and (4) lead to a model for \(E(Y_{kl} ) = \pi_{kl}\) of the form:

Distinct probability distributions for \(N_{kl}\) will provide different ways to estimate \(\pi_{kl}\) under the null model. If the right-hand expression in (5) can be written as:

where g is a monotonic, differentiable function, \({\mathbf{x}}_{kl}^{{\text{T}}}\) is a p × 1 vector of dummy variables and \({{\varvec{\uptheta}}}\) is a p × 1 vector of parameters such that \({{\varvec{\uptheta}}}^{\prime} = [\theta_{1} , \cdots ,\theta_{p} ]\), then we will be working with a GLM for \(\pi_{kl}\) with link function g (Dobson and Barnett 2018). Expression (5) shows that the link function (6) maps the mean intensity \({\mu}_{\text{kl}}\) onto the [0–1] Bernoulli parameter space for \(\pi_{kl}\).

Letting \(\alpha_{1} = \beta_{1} = 0\), \({{\varvec{\uptheta}}}\) can be parameterized with \(p = t + s - 1\) free parameters: \({{\varvec{\uptheta}}}^{{\text{T}}} = \left[ {\theta_{1} ,\theta_{2} , \ldots ,\theta_{t} ,\theta_{t + 1} , \ldots ,\theta_{t + s - 1} } \right]\), where \(\theta_{1} = \gamma ,\theta_{2} = \alpha_{2} , \ldots ,\theta_{t} = \alpha_{t} ,\theta_{t + 1} = \beta_{2} , \ldots ,\theta_{t + s - 1} = \beta_{s}\). Maximum likelihood estimation of the parameter vector \({{\varvec{\uptheta}}}\) may involve extra parameters depending on the distribution of the quasi-abundance model connected to \(\pi_{kl}\).

3 Distributions for the quasi-abundance and model selection

3.1 Link functions induced by Poisson, negative binomial and binomial distributions (Navarro and Manly 2009)

We briefly describe the quasi-abundance distributions already mentioned in Navarro and Manly (2009) and the resulting GLMs for \(\pi_{kl} = E(Y_{kl} )\).

-

(1)

\(N_{kl} \sim {\text{Poisson}}(\mu_{kl} )\). The GLM for \(\pi_{kl}\), is:

$$ \pi_{kl} = \pi (\gamma ,\alpha_{k} ,\beta_{l} ) = 1 - \exp ( - \exp (\gamma + \alpha_{k} + \beta_{l} )) = 1 - \exp ( - \mu_{kl} ) $$(7)The link function is the known complementary log-log link:

$$ \eta_{kl} = g(\pi_{kl} ) = \log \left[ { - \log (1 - \pi_{kl} )} \right] $$(8) -

(2)

\(N_{kl} \sim {\text{negative binomial}}\,(\mu_{kl} ,\upphi )\). Here \({{\varvec{\upzeta}}}^{\prime}_{(k,l)} = (\upphi )\), for all k, l (i.e., \(\upphi\) is equal for all the t × s species-location combinations). The GLM for \(\pi_{kl}\)is:

$$ \pi_{kl} = \pi (\gamma ,\alpha_{k} ,\beta_{l} ,\upphi ) = 1 - \left( {\frac{1}{{\upphi \exp (\gamma + \alpha_{k} + \beta_{l} ) + 1}}} \right)^{1/\upphi } = 1 - \left( {\frac{1}{{\upphi \mu_{kl} + 1}}} \right)^{1/\upphi } , $$(9)with link function:

$$ \eta_{kl} = g(\pi_{kl} ;\varphi ) = \log \left( {\left( {{1 \mathord{\left/ {\vphantom {1 {(1 - \pi_{kl} )}}} \right. \kern-\nulldelimiterspace} {(1 - \pi_{kl} )}}} \right)^{\varphi } - 1} \right) - \log \varphi $$(10)McCullagh and Nelder (1989) refer this as a one-parameter link family for binomial data (Aranda-Ordaz 1981) containing both the (canonical) logistic link (\(\varphi = 1\), when \(N_{kl}\) follows a geometric distribution) and the complementary log–log link (\(\varphi \to 0\)) as special cases).

-

(3)

\(N_{kl} \sim {\text{binomial}}\; (\mu_{kl} ,m)\). Here \({{\varvec{\upzeta}}}^{\prime}_{(k,l)} = (m)\), for all k, l (i.e., m is shared by all the t × s combinations). The GLM is:

$$ \pi_{kl} = \pi (\gamma ,\alpha_{k} ,\beta_{l} ,m) = 1 - \left( {1 - \frac{{\exp (\gamma + \alpha_{k} + \beta_{l} )}}{m}} \right)^{m} = 1 - \left( {1 - \frac{{\mu_{kl} }}{m}} \right)^{m} $$(11)with link function:

$$ \eta_{kl} = g(\pi_{kl} ;m) = \log m + \log \left( {1 - (1 - \pi_{kl} )^{1/m} } \right) $$(12)

For the case m = 1, (11) turns into a log-Bernoulli model. Letting \(m \to \infty\) with \(\pi_{kl}\) fixed in (12), the complementary log–log link function (8) is obtained again. In the next section, the quasi-abundance distributions are extended to include Faddy distributions, which yields a more general family of link functions that includes the canonical link functions for negative binomial and the Poison distributions.

3.2 Extending the distributions for the quasi-abundance

Faddy (1997) considered “pure birth” stochastic processes X(t) of events occurring in the time interval (t, t + δt) such that

\(\lambda_{n}\), the “rate” of the process in (13), is allowed to depend on the current number of events. If \(\lambda_{n} = \lambda\) is constant, the process X(t) is Poisson distributed with mean \(\lambda t\). When \(\lambda_{n}\) is not constant, X(t) does not have a Poisson distribution. In fact, an increasing sequence \(\lambda_{0} < \lambda_{1} < \lambda_{2} < \ldots\) induces an over-dispersed distribution for X(t) relative to the Poisson distribution (\({\text{Var[}}X(t)] > {\text{E[}}X(t)]\)). Analogously, a decreasing sequence \(\lambda_{0} > \lambda_{1} > \lambda_{2} > \ldots\) induces an under-dispersed distribution for X(t) relative to the Poisson distribution (\({\text{Var[}}X(t)] < {\text{E[}}X(t)]\)). The probabilities \(p_{n} (t) = {\text{P}}[X(t) = n]\) can be found numerically, by solving Kolmogorov forward differential equations (Faddy 1997). For any of discrete probability distribution on {0, 1,2, …}, namely \(\{ \omega_{0} ,\omega_{1} ,\omega_{2} ,...\}\), it is always possible to find a sequence \(\lambda_{0} ,\lambda_{1} ,\lambda_{2} ,...\) such that \(p_{n} (t) = \omega_{n}\), and t is some fixed time. The procedure for obtaining that sequence is recursive, and it starts with:

As time is arbitrary, t can be taken to 1. Thus: \(\lambda_{0} = - \log (\omega_{0} )\). We are interested here in the probability distribution on {0, 1,2, …} for \(N_{kl}\). Assume k and l in \(N_{kl}\) as fixed. As \(p_{0} (1) = \omega_{0} = P(N_{kl} = 0)\), Faddy´s procedure indicates that

Faddy (1997) suggested

in order to produce general concave sequences and to cover over-dispersed probability distributions “between” Poisson and Negative Binomial, and under-dispersed distributions relative to Poisson. When c = 0, the \(\lambda_{n}\)’s are constant, giving a Poisson distribution for X(t); values of c > 0 generate increasing sequences of \(\lambda_{n}\)’s, producing over-dispersed distributions for X(t) relative to the Poisson distribution. Finally, c < 0, the result is a decreasing sequence and an under-dispersed distribution. Letting n = 0 in (15), we obtain: \(\lambda_{0} = ab^{c}\). Then, using (14):

According to Eq. 2, Sect. 2.1, the probability structures of \(N_{kl}\) with mean \(\mu_{kl}\) and \(Y_{kl}\) with parameter \(\pi_{kl}\) can be linked using expression (16):

or, equivalently:

The connection between \(\pi_{kl} = E(Y_{kl} )\) and \(\mu_{kl}\) leads us to ask about the possibility of expressing \(\pi_{kl}\) in terms the mean of any Faddy distribution, say μ, using Eq. 17. Closed expressions for μ in terms of a, b and c are not available in general, but Faddy (1997, Eq. 3.3) proposed a “diffusion approximation to the mean μ”. This approximation is valid as long as the rate of the process is large. Although the diffusion approximation clearly does not apply in this case, we have chosen to use it with the only purpose of establishing a wider collection of generalized linear models with valid link functions (as indicated by Eq. 6). Thus, applying Eq. 3.3 in Faddy (1997) for \(\mu_{kl}\), we set:

Expression (18) is an equality for the cases c = 0 and c → 1. These “Faddy-inspired” link functions express the dependence of the mean quasi-abundance on the species and site effects, \(\alpha_{k}\) and \(\beta_{l}\), namely \(E(N_{kl} ) = \mu_{kl} = \exp (\gamma + \alpha_{k} + \beta_{l} )\), as a function of the usual predictor \(g(\pi_{kl} ) = {\mathbf{x}}_{kl}^{{\text{T}}} {{\varvec{\uptheta}}}\), as in (6) Sect. 2.2, with a (Faddy and Smith 2011, Eq. 5) given by:

Substituting (19) in (17), we obtain:

This expression for \(\pi_{kl}\) includes two generalized linear models described in Sect. 3.1, when b and c are set to particular values: for c = 0, b > 0, model (7) is obtained, and the values c = 1 and b = 1/ϕ in (20) lead to model (9). Moreover, the binomial distribution for the quasi-abundance with probability of success \(p = \frac{{\mu_{kl} }}{m}\) (case 3, Sect. 3.1) can be included as a member of the Faddy distributions in this modelling process, but parameterization (15) is not useful. One suitable parameterization for the rate of the process \(\lambda_{n}\) inducing a generalized linear model under the assumption of a binomial quasi-abundance is (Faddy and Smith 1997):

The proof is straightforward. From (14) and letting n = 0 in the last expression above:

Expression (21) is the same as Eq. 11, in Sect. 3.1. Other expressions for the rate parameter \({\lambda }_{n}\) having the binomial distribution as a particular case are described in Faddy and Smith (2011, 2012), but they will not be considered here.

3.3 Link functions induced by Faddy distributions

The GLMs with link function \(\eta_{kl} = g(\pi_{kl} )\) can be expressed as:

where X = (xij)(ts)×(t+s−1) is the design matrix assuming the constraints \(\hat{\alpha }_{1} = \hat{\beta }_{1} = 0\). The different link functions for every Faddy distribution based on the models given by Eq. 20 (for fixed values of b and c, say, b0 and c0, respectively) and 11 (for a fixed value m0), are shown below.

-

1.

c0 = 1. The negative binomial family with fixed dispersion parameter \(b_{0}\), defines the link function:

$${ \eta }_{\text{kl}} \, = \text{ log} \left({\text{b}}_{0}{\text{(}{1}/\text{(1 }-{\mu}_{\text{kl}}\text{))}}^{\frac{1}{{\text{b}}_{0}}}-{1}\right)\text{, }\quad{{k}}{=1, \ldots , }{{t}}{; \,}\quad{{l}}{=1,\ldots, }{\text{s.}}$$(23) -

2.

0 < c0 < 1 (Models where the distribution of the quasi-abundance lies between Poisson and Negative Binomial). The link is:

\({\eta}_{\text{kl}}= \text{ log} {\text{b}}_{0}+ \text{log} \left[{\left({1}-\frac{{1}-{\text{c}}_{0}}{{\text{b}}_{0}}\text{log(1}-{ \pi }_{\text{kl}}\text{)}\right)}^{\frac{1}{{1}-{\text{c}}_{0}}}-{1} \right], \, {{k}}{=1,\ldots, }{{t}}\text{; }{{l}}{=1,\ldots, }{\text{s}}.\)

Letting \(d_{0} = 1 - c_{0}\), then 0 < d0 < 1.

$${\eta}_{\text{kl}}\text{ = log}{\text{b}}_{0}+ \text{log} \left[{\left({1}-\frac{{\text{d}}_{0}}{{\text{b}}_{0}}\text{log(1}-{\pi}_{\text{kl}}\text{)}\right)}^{\frac{1}{{\text{d}}_{0}}}-{1}\right] , {{k}}{=1,\ldots, }{{t}}\text{; }{{l}}{=1,\ldots, }{\text{s.}}$$(24) -

3.

c0 = 0. This case produces the complementary log-log link (Eq. 11, Sect. 3.1).

-

4.

c0 < 0 (Models where the distribution of the quasi-abundance lies between Poisson and Binomial)

The link function is the same as in case 2, but now the parameter \(d_{0} = 1 - c_{0}\) implies d0 > 1.

-

5.

The quasi-abundance follows a binomial distribution with parameter m, fixed in m0. The link function is given by Eq. 11, Sect. 3.1, with m = m0.

3.4 Null model selection by profile deviance

The wide array of distributional families for the quasi-abundance will produce different estimates for the probability of occurrence of species k on island l. Given an observed presence-absence matrix, the selection of generalized linear (null) models for the estimation of \(\pi_{kl}\) is of interest. The deviance is typically used as a criterion for goodness-of-fit in generalized linear model selection. It is defined as the maximized log-likelihood ratio criterion for testing the fitted model against the saturated alternative, which assumes a separate parameter for each of the N expectations, which in our case consist of N = t × s observations (McCullagh and Nelder 1989); the deviance for modelling observed Bernoulli response variables ykl, is given by:

here \({\widehat{\varvec{\Pi}}} = {[}\hat{\pi }_{{{11}}} , \ldots ,\hat{\pi }_{ts} {]}\) is the vector of fitted probabilities and \({\widehat{\varvec{\Pi}}}_{\max }\) is the vector of maximum likelihood estimates corresponding to the maximal model, which turns out to be simply the observed vector \({\mathbf{y}} = (y_{11} , \cdots ,y_{ts} )^{{\text{T}}}\). McCullagh and Nelder (1989) have emphasized that the deviance can be used as a measure of goodness-of-fit only when it is approximately independent of the estimated parameters, \({\widehat{\boldsymbol{\theta }}}\). This is not the case when each observation \(y_{kl}\) is Bernoulli-distributed: the fitted probabilities \(\widehat{\varvec{\Pi}}\) are not independent from the deviance. Therefore, the usual chi-square tests of the deviance for goodness-of-fit are not supported, as the distribution of the deviance given the estimated parameters reduces to a conditionally degenerate distribution, in the sense that the deviance \(D\) is a function of \(\widehat{{\boldsymbol{\theta }}}\). Even for a large number of cells (t × s), D is not independent of \({\widehat{\boldsymbol{\theta }}}\). Applications of the deviance for binary data are constrained “to those cases where the chi-square approximations work reasonably well”: (1) when the deviance is used in the comparison of two nested models and (2) in profile likelihood (or profile deviance) (McCullagh and Nelder 1989). Profile likelihood is one of the tools for goodness-of-link analyses where several link functions indexed by one or more parameters are tested against a pre-established link, usually the canonical link. In the case of modelling binary random variables, it is known that the logit link \(\eta_{kl} = \log \left\{ {\pi_{kl} /(1 - \pi_{kl} )} \right\}\) is the canonical link function connecting the mean response and the linear predictor. However, “canonical links do not always provide the best fit available for a given data set” (Czado and Munk 2000). Thus, non-canonical links are sometimes preferable (Huang 2020).

For simplicity, let us suppose that the link families we establish as competitor models are indexed by only one extra parameter, say \(\varsigma\). The binomial and negative binomial distributions are examples of one-parameter links of that sort. The usual application of profile likelihood for goodness-of-link analysis is that a particular GLM is fitted and embedded in a wider class of models including one extra parameter \(\varsigma\), with \(\varsigma_{0}\) the value corresponding to the link function for the original model. The deviance (likelihood ratio) test is applied to find the estimate \(\hat{\varsigma }\) giving the best fit in the complete family of models, and to compare \(\hat{\varsigma }\) with that at \(\varsigma_{0}\). This process produces an estimate of the profile deviance for \(\varsigma\). A model reaching the minimum deviance in the profile will be classified as a “good” candidate for a null model. Whenever possible, the model attaining the overall minimum deviance will be used as representative of the null models for the occurrence for each species-location combination. Calculation of the profile deviance for each family tested in this study follows the procedure described above, and it can be extended to more than one extra parameter (e.g., in Faddy distributions there are two extra parameters, b0 and c0).

3.5 Implementing algorithms for maximum likelihood estimation in the profile deviance

One advantage of seeing several families of discrete distributions for the quasi-abundance as Faddy distributions is that most of the inverse link functions for the calculations are log-concave [Tang and Ye (2020); see also “Appendix”], ensuring that the deviance is concave in the linear parameters and thus standard optimization methods should provide unique solutions.

3.5.1 Poisson, negative binomial, overdispersed (b > 0, 0 < c < 1) and underdispersed (b > 0, c < 0) Faddy-distributed models

At first glance, the only task needed to determine the minimum deviance among competitor models is to apply unconstrained optimization techniques for an assorted set of b and c values such that b > 0 and c < 1. However, the basic assumptions of the NM null model (Sect. 2.1) impose some restrictions in the fitting process. One constraint is that the expected value of the unobserved quasi-abundance \({\mu}_{\text{kl}}\) is an upper bound of the probability \({\pi}_{\text{kl}}\) [i.e. \({\pi}_{\text{kl}}\left({\mu}_{\text{kl}}\right){\le}{\mu}_{\text{kl}}\); see “Appendix”]. Additionally, the inverse link functions (20) are only log-concave in the region R = {b > 0, c ≤ 1, b ≥ ‒c}, a helpful property that guarantees the attainment of a global minimum of the deviance function (see “Appendix”). Therefore, our search for a suitable null model was carried out in that region, R. We also computed the deviance values inside the “non-permissible” region R1 = {b > 0, c < 0, b < ‒c}; however, as a way to detect possible convergence issues in the maximum likelihood estimation inside R1, the same set of initial values were used in the analysis of the example data described in Sect. 4.1. The profile deviance procedure was run in R (R Core Team 2021), specifically with the glm function for the Poisson case, and the maxBFGS function from the maxLik package (Henningsen and Toomet 2011) for the Aranda-Ordaz family of links, corresponding to the negative binomial distribution (Eq. 10). Here BFGS stands for the variable metric optimization method by Broyden-Fletcher-Goldfarb-Shanno (Fletcher 2000). For the remaining Faddy models, the quasi-Newton method L-BFGS-B without constraints as implemented in R (Byrd et al. 1995) was used.

3.5.2 Binomial models

Maximum likelihood estimation corresponding to the models assuming a binomial distribution with parameter m (Eq. 19) may suffer of a well-known issue in log-binomial models whenever the log-likelihood is maximized (i.e., the deviance is minimized) on the boundary of the parameter space. Very often, the iterative process of model fitting fails to locate the maximum as the algorithm used may inadvertently iterate to a prohibited place. One must guarantee that the fitted probabilities always remain inside the interval [0,1]. For the case m = 1, one solution is given by an EM algorithm (Marschner 2014), implemented in R in the logbin package (Donoghoe and Marschner 2018). However, for m > 1, Eq. 19 cannot be handled by the log-binomial model, and one must rely on alternative algorithms for ML estimation. Williamson et al. (2013) have suggested to implement “brute force” procedures for the optimization of the log-likelihood when all else fails. Our brute force method tries to estimate the minimum deviance using constraints. To searching a feasible solution \(\widehat{\theta}\), we solved the following non-linear optimization problem:

To search the minimum deviance for different values of the binomial parameter m0, subject to the set of constraints indicated in Eq. 26, we applied the Augmented Lagrangian Adaptive Barrier Minimization Algorithm implemented in R-package alabama (Varadhan 2015).

4 Generation of “null matrices”

4.1 Algorithm

In this section we briefly discuss the randomization protocol implemented in Navarro and Manly (2009). To obtain the fitted probabilities \(\hat{\pi }_{kl}\), the model with the overall minimum deviance is chosen among competitor models with different links. These probabilities can then be used to produce random binary matrices. Let \({\mathbf{Y}}_{t \times s} = (Y_{kl} )\), where each \(Y_{kl}\) is distributed as \(F({{\varvec{\uptheta}}},\varsigma )\), F = Bernoulli (\(\pi_{kl}\)), depending on \({{\varvec{\uptheta}}}\), and assume that a total of NRM random matrices will be generated. The allocation of a presence or an absence to each cell (k,l) for each random matrix is given by:

where \(U{}_{kl}\) is a [0,1]-uniformly distributed pseudo-random number generated for cell (k,l) and \(\hat{\pi }_{kl}\) is the fitted probability for that cell. The values \(w_{kl}\) of the random variables \(W_{kl}\) are interpreted as realizations of i.i.d. Bernoulli random variables

and their generation is described as a parametric bootstrap of the estimated distributions \(\hat{F}\). Because of the assumptions in the basic GLM, each row total (or each column total) is a random variable. Thus, the protocol allows row and column totals to vary from one simulation to another compared to the corresponding row and column totals of the observed presence-absence matrix. This protocol represents the null model algorithm, from which the pattern in the observed matrix needs to be quantified with an appropriate metric and compared to the distribution of the metric generated by simulating a large number of null matrices and calculating the metric for each (Gotelli and Ulrich 2012).

Metrics for testing non-random patterns may have paradoxical features, combining “checkerboardness” and species aggregation (Stone and Roberts 1992), or how the species share sites (Roberts and Stone 1990). Consequently, ecologists suggest that different indices summarizing potential patterns of presence/absence matrices be reported (Peres-Neto et al. 2001), in terms of avoidance (like the C-score), aggregation (like the T-score) and number of locations shared (like the S-score). The C-score is the mean number of checkerboard units per species-pair of the community (Stone and Roberts 1990) and has been often used as a statistic for testing actual distributions for non-randomness. In particular, the C-score is motivated as the way a pair of species might colonize a pair of islands. A high C-score is interpreted as a high average degree of mutual exclusivity between species. The T-score measures the degree of togetherness, enumerating the mean number of sites where the species occur together. A high T-score indicates that, on average, species tend to co-occur between sites. Finally, the S-score measures the average number of shared sites for all species pairs. A high S-score means that most species share most sites. The observed values for these three metrics are contrasted to the null distribution of random values, by means of a one-tailed randomization test.

4.2 Example: lizards on Islands in the Gulf of California

We illustrate the model selection process by considering a data set analyzed in Navarro and Manly (2009) and Manly (1995) about the presence and absence of 20 lizard species on 25 islands in the Gulf of California, taken from Case (1983). Species identities are displayed in Manly (1995). In Navarro and Manly (2009, Fig. 2) the minimum deviance was attained by a Poisson model for Nkl. For null model selection, in the present study we included Faddy distributions corresponding to GLMs with link functions defined by the diffusion approximation to the mean, for a selected set of values of b > 0, 0 < c0 < 1 and c0 < 0 in the profile deviance but separating the permissible deviance values (those computed inside region R, as described in Sect. 3.2) from those in which a global minimum is not assured. A global profile deviance plot was generated for these models, using bilinear interpolation as implemented in the R-package fields (Nychka et al. 2017). We also included a separate profile deviance analysis for Faddy distributions inducing a binomial quasi-abundance, using a small set of binomial parameter values, m (Navarro and Manly 2009). The best null model chosen was the one attaining the overall minimum deviance. Once this best model was selected, NRM = 9999 matrices were generated using the parametric bootstrap given by (27) and (28). t × s pseudo-random numbers uniformly distributed on [0,1] were generated using the R-function runif (R Core Team 2021), to produce either a presence or an absence. The simulated matrices were generated for the following subsets:

-

0.

Unconstrained simulations.

-

1.

Simulated matrices without degenerate distributions (a degenerate distribution means a presence-absence matrix with rows and/or columns made of just zeros).

-

2.

Simulated matrices with the same grand total as the observed presence-absence matrix.

These scenarios represent different universes of simulated matrices in the testing procedure for a non-random pattern, and their comparison are useful in highlighting any bias that might occur in null model tests. Thus, subset 1 is motivated by the claim that degenerate arrangements are problematic and disadvantageous for any null model test since degenerate simulated matrices will bias the testing procedures towards the rejection of the null hypothesis (Wilson 1995; Gotelli 2000). A similar assertion justifies the interest in Subset 2 (Gotelli 2000).

Stone and Roberts’ C-, T- and S- scores were computed for the observed matrix, and the mean and standard deviation for the C-, T- and S-scores on each randomly generated matrix meeting conditions 0.-2. were obtained. The observed C-, T-, and S-scores were contrasted to the null distribution of random values, by means of a one-tailed randomization test. The probability of rejection was computed as

where \(Score_{{{\text{obs}}}}\) and \(Score_{j}\) are the observed and simulated scores of the j-th randomized matrix, respectively. Numerical procedures were implemented in R for all randomizations and tests, using algorithms described in Navarro (2003).

5 Results

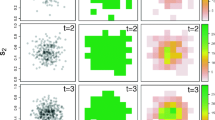

Table 1 displays the profile deviance of the null model selection for Faddy distributions corresponding to different values of the parameters b, c and d = 1 − c., Deviances for overdispersed Faddy distributions behaved similarly to those for negative binomial distributions, all of them being larger than the deviance for the Poisson distribution. In contrast, deviances for underdispersed Faddy distributions in the permissible region R were consistently smaller than deviances for negative binomial, overdispersed Faddy and Poisson distributions. It is worth noticing that deviances outside the permissible region, described as a “quasi-under-dispersed region”, seem to be even smaller, but the implied link functions cannot occur under our null model. The profile deviance shown in Tables 1 and 2 (depicted by Fig. 1) suggests that the minimum deviance is possibly attained on the lower boundary of the valid region R, b = − c, c < 0, as b goes to infinity. Thus, we fitted a new “Faddy model” in the profile deviance, given by:

Null model selection of the lizard data. The plot shows the profile deviance for several members of the Faddy distribution family with parameters b and c, when the generalized linear models for the quasi-abundance have link functions defined via the diffusion approximation to the mean, and deviances are taken from Table 1. The level curves were estimated by bilinear interpolation. Two more members of the family, Poisson and Negative Binomial, were added for completeness, with the Geometric distribution as a particular case of the latter, corresponding to the logit link. Log-concavity of the deviance function does not hold for Region 1 (c < 0, b < − c), while log-concavity is present for Region 2 (c < 0, b ≥ − c) and Region 3 (b > 0, 0 ≤ c ≤ 1) thus, the minimum deviance was only searched inside these two regions (see “Appendix”). Observing the deviance values in Tables 1 and 2, it is proposed that the overall minimum deviance is attained by an underdispersed Faddy distribution (23) with \({\text{b}}\text{ } = -{\text{c}} \to { \infty}\)

The non-canonical link function associated to (29) is \({\eta}_{\text{kl}}= \log(\log\left(1-\log\left(1-{\pi}_{\text{kl}}\right)\right))\), which can be called a “complementary triple-log link”. This limiting Faddy model turned out to be the optimal (Tables 1 and 2) among all models of the form (20) and among those models where the quasi-abundance follows a binomial distribution (Table 3). Therefore, for the lizard data, model (29) is a good representative null model for random matrix generation. The null model analysis continued with the ML estimation of the parameters for that model (not reported), the estimated probabilities for each cell, \(\hat{\pi }_{kl}\), and the deviance residuals (not reported) for each binary observation under this model. Fitted cell probabilities are shown in Table 4, as well as the observed and estimated row and column totals. A non-linear relation between the estimated cell probabilities and species and island effects is present for variables like the row and column totals, Rk and Cl. In order to illustrate this, we can explore the relationship between the probability of occurrence of “species” 16 (Sauromalus) for the lizard dataset, and the number of species on the islands, Cl. It was noticed above that the chosen null model distinguishes the variation in richness and composition between sites for the estimation of probabilities of occurrence. Let us consider the set of islands where Sauromalus is present. In Fig. 2 it is evident the non-linear behaviour of \(\hat{\pi }_{16,l}\) for increasing values of Cl, with probabilities as large as 1, the maximum value, for species-rich islands [San Marcos (14), Coronados (15) and Carmen (16), 10 species each; San José (22) and Espíritu Santo (24), 11 species each; and Tiburón (1), 13 species]. Furthermore, islands 4, 8 and 9 (Pond, San Lorenzo Norte and San Lorenzo Sur) have got the same composition of (widespread) species: Phyllodactylus, Cnemidophorus tigris, Uta and Sauromalus) On the other hand, the widespread species C. tigris didn't occur on Mejia Island (island number 2). Therefore, a higher probability of occurrence was given to species Sauromalus on islands having widespread species, namely, on islands 4, 8 and 9. This example illustrates that under the assumption of independence, the row and column effects govern the fitted probabilities in a way not followed by other approaches that make use of the observed row and column totals. Thus, if one chooses any of the eight algorithms SIM1–SIM8 in Gotelli (2000) and considers species 16 (Sauromalus) and islands 2, 4, 8 and 9), the probability of the first “hit” on the four cells (16,2), (16,4), (16,8) and (16,9) are all the same (for example, for SIM8 the probability is 0.003) because the species total is 18 (the number of times Sauromalus is present) and the island totals are all the same (4). The probabilities are governed by both site and species marginal totals only. This apparently trivial finding makes us to connect our approach of using the observed binary table in the null model and the pioneering approaches by Gilpin and Diamond (1982) and Ryti and Gilpin (1987). Under the Gilpin and Diamond (1982) strategy, fitting the expected row and column sums to the observed row and column sums is computationally less problematic since the number of observations is equal to the number of parameters.

Relation between the estimated probability of occurrence of Sauromalus (vertical axis) and richness, Cl, on islands (numbers in parentheses) where Sauromalus is present (horizontal axis) based on the presence-absence matrix of 20 lizard species on 25 islands in the Gulf of California (Manly 1995). (Null-model) probabilities were estimated using Eq. 23, b = − c → ∞. The four islands encircled have the same richness (four each); in addition, composition of species for islands 4 (Pond), 8 (San Lorenzo Norte) and 9 (San Lorenzo Sur) is the same: Phyllodactylus, Cnemidophorus tigris, Uta and Sauromalus). These are ubiquitous species, however C. tigris does not occur on Mejia island (number 2). This explains the differences in the probability of occurrence under the assumption of additivity of species and island effects. A similar explanation can be given for the difference in probability of occurrence of Sauromalus on islands (18) and (19)

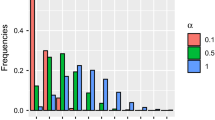

In Navarro and Manly (2009) results for the C-score were described using a different null model (Poisson), and it was found that the observed C-score was significantly larger than those from the simulated matrices. Here we updated the results of the randomization test for the C-score and added new ones for the S- and T-scores, using the limiting Faddy model (29) as the null model. All this information is shown in Table 5. It is confirmed that neither the species seem to occur together nor the species to share the same islands, on the basis of the non-significant S- and T- scores. The estimated p-values for subsets 1. and 2. (Sect. 4.2) varied in comparison to the whole set of 9999 simulations but the conclusions were consistent in terms of a significance level of \(\alpha = 0.05\).

6 Discussion

The selection of the randomized distribution to be used as a null hypothesis in the analysis of species co-occurrences is one of the controversial problems in community ecology and biogeography. The material presented here gives a way to evaluate a wide range of null models for the analysis of species occurrences on discrete locations. Testing that observed data is consistent with the null hypothesis of a random pattern is not an easy task because several random or randomized distributions (null models) may be compared to empirical data. The method developed in Navarro and Manly (2009) and its extension allowing underdispersed and overdispersed quasi-abundance (Faddy) distributions are interpretable in terms of hypothetical probability distributions of non-observable non-negative discrete random variables: the quasi-abundance. This auxiliary variable is crucial for giving some structure to a procedure that seeks to provide suitable null-model estimates of the probability of occurrence, given the simplest information about the species and locations (expressed as qualitative additive effects). The null model selection approach for presence-absence data has two advantages: the straight availability of cell probabilities for simulation of binary variables, and the ability to estimate differently the probability of occurrence of a particular species even for sites with equal number of species (i.e. equal column totals). Much of this behaviour is associated with the maximum likelihood estimation of species and location effects. As an example, using the best null model, in the lizard data set, the widespread species Phyllodactylus (species 2 in Table 4) has some probabilities of occurrence practically equal to 1 for species-rich islands. If it is desired to generate random binary matrices “by reading” the fitted probabilities obtained with any GLM among the competitor null models, then it will be observed that some cells will always receive a presence in each random matrix (or some cells will always receive an absence). This behaviour has been seen in the simulation protocols where the row and column totals of the randomized matrices are maintained, particularly in highly nested matrices. This is an area where some underlying philosophy about the assumptions in the null models is important.

The null models in this study are characterized by two main assumptions. First, occurrences of different cells in the presence-absence matrix are independent. Second, the models include only additive qualitative effects of species and islands as independent variables. In terms of ecological variables, the null models simply impose two conditions for the fitted probabilities: they are governed by both site and species marginal totals only. Although ecologists have been reluctant to use row and column totals as explanatory variables of cell probabilities, possibly it is a good opportunity to reformulate the assumptions and procedures behind all model-fitting approaches for the estimation of cell probabilities in null-model analyses. The null-model selection strategy described in the present study can be combined with probabilistic approaches proposed in the past, e.g. Gilpin and Diamond’s (1982), and nourish the collection of methods for the analysis of species occurrences. We offer here a vast family of models whose links are induced by Faddy distributions in order to search the best null model by the profile deviance, a well-established statistical method of model selection. This strategy guarantees a systematic method that eliminates the subjectivity involved in the choice of competitor models.

The proper selection of an “optimal” null model should not be ignored, as interpretations may change if the model chosen is not supported by statistical arguments. This was apparent in our method: an extra assumption of the null model [the so-called “assumption of continuity” expressed by Eq. (2), Sect. 2.1] imposed restrictions to the set of valid models to be compared (Sect. 3.5.1). In fact, the strategy for optimality is not complete yet using our protocol, as it may be questionable to apply unconstrained and constrained optimization for the same ML estimation problem. Apparently, the fit for a constrained linear model may not be as good as the fit for a similar unconstrained linear model. This is another issue that needs to be explored more in-depth.

Another question to raise is how divergent the profile deviance can be in selecting the “appropriate” null model. To tackle this question, we suggest: (1) specify a fixed GLM not equal to the model chosen by profile deviance (the “optimal model”), (2) simulate data from that “non-optimal” model, and (3) determine how often the profile deviance selects a non-optimal model. An extra topic worth exploring in depth is the choice of test statistics (indices) to summarize co-occurrence patterns in the observed presence-absence data. The selection of the index or test statistic is crucial for the detection of patterns and there is no general agreement on which index should be used. For instance, in this study we used three indices that presumably address Diamond’s assembly rules: the number of species combinations, the number of species forming perfect checkerboards and the C-score. However, “ad-hoc” tests based on the deviance can be used under the combined GLM-parametric bootstrap used here. Some results were analyzed by Navarro (2003) using the overall minimum deviance (OMD). He found that the OMD is positively associated to the C-score. The connotation of this association means that the larger the deviance the more segregated the species. Thus, the OMD apparently summarise patterns of species’ complementarities in a presence-absence matrix. Further investigation is necessary for explaining the association between these indices based on the deviance and the C-score; the obvious task would be to explore the deviance of a particular model and find out how much it is altered by an increase or decrease of the number of co-occurring species.

Data Availability

All data and code generated or analysed during this study are included in this published article [and its supplementary information files].

References

Aranda-Ordaz FJ (1981) On two families of transformation to additivity for binary response data. Biometrika 68:357–363

Byrd RH, Lu P, Nocedal J, Zhu C (1995) A limited memory algorithm for bound constrained optimization. SIAM J Sci Comput 16:1190–1208. https://doi.org/10.1137/0916069

Case TJ (1983) Niche overlap and the assembly of island lizard communities. Oikos 41:427–433

Czado C, Munk A (2000) Noncanonical links in generalized linear models—when is the effort justified? J Stat Plan Inference 87:317–345

Diamond JM (1975) Assembly of species communities. In: Cody ML, Diamond JM (eds) Ecology and evolution of communities. Harvard University Press, Cambridge, pp 342–444

Dobson AJ, Barnett AG (2018) An introduction to generalized linear models, 4th edn. Chapman and Hall/CRC, Boca Raton

Donoghoe MW, Marschner IC (2018) logbin: an R package for relative risk regression using the log-binomial model. J Stat Softw 86(9):1–22

Faddy MJ (1997) Extended Poisson process modelling and analysis of count data. Biom Ournal 39(4):431–440

Faddy MJ, Smith DM (2011) Analysis of count data with covariate dependence in both mean and variance. J Appl Stat 38(12):2683–2694

Faddy MJ, Smith DM (2012) Extended Poisson process modelling and analysis of grouped binary data. Biom J 54(3):426–435

Fletcher R (2000) Practical methods of optimization, 2nd edn. Wiley, Chichester

Gilpin ME, Diamond JM (1982) Factors contributing to non-randomness in species co-occurrences on islands. Oecologia 52:75–84

Gotelli NJ (2000) Null model analysis of species co-occurrence patterns. Ecology 81:2606–2621

Gotelli NJ, Ulrich W (2012) Statistical challenges in null model analyses. Oikos 121:171–180

Götzenberger L, de Bello F, Bråthen KA, Davison J, Dubuis A, Guisan A, Lepš J, Lindborg R, Moora M, Pärtel M, Pellissier L, Pottier J, Vittoz P, Zobel K, Zobel M (2012) Ecological assembly rules in plant communities-approaches, patterns and prospects. Biol Rev 82:111–127

Henningsen A, Toomet O (2011) maxLik: a package for maximum likelihood estimation in R. Comput Stat 26(3):443–458. https://doi.org/10.1007/s00180-010-0217-1

Huang X (2020) Improved wrong-model inference for generalized linear models for binary responses in the presence of link misspecification. Stat Methods Appl. https://doi.org/10.1007/s10260-020-00529-3

Kallio A (2016) Properties of fixed-fixed models and alternatives in presence-absence data analysis. PLoS ONE 11(11):e0165456. https://doi.org/10.1371/journal.pone.0165456

Manly BFJ (1995) A note on the analysis of species co-occurrences. Ecology 76:1109–1115

Marschner IC (2014) Combinatorial EM algorithms. Stat Comput 24(6):921–940

McCullagh P, Nelder JA (1989) Generalized linear models. Chapman and Hall, London

Navarro J (2003) Generalized linear models and Monte Carlo methods in the analysis of species co-occurrences. Dissertation. University of Otago, Dunedin, New Zealand

Navarro J, Manly BFJ (2009) Null model analyses of presence-absence matrices need a definition of independence. Popul Ecol 51:505–512

Nychka D, Furrer R, Paige J, Sain S (2017). Fields: tools for spatial data. https://doi.org/10.5065/D6W957CT. R package version 11.6, https://github.com/NCAR/Fields

Peres-Neto PR, Olden J, Jackson DA (2001) Environmentally constrained null models: site suitability as occupancy criterion. Oikos 93:110–120

Pascual-García A, Tamames J, Bastolla U (2014) Bacteria dialog with Santa Rosalia: are aggregations of cosmopolitan bacteria mainly explained by habitat filtering or by ecological interactions? BMC Microbiol 14:284. https://doi.org/10.1186/s12866-014-0284-5

R Core Team (2021) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Roberts A, Stone L (1990) Island-sharing by archipelago species. Oecologia 83:560–567

Ryti RT, Gilpin ME (1987) The comparative analysis of species occurrence patterns on archipelagos. Oecologia 73:282–287. https://doi.org/10.1007/BF00377519

Stone L, Roberts A (1990) The checkerboard score and species distributions. Oecologia 85:74–79. https://doi.org/10.1007/BF00317345

Stone L, Roberts A (1992) Competitive exclusion, or species aggregation? An aid in deciding. Oecologia 91:419–424

Tang W, Ye Y (2020) The existence of maximum likelihood estimate in high-dimensional binary response generalized linear models. Electron J Stat 14:4028–4053

Tornero-Velez R, Egeghy P, Cohen Hubal E (2012) Biogeographical analysis of chemical co-occurrence data to identify priorities for mixtures research. Risk Anal 32(2):224–236

Williamson T, Eliasiw M, Fick GH (2013) Log-binomial models: exploring failed convergence. Emerg Themes Epidemiol 10:14

Wilson JB (1995) Null models for assembly rules: the Jack Horner effect is more insidious than the Narcissus effect. Oikos 72:139–144

Varadhan R (2015) Alabama: constrained nonlinear optimization. R package version 2015.3-1. https://CRAN.R-project.org/package=alabama. Accessed 25 Aug 2020

Veech JA (2013) A probabilistic model for analysing species co-occurrence. Glob Ecol Biogeogr 22:252–260

Whittam TS, Siegel-Causey D (1981) Species interactions and community structure in Alaskan seabird colonies. Ecology 62:1515–1524

Acknowledgements

We wish to thank an anonymous reviewer for suggestions that led to a remarkable improvement of the present work.

Funding

This study was carried out during Jorge Navarro’s sabbatical year in the Department of Mathematics and Statistics, University of Wyoming, under the sponsorship of the Consejo Nacional de Ciencia y Tecnología, Mexico (2018–000007-01EXTV-00185).

Author information

Authors and Affiliations

Contributions

JN developed the model extension based on a suggestion given by BFJM. JN also designed, programmed and tested the R code for estimation and randomization tests. BFJM and KG provided conceptual support. All authors contributed to writing.

Corresponding author

Ethics declarations

Conflict of interest

All authors have no conflict of interest to declare.

Additional information

Handling Editor: Luiz Duczmal.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendices

Appendix

The basic null model is given in Eq. (1) in terms of the expected value of the unobserved quasi-abundance \(\mu_{kl} = E(N_{kl} )\), k = 1,.., t; l = 1,…,s:

where \({\alpha }_{1}={\beta }_{1}=0\). These unobserved means are connected to probabilities of observed Bernoulli-distributed outcomes \({Y}_{kl}\), \(E({Y}_{kl})={\pi }_{kl}\), using different generalized linear models. One collection of models defines link functions in terms of the diffusion approximation to the mean quasi-abundance for Faddy distributions with parameters b and c, as described in Sects. 3.2 (Eq. 20) and Sect. 3.5.1. Another generalized linear model based on Faddy distributions is given by Eq. 21. This “Appendix” focuses mostly on models given by Eq. 20 (indicated here as Eq. 30):

where b > 0. The estimation of each generalized linear model is performed by maximum likelihood, and a model-selection process using profile deviance is conducted for varying values of b and c, the deviance function given by Eq. 25.

The following lemma applies to any model under the NM protocol:

Lemma

If \(P(Y_{kl} = 0) = P(N_{kl} = 0);\quad P(Y_{kl} = 1) = P(N_{kl} \ge 1)\), then, for all probability mass functions on the non-negative integers \(N_{kl}\):

Proof

The equality in \({\pi}_{\text{kl}}\left({\mu}_{\text{kl}}\right){\le}{\mu}_{\text{kl}}\) is achieved when \({\text{P}}\left({\text{N}}_{\text{kl}}\text{ = 0}\right)\text{ = 1}-{ \pi}_{\text{kl}}\), \({\text{P}}\left({\text{N}}_{\text{kl}}\text{ = 1}\right)\text{=}{ \pi}_{\text{kl}}\), and \({\text{P}}\left({\text{N}}_{\text{kl}}\text{ > 1}\right)\text{ = 0}\).

The inequality (31) is essential for the appropriate application of the “Faddy inspired” models (30) and will constrain the set of competing models in the profile deviance.

Theorem

Under the NM protocol, the generalized linear models connecting the probabilities of the observed Bernoulli-distributed outcomes \(Y_{kl}\) with parameter \(E(Y_{kl} ) = \pi_{kl}\), and the quasi-abundance whose link functions are defined in terms of the diffusion approximation to the mean for Faddy distributions with parameters b and c, are restricted to the set

R = {b > 0, c ≤ 1, b ≥ ‒c}.

Proof

For (31) to be true, the second derivative of \({\pi}_{\text{kl}}\left({\mu}_{\text{kl}}\right)\) evaluated at \({\mu}_{\text{kl}}\)= 0 must be less than or equal to 0. And it turns out for Eq. (30) this is also a sufficient condition. Evaluating the derivative in Eq. (30) we have:

In addition, \({\pi}_{\text{kl}}\left({\mu}_{\text{kl}}\right){\le}{\mu}_{\text{kl}}\) implies that \(\frac{{d}^{2}\pi ({\mu }_{kl})}{{d}^{2}{\mu }_{kl}} \le 0\) and

Note that the last term in the numerator is the only term that can be negative. Therefore, the last inequality implies that

For \(b>0\), and \(0\le c\le 1\), this last expression is always valid. However, the validity of this expression forces the inequality:

In particular, for c < 0, we must have: \(b>-c\).

The case b = ‒c in Eq. (30)

On the lower boundary of the valid region R, b = ‒c, c < 0, the Faddy model can be written as:

In this case, the probability mass function of \({\text{N}}_{\text{kl}}\) is under-dispersed. The profile deviance of the lizard data suggested that the minimum deviance is possibly attained at this boundary as b goes to infinity. Thus, we were also interested in the “Faddy model” given by:

Putting model (32) to compete in the profile deviance for the lizard data, turns out to be the optimal model among all models of the form (30).

Log-concavity for models given by Eq. (30)

The proposed Faddy models (30) fitted by maximum likelihood have good properties in the permissible region R. For every data set and for every starting value in this region, the minimization procedures will converge to a global minimum. This statement can be proved by noticing that the “inverse links functions” defined by expression (30) for b and c in R, all have the property of log-concavity. Therefore, as far as maximum likelihood estimation is concerned, a subset of the link functions induced by Faddy models have the same good properties as the usual link functions for binary data (except for the exponential family properties). For parameters outside this region, convergence to the global minimum may or not may be assured; it will depend on the observed binary data and on the initial starting values. The model fitting process was also tried for the lizard data using Faddy models with b < ‒c and values of the deviances were computed but they were highlighted so that their interpretation should be made with caution. To prove the claim that the deviance function

is concave when the inverse links functions \({\pi }_{kl}\) are defined by expression (30), we make use of a result given in Tang and Ye (2020) that the sum of concave functions is concave. Thus, a sufficient condition for the existence of a unique MLE estimator of the log-linear parameters is that \(\mathrm{log}({\pi }_{kl}\left({\mu }_{kl}\right))\) and \(\mathrm{log}(1-{\pi }_{kl}\left({\mu }_{kl}\right))\) be concave. This is equivalent to show that

\(\frac{{\partial }^{2}\mathrm{log}({\pi }_{kl}\left({\mu }_{kl}\right))}{\partial {{\mu }_{kl}}^{2}}\le 0\) and \(\frac{{\partial }^{2}\mathrm{log}({1-\pi }_{kl}\left({\mu }_{kl}\right))}{\partial {{\mu }_{kl}}^{2}}\le 0\)

It can be shown that, for c < 1 and b > 0:

The case c = 1, b > 0 is analogous:

Thus, \(\mathrm{log}(1-{\pi }_{kl}\left({\mu }_{kl}\right))\) is log-concave. Showing log-concavity of \(\mathrm{log}({\pi }_{kl}\left({\mu }_{kl}\right))\) by looking at the sign of \(\frac{{\partial }^{2}\mathrm{log}({\pi }_{kl}\left({\mu }_{kl}\right))}{\partial {{\mu }_{kl}}^{2}}\) is more complex but the resulting expression can be inspected in a similar way as the previous case.

With the exception of the last term in the numerator, all terms are positive for c < 1 and b > 0. Thus, we need to demonstrate that:

One can show that the inequality does not hold for R1 = {c < 0, 0 < b < ‒c} (Region 1). At first glance, it appears that the inequality holds for R2 = {c < 0, b ≥ ‒c} (Region 2), and also for R3 = {0 ≤ c < 1, b > 0} (Region 3). Therefore, numerically maximizing the function f over the Region

always yields a non-positive value. To show that the expression fails over R1, we can demonstrate that it fails in the neighbourhood of \({\mu }_{kl}=0\). It can be shown that the evaluation of f at 0 in the limit and its first two derivatives yields:

By continuity of f and its first two derivatives, there exists \(\varepsilon >0\), such that for \(\varepsilon >{\mu }_{kl}>0\) and \(-c>b\) we have \({f}^{{^{\prime}}{^{\prime}}}\left({\mu }_{kl}\right)>0\), then \({f}^{^{\prime}}\left({\mu }_{kl}\right)>0\) and, consequently, \(f\left({\mu }_{kl}\right)>0.\) However, a necessary condition for log-concavity of \(f\) is that \(f\left({\mu }_{kl}\right)\le 0\) for all \({\mu }_{kl}>0.\) Thus, f fails to be log-concave in R1 = {c < 0, 0 < b < ‒c}. By the same logic we can see that, for Regions 2 and 3, \(f\left({\mu }_{kl}\right)\le 0\) in the neighbourhood of \(0\). Although this is not an analytic proof that the function f(\({\mu }_{kl},b,c)\) over Regions 2 and 3 is always negative, this “proof” by numerical maximization seems sufficient.

Remark

The most extreme function that is not in the “Faddy family” (30) but satisfies (31) is:

We explored this model and we found that it yields a higher likelihood (smaller deviance) than any other model in the permissible region. However, in a “real-world” setting one would not be able to justify this kind of model.

Rights and permissions

About this article

Cite this article

Navarro Alberto, J.A., Manly, B.F.J. & Gerow, K. Extending null scenarios with Faddy distributions in a probabilistic randomization protocol for presence-absence data. Environ Ecol Stat 29, 625–654 (2022). https://doi.org/10.1007/s10651-022-00537-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10651-022-00537-4