Abstract

The aim of this study is to determine teachers’ digital competency on the basis of the European DigCompEdu framework and to examine its relationships with selected demographic and teacher characteristics. It was designed as a cross–sectional survey within the quantitative research paradigm. The sample consisted of 368 (199 male and 169 female) teachers working in a major city located in Central Anatolia, Türkiye, during the 2021–2022 academic year. Data were collected through a questionnaire including the Digital Competencies Scale for Educators and questions regarding teachers’ demographic and professional characteristics such as age, gender, subject taught, educational background, school level and location of employment. The findings reveal that participating teachers are, on average, at the integrator (B1) level of digital competency, and that male teachers, teachers of math and science related courses, those with a postgraduate degree, and those working in metropolitan cities are more digitally competent than their counterparts. Teachers’ digital competency is independent of their age and type of school, whereas it is positively and moderately associated with the number of digital devices they own. Furthermore, a regression model explains 25% of the variance in digital competency, with gender, educational background, subject taught and the number of information technology devices as significant predictors.

1 Introduction

The rapid development and diffusion of technology has opened the door to the information society and the digital age, in which limitations of time and distance are reduced and the use of digital tools and network connections has increased (Zhao, Pinto Llorente & Gomez, 2021). Politicians and academics in the field of education have recognized both the requirements and the promises of such technology–based innovations in learning and teaching (Koc & Demirbilek, 2018). The digitalization of education has led to changes in the labor market, educational standards and outcomes, and the identification of needs, and has prompted a rethinking of the role of teachers and a reorganization of educational processes (Bilyalova et al., 2020). Today, schools are expected to prepare students as digital citizens equipped with the digital skills needed to survive in a highly digitalized society. However, recent studies indicate an alarming gap between the level of digital competency that teachers need to provide such preparation effectively and the level they actually have, and call for further research exploring teachers’ digital skills and developing policies and interventions to improve them (Gordillo et al., 2021).

Despite efforts to improve individuals’ digital competencies, these competencies remain insufficient, which raises the threat of a new digital divide caused not by a lack of access to technology but by an insufficient level of digital competency (Pérez–Escoda et al., 2016). By its nature, digital competency is not acquired simply by using technology; it requires training designed for this purpose. Therefore, if the lack of digital competence is not addressed through effective education programs, this digital divide might affect not only adults but also young people and children (Fernández–Cruz & Fernández–Díaz, 2016; Fraile et al., 2018; Pérez–Escoda et al., 2016). Today’s students, often described as digital natives, use technology intensively in daily life, yet their level of digital competency is not considered adequate (Johnson et al., 2014). Accordingly, educational initiatives and activities that promote digital competence are very important for using today’s media tools in a healthy and beneficial way.

It is crucial for students, as the future generation, to acquire the digital competencies necessary for living, learning and working in this networked society. To achieve this, teachers should have these competencies as well (Toker et al., 2021), because they are seen as key actors with the greatest responsibility for preparing children for the global and digital economy (Yünkül, 2020). Increasing teachers’ digital competencies will in turn enable students to become high–quality digital citizens. From this perspective, it is necessary to examine teachers’ digital competence.

1.1 The concept of digital competency

Digital competency is an emerging concept describing the skills needed to use current technology, and it has been used interchangeably with the concept of digital literacy (Bozkurt et al., 2021; Reisoğlu & Çebi, 2020a). It has a multidimensional and complex structure, is closely related to 21st century skills, and is influenced by socio–cultural issues. As an umbrella term combining previously used concepts such as digital literacy, information literacy and internet literacy, it involves the knowledge, skills, attitudes, strategies and awareness required for performing tasks, solving problems, communicating, managing information, creating and sharing content, and working collaboratively using information and communication technologies (Ilomäki et al., 2016). Digitally competent individuals use and interact with digital technologies confidently, critically and responsibly for learning, working and participating in society (European Commission, 2019). Beyond these definitions, the conceptualization of digital competence is still debated, particularly whether it consists of concrete skills to be developed or of ongoing practices that need to be supported (Zhao, Sanchez Gomez, Pinto Llorente et al., 2021).

Recently, several theoretical frameworks have been proposed to create a common language for determining and examining individuals’ digital competencies (Reisoğlu & Çebi, 2020a). The European Commission first proposed the Digital Competence Framework (DigComp) in 2013 as a roadmap for using and reviewing digital competency, defining its key elements in terms of the necessary knowledge, skills and attitudes (Zhao, Sanchez Gomez, Pinto Llorente et al., 2021). DigComp consists of 21 competencies grouped under five main dimensions: information and data literacy, communication and collaboration, digital content development, security, and problem solving. Vuorikari et al. (2016) expanded it into DigComp 2.0 by producing scenarios suitable for use in the fields of education and employment. Next, Carretero et al. (2017) designed DigComp 2.1 to better understand digital competence for general student employment in Europe. The framework was updated again in 2022, when Vuorikari et al. (2022) developed DigComp 2.2. This revision made adjustments focused on knowledge, skills and attitudes rather than extensive changes.

The first dimension of the DigComp framework, information and data literacy, consists of three sub–competencies related to searching, scanning and filtering; evaluating; and managing information, data and digital content. The second, communication and collaboration, includes six sub–skills related to interacting through digital technologies, sharing information and content, digital citizenship, collaborating through digital technologies, ethics, and digital identity management. The third, digital content development, involves four sub–competencies regarding creating digital content, reorganizing and integrating digital content, copyright and licensing, and programming. The fourth, security, contains four sub–competencies concerning the protection of devices, personal data and privacy, physical and psychological health, and the environment. The last one, problem solving, comprises four sub–skills related to solving technical problems, identifying technological needs and solutions, creatively using digital technologies, and identifying digital competence requirements (Reisoğlu & Çebi, 2020a; Vuorikari et al., 2016).

1.2 The European DigCompEdu framework

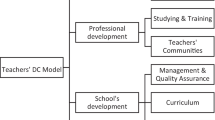

Based on the DigComp framework, the European Framework for the Digital Competence of Educators (DigCompEdu) was developed to improve the digital competencies of educators in Europe (Redecker, 2017). As one of the most comprehensive and up–to–date models for today’s teachers, DigCompEdu consists of 22 competencies organized in six dimensions. The first, professional engagement (PE), comprises four sub–competencies: professional interaction, professional collaboration, reflective practice, and continuing professional digital development. The second, digital resources (DR), involves three sub–skills: selecting, creating and editing, and managing, protecting and sharing digital resources. The third, teaching and learning (TL), contains four sub–competencies: teaching, guidance, collaborative learning, and self–regulated learning. The fourth, assessment (A), includes three sub–skills: assessment strategies, analysis of findings, and feedback. The fifth, empowering learners (EL), details three sub–competencies: access and participation, differentiation and individualization, and active engagement. The last dimension, facilitating learners’ digital competence (FLDC), encompasses five sub–skills: information and media literacy, digital communication and collaboration, creation of digital content, responsible use, and digital problem solving (Redecker, 2017). The DigCompEdu framework has provided a theoretical basis for developing measurement tools, conducting research studies, and designing educational policies and training programs to explore and enhance teachers’ digital proficiency levels.

The DigCompEdu framework was created to structure personalized education plans and expand their features internationally, highlighting the need for investment in technology and the potential for personal development and social inclusion through digital technologies (Cabero–Almenara et al., 2021b; Llorente–Cejudo et al., 2023). It has been applied for self–reflection and self–perception among educators in various countries, demonstrating its importance in both assessing and developing digital competence (Cabero–Almenara et al., 2023; Muammar et al., 2023; Toker et al., 2021). Moreover, it has been employed to develop and validate assessment tools, training programs and MOOCs to improve educators’ digital competence, highlighting its practical importance in educational settings (Cabero–Almenara et al., 2023; Cabero–Almenara, Barragán–Sánchez, Cabero–Almenara et al., 2021a, b, c, d; Palacios–Rodríguez et al., 2022). The framework has also been instrumental in shaping the digital competencies of educators at almost all levels, including pre–university teachers and university educators (Cabero–Almenara, Barragán–Sánchez, Cabero–Almenara et al., 2021a, b, c, d; Esteve–Mon et al., 2022). Experts have recognized it as the most appropriate framework for teaching digital competence (Cabero–Almenara, Gutiérrez–Castillo et al., 2021). Furthermore, it has been used to identify factors that increase students’ digital competence and to differentiate digital inequalities at the societal level, highlighting its broader impact on education and society (Barboutidis & Stiakakis, 2023). Overall, the DigCompEdu framework plays a crucial role in guiding the development, assessment, and enhancement of educators’ digital competence, contributing to the quality of education and the effective integration of digital technologies in teaching and learning.

1.3 Overview of the related literature

Following the emergence of both the concept of digital competency and the relevant frameworks, research on the status of teachers and teacher candidates in this respect has been carried out in recent years. The COVID–19 pandemic and its significant impact on education have made digital competence a hot topic and accelerated relevant research and publication (Wang & Si, 2023). A recent meta–analysis of previous studies underlined the importance of digital competence as one of the challenges facing teachers today and the need for relevant teacher training (Fernandez–Batanero et al., 2022). Another literature review comparing assessment tools for digital competence based on DigComp and related frameworks discussed how the collected data were analyzed and used in the studies (Mattar et al., 2022). A further systematic review highlighted that most studies employed the DigComp framework as both a conceptual and an evaluation reference and focused on participants’ perceptions and levels of digital competency (Zhao, Pinto Llorente, & Sanchez Gomez, 2021). A recent review by Heine et al. (2023) analyzed definitions of digital resources as a scientific term, compared aspects of digital resources, and drew conclusions on defining digital resources as an aspect of teachers’ professional digital competence. Another recent review concluded that a growing number of studies aim to explain the acquisition of digital competency, particularly through external factors such as demographics, psychological traits, teaching practices, and organization (Saltos–Rivas et al., 2023).

A group of descriptive studies focused on the level of digital competence and its relations with personal factors. For instance, Arslan (2019) found that teachers working in primary and secondary schools in Türkiye had a high level of digital competency, which was independent of gender and education level but dependent on tenure, with teachers having less than ten years of experience being more competent than those having more than twenty years of experience. Kaya (2020) indicated that the digital competency level of Turkish teacher candidates was moderate and that males were more competent than females. Erol and Aydın (2021) showed that Turkish language teachers had a high competency level on average, which differed significantly in favor of those who were younger, less senior, and more frequent technology users. Aslan (2021) found that elementary school and social studies pre–service teachers’ digital literacy self–efficacy levels varied by gender in favor of males, by subject in favor of elementary school teaching, and by technology ownership in favor of those having a computer and internet at home. Fidan and Cura Yeleğen (2022) revealed that the digital competence of teachers in public schools located in the Western region of Türkiye differed significantly across gender, seniority, subject taught, internet usage time, and Web 2.0 tool use. According to their qualitative data, teachers mostly expressed a lack of implementation and practice regarding their digital needs.

Similar studies were conducted in other countries as well. Diz–Otero et al. (2022) found that the digital competence of secondary school teachers in Spain was low and that teachers with undergraduate and master’s degrees and those in the field of social sciences were more knowledgeable in digital content creation than their counterparts. Based on the DigCompEdu framework, Ghomi and Redecker (2019) developed a self–assessment tool to measure teachers’ digital competence and applied it to a group of German teachers. They found significant differences between non–STEM and STEM teachers, between non–computer science and computer science teachers, and between teachers with negative attitudes toward the benefits of technologies and those with neutral or positive attitudes, in favor of the latter group in each case. Moreover, teachers experienced in integrating technology into classroom teaching reported higher scores than their peers. McGarr and McDonagh (2021) surveyed recent entrants into teacher education programs in Ireland and reported a low level of skills in the use of digital technologies other than social media, together with quite positive perceptions of digital technology use in education. A qualitative study on Indonesian elementary school teachers reported an inadequate level of digital literacy to support students’ needs and characteristics (Atmojo et al., 2022). Zakharov et al. (2021) calculated digital literacy indices and a digital competency index for Russian teachers using a diagnostic tool developed on the basis of the DigComp 2.0 framework. Their results demonstrated that participating teachers had an average level of competency, being most advanced in the digital content and assessment dimensions and weakest in the digital technology and resource management dimensions. Furthermore, teachers aged 35–49 and those teaching math and computer science courses reported higher digital competency scores than their counterparts.

Using the DigCompEdu framework and its assessment criteria, Lucas and Bem–Haja et al. (2021) found gender and age differences in the digital competency of in–service teachers in Portugal, in favor of male and younger teachers. Tzafilkou et al. (2023) revealed insufficient digital competence among teachers in Greece, with primary school teachers scoring low in professional development and learner support, and female teachers scoring low in innovative education and school improvement but higher in professional development.

There are also studies on the digital competence of university educators. For example, a study on higher education teachers in Spain revealed that their level of digital teaching competence, as measured by the DigCompEdu questionnaire, was basic to intermediate, with digital resources being the most advanced dimension, and that younger teachers and those more experienced in technology use were more competent than others (Cabero–Almenara et al., 2021). In another study, the digital competence of university professors in Spain was found to be moderate according to the DigCompEdu framework, with male professors in the fields of Architecture and Forensic and Social Sciences and female professors in the fields of Judicial and Social Sciences having the highest levels compared to other fields (Cabero–Almenara, Guillén–Gámez, Cabero–Almenara et al., 2021a, b, c, d). Yazon et al. (2019) found that faculty members at a Philippine university were competent in processing and collecting data through digital technologies but had limited skills in understanding digital applications, finding information, using information, and creating knowledge. They also found that educators’ digital competence, assessed with the DigCompEdu tool, was only satisfactory in teaching and learning, assessing learning, empowering students, and facilitating students’ digital competence. Muammar et al. (2023) showed that most faculty members in higher education institutions in the United Arab Emirates (UAE) were digitally competent in all dimensions of the DigCompEdu framework.

Some studies demonstrated that teachers’ or candidates’ digital competency level was positively correlated with affective and contextual factors including self–efficacy for technology integration (Kaya, 2020), motivation to use technology in teaching practices (Guillen–Gamez et al., 2020), the number of digital tools used in teaching (Ghomi & Redecker, 2019; Lucas and Bem–Haja et al., 2021), opinions about distance education and pre–college school memories (Polat, 2021), tendency toward lifelong learning (Gökbulut, 2021), positive management, management’s development support, and teacher educators’ self–reported pedagogical efficacy (Instefjord & Munthe, 2017), beliefs about ease of technology use (Lucas and Bem–Haja et al., 2021), the perceived usefulness of technology in teaching (Antonietti et al., 2022), student teaching and learning process and digital competence improvement in student learning (Nunez–Canal et al., 2022), and supportive professional development and school change progress development (Cattaneo et al., 2022).

A small number of studies proposed interventions for developing teachers’ digital competency and/or tested them using case study or experimental research designs. For example, Reisoğlu and Çebi (2020b) designed and implemented a 70–hour training program for pre–service teachers based on the DigComp and DigCompEdu frameworks. They found that training should be given in communication and collaboration, digital content creation, and safety, and should cover the professional engagement, digital resources, teaching–learning, assessment, and empowering learners areas. Their findings suggest that such training courses should include collaborative study, role modeling and theory–practice connection. Pongsakdi et al. (2021) investigated the impact of digital pedagogy training on Finnish in–service teachers’ attitudes towards digital technologies and reported that teachers who had low confidence in technology use before the training showed increased confidence afterwards. Adopting DigCompEdu as both a theoretical reference and a self–assessment approach, Lucas and Dorotea et al. (2021) developed three continuous professional development sessions and tested their contribution to improving Portuguese teachers’ digital competence. A comparison of pre–test and post–test measures showed significant improvements in all proficiency areas. Çebi and Reisoğlu (2019) designed an educational activity based on the DigComp framework and implemented it with Turkish pre–service teachers. Their results indicated increases in participants’ post–training scores compared to their pre–training scores. A qualitative study examined Norwegian teachers’ experiences of participating in professional development events focusing on sharing pedagogical ideas about the use of digital technology in teaching and learning. The interview data revealed that participants considered these events helpful for fostering their digital competence and transformative digital agency (Brynildsen et al., 2022).

1.4 Purpose of the study

The review of related literature reveals that the majority of previous studies are grounded in the European Commission’s policies and frameworks (i.e., DigComp or DigCompEdu), indicating that these frameworks are widely accepted in both the conceptualization and the measurement of teachers’ digital competency within the scientific and academic community. However, at the time this study was designed, no study had examined teachers’ digital competency in Türkiye on the basis of such frameworks. Considering that Türkiye is a strategic partner of and candidate country for the European Union (EU) and has been making important reforms in this context, it is crucial to conduct research on this issue with reference to European definitions and assessment perspectives. The EU Council sets a number of chapters/benchmarks and requires candidate countries to fulfill them during the membership negotiation process. Türkiye’s EU accession process has been conducted in 35 chapters covering almost every area of social life, such as science and research, information society and media, education and culture, statistics, and intellectual property. Exploring teachers’ digital competence according to the DigCompEdu framework is therefore expected not only to reveal the current situation and compare it with European standards but also to contribute to efforts toward fulfilling those EU benchmarks.

Such a study may also help teachers and educational policy makers in Türkiye become aware of European standards for educators’ digital competence, thus giving teachers the opportunity to self–evaluate within this context. This may provide important information, especially about Turkish teachers’ professional development needs, and contribute to the development and implementation of related in–service training. Any enhancement in teachers’ digital competence will in turn contribute to the use of digital technologies in teaching and learning activities in schools. Such improvements will hopefully help students gain the knowledge and skills they need to sustain themselves in a digitalizing society. Furthermore, beyond the Turkish educational context, this study has the potential to contribute to the international field of teacher education, as it brings research evidence from a developing and socio–culturally distinct country. It is well known that technology integration and digital education are influenced by contextual factors such as curriculum, educational system, worldviews, and cultural and social norms, which highlights the significance of conducting research in different contexts.

Based on the abovementioned rationale, the present study aims to investigate the level of digital competency of Turkish teachers on the basis of the DigCompEdu framework and its relationships with selected demographic and teacher characteristics. To fulfill this purpose, the following research questions were posed:

1. What is the digital competence level of teachers on the DigCompEdu assessment scale?
2. Does teachers’ digital competency differ significantly by, or is it significantly associated with, gender, age, subject taught, educational background, school level, location of employment, and number of information technology devices owned?
3. To what extent do demographic and teacher characteristics predict teachers’ digital competency?

2 Method

2.1 Research design

Since this study explores the current state of teachers’ digital competence, it was designed as a cross–sectional survey within the quantitative research paradigm. The survey model is a popular research design in education; it is a non–experimental design that researchers use to describe the attitudes, ideas, behaviors, or characteristics of a group (Creswell, 2012; Şen & Yıldırım, 2019). Survey designs also allow relations among variables to be examined through correlational or comparative analyses (Karasar, 2008), which makes them suitable for exploring teachers’ digital competence according to demographic and teacher characteristics.

2.2 Participants

The population of this research consists of teachers working in public schools in a major city located in Central Anatolia, Türkiye, during the 2021–2022 academic year. A sample of 368 teachers was recruited from this population using non–probability convenience sampling. An online questionnaire was developed and distributed to all teachers employed in the city with an invitation to take part in the study. The participants were thus those teachers who voluntarily completed the questionnaire between February and April 2022.

Of the participants, 199 (54%) were male and 169 (46%) were female. Their ages ranged from 22 to 65, with a mean age of 37.40 years (SD = 8.04). The number of information technology devices they owned ranged from 1 to 8, with a mean of 2.91 devices (SD = 1.25). By school level, 18% worked in primary schools (grades 1 to 4), 50% in secondary schools (grades 5 to 8) and 32% in high schools (grades 9 to 12). A small proportion of teachers were employed in villages (12%), while the remainder were employed in counties (43%) and the city center (45%). The majority (76%) held an undergraduate degree, whereas the others (24%) held a postgraduate degree. Regarding subject (i.e., the type of courses taught in the schools), 30% taught math and science related courses (Math, Physics, Chemistry, Biology, and Information Technology), 37% taught social courses (Turkish Language, Social Sciences, History, Geography, Philosophy, Foreign Languages), 14% taught vocational and art related courses (Visual/Fine Arts, Music, Vocational Training, Sport) and 19% taught primary and special education related courses (Classroom Teaching, Guidance and Counseling).

2.3 Instruments

The online questionnaire form used for data collection consisted of two parts. The first included questions regarding teachers’ demographic and professional characteristics such as age, gender, subject, educational background, school level, location of employment, and the number of information technology devices owned. Age and number of devices owned were measured as continuous variables via open–ended questions, while the others were operationalized as categorical variables through multiple–choice items. The second part of the form measured teachers’ digital competence through the Digital Competency Scale for Educators (DCSE), also known as the DigCompEdu Check–In, which was originally developed by the European Commission Joint Research Centre based on the DigCompEdu framework (Redecker, 2017) and adapted into Turkish by Toker et al. (2021). The DCSE contains 22 items under six dimensions: professional engagement (PE, 4 items), digital resources (DR, 3 items), teaching and learning (TL, 4 items), assessment (A, 3 items), empowering learners (EL, 3 items), and facilitating learners’ digital competence (FLDC, 5 items). Item values range from 0 to 4 points and are summed to calculate dimension and total scores. The scoring criteria in Table 1 are used to evaluate the competency levels for each dimension and for the scale overall.
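The summing rule above can be illustrated with a short Python sketch. It assumes, purely for illustration, that a respondent's 22 answers are stored in a list grouped by dimension in the order PE, DR, TL, A, EL, FLDC; the names `DIMENSIONS`, `score_dcse` and `MAX_SCORES` are hypothetical, and the level cut–offs of Table 1 are deliberately not encoded.

```python
# Hypothetical layout of the 22 DCSE items: (dimension, number of items),
# assumed to appear in this order within a respondent's answer list.
DIMENSIONS = [("PE", 4), ("DR", 3), ("TL", 4), ("A", 3), ("EL", 3), ("FLDC", 5)]
N_ITEMS = sum(n for _, n in DIMENSIONS)  # 22


def score_dcse(responses: list[int]) -> dict[str, int]:
    """Sum 0-4 item values into six dimension scores plus a total score."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    if any(not 0 <= r <= 4 for r in responses):
        raise ValueError("item values must lie between 0 and 4")

    scores: dict[str, int] = {}
    start = 0
    for name, n in DIMENSIONS:
        scores[name] = sum(responses[start:start + n])  # items of this dimension
        start += n
    scores["Total"] = sum(responses)
    return scores


# Maximum attainable scores (4 points per item).
MAX_SCORES = {name: 4 * n for name, n in DIMENSIONS}
MAX_SCORES["Total"] = 4 * N_ITEMS  # 88


if __name__ == "__main__":
    made_up_respondent = [2] * N_ITEMS  # illustrative answers, not study data
    print(score_dcse(made_up_respondent))
    print(MAX_SCORES)
```

For the made–up respondent who answers 2 on every item, the sketch yields a total of 44 out of a possible 88; the dimension maxima range from 12 (DR, A, EL) to 20 (FLDC).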

According to Redecker (2017), the PE dimension refers to educators’ ability to use digital tools to improve teaching and professional interactions with stakeholders, individual professional development, collective well–being, and continuous innovation in education. It has four sub–competencies: organizational communication, professional collaboration, reflective practice, and digital continuous professional development. The second dimension, DR, concerns the effective identification of the resources most appropriate to learning objectives, student groups, and learning styles. It consists of three sub–competence areas: selecting digital resources, creating and organizing digital resources, and managing, protecting, and sharing digital resources. The TL dimension evaluates the ability to effectively organize the use of digital technologies in different stages and settings of the learning process and has four sub–competencies: teaching, guidance, collaborative learning, and self–regulated learning. The fourth dimension, A, focuses on using digital tools to enhance existing assessment strategies and facilitate decision–making, with three sub–competence areas: assessment strategies, analyzing findings, and feedback and planning. The EL dimension involves the ability to employ digital technologies to improve students’ active participation and the personalization of learning. It has three sub–competencies: accessibility and inclusion, differentiation and personalization, and actively engaging learners. The last dimension, FLDC, emphasizes five sub–competence areas: information and media literacy, digital communication, digital content production, responsible use, and digital problem solving.

2.4 Data analysis

The collected data were first subjected to validity and reliability analyses. Since the DCSE is based on the European DigCompEdu theoretical framework, confirmatory factor analysis (CFA), a theory–driven technique, was preferred to validate the DCSE in the context of Turkish teachers. IBM SPSS Amos 22 software was used to perform the CFA and to assess the reliability, convergent validity and discriminant validity of the DCSE. Both absolute and incremental goodness–of–fit indices were used for model assessment. First, the chi–square statistic (χ2) was computed, as it is the traditional approach to testing model fit. Since χ2 is known to be sensitive to large samples and complex models, the χ2/df ratio was also calculated, and values less than 3 were considered to indicate good model fit (Kline, 2005). In addition, the following fit indices were employed: the Comparative Fit Index (CFI), the Tucker–Lewis Index (TLI), the Root Mean Square Error of Approximation (RMSEA), and the Standardized Root Mean Square Residual (SRMR). CFI and TLI values greater than 0.95 and RMSEA and SRMR values equal to or less than 0.05 were considered to indicate a good fit (Hair et al., 2010).
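The χ2/df ratio and RMSEA can be recomputed from the χ2, df and sample size reported in Section 3.1 as a quick arithmetic check. The sketch below uses one common point–estimate formula for RMSEA, sqrt(max(χ2 − df, 0) / (df(N − 1))); software packages may differ slightly (for example N versus N − 1), so this is an assumption rather than a description of how Amos computes it. The cut–offs are those stated above, and the function names are illustrative.

```python
import math


def chi2_df_ratio(chi2: float, df: int) -> float:
    """Normed chi-square; values below 3 were read as good fit in this study."""
    return chi2 / df


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of RMSEA using the common (N - 1) formulation (assumed)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def meets_cutoffs(ratio: float, cfi: float, tli: float,
                  rmsea_value: float, srmr: float) -> dict[str, bool]:
    """Apply the good-fit cut-offs listed in the data analysis section."""
    return {
        "chi2/df < 3": ratio < 3,
        "CFI > 0.95": cfi > 0.95,
        "TLI > 0.95": tli > 0.95,
        "RMSEA <= 0.05": rmsea_value <= 0.05,
        "SRMR <= 0.05": srmr <= 0.05,
    }


if __name__ == "__main__":
    # Values reported for the six-dimension, 22-item model (N = 368).
    chi2, df, n = 296.82, 190, 368
    ratio = chi2_df_ratio(chi2, df)
    r = rmsea(chi2, df, n)
    print(f"chi2/df = {ratio:.2f}, RMSEA = {r:.3f}")
    print(meets_cutoffs(ratio, cfi=0.97, tli=0.97, rmsea_value=r, srmr=0.036))
```

With N = 368 the sketch prints chi2/df = 1.56 and RMSEA = 0.039, matching the reported values, and every cut–off check evaluates to True. The `max(..., 0)` guard sets RMSEA to zero whenever χ2 falls below its degrees of freedom.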

Descriptive statistics were first calculated to summarize all variables, and inferential analyses were then conducted to examine relationships among the variables. Preliminary inspection of the data with graphs and skewness coefficients indicated no serious violations of normality, supporting the use of parametric statistics. Hence, group differences in DCSE scores by categorical variables (e.g., gender, subject) were examined with independent samples t–tests and analysis of variance (ANOVA), and relationships between DCSE scores and continuous variables (e.g., age, number of technology devices owned) were explored through Pearson correlation coefficients. Finally, a multiple regression analysis was conducted to predict DCSE scores from a set of demographic and teacher characteristics.
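The normality screen described here, and the ±1 rule of thumb for skewness and kurtosis applied in Section 3.5, can be run as a quick check. The sketch below uses `scipy.stats.skew` and `scipy.stats.kurtosis` on simulated (not study) data; the helper name and the default cut–off of 1 are illustrative choices.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def normality_screen(x, cutoff=1.0):
    """Return skewness, excess kurtosis, and whether both lie within +/- cutoff.

    Uses bias-corrected sample estimates; excess kurtosis (fisher=True) is 0
    for a normal distribution.
    """
    s = float(skew(x, bias=False))
    k = float(kurtosis(x, fisher=True, bias=False))
    return s, k, abs(s) <= cutoff and abs(k) <= cutoff


rng = np.random.default_rng(0)
simulated_totals = rng.normal(loc=41.5, scale=17.0, size=368)  # hypothetical data
skewed_scores = rng.exponential(scale=10.0, size=2000)         # clearly non-normal
print(normality_screen(simulated_totals))
print(normality_screen(skewed_scores))
```

Such a screen only flags gross departures; the graphical inspection mentioned above remains the complementary check.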

3 Results

3.1 Psychometric properties of the DCSE

The results of CFA with the maximum likelihood method for the measurement model of the DCSE are shown in Fig. 1, which shows that the six–dimension, 22–item model fits the data well (χ² = 296.82, df = 190, p < .01, χ²/df = 1.56, CFI = 0.97, TLI = 0.97, RMSEA = 0.039, SRMR = 0.036). Standardized factor loadings for all items, correlations among the dimensions, average variance extracted (AVE), maximum shared variance (MSV), composite reliability (CR), and Cronbach’s alpha for all dimensions were computed to examine the construct validity and reliability of the DCSE. The CR values were equal to or above the benchmark value of 0.70, ranging from 0.70 to 0.87, and Cronbach’s alpha coefficients ranged from 0.66 to 0.87, indicating acceptable reliability (Hair et al., 2010; Nunnally & Bernstein, 1994). The standardized factor loadings ranged from 0.53 to 0.83, above the recommended value of 0.50 (Hair et al., 2010), and their t values were greater than 1.96 (p < .01). This indicates that all items load adequately on their corresponding dimensions and thus supports convergent validity. The AVE values were 0.46, 0.45, 0.40 and 0.49 for PE, DR, TL and EL, and 0.58 and 0.57 for A and FLDC, respectively. Although all AVE values were lower than their corresponding CR values, four of the six fell slightly below the threshold value of 0.50 (Hair et al., 2010), weakening convergent validity. According to Fornell and Larcker (1981), however, convergent validity is still acceptable if an AVE value is below 0.50 but its corresponding CR value is above 0.60. Discriminant validity is established if the square root of the AVE for a construct is higher than its correlations with the other constructs (Fornell & Larcker, 1981). None of the square roots of the AVE values exceeded the absolute values of the corresponding inter–dimension correlations, which ranged from 0.76 to 0.97, indicating that the model did not achieve the required discriminant validity. This was also evidenced by the finding that the AVE values for all dimensions were less than their respective MSV values, which varied between 0.90 and 0.95 (Hair et al., 2010).
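The reliability and validity quantities above follow standard formulas based on standardized loadings λ: CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), AVE = Σλ² / n, and MSV as the largest squared correlation with another dimension. The Python sketch below uses hypothetical loadings within the reported 0.53–0.83 range (not the study’s actual item loadings) to show how an AVE below 0.50 can coexist with a CR above 0.70 while the Fornell–Larcker criterion fails against correlations of the size observed here. All helper names are hypothetical.

```python
import math


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error_variance = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def max_shared_variance(correlations):
    """MSV = largest squared correlation with any other dimension."""
    return max(r ** 2 for r in correlations)


def fornell_larcker_ok(ave, correlations):
    """Fornell-Larcker: sqrt(AVE) must exceed every |r| with other dimensions."""
    return all(math.sqrt(ave) > abs(r) for r in correlations)


loadings = [0.70, 0.65, 0.72, 0.60]  # hypothetical standardized loadings
correlations = [0.76, 0.97]          # illustrative inter-dimension correlations
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(round(cr, 2), round(ave, 2))              # 0.76 0.45
print(fornell_larcker_ok(ave, correlations))    # False
print(max_shared_variance(correlations) > ave)  # True
```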

Further inspection of the CFA outputs in Fig. 1 showed that the standardized covariances (i.e., correlations) among the dimensions varied from 0.76 to 0.97, suggesting that the dimensions were strongly correlated with each other and hence did not function as clearly separate constructs. This offers a further explanation for the weak discriminant validity. According to Brown (2006), such a pattern of correlations among first–order factors indicates the potential of a second–order factor accounting for their intercorrelations. Therefore, another CFA was conducted to test a second–order model of the DCSE, and its results are given in Fig. 2. The goodness–of–fit indices suggested a well–fitting model (χ² = 312.92, df = 199, p < .01, χ²/df = 1.57, CFI = 0.97, TLI = 0.97, RMSEA = 0.039, SRMR = 0.03). A Chi–square difference test (χ²diff) was conducted to test whether imposing the second–order model (Fig. 2) resulted in a statistically significant decrease in fit relative to the first–order model (Fig. 1). The result was not significant (χ²diff = 16.10, dfdiff = 9, p > .05), supporting retention of the second–order model as a more parsimonious representation of the data. Furthermore, the CR and Cronbach’s alpha values for the second–order factor were 0.97 and 0.94, respectively, both above the benchmark value of 0.70 and thus indicating good reliability (Hair et al., 2010; Nunnally & Bernstein, 1994). The standardized loadings of the first–order factors on the second–order factor were also substantial, ranging from 0.83 to 0.99 (p < .01), and the AVE value for the second–order factor (0.85) was well above the threshold value of 0.50 and less than its CR (0.97), suggesting good convergent validity (Hair et al., 2010).
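The χ² difference test compares nested models by referring the difference in χ² to a χ² distribution whose degrees of freedom equal the difference in df. A minimal SciPy sketch follows (the helper name is hypothetical). It assumes plain maximum likelihood χ² values, as reported here; scaled statistics such as Satorra–Bentler would require a corrected difference test instead. With the reported values it gives χ²diff = 16.10 on 9 df, with p between .05 and .10, in line with the non–significant result above.

```python
from scipy.stats import chi2


def chi2_difference_test(chi2_restricted, df_restricted, chi2_free, df_free):
    """Chi-square difference (likelihood-ratio) test for nested ML models.

    The restricted model (here the second-order model) has more df.
    """
    d_chi2 = chi2_restricted - chi2_free
    d_df = df_restricted - df_free
    p = chi2.sf(d_chi2, d_df)  # upper-tail probability
    return d_chi2, d_df, p


# Second-order (restricted) vs first-order (free) model, values from the text
d_chi2, d_df, p = chi2_difference_test(312.92, 199, 296.82, 190)
print(round(d_chi2, 2), d_df, round(p, 3))
```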

Overall, considering both CFA findings together, it was concluded that the first–order model fitted the data well and showed acceptable reliability and convergent validity but did not show adequate discriminant validity. The second–order model, on the other hand, showed both good fit indices and good validity properties. Together with the high correlations among the first–order factors, these findings suggest that digital competence is better assessed as a more general (i.e., one–factor or unidimensional) construct. Consequently, in the remainder of this study, the DCSE dimension scores are reported for descriptive purposes only, and the DCSE total score is used for inferential analyses to reduce possible risks in hypothesis testing (e.g., inflated Type I error, multicollinearity).

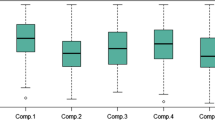

3.2 Descriptive statistics and correlations of the DCSE

The mean and standard deviation of each dimension score, as well as of the DCSE total score, are presented in Table 2. On average, participating teachers were at the explorer (A2) level in the PE (Mean = 7.40, SD = 3.58), TL (Mean = 7.38, SD = 3.62) and A (Mean = 5.45, SD = 2.61) dimensions, and at the integrator (B1) level in the DR (Mean = 6.21, SD = 2.76), EL (Mean = 5.83, SD = 2.77) and FLDC dimensions. In terms of DCSE total scores, participants were on average at the integrator (B1) level of digital competency (Mean = 41.49, SD = 17.07). Individual digital competency levels based on the DCSE total score were distributed as follows: 7% newcomer (A1), 30% explorer (A2), 33% integrator (B1), 20% expert (B2), 7% leader (C1), and 3% pioneer (C2).
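Mapping total scores to proficiency levels and tabulating their distribution is a simple banding step. The cut–off points are not listed in this section; the bands below (A1 below 20, A2 20–33, B1 34–49, B2 50–65, C1 66–80, C2 above 80 on a 0–88 total) are those commonly reported for the DigCompEdu Check–In and are an assumption here, to be checked against the scoring guide of the DCSE version actually used. Function names are hypothetical.

```python
from collections import Counter

# ASSUMED DigCompEdu Check-In bands for the 0-88 total score (upper bound, label)
LEVEL_BANDS = [
    (19, "A1 Newcomer"),
    (33, "A2 Explorer"),
    (49, "B1 Integrator"),
    (65, "B2 Expert"),
    (80, "C1 Leader"),
    (88, "C2 Pioneer"),
]


def proficiency_level(total):
    """Return the proficiency label for a DCSE total score in 0-88."""
    if not 0 <= total <= 88:
        raise ValueError("total score must lie in 0-88")
    for upper, label in LEVEL_BANDS:
        if total <= upper:
            return label


def level_distribution(totals):
    """Percentage of respondents at each level, rounded to whole percents."""
    counts = Counter(proficiency_level(t) for t in totals)
    return {label: round(100 * counts[label] / len(totals)) for _, label in LEVEL_BANDS}


print(proficiency_level(41.49))  # B1 Integrator
print(level_distribution([10, 25, 40, 40]))
```

Under these assumed bands, the reported mean total of 41.49 falls in the integrator (B1) band, consistent with the text.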

Table 2 also shows the Pearson product–moment correlation coefficients between the DCSE dimension scores. The coefficients, ranging between 0.56 and 0.75 (p < .01), revealed that the dimension scores were positively and strongly associated with each other: as teachers’ digital competency level in any dimension increased, their competency levels in the other dimensions tended to increase as well.

3.3 Comparison/association of DCSE scores across/with demographic characteristics

An independent samples t–test was conducted to compare the DCSE total scores of male and female teachers (Table 3). There was a significant gender difference in DCSE total scores [t(366) = 3.84, p < .01], with males (Mean = 44.50, SD = 17.9) reporting higher scores than females (Mean = 37.90, SD = 15.3). The magnitude of the difference in the means was small (Eta squared = 0.04). Another independent samples t–test was carried out to compare teachers’ DCSE total scores across their educational background (Table 3). The findings indicated a significant difference [t(366) = 3.71, p < .01], with graduates of postgraduate programs (Mean = 47.95, SD = 19.4) having higher scores than graduates of four–year undergraduate programs (Mean = 39.48, SD = 15.8). Educational background had a moderate effect on digital competence (Eta squared = 0.10).
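For an independent samples t–test, eta squared is commonly obtained from the t statistic and its degrees of freedom as η² = t² / (t² + df) (e.g., Pallant, 2007). The short Python sketch below (hypothetical helper name) applies this to the gender comparison reported above.

```python
def eta_squared_from_t(t, df):
    """Eta squared for an independent samples t-test: t^2 / (t^2 + df)."""
    return t ** 2 / (t ** 2 + df)


# Gender comparison reported above: t(366) = 3.84
eta_gender = eta_squared_from_t(3.84, 366)
print(round(eta_gender, 2))  # 0.04
```

Under the guidelines reproduced by Pallant (2007), values of 0.01, 0.06 and 0.14 correspond to small, moderate and large effects.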

Pearson product–moment correlation coefficients were calculated to determine whether teachers’ DCSE total scores were associated with age and with the number of information technology devices owned. Teachers’ DCSE total scores were significantly and moderately correlated with the number of devices owned (r = .46, p < .01) but were not significantly correlated with age (r = –.01, p > .05). Teachers with a higher number of technology devices tended to be more digitally competent.
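The significance of a Pearson correlation follows from t = r√(n − 2) / √(1 − r²) with n − 2 degrees of freedom, which is equivalent to the test `scipy.stats.pearsonr` performs on raw data. The sketch below applies it to the two reported coefficients with n = 368; the helper name is hypothetical.

```python
import math

from scipy.stats import t as t_dist


def pearson_r_test(r, n):
    """Two-sided t test of H0: rho = 0 for a Pearson correlation r from n cases."""
    df = n - 2
    t_stat = r * math.sqrt(df) / math.sqrt(1 - r ** 2)
    p = 2 * t_dist.sf(abs(t_stat), df)
    return t_stat, p


for label, r in [("devices owned", 0.46), ("age", -0.01)]:
    t_stat, p = pearson_r_test(r, 368)
    print(label, round(t_stat, 2), p < 0.01)
```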

3.4 Comparison of DCSE scores across teacher characteristics

A one–way between–groups ANOVA was conducted to examine whether participating teachers’ DCSE total scores differed according to the subject they teach. Since the homogeneity of variance assumption was not met, the Welch correction was applied. As seen in Table 4, there was a significant difference by subject in DCSE total scores [Welch F(3, 152) = 7.01, p < .01]. Post–hoc comparisons using Dunnett’s C test indicated that participants teaching math and science related courses (Mean = 47.8, SD = 19.08) reported significantly higher scores than those teaching social courses (Mean = 39.45, SD = 14.56), vocational and art courses (Mean = 39.42, SD = 18.39), and primary and secondary courses (Mean = 36.94, SD = 14.69). The effect size, calculated as Eta squared, was 0.06, indicating modest actual mean differences among the subject groups.
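Welch’s ANOVA replaces the pooled error term with precision weights w_i = n_i / s_i², which is why it remains valid when group variances differ, as they did for the subject comparison. The NumPy/SciPy sketch below computes it from group means, SDs and sizes. The means and SDs come from the paragraph above, but the group sizes are hypothetical placeholders, so the printed F will not match the reported Welch F(3, 152) = 7.01; the Dunnett’s C post–hoc step is not covered. The last lines check the implementation against `scipy.stats.ttest_ind(..., equal_var=False)`, since for two groups Welch’s F equals the squared Welch t.

```python
import numpy as np
from scipy.stats import f as f_dist
from scipy.stats import ttest_ind


def welch_anova(means, sds, ns):
    """Welch's one-way ANOVA for unequal variances, from group summary statistics.

    Returns (F, df1, df2, p).
    """
    means, sds, ns = (np.asarray(a, dtype=float) for a in (means, sds, ns))
    k = len(means)
    w = ns / sds ** 2                      # precision weight of each group mean
    w_sum = w.sum()
    grand = (w * means).sum() / w_sum      # precision-weighted grand mean
    between = (w * (means - grand) ** 2).sum() / (k - 1)
    lam = ((1 - w / w_sum) ** 2 / (ns - 1)).sum()
    f_stat = between / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    df1, df2 = k - 1, (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2, f_dist.sf(f_stat, df1, df2)


# Means and SDs from the text; group sizes are HYPOTHETICAL (actual ns are in Table 4)
print(welch_anova(means=[47.80, 39.45, 39.42, 36.94],
                  sds=[19.08, 14.56, 18.39, 14.69],
                  ns=[100, 95, 70, 103]))

# Sanity check: with two groups, Welch's F equals the squared Welch t statistic
rng = np.random.default_rng(1)
g1, g2 = rng.normal(45, 18, 60), rng.normal(38, 15, 80)
F, df1, df2, p = welch_anova([g1.mean(), g2.mean()],
                             [g1.std(ddof=1), g2.std(ddof=1)], [60, 80])
res = ttest_ind(g1, g2, equal_var=False)
print(np.isclose(F, res.statistic ** 2), np.isclose(p, res.pvalue))
```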

Another one–way between–groups ANOVA was performed for the school level at which teachers were working during this study (Table 4). There was no significant difference [F(2, 365) = 1.32, p > .05] between the DCSE total scores of teachers working at primary schools (Mean = 38.88, SD = 15.03), secondary schools (Mean = 42.73, SD = 17.41) and high schools (Mean = 41.05, SD = 17.59).

A third one–way between–groups ANOVA was carried out for teachers’ location of employment at the time of this research (Table 4). The homogeneity of variance assumption was not violated. The findings showed a significant difference in teachers’ DCSE total scores across locations [F(2, 365) = 4.13, p < .05]. Post–hoc comparisons using the Tukey HSD test demonstrated that the mean score of teachers employed in city centers (Mean = 43.44, SD = 17.50) was significantly higher than that of teachers employed in villages (Mean = 35.28, SD = 12.80). Although statistically significant, the difference between the mean scores was small in practical terms (Eta squared = 0.02).
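The location comparison follows the classic route: a one–way ANOVA, eta squared as SS_between / SS_total, and Tukey HSD post–hoc tests, which are appropriate here because the equal–variance assumption held. The sketch below runs these steps with SciPy (`scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`, the latter available from SciPy 1.8) on simulated data. The group sizes and the label of the third location are hypothetical, so the printed numbers are not the study’s.

```python
import numpy as np
from scipy.stats import f_oneway, tukey_hsd


def anova_with_eta_squared(*groups):
    """Classic one-way ANOVA F and eta squared (SS_between / SS_total)."""
    scores = np.concatenate(groups)
    grand = scores.mean()
    ss_between = sum(len(g) * (g.mean() - grand) ** 2 for g in groups)
    ss_total = ((scores - grand) ** 2).sum()
    k, n = len(groups), len(scores)
    f_stat = (ss_between / (k - 1)) / ((ss_total - ss_between) / (n - k))
    return f_stat, ss_between / ss_total


# Simulated scores; group sizes and the third location are HYPOTHETICAL
rng = np.random.default_rng(2)
city_center = rng.normal(43.4, 17.5, 200)
other_location = rng.normal(40.0, 17.0, 110)
village = rng.normal(35.3, 12.8, 58)

f_stat, eta_sq = anova_with_eta_squared(city_center, other_location, village)
print(round(f_stat, 2), round(eta_sq, 3))
print(f_oneway(city_center, other_location, village).statistic)  # same F as above
tukey = tukey_hsd(city_center, other_location, village)
print(tukey.pvalue[0, 2])  # p value for city center vs village
```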

3.5 Regression of DCSE scores on demographic and teacher characteristics

A standard multiple linear regression analysis was conducted to assess the ability of demographic and teacher characteristics to predict teachers’ DCSE total scores. After the categorical variables were dummy coded, a total of 11 predictor variables were entered into the regression model simultaneously. The data were first screened against the assumptions of regression analysis. Tabachnick and Fidell (2013) recommend the formula N > 50 + 8m (m = number of predictors) for the minimum sample size. The sample size of this study (n = 368) greatly exceeded this recommended minimum (138 for 11 predictors). Pearson correlations among the predictors were not high, varying between –0.47 and 0.30. Moreover, the tolerance values, ranging from 0.23 to 0.92, were above the cut–off value of 0.10, and the variance inflation factor (VIF) values, ranging from 1.09 to 4.33, were below the cut–off value of 10, suggesting no violation of the multicollinearity assumption (Pallant, 2007). Inspection of boxplots indicated six to eight univariate outliers, and Mahalanobis distances exceeding the critical Chi–square value of 31.26 for 11 predictors (α = .001) indicated two multivariate outliers; all of these cases were removed from the analysis (Tabachnick & Fidell, 2013). Finally, the absolute values of the skewness and kurtosis coefficients were no greater than 1, suggesting that the variables were approximately normally distributed (Kline, 2005).
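The screening steps above (minimum sample size, tolerance and VIF, and Mahalanobis distances against a χ² critical value at α = .001) can be sketched with NumPy and SciPy. The predictor matrix below is simulated, not the study’s dummy–coded data, and the helper names are hypothetical; the sketch only shows how each quantity is computed. Tolerance for predictor j is 1 − R²ⱼ from regressing it on all other predictors, and VIF is its reciprocal.

```python
import numpy as np
from scipy.stats import chi2


def min_sample_size(m):
    """Tabachnick and Fidell's rule of thumb: N > 50 + 8m for m predictors."""
    return 50 + 8 * m


def vif_and_tolerance(X):
    """Tolerance_j = 1 - R^2_j (predictor j on all others); VIF_j = 1 / tolerance_j."""
    n, k = X.shape
    tolerances = np.empty(k)
    for j in range(k):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1 - (resid @ resid) / ((y - y.mean()) @ (y - y.mean()))
        tolerances[j] = 1 - r2
    return 1 / tolerances, tolerances


def mahalanobis_outliers(X, alpha=0.001):
    """Squared Mahalanobis distances and indices above the chi-square critical value."""
    centered = X - X.mean(axis=0)
    inv_cov = np.linalg.inv(np.cov(X, rowvar=False))
    d2 = np.einsum("ij,jk,ik->i", centered, inv_cov, centered)
    critical = chi2.ppf(1 - alpha, df=X.shape[1])
    return d2, critical, np.flatnonzero(d2 > critical)


rng = np.random.default_rng(3)
X = rng.normal(size=(368, 11))  # hypothetical predictor matrix
vifs, tols = vif_and_tolerance(X)
d2, critical, flagged = mahalanobis_outliers(X)
print(min_sample_size(11))      # 138
print(round(critical, 2))       # 31.26
print(vifs.round(2), flagged)
```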

Table 5 summarizes the results of the regression analysis. The model was significant and explained 25% of the variance in teachers’ DCSE scores [R² = 0.25, F(11, 346) = 10.28, p < .01]. The standardized regression coefficients indicated that the predictors making a unique and significant contribution to the model were, in order of importance from largest to smallest, the number of information technology devices owned (β = 0.36, p < .01), subject (β = 0.20, p < .05), gender (β = 0.11, p < .05), and educational background (β = 0.11, p < .05). Owning more information technology devices, teaching math and science related courses, being male, and holding a postgraduate degree were positively associated with digital competency.
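The overall F test and the standardized coefficients above can be related with a few lines of NumPy. The sketch below (hypothetical helper names) shows (a) that F follows from R², the number of predictors k = 11 and the analysed sample size, taken here as n = 358 because the reported error df of 346 equals n − k − 1; and (b) how standardized betas are obtained by fitting OLS to z–scored variables, using simulated data rather than the study’s.

```python
import numpy as np


def standardized_ols(X, y):
    """Fit OLS on z-scored predictors and outcome; return standardized betas and R^2."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)  # centering removes the intercept
    resid = yz - Xz @ beta
    r2 = 1 - (resid @ resid) / (yz @ yz)
    return beta, r2


def f_from_r2(r2, k, n):
    """Overall F for a k-predictor model fitted to n cases, df = (k, n - k - 1)."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


print(round(f_from_r2(0.25, 11, 358), 2))  # 10.48

# Simulated example: one count predictor and one 0/1 dummy (both HYPOTHETICAL)
rng = np.random.default_rng(4)
n = 358
devices = rng.poisson(3, n).astype(float)
male = rng.integers(0, 2, n).astype(float)
scores = 30 + 3.0 * devices + 4.0 * male + rng.normal(0, 15, n)
beta, r2 = standardized_ols(np.column_stack([devices, male]), scores)
print(beta.round(2), round(r2, 2))
```

With R² = 0.25 the formula gives F ≈ 10.48, close to the reported F = 10.28; the gap is consistent with R² having been rounded to two decimals (an R² of about 0.246 yields an F of about 10.3).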

4 Discussion

4.1 Validation of the DCSE in Turkish context

Since the development of the DCSE (i.e., the DigCompEdu Check–In) as a tool for assessing educators’ digital competence based on the European DigCompEdu framework, several studies have adapted and validated it in different languages and contexts, reporting varying results regarding its factorial structure. While some studies confirmed the original six–factor structure of the tool (e.g., Llorente–Cejudo et al., 2023), others yielded a three–factor structure (Gallardo–Echenique et al., 2023) or a one–factor structure (Martín Párraga et al., 2022). Toker et al. (2021) conducted an adaptation and validation study of the DCSE on a sample of Turkish teachers. They verified the six–factor structure but did not report an assessment of convergent and discriminant validity in their article. Later, Çebi and Reisoğlu (2023) validated its Turkish version through both first–order and second–order CFA but did not assess its discriminant validity. Using the same Turkish version of the tool, the present study showed that the six–factor structure fitted the data well but lacked discriminant validity, and therefore used the total score for further analyses, including hypothesis testing. On the whole, such inconsistent findings, together with the lack of adequate evidence for discriminant validity across studies, suggest that the tool might be sensitive to cultural or national context. This is not unexpected, as such sensitivity is well known in the cross–cultural adaptation and psychometric validation of research instruments (Arafat et al., 2016).

4.2 The level of digital competence

The findings indicate that teachers who participated in this study are on average at the integrator (B1) level of digital competence. Considering the cut–off points of the DCSE used in this study, they appear to have moderate skills in using digital technologies. Based on the DigCompEdu framework, this result implies that participants have already assimilated new digital technologies, developed related basic skills, and are currently able to implement them in their daily work and professional teaching practices for various purposes. They are willing to build on new ideas and tools and to expand their practices, but are still working toward an understanding of optimal technology integration and need more time for experimentation and reflection to reach the expert (B2) level (Redecker, 2017). The result is consistent with several previous studies conducted abroad (Artacho et al., 2020; Cabero–Almenara et al., 2021b; Diz–Otero et al., 2022; Lucas and Bem–Haja et al., 2021; Nunez–Canal et al., 2022; Zakharov et al., 2021). However, it contradicts a few national studies reporting high levels of digital literacy among teachers (e.g., Arslan, 2019). The most likely reason for this discrepancy is that those studies adopted theoretical foundations and measurement tools other than DigCompEdu.

The B1 proficiency level implies that participating teachers are already familiar with available and common digital technologies and implement them meaningfully in teaching activities using basic strategies. Their technology integration is thus at an intermediate level and open to further development toward the upper proficiency levels (i.e., B2 = Expert, C1 = Leader, C2 = Pioneer). They can apply digital resources in many aspects of their professional practice and employ a variety of digital and pedagogical activities to foster student learning. However, they need more confidence and more experimentation with complex and creative digital tools and novel pedagogical practices in order to develop a critical, comprehensive and innovative approach to technology integration (Redecker, 2017). Such progression calls for continuous digitalization of schools and ongoing professional development. Moreover, because the upper proficiency levels require higher–order skills, this progression should emphasize providing schools with innovative digital resources and offering constructivist and experience–based teacher training programs. For example, pre–service and in–service teacher education may use problem– or case–based learning scenarios in which teachers critically analyze teaching or learning situations, strategically select digital technologies and pedagogical methods, innovatively design and implement suitable solutions, and reflect on what works best, when and why. Teachers with high digital competence (e.g., Leaders or Pioneers) might serve as role models or mentors to their colleagues, helping them identify their strengths and weaknesses, learn from one another, and support each other’s digital progression. Such approaches can increase teachers’ confidence and understanding in using digital technologies in their professional practices and consequently expand their digital competence.

4.3 The role of demographic/teacher characteristics in digital competence

Participating teachers’ digital competence differs by gender in favor of males. The related literature offers contradictory evidence about gender differences in the digital proficiency of teachers and teacher candidates. While some studies show that males are more competent than females (Aslan, 2021; Guillen–Gamez et al., 2020; Kaya, 2020; Lucas and Bem–Haja et al., 2021), others indicate no significant difference (Cabero–Almenara et al., 2021b; Diz–Otero et al., 2022; Zakharov et al., 2021). The gender difference favoring male teachers may be explained by research evidence showing that male teachers accept technology earlier (Sırakaya, 2019) and have higher technology self–efficacy (Kartal et al., 2018) than female teachers. Earlier adoption and greater confidence are likely to foster skill development; indeed, teachers’ confidence in using digital technologies has been shown to be a significant positive predictor of their digital competence (Lucas and Bem–Haja et al., 2021). The use of a self–report assessment tool might also have affected this finding, because prior research suggests that males tend to overestimate their confidence and ability in using technology (Gebhardt et al., 2019). Furthermore, men’s attitudes towards technology have been found to be more positive than women’s from an early age (Yarar & Karabacak, 2015). The reasons for this may be that men have easier access to computers outside of school than women and that they perceive more support from their parents and peers regarding computer use (Vekiri & Chronaki, 2008). Especially in Turkish society, which is regarded as male–dominated, the greater freedom granted to men and the support parents give to their development make it easier for men to access technology (Aslan, 2021). As a result, men’s computer self–efficacy and value beliefs may develop more positively (Vekiri & Chronaki, 2008), and their more positive attitudes towards technology use may in turn enable them to become more competent technology users (Cai et al., 2017).

The digital competency level of participants who teach math and science related courses such as Math, Physics, Chemistry, Biology and Information Technology is higher than that of those teaching other courses. Although some studies in the literature report either no significant subject differences (Gökbulut, 2021) or differences between other subjects (Aslan, 2021; Diz–Otero et al., 2022), this result corroborates the more common evidence that teachers of STEM, Math and Computer Science related subjects are more digitally competent than their peers (Akkoyunlu & Yılmaz Soylu, 2010; Ghomi & Redecker, 2019; Yılmaz & Toker, 2022; Zakharov et al., 2021). As with the gender difference discussed above, one reason for this finding might be that teachers in the numerical and computer sciences have higher acceptance and self–efficacy of technology use, as shown in previous studies (e.g., Sırakaya, 2019). Another factor underlying this difference may be that the content of the digitally oriented subjects taught by these participants lends itself more readily to the use of digital technology. Differences in professional fields might thus lead to subject–related differences in digital competencies (Akkoyunlu & Yılmaz Soylu, 2010). Moreover, prior research suggests that teachers of computer science show more openness to new technologies, an important factor in classifying teachers’ digital competence (Lucas and Bem–Haja et al., 2021).

Another factor associated with participating teachers’ digital competence is their educational background. Participants with postgraduate degrees (e.g., master’s or doctorate) are more digitally competent than those with undergraduate degrees. This result supports studies showing a higher level of technology use by teachers with postgraduate education than by those with undergraduate education (Çelik, 2019; Sipahioğlu, 2019), whereas it contradicts others indicating no significant difference based on education level (Arslan, 2019; Guillen–Gamez et al., 2020; Gökbulut, 2021). Postgraduate education may provide additional learning opportunities beyond undergraduate education, through which teachers can gain knowledge and skills related to digital technology and its use in pedagogical contexts. This is a reasonable interpretation because prior research suggests that professional development is among the most important motives and expectations of teachers and teacher candidates for enrolling in and pursuing a graduate degree (Incikabi et al., 2013).

The results reveal that participants’ digital competence is independent of whether they work in primary, secondary or high schools. This is one of the unique contributions of the study, because no evidence related to this issue was found during the review of the related literature. This result may be due to the widespread use of digital tools and content in all school types as part of the digitalization of education. It can also be argued that the relevant in–service training received by participating teachers addresses all school levels and does not disadvantage any level. On the other hand, the study indicates that digital competency level differs by location of employment: participants working in schools located in city centers are more digitally competent than those working in village schools. The role of location of employment in teachers’ digital abilities is an unexplored topic, as no research evidence on this factor was found in the literature either. Participants employed in city centers may have higher competence because of the better technical facilities (e.g., ownership of digital tools, quality of internet connection) available both in schools and in students’ homes. Notably, the unique effect of location of employment became nonsignificant in the regression analysis, which may be due to its overlap with other predictors entered into the model, such as the number of information technology devices owned. Limited access to digital technology in villages and their schools is widely regarded as a major barrier to teachers’ attitudes toward and use of technology in their teaching practices. Unless teachers and students have suitable technologies, they will not be able to engage in technology–based teaching and learning activities, which in turn hinders the development of digital competence. Indeed, prior research demonstrates that contextual factors such as students’ access to technology, classroom equipment, network infrastructure, peer influence on technology use and school facilitation significantly predict teachers’ digital competence (Lucas and Bem–Haja et al., 2021).

As far as the correlates of digital competence are concerned, the results show that participants’ competence level is not associated with age. This is consistent with several studies (Diz–Otero et al., 2022; Guillen–Gamez et al., 2020) but contradicts most previous studies indicating that younger teachers are more likely than older ones to have higher digital competence (Cattaneo et al., 2022; Erol & Aydın, 2021; Gökbulut, 2021; Lucas and Bem–Haja et al., 2021). A negative effect of age on digital competence would be expected, because young teachers are more recent graduates of pre–service education, the most influential stage for teacher candidates in preparing to integrate the latest technologies effectively into teaching. Furthermore, young individuals are known to demonstrate positive attitudes toward technology, which could facilitate technology use and related competence development (Cattaneo et al., 2022). The absence of an age effect in this study may therefore imply that the in–service training offered to participating teachers meets the needs of teachers of different ages in the use of technology. On the other hand, the number of information technology devices participants own is positively associated with their digital competence: the more devices participating teachers own, the more digitally competent they tend to be. This result is similar to those of Cattaneo et al. (2022) and Lucas and Bem–Haja et al. (2021), which found digital tool use to be associated with higher competence. The most plausible explanation for this result is that owning more digital tools can lead to more practice and thus greater expertise in digital skills. This result implies that schools should provide teachers with a wide range of digital resources to support their inspiration and experimentation.

5 Conclusions, limitations and future research

Today’s teachers are expected to use digital technologies effectively in schools in order to equip children with the necessary digital skills and prepare them for the challenges posed by an increasingly digitalized world. In the Turkish education system in particular, the need for technology–enhanced education that emerged with the recent COVID–19 pandemic and the massive earthquakes in southern Türkiye has further increased the importance of this expectation. Identifying and developing teachers’ digital competence is therefore a high priority and is emphasized in the 2023 Vision Plan developed by the Turkish Ministry of National Education. Within this context, this study identified the current status of participating teachers’ digital competence on the basis of the European DigCompEdu framework. It concludes that participants are on average at the integrator (B1) level of digital competency, and that those who are male, teach math and science related courses, have a postgraduate degree, and work in metropolitan cities are more digitally competent than their counterparts. Furthermore, participants’ digital competency is independent of their age and type of school, whereas it is positively and moderately associated with the number of digital devices owned.

As with any research study, this study has several limitations. First, because the research was conducted in a single large province in the Central Anatolian Region of Türkiye, its findings are geographically limited. Second, the use of convenience sampling to recruit participants means that the findings may not generalize to all teachers in the country. Third, the data are limited to teachers’ self–reported responses to the scale items and the personal information questions in the data collection tool described in the method section. Last, the regression model and its predictive power are limited to the variables measured in the study.

Considering the limitations and findings of this study, the following suggestions are offered to researchers:

1. The assessment tool used in this study was developed before the COVID–19 pandemic, which led to the emergence of new technologies and their integration into teaching and learning. Together with the contradictory findings of its adaptation and validation studies, this calls for further studies of the tool in different contexts to reach broader agreement on its validity and reliability.

2. The digital competency of teachers who participated in the present research was found to differ across their gender, educational background and place of employment. Future studies could investigate why and how digital competencies differ across these groups.

3. In this study, the subject variable was analyzed in four groups. The inclusion of information technology teachers may have affected the results, and this is among the limitations of the research. The research could be extended by measuring and observing the digital competencies of teachers of information technology related subjects separately.

4. Contrary to the related literature, this study shows that participants’ digital competency is not dependent on their age. Future studies may operationalize the age variable in a different manner, such as grouping teachers by generation.

5. The number of devices owned by the participants was found to be moderately correlated with their digital competencies. This relationship can be further examined in future studies by considering the type of devices or the number of devices teachers have in their schools.

6. Replications of this study or future studies can consider different demographic and contextual factors as predictors to increase the explained variance in teachers’ digital competence.

Data availability

The data collected and analyzed within this study will be available from the corresponding author upon reasonable request.

References

Akkoyunlu, B., & Yılmaz Soylu, M. (2010). Öğretmenlerin sayısal yetkinlikleri üzerine bir çalışma [A study on teachers’ digital competencies]. Türk Kütüphaneciliği, 24(4), 748–768. https://dergipark.org.tr/tr/pub/tk/issue/48858/622491.

Antonietti, C., Cattaneo, A., & Amenduni, F. (2022). Can teachers’ digital competence influence technology acceptance in vocational education? Computers in Human Behavior, 132. https://doi.org/10.1016/J.CHB.2022.107266.

Arafat, S., Chowdhury, H., Qusar, M., & Hafez, M. (2016). Cross cultural adaptation and psychometric validation of research instruments: A methodological review. Journal of Behavioral Health, 5(3), 129. https://doi.org/10.5455/jbh.20160615121755.

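Arslan, S. (2019). İlkokullarda ve ortaokullarda görev yapan öğretmenlerin dijital okuryazarlık düzeylerinin çeşitli değişkenler açısından incelenmesi [Examination of the digital literacy levels of teachers working in primary and secondary schools in terms of various variables]. Sakarya Üniversitesi.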

Artacho, E. G., Martinez, T. S., Martin, O., Marin, J. L. M., J. A., & Garcia, G. G. (2020). Teacher training in lifelong learning–the importance of digital competence in the encouragement of teaching innovation. Sustainability (Switzerland), 12(7), 2852. https://doi.org/10.3390/su12072852.

Aslan, S. (2021). Analysis of digital literacy self–efficacy levels of pre–service teachers. International Journal of Technology in Education (IJTE), 4(1), 57–67. https://doi.org/10.46328/ijte.47.

Atmojo, I. R. W., Ardiansyah, R., & Wulandari, W. (2022). Classroom teacher’s digital literacy level based on instant digital competence assessment (IDCA) perspective. Mimbar Sekolah Dasar, 9(3), 431–445. https://doi.org/10.53400/mimbar-sd.v9i3.51957.

Barboutidis, G., & Stiakakis, E. (2023). Identifying the factors to enhance digital competence of students at vocational training institutes. Technology Knowledge and Learning, 28(2), 613–650. https://doi.org/10.1007/s10758-023-09641-1.

Bilyalova, A. A., Salimova, D. A., & Zelenina, T. I. (2020). Digital transformation in education. Lecture Notes in Networks and Systems, 78, 265–276. https://doi.org/10.1007/978-3-030-22493-6_24.

Bozkurt, A., Hamutoğlu, N. B., Liman Kaban, A., Taşçı, G., & Aykul, M. (2021). Dijital bilgi çağı: Dijital toplum, dijital dönüşüm, dijital eğitim ve dijital yeterlilikler [The digital information age: Digital society, digital transformation, digital education and digital competencies]. Açıköğretim Uygulamaları ve Araştırmaları Dergisi, 35–63. https://doi.org/10.51948/auad.911584.

Brown, T. A. (2006). Confirmatory factor analysis for applied research. Guilford Press.

Brynildsen, S., Nagel, I., & Engeness, I. (2022). Teachers’ perspectives on enhancing professional digital competence by participating in TeachMeet. Italian Journal of Educational Technology, 30(2), 45–63. https://doi.org/10.17471/2499-4324/1252.

Cabero–Almenara, J., Barragán–Sánchez, R., Palacios–Rodríguez, A., & Martín–Párraga, L. (2021a). Design and validation of t–MOOC for the development of the digital competence of non–university teachers. Technologies, 9(4), 84. https://doi.org/10.3390/TECHNOLOGIES9040084.

Cabero–Almenara, J., Barroso–Osuna, J., Gutiérrez–Castillo, J. J., & Palacios–Rodríguez, A. (2021b). The teaching digital competence of health sciences teachers. A study at Andalusian universities (Spain). International Journal of Environmental Research and Public Health, 18(5), 1–13. https://doi.org/10.3390/ijerph18052552.

Cabero–Almenara, J., Guillén–Gámez, F. D., Ruiz–Palmero, J., & Palacios–Rodríguez, A. (2021c). Digital competence of higher education professor according to DigCompEdu. Statistical research methods with ANOVA between fields of knowledge in different age ranges. Education and Information Technologies, 26(4), 4691–4708. https://doi.org/10.1007/s10639-021-10476-5.

Cabero–Almenara, J., Gutiérrez–Castillo, J. J., Palacios–Rodríguez, A., & Barroso–Osuna, J. (2021d). Comparative European DigCompEdu Framework (JRC) and common framework for teaching digital competence (INTEF) through expert judgment. Texto Livre: Linguagem e Tecnologia, 14(1), e25740. https://doi.org/10.35699/1983-3652.2021.25740.

Cabero–Almenara, J., Barroso–Osuna, J., Gutiérrez–Castillo, J. J., & Palacios–Rodríguez, A. (2023). T–MOOC, cognitive load and performance: Analysis of an experience. Revista Electrónica Interuniversitaria de Formación del Profesorado, 26(1), 99–113. https://doi.org/10.6018/REIFOP.542121.

Cai, Z., Fan, X., & Du, J. (2017). Gender and attitudes toward technology use: A meta–analysis. Computers and Education, 105, 1–13. https://doi.org/10.1016/j.compedu.2016.11.003.

Carretero, S., Vuorikari, R., & Punie, Y. (2017). DigComp 2.1: The Digital Competence Framework for Citizens. With eight proficiency levels and examples of use. Joint Research Centre. http://publications.jrc.ec.europa.eu/repository/bitstream/JRC106281/web-digcomp2.1pdf_(online).pdf.

Cattaneo, A. A. P., Antonietti, C., & Rauseo, M. (2022). How digitalised are vocational teachers? Assessing digital competence in vocational education and looking at its underlying factors. Computers & Education, 176, 104358. https://doi.org/10.1016/J.COMPEDU.2021.104358.

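Çebi, A., & Reisoğlu, İ. (2019). Öğretmen adaylarının dijital yeterliklerinin geliştirilmesine yönelik bir eğitim etkinliği: BÖTE ve diğer branşlardaki öğretmen adaylarının görüşleri [A training activity for developing pre–service teachers’ digital competencies: Views of pre–service teachers in Computer Education and Instructional Technology (BÖTE) and other subject areas]. Eğitim Teknolojisi Kuram ve Uygulama, 9(2), 539–565. https://doi.org/10.17943/etku.562663.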

Çebi, A., & Reisoğlu, İ. (2023). Adaptation of self–assessment instrument for educators’ digital competence into Turkish culture: A study on reliability and validity. Technology Knowledge and Learning, 28(2), 569–583. https://doi.org/10.1007/s10758-021-09589-0.

Çelik, A. (2019). Öğretmenlerin eğitim teknolojileri kullanım düzeylerinin belirlenmesi: Sakarya ili örneği [Determining teachers’ levels of educational technology use: The case of Sakarya Province]. Sakarya Üniversitesi.

Creswell, J. W. (2012). Educational research: Planning, conducting, and evaluating quantitative and qualitative research (4th ed.). Pearson.

Diz–Otero, M., Portela–Pino, I., Dominguez–Lloria, S., & Pino–Juste, M. (2022). Digital competence in secondary education teachers during the COVID–19–derived pandemic: Comparative analysis. Education and Training. https://doi.org/10.1108/ET-01-2022-0001.

Erol, S., & Aydın, E. (2021). Digital literacy status of Turkish teachers. International Online Journal of Educational Sciences, 13(2), 620–633. https://doi.org/10.15345/iojes.2021.02.020.

Esteve–Mon, F. M., Nebot, M. Á. L., Cosentino, V. V., & Adell–Segura, J. (2022). Digital teaching competence of university teachers: Levels and teaching typologies. International Journal of Emerging Technologies in Learning (IJET), 17(13), 200–216. https://doi.org/10.3991/IJET.V17I13.24345.

European Commission. (2019). The Digital Competence Framework 2.0. EU Science Hub. The European Commission’s Science and Knowledge Service. https://ec.europa.eu/jrc/en/digcomp/digital-competence-framework.

Fernandez–Batanero, J. M., Montenegro–Rueda, M., Fernandez–Cerero, J., & Garcia–Martinez, I. (2022). Digital competences for teacher professional development. Systematic review. European Journal of Teacher Education, 45(4), 513–531. https://doi.org/10.1080/02619768.2020.1827389.

Fernandez–Cruz, F. J., & Fernandez–Diaz, M. J. (2016). Generation Z’s teachers and their digital skills. Comunicar, 24(46), 97–105. https://doi.org/10.3916/C46-2016-10.

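Fidan, M., & Cura Yeleğen, H. (2022). Öğretmenlerin dijital yeterliklerinin çeşitli değişkenler açısından incelenmesi ve dijital yeterlik gereksinimleri [An investigation of teachers’ digital competencies in terms of various variables and their digital competency needs]. Ege Journal of Education, 23(2), 150–170. https://doi.org/10.12984/EGEEFD.1075367.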

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

Fraile, M. N., Penalva–Velez, A., & Lacambra, A. M. M. (2018). Development of digital competence in secondary education teachers’ training. Education Sciences, 8(3), 104. https://doi.org/10.3390/EDUCSCI8030104.

Gallardo–Echenique, E., Tomás–Rojas, A., Bossio, J., & Freundt–Thurne, U. (2023). Evidence of validity and reliability of DigCompEdu CheckIn among professors at a Peruvian private university. Publicaciones, 53(2), 69–88. https://doi.org/10.30827/publicaciones.v53i2.26817.

Gebhardt, E., Thomson, S., Ainley, J., & Hillman, K. (2019). Gender differences in computer and information literacy (Vol. 8). Springer International Publishing. https://doi.org/10.1007/978-3-030-26203-7.

Ghomi, M., & Redecker, C. (2019). Digital competence of educators (DigCompEdu): Development and evaluation of a self–assessment instrument for teachers’ digital competence. CSEDU 2019–Proceedings of the 11th International Conference on Computer Supported Education, 1, 541–548. https://doi.org/10.5220/0007679005410548.

Gökbulut, B. (2021). Examination of teachers’ digital literacy levels and lifelong learning tendencies. Journal of Higher Education and Science, 11(3), 469–479. https://doi.org/10.5961/jhes.2021.466.

Gordillo, A., Barra, E., Garaizar, P., & Lopez–Pernas, S. (2021). Use of a simulated social network as an educational tool to enhance teacher digital competence. Revista Iberoamericana de Tecnologias del Aprendizaje, 16(1), 107–114. https://doi.org/10.1109/RITA.2021.3052686.

Guillen–Gamez, F. D., Mayorga–Fernandez, M. J., & Alvarez–Garcia, F. J. (2020). A study on the actual use of digital competence in the practicum of education degree. Technology Knowledge and Learning, 25(3), 667–684. https://doi.org/10.1007/s10758-018-9390-z.

Hair, J., Black, W., Babin, B., & Anderson, R. (2010). Multivariate data analysis (7th ed.). Prentice–Hall.

Heine, S., Krepf, M., & König, J. (2023). Digital resources as an aspect of teacher professional digital competence: One term, different definitions – A systematic review. Education and Information Technologies, 28(4), 3711–3738. https://doi.org/10.1007/s10639-022-11321-z.

Ilomäki, L., Kantosalo, A., & Lakkala, M. (2011). What is digital competence? http://linked.eun.org/web/guest/in-depth3.

Ilomäki, L., Paavola, S., Lakkala, M., & Kantosalo, A. (2016). Digital competence–an emergent boundary concept for policy and educational research. Education and Information Technologies, 21(3), 655–679. https://doi.org/10.1007/s10639-014-9346-4.

Incikabi, L., Pektas, M., Ozgelen, S., & Kurnaz, M. A. (2013). Motivations and expectations for pursuing graduate education in mathematics and science education. The Anthropologist, 16(3), 701–709. https://doi.org/10.1080/09720073.2013.11891396.

Instefjord, E. J., & Munthe, E. (2017). Educating digitally competent teachers: A study of integration of professional digital competence in teacher education. Teaching and Teacher Education, 67, 37–45. https://doi.org/10.1016/j.tate.2017.05.016.

Johnson, L., Adams Becker, S., Estrada, V., Freeman, A., Kampylis, P., Vuorikari, R., & Punie, Y. (2014). NMC Horizon Report Europe: 2014 Schools Edition.

Karasar, N. (2008). Bilimsel araştırma yöntemi [Scientific research method] (17th ed.). Nobel Yayın Dağıtım.

Kartal, O. Y., Temelli, D., & Şahin, Ç. (2018). Ortaokul matematik öğretmenlerinin bilişim teknolojileri öz–yeterlik düzeylerinin cinsiyet değişkenine göre incelenmesi [An investigation of middle school mathematics teachers’ information technology self–efficacy levels by gender]. Kuramsal Eğitimbilim, 11(4), 922–943. https://doi.org/10.30831/akukeg.410279.

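Kaya, R. (2020). Eğitim fakültesi öğrencilerinin teknoloji entegrasyonu öz–yeterlik algıları ile dijital yeterlik seviyeleri arasındaki ilişkinin incelenmesi [An investigation of the relationship between education faculty students’ technology integration self–efficacy perceptions and their digital competency levels]. Balıkesir Üniversitesi.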

Kline, R. B. (2005). Principles and practice of structural equation modeling (2nd ed.). Guilford Press.

Koc, M., & Demirbilek, M. (2018). What is technology (T) and what does it hold for STEM education? Definitions, issues, and tools. Research Highlights in STEM Education, 64–80.

Llorente–Cejudo, C., Barragán–Sánchez, R., Puig–Gutiérrez, M., & Romero–Tena, R. (2023). Social inclusion as a perspective for the validation of the DigCompEdu Check–In questionnaire for teaching digital competence. Education and Information Technologies, 28(8), 9437–9458. https://doi.org/10.1007/s10639-022-11273-4.

Lucas, M., Bem-Haja, P., Siddiq, F., Moreira, A., & Redecker, C. (2021a). The relation between in-service teachers’ digital competence and personal and contextual factors: What matters most? Computers and Education, 160, 104052. https://doi.org/10.1016/J.COMPEDU.2020.104052.

Lucas, M., Dorotea, N., & Piedade, J. (2021b). Developing teachers’ digital competence: Results from a pilot in Portugal. Revista Iberoamericana de Tecnologias del Aprendizaje, 16(1), 84–92. https://doi.org/10.1109/RITA.2021.3052654.

Martín Párraga, L., Llorente Cejudo, C., & Barroso Osuna, J. (2022). Validation of the DigCompEdu Check–In Questionnaire through structural equations: A study at a university in Peru. Education Sciences, 12(8), 574. https://doi.org/10.3390/educsci12080574.

Mattar, J., Ramos, D. K., & Lucas, M. R. (2022). DigComp–Based digital competence assessment tools: Literature review and instrument analysis. Education and Information Technologies, 27(8), 10843–10867. https://doi.org/10.1007/s10639-022-11034-3.

McGarr, O., & McDonagh, A. (2021). Exploring the digital competence of pre–service teachers on entry onto an initial teacher education programme in Ireland. Irish Educational Studies, 40(1), 115–128. https://doi.org/10.1080/03323315.2020.1800501.

Muammar, S., Hashim, K. F. B., & Panthakkan, A. (2023). Evaluation of digital competence level among educators in UAE higher education institutions using Digital competence of educators (DigComEdu) framework. Education and Information Technologies, 28(3), 2485–2508. https://doi.org/10.1007/s10639-022-11296-x.

Nunez–Canal, M., de Obesso, M. de las M., & Perez–Rivero, C. A. (2022). New challenges in higher education: A study of the digital competence of educators in Covid times. Technological Forecasting and Social Change, 174, 121270. https://doi.org/10.1016/J.TECHFORE.2021.121270.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory. McGraw–Hill.

Palacios–Rodríguez, A., Martín–Párraga, L., & Gutiérrez–Castillo, J. J. (2022). Enhancing digital competency: Validation of the training proposal for the development of teaching digital competence according to DigCompEdu. Contemporary Education and Teaching Research, 3(2), 29–32. https://doi.org/10.47852/BONVIEWCETR2022030201.

Pallant, J. (2007). SPSS survival manual: A step–by–step guide to data analysis using SPSS. Open University Press.

Perez–Escoda, A., Castro–Zubizarreta, A., & Fandos–Igado, M. (2016). Digital skills in the Z generation: Key questions for a curricular introduction in primary school. Comunicar, 24(49), 71–79. https://doi.org/10.3916/C49-2016-07.

Polat, M. (2021). Pre–service teachers’ digital literacy levels, views on distance education and pre–university school memories. International Journal of Progressive Education, 17(5), 299–314. https://doi.org/10.29329/ijpe.2021.375.19.

Pongsakdi, N., Kortelainen, A., & Veermans, M. (2021). The impact of digital pedagogy training on in–service teachers’ attitudes towards digital technologies. Education and Information Technologies, 26(5), 5041–5054. https://doi.org/10.1007/s10639-021-10439-w.

Redecker, C. (2017). European framework for the digital competence of educators: DigCompEdu (Y. Punie, Ed.; EUR 28775 EN). Publications Office of the European Union. https://doi.org/10.2760/159770.

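Reisoğlu, İ., & Çebi, A. (2020a). Dijital yeterlik ve kuramsal çerçeveler [Digital competence and theoretical frameworks]. In Dijital Yeterlik: Dijital Çağda Dönüşüm Yolculuğu [Digital competence: A journey of transformation in the digital age] (pp. 1–18). Ankara: Pegem Akademi Yayıncılık. https://doi.org/10.14527/9786058006041.01.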

Reisoğlu, İ., & Çebi, A. (2020b). How can the digital competences of pre–service teachers be developed? Examining a case study through the lens of DigComp and DigCompEdu. Computers and Education, 156, 103940. https://doi.org/10.1016/j.compedu.2020.103940.

Saltos–Rivas, R., Novoa–Hernandez, P., & Rodriguez, R. S. (2023). Understanding university teachers’ digital competencies: A systematic mapping study. Education and Information Technologies, 1–52. https://doi.org/10.1007/s10639-023-11669-w.

Şen, S., & Yıldırım, İ. (2019). Eğitimde araştırma yöntemleri [Research methods in education] (1st ed.). Nobel.

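Sipahioğlu, S. (2019). Fen Bilimleri öğretmenlerinin eğitimde teknoloji kullanımına yönelik tutumlarının çeşitli değişkenlere göre incelenmesi [An investigation of science teachers’ attitudes towards the use of technology in education according to various variables]. Atatürk Üniversitesi.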

Sırakaya, M. (2019). İlkokul ve ortaokul öğretmenlerinin teknoloji kabul durumları [Technology acceptance of primary and secondary school teachers]. İnönü Üniversitesi Eğitim Fakültesi Dergisi, 20(2), 578–590. https://doi.org/10.17679/inuefd.495886.