Abstract

As online learning has been widely adopted in higher education in recent years, artificial intelligence (AI) has brought new ways of improving instruction and learning in online higher education. However, there is a lack of literature reviews that focus on the functions, effects, and implications of applying AI in the online higher education context. In addition, which AI algorithms are commonly used and how they influence online higher education remain unclear. To fill these gaps, this systematic review provides an overview of empirical research on the applications of AI in online higher education. Specifically, this literature review examines the functions of AI in empirical research, the algorithms used in empirical research, and the effects and implications generated by empirical research. According to the screening criteria, out of the 434 initially identified articles for the period between 2011 and 2020, 32 articles are included for the final synthesis. The results show that: (1) the functions of AI applications in online higher education include prediction of learning status, performance or satisfaction, resource recommendation, automatic assessment, and improvement of learning experience; (2) traditional AI technologies are commonly adopted while more advanced techniques (e.g., genetic algorithm, deep learning) are still rarely used; and (3) effects generated by AI applications include a high quality of AI-enabled prediction with multiple input variables, a high quality of AI-enabled recommendations based on student characteristics, an improvement of students’ academic performance, and an improvement of online engagement and participation.
This systematic review proposes the following theoretical, technological, and practical implications: (1) the integration of educational and learning theories into AI-enabled online learning; (2) the adoption of advanced AI technologies to collect and analyze real-time process data; and (3) the implementation of more empirical research to test actual effects of AI applications in online higher education.

1 Introduction

Advances in the Internet, wireless communication, and computing technologies have shed light on educational changes in online higher education, particularly the application of Artificial Intelligence in Education (AIEd) in recent years (Chen et al., 2020a, b; Ouyang & Jiao, 2021). Online and distance learning refers to delivering lectures, virtual classroom meetings, and other teaching materials and activities via the Internet (Harasim, 2000; Holmberg, 2005). This educational model has been extensively integrated into higher education to transform instruction and learning modes as well as provide fair educational opportunities to online learners (Hu, 2021; Liu et al., 2020; Mubarak et al., 2020; Yang et al., 2014). In the online education context, AI applications (e.g., intelligent tutoring systems, teaching robots, learning analytics dashboards, adaptive learning systems) have been used to promote online students’ learning experience, performance, and quality (Chen et al., 2020a, b; Hinojo-Lucena et al., 2019). Varied AIEd techniques (e.g., natural language processing, artificial neural networks, machine learning, deep learning, genetic algorithm) have been implemented to create intelligent learning environments for behavior detection, prediction model building, learning recommendation, etc. (Chen et al., 2020a, b; Rowe, 2019). Overall, the applications of AI systems and technologies have transformed online higher education and have provided opportunities and challenges for improving higher education quality. Previous reviews have provided substantial insight into the AIEd field. For instance, existing review work has summarized the trends of AIEd (Tang et al., 2021; Xie et al., 2019; Zhai et al., 2021), applications (Alyahyan & Düştegör, 2020; Hooshyar et al., 2016; Liz-Domínguez et al., 2019; Shahiri et al., 2015), theoretical paradigms (Ouyang & Jiao, 2021) and AI roles in education (Xu & Ouyang, 2021).
However, few literature reviews examine the purposes and effects of applying AI techniques in the online higher education context. More importantly, a major challenge is to gain a deep understanding of the empirical effects of AI applications in online higher education. To this end, this systematic review collects, reviews, and summarizes the empirical research on AI in online higher education, with the particular aims of analyzing the application purposes, the AI algorithms used, and the effects generated by AI techniques in online higher education.

2 Literature review

AIEd refers to the use of AI technologies or applications in educational settings to facilitate instruction, learning, and decision-making processes of stakeholders, such as students, instructors, and administrators (Hwang et al., 2020). In online higher education, AI can support instructional design and development by providing automatic learning resources or paths (Christudas et al., 2018), offering automatic assessments (Aluthman, 2016) or predictions of student performance (Almeda et al., 2018; Moreno-Marcos et al., 2019). From the instructional perspective, AI can act as a tutor that observes students’ learning processes, analyzes their learning performances, and relieves instructors of repetitive and tedious teaching tasks (Chen et al., 2020a, b; Hwang et al., 2020). Moreover, from the learner perspective, one of the crucial objectives of AIEd is to provide personalized learning guidance or support based on students’ learning status, preferences, or personal characteristics (Hwang et al., 2020). For instance, AIEd can provide learning materials or paths based on students’ needs (Christudas et al., 2018), diagnose students’ strengths, weaknesses, or knowledge gaps (Liu et al., 2017), or provide automated feedback and promote collaboration between students (Aluthman, 2016; Benhamdi et al., 2017; Zawacki-Richter et al., 2019). Furthermore, AIEd can help educational administrators make decisions about course development, pedagogical design and academic transformation. For example, AI algorithm models can mine and analyze available educational data from higher education system databases to understand course status and student learning performance, which can help administrators or decision-makers make the needed changes to a course (George & Lal, 2019).
In summary, AI-enhanced technology has played an essential role in education from the instructor, learner and administrator perspectives, with its potential to open new opportunities and challenges for higher education transformation.

Multiple AI algorithms have been applied in higher education to facilitate automatic recommendation, academic prediction, or assessment. For example, Sequential Pattern Mining (SPM) has been utilized in recommender systems for capturing historical learning sequence patterns in learner interactions with the system and discovering suitable recommendation items for learners’ learning sequences (Romero et al., 2013a). Evolutionary algorithms such as Genetic Algorithms (GA), Particle Swarm Optimization (PSO) and Ant Colony Optimization (ACO) have been used for learning content optimization (Christudas et al., 2018). Machine Learning (ML) has been used in academic prediction, such as predicting the academic success of students in online courses, predicting whether students would successfully complete their college degree, or predicting students’ selection of courses in higher education (Rico-Juan et al., 2019). Lykourentzou et al. (2009) used three machine learning techniques, namely feed-forward neural networks, support vector machines and probabilistic ensemble simplified fuzzy ARTMAP, to predict dropout-prone students in the early stages of an e-learning course. Moseley and Mead (2008) used a machine learning technique called decision trees to predict student attrition in higher educational nursing institutions. Natural language processing (NLP) has been used for code detection or emotional analysis. For example, Rico-Juan et al. (2019) adopted NLP for automatic detection of inconsistencies between numerical scores and textual feedback in the peer-assessment process. In summary, different AI algorithms have been used in AIEd to achieve automatic recommendation, academic prediction, or assessment functions in order to improve instruction and learning quality.

Although there are existing systematic reviews on AIEd (e.g., AIEd trends, paradigms, tools, or applications) (Ouyang & Jiao, 2021; Tang et al., 2021), review work examining AIEd in the higher education context remains scarce. Among a collection of 37 AIEd review articles published between 2011 and 2021, only 6 review articles focus on higher education, all published in 2019 and 2020 (see Fig. 1). Among those six review articles, Hinojo-Lucena et al. (2019) used the bibliometric method to review the applications of AI in higher education. This review analyzed the number of authors, main source titles, organizations, authors, and countries of AIEd in higher education. Zawacki-Richter et al. (2019) synthesized 146 articles about the application of AI in higher education and identified four major areas, namely profiling and prediction, intelligent tutoring systems, assessment and evaluation, as well as adaptive learning systems. However, this review work did not conduct further analysis to examine the effects of AI in online higher education. Moreno-Marcos et al. (2018) used a systematic literature review to examine the AI models used for performance prediction in MOOCs. This review found that the AI algorithms used for prediction were regression, support vector machines (SVM), decision trees (DTs), random forest (RF), naive Bayes (NB), gradient boosting machine (GBM), neural networks (NN), etc. We conclude that existing literature review work mainly focuses on the application of AIEd in general, and few works focus specifically on online higher education. Those that do focus on AI in online higher education mainly describe the applications of AI in a specific educational context (e.g., MOOCs), which has resulted in the lack of a holistic picture of the AIEd trends, categorizations, and applications in online higher education.

As an effort to further understand AI in online higher education, this systematic review examines empirical research of AI applications in online higher education from the instructional and learning perspective and investigates the functions of AI applications, algorithms used, and the effects of AI on the instruction and learning process within online higher education. To be specific, this review focuses on the following three research questions:

- RQ1: What are the functions of AI applications in online higher education?
- RQ2: What AI algorithms are used to achieve those functions in online higher education?
- RQ3: What are the effects and implications of AI applications on the instruction and learning processes in online higher education?

3 Methodology

The systematic review methodology used in this study is based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) principles, which consist of a 27-item checklist and a four-phase flow diagram (Moher et al., 2009). The following sections introduce the systematic review procedures.

3.1 Database search

In order to locate the relevant articles, the systematic search was conducted on the following electronic databases: Web of Science, Scopus, ACM, IEEE, Taylor & Francis, Wiley, and EBSCO. We selected these databases since they are considered the major publisher databases (Guan et al., 2020). Filters were limited to the time period from January 2011 to December 2020 and were applied to peer-reviewed, empirical research articles written in English in order to ensure the quality of the reviewed articles. After the screening of the full articles, the snowballing approach was performed based on the guidelines to find articles that were not retrieved by the search strings (Wohlin, 2014). At this stage, Google Scholar was used to search for specific articles.

3.2 Search terms

A structured search strategy was used for the various bibliographic databases, with keywords adapted to each database’s specific requirements. In both the electronic and manual searches, specific keywords related to AI (i.e., “intelligence”, “AI”, and “AIEd”), AI applications (i.e., “intelligent tutoring system”, “expert system”, and “prediction model”), and commonly used algorithms or techniques (i.e., “decision tree”, “machine learning”, “neural network”, “deep learning”, “k-means”, “random forest”, “support vector machines”, “logistic regression”, “fuzzy-logic”, “Bayesian network”, “latent Dirichlet allocation”, “natural language processing”, “genetic algorithm”, and “genetic programming”) were used. In addition, as this review focused on the context of online higher education, the following keywords were added: “online education”, “online learning”, “e-learning”, “MOOC”, “SPOC”, “blended learning”, “higher education”, “online higher education”, “undergraduate education”, and “graduated education”.
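As a rough illustration of how such keyword groups can be combined, the sketch below builds one Boolean search string in Python. The combination rule (OR within a group, AND across groups), the helper names `or_group` and `build_query`, and the shortened keyword lists are assumptions made for illustration; the review does not report the exact query syntax used for each database.

```python
# Hypothetical helper: combine keyword groups into one Boolean search string.
# Assumption: terms are OR-ed within a group and groups are AND-ed together.
# The exact per-database syntax used in the review is not reported.

ai_terms = ["intelligence", "AI", "AIEd"]
application_terms = ["intelligent tutoring system", "expert system", "prediction model"]
context_terms = ["online education", "online learning", "e-learning", "MOOC"]


def or_group(terms):
    """Quote each term and join the group with OR."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND."""
    return " AND ".join(or_group(g) for g in groups)


# AI-related terms on one side, context terms on the other.
query = build_query(ai_terms + application_terms, context_terms)
print(query)
```

Each database typically needs its own field tags and wildcard rules, so a string like this would only be a starting point to adapt by hand.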

3.3 Inclusion and exclusion criteria

The search criteria were designed to locate the articles that focused on the applications of AI in online higher education. In terms of the research questions, a set of inclusion and exclusion criteria were adopted (see Table 1).

3.4 The screening process

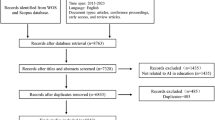

The screening process involved the following steps: (1) removing the duplicate articles, (2) removing the articles that did not meet the inclusion criteria based on the titles and abstracts, (3) reading the full texts and removing the articles that did not meet the inclusion criteria, (4) using the snowballing approach to further locate articles in Google Scholar, and (5) extracting data from the final filtered articles (see Fig. 2). All articles were imported into the Mendeley software for screening.

The search with the search terms described above produced 434 articles, including 92 duplicates that were deleted. After reviewing the titles and abstracts, the number of articles was reduced to 91 based on the criteria (see Table 1). The selected articles were examined by the second author to determine whether they were suitable for the purpose of the study. The first author independently reviewed approximately 30% of the articles to confirm the reliability. The inter-rater agreement was initially 90% and was brought to 100% after discussion. Then, the full texts of the articles were reviewed by both authors to verify that the articles met all the criteria for inclusion in the review. Eventually, a total of 32 articles that met the criteria were included in the final systematic review.
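The screening counts can be checked with simple arithmetic. The Python sketch below uses only the numbers reported above; the derived exclusion counts are net figures, because the number of articles added through snowballing is not reported. The `percent_agreement` function and the ten screening decisions passed to it are hypothetical and only illustrate how a simple percent agreement of 90% could arise.

```python
# Counts reported in this review.
identified = 434
duplicates = 92
after_title_abstract = 91
included = 32

# Derived counts (net figures: articles added by snowballing are not reported).
after_dedup = identified - duplicates
excluded_title_abstract = after_dedup - after_title_abstract
net_removed_after_full_text = after_title_abstract - included

print(after_dedup, excluded_title_abstract, net_removed_after_full_text)


def percent_agreement(rater_a, rater_b):
    """Share of items (in %) on which two raters made the same decision."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must judge the same non-empty set of items")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return 100 * matches / len(rater_a)


# Hypothetical include (1) / exclude (0) decisions for ten articles.
first_author = [1, 0, 0, 1, 1, 0, 0, 1, 0, 0]
second_author = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
agreement = percent_agreement(first_author, second_author)
print(agreement)
```

Percent agreement does not correct for chance agreement; whether the reported 90% was computed this way is not stated in the source.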

3.5 Analysis

The articles that met the inclusion criteria were analyzed using the bibliometric analysis approach (Neuendorf & Kumar, 2015). We calculated the frequencies for each category of AIEd in online higher education. The qualitative content analysis method was carried out to categorize the articles (Zupic & Čater, 2015). We classified the information from the articles relevant to the research questions. Three strategies were used to establish the credibility of the analysis. First, two researchers had ongoing meetings to verify the categories of the reviewed articles (Graneheim & Lundman, 2004). Second, detailed explanations of the categories that emerged as findings for each research question were provided in the result section (Hsieh & Shannon, 2005). Finally, we provided examples to demonstrate how well the categories represented the data to answer the research questions (Graneheim & Lundman, 2004).

4 Results

Among the 32 empirical articles, 72% were published after 2016. The selected articles were published in 23 different journals. The major countries or areas of the 32 studies were also identified. The most prolific country or area in AIEd research was Spain, with 5 publications (16%), followed by the USA (4 publications, 13%) and Taiwan (4 publications, 13%). Furthermore, three journals published more than two relevant articles that met the criteria: Computers in Human Behavior (n = 4, 13%), Computers & Education (n = 3, 9%), and Interactive Learning Environments (n = 3, 9%) (see Appendix Table 2).

4.1 RQ1: What are the functions of AI applications in online higher education?

There are four major functions of AI applications in online higher education: predictions of learning status, performance or satisfaction (n = 21, 66%), resource recommendation (n = 7, 22%), automatic assessment (n = 2, 6%), and improvement of learning experience (n = 2, 6%) (see Fig. 3).
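The percentages above follow directly from the counts. As a quick check, this minimal Python sketch recomputes each share from the reported n values over the 32 included articles; the dictionary keys simply restate the four functions.

```python
# Counts per function as reported in this review (32 articles in total).
function_counts = {
    "prediction of learning status, performance or satisfaction": 21,
    "resource recommendation": 7,
    "automatic assessment": 2,
    "improvement of learning experience": 2,
}

total = sum(function_counts.values())

# Share of each function, rounded to whole percentages.
shares = {name: round(100 * n / total) for name, n in function_counts.items()}

for name, pct in shares.items():
    print(f"{name}: n = {function_counts[name]}, {pct}%")
```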

4.1.1 Predictions of learning status, performance or satisfaction

The first function of AI applications is the prediction of student performance, that is, forecasting students’ learning status or performance in advance. Among the 32 reviewed articles, 21 articles (66%) focused on the prediction of student performance in the online higher education context. Further examination identified three categories: prediction of dropout risks (n = 13, 41%), prediction of student academic performance (n = 7, 22%), and prediction of student satisfaction with online courses (n = 1, 3%) (see Appendix Table 2).

In the first category, prediction models for diagnosing the risk of student dropout, Mubarak et al. (2020) constructed a model to predict which students were at risk of dropping out based on the interaction logs in the online learning environment. The results showed that the proposed models achieved an accuracy of 84%, which was better than the baseline machine learning models. Aguiar et al. (2014) analyzed engineering students’ electronic portfolios to predict their persistence in online courses, and the results showed consistently better performance than models based on traditional academic data (SAT scores, GPA, demographics, etc.) alone. The second category is the prediction of student academic performance. For example, Almeda et al. (2018) used classification models to predict whether students would succeed in the online courses and further used regression models to predict students’ numerical scores. One key finding was that the features related to course comments were significant predictors of the final grades. Romero et al. (2013b) collected forum messages to predict student performance and found that the students who actively participated in the forum and posted high-quality messages more frequently were more likely to pass the course. The third category is the prediction of student satisfaction with online courses. Only one study was located: Hew et al. (2020) analyzed the course features of 249 randomly sampled MOOCs and examined the perceptions of 6,393 students to understand what factors predicted student satisfaction. They found that the course instructor, content, assessment, and time schedule played significant roles in explaining the student satisfaction levels.

4.1.2 Resource recommendation

Among the 32 reviewed articles, 7 articles (22%) focused on resource recommendation in the online higher education context. For example, Benhamdi et al. (2017) designed a recommendation approach to provide online students with the appropriate learning materials based on their preferences, interests, background knowledge, and memory capacities to store information. The results indicated that this recommendation system improved students’ learning quality. Christudas et al. (2018) used the compatible genetic algorithm (CGA) to provide suitable learning content for individual students based on the preferred learning objects they had previously chosen. The results showed that the students’ scores and satisfaction levels were improved in an e-learning environment. In the online programming courses, Cárdenas-Cobo et al. (2020) developed a system called CARAMBA to suggest suitable learning exercises for students in Scratch programming. Results confirmed that those exercise recommendations in Scratch had improved the students’ programming capabilities. In summary, AI has been used in online higher education to recommend suitable and personalized resources to learners based on their fixed and dynamic characteristics.

4.1.3 Automatic assessment

Among the 32 reviewed articles, 2 articles (6%) focused on automatic assessment in the online higher education context. Hooshyar et al. (2016) developed an intelligent tutoring system (ITS) called Tic-tac-toe Quiz for Single-Player (TRIS-Q-SP) to provide students with formative assessment of their computer programming performances and problem-solving capacities. The empirical research demonstrated that the proposed system enhanced student learning interests, positive attitudes, degree of technology acceptance, and problem-solving activities. Aluthman (2016) developed the automated essay evaluation (AEE) system to provide students with immediate assessment, feedback, and automated scores in an online English learning environment and examined the effects of utilizing AEE on undergraduate students’ writing performance. The results indicated that the AEE system had a positive effect on improving students’ writing performance. In summary, AI has been used in online higher education to automatically assess students’ performances and learning capacities, to provide timely feedback to students, and to improve students’ self-awareness and self-reflection.

4.1.4 Improvement of learning experience

Among the 32 reviewed articles, 2 articles (6%) focused on the optimization of learning experiences by improving learner interactions with learning environments or resources in the online higher education context. Ijaz et al. (2017) created a virtual reality (VR) tool that applied AI techniques to history learning. The VR tool allowed students to immerse themselves in virtual environments of cities and learn by browsing and interacting with virtual citizens. The results confirmed that this AI-enabled virtual learning mode was more engaging and motivating for students than simply reading history texts or watching educational videos. Koć-Januchta et al. (2020) compared student interaction and learning quality between the designed AI-enriched biology books and traditional e-books. The results showed that the students who used the AI-enriched book asked more questions and had higher retention than those who read the traditional e-books.

4.2 RQ2: What AI algorithms are used to achieve those functions in online higher education?

Among the 32 reviewed articles, 24 articles specified the AI algorithms they used in the research. The remaining eight articles used AI systems or tools (e.g., recommendation systems) but did not specify the AI algorithms they used. Among the 24 articles, the most commonly used AI algorithms were DT (n = 14, 44%), NN (n = 8, 25%), NB (n = 7, 22%) and SVM (n = 7, 22%). Some research used multiple algorithms in one study (see Fig. 4 and Appendix Table 3).

The distribution of AI algorithms. Note: DT: Decision Tree; NN: Neural Network; SVM: Support Vector Machine; NB: Naive Bayes; RF: Random Forest; LGR: Logistic Regression; LR: Linear Regression; KNN: K-Nearest Neighbours; NLP: Natural Language Processing; BN: Bayes Network; XGBoost: Extreme Gradient Boosting; SVC: Support Vector Classification; Splines: Multivariate Adaptive Regression Splines; SMO: Sequential Minimal Optimizer; RG: Regression; LDA: Linear Discriminant Analysis; IOHMM: Input-Output Hidden Markov Model; GaNB: Gaussian Naive Bayes; GA: Genetic Algorithm; CART: Classification and Regression Tree; MLP: Multi-Layer Perceptron; BART: Bayesian Additive Regression Trees

The systematic review found that the relatively traditional algorithms used included DT, LGR, NB, SVM, and NLP. For example, Almeda et al. (2018) used the J48 DT to predict whether a student would successfully pass the course and further used regression models to predict students’ numerical grades. Mubarak et al. (2020) proposed two AI models, namely Logistic Regression and the input-output hidden Markov model, to predict student dropout risk. Yoo and Kim (2014) used online discussion participation as the predictor of class project performance and used Support Vector Machine (SVM) for data processing and automatic classification. Helal et al. (2018) created different classification models for predicting student performance, including two black-box methods (i.e., NB and SMO, an optimization algorithm for training SVM) and two white-box methods (i.e., J48 and JRip). Natural language processing (NLP) was applied to automatically assess student performance and to detect student satisfaction. For example, Aluthman (2016) adopted NLP techniques to automatically evaluate essays and provide students with both automated scores and immediate feedback. Hew et al. (2020) used NLP techniques to analyze student comments in order to predict students’ satisfaction levels with MOOCs.

Advanced machine learning algorithms such as NN and GA were also used in some research. For example, Sukhbaatar et al. (2019) employed the NN method to predict student failure tendency based on multiple variables extracted from the online learning activities in a learning management system. Results showed that 25% of the failing students were correctly identified after the first quiz submission; after the mid-term examination, 65% of the failing students were correctly predicted. Yang et al. (2017) presented a time series NN method for predicting the evolution of students’ average CFA grade in two MOOCs. Results showed that the NN-based algorithms consistently outperformed a baseline model that simply averaged historical CFA data. Christudas et al. (2018) presented a GA-enabled approach for recommending personalized learning content for individual students in the e-learning system, and the results showed an improvement in students’ final scores in the course.
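To make the idea of a historical-average baseline concrete, the Python sketch below predicts each next grade as the mean of all grades observed so far and scores the predictions with the mean absolute error. It is an illustrative assumption of what such a baseline can look like, not the implementation used by Yang et al. (2017); the function names and the grade sequence are hypothetical.

```python
from statistics import mean


def historical_average_forecast(history):
    """Predict the next value as the mean of all values observed so far."""
    if not history:
        raise ValueError("history must contain at least one observation")
    return mean(history)


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


# Hypothetical sequence of a student's average grades over four assessments.
grades = [0.60, 0.70, 0.80, 0.70]

# One-step-ahead forecasts for assessments 2 to 4, using only earlier grades.
predictions = [historical_average_forecast(grades[:i]) for i in range(1, len(grades))]
baseline_error = mean_absolute_error(grades[1:], predictions)

print(predictions)
print(baseline_error)
```

A learned model is usually judged by whether its error on held-out data is lower than the error of a simple baseline of this kind.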

An important factor in the AI models is the choice of input variables. The primary variables used in the 32 articles included demographics, assignments, previous scores, quiz access, forum access, course material access, login behavior, etc. (see Appendix Table 3). Demographic variables include students’ gender, age, race, and ethnicity. The assignment category includes whether the assignment is completed and submitted. The previous score refers to the historical grades of students for different courses or learning activities, the standardized high school test scores, or continuous assessment activities. Quiz access includes the number of quiz attempts, whether the quiz is completed, quiz scores, and solving time. The forum is where students exchange and communicate ideas on a particular subject with their instructor and peers. This category includes the number of forum views, number of forum posts, number of replies, post content, etc. The course material information contains the total time the material was viewed and the time spent on materials. Login behaviors mainly include the number of online learning days, the number of accesses, and the time spent per week. Among those variables, forum and course material access were the most commonly used, followed by student scores and login behaviors.

When using AI algorithms, researchers tended to compare the efficiency and effectiveness of different AI algorithms for the same research purpose. For example, Moreno-Marcos et al. (2018) collected data from a Java programming MOOC to determine what factors affected the predictions and in which way it was possible to predict scores. In this work, four algorithms, namely RG, SVM, DT, and RF, were used and the results were compared to identify which one performed best. In a blended learning context, Sukhbaatar et al. (2019) proposed an early prediction scheme to identify students who were at risk of failing. NN, SVM, DT, and NB methods were compared in terms of their effectiveness in predicting failure. Baneres et al. (2019) presented an adaptive predictive model called the Gradual At-Risk (GAR) model and developed an early warning system and an early feedback prediction system to identify and intervene with at-risk students. Four classification algorithms, NB, CART DT, KNN, and SVM, were tested to determine which one best fit the GAR model. In addition, Howard et al. (2018) examined eight prediction methods, including BART, RF, PCR, Splines, KNN, NN, and SVM, to predict students’ final grades. Huang et al. (2020) applied eight classifiers, namely GaNB, SVC, linear-SVC, LR, DT, RF, NN, and XGBoost, to students’ online learning logs to predict student academic performance. They also employed five evaluation measures, namely accuracy, precision, recall, the F1-measure, and AUC, to assess the predictive performance of the classification methods.
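The five evaluation measures named above have standard definitions for binary classification. The following Python sketch computes them from scratch on a small, entirely hypothetical set of labels and scores (1 = at-risk student, 0 = not at risk); the function names, the data, and the 0.5 decision threshold are assumptions for illustration and are not taken from any reviewed study. AUC is computed here as the fraction of positive-negative pairs ranked correctly, with ties counted as half.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical ground truth and predicted risk scores for eight students.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.2, 0.1, 0.7]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

metrics = classification_metrics(y_true, y_pred)
metrics["auc"] = auc(y_true, scores)
print(metrics)
```

Accuracy alone can be misleading when few students drop out or fail, which is one reason studies report recall, precision, F1, and AUC alongside it.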

4.3 RQ3: What are the effects and implications of AI applications on the instruction and learning processes in online higher education?

Four positive effects of using AI applications to improve instruction and learning quality in online higher education were identified: a high quality of AI-enabled prediction with multiple input variables (n = 20, 62%), a high quality of AI-enabled recommendations based on student characteristics (n = 5, 16%), an improvement of students’ academic performance (n = 5, 16%), and an improvement of online engagement and participation (n = 2, 6%) (see Fig. 5 and Appendix Table 4).

4.3.1 A high quality of AI-enabled prediction with multiple input variables

Evidence has been reported that students enrolled in online courses have higher dropout rates than those in traditional classroom settings (Breslow et al., 2013; Tyler-Smith, 2006). An increase in dropout rates unavoidably leads to reduced graduation rates, which may have a negative effect on online learning quality (Simpson, 2018). AI applications in online higher education are mainly prediction models that predict students’ risk of dropout and final academic performance. The prediction of student academic performance helps identify the students who have difficulties understanding the course materials or who are at risk of failing the exam (Tomasevic et al., 2020). For example, the results obtained by Baneres et al. (2019) showed that the prediction system achieved early detection of potential at-risk students, offered guidance and feedback with visualization dashboards, and enhanced the interaction with at-risk students. In this way, the prediction system helped instructors or administrators identify students’ learning issues, assist students in regulating and reflecting on their learning processes, and further provide students with instant intervention and guidance at an early stage of the course (Moreno-Marcos et al., 2018).

The articles reviewed in this work indicated a high accuracy of the prediction models that used multiple input variables and advanced AI algorithms. For example, Aguiar et al. (2014) found that the performance of the prediction models with ePortfolio data was consistently better than that of models based on academic performance data alone. Costa et al. (2017) predicted students’ academic failure in introductory programming courses based on multiple student data, including age, gender, student registration, semester, campus, year of enrolling in the course, status on discipline, number of correct exercises, and performance of the students, etc. Moreover, advanced AI algorithms such as genetic algorithms and the input-output hidden Markov model have been applied in prediction systems and were reported to achieve more accurate results than traditional algorithms (Mubarak et al., 2020). Therefore, to achieve an accurate prediction, AI-enabled models should first consider using multiple input variables from students’ learning processes rather than merely using summative performance scores, and second use advanced AI algorithms to model the relations between the learning inputs and performance outputs more precisely (Chassignol et al., 2018; Godwin & Kirn, 2020; Tomasevic et al., 2020).

4.3.2 A high quality of AI-enabled recommendations based on student characteristics

High-quality recommendation requires the algorithm model to take into consideration students’ diverse characteristics, such as knowledge levels, learning styles or preferences, and learning profiles and interests. Our review showed that five recommendation-related studies reported that their methods generated high-quality recommendations for students. For example, Benhamdi et al. (2017) proposed a new recommendation approach based on collaborative and content-based filtering to provide students with the best learning materials according to their preferences, interests, background knowledge, and memory capacity. The experiment results showed a significant difference between the marks of pre-tests and post-tests, which indicated that students acquired more knowledge when they used the proposed recommender system. Additionally, Bousbahi & Chorfi (2015) used the case-based reasoning (CBR) approach and a special information retrieval technique to recommend the most appropriate MOOCs for students based on their learning profiles, needs, and knowledge levels. In addition to personalized learning recommendation, Dwivedi & Bharadwaj (2015) designed an e-Learning recommender system for a group of students by considering students’ learning styles, knowledge levels, and ratings of learners in a group. The results demonstrated the effectiveness of the proposed group recommendation strategy. Although those studies verified the short-term effects of recommendation systems, there is a lack of investigations into the effects of applying recommendation systems or methods on students’ long-term learning. Future work should introduce both fixed and dynamic characteristics of students and carry out experiments with large sample sizes in order to confirm the accuracy of recommendation systems.
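As an illustration of how collaborative and content-based signals can be combined, the sketch below blends the two with a simple weighted sum. It is not the algorithm of Benhamdi et al. (2017) or of any other reviewed study; the student names, ratings, feature dimensions, and the weight `alpha` are all hypothetical values chosen for the example.

```python
from math import sqrt

# Hypothetical toy data, invented for illustration (ratings on a 1-5 scale).
RATINGS = {
    "ana": {"intro_video": 5, "quiz_basics": 4},
    "ben": {"intro_video": 5, "quiz_basics": 4,
            "advanced_reading": 2, "practice_set": 5},
    "cy": {"intro_video": 1, "advanced_reading": 5, "practice_set": 2},
}
# Assumed item descriptors: (interactive, text_heavy, advanced)
ITEM_FEATURES = {
    "intro_video": (0.5, 0.5, 0.0),
    "quiz_basics": (1.0, 0.0, 0.0),
    "advanced_reading": (0.0, 1.0, 1.0),
    "practice_set": (1.0, 0.0, 0.0),
}
# Assumed preference profile on the same three dimensions.
PROFILES = {"ana": (1.0, 0.2, 0.1)}
ITEMS = list(ITEM_FEATURES)


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rating_vector(student):
    """Ratings over all items, with 0 for items the student has not rated."""
    return [RATINGS[student].get(item, 0) for item in ITEMS]


def collaborative_score(student, item):
    """Similarity-weighted mean rating from other students, scaled to 0-1."""
    num = den = 0.0
    for other in RATINGS:
        if other == student or item not in RATINGS[other]:
            continue
        sim = cosine(rating_vector(student), rating_vector(other))
        num += sim * RATINGS[other][item]
        den += sim
    return (num / den) / 5.0 if den else 0.0


def content_score(student, item):
    """Match between the student's profile and the item's descriptors."""
    return cosine(PROFILES[student], ITEM_FEATURES[item])


def recommend(student, alpha=0.5):
    """Rank unrated items by alpha * collaborative + (1 - alpha) * content."""
    unseen = [i for i in ITEMS if i not in RATINGS[student]]
    scored = [
        (i, alpha * collaborative_score(student, i)
            + (1 - alpha) * content_score(student, i))
        for i in unseen
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = recommend("ana")
print(ranking)
```

The profile vector is where student characteristics such as learning style or knowledge level would enter; richer systems would learn these profiles and the blending weight from data rather than fix them by hand.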

4.3.3 An improvement of students’ academic performance

The results indicated that AI systems and tools helped improve students’ academic performance by optimizing learning environments and experiences, recommending learning resources, or providing automatic feedback and assessment in online learning. For example, Ijaz et al. (2017) found that students in the VR context combined with AI techniques performed better in comprehending the materials than the control groups without AI support. Cárdenas-Cobo et al. (2020) presented an easy-to-use web application called CARAMBA involving Scratch alongside a recommender system for exercises. Results confirmed that, in terms of pass rates, recommending exercises in Scratch had a positive effect on students’ programming abilities. The pass rate was over 52%, which was 8% higher than that in the previous exercises with Scratch (without recommendation) and 21% higher than the historical results of traditional programming teaching (without Scratch). Compared to traditional learning approaches (e.g., reading textbooks), AI can provide students with more intelligent and personalized forms of interaction, so that the interaction between students and learning resources and the degree of participation in learning can be improved. More importantly, improper contents that do not fit students’ learning styles or their knowledge or ability levels may lead to information overload or a lack of learning orientation, which would negatively affect student academic performance (Chen, 2008; Christudas et al., 2018). AI can optimize personalized resource recommendations based on students’ characteristics, which has been emphasized as a crucial issue in e-learning and online learning (Chang & Ke, 2013).
In addition, providing automatic feedback was also a good way to improve student academic performance because it could give students personalized diagnoses and suggestions (e.g., Aluthman, 2016), which improves students’ learning motivation and effectiveness (Gardner et al., 2002; Henly, 2003). In conclusion, the existing research suggests that, with the support of AI, student academic performance can be improved in terms of final grades, course completion rates, or learning satisfaction levels.

4.3.4 An improvement of online engagement and participation

AI systems or techniques can positively influence students’ online engagement by providing personalized resources, automatic assessment, and timely feedback. For example, Ijaz et al. (2017) investigated a technological combination of AI-enabled virtual reality with the aim of improving learning experiences and learner engagement. The results showed that, compared to simply reading the history texts or watching educational videos, the AI-enabled learning mode was more engaging and motivating for the students. Koć-Januchta et al. (2020) explored students’ engagement and patterns of activity with an AI-enriched book and a traditional digital e-book. The research collected students’ pre- and post-test scores, cognitive load, motivation, usability questionnaires, and interviews. The results showed that students who used the AI-enriched book asked more questions and had higher retention than those who read the traditional e-book, which indicated an improvement in student engagement. Given that online students often have a low level of participation in online learning, which can lead to problems such as dropping out or academic failure, these results indicate that AI-supported learning has the potential to improve students’ online engagement with learning materials, online courses, and their peers, and thus to reduce academic failure to some extent.

5 Discussions and implications

The application of artificial intelligence (AI) has brought new ways of improving instruction and learning in online higher education. Given that few literature reviews have examined the actual effects of AI in online higher education, it is necessary to gain a deep understanding of the functions, effects, and implications of AI applications in online higher education. Furthermore, there has been a critical gap between what AIEd technologies can do, how they are implemented in authentic online higher education settings, and to what extent the use of AI applications influences actual online instruction and learning (Kabudi et al., 2021; Ouyang & Jiao, 2021). This systematic literature review specifically focuses on AI applications in online higher education, and the results show that performance prediction, resource recommendation, automatic assessment, and improvement of learning experiences are the four main functions of AI applications in online higher education. Regarding AI techniques, algorithms such as DT, LRG, NN, and BT are the most commonly adopted in online educational contexts. More advanced algorithms such as GA and DNN have seldom been found, which is consistent with the findings of Zawacki-Richter et al. (2019) and Chen et al. (2020b). Regarding the actual effects of AI in online higher education, several empirical studies have reported positive effects of AI applications in improving online instruction and learning quality, including a high quality of AI-enabled prediction, a high quality of AI-enabled recommendations, an improvement of academic performance, and an improvement of online engagement and participation.
Based on the review results, to achieve a high quality of prediction, assessment, or recommendation, AI-enabled systems or models should first take into consideration students’ diverse characteristics from both learning processes and summative performance, and second use advanced AI algorithms to produce more precise outcomes in order to improve students’ learning motivation, engagement, and performance. With the innovation and advancement of AI technologies and techniques, the applications of AI promote the transformation of higher education from traditional, instructor-directed lecturing to AI-enabled, student-centered learning (Chen et al., 2020a; Ouyang & Jiao, 2021).

Based on the results of this literature review, we propose theoretical, technological, and practical implications for the applications of AI in online higher education. First, from the theoretical perspective, educational theories have rarely been adopted to underpin the application of AI in online higher education. Similar to previous work (Chen et al., 2020b; Ouyang & Jiao, 2021; Zawacki-Richter et al., 2019), few studies have focused on building the connection between educational and learning theories and AI-supported online higher education. Although advanced AI technology has the potential to improve online higher education quality (Holmes et al., 2019), good educational outcomes do not occur by merely using advanced AI technologies (Castañeda & Selwyn, 2018; Du Boulay, 2000; Selwyn, 2016). More importantly, the use of AI technologies and applications generally implies different pedagogical perspectives, which in turn critically influence the design and implementation of instruction and learning (Hwang et al., 2020; Ouyang & Jiao, 2021). As Chen et al. (2020b) suggested, social constructivism (Vygotsky, 1978), situational theory (Kim & Grunig, 2011), and distributed cognition (Hollan et al., 2000) are worth studying when integrating AI applications in online higher education. Ouyang & Jiao (2021) proposed three paradigms of AIEd from the theoretical perspective (i.e., AI-directed, learner-as-recipient; AI-supported, learner-as-collaborator; and AI-empowered, learner-as-leader), which could serve as a reference framework to explore varied ways of addressing learning and instructional issues with AI applications. In summary, based on the existing educational and learning theories, researchers and practitioners can integrate pedagogy and learning sciences with AIEd applications to derive multiple perspectives and interpretations about AIEd in online higher education (Hwang et al., 2020; Hwang & Tu, 2021; Ouyang & Jiao, 2021).

Second, from the technological perspective, AI technologies, models, and applications in online higher education are expected to integrate students’ learning process characteristics with AI, to connect and strengthen interactions among AI, educators, and students, and to address issues regarding biases in AI algorithms and the non-transparency of why and how AI decisions are made (Hwang et al., 2020; Hwang & Tu, 2021; Ouyang & Jiao, 2021). This review has illustrated the importance of collecting and analyzing learning process data in addition to summative data in order to achieve a high quality of AI-enabled prediction or recommendation. The advancement of emerging computer technologies, such as quantum computing, wearable devices, robot control, sensing devices, and 5G wireless communication technologies, has provided new affordances and opportunities to integrate AI with the collection and analysis of online learning processes in online higher education (Chen et al., 2020b; Hwang et al., 2020). When integrated into online higher education, AI has the potential to provide students with practical or experiential learning experiences, particularly when AI is combined with other technologies such as VR, 3D, gaming, and simulation, thereby improving the student learning experience and academic performance. To advance the state of the art of AIEd technologies in online higher education, it is necessary to build a bridge that facilitates interaction and collaboration between educators or students and AI systems or tools, which can help obtain a multifaceted understanding of student status and achieve good learning performance prediction (Giannakos et al., 2019). Real-time AI algorithm models have the potential to collect information from humans and feed it back to AI systems in a timely fashion.
In this way, AI applications can collect and make sense of user-generated data to provide a deeper understanding of the real-time interaction between humans and technologies in online higher education (Giannakos et al., 2019; Ouyang & Jiao, 2021; Xie et al., 2019). Future research can consider developing prediction models that can be used in heterogeneous contexts across platforms, thematic areas, and course durations. This approach has the potential to enhance the predictive power of current models by improving algorithms or adding novel higher-order features from students or groups (Moreno-Marcos et al., 2019). Overall, since online higher education stresses learner-centered learning, the integration of human intelligence and machine intelligence can help AIEd transform from traditional lecturing to learner-centered learning (Ouyang & Jiao, 2021).

Third, from the practical perspective, AIEd advancement calls for more empirical research to examine the different roles of AI in online higher education, how AI is connected to existing educational and learning theories, and to what extent the use of AI technologies influences online learning quality (Hwang et al., 2020; Kabudi et al., 2021; Ouyang & Jiao, 2021). As researchers pointed out in a recent literature review, there has been a discrepancy between the potential of AIEd and its actual implementation in online higher education (Kabudi et al., 2021). The discrepancy is caused by a separation of AI technology from the complex educational system (Xu & Ouyang, 2021). The review results also show that little research has examined the long-term effects and implications of applying AI to improve online instruction and learning. Therefore, AIEd needs to be designed and applied with the awareness that AI technology is part of a larger educational system consisting of multiple components, e.g., learners, instructors, and information and resources (Riedl, 2019). To better examine the learning effects, AIEd empirical research should design more comprehensive assessment methods that incorporate various student features (e.g., student motivation, anxiety, higher-order thinking, behavioral patterns) in the AI model, and use multimodal learning analytics to collect and analyze data (e.g., process-oriented discourse data, physiological sensing data, eye-tracking) (Ouyang & Jiao, 2021). In addition, the review indicates that most empirical research is conducted over a short period of time; therefore, more empirical research with larger sample sizes and longer experiment durations is needed to verify the effects of AI applications in online higher education.
Overall, a deep understanding can be achieved by conducting more empirical research to examine the roles of AI in online higher education, the educational and learning theories that underpin AIEd, and the actual effects of AI on online learning quality (Gartner, 2019; Law, 2019; Tegmark, 2017).

6 Conclusions

This systematic review provides an overview of empirical research on the applications of AI in online higher education. Specifically, this literature review examines the functions of AI in empirical research, the algorithms used in empirical research, and the effects and implications generated by empirical research. Although the research and practice of AI applications in online higher education are still in a preliminary and exploratory stage, AI has been shown to enhance online instruction and learning quality by offering accurate prediction and assessment and by engaging students with online materials and environments (Yang et al., 2020; Zawacki-Richter et al., 2019). The innovative applications of AI in online higher education are conducive to reforming instructional design and development methods, as well as to advancing the construction of an intelligent, networked, personalized, and lifelong educational system (Arsovic & Stefanovic, 2020; Ouyang & Jiao, 2021; Yang et al., 2020).

This systematic review has several limitations, which lead to future research directions. First, the search query process might not guarantee completeness and absence of bias. Although we used the keyword list suggested by previous review studies to search for relevant articles, not all studies were included because diverse terms have been used to represent AI technologies. Second, the studies reviewed in this article were filtered from seven prominent databases and were limited to journal articles. For example, recent conference proceedings were excluded, which may lead to the absence of the latest technical reports of AIEd in online higher education. Since AIEd is an interdisciplinary field where scholars come from different fields, particularly computer science and education, studies might be published as conference papers that were not included. Therefore, future studies can adjust the screening criteria so that more relevant studies can be included. Third, the current study only provided a systematic overview of AI in online higher education; a formal meta-analysis that reports the effect sizes of the selected empirical research would be beneficial for gaining a deeper understanding of the field.

Critical questions that need to be carefully considered include: How can AI algorithms and models be improved in online higher education? How should AI systems or tools be implemented to improve instruction and learning practices in online higher education? How can longitudinal empirical research be conducted to reveal authentic and long-term results of applying AI in online instruction and learning? This systematic review has provided initial implications for those questions, such as taking into consideration students’ diverse characteristics, using advanced AI algorithms to achieve more precise results, and conducting longitudinal research to examine the long-term effects of AI applications. Future work should continue this line of research and practice. Overall, consistent with previous work (e.g., Deeva et al., 2021; Holmes et al., 2019; Hwang et al., 2020), AIEd applications in online higher education are expected to enable learners to reflect on learning and inform AI systems to adapt accordingly, improve prediction, recommendation, and assessment accuracy, and facilitate learner agency, empowerment, and personalization in student-centered learning.

References

* Reviewed articles (n = 32)

* Aguiar, E., Chawla, N. V., Brockman, J., Ambrose, G. A., & Goodrich, V. (2014). Engagement vs performance: using electronic portfolios to predict first semester engineering student retention. Journal of Learning Analytics, 1(3), 7–33. https://doi.org/10.18608/jla.2014.13.3

* Almeda, M. V., Zuech, J., Utz, C., Higgins, G., Reynolds, R., & Baker, R. S. (2018). Comparing the factors that predict completion and grades among for-credit and open/MOOC students in online learning. Online Learning, 22(1), 1–18. https://doi.org/10.24059/olj.v22i1.1060

* Aluthman, E. S. (2016). The effect of using automated essay evaluation on ESL undergraduate students’ writing skill. International Journal of English Linguistics, 6(5), 54. https://doi.org/10.5539/ijel.v6n5p54

Alyahyan, E., & Düştegör, D. (2020). Predicting academic success in higher education: Literature review and best practices. International Journal of Educational Technology in Higher Education, 17(1). https://doi.org/10.1186/s41239-020-0177-7

Arsovic, B., & Stefanovic, N. (2020). E-learning based on the adaptive learning model: Case study in Serbia. Sadhana-Academy Proceedings in Engineering Sciences, 45(1), 266. https://doi.org/10.1007/s12046-020-01499-8

* Baneres, D., Rodriguez-Gonzalez, M. E., & Serra, M. (2019). An early feedback prediction system for learners at-risk within a first-year higher education course. IEEE Transactions on Learning Technologies, 12(2), 249–263. https://doi.org/10.1109/TLT.2019.2912167

* Benhamdi, S., Babouri, A., & Chiky, R. (2017). Personalized recommender system for e-Learning environment. Education and Information Technologies, 22(4), 1455–1477. https://doi.org/10.1007/s10639-016-9504-y

* Bousbahi, F., & Chorfi, H. (2015). MOOC-Rec: A case based recommender system for MOOCs. Procedia - Social and Behavioral Sciences, 195, 1813–1822. https://doi.org/10.1016/j.sbspro.2015.06.395

Breslow, L., Pritchard, D. E., DeBoer, J., Stump, G. S., Ho, A. D., & Seaton, D. T. (2013). Studying learning in the worldwide classroom: Research into edX’s first MOOC. Research & Practice in Assessment, 8, 13–25. Retrieved from https://files.eric.ed.gov/fulltext/EJ1062850.pdf

* Burgos, C., Campanario, M. L., de la Peña, D., Lara, J. A., Lizcano, D., & Martínez, M. A. (2018). Data mining for modeling students’ performance: A tutoring action plan to prevent academic dropout. Computers and Electrical Engineering, 66, 541–556. https://doi.org/10.1016/j.compeleceng.2017.03.005

* Cárdenas-Cobo, J., Puris, A., Novoa-Hernández, P., Galindo, J. A., & Benavides, D. (2020). Recommender systems and scratch: An integrated approach for enhancing computer programming learning. IEEE Transactions on Learning Technologies, 13(2), 387–403. https://doi.org/10.1109/TLT.2019.2901457

Castañeda, L., & Selwyn, N. (2018). More than tools? Making sense of the ongoing digitizations of higher education. International Journal of Educational Technology in Higher Education, 15(22). https://doi.org/10.1186/s41239-018-0109-y

Chang, T. Y., & Ke, Y. R. (2013). A personalized e-course composition based on a genetic algorithm with forcing legality in an adaptive learning system. Journal of Network and Computer Applications, 36(1), 533–542. https://doi.org/10.1016/j.jnca.2012.04.002

Chassignol, M., Khoroshavin, A., Klimova, A., & Bilyatdinova, A. (2018). Artificial intelligence trends in education: A narrative overview. 7th International Young Scientist Conference on Computational Science. Procedia Computer Science, 136, 16–24.

Chen, C. M. (2008). Intelligent web-based learning system with personalized learning path guidance. Computers & Education, 51(2), 787–814. https://doi.org/10.1016/j.compedu.2007.08.004

* Chen, W., Niu, Z., Zhao, X., & Li, Y. (2014). A hybrid recommendation algorithm adapted in e-learning environments. World Wide Web, 17(2), 271–284. https://doi.org/10.1007/s11280-012-0187-z

Chen, X., Xie, H., & Hwang, G. J. (2020a). A multi-perspective study on artificial intelligence in education: grants, conferences, journals, software tools, institutions, and researchers. Computers and Education: Artificial Intelligence, 1, 100005. https://doi.org/10.1016/j.caeai.2020.100005

Chen, X., Xie, H., Zou, D., & Hwang, G. J. (2020b). Application and theory gaps during the rise of artificial intelligence in education. Computers and Education: Artificial Intelligence, 1(July), 100002. https://doi.org/10.1016/j.caeai.2020.100002

* Christudas, B. C. L., Kirubakaran, E., & Thangaiah, P. R. J. (2018). An evolutionary approach for personalization of content delivery in e-learning systems based on learner behavior forcing compatibility of learning materials. Telematics and Informatics, 35(3), 520–533. https://doi.org/10.1016/j.tele.2017.02.004

* Costa, E. B., Fonseca, B., Santana, M. A., de Araújo, F. F., & Rego, J. (2017). Evaluating the effectiveness of educational data mining techniques for early prediction of students’ academic failure in introductory programming courses. Computers in Human Behavior, 73, 247–256. https://doi.org/10.1016/j.chb.2017.01.047

Deeva, G., Bogdanova, D., Serral, E., Snoeck, M., & De Weerdt, J. (2021). A review of automated feedback systems for learners: Classification framework, challenges and opportunities. Computers & Education, 162, 104094. https://doi.org/10.1016/j.compedu.2020.104094

Du Boulay, B. (2000). Can we learn from ITSs? In International conference on intelligent tutoring systems (pp. 9–17). Springer. https://doi.org/10.1007/3-540-45108-0_3

* Dwivedi, P., & Bharadwaj, K. K. (2015). E-Learning recommender system for a group of learners based on the unified learner profile approach. Expert Systems, 32(2), 264–276. https://doi.org/10.1111/exsy.12061

Gardner, L., Sheridan, D., & White, D. (2002). A web-based learning and assessment system to support flexible education. Journal of Computer Assisted Learning, 18, 125–136. https://doi.org/10.1046/j.0266-4909.2001.00220.x

Gartner (2019). Hype cycle for emerging technologies, 2019. Gartner. Retrieved on 2021/1/1 from https://www.gartner.com/en/documents/3956015/hype-cycle-for-emerging-technologies-2019

George, G., & Lal, A. M. (2019). Review of ontology-based recommender systems in e-learning. Computers & Education, 142(July), 103642. https://doi.org/10.1016/j.compedu.2019.103642

Giannakos, M. N., Sharma, K., Pappas, I. O., Kostakos, V., & Velloso, E. (2019). Multimodal data as a means to understand the learning experience. International Journal of Information Management, 48, 108–119. https://doi.org/10.1016/j.ijinfomgt.2019.02.003

Godwin, A., & Kirn, A. (2020). Identity‐based motivation: Connections between first‐year students' engineering role identities and future‐time perspectives. Journal of Engineering Education, 109(3), 362–383. https://doi.org/10.1002/jee.20324

Graneheim, U. H., & Lundman, B. (2004). Qualitative content analysis in nursing research: Concepts, procedures and measures to achieve trustworthiness. Nurse Education Today, 24(2), 105–112. https://doi.org/10.1016/j.nedt.2003.10.001

Guan, C., Mou, J., & Jiang, Z. (2020). Artificial intelligence innovation in education: A twenty-year data-driven historical analysis. International Journal of Innovation Studies, 4(4), 134–147. https://doi.org/10.1016/j.ijis.2020.09.001

Harasim, L. (2000). Shift happens: Online education as a new paradigm in learning. The Internet and Higher Education, 3(1–2), 41–61. https://doi.org/10.1016/S1096-7516(00)00032-4

* Helal, S., Li, J., Liu, L., Ebrahimie, E., Dawson, S., Murray, D. J., & Long, Q. (2018). Predicting academic performance by considering student heterogeneity. Knowledge-Based Systems, 161(July), 134–146. https://doi.org/10.1016/j.knosys.2018.07.042

Henly, D. C. (2003). Use of web-based formative assessment to support student learning in a metabolism/nutrition unit. European Journal of Dental Education, 7(3), 116–122. https://doi.org/10.1034/j.1600-0579.2003.00310.x

* Hew, K. F., Hu, X., Qiao, C., & Tang, Y. (2020). What predicts student satisfaction with MOOCs: A gradient boosting trees supervised machine learning and sentiment analysis approach. Computers & Education, 145, 103724. https://doi.org/10.1016/j.compedu.2019.103724

Hinojo-Lucena, F. J., Aznar-Díaz, I., Cáceres-Reche, M. P., & Romero-Rodríguez, J. M. (2019). Artificial intelligence in higher education: A bibliometric study on its impact in the scientific literature. Education Sciences, 9(1), 51. https://doi.org/10.3390/educsci9010051

Hollan, J., Hutchins, E., & Kirsh, D. (2000). Distributed cognition: Toward a new foundation for human-computer interaction research. ACM Transactions on Computer-Human Interaction (TOCHI), 7(2). https://doi.org/10.1145/353485.353487

Holmberg, B. (2005). Theory and practice of distance education. Routledge.

Holmes, W., Bialik, M., & Fadel, C. (2019). Artificial intelligence in education: Promises and implications for teaching and learning. Center for Curriculum Redesign.

* Hooshyar, D., Ahmad, R. B., Yousefi, M., Fathi, M., Horng, S. J., & Lim, H. (2016). Applying an online game-based formative assessment in a flowchart-based intelligent tutoring system for improving problem-solving skills. Computers & Education, 94, 18–36. https://doi.org/10.1016/j.compedu.2015.10.013

* Howard, E., Meehan, M., & Parnell, A. (2018). Contrasting prediction methods for early warning systems at undergraduate level. The Internet and Higher Education, 37, 66–75. https://doi.org/10.1016/j.iheduc.2018.02.001

Hsieh, H. F., & Shannon, S. E. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

Hu, Y. H. (2021). Effects and acceptance of precision education in an AI-supported smart learning environment. Education and Information Technologies. https://doi.org/10.1007/s10639-021-10664-3

* Hu, Y., Lo, C., & Shih, S. (2014). Developing early warning systems to predict students’ online learning performance. Computers in Human Behavior, 36, 469–478. https://doi.org/10.1016/j.chb.2014.04.002

* Huang, A. Y. Q., Lu, O. H. T., Huang, J. C. H., Yin, C. J., & Yang, S. J. H. (2020). Predicting students’ academic performance by using educational big data and learning analytics: evaluation of classification methods and learning logs. Interactive Learning Environments, 28(2), 206–230. https://doi.org/10.1080/10494820.2019.1636086

Hwang, G. J., & Tu, Y. F. (2021). Roles and research trends of artificial intelligence in mathematics education: A bibliometric mapping analysis and systematic review. Mathematics. https://doi.org/10.3390/math9060584

Hwang, G. J., Xie, H., Wah, B. W., & Gašević, D. (2020). Vision, challenges, roles and research issues of artificial intelligence in education. Computers and Education: Artificial Intelligence, 1, 100001. https://doi.org/10.1016/j.caeai.2020.100001

* Ijaz, K., Bogdanovych, A., & Trescak, T. (2017). Virtual worlds vs books and videos in history education. Interactive Learning Environments, 25(7), 904–929. https://doi.org/10.1080/10494820.2016.1225099

* Jayaprakash, S. M., Moody, E. W., Eitel, J. M., Regan, J. R., & Baron, J. D. (2014). Early alert of academically at-risk students: An open source analytics initiative. Journal of Learning Analytics, 1, 6–47. https://doi.org/10.18608/jla.2014.11.3

Kabudi, T., Pappas, I., & Olsen, D. H. (2021). AI-enabled adaptive learning systems: A systematic mapping of the literature. Computers and Education: Artificial Intelligence, 2, 100017. https://doi.org/10.1016/j.caeai.2021.100017

Kim, J. N., & Grunig, J. E. (2011). Problem solving and communicative action: A situational theory of problem solving. Journal of Communication, 61(1), 120–149. https://doi.org/10.1111/j.1460-2466.2010.01529.x

* Koć-Januchta, M. M., Schönborn, K. J., Tibell, L. A. E., Chaudhri, V. K., & Heller, H. C. (2020). Engaging with biology by asking questions: Investigating students’ interaction and learning with an artificial intelligence-enriched textbook. Journal of Educational Computing Research, 58(6), 1190–1224. https://doi.org/10.1177/0735633120921581

Law, N. W. Y. (2019). Human development and augmented intelligence. In The 20th international conference on artificial intelligence in education (AIED 2019). Springer. Retrieved on 2021/1/1 from https://www.sciencedirect.com/science/refhub/S2666-920X(21)00014-undefined/sref31

* Li, J., Chang, Y., Chu, C., & Tsai, C. (2012). A self-adjusting e-course generation process for personalized learning. Expert Systems with Applications, 39(3), 3223–3232. https://doi.org/10.1016/j.eswa.2011.09.009

Liu, M., Kang, J., Zou, W., Lee, H., Pan, Z., & Corliss, S. (2017). Using data to understand how to better design adaptive learning. Technology Knowledge and Learning, 22(3), 271–298. https://doi.org/10.1007/s10758-017-9326-z

Liu, S., Guo, D., Sun, J., Yu, J., & Zhou, D. (2020). MapOnLearn: The use of maps in online learning systems for education sustainability. Sustainability, 12(17), 7018. https://doi.org/10.3390/su12177018

Liz-Domínguez, M., Caeiro-Rodríguez, M., Llamas-Nistal, M., & Mikic-Fonte, F. A. (2019). Systematic literature review of predictive analysis tools in higher education. Applied Sciences (Switzerland), 9(24). https://doi.org/10.3390/app9245569

Lykourentzou, I., Giannoukos, I., Nikolopoulos, V., Mpardis, G., & Loumos, V. (2009). Dropout prediction in e-learning courses through the combination of machine learning techniques. Computers & Education, 53(3), 950–965. https://doi.org/10.1016/j.compedu.2009.05.010

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & PRISMA Group. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7), e1000097. https://doi.org/10.1371/journal.pmed.1000097

Moreno-Marcos, P. M., Muñoz-Merino, P. J., Alario-Hoyos, C., Estévez-Ayres, I., & Delgado Kloos, C. (2018). Analysing the predictive power for anticipating assignment grades in a massive open online course. Behaviour & Information Technology, 37(10–11), 1021–1036. https://doi.org/10.1080/0144929X.2018.1458904

Moreno-Marcos, P. M., Alario-Hoyos, C., Muñoz-Merino, P. J., & Kloos, C. D. (2019). Prediction in MOOCs: A review and future research directions. IEEE Transactions on Learning Technologies, 12(3), 384–401. https://doi.org/10.1109/TLT.2018.2856808

Moseley, L. G., & Mead, D. M. (2008). Predicting who will drop out of nursing courses: a machine learning exercise. Nurse Education Today, 28(4), 469–475. https://doi.org/10.1016/j.nedt.2007.07.012

* Mubarak, A. A., Cao, H., & Zhang, W. (2020). Prediction of students’ early dropout based on their interaction logs in online learning environment. Interactive Learning Environments. https://doi.org/10.1080/10494820.2020.1727529

Neuendorf, K. A., & Kumar, A. (2015). Content analysis. The International Encyclopedia of Political Communication, 1–10. https://doi.org/10.1002/9781118541555.wbiepc065

Ouyang, F., & Jiao, P. (2021). Artificial intelligence in education: The three paradigms. Computers and Education: Artificial Intelligence, 2, 100020. https://doi.org/10.1016/j.caeai.2021.100020

Rico-Juan, J. R., Gallego, A. J., & Calvo-Zaragoza, J. (2019). Automatic detection of inconsistencies between numerical scores and textual feedback in peer-assessment processes with machine learning. Computers & Education, 140, 103609. https://doi.org/10.1016/j.compedu.2019.103609

Riedl, M. O. (2019). Human-centered artificial intelligence and machine learning. Human Behavior and Emerging Technologies, 1(1), 33–36. https://doi.org/10.1002/hbe2.117

Rowe, M. (2019). Shaping our algorithms before they shape us. In Artificial intelligence and inclusive education (pp. 151–163). Springer. https://doi.org/10.1007/978-981-13-8161-4_9

Romero, C., Espejo, P. G., Zafra, A., Romero, J. R., & Ventura, S. (2013). Web usage mining for predicting final marks of students that use Moodle courses. Computer Applications in Engineering Education, 21(1), 135–146. https://doi.org/10.1002/cae.20456

* Romero, C., López, M. I., Luna, J. M., & Ventura, S. (2013). Predicting students’ final performance from participation in on-line discussion forums. Computers & Education, 68, 458–472. https://doi.org/10.1016/j.compedu.2013.06.009

Selwyn, N. (2016). Is technology good for education? Polity Press. Retrieved on 2021/1/10 from http://au.wiley.com/WileyCDA/WileyTitle/productCd-0745696465.html

Shahiri, A. M., Husain, W., & Rashid, N. A. (2015). A review on predicting student’s performance using data mining techniques. Procedia Computer Science, 72, 414–422. https://doi.org/10.1016/j.procs.2015.12.157

Simpson, O. (2018). Supporting students in online, open and distance learning (1st ed.). Routledge

* Sukhbaatar, O., Usagawa, T., & Choimaa, L. (2019). An artificial neural network based early prediction of failure-prone students in blended learning course. International Journal of Emerging Technologies in Learning, 14(19), 77–92. https://doi.org/10.3991/ijet.v14i19.10366

Tang, K. Y., Chang, C. Y., & Hwang, G. J. (2021). Trends in artificial intelligence supported e-learning: A systematic review and co-citation network analysis (1998–2019). Interactive Learning Environments. https://doi.org/10.1080/10494820.2021.1875001

Tegmark, M. (2017). Life 3.0: Being human in the age of artificial intelligence. Knopf

Tomasevic, N., Gvozdenovic, N., & Vranes, S. (2020). An overview and comparison of supervised data mining techniques for student exam performance prediction. Computers & Education, 143, 103676. https://doi.org/10.1016/j.compedu.2019.103676

Tyler-Smith, K. (2006). Early attrition among first time eLearners: A review of factors that contribute to drop-out, withdrawal and non-completion rates of adult learners undertaking eLearning programmes. Journal of Online Learning and Teaching, 2(2), 73–85. Retrieved on 2021/1/11 from https://jolt.merlot.org/documents/Vol2_No2_TylerSmith_000.pdf

Vygotsky, L. (1978). Mind in society: The development of higher psychological processes. Harvard University Press

* Wakelam, E., Jefferies, A., Davey, N., & Sun, Y. (2020). The potential for student performance prediction in small cohorts with minimal available attributes. British Journal of Educational Technology, 51(2), 347–370. https://doi.org/10.1111/bjet.12836

Wohlin, C. (2014). Guidelines for snowballing in systematic literature studies and a replication in software engineering. In Proceedings of the 18th international conference on evaluation and assessment in software engineering (pp. 1–10). https://doi.org/10.1145/2601248.2601268

Xie, H., Chu, H. C., Hwang, G. J., & Wang, C. C. (2019). Trends and development in technology-enhanced adaptive/personalized learning: A systematic review of journal publications from 2007 to 2017. Computers & Education, 140, 103599. https://doi.org/10.1016/j.compedu.2019.103599

* Xing, W., Chen, X., Stein, J., & Marcinkowski, M. (2016). Temporal predication of dropouts in MOOCs: Reaching the low hanging fruit through stacking generalization. Computers in Human Behavior, 58, 119–129. https://doi.org/10.1016/j.chb.2015.12.007

Xu, W., & Ouyang, F. (2021). A systematic review of AI role in the educational system based on a proposed conceptual framework. Education and Information Technologies. https://doi.org/10.1007/s10639-021-10774-y

* Xu, X., Wang, J., Peng, H., & Wu, R. (2019). Prediction of academic performance associated with internet usage behaviors using machine learning algorithms. Computers in Human Behavior, 98, 166–173. https://doi.org/10.1016/j.chb.2019.04.015

* Yang, T., Brinton, C. G., & Joe-Wong, C. (2017). Behavior-based grade prediction for MOOCs via time series neural networks. IEEE Journal of Selected Topics in Signal Processing, 11(5), 716–728. https://doi.org/10.1109/JSTSP.2017.2700227

Yang, Y. T. C., Gamble, J. H., Hung, Y. W., & Lin, T. Y. (2014). An online adaptive learning environment for critical-thinking-infused English literacy instruction. British Journal of Educational Technology, 45(4), 723–747. https://doi.org/10.1111/bjet.12080

Yang, C., Huan, S., & Yang, Y. (2020). A practical teaching mode for colleges supported by artificial intelligence. International Journal of Emerging Technologies in Learning, 15(17), 195–206. https://doi.org/10.3991/ijet.v15i17.16737

* Yoo, J., & Kim, J. (2014). Can online discussion participation predict group project performance? Investigating the roles of linguistic features and participation patterns. International Journal of Artificial Intelligence in Education, 24, 8–32. https://doi.org/10.1007/s40593-013-0010-8

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39. https://doi.org/10.1186/s41239-019-0171-0

Zhai, X., Chu, X., Chai, C. S., Jong, M. S. Y., Istenic, A., … Li, Y. (2021). A review of artificial intelligence (AI) in education from 2010 to 2020. Complexity, 2021, 8812542. https://doi.org/10.1155/2021/8812542

* Zohair, L. M. (2019). Prediction of student’s performance by modelling small dataset size. International Journal of Educational Technology in Higher Education, 16(1). https://doi.org/10.1186/s41239-019-0160-3

Zupic, I., & Čater, T. (2015). Bibliometric methods in management and organization. Organizational Research Methods, 18(3), 429–472. https://doi.org/10.1177/1094428114562629

Acknowledgements

This work is financially supported by the National Natural Science Foundation of China, No. 62177041.

Ethics declarations

Conflict of interest

There is no conflict of interest to declare.

Cite this article

Ouyang, F., Zheng, L. & Jiao, P. Artificial intelligence in online higher education: A systematic review of empirical research from 2011 to 2020. Educ Inf Technol 27, 7893–7925 (2022). https://doi.org/10.1007/s10639-022-10925-9
