Abstract

The aim of this study was to develop a scale to measure the computational thinking skills (CTS) of high school students. The validity and reliability of the scale were tested with the participation of 785 students. Exploratory and confirmatory factor analyses showed that the five-point Likert scale had a four-factor structure comprising 42 items: Problem Solving, Cooperative Learning & Critical Thinking, Creative Thinking, and Algorithmic Thinking. The factor loadings of the items ranged from .475 to .853. The confirmatory factor analysis performed to examine the factorial validity of the scale yielded a significant chi-square value (χ2 = 2679.07, df = 815, p = 0.00). The fit index values were RMSEA = .0075, SRMR = .081, NNFI = .91, CFI = .92, GFI = .90 and AGFI = .88. Cronbach’s alpha internal consistency coefficient was .969 for the overall scale. In addition, the stability of the scale was examined with the test-retest method to obtain further evidence of its reliability. The analyses indicated that the scale is a valid and reliable measurement tool that can be used to measure the CTS of high school students.

1 Introduction

Pioneering thinkers in the field of computational thinking include Papert, Wing and Wolfram. Wing (2006) defines computational thinking (CT) as a way of solving problems, designing systems and understanding human behavior by drawing on the concepts fundamental to computer science. One of the earliest references to CT is found in Papert (1996), where Papert describes the value of applying human cognitive primitives to object-oriented problems by noticing the relationships between the components of a complex system. Similar references can be found in Vee (2013) and Wolfram (2016), which refer directly to the fundamental idea of dividing a complex task into a set of simpler tasks. In addition to problem solving, data modeling and data presentation, CT is also described as including lesser-known concepts such as binary search, iteration, and parallelization. CT is a basic skill not only for computer scientists but for everyone (Wing 2006): alongside reading, writing, and arithmetic, it should be added to every child’s analytical ability (ISTE 2015; Wing 2008). This definition has often sparked debate among computer scientists, cognitive researchers and educators, and many have suggested revisions and improvements to it. Nevertheless, the most commonly accepted definitions appear to be those of Wing (2006, 2008) and the International Society for Technology in Education (ISTE). Wing (2011) later updated this definition as “the thought processes involved in formulating problems and their solutions so that the solutions are represented in a form that can be effectively carried out by an information processing agent.”

Curzon (2015) defines CT as a problem-solving skill possessed by humans. Bundy (2007) notes that CT affects research in almost all disciplines, making it possible to process large amounts of data and to derive new meanings from them. While Lu and Fletcher (2009) associate CT with abstract thinking that individuals can use in their everyday lives, Czerkawski and Lyman III (2015) state that CT contributes to individuals’ problem-detection and problem-solving processes. CT also has the potential to expand individuals’ capacity to solve problems to an unprecedented degree (ISTE 2011), and CT competence can help individuals perform daily activities that rely on information technologies more effectively (Lee et al. 2014). For these reasons, efforts to teach computational thinking skills (CTS) to students have gained momentum. In addition to updating higher-education computer science curricula in favor of CT, developed countries such as the UK and the USA have conducted projects aimed at improving the CTS of students in K-12 programs. In England, initial steps have been taken to integrate CT into the classroom (Department for Education England 2013). In the United States, the main route for pre-university students to engage in CT activities and curricula is the Advanced Placement Computer Science courses (Berkeley 2017; Harvard 2017). In short, many developed countries aim to improve the CTS of K-12 students through coding and programming courses.

Accordingly, various projects are being conducted around the world to develop students’ CTS. Projects carried out by the NSF, ISTE, and CSTA explore how students can acquire CTS across all disciplines and fields. The long-term goals of these projects include giving all students opportunities to acquire these skills and showing that the skills can be applied to different problems and concepts. Similarly, the Hour of Code event organized by Code.org has reached millions of students in more than 180 countries (Code.org 2017). In addition, the Bebras International Challenge on Informatics and Computational Thinking is organized to help students of all ages gain CTS (Demir and Seferoğlu 2017).

2 Theoretical framework

The ISTE, the Computer Science Teachers Association (CSTA) and the US National Science Foundation (NSF) carried out a national project to guide educators who were aware of the significance of CT and wished to help students gain CT skills and to outline a general framework. The aim of this project was to determine a general framework through a common and shared definition of CT. The project brought together various educators from higher and secondary education as well as industry experts. At the end of the project, a consensus was achieved regarding the basic components of CT, the significance of CT for all students as a learning objective and how to integrate CT in a K-12 educational environment. A general framework and a definition were drawn up as an explanation of the components which can be used by educators to teach CTS at all grade levels and in all content areas.

According to this definition, CT is a problem-solving process that includes (Barr et al. 2011):

1. Formulating problems in a way that enables us to use a computer and other tools to help solve them,
2. Logically organizing and analyzing data,
3. Representing data through abstractions, such as models and simulations,
4. Automating solutions through algorithmic thinking,
5. Identifying, analyzing and implementing possible solutions with the goal of achieving the most efficient and effective combination of steps and resources,
6. Generalizing and transferring this problem-solving process to a wide variety of problems.

As these descriptions show, CT has multiple components, and opinions vary as to which of them are the main ones. Kazimoglu et al. (2012) state that CT involves five main skills: problem solving, developing algorithms, debugging, simulation and socialization. Ater-Kranov et al. (2010) argue that CT consists of critical thinking and problem-solving components. Brennan and Resnick (2012) describe a CT framework that involves three key dimensions: ‘computational concepts’ (sequences, loops, events, parallelism, conditionals, operators, and data); ‘computational practices’ (experimenting and iterating, testing and debugging, reusing and remixing, abstracting and modularizing); and ‘computational perspectives’ (expressing, connecting, and questioning). However, in light of Wing’s (2006) widely accepted definition of CT, the most suitable components are problem solving, algorithmic thinking, critical thinking, cooperative learning, and creative thinking (ISTE 2015). In the project conducted jointly by the ISTE, CSTA, and NSF, 82% of the 697 participants agreed that this definition captured the main elements of CT, and a further 9% stated that it could be used as a tool for building consensus at the K-12 level. These skills are briefly explained below.

2.1 Problem solving

John Dewey defines a problem as anything that confuses the human mind, challenges it, and is ambiguous (Gelbal 1991). Anderson and Smith (1984) note that problem solving (PS) encompasses all goal-directed behaviour, whether conscious or unconscious. According to Mayer (1998), learning needs to be systematic in order to develop problem-solving skills. The most obvious way to improve problem-solving performance is to teach the basic skills: the general procedure is to analyse each problem into the cognitive skills needed for its solution and then systematically teach each skill to mastery (Mayer 1998).

Coping with a problem depends on a person’s problem-solving skill, the ability to evaluate oneself cognitively, and the ability to concentrate (Heppner et al. 1985). When a person encounters a problem, he/she first searches for clues through the senses as perceptual processes come into play; the gathered data are then given meaning and interpreted. Next, the most likely behavioural responses are considered and the most appropriate one is selected.

In the last phase, the solution considered to be the best is put into practice (Jane 2001). The way a person evaluates and perceives his/her own problem-solving skills is an important metacognitive component that affects how he/she approaches and copes with difficulties (Heppner and Reeder 1983). A person’s self-perception also affects how he/she processes information about problems arising both from within and from the environment (Heppner and Krauskopf 1987).

Although teaching basic skills is one way to improve problem solving, research findings show that knowing basic skills is not effective on its own. Problem solving can, however, be developed through education: when education is built around problem solving, individuals gain more experience and therefore become better problem solvers (Thornton 1998). Involving the problem-solving process in education improves individuals’ higher-order thinking skills, such as critical, scientific and creative thinking and problem solving. Thus, individuals who know how to cope with problems and how to find and implement solutions, using their creativity and their logical and scientific thinking skills, can contribute to the process of modernization (Mayer 1992).

2.2 Algorithmic thinking

An algorithm is defined as “a set of rules that precisely defines a sequence of operations such that each rule is effective and definite and such that the sequence terminates in a finite time” (Knuth 1980). Algorithmic thinking (AT) is an important ability in an information-based society, and one that everyone should possess. It is related to the creation and processing of algorithms. As the term ‘algorithm’ essentially refers to a sequence of logical steps aimed at performing a well-defined task, the creation of algorithms is mainly a human activity (Katai 2015).
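Knuth’s three requirements (each rule definite, each rule effective, and termination in finite time) can be illustrated with Euclid’s greatest-common-divisor algorithm, a classic textbook example. The Python sketch below is our own illustration, not part of the scale, and the function name `euclid_gcd` is hypothetical.

```python
def euclid_gcd(m: int, n: int) -> int:
    """Greatest common divisor of two positive integers (Euclid's algorithm).

    Definite: at every step exactly one rule applies.
    Effective: each step needs only integer division with remainder.
    Finite: the remainder strictly decreases and cannot drop below zero,
    so the loop must stop.
    """
    if m <= 0 or n <= 0:
        raise ValueError("m and n must be positive integers")
    while n != 0:
        m, n = n, m % n  # replace (m, n) with (n, remainder of m divided by n)
    return m


print(euclid_gcd(544, 119))  # 17
```

Each pass of the loop is one rule applied to the current pair of numbers, which is the kind of step-by-step, terminating procedure that AT is concerned with.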

Algorithms are everywhere in modern society. Many fields of modern life involve following procedures, applying protocols or implementing techniques, all of which can be viewed as human-processed algorithms. Thus, well-developed AT may benefit a wide range of human activities. On the other hand, one of the main characteristics of the digital era is that the technology that has pervaded all sectors of society is controlled through computer-processed algorithms. Most people come into daily contact with computer-processed algorithms through information technology (IT) resources.

In 1999 the US Committee on Information Technology Literacy of the National Research Council (NRC 1999) called for an educational focus on Fluency with Information Technology. The NRC committee recommended a pedagogical approach that, alongside contemporary skills and intellectual capabilities, incorporates ten fundamental IT concepts, including ‘algorithmic thinking and programming’ and ‘modelling and abstraction’. In other words, fluency in IT presupposes an understanding of the basic concepts and principles of IT resources. From this perspective, AT is closely related to computational thinking (Hu 2011). Accordingly, developing students’ AT should be included as an objective in educational programs at all levels and connected to lifelong learning.

Because a problem can be solved by arranging the necessary steps in sequence, algorithmic thinking can be regarded as one of the important components of CT (Korkmaz et al. 2017).

2.3 Critical thinking

Critical thinking has been conceptualized in several ways. A major influence on the concept traces back to the work of John Dewey, who, from a philosophical perspective, proposed that critical thinking involves the suspension of judgement and healthy skepticism. Early writers such as Ennis suggest that students should be helped to engage in thinking that is reflective, reasonable and directed at deciding what to believe or do (Ennis 1962). Ennis views critical thinking as ‘the correct assessing of statements’; according to this definition, an individual who can think critically has the skills to evaluate statements. Chaffee (1994) defines critical thinking as an active, organized and purposeful process carried out to better understand and present one’s own and others’ ideas and thoughts.

Watson and Glaser view critical thinking as being more than a specific set of cognitive skills; critical thinking is also a composite of skills, knowledge and attitudes (Watson and Glaser 1980). The authors explain that critical thinking comprises an understanding of the nature of making inferences and generalizations, and the skills of being able to carefully consider the logic and accuracy of evidence. These authors also express the notion that having the ability to think critically is a key element to being fully functional in our modern and complex society. For them, critical thinking is a fundamental requirement of being able to actively participate in one’s social and political circles. Attitude also plays a significant role, for attitude influences the person’s ability to question life’s complexities or underlying assumptions in a situation or circumstance (Simpson and Courtney 2002). In addition, an important step in problem solving and decision-making is the need to utilize critical-thinking abilities to reframe a problem or situation (Facione and Facione 1993).

Paul emphasizes that critical and creative thinking ‘have an intimate relationship to figuring things out. There is a natural marriage between them’ (Paul 1990). To step outside everyday reasoning and approaches to problem solving, a person needs to imagine the possibilities and potentials inherent in a particular circumstance. This often demands a creative leap of faith and a willingness to be ‘playful’ with future possibilities. Creative thought processes are also motivating, because they make work more interesting. Creative thinking is used in many fields, from philosophy to mechanical and technical endeavors (Facione et al. 1994). Critical thinking, for its part, requires interpretation and evaluation: its answers are rarely black or white but rather shades of gray, and it requires weighing many criteria that may conflict under certain conditions. It also demands independence and objectivity.

These definitions suggest that critical thinking is a skill that can be acquired and developed later in life, which places considerable responsibility on educators. Today, the most important aim of education is to train individuals who can adapt to different conditions and think flexibly and openly. Our assumptions, generalizations, prejudices and stereotypes affect both our perception of the world and our behavior; however, as Paul (1984) notes, people often do not realize that such cognitive models shape their behavior. This awareness can be achieved through the ability to think critically. Teachers who support critical thinking in their classes contribute significantly to students’ cognitive development and foster positive attitudes toward critical thinking, and when critical thinking skills are used regularly in courses, students tend to participate more in the critical thinking process. Consequently, the acquisition of critical thinking skills and dispositions should be among the goals of contemporary education programs, and thinking skills should hold a central position in the learning process (Seferoğlu and Akbıyık 2006).

2.4 Cooperative learning

Cooperative learning is a classroom method in which students work together in small groups on learning activities and are rewarded on the basis of their group’s performance (Slavin 1980). According to Slavin (1987), the method rests on the idea that students work together to learn and that each student is responsible for the learning of the group as well as for his or her own.

Cooperative learning is a method for achieving the highest level of learning, in which students of different abilities work in small groups toward a common goal (Johnson and Johnson 1994). In other words, cooperative learning can be defined as the instructional use of small groups that enable students to work together to maximize their own and each other’s learning (Johnson and Johnson 1995; Johnson et al. 1994).

The success of a group can exceed the sum of its members’ individual gains. Cooperative learning, described in the literature as an active learning method widely used in today’s educational settings, is therefore an important component of computational thinking.

2.5 Creative thinking

The first studies on creativity were initiated in the 1950s by the American Psychological Association (APA) under the presidency of Guilford. According to Guilford (1967), creativity is formed by the combination of six important factors: “general sensitivity to the problems, habit of thought, flexibility of view change, originality, capacity to redefine the instrument and sense-making” (reported in Rouquette 2007). In 1986, the Dictionary of Developmental and Educational Psychology defined creativity as “the capacity of an individual to produce a new idea, insight, an invention or an artistic object that carry social, spiritual, aesthetic, scientific or technological value” (Piirto 2004). The ability to think creatively is closely related to an individual’s personal characteristics, such as independence, self-discipline, willingness to take risks, tolerance of uncertainty and achievement motivation (Amabile 1985: 393). In general terms, creativity can be described as making new combinations of old ideas to produce new products (Boden 1998). According to Mayer (1999), creativity is the production of new and useful products.

According to Sternberg and Lubart (1999), creativity is the ability to produce work that is both original and useful (appropriate); it is important both for individuals solving everyday problems and for societies discovering new and original inventions and knowledge. Fisher (2004) states that creativity can be recognized when it is seen, but that it is very difficult to define how it emerges from an intellectual process.

Creativity is also needed to solve problems in family and business life that cannot otherwise be solved (Lau 2011). Cropley (2001) suggests that creativity is a characteristic of every individual, albeit at different levels. Because creativity serves important functions, such as identifying current problems, anticipating possible ones, and proposing and implementing solutions to them, it can be regarded as one of the important components of CT.

In conclusion, by the middle of the twenty-first century, computational thinking skills are expected to be among the basic skills used by everyone, alongside reading, writing, logic and mathematics (Wing 2006, 2011, 2016), and these skills are the combination of problem-solving, algorithmic thinking, critical thinking, cooperative learning and creative thinking skills (ISTE 2015).

The use of computer technologies in virtually every field of life has changed the way work is done today. Although the human mind is by far the most powerful problem-solving tool, the ability to extend the power of human thought with computers and other digital tools has become a significant part of our everyday lives and work. People need to understand how, when and where computers and other digital tools can help them solve their problems (ISTE 2015). In addition, students need to have the skills that make up the main components of CT and be able to apply them when faced with a problem (Barr et al. 2011). Students already learn several elements of the CT skill set in various disciplines, but environments need to be designed that allow them to use these skills together effectively. Therefore, CT is a skill that should be taught starting from early childhood (Wing 2008).

As the literature shows, CT is a field that needs further study, and activities to develop CTS are necessary. Although CT is expected to be one of the fundamental skills used by everyone by the mid-twenty-first century (Wing 2006, 2011, 2016) and CTS can be used by all age groups and in every discipline (ISTE 2015), the number of studies on CT in the literature is limited. In this context, it should be possible to determine whether an individual possesses CTS by measuring the skills mentioned above. Although there is a sufficient number of studies addressing these factors separately, studies that address the factors defining CTS together are limited. Moreover, apart from an effort to develop a scale for determining the CTS of university students (Korkmaz et al. 2017), there appears to be no valid and reliable tool for measuring the CTS of students at other levels of education. Such skills are becoming increasingly important and necessary, especially in the context of modern education and educational technology. From this perspective, this study is expected to make a significant contribution. It therefore aimed to develop a measurement tool to determine the CTS of high school students. Such a tool may make it possible to better reveal the determinants and outcomes of CTS and to organize effective activities to improve these skills.

3 Method

The steps of the CTS scale development and the characteristics of the sample can be found below.

3.1 Sample

Following the principle of availability, students enrolled in high schools in the city center of Kırşehir were included in the study. The sample consisted of 785 students who were selected by simple random sampling and who volunteered to participate. Sample size was based on the rule that “the number of participants must be at least five times the number of items” (Gorsuch 1983, p. 332; Tavşancıl 2002). A total of 275 students took part in the first application, which was used for the exploratory factor analysis (EFA) of the scale, and 270 students took part in the second application, which was used for the confirmatory factor analysis (CFA); data from two different groups were thus used for the two analyses (Fabrigar et al. 1999). The test-retest method, applied to 240 students, was used to determine the stability of the scale.
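The five-to-one rule reduces to simple arithmetic. The sketch below assumes that the EFA group answered the 53-item draft form and the CFA group the 42-item final form; the constant and function names are hypothetical.

```python
ITEMS_IN_DRAFT = 53   # items in the draft form analysed by the EFA (assumed)
ITEMS_IN_FINAL = 42   # items in the final form analysed by the CFA (assumed)
EFA_N, CFA_N, RETEST_N = 275, 270, 240  # group sizes stated in the text


def meets_five_to_one_rule(n_participants: int, n_items: int, ratio: int = 5) -> bool:
    """True if there are at least `ratio` participants per item."""
    return n_participants >= ratio * n_items


print(meets_five_to_one_rule(EFA_N, ITEMS_IN_DRAFT))  # True: 275 >= 265
print(meets_five_to_one_rule(CFA_N, ITEMS_IN_FINAL))  # True: 270 >= 210
print(EFA_N + CFA_N + RETEST_N)                       # 785, the full sample
```

Both groups clear the threshold, and the three groups together account for the 785 participants.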

3.2 The development process of the measurement tool

To create the draft form of the CTS scale, studies on CT were reviewed. Chen et al. (2017) developed an instrument that has good psychometric properties and has the potential to reveal students’ learning challenges and growth in CT. Atmatzidou and Demetriadis (2016) investigated the development of students’ computational thinking skills in the context of educational robotics learning activities. Korkmaz et al. (2017) developed a valid and reliable scale for measuring students’ computational thinking skills. Şahiner and Kert (2016) examined computational thinking research published between 2006 and 2015. Subsequently, the relevant conceptual frameworks (Wing 2006, 2008, 2011; ISTE 2015, 2016; ISTE-CSTA 2011; Demir and Seferoğlu 2017; Bati 2017) were reviewed.

In the second step, a pool of 53 items was created, drawing on the literature on CTS and the contributions of researchers and experts, and these items were compiled into a draft form intended to measure the CTS of high school students.

Next, expert opinions were obtained to ensure the content validity of the draft scale. Expert opinion is a commonly used method for determining content validity, which refers to the quantitative and qualitative adequacy of the scale items (Balcı 2009: 112; Büyüköztürk et al. 2015: 117). To find out whether the draft form would measure the CTS of students, opinions were obtained from five faculty members of the Department of Computer and Instructional Technologies Teaching, two computer engineers and three faculty members specializing in educational sciences. A three-point assessment form was used, on which the experts rated each item as “Appropriate”, “Somewhat appropriate” or “Inappropriate”. Based on these ratings, the content validity of each item was determined using the content validity ratio (CVR) described by Veneziano and Hooper (1997: 68–69): for each item, the number of experts who rated it positively is divided by half the total number of experts and one is subtracted from the result, that is, CVR = N_e/(N/2) − 1, where N_e is the number of positive ratings and N is the number of experts. A CVR was calculated in this way for every item.
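The content validity ratio used by Veneziano and Hooper follows Lawshe’s formula, CVR = (N_e − N/2)/(N/2), which equals N_e/(N/2) − 1 and ranges from −1 to +1. The sketch below applies it with N = 10 experts (5 + 2 + 3, as listed above) and the 0.80 cut-off the study reports. It assumes that only “Appropriate” ratings count as positive; the per-item counts are invented for illustration, and the names are hypothetical.

```python
def content_validity_ratio(n_appropriate: int, n_experts: int) -> float:
    """Lawshe-style CVR: (n_e - N/2) / (N/2), ranging from -1 to +1."""
    if not 0 <= n_appropriate <= n_experts or n_experts == 0:
        raise ValueError("need 0 <= n_appropriate <= n_experts and n_experts > 0")
    half = n_experts / 2
    return (n_appropriate - half) / half


N_EXPERTS = 10     # 5 + 2 + 3 experts consulted in the study
CVR_CUTOFF = 0.80  # items below this value were excluded

# Hypothetical numbers of "Appropriate" ratings for three draft items.
ratings = {"item_01": 10, "item_02": 9, "item_03": 8}
for item, n_e in ratings.items():
    cvr = content_validity_ratio(n_e, N_EXPERTS)
    decision = "keep" if cvr >= CVR_CUTOFF else "drop"
    print(item, round(cvr, 2), decision)
```

With ten experts, an item needs at least nine positive ratings to reach 0.80, so a single dissenting expert is tolerated but two are not.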

Items with a CVR below 0.80 were excluded from the scale; based on the CVR calculations, some items were removed and others were revised. After this process, the 53-item draft scale was ready for the initial application. Responses to the items were collected on a five-point Likert scale: (1) “Strongly disagree”, (2) “Disagree”, (3) “Neutral”, (4) “Agree”, and (5) “Strongly agree”. The draft scale was then reviewed by 22 students, who were asked whether they had difficulty understanding the items and how they interpreted each one; items that were not understood, or that were understood differently than intended, were re-examined, and the draft scale was finalized.

3.3 Data analysis

The suitability of the data for analysis was examined by checking for missing data and testing the assumption of normality. First, the missing data were checked, and the rate of missing data was found to be below 5% for each item. Missing values were replaced with the item mean, which leaves the item mean unchanged. The kurtosis and skewness coefficients calculated to test the assumption of normality fell within the ranges −10 < kurtosis < 10 and −3 < skewness < 3 (Kline 2005).
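The data-screening steps described above can be sketched as follows. The sketch assumes that missing responses are stored as `None`, uses population-moment formulas for skewness and excess kurtosis (packages such as SPSS report bias-corrected versions, so small-sample values will differ slightly), and applies the Kline (2005) limits quoted above. All function names and the example responses are hypothetical.

```python
from statistics import fmean


def missing_rate(responses):
    """Share of missing (None) responses for one item."""
    return sum(x is None for x in responses) / len(responses)


def impute_item_mean(responses):
    """Replace missing responses (None) with the mean of the observed ones.

    Every filled-in value equals the observed mean, so the item mean is unchanged.
    """
    observed = [x for x in responses if x is not None]
    item_mean = fmean(observed)
    return [item_mean if x is None else x for x in responses]


def _central_moments(xs):
    """Second, third and fourth central moments (population form)."""
    m = fmean(xs)
    m2 = fmean([(x - m) ** 2 for x in xs])
    m3 = fmean([(x - m) ** 3 for x in xs])
    m4 = fmean([(x - m) ** 4 for x in xs])
    if m2 == 0:
        raise ValueError("all responses are identical; shape statistics are undefined")
    return m2, m3, m4


def skewness(xs):
    m2, m3, _ = _central_moments(xs)
    return m3 / m2 ** 1.5


def excess_kurtosis(xs):
    m2, _, m4 = _central_moments(xs)
    return m4 / m2 ** 2 - 3


def within_kline_limits(xs, skew_limit=3.0, kurt_limit=10.0):
    """True if |skewness| < 3 and |excess kurtosis| < 10."""
    return abs(skewness(xs)) < skew_limit and abs(excess_kurtosis(xs)) < kurt_limit


# Hypothetical responses of 25 students to one item; one answer is missing.
item = [4, 5, 3, 4] * 3 + [None] + [4, 5, 3, 4] * 3
filled = impute_item_mean(item)
print(missing_rate(item), fmean(filled), within_kline_limits(filled))
```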

KMO and Bartlett’s tests were performed as part of the construct validity analysis to determine whether the data were suitable for factor analysis (Kalaycı 2009: 321–322; Seçer 2013: 119). Based on the values obtained, exploratory factor analysis was applied to the data.

Principal components analysis was performed to determine the factors of the scale, and the factor loadings were then examined after Varimax orthogonal rotation. Items with a factor loading below .40, and items whose loadings on two factors differed by less than .10 (i.e., items cross-loading on two factors), were removed from the scale (Büyüköztürk 2008: 124–125), and the analysis was repeated. In the literature, factor loadings of .30 and above (Seçer 2013: 129) and a total explained variance of at least 40% are considered sufficient (Büyüköztürk 2008; Balcı 2009).
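The two item-removal rules can be expressed as a filter over a rotated loading matrix. This is a sketch under stated assumptions: loadings are compared in absolute value, the .40 and .10 cut-offs are those stated above, and the loadings are invented for illustration. The sketch only flags items; in the study the analysis was then re-run without them.

```python
def retain_items(loadings, min_loading=0.40, min_gap=0.10):
    """Indices of items that pass both EFA retention rules.

    loadings: one row per item, one rotated loading per factor.
    An item is kept if its highest absolute loading is at least `min_loading`
    and exceeds its second-highest absolute loading by at least `min_gap`.
    """
    kept = []
    for i, row in enumerate(loadings):
        ranked = sorted((abs(x) for x in row), reverse=True)
        primary = ranked[0]
        secondary = ranked[1] if len(ranked) > 1 else 0.0
        if primary >= min_loading and primary - secondary >= min_gap:
            kept.append(i)
    return kept


# Invented rotated loadings for five items on four factors.
loadings = [
    [0.72, 0.15, 0.10, 0.05],   # clear loading on factor 1 -> kept
    [0.35, 0.20, 0.12, 0.08],   # highest loading below .40 -> removed
    [0.52, 0.47, 0.10, 0.02],   # cross-loads (gap .05) -> removed
    [0.11, 0.09, 0.64, 0.30],   # clear loading on factor 3 -> kept
    [0.08, -0.58, 0.14, 0.21],  # negative loading, judged by magnitude -> kept
]
print(retain_items(loadings))  # [0, 3, 4]
```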

To test the accuracy of the structure revealed by the exploratory factor analysis, the scale was administered to a different sample from the one used in the EFA, and confirmatory factor analysis was performed on the resulting data. Confirmatory factor analysis tests whether the data support a model specified on the basis of previously obtained information (Seçer 2013).

Item-total correlations were calculated to determine the discriminative power of the items. Cronbach’s alpha, the split-half correlation, the Spearman-Brown coefficient, and the Guttman split-half coefficient were calculated to assess the reliability of the overall scale and its factors. A reliability coefficient of .80 or above is accepted as an indicator of high reliability (Kalaycı 2009: 405). In addition, the stability of the scale was assessed using the test-retest method, for which the correlations between two administrations four weeks apart were calculated. IBM SPSS 22.0 and LISREL 8.80 were used for data analysis.

4 Results

4.1 Findings related to the validity of the scale

The validity of a measurement tool is the degree to which it measures the property under examination; in other words, it is the tool’s ability to measure the target variable without confounding it with other variables (Turgut and Baykul 2011). Construct validity, item-total correlations, corrected item-total correlations and item discrimination were analyzed to determine the validity of the CTS scale. The findings are given below.

4.2 Construct validity

4.2.1 Findings related to the exploratory factor analysis

Kaiser-Meyer-Olkin (KMO) and Bartlett’s tests were used to determine whether the data set was suitable for factor analysis. The KMO value was .749, and Bartlett’s test yielded χ2 = 6263.762 (df = 1378, p = 0.00). These findings indicate that the data were suitable for factor analysis (Kalaycı 2009; Sönmez and Alacapınar 2014).
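Bartlett’s test of sphericity checks whether the item correlation matrix R differs from an identity matrix. Its usual statistic is χ2 = −[(n − 1) − (2p + 5)/6] ln|R| with p(p − 1)/2 degrees of freedom, where n is the number of respondents and p the number of items. For p = 53 this gives 53 × 52 / 2 = 1378 degrees of freedom, matching the value reported above, and for the final 42 items it gives 861. The pure-Python sketch below is illustrative: the function names and the 3 × 3 correlation matrix are hypothetical, KMO is not computed, and a real analysis would use a statistics package.

```python
import math


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_df(p):
    """Degrees of freedom of Bartlett's test for p items."""
    return p * (p - 1) // 2


def bartlett_sphericity(corr, n_obs):
    """Chi-square statistic and df for Bartlett's test of sphericity."""
    p = len(corr)
    det = determinant(corr)
    if det <= 0:
        raise ValueError("the determinant must be positive to take its logarithm")
    chi2 = -((n_obs - 1) - (2 * p + 5) / 6) * math.log(det)
    return chi2, bartlett_df(p)


print(bartlett_df(53), bartlett_df(42))  # 1378 861

# Hypothetical 3-item correlation matrix and sample size.
corr = [
    [1.00, 0.45, 0.30],
    [0.45, 1.00, 0.40],
    [0.30, 0.40, 1.00],
]
print(bartlett_sphericity(corr, n_obs=275))
```

An identity matrix has determinant 1 and therefore χ2 = 0; the stronger the correlations among items, the smaller |R| and the larger the statistic.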

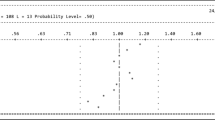

First, principal components analysis was performed to determine the number of factors, and seven factors with eigenvalues greater than 1.00 were obtained with Varimax orthogonal rotation. Given this high number of factors, Cattell’s scree test was also performed.
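In a principal components analysis of a p-item correlation matrix, the eigenvalues sum to p, so each component explains eigenvalue / p × 100 percent of the total variance, and the Kaiser criterion keeps the components whose eigenvalue exceeds 1. The sketch below applies both rules to a hypothetical set of six eigenvalues; it does not reproduce the study’s values, and the scree test itself remains a visual judgment that the code does not attempt. Function names are hypothetical.

```python
def kaiser_retained(eigenvalues, threshold=1.0):
    """Number of components whose eigenvalue exceeds the Kaiser threshold."""
    return sum(1 for ev in eigenvalues if ev > threshold)


def percent_variance(eigenvalues):
    """Percentage of total variance explained by each component.

    For a PCA on a correlation matrix the eigenvalues sum to the number of
    items, so dividing by their sum is the same as dividing by p.
    """
    total = sum(eigenvalues)
    return [100 * ev / total for ev in eigenvalues]


# Hypothetical eigenvalues for a 6-item correlation matrix (they sum to 6).
eigenvalues = [2.7, 1.4, 1.05, 0.45, 0.25, 0.15]
k = kaiser_retained(eigenvalues)
shares = percent_variance(eigenvalues)
print(k, [round(s, 2) for s in shares[:k]], round(sum(shares[:k]), 2))
```

Here the Kaiser criterion keeps three components; as in the study, a scree plot may still suggest retaining fewer.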

To interpret the scree plot in Fig. 1, note that each interval between two adjacent points corresponds to a factor (Seçer 2013: 128). After the fourth point, the eigenvalues were small and the distances between successive points were very similar, meaning that the curve had flattened. The number of factors was therefore set at four.

Based on the results of the EFA, six items were removed because it was unclear which factor they measured, and five more items were removed after the analysis was repeated. The data indicated four factors with eigenvalues above 1. The first factor explained 47.69% of the variance, the second 9.45%, the third 5.09%, and the fourth 4.25%, for a total of 66.49%. Of the 42 retained items, 20 had high loadings on the first factor, eight on the second, nine on the third, and five on the fourth.
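As a consistency check on these figures, and assuming they are percentages of the total variance of the 42-item correlation matrix, each percentage can be converted into an eigenvalue, because the eigenvalues of a p-item correlation matrix sum to p. The sketch below does only this arithmetic; the dictionary keys use the factor names introduced in the next paragraph. All four eigenvalues exceed 1, and the four percentages sum to 66.48%, which matches the reported 66.49% up to rounding.

```python
# Variance percentages reported for the four retained factors (42-item solution).
ITEM_COUNT = 42
variance_pct = {"PS": 47.69, "CL&CT": 9.45, "CT": 5.09, "AT": 4.25}


def eigenvalue_from_pct(pct, p=ITEM_COUNT):
    """For a p-item correlation matrix the eigenvalues sum to p, so eigenvalue = pct / 100 * p."""
    return pct / 100.0 * p


eigenvalues = {name: eigenvalue_from_pct(pct) for name, pct in variance_pct.items()}
cumulative_pct = sum(variance_pct.values())
all_above_kaiser = all(ev > 1.0 for ev in eigenvalues.values())

for name, ev in eigenvalues.items():
    print(f"{name}: eigenvalue ~ {ev:.2f}")
print(f"cumulative: {cumulative_pct:.2f}%, Kaiser criterion met: {all_above_kaiser}")
```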

After these steps, the 42-item, four-factor scale had a KMO value of .923 and a Bartlett value of χ2 = 8497.54 (df = 861, p < .001). Taking into account the content of the items, institutional statements, and expert opinions, the first factor was named “Problem Solving” (PS), the second “Cooperative Learning and Critical Thinking” (CL&CT), the third “Creative Thinking” (CT), and the fourth “Algorithmic Thinking” (AT). Table 1 shows the findings of the EFA performed for the CTS scale.

As shown in Table 1, the factor loadings of the items clustered under four factors. In the repeated factor analysis, items with a factor loading below 0.40 were removed from the 53-item draft, and an item-test correlation was calculated for each item to determine its discriminative power; items with an item-test correlation of 0.40 or above were retained. The result was a 42-item scale with four factors, with item factor loadings ranging from .475 to .853.
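The two retention thresholds in this paragraph can be expressed as a simple filter. This is an illustrative sketch with hypothetical item ids and loadings, not the study’s data; it encodes only the 0.40 cut-offs stated above, not the separate judgment used to drop items that did not clearly belong to one factor.

```python
LOADING_MIN = 0.40     # minimum factor loading stated in the text
ITEM_TOTAL_MIN = 0.40  # minimum item-test correlation stated in the text


def retained_items(loadings, item_total):
    """Keep items whose highest absolute loading and item-test correlation both reach 0.40.

    loadings:   item id -> list of loadings on each factor (hypothetical structure)
    item_total: item id -> item-test correlation
    """
    keep = []
    for item, row in loadings.items():
        strongest = max(abs(v) for v in row)
        if strongest >= LOADING_MIN and item_total[item] >= ITEM_TOTAL_MIN:
            keep.append(item)
    return keep


# Hypothetical items, not data from the study.
loadings = {"i1": [0.62, 0.10], "i2": [0.35, 0.20], "i3": [0.10, 0.71]}
item_total = {"i1": 0.55, "i2": 0.50, "i3": 0.38}
print(retained_items(loadings, item_total))
```

Here `i2` fails the loading threshold and `i3` fails the item-test threshold, so only `i1` is kept.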

4.2.2 Findings related to the confirmatory factor analysis

To validate the four-factor construct of the scale, the CFA was performed on data collected from 270 high school students who were not part of the EFA sample. Figure 2 shows the factorial model of the scale, with the factor-item correlations and their t values.

As shown in Fig. 2, the factor loadings ranged from .45 to .62 for the PS factor, from .67 to .82 for the CL&CT factor, from .43 to .66 for the CT factor, and from .45 to .57 for the AT factor. In the LISREL output, the absence of a red arrow between a factor and an item indicates that the item’s t value is significant at the .05 level (Jöreskog and Sörbom 1993). Table 2 shows the t values obtained from the CFA.

As shown in Table 2, the t values for the items in the CTS scale ranged from 8.12 to 10.02. Since every value exceeds the critical value of 2.58, all t values obtained from the CFA were significant at the .01 level (Jöreskog and Sörbom 1993; Kline 2005: 41).
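The significance decision reduces to comparing each t value with a critical value: 1.96 for the .05 level and 2.58 for the .01 level, the usual large-sample (normal-approximation) thresholds. The sketch below, with hypothetical helper names, applies that rule and also computes an approximate two-tailed p value with `math.erfc`.

```python
import math

# Conventional two-tailed critical values for large samples (normal approximation).
CRITICAL_T = {0.05: 1.96, 0.01: 2.58}


def two_tailed_p(t):
    """Two-tailed p value of a t statistic under the standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2.0))


def is_significant(t, alpha=0.01):
    """True when |t| exceeds the conventional critical value for alpha."""
    return abs(t) > CRITICAL_T[alpha]


for t in (8.12, 10.02):  # smallest and largest t values reported in Table 2
    print(t, is_significant(t), two_tailed_p(t))
```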

Table 3 compares the fit index values obtained from the CFA with their acceptance criteria.

As shown in Table 3, χ2/df = 3.28 is below 5, which indicates acceptable model fit (Kline 2005; Şimşek 2007). The values RMSEA = .075, SRMR = .081, NNFI = .91, CFI = .92, GFI = .90, and AGFI = .88 also indicate that the fit indices of the confirmatory factor analysis are at an acceptable level (Hu and Bentler 1999). Taken together, these findings show that the four-factor model obtained from the CFA has a sufficient level of fit.
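A minimal sketch of this decision rule follows. The χ2 = 2679.07 and df = 815 are taken from the abstract, giving a ratio of about 3.3; the other cut-offs are assumptions chosen as commonly cited “acceptable fit” values, not the exact criteria of Table 3, and published thresholds vary between sources. The names `check_fit`, `UPPER_BOUNDS`, and `LOWER_BOUNDS` are hypothetical.

```python
CHI2, DF = 2679.07, 815  # values reported in the abstract
reported = {"RMSEA": 0.075, "SRMR": 0.081, "NNFI": 0.91, "CFI": 0.92, "GFI": 0.90, "AGFI": 0.88}

# Assumed "acceptable fit" cut-offs; published thresholds vary between sources.
UPPER_BOUNDS = {"RMSEA": 0.08, "SRMR": 0.10}
LOWER_BOUNDS = {"NNFI": 0.90, "CFI": 0.90, "GFI": 0.90, "AGFI": 0.85}


def check_fit(indices, chi2, df, ratio_max=5.0):
    """Return a dict mapping each criterion to True (acceptable) or False."""
    results = {"chi2/df": chi2 / df < ratio_max}
    for name, value in indices.items():
        if name in UPPER_BOUNDS:
            results[name] = value <= UPPER_BOUNDS[name]  # smaller is better
        else:
            results[name] = value >= LOWER_BOUNDS[name]  # larger is better
    return results


print(round(CHI2 / DF, 3), check_fit(reported, CHI2, DF))
```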

4.3 The discriminative power of the items

To assess whether each item measured the intended property, correlations between item scores and factor scores were calculated (the item-total correlation method). In addition, 56 students from the top 27% and 56 students from the bottom 27% of the scale-score distribution were selected, and an independent-samples t-test was performed to determine the discriminative power of the items. Table 4 shows the item-factor correlation for each item, and Table 5 shows the t values and significance levels for the discriminative power of the items.

As shown in Table 4, the item-test correlation coefficients ranged from 0.640 to 0.843 for the first factor, from 0.467 to 0.809 for the second, from 0.639 to 0.817 for the third, and from 0.564 to 0.741 for the fourth. Each item correlated significantly and positively with its own factor (p < 0.001). Each item was therefore consistent with its factor; in other words, it served the general purpose of that factor (Carmines and Zeller 1982).
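The item-total correlation is a Pearson correlation between an item and the total score of its factor. The sketch below, on a hypothetical five-respondent, three-item factor, computes both the plain version and the corrected version that removes the item from the total first; which variant underlies Table 4 is not stated, so both are shown. The function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def item_total(rows, j, corrected=False):
    """Correlation of item j with the factor total (rows = respondents x items of one factor).

    With corrected=True the item is removed from the total first, so that it is
    not correlated with itself (the corrected item-total correlation)."""
    item = [r[j] for r in rows]
    total = [sum(r) - (r[j] if corrected else 0) for r in rows]
    return pearson(item, total)


# Hypothetical 5-respondent, 3-item factor.
rows = [[1, 1, 1], [2, 2, 3], [3, 3, 2], [4, 5, 5], [5, 4, 4]]
print(item_total(rows, 0), item_total(rows, 0, corrected=True))
```

The corrected value is typically lower, because the uncorrected total partly contains the item itself.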

As shown in Table 5, the independent-samples t values for the factors and the total score ranged from 7.474 to 11.128 across the 42 items, and the t value for the entire scale was 13.646. Every difference was significant (p < 0.001). These findings indicate that both the overall scale and each of its items measure the intended property significantly and that each item has high discriminative power.
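The upper/lower-group comparison can be sketched as follows: rank respondents by total score, take the top and bottom 27%, and compare the two groups on an item with a pooled-variance independent-samples t test. The data and function names are hypothetical, and the rounding of the group size is an assumption; with the study’s groups of 56, such a test has 56 + 56 - 2 = 110 degrees of freedom.

```python
import math


def student_t(a, b):
    """Independent-samples t statistic with pooled variance (equal variances assumed)."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    va = sum((x - ma) ** 2 for x in a) / (na - 1)
    vb = sum((x - mb) ** 2 for x in b) / (nb - 1)
    pooled = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    return (ma - mb) / math.sqrt(pooled * (1 / na + 1 / nb))


def upper_lower_t(item_scores, total_scores, fraction=0.27):
    """Compare an item between the top and bottom `fraction` of respondents by total score."""
    n = len(total_scores)
    k = round(n * fraction)  # group size; the rounding rule is an assumption
    order = sorted(range(n), key=lambda i: total_scores[i])
    low = [item_scores[i] for i in order[:k]]
    high = [item_scores[i] for i in order[-k:]]
    return student_t(high, low), k


# Hypothetical data for 10 respondents.
totals = list(range(10))
item = [1, 1, 1, 2, 2, 3, 3, 4, 5, 5]
print(upper_lower_t(item, totals))
```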

4.4 Findings related to the reliability of the scale

Internal consistency and stability levels were calculated to assess the reliability of the scale. The procedures and the findings are given below.

4.4.1 Internal consistency level

The Cronbach’s Alpha reliability coefficient, the split-half correlation value, the Spearman-Brown reliability coefficient and the Guttman split-half reliability coefficient were calculated to assess the reliability of the overall scale and its factors. Table 6 shows values obtained from the reliability analysis for the overall scale and its factors.
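Cronbach’s Alpha compares the sum of the item variances with the variance of the total score: α = k / (k - 1) × (1 - Σ s_i² / s_X²) for k items. A minimal sketch using Python’s standard `statistics` module, on hypothetical data with respondents as rows and items as columns (the function name is hypothetical):

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for rows = respondents x items:
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)."""
    k = len(rows[0])
    item_var = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical 5-respondent, 3-item data.
rows = [[1, 1, 1], [2, 2, 3], [3, 3, 2], [4, 5, 5], [5, 4, 4]]
print(round(cronbach_alpha(rows), 3))
```

When all items are identical, the total variance equals k² times the item variance and α reaches 1; uncorrelated items drive it toward 0.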

As shown in Table 6, the 42-item, four-factor scale had a split-half correlation of .827, a Spearman-Brown reliability coefficient of .905, a Guttman split-half coefficient of .897, and a Cronbach’s Alpha coefficient of .969. For the individual factors, the split-half correlation ranged from .779 to .914, the Spearman-Brown coefficient from .880 to .955, the Guttman split-half coefficient from .864 to .954, and Cronbach’s Alpha from .828 to .962. These internal consistency and split-half reliability coefficients therefore show that the overall scale and its factors produce reliable results.
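The split-half figures are linked by simple formulas. Assuming equal-length halves, the Spearman-Brown coefficient steps up a half-test correlation r to 2r / (1 + r); for the overall split-half correlation of .827 this gives .905, the value reported above (SPSS also reports an unequal-length version when the item count is odd). The Guttman coefficient is 2(1 - (s_A² + s_B²) / s_X²) for half-scores A and B and total X. In the sketch below the halves are the first and second half of the items, which is an assumption about how they were formed; the function names are hypothetical.

```python
import math
from statistics import variance


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman_brown(r_half):
    """Step a half-test correlation up to full test length (equal-length halves)."""
    return 2 * r_half / (1 + r_half)


def split_half(rows):
    """Split-half correlation, Spearman-Brown and Guttman coefficients.

    rows = respondents x items; halves = first and second half of the items (assumed)."""
    half = len(rows[0]) // 2
    a = [sum(r[:half]) for r in rows]
    b = [sum(r[half:]) for r in rows]
    r_half = pearson(a, b)
    total = [x + y for x, y in zip(a, b)]
    guttman = 2 * (1 - (variance(a) + variance(b)) / variance(total))
    return r_half, spearman_brown(r_half), guttman


print(round(spearman_brown(0.827), 3))  # overall split-half r reported in Table 6
```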

4.4.2 Stability level

The stability of the scale was examined with the test-retest method to obtain further information about its reliability. This sample consisted of 240 Anatolian high school students in the province of Kırşehir who had not taken part in the earlier administrations. The two administrations were four weeks apart. Table 7 summarizes the test-retest results for the overall scale and its factors.

As shown in Table 7, the test-retest reliability coefficients ranged from .84 to .93, and each correlation was positive and significant (p < 0.001). The coefficient for the overall scale was .89. This Pearson correlation between the two administrations lies in the 0.70–0.89 range, which indicates a very high correlation (Kalaycı 2009: 116). Therefore, both the overall scale and its factors yield stable measurements. Together with the validity findings, the results of the reliability analysis indicate that the CTS scale is a valid and reliable measurement tool.
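The significance of a test-retest correlation can be checked with the usual statistic t = r √((n - 2) / (1 - r²)) on n - 2 degrees of freedom. With the overall r = .89 and n = 240 reported above, t ≈ 30.1, far beyond any conventional critical value, which is consistent with p < 0.001. The sketch below, with hypothetical function names, computes the correlation between two administrations and this t statistic.

```python
import math


def pearson(x, y):
    """Pearson correlation between scores from the first and second administration."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Overall test-retest coefficient and sample size reported in the text.
print(round(r_to_t(0.89, 240), 2))
```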

5 Discussion

In this study, a scale was developed to reveal the CTS of students. The five-point Likert scale consists of 42 items under four factors. The 34 items under Problem Solving, Creative Thinking, and Algorithmic Thinking are positively worded, whereas the 8 items under Cooperative Learning & Critical Thinking are negatively worded. Negative items are reverse-scored.
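Reverse scoring on a five-point scale maps an answer x to 6 - x, so that a higher score always points in the same direction. A minimal sketch with hypothetical item ids and function names (the text identifies the negative items only by their factor); with 42 items, a total score ranges from 42 to 210.

```python
SCALE_MIN, SCALE_MAX = 1, 5  # five-point Likert scale


def reverse(score):
    """Reverse-score a Likert answer: 1 <-> 5, 2 <-> 4, 3 stays 3."""
    return SCALE_MIN + SCALE_MAX - score


def total_score(responses, negative_items):
    """Sum the answers, reversing the negatively worded items first.

    responses:      item id -> raw answer (1..5)
    negative_items: set of ids of negatively worded items (hypothetical ids)
    """
    return sum(reverse(v) if item in negative_items else v for item, v in responses.items())


# Hypothetical respondent: q2 is negatively worded, so answering 1 counts as 5.
print(total_score({"q1": 5, "q2": 1, "q3": 4}, {"q2"}))
```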

The sample consisted of students enrolled in high schools in the urban center of the province of Kırşehir in the 2016–2017 academic year. The first administration, used for the exploratory factor analysis, involved 275 students; the second, used for the confirmatory factor analysis, involved 270 students; and the test-retest administration for the reliability studies involved 240 students. A total of 785 students therefore participated in the scale development process.

The KMO test performed to assess whether the data from the 53-item draft scale were suitable for factor analysis gave a KMO value of .749. Factor analysis reduced the scale to 42 items, and the repeated analysis on the reduced item set gave a KMO value of .923. The principal components analysis with rotation showed that the scale had four factors, which were named PS, CL&CT, CT, and AT.

The confirmatory factor analysis performed to assess the factorial validity of the scale showed adequate fit: χ2/df (3.28), RMSEA (0.075), SRMR (0.081), GFI (0.90), AGFI (0.88), NNFI (0.91), CFI (0.92). The data thus supported the model.

The test-retest method was applied with 240 students to assess the reliability of the scale, and the correlation between the two administrations was .89. Cronbach’s Alpha was calculated to assess the internal consistency of the scale items: it was .969 for the overall scale, .962 for the first factor, .937 for the second, .937 for the third, and .828 for the fourth. Since the coefficients for both the entire scale and its factors are .70 or above, the scale can be considered reliable (Büyüköztürk 2002; Gorsuch 1983).

The scale explained 66.49% of the total variance; the share of variance explained was 47.69% for the first factor, 9.45% for the second, 5.09% for the third, and 4.25% for the fourth. The item-total correlations, calculated for each item to assess how well it measured the intended property, ranged from .467 to .843.

A four-factor construct emerged from the construct validity analysis, in which the Cooperative Learning and Critical Thinking items clustered under a single factor. Creative thinking, one of the factors of the scale, is the lifelong ability to express oneself, inquire, and use one’s imagination (Craft 2003). Algorithmic thinking is one of the basic information technology concepts that allow individuals to specialize in information technologies (Cooper et al. 2000). Critical thinking is the use of cognitive skills or strategies that increase the likelihood of a desirable outcome (Halpern 2013). Problem-solving can be seen as a general characteristic of the individual’s cognitive construct (Newell and Simon 1972). Cooperative learning is the effort to maximize learning both individually and together with the members of small groups (Veenman et al. 2002).

Judged against the criteria in the relevant literature, the results of the exploratory and confirmatory factor analyses for the CTS scale are acceptable. The validity and reliability findings show that the scale can be used to measure the CTS of high school students. Given that there are only a handful of valid and reliable instruments for measuring students’ CTS (Korkmaz et al. 2017), this study is expected to make a significant contribution to the literature. The scale was developed on the basis of the skills named in the ISTE (2015) definition. Future studies are recommended to improve internal consistency further and to test the validity of the scale with samples from other grade levels. Finally, the scale’s coverage of computational thinking is limited to the sub-skills in the ISTE (2015) definition and to the core items of the scales previously developed separately for each of these skills.

References

Amabile, T. M. (1985). Motivation and creativity: Effects of motivational orientation on creative writers. Journal of Personality and Social Psychology, 48(2), 393–399.

Anderson, C. W., & Smith, E. L. (1984). Children's preconceptions and content-area textbooks. In G. G. Duffy et al. (Eds.), Comprehension instruction: Perspectives and suggestions. New York: Longman.

Ater-Kranov, A., Bryant, R., Orr, G., Wallace, S., & Zhang, M. (2010). Developing a community definition and teaching modules for computational thinking: Accomplishments and challenges. Proceedings of the 2010 ACM Conference on Information Technology Education, 143–148.

Atmatzidou, S., & Demetriadis, S. (2016). Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Robotics and Autonomous Systems, 75, 661–670. https://doi.org/10.1016/j.robot.2015.10.008.

Balcı, A. (2009). Research in Social Sciences: Methods, Techniques and Principles. Ankara: Pegem A.

Barr, D., Harrison, J., & Conery, L. (2011). Computational thinking: A digital age skill for everyone. Retrieved from: http://files.eric.ed.gov/fulltext/EJ918910.pdf.

Bati, H. (2017). Computational thinking and integrative education (STEAM) in science education. Pamukkale University Journal of Education, 41, 91–103. https://doi.org/10.9779/PUJE800.

Berkeley (2017). The beauty and joy of computing. Retrieved from: http://bjc.berkeley.edu

Boden, M. A. (1998). What is creativity? In S. J. Mithen (Ed.), Creativity in human evolution and prehistory (1st ed.). Routledge.

Brennan, K., & Resnick, M. (2012). New frameworks for studying and assessing the development of computational thinking. 2012 Annual Meeting of the American Educational Research Association (Vancouver, Canada). Retrieved from: http://scratched.gse.harvard.edu/ct/files/AERA2012.pdf.

Bundy, A. (2007). Computational thinking is pervasive. Retrieved from: http://www.inf.ed.ac.uk/publications/online/1245.pdf.

Büyüköztürk, Ş. (2002). Data analysis handbook. Ankara: Pegem Publication.

Büyüköztürk, Ş. (2008). Manual of data analysis for social sciences. Ankara: Pegem A Publications.

Büyüköztürk, Ş., Çakmak, E. K., Akgün, Ö. E., Karadeniz, Ş., & Demirel, F. (2015). Scientific research methods. Ankara: Pegem A Publications.

Carmines, E. G., & Zeller, R. A. (1982). Reliability and validity assessment (5th ed.). Beverly Hills: Sage Publications Inc.

Chaffee, J. (1994). Thinking critically. Boston: Houghton Mifflin.

Chen, G., Shen, J., Barth-Cohen, L., Jiang, S., Huang, X., & Eltoukhy, M. (2017). Assessing elementary students’ computational thinking in everyday reasoning and robotics programming. Computers & Education, 109, 162–175. https://doi.org/10.1016/j.compedu.2017.03.001.

Code.org. (2017). Hour of code. Retrieved from: https://hourofcode.com/tr/gb.

Cooper, S., Dann, W., & Pausch, R. (2000). Developing algorithmic thinking with Alice. Proceedings of ISECON, 17, 506–539.

Craft, A. (2003). Creative thinking in the early years of education. Early Years, 23(2), 143–154.

Cropley, A. (2001). Creativity in education and learning. Great Britain: Clays.

Curzon, P. (2015). Computational thinking: Searching to speak. Retrieved from: http://teachinglondoncomputing.org/free-workshops/computational-thinking-searching-to-speak/.

Czerkawski, B. C., & Lyman III, E. W. (2015). Exploring issues about computational thinking in higher education. TechTrends, 59(2), 57–65. https://doi.org/10.1007/s11528-015-0840-3.

Demir, Ö., & Seferoğlu, S. S. (2017). New concepts, different uses: An evaluation related to computational thinking. Readings of Educational Technology, 41(August), 468–483.

Department for Education England (2013). National curriculum in England: Computing programmes of study key stages 1 and 2. Ref: DFE-00171e2013. Retrieved from: https://www.gov.uk/government/publications/nationalcurriculum-in-england-computing-programmes-of-study.

Ennis, R. H. (1962). A concept of critical thinking. Harvard Educational Review, 22, 88–111.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299.

Facione, P. A., & Facione, N. C. (1993). Test Manual: the California Critical Thinking Skills Test, Form A and Form B. Millbrae: The California Academic Press.

Facione, N. C., Facione, P. A., & Sanchez, C. A. (1994). Critical thinking disposition as a measure of competent clinical judgement: The development of the California thinking disposition inventory. Journal of Nursing Education, 33, 345–350.

Fisher, R. (2004). Creativity across the curriculum. In R. Fisher & M. Williams (Eds.), Unlocking creativity (pp. 160–172). Britain: David Fulton.

Gelbal, S. (1991). Problem solving. Hacettepe University Journal of Education, 6, 167–173.

Gorsuch, R. L. (1983). Factor analysis (2nd ed.). Hillsdale: Lawrence Erlbaum Associates.

Guilford, J. P. (1967). Creativity: Yesterday, today and tomorrow. The Journal of Creative Behavior, 1(1), 3–14.

Halpern, D. F. (2013). Thought and knowledge: An introduction to critical thinking. New York: Psychology Press.

Harvard (2017). CS50: Introduction to computer science. Retrieved from: https://cs50.harvard.edu

Heppner, P. P., & Krauskopf, C. J. (1987). The integration of personal problem solving processes within counseling. The Counseling Psychologist, 15, 371–447.

Heppner, P. P., & Reeder, L. (1983). The relationship between problem solving self-appraisal and psychological adjustment. Cognitive Therapy and Research, 9(4), 415–427.

Heppner, P. P., Baumgardner, A. H., & Jackson, J. (1985). Depression and attributional style: Are they related? Cognitive Therapy and Research, 9, 105–113.

Hu, C. (2011). Computational thinking: What it might mean and what we might do about it. In M. Goldweber (Ed.), 16th annual joint conference on Innovation and Technology in Computer Science Education (pp. 223–227). New York: ACM.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

ISTE (2015). Computational thinking leadership toolkit (First Edition). Retrieved from: https://www.iste.org/explore/articleDetail?articleid=152&category=Solutions&article=Computational-thinking-for-all.

ISTE (2016). ISTE standards for students. Retrieved from: https://www.iste.org/standards/standards/for-students-2016.

ISTE-CSTA (2011). Computational thinking: Teacher resources (2nd ed.) (Grant No: CNS-1030054). Retrieved from: https://www.iste.org/explore/articleDetail?articleid=152&category=Solutions&article=Computational-thinking-for-all.

Jane, L. (2001). The relationship between social problem solving and bullying behaviour among male and female adult prisoners. Aggressive Behavior, 27, 297–312.

Johnson, D. W., & Johnson, R. T. (1994). Leading the cooperative school (2nd ed.). Edina, Minnesota: Interaction Book Company.

Johnson, D. W., & Johnson, R. T. (1995). Creative controversy: Intellectual challenge in the classroom. Edina: Interaction Book Company.

Johnson, D. W., Johnson, R. T., & Holubec, E. J. (1994). The nuts & bolts of cooperative learning. Edina, Minnesota: Interaction Book Company.

Jöreskog, K., & Sörbom, D. (1993). LISREL 8: Structural equation modeling with the SIMPLIS command language. USA: Scientific Software International.

Kalaycı, Ş. (2009). SPSS applied multivariate statistical techniques. Ankara: Asil Publication.

Katai, Z. (2015). The challenge of promoting algorithmic thinking of both sciences- and humanities-oriented learners. Journal of Computer Assisted Learning, 31(4), 287–299. https://doi.org/10.1111/jcal.12070.

Kazimoglu, C., Kiernan, M., Bacon, L., & MacKinnon, L. (2012). Learning programming at the computational thinking level via digital game-play. Procedia Computer Science, 9, 522–531. https://doi.org/10.1016/j.procs.2012.04.056.

Kline, R. B. (2005). Structural equation modeling (2nd ed.). New York: The Guilford Press.

Knuth, D. E. (1980). Algorithms in modern mathematics and computer science (Stanford Department of Computer Science Report No. STAN-CS-80-786).

Korkmaz, Ö., Çakir, R., & Özden, M. Y. (2017). A validity and reliability study of the computational thinking scales (CTS). Computers in Human Behavior, 72, 558–569. https://doi.org/10.1016/j.chb.2017.01.005.

Lau, J. Y. (2011). An introduction to critical thinking and creativity: Think more, think better. Wiley.

Lee, T. Y., Mauriello, M. L., Ahn, J., & Bederson, B. B. (2014). CTArcade: Computational thinking with games in school age children. International Journal of Child-Computer Interaction, 2(1), 26–33. https://doi.org/10.1016/j.ijcci.2014.06.003.

Lu, J. J., & Fletcher, G. H. L. (2009). Thinking about computational thinking. ACM Special Interest Group on Computer Science Education Conference (SIGCSE 2009). Chattanooga: ACM Press.

Mayer, R. E. (1992). Thinking, problem solving, Cognition. New York: W H Freeman and Company.

Mayer, R. E. (1998). Cognitive, metacognitive, and motivational aspects of problem solving. Instructional Science, 26(1–2), 49–63. https://doi.org/10.1023/A:1003088013286.

Mayer, R. E. (1999). Fifty years of creativity research. In R. J. Sternberg (Ed.), Handbook of creativity. Cambridge: Cambridge University Press.

National Research Council. (1999). Being fluent with information technology. Washington, DC: National Academy Press.

Newell, A., & Simon, H. A. (1972). Human problem solving (Vol. 104, No. 9). Englewood Cliffs, NJ: Prentice-Hall.

Papert, S. (1996). An exploration in the space of mathematics educations. International Journal of Computers for Mathematical Learning, 1(1), 95–123.

Paul, R. W. (1984). Critical thinking fundamental to education for a free society. Educational Leadership, 1, 5–14.

Paul, R. (1990). Critical thinking. Rohnert Park, California: Sonoma State University.

Piirto, J. (2004). Understanding creativity. USA: Great Potential Press, Inc.

Rouquette, M. L. (2007). Creativity. Ankara: Dost.

Şahiner, A., & Kert, S. B. (2016). Examining studies related with the concept of computational thinking between the years of 2006-2015. European Journal of Science and Technology, 5(9), 38–43.

Seçer, İ. (2013). Practical data analysis and reporting with SPSS and LISREL. Ankara: Anı Publication.

Seferoğlu, S., & Akbıyık, C. (2006). Critical thinking and teaching. Hacettepe Universitesi Journal of Education, 30, 193–200. https://doi.org/10.1080/03634526109377142.

Simpson, E., & Courtney, M. (2002). Critical thinking in nursing education: Literature review. International Journal of Nursing Practice, 8, 89–98.

Şimşek, Ö. F. (2007). Introduction to Structural Equation Modeling; Basic Principles and LISREL Applications. Ankara: Ekinoks Press.

Slavin, R. E. (1980). Cooperative learning: Theory, research and practice. Englewood Cliffs: Prentice Hall.

Slavin, R. E. (1987). Cooperative learning in student teams: What research says to the teacher. Washington, D.C.: National Education Association.

Sönmez, V., & Alacapınar, G. F. (2014). Sampled scientific research methods. Ankara: Anı Publication.

Sternberg, R. J., & Lubart, T. I. (1999). The concept of creativity: Prospects and paradigms. In R. J. Sternberg (Ed.), Handbook of creativity (pp. 3–15). Cambridge: Cambridge University Press.

Tavşancıl, E. (2002). Attitude Measurement and Data Analysis with SPSS. Ankara: Nobel Publications.

Thornton, S. (1998). Kids solve the problem. Istanbul: Gendaş Publishing.

Turgut, M. F., & Baykul, Y. (2011). Measurement and evaluation in education. Ankara: PegemA Publication.

Vee, A. (2013). Understanding computer programming as a literacy. Literacy in Composition Studies, 1(2), 42–64.

Veenman, S., Benthum, N., Bootsma, D., Dieren, J., & Kemp, N. (2002). Cooperative learning and teacher education. Teaching and Teacher Education, 18, 87–103.

Veneziano, L., & Hooper, J. (1997). A method for quantifying content validity of health-related questionnaires. American Journal of Health Behavior, 21(1), 67–70.

Watson, G., & Glaser, E. M. (1980). Watson-Glaser Critical Thinking Appraisal. San Antonio: The Psychological Corporation.

Wing, J. M. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35.

Wing, J. M. (2008). Computational thinking and thinking about computing. Philosophical Transactions of the Royal Society, 366, 3717–3725.

Wing, J. M. (2011). Research notebook: Computational thinking: What and why? The Link Magazine, Spring. Carnegie Mellon University, Pittsburgh. Retrieved from: https://www.cs.cmu.edu/link/research-notebook-computational-thinking-what-and-why.

Wing, J. M. (2016). Computational thinking 10 years later. Microsoft Research blog. Retrieved from: https://www.microsoft.com/en-us/research/blog/computational-thinking-10-years-later/.

Wolfram, S. (2016). How to teach computational thinking. Blog Stephen Wolfram. Retrieved from: http://blog.stephenwolfram.com/2016/09/how-to-teachcomputational-thinking/


Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Cite this article

Yağcı, M. A valid and reliable tool for examining computational thinking skills. Educ Inf Technol 24, 929–951 (2019). https://doi.org/10.1007/s10639-018-9801-8


DOI: https://doi.org/10.1007/s10639-018-9801-8