Abstract

Many patients with schizophrenia display neuropsychological deficits alongside cognitive biases, particularly the tendency to jump to conclusions (JTC). The present study examined the effects of a generic psychoeducational cognitive bias correction (CBC) program. We hypothesized that demonstrating the fallibility of human cognition to patients would diminish their susceptibility to the JTC bias. A total of 70 participants with schizophrenia were recruited online. At baseline, patients completed a JTC task (primary outcome) and the Paranoia Checklist before being randomized to either the CBC or a waitlist control condition. The CBC group received six successive PowerPoint presentations (converted to pdf) teaching them about cognitive biases; we neither placed any emphasis on psychosis-related cognitive distortions nor addressed psychosis. Six weeks after inclusion, participants again completed the JTC task and the Paranoia Checklist. The JTC bias improved significantly more in the CBC group than in controls, at a medium-to-large effect size, in both the per-protocol and the intention-to-treat analyses. Paranoia Checklist scores remained essentially unchanged over time, and no effects were observed for depression. Psychoeducational and cognitive programs are urgently needed, as many patients still receive no psychological treatment despite the recommendations of most guidelines. Self-help may bridge the large treatment gap in schizophrenia and motivate patients to seek help. The study supports both the feasibility and the effectiveness of self-help programs in schizophrenia.

Introduction

Human cognition is fallible (Kahneman 2012; Schacter 1999). We cannot perceive and keep track of everything that meets our senses, nor can we store all the thoughts and images that travel through our mind (Chun and Marois 2002). Experimental psychologists have used the metaphor of a cognitive “bottleneck” to describe this limitation (Broadbent 1958; Miller 2013), and it is now well established that our reasoning, perception and memory are subject to a number of cognitive errors or biases that can distort our representation of reality (Pohl 2004). Although these cognitive biases are ubiquitous in the general population, and some have even been associated with positive mental health outcomes (Bentall 1992), recent studies have shown that specific biases are heightened in certain psychological disorders. To give just a few examples, depressive patients show mood-dependent memory biases (Roiser and Sahakian 2013; Wittekind et al. 2014); patients with obsessive–compulsive disorder hold an unrealistically pessimistic belief that they are more endangered than others (Moritz and Jelinek 2009; Moritz and Pohl 2009); and pathological gamblers show a stronger illusion of control over non-contingent outcomes (Fortune and Goodie 2012). Unlike cognitive deficits, which are commonly defined as a reduction in overall speed and/or performance on tasks, biases are preferences, styles or distortions in the way information is processed and are not pathological per se. They may also be conceptualized as ‘mental shortcuts’ that can, under some circumstances, lead to erroneous decisions.

Cognitive biases have perhaps been studied most extensively in the context of psychosis. Following the seminal work of Garety and coworkers in the late 1980s (Garety et al. 1991; Huq et al. 1988), researchers have detected a number of cognitive biases in individuals with schizophrenia (Freeman 2007; Garety and Freeman 2013; Savulich et al. 2012; van der Gaag 2006). For example, many patients display overconfidence in erroneous judgments (Moritz and Woodward 2006; Moritz et al. 2003, 2012; Peters et al. 2007); show a bias against integrating disconfirmatory evidence (BADE; Speechley et al. 2012); tend to over-rely on confirmatory evidence and reasoning heuristics (Balzan et al. 2012b, 2013); and, perhaps most robustly, exhibit a jumping to conclusions (JTC) bias (Balzan et al. 2012a; Garety et al. 2005; Lincoln et al. 2010c; Moritz and Woodward 2005). Although the pattern of results is not fully consistent across studies, there is also mounting evidence for attributional biases (Bentall et al. 1994; Lincoln et al. 2010a; Mehl et al. 2014).

It is important to note that these biases are distinct from the well-established neuropsychological deficits associated with the disorder (Fioravanti et al. 2012; Heinrichs and Zakzanis 1998; Schaefer et al. 2013). Moreover, although they also occur in healthy individuals, these biases are more pronounced in people with psychosis and, importantly, have been tied to the formation and maintenance of delusions.

Accordingly, new treatment options such as metacognitive training (MCT; Moritz et al. 2014a, b; Woodward et al. 2014), Social Cognition and Interaction Training (SCIT; Combs et al. 2007; Roberts and Penn 2009) and Reasoning Training (Ross et al. 2011; Waller et al. 2011) have begun to address specific psychosis-related biases, based on the idea that fostering doubt and sharpening critical thinking will undermine the scaffolding of (systematized) delusional ideas. While a number of studies indicate that these programs show some promise for reducing positive symptoms, their effects on the jumping to conclusions bias are more modest (Moritz et al. 2014a, b). For example, a recent study (Moritz et al. 2014b) found that metacognitive training (MCT) produced reductions in delusional symptoms that persisted for up to 3 years but had little effect on JTC. Likewise, a British group (Ross et al. 2011) observed that a reasoning training partially overlapping with MCT led to delayed decision-making; however, the core JTC bias remained virtually unaffected. On the other hand, Balzan et al. (2014) found significant changes in cognitive biases after treatment; notably, this version of MCT targeted specific biases rather than administering the full program. Interestingly, although the program highlighted only two biases (JTC and BADE), changes were also noted for an additional bias, suggesting that the effects of a bias correction program may “spread” to other biases.

Treatment packages like MCT are molar: they incorporate a number of different elements, which makes it hard to delineate the core mechanisms driving change. Dismantling studies are therefore needed to pinpoint active ingredients. For the present study, we adopted a focused approach and confined the program to psychoeducation about cognitive biases. Rather than addressing only those biases implicated in psychosis, we aimed to teach participants about a wide range of cognitive biases, most of which have not been implicated in psychosis to date. The aim was to demonstrate to patients the fallibility of human cognition per se and thus plant the seeds of doubt about overconfident and biased judgments. A potential advantage of a generic psychoeducational cognitive bias correction (CBC) program is its normalizing character: it does not address psychosis, and explicitly addressing psychosis may create resentment in patients who do not accept their diagnosis. The study was set up as a self-help intervention in view of recent evidence that patients with schizophrenia can sufficiently grasp and benefit from psychoeducational information and (online) self-help (Alvarez-Jimenez et al. 2014).

Given that CBC aims to reduce delusional ideation by highlighting the fallibility of human cognition in general rather than by targeting specific symptoms of psychosis, the primary outcome measure was the JTC bias, which has itself been linked to heightened delusional states. Reducing the tendency to jump to conclusions may therefore also reduce delusional ideation. Delusional/paranoid severity and depression were examined as secondary measures; however, it can be argued that teaching patients to be cautious about their judgments may act only prophylactically against new delusional ideas rather than correct established firm beliefs, which are more resistant to change (because they are, for example, intertwined with the personality and biography of the person holding them).

Methods

Participants

First, invitation emails were sent to 351 patients with an established diagnosis of schizophrenia who had previously been hospitalized in the Psychiatry Department of the University Medical Center Hamburg-Eppendorf for schizophrenia or schizoaffective disorder and had consented to be recontacted for studies. Further, the study was advertised on moderated fora for people with psychosis that required registration, to keep the rate of false-positive diagnoses low. Recruitment took place between February and March 2014. Patients were also asked for permission to contact their doctors to verify the diagnosis of a schizophrenia spectrum disorder. The study was approved by the ethics committee of the German Psychological Society (DGPs).

The CBC program was organized in six chapters consisting of six PowerPoint presentations (29 to 57 slides each; 263 slides in total), which dealt with approximately 20 cognitive biases (30 exercises) and paradigms demonstrating the fallibility of human cognition (i.e., anchoring heuristic, availability heuristic, default/status quo bias, confirmation bias, cocktail party effect of selective attention, clustering illusion, optical illusions (e.g., Müller-Lyer illusion, Ponzo illusion), attributional biases, false consensus effect, endowment effect, perceived superiority, Stroop effect of selective attention, hindsight bias, false memory effect, illusory correlation, gambler’s fallacy, illusion of control, framing effect, omission bias, mere exposure effect). For most exercises, we adopted a “seeing is believing” approach, as used in MCT: participants first performed tasks that usually elicit false or biased responses and were then informed about common mistakes and how the respective bias arises.

Inclusion criteria were a diagnosis of schizophrenia made by a mental health expert (for a subgroup we obtained evidence confirming this information), age between 18 and 65 years, and consent to participate in two surveys via the Internet. Finally, participants had to provide an email address at the end of the baseline survey (participants were instructed how to create an anonymous email address if they wished). Participation was not financially reimbursed, in order to deter individuals with questionable motives. Instead, at the end of the re-assessment all participants received self-help handbooks on relaxation exercises (pdf files for download) in addition to the six CBC presentations; otherwise, the CBC group would have received no incentive at the post assessment, which in our experience would raise non-completion rates.

Links on the Internet fora and in the emails sent to former inpatients directed interested parties to the online baseline survey, which consisted of the following sections: an in-depth explanation of the study rationale; electronic informed consent (no names or personal addresses were requested; however, patients were optionally asked for permission to approach their therapists for diagnostic verification); demographic variables (age, gender, school education); medical history; psychopathological instruments on paranoia and depression (see below); the JTC task (see below); and a request for an email address (used to send participants the pdf files and to match pre and post survey data). Pre and post surveys were programmed using the online package unipark®, which does not store IP addresses, to ensure anonymity.

Upon completion of the baseline survey, participants were randomized to either the CBC group or the waitlist group. Randomization was performed electronically, with random allocation based on the time at which the survey was completed. No stratification procedure was used. The six PowerPoint presentations were sent to participants weekly as email attachments. Participants in the waitlist group were informed that they would receive the package after the post assessment 6 weeks later. The trial did not involve guided self-help; that is, patients did not receive any personal assistance over the intervention period.
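The text does not spell out the allocation algorithm beyond electronic, unstratified randomization. Purely as an illustration, the following is a minimal sketch of simple (unrestricted) 1:1 randomization, assuming that each participant is assigned independently with probability 0.5 at the moment the baseline survey is completed. The function `allocate`, the arm labels and the seed are hypothetical; this is not the procedure implemented in unipark®.

```python
import random

GROUPS = ("CBC", "waitlist")  # hypothetical arm labels


def allocate(participant_ids, seed=None):
    """Simple unstratified randomization (illustrative assumption).

    Participants are processed in the order in which they complete the
    baseline survey; each is independently assigned to one of the two arms
    with probability 0.5.
    """
    rng = random.Random(seed)  # seeded generator so an allocation list can be reproduced
    return {pid: rng.choice(GROUPS) for pid in participant_ids}


if __name__ == "__main__":
    allocation = allocate(range(1, 71), seed=2014)
    n_cbc = sum(1 for g in allocation.values() if g == "CBC")
    print(f"CBC: {n_cbc}, waitlist: {len(allocation) - n_cbc}")
```

Simple randomization does not force equal arm sizes, so the two groups can differ in size by chance; block randomization is the usual alternative when balance matters.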

Six weeks later, participants were invited via email to take part in the post assessment (the email included a web link to the post survey). Participants first entered their email address on the first page of the survey to allow matching of pre and post data. Up to two reminders, sent approximately 4 days apart, went to individuals who had not responded to the invitation. The post survey again assessed paranoia and depression severity, and JTC was tested using a parallel version of the task. Participants in the experimental group who indicated that they had read at least one of the six attachments were asked a number of questions on the feasibility and subjective effectiveness of the technique (see Table 2). At the end of the assessment, we thanked participants for taking part and provided access to the experimental material (not previously sent to the control group) and a self-devised self-help manual containing relaxation techniques (attached as an ebook).

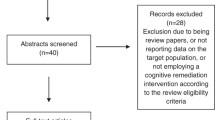

The initial page of the online assessment, which summarized the purpose and participation conditions of the study and the inclusion criteria, was accessed by 107 individuals. Of these, 27 did not proceed to the second page. The inclusion phase was terminated and the online survey closed as soon as 70 participants who satisfied the inclusion criteria and left their email addresses had completed the baseline survey. Sixty-five of the 70 participants (93 % of the baseline sample) completed the post assessment 6 weeks later. For 32 patients, we obtained confirmation from their doctors via fax or email that they fulfilled diagnostic criteria for a schizophrenia spectrum disorder.
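The participant flow described above can be checked with simple arithmetic. In this sketch, the count of participants who passed the first page but did not end up in the baseline sample (80 - 70 = 10) is derived from the reported figures; the text does not say how many of them failed the inclusion criteria versus abandoned the survey.

```python
# Participant flow as reported in the text
accessed_first_page = 107
stopped_at_first_page = 27
baseline_sample = 70
completed_post = 65
verified_diagnosis = 32

proceeded = accessed_first_page - stopped_at_first_page  # reached the second page
not_in_sample = proceeded - baseline_sample              # derived, not reported directly
lost_to_follow_up = baseline_sample - completed_post
retention = completed_post / baseline_sample             # reported as 93 %
verified_share = verified_diagnosis / baseline_sample    # share with doctor-verified diagnosis

print(f"proceeded past page 1: {proceeded}")
print(f"not in baseline sample: {not_in_sample}")
print(f"lost to follow-up: {lost_to_follow_up}")
print(f"retention: {retention:.1%}")
print(f"diagnosis verified: {verified_share:.1%}")
```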

Questionnaires

For both the pre and post assessment, we administered two scales probing paranoia and depression (items were presented in random order). Items were rated on a 5-point Likert scale ranging from “fully applies” to “does not apply at all”. Paranoia was measured using the 18 frequency items of the Paranoia Checklist (Freeman et al. 2005). Previous studies have confirmed its good psychometric properties (Freeman et al. 2005; Lincoln et al. 2010b). A recent factor-analytic study (Moritz et al. 2012) showed that the scale is best represented by two factors, termed suspiciousness (11 items; e.g., “Bad things are being said about me behind my back”) and core paranoia (5 items; e.g., “I can detect coded messages about me in the press/TV/radio”). In a recent online study (Moritz et al. 2014a, b), the Paranoia Checklist frequency scale had high test–retest reliability (r = 0.93) and was sensitive to change. Along with the Paranoia Checklist, the Center for Epidemiologic Studies Depression Scale (CES-D) was administered on both occasions (Hautzinger and Brähler 1993; Radloff 1977). The CES-D is a unidimensional questionnaire tapping core depressive symptoms and is considered reliable (Hautzinger and Brähler 1993; Radloff 1977).

For the baseline assessment, we also administered the Community Assessment of Psychic Experiences (CAPE), a 42-item self-report instrument capturing positive, depressive and negative symptoms (Stefanis et al. 2002). Items were rated for frequency on 4-point Likert scales. Previous studies have demonstrated good convergent and discriminative validity across groups of individuals with psychotic, affective and anxiety disorders and the general population (Hanssen et al. 2003). Long-term reliability is also satisfactory (Konings et al. 2006), and the excellent psychometric properties of the original scale have been confirmed for the German version (Moritz and Laroi 2008). Mean scores on the CAPE subscales are reported in Table 1.

Jumping to Conclusions (JTC)

For the measurement of JTC, participants were presented with a computerized version of the fish task, a variant of the beads task. The following instruction was shown on the screen: “Below you see two lakes with red and green fish. Lake A: 80 % red and 20 % green fish. Lake B: 80 % green and 20 % red fish. A fisherman randomly chooses one of the two lakes and then fishes from this lake only. Based on the caught fish, you should decide whether the fisherman caught fish from lake A or B. Important: (1) The fisherman catches fish from one lake only. (2) He throws the fish back after each catch. The ratio of green and red fish stays the same. (3) You can look at as many fish as you need to be completely sure as to which lake the fisherman has chosen.”
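Under the stated task rules (a lake chosen at random, 80:20 ratios, fish thrown back after each catch), the normatively correct confidence after a given catch follows from Bayes’ theorem. Each red fish multiplies the odds for lake A by 0.8/0.2 = 4 and each green fish divides them by 4, so only the difference between red and green catches matters. The sketch below assumes the equal 50/50 prior implied by the instruction that the fisherman chooses a lake at random; the function name is illustrative.

```python
def posterior_lake_a(n_red: int, n_green: int, p_dominant: float = 0.8) -> float:
    """Posterior probability that the fish come from lake A (80 % red).

    Assumes a 50/50 prior over the two lakes and independent draws with
    replacement, as stated in the task instructions.
    """
    like_a = p_dominant ** n_red * (1 - p_dominant) ** n_green  # P(catch | lake A)
    like_b = (1 - p_dominant) ** n_red * p_dominant ** n_green  # P(catch | lake B)
    return like_a / (like_a + like_b)  # equal priors cancel out


if __name__ == "__main__":
    for red, green in [(1, 0), (2, 0), (3, 0), (3, 1)]:
        print(red, green, round(posterior_lake_a(red, green), 3))
```

A decision after a single fish therefore corresponds to acting on 80 % certainty, even though participants were told they could keep sampling until completely sure.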

Up to 10 fish were caught; the fourth and the ninth fish had the color of the nondominant lake (lake B). Once the participant decided for lake A or B (participants could also make no decision), the task was terminated and the survey skipped to the next section. Each new fish was highlighted with an arrow and shown along with the previously caught fish. A decision after the first fish (conservative criterion) or after the first or second fish (liberal criterion) was classified as “jumping to conclusions”.
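The scoring rule can be written down compactly. The sketch below encodes the catch sequence described above (red as the dominant colour of lake A, with the 4th and 9th fish green) and classifies a response by the number of fish seen before the decision. Treating “no decision” as non-JTC, and the names used here, are assumptions for illustration.

```python
from typing import Optional

# Catch sequence: fish 4 and 9 carry the colour of the nondominant lake (B).
SEQUENCE = ["green" if i in (4, 9) else "red" for i in range(1, 11)]


def classify_jtc(draws_to_decision: Optional[int]) -> dict:
    """Return both JTC codings for one participant.

    draws_to_decision: number of fish seen when a decision was made (1-10),
    or None if no decision was made (assumed here to count as non-JTC).
    """
    if draws_to_decision is not None and not 1 <= draws_to_decision <= len(SEQUENCE):
        raise ValueError("draws_to_decision must be between 1 and 10")
    decided = draws_to_decision is not None
    return {
        "jtc_core": decided and draws_to_decision == 1,     # conservative criterion
        "jtc_liberal": decided and draws_to_decision <= 2,  # decision after 1 or 2 fish
    }


if __name__ == "__main__":
    print(SEQUENCE)
    for d in (1, 2, 5, None):
        print(d, classify_jtc(d))
```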

Results

Background Variables, Retention

As can be derived from Table 1, patients were around 40 years old and predominantly female; neither age nor gender differed between groups. At baseline, approximately every second patient decided after the first or second fish on the JTC task, again with no significant difference between groups. The retention rate was excellent (93 %); only five patients were lost to follow-up. Table 1 also shows that no participant was in inpatient treatment at baseline. Approximately two thirds were receiving outpatient treatment; this proportion changed little over time and did not differ significantly between groups (all ps > .05). Antipsychotic medication was prescribed for most patients, and eight patients did not take any antipsychotics; medication status differed neither between groups nor across time (all ps > .05).

Jumping to Conclusions

For the core JTC parameter (decision after 1 fish), the effect of Group was nonsignificant, F(1,62) = 0.14, p = .905, \( \eta_{\text{partial}}^{2} = .00 \). The effect of Time was significant, F(1,62) = 4.07, p = .048, \( \eta_{\text{partial}}^{2} = .062 \), but was qualified by a significant Group × Time interaction, F(1,62) = 6.17, p = .016, \( \eta_{\text{partial}}^{2} = .09 \), at a medium-to-large effect size. As can be seen in Fig. 1, the JTC bias decreased in the experimental group relative to the waitlist control group. As the primary outcome was binary, we re-ran the analyses using generalized estimating equations (GEE) for binary outcomes, which yielded similar results for the interaction (Wald χ2: p = .018).
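The per-protocol Group × Time interaction has a simple equivalent that may help readers see what it measures: in a mixed design with two groups and two time points, the interaction F equals the squared pooled-variance t statistic comparing the pre-to-post change scores of the two groups, with df = (1, n1 + n2 - 2). A minimal standard-library sketch of that identity follows; it is not the authors’ analysis code, and the toy data are invented.

```python
from statistics import mean, variance


def interaction_f(pre_a, post_a, pre_b, post_b):
    """Group x Time interaction F for a 2 (between) x 2 (within) mixed design,
    computed as the squared pooled-variance t test on change scores."""
    change_a = [post - pre for pre, post in zip(pre_a, post_a)]
    change_b = [post - pre for pre, post in zip(pre_b, post_b)]
    n_a, n_b = len(change_a), len(change_b)
    df_error = n_a + n_b - 2
    # Pooled variance of the change scores (statistics.variance uses n - 1)
    pooled = ((n_a - 1) * variance(change_a) + (n_b - 1) * variance(change_b)) / df_error
    se = (pooled * (1 / n_a + 1 / n_b)) ** 0.5
    t = (mean(change_a) - mean(change_b)) / se
    return t ** 2, (1, df_error)


if __name__ == "__main__":
    # Invented binary JTC codes (1 = JTC present); not study data.
    f_value, df = interaction_f([1, 1, 1, 0], [0, 0, 1, 0], [1, 0, 1, 0], [1, 0, 1, 1])
    print(f"F{df} = {f_value:.2f}")
```

The identity holds only for complete cases; the intention-to-treat mixed models and the GEE analysis estimate the interaction differently.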

When the JTC bias was defined as a decision after the first or second fish, a smaller interaction emerged that narrowly missed conventional significance, F(1,62) = 3.91, p = .051, \( \eta_{\text{partial}}^{2} = .06 \). The effects of Group, F(1,62) = 0.14, p = .905, \( \eta_{\text{partial}}^{2} = .00 \), and Time, F(1,62) = 2.28, p = .136, \( \eta_{\text{partial}}^{2} = .035 \), were both nonsignificant. GEE for binary outcomes yielded a significant interaction (Wald χ2: p = .049).
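Partial eta squared can be recovered from an F statistic and its degrees of freedom via \( \eta_{\text{partial}}^{2} = F \cdot df_{\text{effect}} / (F \cdot df_{\text{effect}} + df_{\text{error}}) \). The sketch below applies this standard identity to the F values reported for the JTC analyses and reproduces the reported effect sizes up to rounding. It is a checking aid, not the authors’ analysis code.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


if __name__ == "__main__":
    # F values for the JTC analyses reported above, all with df = (1, 62)
    reported = {
        "time (1 fish)": 4.07,
        "interaction (1 fish)": 6.17,
        "interaction (1 or 2 fish)": 3.91,
        "time (1 or 2 fish)": 2.28,
    }
    for label, f in reported.items():
        print(f"{label}: {partial_eta_squared(f, 1, 62):.3f}")
```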

To account for missing values (intention-to-treat analysis), we used linear mixed models; the pattern of significance remained the same (Fig. 1).

Psychopathology

For paranoia, neither the effect of Group, F(1,63) = 0.30, p = .587, \( \eta_{\text{partial}}^{2} = .005 \), nor that of Time, F(1,63) = 1.52, p = .223, \( \eta_{\text{partial}}^{2} = .024 \), nor the interaction, F(1,63) = 0.52, p = .475, \( \eta_{\text{partial}}^{2} = .008 \), was significant.

For depression, the Group effect was not significant, F(1,63) = 3.29, p = .929, \( \eta_{\text{partial}}^{2} = .000 \). Unexpectedly, and perhaps owing to regression to the mean, depression decreased over time at trend level, at a medium effect size, F(1,63) = 3.78, p = .056, \( \eta_{\text{partial}}^{2} = .057 \). However, the interaction was not significant, F(1,63) = 0.83, p = .366, \( \eta_{\text{partial}}^{2} = .013 \). No significant correlation emerged between the number of modules read and change in any of the dependent variables. Again, when linear mixed models were used to estimate the ITT effects, the pattern of significance remained the same (Fig. 2).

In item-level analyses, two items reached significance. Patients in the CBC group improved significantly more than the waitlist group on the Paranoia Checklist item “My actions and thoughts might be controlled by others”, t(63) = 2.40, p = .02, d = .603. In addition, the experimental group improved more on the depression item “I feel lonely”, t(63) = 2.03, p = .047, d = .514.
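The effect sizes for these item-level comparisons can be approximately recovered from the t statistics. For two independent groups, d = t·√(1/n1 + 1/n2); when the groups are of roughly equal size this simplifies to the common approximation d ≈ 2t/√df. The group sizes for these particular tests are not reported, so the sketch below uses the approximation; it illustrates the conversion and is not necessarily how the authors computed d.

```python
import math


def cohens_d_from_t(t: float, df: int) -> float:
    """Approximate Cohen's d for an independent-samples t test,
    assuming two groups of roughly equal size (d ~ 2t / sqrt(df))."""
    return 2 * t / math.sqrt(df)


def cohens_d_exact(t: float, n1: int, n2: int) -> float:
    """Cohen's d from t when both group sizes are known."""
    return t * math.sqrt(1 / n1 + 1 / n2)


if __name__ == "__main__":
    for label, t in [("controlled by others", 2.40), ("I feel lonely", 2.03)]:
        print(f"{label}: d ~ {cohens_d_from_t(t, 63):.3f}")
```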

Test–Retest Reliability and Impact of Diagnostic Verification

Test–retest reliability was assessed in the waitlist control group and was satisfactory (Paranoia Checklist: r = .823; depression scale: r = .787). For the JTC parameters, correlations were significant but weaker (core JTC: r = .566; decision after 1st or 2nd fish: r = .716). We also re-ran all the above analyses with diagnostic verification status (verified, n = 32, versus not verified, n = 38) entered as an additional group factor; main and interaction effects remained unchanged.
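Test–retest reliability here is a Pearson correlation between baseline and post scores in the waitlist group, the group that received no intervention between the two assessments. A minimal standard-library sketch follows; the example scores are invented. Applied to binary JTC codes (0/1), the same formula yields the phi coefficient.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between baseline (x) and post (y) scores,
    used here as a test-retest reliability coefficient."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Invented waitlist scores for illustration only (not study data)
    pre = [22, 35, 18, 40, 27]
    post = [24, 31, 20, 38, 25]
    print(round(pearson_r(pre, post), 3))
```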

Fidelity

Of the 29 respondents in the experimental group, 23 had read all modules (79.3 %), and only one reported not having read any of the presentations. One participant each had read 1, 2, 3, 4 or 5 of the 6 presentations. Approximately four out of five (78.6 %) intended to apply the learning aims in the future. The feedback on the modules, displayed in Table 2, was mainly positive: most patients found the CBC program adequate for self-administration, comprehensive and helpful. However, symptom improvement was endorsed less often.
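The percentages in this paragraph follow from the reported counts. In the sketch below, the distribution of modules read is reconstructed from the text (23 read all six, one read none, one each read 1 to 5). The reported 78.6 % matches 22 of 28 respondents, which assumes that only the 28 participants who had read at least one presentation answered this question (consistent with the Methods); the count of 22 is inferred, not reported.

```python
from collections import Counter

# Modules read by the 29 experimental-group respondents, as reported:
# 23 read all six, one read none, and one participant each read 1 to 5.
modules_read = [6] * 23 + [0] + [1, 2, 3, 4, 5]

counts = Counter(modules_read)
n = len(modules_read)
share_all = counts[6] / n
share_at_least_four = sum(1 for m in modules_read if m >= 4) / n
readers = sum(1 for m in modules_read if m >= 1)  # answered the feedback questions

print(f"n = {n}, read all: {share_all:.1%}, read >= 4: {share_at_least_four:.1%}")
# Assumption: the 78.6 % endorsing future use refers to the readers only
print(f"22 of {readers} = {22 / readers:.1%}")
```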

Discussion

The study suggests that a generic cognitive bias correction (CBC) approach, a variant of metacognitive training (MCT), is feasible in a psychosis population. Jumping to conclusions, which is not only implicated in the formation of psychosis but is also a risk factor for poor functional outcome (Andreou et al. 2014), was significantly reduced in the intervention group at a medium effect size (decision after 1 or 2 fish), or even a medium-to-large effect size when the more conservative measure was applied (decision after 1 fish). The trial thus supports the hypothesis that a psychoeducational CBC program demonstrating the fallibility of human cognition to patients reduces this core bias. Importantly, the fish task was neither demonstrated nor mentioned in any of the six CBC presentations.

Of the 70 patients who entered the trial, 93 % could be recontacted after 6 weeks. Most participants allocated to the experimental group read at least four of the six presentations. This exceeded our expectations, as we had anticipated that a large proportion of participants would not read the material because of technical difficulties, suspiciousness (e.g., fear of computer viruses or spyware) or fatigue. The vast majority found the material helpful, and almost four out of five intended to use the lessons learned in the future. Plausible mean scores and satisfactory test–retest reliability, both comparable to research in patients with fully established diagnoses (Moritz et al. 2013), support the quality of the data.

Delusional ideation, as measured with the Paranoia Checklist, declined nonsignificantly in both groups. Subsidiary analyses showed that patients in the experimental group improved on the core delusional item “My actions and thoughts might be controlled by others”; however, this effect would not have survived correction for multiple comparisons and should therefore be interpreted with caution.

Future research using more fine-grained instruments, preferably expert ratings, should examine whether particular types of delusions, especially delusions of control, are improved by this novel kind of program, and whether the intervention increases symptomatic insight, which could produce a paradoxical worsening on self-report measures (i.e., despite improvement, patients report more symptoms at post than at baseline because they now acknowledge or perceive these symptoms as pathological). A self-report measure such as the Paranoia Checklist is limited in this regard, as it requires a basic level of insight into the pathological nature of one’s feelings and behavior. At the peak of psychosis, however, patients may not disclose symptoms because of suspiciousness or because they regard these phenomena as normal and justified. Future variants of the program should also incorporate information about psychosis and address delusion-specific biases, which were purposefully excluded from the present study. This methodological constraint might have been one reason why no overall effect on delusions emerged.

The study has a number of limitations that we would like to bring to the reader’s attention. First, apart from the reliance on self-report instruments, the short interval between assessments precludes conclusions about whether the effects on JTC are sustained and whether effects on delusion severity might emerge at a later point in time. Second, diagnostic status was not formally confirmed for all patients (it was confirmed for n = 32 of 70; the remaining participants stated that a mental health expert had diagnosed schizophrenia, but this information was not formally confirmed). However, results remained unchanged when verification status was taken into account. While careful psychometric checks support the quality of the data, we cannot entirely dismiss the possibility that some participants did not fulfill full diagnostic criteria for schizophrenia spectrum disorders. That said, a recent study demonstrated that online studies are more difficult to sabotage than often thought (Moritz et al. 2013): experts asked to simulate schizophrenia differed from real patients on a number of psychometric aspects. We must also acknowledge that the sample was not representative, as more women than men participated and patients were rather stable (no inpatients). Finally, we did not include an active control group. We chose a waitlist control group because, if both groups had improved, it would otherwise have been hard to determine the unique contribution of either intervention. Now that the feasibility and partial effectiveness of the program have been shown, subsequent trials should compare CBC against an active control.

To conclude, the present program shows promise for ameliorating a cognitive bias that is ascribed a core role in delusion formation. At the same time, it did not result in major improvements in psychopathology, presumably because we deliberately decided against addressing psychosis. We can only speculate why this generic MCT variant was superior to the original MCT in improving JTC but less potent for psychopathology. For example, the new program was self-paced and provided more extensive corrective experiences across many areas of daily life, whereas MCT has a narrower focus on single biases. The new program may thus challenge feelings of omniscience more than MCT does. We recommend combining the present program with other approaches, particularly cognitive behavioral therapy (CBT), MCT and other cognitive bias modification programs targeting emotional symptoms (Steel et al. 2010; Turner et al. 2011).

References

Alvarez-Jimenez, M., Alcazar-Corcoles, M. A., Gonzalez-Blanch, C., Bendall, S., McGorry, P. D., & Gleeson, J. F. (2014). Online, social media and mobile technologies for psychosis treatment: A systematic review on novel user-led interventions. Schizophrenia Research, 156, 96–106.

Andreou, C., Treszl, A., Roesch-Ely, D., Kother, U., Veckenstedt, R., & Moritz, S. (2014). Investigation of the role of the jumping-to-conclusions bias for short-term functional outcome in schizophrenia. Psychiatry Research, 218, 341–347.

Balzan, R. P., Delfabbro, P. H., Galletly, C. A., & Woodward, T. S. (2012a). Over-adjustment or miscomprehension? A re-examination of the jumping to conclusions bias. Australian and New Zealand Journal of Psychiatry, 46, 532–540.

Balzan, R. P., Delfabbro, P. H., Galletly, C. A., & Woodward, T. S. (2012b). Reasoning heuristics across the psychosis continuum: The contribution of hypersalient evidence-hypothesis matches. Cognitive Neuropsychiatry, 17, 431–450.

Balzan, R. P., Delfabbro, P. H., Galletly, C. A., & Woodward, T. S. (2013). Confirmation biases across the psychosis continuum: The contribution of hypersalient evidence-hypothesis matches. British Journal of Clinical Psychology, 52, 53–69.

Balzan, R. P., Delfabbro, P. H., Galletly, C. A., & Woodward, T. S. (2014). Metacognitive training for patients with schizophrenia: Preliminary evidence for a targeted, single-module programme. Australian and New Zealand Journal of Psychiatry, 48, 1126–1136.

Bentall, R. P. (1992). A proposal to classify happiness as a psychiatric disorder. Journal of Medical Ethics, 18, 94–98.

Bentall, R. P., Kinderman, P., & Kaney, S. (1994). The self, attributional processes and abnormal beliefs: towards a model of persecutory delusions. Behaviour Research and Therapy, 32, 331–341.

Broadbent, D. (1958). Perception and communication. London: Pergamon Press.

Chun, M. M., & Marois, R. (2002). The dark side of visual attention. Current Opinion in Neurobiology, 12, 184–189.

Combs, D. R., Adams, S. D., Penn, D. L., Roberts, D., Tiegreen, J., & Stem, P. (2007). Social cognition and interaction training (SCIT) for schizophrenia. Schizophrenia Bulletin, 33, 585.

Fioravanti, M., Bianchi, V., & Cinti, M. E. (2012). Cognitive deficits in schizophrenia: an updated metanalysis of the scientific evidence. BMC Psychiatry, 12, 64.

Fortune, E. E., & Goodie, A. S. (2012). Cognitive distortions as a component and treatment focus of pathological gambling: A review. Psychology of Addictive Behaviors, 26, 298–310.

Freeman, D. (2007). Suspicious minds: The psychology of persecutory delusions. Clinical Psychology Review, 27, 425–457.

Freeman, D., Garety, P. A., Bebbington, P. E., Smith, B., Rollinson, R., Fowler, D., & Dunn, G. (2005). Psychological investigation of the structure of paranoia in a non-clinical population. British Journal of Psychiatry, 186, 427–435.

Garety, P. A., & Freeman, D. (2013). The past and future of delusions research: from the inexplicable to the treatable. British Journal of Psychiatry, 203, 327–333.

Garety, P. A., Freeman, D., Jolley, S., Dunn, G., Bebbington, P. E., Fowler, D. G., & Dudley, R. (2005). Reasoning, emotions, and delusional conviction in psychosis. Journal of Abnormal Psychology, 114, 373–384.

Garety, P. A., Hemsley, D. R., & Wessely, S. (1991). Reasoning in deluded schizophrenic and paranoid patients. Biases in performance on a probabilistic inference task. Journal of Nervous and Mental Disease, 179, 194–201.

Hanssen, M. S. S., Bijl, R. V., Vollebergh, W., & Van Os, J. (2003). Self-reported psychotic experiences in the general population: A valid screening tool for DSM-III-R psychotic disorders? Acta Psychiatrica Scandinavica, 107, 369–377.

Hautzinger, M., & Brähler, M. (1993). Allgemeine Depressionsskala (ADS) [General Depression Scale]. Göttingen: Hogrefe.

Heinrichs, R. W., & Zakzanis, K. K. (1998). Neurocognitive deficit in schizophrenia: A quantitative review of the evidence. Neuropsychology, 12, 426–445.

Huq, S. F., Garety, P. A., & Hemsley, D. R. (1988). Probabilistic judgements in deluded and non-deluded subjects. Quarterly Journal of Experimental Psychology, 40A, 801–812.

Kahneman, D. (2012). Thinking, fast and slow. London: Penguin Books.

Konings, M., Bak, M., Hanssen, M., van Os, J., & Krabbendam, L. (2006). Validity and reliability of the CAPE: A self-report instrument for the measurement of psychotic experiences in the general population. Acta Psychiatrica Scandinavica, 114, 55–61.

Lincoln, T. M., Mehl, S., Exner, C., Lindenmeyer, J., & Rief, W. (2010a). Attributional style and persecutory delusions. Evidence for an event independent and state specific external-personal attribution bias for social situations. Cognitive Therapy and Research, 34, 297–302.

Lincoln, T. M., Ziegler, M., Lüllmann, E., Müller, M. J., & Rief, W. (2010b). Can delusions be self-assessed? Concordance between self- and observer-rated delusions in schizophrenia. Psychiatry Research, 178, 249–254.

Lincoln, T. M., Ziegler, M., Mehl, S., & Rief, W. (2010c). The jumping to conclusions bias in delusions: Specificity and changeability. Journal of Abnormal Psychology, 119, 40–49.

Mehl, S., Landsberg, M. W., Schmidt, A. C., Cabanis, M., Bechdolf, A., Herrlich, J., … Wagner, M. (2014). Why do bad things happen to me? Attributional style, depressed mood, and persecutory delusions in patients with schizophrenia. Schizophrenia Bulletin, 40, 1338–1346.

Miller, E. K. (2013). The “working” of working memory. Dialogues in Clinical Neuroscience, 15, 411–418.

Moritz, S., Andreou, C., Schneider, B. C., Wittekind, C. E., Menon, M., Balzan, R. P., & Woodward, T. S. (2014a). Sowing the seeds of doubt: A narrative review on metacognitive training in schizophrenia. Clinical Psychology Review, 34, 358–366.

Moritz, S., Göritz, A. S., Van Quaquebeke, N., Andreou, C., Jungclaussen, D., & Peters, M. J. (2014b). Knowledge corruption for visual perception in individuals high on paranoia. Psychiatry Research, 215, 700–705.

Moritz, S., & Jelinek, L. (2009). Inversion of the “unrealistic optimism” bias contributes to overestimation of threat in obsessive-compulsive disorder. Behavioural and Cognitive Psychotherapy, 37, 179–193.

Moritz, S., & Larøi, F. (2008). Differences and similarities in the sensory and cognitive signatures of voice-hearing, intrusions and thoughts: Voice-hearing is more than a disorder of input. Schizophrenia Research, 102, 96–107.

Moritz, S., & Pohl, R. F. (2009). Biased processing of threat-related information rather than knowledge deficits contributes to overestimation of threat in obsessive-compulsive disorder. Behavior Modification, 33, 763–777.

Moritz, S., Van Quaquebeke, N., & Lincoln, T. M. (2012a). Jumping to conclusions is associated with paranoia but not general suspiciousness: A comparison of two versions of the probabilistic reasoning paradigm. Schizophrenia Research and Treatment, 2012, 384039.

Moritz, S., Van Quaquebeke, N., Lincoln, T. M., Köther, U., & Andreou, C. (2013). Can we trust the internet to measure psychotic symptoms? Schizophrenia Research and Treatment, 2013, 457010.

Moritz, S., Veckenstedt, R., Andreou, C., Bohn, F., Hottenrott, B., Leighton, L., et al. (2014c). Sustained and “sleeper” effects of group metacognitive training for schizophrenia: A randomized clinical trial. JAMA Psychiatry, 71, 1103–1111.

Moritz, S., & Woodward, T. S. (2005). Jumping to conclusions in delusional and non-delusional schizophrenic patients. British Journal of Clinical Psychology, 44, 193–207.

Moritz, S., & Woodward, T. S. (2006). The contribution of metamemory deficits to schizophrenia. Journal of Abnormal Psychology, 115, 15–25.

Moritz, S., Woodward, T. S., & Ruff, C. C. (2003). Source monitoring and memory confidence in schizophrenia. Psychological Medicine, 33, 131–139.

Moritz, S., Woznica, A., Andreou, C., & Köther, U. (2012b). Response confidence for emotion perception in schizophrenia using a Continuous Facial Sequence Task. Psychiatry Research, 200, 202–207.

Peters, M. J., Cima, M. J., Smeets, T., de Vos, M., Jelicic, M., & Merckelbach, H. (2007). Did I say that word or did you? Executive dysfunctions in schizophrenic patients affect memory efficiency, but not source attributions. Cognitive Neuropsychiatry, 12, 391–411.

Pohl, R. F. (2004). Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory. Hove: Psychology Press.

Radloff, L. S. (1977). The CES-D scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1, 385–401.

Roberts, D. L., & Penn, D. L. (2009). Social cognition and interaction training (SCIT) for outpatients with schizophrenia: A preliminary study. Psychiatry Research, 166, 141–147.

Roiser, J. P., & Sahakian, B. J. (2013). Hot and cold cognition in depression. CNS Spectrums, 18, 139–149.

Ross, K., Freeman, D., Dunn, G., & Garety, P. (2011). A randomized experimental investigation of reasoning training for people with delusions. Schizophrenia Bulletin, 37, 324–333.

Savulich, G., Shergill, S., & Yiend, J. (2012). Biased cognition in psychosis. Journal of Experimental Psychopathology, 3, 514–536.

Schacter, D. L. (1999). The seven sins of memory. Insights from psychology and cognitive neuroscience. American Psychologist, 54, 182–203.

Schaefer, J., Giangrande, E., Weinberger, D. R., & Dickinson, D. (2013). The global cognitive impairment in schizophrenia: Consistent over decades and around the world. Schizophrenia Research, 150, 42–50.

Speechley, W., Moritz, S., Ngan, E., & Woodward, T. S. (2012). Impaired integration of disconfirmatory evidence and delusions in schizophrenia. Journal of Experimental Psychopathology, 3, 688–701.

Steel, C., Wykes, T., Ruddle, A., Smith, G., Shah, D. M., & Holmes, E. A. (2010). Can we harness computerised cognitive bias modification to treat anxiety in schizophrenia? A first step highlighting the role of mental imagery. Psychiatry Research, 178, 451–455.

Stefanis, N. C., Hanssen, M., Smirnis, N. K., Avramopoulos, D. A., Evdokimidis, I., & Stefanis, C. N. (2002). Evidence that three dimensions of psychosis have a distribution in the general population. Psychological Medicine, 32, 347–358.

Turner, R., Hoppitt, L., Hodgekins, J., Wilkinson, J., Mackintosh, B., & Fowler, D. (2011). Cognitive bias modification in the treatment of social anxiety in early psychosis: A single case series. Behavioural and Cognitive Psychotherapy, 39, 341–347.

van der Gaag, M. (2006). A neuropsychiatric model of biological and psychological processes in the remission of delusions and auditory hallucinations. Schizophrenia Bulletin, 32(Suppl. 1), 113–122.

Waller, H., Freeman, D., Jolley, S., Dunn, G., & Garety, P. (2011). Targeting reasoning biases in delusions: A pilot study of the Maudsley Review Training Programme for individuals with persistent, high conviction delusions. Journal of Behavior Therapy and Experimental Psychiatry, 42, 414–421.

Wittekind, C. E., Terfehr, K., Otte, C., Jelinek, L., Hinkelmann, K., & Moritz, S. (2014). Mood-congruent memory in depression—the influence of personal relevance and emotional context. Psychiatry Research, 215, 606–613.

Woodward, T. S., Balzan, R. P., Menon, M., & Moritz, S. (2014). Metacognitive training and therapy: An individualized and group intervention for psychosis. In P. H. Lysaker, G. Dimaggio & M. Brüne (Eds.), Social cognition and metacognition in schizophrenia. Psychopathology and treatment approaches (pp. 179–195). Oxford, UK: Academic Press Inc.

Conflict of Interest

Steffen Moritz, Lisa Endlich, Helena Mayer-Stassfurth, Christina Andreou, Nora Ramdani, Franz Petermann and Ryan P. Balzan declare that they have no conflict of interest. The study was not externally funded, and the results have been neither published nor presented elsewhere.

Informed Consent

All procedures were in accordance with the ethical standards of the Ethical Board of the German Psychological Society (DGPs). We obtained informed consent from all individual participants taking part in our study. Study aims were fully disclosed.

Animal Rights

No animal studies were carried out by the authors for this article.

Additional information

Franz Petermann shares senior authorship.

Cite this article

Moritz, S., Mayer-Stassfurth, H., Endlich, L. et al. The Benefits of Doubt: Cognitive Bias Correction Reduces Hasty Decision-Making in Schizophrenia. Cogn Ther Res 39, 627–635 (2015). https://doi.org/10.1007/s10608-015-9690-8

DOI: https://doi.org/10.1007/s10608-015-9690-8