Abstract

We provide a new hierarchy of semidefinite programming relaxations, called NCTSSOS, to solve large-scale sparse noncommutative polynomial optimization problems. This hierarchy features the exploitation of term sparsity hidden in the input data for eigenvalue and trace optimization problems. NCTSSOS complements the recent work that exploits correlative sparsity for noncommutative optimization problems by Klep et al. (MP, 2021), and is the noncommutative analogue of the TSSOS framework by Wang et al. (SIAMJO 31: 114–141, 2021, SIAMJO 31: 30–58, 2021). We also propose an extension exploiting simultaneously correlative and term sparsity, as done previously in the commutative case (Wang in CS-TSSOS: Correlative and term sparsity for large-scale polynomial optimization, 2020). Under certain conditions, we prove that the optima of the NCTSSOS hierarchy converge to the optimum of the corresponding dense semidefinite programming relaxation. We illustrate the efficiency and scalability of NCTSSOS by solving eigenvalue/trace optimization problems from the literature as well as randomly generated examples involving up to several thousand variables.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A polynomial optimization problem (POP) consists of minimizing a polynomial over a basic closed semialgebraic set, namely an intersection of finitely many polynomial superlevel sets. Even if solving a POP is NP-hard in general [25], one can rely on the so-called “moment-sums of squares (SOS) hierarchy”, also referred to as “Lasserre’s hierarchy” [23] to compute a sequence of lower bounds for the POP. Each lower bound in the sequence is obtained by solving a semidefinite program (SDP) [2]. Thanks to Putinar’s Positivstellensatz [35], if the quadratic module generated by the polynomials describing the semialgebraic set is Archimedean, the sequence of these SDP lower bounds converges from below to the global optimum of the POP.

Although most POPs involve commuting variables, we are also interested in noncommutative POPs (NCPOP), i.e., POPs involving noncommuting variables (e.g. matrices, operators on a Hilbert space). The applications of NCPOPs include control theory and linear systems in engineering [37], quantum theory and quantum information science [16, 30, 32], matrix factorization ranks [15], machine learning [50, 51] and so on, and new applications are emerging.

The noncommutative (nc) analogue of Lasserre’s hierarchy [12, 31, 33], often called the “Navascués-Pironio-Acín (NPA) hierarchy”, or “moment-sums of hermitian squares (SOHS) hierarchy”, allows one to compute arbitrarily close lower bounds of the minimal eigenvalue of an nc polynomial over an nc semialgebraic set. In the same spirit, one can also obtain a hierarchy of SDP relaxations to approximate as closely as desired the minimal trace of an nc polynomial over an nc semialgebraic set [9]. We also refer the interested reader to [22] for the case of more general trace polynomials, i.e., polynomials in noncommuting variables and traces of their products.

From the view of applications, the common bottleneck of the Lasserre/NPA hierarchy is that the involved sequence of SDP relaxations becomes intractable very quickly as the number of variables n or the relaxation order d increases. In the commutative setting, the matrices involved at relaxation order d is of size \(\left( {\begin{array}{c}n+d\\ d\end{array}}\right) \); in the nc setting, the size of matrices involved at relaxation order d is even larger. It is already hard to solve such an SDP for \(n\simeq 30\) (\(n\simeq 20\) in the nc setting) and \(d=2\) on a modern standard laptop (at least when one relies on interior-point solvers).

1.1 Remedies by exploiting sparsity for (NC)POP

In certain situations, the SDP relaxations arising from POPs can be solved with adequate first-order methods rather than with costly interior-point algorithms; see e.g., [28, 49], where the authors exploit the constant trace property of the matrices in SDP relaxations of quadratically constrained quadratic programs or combinatorial optimization problems. In any case, it is worth finding remedies in view of the sparsity of (NC)POPs to prevent from the computational blow-up of the Lasserre/NPA hierarchy, by decreasing the sizes of the matrices involved in the SDP relaxations.

The first remedy is to partition the input variables into cliques when the polynomials involved in the objective function and the constraints fulfill a so-called correlative sparsity pattern. The resulting moment-SOS hierarchy is obtained by assembling sparse SDP matrices in terms of these cliques of variables [41, 42]. Under certain conditions, the lower bounds given by this hierarchy still converge to the global optimum of the original problem [24]. When the sizes of these cliques of variables are small enough (e.g., less than 10 in [41] or less than 20 in [20]), one can significantly improve the scalability of Lasserre’s hierarchy to handle problems with a large number of variables. For instance, by exploiting correlative sparsity, one can compute roundoff error bounds [26, 27] with up to several hundred variables, and solve optimal power flow problems [20] or deep learning problems [13] with up to thousands of variables. Several extensions have been investigated, including volume computation of sparse semialgebraic sets [38], or minimization of rational functions [8, 29]. Recently, Klep, the second author and Povh designed an nc analogue of this sparsity adapted hierarchy for both eigenvalue and trace minimization problems [21]. Nevertheless, when the sizes of variable cliques provided by correlative sparsity are relatively big (say \(\ge 20\) in the commutative setting and \(\ge 10\) in the nc setting), or when the correlative sparsity pattern is even fully dense, one might face again the same issue of untractable SDPs.

Another complementary remedy consists of exploiting term sparsity. For unconstrained problems, it means that the objective function involves few terms (monomials or words). One can then reduce the size of the associated SDP matrix by computing a smaller monomial basis via the Newton polytope method [36]. The nc analogue for this method is the Newton chip method [11, §2.3] in the context of eigenvalue optimization and the tracial Newton polytope [11, §3.3] in the context of trace optimization.

Besides obtaining a smaller monomial basis, in both unconstrained and constrained cases, one can rely on a term-sparsity adapted moment-SOS hierarchy (called TSSOS), following the line of research recently pursued by the two authors, Lasserre and Mai in [44, 46, 47]. The core idea of TSSOS is partitioning the monomial bases used to construct SDP relaxations into blocks in view of the correlations between monomials and then building SDP matrices to comply with this block structure. More precisely, one first define the term sparsity pattern (tsp) graph associated with a POP whose nodes are monomials from the monomial basis. Two nodes of the tsp graph are connected via an edge if and only if the product of the corresponding monomials belongs to the supports of the polynomials involved in the POP or is a monomial square. TSSOS is based on an iterative procedure, whose input is the tsp graph of the POP. Each iteration consists of two steps: first one performs a support extension operation on the graph and successively one performs a chordal extension operation on the graph (“maximal” chordal extensions are used in [47] while approximately minimum chordal extensions are used in [46]). At each iteration, one can construct an SDP relaxation with matrices of sparsity pattern represented by the corresponding graph. In doing so, TSSOS provides us with a two-level moment-SOS hierarchy involving sparse SDP matrices. TSSOS can be further combined with correlative sparsity, which allows one to solve large-scale POPs with several thousand variables and constraints [48]. Apart from (commutative) POPs, the idea of TSSOS can be also used to develop more efficient SOS-based algorithms for other problems, e.g. the approximation of joint spectral radius [45].

Contributions Motivated by the performance of TSSOS for commutative POPs, we develop an nc analogue of the TSSOS framework (called NCTSSOS) in this paper, in order to handle large-scale eigenvalue/trace optimization problems with sparse input data.

First, we extend in Sect. 3 the notion of term sparsity patterns to unconstrained eigenvalue optimization problems. We show how to build the tsp graph and derive a two-step iterative procedure to enlarge the graph via the support extension operation and the chordal extension operation. Based on this, we then give the NCTSSOS hierarchy. The generalization to constrained eigenvalue optimization problems is provided in Sect. 3.2. Under certain conditions, we prove that the optima of the NCTSSOS hierarchy converge to the optimum of the corresponding dense relaxation. In Sect. 4, we show how to benefit simultaneously from both correlative and term sparsity, to obtain an nc variant of the so-called “CS-TSSOS” hierarchy [48]. Section 5 is dedicated to trace optimization. For both unconstrained and constrained trace optimization problems, we provide a term sparsity (and combined with correlative sparsity) adapted hierarchy of SDP relaxations. In Sect. 6, we demonstrate the computational efficiency, scalability and accuracy of the NCTSSOS hierarchy by various numerical examples involving up to several thousand variables.

The algorithmic framework of the NCTSSOS hierarchy has been released as an open-source Julia [4] package, also called NCTSSOS, which is available online and comes together with a documentation.Footnote 1

Our term sparsity (and combined with correlative sparsity) adapted moment-SOHS hierarchies appear in a similar manner as the ones obtained for the commutative case [46,47,48]. We believe that it is of interest for researchers using noncommutative optimization tools to have a self-contained paper stating explicitly the construction of tsp graphs, support/chordal extension operations, as well as the different term sparsity (and combined with correlative sparsity) adapted SDP formulations, either for eigenvalue or trace optimization. While the overall strategy to obtain tsp graphs for eigenvalue optimization is very similar to the commutative case, it is less straightforward for trace optimization, where it is mandatory to introduce the cyclic analog of the tsp graph and the support extension operation. Furthermore, we would like to emphasize that the main contribution of this paper is to show a significant quantitative improvement with respect to the previous results obtained for various nc eigenvalue/trace optimization problems. We hope that these results will convince researchers in related fields, including quantum information physicists relying on the NPA hierarchy, about the potential impact that NCTSSOS could have on solving their problems more efficiently.

2 Notation and preliminaries

In this section, we recall some notations, definitions and basic results that will be used in the rest of this paper.

2.1 Noncommutative polynomials

For a positive integer r, let us denote by \({\mathbb {S}}_r\) (resp. \({\mathbb {S}}^+_r\)) the space of all symmetric (resp. positive semidefinite (PSD)) matrices of size r, and by \({\mathbb {S}}_r^n\) the set of n-tuples \({\underline{A}} = (A_1, \ldots , A_n)\) of symmetric matrices \(A_i\) of size r. For matrices \(A,B\in {\mathbb {S}}_r\) (resp. vectors \({\mathbf {u}},{\varvec{v}}\in {\mathbb {R}}^r\)), let \(\langle A, B\rangle \in {\mathbb {R}}\) (resp. \(\langle {\mathbf {u}}, {\varvec{v}}\rangle \in {\mathbb {R}}\)) be the trace inner-product, defined by \(\langle A, B\rangle ={\mathrm{Tr}}(A^TB)\) (resp. \(\langle {\mathbf {u}},{\varvec{v}}\rangle ={\mathbf {u}}^T{\varvec{v}}\)) and let \(A\circ B\in {\mathbb {S}}_r\) denote the Hadamard, or entrywise, product of A and B, defined by \([A\circ B]_{ij} = A_{ij}B_{ij}\). For a fixed \(n\in {\mathbb {N}}\backslash \{0\}\), let \({\underline{X}}=(X_1, \ldots , X_n)\) be a tuple of letters and consider the set of all possible words of finite length in \({\underline{X}}\) which is denoted by \(\langle {\underline{X}}\rangle \). The empty word is denoted by 1. We denote by \({\mathbb {R}}\langle {\underline{X}}\rangle \) the ring of real polynomials in the noncommutating variables \({\underline{X}}\). An element f in \({\mathbb {R}}\langle {\underline{X}}\rangle \) can be written as \(f=\sum _{w\in \langle {\underline{X}}\rangle }a_ww\), \(a_w\in {\mathbb {R}}\), which is called a noncommutative polynomial (nc polynomial for short). The support of f is defined by \({\mathrm{supp}}(f):=\{w\in \langle {\underline{X}}\rangle \mid a_w\ne 0\}\) and the degree of f, denoted by \(\deg (f)\), is the length of the longest word in \({\mathrm{supp}}(f)\). For a given \(d\in {\mathbb {N}}\), let us denote by \({\mathbf {W}}_d\) the column vector of all words of degree at most d arranged with respect to. the lexicographic order. The ring \({\mathbb {R}}\langle {\underline{X}}\rangle \) is equipped with the involution \(\star \) that fixes \({\mathbb {R}}\cup \{X_1, \ldots , X_n\}\) point-wise and reverses words, so that \({\mathbb {R}}\langle {\underline{X}}\rangle \) is the \(\star \)-algebra freely generated by n symmetric letters \(X_1, \ldots , X_n\). The set of symmetric elements in \({\mathbb {R}}\langle {\underline{X}}\rangle \) is defined as \({\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle :=\{f\in {\mathbb {R}}\langle {\underline{X}}\rangle \mid f^{\star }=f\}\). We use \(|\cdot |\) to denote the cardinality of a set and let \([m]:=\{1,2,\ldots ,m\}\) for \(m\in {\mathbb {N}}\backslash \{0\}\).

2.2 Sums of hermitian squares

An nc polynomial of the form \(g^{\star }g\) is called a hermitian square. An nc polynomial \(f\in {\mathbb {R}}\langle {\underline{X}}\rangle \) is called a sum of hermitian squares (SOHS) if there exist nc polynomials \(g_1, \ldots , g_r\in {\mathbb {R}}\langle {\underline{X}}\rangle \) such that \(f = g_1^{\star }g_1+g_2^{\star }g_2+\ldots +g_r^{\star }g_r\). The set of SOHS is denoted by \(\varSigma \langle {\underline{X}}\rangle \). Checking whether a given nc polynomial \(f\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) is an SOHS can be cast as a semidefinite program (SDP) due to the following theorem.

Theorem 1

( [19], Theorem 1.1) Let \(f\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) with \(\deg (f)=2d\). Then \(f\in \varSigma \langle {\underline{X}}\rangle \) if and only if there exists a matrix \(Q\succeq 0\) satisfying

Any symmetric matrix Q (not necessarily PSD) satisfying (1) is called a Gram matrix of f. The standard monomial basis \({\mathbf {W}}_d\) used in (1) can be reduced via the Newton chip method; see Chapter 2 in [11].

2.3 Semialgebraic sets and quadratic modules

Given \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), the semialgebraic set \({\mathcal {D}}_S\) associated with S is defined by

The operator semialgebraic set \({\mathcal {D}}_S^{\infty }\) is the set of all bounded self-adjoint operators \({\underline{A}}\) on a Hilbert space endowed with a scalar product \(\langle \cdot ,\cdot \rangle \) making \(g({\underline{A}})\) a PSD operator, for all \(g\in S\). The quadratic module \({\mathcal {M}}_S\), generated by S, is defined by

and the truncated quadratic module \({\mathcal {M}}_{S,d}\) of order \(d\in {\mathbb {N}}\), generated by S, is

A quadratic module \({\mathcal {M}}\) is Archimedean if for each \(h\in {\mathbb {R}}\langle {\underline{X}}\rangle \), there exists \(N\in {\mathbb {N}}\) such that \(N-h^{\star }h\in {\mathcal {M}}\). The noncommutative analog of Putinar’s Positivstellensatz describing noncommutative polynomials positive on \({\mathcal {D}}_S^{\infty }\) with Archimedean \({\mathcal {M}}_S\) is due to Helton and McCullough:

Theorem 2

( [18], Theorem 1.2) Let \(\{f\}\cup S\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and assume that \({\mathcal {M}}_S\) is Archimedean. If \(f(A)\succ 0\) for all \(A\in {\mathcal {D}}_S^{\infty }\), then \(f\in {\mathcal {M}}_S\).

2.4 Moment and localizing matrices

With \({\mathbf {y}}=(y_{w})_{w\in \langle {\underline{X}}\rangle }\) being a sequence indexed by the standard monomial basis \(\langle {\underline{X}}\rangle \) of \({\mathbb {R}}\langle {\underline{X}}\rangle \), let \(L_{{\mathbf {y}}}:{\mathbb {R}}\langle {\underline{X}}\rangle \rightarrow {\mathbb {R}}\) be the linear functional

Given a monomial basis \({\mathbf {B}}\), the noncommutative moment matrix \(M_{{\mathbf {B}}}({\mathbf {y}})\) associated with \({\mathbf {B}}\) and \({\mathbf {y}}\) is the matrix with rows and columns indexed by \({\mathbf {B}}\) such that

If \({\mathbf {B}}\) is the standard monomial basis \({\mathbf {W}}_d\), we also denote \(M_{{\mathbf {W}}_d}({\mathbf {y}})\) by \(M_{d}({\mathbf {y}})\).

Suppose \(g=\sum _{w}b_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and let \({\mathbf {y}}=(y_{w})_{w\in \langle {\underline{X}}\rangle }\) be given. For any positive integer d, the noncommutative localizing matrix \(M_{d}(g{\mathbf {y}})\) associated with g and \({\mathbf {y}}\) is the matrix with rows and columns indexed by \({\mathbf {W}}_d\) such that

2.5 Eigenvalue optimization for noncommutative polynomials

Given \(f=\sum _{w}a_ww\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), the eigenvalue minimization problem for f is defined by:

Assume that \({\mathbf {B}}\) is a monomial basis. Then \((\text {EP}_0)\) is equivalent to the following SDP ( [11]):

Writing \(M_{{\mathbf {B}}}({\mathbf {y}})=\sum _{w}A_{w}y_{w}\) for appropriate matrices \(\{A_{w}\}_w\), the dual SDP of (6) is

where \({\mathbf {B}}^{\star }{\mathbf {B}}:=\{u^{\star }v\mid u,v\in {\mathbf {B}}\}\) and \(\delta _{1w}\) is the usual Kronecker symbol.

Given \(f=\sum _{w}a_ww\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), let us consider the following eigenvalue minimization problem for f over the operator semialgebraic set \({\mathcal {D}}_S^{\infty }\):

For convenience, we set \(g_0:=1\) and let \(d_j=\lceil \deg (g_j)/2\rceil \) for \(j=0,1,\ldots ,m\). Assume that \({\hat{d}}\ge d := \max \{\lceil \deg (f)/2\rceil ,d_1,\ldots ,d_m\}\) is a positive integer. As shown in [34], one has the following hierarchy of moment relaxations, indexed by \({\hat{d}}\), to obtain a sequence of lower bounds for the optimum \(\lambda _{\min }(f, S)\) of (\(\text {EQ}_0\)):

We call \({\hat{d}}\) the relaxation order. If the quadratic module \({\mathcal {M}}_S\) generated by S is Archimedean, then the sequence of lower bounds \((\lambda _{{\hat{d}}}(f, S))_{{{\hat{d}}}\ge d}\) converges to \(\lambda _{\min }(f, S)\). See, e.g., [11, Corollary 4.11] for a proof.

For each j, writing \(M_{{\hat{d}}-d_j}(g_j{\mathbf {y}})=\sum _{w}D_{w}^jy_{w}\) for appropriate matrices \(\{D_{w}^j\}_{j,w}\), we can write the dual SDP of (9) as

2.6 Trace optimization for noncommutative polynomials

Given \(g,h\in {\mathbb {R}}\langle {\underline{X}}\rangle \), the nc polynomial \([g, h]:=gh-hg\) is called a commutator. Two nc polynomials \(g,h\in {\mathbb {R}}\langle {\underline{X}}\rangle \) are said to be cyclically equivalent, denoted by \(g{\mathop {\sim }\limits ^{\text {cyc}}} h\), if \(g-h\) is a sum of commutators. Let \(w\in \langle {\underline{X}}\rangle \). The canonical representative [w] of w is the minimal one with respect to the lexicographic order among all words cyclically equivalent to w. For \({\mathbf {A}}\subseteq \langle {\underline{X}}\rangle \), \([{\mathbf {A}}]:=\{[w]\mid w\in {\mathbf {A}}\}\). For an nc polynomial \(f=\sum _{w}a_ww\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), the canonical representative of f is defined by \([f]:=\sum _{w}a_w[w]\in {\mathbb {R}}\langle {\underline{X}}\rangle \) and the cyclic degree of f is defined as \({\mathrm{cdeg}}(f):=\deg ([f])\). We warn the reader about a small abuse of notation as [k] stands for \(\{1,\dots ,k\}\) when k is a positive integer.

The normalized trace of a matrix \(A=[a_{ij}]\in {\mathbb {S}}_r\) is given by \({\mathrm{tr}}\,A=\frac{1}{r}\sum _{i=1}^ra_{ii}\). Given \(f=\sum _{w}a_ww\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), the trace minimization problem for f is defined by

Let \(d={\mathrm{cdeg}}(f)\). As shown in [11], \((\text {TP}_0)\) admits the following moment relaxation:

The dual of \((\text {TP})\) reads as

Given \(f=\sum _{w}a_ww\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), the trace minimization problem for f over the semialgebraic set \({\mathcal {D}}_S\) is defined by

We produce lower bounds on \({\mathrm{tr}}_{\min }(f, S)\) by restricting ourselves to a specific subset of \({{\mathcal {D}}_S^\infty }\), obtained by considering the algebra of all bounded operators on a Hilbert space to finite von Neumann algebras [39] of type I and type II. We introduce \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1}\) as the trace minimum of f on \({{\mathcal {D}}_S^{{{\,\mathrm{II}\,}}_1}}\). Since \({{\mathcal {D}}_S}\) is contained in \({{\mathcal {D}}_S^{{{\,\mathrm{II}\,}}_1}}\), one has \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1} \le {\mathrm{tr}}_{\min }(f,S)\). For a proper definition of \({{\mathcal {D}}_S^{{{\,\mathrm{II}\,}}_1}}\), we refer the interested reader to, e.g., [11, Definition 1.59]. As shown in [10], one has the following series of moment relaxations indexed by \({\hat{d}}\ge d\) to obtain a hierarchy of lower bounds for \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1}\):

We call \({\hat{d}}\) the relaxation order. If the quadratic module \({\mathcal {M}}_S\) generated by S is Archimedean, then the sequence of lower bounds \((\mu _{{\hat{d}}}(f, S))_{{{\hat{d}}}\ge d}\) converges to \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1}\). See, e.g., [11, Corollary 5.5] for a proof.

The dual of (15) reads as

2.7 Chordal graphs and sparse matrices

In this subsection, we briefly revisit the relationship between chordal graphs and sparse matrices, which is crucial for the sparsity-exploitation of this paper. For more details on chordal graphs and sparse matrices, the reader is referred to [40].

An (undirected) graph G(V, E) or simply G consists of a set of nodes V and a set of edges \(E\subseteq \{\{v_i,v_j\}\mid (v_i,v_j)\in V\times V\}\). When G is a graph, we also use V(G) and E(G) to indicate the node set of G and the edge set of G, respectively. The adjacency matrix of G is denoted by \(B_G\) for which we put ones on its diagonal. For two graphs G, H, we say that G is a subgraph of H if \(V(G)\subseteq V(H)\) and \(E(G)\subseteq E(H)\), denoted by \(G\subseteq H\). For a graph G(V, E), a cycle of length k is a set of nodes \(\{v_1,v_2,\ldots ,v_k\}\subseteq V\) with \(\{v_k,v_1\}\in E\) and \(\{v_i, v_{i+1}\}\in E\), for \(i=1,\ldots ,k-1\). A chord in a cycle \(\{v_1,v_2,\ldots ,v_k\}\) is an edge \(\{v_i, v_j\}\) that joins two nonconsecutive nodes in the cycle.

A graph is called a chordal graph if all its cycles of length at least four have a chord. Note that any non-chordal graph G(V, E) can always be extended to a chordal graph \({\overline{G}}(V,{\overline{E}})\) by adding appropriate edges to E, which is called a chordal extension of G(V, E). A clique \(C\subseteq V\) of G is a subset of nodes where \(\{v_i,v_j\}\in E\) for any \(v_i,v_j\in C\). If a clique C is not a subset of any other clique, then it is called a maximal clique. It is known that maximal cliques of a chordal graph can be enumerated efficiently in linear time in the number of nodes and edges of the graph [5].

Given a graph G(V, E) with \(V=[|V|]\), a symmetric matrix Q with rows and columns indexed by V is said to have sparsity pattern G if \(Q_{ij}=Q_{ji}=0\) whenever \(i\ne j\) and \(\{i,j\}\notin E\), i.e., \(B_G\circ Q=Q\). Let \({\mathbb {S}}_G\) be the set of symmetric matrices with sparsity pattern G. A matrix in \({\mathbb {S}}_G\) exhibits a block structure: each block corresponds to a maximal clique of G. The maximal block size is the maximal size of maximal cliques of G, namely, the clique number of G. Note that there might be overlaps between blocks because different maximal cliques may share nodes.

Given a maximal clique C of G(V, E), we define a matrix \(P_{C}\in {\mathbb {R}}^{|C|\times |V|}\) as

where C(i) denotes the i-th node in C, sorted in the ordering compatibly with V. Note that \(P_{C}QP_{C}^T\in {\mathbb {S}}_{|C|}\) extracts a principal submatrix defined by the indices in the clique C from a symmetric matrix \(Q\in {\mathbb {S}}_{|V|}\), and \(P_{C}^TQ_{C}P_{C}\) inflates a \(|C|\times |C|\) matrix \(Q_{C}\) into a sparse \(|V|\times |V|\) matrix.

The PSD matrices with sparsity pattern G form a convex cone

When the sparsity pattern graph G is chordal, the cone \({\mathbb {S}}^+_{|V|}\cap {\mathbb {S}}_G\) can be decomposed as a sum of simple convex cones, as stated in the following theorem.

Theorem 3

( [1], see also [40]) Let G(V, E) be a chordal graph and assume that \(C_1,\ldots ,C_t\) are the list of maximal cliques of G(V, E). Then a matrix \(Q\in {\mathbb {S}}^+_{|V|}\cap {\mathbb {S}}_G\) if and only if there exists \(Q_{i}\in {\mathbb {S}}^+_{|C_i|}\) for \(i=1,\ldots ,t\) such that \(Q=\sum _{i=1}^tP_{C_i}^TQ_{i}P_{C_i}\).

Given a graph G(V, E) with \(V=[|V|]\), let \(\varPi _{G}\) be the projection from \({\mathbb {S}}_{|V|}\) to the subspace \({\mathbb {S}}_G\), i.e., for \(Q\in {\mathbb {S}}_{|V|}\),

We denote by \(\varPi _{G}({\mathbb {S}}^+_{|V|})\) the set of matrices in \({\mathbb {S}}_G\) that have a PSD completion, i.e.,

One can check that the PSD completable cone \(\varPi _{G}({\mathbb {S}}^+_{|V|})\) and the PSD cone \({\mathbb {S}}^+_{|V|}\cap {\mathbb {S}}_G\) form a pair of dual cones in \({\mathbb {S}}_G\); see [40, Section 10.1] for a proof. Moreover, for a chordal graph G, the decomposition result for the cone \({\mathbb {S}}^+_{|V|}\cap {\mathbb {S}}_G\) in Theorem 3 leads to the following characterization of the PSD completable cone \(\varPi _{G}({\mathbb {S}}^+_{|V|})\).

Theorem 4

( [17], see also [40]) Let G(V, E) be a chordal graph and assume that \(C_1,\ldots ,C_t\) are the list of maximal cliques of G(V, E). Then a matrix \(Q\in \varPi _{G}({\mathbb {S}}^+_{|V|})\) if and only if \(Q_{i}=P_{C_i}QP_{C_i}^T\succeq 0\) for \(i=1,\ldots ,t\).

3 Eigenvalue optimization for noncommutative polynomials with term sparsity

In this section, we consider the eigenvalue optimization problem for noncommutative polynomials with term sparsity. For the reader’s convenience, we first deal with the unconstrained case and then generalize to the constrained case.

3.1 The unconstrained case

In this subsection, we describe an iterative procedure to exploit term sparsity for the moment-SOHS relaxations (6–7) of the unconstrained NCPOP \((\text {EP}_0)\) defined in (5).

Let \(f=\sum _{w\in {\mathbf {A}}}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) with \({\mathrm{supp}}(f)={\mathbf {A}}\) (w.l.o.g. assuming \(1\in {\mathbf {A}}\)). Assume that \({\mathbf {B}}\) is the monomial basis returned by the Newton chip method ( [11, §2.3]) with \(r=|{\mathbf {B}}|\). To represent the term sparsity in f, in the sequel we will consider graphs with \(V:={\mathbf {B}}\) as the set of nodes. Suppose that G(V, E) is such a graph. We define the support of G by

We further define two operations on G: support extension and chordal extension.

(1) support extension The support extension of G, denoted by \({\mathrm{SE}}(G)\), is the graph with nodes \({\mathbf {B}}\) and with edges

where \({\mathbf {B}}^2:=\{u^{\star }u\mid u\in {\mathbf {B}}\}\).

Example 1

Consider the graph G(V, E) with

Then \(E({\mathrm{SE}}(G))=\{\{1,YZ\},\{Y,ZX\},\{Y,Z\}\}\). See Fig. 1 for the support extension \({\mathrm{SE}}(G)\) of G.

The support extension \({\mathrm{SE}}(G)\) of G for Example 1. The dashed edges are added after the support extension

(2) Chordal extension: For a graph G, we denote any specific chordal extension of G by \({\overline{G}}\). There are generally various chordal extensions of G. In this paper, we will consider two particular types of chordal extensions: the maximal chordal extension and approximately smallest chordal extensions. By the maximal chordal extension, we refer to the chordal extension that completes every connected component of G. The maximal chordal extension can be easily computed by listing all connected components. Another advantage of the maximal chordal extension is that there is no overlap among maximal cliques. However, the clique number of the maximal chordal extension may be large among all possible chordal extensions. A chordal extension with the smallest clique number is called a smallest chordal extension. Computing a smallest chordal extension of a graph is an NP-complete problem in general. Fortunately, several heuristic algorithms, e.g., the greedy minimum degree and the greedy minimum fill-ins, are known to efficiently produce a good approximation; see [6] for more detailed discussions. Throughout the paper, we assume that for graphs G, H,

This assumption is reasonable since any chordal extension of H restricting to G is also a chordal extension of G.

Example 2

Consider the graph G(V, E) with \(V=\{X_1,X_2,X_3,X_4,X_5,\) \(X_6\}\) and \(E=\{\{X_1,X_2\},\{X_2,X_3\},\{X_3,X_4\},\{X_4,X_5\},\{X_5,X_6\},\{X_6,X_1\}\}.\) See Fig. 2 for a smallest chordal extension \({\overline{G}}\) of G which has 4 maximal cliques of size 3. On the other hand, the maximal chordal extension of G has one maximal clique of size 6.

A smallest chordal extension \({\overline{G}}\) of G for Example 2. The dashed edges are added after the chordal extension

Now we define \(G_0(V,E_0)\) to be the graph with \(V={\mathbf {B}}\) and

which is called the term sparsity pattern (tsp) graph associated with f. We then iteratively define a sequence of graphs \((G_k(V,E_k))_{k\ge 1}\) by alternately performing support extension and chordal extension to \(G_0(V,E_0)\):

When f is sparse (i.e., \(G_1\) is not complete), by replacing \(M_{{\mathbf {B}}}({\mathbf {y}})\succeq 0\) with the weaker condition \(B_{G_k}\circ M_{{\mathbf {B}}}({\mathbf {y}})\in \varPi _{G_k}({\mathbb {S}}^+_{r})\) in (6), we obtain a series of sparse moment relaxations of \((\text {EP})\) (and \((\text {EP}_0)\)) indexed by \(k\ge 1\):

We call k the sparse order. By construction, one has \(G_{k}\subseteq G_{k+1}\) for all \(k\ge 1\) and therefore the sequence of graphs \((G_k(V,E_k))_{k\ge 1}\) stabilizes after a finite number of steps. We denote the stabilized graph by \(G_{\bullet }(V,E_{\bullet })\) and the corresponding moment relaxation by \((\text {EP}^{\bullet })\) (with optimum \(\lambda _{\bullet }(f)\)).

For each \(k\ge 1\), the dual SDP of (24) reads as

where \(A_{w}\) is defined in Sect. 2.5.

Theorem 5

Assume that \(f\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Then the following hold:

-

(1)

For each \(k\ge 1\), there is no duality gap between \((\text {EP}^k)\) (24) and its dual (25).

-

(2)

The sequence \((\lambda _k(f))_{k\ge 1}\) is monotone nondecreasing and \(\lambda _k(f)\le \lambda _{\min }(f)\) for all k (with \(\lambda _{\min }(f)\) defined in (6)).

-

(3)

If the maximal chordal extension is used in (23), then \((\lambda _k(f))_{k\ge 1}\) converges to \(\lambda _{\min }(f)\) in finitely many steps, i.e., \(\lambda _{\bullet }(f)=\lambda _{\min }(f)\).

Proof

(i) Note that the SDP problem \((\text {EP})\) (6) has a Slater’s point, i.e., a strictly feasible solution (see, e.g., Proposition 4.9 in [11]), say \(M_{{\mathbf {B}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\). Since each block of \(B_{G_k}\circ M_{{\mathbf {B}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) is a principal submatrix of \(M_{{\mathbf {B}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\), we have that \(B_{G_k}\circ M_{{\mathbf {B}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) is a Slater’s point of \((\text {EP}^k)\) by Theorem 4. So by the duality theory of convex programming, there is no duality gap between \((\text {EP}^k)\) and its dual.

(ii) Because \(G_{k}\subseteq G_{k+1}\), each maximal clique of \(G_k\) is a subset of some maximal clique of \(G_{k+1}\). Thus by Theorem 4, we have that \((\text {EP}^k)\) is a relaxation of \((\text {EP}^{k+1})\) (and also a relaxation of \((\text {EP})\)). This yields the desired conclusions.

(iii) Let \({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}=(y^{{{\,\mathrm{opt}\,}}}_{w})_w\) be an arbitrary feasible solution of \((\text {EP}^{\bullet })\). Note that \(\{y_{w}\mid w\in {\mathrm{supp}}(G_{\bullet })\cup {\mathbf {B}}^2\}\) is the set of decision variables involved in \((\text {EP}^{\bullet })\) and \(\{y_{w}\mid w\in {\mathbf {B}}^{\star }{\mathbf {B}}\}\) is the set of decision variables involved in \((\text {EP})\). We then define a vector \({\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}=({\overline{y}}^{{{\,\mathrm{opt}\,}}}_{w})_{w\in {\mathbf {B}}^{\star }{\mathbf {B}}}\) as follows:

If the maximal chordal extension is used in (23), then matrices in \(\varPi _{G_k}({\mathbb {S}}_{r}^+)\) for all \(k\ge 1\) are block-diagonal (up to permutation). As a consequence, \(B_{G_{k}}\circ M_{{\mathbf {B}}}({\mathbf {y}})\in \varPi _{G_k}({\mathbb {S}}_{r}^+)\) implies \(B_{G_{k}}\circ M_{{\mathbf {B}}}({\mathbf {y}})\succeq 0\). By construction, we have \(M_{{\mathbf {B}}}({\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}})=B_{G_{\bullet }}\circ M_{{\mathbf {B}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\succeq 0\). Therefore \({\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}\) is a feasible solution of \((\text {EP})\) and hence \(L_{{\mathbf {y}}^{{{\,\mathrm{opt}\,}}}}(f)=L_{{\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}}(f)\ge \lambda _{\min }(f)\). This yields \(\lambda _{\bullet }(f)\ge \lambda _{\min }(f)\) since \({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}\) is an arbitrary feasible solution of \((\text {EP}^{\bullet })\). By (ii), we already have \(\lambda _{\bullet }(f)\le \lambda _{\min }(f)\). Therefore, \(\lambda _{\bullet }(f)=\lambda _{\min }(f)\). \(\square \)

If approximately smallest chordal extensions are used in (23), the sequence \((\lambda _k(f))_{k\ge 1}\) doesn’t necessarily converge to \(\lambda _{\min }(f)\). The following is an example.

Example 3

Consider the nc polynomial \(f=X^2-XY-YX+3Y^2-2XYX+2XY^2X-YZ-ZY+6Z^2+9X^2Y+9Z^2Y-54ZYZ+142ZY^2Z\) ( [21]). The monomial basis given by the Newton chip method is \(\{1,X,Y,Z,YX,YZ\}\). We have \(E_0=\{\{1,YX\},\{1,YZ\},\{X,YX\},\{X,Y\},\{Y,Z\},\{Y,YZ\},\{Z,YZ\}\}\). Figure 3 shows the tsp graph \(G_0\) (without dashed edges) and its chordal extension \(G_1\) (with dashed edges) for f. The graph sequence \((G_k)_{k\ge 1}\) immediately stabilizes at \(k=1\). Solving the SDP problem (\(\text {EP}^1\)) associated with \(G_1\), we obtain \(\lambda _1(f)\approx -0.00355\) whereas we have \(\lambda _{\min }(f)=0\).

The tsp graph \(G_0\) and its chordal extension \(G_1\) for Example 3

The next result states that \(\lambda _1(f)=\lambda _{\min }(f)\) always holds for a quadratic f.

Proposition 1

Suppose that the nc polynomial \(f\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) in (\(\text {EP}_0\)) is quadratic, i.e., \(\deg (f)=2\). Then \(\lambda _1(f)=\lambda _{\min }(f)\).

Proof

Let \({\mathbf {A}}={\mathrm{supp}}(f)\). As f is quadratic, we may take \({\mathbf {B}}=\{1,X_1,\ldots ,X_n\}\) as a monomial basis. Let \(G_0\) be the tsp graph associated with f. We only need to prove that if f admits a PSD Gram matrix, then f admits a Gram matrix in \({\mathbb {S}}^+_{n+1}\cap {\mathbb {S}}_{G_0}\). Suppose that \(Q=[q_{ij}]_{i,j=0}^n\) is a PSD Gram matrix for f indexed by \({\mathbf {B}}\). Note that for \(i,j>0\), if \(\{X_i,X_j\}\not \in E(G_0)\), then we must have \(X_iX_j,X_jX_i\notin {\mathbf {A}}\), which implies \(q_{ij}=0\); for \(i=0,j>0\), if \(\{1,X_j\}\not \in E(G_0)\), then we must have \(X_j\notin {\mathbf {A}}\), which implies \(q_{0j}=q_{j0}=0\). It follows that \(Q\in {\mathbb {S}}_{G_0}\) as desired. \(\square \)

3.2 The constrained case

In this subsection, we generalize the iterative procedure in Sect. 3.1 to the constrained case and we show how to iteratively exploit term sparsity for the moment-SOHS hierarchy (9–10) of the constrained NCPOP \((\text {EQ}_0)\) defined in (8).

Assume that \(f=\sum _{w}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Let

As in Sect. 2.5, we set \(g_0:=1\), and let \(d_j=\lceil \deg (g_j)/2\rceil \), \(j\in \{0\}\cup [m]\) and \(d=\max \{\lceil \deg (f)/2\rceil ,d_1,\ldots ,d_m\}\). Fixing a relaxation order \({\hat{d}}\ge d\), we define a graph \(G_{{\hat{d}}}^{\text {tsp}}(V_{{\hat{d}}}^{\text {tsp}},E_{{\hat{d}}}^{\text {tsp}})\) with \(V_{{\hat{d}}}^{\text {tsp}}={\mathbf {W}}_{{\hat{d}}}\) and

where \({\mathbf {W}}_{{\hat{d}}}^2:=\{u^{\star }u\mid u\in {\mathbf {W}}_{{\hat{d}}}\}\). We call \(G_{{\hat{d}}}^{\text {tsp}}\) the term sparsity pattern (tsp) graph associated with \({\mathbf {A}}\) (or f and S).

For a graph G(V, E) with \(V\subseteq \langle {\underline{X}}\rangle \) and \(g\in {\mathbb {R}}\langle {\underline{X}}\rangle \), let us define

Let \(G_{{\hat{d}},0}^{(0)}=G_{{\hat{d}}}^{\text {tsp}}\) and \(G_{{\hat{d}},j}^{(0)}\) be the empty graph for \(j\in [m]\). Then for each \(j\in \{0\}\cup [m]\), we iteratively define a sequence of graphs \((G_{{\hat{d}},j}^{(k)}(V_{{\hat{d}},j},E_{{\hat{d}},j}^{(k)}))_{k\ge 1}\) with \(V_{{\hat{d}},j}={\mathbf {W}}_{{\hat{d}}-d_j}\) via two successive operations:

(1) support extension Let \(F_{{\hat{d}},j}^{(k)}\) be the graph with \(V(F_{{\hat{d}},j}^{(k)})={\mathbf {W}}_{{\hat{d}}-d_j}\) and

where \(u^{\star }{\mathrm{supp}}(g_j)v:=\{u^{\star }wv\mid w\in {\mathrm{supp}}(g_j)\}\).

(2) chordal extension: Let

Let \(r_j=|{\mathbf {W}}_{{\hat{d}}-d_j}|\) for \(j\in \{0\}\cup [m]\). Then by replacing \(M_{{\hat{d}}-d_j}(g_j{\mathbf {y}})\succeq 0\) with the weaker condition \(B_{G_{{\hat{d}},j}^{(k)}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}})\in \varPi _{G_{{\hat{d}},j}^{(k)}}({\mathbb {S}}^+_{r_j})\) for \(j\in \{0\}\cup [m]\) in (9), we obtain the following series of sparse moment relaxations for (\(\text {EQ}_{{\hat{d}}}\)) indexed by \(k\ge 1\):

We call k the sparse order. By construction, one has \(G_{{\hat{d}},j}^{(k)}\subseteq G_{{\hat{d}},j}^{(k+1)}\) for all j, k. Therefore, for every j, the sequence of graphs \((G_{{\hat{d}},j}^{(k)})_{k\ge 1}\) stabilizes after a finite number of steps. We denote the stabilized graphs by \(G_{{\hat{d}},j}^{(\bullet )}\) for all j and denote the corresponding moment relaxation by \((\text {EQ}_{{\hat{d}},\bullet }^{\text {ts}})\) (with optimum \(\lambda _{{\hat{d}},\bullet }^{\text {ts}}(f, S)\)).

For each \(k\ge 1\), the dual of \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) reads as

where \(\{D_{w}^j\}_{j,w}\) is defined in Sect. 2.5.

For \(\varepsilon >0\), we define the nc \(\varepsilon \)-neighborhood of 0 to be

Theorem 6

Let \(\{f\}\cup S\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Then the following hold:

-

(1)

Suppose that \({\mathcal {D}}_S\) contains an nc \(\varepsilon \)-neighborhood of 0.

Then for all \({\hat{d}},k\), there is no duality gap between \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) (31) and its dual (32).

-

(2)

Fixing a relaxation order \({\hat{d}}\ge d\), the sequence \((\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) is monotone nondecreasing and \(\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S)\le \lambda _{{\hat{d}}}(f, S)\) for all k (with \(\lambda _{{\hat{d}}}(f, S)\) defined in (9)).

-

(3)

Fixing a sparse order \(k\ge 1\), the sequence \((\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S))_{{\hat{d}}\ge d}\) is monotone nondecreasing.

-

(4)

If the maximal chordal extension is used in (30), then \((\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) converges to \(\lambda _{{\hat{d}}}(f, S)\) in finitely many steps, i.e., \(\lambda _{{\hat{d}},\bullet }^{\text {ts}}(f, S)=\lambda _{{\hat{d}}}(f, S)\).

Proof

(i) By Proposition 4.9 in [11], the SDP \((\text {EQ}_{{\hat{d}}})\) (9) admits a Slater’s point, say \({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}\). Then \(M_{{\hat{d}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}), M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) are positive definite. Since each block of \(B_{G_{{\hat{d}},0}^{(k)}}\circ M_{{\hat{d}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) (resp. \(B_{G_{{\hat{d}},j}^{(k)}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\)) is a principal submatrix of \(M_{{\hat{d}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) (resp. \(M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\)), we have that \(B_{G_{{\hat{d}},0}^{(k)}}\circ M_{{\hat{d}}}({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}), B_{G_{{\hat{d}},j}^{(k)}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\) correspond to a Slater’s point of \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) by Theorem 4. So by the duality theory of convex programming, there is no duality gap between \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) and its dual.

(ii) For all j, k, because \(G_{{\hat{d}},j}^{(k)}\subseteq G_{{\hat{d}},j}^{(k+1)}\), each maximal clique of \(G_{{\hat{d}},j}^{(k)}\) is a subset of some maximal clique of \(G_{{\hat{d}},j}^{(k+1)}\). Hence by Theorem 4, \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) is a relaxation of \((\text {EQ}_{{\hat{d}},k+1}^{\text {ts}})\) (and also a relaxation of \((\text {EQ}_{{\hat{d}}})\)). Therefore, \((\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) is monotone nondecreasing and \(\lambda ^{\text {ts}}_{{\hat{d}},k}(f, S)\le \lambda _{{\hat{d}}}(f, S)\) for all k.

(iii) The conclusion follows if we can show that \(G_{{\hat{d}},j}^{(k)}\subseteq G_{{\hat{d}}+1,j}^{(k)}\) for all \({\hat{d}},j\) since by Theorem 4 this implies that \((\text {EQ}_{{\hat{d}},k}^{\text {ts}})\) is a relaxation of \((\text {EQ}_{{\hat{d}}+1,k}^{\text {ts}})\). Let us prove \(G_{{\hat{d}},j}^{(k)}\subseteq G_{{\hat{d}}+1,j}^{(k)}\) by induction on k. For \(k=1\), from (27), we have \(E_{{\hat{d}},0}^{(0)}\subseteq E_{{\hat{d}}+1,0}^{(0)}\), which implies that \(G_{{\hat{d}},j}^{(1)}\subseteq G_{{\hat{d}}+1,j}^{(1)}\) for all \({\hat{d}},j\). Now assume that \(G_{{\hat{d}},j}^{(k)}\subseteq G_{{\hat{d}}+1,j}^{(k)}\) for all \({\hat{d}},j\) hold for a given \(k\ge 1\). Then from (21), (29), (30) and by the induction hypothesis, we have \(G_{{\hat{d}},j}^{(k+1)}\subseteq G_{{\hat{d}}+1,j}^{(k+1)}\) for all \({\hat{d}},j\), which completes the induction and also completes the proof.

(iv) Let \({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}=(y^{{{\,\mathrm{opt}\,}}}_{w})_w\) be an arbitrary feasible solution of \((\text {EQ}_{{\hat{d}},\bullet }^{\text {ts}})\). Note that \(\{y_{w}\mid w\in \bigcup _{i=0}^m{\mathrm{supp}}_{g_j}(G_{{\hat{d}},j}^{(\bullet )})\cup {\mathbf {W}}_{{\hat{d}}}^2\}\) is the set of decision variables involved in \((\text {EQ}_{{\hat{d}},\bullet }^{\text {ts}})\) and \(\{y_{w}\mid w\in {\mathbf {W}}_{{\hat{d}}}^{\star }{\mathbf {W}}_{{\hat{d}}}\}\) is the set of decision variables involved in (\(\text {EQ}_{{\hat{d}}}\)). We then define a vector \({\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}=({\overline{y}}^{{{\,\mathrm{opt}\,}}}_{w})_{w\in {\mathbf {W}}_{{\hat{d}}}^{\star }{\mathbf {W}}_{{\hat{d}}}}\) as follows:

If the maximal chordal extension is used in (30), then the matrices in \(\varPi _{G_{{\hat{d}},j}^{(k)}}({\mathbb {S}}^+_{r_j})\) for all \(k\ge 1\) are block-diagonal (up to permutation). As a consequence, \(B_{G_{{\hat{d}},j}^{(k)}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}})\in \varPi _{G_{{\hat{d}},j}^{(k)}}({\mathbb {S}}^+_{r_j})\) implies \(B_{G_{{\hat{d}},j}^{(k)}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}})\succeq 0\). By construction, we have \(M_{{\hat{d}}-d_j}(g_j{\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}})=B_{G_{{\hat{d}},j}^{(\bullet )}}\circ M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}^{{{\,\mathrm{opt}\,}}})\succeq 0\) for all \(j\in \{0\}\cup [m]\). Therefore \({\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}\) is a feasible solution of (\(\text {EQ}_{{\hat{d}}}\)) and hence \(L_{{\mathbf {y}}^{{{\,\mathrm{opt}\,}}}}(f)=L_{{\overline{{\mathbf {y}}}}^{{{\,\mathrm{opt}\,}}}}(f)\ge \lambda _{{\hat{d}}}(f, S)\), which yields \(\lambda _{{\hat{d}},\bullet }^{\text {ts}}(f, S)\ge \lambda _{{\hat{d}}}(f, S)\) since \({\mathbf {y}}^{{{\,\mathrm{opt}\,}}}\) is an arbitrary feasible solution of \((\text {EQ}_{{\hat{d}},\bullet }^{\text {ts}})\). By (ii), we already have \(\lambda _{{\hat{d}},\bullet }^{\text {ts}}(f, S)\le \lambda _{{\hat{d}}}(f, S)\). Therefore, \(\lambda _{{\hat{d}},\bullet }^{\text {ts}}(f, S)=\lambda _{{\hat{d}}}(f, S)\). \(\square \)

Following from Theorem 6, we have the following two-level hierarchy of lower bounds for the optimum \(\lambda _{\min }(f,S)\) of \((\text {EQ}_0)\):

We call the array of lower bounds (34) (and its corresponding moment-SOHS relaxations (31–32)) the NCTSSOS hierarchy associated with \((\text {EQ}_0)\).

Remark 1

The NCTSSOS hierarchy entails a trade-off between the computational cost and the quality of the obtained lower bound via the two parameters \({\hat{d}}\) and k. Besides, one has the freedom to choose a specific chordal extension for any graph involved in (30) (e.g., maximal chordal extensions, approximately smallest chordal extensions and so on). This choice affects the resulting sizes of SDP blocks and the quality of the lower bound given by the corresponding SDP relaxation. Intuitively, chordal extensions with smaller clique numbers should lead to SDP blocks of smaller sizes and lower bounds of (possibly) lower quality while chordal extensions with larger clique numbers should lead to SDP blocks with larger sizes and lower bounds of (possibly) higher quality.

Example 4

Consider \(f=2-X^2+XY^2X-Y^2+XYXY+YXYX+X^3Y+YX^3+XY^3+Y^3X\) and \(S=\{1-X^2,1-Y^2\}\). The graph sequence \((G_{2,0}^{(k)})_{k\ge 1}\) for f and S is given in Fig. 4. In fact the graph sequence \((G_{2,j}^{(k)})_{k\ge 1}\) stabilizes at \(k=2\) for all j (with approximately smallest chordal extensions). Using NCTSSOS, we obtain that \(\lambda ^{\text {ts}}_{2,1}(f, S)\approx -2.55482\), \(\lambda ^{\text {ts}}_{2,2}(f, S)=\lambda _{2}(f, S)\approx -2.05111\).

The graph sequence \((G_{2,0}^{(k)})_{k\ge 1}\) in Example 4: left for \(k=1\); right for \(k=2\). The dashed edges are added after a chordal extension

Remark 2

In the case of eigenvalue minimization over nc balls/multi-balls (as in the above example), the first dense relaxation is exact; see Proposition 4.16 and Proposition 4.17 from [11]. We believe that there is no analog of this finite convergence result for term sparsity, i.e., the first or the second step is not always exact. Actually, we have tested this on randomly generated examples of degree 4 (as we do later on for Table 9 in Sect. 6 but without considering correlative sparsity). When using the minimum relaxation order 2 and maximal chordal extensions, the NCTSSOS hierarchy takes a few (typically \(2\sim 3\)) steps to converge to the value of the dense relaxation (i.e., the exact value in this case). The sizes of the resulting SDPs heavily depend on the considered problem.

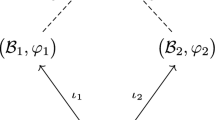

4 Eigenvalue optimization for noncommutative polynomials with combined correlative-term sparsity

The exploitation of term sparsity developed in the previous section can be combined with the exploitation of correlative sparsity discussed in [21] to reduce the computational cost further. To begin with, let us recall some basics on correlative sparsity. For more details, the reader is referred to [21].

4.1 Eigenvalue optimization for noncommutative polynomials with correlative sparsity

As in the commutative case, the exploitation of correlative sparsity in the moment-SOHS hierarchy for NCPOPs consists of two steps: (1) partition the set of variables into subsets according to the correlations between variables emerging in the problem, and (2) construct a sparse moment-SOHS hierarchy with respect to the former partition of variables [21].

More concretely, assuming \(f=\sum _{w}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\) \(\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), we define the correlative sparsity pattern (csp) graph associated with f and S to be the graph \(G^{\text {csp}}\) with nodes \(V=[n]\) and edges E satisfying \(\{i,j\}\in E\) if one of following holds:

-

(1)

there exists \(w\in {\mathrm{supp}}(f)\text { s.t. }X_i,X_j\in {\mathrm{var}}(w)\);

-

(2)

there exists l, with \(1\le l\le m, \text { s.t. }X_i,X_j\in {\mathrm{var}}(g_l)\),

where we use \({\mathrm{var}}(g)\) to denote the set of variables effectively involved in \(g\in {\mathbb {R}}\langle {\underline{X}}\rangle \). Let \({\overline{G}}^{\text {csp}}\) be a chordal extension of \(G^{\text {csp}}\) and \(I_l,l\in [p]\) be the maximal cliques of \({\overline{G}}^{\text {csp}}\) with cardinality denoted by \(n_l,l\in [p]\). Let \({\mathbb {R}}\langle {\underline{X}}(I_l)\rangle \) denote the ring of nc polynomials in the \(n_l\) variables \({\underline{X}}(I_l) = \{X_i\mid i\in I_l\}\). We then partition the constraints \(g_1,\ldots ,g_m\) into groups \(\{g_j\mid j\in J_l\}, l\in [p]\) which satisfy

-

(1)

\(J_1,\ldots ,J_p\subseteq [m]\) are pairwise disjoint and \(\bigcup _{l=1}^pJ_l=[m]\);

-

(2)

for any \(j\in J_l\), \({\mathrm{var}}(g_j)\subseteq {\underline{X}}(I_l)\), \(l\in [p]\).

Next, with \(l\in [p]\) fixed, d a positive integer and \(g\in {\mathbb {R}}\langle {\underline{X}}(I_l)\rangle \), let \(M_d({\mathbf {y}}, I_l)\) (resp. \(M_d(g{\mathbf {y}}, I_l)\)) be the moment (resp. localizing) submatrix obtained from \(M_d({\mathbf {y}})\) (resp. \(M_d(g{\mathbf {y}})\)) by retaining only those rows (and columns) indexed by \(w\in \langle {\underline{X}}(I_l)\rangle \) of \(M_d({\mathbf {y}})\) (resp. \(M_d(g{\mathbf {y}})\)).

Then with \({\hat{d}}\ge d:= \max \{\lceil \deg (f)/2\rceil ,\lceil \deg (g_1)/2\rceil ,\ldots ,\lceil \deg (g_m)/2\rceil \}\), the moment SDP relaxation for \((\text {EQ}_0)\) based on correlative sparsity is defined as

Remark 3

As shown in [21], under Archimedean’s condition (slightly stronger than compactness), the sequence \((\lambda ^{\text {cs}}_{{\hat{d}}}(f, S))_{{\hat{d}}\ge d}\) converges to the global optimum \(\lambda _{\min }(f, S)\).

4.2 Eigenvalue optimization for noncommutative polynomials with combined correlative-term sparsity

The combination of correlative sparsity and term sparsity proceeds in a similar manner as for the commutative case in [48]. Assume that \(f=\sum _{w}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \), \(G^{\text {csp}}\) is the csp graph associated with f and S, and \({\overline{G}}^{\text {csp}}\) is a chordal extension of \(G^{\text {csp}}\). Let \(I_l,l\in [p]\) be the maximal cliques of \({\overline{G}}^{\text {csp}}\) with cardinality denoted by \(n_l,l\in [p]\). Then the set of variables \({\underline{X}}\) is partitioned into \({\underline{X}}(I_1), {\underline{X}}(I_2), \ldots , {\underline{X}}(I_p)\). Let \(J_1,\ldots ,J_p\) be defined as in Sect. 4.1.

Now we consider the term sparsity pattern for each subsystem involving the variables \({\underline{X}}(I_l)\), \(l\in [p]\) respectively as follows. Let

for \(l\in [p]\). As before, let \(g_0=1\), \(d_j=\lceil \deg (g_j)/2\rceil \), \(j\in \{0\}\cup [m]\) and \(d=\max \{\lceil \deg (f)/2\rceil ,d_1,\ldots ,d_m\}\). Fix a relaxation order \({\hat{d}}\ge d\). Let \({\mathbf {W}}_{{\hat{d}}-d_j,l}\) be the standard monomial basis of degree \(\le {\hat{d}}-d_j\) with respect to the variables \({\underline{X}}(I_l)\) and \(G_{{\hat{d}},l}^{\text {tsp}}\) be the tsp graph with nodes \({\mathbf {W}}_{{\hat{d}},l}\) associated with \({\mathbf {A}}_l\) defined as in Sect. 3.2. Assume that \(G_{{\hat{d}},l,0}^{(0)}=G_{{\hat{d}},l}^{\text {tsp}}\) and \(G_{{\hat{d}},l,j}^{(0)},j\in J_l, l\in [p]\) are empty graphs. Letting

we iteratively define a sequence of graphs \((G_{{\hat{d}},l,j}^{(k)}(V_{{\hat{d}},l,j},E_{{\hat{d}},l,j}^{(k)}))_{k\ge 1}\) with \(V_{{\hat{d}},l,j}={\mathbf {W}}_{{\hat{d}}-d_j,l}\) for each \(j\in \{0\}\cup J_l,l\in [p]\) by

where \(F_{{\hat{d}},l,j}^{(k)}\) is the graph with \(V(F_{{\hat{d}},l,j}^{(k)})={\mathbf {W}}_{{\hat{d}}-d_j,l}\) and

Let \(r_{l,j}=|{\mathbf {W}}_{{\hat{d}}-d_j,l}|\) for all l, j. Then for each \(k\ge 1\) (called the sparse order), the sparse moment relaxation based on combined correlative-term sparsity for \((\text {EQ}_0)\) is given by

For any l, j, write \(M_{{\hat{d}}-d_j}(g_j{\mathbf {y}}, I_l)=\sum _{w}D_{w}^{l,j}y_{w}\) for appropriate matrices \(\{D_{w}^{l,j}\}_{l,j,w}\). Then for each \(k\ge 1\), the dual of \((\text {EQ}^{\text {cs-ts}}_{{\hat{d}},k})\) reads as

where \({\mathscr {C}}_{{\hat{d}}}^{(k)}\) is defined as in (37).

By similar arguments as for Theorem 6, we can prove the following theorem.

Theorem 7

Assume that \(\{f\}\cup S\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Then the following hold:

-

(1)

Fixing a relaxation order \({\hat{d}}\ge d\), the sequence \((\lambda ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) is monotone nondecreasing and \(\lambda ^{\text {cs-ts}}_{{\hat{d}},k}(f, S)\le \lambda ^{\text {cs}}_{{\hat{d}}}(f, S)\) for all \(k\ge 1\) (with \(\lambda ^{\text {cs}}_{{\hat{d}}}(f, S)\) defined in Sect. 4.1).

-

(2)

Fixing a sparse order \(k\ge 1\), the sequence \((\lambda ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{{\hat{d}}\ge d}\) is monotone nondecreasing.

-

(3)

If the maximal chordal extension is used in (38), then \((\lambda ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) converges to \(\lambda ^{\text {cs}}_{{\hat{d}}}(f, S)\) in finitely many steps.

From Theorem 7, we deduce the following two-level hierarchy of lower bounds for the optimum \(\lambda _{\min }(f, S)\) of \((\text {EQ}_{0})\):

5 Trace optimization for noncommutative polynomials with term sparsity

The results presented in the previous sections concerning eigenvalue optimization for noncommutative polynomials with term sparsity can be slightly adjusted to deal with trace optimization for noncommutative polynomials with term sparsity. We present the main results concerning trace optimization in this section and omit the proofs.

5.1 The unconstrained case

Let \(f=\sum _{w\in {\mathbf {A}}}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) with \({\mathrm{supp}}(f)={\mathbf {A}}\) (w.l.o.g. assuming \(1\in {\mathbf {A}}\)) and let \(d={\mathrm{cdeg}}(f)\). We define \(H_0(V,E_0)\) to be the graph with \(V={\mathbf {W}}_d\) and

We iteratively define a sequence of graphs \((H_k(V,E_k))_{k\ge 1}\) by

where \({\mathrm{CSE}}(H_{k-1})\) (the cyclic support extension of \(H_{k-1}\)) is the graph with nodes \({\mathbf {W}}_d\) and with edges

Let \(r=|{\mathbf {W}}_d|\). As for eigenvalue optimization, we can consider the following series of sparse moment relaxations for \((\text {TP})\) indexed by \(k\ge 1\):

The dual of \((\text {TP}^k)\) reads as

where \(A_{w}\) is defined as in Sect. 2.5. We call k the sparse order. There is no duality gap between \((\text {TP}^k)\) and its dual. By construction, one has \(H_{k}\subseteq H_{k+1}\) for all \(k\ge 1\) and therefore the sequence of graphs \((H_k(V,E_k))_{k\ge 1}\) stabilizes after a finite number of steps. We denote the stabilized graph by \(H_{\bullet }(V,E_{\bullet })\) and the optimum of the corresponding SDP relaxation by \(\mu _{\bullet }(f)\).

As for eigenvalue optimization, we obtain the following hierarchy of lower bounds for \({\mathrm{tr}}_{\min }(f)\):

Moreover, if the maximal chordal extension is used in (44), then \((\mu _k(f))_{k\ge 1}\) converges to \(\mu (f)\) in finitely many steps, i.e., \(\mu _{\bullet }(f)=\mu (f)\).

Remark 4

The monomial basis \({\mathbf {W}}_d\) used in this subsection can be replaced by the reduced monomial basis returned by the tracial Newton polytope method [11, §3.3]. However, the implementation of this method involves solving \(\left( {\begin{array}{c}n+d\\ d\end{array}}\right) \) LPs with \(n+1\) decision variables, which becomes expensive for large n. Hence we stick to the standard monomial basis \({\mathbf {W}}_d\) for the numerical experiments performed in this paper (see Sect. 6).

5.2 The constrained case

Assume that \(f=\sum _{w}a_{w}w\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \) and \(S=\{g_1,\ldots ,g_m\}\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). As before, let \({\mathbf {A}}={\mathrm{supp}}(f)\cup \bigcup _{j=1}^m{\mathrm{supp}}(g_j)\), \(g_0=1\) and \(d_j=\lceil \deg (g_j)/2\rceil \), \(j\in \{0\}\cup [m]\). Let \(d=\max \{\lceil {\mathrm{cdeg}}(f)/2\rceil ,d_1,\ldots ,d_m\}\). Fix a relaxation order \({\hat{d}}\ge d\). We define a graph \(H_{{\hat{d}}}^{\text {tsp}}(V_{{\hat{d}}},E_{{\hat{d}}}^{\text {tsp}})\) with \(V_{{\hat{d}}}={\mathbf {W}}_{{\hat{d}}}\) and

which is called the cyclic tsp graph associated with \({\mathbf {A}}\) (or f and S). Let \(H_{{\hat{d}},0}^{(0)}=H_{{\hat{d}}}^{\text {tsp}}\) and \(H_{{\hat{d}},j}^{(0)}\) be the empty graph for \(j\in [m]\). We iteratively define a sequence of graphs \((H_{{\hat{d}},j}^{(k)}(V_{{\hat{d}},j},E_{{\hat{d}},j}^{(k)}))_{k\ge 1}\) with \(V_{{\hat{d}},j}={\mathbf {W}}_{{\hat{d}}-d_j}\) for each \(j\in \{0\}\cup [m]\) via two successive operations:

(1) cyclic support extension Let \(K_{{\hat{d}},j}^{(k)}\) be the graph with \(V(K_{j,{\hat{d}}}^{(k)})={\mathbf {W}}_{{\hat{d}}-d_j}\) and

(2) chordal extension Let

Let \(r_j=|{\mathbf {W}}_{{\hat{d}}-d_j}|\). As for eigenvalue optimization, we then consider the following series of sparse moment relaxations for (\(\text {TQ}_{{\hat{d}}}\)) indexed by \(k\ge 1\):

We call k the sparse order. By construction, one has \(H_{{\hat{d}},j}^{(k)}\subseteq H_{{\hat{d}},j}^{(k+1)}\) for all j, k. Therefore, for every j, the sequence of graphs \((H_{{\hat{d}},j}^{(k)})_{k\ge 1}\) stabilizes after a finite number of steps. We denote the stabilized graphs by \(H_{{\hat{d}},j}^{(\bullet )}\) for all j and the optimum of the corresponding SDP relaxation by \(\mu _{{\hat{d}},\bullet }(f, S)\).

For each \(k\ge 1\), the dual of \((\text {TQ}_{{\hat{d}},k}^{\text {ts}})\) reads as

where \(D_{w}^j\) is defined as in Sect. 2.5.

As for eigenvalue optimization, we have the following conclusions.

Theorem 8

Assume that \(\{f\}\cup S\in {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Then the following hold:

-

(1)

If \({\mathcal {D}}_S\) contains an nc \(\varepsilon \)-neighborhood of 0, then for all \({\hat{d}},k\), there is no duality gap between \((\text {TQ}_{{\hat{d}},k}^{\text {ts}})\) (51) and its dual (52).

-

(2)

Fixing a relaxation order \({\hat{d}}\ge d\), the sequence \((\mu ^{\text {ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) is monotone nondecreasing and \(\mu ^{\text {ts}}_{{\hat{d}},k}(f, S)\le \mu _{{\hat{d}}}(f, S)\) for all \(k\ge 1\) (with \(\mu _{{\hat{d}}}(f, S)\) defined in (15)).

-

(3)

Fixing a sparse order \(k\ge 1\), the sequence \((\mu ^{\text {ts}}_{{\hat{d}},k}(f, S))_{{\hat{d}}\ge d}\) is monotone nondecreasing.

-

(4)

If the maximal chordal extension is used in (50), then \((\mu ^{\text {ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) converges to \(\mu _{{\hat{d}}}(f, S)\) in finitely many steps, i.e., \(\mu _{{\hat{d}},\bullet }(f, S)=\mu _{{\hat{d}}}(f, S)\).

Following from Theorem 8, we have the following two-level hierarchy of lower bounds for the optimum \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1}\):

The array of lower bounds (53) (and its associated moment-SOHS relaxations (51)-(52)) is what we call the NCTSSOS hierarchy associated with \((\text {TQ}_0)\).

Example 5

Consider again \(f=2-X^2+XY^2X-Y^2+XYXY+YXYX+X^3Y+YX^3+XY^3+Y^3X\) and \(S=\{1-X^2,1-Y^2\}\). The graph sequence \((H_{2,0}^{(k)})_{k\ge 1}\) for f and S is given in Fig. 5. In fact the graph sequence \((H_{2,j}^{(k)})_{k\ge 1}\) stabilizes at \(k=2\) for all j (with approximately smallest chordal extensions). Using NCTSSOS, we obtain that \(\mu ^{\text {ts}}_{2,1}(f, S)=\mu ^{\text {ts}}_{2,2}(f, S)=\mu _{2}(f, S)\approx -2\).

The graph sequence \((H_{2,0}^{(k)})_{k\ge 1}\) in Example 5: left for \(k=1\); right for \(k=2\). The dashed edges are added after a chordal extension

5.3 Combining correlative sparsity with term sparsity

We can also combine correlative sparsity with term sparsity for trace optimization. Fix a relaxation order \({\hat{d}}\ge d\). Let \({\mathbf {A}},I_l,J_l,{\mathbf {A}}_l,{\mathbf {W}}_{{\hat{d}},l},{\mathbf {W}}_{{\hat{d}}-d_j,l}\) be defined as in Sect. 4.2. Let \(H_{{\hat{d}},l}^{\text {tsp}}\) be the cyclic tsp graph with nodes \({\mathbf {W}}_{{\hat{d}},l}\) associated with \({\mathbf {A}}_l\) defined as in Sect. 5.2. Assume that \(H_{{\hat{d}},l,0}^{(0)}=H_{{\hat{d}},l}^{\text {tsp}}\) and \(H_{{\hat{d}},l,j}^{(0)},j\in J_l, l\in [p]\) are empty graphs. Letting

we iteratively define a sequence of graphs \((H_{{\hat{d}},l,j}^{(k)}(V_{{\hat{d}},l,j},E_{{\hat{d}},l,j}^{(k)}))_{k\ge 1}\) with \(V_{{\hat{d}},l,j}={\mathbf {W}}_{{\hat{d}}-d_j,l}\) for each \(j\in \{0\}\cup J_l,l\in [p]\) by

where \(K_{{\hat{d}},l,j}^{(k)}\) is the graph with \(V(K_{{\hat{d}},l,j}^{(k)})={\mathbf {W}}_{{\hat{d}}-d_j,l}\) and

Let \(r_{l,j}=|{\mathbf {W}}_{{\hat{d}}-d_j,l}|\) for all l, j. Then for each \(k\ge 1\), the moment relaxation based on combined correlative-term sparsity for \((\text {TQ}_0)\) is given by

For each \(k\ge 1\), the dual of \((\text {TQ}^{\text {cs-ts}}_{{\hat{d}},k})\) reads as

where \(D_{w}^{l,j}\) is defined as in Sect. 4.2 and \({\mathscr {D}}_{{\hat{d}}}^{(k)}\) is defined as in (54).

Theorem 9

Assume that \(\{f\}\cup S\subseteq {\mathrm{Sym}}\,{\mathbb {R}}\langle {\underline{X}}\rangle \). Let \(\mu ^{\text {cs}}_{{\hat{d}}}(f, S)\) be the optimum of the \({\hat{d}}\)-th order sparse moment relaxation based on correlative sparsity for \((\text {TQ}_{0})\). Then the following hold:

-

(1)

Fixing a relaxation order \({\hat{d}}\ge d\), the sequence \((\mu ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) is monotone nondecreasing and \(\mu ^{\text {cs-ts}}_{{\hat{d}},k}(f, S)\le \mu ^{\text {cs}}_{{\hat{d}}}(f, S)\) for all \(k\ge 1\).

-

(2)

Fixing a sparse order \(k\ge 1\), the sequence \((\mu ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{{\hat{d}}\ge d}\) is monotone nondecreasing.

-

(3)

If the maximal chordal extension is used in (55), then \((\mu ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) converges to \(\mu ^{\text {cs}}_{{\hat{d}}}(f, S)\) in finitely many steps.

From Theorem 9, we deduce the following two-level hierarchy of lower bounds for the optimum \({\mathrm{tr}}_{\min }(f,S)^{{{\,\mathrm{II}\,}}_1}\):

6 Numerical experiments

In this section, we present numerical results of the proposed NCTSSOS hierarchies for both unconstrained and constrained noncommutative polynomial optimization problems. Our tool to implement these hierarchies, named NCTSSOS, is written as a Julia package. NCTSSOS utilizes the Julia packages LightGraphs [7] to handle graphs, ChordalGraph [43] to generate approximately smallest chordal extensions and JuMP [14] to model SDP. Finally, NCTSSOS relies on MOSEK [3] to solve SDP. NCTSSOS is freely available at

https://github.com/wangjie212/NCTSSOS.

All numerical examples were computed on an Intel Core i5-8265U@1.60GHz CPU with 8GB RAM memory. The timing includes the time for pre-processing (to get the block structure in NCTSSOS), the time for modeling SDP and the time for solving SDP. For most examples tested in this paper (except the random ones), we use approximately smallest chordal extensions since they are much more scalable than the maximal chordal extension while yielding (almost) the same optimum. For comparison purpose, we also implement the dense moment-SOHS relaxation in NCTSSOS. The notations that we use in the following subsections are listed in Table 1.

6.1 Eigenvalue optimization examples

We first focus on the unconstrained case and consider the eigenvalue minimization problem for the following functions.

-

The nc version of the Broyden banded function

$$\begin{aligned} f_{\text {Bb}}({\mathbf {x}})=\sum _{i=1}^n\left( 2X_i+5X_i^3+1-\sum _{j\in J_i}\left( X_j+X_j^2\right) \right) ^{\star }\left( 2X_i+5X_i^3+1-\sum _{j\in J_i}\left( X_j+X_j^2\right) \right) , \end{aligned}$$where \(J_i=\{j\mid j\ne i, \max (1,i-5)\le j\le \min (n,i+1)\}\).

-

The nc version of the chained singular function

$$\begin{aligned} f_{\text {cs}}({\mathbf {x}})=&\sum _{i\in J}(\left( X_{i}+10X_{i+1}\right) ^{\star }\left( X_{i}+10X_{i+1}\right) +5\left( X_{i+2}-X_{i+3}\right) ^{\star }\left( X_{i+2}-X_{i+3}\right) \\&+\left( X^2_{i+1}-4X_{i+1}X_{i+2}+4X_{i+2}^2\right) ^{\star } \left( X^2_{i+1}-4X_{i+1}X_{i+2}+4X_{i+2}^2\right) \\&+10\left( X^2_{i}-20X_iX_{i+3}+100X_{i+3}^2\right) ^{\star }\left( X^2_{i}-20X_iX_{i+3}+100X_{i+3}^2\right) , \end{aligned}$$where \(J=\{1,3,5,\ldots ,n-3\}\).

-

The nc version of the generalized Rosenbrock function

$$\begin{aligned} f_{\text {gR}}({\mathbf {x}})=1+\sum _{i=1}^n\left( 100\left( X_i-X_{i-1}^2\right) ^{\star } \left( X_i-X_{i-1}^2\right) +\left( 1-X_i\right) ^{\star }\left( 1-X_i\right) \right) . \end{aligned}$$ -

The nc version of the chained Wood function

$$\begin{aligned} f_{\text {cW}}({\mathbf {x}})=&1+\sum _{i\in J}\left( 100\left( X_{i+1}-X_{i}^2\right) ^{\star } \left( X_{i+1}-X_{i}^2\right) +\left( 1-X_i\right) ^{\star } \left( 1-X_i\right) +90\left( X_{i+3}\right. \right. \\&\left. -X_{i+2}^2\right) ^{\star }\left( X_{i+3}-X_{i+2}^2\right) +\left( 1-X_{i+2}\right) ^{\star }\left( 1-X_{i+2}\right) +10\left( X_{i+1}+X_{i+3}\right. \\&\left. \left. -2\right) ^{\star }\left( X_{i+1}+X_{i+3}-2\right) +0.1\left( X_{i+1} -X_{i+3}\right) ^{\star }\left( X_{i+1}-X_{i+3}\right) \right) , \end{aligned}$$where \(J=\{1,3,5,\ldots ,n-3\}\) and 4|n.

-

The nc version of the Broyden tridiagonal function

$$\begin{aligned} f_{\text {Bt}}({\mathbf {x}})=&\left( 3X_1-2X_1^2-2X_2+1\right) ^{\star }\left( 3X_1-2X_1^2-2X_2+1\right) \\&+\sum _{i=2}^{n-1}\left( 3X_i-2X_i^2-X_{i-1}-2X_{i+1}+1\right) ^{\star }\left( 3X_i-2X_i^2-X_{i-1}-2X_{i+1}+1\right) \\&+\left( 3X_n-2X_n^2-X_{n-1}+1\right) ^{\star }\left( 3X_n-2X_n^2-X_{n-1}+1\right) . \end{aligned}$$

To solve the unconstrained eigenvalue minimization problem of these functions, we always rely on the Newton chip method to compute a monomial basis, which turns out to be much smaller than the standard monomial basis. We compute \(\lambda ^{\text {ts}}_{1}(f)\) (the optimum of \((\text {EQ}^{\text {ts}}_{1})\)) using approximately smallest chordal extensions and compare the resulting values with \(\lambda _{\min }(f)\) (the optimum of \((\text {EP})\)) corresponding to the dense approach. The results are reported in Table 2, 3, 4, 5, 6, respectively. It is evident from these tables that our sparse approach is much more scalable than the dense approach. The dense approach can never be executed due to the memory limit when the problem has over 100 variables while the sparse approach can easily handle problems with 4000 variables. Meanwhile when the dense approach is executable, the optimal value provided by the sparse approach is quite close (or even equal in many cases) to the one provided by the dense approach.

Now let us consider the constrained case. Let \({\mathcal {D}}\) be the semialgebraic set defined by \(\{1-X_1^2,\ldots ,1-X_n^2,X_1-1/3,\ldots ,X_n-1/3\}\) and the optimization problem is minimizing the eigenvalue of the nc Broyden banded function over \({\mathcal {D}}\). We compute \(\lambda ^{\text {cs-ts}}_{{\hat{d}},1}(f,S)\) (the optimum of \((\text {EQ}^{\text {cs-ts}}_{{\hat{d}},1})\)) using approximately smallest chordal extensions with \({\hat{d}}=3\) (the minimum relaxation order). The results are reported in Table 7. To show the benefits of our method by contrast with the usual sparse approach based on correlative sparsity, we also display the results for the latter approach (i.e., \((\text {EQ}^{\text {cs}}_{{\hat{d}}})\)) and the results for the dense approach in the table. Again one can see from the table that our sparse approach is more scalable than the approach that exploits only correlative sparsity as well as the dense approach. Actually, the last two can never be executed due to the memory limit even when the problem has only 6 variables.

6.2 Randomly generated examples

To compare the performance of the maximal chordal extension with approximate smallest chordal extensions, let us consider the eigenvalue minimization for a random quartic polynomial f over the unit ball. To this end, let \(h\in {\mathbb {R}}\langle X_{1},\ldots ,X_{20}\rangle \) be a random quartic nc polynomials with t terms and coefficients taken from \([-1,1]\), and let \(f=(h+h^{\star })/2\). The results of solving \((\text {EQ}^{\text {ts}}_{2,1})\) are provided in Table 8. It can be seen that even though approximate smallest chordal extensions might give a slightly looser bound, it yields significantly smaller block sizes and hence is more efficient than using the maximal chordal extension.

Now we construct randomly generated examples whose csp graph consists of p maximal cliques of size 15 as follows: let \(f=\sum _{l=1}^p(h_l+h_l^{\star })/2\) where \(h_l\in {\mathbb {R}}\langle X_{10l-9},\ldots ,X_{10l+5}\rangle \) is a random quartic nc polynomials with 15 terms and coefficients taken from \([-1,1]\), and let \(S=\{g_l\}_{l=1}^p\) where \(g_l=1-X_{10l-9}^2-\cdots -X_{10l+5}^2\). We consider the eigenvalue minimization problem for f over the multi-ball \({\mathbb {B}}\) defined by S. Let \(p=50,100,\ldots ,400\) so that we obtain 8 such instancesFootnote 2. We compute the NCTSSOS hierarchy \((\lambda ^{\text {cs-ts}}_{{\hat{d}},k}(f, S))_{k\ge 1}\) with \({\hat{d}}=2\) and report the results of the first three steps (where we use the maximal chordal extension for the first step and use approximate smallest chordal extensions for the second and third steps, respectively) in Table 9. As one may expect, neither the dense approach nor the approach that exploits only correlative sparsity can handle problems with so large sizes. On the other hand, our sparse approach easily scales up to 4005 variables.

6.3 Trace optimization examples

Let us consider the unconstrained trace minimization for the nc Broyden banded function and the nc Broyden tridiagonal function. We compute \(\mu ^{\text {cs-ts}}_{1}(f)\) (the optimum of \((\text {EQ}^{\text {cs-ts}}_{1})\)) using approximately smallest chordal extensions. The results are reported in Table 10–11, respectively. As for eigenvalue minimization, the sparse approach is much more scalable than the dense approach, which actually can never be executed for these examples due to the memory limit.

We next consider the trace minimization for the nc Broyden banded function over the semialgebraic set \({\mathcal {D}}\) defined in Sect. 6.1. We compute \(\mu ^{\text {cs-ts}}_{{\hat{d}},1}(f,S)\) (the optimum of \((\text {TQ}^{\text {cs-ts}}_{{\hat{d}},1})\)) using approximately smallest chordal extensions and compare with the results for the approach that exploits only correlative sparsity and the results for the dense approach. The minimum relaxation order \({\hat{d}}=3\) is used. The results are reported in Table 12, which again demonstrate the scalability of our sparse approach.

Finally, we consider the trace minimization for the randomly generated quartic nc polynomials used in Sect. 6.1 over the multi-ball \({\mathbb {B}}\). We compute the NCTSSOS hierarchy \((\mu ^{\text {cs-ts}}_{{\hat{d}}, k}(f,S))_{k\ge 1}\) with the relaxation order \({\hat{d}}=2\). We report the results of the first three steps (where we always use approximate smallest chordal extensions) in Table 13. As one could expect, neither the dense approach nor the approach that exploits only correlative sparsity can handle these problems. On the other hand, our sparse approach is easily scalable up to 4005 variables.

7 Conclusions and outlook

We have presented the sparsity (term sparsity and combined correlative-term sparsity) adapted moment-SOHS hierarchies for both eigenvalue optimization and trace optimization involving noncommutative polynomials. Numerical experiments demonstrate that these sparse hierarchies are very efficient and scale well with the problem size when appropriate sparsity patterns are accessible. One question left for future investigation is to develop a Gelfand-Naimark-Segal’s style construction for extracting a minimizer adapted to our sparse settings.

Recently a moment-SOHS hierarchy for optimization problems involving trace polynomials was proposed in [22]. It would be worth extending further our sparsity-exploiting framework to handle trace polynomials.

We also plan to use the sparsity adapted moment-SOHS hierarchies developed in this paper to tackle large-scale NCPOPs arising from quantum information and condensed matter physics.

Notes

The polynomials are available at https://wangjie212.github.io/jiewang/code.html.

References

Agler, J., Helton, W., McCullough, S., Rodman, L.: Positive semidefinite matrices with a given sparsity pattern. Linear Algebra Appl. 107, 101–149 (1988)

Anjos, M.F., Lasserre, J.B. (eds.): Handbook on semidefinite, conic and polynomial optimization. International Series in Operations Research & Management Science

ApS, M.: The MOSEK optimization toolbox. Version 8.1. (2017). http://docs.mosek.com/8.1/toolbox/index.html

Bezanson, J., Edelman, A., Karpinski, S., Shah, V.B.: Julia: a fresh approach to numerical computing. SIAM Rev. 59(1), 65–98 (2017)

Blair, J.R., Peyton, B.: An introduction to chordal graphs and clique trees. In: Graph Theory and Sparse Matrix Computation. pp. 1–29. Springer (1993)

Bodlaender, H.L., Koster, A.M.: Treewidth computations I: upper bounds. Inf. Comput. 208(3), 259–275 (2010)

Bromberger, S., Fairbanks, J., other contributors: JuliaGraphs/LightGraphs.jl: an optimized graphs package for the julia programming language (2017). 10.5281/zenodo.889971

Bugarin, F., Henrion, D., Lasserre, J.B.: Minimizing the sum of many rational functions. Math. Programm. Comput. 8(1), 83–111 (2016)

Burgdorf, S., Cafuta, K., Klep, I., Povh, J.: The tracial moment problem and trace-optimization of polynomials. Math. Program. 137(1–2), 557–578 (2013). https://doi.org/10.1007/s10107-011-0505-8

Burgdorf, S., Cafuta, K., Klep, I., Povh, J.: The tracial moment problem and trace-optimization of polynomials. Math. Program. 137(1), 557–578 (2013)

Burgdorf, S., Klep, I., Povh, J.: Optimization of polynomials in non-commuting variables, vol. 2. Springer, bERLIN (2016)

Cafuta, K., Klep, I., Povh, J.: Constrained polynomial optimization problems with noncommuting variables. SIAM J. Optim. 22(2), 363–383 (2012). https://doi.org/10.1137/110830733

Chen, T., Lasserre, J.B., Magron, V., Pauwels, E.: Polynomial optimization for bounding Lipschitz constants of deep networks. arXiv preprint arXiv:2002.03657 (2020)

Dunning, I., Huchette, J., Lubin, M.: JuMP: A modeling language for mathematical optimization. SIAM Rev. 59(2), 295–320 (2017)

Gribling, S., De Laat, D., Laurent, M.: Lower bounds on matrix factorization ranks via noncommutative polynomial optimization. Found. Comput. Math. 19(5), 1013–1070 (2019)

Gribling, S., de Laat, D., Laurent, M.: Bounds on entanglement dimensions and quantum graph parameters via noncommutative polynomial optimization. Math. Program. 170(1), 5–42 (2018)

Grone, R., Johnson, C.R., Sá, E.M., Wolkowicz, H.: Positive definite completions of partial Hermitian matrices. Linear Algebra Appl. 58, 109–124 (1984)

Helton, J., McCullough, S.: A Positivstellensatz for non-commutative polynomials. Trans. Am. Math. Soc. 356(9), 3721–3737 (2004)

Helton, J.W.: Positive noncommutative polynomials are sums of squares. Ann. Math. 156, 675–694 (2002)

Josz, C., Molzahn, D.K.: Lasserre hierarchy for large scale polynomial optimization in real and complex variables. SIAM J. Optim. 28(2), 1017–1048 (2018)

Klep, I., Magron, V., Povh, J.: Sparse noncommutative polynomial optimization. Math Programm. (2021). https://doi.org/10.1007/s10107-020-01610-1

Klep, I., Magron, V., Volčič, J.: Optimization over trace polynomials. arXiv preprint arXiv:2006.12510 (2020)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11(3), 796–817 (2001)

Lasserre, J.B.: Convergent SDP-relaxations in polynomial optimization with sparsity. SIAM J. Optim. 17(3), 822–843 (2006)

Laurent, M.: Sums of squares, moment matrices and optimization over polynomials. Emerg. Appl. Algebr. Geom. (2009). https://doi.org/10.1007/978-0-387-09686-5_7

Magron, V.: Interval enclosures of upper bounds of roundoff errors using semidefinite programming. ACM Trans. Math. Softw. 44(4), 1–18 (2018)

Magron, V., Constantinides, G., Donaldson, A.: Certified roundoff error bounds using semidefinite programming. ACM Trans. Math. Softw. 43(4), 1–34 (2017)

Mai, N.H.A., Lasserre, J.B., Magron, V., Wang, J.: Exploiting constant trace property in large-scale polynomial optimization. arXiv preprint arXiv:2012.08873 (2020)

Mai, N.H.A., Magron, V., Lasserre, J.B.: A sparse version of Reznick’s Positivstellensatz. arXiv preprint arXiv:2002.05101 (2020)