Abstract

We study the convergence rate of the Circumcentered-Reflection Method (CRM) for solving the convex feasibility problem and compare it with the Method of Alternating Projections (MAP). Under an error bound assumption, we prove that both methods converge linearly, with asymptotic constants depending on a parameter of the error bound, and that the one derived for CRM is strictly better than the one for MAP. Next, we analyze two classes of fairly generic examples. In the first one, the angle between the convex sets approaches zero near the intersection, so that the MAP sequence converges sublinearly, but CRM still enjoys linear convergence. In the second class of examples, the angle between the sets does not vanish and MAP exhibits its standard behavior, i.e., it converges linearly, yet, perhaps surprisingly, CRM attains superlinear convergence.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We deal in this paper with the convex feasibility problem (CFP), defined as follows: given closed and convex sets \(K_1, \dots , K_m\subset \mathbb {R}^n\), find a point in \(\cap _{i=1}^mK_i\).

We study the Circumcentered-Reflection method (CRM) for solving CFP under an error bound regularity condition. CRM and generalized circumcenters were introduced in [11, 12], and subsequently studied in [7,8,9,10, 14, 16] with the geometrical appeal of accelerating classical projection/reflection based methods. We will see that a nonaffine setting embedded with an error bound condition provides, at least, linear convergence of CRM. Moreover, we present a quite general instance for which CRM converges superlinearly, opening a path for future research lines on circumcenters-type schemes. In addition to these contributions, we show that CRM is faster than the famous Method of Alternating Projections (MAP), even in the lack of an error bound. MAP has a vast literature (see, for instance, [2, 3, 21, 23]) and concerns CPF for two sets, in principle. However, the discussion below allows us to apply both CRM and MAP to the multi-set CFP.

Two very well-known methods for CFP related to MAP are the Sequential Projection Method (SePM) and the Simultaneous Projection Method (SiPM), which can be traced back to [18, 20] respectively, and are defined as follows. Consider the operators \({\widehat{P}}, {\overline{P}}:\mathbb {R}^n\rightarrow \mathbb {R}^n\) given by \({\widehat{P}}:=P_{K_m}\circ \dots \circ P_{K_1}\), \(\overline{P}:=\frac{1}{m}\sum _{i=1}^mP_{K_i}\), where each \(P_{K_i}:\mathbb {R}^n\rightarrow K_i\) is the orthogonal projection onto \(K_i\). Starting from an arbitrary \(z \in \mathbb {R}^n\), SePM and SiPM generate sequences \((\hat{x}^k)_{k\in \mathbb {N}}\) and \((\bar{x}^k)_{k\in \mathbb {N}}\) given by \(\hat{x}^{k+1}={\widehat{P}}(\hat{x}^k)\), \(\bar{x}^{k+1}=\overline{P}(\bar{x}^k)\), respectively, where \(\bar{x}^{0} = \hat{x}^{0} = z\). When \(\cap _{i=1}^mK_i\ne \emptyset\), the sequences generated by both methods are known to be globally convergent to points belonging to \(\cap _{i=1}^mK_i\), i.e., to solve CFP. Under suitable assumptions, both methods have interesting convergence properties also in the infeasible case, i.e., when \(\cap _{i=1}^mK_i =\emptyset\), but we will not deal with this case. See [4] for an in-depth study of these and other projections methods for CFP.

An interesting relation between SePM and SiPM was found by Pierra [22]. Consider the sets \(\mathrm {K}:=K_1 \times \dots \times K_m\subset \mathbb {R}^{nm}, \mathrm {U}:=\{(x,\dots ,x)\mid x\in \mathbb {R}^m\}\subset \mathbb {R}^{nm}\). Apply SePM to the sets \(\mathrm {K},\mathrm {U}\) in the product space \(\mathbb {R}^{n m}\), i.e., take \(\mathrm {x}^{k+1}=P_{\mathrm {U}}\left( P_{\mathrm {K}}(\mathrm {x}^k)\right)\) starting from \(\mathrm {x}^0\in \mathrm {U}\). Clearly, \(\mathrm {x}^k\) belongs to \(\mathrm {U}\) for all \(k\in \mathbb {N}\), so that we may write \(\mathrm {x}^k=(x^k,\ldots ,x^k)\) with \(x^k\in \mathbb {R}^n\). It was proved in [22] that \(x^{k+1}={\overline{P}}(x^k)\), i.e., a step of SePM applied to two convex sets in the product space \(\mathbb {R}^{n\times m}\) is equivalent to a step of SiPM in the original space \(\mathbb {R}^n\). Thus, SePM with just two sets plays a sort of special role and, therefore, carries a name of its own, namely MAP. Observe that in the equivalence above one of the two sets in the product space, namely \(\mathrm {U}\), is a linear subspace. This fact is essential for the convergence of CRM applied for solving CFP; see [1, 14].

Let us start to focus on the alleged acceleration effect of CRM with respect to MAP. There is abundant numerical evidence of this effect (see [11, 13, 14, 19]); in this paper, we will present some analytical evidence, which strengthens the results from [14]. In view of Pierra’s reformulation [22], the general CFP can be seen as a specific convex-affine intersection problem and since both CRM and MAP converge for the general convex-affine intersection problem, from now on, we seek a point common to a given closed convex set \(K\subset \mathbb {R}^n\) and an affine manifold \(U\subset \mathbb {R}^n\).

For finding a point in \(K\cap U\ne \emptyset\), MAP and CRM iterate by means of the operators \(T=P_U\circ P_K\) and \(C(\cdot )={{\,\mathrm{circ}\,}}(\cdot , R_K(\cdot ), R_U(R_K(\cdot )))\), respectively, where \(R_K=2P_K-{{\,\mathrm{Id}\,}}\) and \(R_U=2P_U-{{\,\mathrm{Id}\,}}\) are the reflection operators over K and U, respectively. For a point \(x\in \mathbb {R}^n\), C(x), when exists, is the point equidistant to \(x,R_K(x)\) and \(R_U(R_K(x))\) that lies in the affine manifold determined by the latter three points.

A first result in the analytical study of the acceleration effect of CRM over MAP was derived in [14], where it was proved that, for all \(x\in U\), C(x) is well-defined and \({{\,\mathrm{dist}\,}}(C(x),K\cap U)\le {{\,\mathrm{dist}\,}}(T(x),K\cap U)\), where \({{\,\mathrm{dist}\,}}\) stands for the Euclidean distance. The previous inequality means that the point obtained after a CRM step is closer to (or at least no farther from) \(K\cap U\) than the one obtained after a MAP step from the same point. This local (or myopic) acceleration does not imply immediately that the CRM sequence converges faster than the MAP one. In order to show global acceleration, we will focus on special situations where the convergence rate of the MAP can be precisely established.

MAP is known to be linearly convergent in several special situations, e.g., when both K and U are affine manifolds (see [21]) or when \(K\cap U\) has nonempty interior (see [3]). In Sect. 2 we will consider another such case, namely when a certain so-called error bound (EB from now on) holds, meaning that there exists \(\omega \in (0,1)\) such that \({{\,\mathrm{dist}\,}}(x, K)\ge \omega {{\,\mathrm{dist}\,}}(x,K\cap U)\) for all \(x\in U\). This error bound resembles the regularity conditions presented in [3, 4, 15]. We will prove that in this case both the MAP and the CRM sequences converge linearly, with asymptotic constants bounded by \(\sqrt{1-\omega ^2}\) for MAP, and by the strictly better bound \(\sqrt{{1-\omega ^2}}/\sqrt{{1+\omega ^{2}}}\) for CRM, thus showing that under EB, CRM is faster than MAP. For the case of MAP, linear convergence under the error bound condition with this asymptotic constant is already known (see, for instance, [3, Corollary 3.14]) even if U is not affine; we present it for the sake of completeness. Then, in Sect. 3 we will exhibit two rather generic families of examples where CRM converges indeed faster than MAP. In the first one, \(K\subset \mathbb {R}^{n+1}\) will be the epigraph of a convex function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) and \(U\subset \mathbb {R}^{n+1}\) a support hyperplane of K. We will show that in this situation, under adequate assumptions on f, the MAP sequence converges sublinearly, while the CRM sequence converges linearly, and we will give as well an explicit bound for the asymptotic constant of the CRM sequence. Also, we will present a somewhat pathological example for which both the MAP sequence and the CRM one converge sublinearly. In the second family, K will still be the epigraph of a convex f, but U will not be a supporting hyperplane of K; rather it will intersect the interior of K. In this case, under not too demanding assumptions on f, the MAP sequence converges linearly (we will give an explicit bound of its asymptotic constant), while CRM converges superlinearly. These results firmly corroborate the already established numerical evidence in [14] of the superiority of CRM over MAP.

2 Preliminaries

We recall first the definition of Q-linear and R-linear convergence.

Definition 2.1

Let \((y^k)_{k\in \mathbb {N}}\subset \mathbb {R}^n\) be a convergent sequence to \(y^*\). Assume that \(y^k\ne y^*\) for all \(k\in \mathbb {N}\). Define

Then, the convergence of \((y^k)_{k\in \mathbb {N}}\) is

-

(i)

Q-superlinearly if \(q=0\);

-

(ii)

Q-lineary if \(q\in (0,1)\);

-

(iii)

Q-sublinearly if \(q\ge 1\);

-

(iv)

R-superlinearly if \(r=0\);

-

(v)

R-linearly if \(r\in (0,1)\);

-

(vi)

R-sublinearly if \(r\ge 1\).

The values q, r are called asymptotic constants of \((y^k)_{k\in \mathbb {N}}\).

It is well known that Q-linear convergence implies R-linear convergence (with the same asymptotic constant), but the converse statement does not hold true.

We remind now the notion of Fejér monotonicity in \(\mathbb {R}^{n}\).

Definition 2.2

A sequence \((y^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to a set M when \(\left\Vert y^{k+1}-y\right\Vert \le \left\Vert y^k-y\right\Vert\), for all \(y\in M\).

Proposition 2.3

If \((y^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to M then

-

(i)

\((y^k)_{k\in \mathbb {N}}\) is bounded;

-

(ii)

if a cluster point \({\bar{y}}\) of \((y^k)_{k\in \mathbb {N}}\) belongs to M, then \(\displaystyle \lim _{k\rightarrow \infty }y^k={\bar{y}}\).

Proof

See Theorem 2.16 in [4]. \(\square\)

We end this section with the main convergence results for MAP and CRM.

Proposition 2.4

Take closed and convex sets \(K_1,K_2\subset \mathbb {R}^n\) such that \(K_1\cap K_2\ne \emptyset\). Let \(P_{K_1}, P_{K_2}\) be the orthogonal projections onto \(K_1,K_2\) respectively. Consider the sequence \((z^k)_{k\in \mathbb {N}}\) generated by MAP starting from any \(z^0\in \mathbb {R}^n\), i.e., \(z^{k+1}=P_{K_2}(P_{K_1}(z^k))\). Then \((z^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to \(K_1\cap K_2\) and converges to a point \(z^*\in K_1\cap K_2\).

Proof

See [17, Theorem 4]. \(\square\)

Let us present the formal definition of the circumcenter.

Definition 2.5

Let \(x,y,z\in \mathbb {R}^n\) be given. The circumcenter \({{\,\mathrm{circ}\,}}(x,y,z)\in \mathbb {R}^n\) is a point satisfying

-

(i)

\(\left\Vert {{\,\mathrm{circ}\,}}(x,y,z) - x\right\Vert =\left\Vert {{\,\mathrm{circ}\,}}(x,y,z) - y\right\Vert =\left\Vert {{\,\mathrm{circ}\,}}(x,y,z) - z\right\Vert\) and,

-

(ii)

\({{\,\mathrm{circ}\,}}(x,y,z)\in {{\,\mathrm{aff}\,}}\{x,y,z\}:=\{w\in \mathbb {R}^n \mid w=x+\alpha (y-x)+\beta (z-x),\;\alpha ,\beta \in \mathbb {R}\}\).

The point \({{\,\mathrm{circ}\,}}(x,y,z)\) is well and uniquely defined if the cardinality of the set \(\{x,y,z\}\) is one or two. In the case in which the three points are all distinct, \({{\,\mathrm{circ}\,}}(x,y,z)\) is well and uniquely defined only if x, y and z are not collinear. For more general notions, definitions and results on circumcenters see [12, 13].

Consider now a closed convex set \(K\subset \mathbb {R}^n\) and an affine manifold \(U\subset \mathbb {R}^n\). Let \(P_K, P_U\) be the orthogonal projections onto K, U respectively. Define \(R_K,R_U, T, C:\mathbb {R}^n\rightarrow \mathbb {R}^n\) as

Proposition 2.6

Let \(K\subset \mathbb {R}^n\) be a nonempty closed convex set. Then, the orthogonal projection \(P_K\) onto K is firmly nonexpansive, that is, for all \(x,y\in \mathbb {R}^n\) we have

Proof

See [5, Theorem 4.16]. \(\square\)

Proposition 2.7

Assume that \(K\cap U\ne \emptyset\). Let \((x^k)_{k\in \mathbb {N}}\) be the sequence generated by CRM starting from any \(x^0\in U\), i.e., \(x^{k+1}=C(x^k)\). Then,

-

(i)

for all \(x\in U\), we have that C(x) is well defined and belongs to U;

-

(ii)

for all \(x\in U\), it holds that \(\left\Vert C(x) -y\right\Vert \le \left\Vert T(x)-y\right\Vert\), for any \(y\in K\cap U\);

-

(iii)

\((x^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to \(K\cap U\);

-

(iv)

\((x^k)_{k\in \mathbb {N}}\) converges to a point in \(K\cap U\).

Proof

All these results can be found in [14]: (i) is proved in Lemma 3, (ii) in Theorem 2, and (iii) and (iv) in Theorem 1. \(\square\)

3 Linear convergence of MAP and CRM under an error bound assumption

We start by introducing an assumption on a pair of convex sets \(K,K'\subset \mathbb {R}^n\), denoted as EB (as in Error Bound), which will ensure linear convergence of MAP and CRM.

-

EB

\(K\cap K'\ne \emptyset\) and there exists \(\omega \in (0,1)\) such that \({{\,\mathrm{dist}\,}}(x,K)\ge \omega {{\,\mathrm{dist}\,}}(x,K\cap K')\) for all \(x\in K'\).

Now we consider a closed and convex set \(K\subset \mathbb {R}^n\) and an affine manifold \(U\subset \mathbb {R}^n\). Assuming that K, U satisfy Assumption EB, we will prove linear convergence of the sequences \((z^k)_{k\in \mathbb {N}}\) and \((x^k)_{k\in \mathbb {N}}\) generated by MAP and CRM, respectively. We start by proving that, for both methods, both distance sequences \(({{\,\mathrm{dist}\,}}(z^k, K\cap U))_{k\in \mathbb {N}}\) and \(({{\,\mathrm{dist}\,}}(x^k, K\cap U))_{k\in \mathbb {N}}\) converge linearly to 0, which will be a corollary of the next proposition.

Proposition 3.1

Assume that K, U satisfy EB. Consider \(T,C:\mathbb {R}^n\rightarrow \mathbb {R}^n\) as in (2.1). Then, for all \(x\in U\),

with \(\omega\) as in Assumption EB.

Proof

It follows easily from Proposition 2.6 that

for all \(x\in \mathbb {R}^n\) and all \(y\in K\cap U\subset K\). Invoking again Proposition 2.6, we get from (3.2)

for all \(x\in U, y\in K\cap U\), using Assumption EB in the last inequality. Take now \(y=P_{K\cap U}(x)\). Then, in view of (3.3),

using the definition of \(P_{K\cap U}\) in the first and the third inequality and Proposition 2.7(ii) in the second inequality. Note that (3.1) follows immediately from (3.4). \(\square\)

Corollary 3.2

Let \((z^k)_{k\in \mathbb {N}}\) and \((x^k)_{k\in \mathbb {N}}\) be the sequences generated by MAP and CRM starting at any \(z^0\in \mathbb {R}^n\) and any \(x^0\in U\), respectively. If K, U satisfy Assumption EB, then the sequences \(({{\,\mathrm{dist}\,}}(z^k,K\cap U))_{k\in \mathbb {N}}\) and \(({{\,\mathrm{dist}\,}}(x^k,K\cap U))_{k\in \mathbb {N}}\) converge Q-linearly to 0, and the asymptotic constants are bounded above by \(\sqrt{1-\omega ^2}\), with \(\omega\) as in Assumption EB.

Proof

In view of the definition of \(P_{K\cap U}\), (3.1) can be rewritten as

for all \(x\in U\). Since \(z^{k+1}=T(z^k)\), we get from the first inequality in (3.5),

using the fact that \((z^k)_{k\in \mathbb {N}}\subset U\). Hence

By the same token, using the second inequality in (3.5) and Proposition 2.7(ii), we get

The inequalities in (3.6) and (3.7) imply the result. \(\square\)

We remark that the result for MAP holds when U is any closed and convex set, not necessarily an affine manifold. We need U to be an affine manifold in Proposition 2.7 (otherwise, \((x^k)_{k\in \mathbb {N}}\) may even diverge), but this proposition is used in our proofs only when the CRM sequence is involved.

Next, we show that, under Assumption EB, CRM achieves a linear rate with an asymptotic constant better than the one given in Corollary 3.2.

Proposition 3.3

Let \((x^k)_{k\in \mathbb {N}}\) be the sequence generated by CRM starting at any \(x^0\in U\). If K, U satisfy Assumption EB, then the sequence \(({{\,\mathrm{dist}\,}}(x^k,K\cap U))_{k\in \mathbb {N}}\) Q-converges to 0 with the asymptotic constant bounded above by \(\sqrt{\dfrac{1-\omega ^2}{1+\omega ^{2}}},\) where \(\omega\) is as in Assumption EB.

Proof

Take \(y^*\in K\cap U\) and \(x\in U\). Note that

using the definition of orthogonal projection onto K, the fact that \(y^*\in K\) and Proposition 2.6 in the first inequality, and again Proposition 2.6 regarding U in the second inequality.

Now, we will invoke some results from [14] to prove that C(x) is indeed the orthogonal projection of x onto the intersection of U with the halfspace \(H_x^+:=\{y\in \mathbb {R}^n \mid \left\langle {y-P_K(x)},{ x-P_K(x)} \right\rangle \le 0\}\) containing K. In [14, Lemma 3] it is proved that \(C(x)=P_{H_x\cap U}(x)\) with \(H_x:=\{y\in \mathbb {R}^n \mid \left\langle {y-P_K(x)},{ x-P_K(x)} \right\rangle = 0\}\). Using the arguments employed at the beginning of the proof of [14, Lemma 5], we get

Hence, the above equality and the fact that \(y^*\in K\cap U \subset H_x^+\cap U\) imply \(\left\langle {y^*-C(x)},{x-C(x)} \right\rangle \le 0\) and since x, \(P_U(P_K(x))\) and C(x) are collinear (see [14, Eq. (7)]), we get \(\left\langle {y^*-C(x)},{P_U(P_K(x))-C(x)} \right\rangle \le 0\). Thus,

using the definition of the distance in the last two inequalities. Now, taking \(y^{*}=P_{K\cap U}(x)\) and using the error bound condition for x and C(x), we obtain

Rearranging (3.10), we get \((1+\omega ^2){{\,\mathrm{dist}\,}}^2(C(x), K\cap U)\le (1-\omega ^2){{\,\mathrm{dist}\,}}^2(x,K\cap U)\) and, since \(x^{k+1}=C(x^k)\), we have

which implies the result. \(\square\)

Propositions 3.1 and 3.3 do not entail immediately that the sequences \((x^k)_{k\in \mathbb {N}}, (z^k)_{k\in \mathbb {N}}\) themselves converge linearly; a sequence \((y^k)_{k\in \mathbb {N}}\subset \mathbb {R}^n\) may converge to a point \(y\in M\subset \mathbb {R}^n\), in such a way that \(({{\,\mathrm{dist}\,}}(y^k, M))_{k\in \mathbb {N}}\) converges linearly to 0 but \((y^k)_{k\in \mathbb {N}}\) itself converges sublinearly. Take for instance \(M=\{(s,0)\in \mathbb {R}^2\}\), \(y^k=\left( 1/k,2^{-k}\right)\). This sequence converges to \(0\in M\), \({{\,\mathrm{dist}\,}}(y^k,M)=2^{-k}\) converges linearly to 0 with asymptotic constant equal to 1/2, but the first component of \(y^k\) converges to 0 sublinearly, and hence the same holds for the sequence \((y^k)_{k\in \mathbb {N}}\). The next lemma, possibly of some interest on its own, establishes that this situation cannot occur when \((y^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to M. The result below is similar to [5, Theorem 5.12], however we include its proof for the sake of completeness.

Lemma 3.4

Consider a nonempty closed convex set \(M\subset \mathbb {R}^n\) and \((y^k)_{k\in \mathbb {N}}\subset \mathbb {R}^n\). Assume that \((y^k)_{k\in \mathbb {N}}\) is Fejér monotone with respect to M, and that \(({{\,\mathrm{dist}\,}}(y^k,M))_{k\in \mathbb {N}}\) converges R-linearly to 0. Then \((y^k)_{k\in \mathbb {N}}\) converges R-linearly to some point \(y^*\in M\), with asymptotic constant bounded above by the asymptotic constant of \(({{\,\mathrm{dist}\,}}(y^k,M))_{k\in \mathbb {N}}\).

Proof

Fix \(k\in \mathbb {N}\) and note that the Fejér monotonicity hypothesis implies that, for all \(j\ge k\),

By Proposition 2.3(i), \((y^k)_{k\in \mathbb {N}}\) is bounded. Take any cluster point \({\bar{y}}\) of \((y^k)_{k\in \mathbb {N}}\). Taking limits with \(j\rightarrow \infty\) in (3.11) along a subsequence \((y^{k_j})_{j\in \mathbb {N}}\) of \((y^k)_{k\in \mathbb {N}}\) converging to \({\bar{y}}\), we get that \(\left\Vert {\bar{y}}-P_M(y^k)\right\Vert \le {{\,\mathrm{dist}\,}}(y^k,M)\). Since \(\lim _{k\rightarrow \infty }{{\,\mathrm{dist}\,}}(y^k,M)=0\), we conclude that \((P_M(y^k))_{k\in \mathbb {N}}\) converges to \({\bar{y}}\), so that there exists a unique cluster point, say \(y^*\). Therefore, \(\lim _{k\rightarrow \infty }y^k=y^*\), and hence \(\left\Vert y^*-P_M(y^k)\right\Vert \le {{\,\mathrm{dist}\,}}(y^k,M)\). Since \(y^*=\lim _{k\rightarrow \infty }P_M(y^k)\), we conclude that \(y^*\in M\). Observe further that

Taking kth-root and then \(\limsup\) with \(k\rightarrow \infty\) in (3.12), and using the R-linearity hypothesis,

establishing both that \((y^k)_{k\in \mathbb {N}}\) converges R-linearly to \(y^*\in M\) and the statement on the asymptotic constant. \(\square\)

With the help of Lemma 3.4, we prove next the R-linear convergence of the MAP and CRM sequences under Assumption EB, and give bounds for their asymptotic constants.

Theorem 3.5

Consider a closed and convex set \(K\subset \mathbb {R}^n\) and an affine manifold \(U\subset \mathbb {R}^n\). Assume that K, U satisfy Assumption EB. Let \((z^k)_{k\in \mathbb {N}}, (x^k)_{k\in \mathbb {N}}\) be the sequences generated by MAP and CRM, respectively, starting from arbitrary points \(z^0\in \mathbb {R}^n, x^0\in U\). Then both sequences \((z^k)_{k\in \mathbb {N}}\) and \((x^k)_{k\in \mathbb {N}}\) converge R-linearly to points in \(K\cap U\), and the asymptotic constants are bounded above by \(\sqrt{1-\omega ^2}\) for MAP, and by \(\sqrt{\dfrac{1-\omega ^2}{1+\omega ^{2}}}\) for CRM, with \(\omega\) as in Assumption EB.

Proof

In view of Propositions 2.4 and 2.7(iii) and (iv), both sequences are Fejér monotone with respect to \({K\cap U}\) and converge to points in \({K\cap U}\). By Corollary 3.2, both sequences \(({{\,\mathrm{dist}\,}}(z^k,{K\cap U}))_{k\in \mathbb {N}}\) and \(({{\,\mathrm{dist}\,}}(x^k,{K\cap U}))_{k\in \mathbb {N}}\) are Q-linearly convergent to 0, and henceforth R-linearly convergent to 0. Corollary 3.2 shows that the asymptotic constant of the sequence \(({{\,\mathrm{dist}\,}}(z^k,{K\cap U}))_{k\in \mathbb {N}}\) is bounded above by \(\sqrt{1-\omega ^2}\), and Proposition 3.3 establishes that the asymptotic constant of the sequence \(({{\,\mathrm{dist}\,}}(x^k,{K\cap U}))_{k\in \mathbb {N}}\) is bounded above by \(\sqrt{{1-\omega ^2}}/\sqrt{{1+\omega ^{2}}}\). Finally, by Lemma 3.4, both \((z^k)_{k\in \mathbb {N}}\) and \((x^k)_{k\in \mathbb {N}}\) are R-linearly convergent, with the announced bounds for their asymptotic constants. \(\square\)

We remark that, in view of Theorem 3.5, the upper bound for the asymptotic constant of the CRM sequence is substantially better than the one for the MAP sequence. Note that the CRM bound reduces the MAP one by a factor of \(\sqrt{1+\omega ^2}\), which increases up to \(\sqrt{2}\) when \(\omega\) approaches 1.

4 Two families of examples for which CRM is much faster than MAP

We will present now two rather generic families of examples for which CRM is faster than MAP. In the first one, MAP converges sublinearly while CRM converges linearly; in the second one, MAP converges linearly and CRM converges superlinearly.

In both families, we work in \(\mathbb {R}^{n+1}\). K will be the epigraph of a proper convex function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\), and U the hyperplane \(\{(x,0)\mid x\in \mathbb {R}^n\}\subset \mathbb {R}^{n+1}\). From now on, we consider f to be continuously differentiable in \({{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\), where \({{\,\mathrm{dom}\,}}(f):=\{x\in \mathbb {R}^n\mid f(x)<+\infty \}\) and \({{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\) is its topological interior. Next, we make the following assumptions on f:

-

A1.

\(0\in {{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\) is the unique minimizer of f.

-

A2.

\(\nabla f(0)=0\).

-

A3.

\(f(0)=0\).

We will show that under these assumptions, MAP always converges sublinearly, while, adding an additional hypothesis, CRM converges linearly.

Note that under hypotheses A1 to A3, \(0\in \mathbb {R}^{n}\) is the unique zero of f and hence \(K\cap U=\{(0,0)\}\subset \mathbb {R}^{n+1}\). In view of Propositions 2.4 and 2.7, the sequences generated by MAP and CRM, with arbitrary initial points in \(\mathbb {R}^{n+1}\) and U, respectively, both converge to (0, 0), and are Fejér monotone with respect to \(\{(0,0)\}\), so that, in view of A1, for large enough k the iterates of both sequences belong to \({{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\times \mathbb {R}\). We take now any point \((x,0)\in U\), with \(x\ne 0\) and proceed to compute \(P_K(x,0)\). Since \((x,0)\notin K\) (because \(x\ne 0\) and \(K\cap U=\{(0,0)\}\)), \(P_K(x,0)\) must belong to the boundary of K, i.e., it must be of the form (u, f(u)), and u is determined by minimizing \(\left\Vert (x,0)-(u,f(u))\right\Vert ^2\), so that \(u-x +f(u)\nabla f(u) =0\), or equivalently

Note that since \(x\ne 0\), \(u\ne 0\) by A3. With the notation of Sect. 3 and bearing in mind that T and C are the MAP and CRM operators defined in (2.1), it is easy to check that

with u as in (4.1). Moreover,

Next, we compute \(C(x,0)={{\,\mathrm{circ}\,}}((x,0),R_K(x,0), R_U(R_K(x,0)))\). Suppose that \(C(x,0)=(v,s)\). The conditions

give rise to two quadratic equations whose solution is

using (4.1) in the last equality.

We proceed to compute the quotients \(\left\Vert T(x,0)-0\right\Vert /\left\Vert (x,0)-0\right\Vert\), \(\left\Vert C(x,0)-0\right\Vert /\left\Vert (x,0)-0\right\Vert\). Since both the MAP and the CRM sequences converge to 0, these quotients are needed for determining their convergence rates. In view of (4.2) and (4.3), these quotients reduce to \(\left\Vert u\right\Vert /\left\Vert x\right\Vert , \left\Vert v\right\Vert /\left\Vert x\right\Vert\). We state the result of the computation of these quotients in the next proposition.

Proposition 4.1

Take \((x,0)\in U\) with \(x\ne 0\). Let \(T(x,0)=(u,0)\) and \(C(x,0)=(v,0)\). Then,

with \({\bar{u}}=u/\left\Vert u\right\Vert\),

and

Proof

In view of (4.1),

and (4.4) follows by dividing the numerator and the denominator by \(\left\Vert u\right\Vert\).

We proceed to establish (4.5). In view of (4.3), we have

using the gradient inequality \(\langle \nabla f(u),u\rangle \ge f(u)\), which holds because f is convex and \(f(0)=0\). Now, (4.5) follows by dividing (4.7) by \(\left\Vert u\right\Vert ^2\). Finally, (4.6) follows by multiplying (4.5) by \(\dfrac{\left\Vert u\right\Vert ^2}{\Vert x\Vert ^2}=\dfrac{\left\Vert T(x,0)\right\Vert ^2}{\Vert (x,0)\Vert ^2}\). \(\square\)

Next we compute the limits with \(x\rightarrow 0\) of the quotients in Proposition 4.1.

Proposition 4.2

Take \((x,0)\in U\) with \(x\ne 0\). Let \(T(x,0)=(u,0)\) and \(C(x,0)=(v,0)\). Then,

and

Proof

By convexity of f, using A3, \(f(y)\le \langle \nabla f(y),y\rangle \le \left\Vert \nabla f(y)\right\Vert \,\left\Vert y\right\Vert\) for all \(y\in {{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\) sufficiently close to 0. Hence, for all nonzero \(y\in {{\,\mathrm{int}\,}}({{\,\mathrm{dom}\,}}(f))\), \(0< f(y)/\left\Vert y\right\Vert \le \left\Vert \nabla f(y)\right\Vert\), using A1 and A3. Since \(\lim _{y\rightarrow 0}\nabla f(y)=0\) by A1 and A2 and the convexity of f, it follows that

Now we take limits with \(x\rightarrow 0\) in (4.4). Since \((u,0)=P_K((x,0))\) and using the continuity of projections, \(\lim _{x\rightarrow 0}u=0\). Thus,

using (4.10) and the fact that \(\left\Vert {\bar{u}}\right\Vert = \left\Vert u/\left\Vert u\right\Vert \right\Vert = 1\). We have proved that (4.8) holds. Now we deal with (4.9). Taking \(x\rightarrow 0\) in (4.6), we have

The second \(\limsup\) on the right-hand side of (4.11) is equal to \(\lim _{x\rightarrow 0}\left[ \frac{\left\Vert u\right\Vert }{\left\Vert x\right\Vert }\right] ^2\) and by (4.8) it is equal to 1 and so (4.9) follows from the already made observation that \(\lim _{x\rightarrow 0}u=0\). \(\square\)

We proceed to establish the convergence rates of the sequences generated by MAP and CRM for this choice of K and U.

Corollary 4.3

Consider \(K, U\subset \mathbb {R}^{n+1}\) given by \(K= {{\,\mathrm{epi}\,}}(f)\), with \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) satisfying A1 to A3 and \(U:=\{(x,0)\mid x\in \mathbb {R}^n\}\subset \mathbb {R}^{n+1}\). Let \((z^k,0)_{k\in \mathbb {N}}\) and \((x^k,0)_{k\in \mathbb {N}}\), be the sequences generated by MAP and CRM, starting from \((z^0,0)\in \mathbb {R}^{n+1}\) and \((x^0,0) \in U\), respectively. Then,

and

with

Proof

Since \(T(z^k,0)=(z^{k+1},0)\), \(C(x^k,0)=(x^{k+1},0)\), and \(\lim _{k\rightarrow \infty }x^k=\lim _{k\rightarrow \infty }z^k=0\), it suffices to apply Proposition 4.2 with \(x=z^k\) in (4.8) and \(x=x^k\) in (4.9).

\(\square\)

We add now an additional hypothesis on f.

-

A4.

f satisfies \(\displaystyle \liminf _{x\rightarrow 0}\dfrac{f(x)}{\left\Vert x\right\Vert \,\left\Vert \nabla f(x)\right\Vert }>0.\)

Observe that by using the convexity of f and Cauchy-Schwarz inequality, we have

Thus, A1 to A3 imply \(f(x)/(\left\Vert x\right\Vert \,\left\Vert \nabla f(x)\right\Vert )\in (0,1]\), for all \(x\ne 0\), so that A4 just excludes the case in which the \(\liminf\) above is equal to 0. Next we rephrase Corollary 4.3.

Corollary 4.4

Consider \(K, U\subset \mathbb {R}^{n+1}\) given by \(K= {{\,\mathrm{epi}\,}}(f)\), with \(f:\mathbb {R}^n\rightarrow \mathbb {R}\cup \{+\infty \}\) satisfying A1 to A3 and \(U:=\{(x,0)\mid x\in \mathbb {R}^n\}\subset \mathbb {R}^{n+1}\). Then the sequence generated by MAP from an arbitrary initial point converges sublinearly. If f also satisfies hypothesis A4, then the sequence generated by CRM from an initial point in U converges linearly, and its asymptotic constant is bounded above by \(\sqrt{1-\gamma ^2}<1\), with \(\gamma\) as in (4.13).

Proof

Immediate from Corollary 4.3 and hypothesis A4.

\(\square\)

Next we discuss several situations for which hypothesis A4 holds, showing that it is rather generic. The first case is as follows.

Proposition 4.5

Assume that f, besides satisfying A1 to A3, is of class \({{\mathcal {C}}}^2\) and \(\nabla ^2f(0)\) is nonsingular. Then, assumption A4 holds, and \(\gamma \ge \lambda _{\min }/(2\lambda _{\max })>0\) where \(\lambda _{\min }, \lambda _{\max }\) are the smallest and largest eigenvalues of \(\nabla f^2(0)\), respectively.

Proof

In view of A2, A3 and the hypothesis on \(\nabla f^2(0)\), we have

Also, using the Taylor expansion of \(\nabla f\) around \(x=0\), \(\nabla f(x)=\nabla ^{2}f(0)x + o(\left\Vert x\right\Vert )\), so that

and the result follows by taking \(\liminf\) in (4.16), since the right hand converges to \(\dfrac{\lambda _{\min } }{2\lambda _{\max }}>0\) as \(x \rightarrow 0\). \(\square\)

Note that nonsingularity of \(\nabla ^2f(0)\) holds when f is of class \({{\mathcal {C}}}^2\) and strongly convex.

We consider next other instances for which assumption A4 holds. Now we deal with the case in which \(f(x)=\phi (\left\Vert x\right\Vert )\) with \(\phi :\mathbb {R}\rightarrow \mathbb {R}\cup \{+\infty \}\), satisfying A1 to A3. This case has a one dimensional flavor, and computations are easier. The first point to note is that

so that assumption A4 becomes:

- A4\(^\prime\):

-

\(\phi\) satisfies \(\displaystyle \liminf _{t\rightarrow 0}\dfrac{\phi (t)}{t\phi '(t)}>0\).

More importantly, in this case \(\nabla f(x)\) and x are collinear, which allows for an improvement in the asymptotic constant: we will have \(1-\gamma\) instead of \(\sqrt{1-\gamma ^2}\) in (4.12), as we show next. We reformulate Propositions 4.1 and 4.2 for this case.

Proposition 4.6

Assume that \(f(x)=\phi (\left\Vert x\right\Vert )\), with \(\phi :\mathbb {R}\rightarrow \mathbb {R}\cup \{+\infty \}\) satisfying A1 to A3 and A4\(^{\prime }\). Take \((x,0)\in U\) with \(x\ne 0\). Let \(C(x,0)=(v,0)\). Then,

-

(i)

$$\begin{aligned} \frac{\left\Vert C(x,0)\right\Vert }{\left\Vert T(x,0)\right\Vert }=\frac{\left\Vert v\right\Vert }{\left\Vert u\right\Vert }= 1-\frac{\phi (\left\Vert u\right\Vert )}{\phi '(\left\Vert u\right\Vert )\left\Vert u\right\Vert }, \end{aligned}$$(4.18)

-

(ii)

$$\begin{aligned} \limsup _{x\rightarrow 0}\frac{\left\Vert C(x,0)\right\Vert }{\left\Vert (x,0)\right\Vert }= 1-\liminf _{x\rightarrow 0}\frac{f(x)}{\left\Vert x\right\Vert \,\left\Vert \nabla f(x)\right\Vert }= 1-\liminf _{t\rightarrow 0}\frac{\phi (t)}{t\phi '(t)}. \end{aligned}$$(4.19)

Proof

In this case

so that (4.1) becomes

and (4.3) can be rewritten as

Hence,

establishing (4.18). Then, (4.19) follows from (4.18) as in the proofs of Propositions 4.1 and 4.2, taking into account (4.17). \(\square\)

Corollary 4.7

Let \((x^k,0)_{k\in \mathbb {N}}\) be the sequence generated by CRM with \((x^0,0)\in U\). Assume that \(f(x)=\phi (\left\Vert x\right\Vert )\) with \(\phi :\mathbb {R}\rightarrow \mathbb {R}\cup \{+\infty \}\) satisfying A1 to A3. Then,

with

If \(\phi\) satisfies hypothesis A4\(^{\prime }\) then, the CRM sequence is Q-linearly convergent, with asymptotic constant equal to \(1-{\hat{\gamma }}\).

Proof

It is an immediate consequence of Proposition 4.6(ii), in view of the definition of the circumcenter operator C, given in (2.1). \(\square\)

We verify next that assumption A4\(^\prime\) is rather generic. It holds, e.g., if \(\phi\) is analytic around 0.

Proposition 4.8

If \(\phi\) satisfies A1 to A3 and is analytic around 0 then it satisfies A4\(^{\prime }\), and \({\hat{\gamma }}= 1/p\), where \(p :=\min \{j\mid \phi ^{(j)}(0)\ne 0\}\).

Proof

In this case

and

, and the result follows taking limits with \(t\rightarrow 0\), taking into account (4.20). \(\square\)

Note that for an analytic \(\phi\) the asymptotic constant is always of the form \(1-1/p\) with \(p\in \mathbb {N}\). This is not the case in general. Take, e.g., \(\phi (t)=\left|t\right|^{\alpha }\) with \(\alpha \in \mathbb {R}\), \(\alpha > 1\). Then a simple computation shows that \({\hat{\gamma }}=1/\alpha\). Note that \(\phi\) is of class \({{\mathcal {C}}}^p\), where p is the integer part of \(\alpha\), but not of class \({{\mathcal {C}}}^{p+1}\), so that Proposition 4.8 does not apply.

Take now

i.e., \(f(x)=\phi (\left\Vert x\right\Vert )\) with \(\phi (t)=1-\sqrt{1-t^2}\), when \(t\in [-1,1], \phi (t)=+\infty\) otherwise. Note that f satisfies A1 to A3 and its effective domain is the unit ball in \(\mathbb {R}^n\). Since \(\phi\) is analytic around 0 and \(\phi ''(0)\ne 0\), we get from Proposition 4.8 that \({\hat{\gamma }}=1/2\) and so the asymptotic constant of the CRM sequence is also 1/2. Note that the graph of f is the lower hemisphere of the ball \(B\subset \mathbb {R}^{n+1}\) centered at (0, 1) with radius 1. Observe also that the projection onto B of a point of the form \((x,0)\in \mathbb {R}^{n+1}\) is of the form (u, t) with \(t<1\), so it belongs to \({{\,\mathrm{epi}\,}}(f)\). Hence, the sequences generated by CRM for the pair K, U with \(K={{\,\mathrm{epi}\,}}(f)\) and \(K=B\) coincide. It follows easily that the sequence generated by CRM for a pair K, U where K is any ball and U is a hyperplane tangent to the ball, converges linearly, with asymptotic constant equal to 1/2. We remark that in all these cases the sequence generated by MAP converges sublinearly, by virtue of Corollary 4.4.

We look now at a case where hypothesis A4\(^{\prime }\) fails. Define

so that \(f(x)=\phi (\left\Vert x\right\Vert )\) with \(\phi (t)=e^{-1/t^2}\), when \(t\in (-3^{-1/2}, 3^{-1/2}), \phi (t)=+\infty\) otherwise. Again f satisfies A1 to A3. It is easy to check that \(\phi (t)/(t\phi '(t))=(1/2)t^2\), so that \(\lim _{t\rightarrow 0}\phi (t)/(t\phi '(t))=0\) and A4\(^{\prime }\) fails. It is known that this \(\phi\), which is of class \({{\mathcal {C}}}^\infty\) but not analytic, is extremely flat (in fact, \(f^{(k)}(0)=0\) for all k), and not even CRM can overcome so much flatness; in view of Corollary 4.7, in this case it converges sublinearly, as MAP does. The examples above are also presented as a study case in [6], illustrating the slow convergence of the proximal point algorithm, Douglas-Rachford algorithm and alternating projections.

Let us abandon such an appalling situation, and move over to other examples where CRM will be able to exhibit again its superiority; next, we deal with our second family of examples. In this case we keep the framework of the first family with just one change, namely in hypothesis A3 on f; now we will request that \(f(0)<0\). With this single trick (and a couple of additional technical assumptions), we will achieve linear convergence of the MAP sequence and superlinear convergence of the CRM one. We will assume also that the effective domain of f is the whole space (differently from the previous section, we don’t have now interesting examples with smaller effective domains; also, since now the limit of the sequences can be anywhere, a hypothesis on the effective domain becomes rather cumbersome). We’ll also demand that f be of class \({{\mathcal {C}}}^2\).

Finally, we will restrict ourselves to the case of \(f(x)=\phi (\left\Vert x\right\Vert )\), with \(\phi :\mathbb {R}\rightarrow \mathbb {R}\cup \{+\infty \}\). This assumption is not essential, but will considerably simplify our analysis. Thus, we rewrite the assumptions for \(\phi\), in this new context. We assume that function \(\phi\) is proper, strictly convex and twice continuously differentiable, satisfying

- A2\(^{\prime }\).:

-

\(\phi '(0)=0\).

- A3\(^{\prime }\).:

-

\(\phi (0)<0\).

In the remainder of the paper we will study the behavior of the MAP and CRM sequences for the pair \(K,U\subset \mathbb {R}^{n+1}\), where K is the epigraph of \(f(x)=\phi (\left\Vert x\right\Vert )\), with \(\phi\) satisfying hypotheses A2\(^{\prime }\) and A3\(^{\prime }\) above, and \(U:=\{(x,0)\mid x\in \mathbb {R}^n\}\subset \mathbb {R}^{n+1}\). As in the previous case, Propositions 2.4 and 2.7 ensure that both sequences converge to points in \(K\cap U\). Since we are dealing with convergence rates, we will exclude the case in which the sequences of interest have finite convergence. We continue with an elementary property of the limit of these sequences.

Proposition 4.9

Assume that K, U are as above. Let \((x^*,0)\) be the limit of either the MAP or the CRM sequences and \(t^*:=\left\Vert x^*\right\Vert\). Then, \(\phi (t^*)=0\) and \(\phi '(t^*)>0\).

Proof

Since these sequences stay in U, remain outside K (otherwise convergence would be finite), and converge to points in \(K\cap U\), it follows that their limits must belong to \({{\,\mathrm{bd}\,}}(K)\cap U\), where \({{\,\mathrm{bd}\,}}(K):=\{(x,f(x))\mid x\in \mathbb {R}^n\}\) denotes the boundary of K. So, we conclude that \(0=f(x^*)=\phi (t^*)\). Now, since \(\phi '(0)=0\), in view of A2\(^{\prime }\), and \(\phi '\) is strictly increasing, we conclude that \(\phi '(t)>0\) for all \(t>0\). Note that \(x^*\ne 0\), because \(f(x^*)=0\) and \(f(0)<0\) by A3\(^{\prime }\). Hence \(t^*=\left\Vert x^*\right\Vert >0\), so that \(\phi '(t^*)>0\). \(\square\)

Now we analyze the behavior of the operators C and T, in this case.

Proposition 4.10

Assume that \(K,U\subset \mathbb {R}^{n+1}\) are defined as

and

where

and \(\phi\) satisfies A2\(^{\prime }\) and A3\(^{\prime }\). Let T and C be the operators associated to MAP and CRM respectively, and \((z^*,0)\) and \((x^*,0)\) the limits of the sequences \((z^k)_{k\in \mathbb {N}}\) and \((x^k)_{k\in \mathbb {N}}\) generated by these methods, starting from some \((z^0,0)\in \mathbb {R}^{n+1}\), and some \((x^0,0)\in U\), respectively. Then,

and

Proof

Since, in this case, \(\nabla f(x)=\dfrac{\phi '(\left\Vert x\right\Vert )}{\left\Vert x\right\Vert }x\) for all \(x\ne 0\), we rewrite (4.1) and (4.3) as

and

In view of (4.23) and (4.24), u, v and x are collinear. In terms of the operators C and T, we have that x, C(x) and T(x) are collinear, so the same holds for the whole sequences generated by MAP, CRM and hence also for their limits \((z^*,0), (x^*,0)\). This is a consequence of the one-dimensional flavor of this family of examples. So, we define \(s:=\left\Vert z^*\right\Vert\), \(t:=\left\Vert x^*\right\Vert\), \(r:=\left\Vert u\right\Vert\), and therefore we get \(u=(r/s)z^*=(r/t)x^*\). We compute next the quotients

and

needed for determining the convergence rate of the MAP and CRM sequences. We start with the MAP case.

using (4.23) in the second equality and the fact that \(s=\phi (\left\Vert z^*\right\Vert )=f(z^*)=0\), established in Proposition 4.9, in the fourth one.

Now, we perform a similar computation for the operator C, needed for the CRM sequence.

using (4.24) in the second equality, and Proposition 4.9, which implies \(\phi (t)=0\), in the fifth one.

Finally, we take limits in (4.25) with \(x\rightarrow z^*\) and in (4.26) with \(x\rightarrow x^*\). Note that, since \(u=P_K(x)\), \(\lim _{x\rightarrow z^*}u=P_K(z^*)=z^*\), because \(z^*\in K\). Hence we take limit with \(r\rightarrow s\) in the right hand side of (4.25). We also take limits with \(x\rightarrow x^*\) in (4.26). By the same token, taking limit with \(r\rightarrow t\) in the right hand side, we get

and

The results follow, in view of the definitions of s and t, from (4.27) and (4.28), respectively. \(\square\)

Note that the denominators in the expressions of (4.27) and (4.28) are the same; the difference lies in the numerators: in the MAP case it is 1; in the CRM one, the presence of the factor \((\phi (r)-\phi (t))/(r-t)\) makes the numerator go to 0 when r tends to t.

Corollary 4.11

Under the assumptions of Proposition 4.10 the sequence generated by MAP converges Q-linearly to a point \((z^*,0)\in K\cap U\), with asymptotic constant equal to \(1/(1+\phi '(\left\Vert z^*\right\Vert )^2)\), and the sequence generated by CRM converges superlinearly.

Proof

The result for the MAP sequence follows from (4.21) in Proposition 4.10, observing that for \(x=z^k\), we have \(T(x,0)=(z^{k+1}, 0)\). Note that the asymptotic constant is indeed smaller than 1, because \(z^*\ne 0\), and \(\phi '(\left\Vert z^*\right\Vert )\ne 0\) by Proposition 4.9. The result for the CRM sequence follows from (4.22) in Proposition 4.10, observing that for \(x=x^k\), we have \(C(x,0)=(x^{k+1},0)\). \(\square\)

We now present an example that, although very simple, enables one to visualize how fast CRM is in comparison to MAP.

Example 4.12

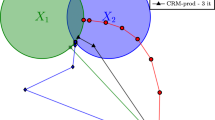

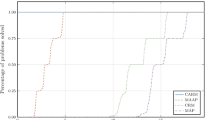

Let \(\phi :\mathbb {R}\rightarrow \mathbb {R}\) given by \(\phi (t) = \left|t\right|^\alpha - \beta\), where \(\alpha >1\) and \(\beta \ge 0\). Consider \(K, U\subset \mathbb {R}^2\) such that \(K :={{\,\mathrm{epi}\,}}(\phi )\) and U is the abscissa axis. Note that, if \(\beta = 0\), the error bound condition EB between K and U does not hold. For any \(\beta >0\), though, it is easily verifiable that EB is valid. Figure 1 shows CRM and MAP tracking a point in \(K\cap U\) up to a precision \(\varepsilon > 0\), with the same starting point \((1.1, 0)\in \mathbb {R}^2\). We fix \(\alpha =2\) and take \(\beta = 0\) in Fig. 1a and \(\beta = 0.06\) in Fig. 1b. We count and display the iterations of the MAP sequence \((z^k)_{k\in \mathbb {N}}\) and the CRM sequence \((x^k)_{k\in \mathbb {N}}\) until \({{\,\mathrm{dist}\,}}(z^k,K\cap U) \le \varepsilon\) and \({{\,\mathrm{dist}\,}}(x^k,K\cap U) \le \varepsilon\), with \(\varepsilon = 10^{-3}\). The figures below depict the results on MAP and CRM derived in Corollaries 4.4 and 4.11.

We emphasize that in the cases above MAP exhibits its usual behavior, i.e., linear convergence. The examples of the first family were somewhat special because, roughly speaking, the angle between K and U goes to 0 near the intersection. On the other hand, the superlinear convergence of CRM is quite remarkable. The additional computations of CRM over MAP reduce to the trivial determination of the reflections and the solution of a system of two linear equations in two variables, for finding the circumcenter [7, 11]. Now MAP is a typical first-order method (projections disregard the curvature of the sets), and thus its convergence is generically no better than linear. We have shown that the CRM acceleration improves this linear convergence to superlinear in a rather large class of instances. Long live CRM!

We conjecture that CRM enjoys superlinear convergence whenever U intersects the interior of K. The results in this section firmly support this conjecture.

References

Aragón Artacho, F.J., Campoy, R., Tam, M.K.: The Douglas-Rachford algorithm for convex and nonconvex feasibility problems. Math. Methods Oper. Res. 91, 201–240 (2020). https://doi.org/10.1007/s00186-019-00691-9

Bauschke, H.H., Bello-Cruz, J.Y., Nghia, T.T.A., Phan, H.M., Wang, X.: Optimal rates of linear convergence of relaxed alternating projections and generalized Douglas-Rachford methods for two subspaces. Numer. Algorithms 73(1), 33–76 (2016). https://doi.org/10.1007/s11075-015-0085-4

Bauschke, H.H., Borwein, J.M.: On the convergence of von Neumann’s alternating projection algorithm for two sets. Set Valued Anal. 1(2), 185–212 (1993). https://doi.org/10.1007/BF01027691

Bauschke, H.H., Borwein, J.M.: On projection algorithms for solving convex feasibility problems. SIAM Rev. 38(3), 367–426 (1996). https://doi.org/10.1137/S0036144593251710

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd edn. CMS Books in Mathematics, Springer International Publishing (2017). https://doi.org/10.1007/978-3-319-48311-5

Bauschke, H.H., Dao, M.N., Noll, D., Phan, H.M.: Proximal point algorithm, Douglas-Rachford algorithm and alternating projections: a case study. J. Convex Anal. 23(1), 237–261 (2016)

Bauschke, H.H., Ouyang, H., Wang, X.: On circumcenters of finite sets in Hilbert spaces. Linear Nonlinear Anal. 4(2), 271–295 (2018)

Bauschke, H.H., Ouyang, H., Wang, X.: Circumcentered methods induced by isometries. Vietnam J. Math. 48, 471–508 (2020)

Bauschke, H.H., Ouyang, H., Wang, X.: On circumcenter mappings induced by nonexpansive operators. Pure and Applied Functional Analysis 6(2), 257–288 (2021)

Bauschke, H.H., Ouyang, H., Wang, X.: On the linear convergence of circumcentered isometry methods. Numer. Algorithm 87, 263–297 (2021). https://doi.org/10.1007/s11075-020-00966-x

Behling, R., Bello-Cruz, J.Y., Santos, L.R.: Circumcentering the Douglas-Rachford method. Numer. Algorithm 78(3), 759–776 (2018). https://doi.org/10.1007/s11075-017-0399-5

Behling, R., Bello-Cruz, J.Y., Santos, L.R.: On the linear convergence of the circumcentered-reflection method. Oper. Res. Lett. 46(2), 159–162 (2018). https://doi.org/10.1016/j.orl.2017.11.018

Behling, R., Bello-Cruz, J.Y., Santos, L.R.: The block-wise circumcentered- reflection method. Comput. Optim. Appl. 76(3), 675–699 (2020). https://doi.org/10.1007/s10589-019-00155-0

Behling, R., Bello-Cruz, J.Y., Santos, L.R.: On the circumcentered-reflection method for the convex feasibility problem. Numer. Algorithms 86, 1475–1494 (2021). https://doi.org/10.1007/s11075-020-00941-6

Behling, R., Fischer, A., Haeser, G., Ramos, A., Schönefeld, K.: On the constrained error bound condition and the projected Levenberg-Marquardt method. Optimization 66(8), 1397–1411 (2017). https://doi.org/10.1080/02331934.2016.1200578

Behling, R., Gonçalves, D.S., Santos, S.A.: Local convergence analysis of the Levenberg-Marquardt framework for nonzero-residue nonlinear least-squares problems under an error bound condition. J. Optim. Theory Appl. 183(3), 1099–1122 (2019). https://doi.org/10.1007/s10957-019-01586-9

Cheney, W., Goldstein, A.A.: Proximity maps for convex sets. Proc. Am. Math. Soc. 10(3), 448–450 (1959). https://doi.org/10.2307/2032864

Cimmino, G.: Calcolo approssimato per le soluzioni dei sistemi di equazioni lineari. La Ricerca Scientifica 9(II), 326–333 (1938)

Dizon, N., Hogan, J., Lindstrom, S.B.: Circumcentering reflection methods for nonconvex feasibility problems. arXiv (2019). https://arxiv.org/abs/1910.04384

Kaczmarz, S.: Angenäherte Auflösung von Systemen linearer Gleichungen. Bull. Int. Acad. Pol. Sci. Lett. Class. Sci. Math. Nat. A 35, 355–357 (1937)

Kayalar, S., Weinert, H.L.: Error bounds for the method of alternating projections. Math. Control Signal Syst. 1(1), 43–59 (1988). https://doi.org/10.1007/BF02551235

Pierra, G.: Decomposition through formalization in a product space. Math. Program. 28(1), 96–115 (1984). https://doi.org/10.1007/BF02612715

von Neumann, J.: Functional Operators: The Geometry of Orthogonal Spaces, Annals of Mathematics Studies, vol. 2, no. 22. Princeton University Press, Princeton (1950). https://doi.org/10.2307/j.ctt1bc543b

Acknowledgements

We thank the anonymous referees for their valuable suggestions which significantly improved this manuscript.

Funding

RB was partially supported by the Brazilian Agency Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq), Grants 304392/2018-9 and 429915/2018-7; YBC was partially supported by the National Science Foundation (NSF), Grant DMS—1816449.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Arefidamghani, R., Behling, R., Bello-Cruz, Y. et al. The circumcentered-reflection method achieves better rates than alternating projections. Comput Optim Appl 79, 507–530 (2021). https://doi.org/10.1007/s10589-021-00275-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-021-00275-6