Abstract

The dynamic resource allocation is a good feature of the cloud computing environment. However, it faces serious problems in terms of service quality, fault tolerance, and energy consumption. It was necessary, then, to find an effective method that can effectively address these important issues and increase cloud performance. This paper presents a dynamic resource allocation model that can meet customer demand for resources with improved and faster responsiveness. It also proposes a multi-objective search algorithm called Spacing Multi-Objective Antlion algorithm (S-MOAL) to minimize both the makespan and the cost of using virtual machines. In addition, its impact on fault tolerance and energy consumption was studied. The simulation revealed that our method performed better than the PBACO, DCLCA, DSOS and MOGA algorithms, especially in terms of makespan.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recent advances in information technologies and telecommunications have a major impact on lifestyles. It affected many areas of life, including employment, education, health, and so on [1, 2]. This prompted researchers to focus more on the cloud paradigm, as it is an important part of modern technologies [3]. Cloud is the collection of heterogeneous resources known for their on-demand self-service, scalability, resource pooling, broad network access, rapid, elasticity, and higher availability [4, 5]. These features allow flexible and dynamic sharing resources among a large number of customers. However, resource management requires a good strategy to deal with the problems resulting from their dynamic allocation and release [6, 7].

The virtual machine (VM) is a fundamental component of the cloud environment. It is a type of software that can run an operating system and applications on the operating system of the physical machine [3]. This technology makes it possible to create virtual networks, servers, devices, etc; creating a pool of rentable, replaceable, and reassignable resources. This renders the provision of resources dynamic, fair, profitable, and most importantly, the virtual machines become available to customers, at any time. This model of cloud computing is called Infrastructure as a Service (IaaS) [5]. Accordingly, the state of the system needs to be dynamically adjusted according to resource requirements and availability for a better performance in the cloud computing environment. Like so, task scheduling is the most critical aspect within this issue. Yet, it is a difficult problem to solve (NP-hard) [6].

Dynamism in the cloud lies in the ability to automatically adjust resources according to the needs of the user. This management is subject to some constraints of cloud providers and cloud users, which can pose problems for the delivery of cloud services. Moreover, cloud dynamism, requiring continuous monitoring of requests and resources, handling of ever-changing requirements, schedules and prices, selecting appropriate services and plans to meet overall objectives of the cloud [8, 9]. Dynamic resource allocation is really apparent in the case of the public cloud due to the large number of users. This problem becomes more acute in the case of infrastructure as a service.

Since computing resources are entities in which tasks are executed, the diversity of their capabilities causes a disparity in the execution time of tasks. In other words, virtual machines are configured with various processing speeds and memory, which indicates that a task executed on different VMs may result in varying execution costs. This results in a conflict of objectives because the virtual machine that runs the task in a short time becomes more expensive, which raises the question of service quality to customers. Besides, fault tolerance is one of the major challenges to ensure reliability, robustness, and availability of services, as well as the applications’ execution in the cloud computing system, especially because service downtime is a major problem facing cloud providers in the last years [10, 11]. Typically, task failure may be due to VM malfunctioning or power failure. Likewise, regarding the efficiency of data centers, studies have concluded that in average about 55% of the energy consumed in a data center, is used up by the computing system, while the rest used by supporting systems such as cooling, uninterrupted power supply, etc. [12,13,14,15].

At present, current works deal with these issues separately, which leads to the improvement of each aspect independently without achieving an optimal solution. Furthermore, the dynamic aspect of the cloud has not been explicitly addressed, which raises doubt on the effectiveness of existing methods in real-world applications. For this reason, this research focuses on dynamic virtual machine scheduling to achieve efficient resource allocation. At the same time, we must maintain service quality to customers by minimizing the makespan and cost of using virtual machines; ensuring the permanence and durability of the service over time by fault prevention and fault tolerance. We also offer the Cloud Resource Provider (CRP) the possibility to consume energy consciously. More precisely, the contributions of this paper are summarized as follows:

-

Construct a dynamic resource allocation model to manage the energy consumption of unused VMs with fault tolerance.

-

Propose a new approach named Spacy Multi-Objectives Antlion algorithm S-MOAL to minimize makespan and cost.

In light of the above, the paper discusses the related work in resource allocation. Afterwards, Sect. 3 defines the problem and describes the proposed dynamic resource allocation model. Section 4 presents the S-MOAL algorithm to schedule tasks over virtual machines. Section 5 shows the simulation results of the proposed method, and Sect. 4 concludes the paper.

2 Related works

The cloud computing paradigm provides easy and cheap access to measurable and billable computing resources. As such, customers can get different services on-demand, anytime and anywhere. These characteristics confer a dynamic resource allocation capacity in this environment and also raise the question of the effectiveness of task scheduling methods used for this purpose. Indeed, various studies were held about the issue, yet, each addressed one or two problems without generating an optimal solution [16]. Furthermore, the dynamic aspect of the cloud environment has been approached from different angles.

A number of researchers highlighted dynamicity through proposed scheduling approaches that employ the information on resources available and properties of scheduled tasks to overcome the diverse issues of resource allocation. For example, the study [17] used the Cloud Information Service (CIS) to identify the services needed to perform the tasks received from the user. It presented the Discrete Symbiotic Organism Search (DSOS) algorithm to improve the makespan. In the work [18], authors proposed a task scheduling algorithm to minimize both makespan and energy consumption. However, they did not consider cost and fault tolerance issues. In addition, the Pareto-based multi-objective scheduling algorithm introduced in [19] targeted to allocate different on-demand virtual machines with optimal makespan and various prices by neglecting the energy consumption of the system. Whereas in [20] authors optimized a task scheduling solution by proposing an improved genetic algorithm which allows finding an optimal assignment of subtasks on the resources with a minimum of makespan. However, the proposed method did not compare with existing scheduling methods. Also, the virtualization characteristic of cloud computing environment was not considered.

Instead, in [4], researchers developed a dynamic scheduling algorithm that balances the workload among all the virtual machines by neglecting resources usage cost. In [10], they proposed a dynamic clustering algorithm (DCLCA) to update and reflect on the status of cloud resources. The given solution produces a group of independent tasks as a set of suitable computational size or task clusters, using task characteristics focusing on priority or a partition. This work did not also consider resource usage cost constraint. In paper [21], the authors used a method to analyze the flow of information about resources to classify the attributes of computing resources. This method speeds up the allocation of 14.65% and 7.43%, respectively, compared to the traditional HEFT algorithm. Other work based on the ant colony algorithm called PBACO took into account the makespan, cost, deadline violation rate, and resource utilization as constraints for multi-objective optimization. However, this solution did not deal with the dynamic change of the cloud environment [22]. There are other studies, which allow enhancing the performance of cloud, but need to be more adaptive with the dynamic resource allocation, as with the works [23,24,25,26].

On the other hand, some studies used predicting methods to monitor the alterations of resources consumed and required, which change dynamically. As in the case of work [27], the authors used a Markov decision process to estimate the execution time of user requests based on the state of the environment. In addition, the system uses a genetic algorithm to develop a task scheduling method. Furthermore, in [28], they proposed a new scheduling approach named PreAntPolicy. It consists of a prediction model based on fractal mathematics and a scheduler based on an improved ant colony algorithm. Also, in the article [29], the authors presented a new adaptive scheduling algorithm based on the auction mechanism taking into account the network bandwidth and the auction deadline. The proposed algorithm has good results in terms of cloud service providers profits and the resource utilization without dealing with execution time constraints and power consumption of the VMs. Furthermore, in [30], researchers combined the Taguchi method with a differential evolution algorithm (DEA) to minimize total cost and makespan. However, this solution still need to be improved to adapt to the allocation of resources. The proposed algorithm in the study [31] uses estimated execution time and various optimization techniques to determine an almost optimal resource allocation plan. However, it did not take into account operating costs and energy consumption factors.

Another class of researchers using the properties of virtualization technology, such as migrating and consolidating virtual machines, as well as modifying the state of virtual machines (awake, sleep, listening, etc.) to cope with dynamic changes in the cloud environment. For instance, researchers at [32] exploited the virtualization technique to solve the dynamic resource allocation problem. They proposed a Dynamic Energy Saving Resource Allocation (DPRA) mechanism to place the virtual machines properly on the physical machines to minimize VM migrations. In the work [33], they focused on dynamic virtual machine scheduling to achieve energy efficiency and satisfy deadline constraints with heterogeneous physical machines. Similarly, in [34] presented a task-scheduling model for cloud computing to minimize energy consumption of data center servers, without paying attention to task execution time. Other researchers proposed an online resource allocation algorithm using VMs consolidation to achieve energy efficiency and reduce service-level agreement (SLA) violations of cloud. For this end, authors considered the power usage of servers, the number of migrations, and the path length of migrations in data centers [35]. Yet, the problem of fault tolerance was not addressed.

Instead, the authors of [36] combined placement controllers and periodic reassignments of virtual machines to achieve the highest energy efficiency subject to predefined service levels. Additionally, to optimize the deployment of virtual machine image replicas in cloud storage clusters, a new mechanism is proposed in [37]. The latter helps improve the overall throughput of a storage cluster and speeds up image installation operations. Alternatively, another work aimed to achieve energy reduction for cloud providers and payment saving for cloud users at the same time [15]. Nevertheless, this work required more evaluations to prove its effectiveness in a cloud environment.

In addition to the above, there is a research category that addresses the dynamic resource allocation in the cloud, but in an inefficient way. In this regard, some researchers attempted to mix the three trends mentioned above to adapt the dynamic changes in the cloud environment. For example, in the Ref. [38], the authors used a solution to correctly map virtual machines to a group of tasks in a data center. To our knowledge, this work did not optimize energy consumption efficiency. Furthermore, in [13], researchers sought to find a good trade-off of energy consumption and the makespan without considering monetary costs associated with the use of VMs. The same problem is discussed in the work [39], the authors proposed a PD-LBP method for the allocation of multi-agent tasks in dynamic grid and cloud environments. This solution aims to accelerate online response for improving the performances of task allocation. Evaluation results show that PD-LBP has a good ability to assign large-scale tasks in a dynamic environment, although this is not always feasible.

In the same vein, a hybrid algorithm presented in [40] for load balancing, and maximizing the throughput of the cloud provider network. Furthermore, In [41] authors used a new GA-based approach (MOGA) to improve the accuracy of resource state prediction in the data center, thereby reducing energy consumption. Yet they have neglected QoS constraints. Another work presented a dynamic resource management system’s design for cloud services. Their system seeks to adapt to the changing demands of users in order to minimize energy consumption. However, the results were poor in terms of optimizing resource allocation [42]. In the paper [43], the authors suggested a new approach for the dynamic autonomous management of resources, without including the VMs state criteria. The proposed method gave acceptable results in terms of extensibility, feasibility, and flexibility. The researchers in [44] adopted an MPC technique to design the dynamic algorithm and adjust the supply and price according to customer demands. Nevertheless, dynamic scheduling in this work is not intended to achieve the goal of fault tolerance.

Table 1 below summarizes existing mechanisms for dealing with dynamic resource allocation and the key issues in this area.

3 Representation of the studied problem

3.1 Description of the problem

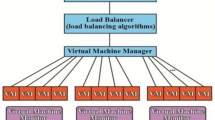

This study focuses on the dynamic allocation of resources in infrastructure as a service. In IaaS, the computing resources are dynamically delivered in a virtualized environment via the Internet (cloud public). So, when customers send requests to determine their resource requirements via customers interface (Fig. 1), the cloud resources provider makes a set of virtual machines available to them. Each virtual machine is configured with its own processors and memory resources, which gives an overview of a resource pool. These resources are dynamically reserved and released.

In addition, the customer pays the virtual machine used according to use time. However, the available resource capacity should always meet the demands of customers, which prompts the administrators to customize properly the limited amount of resources. The Cloud Resources Provider (CRP) also needs to conserve energy consumption and provide fault tolerance for the cloud system to ensure service continuity at any time.

3.2 Formulation of problem

In each allocation, the monitor detects the number of resources available to specify the number of tasks that can participate in the scheduling operation (Fig. 1). To tackle this problem, the following formulations are used:

Input

-

Set of tasks \(T = \{T_1,T_2,\ldots ,T_n\}\) represented by an identifier id, length ln, file size file and \(arrival\,\,time\). Tasks arrive in the queud continuously, also considered independent, where:

-

\(T_{i}\ne \emptyset \,\,\,\,\,\, i= 1,2,\ldots ,n\),

-

\(T_{i}\cap T_{j}= \emptyset \,\,\,\,\,\, i,j= 1,2,\ldots ,n\,\,\,and\,\,\,i\ne j\)

-

-

Set of \(VM_s=\{VM_1,VM_2,\ldots , VM_m\}\) characterized by an identifier id, millions of instructions per second mips parameter, number of CPUs (\(\lambda\)) and RAM size.

Output Achieving a better mapping of \(T_i\) to \(VM_j\) \((T_i, VM_j)\), in manner to reduce the makespan and the cost.

Makespan Equal to the latest finish time among all the execution tasks set, which is equivalent to the maximum finish time. The makespan f(t) is obtained using Eq. 1.

Cost The cost of the VM utilization is denoted by g and is defined as the sum prices of running all the tasks on the VMs, the cost is expressed as follows:

where \(g_{i}\) is the cost associated with the execution of task i on the resource j; \(pc_j\) is the price of using the resource j, \(t_{{j}}\) is the time of running the task i on resource j; \({C_{ij}}\) is the transfer rate cost for a bus of 32 bits wide with a clock speed of \({mips_{j}}\); \(\varepsilon\) is the transfer price, N is the number of all tasks and \(file_{i}\) is the file size occupied by the task i.

Function objective The aim is to minimize the value of the objective function F by optimizing the makespan and the cost, as shown in Eq. 3.

Subject to :

Constraints 3a and b mean that the CPU and memory configuration of the selected virtual machine must be greater than or equal to user requirements. While 3c means that the number of tasks loaded by the virtual machine is less than or equal to its number of processors. Moreover, to determine the feasible solutions, the variable \(x_{ij}\) is considered equal to 1 if the task \(T_{i}\) is assigned to \(VM_{j}\), otherwise \(x_{ij}\) take 0, as illustrated in Eq. 4.

3.3 The proposed dynamic resource allocation model

The proposed dynamic resource allocation (DRA) model permits to deal with the main interesting issues facing both customers and CRP, which are: minimizing both the makespan and cost, reducing the energy consumption and improving fault tolerance. Moreover, making the cloud system elastic, gives the possibility of expanding or shrinking their infrastructure by acquiring/releasing resources [19]. That is why, the proposed method takes into account the adjustment of VMs aware task scheduling. Henceforth, we performed the DRA model under the following assumptions:

-

The scheduling follows the change of the system according to the arrival rate of the tasks and the availability of the resources (the set of tasks or the set of VM is not fixed).

-

Each task is scheduled once, but if it fails, it can be rescheduled.

-

A task is scheduled to a VM as soon as it arrives with respecting the deadline.

To meet the above constraints, we designed our model architecture to automatically meet customers’ on-demand needs. As a result, the resource becomes accessible directly and easily once released. More precisely, to make our system ready to respond to different inside and outside events of the cloud changing environment, four parameters are defined (\(\alpha , \beta ,\mu , \gamma\)), which provide information about the number of selected tasks, availability of RAM resources, arrival rate and the number of failed tasks respectively (Fig. 1). Besides, the monitor analyzes the status of system changes, such as currently used virtual machines, actual task processing time, and task patterns, etc. Accordingly, we use two mechanisms (Fig. 2), the first is mainly concerned with service quality, while the second offers the possibility to increase and reduce resources’ quantity; minimizing the energy consumption of unused virtual machines, such as explained in next:

3.3.1 Dynamic task selection mechanism

The primary purpose of this mechanism is to use resource availability information to dynamically select the appropriate tasks from the arrival tasks and the failed tasks (pool of tasks). The creation of the selected task set is as follows:

-

Calculate the selected tasks set size (\(\alpha\)):

$$\begin{aligned} \alpha = \sum _{ }^{ } VM_{cpu_j} \end{aligned}$$(5)\(\alpha\) represents the number of released CPUs

-

Measure the common average RAM between the available resources (\(\beta\)):

$$\begin{aligned} \beta = \frac{1}{\alpha } \times \sum _{}^{}VM_{ram_j} \end{aligned}$$(6)$$\begin{aligned}&T_{ram_i} \le \beta&\end{aligned}$$(6a)$$\begin{aligned}&T_{ram_i} \le min\,\,\left\{ VM_{ram_j} \right\}&\end{aligned}$$(6b)

The constraint 6a means that resource requirements of the selected tasks must be less than the average of RAM available resources. This aims to select the same types of tasks to increase the availability of resources because of the convergence of the execution time of the tasks (resources released in the convergence time). This constraint is significant in the case of large arrival. While the constraint 6b permits to ensure the possibility of scheduling the tasks on the current ready VMs. Furthermore, the ready VMs are those check this condition:

-

\(\forall t\in [0; \infty [ \exists t=t_i:(VM_{cpu}(t_i)\ge 1 \wedge VM_{ram}(t_i) \in ]0; 100\% ])\), it is a VM that has an availability in CPU and RAM at time t.

Since the resources are provided according to an agreement between a CRP and its customers, the deadline (D) must be respected. As such, the sorting of pool tasks is based on their arrival time, with tasks with tight deadlines being processed earlier than others to avoid SLAFootnote 1 violations. Therefore, the Realtime Task (\(RT_i\)) that belongs to \(VM_j\) is given as Eq. 7. The task with \(min\left\{ RT_{i} \right\}\) is selected firstly.

where \(t_c\) represents the current time and \(f_{ij}\) is the execution time of task \(T_i\) on \(VM_j\).

3.3.2 Dynamic VMs adjustment mechanism

In this study, we deal with two types of VMs that can be encountered through the dynamic resource allocation in a cloud environment, as is done in the following paragraphs:

Unused VMs (UVMs): Adjusting the situation of unused virtual machines is considered an important step in the allocation of resources to enable their efficient use. Therefore, decrease the energy consumption (EC) tax for the CRP, knowing that the EC of the running VM (used VM) is payable by the customer. Accordingly, a standard Markov decision process (MDP) mechanism is adopted to overcome this issue. It is formalized as a tuple M = (S, A, \(\Delta\)) where:

-

S is the set of all possible VM states.

-

A is the set of all possible VM actions.

-

\(\Delta\) is a transition function \(\Delta : S \times A \mapsto \Delta (S)\).

This mechanism is designed to reduce the energy consumption of the UVMs where:

-

\(S=\{V_a,Vs,V_t,V_l\}\) : \(V_a\) is the awake state, \(V_s\) is the sleep state, \(V_t\) is the switching from the sleep state to the awake state and \(V_l\) is the listening period (Fig. 3).

From the perspective of the whole system, the energy conservation level of the system is achieved according to minimizing the EC of UVMs, which is calculated according to Eq. 8.

-

Where \(EC_a\) is the EC of an awakening VMs, \(EC_s\) is the EC of a sleeping VMs, \(EC_t\) is the EC of a switching VMs and \(EC_l\) is the EC of a listening VMs.

The transition function (\(\Delta\)) as defined in the MDP is Markovian, that is the probability of reaching the next state depends only on the current state and action and not on the history of earlier states [45]. \(p(\acute{s}\mid {s}'', EC_{{s}''})\) represents the probability that the VM will change to state \({s}''\), with energy consumption \(EC_{{s}''}\). We impose classically that \(\forall {s}',EC_{{s}''} \sum p(\acute{s}\mid {s}'', EC_{{s}''}) =1\) and \(p(\acute{s}\mid {s}'')> 1\). In addition, a matrix representation of these transition probabilities is conventionally used noting \(P_{v}\) and its dimensions are \(\left| 4 \right| \times \left| 4 \right|\) (matrix 9).

Hence, the transition of an unutilized VMs status is accorded with the variation of arrival rate (\(\mu\)) as follows:

Knowing that, \(\gamma\) is the number of failed tasks.

Failed VMs (FVMs): Actually, resource allocation in a cloud environment suffers from service downtime. It is a major problem facing cloud providers, which leads to a low level of service quality provided to customers. Because once task failure occurs, it impacts the execution of the tasks scheduled to the failed components [10]. In this work, the executed task failed due to a failure of the virtual machine, while the virtual machine is considered failed if:

-

The execution time of one of its loaded tasks exceeds the expected execution time on this virtual machine. This requires rescheduling these tasks and replacing the failed virtual machine. However, we do not know the actual task execution time before scheduling, but its lower and upper bounds can be obtained (Eq. 11) [15]. It is determined as follows:

$$\begin{aligned} f_{i} \notin [min\left\{ VM_{mips_{j}} \right\} , max\left\{ VM_{mips_j} \right\} ] \end{aligned}$$(11)

We measure the disparity in the failed task scheduling performance (DPSF) by dividing the time spent in the case of no failed tasks by the time delay in the case of failed tasks, as shown in the Eq. 12.

The DPSF indicates the system’s ability to provide fault tolerance. The pseudo-code of dynamic resource allocation is presented in Algorithm 1.

It is worth noting that the previous parameters contribute to forming the ready VMs set to execute the selected tasks. In addition, a good adjustment of these ensures the elasticity and scalability of the system over time. Moreover, the value of \(\mu\) and \(\gamma\) is related to the (task-VM) mapping achieved through the scheduling approach. It can also affect the other parameters (\(\beta , \alpha\)); this is what we seek to address in the next section.

4 Proposed scheduling approach based on ALO

Ant Lion Optimizer (ALO) is a new heuristic approach proposed by Mirjalili and Seyedali to solve optimization problems. As its name implies, the ALO algorithm mimics the intelligent behaviour of antlions in hunting ants in nature [46]. In such a way, when ants move over a search space, antlions hunt them using traps. In this study, ALO operators (Fig. 4) are used as follows :

4.1 Coding

In order to solve the current problem, the ant is considered a task seeking to find a suitable ready virtual machine and the antlion as a targeted ready virtual machine. Each combination (task-VM) refers a solution of S-MOAL algorithm. While, the solutions are presented in a vector, as shown in Fig. 5. For example, task three is placed in the virtual machine of index eight and the size of the vector used is equal to the number of tasks.

4.2 Initialization

First, an initial ant population is randomly created and is the main search agent in S-MOAL approach. After that, the fitness value of each ant is evaluated by the steps described below, using the objective function to find the best ants and consider them an elite (optimal ants), while the interaction between ants and antlions in the trap generates a \(x_i\) solution. The initial set of ants used during the optimization is preserved in the following matrix:

Where: \(a_{i,j}\in [0,1]\)

It is further assumed that the antlions are hiding somewhere in the search space where their positions are saved in the matrix 14.

Where: \(A_{i,j}\in [0,1]\)

4.3 Random walks of ants

The ants move over the search space using random walks around the antlions. To mimic such behavior, the initial population provided by the previous step (matrix 13) is manipulated, as follows:

where cum calculates the cumulative sum, h is the maximum number of iteration, \(\xi (k)\) is a stochastic function, k shows the step of random walk (iteration in this study) and rand is a random number generated with uniform distribution in the interval of [0, 1].

In order to keep the random walk in the boundaries of the search space and prevent the ants from overshooting, the random walks should be normalized using the following equation:

The borders \(\sigma _1^k\) and \(\sigma _2^k\) of W(k) updating are defined as proportional to the current number of iterations using the roulette wheel method, and \(\alpha\) provides the number of CPUs available in the cloud.

It should be mentioned that the population of antlions is never evaluated, whereas the locations of ants are assumed in the first iteration, then they are relocated to new positions of antlions in the remaining of iterations until the ants become better. Therefore, S-MOAL simulates the entrapment of ants in antlions pits by changing the random walks around antlions. This way, the likelihood of choosing the random walk is proportional to its fitness value, which corresponds to the act of ant trapping in antlion pits.

4.4 Building a trap for sliding ants towards the antlion

In nature, bigger antlions construct bigger pits to increase their chance of survival. Then, they shoot sand outwards the center of the pit once they realize that an ant is in the trap. For that reason, the roulette wheel operator is employed to find \(\rho _1\) and \(\rho _2\) from the matrix 14 and therefore choosing a fitted antlion that is able to catch the ant. In this regard, S-MOAL simulates this antlion attitude by formulating the following equations:

where L is a ratio, \(q^k\) and \(p^k\) are the best locations for the antlions at k-the iteration. In Eqs. 17 and 18, \(L=10^{G \frac{k}{\dot{s}}}\) where \(\dot{s}\) is the stability factor and G is defined based on the current iteration (G = 2 when k > 0.1\(\dot{s}\), G = 3 when k > 0.5\(\dot{s}\), G = 4 when k > 0.75\(\dot{s}\), G= 5 when k > 0.9\(\dot{s}\) and G= 6 when k > 0.95\(\dot{s}\)). These parameters allow for forming the solution \(x_i^k\) (Fig. 6).

4.5 Catching prey and re-building the pit

In the final part of hunting, the ant is attracted to the bottom of the pit and caught in the antlion’s jaw. After that, the antlion pulls the ant inside the sand and consumes its body [47]. So that, S-MOAL simulates this process by using Eq. 19.

Where

-

\(F^k\) is the fitness of executing a task i on a VM j.

-

The solution \(x_i^k\) is an outcome of the preceding steps \(x_{i}^k \in \left\{ q^k,p^k,w(k) \right\}\), that gives a good result according to Eq. 19 (\(x_{i}^k\) is the best of \(\left\{ q^k,p^k,w(k) \right\}\)) (Fig. 6).

4.6 Elitism

At this step, the produced solutions are gathered to form an elitist set.

4.7 Extract the non-dominant solutions

The purpose of this step is to examine the solutions acquired from the previous step (elitist set) that exists in the archive (Fig. 4). In doing so, the Pareto method is used, which is an effective mechanism to help find a compromise between two objectives. In this search, the Pareto is defined as follow:

-

Definition The vector \(F(\dot{x})\) is said to dominate another vector \(F(\ddot{x})\) denoted \(F_i (\dot{x}) \prec F_i(\ddot{x})\) if only if \(f_i (\dot{x}) < g_i(\ddot{x})\) for all \(i\in \left\{ 1,2,\ldots n \right\}\) and \(f_i(\dot{x}) < g_j(\ddot{x})\) for some \(j\in \left\{ 1,2,\ldots n \right\}\) [48].

This way, non-dominant solutions are sorted and evaluated according to their suitability.

4.8 Treat the non-dominant solutions

To determine the relationship between two non-dominant solutions, a technique based on Spacing Distance is applied. This latter aims to remove the worst solutions from the archive and replace them with the best to accommodate new solutions. Equations from 20 through 24 are used to determine which solutions from non-dominants are worth preserving in the archive and which should be discarded.

where \(\pi _{f}^{i}\) and \(\pi _{g}^{i}\) are the average distance for the non-dominant set in terms of makespan and cost respectively, \(\nu\) is the number of non-dominant solutions. The following equations show how to calculate the distance per individual from its neighbors (\(\theta ^{i}_f\) and \(\theta ^{i}_g\)):

Thus, the new fitness of each individual is:

Finally, the non-dominant solutions that have the best \(\bar{F}(i)\) are retained. Thence, the algorithm continues to loop until reaching the stop criterion (maximum number of iterations). The pseudo-code of S-MOAL is presented as Algorithm 2.

5 Experimental setup and results

This section presents the experiments carried out to evaluate the proposed solution. First, we describe the simulation setup considered in the experiments. Then, three experiments are adopted during the evaluation, empirical experiment, statistical experiment and the DRA model experiment. Second, we give the results of the proposed dynamic resource allocation method and discuss its effect on the different optimized metrics.

5.1 Simulation setup

5.1.1 Tools and platform

To check the performance of our method, we used a Cloudsim 3.0.3 toolkit. It is a simulation tool to mimic the cloud computing scenarios. The experiments are done on Intel (R) core (TM) i5 3320M Processor 2.6 GHz equipped with 4GB RAM, Windows 7 platform, using the Eclipse IDE Luna release 4.4.0.

5.1.2 Cloud infrastructure

The experiments are conducted for one data center using the parameter setup of VMs as shown in Table 2, where the configuration of the three types of virtual machines is inspired by the configuration of Google instances [49]. While the parameter setup of tasks is shown in Table 3. Besides, energy consumption values (Table 4) of different virtual machine states have been chosen according to the assumption (\(EC_a> EC_s> EC_t > EC_l\)). Moreover, the stability factor (\(\dot{s}\)) of S-MOAL is set at 4.

5.1.3 Evaluation methods

In order to evaluate the effectiveness of the proposed method, it has been compared with other existing methods in the literature, including heuristic algorithms DSOS, PBACO, DCLCA, and MOGA. The DSOS algorithm has the advantage of converging faster as the search increases, making it suitable for large-scale scheduling problems. The PBACO method provides better results with regard to the makespan, cost, and deadline violation. The comparison with DCLCA makes it possible to evaluate S-MOAL in terms of fault tolerance, especially because DCLCA provides better quality fault tolerance scheduling. Instead, the MOGA algorithm is mainly used to minimize energy consumption. Nevertheless, these algorithms are designed as mentioned in the works [17, 10, 41, 22], but in a constructive manner to solve a multi-objective problem taking into consideration the parameters and formulations of the issues treated in this paper.

In addition, to examine whether the optimization metrics obtained by S-MOAL are significantly more efficient than those of the other methods, a one sided t-test is used. It is a statistical test to find an observed or extreme result. This test conforms to two hypotheses:

-

The null hypothesis (\(H_0\)) is defined as a no difference between the optimization metrics for the proposed method and the other methods.

-

The alternative hypothesis (\(H_1\)) is the opposite of the \(H_0\) and assumes that some difference exists between optimization metrics.

On the other hand, to evaluate the impact of the parameters (\(\alpha , \beta , \mu\)) on the behavior of the proposed DRA model, S-MOAL is compared with the DSOS, PBACO and MOGA algorithms. Furthermore, this comparison aims to appreciate the scalability and elasticity of the proposed model. In other words, this experiment is used to preview how the DRA model responds to dynamic system change and customer requirements.

5.2 Discussion of the performance optimization results

In this experiment, the number and type of used VMs are mentioned in Table 5. Each test is performed 10 times, then the average value is taken. It should also be noted that S-MOAL refers, in this part, to the implementation of Algorithm 1. The obtained results are detailed below.

5.2.1 Empirical experiment

Figure 7 shows the makespan variation for different tasks. This figure shows that the makespan increases as the number of tasks increases. It is clear that S-MOAL performs better than the other algorithms in terms of makespan. The examination of the proposed approach in terms of cost is presented in Fig. 8. It is apparent from the comparison of the three scheduling algorithms that the proposed approach decreases the value of the cost, due to the completion of each task in an appropriate virtual machine that ensures a minimum price. Therefore, S-MOAL allows a better match between the tasks and the virtual machines. These results also reflected the effectiveness of the mechanisms of task selection and virtual machine adjustment; chiefly because the main purpose of both mechanisms is to form adequate sets of ready virtual machines and selected tasks in each allocation. Furthermore, the third test checks the S-MOAL deadline violation percentage for 600 tasks at different deadlines. The deadline violation rate (\(D_{violation}\)) is calculated by the formula 25.

whereas V is the number of executing tasks that violated the deadline. The real execution time of tasks is calculated using the Eq. 7. The S-MOAL algorithm gives a reducing range which is from 8 up to 23.5% compared to the other algorithms (Fig. 9). This is due to the ability to select parallel tasks with convergent deadlines. Besides, our method dynamically starts and stops virtual machines, as needed, which dynamically increases and decreases the number of resources according to the number of tasks. As a result, the execution of the tasks becomes faster without violating the deadline.

Figure 10 shows the total energy consumption for unused VMs, measured as shown in Eq. 8. We can see that energy consumption of all algorithms is proportional to the number of tasks, but the slope of the S-MOAL graph is the least. That is due to a good adjustment of the unused VMs state, which helps to save energy. In this work, fault tolerance ratio is measured as shown in Eq. 12. From Fig. 11, the S-MOAL shows an improvement in dealing with the failed tasks. In comparison with the DSOS and DCLCA algorithms, it presents a lower DPSF, which permits to deduce that S-MOAL provides better fault tolerance with the increased number of tasks.

5.2.2 The statistical experiment

In order to ensure the optimization performance of the proposed method, the statistical analysis in the Tables and helps prove the effectiveness of the results obtained from the empirical experience in terms of makespan and energy consumption. However, since the empirical experiment shows that DSOS has better results than the other compared methods in terms of makespan as well as for MOGA in terms of energy consumption, we confine the comparison of S-MOAL with the these two latter. The choice of significance level at which we reject \(H_0\) is 1% with critical values obtained from statistical Tables 6 and 7 as 2.738 and as 3.052 respectively.

Table 6 shows the calculated values of the average makespan metric obtained statistically at ten times. It demonstrates that for samples 300 to 1000, the calculated t-value is greater than 2.738 (critical value), which indicates that there is a significant difference between the S-MOAL makespan and that of the algorithms. Henceforth, \(H_1\) is accepted for samples 300 through 1000. However, for the rest of the samples, the calculated value t is less than the critical value, which means that the difference between performances is not significant. This leads to the acceptance of \(H_0\) for samples 100 and 200. In addition, it can be deduced that S-MOAL outperforms DSOS when the search space is larger because, for samples 300 and above, the makespan is significantly lower than that of DSOS.

On the other hand, Table 7 represents the comparative results of energy consumption for the proposed method and the MOGA algorithm. The same interpretation says about the energy consumption where the calculated t-value is greater than the critical value 3.052 for all the samples, which indicates that there is a significant difference between energy consumption performance of S -MOAL and MOGA. This is due to the efficiency of virtual machine tuning compared to the MOGA algorithm.

5.2.3 The dynamic model experiment

Since our model is dynamic, sets of allocations are obtained in each test. The purpose of this experiment is to assess the response of the proposed DRA model with changes in the rate of requests’ arrival (\(\mu =1, \mu =0.5, \mu =0.2\)) and customer needs. Figure 12 shows the number of allocations for different arrival rates. Visually, the S-MOAL curve located above the DSOS and MOGA curves in the three graphs, indicates that S-MOAL is better than the other algorithms.

Figure 13 presents the number of CPUs that are made available to customers in a different \(\mu\). S-MOAL released a large number of CPUs compared to DSOS and MOGA algorithms. For \(\mu =1\) the CPUs resources (\(\alpha\)) are normally released as soon as the running task is completed. Instead, in a case, \(\mu = 0.5\) the \(\alpha\) is minimized, but approximately stable. Whereas a great amount of resources is released when the arrival rate of tasks achieves \(\mu =0. 2\). In terms of memory, few amounts are released in case of \(\mu\) = 1 and \(\mu\) = 0.5. However, when \(\mu\) achieves 0.2, the released RAM increases (Fig. 14). Furthermore, the results in Fig. 15 show a few amounts of unused VMs from the S-MOAL algorithm compared to the other methods, which reflect the efficient use of resources.

These results are convincing because for \(\mu\) = 1 the number of allocation is regular due to the overhead of the system and with almost no released resources. Furthermore, for \(\mu =\) 0.5, the number of allocations has become important because there is a balance between current and incoming tasks. Like so, the number of released resources increases. For \(\mu =\) 0.2, the number of allocations is small, reflecting the high availability of resources.

Additionally, the results show that the system can control the change of resources according to the variation of the arrival tasks rate, using the parameters (\(\alpha , \, \beta , \, \mu , \, \gamma\)). Although the number of tasks changes, the system maintains better responsiveness and behavior, while the number of resources used is adjusted according to the arrival rate (number of customers). This reflects the scalability and flexibility of the system. This experiment used a large number of tasks (between 10,000 and 50,000 tasks), which means that S-MOAL is able to serve on a large scale.

6 Summary and conclusion

In this paper, we proposed an efficient dynamic resource allocation method for cloud computing environments. To simulate the interaction between CRP and customers, we scheduled a set of independent tasks on a set of available virtual machines consisting of RAM and CPU resources. The proposed DRA model highlights the key issues faced by resource providers in the cloud, including fault tolerance and energy consumption. Instead, the Spacing Multi-Objective Antlion algorithm (S-MOAL) aimes at improving service quality that is offered to customers by solving the conflict between makespan and cost. This method combines the dynamic change of the cloud system state and the task scheduling to solve various issues, which makes it effective.

To evaluate the performance of the proposed method, three experiments were used. The first shows good S-MOAL results in terms of makespan, cost, deadline, fault tolerance, and energy consumption compared to the DSOS, PBACO, DCLCA and MOGA algorithms. Likewise, the statistical experiment confirms the significant improvement of the S-MOAL for makespan and energy consumption. While the last experiment proved that our dynamic model resulted in an efficient responsiveness compared to the DSOS and MOGA algorithms, regarding the number of allocations, released resources and adjustment of unused virtual machines.

We believe that the improved performance achieved by the S-MOAL algorithm is due to its attractive manner of hunting. Using the Pareto method led to improve the archive and thus, obtaining a near-optimal solution. The proposed method is also valuable for the dynamic management of resources through adjusting the unused VMs, which economizes the energy. This study is significant since it takes into account fault tolerance performance that makes the execution of tasks flawless on cloud computing systems.

In the future, we would like to apply this method in a real cloud computing system. In addition, we plan to implement it for workflow. We will also try to use other effective mechanisms for task selection and virtual machine tuning.

Notes

Service level agreement.

References

Gupta, B., Agrawal, D.P., Yamaguchi, S.: Handbook of Research on Modern Cryptographic Solutions for Computer and Cyber Security. IGI Global, Hershey (2016)

Gupta, B.B.: Computer and Cyber Security: Principles, Algorithm, Applications, and Perspectives. CRC Press, Boca Raton (2018)

Hamdaqa, M., Tahvildari, L.: Cloud computing uncovered: a research landscape. In: Advances in Computers, vol. 86, pp. 41–85. Elsevier (2012)

Kumar, M., Sharma, S.C.: Deadline constrained based dynamic load balancing algorithm with elasticity in cloud environment. Comput. Electr. Eng. 69, 395–411 (2018)

Mell, P., Grance, T., et al.: The Nist Definition of Cloud Computing. NIST, Gaithersburg (2011)

Madni, S.H.H., Latiff, M.S.A., Coulibaly, Y., et al.: Resource scheduling for infrastructure as a service (iaas) in cloud computing: challenges and opportunities. J. Netw. Comput. Appl. 68, 173–200 (2016)

Zhan, Z.-H., Liu, X.-F., Gong, Y.-J., Zhang, J., Chung, Henry Shu-Hung, Li, Yun: Cloud computing resource scheduling and a survey of its evolutionary approaches. ACM Comput. Surv. (CSUR) 47(4), 63 (2015)

Chowhan, S.S., Shirwaikar, S., Kumar, A.: Predictive modeling of service level agreement parameters for cloud services. Int. J. Next-Gener. Comput. 7(2), 115–129 (2016)

Sadashiv, N., Dilip Kumar, S.M.: Broker-based resource management in dynamic multi-cloud environment. Int. J. High Perform. Comput. Netw. 12(1), 94–109 (2018)

Latiff, M.S.A., Madni, S.H.H., Abdullahi, M., et al.: Fault tolerance aware scheduling technique for cloud computing environment using dynamic clustering algorithm. Neural Comput. Appl. 29(1), 279–293 (2018)

Yan, H., Zhu, X., Chen, H., Guo, H., Zhou, W., Bao, W.: Deft: dynamic fault-tolerant elastic scheduling for tasks with uncertain runtime in cloud. Inf. Sci. 477, 30–46 (2019)

Chou, L.-D., Chen, H.-F., Tseng, F.-H., Chao, H.-C., Chang, Yao-Jen: Dpra: dynamic power-saving resource allocation for cloud data center using particle swarm optimization. IEEE Syst. J. 12(2), 1554–1565 (2018)

Juarez, F., Ejarque, J., Badia, R.M.: Dynamic energy-aware scheduling for parallel task-based application in cloud computing. Future Gener. Comput. Syst. 78, 257–271 (2018)

Yong, L., Sun, N.: An effective task scheduling algorithm based on dynamic energy management and efficient resource utilization in green cloud computing environment. Clust. Comput. 22(1), 513–520 (2019)

Zhang, Y., Cheng, X., Chen, L., Shen, H.: Energy-efficient tasks scheduling heuristics with multi-constraints in virtualized clouds. J. Grid Comput. 16, 459–475 (2018)

Belgacem, A., Beghdad-Bey, K., Nacer, H.: Task scheduling in cloud computing environment: a comprehensive analysis. In: International Conference on Computer Science and its Applications, pp. 14–26, 24–25 April, Springe in Algiers, Algeria (2018)

Abdullahi, M., Ngadi, M.A., et al.: Symbiotic organism search optimization based task scheduling in cloud computing environment. Future Gener. Comput. Syst. 56, 640–650 (2016)

Azad, P., Navimipour, N.J.: An energy-aware task scheduling in the cloud computing using a hybrid cultural and ant colony optimization algorithm. Int. J. Cloud Appl. Comput. (IJCAC) 7(4), 20–40 (2017)

Durillo, J.J., Prodan, R.: Multi-objective workflow scheduling in amazon ec2. Clust. comput. 17(2), 169–189 (2014)

Keshanchi, B., Souri, A., Navimipour, N.J.: An improved genetic algorithm for task scheduling in the cloud environments using the priority queues: formal verification, simulation, and statistical testing. J. Syst. Softw. 124, 1–21 (2017)

Wei, J., Zeng, X.: Optimal computing resource allocation algorithm in cloud computing based on hybrid differential parallel scheduling. Clust. Comput. 22, 7577–7583 (2018)

Zuo, L., Shu, L., Dong, S., Zhu, C., Hara, Takahiro: A multi-objective optimization scheduling method based on the ant colony algorithm in cloud computing. IEEE Access. 3, 2687–2699 (2015)

Belgacem, A., Beghdad-Bey, K., Nacer, H.: Enhancing cost performance using symbiotic organism search based algorithm in cloud. In: 2018 International Conference on Smart Communications in Network Technologies (SaCoNeT), pp.s 306–311, 27–31 Oct 2018, IEEE in El Oued, Algeria (2018)

Belgacem, A., Beghdad-Bey, K., Nacer, H.: A new task scheduling approach based on spacing multi-objective genetic algorithm in cloud. In: International Conference on Computer Science and Information Systems, pp. 189–195, 9–12 September, in Pozna, Poland (2018)

Belgacem, A., Beghdad-Bey, K., Nacer, H.: Task scheduling optimization in cloud based on electromagnetism metaheuristic algorithm. In: 2018 3rd International Conference on Pattern Analysis and Intelligent Systems (PAIS), pp. 1–7, 24–25 October 2018, IEEE in Tebessa, Algeria (2018)

Zhang, F., Cao, J., Li, K., Khan, S.U., Hwang, Kai: Multi-objective scheduling of many tasks in cloud platforms. Future Gener. Comput. Syst. 37, 309–320 (2014)

Barrett, E., Howley, E., Duggan, J.: A learning architecture for scheduling workflow applications in the cloud. In: Web Services (ECOWS), 2011 Ninth IEEE European Conference on, pages 83–90, 14–16 Sept 2011, IEEE in Lugano, Switzerland (2011)

Duan, H., Chen, C., Min, G., Yu, W.: Energy-aware scheduling of virtual machines in heterogeneous cloud computing systems. Future Gener. Comput. Syst. 74, 142–150 (2017)

Kong, W., Lei, Y., Ma, J.: Virtual machine resource scheduling algorithm for cloud computing based on auction mechanism. Optik Int. J. Light Electron. Opt. 127(12), 5099–5104 (2016)

Tsai, J.-T., Fang, J.-C., Chou, J.-H.: Optimized task scheduling and resource allocation on cloud computing environment using improved differential evolution algorithm. Comput. Oper. Res. 40(12), 3045–3055 (2013)

Wang, W.-J., Chang, Y.-S., Lo, W.-T., Lee, Y.-K.: Adaptive scheduling for parallel tasks with qos satisfaction for hybrid cloud environments. J. Supercomput. 66(2), 783–811 (2013)

Chou, L.-D., Chen, H.-F., Tseng, F.-H., Chao, H.-C., Chang, Y.-J.: Dpra: dynamic power-saving resource allocation for cloud data center using particle swarm optimization. IEEE Syst. J. 12(2), 1554–1565 (2016)

Ding, Y., Qin, X., Liu, L., Wang, T.: Energy efficient scheduling of virtual machines in cloud with deadline constraint. Future Gener. Comput. Syst. 50, 62–74 (2015)

Dong, Z., Liu, N., Rojas-Cessa, R.: Greedy scheduling of tasks with time constraints for energy-efficient cloud-computing data centers. J. Cloud Comput. 4(1), 5 (2015)

Jiang, H.-P., Chen, W.-M.: Self-adaptive resource allocation for energy-aware virtual machine placement in dynamic computing cloud. J. Netw. Comput. Appl. 120, 119–129 (2018)

Wolke, A., Tsend-Ayush, B., Pfeiffer, C., Bichler, M.: More than bin packing: dynamic resource allocation strategies in cloud data centers. Inf. Syst. 52, 83–95 (2015)

Cong, X., Yang, J., Weng, J., Wang, Y., Hui, Yu.: Optimising the deployment of virtual machine image replicas in cloud storage clusters. Int. J. High Perform. Comput. Netw. 10(4–5), 423–435 (2017)

Alsadie, D., Tari, Z., Alzahrani, E.J., Zomaya, A.Y.: Dynamic resource allocation for an energy efficient vm architecture for cloud computing. In: Proceedings of the Australasian Computer Science Week Multiconference, p. 16, January 29–February 02, 2018, ACM in Brisband, Queensland, Australia (2018)

Kong, Y., Zhang, M., Ye, D.: A belief propagation-based method for task allocation in open and dynamic cloud environments. Knowl. Based Syst. 115, 123–132 (2017)

Mousavi, S., Mosavi, A., Varkonyi-Koczy, A.R., Fazekas, G.: Dynamic resource allocation in cloud computing. Acta Polytech. Hung. 14(4), 83–104 (2017)

Tseng, F.-H., Wang, X., Chou, L.-D., Chao, H.-C., Leung, Victor C.M.: Dynamic resource prediction and allocation for cloud data center using the multiobjective genetic algorithm. IEEE Syst. J. 12(2), 1688–1699 (2018)

Xiao, Z., Song, W., Chen, Q.: Dynamic resource allocation using virtual machines for cloud computing environment. IEEE Trans. Parallel Distrib. Syst. 24(6), 1107–1117 (2013)

Onat Yazir, Y., Matthews, C., Farahbod, R., Neville, S., Guitouni, A., Ganti, S., Coady, Y.: Dynamic resource allocation in computing clouds using distributed multiple criteria decision analysis. In: 2010 IEEE 3rd International Conference on Cloud Computing, pp. 91–98, 5–10 July 2010, in Miami, FL, USA (2010)

Zhang, Q., Zhu, Q., Boutaba, R.: Dynamic resource allocation for spot markets in cloud computing environments. In: 2011 Fourth IEEE International Conference on Utility and Cloud Computing, pp. 178–185, 5–8 December 2011, in Victoria, NSW, Australia (2011)

Doshi, P., Goodwin, R., Akkiraju, R., Verma, K.: Dynamic workflow composition: using markov decision processes. Int. J. Web Serv. Res. (IJWSR) 2(1), 1–17 (2005)

Mirjalili, S.: The ant lion optimizer. Adv. Eng. Softw. 83, 80–98 (2015)

Mirjalili, S., Jangir, P., Saremi, S.: Multi-objective ant lion optimizer: a multi-objective optimization algorithm for solving engineering problems. Appl. Intell. 46(1), 79–95 (2017)

Das, I., Dennis, J.E.: Normal-boundary intersection: a new method for generating the pareto surface in nonlinear multicriteria optimization problems. SIAM J. Optim. 8(3), 631–657 (1998)

On line: Tarifs de google compute engine. https://cloud.google.com/compute/pricing. Accessed 17 Jan 2019

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Belgacem, A., Beghdad-Bey, K., Nacer, H. et al. Efficient dynamic resource allocation method for cloud computing environment. Cluster Comput 23, 2871–2889 (2020). https://doi.org/10.1007/s10586-020-03053-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10586-020-03053-x