Abstract

Assessments that are designed to be credible and useful in the eyes of potential users must rigorously evaluate the state of knowledge but also address the practical considerations—politics, economics, institutions, and procedures—that affect real-world decision processes. The Third US National Climate Assessment (NCA3) authors integrated a vast array of sources of scientific information to understand what natural, physical and social systems are most at risk from climate change. They were challenged to explore some of the potentially substantial sources of risk that occur at the intersections of social, economic, biological, and physical systems. In addition, they worked to build bridges to other ways of knowing and other sources of knowledge, including intuitive, traditional, cultural, and spiritual knowledge. For the NCA3, inclusion of a broad array of people with on-the-ground experience in various communities, sectors and regions helped in identifying issues of practical importance. The NCA3 was more than a climate assessment; it was also an experiment in testing theories of coproduction of knowledge. A deliberate focus on the assessment process as well as the products yielded important outcomes. For example, encouraging partnerships and engagement with existing networks increased learning and made the idea of a sustained assessment more realistic. The commitment to building an assessment focused on mutual learning, transparency, and engagement contributed to the credibility and legitimacy of the product, and the saliency of its contents.

1 Introduction: learning from assessments

Global change assessments by definition must incorporate both social and physical science and consider the potential implications of current and future sources of stress and opportunity (NRC 2007). The Third US National Climate Assessment (NCA3, Melillo et al. 2014) provided participants an opportunity for learning, not only about how to integrate a wide array of information, but also how to knit together different ways of knowing, and how to make assessments more relevant to decision-makers. This paper describes how this assessment—building on lessons from other US and international assessments—was designed, and the processes through which it was implemented. It delineates key elements of success and describes some challenges encountered by those involved, aiming to inform future assessment processes in the US and elsewhere.

Despite a vast array of available sources of scientific information, from satellite data and ocean observing systems, to information about human and ecosystem vulnerabilities, to social science and economics data, understanding what is most at risk from climate change is a daunting task. When these sources of data are integrated with knowledge held by on-the-ground resource managers, business executives, or policy-makers, and a range of geographic and time scales are represented, there is a virtual explosion of data sources, ways of understanding the world, and potential paths for analysis and interpretation. This challenged NCA3 authors to step outside of more familiar, traditional, and disciplinary approaches to explore some of the potentially substantial sources of risk that occur at the intersections of social, economic, biological, and physical systems. They also had to make difficult choices about which issues to emphasize, given the limits of a “manageable” final report. With initial instructions to most teams to condense their work into eight pages, only the most critical issues could be included, even if underlying foundation reports were hundreds or in some cases thousands of pages. This led to tradeoffs about perceptions of risk and personal values within each author team, and significant opportunities for learning in an interdisciplinary and transdisciplinary context.

Assessments that are designed to be credible and useful in the eyes of potential users must rigorously evaluate the state of knowledge but also address the practical considerations—politics, economics, institutions, and procedures—that affect real-world decision processes. In addition, they often must build bridges to other ways of knowing and other sources of knowledge, including intuitive, traditional, cultural, and spiritual knowledge. These need to be respected and incorporated if assessments are to be useful and meaningful to multiple audiences, including Native Americans and a range of other communities.

2 Background on the Third National Climate Assessment process

The National Climate Assessment responds to the 1990 Global Change Research Act (GCRA), Section 106, which requires that an assessment be prepared at least every 4 years that synthesizes, analyzes, evaluates, and assesses the knowledge developed within the US Global Change Research Program (USGCRP), but also identifies impacts across a series of sectors and projected timeframes. Only three such integrated assessments have been completed in the 24 years since 1990. The USGCRP, which was created by the GCRA, is a consortium of thirteen federal science agencies that is charged with coordinating and managing the substantial federal investments in climate science (https://globalchange.gov). Though these assessments are required every 4 years, only two previous assessments were completed (in 2000 and 2009), for a variety of reasons discussed elsewhere (see Buizer et al. 2013).

The first two National Climate Assessments focused primarily on summarizing outcomes of research and documenting the state of knowledge, generally focusing on a synthesis of published, peer-reviewed literature. However, they varied significantly in the extent of stakeholder involvement. For a number of reasons (see Buizer et al. 2013) their outcomes were not as widely used as the authors had hoped. The NCA3 builds on these assessments, but also reflects a new sense of urgency from scientists, managers, and decision-makers across the country—based on growing evidence that climate change is no longer only an issue for the future, but a problem that requires action now. Because impacts are now observable in every region and sector across the country, the products and processes of this assessment were more explicitly designed to be useful in decision contexts. The increased visibility of impacts changed the tenor of the NCA3 conversation and encouraged a stronger focus on assessing response strategies than in previous assessments.

Individuals, communities and companies across the globe are now focusing on building a more resilient future. The terms they use, such as sustainability, adaptation, resilience, and preparedness, imply a wide variety of activities, but those working in these arenas have a great deal in common. They may work in their communities to reduce use of fossil fuels, promote health care for the urban poor, or manage invasive species in a wildlife refuge, but all are concerned about the health of the planet and of human and natural systems in the face of disruption and change. The NCA3 brought together people with diverse interests and expertise; some participants had engaged in previous assessments, others were specifically included to bring new capacity and new ideas into the process. A deliberate focus on community-building within the NCA3 allowed new relationships to be forged among those who otherwise might never have met. The investments in relationship-building within the assessment recognized the interdisciplinary nature of the scientific challenges, but were also intended to help sustain the assessment process beyond the report and improve the relevance of the final products in connecting science to decision-making. Consequently, this assessment was “owned” by a much larger group of individuals because of the NCA network of partner organizations and the outreach efforts through NGOs and professional societies (Cloyd et al., Submitted for publication in this special issue).

3 Building the NCA3 process

Initial partners in the NCA3, especially federal agency representatives, staff in the US Global Change Research Program Coordination Office, and the NCA3 federal advisory committee, created a common mission and vision statement based on the perceived need to support decisions and be inclusive and useful to a broad audience of decision-makers and citizens across the US and the globe (USGCRP 2011). They quite consciously worked to create and test innovative and collaborative products and processes that would be useful and possible at this time in US history, recognizing the growing need for “actionable information” to support adaptation and mitigation.

Building the capacity for this type of assessment meant involving people who know how to remove the barriers between scientific disciplines and those who can build connections with user communities in regions and sectors that may use very different language and terminology. Facilitators, communicators, and “boundary spanners” (those who help connect scientists and scientific information with decision-makers and the public) (Guston 2001; Carr and Wilkinson 2005; Hoppe 2010; Lemos et al. 2012) were deliberately incorporated in the NCA3 process because of the need to bridge the gap between scientists and decision-makers in public and private organizations, government agencies, and businesses. The lessons learned over the last decade about the importance of managing the boundary between science and decision-making, e.g., in the context of the National Oceanic and Atmospheric Administration’s (NOAA) Regional Integrated Sciences and Assessments (RISAs), and many others, were explicitly incorporated into the design of the NCA3. In that sense, the NCA3 can be viewed as a large-scale experiment designed to test theories about knowledge networks (Jacobs et al. 2010; Bidwell et al. 2013) and knowledge-to-action research.

The authors, federal advisory committee members, and staff of the NCA3 felt that building the interdisciplinary and regional capacity to sustain this effort would be critical to ensuring that its findings are useful in decision-making. Stakeholders who participated in NCA3 town halls across the country indicated that they were more likely to use the results of the NCA3 if they knew that they could count on the NCA process to produce rigorous and relevant information over time. From their perspective, relevance of the information produced was directly related to their own ability to access, understand, and contribute to the process. Similarly, the importance of building relationships among scientists and stakeholders during the assessment process was recognized as essential in ensuring credible outcomes that are also perceived as relevant and usable. More than a report based on peer-reviewed literature, the inclusive process itself was viewed from the beginning as an important outcome of the NCA3 effort.

4 The National Climate Assessment Development and Advisory Committee (NCADAC): lessons learned

In part because of the decision to make the NCA3 more inclusive and transparent than previous national assessments, the federal advisory committee that took responsibility for producing the NCA3, the National Climate Assessment Development and Advisory Committee (NCADAC), was unusually large, including 44 non-federal participants and 16 federal agency ex-officio representatives. Establishing this committee took 18 months, far more than the original 6-month estimate. This significantly impacted the overall schedule for the assessment and reduced the time available to get the report done: rather than the 4 years allotted by statute to complete the assessment, the NCADAC essentially had only 2.5 years. These regulatory and political transaction costs need to be understood and factored into future assessment planning to minimize barriers to progress and optimize outputs and outcomes from ongoing assessment processes.

Although establishing the NCADAC caused a major delay in the report development, the time in the “holding pattern” was well spent. Because the advisory committee was not yet in place, no decisions could be made—so a series of methodology workshops were set up as “listening sessions” to help inform the process. These NCA methodology workshops (NCA report series, https://globalchange.gov) established a foundation of common knowledge among participants and built capacity for subsequent assessment activities. They gave the participants time to assess priorities, solidify goals and objectives of the NCA3 effort, build knowledge of assessment processes, and broaden the participant community so there could be a collective path forward. In fact, separate communities started to evolve around the workshop topics, including information management, communications, indicators of change, scenarios and modeling, vulnerability assessment, and valuation techniques. Most visible and active of these communities today is the group that focused on indicators; it has developed a broad vision and a pilot demonstration within USGCRP for social, physical, and environmental indicators of climate change (Kenney and Janetos, Submitted for publication in this special issue).

Based on its size, there were fears that the NCADAC would be unwieldy and expensive to support, but surprisingly few major problems arose. This was in part because of strong leadership, which included three experienced chairs and a 12-member Executive Secretariat. The latter included individuals with significant experience in previous assessments who had a wide range of disciplinary, legal, and engagement expertise. Executive Secretariat input was solicited on all process and content issues prior to presentation of these ideas for review and decision by the broader committee. Inclusion of a range of people on the NCADAC who were process experts was another unusual aspect of this advisory committee that served the overall assessment extremely well. Because webinars and conference calls were frequently used instead of in-person meetings and the in-person meetings themselves could be generously characterized as “frugal,” the costs of supporting the NCADAC were far lower than anticipated.

Another critical decision was to include the USGCRP agencies, the chair of the USGCRP, the White House Council on Environmental Quality (CEQ), and the Department of Homeland Security as full participants in the NCADAC, albeit as non-voting members (see Footnote 1). Given that the full NCADAC agreed to make decisions by consensus rather than by vote, the limitation on voting by the 16 federal members was not as significant as it might otherwise have been. But having the federal agencies engaged as equal participants and encouraging them to make their scientific and other resources available to support the process was critical to achieving approval of the final document. Although they could not participate in the consensus decision to approve the final draft report, government scientists played important roles in providing scientific expertise on multiple topics and were active in author teams. This balance worked in favor of a more credible and defensible product. Without government input in the process of developing the draft NCA3 report, it is highly unlikely that a consensus could have been reached (or as easily reached) on the ultimate product. Without such a consensus, the document might not have been accepted and released as a government document.

5 Leadership

Not surprisingly, the NCA3 products and process reflected the values and experience of those who led it and of the 30 author teams (see Footnote 2) who were selected by the NCADAC. Running a process that had so much visibility and such high expectations can be a daunting task even with a small group of participants; the involvement of 60 people on the NCADAC and of hundreds to thousands more in authoring underlying documents raised the stakes substantially. As with both previous US assessments, navigating the scientific and policy issues that arose almost daily over a two-and-a-half-year period was a major challenge. The assignment was to develop an unassailable scientific document through a transparent and inclusive process while avoiding potential political pitfalls and practical irrelevance. Enlisting authors and reviewers who were as representative as possible of their respective expert or stakeholder communities and who had impeccable credentials was critical to the ultimate success of the endeavor. Balancing many different interests and scientific disciplines required a delicate hand and chapter authors who were willing to step outside of their comfort zone to experiment with new ways of learning and knowing.

The NCADAC was led by a trio of very experienced leaders. The chair, Dr. Jerry Melillo of the Marine Biological Laboratory at Woods Hole, co-led the two previous NCAs and has had a distinguished career as an ecologist spanning decades of work on climate-related topics. As an economist, co-chair Dr. Gary Yohe (Wesleyan University) provided important insight on costs and consequences of climate impacts, while also providing important linkages to the IPCC Fifth Assessment. Terese (T.C.) Richmond, the second co-chair and a natural resources lawyer from Seattle, represented stakeholder and private sector interests on the leadership team and worked to ensure that outcomes were useful for decision-makers. In many ways she took on the role of an ombudsman, ensuring that concerns of individual NCADAC members, authors, and staff were properly addressed.

Another element that contributed to meeting the strategic goals of the assessment was the willingness of the leadership to argue strongly for positions, yet compromise for the good of the process at the right moment. Given the ambitious expectations, there had to be trust among participants and an awareness that the collective outcome was more important than winning personal battles. Matching the capacity of the leadership to the nature of the challenge is important to the success of assessments, especially as the nature of these challenges changes over time.

The leadership also had to balance the ambitious goals of the NCADAC, author teams, and staff with what was actually “doable” from the perspective of the authors and the federal agencies. For example, NCADAC members suggested that each author team should have expert observers/assessment specialists who could assist with the consistent characterization of uncertainties, but this was not possible due to a lack of time and resources. Many decisions made during the assessment represented a compromise between an optimal approach and what was possible, balancing scheduling constraints with concerns about quality and accuracy.

6 Sources of knowledge

NCA3 took advantage of multiple sources of knowledge, ranging from traditional ecological knowledge of Indigenous Nations to the latest satellite technology. Recent advancements in understanding climate communication, public perceptions, and information systems were incorporated as well. Many participants noted the richness of the conversation that took place within author teams, due at least in part to the transdisciplinary nature of the assignment. Explicitly focusing on sources of risk and topics of greatest concern within regions and sectors, as opposed to starting with climate drivers, was very helpful in reframing conversations in ways that were more meaningful to decision-makers. A team of communication experts provided advice on a wide range of issues, not the least of which were how to explain complex issues simply and how to focus on communication outcomes that could reach a wide range of audiences. For example, there was an effort to make sure the graphics and associated captions painted a coherent picture that reinforced the text across the whole report, because many people learn visually or by examples rather than through reading text carefully.

A benefit of the large advisory committee was its topical diversity, providing the NCA3 with subject expertise and sources of information not only on climate science and regional and economic sector impacts but also other important topics and issues, such as decision support, adaptation, and mitigation. The wide range of strategies, participants, and contributors, along with the highly transparent approach to conducting this assessment, probably contributed to the overall credibility of the final report and its broad appeal across the US. Further, the strong emphasis on diversity on the NCADAC and the inclusivity of the engagement strategy may have contributed to the absence of legal challenges to date.

7 Documenting scientific findings and levels of certainty: traceable accounts

Another way to understand how different sources of knowledge were integrated into individual chapters of the NCA3 is through the traceable accounts, an NCA3 innovation designed to document the process that the authors used to reach their conclusions and describe their level of certainty. Authors were asked to go beyond standard referencing conventions that documented their scientific sources and describe how they selected the key issues, which literature they depended on most, and which scientific uncertainties are most important now and in the future. This highly transparent approach enhanced the clarity of the process and avoided heavy reliance on terms like “likely” and “virtually certain” used in the text of other assessments to characterize certainty in ways that most audiences either do not understand or interpret in widely different ways (Ekwurzel et al. 2011; Morgan et al. 2009). It will be interesting to see if subsequent assessment processes take advantage of this approach.

Inclusion of direct links to data used to support conclusions and to the references for each climate science graphic reinforced the robust nature of the report’s conclusions. Documenting both the thought processes and the data used built the credibility of the NCA3 and provided information of interest to more sophisticated users. Though this is hard to evaluate, it would be good to know whether potential critics of the NCA3 process accessed the data and found it convincing. In any case, the transparent documentation avoided the accusation, made about past assessments, that the science was a “black box.”

8 Coproduction and assessment

The term “coproduction of knowledge” is useful in describing mutual learning between scientists and stakeholders (Lemos and Morehouse 2005; Mauser et al. 2013). The theory behind it—that if a scientific product is intended to be used by decision-makers, the decision-makers need to be involved in the problem definition, the discussion of solutions, and creation of the product—was definitely supported in the context of this assessment. A primary motivation for collaborative knowledge production in an applied context is to facilitate access to the facts for decision-makers and to help scientists understand how that information is used. Identifying what motivates stakeholders to feel ownership and see value in the information is a challenge, but one way to enhance both the perception and the reality of relevance to decision processes is to engage them in generating that information. Experience in NOAA RISAs (e.g., Dilling and Lemos 2011; Lemos et al. 2014; McNie 2008) has shown that scientists tend to be viewed as more legitimate sources of information if they actively engage in long-term relationships with stakeholders, build their own understanding of the tacit knowledge of practitioners, and come to be trusted by them.

The concept of coproduction as an approach to engagement was reinforced by experience in the NCA3 effort: the NCA3 report itself served as a convening point for conversations between stakeholders and scientists. They negotiated over which topics to cover, what the evidence was, and where the remaining uncertainties were. As a result, communities formed that can be the core of a sustained assessment process (Buizer et al. 2013). An example of the community-building effort was the facilitated engagement of author teams in sorting through multiple technical input documents and peer-reviewed literature in order to agree on chapter key messages and a process for writing the supporting material (e.g., Moser and Davidson, Submitted for publication in this special issue).

Embedded in this concept of coproduction is the perception that how people are engaged in assessment processes, and who is engaged, are at least as important as the findings themselves. Strategic engagement with the specific stakeholders who are most likely to benefit from a conversation with scientists is an important place to start, but training scientists in how to be receptive to tacit knowledge of on-the-ground experts is at least as important. The coproduction approach should optimize the experience of the participants while also working toward the desired end-point of the research itself. However, the goal of achieving long-term trusted relationships in the process of assessment is not always attainable; not all researchers are prepared to invest the time and energy required to engage in useful ways with decision-makers and vice-versa.

Based on personal communications with members of the NCADAC, an interesting outcome of the NCA3 process was that the more participants were asked for their input about the assessment process, the more invested they became in it. For example, some NCADAC members who were initially skeptical about whether the process was workable became much more interested and supportive as they saw their own ideas bearing fruit, learned to value the input of others, and in some cases became more willing to dedicate more time and give input on options for the path forward. The early methodology workshops also fostered a more thoughtful and inclusive process. This primed the pump for the deliberate and ambitious coproduction process that subsequently developed.

The concepts of use-inspired research and decision support influenced the structure, framework, and components of the NCA3 report, including the selection of chapter topics and the directions to authors to focus on what was most at risk. For example, the explicitly cross-sectoral chapters (Energy, Water and Land; Urban Systems, Infrastructure, and Vulnerability; Biogeochemical Cycles; Rural Communities; Indigenous Peoples, Lands, and Resources; Land Use and Land Cover Change) illustrate the expansion from the narrow sectoral and disciplinary approach in previous assessments to one that embraced the complexity of real-world challenges. Similarly, the input from users resulted in strong direction to authors to focus on multiple stresses and issues that matter to communities, businesses, and policy-makers. All key characteristics of the report format (strictly limited chapter length, the inclusion of key messages for each chapter, a searchable web format, and use of strong graphics and images illustrating climate change impacts and responses) derived from the strong desire to make it both useful and used. Further, the way the report was delivered through partner networks and trusted intermediaries reflected an understanding of what makes information trusted and useful to stakeholders (Cloyd et al., Submitted for publication in this special issue).

9 Assessment and knowledge networks

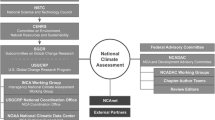

A guiding principle in the engagement strategy of the NCA3 was to build capacity for assessment by tapping into the strength of existing professional and private networks (USGCRP 2011). A “network of networks” approach was explicitly adopted, with the intent to rapidly expand outreach and the ability to harvest knowledge from external groups (Cloyd et al., Submitted for publication in this special issue). Knowledge networks, defined here as groups of people who have intersecting interests and who choose to engage with each other (e.g., via the Internet, social media or in person) to share information and knowledge about selected topics, are increasingly seen as a powerful means to address complex problems (Dyer and Hatch 2006; Jacobs et al. 2010; Eden 2011; Bidwell et al. 2013; Kirchhoff 2013). The most deliberate network-building effort of the NCA3 was the NCAnet, which now involves more than 150 organizations representing at least 100,000 members. (For a discussion of their importance in the NCA3 outreach strategy and helping to sustain the process, see Cloyd et al. (Submitted for publication in this special issue)).

The concept of knowledge networks also permeated the development of working groups of the NCADAC, including the Executive Secretariat. Within the overall umbrella of the NCADAC, each Secretariat member was assigned to organize particular activities that occurred within the purview of the NCADAC, including coordination of groups of chapters (e.g., regions, sectors); report review and response processes; engagement and communication; approach to the sectoral analyses; and guidance on characterizing uncertainty and “traceable accounts.” All Secretariat members also had assignments to ensure the internal consistency of the information across the 30 chapters of the report. Several members served as convening lead authors of chapter teams and most were authors on at least one chapter. Because they had roles as authors as well as leaders of the NCADAC, they could evaluate whether the teams were following the guidance that had been provided. This tiered approach to authorship and oversight was crucial to effectively managing a very complex system with multiple moving parts. In addition, deliberate community building within and between the author teams, the NCADAC, and the staff included social events that helped build personal and professional relationships. Arranging such events was difficult, given government travel restrictions, but a great deal was accomplished within the meetings that did take place.

10 Electronic innovation

From the beginning, key decision-makers for the NCA3 at NOAA (the supporting agency for the Federal Advisory Committee and home of the Technical Support Unit) and at the Office of Science and Technology Policy in the White House favored a “new generation” of assessment that was entirely electronic, because of the rapidly changing information world and the administration’s commitment to innovation. The plans for web-based delivery of the NCA3 strongly impacted both the product development and the experience of the authors. This was one of the first major government reports delivered via the Internet, and this mode greatly enhanced both accessibility of the products and traceability of the findings. Based on reactions from government and outside stakeholders, this approach was extremely successful and will influence future assessments.

Web-based delivery provides instant access through search engines on the web, ensuring that the NCA3 report can be regularly accessed by people seeking answers to climate-related questions, not just by those who already know what a National Climate Assessment is. The linked data also allow more sophisticated decision-makers to “look behind the conclusions” and directly access the evidence underlying them. Electronic delivery, including compatibility with Facebook and Twitter, also meant that a broader audience was engaged in the NCA3 rollout process.

The interagency Global Change Information System, developed as the underlying data platform for the NCA3, is intended to be the foundation of a long-term interagency investment of the USGCRP that would allow interoperability and data access for a wide array of potential uses, including support of some “live” indicators of change that could be updated more regularly than the quadrennial reports (Kenney and Janetos, Submitted for publication in this special issue; Waple et al., Submitted for publication in this special issue).

Other electronic platforms were important during the development and review of the assessment report. For example, chapter authors were provided with easily accessed electronic workspaces, which helped to facilitate draft development, author collaboration, and the assessment process itself. However, version control was a critical concern and required negotiated agreements about how authors, editors, and staff could amend chapters, especially in later iterations. Status spreadsheets were used to track responses to each of an estimated 10,000 review comments over the duration of the NCA3 process. This was very time-consuming and tedious, requiring intense central management and coordination. On the other hand, the comment-response process and the technical input reports (from the public) were received and documented through automatic online mechanisms and carefully tracked by the central NCA3 staff; these more automated systems worked extremely well.

11 Sources of tension and lessons learned

The NCA3 is simultaneously a summary of the state of knowledge of a changing climate in the US, a process for engaging researchers and stakeholders, and an assessment that depended on coproduction of knowledge between users and experts. The NCA3 was and is path-breaking in all three respects. But these aims are not easily reconciled, as the reflections below demonstrate.

There were many specific sources of tension, including the need for busy professionals, all volunteering their time, to meet deadlines while developing the highest-quality outcomes possible. For example, despite the collective agreement to prioritize the needs of managers and policy-makers for the NCA3 report, there were differences of opinion about how ambitious the report should be in moving beyond GCRA legal requirements to be as supportive as possible of decision-makers’ needs for scientific information. Instructions to authors on handling issues such as characterizing risk and levels of certainty were not followed to equal degrees by all of the teams—there were so many complexities in the process and so many guidance documents that some authors moved forward without explicitly following the guidance. Further, there were ongoing concerns about internal consistency across the 840 pages of the final report. A final challenge was ensuring readability and consistency of the document given the influence of 300 individuals in drafting it. In the end, all of these concerns were resolved, resulting in unanimous approval by the NCADAC of the final report. This outcome was primarily due to the hands-on efforts of the NCADAC leadership and NCA3 staff developing a consensus approach to each unresolved issue with the affected authors. Understanding these process-related tensions and preparing for them can minimize the challenges for future assessments.

Use of non-traditional material in the NCA3 report also posed challenges. Although the NCADAC agreed from the beginning that it was important to incorporate experiential knowledge in addition to standard peer-reviewed literature, there were ongoing debates about exactly how to do this. In many cases the quality of the data was indisputable because it derived from very highly reviewed or vetted government sources even if not from official “peer-reviewed” literature. However, even in the context of the NCADAC’s publicly and federally approved guidance document on how to handle information quality, there were questions from federal reviewers about several of the sources used. An important innovation in the NCADAC guidance given on information quality was the requirement to make information use consistent with the quality of the data. For example, information used as illustrations and case studies does not require the same kind of academic review that sources of major science conclusions do (USGCRP 2012). Given known challenges in all review processes, including peer-review, the status of peer-reviewed literature relative to other sources is worth discussing carefully. Future assessments will need to address this problem and to identify which data are critical to include even if not peer-reviewed.

There were also lessons learned by physical scientists about handling the evolving understanding of climate drivers and impacts. One issue was how to include new scientific insights that developed over the course of the assessment. The most important debate had to do with handling the new Coupled Model Intercomparison Project Phase 5 (CMIP5) inputs, which were not available early enough in the NCA3 process to be useful for impact assessment. However, the science chapter authors were adamant that this material had to be included if the NCA3 were to be taken seriously (Kunkel et al., submitted for publication in this special issue). In turn, authors of impacts chapters insisted that studies that did not use the standard climate scenarios used in NCA3 should be eligible for inclusion. The NCA leadership set up special subcommittees to address science issues where there were significant debates so that broadly acceptable outcomes could be negotiated.

Extreme events occurring during the NCA3 process (including Superstorm Sandy) raised new issues about prediction and the physical mechanisms of climate impacts. They also highlighted the importance of interdisciplinary work and cascading effects, especially linkages among systems and the vulnerability of urban infrastructure, reinforcing earlier conclusions about the importance of systems thinking and the potential for catastrophic failures.

12 Integration of knowledge and sustained assessment

A number of assessment authors and NCADAC members indicated in personal communications that they were willing to stay engaged throughout the relatively arduous NCA3 process in part because they expected to build the infrastructure and capacity for future assessments, not just create a single report. A principal frustration voiced by participants in previous assessments was that assurances about future engagement with them were not realized. Several participants mentioned that if this were to happen again after the explicit commitments made in the NCA3 process, future engagement with stakeholders as well as scientists would be seriously impaired. Many NCA3 participants hope that the now-trained array of “assessors” across the country will assure that some form of assessment activity will continue even if federal leadership for a sustained assessment does not materialize.

Despite this investment in assessment capacity, some teams were better at facilitating inter- and trans-disciplinary conversations than others. Previous experience in bridging the gap between science and decision-making was one criterion in the selection of authors and members of the NCADAC. Inclusion of industry, government, and NGO representatives in the NCADAC and in chapter teams also helped to ensure that the topics were relevant to decision-making and the degrees of certainty about the findings were clear and defensible. An example of this helpful input from stakeholders is evident in the relatively major changes that evolved in the decision-support chapter (Moss et al. 2014), from a relatively theoretical public draft to a final version that included more examples and conclusions based on managers’ experience.

Integration of knowledge across sectors and regions was greatly enhanced in the rigorous review process. Up to thirty versions of some of the chapters were prepared over an 18-month period, each responding to new input from author teams, the NCADAC, the Executive Secretariat, external chapter reviewers, federal agencies, the National Academy of Sciences, the White House, and the public. In some cases, inconsistencies were identified across chapters that led to important interdisciplinary discussions and new ways to clarify, explain, and defend scientific understanding. In others, the conclusion was that more research was required to resolve the issues at hand. This thorough review process resulted in a much more robust report and far less criticism than might otherwise have been expected.

13 Staff contributions

To ensure effective communications across chapter teams and among the many players involved, NCA staff and editors were assigned to author teams and NCADAC working groups to support them in numerous ways, such as meeting planning or facilitation, assistance with graphics and writing, filling important knowledge gaps, coordination across chapters, and audience-tailored translation of scientific text. The fact that the professional staff had significant content knowledge, good writing and communication skills, and authority to engage with the author teams as needed was an important component of success and should be replicated in future assessments.

Central staffing for the NCA3 included experienced professionals (at the USGCRP office and the NOAA Technical Support Unit) with expertise in a wide range of sectoral and scientific topics; their contributions to the overall process were significant. The level of staff support for author teams was much more visible in this process than in other assessments. High-level staff commitment and quality input often helped authors meet key deadlines. Graduate students and others from partner organizations and universities provided additional support to many teams. The effort, motivation, and capacity of staff members were widely noted as exceptional in conversations, in public meetings, and by authors and NCADAC members in the final evaluation questionnaire. In such complex processes there is a need for trusted staff who can meet expectations without biasing the outcomes.

A critical factor in the ultimate success of NCA3 was the careful work of those who edited and prepared the document for electronic delivery, designed the graphics, trained the participants for the deluge of media requests, conducted town halls to share the draft in all of the regions of the US, and checked and rechecked the responses to comments. A notably smooth rollout process in May of 2014 (and beyond) resulted from years of planning and preparation, the help of the NCAnet, and the huge contributions of climate communications professionals and their networks. The many people involved in every aspect of the report development and review meant that there had been a great deal of socializing of the contents prior to the release, ensuring high credibility and very limited criticism of the findings.

14 Conclusions

Learning from assessment processes involves personal and collective experience and judgment; there is a human element of assessments that is not typically addressed in academic literature. For the NCA3, inclusion of a broad array of people who both study climate change and experience it in their personal lives helped in identifying issues that really matter. Many stakeholders viewed building a sustained assessment process as an investment in relevance in a decision context. Other critical ingredients of decision relevance included using interdisciplinary, risk-based approaches, providing transparent access to data and evidence, and framing controversial topics in unbiased ways. Strong leaders and staff who understand what is achievable, who can harness the power of knowledge networks, and who can benefit from technology and electronic innovations contributed to the success of assessment processes and products.

The NCA3 was more than a climate assessment; it was also an experiment in testing theories of coproduction of knowledge. The development of author teams that included both scientists and decision-makers was a central tenet of the assessment approach and allowed integration of multiple kinds and sources of knowledge. Explicitly incorporating coproduction had two benefits: the potential for more insightful information about impacts and increased relevance to decisions. The highly engaged NCA3 staff and leadership also advanced knowledge integration and sharing across the assessment enterprise.

Finally, encouraging the use of existing sectoral, regional, professional, and academic networks as partners (particularly the NCAnet “network of networks”) increased learning and made the idea of a sustained assessment more realistic. The commitment to building an assessment focused on mutual learning, transparency, and engagement contributed to the credibility and legitimacy of the product, to the saliency of its contents to a broad public, and to the absence (to date) of legal challenges.

Notes

The Council on Environmental Quality provides oversight on regulatory and policy matters related to natural resources and the environment. It is parallel to the Office of Science and Technology Policy within the White House. The Department of Homeland Security—which includes the Federal Emergency Management Agency—did not exist at the time of the formation of the USGCRP in 1990, but is now very engaged in climate-related matters and chose to join the NCADAC as a non-USGCRP agency.

Each chapter was led by two coordinating lead authors and typically had 6 additional authors, resulting in a total of approximately 240 primary plus ~60 contributing authors of the whole report.

References

Bidwell D, Dietz T, Scavia D (2013) Fostering knowledge networks for climate adaptation. Nat Clim Chang 3:610–611

Buizer JL, Fleming P, Hays SL, Dow K, Field CB, Gustafson D, Luers A, Moss RH (2013) Report on preparing the nation for change: Building a sustained National Climate Assessment process, National Climate Assessment and Development Advisory Committee. http://downloads.globalchange.gov/nca/NCADAC/NCADAC_Sustained_Assessment_Special_Report_Sept2013.pdf

Carr A, Wilkinson R (2005) Beyond participation: boundary organizations as a new space for farmers and scientists to interact. Soc Nat Resour 18(3):255–265

Cloyd E, Moser S, Maibach E, Maldonado J, Chen T (submitted for this issue) Engagement in the Third US National Climate Assessment: Commitment, Capacity, and Communication for Impact. Climatic Change

Dilling L, Lemos MC (2011) Creating usable science: opportunities and constraints for climate knowledge use and their implications for science policy. Global Environ Chang 21(2):680–689. doi:10.1016/j.gloenvcha.2010.11.006

Dyer JH, Hatch NW (2006) Relation-specific capabilities and barriers to knowledge transfers: creating advantage through network relationships. Strateg Manag J 27(8):701–719

Eden S (2011) Lessons on the generation of usable science from an assessment of decision support practices. Environ Sci Policy 14:11–19. doi:10.1016/j.envsci.2010.09.011

Ekwurzel B, Frumhoff PC, McCarthy JJ (2011) Climate uncertainties and their discontents: increasing the impact of assessments on public understanding of climate risks and choices. Clim Chang 108:791–802

Guston DH (2001) Boundary organizations in environmental policy and science: an introduction. Sci Technol Hum Values 26(4):399–408

Hoppe R (2010) Lost in translation? Boundary work in making climate change governable. In: Driessen P, Leroy P, van Vierssen W (eds) From climate change to social change: perspectives on science-policy interfaces. International Books, Utrecht, pp 109–130

Jacobs K, Lebel L, Buizer J, Addams L, Matson P et al (2010) Linking knowledge with action in the pursuit of sustainable water-resources management. Proc Natl Acad Sci. doi:10.1073/pnas.0813125107

Kenney M, Janetos A (submitted for this issue) Building an Integrated National Climate Indicator System. Climatic Change

Kirchhoff C (2013) Understanding and enhancing climate information use in water management. Clim Chang 119(2):495–509

Kunkel K, Moss R, Parris A (submitted for this issue) Innovations in Science and Scenarios for Assessment. Climatic Change

Lemos MC, Morehouse BJ (2005) The co-production of science and policy in integrated climate assessments. Global Environ Chang 15:57–68. doi:10.1016/j.gloenvcha.2004.09.004

Lemos MC, Kirchhoff CJ, Ramprasad V (2012) Narrowing the climate information usability gap. Nat Clim Chang 2:789–794. doi:10.1038/NCLIMATE1614

Lemos MC, Kirchhoff CJ, Kalafatis SE, Scavia D, Rood RB (2014) Moving climate information off the shelf: boundary chains and the role of RISAs as adaptive organizations. Weather Clim Soc 6(2):273–285

Mauser W, Klepper G, Rice M, Schmalzbauer BS, Hackmann H, Leemans R, Moore H (2013) Transdisciplinary global change research: the co-creation of knowledge for sustainability. Curr Opin Environ Sustain 5(3–4):420–431

McNie EC (2008) Co-producing useful climate science for policy: Lessons from the RISA Program. Ph.D. dissertation, University of Colorado at Boulder

Melillo JM, Richmond TC, Yohe GW (eds) (2014) Climate change impacts in the United States: The Third National Climate Assessment. U.S. Global Change Research Program, 841 pp. (NCA 3 report)

Morgan MG, Dowlatabadi H, Henrion M, Keith D, Lempert R, McBride S, Small M, Wilbanks T (2009) Best practice approaches for characterizing, communicating, and incorporating scientific uncertainty in decisionmaking. CCSP 5.2, A report by the Climate Change Science Program and the Subcommittee on Global Change Research. National Oceanic and Atmospheric Administration, Washington

Moser SC, Davidson MA (submitted for this issue) Coastal Assessment: The Making of an Integrated Assessment. Climatic Change

Moss R, Scarlett PL, Kenney MA, Kunreuther H, Lempert R, Manning J, Williams BK, Boyd JW, Cloyd ET, Kaatz L, Patton L (2014) Ch. 26: Decision support: Connecting science, risk perception, and decisions. In: Melillo JM, Richmond TC, Yohe GW (eds) Climate change impacts in the United States: The Third National Climate Assessment. U.S. Global Change Research Program, pp 620–647. doi:10.7930/J0H12ZXG

National Climate Assessment. NCA report series, vols. 1–9. All volumes published online by the United States Global Change Research Program, accessible at http://www.globalchange.gov/engage/process-products/NCA3/workshops

National Research Council (2007) Evaluating progress of the U.S. Climate Change Science Program: methods and preliminary results. National Academy Press, Washington, DC

U.S. Global Change Research Program (2011) National Climate Assessment Interim Strategy—Summary (Final) Accessed at http://www.globalchange.gov/sites/globalchange/files/NCADAC-May2011-Interim-Strategy.pdf

U.S. Global Change Research Program (2012) Guidance on information quality assurance to chapter authors of the National Climate Assessment questions tools. Accessed at http://www.globalchange.gov/sites/globalchange/files/Question-Tools-2-21-12.pdf

Waple AM, Champion S, Kunkel K, Tilmes C (submitted for this issue) Innovations in Information Management and Access for Assessments. Climatic Change

Additional information

The authors are members of the development team for the Third National Climate Assessment, specifically, the Director (Jacobs) and a member of the Executive Secretariat (Buizer), and thus are not unbiased observers.

This article is part of a special issue on “The National Climate Assessment: Innovations in Science and Engagement” edited by Katharine Jacobs, Susanne Moser, and James Buizer.

Cite this article

Jacobs, K.L., Buizer, J.L. Building community, credibility and knowledge: the third US National Climate Assessment. Climatic Change 135, 9–22 (2016). https://doi.org/10.1007/s10584-015-1445-8

DOI: https://doi.org/10.1007/s10584-015-1445-8