Abstract

Probably without exception, atmospheric numerical models use high vertical resolution close to the surface, with gradually reduced resolution higher up. This seems an obvious choice given the importance and complexity of processes close to the ground, and the cost of using high near-surface resolution throughout the model atmosphere. But the choice has disadvantages that deserve attention. One is that numerical schemes generally perform better with uniform resolution, in particular when the finite-volume approach is used. Another is that, with the usual terrain-following vertical coordinate, horizontal flow encountering high topography is subjected to severe changes in resolution. An unintended experiment on the impact of these disadvantages is a by-product of the so-called “parallel” run of two models at the U.S. National Centers for Environmental Prediction in 2006, in which the operational Eta model was compared against its intended replacement, the NMM model. In that experiment, which lasted more than four months, the Eta model forecast 10-m wind speed and 2-m temperature over the mostly high topography of the western United States more accurately than the NMM. It did so despite its much poorer vertical resolution over that area and physical parametrizations that were not very different. It is suggested that the main cause of this result is the severe change in NMM grid-cell resolution that horizontal flow undergoes when encountering high topography with the terrain-following coordinate.


1 Introduction

To better resolve the complex atmospheric processes near the ground, atmospheric models normally use higher vertical resolution close to the surface, gradually relaxing the resolution with increasing altitude. This is simple to do with the traditional terrain-following vertical coordinate, but it becomes an issue with coordinates that intersect topography. Because they can deal with steep model terrain, coordinates intersecting topography nevertheless appear to be receiving increasing attention (e.g., Chow et al. 2019; Mesinger and Veljovic 2020; Arthur et al. 2020). How much of a disadvantage, then, if any, is their inability to use increased resolution next to the model surface?

On this issue, note the words of Thuburn (2011) referring to coordinates that intersect topography: “A disadvantage remains that vertical resolution in the boundary layer becomes reduced at mountain tops as model grids are typically vertically stretched at higher altitudes.” More recently, Chow et al. (2019) write that, in contrast to terrain-following coordinates, “non-conforming grid methods lack uniform grid spacing above the terrain, thus accurate implementation of models based on similarity theory can be difficult.”

The situation, though, is not so simple. In addition to the vertical resolution, the results depend on the resolution surrounding a model surface-layer cell and on the numerical schemes in place. A good illustration of that complexity is the discovery of a problem with the formally very attractive Lorenz–Arakawa finite-difference vertical advection scheme, Eq. 2 of Mesinger et al. (2012). Despite its formal appeal, the scheme was found (loc. cit., Fig. 4) to permit physically wrong advection from below ground. This was avoided by replacing it with a finite-volume scheme. In that scheme, grid-point values are considered to represent the average for the mass of air inside the grid cell, and not values at the grid points themselves (e.g., Durran 2010, Sect. 5.2). With grid-cell values changed in a Lagrangian way by advection from neighbouring cells, no physically wrong advection was possible.
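The finite-volume bookkeeping described above can be sketched as a single donor-cell (upwind) step for a column of layers of unequal mass. This is a hypothetical illustration, not the scheme of Mesinger et al. (2012). The function name `fv_upwind_step` and the first-order upwind choice are assumptions. Layer values are mass-weighted cell averages that change only through fluxes across the layer interfaces. The flux through the ground is fixed at zero, so nothing can be advected from below ground.

```python
def fv_upwind_step(theta, dp, w, dt):
    """One donor-cell (upwind) finite-volume step for a column of layers.

    theta : layer (cell-average) values, index 0 = lowest layer
    dp    : layer masses, e.g. pressure thicknesses
    w     : mass fluxes at the n - 1 interior interfaces; w[k] > 0 is an
            upward flux from layer k into layer k + 1
    The fluxes through the ground and through the model top are zero,
    so no quantity can enter the column from below ground.
    """
    n = len(theta)
    mflux = [0.0] * (n + 1)  # mflux[k]: mass flux through the interface below layer k
    qflux = [0.0] * (n + 1)  # qflux[k]: flux of mass-weighted theta through that interface
    for k in range(1, n):
        wk = w[k - 1]
        donor = theta[k - 1] if wk > 0 else theta[k]  # the upwind cell supplies the value
        mflux[k] = wk
        qflux[k] = wk * donor
    # Each cell changes only through the fluxes across its own two interfaces
    dp_new = [dp[k] + dt * (mflux[k] - mflux[k + 1]) for k in range(n)]
    theta_new = [
        (dp[k] * theta[k] + dt * (qflux[k] - qflux[k + 1])) / dp_new[k]
        for k in range(n)
    ]
    return theta_new, dp_new
```

For example, `fv_upwind_step([300.0, 305.0, 310.0], [20.0, 50.0, 100.0], [2.0, -1.0], 1.0)` gives layer masses [18.0, 53.0, 99.0] and values of about [300.0, 304.91, 310.0]. The lowest layer only exports upward and receives nothing from below ground, and the column total of mass times value is unchanged.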

An unintended experiment on the issues at hand was the 2006 “parallel” run comparing the results of the then-operational Eta model of the National Centers for Environmental Prediction (NCEP) with those of its intended replacement, the Nonhydrostatic Mesoscale Model (NMM). As background for inspecting the surface-layer results of that parallel, in the next section we summarize the boundary-layer parametrizations of the two models. This is followed by a section on the purpose and course of the NCEP 2006 parallel run, which lasted more than four months. That run compared the operational Eta system with its planned replacement, “WRF-NMM” (Weather Research and Forecasting NMM), which used a newly developed Gridpoint Statistical Interpolation (GSI) analysis system. In Sect. 4 we display the surface-layer results of this comparison, as available in DiMego (2006), along with some additional verification numbers. We end this short paper with concluding comments in Sect. 5.

2 Boundary-Layer Parametrizations of the Eta Model and NMM

To introduce this section, it should be pointed out that the NMM model was derived from the Eta model. This followed a decision by NCEP management in summer 2002 to abandon the step-topography eta coordinate in favour of the traditional terrain-following “sigma”. More details on that decision can be found in Mesinger and Veljovic (2017). Comprehensive reports on the boundary-layer and turbulence parametrizations in place when the Eta model was being prepared for its expected operational use can be found in Janjić (1990, 1994). The parametrizations consisted of a viscous, or molecular, sublayer next to the surface, treated differently over land and ice than over water. This was coupled to the Mellor–Yamada level 2 (MY-2) parametrization in the remaining part of the lowest model layer, and to the Mellor–Yamada level 2.5 (MY-2.5) parametrization above.

The Janjić (1994) paper, however, reflects the situation several years before its publication, with only remarks on changes made in the early 1990s. Real-time use of the code during winter 1990/91 revealed large overestimates of surface fluxes over the warm Gulf Stream water off the U.S. New England coast. These were found to be due to the finite-difference-type prescription of the master length scale, l, used for the MY-2 vertical transports in the lowest layer. These transports were considered to take place in the bottom half of that layer, and the master length scale was prescribed as 0.25 of its value at the top of the layer. Replacing it with the master length scale of the “l bulk” scheme of Mesinger (Mesinger and Lobocki 1991, also in Mesinger 2010) successfully addressed the overestimation problem.

Another area of development following the state documented in Janjić (1990, 1994) was that of the MY-2.5 master length scale. That state was based on the Blackadar (1962) relation for the length scale, l:

\[ l = \frac{\kappa z}{1 + \kappa z / l_0}, \qquad (1) \]

This relation was used subsequently by Mellor and Yamada (1974), but with the additional stipulation that the asymptotic value of l for height z → ∞, l0, is

\[ l_0 = \alpha \, \frac{\int_0^\infty z\, q \, dz}{\int_0^\infty q \, dz}, \qquad (2) \]

Here α is an empirical constant, q the square root of twice the TKE (turbulence kinetic energy), and κ the von Kármán constant, 0.4. Mellor and Yamada (1974) set α = 0.1. Janjić (1994) reports on experiments with the Galperin et al. (1988) restriction of the value of l0. He found this restriction unnecessary once (2) is checked for cases in which q(z) is close to zero over the entire column, for which (2) gives a value of l0 that is clearly not intended. Additionally, for improved performance he sets α = 0.075 over water and 0.2 over land.
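The Blackadar relation, l = κz/(1 + κz/l0), and the Mellor and Yamada (1974) asymptotic value, l0 = α∫zq dz / ∫q dz, can be sketched as below. The integrals are evaluated on a discrete profile with the trapezoidal rule. The function names and the threshold `q_min` used for the near-zero-q check mentioned by Janjić (1994) are hypothetical choices made for illustration.

```python
KAPPA = 0.4  # von Karman constant, as in the text


def asymptotic_length(z, q, alpha=0.1, q_min=1e-6):
    """Mellor-Yamada (1974) l0 = alpha * int(z q dz) / int(q dz), trapezoidal rule.

    z, q  : heights (m, ascending) and q values (m/s) at those heights
    alpha : empirical constant (0.1 in Mellor and Yamada 1974)
    Returns None when q is close to zero over the whole column, where the
    ratio is not meaningful; q_min is an assumed threshold.
    """
    if max(q) < q_min:
        return None
    num = den = 0.0
    for k in range(len(z) - 1):
        dz = z[k + 1] - z[k]
        num += 0.5 * (z[k] * q[k] + z[k + 1] * q[k + 1]) * dz
        den += 0.5 * (q[k] + q[k + 1]) * dz
    return alpha * num / den


def blackadar_length(z, l0):
    """Blackadar (1962): l = kappa z / (1 + kappa z / l0)."""
    return KAPPA * z / (1.0 + KAPPA * z / l0)
```

With q uniform over a 1000-m column sampled every 100 m, the asymptotic length equals α times the mean height, 0.1 × 500 m = 50 m. `blackadar_length` then grows like κz near the ground and approaches 50 m aloft. A column with q ≈ 0 everywhere returns `None` instead of a spurious l0.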

Because this formulation is inadequate for a model atmosphere extending far above the planetary boundary layer, it was replaced by a new one. In addition to distance from the surface, the new formulation accounts for stability, the local value of TKE, and the model layer thickness. It is based on relations now used, in one form or another, in many other models (Mesinger 1993b).

An additional change resulted from noticing inadequate transports between layers of different depth close to the ground. These occurred when master length scales were prescribed Blackadar-style at layer interfaces, as functions of the interface distance from the surface. Given the finite-volume numerics of the Eta model, it was found appropriate, and also beneficial, to define the master length scale for layers. The interface value is then the average of the values of the two layers sharing the interface (Mesinger 1993b, also in Mesinger 2010).
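The difference between interface-defined and layer-defined length scales can be sketched as follows. One assumption is loudly flagged: the source does not say how the layer value is computed, so here it is taken, hypothetically, as the Blackadar value at each layer's mid-height. The interface value is the average of the two layers sharing the interface, as described above. The function names are illustrative.

```python
KAPPA = 0.4  # von Karman constant


def blackadar(z, l0):
    """Blackadar (1962) master length scale at height z (m)."""
    return KAPPA * z / (1.0 + KAPPA * z / l0)


def interface_lengths_from_layers(z_interfaces, l0):
    """Interface length scales as averages of the two layers sharing each interface.

    z_interfaces : interface heights above the surface (m), ascending, starting at 0
    Layer values are (by assumption) Blackadar values at layer mid-heights.
    Returns values for the interior interfaces only.
    """
    layer_l = [
        blackadar(0.5 * (z_interfaces[k] + z_interfaces[k + 1]), l0)
        for k in range(len(z_interfaces) - 1)
    ]
    return [0.5 * (layer_l[k - 1] + layer_l[k]) for k in range(1, len(layer_l))]
```

For layers 20, 40, and 80 m deep (interfaces at 0, 20, 60, and 140 m) and a very large l0, the layer-based interface values are about 10 m and 28 m. The point values κz at the same interfaces are 8 m and 24 m. The layer-based values thus carry some of the larger length scale of the thicker layer above into the exchange across each interface.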

Another Eta turbulence-closure development of that time was the discovery of a major oversight in the original MY-2.5 treatment of the parametrization’s realizability problem. That treatment solved the problem by clipping the MY-2.5 shear and stability functions GM and GH, as done by Mellor and Yamada (1982) and others, including Janjić (1990, Eq. 3.9). However, Mesinger (1993a) noted that this is equivalent to artificially reducing the master length scale in the numerator of the TKE generation term, but not in the denominator. This unphysical treatment severely suppressed the generation of turbulence above the boundary layer. Instead, as reported by Mesinger (1993a, 2010), the consistent approach is to impose an upper limit on the MY-2.5 master length scale.
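The numerator/denominator argument can be made explicit in a short sketch. It assumes the standard MY-2.5 forms of Mellor and Yamada (1982) and considers shear production only. Here \(\mathbf{V}\) is the horizontal wind and \(S_M\) the stability function for momentum. The cap \(l_{\max}\) is defined here, for illustration, as the length scale at which \(G_M\) equals its clipping value \(G_M^{\max}\). Written in terms of \(G_M\), the production term contains l both inside \(G_M\) and in the factor \(q^3/l\):

```latex
\begin{aligned}
G_M &= \frac{l^2}{q^2}\left|\frac{\partial \mathbf{V}}{\partial z}\right|^2,
\qquad
P_s = l\,q\,S_M\left|\frac{\partial \mathbf{V}}{\partial z}\right|^2
    = \frac{q^3}{l}\,S_M\,G_M,\\
P_s^{\rm clip} &= \frac{q^3}{l}\,S_M\,G_M^{\max}
\qquad \text{($G_M$ clipped, $l$ unchanged)},\\
P_s^{\rm cap} &= \frac{q^3}{l_{\max}}\,S_M\,G_M^{\max},
\qquad
l_{\max} = \frac{q\,(G_M^{\max})^{1/2}}{|\partial \mathbf{V}/\partial z|}
\qquad \text{($l$ capped)},\\
\frac{P_s^{\rm clip}}{P_s^{\rm cap}} &= \frac{l_{\max}}{l} < 1 .
\end{aligned}
```

Clipping \(G_M\) at fixed l thus reduces the effective l only where it appears in the numerator (inside \(G_M\)), while the denominator of \(q^3/l\) keeps the full l. Where l is large, as above the boundary layer, the clipped production is therefore smaller, by the factor \(l_{\max}/l\), than with a consistently capped length scale. Dissipation and buoyancy terms are left out of this sketch.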

Two upgrades of the Eta boundary-layer code were reported at the 1996 NWP symposium in Norfolk, Virginia. One (Janjic 1996) was the introduction of the viscous sublayer over land, following a theoretical analysis by Zilitinkevich (1995). This analysis leads to a dependence of the roughness length for temperature, \({z}_{0{\rm T}}\), on the roughness length for momentum, \({z}_{0M}\):

\[ {z}_{0{\rm T}} = {z}_{0M}\exp\left(-{A}_{0}\sqrt{\frac{{u}_{*}{z}_{0M}}{\nu}}\right), \qquad (3) \]

where \({u}_{*}\) is the friction velocity, ν the kinematic molecular viscosity, and \({A}_{0}\) an empirical constant. Zilitinkevich refers to data that result in a value of \({A}_{0}=0.8\). Using this or a similar value gives roughness lengths for temperature much smaller than those for momentum. This qualitatively agrees with, for example, experimental data displayed by Bougeault (1997, Fig. 7). Several other studies of the ratio of \({z}_{0T}\) to \({z}_{0M}\) were made at about that time, e.g., Chen et al. (1997). Chen et al. (1997) specifically looked at the performance of (3) using three parametrizations of the surface layer and two sets of experimental data. They found that the choice of parametrization had little impact. Using κC in place of \({A}_{0}\) in (3), they decided to implement (3) over land in the operational Eta model with C = 0.1, amounting to \({A}_{0}=0.04\).
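Relation (3) with \(A_0 = \kappa C\), as implemented following Chen et al. (1997), can be evaluated in a few lines. This is a minimal sketch. The default kinematic viscosity of air, 1.5 × 10⁻⁵ m² s⁻¹, and the sample roughness length and friction velocity are assumed typical values, not values from the source.

```python
import math

KAPPA = 0.4  # von Karman constant


def z0t_zilitinkevich(z0m, ustar, c=0.1, nu=1.5e-5):
    """Thermal roughness length from (3) with A0 = kappa * C.

    z0m   : momentum roughness length (m)
    ustar : friction velocity (m/s)
    c     : Zilitinkevich constant C (0.1 as first implemented in the Eta model)
    nu    : kinematic molecular viscosity (m^2/s); 1.5e-5 is an assumed value for air
    """
    re_star = ustar * z0m / nu  # roughness Reynolds number u* z0M / nu
    return z0m * math.exp(-KAPPA * c * math.sqrt(re_star))
```

For \(z_{0M}\) = 0.1 m and \(u_*\) = 0.3 m s⁻¹, the roughness Reynolds number is 2000 and the sketch gives \(z_{0{\rm T}}\) of about 0.017 m, roughly a sixth of \(z_{0M}\). With \(A_0 = 0.8\) the ratio would be far smaller still.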

With (3) coupled to surface-layer parametrizations, one concern is situations in which the standard Monin–Obukhov (M–O) surface-layer parametrization breaks down or becomes inapplicable. These were reviewed by Mahrt (1999). In addition, difficulties arise in cases in which the parameters of (3) are not physically well posed (Mahrt 1996; Mahrt et al. 1997; Sun et al. 1999).

Another planetary-boundary-layer upgrade reported at the same NWP symposium was the introduction of a form-drag parametrization, to account for the impact of subgrid-scale topography within the model grid cell (Mesinger et al. 1996). This was done using the approach introduced by Mason (1986) and tested by Georgelin et al. (1994) against data of the Pyrenees Experiment. It involves an “effective roughness length”, \({z}_{0}^{\mathrm{eff}}\), representing the wind-direction-dependent drag due to orographic obstacles inside the grid cell. It is calculated for the four cardinal directions and interpolated to the actual wind direction.
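The interpolation step can be sketched as follows. Two assumptions are not from the source: the four values are taken to apply to winds blowing from N, E, S, and W, and they are interpolated linearly in wind direction between the two neighbouring cardinal directions. The function name and the dictionary layout are hypothetical.

```python
def z0_eff_for_direction(z0_cardinal, wind_dir_deg):
    """Interpolate an effective roughness length to an arbitrary wind direction.

    z0_cardinal  : dict with keys 'N', 'E', 'S', 'W' giving z0_eff (m) for winds
                   from those directions (hypothetical convention)
    wind_dir_deg : meteorological wind direction in degrees (direction the wind
                   blows from)
    Linear interpolation in angle is an assumption made for illustration.
    """
    order = ['N', 'E', 'S', 'W']        # located at 0, 90, 180, 270 degrees
    d = wind_dir_deg % 360.0
    k = int(d // 90.0)                  # sector index, 0..3 (4 only if d rounds to 360)
    frac = (d - 90.0 * k) / 90.0        # fractional distance to the next direction
    i = k % 4
    lo = z0_cardinal[order[i]]
    hi = z0_cardinal[order[(i + 1) % 4]]
    return (1.0 - frac) * lo + frac * hi
```

For example, with values 0.5, 1.0, 2.0, and 1.5 m for N, E, S, and W, a north-westerly wind (315°) gets 1.0 m, halfway between the W and N values.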

3 The 2006 NCEP Parallel Test

Our intention here is to present surface-layer results, to the extent available and judged relevant, of the 2006 parallel test. That test compared the NCEP WRF NMM model, using a GSI data assimilation system (Kleist et al. 2009), with the Eta model, using its 3DVAR-based data assimilation system, EDAS. As early as 2002, the so-called HiResWindow nest runs of the 10-km Eta model were replaced by those of an 8-km NMM, as summarized by Mesinger and Veljovic (2017). This was done along with a commitment of NCEP/EMC (Environmental Modeling Center) to push for the replacement of the operational Eta by the NMM. The NMM was recoded from the Eta to use the WRF coding infrastructure and the traditional terrain-following vertical coordinate, an option of the Eta code.

There were no changes to the Eta dynamics after 2002, beyond a change in cloud physics and radiation in July 2003. Meanwhile, just about all regional modelling efforts of NCEP/EMC went into adjusting the NMM code to improve its results relative to the Eta model for an obligatory pre-implementation parallel test (Mesinger and Veljovic 2017). This test was eventually run for the period 1 January–22 May 2006, with the results reported in DiMego (2006).

The test was run with identical domain size and horizontal resolution, and the same number of vertical layers. Even so, the different vertical coordinates implied a drastic difference in the distribution of vertical resolution (DiMego 2006, slide 10). The Eta model used a 20-m layer thickness at sea level, with its layer thicknesses in terms of pressure increasing with height. The NMM had a 40-m thickness at sea level, with its layer thicknesses in terms of pressure decreasing with height up to 420 hPa, its interface with the remaining 18 pressure layers above. With this distribution, over the U.S. Great Plains, at elevations rising to about a mile, the surface-layer thickness of the NMM became less than 35 m, while that of the Eta model increased to more than 150 m.
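The NMM figure can be roughly checked with a back-of-the-envelope sketch. It assumes that the lowest NMM layer keeps a fixed fraction of the column mass between the surface and 420 hPa, as in a sigma layer. It also assumes hydrostatic balance with the same temperature at sea level and over the elevated site, so that layer depth scales with pressure thickness divided by surface pressure and the temperature cancels. The function name is hypothetical. The value of roughly 835 hPa for one mile of elevation is the standard-atmosphere pressure, used here only as an illustrative input.

```python
def lowest_sigma_layer_depth(ps, dz_sea=40.0, ps_sea=1013.0, p_top_sigma=420.0):
    """Approximate depth (m) of the lowest sigma layer for surface pressure ps (hPa).

    Assumptions (illustrative only):
    - the layer's pressure thickness is a fixed fraction of (ps - p_top_sigma),
      calibrated so that the layer is dz_sea metres deep at ps_sea;
    - hydrostatic balance with equal temperature at both sites, so depth is
      proportional to pressure thickness divided by ps (temperature cancels).
    """
    dp_ratio = (ps - p_top_sigma) / (ps_sea - p_top_sigma)  # ratio of layer pressure thicknesses
    density_ratio = ps / ps_sea                              # ideal gas at equal temperature
    return dz_sea * dp_ratio / density_ratio
```

At a surface pressure of about 835 hPa, the sketch gives roughly 34 m, consistent with the “less than 35 m” quoted above. The Eta value cannot be reproduced this way, because with the step coordinate the lowest layer over elevated terrain is whichever eta layer sits on that step.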

An additional issue requiring attention is that of possible changes to the Eta and NMM codes after the developments summarized in the preceding section. One change that must have occurred in both is in the Zilitinkevich constant \({A}_{0}\) = κC in (3), from C = 0.1 to 0.2. The 2003 operational Eta code is available, having been “frozen” for use in the North American Regional Reanalysis (NARR, Mesinger et al. 2006), and it contains the value C = 0.2.

Several participants in the NCEP land-surface and surface-layer implementations of the time kindly took part in considerable efforts to find the specific background for this change, in terms of a reference or experiments done. These attempts were unsuccessful. One source we have is a September 2002 presentation by Geoff DiMego, “NCEP Mesoscale Modeling: Where We Are and Where We’re Going.” Its slide 7 summarizes the Eta/Noah land-surface model (LSM) upgrades of July 2001, including a line stating “dynamic thermal roughness length refinements.” This must refer to a modification of (3) so that the final value of \({z}_{0{\rm T}}\) includes the impact of vegetation, varying with the season, as studied in Chen and Zhang (2009).

Another available source is the April 2005 presentation by Geoff DiMego and Eric Rogers, “Spring 2005 Upgrade Package for North American Mesoscale (NAM) Decision Brief”. Its slide 17 includes the point “Lowered roughness length for heat to reduce skin temperature, and hence lower diagnosed 2-m air temp”, with more information on the resulting effects. This might well refer to the increase of C from 0.1 to 0.2, since a greater C results in a lower \({z}_{0{\rm T}}\).
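The size of this effect can be illustrated with a short worked example based on the Zilitinkevich relation written as \(z_{0{\rm T}} = z_{0M}\exp(-\kappa C\sqrt{Re_*})\), with \(Re_* = u_* z_{0M}/\nu\). The value \(Re_* = 100\) below is an illustrative assumption, not a number from the source:

```latex
\frac{z_{0{\rm T}}(C=0.2)}{z_{0{\rm T}}(C=0.1)}
  = \exp\!\left[-(0.2-0.1)\,\kappa\sqrt{Re_*}\right]
  = \exp(-0.1 \times 0.4 \times 10)
  = e^{-0.4} \approx 0.67
```

In this example, doubling C reduces the thermal roughness length by roughly a third, and the reduction grows with \(Re_*\).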

One aspect of the two models that needs to be mentioned is the possible impact of the difference in the vertical coordinates they used. In verifying surface-layer values against analyses, the Eta model, with its discretized surface elevations, required accounting for the elevation difference between the model topography and the verification-field topography. This difference was generally considerably greater than for a terrain-following coordinate model such as the NMM. While this sounds like an unfavourable feature of the Eta model, in our case it suffices to keep in mind that it should not benefit the Eta model when its surface-layer verification scores are compared with those of the NMM.

What remains to be noted are the changes made specifically to the NMM in efforts to make it perform well in comparison with the Eta model and, of course, also once it was accepted as its replacement. These changes are spelled out in DiMego (2006). The NMM and its newly developed data assimilation system received far more attention than the Eta model. This is perhaps best revealed by the fact that even a problem in the Eta LSM code, euphemistically referred to as a “glitch” in DiMego (2006, slide 18), was not corrected prior to running the parallel.

Of the NMM dynamical-core changes, the most conspicuous is the introduction of the nonhydrostatic correction. It is not relevant here, given that the parallel was run with 12-km horizontal resolution. Among the various physics and land-surface refinements, one change is specifically relevant to our intercomparison of surface-layer results. This is the abandonment of the Eta form-drag parametrization of Mason (1986) and Georgelin et al. (1994), along with the decision to introduce a vegetation impact. The abandonment was apparently due to the low values of \({z}_{0}^{\mathrm{eff}}\) in the Eta model over the flat area of the U.S. Great Plains and Midwest states. Slide 13 of DiMego (2006) shows the values of \({z}_{0M}\) of the two models, with the comment “... much greater coverage of reasonably large \({z}_{0}\) values (> 1 m) in the ‘NAM-WRF’”. Here “NAM-WRF” in fact refers to the WRF-NMM, with NAM standing for “North American Model”. Also, slide 9 says that instead of \({z}_{0}^{\mathrm{eff}}\) the NMM has \({z}_{0}\) depending “on elevation subgrid variability and vegetation type”.

In addition, one needs to note the two points of slide 19 that target specific performance issues and appear to describe ad hoc changes. The first says that surface heat exchange coefficients were reduced in statically stable environments, particularly over elevated terrain. The second notes a modification that “corrects NMM's excessive low-level cooling at night, due in part to thinner surface layer especially over mountainous terrain in the WRF-NMM.”

This summary lacks the specifics that would be standard in papers on parametrizations. Nevertheless, the changes can certainly be expected to have been well tested, given the purpose of the parallel. Several years had also passed since the NCEP/EMC decision in summer 2002 to move to a model using a terrain-following coordinate.

4 Surface-Layer Results over High Topography of the NCEP Parallel Test

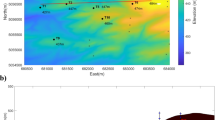

Many results of the NCEP 2006 parallel test are reported in DiMego (2006) separately for roughly the western part of the contiguous United States (ConUS), or “West”, and for the remaining eastern part, “East”. These two parts are shown in Fig. 1, following slide 51 of DiMego (2006). The “West” of Fig. 1 includes the area of the U.S. Great Plains. There, topography decreases from about two miles just east of the Front Range to the red line in the figure. Along that line it is at sea level at the southern tip, up to about 300 m at the middle, and about 200 m at the northern end. Over most of this area, the depth of the lowest layer of the Eta model is certainly considerably greater than that of the NMM. Over high topography, the NMM is set up to squeeze all 42 of its sigma layers between its model ground surface and the 420-hPa pressure surface, at around 7300 m.

Fig. 1 Verification areas used for some of the variables in the 2006 NCEP parallel test comparing the operational Eta model, using its 3DVAR data assimilation, with the WRF-NMM, using its GSI data assimilation system. After DiMego (2006), slide 51

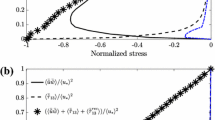

We focus here on the surface-layer variables 2-m temperature and 10-m wind speed. These are the two primary surface-layer variables with verification results displayed in DiMego (2006) for the West and East domains of Fig. 1. The verification of the two systems' 2-m temperatures out to 84-h forecast time for the two domains is displayed in slide 62 of DiMego (2006). The actual errors are somewhat hard to follow in these plots. For better visibility, Fig. 2 therefore shows the temperature errors, instead of the temperatures, over the West, the domain we are interested in.

In the plot of slide 62, minima of the actual temperatures occur at 12 h, 36 h, etc. Prior to these times, the NMM errors are repeatedly greater than those of the Eta, including those at 12 h. It may be somewhat surprising to see the relatively large errors of the NMM at the start, given that it was using a more advanced data assimilation system than the Eta model. Note, however, that assimilation of 2-m temperatures is notoriously problematic (e.g., Mesinger et al. 2006). Maxima occur at 21 h, 45 h, and 69 h, and at each of these the Eta model is also more accurate. Of the 29 pairs of error values displayed, the absolute Eta error is smaller than that of the NMM 19 times, and the NMM error is smaller 7 times. The remaining 3 are declared ties, because for Fig. 2 we read discretized values off the plot of slide 62 of DiMego (2006). The average absolute error of the NMM values displayed is 0.63 °C, and that of the Eta model is 0.42 °C. The maximum absolute error of the Eta model is 0.7 °C, and 12 of the NMM errors are greater than that. The maximum absolute NMM error, at 12 h, amounts to 1.75 °C.
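The tallies above (wins, ties, mean and maximum absolute errors) follow a simple procedure that can be sketched as below. The function name and the tie tolerance, a stand-in for reading discretized values off a plot, are hypothetical. The numbers in the example are invented for illustration and are not the DiMego (2006) values.

```python
def compare_errors(err_a, err_b, tie_tol=0.05):
    """Compare two forecast systems' errors at matching forecast times.

    err_a, err_b : errors (e.g., deg C) of systems A and B at the same lead times
    tie_tol      : differences in absolute error up to this value count as a tie
                   (a hypothetical stand-in for plot-reading resolution)
    """
    a_better = b_better = ties = 0
    for ea, eb in zip(err_a, err_b):
        diff = abs(ea) - abs(eb)
        if abs(diff) <= tie_tol:
            ties += 1
        elif diff < 0:
            a_better += 1
        else:
            b_better += 1
    n = len(err_a)
    return {
        'a_better': a_better,
        'b_better': b_better,
        'ties': ties,
        'mae_a': sum(abs(e) for e in err_a) / n,
        'mae_b': sum(abs(e) for e in err_b) / n,
        'max_abs_a': max(abs(e) for e in err_a),
        'max_abs_b': max(abs(e) for e in err_b),
    }
```

For the invented series A = [0.2, -0.4, 0.5] and B = [0.8, -0.4, 0.3], each system wins once with one tie, and the mean absolute errors are about 0.37 and 0.50.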

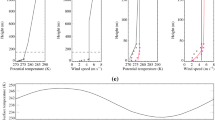

Plots of the forecast and observed 10-m wind speeds are shown in Fig. 3, this time for both domains. Along with these plots, DiMego (2006) points out, regarding the NMM (NAMX in the plots), an “improvement in east with very low bias, especially daytime”. DiMego (2006) lists some tuning-type changes in the horizontal diffusion, and several physics changes. Even so, the considerable increase in \({z}_{0M}\), primarily in the East, introduced in the NMM to include the effect of vegetation seems a good candidate for being mainly responsible for this reduction in the NMM bias relative to that of the Eta model. “Slightly more low bias” compared with the Eta model is the DiMego (2006) comment for the West. The assessment of this difference as “slight” notwithstanding, one might well wonder which feature of the Eta model is most responsible for a failure. Despite several years of effort, NCEP/EMC did not improve upon the Eta surface-layer variables in the West examined here.

Fig. 3 Wind speed at 10 m over the “West” (left) and “East” (right) domains of Fig. 1, from the parallel test of the 2006 NCEP operational system NAM (Eta with its 3DVAR data assimilation) and NAMX (NMM with its GSI data assimilation), 1 January to 22 May 2006, compared with the NCEP Forecast Verification System (OBS). (From DiMego 2006, slide 65, also Mesinger and Veljovic 2020, Fig. 2)

5 Concluding Comments

Surface-layer physics and dynamics are certainly areas of extraordinary complexity. They include molecular processes next to the ground and the impact of ground features, including vegetation, that vary widely from place to place. Higher up they transition to turbulence, and still higher to dynamics of ever larger scales. Regarding the land-cover impact and its seasonality, note, e.g., Chen and Zhang's (2009) extensive observational follow-up on relation (3) of Zilitinkevich (1995). But the question we face is why any of these would favour the Eta model over the NMM in the U.S. West relative to the East. Among all the model components involved, we suspect that the dynamical cores of the two models may play the leading role in this respect. Why? Both models use the Arakawa horizontal advection schemes of Janjić (1984). These are of finite-volume type in the sense that they conserve total kinetic energy, enstrophy, and internal energy by treating the grid-point values of the variables involved as defining the values of model grid boxes, or cells. In other words, these values are not considered to represent point values of continuous functions. Further discussion of this issue, and more relevant references, can be found, e.g., in Mesinger et al. (2018).

These schemes achieve the required conservation by having neighbouring grid boxes exchange the quantities involved, thereby maintaining the domain totals. Consider, e.g., horizontal advection of temperature from boxes of greater mass into boxes of smaller mass. For internal energy to remain conserved, a greater temperature change must then occur in the boxes of smaller mass. This will be the case in horizontal advection over high topography when a terrain-following coordinate is used. It is a numerical artefact that does not exist with the eta or an eta-like coordinate. It could be the reason favouring the Eta surface-layer performance over the U.S. West seen in Figs. 2 and 3.
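The bookkeeping behind this argument can be sketched with two neighbouring cells of different mass. Think of a sigma layer over lower terrain next to the thinner one over a mountain. An amount of mass-weighted temperature, proportional to internal energy for constant specific heat, is moved from one cell to the other. The function name and the numbers are illustrative assumptions. The sketch also ignores the accompanying mass exchange, to isolate the point about unequal temperature changes.

```python
def exchange(temps, masses, i, j, energy):
    """Move an amount `energy` of mass-weighted temperature (mass * T, proportional
    to internal energy for constant specific heat) from cell i to cell j.

    The total sum(mass * T) is conserved, so each cell's temperature change is
    the exchanged amount divided by that cell's own mass.
    """
    t = list(temps)
    t[i] -= energy / masses[i]
    t[j] += energy / masses[j]
    return t
```

With masses of 100 and 50 (e.g., hPa), both cells initially at 280 K, and an exchange of 100 units, `exchange([280.0, 280.0], [100.0, 50.0], 0, 1, 100.0)` returns [279.0, 282.0]. The cell with half the mass changes by twice as much, while the mass-weighted total stays at 42,000.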

Given that finite-volume discretization has the downside just described, one might wonder why one would choose it. The answer is that the finite-volume approach makes it relatively straightforward and inexpensive to mimic important conservation properties of the continuous equations. It is, of course, up to model developers to decide on their priorities, and some choices preclude various others. In a recent comparison of five dynamical cores tested within the U.S. Next Generation Global Prediction System (NGGPS) project, both short-listed models were finite-volume models (e.g., Mesinger et al. 2018). One of them was selected as the operational model of the U.S. National Weather Service.

The results presented certainly do not prove the reason for the better surface-layer skill of the Eta model compared with the NMM over high topography, and other reasons are possible. However, they do demonstrate that higher vertical resolution in the surface layer did not guarantee improved results, despite considerable effort by a reputable NWP centre. This clearly justifies attention to other possibilities. Among them are topography-intersecting vertical coordinates, which enable horizontally uniform model layer thicknesses.

Data availability

The datasets analysed during the current study are available in the publicly accessible reference DiMego (2006).

References

Arthur RS, Lundquist KA, Wiersema DJ, Bao J, Chow FK (2020) Evaluating implementations of the immersed boundary method in the Weather Research and Forecasting model. Mon Weather Rev 148:2087–2109

Blackadar AK (1962) The vertical distribution of wind and turbulent exchange in a neutral atmosphere. J Geophys Res 67:3095–3102

Bougeault P (1997) Physical parametrizations for limited area models: some current problems and issues. Meteorol Atmos Phys 63:71–88

Chen F, Zhang Y (2009) On the coupling strength between the land surface and the atmosphere: From viewpoint of surface exchange coefficients. Geophys Res Lett 36:L10404. https://doi.org/10.1029/2009GL037980

Chen F, Janjic Z, Mitchell K (1997) Impact of atmospheric surface-layer parameterization in the new land-surface scheme of the NCEP mesoscale Eta model. Boundary-Layer Meteorol 85:391–421

Chow FK, Schär C, Ban N, Lundquist KA, Schlemmer L, Shi X (2019) Crossing multiple gray zones in the transition from mesoscale to microscale simulation over complex terrain. Atmosphere 10(5):274. https://doi.org/10.3390/atmos10050274

DiMego G (2006) WRF-NMM & GSI Analysis to replace Eta Model & 3DVar in NAM Decision Brief. National Centers for Environmental Prediction, 115 pp. https://www.yumpu.com/en/document/view/29953548/nam-wrf-noaa-national-operational-model-archive-

Durran DR (2010) Numerical methods for fluid dynamics with applications to geophysics, 2nd edn. Springer, Berlin

Galperin B, Kantha LH, Hassid S, Rosati A (1988) A quasi-equilibrium turbulent energy model for geophysical flows. J Atmos Sci 45:55–62

Georgelin M, Richard E, Petitdidier M, Druilhet A (1994) Impact of subgrid-scale orography parameterization on the simulation of orographic flows. Mon Weather Rev 122:1509–1522

Janjić ZI (1984) Nonlinear advection schemes and energy cascade on semi-staggered grids. Mon Weather Rev 112:1234–1245

Janjić ZI (1990) The step-mountain coordinate: Physical package. Mon Weather Rev 118:1429–1443

Janjić ZI (1994) The step-mountain eta coordinate model: Further developments of the convection, viscous sublayer, and turbulence closure schemes. Mon Weather Rev 122:927–945

Janjic ZI (1996) The surface layer in the NCEP Eta Model. In: Proceedings of the 11th conference on numerical weather prediction, 19–23 August 1996, Norfolk, VA, 354–355

Kleist DT, Parrish DF, Derber JC, Treadon R, Wu W-S, Lord S (2009) Introduction of the GSI into the NCEP global data assimilation system. Weather Forecast 24:1691–1705

Mahrt L (1996) The bulk aerodynamic formulation over heterogeneous surfaces. Boundary-Layer Meteorol 78:87–119

Mahrt L (1999) Stratified atmospheric boundary layers. Boundary-Layer Meteorol 90:375–396

Mahrt L, Sun J, MacPherson JI, Jensen NO, Desjardins RL (1997) Formulation of surface heat flux: application to BOREAS. J Geophys Res 102(D24):29621–29649

Mason PJ (1986) On parametrization of orographic drag. Seminar physical parametrization for numerical models of the atmosphere. ECMWF Reading U.K., pp 139–165

Mellor GL, Yamada T (1974) A hierarchy of turbulence closure models for planetary boundary layers. J Atmos Sci 31:1791–1806

Mellor GL, Yamada T (1982) Development of a turbulence closure model for geophysical fluid problems. Rev Geophys Space Phys 20:851–875

Mesinger F (1993a) Forecasting upper tropospheric turbulence within the framework of the Mellor-Yamada 2.5 closure. Res Act Atmos Ocean Model, WMO, Geneva, CAS/JSC WGNE Rep vol 18, pp 4.28–4.29

Mesinger F (1993b) Sensitivity of the definition of a cold front to the parameterization of turbulent fluxes in the NMC's Eta model. Res Act Atmos Ocean Model, WMO, Geneva, CAS/JSC WGNE Rep vol 18, pp 4.36–4.38

Mesinger F (2010) Several PBL parameterization lessons arrived at running an NWP model. In: International conference on planetary boundary layer and climate change. IOP Publishing, IOP Conference Series: Earth and Environmental Science 13. https://doi.org/10.1088/1755-1315/13/1/012005

Mesinger F, Lobocki L (1991) Sensitivity to the parameterization of surface fluxes in NMC's Eta model. Preprints, Ninth Conference on Numerical Weather Prediction, Denver, CO, 14–18 October 1991; American Meteorological Society, Boston, MA, pp 213–216

Mesinger F, Veljovic K (2017) Eta vs. sigma: Review of past results, Gallus-Klemp test, and large-scale wind skill in ensemble experiments. Meteorol Atmos Phys 129:573–593

Mesinger F, Veljovic K (2020) Topography in weather and climate models: Lessons from cut-cell Eta vs. European Centre for Medium-Range Weather Forecasts experiments. J Meteorol Soc Japan 98:881–900. https://doi.org/10.2151/jmsj.2020-050

Mesinger F, Wobus RL, Baldwin ME (1996) Parameterization of form drag in the Eta model at the National Centers for Environmental Prediction. Preprints, Eleventh Conference on Numerical Weather Prediction, Norfolk, VA, 19–23 August 1996; American Meteorological Society, Boston, MA, pp 324–326

Mesinger F, DiMego G, Kalnay E, Mitchell K, Shafran PC, Ebisuzaki W, Jovic D, Woollen J, Rogers E, Berbery EH, Ek MB, Fan Y, Grumbine R, Higgins W, Li H, Lin Y, Manikin G, Parrish D, Shi W (2006) North American regional reanalysis. Bull Am Meteorol Soc 87:343–360

Mesinger F, Chou SC, Gomes J, Jovic D, Bastos P, Bustamante JF, Lazic L, Lyra AA, Morelli S, Ristic I, Veljovic K (2012) An upgraded version of the Eta model. Meteorol Atmos Phys 116:63–79

Mesinger F, Rančić M, Purser RJ (2018) Numerical methods in atmospheric models. In: Oxford research encyclopedia of climate science. https://doi.org/10.1093/acrefore/9780190228620.013.617

Sun J, Massman W, Grantz DA (1999) Aerodynamic variables in the bulk formulation of turbulent fluxes. Boundary-Layer Meteorol 91:109–125

Thuburn J (2011) Vertical discretization: some basic ideas. In: Lauritzen PH, Jablonowski C, Taylor MA, Nair RD (eds) Numerical techniques for global atmospheric models. Springer, Berlin, pp 59–74

Zilitinkevich SS (1995) Non-local turbulent transport: Pollution dispersion aspects of coherent structure of convective flows. In: Power H, Moussiopoulos N, Brebbia CA (eds) Air Pollution III—Volume I: air pollution theory and simulation. Computational Mechanics Publications, Southampton Boston, pp 53–60

Acknowledgements

It is a pleasure to acknowledge the assistance of Drs. Eric Rogers, Michael Ek, and Matthew Pyle, of NCEP/EMC, and Fei Chen, of the National Center for Atmospheric Research, Boulder, Colorado, in efforts to recall specifics of the NCEP 2006 parallel test. Prof. Larry Mahrt, of NorthWest Research Associates, Redmond, Washington, helped with information regarding the applicability of (3). Prof. Katarina Veljovic, of the Faculty of Physics of the University of Belgrade, assisted in generating Fig. 2 of this paper. Suggestions and comments of two anonymous reviewers helped improve the text of this note and are gratefully acknowledged. The untimely passing of the late Sergej Zilitinkevich prompted this modest contribution, dedicated to the greatness of Sergej's mind, his humanity and unwavering spirit.


Ethics declarations

Conflict of interest

The author declares no conflict of interest regarding statements made in this study.


Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mesinger, F. Vertical Resolution of the Surface Layer versus Finite-volume and Topography Issues. Boundary-Layer Meteorol 187, 95–104 (2023). https://doi.org/10.1007/s10546-022-00745-2


DOI: https://doi.org/10.1007/s10546-022-00745-2